Abstract

Interventional C-arm imaging is crucial to percutaneous orthopedic procedures as it enables the surgeon to monitor the progress of surgery on the anatomy level. Minimally invasive interventions require repeated acquisition of X-ray images from different anatomical views to verify tool placement. Achieving and reproducing these views often comes at the cost of increased surgical time and radiation. We propose a marker-free ‘technician-in-the-loop’ Augmented Reality (AR) solution for C-arm repositioning. The X-ray technician operating the C-arm interventionally is equipped with a head-mounted display system capable of recording desired C-arm poses in 3D via an integrated infrared sensor. For C-arm repositioning to a target view, the recorded pose is restored as a virtual object and visualized in an AR environment, serving as a perceptual reference for the technician. Our proof-of-principle findings from a simulated trauma surgery indicate that the proposed system can decrease the 2.76 X-ray images required for re-aligning the scanner with an intra-operatively recorded C-arm view down to zero, suggesting substantial reductions of radiation dose. The proposed AR solution is a first step towards facilitating communication between the surgeon and the surgical staff, improving the quality of surgical image acquisition, and enabling context-aware guidance for surgery rooms of the future.

Keywords: augmented reality, orthopaedics, medical image processing, surgery, wearable computers, computerised tomography, biomedical equipment

Keywords: orthopaedic trauma surgery, X-ray images, intra-operatively recorded C-arm, radiation dose, C-arm repositioning, surgical image acquisition, technician-in-the-loop design, interventional C-arm imaging, percutaneous orthopaedic procedures, minimally invasive interventions, X-ray technician, C-arm interventionally, augmented reality-based feedback, marker-free technician-in-the-loop augmented reality solution, integrated infrared sensor

1. Introduction

Percutaneous approaches are the current clinical standard for internal fixation of many skeletal fractures, including pelvic trauma. This type of minimally invasive surgery is enabled by C-arm X-ray imaging systems that intra-operatively supply projective 2D images of the 3D surgical scene, tools and anatomy. Appropriate placement of implants is crucial for satisfactory outcome [1, 2], but verifying acceptable progress interventionally is challenging. This is because it requires the mental mapping of desired screw trajectories to the fractured anatomy in 3D based on 2D X-ray images acquired from different viewpoints [3–5]. To alleviate the associated challenges, surgeons are trained to use well defined X-ray views specific to the current task, such as the inlet or an obturator oblique view of the pelvis [6, 7]. Achieving these views, however, is not straight forward in practice due to multiple reasons. First, the desired views are usually difficult to obtain since the position of the internal anatomy is not obvious from the outside. Second, C-arm systems most commonly used today are non-robotic but have many degrees of freedom. Consequently, when trying to achieve a particular view, the X-ray technician operating the C-arm positions the device by repeated trial-and-error. Doing so increases the radiation dose to patient and surgical team. In surgical workflows where the C-arm has to be moved out of the way to ease access to the patient (as is the case in pelvis fixation), the problem of increased dose during so-called ‘fluoro hunting’ [8] is further amplified. The above reasoning suggests that a computer-assisted solution that aids the X-ray technician in finding the desired view has great potential in reducing X-ray dose to the patient and surgical staff.

Most previous works have focused on digitally rendering X-ray images from CT data rather than physically acquiring them. The authors of [9–11] use ‘virtual fluoroscopy’ to improve training of X-ray technicians and surgeons, while the authors of [8, 12, 13] generate digitally rendered radiographs intra-operatively from pre-operative CT. Doing so requires 3D/2D registration of the CT volume to the patient and tracking of the C-arm, which is achieved using an additional RGB camera or C-arm encoders, respectively. A complementary method most similar to the approach discussed here uses an external outside-in tracking system that accurately tracks an optical marker on the C-arm to verify accurate repositioning [14]. All the above approaches successfully reduce radiation dose due to C-arm repositioning, however, they make strong assumptions on the surgical environment by requiring pre-operative CT, an encoded C-arm, or external tracking systems.

In [15], a user interface concept is introduced for navigating and repositioning angiographic C-arms. First, the surgeon identifies the desired imaging outcome based on radiographs simulated from pre-operative CTA images on a tablet PC system. The 6 degree-of-freedom pose of the C-arm scanner is automatically estimated by using this planning information, the registration between the patient and the scanner, and the inverse kinematics of the C-arm. Consequently, this solution provides a transparent interface to the control of the imaging device. However, a major challenge associated with it is the offline planning stage, which prohibits its application and usefulness for percutaneous orthopaedic interventions considering their ergonomics and dynamic workflow.

In this work, we propose a technician-in-the-loop solution to C-arm repositioning during orthopaedic surgery in unprepared operating theatres. The proposed solution is based upon the realisation that X-ray technicians can easily align the real C-arm with a corresponding virtual model visualised in the desired pose rendered using an Augmented Reality (AR) environment. This is achieved by equipping the X-ray technician with an optical see-through head-mounted display (OST HMD) that tracks itself within its environment. The virtual model of the C-arm in any desired view is acquired intra-operatively once the C-arm is positioned appropriately, by sensing and storing the 3D point cloud of the C-shaped gantry. When a particular view must be restored, the corresponding 3D scene is visualised to the technician in an AR environment, providing intuitive feedback in 3D guiding the alignment of the real C-arm with its virtual representation.

2. Methods

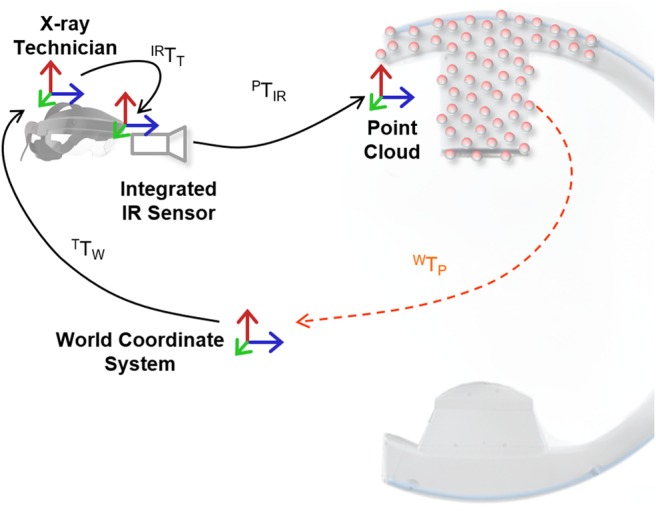

Similar to the AR environment delivered by the camera-augmented C-arm [3], the proposed solution for C-arm repositioning does not actively track the device to be positioned but intuitively visualises spatial relations and thus improves user performance. To this end, several transformations need to be estimated dynamically. These transforms are illustrated in Fig. 1 and their estimation is discussed in the remainder of this section.

Fig. 1.

Spatial relations that must be estimated dynamically to enable the proposed AR environment. Transformations shown in black are estimated directly while transformations shown in orange are derived

2.1. Localisation in the operating theatre

Tracking the Technician: The central mechanism of the proposed system is the ability to store the 3D appearance of a C-arm configuration (the intra-operative 3D point cloud as shown in Fig. 2) at the respective position in 3D space; and to recreate it in an AR environment. We use the Simultaneous Localisation and Mapping (SLAM) capabilities of the OST HMD to dynamically calibrate the headset, and thus the technician, to its environment. Using image features obtained via depth sensors or stereo cameras, SLAM incrementally constructs a spatial map of the environment and localises the sensing device therein [16]. In particular, SLAM solves

| (1) |

where is the desired pose of the technician relative to an arbitrary but static world coordinate system at the time t, are image features at that time, are the 3D locations of this feature, P is the projection operator, and is the feature similarity to be optimised [5, 16].

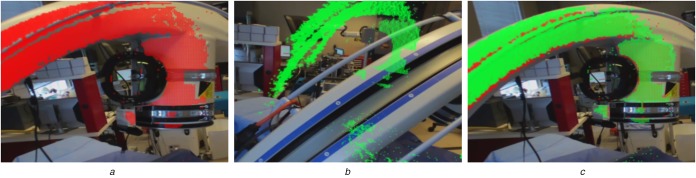

Fig. 2.

All images are shown from the X-ray technician's point of view

a Live 3D point cloud computed from the infrared depth image is displayed in red. This intra-operative point cloud is then saved for re-use

b C-arm has been moved to a different pose; the previously saved point cloud is visualised in green and serves as a reference to achieve the previous pose. After successful repositioning of the C-arm shown in:

c Saved and current point clouds shown in green and red, respectively, coincide. This means that the C-arm has been repositioned appropriately

Sensing the C-arm Position: In contrast to the technician, the C-arm is not tracked explicitly but only imaged in 3D using an infrared depth camera integrated into the HMD worn by the technician. Since the X-ray technician works in reasonable proximity of the C-arm, the infrared sensor will constantly observe a large area of the C-arm's surface (cf. Fig. 2). Once the C-arm has been moved to the desired location, the technician can save the C-arm position by either voice command (‘Save Position 1’) or by pressing a button on a hand-held remote control. Here, saving the position refers to saving the current intra-operative point cloud relative to the world coordinate system via

| (2) |

where is the time of voice command, is the HMD-specific transformation from tracking module of the HMD to its infrared camera, and is the mapping from the infrared sensor to metric 3D points .

It is worth emphasising that the proposed system is only targeted at repositioning the C-arm to previously achieved views, meaning that the current concept does not allow finding new desired views. This is because the AR environment and HMD are never calibrated (or registered) to the patient. While this may seem like a disadvantage on first glance, it makes the proposed system very flexible since there are no assumptions on the availability of data and environment, such as pre-operative CT and encoders, respectively.

2.2. Guidance by visualisation

The process of saving C-arm positions is repeated for every desired X-ray view such that point clouds of the C-arm device in every pose are available. During the procedure when previous C-arm views have to be re-produced, the X-ray technician requests visualisation of the desired position via voice command (‘Show Position 1’). Since the point cloud of C-arm position j is stored relative to the world coordinate frame, it can be visualised to the X-ray technician in an AR environment at a position

| (3) |

where denotes the time of calibration of view j, t is the current time, is the point in point cloud j in the world coordinate frame, and is computed according to (2). An example of the AR environment during visualisation of a representative point cloud is provided in Fig. 2b.

In contrast to previous approaches, there is no explicit guidance but intuitive 3D visualisation of the desired position. The X-ray technician adjusts the position of the C-arm using all available degrees of freedom (axial, orbital and swivel rotation in addition to base and gantry translations) such that the surface of the real C-arm perfectly matches the virtual point cloud. The live point cloud can be toggled on or off (see Fig. 2) for additional virtual-on-virtual assessment. [A video demonstrating the system is available at https://camp.lcsr.jhu.edu/miccai-2018-demonstration-videos/.].

2.3. Experiments and study

To test the described system, we setup an experiment mimicking pelvic trauma surgery using an anthropomorphic Sawbones pelvis phantom (Sawbones, Vashon, WA). The phantom was completely covered with a surgical drape and had metallic markers attached to define keypoints for evaluation. During this study, the C-arm was operated by a board-certified X-ray technician, who usually operates C-arm imaging systems during surgery. During the experiment and for every run, the X-ray technician was asked to: First, move the system into two clinically relevant C-arm poses, inlet and outlet view; second, retract the C-arm and reset to neutral position; and third, accurately reproduce the two previously defined C-arm positions using the conventional method (no assistance) and the proposed AR environment. Representative angulations of the C-arm are shown in Fig. 3. For direct quantitative comparison between C-arm poses, an infrared optical marker was rigidly attached to the gantry of the C-arm and tracked using an external tracking camera, namely a Polaris Spectra (Northern Digital Inc., Shelburne, VT). The workflow for one run was as follows:

Step 1: Define two target C-arm poses, save X-ray images, point cloud using HMD, and C-arm position using the external tracker.

Step 2: Retract C-arm from the scene and set in neutral position.

- Step 3: Restore target views

- Conventional: Store all X-ray views required for repositioning, and final C-arm position using an external marker.

- Proposed: Store final C-arm position, and one X-ray image for evaluation.

Step 4: Repeat Step 3 with other method (conventional/proposed).

We designed four runs covering a total of six different poses:

Run 1: Inlet/outlet.

Run 2: Cranial oblique/Caudal oblique.

Run 3: Cranial oblique/Caudal oblique (opposing).

Run 4: Inlet/outlet.

To avoid training bias, we alternate the order in which conventional and proposed approachs are utilised for every run.

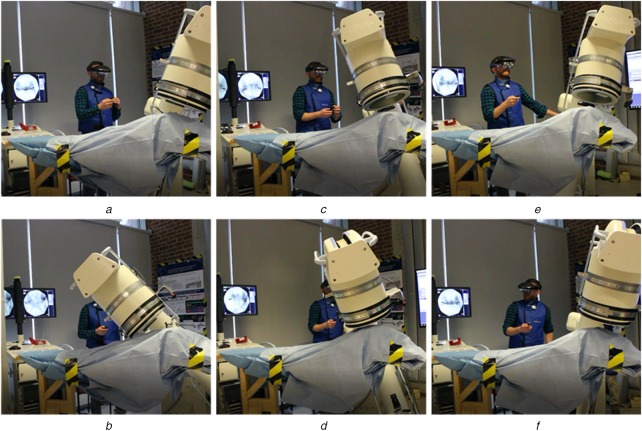

Fig. 3.

X-ray technician operating the C-arm during the experiment. Typical angulations for pelvic trauma surgery were selected according to [6]

a, b We show inlet and outlet views,

c, d Caudal oblique views, and

e, f Cranial oblique views, respectively

For quantitative evaluation, we report the mean Euclidean and angular difference of final C-arm poses compared to the target pose as measured by the external tracker. Further, we manually annotate the keypoint locations in all X-ray images and compute the average projection domain displacement using the first and final X-ray for the conventional approach, and using the verification X-ray for the proposed method. Finally, we record the total number of X-rays used during the conventional repositioning.

3. Results

We have omitted Run 3 from the quantitative evaluation since the X-ray technician erroneously restored the caudal oblique view requested in the previous run. Including this run would strongly bias the quantitative results of the conventional approach, and thus, positively bias the assessment of the proposed AR environment. In clinical practice, such errors become obvious upon acquisition of an X-ray image, however, they unnecessarily increase the dose to patient and surgical staff and are easily avoided using the proposed system.

Differences between the target and restored C-arm pose as per the external tracker are provided in Table 1 for the proposed and conventional approach, respectively. We state residuals averaged over all C-arm poses separately for translation and rotation and compute the mean Euclidean displacement and angular deviation of the reference marker, respectively. While the orientation of the C-arm is equally well restored in both proposed and conventional approaches, the positional error is larger for the proposed method. This observation will be discussed in the following section.

Table 1.

C-arm pose differences as per infrared marker tracking

| Proposed | Conventional | |

|---|---|---|

| mean distance ± SD | 51.6 ± 19.2 mm | 16.7 ± 6.3 mm |

| angle ± SD | 1.54 ± 0.92° | 1.23 ± 0.45° |

In addition, we state the average displacement of the projection domain keypoints relative to the target X-ray images. We evaluate this error for the verification X-ray images after C-arm repositioning with the proposed method, and for the initial and refined X-ray images acquired in the conventional approach. The values stated in Table 2 reflect the mean pixel displacement over all poses and keypoints. Three to four keypoints were used per image, depending on the field of view determined by the C-arm pose. Based on this projection domain metric, the proposed method outperforms C-arm repositioning based on user recollection, the conventional approach without using X-radiation to iteratively refine the C-arm pose. However, when X-ray images are acquired to verify and adjust the C-arm pose, the conventional approach substantially outperforms the proposed system.

Table 2.

Projection domain keypoint displacement in pixels (px)

| Proposed | Conventional on first try | Conventional |

|---|---|---|

| 210 ± 105 px | 257 ± 171 px | 68 ± 36 px |

Finally, we report the number of X-ray images acquired during C-arm repositioning. With the conventional method, a total of 16 X-rays where required to restore 6 poses yielding, on average, 2.76 X-rays per C-arm position. Using the proposed approach, the number of acquired images for C-arm pose restoration drops to zero, since our experiment did not allow for iterative refinement when the proposed technology was used.

4. Discussion

In this work, we have proposed and studied an AR system to assist X-ray technicians with re-aligning the C-arm scanner with poses that are interventionally defined by the surgeon. Our results suggest that substantial dose reductions are possible with the proposed AR system. Once the desired views have been identified and stored, C-arm repositioning can be achieved with clinically acceptable accuracy without any further X-ray acquisitions. At the same time, our results reveal that further research on improving tracking accuracy and perceptual quality will be required for X-ray technicians to not only save dose but also deliver improved performance with respect to accuracy and time-on-task. The experimental design described here is limited since only a single X-ray technician and four runs were considered. We understand the reported experiments as an exploratory study designed to reveal the shortcomings of the current prototype. Based upon these very preliminary results, we envision necessary refinements of the system that address the current challenges discussed in greater detail below. Solving these challenges will pave the way for a large scale evaluation of the proposed AR concept including the investigation of learning effects that is mandatory to compare time-on-task.

In contrast to previous methods [8, 12, 13], our approach can be directly deployed in the operating theatre without any preparation of the environment or assumptions on the procedure. This translates to two immediate benefits: First, our method does not require pre- or intra-operative 3D imaging, and therefore, circumvents intra-operative 3D/2D registration, a major challenge in clinical deployment [17, 18]. Second, there is no need for additional markers and external trackers as in Matthews [14] or access to internal encoders of the C-arm as in De Silva [8]. While internal encoders can be considered more elegant than external trackers, they are not yet widely available in mobile C-arms since these systems are usually non-robotic, and therefore, do not require encoding. In addition, the most recent C-arms that are commercially available, such as the Ziehm Vision RFD 3D (Ziehm Imaging, Vienna, Austria) or the Siemens Cios Alpha (Siemens Healthineers, Erlangen, Germany) have at most four robotised axes [8, 19], suggesting that not all of the required six degrees of freedom can be monitored.

The proposed system does not currently provide quantitative feedback on how well a previously achieved pose was restored but relies on the user's assessment. The current prototype, however, is capable of simultaneously displaying stored and live intra-operative point clouds, as described in Section 2.2. A natural next step would be to use quantitative methods such as the iterative closest point (ICP) algorithm [20] to provide rigorous feedback on both the accuracy of alignment and the required adjustments. We strongly believe that such information would substantially improve the performance of the proposed system since current verification is solely based on perception. Our results suggest that relying on perception works well for restoring C-arm orientation but does not perform well for restoring position. This limitation partly arises from the disadvantages and challenges with current technology, particularly because of two reasons: First, there is no interaction between real and virtual object, such as shadow or occlusion. Second, available hardware, such as the Microsoft HoloLens or the Meta 2, will render virtual content in a fixed focal plane, irrespective of the virtual objects position. Consequently, virtual and real content may not be in focus simultaneously despite occupying the exact same physical space [4, 5]. Integrating quantitative feedback, however, will require optimised implementations of rendering and alignment to deliver a pleasant user experience without substantial lag; a challenge that already arises for pure visualisation due to the immense computational load associated with real-time SLAM.

In addition to shortcomings regarding perception, the performance of our prototype system is further compromised by the SLAM-tracking performance of purchasable hardware. Our prototype was materialised using the Meta 2 (Meta, San Mateo, CA) since it was the only HMD that provided developer access to the infrared depth sensor at the time of implementation. Unfortunately, we have found the SLAM-tracking provided by the Meta 2 to be inferior to the HoloLens with respect to both lag and accuracy. In addition, the Meta 2 is cable-bound, which limits its appropriateness in highly dynamic environments such as operating theatres. While previous work suggests that the display quality of current HMDs may be sufficient for intra-operative visualisation [21–23], the accuracy and reliability of vision-based SLAM seem yet insufficient to warrant immediate clinical deployment [4, 5]. While incorporating external tracking may be a solution [24], we believe that this prerequisite will inhibit wide acceptance, as was observed with previous navigation techniques. Consequently, developing OST HMDs specifically designed to meet clinical needs, particularly regarding perceptual quality and tracking accuracy, will be of critical importance to bring medical AR into the operating room.

The study presented in this work is an early pre-clinical feasibility study, with the goal of understanding the current challenges of the technician-in-the-loop AR system, and identifying modules that require improvement in the next generation system. The impact and significance of this solution should be evaluated in the future with a larger group of X-ray technicians on cadaveric specimens.

5. Conclusion

We have proposed a technician-in-the-loop solution to C-arm repositioning during fluoroscopy-guided procedures. Our system stores 3D representations of the desired C-arm views using real-time 3D sensing via infrared depth cameras that are then stored. When a previously achieved pose needs to be restored, the corresponding 3D scene is displayed to the technician in an OST HMD-based AR environment. Achieving the target view then requires alignment of the real C-arm gantry with the virtual model thereof. In our proof-of-principle experiments, we have found that use of our system (i) is associated with a reduction in X-ray dose, and (ii) may prevent operator errors, such as restoring the wrong view. We have found that relying on perception as the only performance feedback mechanism is challenging with current HMD hardware, suggesting that future work should investigate possibilities to provide quantitative feedback on C-arm operator performance in real time.

6. Funding and declaration of interests

None declared.

7 References

- 1.Korovessis P., Baikousis A., Stamatakis M., et al. : ‘Medium- and long-term results of open reduction and internal fixation for unstable pelvic ring fractures’, Orthopedics, 2000, 23, (11), pp. 1165–1171 [DOI] [PubMed] [Google Scholar]

- 2.Mardanpour K., Rahbar M.: ‘The outcome of surgically treated traumatic unstable pelvic fractures by open reduction and internal fixation’, J. Inj. Violence Res., 2013, 5, (2), p. 77 (doi: 10.5249/jivr.v5i2.138) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Tucker E., Fotouhi J., Unberath M., et al. : ‘Towards clinical translation of augmented orthopedic surgery: from pre-op CT to intra-op x-ray via RGBD sensing’. Medical Imaging 2018: Imaging Informatics for Healthcare, Research, and Applications, Houston, TX, 2018, vol. 10579, p. 105790J [Google Scholar]

- 4.Andress S., Johnson A., Unberath M., et al. : ‘On-the-fly augmented reality for orthopedic surgery using a multimodal fiducial’, J. Med. Imag., 2018, 5, (2), p. 021209 (doi: 10.1117/1.JMI.5.2.021209) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Hajek J., Unberath M., Fotouhi J., et al. : ‘Closing the calibration loop: an inside-out-tracking paradigm for augmented reality in orthopedic surgery’, Proc. Conf. on Medical Image Computing and Computer Assisted Intervention, Granada, Spain, 2018, pp. 1–8 [Google Scholar]

- 6.Wheeless C.R.: ‘Radiology of pelvic fractures’, in Moore D. (ED.): ‘‘Wheeless’ textbook of orthopaedics’ (CR Wheeless, MD, 1996)http://www.wheelessonline.com/ortho/radiology_of_pelvic_fractures. Available at http://www.wheelessonline.com/ortho/radiology_of_pelvic_fractures [Google Scholar]

- 7. ‘AO Foundation surgical reference’, 2018. Available at https://www2.aofoundation.org/wps/portal/surgery , accessed 11 June 2018.

- 8.De.Silva T., Punnoose J., Uneri A., et al. : ‘Virtual fluoroscopy for intraoperative C-arm positioning and radiation dose reduction’, J. Med. Imaging, 2018, 5, p. 1 (doi: 10.1117/1.JMI.5.1.015005) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bott O.J., Dresing K., Wagner M., et al. : ‘Informatics in radiology: use of a c-arm fluoroscopy simulator to support training in intraoperative radiography’, Radiographics, 2011, 31, (3), pp. E65–E75 (doi: 10.1148/rg.313105125) [DOI] [PubMed] [Google Scholar]

- 10.Gong R.H., Jenkins B., Sze R.W., et al. : ‘A cost effective and high fidelity fluoroscopy simulator using the image-guided surgery toolkit (IGSTK)’. Medical Imaging 2014: Image-Guided Procedures, Robotic Interventions, and Modeling, San Diego, CA, 2014, vol. 9036, p. 903618 [Google Scholar]

- 11.Stefan P., Habert S., Winkler A., et al. : ‘A mixed-reality approach to radiation-free training of C-arm based surgery’. Int. Conf. on Medical Image Computing and Computer-Assisted Intervention, Quebec City, Canada, 2017, pp. 540–547 [Google Scholar]

- 12.Klein T., Benhimane S., Traub J., et al. : ‘Interactive guidance system for C-arm repositioning without radiation’. Bildverarbeitung für die Medizin 2007, München, Germany, 2007, pp. 21–25 [Google Scholar]

- 13.Dressel P., Wang L., Kutter O., et al. : ‘Intraoperative positioning of mobile c-arms using artificial fluoroscopy’. Medical Imaging 2010: Visualization, Image-Guided Procedures, and Modeling, San Diego, CA, 2010, vol. 7625, p. 762506 [Google Scholar]

- 14.Matthews F., Hoigne D.J., Weiser M., et al. : ‘Navigating the fluoroscope's C-arm back into position: an accurate and practicable solution to cut radiation and optimize intraoperative workflow’, J. Orthop. Trauma, 2007, 21, (10), pp. 687–692 (doi: 10.1097/BOT.0b013e318158fd42) [DOI] [PubMed] [Google Scholar]

- 15.Fallavollita P., Winkler A., Habert S., et al. : ‘Desired-view controlled positioning of angiographic C-arms’. Int. Conf. on Medical Image Computing and Computer-Assisted Intervention, Cambridge, MA, 2014, pp. 659–666 [DOI] [PubMed] [Google Scholar]

- 16.Endres F., Hess J., Engelhard N., et al. : ‘An evaluation of the RGB-D SLAM system’. 2012 IEEE Int. Conf. on Robotics and Automation (ICRA), St Paul, MN, 2012, pp. 1691–1696 [Google Scholar]

- 17.Bier B., Unberath M., Zaech J.N., et al. : ‘X-ray-transform invariant anatomical landmark detection for pelvic trauma surgery’, Proc. Conf. on Medical Image Computing and Computer Assisted Intervention, Granada, Spain, 2018, pp. 1–8 [Google Scholar]

- 18.Fotouhi J., Fuerst B., Johnson A., et al. : ‘Pose-aware C-arm for automatic re-initialization of interventional 2D/3D image registration’, Int. J. Comput. Assist. Radiol. Surg., 2017, 12, (7), pp. 1221–1230 (doi: 10.1007/s11548-017-1611-8) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. ‘Ziehm imaging Ziehm Vision RFD 3D’, 2018. Available at https://www.ziehm.com/en/us/products/c-arms-with-flat-panel-detector/ziehm-vision-rfd-3d.html , accessed 11 June 2018.

- 20.Besl P.J., McKay N.D.: ‘Method for registration of 3-d shapes’. Sensor Fusion IV: Control Paradigms and Data Structures, Boston, MA, 1992, vol. 1611, pp. 586–607 [Google Scholar]

- 21.Qian L., Unberath M., Yu K., et al. : ‘Towards virtual monitors for image guided interventions-real-time streaming to optical see-through head-mounted displays’, arXiv preprint arXiv:171000808, 2017

- 22.Deib G., Johnson A., Unberath M., et al. : ‘Image guided percutaneous spine procedures using an optical see-through head mounted display: proof of concept and rationale’, J. Neurointerv. Surg., 2018, doi: 10.1136/neurintsurg-2017-013649 [DOI] [PubMed] [Google Scholar]

- 23.Qian L., Barthel A., Johnson A., et al. : ‘Comparison of optical see-through head-mounted displays for surgical interventions with object-anchored 2d-display’, Int. J. Comput. Assist. Radiol. Surg., 2017, 12, (6), pp. 901–910 (doi: 10.1007/s11548-017-1564-y) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Chen X., Xu L., Wang Y., et al. : ‘Development of a surgical navigation system based on augmented reality using an optical see-through head-mounted display’, J. Biomed. Inf., 2015, 55, pp. 124–131 (doi: 10.1016/j.jbi.2015.04.003) [DOI] [PubMed] [Google Scholar]