Abstract

Psychometric functions (PFs) quantify how external stimuli affect behavior, and they play an important role in building models of sensory and cognitive processes. Adaptive stimulus-selection methods seek to select stimuli that are maximally informative about the PF given data observed so far in an experiment and thereby reduce the number of trials required to estimate the PF. Here we develop new adaptive stimulus-selection methods for flexible PF models in tasks with two or more alternatives. We model the PF with a multinomial logistic regression mixture model that incorporates realistic aspects of psychophysical behavior, including lapses and multiple alternatives for the response. We propose an information-theoretic criterion for stimulus selection and develop computationally efficient methods for inference and stimulus selection based on adaptive Markov-chain Monte Carlo sampling. We apply these methods to data from macaque monkeys performing a multi-alternative motion-discrimination task and show in simulated experiments that our method can achieve a substantial speed-up over random designs. These advances will reduce the amount of data needed to build accurate models of multi-alternative PFs and can be extended to high-dimensional PFs that would be infeasible to characterize with standard methods.

Keywords: adaptive stimulus selection, sequential optimal design, Bayesian adaptive design, psychometric function, closed-loop experiments

Introduction

Understanding the factors governing psychophysical behavior is a central problem in neuroscience and psychology. Although accurate quantification of the behavior is an important goal in itself, psychophysics provides an important tool for interrogating the mechanisms governing sensory and cognitive processing in the brain. As new technologies allow direct manipulations of neural activity in the brain, there is a growing need for methods that can characterize rapid changes in psychophysical behavior.

In a typical psychophysical experiment, an observer is trained to report judgments about a sensory stimulus by selecting a response from among two or more alternatives. The observer is assumed to have an internal probabilistic rule governing these decisions; this probabilistic map from stimulus to response is called the observer's psychometric function. Because the psychometric function is not directly observable, it must be inferred from multiple observations of stimulus–response pairs. However, such experiments are costly due to the large numbers of trials typically required to obtain good estimates of the psychometric function. Therefore, a problem of major practical importance is to develop efficient experimental designs that can minimize the amount of data required to accurately infer an observer's psychometric function.

Bayesian adaptive stimulus selection

A powerful approach for improving the efficiency of psychophysical experiments is to design the data-collection process so that the stimulus is adaptively selected on each trial by maximizing a suitably defined objective function (MacKay, 1992). Such methods are known by a variety of names, including active learning, adaptive or sequential optimal experimental design, and closed-loop experiments.

Bayesian approaches to adaptive stimulus selection define optimality of a stimulus in terms of its ability to improve a posterior distribution over the psychometric function, for example by reducing variance or entropy of the posterior. The three key ingredients of a Bayesian adaptive stimulus-selection method are (Chaloner & Verdinelli, 1995; Pillow & Park, 2016):

model, which parametrizes the psychometric function of interest;

prior, which captures initial beliefs about model parameters; and

utility function, which quantifies the usefulness of a hypothetical stimulus–response pair for improving the posterior.

Sequential algorithms for adaptive Bayesian experiments rely on repeated application of three basic steps: data collection (stimulus presentation and response measurement); inference (posterior updating using data from the most recent trial); and selection of an optimal stimulus for the next trial by maximizing expected utility (see Figure 1A). The inference step involves updating the posterior distribution over the model parameters according to Bayes's rule with data from the most recent trial. Stimulus selection involves calculating the expected utility (i.e., the expected improvement in the posterior) for a set of candidate stimuli, averaging over the responses that might be elicited for each stimulus, and selecting the stimulus for which the expected utility is highest. Example utility functions include the negative trace of the posterior covariance (corresponding to the sum of the posterior variances for each parameter) and the mutual information or information gain (which corresponds to minimizing the entropy of the posterior).

Figure 1.

(A) Schematic of Bayesian adaptive stimulus selection. On each trial, a stimulus is presented and the response observed; the posterior over the parameters θ is updated using all data collected so far in the experiment  ; and the stimulus that maximizes the expected utility (in our case, information gain) is selected for the next trial. (B) A graphical model illustrating a hierarchical psychophysical-observer model that incorporates lapses as well as the possibility of omissions. On each trial, a latent attention or lapse variable at is drawn from a Bernoulli distribution with parameter λ, to determine whether the observer attends to the stimulus xt on that trial or lapses. With probability 1 − λ, the observer attends to the stimulus (at = 0) and the response yt is drawn from a multinomial logistic regression model, where the probability of choosing option i is proportional to

; and the stimulus that maximizes the expected utility (in our case, information gain) is selected for the next trial. (B) A graphical model illustrating a hierarchical psychophysical-observer model that incorporates lapses as well as the possibility of omissions. On each trial, a latent attention or lapse variable at is drawn from a Bernoulli distribution with parameter λ, to determine whether the observer attends to the stimulus xt on that trial or lapses. With probability 1 − λ, the observer attends to the stimulus (at = 0) and the response yt is drawn from a multinomial logistic regression model, where the probability of choosing option i is proportional to  . With probability λ, the observer lapses (at = 1) and selects a choice from a (stimulus-independent) response distribution governed by parameter vector u. So-called omission trials, in which the observer does not select one of the valid response options, are modeled with an additional response category yt = k.

. With probability λ, the observer lapses (at = 1) and selects a choice from a (stimulus-independent) response distribution governed by parameter vector u. So-called omission trials, in which the observer does not select one of the valid response options, are modeled with an additional response category yt = k.

Methods for Bayesian adaptive stimulus selection have been developed over several decades in a variety of different disciplines. If we focus on the specific application of estimating psychometric functions, the field goes back to the QUEST (A. B. Watson & Pelli, 1983) and ZEST (King-Smith, Grigsby, Vingrys, Benes, & Supowit, 1994) algorithms, which are focused on the estimation of discrimination thresholds, and to the simple case of 1-D stimulus and binary responses (Treutwein, 1995). The Ψ method (Kontsevich & Tyler, 1999) uses Bayesian inference for estimating both the threshold and slope of a psychometric function, which have been extended to 2-D stimuli by Kujala and Lukka (2006). Further development of the method allowed for adaptive estimation of more complex psychometric functions, where the parameters are no longer limited to a threshold and a slope (Barthelmé & Mamassian, 2008; Kujala & Lukka, 2006; Lesmes, Lu, Baek, & Albright, 2010; Prins, 2013) and may exhibit statistical dependencies (Vul, Bergsma, & MacLeod, 2010). Models with multidimensional stimuli have also been considered (DiMattina, 2015; Kujala & Lukka, 2006; A. B. Watson, 2017).

Different models have been used to describe the psychometric function. Standard choices include the logistic regression model (Chaloner & Larntz, 1989; DiMattina, 2015; Zocchi & Atkinson, 1999), the Weibull distribution function (A. B. Watson & Pelli, 1983), and the Gaussian cumulative distribution function (Kontsevich & Tyler, 1999). More recent work has considered Gaussian process regression models (Gardner, Song, Weinberger, Barbour, & Cunningham, 2015). Most previous work, however, has been limited to the case of binary responses.

Bayesian methods for inferring psychometric functions (Kuss, Jäkel, & Wichmann, 2005; Prins, 2012; Wichmann & Hill, 2001a, 2001b) have enlarged the space of statistical models that can be used to describe psychophysical phenomena based on (often limited) data. A variety of recent advances have arisen in sensory neuroscience or neurophysiology, driven by the development of efficient inference techniques for neural encoding models (Lewi, Butera, & Paninski, 2009; M. Park, Horwitz, & Pillow, 2011) or model comparison and discrimination methods (Cavagnaro, Myung, Pitt, & Kujala, 2010; DiMattina & Zhang, 2011; Kim, Pitt, Lu, Steyvers, & Myung, 2014). These advances can in many cases be equally well applied to psychophysical experiments.

Our contributions

In this article, we develop methods for adaptive stimulus selection in psychophysical experiments that are applicable to realistic models of human and animal psychophysical behavior. First, we describe a psychophysical model that incorporates multiple response alternatives and lapses, in which the observer makes a response that does not depend on the stimulus. This model can also incorporate omission trials, where the observer does not make a valid response (e.g., breaking fixation before the go cue), by considering them as an additional response category. Second, we describe efficient methods for updating the posterior over the model parameters after every trial. Third, we introduce two algorithms for adaptive stimulus selection, one based on a Gaussian approximation to the posterior and a second based on Markov-chain Monte Carlo (MCMC) sampling. We apply these algorithms to simulated data and to real data analyzed with simulated closed-loop experiments and show that they can substantially reduce the number of trials required to estimate multi-alternative psychophysical functions.

Psychophysical-observer model

Here we describe a flexible model of psychometric functions (PFs) based on the multinomial logistic (MNL) response model (Glonek & McCullagh, 1995). We show how omission trials can be naturally incorporated into a model as one of the multiple response alternatives. We then develop a hierarchical extension of the model that incorporates lapses (see Figure 1B).

Multinomial logistic response model

We consider the setting where the observer is presented with a stimulus x ∈ ℝd and selects a response  from one of k discrete choices on each trial. We will assume the stimulus is represented internally by some (possibly nonlinear) feature vector ϕ(x), which we will write simply as ϕ for notational simplicity.

from one of k discrete choices on each trial. We will assume the stimulus is represented internally by some (possibly nonlinear) feature vector ϕ(x), which we will write simply as ϕ for notational simplicity.

In the MNL model, the probability pi of each possible outcome  is determined by the dot product between the feature ϕ and a vector of weights wi according to

is determined by the dot product between the feature ϕ and a vector of weights wi according to

|

where the denominator ensures that these probabilities sum to 1,  . The function from stimulus to a probability vector over choices,

. The function from stimulus to a probability vector over choices,  is the psychometric function, and the set of weights

is the psychometric function, and the set of weights  its parameters. Note that the model is overparameterized when written this way, since the requirement that probabilities sum to 1 removes one degree of freedom from the probability vector. Thus, we can without loss of generality fix one of the weight vectors to zero, for example wk = 0, so that the denominator in Equation 1 becomes

its parameters. Note that the model is overparameterized when written this way, since the requirement that probabilities sum to 1 removes one degree of freedom from the probability vector. Thus, we can without loss of generality fix one of the weight vectors to zero, for example wk = 0, so that the denominator in Equation 1 becomes  and

and  .

.

We consider the feature vector ϕ to be a known function of the stimulus x, even when the dependence is not written explicitly. For example, we can consider a simple form of feature embedding,  , corresponding to a linear function of the stimulus plus an offset. In this case, the weights for the ith choice would correspond to

, corresponding to a linear function of the stimulus plus an offset. In this case, the weights for the ith choice would correspond to  , where

, where  is the offset or bias for the ith choice and ai are the linear weights governing sensitivity to x. The resulting choice probability has the familiar form

is the offset or bias for the ith choice and ai are the linear weights governing sensitivity to x. The resulting choice probability has the familiar form  . Nonlinear stimulus dependencies can be incorporated by including nonlinear functions of x in the feature vector ϕ(x) (Knoblauch & Maloney, 2008; Murray, 2011; Neri & Heeger, 2002). Dependencies on the trial history, such as the previous stimulus or reward, may also be included as additional features in ϕ (see, e.g., Bak, Choi, Akrami, Witten, & Pillow, 2016).

. Nonlinear stimulus dependencies can be incorporated by including nonlinear functions of x in the feature vector ϕ(x) (Knoblauch & Maloney, 2008; Murray, 2011; Neri & Heeger, 2002). Dependencies on the trial history, such as the previous stimulus or reward, may also be included as additional features in ϕ (see, e.g., Bak, Choi, Akrami, Witten, & Pillow, 2016).

It is useful to always work with a normalized stimulus space, in which the mean of each stimulus component xα over the stimulus space is  and the standard deviation

and the standard deviation  . This normalization ensures that the values of the weight parameters are defined in more interpretable ways. The zero-mean condition ensures that the bias b is the expectation value of log probability over all possible stimuli. The unit-variance condition means that the effect of moving a certain distance along one dimension of the weight space is comparable to moving the same distance in another dimension, again averaged over all possible stimuli. In other words, we are justified in using the same unit along all dimensions of the weight space.

. This normalization ensures that the values of the weight parameters are defined in more interpretable ways. The zero-mean condition ensures that the bias b is the expectation value of log probability over all possible stimuli. The unit-variance condition means that the effect of moving a certain distance along one dimension of the weight space is comparable to moving the same distance in another dimension, again averaged over all possible stimuli. In other words, we are justified in using the same unit along all dimensions of the weight space.

Including omission trials

Even in binary tasks with only two possible choices per trial, there is often an implicit third choice, which is to make no response, make an illegal response, or interrupt the trial at some point before the allowed response period. For example, animals are often required to maintain an eye position or a nose poke or wait for a “go” cue before reporting a choice. Trials on which the animal fails to obey these instructions are commonly referred to as omissions or violations and are typically discarded from analysis. However, failure to take these trials into account may bias the estimates of the PF if these trials are more common for some stimuli than others (see Figure 2B).

Figure 2.

Effects of omission and lapse. Here we illustrate the undesirable effects of failing to take into account omission and lapse. (A) If the psychometric function (PF) follows an ideal binomial logistic model, it can be estimated very well from data. The black dashed line shows the true PF for one of the two responses (say y = R) and the gray dashed line shows the true PF for the other (say y = L), such that the two dashed curves always add up to 1. The black dots indicate the mean probability of observing this response y = R at each stimulus point x. We drew 20 observations per stimulus point, at each of the 21 stimulus points along the one-dimensional axis. The resulting estimate for P(y = 1) is shown by the solid black line. The inference method is not important for the current purpose, but we used the maximum a posteriori estimate. (B) Now suppose that some trials fell into the implicit third choice, which is omission (red dashed line). The observed probability of y = R at each stimulus point (open black circles) follows the true PF (black dashed line). But if the omitted trials are systematically excluded from analysis, as in common practice, the estimated PF (solid black line) reflects a biased set of observations (filled black circles) and fails to recover the true PF. (C) When there is a finite lapse rate (we used a total lapse of λ = 0.2, uniformly distributed to the two outcomes), the true PF (dashed black line) asymptotes to a finite offset from 0 or 1. If the resulting observations (black dots) are fitted to a plain binomial model without lapse, the slope of the estimated PF (solid black line) is systematically biased.

The multinomial response model provides a natural framework for incorporating omission trials because it accommodates an arbitrary number of response categories. Thus we can model omissions explicitly as one of the possible choices the observer can choose from, or as response category k + 1 in addition to the k valid responses. One could even consider different kinds of omissions separately—for example, allowing choice k + 1 to reflect fixation-period violations and choice k + 2 to reflect failure to report a choice during the response window. Henceforth, we will let k reflect the total number of choices including omission, as illustrated in Figure 1B.

This formulation can also be useful for the rated yes/no task in human psychophysics, where a “not sure” response is explicitly presented (C. S. Watson, Kellogg, Kawanishi, & Lucas, 1973). Although such a model was considered for adaptive stimulus selection (Lesmes et al., 2015), the third alternative was not handled as a fully independent choice, as the goal was only to estimate the two detection thresholds separately: one for a strict yes, another for a collapsed response of either yes or not sure. Our model treats each of the multiple alternatives equivalently.

Modeling lapses with a mixture model

Another important feature of real psychophysical observers is the tendency to occasionally make errors that are independent of the stimulus. Such choices, commonly known as lapses or button-press errors, may reflect lapses in concentration or memory of the response mapping (Kuss et al., 2005; Wichmann & Hill, 2001a). Lapses are most easily identified by errors on easy trials—that is, trials that should be performed perfectly if the observer is paying attention.

Although lapse rates can be negligible in highly trained observers (Carandini & Churchland, 2013), they can be substantially greater than zero in settings involving nonprimates or complicated psychophysical tasks. Lapses affect the PF by causing it to saturate above 0 and below 1, so that perfect performance is never achieved even for the easiest trials. Failure to incorporate lapses into the PF model may therefore bias estimates of sensitivity, as quantified by PF slope or threshold (illustrated in Figure 2C; also see Prins, 2012; Wichmann and Hill, 2001a, 2001b).

To model lapses, we use a mixture model that treats the observer's choice on each trial as coming from one of two probability distributions: a stimulus-dependent one (governed by the MNL model) or a stimulus-independent one (reflecting a fixed probability of choosing any option when lapsing). Simpler versions of such mixture model have been proposed previously (Kuss et al., 2005).

Figure 1B shows a schematic of the resulting model. On each trial, a Bernoulli random variable a ∼ Ber(λ) governs whether the observer lapses: With probability λ the observer lapses (i.e., ignores the stimulus), and with probability 1 − λ the observer attends to the stimulus. If the observer lapses (a = 1), the response is drawn according to the fixed-probability distribution  governing the probability of selecting options 1 to k, where

governing the probability of selecting options 1 to k, where  . If the observer does not lapse (a = 0), the response is selected according to the MNL model. Under this model, the conditional probability of choosing option i given the stimulus can be written as

. If the observer does not lapse (a = 0), the response is selected according to the MNL model. Under this model, the conditional probability of choosing option i given the stimulus can be written as

|

where qi is the lapse-free probability under the classical MNL model (Equation 1).

It is convenient to reparameterize this model so that λci, the conditional probability of choosing the ith option due to a lapse, is written

|

where each auxiliary lapse parameter ui is proportional to the log probability of choosing option i due to lapse. The lapse-conditional probabilities ci of each choice and the total lapse probability λ are respectively

|

Because each ui lives on the entire real line, fitting can be carried out with unconstrained optimization methods, although adding reasonable constraints may improve performance in some cases. The full parameter vector of the resulting model is  , which includes k additional lapse parameters

, which includes k additional lapse parameters  . Note that in some cases it might be desirable to assume that lapse choices obey a uniform distribution, where the probability of each option is ci = 1/k. For this simplified uniform-lapse model we need only a single lapse parameter u. Note that we have unified the parameterizations of the lapse rate (deviation of the upper asymptote of the PF from 1; in this case, λ − λci) and the guess rate (deviation of the lower asymptote from 0; in this case, λci), which have often been modeled separately in previous works with two-alternative responses (Schütt, Harmeling, Macke, & Wichmann, 2016; Wichmann & Hill, 2001a, 2001b). Here they are written in terms of a single family of parameters

. Note that in some cases it might be desirable to assume that lapse choices obey a uniform distribution, where the probability of each option is ci = 1/k. For this simplified uniform-lapse model we need only a single lapse parameter u. Note that we have unified the parameterizations of the lapse rate (deviation of the upper asymptote of the PF from 1; in this case, λ − λci) and the guess rate (deviation of the lower asymptote from 0; in this case, λci), which have often been modeled separately in previous works with two-alternative responses (Schütt, Harmeling, Macke, & Wichmann, 2016; Wichmann & Hill, 2001a, 2001b). Here they are written in terms of a single family of parameters  and extended naturally to multi-alternative responses.

and extended naturally to multi-alternative responses.

Our model provides a general and practical parameterization of PFs with lapses. Although previous work has considered the problem of modeling lapses in psychophysical data, much of it assumed a uniform-lapse model, where all options are equally likely during lapses. Earlier approaches have often assumed either that the lapse probability was known a priori (Kontsevich & Tyler, 1999) or was fitted by a grid search over a small set of candidate values (Wichmann & Hill, 2001a). Here we instead infer individual lapse probabilities for each response option, similar to recent approaches described by Kuss et al. (2005), Prins (2012, 2013), and Schütt et al. (2016). Importantly, our method infers the full parameter θ that includes both the weight and lapse parameters, rather than treating the lapse separately. In particular, our parameterization (Equation 3) has the advantage that there is no need to constrain the support of the lapse parameters ui. These parameters' relationship to lapse probabilities ci takes the same (softmax) functional form as the MNL model, placing both sets of parameters on an equal footing.

Before closing this section, we would like to reflect briefly on the key differences between omissions and lapses. First, although omissions and lapses both reflect errors in decision making, omissions are defined as invalid responses and are thus easily identifiable from the data; lapses, on the other hand, are indistinguishable from normal responses, and are identifiable only from the fact that the psychometric function does not saturate at 0 or 1. Second, modeling omissions as a response category under the MNL model means that the probability of omission is stimulus dependent (e.g., more likely to arise on trials with high difficulty, or generally when the evidence for other options is low). Even if the omissions are not stimulus dependent, and are instead governed entirely by a bias parameter, the probability of omission will still be higher when the evidence for other choices is low or lower when the evidence for other choices is high. Omissions that arise in a purely stimulus-independent fashion, on the other hand, will be modeled as arising from the lapse parameter associated with the omission response category. Omissions can thus arise in two ways under the model: as categories selected under the multinomial model or as lapses arising independent of the stimulus and other covariates.

Posterior inference

Bayesian methods for adaptive stimulus selection require the posterior distribution over model parameters given the data observed so far in an experiment. The posterior distribution results from the combination of two ingredients: a prior distribution p(θ), which captures prior uncertainty about the model parameters θ, and a likelihood function  , which captures information about the parameters from the data

, which captures information about the parameters from the data  , where s = 1, …, t consists of stimulus–response pairs observed up to the current time bin t.

, where s = 1, …, t consists of stimulus–response pairs observed up to the current time bin t.

Unfortunately, the posterior distribution for our model has no analytic form. We therefore describe two methods for approximate posterior inference: one relying on a Gaussian approximation to the posterior, known as the Laplace approximation, and a second one based on MCMC sampling.

Prior

The prior distribution specifies our beliefs about model parameters before we have collected any data, and serves to regularize estimates obtained from small amounts of data—for example, by shrinking estimated weights toward zero. Typically we want the prior to be weak enough that the likelihood dominates the posterior for reasonable-sized data sets. However, the choice of prior is especially important in adaptive stimulus-selection settings, because it determines the effective volume of the search space (M. Park & Pillow, 2012; M. Park, Weller, Horwitz, & Pillow, 2014). For example, if the weights are known to exhibit smoothness, then a correlated or smoothness-inducing prior can improve the performance of adaptive stimulus selection because the effective size (or entropy) of the parameter space is much smaller than under an independent prior (M. Park & Pillow, 2012).

In this study, we use a generic independent, zero-mean Gaussian prior over the weight vectors

|

for all  , with a fixed standard deviation σ. This choice of prior is appropriate when the regressors {x} are standardized, since any single weight can take values that allow for a range of PF shapes along that axis, from flat (w = 0) to steeply decreasing (w = −2σ) or increasing (w = +2σ). We used σ = 3 in the simulated experiments in Results. For the lapse parameters

, with a fixed standard deviation σ. This choice of prior is appropriate when the regressors {x} are standardized, since any single weight can take values that allow for a range of PF shapes along that axis, from flat (w = 0) to steeply decreasing (w = −2σ) or increasing (w = +2σ). We used σ = 3 in the simulated experiments in Results. For the lapse parameters  , we used a uniform prior over the range [log(0.001), 0] with the natural log, so that each lapse probability λci is bounded between 0.001 and 1/2. We set the lower range constraint below 1/N, where N = 100 is the number of observed trials in our simulations, since we cannot reasonably infer lapse probabilities with precision finer than 1/N. The upper range constraint gives maximal lapse probabilities of 1/(k + 1) if all ui take on the maximal value of 0. Note that our prior is uniform with respect to the rescaled lapse parameters

, we used a uniform prior over the range [log(0.001), 0] with the natural log, so that each lapse probability λci is bounded between 0.001 and 1/2. We set the lower range constraint below 1/N, where N = 100 is the number of observed trials in our simulations, since we cannot reasonably infer lapse probabilities with precision finer than 1/N. The upper range constraint gives maximal lapse probabilities of 1/(k + 1) if all ui take on the maximal value of 0. Note that our prior is uniform with respect to the rescaled lapse parameters  rather than to the actual lapse rates; projected to the space of the lapse probabilities, given the bounds, the prior increases toward smaller lapse. For a comprehensive study of the effect of different priors on lapse, see Schütt et al. (2016).

rather than to the actual lapse rates; projected to the space of the lapse probabilities, given the bounds, the prior increases toward smaller lapse. For a comprehensive study of the effect of different priors on lapse, see Schütt et al. (2016).

PF likelihood

The likelihood is the conditional probability of the data as a function of the model parameters. Although we have thus far considered the response variable y to be a scalar taking values in the set {1, … , k}, it is more convenient to use a “one-hot” or “1-of-k” representation, in which the response variable y for each trial is a vector of length k with one 1 and k − 1 zeros; the position of the 1 in this vector indicates the category selected. For example, in a task with four possible options per trial, a response vector y = [0 0 1 0] indicates a trial on which the observer selected the third option.

With this parameterization, the log-likelihood function for a single trial can be written

|

where pi(x, θ) denotes the probability  under the model (Equation 1), and

under the model (Equation 1), and  denotes the vector of probabilities for a single trial.

denotes the vector of probabilities for a single trial.

In the classical (lapse-free) MNL model, where  , the log likelihood is a concave function of θ, which guarantees that numerical optimization of the log likelihood will find a global optimum. With a finite lapse rate, however, the log likelihood is no longer concave (see Appendix A).

, the log likelihood is a concave function of θ, which guarantees that numerical optimization of the log likelihood will find a global optimum. With a finite lapse rate, however, the log likelihood is no longer concave (see Appendix A).

Posterior distribution

The log-posterior can be written as the sum of log prior and log likelihood summed over trials, plus a constant:

|

where  denotes the accumulated data up to trial t and

denotes the accumulated data up to trial t and  is a normalization constant that does not depend on the parameters θ. Because this constant has no tractable analytic form, we rely on two alternate methods for obtaining a normalized posterior distribution.

is a normalization constant that does not depend on the parameters θ. Because this constant has no tractable analytic form, we rely on two alternate methods for obtaining a normalized posterior distribution.

Inference via Laplace approximation

The Laplace approximation is a well-known Gaussian approximation to the posterior distribution, which can be derived from a second-order Tayler series approximation to the log posterior around its mode (Bishop, 2006).

Computing the Laplace approximation involves a two-step procedure. The first step is to perform a numerical optimization of  to find the posterior mode, or maximum a posteriori (MAP) estimate of θ. This vector, given by

to find the posterior mode, or maximum a posteriori (MAP) estimate of θ. This vector, given by

|

provides the mean of the Laplace approximation. Because we can explicitly provide the gradient and Hessian of the log likelihood (see Appendix A) and log prior, this optimization can be carried out efficiently via Newton–Raphson or trust-region methods.

The second step is to compute the second derivative (the Hessian matrix) of the log posterior at the mode, which provides the inverse covariance of the Gaussian. This gives us a local Gaussian approximation of the posterior, centered at the posterior mode:

|

where covariance  is the inverse Hessian of the log posterior,

is the inverse Hessian of the log posterior,  , evaluated at

, evaluated at  .

.

Note that when the log posterior is concave (i.e., when the model does not contain lapses), numerical optimization is guaranteed to find a global maximum of the posterior. Log concavity also strengthens the rationale for using the Laplace approximation, since the true and approximate posterior are both log-concave densities centered on the true mode (Paninski et al., 2010; Pillow, Ahmadian, & Paninski, 2011). When the model incorporates lapses, these guarantees no longer apply globally.

Inference via MCMC sampling

A second approach to inference is to generate samples from the posterior distribution over the parameters via MCMC sampling. Sampling-based methods are typically more computationally intensive than the Laplace approximation but may be warranted when the posterior is not provably log concave (as is the case when lapse rates are nonzero) and therefore not well approximated by a single Gaussian.

The basic idea in MCMC sampling is to set up an easy-to-sample Markov chain that has the posterior as its stationary distribution. Sampling from this chain produces a dependent sequence of posterior samples  which can be used to evaluate posterior expectations via Monte Carlo integrals:

which can be used to evaluate posterior expectations via Monte Carlo integrals:

|

for any function f(θ). The mean of the posterior is obtained from setting f(θ) = θ, although for adaptive stimulus selection we will be interested in the full shape of the posterior.

The Metropolis–Hastings algorithm is perhaps the simplest and most widely used MCMC sampling method (Metropolis, Rosenbluth, Rosenbluth, Teller, & Teller, 1953). It generates samples via a proposal distribution centered on the current sample (see Appendix B). The choice of proposal distribution is critical to the efficiency of the algorithm, since this governs the rate of mixing, or the number of Markov-chain samples required to obtain independent samples from the posterior distribution (Rosenthal, 2011). Faster mixing implies that fewer samples M are required to obtain an accurate approximation to the posterior.

Here we propose a semiadaptive Metropolis–Hastings algorithm, developed specifically for the current context of sequential learning. Our approach is based on an established observation that the optimal width of the proposal distribution should be proportional to the typical length scale of the distribution being sampled (Gelman, Roberts, & Gilks, 1996; Roberts, Gelman, & Gilks, 1997). Our algorithm is motivated by the adaptive Metropolis algorithm (Haario, Saksman, & Tamminen, 2001), where the proposal distribution is updated at each proposal within a single chain; here we adapt the proposal not within chains but rather after each trial. Specifically, we set the covariance of a Gaussian proposal distribution to be proportional to the covariance of the samples from the previous trial, using the scaling factor of Haario et al. (2001). See Appendix B for details. The adaptive algorithm takes advantage of the fact that the posterior cannot change too much between trials, since it changes only by a single-trial likelihood term on each trial.

Adaptive stimulus-selection methods

As data are collected during the experiment, the posterior distribution becomes narrower due to the fact that each trial carries some additional information about the model parameters (see Figure 3). This narrowing of the posterior is directly related to information gain. A stimulus that produces no expected narrowing of the posterior is, by definition, uninformative about the parameters. On the other hand, a stimulus that (on average) produces a large change in the current posterior is an informative stimulus. Selecting informative stimuli will reduce the number of stimuli required to obtain a narrow posterior, which is the essence of adaptive stimulus-selection methods. In this section, we introduce a precise measure of information gain between a stimulus and the model parameters, and propose an algorithm for selecting stimuli to maximize it.

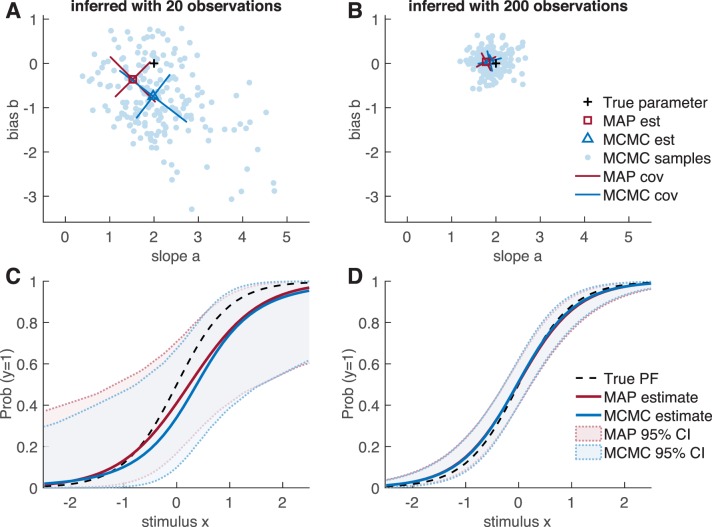

Figure 3.

Inferring the psychometric function. Example of a psychometric problem, with a lapse-free binomial logistic model  . Given a 1-D stimulus, a response was drawn from a “true” model P(y = 1) = f(b + ax) with two parameters, slope a = 2 and bias b = 0. (A–B) On the parameter space, the posterior distributions become sharper (and closer to the true parameter values) as the data-set size N increases. (A) N = 20 (small); (b) N = 200 (large). For the maximum a posteriori estimate, the mode of the distribution is marked with a square and the two standard deviations (“widths”) of its Gaussian approximation with bars. For the Markov-chain Monte Carlo sampling method, all M = 500 samples of the chain are shown with dots, the sample mean with a triangle, and the widths with bars. The widths are the standard deviations along the principal directions of the sampled posterior (eigenvectors of the covariance matrix; not necessary aligned with the a–b axes). (C–D) The accuracy of the estimated psychometric function improves with the number of observations N, using either of the two posterior inference methods (MAP or MCMC). (C) N = 20 (small); (D) N = 200 (large). The two methods are highly consistent in this simple case, especially when N is large enough.

. Given a 1-D stimulus, a response was drawn from a “true” model P(y = 1) = f(b + ax) with two parameters, slope a = 2 and bias b = 0. (A–B) On the parameter space, the posterior distributions become sharper (and closer to the true parameter values) as the data-set size N increases. (A) N = 20 (small); (b) N = 200 (large). For the maximum a posteriori estimate, the mode of the distribution is marked with a square and the two standard deviations (“widths”) of its Gaussian approximation with bars. For the Markov-chain Monte Carlo sampling method, all M = 500 samples of the chain are shown with dots, the sample mean with a triangle, and the widths with bars. The widths are the standard deviations along the principal directions of the sampled posterior (eigenvectors of the covariance matrix; not necessary aligned with the a–b axes). (C–D) The accuracy of the estimated psychometric function improves with the number of observations N, using either of the two posterior inference methods (MAP or MCMC). (C) N = 20 (small); (D) N = 200 (large). The two methods are highly consistent in this simple case, especially when N is large enough.

Infomax criterion for stimulus selection

At each trial, we present a stimulus x and observe the outcome y. After t trials, the expected gain in information from a stimulus x is equal to the mutual information between y and the model parameters θ, given the data  observed so far in the experiment. We denote this conditional mutual information:

observed so far in the experiment. We denote this conditional mutual information:

|

where  is the joint distribution of θ and y given a stimulus x and dataset

is the joint distribution of θ and y given a stimulus x and dataset  ;

;  is the current posterior distribution over the parameters from previous trials; and

is the current posterior distribution over the parameters from previous trials; and  is known as the posterior-predictive distribution of y given x.

is known as the posterior-predictive distribution of y given x.

It is useful to note that the mutual information can equivalently be written in two other ways involving Shannon entropy. The first is given by

|

where the first term is the entropy of the posterior at time t,

|

and the second is the conditional entropy of θ given y,

|

which is the entropy of the updated posterior after having observed x and y, averaged over draws of y from the posterior-predictive distribution. Written this way, the mutual information can be seen as the expected reduction in posterior entropy from a new stimulus–response pair. Moreover, the first term  is independent of the stimulus and response on the current trial, so infomax stimulus selection is equivalent to picking the stimulus that minimizes the expected posterior entropy

is independent of the stimulus and response on the current trial, so infomax stimulus selection is equivalent to picking the stimulus that minimizes the expected posterior entropy  .

.

A second equivalent expression for the mutual information, which will prove useful for our sampling-based method, is

|

which is the difference between the marginal entropy of the response distribution conditioned on x,

|

and the conditional entropy of the response y given θ, conditioned on the stimulus,

|

This formulation shows the mutual information to be equal to the difference between the entropy of the marginal distribution of y conditioned on x (with θ integrated out) and the average entropy of y given x and θ, averaged over the posterior distribution of θ. The dual expansion of the mutual information has also been used by Kujala and Lukka (2006).

In a sequential setting where t is the latest trial and t + 1 is the upcoming one, the optimal stimulus is the information-maximizing (“infomax”) solution:

|

Figure 4 shows an example of a simulated experiment where the stimulus was selected adaptively following the infomax criterion. Note that our algorithm takes a “greedy” approach of optimizing one trial at a time. For work on optimizing beyond the next trial, see for example Kim, Pitt, Lu, and Myung (2017).

Figure 4.

Example of infomax adaptive stimulus selection, simulated with a three-alternative lapse-free model on 1-D stimuli. The figure shows how, given a small set of data (the stimulus–response pairs shown in the top row), the psychometric functions are estimated based on the accumulated data (middle row) and the next stimulus is chosen to maximize the expected information gain (bottom row). Each column shows the instance after the N observations in a single adaptive stimulus-selection sequence, for N = 10, 11, 15, and 20, respectively. In the middle row, the estimated psychometric functions (solid lines) quickly approach the true functions (dashed lines) through the adaptive and optimal selection of stimuli. This example was generated using the Laplace approximation–based algorithm, with an independent Gaussian prior over the weights with mean zero and standard deviation σ = 10.

Selecting the optimal stimulus thus requires maximizing the mutual information over the set of all possible stimuli {x}. Since each evaluation of the mutual information involves a high-dimensional integral over parameter space and response space, this is a highly computationally demanding task. In the next sections, we present two algorithms for efficient infomax stimulus selection based on each of the two approximate inference methods described previously.

Infomax with Laplace approximation

Calculation of the mutual information is greatly simplified by a Gaussian approximation of the posterior. The entropy of a Gaussian distribution with covariance C is equal to  up to a constant factor. If we expand the mutual information as in Equation 12 and recall that we need only minimize the expected posterior entropy after observing the response, the optimal stimulus for time step t + 1 is given by

up to a constant factor. If we expand the mutual information as in Equation 12 and recall that we need only minimize the expected posterior entropy after observing the response, the optimal stimulus for time step t + 1 is given by

|

where  is the covariance of the updated (Gaussian) posterior after observing stimulus–response pair (x, y). To evaluate the updated covariance

is the covariance of the updated (Gaussian) posterior after observing stimulus–response pair (x, y). To evaluate the updated covariance  under the Laplace approximation, we would need to numerically optimize the posterior for θ for each possible response y for any candidate stimulus x, which would be computationally infeasible. We therefore use a fast approximate method for obtaining a closed-form update for

under the Laplace approximation, we would need to numerically optimize the posterior for θ for each possible response y for any candidate stimulus x, which would be computationally infeasible. We therefore use a fast approximate method for obtaining a closed-form update for  from the current posterior covariance Ct, following an approach developed by Lewi et al. (2009). See Appendix C for details. Note that this approximate sequential update is only used for calculating the expected utility of each candidate stimulus by approximating the posterior distribution at the next trial. For obtaining the MAP estimate of the current model parameter θt, numerical optimization needs to be performed using the full accumulated data

from the current posterior covariance Ct, following an approach developed by Lewi et al. (2009). See Appendix C for details. Note that this approximate sequential update is only used for calculating the expected utility of each candidate stimulus by approximating the posterior distribution at the next trial. For obtaining the MAP estimate of the current model parameter θt, numerical optimization needs to be performed using the full accumulated data  each time.

each time.

Once we have  for each given stimulus–observation pair, we numerically sum this over a set of discrete counts y that are likely under the posterior-predictive distribution. This is done in two steps, by separating the integral in Equation 19 as

for each given stimulus–observation pair, we numerically sum this over a set of discrete counts y that are likely under the posterior-predictive distribution. This is done in two steps, by separating the integral in Equation 19 as

|

Note that the outer integral is over the current posterior  , which is to be distinguished from the future posterior

, which is to be distinguished from the future posterior  , whose entropy we are trying to minimize. Whereas the inner integral is simply a weighted sum over the set of outcomes y, the outer integral over the parameter θ is in general challenging, especially when the parameter space is high dimensional. In the case of the standard MNL model that does not include lapse, we can exploit the linear structure of model to reduce this to a lower dimensional integral over the space of the linear predictor, which we evaluate numerically using Gauss–Hermite quadrature (Heiss & Winschel, 2008). (This integral is 1-D for classic logistic regression and has k − 1 dimensions for MNL regression with k classes; see Appendix C for details.) When the model incorporates lapses, the full parameter vector

, whose entropy we are trying to minimize. Whereas the inner integral is simply a weighted sum over the set of outcomes y, the outer integral over the parameter θ is in general challenging, especially when the parameter space is high dimensional. In the case of the standard MNL model that does not include lapse, we can exploit the linear structure of model to reduce this to a lower dimensional integral over the space of the linear predictor, which we evaluate numerically using Gauss–Hermite quadrature (Heiss & Winschel, 2008). (This integral is 1-D for classic logistic regression and has k − 1 dimensions for MNL regression with k classes; see Appendix C for details.) When the model incorporates lapses, the full parameter vector  includes the lapse parameters in addition to the weights w. In this case, our method with Laplace approximation may suffer from reduced accuracy due to the fact that the posterior may be less closely approximated by a Gaussian.

includes the lapse parameters in addition to the weights w. In this case, our method with Laplace approximation may suffer from reduced accuracy due to the fact that the posterior may be less closely approximated by a Gaussian.

In order to exploit the convenient structure of the reduced integral over the weight space, we choose to maximize the partial information  between the observation and the psychophysical weights instead of the full information

between the observation and the psychophysical weights instead of the full information  . This is a reasonable approximation in many cases where the stimulus-dependent behavior is the primary focus of the psychometric experiment (for a similar approach, see also Prins, 2013). However, we note that this is the only piece in this work where we treat the weights separately from the lapse parameters; posterior inference is still performed for the full parameter θ. Thus for Laplace-based infomax exclusively, the partial covariance

. This is a reasonable approximation in many cases where the stimulus-dependent behavior is the primary focus of the psychometric experiment (for a similar approach, see also Prins, 2013). However, we note that this is the only piece in this work where we treat the weights separately from the lapse parameters; posterior inference is still performed for the full parameter θ. Thus for Laplace-based infomax exclusively, the partial covariance  is used in place of the full covariance

is used in place of the full covariance  , where

, where  is the posterior distribution over the full parameter space. Because the positive semidefiniteness of the partial covariance is still not guaranteed, it needs to be approximated to the nearest symmetric positive semidefinite matrix when necessary (Higham, 1988). We can show, however, that the partial covariance is asymptotically positive semidefinite in the small-lapse limit (Appendix A).

is the posterior distribution over the full parameter space. Because the positive semidefiniteness of the partial covariance is still not guaranteed, it needs to be approximated to the nearest symmetric positive semidefinite matrix when necessary (Higham, 1988). We can show, however, that the partial covariance is asymptotically positive semidefinite in the small-lapse limit (Appendix A).

Infomax with MCMC

Sampling-based inference provides an attractive alternative to the Laplace method when the model includes nonzero lapse rates, where the posterior may be less well approximated by a Gaussian. To compute mutual information from samples, it is more convenient to use the expansion given in Equation 15, so that it is expressed as the expected uncertainty reduction in entropy of the response y instead of a reduction in the posterior entropy. This will make it straightforward to approximate integrals needed for mutual information by Monte Carlo integrals involving sums over samples. Also note that we are back in the full parameter space; we no longer treat the lapse parameters separately, as we did for the Laplace-based infomax.

Given a set of posterior samples  from the posterior distribution

from the posterior distribution  at time t, we can evaluate the mutual information using sums over “potential” terms that we denote by

at time t, we can evaluate the mutual information using sums over “potential” terms that we denote by

|

This allows us to evaluate the conditional response entropy as

|

and the marginal response entropy as

|

where we have evaluated the posterior-predictive distribution as

|

Putting together these terms, the mutual information can be evaluated as

|

which is straightforward to evaluate for a set of candidate stimuli {x}. The computational cost of this approach is therefore linear in the number of samples, and the primary concern is the cost of obtaining a representative sample from the posterior.

Results

We consider two approaches for testing the performance of our proposed stimulus-selection algorithms: one using simulated data, and a second using an off-line analysis of data from real psychophysical experiments.

Simulated experiments

We first tested the performance of our algorithms using simulated data from a fixed psychophysical-observer model. In these simulations, a stimulus x was selected on each trial and the observer's response y was sampled from a “true” psychometric function,  .

.

We considered psychophysical models defined on a continuous 2-D stimulus space with four discrete response alternatives for every trial, corresponding to the problem of estimating the direction of a 2-D stimulus moving along one of the four cardinal directions (up, down, left, right). We computed expected information gain over a set of discrete stimulus values corresponding to a 21 × 21 square grid (Figure 5A). The stimulus plane is colored in Figure 5A to indicate the most likely response (one of the four alternatives) in each stimulus region. Lapse probabilities λci were set to either zero (the lapse-free case) or a constant value of 0.05, resulting in a total lapse probability of λ = 0.2 across the four choices (Figure 5B). We compared performance of our adaptive algorithms with a method that selected a stimulus uniformly at random from the grid on each trial. We observed that the adaptive methods tended to sample more stimuli near the boundaries between colored regions on the stimulus space (Figure 5C), which led to more efficient estimates of the PF compared to the uniform stimulus-selection approach (Figure 5D). We also confirmed that the posterior entropy of the inferred parameters decreased more rapidly with our adaptive stimulus-sampling algorithms in all cases (Figure 5E and 5F). This was expected because our algorithms explicitly attempt to minimize the posterior entropy by maximizing the mutual information.

Figure 5.

The simulated experiment. (A) At each trial, a stimulus was selected from a 2-D stimulus plane with a 21 × 21 grid. The two lines, running along x1 and x2 respectively, indicate the cross-sections used in (C–D). Colors indicate the most likely response in the respective stimulus regime, according to the true psychometric function shown in (B), with a consistent color code. (B) Given each stimulus, a simulated response was drawn from a true model with four alternatives. Shown here is the model with lapse, characterized by a nondeterministic choice (i.e., the choice probability does not approach 0 or 1) even at an easy stimulus, far from the choice boundaries. (C–D) Examples of Laplace approximation–based inference results after 50 trials, where stimuli were selected either (C) using our adaptive infomax method or (D) uniformly, as shown at left. In both cases, the true model was lapse free, and the algorithm assumed that lapse was fixed at zero. The two sets of curves show the cross-sections of the true (dotted) and estimated (solid) psychometric functions, along the two lines marked in (A), after sampling these stimuli. (E–F) Traces of posterior entropy from simulated experiments, averaged over 100 runs each. The true model for simulation was either (E) lapse free or (F) with a finite lapse rate of λ = 0.2, with a uniform lapse scenario ci = 1/4 for each outcome i = 1, 2, 3, 4. In algorithms considering lapse (panels on the right), the shift in posterior entropy is due to the use of partial covariance (with respect to weight) in the case of Laplace approximation. The algorithm either used the classical multinomial logistic model that assumes zero lapse (left column) or our extended model that considers lapse (right column). Average performances of adaptive and uniform stimulus-selection algorithms are plotted in solid and dashed lines, respectively; algorithms based on Laplace approximation and Markov-chain Monte Carlo sampling are plotted in purple and cyan. The lighter lines show standard-error intervals over 100 runs, which are very narrow. All sampling-based algorithms used the semiadaptive Markov-chain Monte Carlo method with chain length M = 1,000.

For each true model, we compared the performances of four different adaptive methods (Figure 6A and 6B), defined by performing inference with MAP or MCMC and assuming the lapse rate to be fixed at zero or including nonzero lapse parameters. Each of these inference methods was also applied to data selected according to a uniform stimulus-selection algorithm. We quantified performance using the mean squared error between the true response probabilities  and the estimated probabilities

and the estimated probabilities  over the 21 × 21 grid of stimulus locations {xi} and the four possible responses {j}. For MAP-based inference, estimated probabilities were given by

over the 21 × 21 grid of stimulus locations {xi} and the four possible responses {j}. For MAP-based inference, estimated probabilities were given by  . For MCMC-based inference, probabilities were given by the predictive distribution, evaluated using an average over samples:

. For MCMC-based inference, probabilities were given by the predictive distribution, evaluated using an average over samples:  , where

, where  represent samples from the posterior.

represent samples from the posterior.

Figure 6.

The simulated experiment, continued; results from the same set of simulated experiments as in Figure 5. (A–B) Traces of the mean squared error, where the true model was either (A) lapse free or (B) with a total lapse rate of λ = 0.2, uniformly distributed to each outcome. Standard-error intervals are plotted in lighter lines as in Figure 5E and 5F. (C) Effect of lapse, tested by adding varying total lapse rates λ. Shown are the mean squared error after N = 100 trials of each stimulus-selection algorithm, equivalent to the endpoints in (B). Error bars indicate the standard error over 100 runs, equivalent to the lighter line intervals in Figure 5E and 5F.

When the true model was lapse free (Figure 6A), lapse-free and lapse-aware inference methods performed similarly, indicating that there was minimal cost to incorporating parameters governing lapse when lapses were absent. Under all inference methods, infomax stimulus selection outperformed uniform stimulus selection by a substantial margin. For example, infomax algorithms achieved in 50–60 trials the error levels that their uniform stimulus-selection counterparts required 100 trials to achieve.

By contrast, when the true model had a nonzero lapse rate (Figure 6B), adaptive stimulus-selection algorithms based on the lapse-free model failed to select optimal stimuli, performing even worse than uniform stimulus-selection algorithms. This emphasizes the impact of model mismatch in adaptive methods, and the importance of a realistic psychometric model. When lapse-aware models were used for inference, on the other hand, both Laplace-based and MCMC-based adaptive stimulus-selection algorithms achieved a significant speedup compared to uniform stimulus selection, while the MCMC-based adaptive algorithm performed better. This shows that the MCMC-based infomax stimulus-selection method can provide an efficient and robust platform for adaptive experiments with realistic models. When the true behavior had lapses, the MCMC-based adaptive stimulus-selection algorithm with the lapse-aware model automatically included easy trials, which provide maximal information about lapse probabilities. These easy trials are typically in the periphery of the stimulus space (strong-stimulus regimes, referred to as “asymptotic performance intensity” by Prins, 2012).

However, the effect of model mismatch due to nonzero lapse only becomes problematic at a high enough lapse rate; in the simulation shown in Figures 5F and 6B, we used a high lapse rate of λ = 0.2, which is more typical in the case of less sophisticated animals such as rodents (see, e.g., Scott, Constantinople, Erlich, Tank, & Brody, 2015). With lapse rates more typical in well-designed human psychophysics tasks ( ; see, e.g., Wichmann & Hill, 2001a, 2001b), infomax algorithms still tend to perform better than uniform sampling algorithms (Figure 6C).

; see, e.g., Wichmann & Hill, 2001a, 2001b), infomax algorithms still tend to perform better than uniform sampling algorithms (Figure 6C).

Finally, we measured the computation time per trial required by our adaptive stimulus-selection algorithms on a personal desktop computer with an Intel i7 processor. With the Laplace-based algorithm, the major computational bottleneck is the parameter-space integration in the infomax calculation, which scales directly with the model complexity. We could easily achieve tens-of-milliseconds trials in the case of the simple two-alternative forced-choice task, and subsecond trials with 2-D stimuli and four-alternative responses, as used in the current set of simulations (Figure 7A and 7B). With the MCMC-based algorithm, the time per trial in the sampling-based method is limited by the number of samples M in each MCMC chain rather than by the model complexity. Using the standard implementation for the Metropolis–Hastings sampler in Matlab, a time per trial of approximately 0.1 s was achieved with chains shorter than  (Figure 7C and 7D, top panels). This length of M ≈ 200 was good enough to represent the posterior distributions for our simulated examples (Figure 7C and 7D, bottom panels), although we note that longer chains are required to sample a more complex posterior distribution, and this particular length M should not be taken as the benchmark in general.

(Figure 7C and 7D, top panels). This length of M ≈ 200 was good enough to represent the posterior distributions for our simulated examples (Figure 7C and 7D, bottom panels), although we note that longer chains are required to sample a more complex posterior distribution, and this particular length M should not be taken as the benchmark in general.

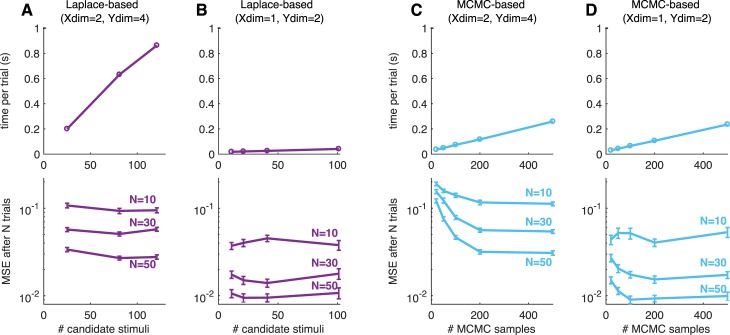

Figure 7.

Computation time and accuracy. (A–B) The computation times for the Laplace-based algorithms grow linearly with the number of candidate stimulus points, as shown on the top panels, because one needs to perform a numerical integration to compute the expected utility of each stimulus. In general, there is a trade-off between cost (computation time) and accuracy (inversely related to the estimation error). The bottom panels show the mean squared error of the estimated psychometric function, calculated after completing a sequence of N trials, where the 10 initial trials were selected at regular intervals and the following trials were selected under our adaptive algorithm. Error estimates were averaged over 100 independent sequences. Error bars indicate the standard errors. The true model used was the same as in either (A) Figure 5, with two-dimensional stimuli and four-alternative responses, described by nine parameters; or (B) Figure 3, with one-dimensional stimuli and binary responses, with only two parameters (slope and threshold). The different rates at which the computation time increases under the two models reflect the different complexities of numerical quadrature involved. We used lapse-free algorithms in all cases in this example. (C–D) We similarly tested the algorithms based on Markov-chain Monte Carlo sampling using the two models as in (A–B). In this case, the computation times (top panels) grow linearly with the number of samples in each chain and are not sensitive to the dimensionality of the parameter space. On the other hand, the estimation-error plots (bottom panels) suggest that a high-dimensional model requires more samples for accurate inference.

Optimal reordering of real data set

A second approach for testing the performance of our methods is to perform an off-line analysis of data from real psychophysical experiments. Here we take an existing data set and use our methods to reorder the trials so that the most informative stimuli are selected first (for a similar approach, see Lewi, Schneider, Woolley, & Paninski, 2011). To obtain a reordering, we iteratively apply our algorithm to the stimuli shown during the experiment. On each trial, we use our adaptive algorithm to select the optimal stimulus from the set of stimuli {xi} not yet incorporated into the model. This selection takes place without access to the actual responses {yi}. We update the posterior using the stimulus xi and the response yi it actually elicited during the experiment, then proceed to the next trial. We can then ask whether adding the data according to the proposed reordering would have led to faster narrowing of the posterior distribution than other orderings.

To perform this analysis, we used a data set from macaque monkeys performing a four-alternative motion-discrimination task (Churchland, Kiani, & Shadlen, 2008). Monkeys were trained to observe a motion stimulus with dots moving in one of the four cardinal directions and to report this direction of motion with an eye movement. The difficulty of the task was controlled by varying the fraction of coherently moving dots on each trial, with the remaining dots appearing randomly (Figure 8A). Each moving-dot stimulus in this experiment could be represented as a 2-D vector, where the direction of the vector is the direction of the mean movement of the dots, and the amplitude of the vector is given by the fraction of coherently moving dots (a number between 0 and 1). Each stimulus presented in the experiment was aligned with one of the two cardinal axes of the stimulus plane (Figure 8B). The PF for this data set consists of a set of four 2-D curves, where each curve specifies the probability of choosing a particular direction as a function of location in the 2-D stimulus plane (Figure 8C).

Figure 8.

Optimal reordering of a real monkey data set. (A) The psychometric task consisted of a 2-D stimulus presented as moving dots, characterized by a coherence and a mean direction of movement, and a four-alternative response. The four choices are color-coded consistently in (A–C). (B) The axes-only stimulus space of the original data set, with 15 fixed stimuli along each axis. Colors indicate the most likely response in the respective stimulus regime according to the best estimate of the psychometric function. (C) The best estimate of the psychometric function of monkeys in this task, inferred from all observations in the data set. (D) Stimulus selection in the first N = 100 trials during the reordering experiment, under the inference method that ignores lapse. Shown are histograms of x2 along one of the axes, x1 = 0, averaged over 100 independent runs in each case. (E–F) Error traces under different algorithms, averaged over 100 runs. Algorithms based on both Laplace approximation (purple) and Markov-chain Monte Carlo sampling (cyan; M = 1,000) achieve significant speedups over uniform sampling. Because the monkeys were almost lapse free in this task, inference methods that (E) ignore and (F) consider lapse performed similarly. Standard-error intervals over 100 runs are shown in lighter lines, but are very narrow.

This monkey data set contained more than 10,000 total observations at 29 distinct stimulus conditions, accumulating more than 300 observations per stimulus. This multiplicity of observations per stimulus ensured that the posterior distribution given the full data set was narrow enough that it could be considered to provide a ground-truth PF against which the inferences based on the reordering experiment could be compared.

The first 100 stimuli selected by the infomax algorithms had noticeably different statistics from the full data set or its uniform subsampling (the first N = 100 trials under uniform sampling). On the other hand, the sets of stimuli selected by both MAP-based and MCMC-based infomax algorithms were similar. Figure 8D shows the histogram of stimulus components along one of the axes,  , from the first N = 100 trials, averaged over 100 independent runs under each stimulus-selection algorithm using the lapse-free model.

, from the first N = 100 trials, averaged over 100 independent runs under each stimulus-selection algorithm using the lapse-free model.

Because the true PF was unknown, we compared the performance of each algorithm to an estimate of the PF from the entire data set. With the MAP algorithm, the full-data-set PF was given by  , evaluated at the MAP estimate of the log posterior,

, evaluated at the MAP estimate of the log posterior,  , given the full dataset

, given the full dataset  . For the MCMC algorithm, the full-data-set PF was computed by

. For the MCMC algorithm, the full-data-set PF was computed by  , where the MCMC chain

, where the MCMC chain  sampled the log posterior given the full data set. The reordering test on the monkey data set showed that our adaptive stimulus-sampling algorithms were able to infer the PF to a given accuracy in a smaller number of observations, compared to a uniform sampling algorithm (Figure 8E and 8F). In other words, data collection could have been faster with an optimal reordering of the experimental procedure.

sampled the log posterior given the full data set. The reordering test on the monkey data set showed that our adaptive stimulus-sampling algorithms were able to infer the PF to a given accuracy in a smaller number of observations, compared to a uniform sampling algorithm (Figure 8E and 8F). In other words, data collection could have been faster with an optimal reordering of the experimental procedure.

Exploiting the full stimulus space

In the experimental data set considered in the previous section, the motion stimuli were restricted to points along the cardinal axes of the 2-D stimulus plane (Figure 8B; Churchland et al., 2008). In some experimental settings, however, the PFs of interest may lack identifiable axes of alignment or may exhibit asymmetries in shape or orientation. Here we show that in such cases, adaptive stimulus-selection methods can benefit from the ability to select points from the full space of possible stimuli.

We performed experiments with a simulated observer governed by the lapse-free PF estimated from the macaque-monkey data set (Figure 8C). This PF was either aligned to the original stimulus axes (Figure 9A and 9B) or rotated counterclockwise by 45° (Figure 9C). We tested the performance of adaptive stimulus selection using the Laplace infomax algorithm, with stimuli restricted to points along the cardinal axes (Figure 9A) or allowed to be among a grid of points in the full 2-D stimulus plane (Figure 9B and 9C).

Figure 9.

Design of multidimensional stimulus space. (A–C) Three different stimulus-space designs were used in a simulated psychometric experiment. Responses were simulated according to fixed lapse-free psychometric functions (PFs), matched to our best estimate of the monkey PF (Figure 8C). Stimuli were selected within the respective stimulus spaces: (A) the cardinal-axes design, as in the original experiment; (B) the full stimulus plane, with the PF aligned to the cardinal axes of the original stimulus space; and (C) the full stimulus plane, with rotated PF. The black dots in (A–C) indicate which stimuli were sampled by the Laplace-based infomax algorithm during the first N = 100 trials of simulation, where the dot size is proportional to the number of trials in which the stimulus was selected (averaged over 20 independent runs, and excluding the 10 fixed initial stimuli). (D) The corresponding error traces, under infomax (solid lines) or uniform (dashed lines) stimulus selection, averaged over 100 runs respectively. Colors indicate the three stimulus-space designs, as shown in (A–C). Standard-error intervals over 100 runs are shown in lighter lines.

The simulated experiment indeed closely resembled the results of our data set reordering test in terms of the statistics of adaptively selected stimuli (compare Figure 9A to the purple histogram in Figure 8D). With the full 2-D stimulus space aligned to the cardinal axes, on the other hand, our adaptive infomax algorithm detected and sampled more stimuli near the boundaries between colored regions in the stimulus plane, which were usually not on the cardinal axes (Figure 9B). Finally, we observed that this automatic exploitation of the stimulus space was not limited by the lack of alignment between the PF and the stimulus axes; our adaptive infomax algorithm was just as effective in detecting and sampling the boundaries between stimulus regions in the case of the unaligned PF (Figure 9C).

The error traces in Figure 9D show that we can infer the PF at a given accuracy in an even smaller number of observations using our adaptive algorithm on the full 2-D stimulus plane (orange curves) compared to the cardinal-axes design (black curves). This also confirms that we can infer the PF accurately and effectively with an unaligned stimulus space (red curves) as well as with an aligned stimulus space. For comparison purposes, all errors were calculated over the same 2-D stimulus grid, even when the stimulus selection was from the cardinal axes. (This had negligible effects on the resulting error values: Compare the black curves in Figure 9D and the purple curves in Figure 8E.)

Discussion

We developed effective Bayesian adaptive stimulus-selection algorithms for inferring psychometric functions, with the objective of maximizing the expected informativeness of each stimulus. The algorithms select an optimal stimulus adaptively in each trial, based on the posterior distribution of model parameters inferred from the accumulating set of past observations.

We emphasize that in psychometric experiments, especially with animals, it is crucial to use models that can account for nonideal yet common behaviors, such as omission (no response; an additional possibility for the outcome) or lapse (resulting in a random, stimulus-independent response). Specifically, we constructed a hierarchical extension of a multinomial logistic model that incorporates both omission and lapse. Although we did not apply these additional features to real data, we performed simulated experiments to investigate their impacts on the accurate inference of PFs. To ensure applicability of the extended model in real-time closed-loop adaptive stimulus-selection algorithms, we also developed efficient methods for inferring the posterior distribution of the model parameters, with approximations specifically suited for sequential experiments.

Advantages of adaptive stimulus selection

We observed two important advantages of using Bayesian adaptive stimulus-selection methods in psychometric experiments. First, our adaptive stimulus-selection algorithms achieved significant speedups in learning time (number of measurements), both on simulated data and in a reordering test of a real experimental data set, with and without lapse in the underlying behavior. Importantly, the success of the algorithm depends heavily on the use of the correct model family; for example, adaptive stimulus selection fails when a classical (lapse-ignorant) model is used to measure behavior with a finite lapse rate. Based on the simulation results, it seems good practice to always use the lapse-aware model unless the behavior under study is known to be completely lapse free, although it should be checked that the addition of the lapse parameters does not make the inference problem intractable, given the constraints of the specific experiments. (One way to check this is using a simulated experiment, where lapse is added to the PF inferred by the lapse-free model, similar to what we did in this article.) The computational cost for incorporating lapses amounts to having k additional parameters to sample, one per each available choice, which is independent of the dimensionality of the stimulus space.

Second, our adaptive stimulus-selection study has implications on the optimization of experimental designs more generally. Contrary to the conventional practice of accumulating repeated observations at a small set of fixed stimuli, we suggest that the (potentially high-dimensional) stimulus space can be exploited more efficiently using our Bayesian adaptive stimulus-selection algorithm. Specifically, the algorithm can automatically detect the structure of the stimulus space (with respect to the PF) as part of the process. We also showed that there are benefits to using the full stimulus space even when the PF is aligned to the cardinal axes of the stimulus space.

Comparison of the two algorithms