Abstract

To explain consciousness as a physical process we must acknowledge the role of energy in the brain. Energetic activity is fundamental to all physical processes and causally drives biological behavior. Recent neuroscientific evidence can be interpreted in a way that suggests consciousness is a product of the organization of energetic activity in the brain. The nature of energy itself, though, remains largely mysterious, and we do not fully understand how it contributes to brain function or consciousness. According to the principle outlined here, energy, along with forces and work, can be described as actualized differences of motion and tension. By observing physical systems, we can infer there is something it is like to undergo actualized difference from the intrinsic perspective of the system. Consciousness occurs because there is something it is like, intrinsically, to undergo a certain organization of actualized differences in the brain.

Keywords: consciousness, metabolism, energy, brain, information theory, feedback

Introduction

“If mental processes are indeed physical processes, then there is something it is like, intrinsically, to undergo certain physical processes. What it is for such a thing to be the case remains a mystery.” (Nagel, 1974)

The philosopher Thomas Nagel summarized one of our greatest intellectual challenges: how to explain mental processes as physical processes. The aim of this paper is to outline a principle according to which consciousness could be explained as a physical process caused by the organization of energy in the brain1.

Energy is fundamentally important in all physical processes (Lotka, 1922; Schrödinger, 1944; Heisenberg, 1958; Boltzmann, 1886). As the biophysicist Harold Morowitz (1979) put it: “the flow of energy through a system acts to organize that system.” Light, chemical reactions, electricity, mechanical work, heat, and life itself can all be described in terms of energetic activity (Chaisson, 2001; Morowitz and Smith, 2007; Smil, 2008) as can metabolic processes in the body and brain (Magistretti, 2008; Perez Velazquez, 2009). It is surprising, therefore, that energy receives relatively little attention in neuroscientific and psychological studies of consciousness. Leading scientific theories of consciousness do not reference it (Crick and Koch, 2003; Edelman et al., 2011; Dehaene, 2014; Oizumi et al., 2014), assign it only a marginal role (Hameroff and Penrose, 2014), or treat it as an information-theoretical quantity (Friston, 2013; Riehl et al., 2017). If it is discussed, it is either as a substrate underpinning higher level emergent dynamics (Deacon, 2013) or as powering neural information processing (Sterling and Laughlin, 2017).

This lack of attention is all the more surprising given that some of the pioneers of neurobiology, psychology, and physiology found a central place for energy in their theories, including Hermann von Helmholtz (in Cahan, 1995), Gustav Fechner (1905), Sigmund Freud (Gay, 1988), William James (James, 1907), and Charles Sherrington (1940)2. There are, however, signs that attention is turning again to energetic or thermodynamic-related theories of consciousness in various branches of science (Deacon, 2013; Collell and Fauquet, 2015; Annila, 2016; Street, 2016; Tozzi et al., 2016; Marchetti, 2018) and in philosophy of mind (Strawson, 2008, 2017).

The present paper builds on this work by proposing that energy, and the related properties of force and work, can be described as actualized differences of motion and tension, and that – in Nagel’s phrase – ‘there is something it is like, intrinsically, to undergo’ actualized differences. Recent neuroscientific evidence suggests that consciousness is a product of the way energetic activity is organized in the brain. Following this evidence, I propose that we experience consciousness because there is something it is like, intrinsically, to undergo a certain organization of actualized differences in the brain.

Several researchers have tackled the problem of consciousness by treating the brain in principle as a neural information processor (e.g., Tononi et al., 2016; Dehaene et al., 2017; Ruffini, 2017). I will argue that the governing principle of the brain at the neural level is not information processing but energy processing. The information-theoretic approach to measuring and modeling brain activity, however, can usefully complement the energetic approach outlined here.

Consciousness and Energy in the Brain

We do not fully understand the biological function of energy in the brain or how it relates to the presence of consciousness in the person3. Although the human brain accounts for only 2% of the body’s mass, it demands a large portion of the body’s total energy budget, some 20% (Laughlin, 2001; Magistretti and Allaman, 2013). Most of this energy is derived from the oxidation of glucose supplied to the cerebral tissue through the blood. Roy and Sherrington were the first to propose a direct correspondence between changes in cerebral blood flow and functional activity (Roy and Sherrington, 1890). Many features of human brain anatomy, such as the number of blood vessels per unit of space, the lengths of neural connections, the width of axons, and even the ratio of brain to stomach size are thought to be determined by the high metabolic demands associated with complex cognitive processing (Allen, 2009).

For many neuroscientists, the main function of energy in the brain is to fuel neural signaling and information processing (Magistretti, 2013); energy supply is seen as a constraint on the design and operation of the brain’s computational architecture (Laughlin, 2001; Hall et al., 2012; Sterling and Laughlin, 2017). It has been calculated, for example, that the rate of energy supply available to the human brain places an upper ‘speed limit’ on neural processing of about 1 kHz (Attwell and Gibb, 2005). And Schölvinck et al. (2008) estimated that conscious perception of sensory stimuli increases energy consumption in primate brains by less than 6% compared to energy consumption in the absence of conscious perception4. They attribute this relatively small change to an energy efficient “design strategy” of the brain in which decreases in neural activity play a functional role in information processing as well as increases. Energy, on these accounts, plays no direct role in higher mental processes, like consciousness.

Robert Shulman and colleagues have argued there is a direct connection between energy in the brain and consciousness (Shulman et al., 2009; Shulman, 2013). By studying the progressive loss of behavioral response to external stimulus from wakefulness to deep anesthesia, they found a corresponding reduction and localization of cerebral metabolism (a marker of energy consumption). Therefore, they argue, high global metabolism is necessary for consciousness. However, they are also clear that high global metabolic rates are not sufficient as patients with locked-in-syndrome and those who suffer from some forms of epileptic seizure can register high levels of global brain metabolism without exhibiting the observable behavior that we expect from a conscious person (Shulman, 2013; Bazzigaluppi et al., 2017). Shulman’s thesis has been challenged on several grounds (Seth, 2014). For example, it has been pointed out that behavioral responsiveness may be inadequate as a measure of sentience given that vestiges of consciousness have been detected in people diagnosed as being in a vegetative state with a low cerebral metabolism (Owen et al., 2006). Moreover, some patients who recover from a vegetative state to regain consciousness do so despite having substantially reduced cerebral metabolism compared with normal controls (Laureys et al., 1999; Chatelle et al., 2011).

In recent years there has been a growing interest in intrinsic brain activity (Clarke and Sokoloff, 1999; Raichle, 2011). This background or spontaneous activity occurs in the resting awake state in the absence of external stimulation or directed attention, and its energy demands can greatly exceed those of localized activation due to task performance or attention. The discovery of this so-called ‘dark energy’ in the brain (Raichle, 2010) was greeted with some surprise in the neuroscience community and remains controversial (Morcom and Fletcher, 2007). Work on intrinsic activity led to the identification of a ‘default mode network’ in the brain, an extended set of interconnected regions that uses high levels of energy when a person is in a non-attentive state. Energy use drops significantly in this network when a more cognitively demanding task, such as paying attention to a stimulus, is performed (Shulman et al., 1997; Raichle et al., 2001). Vanhaudenhuyse et al. (2009) reported that connectivity within the default mode network in patients with severe brain-damage deteriorates in proportion to the degree of conscious impairment, suggesting it plays an important role in sustaining consciousness.

Meanwhile, it is somewhat surprising to find that energy use during non-rapid eye movement sleep remains at ∼85% of that in the waking state, while during rapid eye movement sleep it can be as high as in the waking state (Dinuzzo and Nedergaard, 2017). At the same time, consciousness can be minimally sustained with energy use at only 42% of the level that occurs in healthy conscious individuals, suggesting that much cerebral metabolic activity in normal waking states does not directly contribute to consciousness (Stender et al., 2016). Many anesthetic agents are thought to obliterate consciousness because they reduce the global rate of cerebral metabolism (Hudetz, 2012). Administering ketamine, on the other hand, increases brain metabolism yet can still lead to loss of responsiveness (Pai and Heining, 2007). Overall, it seems we find no clear correlation between the total amount of energy used by the brain, or the location where the energy is used, and the level of consciousness detectable in the person.

Consciousness and the Organization of Energetic Processing in the Brain

An alternative, or perhaps complementary, way to think about this issue is in terms of how the energetic activity in the brain is organized rather than its global level or localization. Indeed, this has implicitly been the focus of recent research that aims to provide quantitative measures of consciousness levels. In one study, researchers used transcranial magnetic stimulation (TMS) to send a magnetic pulse through the brains of healthy controls and patients with various states of impaired consciousness (Casali et al., 2013). By measuring how the pulse perturbed the cortex the researchers were able to determine the relative complexity and extent of the pathways through which the pulse propagated and correlate these to levels of consciousness. The researchers calculated a perturbation-complexity index (PCI) that quantified the levels of consciousness present in each person they studied. This method was further validated as a reliable objective measure of levels of consciousness by Casarotto et al. (2016).

The PCI was calculated using data from electroencephalographic (EEG) measurements of the cerebral perturbation following the TMS. Images from the EEG were filtered into binary data that was then analyzed using a Lempel–Ziv algorithm, a commonly used information-theoretical technique in which complexity is measured as a function of data string compressibility, with more complex data strings being less compressible (Ziv and Lempel, 1977; Aboy et al., 2006). Other researchers have developed similar information-theoretical methods for quantifying the complexity of brain activity and levels of consciousness. King et al. (2013) analyzed data from 181 EEG recordings of patients who were diagnosed with varying states of impaired consciousness and applied a measure of weighted symbolic mutual information (wSMI) that sharply distinguished between patients in vegetative state, minimally conscious state, and conscious state.
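To make concrete the idea that complexity can be measured as a function of compressibility, the following is a minimal sketch in Python. It assumes a simple LZ78-style dictionary parse that counts the distinct phrases needed to cover a binary string; it is not the procedure used by Casali et al. (2013), and the function name `lz_phrase_count` and the two test strings are hypothetical choices made for illustration only.

```python
import random


def lz_phrase_count(bits: str) -> int:
    """Count the phrases in an LZ78-style parse of a binary string.

    The string is scanned left to right and cut into the shortest
    phrases that have not been seen before. Fewer phrases means the
    string is more repetitive, and so more compressible.
    """
    seen = set()
    phrase = ""
    count = 0
    for b in bits:
        phrase += b
        if phrase not in seen:
            seen.add(phrase)
            count += 1
            phrase = ""
    if phrase:
        # A trailing fragment that repeats an earlier phrase still
        # has to be encoded, so it is counted as one more phrase.
        count += 1
    return count


# Illustrative inputs (assumptions, not experimental data):
# a strictly periodic string and a pseudo-random string of equal length.
regular = "01" * 500
rng = random.Random(0)
irregular = "".join(rng.choice("01") for _ in range(1000))

print("periodic string :", lz_phrase_count(regular))
print("irregular string:", lz_phrase_count(irregular))
```

Under these assumptions the periodic string parses into fewer phrases than the irregular string of the same length, which is the sense in which a more complex data string is less compressible.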

Although information theoretic tools were being used to analyze and interpret the data in these studies, we should note that what was actually being detected by the experimental procedures was not information per se but the organization of energetic activity or processing in the brain. Energetic processing – the processes by which the brain regulates the flow of energy through its structures – is routinely detected at varying degrees of spatial and temporal resolution, either directly or indirectly, by neuroimaging techniques such as positron emission tomography (PET), functional magnetic resonance imaging (fMRI), and EEG (Niedermeyer and Lopes da Silva, 1987; Bailey et al., 2005; Shulman, 2013). Referring again to the study by Casali et al. (2013), the perturbations from which the PCI was calculated were generated by a pulse of magnetic energy from the TMS and were imaged with EEG that measures electrical voltage differences, that is, fluctuations in energetic potentials between clusters of neurons in the cortex (Niedermeyer and Lopes da Silva, 1987; Hu et al., 2009; Koponen et al., 2015). The PCI and wSMI can therefore be interpreted as measures of the complexity or organization of energetic processing in the brain during the experimental procedures.

Subsequent research has directly investigated the connection between brain metabolism (how the brain regulates energy conversion), brain organization, and levels of consciousness by combining EEG measures with PET, a more specific measure of cerebral metabolism. Chennu et al. (2017) collected data from 104 patients in varying states of conscious impairment using both techniques. By analyzing this data, they determined a metric that discriminated levels of consciousness to a high degree of accuracy. This study built on previous work by Demertzi et al. (2015) that used fMRI to correlate a measure of intrinsic functional connectivity in the brain with levels of consciousness. The PCI method has been further validated by a study combining EEG and 18F-fluorodeoxyglucose (FDG)-PET (Bodart et al., 2017), so reinforcing the link between levels of consciousness and the organization of metabolic activity in the brain.

Current brain imaging methods do not strictly speaking detect information processing5. They do, however, detect changes associated with increases in energy consumption (via fMRI and PET) and fluctuations in electrical potential energy (via EEG), both of which reliably correlate with changes in mental function and behavior. On the basis of what we can observe, the brain operates according to the principle of energetic processing. The evidence discussed above suggests levels of consciousness are determined by the organization of energy processing in the brain rather than by its global level or localization; wakeful conscious states are associated with more complex organization. To understand why this might be we need to consider the concept of energy in more depth.

Energy

The concept of energy that we are familiar with today emerged only slowly from its beginnings in the late eighteenth century. It developed through the study of thermodynamics in the nineteenth century, and then found its place at the center of theories of relativity, quantum mechanics, and cosmology in the twentieth (Coopersmith, 2010). In colloquial usage energy refers to ideas of vigor, vitality, power, activity, and zest. In scientific usage, however, energy is defined as the ability of a system to do work6. Work is defined as the transfer of energy involved in moving an object over a distance by an external force, at least part of which is applied in the direction of the displacement (Duncan, 2002). Scientists and engineers often refer to energy as an abstract property: “Energy is a mathematical abstraction that has no existence apart from its functional relationship to other variables” (Abbott and Van Ness, 1972; Rose, 1986). It is a property that can be converted from one form to another, and in an isolated system the total quantity is conserved (Smil, 2008).
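The verbal definition of work given above can be written compactly for the simplest case. The expression below assumes a constant force and a straight-line displacement; the symbols W (work), F (force), d (displacement), and θ (the angle between force and displacement) are introduced here for illustration and do not appear in the sources cited.

```latex
W = \vec{F} \cdot \vec{d} = F \, d \cos\theta
```

Only the component of the force along the displacement, F cos θ, contributes, which corresponds to the clause "at least part of which is applied in the direction of the displacement." When the force is perpendicular to the displacement (θ = 90°), no work is done on this definition.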

Despite the enormous amount of interest in the physics of energy and its central importance in so many branches of science, its nature remains in many ways mysterious (Feynman, 1963; Smil, 2008; Coopersmith, 2010) and it has been the subject of relatively little philosophical interrogation (Coelho, 2009). Treating energy as an abstract accounting quantity is perfectly satisfactory for many scientific purposes, where there is little reason to question its nature. But if energetic activity plays a significant role in consciousness, as the evidence cited above suggests it might, then its nature deserves closer scrutiny.

The concept of energy in the European intellectual tradition can be traced back to Aristotle, who used but never precisely defined the term energeia (ἐνέργεια) to convey the ideas of action, activity, actuality, being at work, and acting purposefully (Sachs in Aristotle, 2002). Scholars have long debated the best way to translate energeia from ancient Greek. The word ‘energy’ itself has been used, as have ‘activity’ and ‘actuality,’ but ‘being-at-work’ is currently favored, partly due to energeia’s roots in ergon, the ancient Greek for work (Aristotle, 1818; Ellrod, 1982; Sachs in Aristotle, 2002). Modern scholars have tended to quarantine the ancient concept of energeia from contemporary ideas about energy. But Aristotle’s term may still have value when thinking about energy’s nature. This is especially so when we take into account the ideas of Aristotle’s intellectual forebear Heraclitus, whose cosmological view was informed by three main principles: (i) that activity in nature is driven by ‘fire’ – which has been interpreted as synonymous with energy (Heisenberg, 1958), (ii) is subject to continual flux or motion, and (iii) is structured by antagonism or tension (Kahn, 1989; Sachs in Aristotle, 2002).

We now understand there to be two main forms of energy: kinetic and potential. Kinetic energy is possessed by objects due to their motion, while potential energy is possessed by objects due to their relative position or configuration. All other forms of energy, such as thermal, electromagnetic, solar, chemical, gravitational, atomic, and so on are in themselves forms of either kinetic or potential energy (Duncan, 2002; Smil, 2008). Much can be said about kinetic and potential energy, including the fact that they are causally efficacious, that is, they cause real change and activity in the material world7. But I want to draw attention here to the fact that they are both manifestations of difference. Kinetic energy is difference as motion or change; potential energy is difference as tension or antagonism. Neither kinetic nor potential energy inhere absolutely in objects but are relational properties; motion or change occurs relative to a frame of reference, and tension or antagonism occurs between one object, or force, and another. The concept of difference then is of utmost importance when considering the nature of energy and the related properties of force and work8.

Actualized Difference

If energy is the ability to do work then the displacement of a body undergoing work is due to force, defined as the “agency that tends to change the momentum of a massive body” (Rennie, 2015) or less formally as a “push or a pull.” Forces act and react antagonistically in equally opposing pairs and are therefore, like energy, manifestations of difference. The discipline of physics finds it convenient to treat energy, forces and work as distinct quantities to be balanced in abstract mathematical equations. But in nature they are integral and actualized, acting collectively in time and space with causal efficacy.

By observing nature, we can infer there is ‘something it is like’ to be a physical system undergoing antagonistic forceful interactions, and what it is like will vary as the interactions vary9. There is something it is like, for example, to be a piece of rope undergoing great tension that is different from what it is like to be the same rope when relaxed, or for a rock to crash to earth having been in freefall. Some effects of these interactions may be observed from an extrinsic perspective; we may hear a creak or a crunch. But the something it is like to undergo the interactions themselves is an intrinsic property of the observed system to which the extrinsic observer has no access. It is for this reason that its presence and nature can only be inferred10.

This is not to claim that forces acting at the subatomic scale between particles, or at the macroscopic scale in rope or rock, undergo anything like the experience we undergo as conscious humans11. Something it is like-ness is not in itself sufficient for consciousness. Rather it is to recognize that:

(i) energy, forces, and work are actualized,

(ii) they are expressions of difference, and

(iii) there is something it is like, intrinsically, to undergo actualized difference.

I use the term actualized difference to refer to the active, antagonistic nature of energy, forces and work in a way that encompasses Heraclitean cosmology, Aristotelian energeia, and contemporary scientific descriptions of energy. Mathematical equations can represent actualized differences with abstract differences, in the form of symbols and numbers, but not whatever it is that puts the “fire in the equation” (Hawking, 1988)12. For that we must refer back to nature itself – to the territory rather than the map (Korzybski, 1933).

Energy and Information

For many contemporary scientists, information is a fundamental property of nature. For some it is the most fundamental property of nature (Davies, 2010). Neuroscientists often claim that the brain operates according to the principle of information processing. We read that “the brain is fundamentally an organ that manipulates information” (Sterling and Laughlin, 2017) and that brains are “information processing machines” (Ruffini, 2017). Individual neurons are treated as information processing units, while neural firing patterns are converted into sequences of binary digits (1s and 0s) that encode information (Koch, 2004). Recent prominent theories claim consciousness is identical with (Tononi et al., 2016) or results from (Dehaene et al., 2017) certain kinds of information structures or information processes in brains.

Information is variously and sometimes imprecisely defined in science (Capurro and Hjørland, 2003), its meaning is still strongly contested (Lombardi et al., 2016; Roederer, 2016), and many people regard it as being to some extent subjective, relativistic, or observer-dependent (von Foerster, 2003; Deacon, 2010; Werner, 2011; Logan, 2012; Searle, 2013; de-Wit et al., 2016). The term is often used in science colloquially (meaning ‘what is conveyed by an arrangement of things’) or “intuitively” (Erra et al., 2016). And where one might expect to find a clear definition, such as in a dictionary of physics, biology or chemistry, none appears (Hine, 2015; Rennie, 2015, 2016).

The most widely cited technical definition of information is that given by Shannon (1948) as part of his mathematical theory of communication. For Shannon, information does not refer to meaning or semantics, as it does colloquially. The information is the amount of uncertainty in a message (a sequence of data) measured through probabilistic analysis of its elements. Information theory has developed into an exceptionally powerful mathematical tool that can be used, among many other things, to measure the complexity of physical systems. But a quantity of Shannon information is a measure of what can be known about a system as distinct from the system itself. The information lies with the measurer rather than the measured13.
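As a rough illustration of how a quantity of Shannon information is obtained, the sketch below computes the entropy of a message in bits per symbol. It assumes that symbol probabilities are estimated from their relative frequencies within the message itself, which is a simplification; the function name `shannon_entropy` is a hypothetical choice for this example.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Return the Shannon entropy of a message in bits per symbol.

    Assumption: the probability of each symbol is taken to be its
    relative frequency in the message.
    """
    n = len(message)
    if n == 0:
        return 0.0
    counts = Counter(message)
    # H = sum over symbols of p * log2(1 / p)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


# A message with no variation carries no uncertainty;
# an even mix of two symbols carries one bit per symbol.
print(shannon_entropy("0000"))
print(shannon_entropy("0101"))
```

The calculation depends only on the statistics of the symbols the measurer has recorded, not on what the symbols mean or on the physical process that produced them.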

The other commonly cited definition of information is Gregory Bateson’s “a difference that makes a difference” (Bateson, 1979). Like his fellow cybernetic theorist Wiener (1948), Bateson sharply distinguished information from energy. Difference is not a property of what he calls the “ordinary material universe” governed by energetic activity. It is not subject to the effects of impacts and forces but is an abstract, relational property of the mind that exists outside the realm of physical causation: “Difference, being of the nature of relationship, is not located in time or space.” Information defined according to Bateson as a “non-substantial” abstract difference cannot be used to explain consciousness as a physical process14.

The integrated information theory of consciousness (IIT) proposed by Tononi et al. (2016) provides an alternative, non-Shannonian, definition of information as “a form in cause-effect space.” Cause-effect space, according to their theory, contains a “conceptual structure” – a constellation of related concepts – that is specified by the “physical substrate of consciousness” (PSC), this being the precise complexes of neural activation involved in any experience. Each conscious experience is identical with this “form,” denoted Φmax when maximally integrated. But while IIT is presented as a theory of integrated information, it could equally serve as a theory of how energetic processing is organized since the PSC consists in the causally interrelated patterns of neural firing that are identical with the conscious experience.

Treating brains as neural information processors does not help us to understand consciousness as a physical process because information, according to the commonly accepted definitions, is not a physical property of brains at the neural level; there is no information in a neuron15. It is useful, however, to apply information-theoretical methods to study the organization of physical systems, such as brains. Wiener (1948) stated: “…the amount of information in a system is a measure of its degree of organization…” As exemplified in several studies and theories cited here, we can measure and model the way the organization of energetic processes in the brain contributes to the presence of consciousness in a person16. But the abstract difference between 0 and 1 is not equivalent to the actualized difference between a neuron at rest and firing.

The Brain as a ‘Difference Engine’

The challenge of explaining consciousness as a physical process is made more tractable, I suggest, by recognizing that brains operate on the principle of energetic processing. Neurons, in concert with other material structures such as astrocytes and mitochondria, convert, distribute, and dissipate electro-chemical energy through highly organized pathways in order to drive behaviors critical to the organism’s survival. This makes sense when we consider the fact that organisms inhabit a physical world that is structured through the actions of energy, forces and work. To survive and prosper in this world they must continually work to acquire new supplies of high-grade or free energy to maintain an internal state far from thermodynamic equilibrium (Boltzmann, 1886; Schrödinger, 1944; Schneider and Sagan, 2005). Besides internal regulation, nervous systems enable organisms to perform two major tasks: discriminating between variations in environmental conditions, such as temperature, acidity, salinity, nutrient levels, or presence of predators, and moving toward environmental conditions that are beneficial to survival and away from those that are harmful.

The mechanisms that enable performance of these tasks can be seen at work in organisms with relatively simple nervous systems, such as the C. elegans worm (Sterling and Laughlin, 2017). Chemical gradients in the environment activate chemosensory neurons on the worm’s surface that connect via interneurons to motor neurons that control the action of dorsal and ventral muscles, which, in turn, control the worm’s movement (de Bono and Maricq, 2005). In this way, differences of chemical potential energy in the environment are converted into differences of electro-chemical energy in the sensing apparatus of the organism and then into differences of chemical energy in the muscles, which, by antagonistic action, are converted into the kinetic energy of the organism’s movement. The organism makes discriminations in the environment relevant to its interests so that it can take appropriate actions in response.

We can see the same basic principle at work in biology of far greater complexity. The human visual system, for example, is highly demanding on the brain’s energy budget (Wong-Riley, 2010). But the evolutionary benefit of human vision is the capacity it confers to guide finely controlled bodily actions in light of environmental conditions. This is achieved through an intricate sequence of energy conversions, beginning with the arrival at the retina of electro-magnetic energy from the environment and cascading through numerous energetic exchanges in the neural pathways of the visual system that progressively differentiate features of the environment (Hubel and Livingstone, 1987). This frequently results in the conversion of electro-chemical energy in the motor system and muscles to the kinetic energy of bodily movement (Goodale, 2014). The fact that our complex biology supports so rich a repertoire of sensory discriminations and motor responses may be only a difference of degree rather than of kind with the humble worm.

We might think of sensory cells responding to stimulation from environmental energy by becoming excited or by increasing local neural activation. But vertebrate photoreceptors are, contrary to what one might expect, hyperpolarized by photon absorption. This means they ‘turn off’ when exposed to light and ‘turn on’ in the dark, even though they use more energy in the dark (Wong-Riley, 2010)17. Meanwhile, some of the neurobiological evidence cited in Section “Consciousness and Energy in the Brain” cautions us against assuming that sensory stimulation always results in increased neural activation. Decreases in activation in the brain can occur in response to cognitively demanding tasks, yet can go unnoticed in imaging studies with methodologies designed to detect task-evoked increases in metabolic rate above baseline (Raichle et al., 2001; Schölvinck et al., 2008). And of course not all neural activation is excitatory; neural inhibition is vitally important in brain function, as elsewhere in the nervous system, and this also entails an energetic cost (Buzsáki et al., 2007). There is evidence that an optimal balance between neural excitation and inhibition (E-I balance) in the cerebral cortex is critical for the brain to function well (Zhou and Yu, 2018).

In light of these mechanisms, the energy-hungry brain might be understood as a kind of ‘difference engine’ that works by actuating complex patterns of motion (action potential propagation) and tension (antagonistic pushes and pulls between forces) at various spatiotemporal scales. Firing rates and electrical potentials vary within neurons, between neurons, between networks of neurons, and between brain regions, so maximizing the differential states the brain undergoes. A decrease in activation, or a reduction in firing rate, can create a differential state just as much as an increase. And, as is indicated by the work of Schölvinck et al. (2008), deactivation may be an energy efficient way for the brain to increase its repertoire of differential states. Maintaining a global E-I balance across spatiotemporal scales, meanwhile, is thought to promote ‘efficient coding’ in sensory and cognitive processing (Zhou and Yu, 2018). All this lends support to the idea, proposed above, that one of the roles of energetic activity in the brain is to efficiently actuate differences of motion and tension that advance the interests of the brain-bearing organism. It is the actualized difference that makes the difference.

Energetic Organization as the Cause of Consciousness

In theory, we could account for all the highly complex processes occurring in the brain in terms of energy, forces and work, that is, as physical, chemical and biological processes. But the seemingly unassailable problem of how any of these processes might cause consciousness remains. The principle outlined here – that there is something it is like, intrinsically, to undergo differences due to the antagonistic action of energy, forces and work – may offer a toehold in the slippery face of the problem. There is something it is like, intrinsically, to be a tense muscle that is different from being a relaxed muscle. There is something it is like, intrinsically, to be networks of neurons in fantastically complex states of actualized differentiation from other networks, with action potentials propagating through vast arrays of fibers. But all this something it is like-ness is not in itself sufficient for consciousness. Muscles are not conscious, and networks of neurons are active in the brain when we are in dreamless sleep or under anesthesia. What is it about the organization of energetic processes in the brain, as discussed in Section “Consciousness and the Organization of Energetic Processing in the Brain,” which determines the level of consciousness we experience?

We gain some insight into the association between consciousness and the organization of energetic processing in the brain from studies of anesthesia. The reason why anesthetic agents obliterate consciousness is not understood (Mashour, 2004). Recent work has focused on the ways in which they interfere with the brain’s capacity to generate patterns of localized differentiation (often termed ‘information’) and to bind together or integrate those patterns across widely distributed brain networks (Hudetz, 2012; Hudetz and Mashour, 2016). Evidence from studies on the neurological effects of anesthetics suggests that consciousness is lost as distant regions of the brain become functionally isolated and global integration breaks down (Lewis et al., 2012). The idea that consciousness depends on maximizing differentiation and integration in the brain lies at the heart of IIT (Tononi, 2012; Oizumi et al., 2014).
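As a rough illustration of what ‘differentiation’ and ‘integration’ can mean when expressed with information theoretic tools, the sketch below uses two textbook quantities: Shannon entropy as a stand-in for differentiation, and total correlation (the sum of the individual entropies minus the joint entropy) as a stand-in for integration. These are assumptions made for illustration only. They are not the Φ of IIT, nor the measures used in the anesthesia studies cited above, and the function names and toy signals are hypothetical.

```python
import math
from collections import Counter

def entropy(samples):
    """Shannon entropy (in bits) of a sequence of discrete states."""
    counts = Counter(samples)
    n = len(samples)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def total_correlation(channels):
    """Sum of per-channel entropies minus the entropy of the joint states.

    Zero when the channels vary independently; positive when they share structure.
    """
    joint = list(zip(*channels))
    return sum(entropy(ch) for ch in channels) - entropy(joint)

# Toy binary 'activity' traces (hypothetical, not recordings).
a = [0, 0, 1, 1]
b_independent = [0, 1, 0, 1]
b_coupled = [0, 0, 1, 1]

print(entropy(a))                             # 1.0
print(total_correlation([a, b_independent]))  # 0.0
print(total_correlation([a, b_coupled]))      # 1.0
```

In this toy case each channel is equally differentiated (one bit), but only the coupled pair shows any integration, which is the sense in which functionally isolated regions could each remain active while global integration is lost.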

A potential mechanism supporting global integration of local differentiation is recurrent or reentrant processing, in which widely distributed areas of the brain engage in complex loops of cortical feedback via massively parallel connections (Edelman et al., 2011; Edelman and Gally, 2013). A number of studies of the effects of anesthetics have shown that they disrupt feedback connectivity, and hence integration, particularly in the frontoparietal area of the brain (Lee et al., 2009; Hudetz and Mashour, 2016). Studies of brain organization during deep sleep have also reported an increase in modularity consistent with the loss of integration among regions of the brain found in the awake state (Tagliazucchi et al., 2013). This suggests that the presence of consciousness in a wakeful person depends on a certain level of functional integration supported by cortical feedback loops (Edelman, 2004; Alkire et al., 2008), but it is not known how or why.

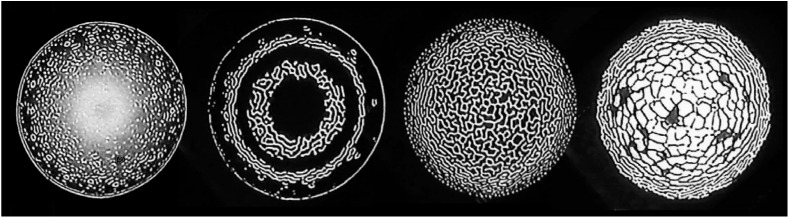

A major contribution of cybernetic theory was to recognize the importance of feedback mechanisms for controlling behavior in mechanical and living systems (Wiener, 1948; Bateson, 1972). Feedback systems are self-referential; one part of the system causally affects another, which in turn affects the first. Such systems are apt to generate behaviors that are an irreducible property of the system as a whole (Hofstadter, 2007; Deacon, 2013). One example is video feedback, which occurs when a video camera is pointed at a monitor showing the output from the camera (Crutchfield, 1984). When the system is correctly arranged, the monitor will at first show a tunnel-like image that will then spontaneously ‘blossom’ into an intricate, semi-stable pattern of remarkable diversity and fascinating beauty (see Figure 1)18. Since this is an energetically actuated process, we can infer, following the arguments already given, that there is something it is like to be the video feedback system in full bloom, from its intrinsic perspective. But it is not conscious.

FIGURE 1.

Stills from a video feedback sequence generated by the author. These patterns are created by pointing a video camera at a screen showing the camera’s output. What begins as tunnel-like images soon ‘blossoms’ into an ever-changing pattern of great diversity and fascinating beauty. Pepperell (2018).
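The self-referential structure of such a loop can be sketched in a few lines of code. What follows is a toy simulation under stated assumptions, not a model of the apparatus used to make Figure 1 or of the system analyzed by Crutchfield (1984): the ‘camera’ is assumed to view the previous frame slightly rotated and zoomed, and the ‘monitor’ is assumed to respond with a logistic nonlinearity. The function names and the parameters `zoom`, `angle`, and `gain` are all hypothetical.

```python
import math

def seed_frame(n):
    """Deterministic starting image with values in [0.1, 0.9]."""
    return [[0.5 + 0.4 * math.sin(0.7 * x) * math.cos(0.5 * y)
             for x in range(n)] for y in range(n)]

def feedback_step(frame, zoom=1.1, angle=0.3, gain=3.9):
    """One trip round the loop: the 'camera' views the 'monitor' slightly
    rotated and zoomed, and the 'monitor' then displays what was seen."""
    n = len(frame)
    c = (n - 1) / 2.0
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    new = [[0.0] * n for _ in range(n)]
    for y in range(n):
        for x in range(n):
            # Find the point on the previous frame that this pixel looks at.
            dx, dy = (x - c) / zoom, (y - c) / zoom
            sx = int(round(c + cos_a * dx - sin_a * dy))
            sy = int(round(c + sin_a * dx + cos_a * dy))
            v = frame[sy][sx] if 0 <= sx < n and 0 <= sy < n else 0.0
            # Assumed display response: a logistic nonlinearity, which keeps
            # values within [0, 1] for 0 <= gain <= 4.
            new[y][x] = gain * v * (1.0 - v)
    return new

def run_feedback(n=33, steps=20):
    """Feed the output of each step back in as the input to the next."""
    frame = seed_frame(n)
    for _ in range(steps):
        frame = feedback_step(frame)
    return frame
```

Calling `run_feedback()` returns the frame after twenty passes round the loop. The essential feature is that each frame is computed entirely from the previous one, so whatever pattern emerges is a property of the loop as a whole rather than of any external input.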

Gerald Edelman proposed that “phenomenal experience itself is entailed by appropriate reentrant intracortical activity” (Edelman and Gally, 2013). The human brain undergoes recursive or reentrant behavior of an unimaginably higher order of complexity than in the video system19. But the underlying operating principle may be analogous. Video feedback arises because the system is organized as a self-observing loop. If we assume that reentrant activity in the brain is also a kind of self-observing loop in which processes in one part of the brain both affect and are affected by processes in other parts, then we can envisage a kind of pattern blooming in the brain analogous to what we see in video feedback. This pattern would be actuated by sufficiently organized electro-chemical activity, among neurons and neurotransmitters, channeled through reentrant neural circuits.

The something it is like-ness a brain organized in this way would be undergoing is of a different order to that of a brain with diminished integration in dreamless sleep or under anesthesia. No other physical system, as far as we know, has the same capacity for complex (differentiated and integrated) recursive processing as the human brain, and that dynamic organization reaches its apogee when we are wakefully conscious, as suggested by the evidence cited in Section “Consciousness and the Organization of Energetic Processing in the Brain.” When the energetic processes in our brains are operating at a certain level of dynamic recursive organization – the “appropriate reentrant intracortical activity” – then we undergo something it is like, intrinsically, to undergo something it is like, intrinsically, to undergo something it is like … recursively. In other words, there is something it is like, intrinsically, to be something it is like, recursively, to undergo the particular organization of actualized differences found in the conscious brain. For this, we have the most direct and irrefutable evidence possible – what it’s like to undergo our own conscious experience20.

Is it reasonable then to propose that consciousness is caused by the way energetic activity is dynamically and recursively organized in the brain? It is no less reasonable than attributing the causes of other biological phenomena, such as the behavior of the nematode worm, to the way energetic activity is organized. If consciousness is a physical (biological and chemical) process, and if physical processes are caused by energetic activity (alongside forces and work), then consciousness, in principle, could be caused by energetic activity and the way it is organized.

Naturalizing Consciousness

In 1937–8, Charles Sherrington gave a series of lectures on the relationship between energy and mind, collected in the volume Man on his Nature (Sherrington, 1940). In line with the physics of his day, Sherrington understood the natural world to be composed of forms of energy. But he could not conceive how the mind could be forged from energy: “The energy-concept of Science collects all so-called ‘forms’ of energy into a flock and looks in vain for the mind among them.” The mystery was deepened for him by the knowledge, then emerging through studies of electrical and metabolic activity in the brain, of how intimately energy and mental function must be linked. He was compelled to wonder “Is the mind in any strict sense energy?” but reluctantly concluded that “…thoughts, feelings, and so on are not amenable to the energy (matter) concept.” They lie beyond the purview of natural science, despite the “embarrassment” this causes for biology.

If we are to naturalize consciousness, then we must reconcile energy and the mind. I have outlined a principle that may help to explain consciousness as a physical process. It entails re-examining the modern scientific concept of energy in the light of Aristotle’s energeia and its Heraclitean roots. Accordingly, we arrive at a view of physical processes in nature as actualized differences of motion and tension. Sherrington understood that “Energy acts, i.e., is motion.” But he went on “…of a mind a difficulty is to know whether it is motion.” Treating the brain as a difference engine that works to actualize and organize differences of motion and tension to serve the interests of the organism is, I submit, a natural approach to understanding consciousness as a physical process.

Conclusion

If consciousness is a natural physical process then it should be explicable in terms of energy, forces and work. Energy is a physical property of nature that is causally efficacious and, like forces and work, can be conceived as actualized differences of motion and tension.

Evidence from neurobiology indicates that the brain operates on the principle of energetic processing and that a certain organization of energy in the brain, measured with information theoretic techniques, can reliably predict the presence and level of consciousness. Since energy is causally efficacious in physical systems, it is reasonable to claim that consciousness is in principle caused by energetic activity and how it is dynamically organized in the brain.

Information in the biological context is best understood as a measure of the way energetic activity is organized, that is, its complexity or degree of differentiation and integration. Information theoretic techniques provide powerful tools for measuring, modeling, and mapping the organization of energetic processes, but we should not confuse the map with the territory.

Actualized differences, as distinct from abstract differences represented in mathematics and information theory, are characterized by there being something it is like, intrinsically, to undergo those differences, that is, to undergo antagonistic states of opposing forces. All actualized differences undergo this something it is like-ness, but not all contribute to consciousness.

It is proposed that a particular kind of activity occurs in human brains that causes our conscious experience. It is a certain dynamic organization of energetic processes having a high degree of differentiation and integration. This organization is recursively self-referential and results in a pattern of energetic activity that blossoms to a degree of complexity sufficient for consciousness.

If consciousness is a physical process, and physical processes are driven by actualized differences of motion and tension, then there is something it is like to undergo actualized differences organized in a certain way in the brain, and this is what we experience – intrinsically21.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

I am grateful to the following for discussions and suggestions: Alistair Burleigh, Alan Dix, Chris Doran, Robert K. Logan, Heddwyn Loudon, Chen Song, Galen Strawson, Emmett Thompson, Sander Van der Cruys, and to the reviewers for their comments and criticism.

Funding. I wish to acknowledge the support of the Vice Chancellor’s Board of Cardiff Metropolitan University.

I take it that physical processes occur in time and space and are causally determined by the actions of energy, forces and work upon matter. I take consciousness to be the capacity for awareness of self and world, which is particularly highly developed in humans.

For further discussion on the historical context see Pepperell (2018).

Although for the sake of brevity I refer in this paper to consciousness occurring in the brain, consciousness is something that people undergo. Brains cannot sustain consciousness independently of the people in which they are housed (Pepperell, 1995).

Strictly speaking energy is not consumed but converted from one form to another.

The authors of Wollstadt et al. (2017), for example, studied the breakdown of local information processing under anesthesia using information theoretic methods. They point out that the EEG procedure they used did not directly record information processing in the brain but local field potentials, that is, fluctuations in quantities of potential energy.

There seems to be an ambiguity in some textbooks about whether energy is an enabling property possessed by a system or body, e.g., Duncan (2002), or a measure of such a property, e.g., Rennie (2015). I will take energy to be a property possessed by systems or bodies, quantities of which can be measured.

“Energy may be called the fundamental cause for all change in the world” (Heisenberg, 1958). The neurobiologist Gerald Edelman neatly defined causal efficacy as “The action in the physical world of forces or energies that lead to effects or physical outcomes” (Edelman, 2004).

Neuroanthropologist Terence Deacon defines energy as a “relationship of difference” (Deacon, 2013). Note that energy is difference, but not all differences are energy; red is a color, not all colors are red.

Nagel clarified the term ‘something it is like’ as meaning not what something resembles but ‘how it is’ for the system (Nagel, 1974).

Note that this claim is not as far-fetched as it might at first seem: If (i) consciousness in people is a physical process – due to energy, forces and work – and (ii) we infer the presence of consciousness in other people on the basis of observing them extrinsically – as we habitually do – and (iii) there is something it is like to be a conscious person – as we assume there is – then (iv) we routinely infer the presence of an intrinsic something it is like-ness in a physical process on the basis of observing it from an extrinsic perspective. However, as discussed below, human consciousness is a particular kind of something it is like-ness that occurs only when certain conditions are met.

In discussions of the nature and behavior of forces at the microscopic level we often find references to the way they ‘feel’ (Feynman, 1963), or the way they ‘experience’ each other in fields (Rennie, 2015). It would be interesting to investigate what motivates the use of such terms in this context.

The difference between 1 and 0, for example, is an abstract difference conceived within a conscious mind.

Arieh Ben-Naim sets out in some detail how Shannon information is a probabilistic measure rather than a physical property (Ben-Naim, 2015). Note that the act of measurement presupposes a conscious mind capable of carrying out the measurement procedure and interpreting the result.

Had he a fuller understanding of the nature of energy Bateson might not have been so dismissive about its role in mental processes. In Mind and Nature (Bateson, 1979) he referred only to kinetic energy (which he defined as “MV²”), thus ignoring potential energy, and was by his own admission “not up to date in modern physics.” In fact, slightly modifying Bateson’s much-cited phrase to an actualized difference that makes a difference yields a description of the essence of energetic action, that is, the way energy, forces and work act antagonistically to effect change and cause further actions.

Brains – as parts of people – process information in the colloquial sense, just as they process abstract ideas, equations, numbers, thoughts, emotions, or memories. But they do so as a consequence of the underlying energetic processing (conversion, distribution, and dissipation) going on in neural tissue. Computers also ‘process’ information in the colloquial sense. Mechanically and electronically speaking, however, they actually manipulate energy states (voltages, light, etc.) the results of which we, as conscious people, interpret informationally. It is worth noting that all mechanical information processing necessarily entails the dissipation of a certain amount of energy (Landauer, 1961). Recent experiments have confirmed this principle and demonstrated the intimate link between energy and what many refer to as information (Bérut et al., 2012).

Logan (2012), in work undertaken with Stuart Kauffman and others, defines ‘biotic information’ as the organization of the exchange of energy and matter between organism and environment – a further example of information theory being used to quantify the biological organization of energy flows.

It turns out this arrangement is energy efficient for the visual system overall (Wong-Riley, 2010).

Examples can be found on YouTube.

One way to quantify this relative complexity would be to follow the proposal of Chaisson (2001) and compare the energy rate density (a measure he calls Φm) between the two systems. Note also that Edelman and Gally are careful to distinguish cybernetic feedback in machine control systems from re-entrant processing in the brain, the latter being far more complex (Edelman and Gally, 2013).

‘I think therefore I am undergoing a certain recursive organization of actualized differences.’ Models of consciousness based on feedback loops in the brain have been discussed before, including by Douglas Hofstadter in his book I am a Strange Loop (Hofstadter, 2007). I have also previously proposed a feedback model of consciousness partly inspired by Edelman’s theory of reentrant processing as part of an attempt to design an artificially conscious work of art (Pepperell, 2003).

The explanatory principle outlined here might be construed as a form of panpsychism or panexperientialism. My claim is not that consciousness is a fundamental property of nature, universally distributed. Rather, I claim it is a property of all physical systems that there is something it is like, intrinsically, to undergo actualized differences, a certain organization of which causes consciousness.

References

- Abbott M., Van Ness C. (1972). Theory and Problems of Thermodynamics, by Schaum’s Outline Series in Engineering. New York, NY: McGraw-Hill Book Company.

- Aboy M., Hornero R., Abasolo D., Alvarez D. (2006). Interpretation of the Lempel-Ziv complexity measure in the context of biomedical signal analysis. IEEE Trans. Biomed. Eng. 53 2282–2288. 10.1109/TBME.2006.883696

- Alkire M. T., Hudetz A. G., Tononi G. (2008). Consciousness and anesthesia. Science 322 876–880. 10.1126/science.1149213

- Allen J. (2009). The Lives of the Brain: Human Evolution and the Organ of the Mind. London: Harvard University Press. 10.4159/9780674053496

- Annila A. (2016). On the character of consciousness. Front. Syst. Neurosci. 10:27. 10.3389/fnsys.2016.00027

- Aristotle (1818). The Rhetoric, Poetic and Nicomachean Ethics of Aristotle (tr. Thomas Taylor). London: James Black and Son.

- Aristotle (2002). Metaphysics (tr. Joe Sachs). Santa Fe, NM: Green Lion Press.

- Attwell D., Gibb A. (2005). Neuroenergetics and the kinetic design of excitatory synapses. Nat. Rev. 6 841–849. 10.1038/nrn1784

- Bailey D., Townsend D., Valk P., Maisey M. (2005). Positron-Emission Tomography: Basic Sciences. Secaucus, NJ: Springer-Verlag. 10.1007/b136169

- Bateson G. (1972). Steps Towards an Ecology of Mind. San Francisco, CA: Chandler Publication Company.

- Bateson G. (1979). Mind and Nature: a Necessary Unity. London: Wildwood.

- Bazzigaluppi P., Amini A. E., Weisspapier I., Stefanovic B., Carlen P. (2017). Hungry neurons: metabolic insights on seizure dynamics. Int. J. Mol. Sci. 18:E2269. 10.3390/ijms18112269

- Ben-Naim A. (2015). Information, Entropy, Life and The Universe: What We Know and What We Do Not Know. London: World Scientific. 10.1142/9479

- Bérut A., Arakelyan A., Petrosyan A., Ciliberto S., Dillenschneider R., Lutz E. (2012). Experimental verification of Landauer’s principle linking information and thermodynamics. Nature 483 187–189. 10.1038/nature10872

- Bodart O., Gosseries O., Wannez S., Thibaut A., Annen J., Boly M., et al. (2017). Measures of metabolism and complexity in the brain of patients with disorders of consciousness. Neuroimage Clin. 14 354–362. 10.1016/j.nicl.2017.02.002

- Boltzmann L. (1886). “The second law of thermodynamics,” in Ludwig Boltzmann: Theoretical Physics and Philosophical Problems: Selected Writings, ed. McGuinness B. (Dordrecht: D. Reidel).

- Buzsáki G., Kaila K., Raichle M. (2007). Inhibition and brain work. Neuron 56 771–783. 10.1016/j.neuron.2007.11.008

- Cahan D. (ed.). (1995). Hermann Von Helmholtz: Science and Culture. Chicago: Chicago University Press.

- Capurro R., Hjørland B. (2003). “The concept of information,” in Annual Review of Information Science and Technology (ARIST), ed. Cronin B. (Medford, NJ: Information Today), 343–411.

- Casali A. G., Gosseries O., Rosanova M., Boly M., Sarasso S., Casali K. R., et al. (2013). A theoretically based index of consciousness independent of sensory processing and behavior. Sci. Trans. Med. 5:198ra105. 10.1126/scitranslmed.3006294

- Casarotto S., Comanducci A., Rosanova M., Sarasso S. (2016). Stratification of unresponsive patients by an independently validated index of brain complexity. Ann. Neurol. 80 718–729. 10.1002/ana.24779

- Chaisson E. J. (2001). Cosmic Evolution: The Rise of Complexity in Nature. Cambridge, MA: Harvard University Press.

- Chatelle C., Laureys S., Schnakers C. (2011). “Disorders of consciousness: what do we know,” in Characterizing Consciousness: From Cognition to the Clinic, eds Dehaene S., Christen Y. (New York, NY: Springer).

- Chennu S., Annen J., Wannez S., Thibaut A., Chatelle C., Cassol H., et al. (2017). Brain networks predict metabolism, diagnosis and prognosis at the bedside in disorders of consciousness. Brain 140 2120–2132. 10.1093/brain/awx163

- Clarke D. D., Sokoloff L. (1999). “Circulation and energy metabolism of the brain,” in Basic Neurochemistry: Molecular, Cellular and Medical Aspects, eds Agranoff B., Siegel G. (Philadelphia, PA: Lippincott-Raven), 637–670.

- Coelho R. L. (2009). On the concept of energy: history and philosophy for science teaching. Proc. Soc. Behav. Sci. 1 2648–2652. 10.1016/j.sbspro.2009.01.468

- Collell G., Fauquet J. (2015). Brain activity and cognition: a connection from thermodynamics and information theory. Front. Psychol. 6:818. 10.3389/fpsyg.2015.00818

- Coopersmith J. (2010). Energy, the Subtle Concept: The Discovery of Feynman’s Blocks from Leibniz to Einstein. Oxford: Oxford University Press.

- Crick F., Koch C. (2003). A framework for consciousness. Nat. Neurosci. 6 119–126. 10.1038/nn0203-119

- Crutchfield J. (1984). Space-time dynamics in video feedback. Phys. D 10 229–245. 10.1016/0167-2789(84)90264-1

- Davies P. (2010). “Universe from bit,” in Information and the Nature of Reality: From Physics to Metaphysics, eds Davies P., Gregersen N. (Cambridge: Cambridge University Press). 10.1017/CBO9780511778759

- de Bono M., Maricq A. V. (2005). Neuronal substrates of complex behaviors in C. elegans. Annu. Rev. Neurosci. 28 451–501. 10.1146/annurev.neuro.27.070203.144259

- Deacon T. (2010). “What is missing from theories of information,” in Information and the Nature of Reality: From Physics to Metaphysics, eds Davies P., Gregersen N. (Cambridge: Cambridge University Press).

- Deacon T. (2013). Incomplete Nature: How Mind Emerged From Matter. New York, NY: W.W. Norton.

- Dehaene S. (2014). Consciousness and the Brain: Deciphering How the Brain Codes Our Thoughts. London: Penguin Books.

- Dehaene S., Lau H., Kouider S. (2017). What is consciousness, and could machines have it? Science 358 486–492. 10.1126/science.aan8871

- Demertzi A., Antonopoulos G., Heine L., Voss H. U., Crone J. S., Angeles C. D. L., et al. (2015). Intrinsic functional connectivity differentiates minimally conscious from unresponsive patients. Brain 138 2619–2631. 10.1093/brain/awv169

- de-Wit L., Alexander D., Ekroll V., Wagemans J. (2016). Is neuroimaging measuring information in the brain? Psychon. Bull. Rev. 23 1415–1428. 10.3758/s13423-016-1002-0

- Dinuzzo M., Nedergaard M. (2017). Brain energetics during the sleep–wake cycle. Curr. Opin. Neurobiol. 47 65–72. 10.1016/j.conb.2017.09.010

- Duncan T. (2002). Advanced Physics. London: John Murray.

- Edelman G. (2004). Wider Than the Sky: The Phenomenal Gift of Consciousness. New Haven, CT: Yale University Press.

- Edelman G., Gally J., Baars B. (2011). Biology of consciousness. Front. Psychol. 2:4. 10.3389/fpsyg.2011.00004

- Edelman G., Gally J. (2013). Reentry: a key mechanism for integration of brain function. Front. Integr. Neurosci. 7:63. 10.3389/fnint.2013.00063

- Ellrod F. E. (1982). Energeia and process in Aristotle. Int. Phil. Q. XXII, 175–181. 10.5840/ipq198222216

- Erra R., Mateos D., Wennberg R., Perez Velazquez J. (2016). Statistical mechanics of consciousness: maximization of information content of network is associated with conscious awareness. Phys. Rev. E 94:052402.

- Fechner G. T. (1905). The Little Book of Life After Death (tr. Mary C. Wadsworth). Boston: Little, Brown & Co.

- Feynman R. (1963). Conservation of Energy, in The Feynman Lectures on Physics. Available at: http://www.feynmanlectures.caltech.edu/I_04.html [accessed December 21, 2017].

- Friston K. (2013). Consciousness and hierarchical inference. Neuropsychoanalysis 15 38–42. 10.1080/15294145.2013.10773716

- Gay P. (1988). Freud: A Life for Our Time. London: W. W. Norton & Co.

- Goodale M. (2014). How (and why) the visual control of action differs from visual perception. Proc. Biol. Sci. 281:20140337. 10.1098/rspb.2014.0337

- Hall C. N., Klein-Flugge M. C., Howarth C., Attwell D. (2012). Oxidative phosphorylation, not glycolysis, powers presynaptic and postsynaptic mechanisms underlying brain information processing. J. Neurosci. 32 8940–8951. 10.1523/JNEUROSCI.0026-12.2012

- Hameroff S., Penrose R. (2014). Consciousness in the universe: a review of the ‘Orch OR’ theory. Phys. Life Rev. 11 39–78. 10.1016/j.plrev.2013.08.002

- Hawking S. (1988). A Brief History of Time. London: Bantam Books.

- Heisenberg W. (1958). Physics and Philosophy: The Revolution in Modern Science. New York, NY: Harper & Brothers Publishers.

- Hine R. (ed.). (2015). Oxford Dictionary of Biology. Oxford: Oxford University Press. 10.1093/acref/9780198714378.001.0001

- Hofstadter D. (2007). I am a Strange Loop. New York, NY: Basic Books.

- Hu S., Stead M., Liang H., Worrell G. A. (2009). “Reference signal impact on EEG energy,” in Advances in Neural Networks – ISNN 2009, Lecture Notes in Computer Science, eds Yu W., He H., Zhang N. (Berlin: Springer Science & Business Media), 605–611. 10.1007/978-3-642-01513-7_66

- Hubel D., Livingstone M. (1987). Segregation of form, color, and stereopsis in primate area 18. J. Neurosci. 7 3378–3415. 10.1523/JNEUROSCI.07-11-03378.1987

- Hudetz A. G. (2012). General anesthesia and human brain connectivity. Brain Connect. 2 291–302. 10.1089/brain.2012.0107

- Hudetz A. G., Mashour G. A. (2016). Disconnecting consciousness: is there an anesthetic end-point? Anesth. Analg. 123 1228–1240. 10.1213/ANE.0000000000001353

- James W. (1907). The energies of men. Philos. Rev. 16 1–20. 10.2307/2177575

- Kahn C. H. (1989). The Art and Thought of Heraclitus. Cambridge: Cambridge University Press.

- King J.-R., Sitt J. D., Faugeras F., Rohaut B., El Karoui I., Cohen L., et al. (2013). Information sharing in the brain indexes consciousness in noncommunicative patients. Curr. Biol. 23 1914–1919. 10.1016/j.cub.2013.07.075

- Koch C. (2004). Biophysics of Computation: Information Processing in Single Neurons. Oxford: Oxford University Press.

- Koponen L. M., Nieminen J. O., Ilmoniemi R. J. (2015). Minimum-energy coils for transcranial magnetic stimulation: application to focal stimulation. Brain Stimul. 8 124–134. 10.1016/j.brs.2014.10.002

- Korzybski A. (1933). Science and Sanity. An Introduction to Non-Aristotelian Systems and General Semantics. New York, NY: The International Non-Aristotelian Library Pub. Co, 747–761.

- Landauer R. (1961). Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 5 183–191. 10.1147/rd.53.0183

- Laughlin S. (2001). Energy as a constraint on the coding and processing of information. Curr. Opin. Neurobiol. 11 475–480. 10.1016/S0959-4388(00)00237-3 [DOI] [PubMed] [Google Scholar]

- Laureys S., Lemaire C., Maquet P. (1999). Cerebral metabolism during vegetative state and after recovery to consciousness. J. Neurol. Neurosurg. Psychiatry 67 121–133. 10.1136/jnnp.67.1.121 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee U., Kim S., Noh G. -J., Choi B. -M., Hwang E., Mashour G. A., et al. (2009). The directionality and functional organization of frontoparietal connectivity during consciousness and anesthesia in humans Conscious. Cogn. 18: 1069–1078. 10.1016/j.concog.2009.04.004 [DOI] [PubMed] [Google Scholar]

- Lewis L. D., Weiner V. S., Mukamel E. A., Donoghue J. A., Eskandar E. N., Madsen J. R., et al. (2012). Rapid fragmentation of neuronal networks at the onset of propofol-induced unconsciousness. Proc. Natl. Acad. Sci. 109 E3377–E3386. 10.1073/pnas.1210907109 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Logan R. (2012). What is information?: why is it relativistic and what is its relationship to materiality. Mean. Organ. Inform. 3 68–91. [Google Scholar]

- Lombardi O., Hernán F., Vanni L. (2016). What is shannon information? Synthese 193 1983–2012. 10.1007/s11229-015-0824-z [DOI] [Google Scholar]

- Lotka A. J. (1922). Contribution to the energetics of evolution. Proc. Natl. Acad. Sci. U.S.A. 8 147–151. 10.1073/pnas.8.6.147

- Magistretti P. (2013). Food for Thought: What Fuels Brain Cells? Report for Dana Alliance. Available at: http://www.dana.org/Publications/ReportOnProgress/Food_for_Thought__What_Fuels_Brain_Cells_/ [accessed March 27, 2018]

- Magistretti P. J. (2008). “Brain energy metabolism,” in Fundamental Neuroscience, eds Squire L. R., Berg D., Bloom F. E., du Lac S., Ghosh A., Spitzer N. C. (Burlington, MA: Elsevier).

- Magistretti P. J., Allaman I. (2013). “Brain energy metabolism,” in Neuroscience in the 21st Century, ed. Pfaff D. W. (New York, NY: Springer), 1591–1620. 10.1007/978-1-4614-1997-6_56

- Marchetti G. (2018). Consciousness: a unique way of processing information. Cogn. Process. 19 435–464. 10.1007/s10339-018-0855-8

- Mashour G. A. (2004). Consciousness unbound. Anesthesiology 100 428–433. 10.1097/00000542-200402000-00035

- Morcom A. M., Fletcher P. C. (2007). Does the brain have a baseline? Why we should be resisting a rest. Neuroimage 37 1073–1082. 10.1016/j.neuroimage.2006.09.013

- Morowitz H. (1979). Energy Flow in Biology. Woodbridge, NJ: Ox Bow Press.

- Morowitz H., Smith E. (2007). Energy flow and the organization of life. Complexity 13 51–59. 10.1002/cplx.20191

- Nagel T. (1974). What Is It Like to Be a Bat? Philos. Rev. 83 435–450.

- Niedermeyer E., Lopes da Silva F. (1987). Electroencephalography: Basic Principles, Clinical Applications, and Related Fields. Munich: Urban & Schwarzenberg.

- Oizumi M., Albantakis L., Tononi G. (2014). From the phenomenology to the mechanisms of consciousness: integrated information theory 3.0. PLoS Comput. Biol. 10:e1003588. 10.1371/journal.pcbi.1003588

- Owen A., Coleman M., Boly M., Davis M., Laureys S., Pickard J. (2006). Detecting awareness in the vegetative state. Science 313:1402. 10.1126/science.1130197

- Pai A., Heining M. (2007). Ketamine. Contin. Educ. Anaesth. Crit. Care Pain 7 59–63. 10.1093/bjaceaccp/mkm008

- Pepperell R. (1995). The Posthuman Condition: Consciousness Beyond the Brain. Oxford: Intellect Books.

- Pepperell R. (2003). Towards a conscious art. Technoetic Arts 1:2. 10.1386/tear.1.2.117.18695

- Pepperell R. (2018). “Art, energy and the brain,” in The Arts and The Brain: Psychology and Physiology Beyond Pleasure (Progress in Brain Research), eds Christensen J., Gomila A. (New York, NY: Elsevier).

- Perez Velazquez J. (2009). Finding simplicity in complexity: general principles of biological and non-biological organization. J. Biol. Phys. 35 209–221. 10.1007/s10867-009-9146-z

- Raichle M. (2011). “Intrinsic activity and consciousness,” in Characterizing Consciousness: From Cognition to the Clinic? eds Dehaene S., Christen Y. (New York, NY: Springer).

- Raichle M. E. (2010). The brain’s dark energy. Sci. Am. 302 44–49. 10.1038/scientificamerican0310-44

- Raichle M. E., MacLeod A. M., Snyder A. Z., Powers W. J., Gusnard D. A., Shulman G. L. (2001). A default mode of brain function. Proc. Natl. Acad. Sci. U.S.A. 98 676–682. 10.1073/pnas.98.2.676

- Rennie R. (ed.). (2015). Oxford Dictionary of Physics. Oxford: Oxford University Press.

- Rennie R. (ed.). (2016). Oxford Dictionary of Chemistry. Oxford: Oxford University Press. 10.1093/acref/9780198722823.001.0001

- Riehl J. R., Palanca B. J., Ching S. (2017). High-energy brain dynamics during anesthesia-induced unconsciousness. Netw. Neurosci. 1 431–445. 10.1162/NETN_a_00023

- Roederer J. G. (2016). Pragmatic information in biology and physics. Philos. Trans. A Math. Phys. Eng. Sci. 374:20150152. 10.1098/rsta.2015.0152

- Rose D. (1986). Learning About Energy. New York, NY: Plenum Press. 10.1007/978-1-4757-5647-0

- Roy C., Sherrington C. (1890). On the regulation of the blood supply in the brain. J. Physiol. 11 85–108, 158-7–158-17. 10.1113/jphysiol.1890.sp000321

- Ruffini G. (2017). An algorithmic information theory of consciousness. Neurosci. Conscious. 3:nix019. 10.1093/nc/nix019

- Schneider E., Sagan D. (2005). Into the Cool: Energy Flow, Thermodynamics, and Life. Chicago, IL: University of Chicago Press.

- Schölvinck M. L., Howarth C., Attwell D. (2008). The cortical energy needed for conscious perception. Neuroimage 40 1460–1468. 10.1016/j.neuroimage.2008.01.032

- Schrödinger E. (1944). What is Life? The Physical Aspect of the Living Cell. Cambridge: Cambridge University Press.

- Searle J. (2013). Can Information Theory Explain Consciousness. Cambridge, MA: MIT Press.

- Seth A. (2014). What behaviourism can (and cannot) tell us about brain imaging. Trends Cogn. Sci. 18 5–6. 10.1016/j.tics.2013.08.013

- Shannon C. (1948). A mathematical theory of communication. Bell Syst. Tech. J. 27 379–423. 10.1002/j.1538-7305.1948.tb01338.x

- Sherrington C. (1940). Man on his Nature. London: Pelican Books.

- Shulman G. L., Corbetta M., Buckner R. L., Fiez J. A., Miezin F. M., Raichle M. E., et al. (1997). Common blood flow changes across visual tasks: I. Increases in subcortical structures and cerebellum but not in nonvisual cortex. J. Cogn. Neurosci. 9 624–647. 10.1162/jocn.1997.9.5.624

- Shulman R., Hyder F., Rothman D. (2009). Baseline brain energy supports the state of consciousness. Proc. Natl. Acad. Sci. U.S.A. 106 11096–11101. 10.1073/pnas.0903941106

- Shulman R. G. (2013). Brain Imaging: What It Can (and Cannot) Tell Us About Consciousness. Oxford: Oxford University Press. 10.1093/acprof:oso/9780199838721.001.0001

- Smil V. (2008). Energy in Nature and Society: General Energetics of Complex Systems. Cambridge, MA: MIT Press.

- Stender J., Mortensen K. N., Thibaut A., Darkner S., Laureys S., Gjedde A., et al. (2016). The minimal energetic requirement of sustained awareness after brain injury. Curr. Biol. 26 1494–1499. 10.1016/j.cub.2016.04.024

- Sterling P., Laughlin S. (2017). Principles of Neural Design. Cambridge, MA: MIT Press.

- Strawson G. (2008). “Real materialism,” in Real Materialism and Other Essays (Oxford: Oxford University Press), 19–52. 10.1093/acprof:oso/9780199267422.003.0002

- Strawson G. (2017). “Physicalist panpsychism,” in The Blackwell Companion to Consciousness, 2nd edn, eds Schneider S., Velmans M. (New York, NY: Wiley-Blackwell), 374–390.

- Street S. (2016). Neurobiology as information physics. Front. Syst. Neurosci. 10:90. 10.3389/fnsys.2016.00090

- Tagliazucchi E., Behrens M., Laufs H. (2013). Sleep neuroimaging and models of consciousness. Front. Psychol. 4:256. 10.3389/fpsyg.2013.00256

- Tononi G. (2012). Integrated information theory of consciousness: an updated account. Arch. Ital. Biol. 150 290–326.

- Tononi G., Boly M., Massimini M., Koch C. (2016). Integrated information theory: from consciousness to its physical substrate. Nat. Rev. Neurosci. 17 450–461. 10.1038/nrn.2016.44

- Tozzi A., Zare M., Benasich A. (2016). New perspectives on spontaneous brain activity: dynamic networks and energy matter. Front. Hum. Neurosci. 10:247. 10.3389/fnhum.2016.00247

- Vanhaudenhuyse A., Noirhomme Q., Tshibanda L. J.-F., Bruno M.-A., Boveroux P., Schnakers C., et al. (2009). Default network connectivity reflects the level of consciousness in non-communicative brain-damaged patients. Brain 133 161–171. 10.1093/brain/awp313

- von Foerster H. (2003). “Notes on an epistemology for living things,” in Understanding Understanding: Essays on Cybernetics and Cognition (New York, NY: Springer).

- Werner G. (2011). Letting the brain speak for itself. Front. Physiol. 2:60. 10.3389/fphys.2011.00060

- Wiener N. (1948). Cybernetics: or Control and Communication in the Animal and the Machine. Cambridge, MA: MIT Press.

- Wollstadt P., Sellers K. K., Rudelt L., Priesemann V., Hutt A., Fröhlich F., et al. (2017). Breakdown of local information processing may underlie isoflurane anesthesia effects. PLoS Comput. Biol. 13:e1005511. 10.1371/journal.pcbi.1005511

- Wong-Riley M. (2010). Energy metabolism of the visual system. Eye Brain 2 99–116. 10.2147/EB.S9078

- Zhou S., Yu Y. (2018). Synaptic E-I balance underlies efficient neural coding. Front. Neurosci. 12:46. 10.3389/fnins.2018.00046

- Ziv J., Lempel A. (1977). A universal algorithm for sequential data compression. IEEE Trans. Inform. Theory 23 337–343. 10.1109/TIT.1977.1055714