Abstract

In this paper, we propose a segmentation method based on normalized cut and superpixels. The method relies on color and texture cues for fast computation and efficient use of memory. The method is used for food image segmentation as part of a mobile food record system we have developed for dietary assessment and management. The accurate estimate of nutrients relies on correctly labelled food items and sufficiently well-segmented regions. Our method achieves competitive results on the Berkeley Segmentation Dataset and outperforms some of the most popular techniques on a food image dataset.

Keywords: image segmentation, superpixel, graph model, nutrient analysis

1. INTRODUCTION

Six of the ten leading causes of death in the United States, including cancer, diabetes, and heart disease, can be directly linked to diet. Dietary assessment, the process of determining what someone eats during the course of a day, provides valuable insights for mounting intervention programs for the prevention of many of these chronic diseases. Accurately measuring dietary intake is considered to be an open research problem in the nutrition and health fields. Our previous study [1] has shown that the use of image-based dietary assessment can improve the accuracy and reliability of estimating food and energy consumption. We have developed a mobile dietary assessment system, the Technology Assisted Dietary Assessment (TADA) system [2, 3], to automatically determine the food types and energy consumed by a user using image analysis techniques [4, 5].

The accurate estimate of energy and nutrients consumed using food image analysis is essentially dependent on the correctly labelled food item and a sufficiently well-segmented region. Food labeling largely relies on the correctness of interest region detection, which makes food segmentation extremely crucial. Although the human vision system is able to group pixels of an image into meaningful objects without knowing what objects are present, effective object segmentation from an image is in general a highly unconstrained problem [4].

Image segmentation is a process of partitioning an image into several disjoint and coherent regions in terms of some desired features. Segmentation methods can be generally classified into three major categories, i.e. region grouping methods [6, 7], contour to region methods [8, 9, 10], and graph-based methods [11, 12].

Alpert et al. [7] proposed a bottom-up probabilistic framework using multiple cue integration. It showed promising results when compared to other approaches. An example of a contour to region method is the hierarchical segmentation introduced in [9]. This has led to several methods based on the global probability of boundary (gPb) and the Ultrametric Contour Map (UCM) [9], and to methods that transform a contour into a hierarchy of regions while preserving contour quality [13]. Donoser et al. [10] proposed to locally predict oriented gradient signals by analyzing mid-level patches to reduce the computational time of UCM. The common theme for graph-based approaches is the construction of a weighted graph where each vertex corresponds to a pixel or a region of the image. The weight of each edge connecting two pixels or two regions represents the likelihood that they belong to the same segment. The graph is partitioned into multiple components that minimize some cost function. There are mainly two types of graph-based approaches: merging and splitting. The efficient graph-based method, also known as local variation (LV), proposed by Felzenszwalb and Huttenlocher [11] is a merging method. It is an efficient algorithm in terms of computational complexity. Among splitting methods, normalized cuts (Ncut) [12] is extensively used.

Due to the complexity of food images (e.g. occlusion and cluttered background), food image segmentation is a difficult task. In [14], researchers experimented on 10 kinds of food with containers, thus a deformable part model and a circle detector were used to constrain the food region of interest. Bettadapura et al. [15] used hierarchical segmentation in their implementation. A semantic segmentation method based on a deep neural network was recently proposed in [16]. Such a method requires a large number of manually segmented food images. The work in [17] focuses on restaurant foods and utilizes GPS information to get restaurant menus. With the correct restaurant information, food segmentation is not really needed, because the dish name can already be mapped to the corresponding nutrient table.

Food image segmentation has been extensively addressed in our previous work resulting in a joint segmentation/classification multiple hypothesis technique [4]. We also investigated the use of local variation and integrated it with food classifiers so that we can iteratively use the classification results to refine the segmented regions [5]. For each segment, a set of color, texture and local region features are used for our learning network, including the use of k-Nearest Neighbor (KNN), vocabulary tree and Support Vector Machine (SVM) [18].

In this paper, we propose a simple yet effective segmentation method that integrates normalized cut and superpixels using multiple features. This method is different from our previous work in that we do not use a feedback approach and we construct a new graph model. The main contributions are summarized as follows: (1) we introduce an efficient way of constructing a weighted graph based on superpixels in multiple feature spaces, (2) we propose a new image segmentation evaluation method which is specifically suitable for multi-food images, (3) we evaluate the proposed method on both the publicly available Berkeley Segmentation Dataset and our own food dataset. We are able to achieve competitive results, especially for food segmentation.

2. PROPOSED METHOD

General graph-based segmentation methods use low level features to measure similarity between two sets of pixels. For example, Ncut uses pixel intensity and difference of oriented Gaussian filters [12]. For an image of a complicated scene, using low level features often results in noisy segments. Another drawback of Ncut is the increase in computation associated with increased image size. “Superpixels” are often referred to as local groups of pixels which have similar characteristics [19]. Superpixel methods have several useful properties: they usually align well with object contours if the objects are not too blurry and the background is not too cluttered, and they enforce local smoothness because pixels belonging to a superpixel are often from the same object.

To address the above disadvantages of Ncut, it is natural to combine Ncut with superpixels. We can obtain higher level features from superpixels and reduce the size of the affinity matrix in Ncut. We shall call this approach ‘SNcut.’ We discuss below the challenges of doing this, including superpixel denoising, graph formation and food segmentation evaluation.
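To make concrete the cost that Ncut minimizes, the following minimal Python sketch evaluates the normalized cut criterion of [12] for a bipartition of a small superpixel graph. The function name, the edge-dictionary layout and the toy weights are illustrative assumptions and are not taken from our implementation.

```python
def ncut_value(weights, part_a):
    """Normalized-cut cost of splitting a graph into part_a and its complement.

    weights: dict mapping an undirected edge (u, v) to a non-negative weight.
    part_a:  set of nodes on one side of the cut.
    Assumes both sides have at least one incident edge (non-zero association).
    """
    cut = assoc_a = assoc_b = 0.0
    for (u, v), w in weights.items():
        if u in part_a and v in part_a:
            assoc_a += 2 * w          # counted once from each endpoint
        elif u not in part_a and v not in part_a:
            assoc_b += 2 * w
        else:
            cut += w                  # edge crosses the partition
            assoc_a += w
            assoc_b += w
    return cut / assoc_a + cut / assoc_b


# Toy graph (hypothetical weights): superpixels 0-1 and 2-3 are similar,
# while 1-2 are dissimilar.
toy = {(0, 1): 0.9, (1, 2): 0.1, (2, 3): 0.8}
good_split = ncut_value(toy, {0, 1})   # cuts only the weak edge
bad_split = ncut_value(toy, {0})       # cuts a strong edge
print(good_split < bad_split)
```

Because the number of nodes equals the number of superpixels rather than the number of pixels, the affinity matrix on which this cost is optimized is far smaller than in pixel-level Ncut.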

2.1. Superpixels and Denoising

We use simple linear iterative clustering (SLIC) [20] to provide an initial segmentation in SNcut. SLIC is a fast and memory efficient method that performs local clustering of pixels in a 5D space consisting of L, a, b from the CIELAB color space and the x, y pixel coordinates. It has shown good performance for several popular segmentation datasets [20]. When SLIC tries to preserve the shape or boundary of objects, there is a tradeoff between the similarity measure in the LAB color space and the spatial distance between pixels, also regarded as proximity. This sometimes results in small segmented regions scattered around the “true segment”. We propose to use a Gaussian filter with a variance, σ, on the original image and a median filter [21] with a fixed radius in pixels, r, following SLIC to merge the noisy segments. We then construct a weighted graph by using the proximity and similarity in color and texture features within the superpixel neighborhood, which will be discussed in Section 2.2.
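The post-SLIC denoising step can be sketched as a median filter applied to the integer label map. The sketch below is a minimal pure-Python illustration, assuming a square (2r + 1) × (2r + 1) window clipped at the image border; the function name and the toy label map are hypothetical, and the window shape used in our system may differ.

```python
from statistics import median_low


def median_filter_labels(labels, r):
    """Median-filter a 2D label map (list of lists of ints).

    Assumption: a square window of radius r, clipped at the image border.
    median_low always returns a value present in the window, so the output
    only contains labels that already exist in the map.
    """
    h, w = len(labels), len(labels[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [labels[j][i]
                      for j in range(max(0, y - r), min(h, y + r + 1))
                      for i in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = median_low(window)
    return out


# A 5 x 5 region of superpixel 1 with one stray pixel labeled 7.
noisy = [[1] * 5 for _ in range(5)]
noisy[2][2] = 7
cleaned = median_filter_labels(noisy, r=1)
print(cleaned[2][2])
```

In this toy case the isolated label is absorbed by the surrounding superpixel, which is the behavior the denoising step relies on.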

2.2. Proximity, Color and Texture Cues

When constructing the weighted graph of superpixels we only allow adjacent superpixels to be connected, which ensures a local Markov relationship [22]. We use average RGB pixel values and a customized local binary pattern (LBP) [23], discussed below, as the color and texture cues for the superpixels. The average RGB pixel value is obtained over all the pixels in the corresponding superpixel. LBP is a particular case of the Texture Spectrum model proposed in [24]. The customized LBP feature vector is created in the following manner: (1) For each pixel whose 3 × 3 neighborhood lies within the superpixel, compare the pixel with its 8 neighbors: if the center pixel value in the L channel is greater than that of the neighbor, the output is 1, otherwise 0. (2) Following a clockwise order, concatenate the binary results into an 8-dimensional vector. (3) Treating the resulting vector as a binary number, convert it to a decimal number and construct a histogram of these numbers for each superpixel. (4) Finally, the histogram is sampled into 9 bins and normalized.
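The four steps of the customized LBP feature can be sketched as follows. This is a minimal illustration under stated assumptions: the clockwise order starts at the top-left neighbor, the first comparison becomes the most significant bit, and the 256 possible codes are grouped into 9 bins of equal width. These choices, together with all names in the code, are hypothetical.

```python
# Clockwise neighbor offsets (dy, dx); starting at the top-left is an assumption.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(L, labels, sp, n_bins=9):
    """Normalized LBP histogram of superpixel `sp`.

    L:      2D list with the L-channel value of each pixel.
    labels: 2D list with the superpixel label of each pixel.
    """
    h, w = len(L), len(L[0])
    hist = [0] * n_bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            if labels[y][x] != sp:
                continue
            # Step 1: keep only pixels whose 3 x 3 neighborhood is inside sp.
            if any(labels[y + dy][x + dx] != sp for dy, dx in OFFSETS):
                continue
            # Steps 2-3: concatenate the 8 comparisons into one binary number.
            code = 0
            for dy, dx in OFFSETS:
                code = (code << 1) | (1 if L[y][x] > L[y + dy][x + dx] else 0)
            # Step 4: equal-width binning of the 256 codes (assumed scheme).
            hist[code * n_bins // 256] += 1
    total = sum(hist)
    return [c / total for c in hist] if total else [0.0] * n_bins


flat = [[5] * 5 for _ in range(5)]            # no texture: every code is 0
peak = [[0, 0, 0], [0, 10, 0], [0, 0, 0]]     # center brighter than all neighbors
hist_flat = lbp_histogram(flat, [[0] * 5 for _ in range(5)], sp=0)
hist_peak = lbp_histogram(peak, [[0] * 3 for _ in range(3)], sp=0)
print(hist_flat, hist_peak)
```

A uniform patch puts all of its mass in the first bin, while an isolated bright pixel falls in the last bin, so the two patches are maximally separated in this feature space.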

To translate the color and texture features into edge weights in our graph model, we first use χ2 test [25] in texture space and L2 distance in color space to obtain similarity measures. The χ2 distance between two LBP vectors, g and h, associated with two superpixels, Sg, Sh is defined as:

| (1) |
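As an illustration, one common form of the χ2 histogram distance is sketched below. The factor of 1/2 and the skipping of empty bins are conventions assumed here, so the result may differ from Eq. (1) by a constant factor; the function name is hypothetical.

```python
def chi2_distance(g, h):
    """Chi-square distance between two histograms of equal length.

    Assumed convention: 0.5 * sum((g_k - h_k)^2 / (g_k + h_k)),
    skipping bins where both histograms are empty.
    """
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(g, h) if a + b > 0)


same = chi2_distance([0.2, 0.8], [0.2, 0.8])      # identical textures
disjoint = chi2_distance([1.0, 0.0], [0.0, 1.0])  # no shared bins
print(same, disjoint)
```

With normalized histograms this convention bounds the distance between 0 (identical) and 1 (no overlapping bins).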

The L2 distance in the RGB space is denoted as Dcolor. We then map the similarity measurements to probability estimates:

| (2) |

Based on the experiments, we set σcolor to be 255 and σtexture = βDtexture, where β ∈ [8, 12]. Finally, the edge weight is obtained,

| (3) |

where I(·) represents the indicator function:

| (4) |
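The restriction of graph edges to adjacent superpixels, stated at the start of this section, can be sketched as below. The sketch assumes 4-connectivity between pixels when deciding whether two superpixels touch, and it leaves the edge weight as a caller-supplied function rather than assuming a particular form for it; all names are hypothetical.

```python
def adjacent_pairs(labels):
    """Set of superpixel label pairs (a, b), a < b, that share a pixel border.

    Assumption: two superpixels are adjacent if any of their pixels are
    horizontal or vertical neighbors (4-connectivity).
    """
    h, w = len(labels), len(labels[0])
    pairs = set()
    for y in range(h):
        for x in range(w):
            a = labels[y][x]
            for ny, nx in ((y + 1, x), (y, x + 1)):
                if ny < h and nx < w and labels[ny][nx] != a:
                    b = labels[ny][nx]
                    pairs.add((min(a, b), max(a, b)))
    return pairs


def build_graph(labels, weight_fn):
    """Sparse weighted graph: only adjacent superpixels receive an edge."""
    return {pair: weight_fn(*pair) for pair in adjacent_pairs(labels)}


# Three vertical stripes: 0 touches 1 and 1 touches 2, but 0 never touches 2.
stripes = [[0, 1, 2],
           [0, 1, 2]]
graph = build_graph(stripes, weight_fn=lambda a, b: 1.0)  # placeholder weight
print(sorted(graph))
```

Since each superpixel has only a handful of neighbors, the resulting affinity matrix is sparse, which keeps the memory footprint small.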

2.3. Object Based Segmentation Evaluation

Similarity between the automatically segmented image and a human annotated image (ground-truth) can be used to evaluate the performance of the proposed method. Existing segmentation evaluation metrics include region differencing assessments [26, 27, 28], which measure the degree of overlap between segmented regions, boundary matching [29, 30], Variation of Information (VoI) [31] and non-parametric tests such as Cohen Kappa [32] and the normalized Probabilistic Rand Index (PRI) [33].

Precision and recall [29, 30] are widely used in boundary-based segmentation evaluations. However, some types of error, such as an under-segmented region that overlaps with two ground-truth segments with only a few missing boundary pixels in between, cannot be detected by boundary-based evaluation. We believe for food image segmentation a precise segment of each food is preferred over partially accurate boundaries. We adopt the region-based precision and recall proposed in [26] and adjust several criteria to focus on the objects of interest, or foods in our case.

Let S = {S1, S2, …, SM} be a set of segments in an image generated by a segmentation method and G = {G1, G2, …, GN} a set of ground-truth segments of all foreground objects in the same image, where Si (i = 1, …, M) and Gj (j = 1, …, N) represent the individual segments. M is the total number of segments generated by the segmentation method and N is the total number of segments in the ground-truth.

The precision P for segment Si and ground-truth Gj can be defined as P(Si, Gj) = |Si ∩ Gj| / |Si|, where |A| is the total number of pixels in A. Similarly, the recall R for Si and Gj can be defined as R(Si, Gj) = |Si ∩ Gj| / |Gj|. The F-measure, F [34], can then be estimated using precision and recall:

| (5) |

where α is the weight. We do not have a preference between precision and recall, hence we set α = 0.5.
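As a worked illustration, region-based precision, recall and the weighted F-measure can be computed on sets of pixel indices as sketched below. The sketch assumes the standard region-based definitions (intersection size over segment size for precision, over ground-truth size for recall) and the weighted form F = PR / (αR + (1 − α)P) from [34]; the function names and toy pixel sets are hypothetical.

```python
def precision(seg, gt):
    """Fraction of the segment's pixels that lie in the ground-truth region."""
    return len(seg & gt) / len(seg)


def recall(seg, gt):
    """Fraction of the ground-truth region's pixels covered by the segment."""
    return len(seg & gt) / len(gt)


def f_measure(p, r, alpha=0.5):
    """Weighted F-measure (assumed form); alpha = 0.5 gives the harmonic mean."""
    if p == 0 or r == 0:
        return 0.0
    return p * r / (alpha * r + (1 - alpha) * p)


seg = {0, 1, 2, 3}               # pixels of a segment
gt = {2, 3, 4, 5, 6, 7}          # pixels of a ground-truth region
p, r = precision(seg, gt), recall(seg, gt)
f = f_measure(p, r)
print(p, r, f)
```

Here two of the four segment pixels fall inside the six-pixel ground-truth region, so precision is 1/2, recall is 1/3 and their harmonic mean is 0.4.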

Furthermore, we want to know which objects in the image are correctly segmented and how accurate those segments are. It is likely that M ≠ N, hence we want to match each foreground segment Si to a Gj where Si and Gj represent the same object in the image. In order to fairly match the segments, we introduce the overlap score (O) [27], O(Si, Gj) = |Si ∩ Gj| / |Si ∪ Gj|. Unlike precision and recall, the overlap score is low for both over-segmentation and under-segmentation, and only high when the segmentation is precise and accurate. The evaluation is then done as follows:

- The background ground-truth segment GB can be found as the complement of G1 ∪ G2 ∪ … ∪ GN in the image, i.e. all pixels not covered by any Gj.

- For each segment Si in S, P(Si, GB) and P(Si, Gj) for all the Gj in G are estimated. If P(Si, GB) is higher than P(Si, Gj) for all Gj, we consider Si as a background segment.

- For each remaining Si, O(Si, Gj) is estimated for all the Gj in G, and (Si, Gj) is considered as a matched pair if it has the highest overlap score.

- Let Ij = {Si: (Si, Gj) is a matched pair, i ∈ [1, …, M]} and l(Ij) be the number of elements in Ij that match Gj. The precision for this image can be estimated as:

| (6) |

The Recall and Overlap score can be estimated in a similar way.
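The matching procedure above can be sketched in a few lines. The sketch below is an assumption-laden illustration, not our evaluation code: it assumes the overlap score is intersection over union, that the image-level precision averages P(Si, Gj) first over the segments matched to each Gj and then over all Gj, and that a ground-truth object with no matched segment contributes zero. All names are hypothetical.

```python
def overlap(seg, gt):
    """Overlap score, assumed to be intersection over union."""
    return len(seg & gt) / len(seg | gt)


def image_precision(segments, truths, all_pixels):
    """Object-based precision for one image (assumed averaging scheme)."""
    background = all_pixels - set().union(*truths)
    matched = {j: [] for j in range(len(truths))}
    for seg in segments:
        p_bg = len(seg & background) / len(seg)
        p_fg = [len(seg & gt) / len(seg) for gt in truths]
        if p_bg > max(p_fg):
            continue                      # treated as a background segment
        # Match the segment to the ground truth with the highest overlap.
        j = max(range(len(truths)), key=lambda k: overlap(seg, truths[k]))
        matched[j].append(seg)
    per_object = []
    for j, gt in enumerate(truths):
        segs = matched[j]
        score = sum(len(s & gt) / len(s) for s in segs) / len(segs) if segs else 0.0
        per_object.append(score)
    return sum(per_object) / len(truths)


# A toy 10-pixel image with two food objects and four segments.
pixels = set(range(10))
truths = [{0, 1, 2, 3}, {6, 7}]
segments = [{0, 1}, {2, 3, 4}, {5}, {6, 7, 8, 9}]
score = image_precision(segments, truths, pixels)
print(score)
```

In the toy image the first object is over-segmented into two pieces and the second is under-segmented, and both effects lower the averaged score relative to a perfect segmentation.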

The above conveys our ideas of “good food image segmentation”: (1) Compared to the natural image segmentation criteria, we do not care much about the background extraction because some simple filters are able to eliminate over-segmented background [5]. Classifiers are often more effective in differentiating background from foods. (2) We favor a precise food segment and penalize over-segmentation by averaging all suitable matches, because classifying many small segments is time-consuming and often generates unreliable results.

3. EXPERIMENTAL RESULTS

In this section, we evaluate the proposed image segmentation method on the TADA food segmentation dataset (TSDS) and the Berkeley Segmentation Dataset (BSDS) [35]. The TSDS contains 200 hand-segmented food images, which are collected from a larger dataset including a total of 1453 images of 56 commonly eaten food items acquired by 45 users in natural eating conditions. Each image contains 5 different food items and 3 utensil items on average. The BSDS consists of 500 natural images of diverse scene categories. Each image is manually segmented by 5 human subjects on average.

3.1. TADA Food Dataset

On the TSDS, we mainly compare the proposed segmentation method with local variation [11] and hierarchical segmentation [9] for two reasons. First, based on our previous work [4, 5], local variation outperforms other methods, including Ncut, Mean Shift and active contours, for the goal of food segmentation. Second, hierarchical segmentation is a popular method for general image segmentation, and has recently been adopted for food identification tasks [15]. For the proposed method discussed in Section 2, we use σ = 0.9, r = 12, β = 10, ϵ1, ϵ2 ∈ [0.6, 0.8], and a number of superpixels ranging from 120 to 200 in the following experiments.

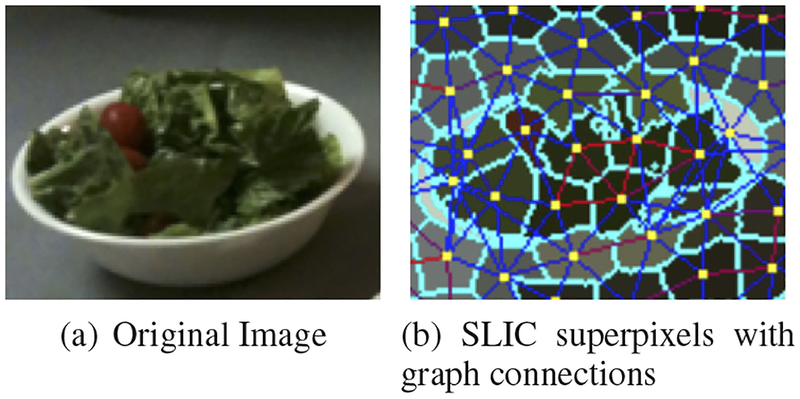

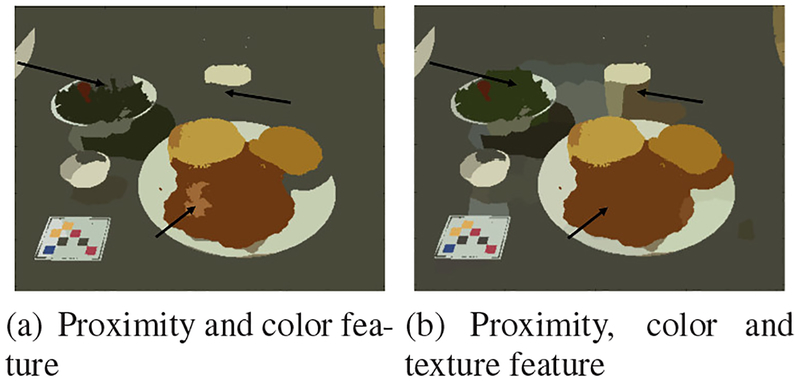

Figure 1 demonstrates the formation of the weighted graph using SLIC superpixels. In Figure 1, all the adjacent superpixels are connected based on their similarity in proximity, color and texture spaces. The red connections indicate that two superpixels are more similar while the blue connections show dissimilarity. Figure 2 shows the efficacy of the customized LBP feature. Using a graph model based only on color feature and proximity results in the image on the left. The image on the right takes texture feature into account as well. The black arrows in Figure 2 point at some of the segmentation variations. As shown in Figure 2(b), texture feature helps to include food regions with higher color variance.

Fig. 1. SLIC superpixels with labeled graph connections. The red connections indicate two similar superpixels, and the blue connections indicate two dissimilar superpixels.

Fig. 2. Proposed segmentation method with different features. Arrows point to areas of segmentation difference.

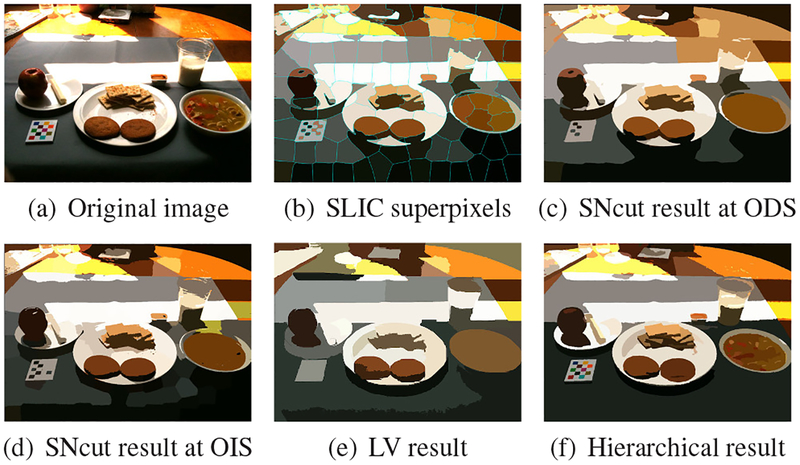

Figure 3 compares the results of SNcut to LV and hierarchical segmentation. We see that both SNcut and the hierarchical image segmentation are better at preserving the actual food contour than LV. However, hierarchical segmentation is often sensitive to edges inside food items, resulting in over-segmented regions, for example the noodle soup in Figure 3.

Fig. 3. Proposed segmentation method.

From (a) to (f): Original image, initial SLIC superpixels, SNcut output by thresholding at Optimal Dataset Scale [13], SNcut output by thresholding at Optimal Image Scale [13], output of LV and the hierarchical segmentation (more examples are included in the supplemental material).

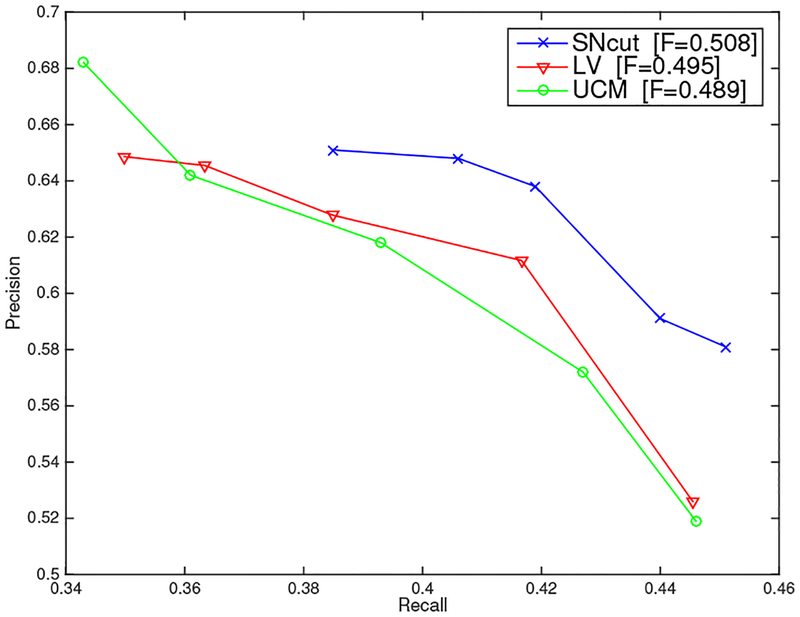

Figure 4 shows the PR curve and F-measure scores of SNcut, LV and hierarchical segmentation in different configurations. We evaluate all three methods using the object based evaluation metric discussed in Section 2.3. As illustrated, SNcut outperforms both LV and hierarchical segmentation. Due to the fact that our evaluation method averages precision and recall over the number of segments associated with a certain food ground-truth, both precision and recall scores are low when the image is severely under-segmented or over-segmented.

Fig. 4. Precision and Recall on TSDS using the evaluation method discussed in Section 2.3.

3.2. Berkeley Dataset

We report 4 different segmentation metrics for the BSDS based on the region-based evaluation method proposed in [13]: the Optimal Dataset Scale (ODS), or best F-measure on the dataset for a fixed scale, the Optimal Image Scale (OIS), or aggregate F-measure on the dataset for the best scale in each image, Variation of Information (VoI) and Probabilistic Rand Index (PRI). VoI measures the amount of information in one segmentation that is not contained in the other, here the ground-truth and the segmentation result. PRI measures the likelihood that a pair of pixels is grouped consistently in the two segmentations. A better segmentation usually has higher PRI and lower VoI.
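For intuition about the last two metrics, the sketch below computes the Rand index and the Variation of Information between two flattened label maps. It is a simplified illustration: the Rand index shown is the single ground-truth case that PRI averages over several human segmentations, natural logarithms are an assumed choice of base, and the function names are hypothetical.

```python
from collections import Counter
from math import log


def rand_index(a, b):
    """Fraction of pixel pairs on which two labelings agree (same/different)."""
    n = len(a)
    agree = 0
    for i in range(n):
        for j in range(i + 1, n):
            agree += (a[i] == a[j]) == (b[i] == b[j])
    return agree / (n * (n - 1) / 2)


def variation_of_information(a, b):
    """VoI = H(a) + H(b) - 2 I(a; b), using natural logarithms (assumed base)."""
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))

    def entropy(counts):
        return -sum(c / n * log(c / n) for c in counts.values())

    mutual = sum(c / n * log(c * n / (pa[x] * pb[y])) for (x, y), c in pab.items())
    return entropy(pa) + entropy(pb) - 2 * mutual


truth = [0, 0, 1, 1]
relabeled = [5, 5, 9, 9]     # same grouping, different label names
crossed = [0, 1, 0, 1]       # grouping unrelated to the ground truth
print(rand_index(truth, relabeled), variation_of_information(truth, relabeled))
print(rand_index(truth, crossed), variation_of_information(truth, crossed))
```

Both measures depend only on how pixels are grouped, not on the label values, which is why the relabeled segmentation scores as a perfect match.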

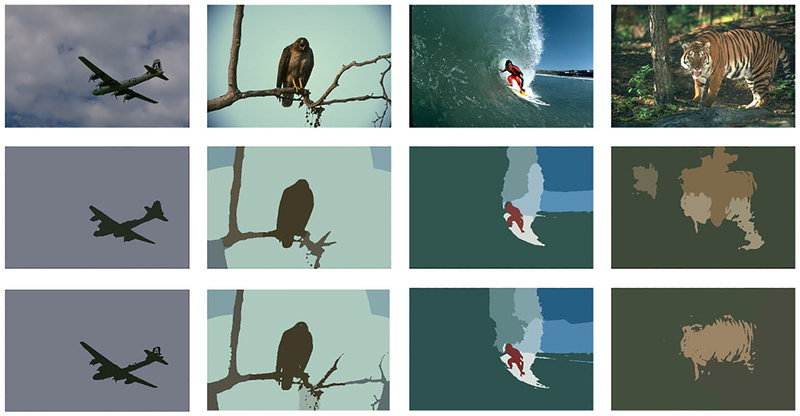

Some examples are shown in Figure 5. The scores are summarized in Table 1. The results for methods other than SNcut are collected from [13]. We see that SNcut improves the score over Ncut by a relatively large margin. However, SNcut does not clearly separate itself from other methods when compared to the hierarchical segmentation, or gPb-owt-UCM. gPb-owt-UCM trains on natural images to learn significant object contours, so it is more resistant to over-segmentation inside the object of interest when we set a proper threshold for the boundaries. Moreover, SNcut depends on the initial superpixel map to extract the objects of interest, which results in a loss of global information. Compared with the objects in natural images, foods usually have more homogeneous textures and more uniform colors. Thus, the way superpixels enforce local smoothness works better for food images.

Fig. 5. Proposed segmentation method.

From top to bottom: Original image, segmentations output by thresholding at ODS and OIS. We have included a supplementary file which contains more examples of our segmentation method.

Table 1. Region based segmentation evaluation on the BSDS.

| Methods | PRI | VoI | ODS | OIS |
|---|---|---|---|---|
| Ncut | 0.75 | 2.18 | 0.44 | 0.53 |
| LV | 0.77 | 2.15 | 0.51 | 0.58 |
| Mean Shift | 0.78 | 1.83 | 0.54 | 0.58 |
| SNcut | 0.78 | 2.11 | 0.55 | 0.59 |
| gPb-owt-UCM | 0.81 | 1.68 | 0.58 | 0.64 |

On average, SNcut takes less than 3 seconds to segment one 481 × 321 image in the BSDS. It is comparable to LV and 20 times faster than Ncut. For the full resolution 2048 × 1536 images in the TADA food dataset, SNcut takes 45 seconds to compute on average, which is 1.5 times faster than gPb-owt-UCM. All experiments were conducted on a desktop with a quad-core 3.7 GHz CPU and 16 GB RAM.

4. CONCLUSION AND FUTURE WORK

In this paper, we present a segmentation method that combines Ncut and superpixel techniques. We also introduce an object based segmentation evaluation method, especially for multi-food images. Experimental results suggest that the proposed method using multiple simple features is effective for food segmentation. According to our evaluation metric, SNcut outperforms some widely used segmentation methods and it also produces competitive results for natural images based on other segmentation benchmarks. In the future, we would like to investigate supervised learning on the weighted graph formation and explore a GPU implementation to further speed up the segmentation process.

Acknowledgments

This work was partially sponsored by a grant from the US National Institutes of Health under grant NIEH/NIH 2R01ES012459–06. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the US National Institutes of Health. Address all correspondence to Edward J. Delp, ace@ecn.purdue.edu or see www.tadaproject.org.

5. REFERENCES

- [1] Daugherty BL, Schap TE, Ettienne-Gittens R, Zhu F, Bosch M, Delp EJ, Ebert DS, Kerr DA, and Boushey CJ, “Novel technologies for assessing dietary intake: Evaluating the usability of a mobile telephone food record among adults and adolescents,” Journal of Medical Internet Research, vol. 14, no. 2, p. e58, April 2012.
- [2] Six BL, Schap TE, Zhu F, Mariappan A, Bosch M, Delp EJ, Ebert DS, Kerr DA, and Boushey CJ, “Evidence-based development of a mobile telephone food record,” Journal of the American Dietetic Association, vol. 110, no. 1, pp. 74–79, January 2010.
- [3] Zhu F, Bosch M, Woo I, Kim S, Boushey C, Ebert D, and Delp E, “The use of mobile devices in aiding dietary assessment and evaluation,” IEEE Journal of Selected Topics in Signal Processing, vol. 4, no. 4, pp. 756–766, August 2010.
- [4] Zhu F, Bosch M, Khanna N, Boushey C, and Delp E, “Multiple hypotheses image segmentation and classification with application to dietary assessment,” IEEE Journal of Biomedical and Health Informatics, vol. 19, no. 1, pp. 377–388, 2015.
- [5] He Y, Xu C, Khanna N, Boushey C, and Delp E, “Food image analysis: Segmentation, identification and weight estimation,” Proceedings of IEEE International Conference on Multimedia and Expo, pp. 1–10, July 2013, San Jose, CA.
- [6] Beucher S, “Watershed, hierarchical segmentation and waterfall algorithm,” Mathematical morphology and its applications to image processing. Springer, 1994, pp. 69–76.
- [7] Alpert S, Galun M, Brandt A, and Basri R, “Image segmentation by probabilistic bottom-up aggregation and cue integration,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, no. 2, pp. 315–327, 2012.
- [8] Arbelaez P, “Boundary extraction in natural images using ultrametric contour maps,” Proceedings of IEEE International Conference on Computer Vision and Pattern Recognition Workshop, pp. 182–182, June 2006, New York, NY.
- [9] Arbelaez P, Maire M, Fowlkes C, and Malik J, “Contour detection and hierarchical image segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 5, pp. 898–916, 2011.
- [10] Donoser M and Schmalstieg D, “Discrete-continuous gradient orientation estimation for faster image segmentation,” Proceedings of IEEE International Conference on Computer Vision and Pattern Recognition, pp. 3158–3165, 2014.
- [11] Felzenszwalb PF and Huttenlocher DP, “Efficient graph-based image segmentation,” International Journal of Computer Vision, vol. 59, no. 2, pp. 167–181, 2004.
- [12] Shi J and Malik J, “Normalized cuts and image segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, no. 8, pp. 888–905, August 2000.
- [13] Arbelaez P, Maire M, Fowlkes C, and Malik J, “From contours to regions: An empirical evaluation,” Proceedings of IEEE International Conference on Computer Vision and Pattern Recognition, pp. 2294–2301, June 2009, Miami, FL.
- [14] Matsuda Y, Hoashi H, and Yanai K, “Recognition of multiple-food images by detecting candidate regions,” Proceedings of IEEE International Conference on Multimedia and Expo, pp. 25–30, July 2012, Melbourne, Australia.
- [15] Bettadapura V, Thomaz E, Parnami A, Abowd G, and Essa I, “Leveraging context to support automated food recognition in restaurants,” Proceedings of IEEE Winter Conference on Applications of Computer Vision, pp. 580–587, January 2015, Waikoloa Beach, HI.
- [16] Meyers A, Johnston N, Rathod V, Korattikara A, Gorban A, Silberman N, Guadarrama S, Papandreou G, Huang J, and Murphy KP, “Im2calories: Towards an automated mobile vision food diary,” Proceedings of the IEEE International Conference on Computer Vision, pp. 1233–1241, December 2015, Santiago, Chile.
- [17] Beijbom O, Joshi N, Morris D, Saponas S, and Khullar S, “Menu-match: Restaurant-specific food logging from images,” Proceedings of IEEE Winter Conference on Applications of Computer Vision, pp. 844–851, January 2015, Waikoloa Beach, HI.
- [18] Bosch M, Zhu F, Khanna N, Boushey C, and Delp E, “Combining global and local features for food identification and dietary assessment,” Proceedings of the IEEE International Conference on Image Processing, pp. 1789–1792, September 2011, Brussels, Belgium.
- [19] Ren X and Malik J, “Learning a classification model for segmentation,” Proceedings of IEEE International Conference on Computer Vision, pp. 10–17, June 2003, Madison, Wisconsin.
- [20] Achanta R, Shaji A, Smith K, Lucchi A, Fua P, and Susstrunk S, “SLIC superpixels compared to state-of-the-art superpixel methods,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, no. 11, pp. 2274–2282, November 2012.
- [21] Brownrigg DRK, “The weighted median filter,” Communications of the ACM, vol. 27, no. 8, pp. 807–818, August 1984.
- [22] Lauritzen SL, Dawid AP, Larsen BN, and Leimer H-G, “Independence properties of directed markov fields,” Networks, vol. 20, no. 5, pp. 491–505, 1990.
- [23] Ojala T, Pietikäinen M, and Mäenpää T, “Multiresolution gray-scale and rotation invariant texture classification with local binary patterns,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 24, no. 7, pp. 971–987, 2002.
- [24] Wang L and He D-C, “Texture classification using texture spectrum,” Pattern Recognition, vol. 23, no. 8, pp. 905–910, 1990.
- [25] Yates F, “Contingency tables involving small numbers and the χ2 test,” Supplement to the Journal of the Royal Statistical Society, vol. 1, no. 2, pp. 217–235, 1934.
- [26] Martin DR, “An empirical approach to grouping and segmentation,” Ph.D. dissertation, Electrical Engineering and Computer Sciences Department, University of California, Berkeley, August 2003.
- [27] Malisiewicz T and Efros AA, “Improving spatial support for objects via multiple segmentations,” British Machine Vision Conference, September 2007.
- [28] Weidner U, “Contribution to the assessment of segmentation quality for remote sensing applications,” International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. 37, no. B7, pp. 479–484, 2008.
- [29] Martin DR, Fowlkes C, and Malik J, “Learning to detect natural image boundaries using local brightness, color, and texture cues,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 26, no. 5, pp. 530–549, 2004.
- [30] Estrada FJ and Jepson AD, “Benchmarking image segmentation algorithms,” International Journal of Computer Vision, vol. 85, no. 2, pp. 167–181, November 2009.
- [31] Meilă M, “Comparing clusterings: an axiomatic view,” Proceedings of the International Conference on Machine Learning, pp. 577–584, August 2005, Bonn, Germany.
- [32] Cohen J, “A coefficient of agreement for nominal scales,” Educational and Psychological Measurement, vol. 20, no. 1, pp. 37–46, 1960.
- [33] Unnikrishnan R, Pantofaru C, and Hebert M, “Toward objective evaluation of image segmentation algorithms,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 29, no. 6, pp. 929–944, 2007.
- [34] Rijsbergen CJV, Information Retrieval, 2nd ed. Newton, MA, USA: Butterworth-Heinemann, 1979.
- [35] Martin DR, Fowlkes C, Tal D, and Malik J, “A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics,” Proceedings of IEEE International Conference on Computer Vision, vol. 2, pp. 416–423, July 2001.