Over the past several decades, the focus of the school psychologist has shifted in many ways, none more significantly than in the psychologist's role in the assessment process. The shift away from the test/deficit/place model toward a Multi-Tiered System of Supports (MTSS) approach is substantial, and recent literature is replete with studies and recommendations for adapting to and implementing it (Eagle, Dowd-Eagle, Snyder, & Holtzman, 2015; Utley & Obiakor, 2015). Regardless of the level of MTSS implementation, school psychologists still face the difficult task of assessing a wide variety of students, many of whom have intellectual disability (ID).

Advances ranging from the introduction of new assessment tools designed to measure specific constructs to the mapping of the human genome and the subsequent identification of genetically linked disabilities have both improved and further complicated the assessment process (Munger, Gill, Ormond, & Kirschner, 2007). The difficulty for the school psychologist lies not only in dealing with the developmental and behavioral characteristics associated with these disorders, but also in finding the best match between the child and the assessment tool and in providing accommodations that enhance the validity of results. The situation is further complicated by the many purposes for which assessments are conducted, including but not limited to eligibility, placement, Individualized Education Program (IEP) development, progress monitoring, and research.

A multi-site [Information omitted for blind reviewing process] funded project is validating the [Information omitted for blind reviewing process] and is the context for this paper (citation removed for blind reviewing process). Extensive field research, coupled with a review of the literature, has revealed useful strategies for improving standardized assessments of individuals with ID. This paper outlines a model for practice developed through this research and describes unique considerations for assessing individuals with ID. First, it explores professional standards for assessing individuals with disabilities and the legal precedent for providing accommodations. Next, it proposes a model for assessing individuals with ID that consists of a four-stage cyclical approach to the assessment process. Practical ideas for accommodations are intended to help researchers, clinicians, and educators meet the unique needs of individuals with ID when administering standardized assessments, with the aim of yielding more valid and representative results. Finally, the paper ends with three subsections on assessment issues specific to the behavioral phenotypes of Fragile X syndrome (FXS), Down syndrome (DS), and ID comorbid with Autism Spectrum Disorder (ASD).

Several key terms merit definition. First, this paper addresses a variety of professionals, including but not limited to school psychologists, clinical psychologists, research psychologists, special educators, and speech-language pathologists. The term assessment administrator (AA) refers to anyone conducting psychoeducational assessments. This report discusses assessment practices with individuals with ID, who may be students, patients, research participants, consumers, or clients. ID is a disability characterized by significantly subaverage general intellectual functioning, existing concurrently with deficits in adaptive behavior and manifested during the developmental period, that adversely affects educational performance (IDEA, 2004). For the purposes of this paper, standardized assessments are individually administered, norm-referenced tests with "standard," or consistent, administration requirements (Sattler, 2008). Assessment refers to the process of collecting data to evaluate current developmental levels. This may be part of a special education evaluation, a clinical evaluation, or a research protocol. It is important to differentiate this type of assessment from high-stakes, group-administered achievement and state accountability tests (see Christensen, Carver, VanDeZande, & Lazarus, 2011).

Standardized Assessments and Intellectual Disability

Standardized cognitive and educational assessments of individuals with ID provide crucial information for parents, researchers, and educators. Understanding the unique developmental strengths and challenges of an individual with ID is imperative for determining appropriate educational placements and intervention plans (Salvia, Ysseldyke, & Witmer, 2013). Furthermore, assessments can be used in conjunction with targeted medication trials or behavioral/cognitive interventions to establish individual baselines and track growth in a variety of cognitive processes over time (Berry-Kravis et al., 2012). There are also legal implications, such as using cognitive assessment results to determine whether individuals are competent to stand trial (Cheung, 2013). It is essential that educators, clinicians, and researchers obtain accurate measures of developmental progress and growth trajectories for individuals with ID so that appropriate interventions can be validated and promulgated.

Research indicates that participating in standardized assessments can be a taxing experience for individuals with ID and a challenging endeavor for administrators (Berry-Kravis et al., 2006; Hall, Hammond, Hirt, & Reiss, 2012; Herschell, Greco, Filcheck, & McNeil, 2002; Kasari, Brady, Lord, & Tager-Flusberg, 2013; Koegel, Koegel, & Smith, 1997). This is due, in part, to a variety of factors related to ID, including preferences for familiar and predictable routines and people, executive function challenges, sensorimotor delays, and communication deficits associated with speech, language, and/or social-emotional delays (Kenworthy & Anthony, 2013). These individual attributes, coupled with the demands of standardized assessments, can be problematic. For example, the assessment room is frequently a new setting that may unintentionally cause anxiety and discomfort for the individual with ID (Kasari et al., 2013). The AA may also be new to the individual and unfamiliar with his or her specific needs and preferences (Szarko, Brown, & Watkins, 2013). Tasks are artificial and removed from the daily contexts and routines familiar to the examinee. Because ceiling rules (e.g., six consecutive incorrect answers) determine stop points, the AA cannot tell the individual with ID in advance exactly when the session will end, which decreases predictability and may increase stress. If the examinee has articulation delays, it may be difficult to understand verbal responses well enough to score them accurately. Social and emotional delays, oppositional temperament, or mood dysregulation can substantially undermine the examinee's motivation, self-regulation, effort, and persistence (Koegel et al., 1997; Wolf-Schein, 1998).
At times, these testing behaviors compromise feasibility enough to render scores invalid for analysis (Berry-Kravis et al., 2006), or they lead to the unhelpful practice of deeming an individual "untestable," which negates the effort of the assessment and places yet another negative label on the individual with ID (Bagnato & Neisworth, 1994; Bathurst & Gottfried, 1987; Skwerer, Jordan, Brukilacchio, & Tager-Flusberg, 2016).
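The ceiling rule mentioned above works like a simple algorithm, and a short sketch may make its consequence for predictability concrete. The Python function below is a minimal illustration only, assuming a hypothetical subtest whose items are presented from easiest to hardest and which stops after six consecutive incorrect answers, as in the example given earlier; the function name and inputs are hypothetical, and real tests define their own basal and ceiling rules in their examiner's manuals.

```python
from typing import Sequence


def items_administered(responses: Sequence[bool], ceiling_run: int = 6) -> int:
    """Count the items given before a hypothetical ceiling (discontinue) rule is met.

    responses: correct (True) or incorrect (False) outcome for each item, in the
        order the items would be presented (assumed easiest to hardest).
    ceiling_run: number of consecutive incorrect answers that ends the subtest;
        6 mirrors the example in the text, but real tests set their own rules.
    """
    consecutive_errors = 0
    for count, correct in enumerate(responses, start=1):
        # A correct answer resets the run; an incorrect one extends it.
        consecutive_errors = 0 if correct else consecutive_errors + 1
        if consecutive_errors == ceiling_run:
            return count  # ceiling reached: the subtest stops after this item
    return len(responses)  # item set exhausted before a ceiling was reached


# Two hypothetical examinees on the same 20-item subtest. Items listed after
# the ceiling would never actually be administered.
examinee_a = [True] * 3 + [False] * 6 + [True] * 11
examinee_b = [True] * 10 + [False] * 6 + [True] * 4
print(items_administered(examinee_a))  # 9
print(items_administered(examinee_b))  # 16
```

Because the stopping point depends on the examinee's own run of responses, the same subtest ends after 9 items for one hypothetical examinee and 16 for the other, which is why the session length cannot be announced ahead of time.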

Unfortunately, there is a paucity of research on the administration procedures that yield valid standardized assessment results and on the potential importance of accommodations for the ID population. Existing empirical evidence is limited and primarily focused on specific etiologies. Notably, one meta-analysis documented that accommodations aimed at improving motivation during standardized cognitive assessments significantly and positively affected performance for examinees with below-average IQ (Duckworth, Quinn, Lynam, Loeber, & Stouthamer-Loeber, 2011). Further, examiner familiarity gained through intentional rapport building may have positive testing effects for students with ASD (Szarko et al., 2013). Implementing motivation-based accommodations in standardized assessment with individuals with ASD may reduce testing bias related to ASD symptomatology (e.g., lack of social reciprocity) and enhance the validity of results (Koegel et al., 1997). In the FXS research literature, accommodations to standardized procedures have been found to enhance feasibility. Limiting complex verbal instructions, using structured teaching items with reinforcement, and employing computerized administration can address known deficits in social communication skills related to FXS during standardized assessment (Hall et al., 2012; Scerif et al., 2005). Furthermore, contingent reinforcement, frequent breaks for physical activity, and behavioral redirection have been found to increase completion rates for individuals with FXS in a research setting (Berry-Kravis, Sumis, Kim, Lara, & Wuu, 2008). Thus, while limited empirical evidence does appear to support adapting standardized assessments for individuals with ID, the emerging research currently lacks depth and practical applications for practitioners.

Professional Mandates and Legal Precedent

Several professional organizations have issued recommendations designed to ensure fair and valid assessment results when working with special populations (American Educational Research Association [AERA], 2014; American Psychological Association [APA], 2014; National Association of School Psychologists [NASP], 2010; see Table 1). These organizations emphasize a person-centered approach to assessment, tailored to the individual's needs. The recommendations include using psychometrically sound assessment measures that have been validated through empirical research with individuals with disabilities. Accommodations are critical to ensuring that results measure the individual's ability as fairly as possible. Another recommendation is the use of ecological assessment, in which data are collected from a variety of sources and results are interpreted in context. Assessment administrators should ensure the fairness of assessments by reducing bias in the testing process and carefully evaluating the validity of results. Finally, results should be reported in a way that is easy to understand and accessible to the family (AERA, 2014; NASP, 2010).

Table 1.

Alignment of Recommended Practices from Professional Organizations.

| Recommended Practices | American Educational Research Association Standards for Educational and Psychological Testing (AERA, 2014) | American Psychological Association Guidelines for Assessment of and Intervention with Persons with Disabilities (APA, 2014) | National Association of School Psychologists Principles for Professional Ethics (NASP, 2010) |
|---|---|---|---|
| 1. Individualize the assessment process | Standard 10.5 | Guideline 13 | Principle II.3, Responsible Assessment and Intervention Practices: Standard II.3.5 |
| 2. Use psychometrically sound assessments with appropriate accommodations | Standards 3.0, 3.9, 3.10 | Guidelines 14, 15 | Principle II.3, Responsible Assessment and Intervention Practices: Standard II.3.2 |
| 3. Consider data from a variety of sources | Standard 10.12 | Guideline 16 | Principle II.3, Responsible Assessment and Intervention Practices: Standards II.3.3, II.3.4 |
| 4. Evaluate fairness of assessment to reduce bias and ensure validity | Standard 3.11 | Guideline 17 | Principle I.3, Fairness and Justice: Standard I.3.1 |
| 5. Use family-friendly language when reporting results | Standard 10.11 | APA Guidelines do not specifically address this topic. | Principle II.3, Responsible Assessment and Intervention Practices: Standard II.3.8 |

Accommodations

Accommodations are a critical component of fair and valid assessments for individuals with ID. The AERA defines accommodations in assessments as "relatively minor changes to the presentation and/or format of the test, test administration, or response procedures that maintain the original construct and result in scores comparable to those on the original test" (2014, p. 58). This language originally derived from legal cases addressing accommodations in high-stakes achievement and accountability tests (Brookhart v. Illinois, 1983; Hawaii, 1990). Professional organizations and standardized test developers adopted the language established by these cases to provide guidelines to administrators of individual standardized assessments (e.g., Roid, 2003).

These cases established that accommodations are mandated under Section 504 of the Rehabilitation Act of 1973 (Brookhart v. Illinois, 1983). They also indicated that AAs must consider the skills being measured and then ensure that any change in procedures does not affect the validity of the assessment. For instance, providing a reader to a student with dyslexia is inappropriate for those sections of a statewide graduation assessment specifically designed to assess reading ability. Conversely, providing a reader during the math or science portions of the assessment is an appropriate accommodation that preserves the validity of the math or science skills being measured. Most importantly, these cases emphasized the importance of considering accommodations on an individual basis, with regard to the skills of the individual and the goal of the assessment (Hawaii, 1990).

When deciding whether a given accommodation is appropriate, both target skills and access skills should be reviewed before the accommodation is provided (Braden & Elliott, 2003; Phillips, 1994). Target skills are the constructs that an assessment intends to measure, while access skills are the abilities (such as joint attention, fine motor skills, and language ability) that an individual needs in order to understand the testing conditions, content, and instructions and to demonstrate his or her knowledge during a standardized assessment (Braden & Elliott, 2003). When conducting assessments with individuals with ID, results can be rendered invalid by deficits in a variety of access skills, including reduced communicative ability, lack of behavior regulation, compromised fine motor dexterity, and poor trunk stability. Practitioners must consider the individual's abilities and implement accommodations appropriate for the individual's profile of access skills (Braden & Elliott, 2003).

It is also important to distinguish accommodations from modifications. Modifications are changes in standardized assessment procedures that result in underrepresentation of the intended target skill (AERA, 2014). Consider a subtest designed to measure auditory working memory by asking the individual to listen to a stream of spoken numbers and repeat them back to the AA. To demonstrate this target skill validly, an individual with ID must have the access skill of joint attention with the AA. The AA may provide an accommodation for deficits in joint attention by giving a visual or verbal cue that the item will soon be presented, encouraging the examinee to attend to the task. Conversely, repeating the stimulus or presenting it visually would change the target skill of the assessment and therefore render norm-based scores invalid (AERA, 2014).

A Model for Assessment with Individuals with ID

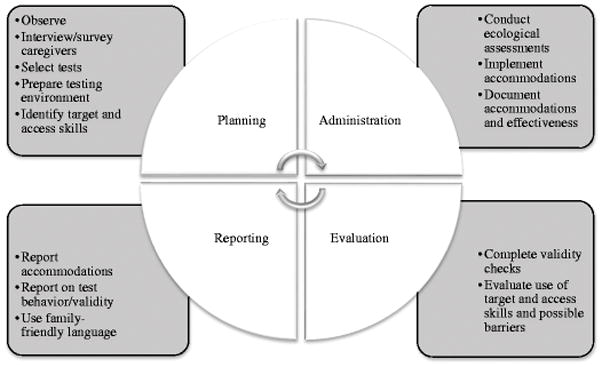

When conducting standardized assessments with individuals with ID, it may be helpful to use a cyclical approach to the assessment process. The proposed assessment cycle has been used successfully in a research setting to increase validity and enhance feasibility for several standardized assessments with a variety of individuals with ID (citation omitted for blind reviewing purposes). It consists of four distinct yet iterative stages: planning, administration, evaluation, and reporting (see Figure 1). This framework fills a gap in the current assessment literature by synthesizing and operationalizing recommended practices from several professional organizations (AERA, 2014; APA, 2014; NASP, 2010) and several empirically tested methods, providing the AA with direction and guidance throughout the assessment process. Furthermore, a set of guiding questions for each stage of the cycle (see Table 2) encourages thoughtful planning and reflection to strengthen professional judgment when conducting assessments with individuals with ID.

Figure 1.

Assessment Cycle.

Table 2.

Guiding Questions for the Assessment Cycle.

| Stage of the Assessment Cycle | Guiding Questions |
|---|---|
| Planning | What is the purpose of the assessment? |
| | What target skills do I want to measure? |
| | What access skills, specific to this individual, may interfere with the assessments? |
| | What do I know about this individual's phenotype that can guide my assessment plan? |
| | How will I reduce barriers in the assessment process? |
| | Which test will best help me to measure the target skill with this individual? |
| | What can I learn from prior assessment reports for this individual? What worked, and what was challenging? |
| Administration | Have I considered multiple sources of data? |
| | What accommodations are required to ensure that I am measuring the target skill? |
| | Will the accommodation affect the validity of the results of the test? |
| Evaluation | What target skill did I aim to measure? |
| | What access skills may have interfered (e.g., self-regulation, dexterity)? |
| | What accommodations did I use to address access skills? |
| | How effective were those strategies? |
| | Did I accurately measure the target skills in this administration? |
| | Was this administration valid? Why or why not? |
| Reporting | Did I answer the referral questions? |
| | Did I use multiple methods to convey assessment results to the family? |
| | Did I report on accommodations, testing behavior, and validity? |
| | Did I include suggestions for future assessments? |

Planning

Time dedicated to the planning phase is well spent, as it is recaptured through a smooth assessment session. The AA must prepare both the examinee and the environment. One way to prepare the individual with ID is to provide as much information as possible in a manner that he or she can understand. Time spent intentionally building rapport during a home or classroom visit may allow the individual to become familiar with the AA in a safe and predictable environment, thereby reducing the individual's anxiety when he or she comes to the assessment room for testing (Szarko et al., 2013). A social story is another useful method for preparing the individual for the assessment process (Gray, 2015). The AA can give the family a detailed, illustrated social story ahead of time, with drawings, photographs, or videos of the environment, the AA, and the assessment items, as well as a storyline describing what to expect during the assessment (Hessl et al., 2016). Finally, when the assessment setting is unfamiliar, the AA might consider meeting the family in the parking lot to support a smooth transition inside.

Semi-structured interviews or parent surveys can provide the AA with crucial information about the individual being tested (Kasari et al., 2013). If the individual has a known developmental disability related to the ID (e.g., FXS, ASD, DS), the AA can use information on the phenotype of that disability to guide the questioning. AAs may wish to ask about triggers for anxiety, sensory preferences or aversions, history of aggression, current behavior plans or reward systems, and preferred foods, items, or experiences that can be used for behavioral reinforcement. Observations in the individual's home or school setting can also alert the AA to behavioral challenges that may act as barriers during the assessment process (Braden & Elliott, 2003). The AA can plan accommodations by noting any current accommodations and verbal cues used in the individual's regular routine. A review of records will inform the AA about accommodations that were used successfully in previous assessment sessions.

Equipped with information about the individual from surveys, interviews, observations, and a review of records, the AA can then engage in thoughtful test selection. Best practice is to use assessments that have been shown to be valid and reliable with individuals with ID and with any comorbid conditions, such as Attention-Deficit/Hyperactivity Disorder (ADHD), anxiety, or ASD (Brue & Wilmshurst, 2016; Crepeau-Hobson, 2014). The AA should check the standardization manuals to see whether any of these subpopulations were included in the norming sample or in validity testing. Examiner's manuals may also suggest specific accommodations for special populations. Research has demonstrated that certain cognitive assessments consistently yield significantly higher or lower IQ scores than others for individuals with ID and ASD (Bodner, Williams, Engelhardt, & Minshew, 2014; Silverman et al., 2010). AAs must therefore take special care to research the validity of the tests they intend to use with this population, as results may be skewed depending on the test chosen. For individuals functioning developmentally below the age of six, the AA may consider supplementing standardized testing with a non-standardized assessment such as the Transdisciplinary Play-Based Assessment, Second Edition (TPBA2; Linder, 2008). Although these assessments do not provide standardized scores, they can yield more authentic information about developmental progress (Bagnato, 2008).

Next, the AA can prepare the assessment environment to reduce distractions and stress triggers for the individual with ID (Sattler, 2008). Whenever possible, the assessment should take place in a quiet room, free of distractions. Limiting visual distractions on the walls and extraneous sounds may help the individual focus his or her attention on the assessment tasks (Kylliäinen, Jones, Gomot, Warreyn, & Falck-Ytter, 2014). Any visual schedule or behavioral prompts should be prepared ahead of time and be readily available to the practitioner during testing. It is helpful to have all test materials organized and ready before bringing the examinee into the testing space (Sattler, 2008). This allows the AA to focus his or her attention and effort on further establishing rapport, responding to any initial discomfort, and gauging the appropriate time to begin testing.

Finally, AAs should ensure that the individual being tested has used the bathroom, has eaten a meal, and has had adequate sleep. Examinees should bring corrective lenses and any augmentative communication devices so that sensory or communication impairments do not interfere with results. AAs may consider rescheduling an assessment session if the individual is ill or is managing complicating side effects from medications. This is also a good time to discuss whether a caregiver should be present in the assessment room. For some individuals, a caregiver's presence brings emotional comfort, while for others it can be highly disruptive (Perry, Condillac, & Freeman, 2002; Sattler, 2008). If a caregiver is present, the AA should explain the standardized nature of the assessment and emphasize that the caregiver's role is to provide reassurance and support rather than assistance in answering questions.

Administration

During the administration stage of the assessment cycle, the AA can use several testing accommodations to enhance the validity of the test results (see Braden & Elliott, 2003; Mather & Wendling, 2014, p. 41). It is critical that accommodations reduce barriers without altering the construct being measured (AERA, 2014). Some common accommodations that may be useful when testing individuals with ID are organized by domain in Table 3. Many other accommodations may also work well, depending on the individual's strengths and needs. Conversely, some of these accommodations may not be relevant or useful, depending on the target and access skills identified as critical during the planning stage. AAs should always document and report the specific accommodations used and their potential effects. This practice provides insight into the assessment being reported and can help inform accommodations for future sessions (AERA, 2014).

Table 3.

Accommodations for Standardized Assessment with Individuals with ID

| Domain | Accommodation |
|---|---|
| Behavioral | Reinforce engagement/effort |
| | Token economy system |
| | Planned ignoring for undesirable behaviors |
| | Visual cues for behavioral expectations (e.g., first/then board, active listening visual prompt) |
| | Provide frequent breaks to accommodate attention span and low stamina |
| | Provide breaks following positive behaviors so as not to reinforce avoidant behaviors |
| | Use abbreviated forms of tests to reduce time spent in the testing situation |
| Communication | Visual schedule (can be detailed with photos of actual test sections) |
| | Provide examinee with a break card or alternative method to request a break |
| | Use only nonverbal subtests, a nonverbal composite, or a nonverbal test |
| | Use simplified instructions to emphasize key phrases |
| | Allow talkers or other assistive technology for communicating responses |
| | Allow examinee to point, rather than verbalize, responses |
| | Provide ample/extended wait time for responses |
| | Repeat instructions as needed (unless doing so invalidates the item) |
| Relational | Home visit prior to testing |
| | Provide plenty of time to build rapport |
| | Start session with play or a social activity to connect |
| | Use digital assessment measures to increase motivation and/or remove stress caused by social interactions with the examiner |
| | Allow a transitional object from home to address issues of separation anxiety |
| Sensory | Use substitute subtests if there are sensory concerns with a subtest (e.g., block tapping is too loud, visual scanning task is visually overstimulating) |
| | Implement individualized sensory integration therapy/sensory diet strategies before testing and between subtests¹ |
| | Provide fidget toys when examinee does not need to use hands for assessment tasks |
| Environmental | Provide a cozy corner, tunnel, or tent for breaks |
| | Provide a sensory area with sensory toys/items |
| | Test examinee over multiple days |
| | Choose a test with no time limits, or remove time limits unless they affect target skills (e.g., when measuring processing speed) |
| | Test examinee in a familiar room (e.g., in the home or a familiar classroom) |
| | Allow a family member or familiar companion to be present in the room (if this is helpful) |
| Motor | Allow examinee to give the examiner verbal directions for item manipulation if he or she is unable to execute the action with precision |
| | Use a touch/scan response, in which the examiner scans items with a finger and the examinee indicates his or her choice with verbal or nonverbal signals |

¹ Empirical results on the use of sensory integration (SI) therapy are mixed (Leong, Carter, & Stephenson, 2015). However, SI therapy is used extensively in clinical practice and may be useful in the assessment process (see Hickman, Stackhouse, & Scharfenaker, 2008). Examples of sensory diet activities include deep pressure, sensory table, walking/motor breaks, swinging, pulling, pushing, lifting, blowing bubbles, and chewing gum.

It is important to note that standardized assessments are only one part of a comprehensive assessment process, especially in clinical or school-based evaluations. An ecological assessment model is the recommended approach, in which the AA gathers data from multiple sources to assemble a comprehensive understanding of an individual’s strengths and needs (APA, 2014; Brue & Wilmshurst, 2016; Crepeau-Hobson, 2014; NASP, 2010). However, for research purposes, standardized assessments may be the sole source of data. In either case, it is critical that assessment results are a valid representation of the individual’s ability (AERA, 2014).

Evaluation

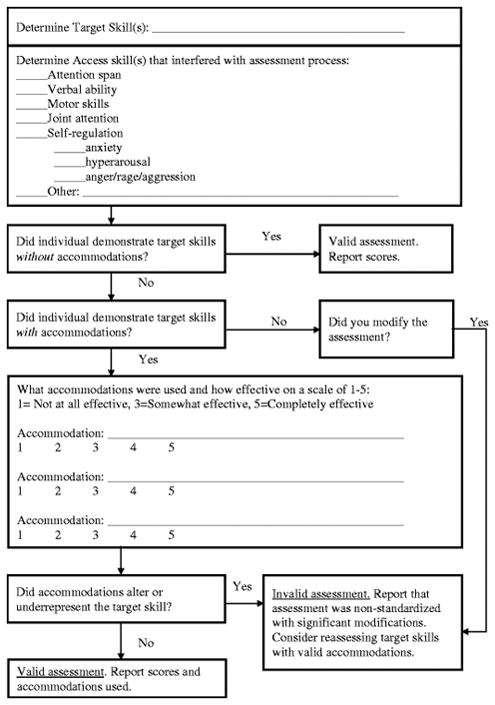

The evaluation stage of the assessment cycle involves reflecting on the fairness of the assessment administration and the validity of the test results. Assessment results should be interpreted in context, considering each individual's life experiences and profile of access skills (AERA, 2014). This stage may reveal flaws in the testing situation and suggest ways to improve validity in future testing. Figure 2 depicts a decision-making process for the AA to use in the evaluation stage. Careful consideration of target skills, access skills, accommodations used, and the effectiveness of those adaptations will help the AA determine the validity of the assessment.

Figure 2.

Evaluation Stage Validity Check. A data-based decision-making process for the AA to use when determining validity of assessment.

Evaluating testing validity can help the AA differentiate between an invalid administration and genuinely low performance. If the AA determines that the assessment was valid and that target skills were indeed measured fairly during administration, he or she can continue to the reporting stage. However, evaluation-stage data may indicate a need to return to the planning and administration stages before moving on to reporting. In that case, data from the evaluation process can guide the AA in addressing the barriers that interfered with testing as further assessments are conducted. It is critical that AAs confirm their test results are valid and meaningful before reporting them or using the data for critical decision-making.

Reporting

The synthesis of information gained through the assessment and translation of that information into applicable knowledge is the true goal of assessment (Riley, 2008). In the reporting stage of the assessment cycle, the AA presents the data to the family and decision-making team in a useful and family-friendly manner (AERA, 2014; NASP, 2010). A strengths-based approach is recommended, in which the report includes strengths and preferences of the individual (APA, 2014; Climie & Henley, 2016; Mastoras, Climie, McCrimmon, & Schwean, 2011).

Conveying the essence of the assessment experience while capturing the profile of the child and expressing it in a manner that is clear, accurate, and sensitive is a complex task (Riley, 2008). The written report is not a perfunctory recitation of skills and numbers. Rather, it is a descriptive picture of an individual that will 1) help the family and other professionals who will serve the individual better understand his or her development and the factors influencing that development, 2) lead the family and professionals who will interact with the individual to a better understanding of his or her needs, and 3) integrate the family in a meaningful way into the assessment outcomes and intervention plan (Brotherton, 2001; Soodak & Erwin, 2000). The report should emphasize what the individual can do at this time and what the next steps in his or her development are. Individual preferences, likes, and dislikes can also be included to encourage strengths-based intervention planning. This does not mean, however, that the report should omit the individual's delays or challenges. It is crucial to address an individual's areas of concern and next steps in order to determine accommodations for the classroom and/or community and to inform intervention plans. The report is the critical bridge that connects assessment with intervention, and it can also inform planning for future assessments. To construct a solid, functional bridge, the AA must understand the varied audiences and purposes of the report and, therefore, the crucial components and characteristics of the document (Riley, 2008).

Data from standardized assessments can be complicated and may be difficult for parents to understand, especially if the results trigger an emotional response (e.g., learning that one’s child has a lifelong disability; Graungaard & Skov, 2007). AAs can address these concerns by using multiple modes of representation when reporting data (e.g., providing visuals and concrete examples of the skills being measured) and by using an empathic and collaborative communication style (Graungaard & Skov, 2007; Tharinger et al., 2008). Furthermore, a pre-meeting to go over results before a larger team meeting can allow the family to process results in a less stressful and more intimate setting. If possible, keeping meetings small and limited to the core team members who are reporting assessment results may help reduce parents’ anxiety. Debriefing after the meeting can help ensure comprehension of results and allow the family to communicate any concerns or questions in a more private setting.

Additionally, person-first language is a critical component of reporting. Person-first language is a form of expression in which the author conveys respect and a positive attitude toward the subject of the report (Snow, 2009). The emphasis is placed on the person rather than the disability: the author literally names the person before the disability (e.g., “patient with Down syndrome” rather than “Down syndrome patient”, or “student with an intellectual disability” rather than “disabled student”). This language communicates respect and emphasizes that there is much more to understand about the individual than his or her disability.

AAs must report any accommodations used during testing and whether standardization was upheld with these accommodations (AERA, 2014). If the AA determined in the evaluation stage that the administration was nonstandardized, the report should either state that test results must be interpreted with caution or abandon standardized scores altogether and rely on qualitative results and clinical judgment instead (Brue & Wilmshurst, 2016). Next, the AA should report on the individual’s testing behavior, noting whether this behavior is typical or unique to the testing situation. A clear statement of validity based on accommodations and testing behavior will help readers interpret the results. AAs will also want to report that standardized scores are merely an estimate of ability in a given area and may overestimate or underestimate the individual’s true ability or what he or she might demonstrate in his or her natural environment. If limited access skills depressed the individual’s scores, the AA can emphasize that, although the scores may underestimate the target skills, they may still accurately depict the individual’s current functioning in the community when proper accommodations are not provided. Furthermore, the individual’s abilities may change over time to varying degrees, depending on how fluid or crystallized the measured constructs are (Sattler, 2008).

Finally, statistical issues with standardized scores in the lower ranges of performance on cognitive assessments can prevent AAs from obtaining and reporting meaningful assessment results. Floor effects limit the sensitivity of assessment tools, especially in the lower ranges (e.g., below a standard score of 40 on many tests; Whitaker & Gordon, 2012). Hessl et al. (2009) and Sansone et al. (2014) addressed this floor effect in some commonly used IQ tests by creating “deviation scores” using a z-transformation of raw scores relative to normative data for each examinee’s age band. The deviation scores captured clinically significant and meaningful variation at the group and individual levels that was not documented by traditional standard scores. The authors recommend deviation scores for clinical studies that need to track change over time or measure response to interventions. Importantly, this method improves sensitivity only for individuals with ID whose subtest scaled scores are at the floor (SS = 1), which is quite common among those in the moderate or severe range of ID. Deviation scores may also be useful for studies examining cognitive profiles or for group matching on dimensions such as IQ. Researchers examining IQ test results of individuals with ID may wish to consult these authors for assistance with the scoring method. Professionals should also strongly consider using this method in educational and clinical settings; when they do, they should present both the standard scores (in cases where these are mandatory) and the z deviation scores derived from this method, with appropriate citations. Comparing the traditional and revised scoring methods over time, across professionals and contexts, will help determine the predictive validity of the new approach.
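The contrast between floored scaled scores and unfloored deviation scores can be illustrated with a minimal Python sketch. It assumes, hypothetically, that the examiner has the normative raw-score mean and standard deviation for each subtest within the examinee’s age band; the subtest names and all numbers below are invented for illustration and do not come from any test manual or from Hessl et al. (2009). Averaging z-scores across subtests is a simplification of the published procedure, used here only to show that raw-score z-values can fall well below z = -3, the lowest value a scaled score of 1 can express on a mean-10, SD-3 metric.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class AgeBandNorms:
    """Hypothetical normative raw-score mean and SD for one subtest in one age band."""
    raw_mean: float
    raw_sd: float


def deviation_z(raw_score: float, norms: AgeBandNorms) -> float:
    """z-transform a raw score relative to the examinee's age-band norms (no floor applied)."""
    return (raw_score - norms.raw_mean) / norms.raw_sd


def scaled_score_z(scaled_score: int) -> float:
    """z implied by a scaled score on a mean-10, SD-3 metric; the floor score of 1 gives -3."""
    return (scaled_score - 10) / 3


def mean_deviation_z(raw_scores: dict[str, float], norms: dict[str, AgeBandNorms]) -> float:
    """Simplified profile summary (an assumption, not the published method): mean of subtest z-scores."""
    return mean(deviation_z(raw, norms[name]) for name, raw in raw_scores.items())


# Invented norms and raw scores for one examinee tested twice; every subtest
# z-value below is under -3, so each would be reported as a floored scaled score of 1.
norms = {
    "subtest_a": AgeBandNorms(raw_mean=30.0, raw_sd=5.0),
    "subtest_b": AgeBandNorms(raw_mean=24.0, raw_sd=4.0),
}
visit_1 = {"subtest_a": 6, "subtest_b": 4}
visit_2 = {"subtest_a": 9, "subtest_b": 6}

if __name__ == "__main__":
    print("floor scaled score as z:", scaled_score_z(1))
    print("visit 1 mean deviation z:", round(mean_deviation_z(visit_1, norms), 2))
    print("visit 2 mean deviation z:", round(mean_deviation_z(visit_2, norms), 2))
```

In this invented example, the scaled scores would sit at the floor at both visits, while the mean deviation z moves from -4.9 to -4.35. This is the kind of within-floor change that, as described above, traditional standard scores fail to document.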

Special Populations With ID

The following sections address specific syndromes frequently associated with a diagnosis of ID. Knowing the etiology of an individual’s ID may help the AA tailor the assessment process to the individual’s biological needs and to the behavioral tendencies consistent with the phenotype of each condition. Although there are documented behavioral phenotypes for individuals with FXS, DS, and ASD, every individual is different, and an individualized approach is still recommended for each assessment. These three examples illustrate why specific syndromes or phenotypes are important to consider when preparing for, conducting, and reporting on standardized assessments; the same concepts and issues may apply to other conditions associated with ID.

Fragile X Syndrome

FXS is the most common inherited form of ID (Hagerman & Hagerman, 2002). FXS is caused by a mutation of the fragile X mental retardation 1 (FMR1) gene on the X chromosome, which results in reduction or absence of the FMR1 protein (FMRP) and consequent abnormal brain development (Hessl et al., 2009). The spectrum of phenotypic expression of FXS in males includes overall developmental delays ranging from mild to severe, with approximately 15% exhibiting borderline IQ and learning disabilities (Cornish, Levitas, & Sudhalter, 2007). The vast majority of males with FXS present with IQ scores below 70, with an average in the 40s (Schneider et al., 2009). Additional characteristics include ADHD, speech and language delay, anxiety, sensory processing dysfunction, low tone accompanying gross and fine motor delay, poor eye contact, substantial difficulties in executive function, perseverative speech, and repetitive behaviors. Approximately 25 to 33% of males with FXS also meet the criteria for ASD (Hagerman, 2006). Given the constellation of characteristics associated with the disorder, it is perhaps not surprising that families of individuals with FXS are frequently presented with reports in which the individual was considered “untestable.”

Individuals with FXS tend to be visual learners with a “gestalt” learning style (Braden et al., 2013). This means that, rather than learning in a sequential or step-by-step manner, individuals with FXS learn best when they can first observe a whole process or skill and then replicate it by imitating a model (Scharfenaker & Stackhouse, 2015). The use of teaching items in standardized assessments is therefore critical when working with individuals with FXS. Modeling the tasks, as much as is allowed within the standardized procedure, will demonstrate the expectations and give the individual with FXS the “gestalt” of the task.

Hyperactivity and poor attention frequently interfere with the assessment process when testing an individual with FXS. When standardization does not allow for a verbal or visual cue to attend (such as on a continuous performance test for attention), the AA should use teaching items until the individual with FXS fully understands the testing procedure and the need to sustain attention to the task. It will be important to remind the individual with FXS to slow down, “stop and think”, and consider all options before answering test questions so that impulsivity does not interfere with measurement of target skills. The AA may wish to wait before recording answers or moving on to the next page or item in order to allow the individual with FXS to change his or her mind about a response.

Additionally, individuals with FXS tend to enjoy social interactions and may have a playful and humorous interaction style (Stackhouse & Scharfenaker, 2015). An individual with FXS may enjoy playing a game, having a dance party, talking about a favorite sports team or well-known figure, or simply chatting with the AA during breaks. It is important to note that individuals with FXS tend to have better developed receptive than expressive language skills (Braden et al., 2013). Nonverbal assessments or nonverbal composites can allow the individual with FXS to demonstrate knowledge in a target skill without the access skill barriers related to verbal communication demands (Dowling & Barbouth, 2012).

Individuals with FXS often display increased anxiety when approaching new or unfamiliar people, events, and environments, and up to 80% meet diagnostic criteria for an anxiety disorder (Bagni, Tassone, Neri, & Hagerman, 2012; Cordeiro, Ballinger, Hagerman, & Hessl, 2011). Furthermore, hyperarousal can impede an individual’s ability to demonstrate his or her abilities (Sudhalter, 2012). During an assessment, the AA can address behavioral concerns with an individual with FXS by attending to sensory needs and anxiety. For example, the AA can avoid direct eye contact by looking to the side of the examinee’s face with a warm, approachable expression. The AA should thoroughly prepare the testing environment to reduce loud or bothersome noises that can lead to hyperarousal. Precautions to limit extraneous noises and muffle the sounds of noisy clocks, timers, buzzers, or other testing materials may help the individual with FXS stay calm during testing (Stackhouse & Scharfenaker, 2015). Additionally, taking time to build rapport and connect with the individual with FXS may alleviate the negative behaviors associated with social anxiety during testing. Finally, providing a highly individualized visual schedule may alleviate some of the stress associated with approaching a novel task. The individual with FXS can check off items as they are completed, so that he or she knows what has been accomplished and what is left in the session (Hessl et al., 2016). Breaks can be added to the schedule so the individual knows exactly how many more items he or she must complete before a break.

Down Syndrome

Down syndrome (DS) is the most common known genetic cause of ID (Roizen, 2013). DS occurs when an individual has three, rather than two, copies of the long arm of human chromosome 21. The extra genetic material associated with DS can cause a variety of developmental and medical outcomes. In terms of physical health, a diagnosis of DS places an individual at risk for a number of medical conditions, including microcephaly, developmental delays and small stature, congenital heart defects, hypothyroidism, and orthopedic conditions. Individuals with DS can have significant learning and memory problems that may intensify throughout childhood and adolescence. Functional living skills and executive functioning are diminished compared with peers without DS, and the disability is associated with pervasive deficits in expressive communication (Roizen, 2013). In terms of assessment, there are several factors to consider regarding the DS phenotype. First, the small stature associated with DS requires planning for a size-appropriate table and chair for the testing environment. The individual’s feet should rest comfortably on the floor (or on a block) while seated. Low muscle tone can be accompanied by loose ligaments, poor trunk control, and hyperextension of the joints (Perlman, 2014). These traits mean that sitting upright for long periods of time may exhaust an individual with DS, resulting in low energy and task avoidance behaviors. Proper seating, postural support, and frequent motor breaks can reduce fatigue and keep the examinee engaged with the task at hand (New York State Department, n.d.). The AA should closely monitor the individual with DS for levels of engagement and physical comfort.

The unique cognitive profile associated with DS may also be useful when planning accommodations for standardized assessments. Research has documented significant relative strengths in visual memory, visual-motor integration, and visual imitation for individuals with DS, in comparison with their relative weaknesses in verbal processing abilities (Fidler, 2005). Davis (2008) encourages emphasizing these visual-spatial processing strengths when working with individuals with DS. In the assessment process, this may involve providing visual cues for behavioral expectations and using adequate modeling so that the examinee can imitate the expected testing activities. The AA should also select tests that adequately measure a variety of visual-spatial target skills so that potential cognitive strengths can be quantified and used in intervention planning.

Another consideration when working with individuals with DS is motivation toward a task. Individuals with DS have a unique profile of relative strengths in social skills and significant deficits in problem-solving skills. This combination of strengths and weaknesses can result in a social-motivation profile, wherein the individual with DS engages in highly social escape behaviors to avoid a challenging task (Fidler, 2005). In particular, these behaviors may include playful, distracting actions in which the individual attempts to engage the AA in social banter in order to avoid the assessment requirements. In the assessment setting, the AA must be firm yet warm, making sure to let the individual with DS know that playful conversation can take place during the break, as soon as the assessment tasks have been completed. Planned ignoring is a useful strategy, in which the AA continues with the assessment process, ignores off-task behavior, and attends to the individual with DS only when he or she is on-task. The AA must limit his or her emotional expression and affect so as not to encourage silly, off-task behaviors. Clear expectations and visual cues for behavior are useful for individuals with DS. For example, the AA can teach the examinee a “ready position” (e.g., a “ready hands” visual prompt for where to place hands) and/or provide a list of “Active Listening” pictures (e.g., “eyes watching”, “body still”, “voice quiet”, and “ears listening”) in the examination room (Herschell et al., 2002). When the individual with DS becomes off-task, the AA can simply point to the area of behavioral concern and provide a simple verbal cue. Finally, carefully placing the examinee away from windows, doors, and light switches may also help keep the individual with DS on-task in the assessment setting.

Comorbid Intellectual Disability and Autism Spectrum Disorder

ASD is characterized by a continuum of deficits in the areas of social reciprocity and communication, as well as restricted interests and/or repetitive behaviors (Hyman & Levy, 2013). ASD is the fastest-growing developmental disability in terms of prevalence (Gargiulo & Bouck, 2018). Recent estimates indicate that 1 in 68 children have been identified with ASD, with boys being 4.5 times more likely than girls to receive the diagnosis (Centers for Disease Control and Prevention, 2012). Approximately 40–70% of individuals with a diagnosis of ASD have ID (Hyman & Levy, 2013).

Individuals with ASD tend to prefer predictability and routines (Gargiulo & Bouck, 2018). This preference is frequently challenged in a testing situation, where the entire assessment process is unfamiliar. The AA can alleviate some of the stress associated with the novelty and unpredictable nature of an assessment by providing social stories ahead of time and a visual schedule during the assessment process. The AA may also consider bringing a trusted adult caregiver into the room to help with rapport and comfort. AAs may consider consulting with the caregiver to determine whether the individual may be able to demonstrate the skill with a different behavioral prompt or request (Perry et al., 2002).

A lack of joint attention is common in individuals with ASD and can interfere significantly with the AA’s ability to measure a target skill (Gargiulo & Bouck, 2018). Providing a visual prompt paired with a verbal cue to “watch” acts as an attention grabber and helps direct the examinee’s attention to the task at hand (Kylliäinen et al., 2014). Some research has indicated that using tablets for instruction can improve behavior and enhance engagement for individuals with ASD (Neely et al., 2013), and that computer-administered assessments may be a useful tool with this population (Skerwer et al., 2016). The recent availability of digital assessment tools (Noland, 2017) may therefore prove useful for individuals with ASD, though more research with this specific population is needed.

Repetitive and restricted behaviors associated with ASD, such as hand flapping or verbal scripting, can also significantly interfere with the standardized assessment process (Gargiulo & Bouck, 2018). The AA may need to wait for the examinee to finish a self-stimulating behavior, or complete a verbal script before he or she is ready to move on to the next part of the assessment. The AA should make sure to record and measure a true response to the question, rather than a scripted verbal response that is off topic. Therefore, the AA must allow ample time for testing, with significant breaks, and may consider breaking an assessment into multiple sessions (Perry et al., 2002).

The use of applied behavior analysis (ABA) techniques may enhance engagement in the testing process for individuals with ASD (Hyman & Levy, 2013). The AA should survey the caregivers of the examinee ahead of time, making sure to note any current behavior plans and preferred reinforcement items (e.g., stickers, activities, toys, snacks). Then, the AA can set up a similar behavior plan for the assessment session, using a token-economy system to reinforce desirable behaviors and reduce off-task and undesirable behaviors. Whenever possible, the AA should use planned ignoring for inappropriate behaviors related to ASD (Perry et al., 2002).

Finally, it is important to note that some families and self-advocates with ASD may prefer identity-first language to person-first language (Dunn & Andrews, 2015). For instance, the term “autistic” may be preferred to the person-first “individual with ASD” because identity-first language affirms that a disability is a unique aspect of an individual’s “diverse cultural and sociopolitical experience and identity” that should be emphasized rather than diminished (Dunn & Andrews, 2015, p. 259). Therefore, the AA can discuss this ahead of time with the individual with ASD and his or her family, making sure to ask about the family’s preferred language for referring to the ASD diagnosis. The report should reflect the family’s preferences in this regard.

Future Directions

There is a need for researchers, clinicians, and educators to conduct valid standardized assessments with individuals with ID. Assessment results can be used to develop comprehensive treatment and intervention plans, make important placement decisions in the schools or in the community, and further our understanding of ID through research. Understanding the basic etiology, phenotype, and developmental trajectory of an individual with ID is necessary in order to evaluate the efficacy of specific assessment tools, medical procedures, and educational interventions. However, specific attributes associated with ID (as well as the FXS, DS, and ASD phenotypes) can interfere with assessment processes and render results invalid or less accurate. Optimizing the assessment process through evidence-based changes in test selection and administration can decrease the number of children and families who receive reports with inaccurate or inadequate information. With this proposed cyclical model for assessment, based on recommended practices from professional organizations and an emerging body of evidence-based practice, school psychologists are equipped to assess many individuals who have previously been considered “untestable.” Future research should specifically investigate the validity of the proposed model. Although this model has been used effectively in a research setting to enhance the feasibility and validity of assessments with individuals with ID (citation omitted for blind reviewing process), the model itself has not been tested empirically. Determining its efficacy and face validity will help ensure the use of best practices in both research and clinical settings, thereby producing more useful data for critical decision-making with individuals with ID.

Acknowledgments

Research for this paper was funded by a grant from the National Institute of Child Health and Human Development (NICHD), (A Cognitive Assessment Battery Intellectual Disabilities, R01 HD076189).

Funding

This study was funded by the National Institute of Child Health and Human Development (NICHD) (A Cognitive Assessment Battery Intellectual Disabilities, R01 HD076189).

Footnotes

Conflicts of Interest:

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Compliance with Ethical Standards

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. This study was approved by the Institutional Review Boards of UC Davis (#681782) and the University of Denver (#698133).

Informed consent

All participants or their legal guardians gave written consent to participate in the research, and capable participants gave their written consent or assent as determined by the IRBs.

References

- American Educational Research Association. Standards for educational and psychological testing. Washington, DC: American Educational Research Association; 2014.

- American Psychological Association. Guidelines for assessment of and intervention with persons with disabilities. American Psychologist. 2012;67(1):43. doi: 10.1037/a0025892.

- Bagni C, Tassone F, Neri G, Hagerman R. Fragile X syndrome: Causes, diagnosis, mechanisms, and therapeutics. Journal of Clinical Investigation. 2012;122(12):4314–22. doi: 10.1172/JCI63141.

- Bagnato SJ. Authentic assessment for early childhood intervention: Best practices. New York, NY: Guilford Press; 2008.

- Bagnato SJ, Neisworth JT. A national study of the social and treatment “invalidity” of intelligence testing for early intervention. School Psychology Quarterly. 1994;9(2):81–102.

- Bathurst K, Gottfried AW. Untestable subjects in child development research: Developmental implications. Child Development. 1987;58:1135–1144.

- Berry-Kravis E, Hessl D, Rathmell B, Zarevics P, Cherubini M, Walton-Bowen K, Mu Y, Nguyen DV, Gonzalez-Heydrich J, Wang PP, Carpenter RL, Bear MF, Hagerman RJ. Effects of STX209 (arbaclofen) on neurobehavioral function in children and adults with fragile X syndrome: a randomized, controlled, phase 2 trial. Science Translational Medicine. 2012;4(152):152ra127. doi: 10.1126/scitranslmed.3004214.

- Berry-Kravis E, Krause SE, Block SS, Guter S, Wuu J, Leurgans S, … Cogswell J. Effect of CX516, an AMPA-modulating compound, on cognition and behavior in fragile X syndrome: A controlled trial. Journal of Child and Adolescent Psychopharmacology. 2006;16(5):525–40. doi: 10.1089/cap.2006.16.525.

- Berry-Kravis E, Sumis A, Kim OK, Lara R, Wuu J. Characterization of potential outcome measures for future clinical trials in fragile X syndrome. Journal of Autism and Developmental Disorders. 2008;38(9):1751–1757. doi: 10.1007/s10803-008-0564-8.

- Bodner KE, Williams DL, Engelhardt CR, Minshew NJ. A comparison of measures for assessing the level and nature of intelligence in verbal children and adults with autism spectrum disorder. Research in Autism Spectrum Disorders. 2014;8(11):1434–1442. doi: 10.1016/j.rasd.2014.07.015.

- Braden JP, Elliott SN. Accommodations on the Stanford-Binet intelligence scales, fifth edition. In: Roid G, editor. Stanford-Binet intelligence scales, fifth edition, interpretive manual: Expanded guide to the interpretation of SB5 test results. Rolling Meadows, IL: Riverside Publishing; 2003. pp. 135–143.

- Braden M, Riley K, Zoladz J, Howell S, Berry-Kravis E. Educational guidelines for fragile X syndrome: General. Consensus of the Fragile X Clinical & Research Consortium. 2013. Retrieved from https://fragilex.org/wp-content/uploads/2012/08/Educational-Guidelines-for-Fragile-X-Syndrome-General2013-Sept.pdf.

- Brookhart v. Illinois State Board of Education, 697 F.2d 179 (7th Cir. 1983).

- Brotherton MJ. The role of families in accountability. Journal of Early Intervention. 2001;24(1):22–24.

- Brue A, Wilmshurst L. Essentials of intellectual disability assessment and identification. Hoboken, New Jersey: John Wiley & Sons, Inc; 2016.

- Centers for Disease Control and Prevention. Prevalence of autism spectrum disorders. Autism and Developmental Disabilities Monitoring Network. 2012. Retrieved from https://www.cdc.gov/ncbddd/autism/documents/addm-fact-sheet---comp508.pdf.

- Cheung N. Defining intellectual disability and establishing a standard of proof: Suggestions for a national model standard. Health Matrix. 2013;23(1):317.

- Christensen L, Carver W, VanDeZande J, Lazarus S; Council of Chief State School Officers. Accommodations manual: How to select, administer, and evaluate use of accommodations for instruction and assessment of students with disabilities. 3rd ed. 2011. Retrieved from http://www.ccsso.org/Resources/Publications/Accommodations_Manual_How_to_Select_Administer_and_Evaluate_the_Use_Of_Accomocations_For_Instruction_and_Assessment_Of_Students_With_Disabilities_.html.

- Climie E, Henley L. A renewed focus on strengths-based assessment in schools. British Journal of Special Education. 2016;43(2):108–121.

- Cordeiro L, Ballinger E, Hagerman R, Hessl D. Clinical assessment of DSM-IV anxiety disorders in fragile X syndrome: Prevalence and characterization. Journal of Neurodevelopmental Disorders. 2011;3(1):57–67. doi: 10.1007/s11689-010-9067-y.

- Cornish KM, Levitas A, Sudhalter V. Fragile X syndrome: The journey from genes to behavior. In: Mazzocco MM, Ross JL, editors. Neurogenetic developmental disorders: Variation of manifestation in childhood. Cambridge, MA, US: The MIT Press; 2007. pp. 73–103.

- Crepeau-Hobson F. Best practices in supporting the education of students with severe and low incidence disabilities. In: Harrison PL, Thomas A, editors. Best practices in school psychology: Systems-level services. Bethesda, MD: NASP Publications; 2014. pp. 111–123.

- Davis AS. Children with Down syndrome: Implications for assessment and intervention in the school. School Psychology Quarterly. 2008;23(2):271–281.

- Dowling M, Barbouth D. Assessment of fragile X syndrome. Consensus of the Fragile X Clinical & Research Consortium on Clinical Practices. 2012. Retrieved from https://fragilex.org/wp-content/uploads/2012/08/Assessment-in-Fragile-X-Syndrome2012-Oct.pdf.

- Duckworth AL, Quinn PD, Lynam DR, Loeber R, Stouthamer-Loeber M. Role of test motivation in intelligence testing. Proceedings of the National Academy of Sciences of the United States of America. 2011;108(19):7716–7720. doi: 10.1073/pnas.1018601108.

- Dunn DS, Andrews EE. Person-first and identity-first language: Developing psychologists’ cultural competence using disability language. American Psychologist. 2015;70(3):255–264. doi: 10.1037/a0038636.

- Eagle JW, Dowd-Eagle S, Snyder A, Holtzman EG. Implementing a multi-tiered system of support (MTSS): Collaboration between school psychologists and administrators to promote systems-level change. Journal of Educational & Psychological Consultation. 2015;25(2):18.

- Fidler DJ. The emergence of a syndrome-specific personality profile in young children with Down syndrome. Down Syndrome Research and Practice. 2005;10(2):53–60. doi: 10.3104/reprints.305.

- Gargiulo RM, Bouck E. Special education in contemporary society: An introduction to exceptionality, sixth edition. New York: Sage Publications; 2018.

- Gray C. The new social story book: 15th anniversary edition. Arlington, TX: Future Horizons, Inc; 2015.

- Graungaard AH, Skov L. Why do we need a diagnosis? A qualitative study of parents’ experiences, coping and needs, when the newborn child is severely disabled. Child: Care, Health and Development. 2007;33:296–307. doi: 10.1111/j.1365-2214.2006.00666.x.

- Hagerman RJ. Lessons from fragile X regarding neurobiology, autism, and neurodegeneration. Journal of Developmental and Behavioral Pediatrics. 2006;27(1):63–74. doi: 10.1097/00004703-200602000-00012.

- Hagerman RJ, Hagerman PJ. Fragile X syndrome: Diagnosis, treatment, and research. 3rd ed. Baltimore, MD: The Johns Hopkins University Press; 2002.

- Hall SS, Hammond JL, Hirt M, Reiss AL. A ‘learning platform’ approach to outcome measurement in fragile X syndrome: A preliminary psychometric study. Journal of Intellectual Disability Research. 2012;56(10):947–960. doi: 10.1111/j.1365-2788.2012.01560.x.

- Hawaii State Department of Education, 17 EHLR 360 (OCR 1990).

- Herschell AD, Greco LA, Filcheck HA, McNeil CB. Who is testing whom? Ten suggestions for managing the disruptive behavior of young children during testing. Intervention in School and Clinic. 2002;37(3):140–148.

- Hessl D, Nguyen D, Green C, Chavez A, Tassone F, Hagerman R, … Hall S. A solution to limitations of cognitive testing in children with intellectual disabilities: The case of fragile X syndrome. Journal of Neurodevelopmental Disorders. 2009;1(1):33–45. doi: 10.1007/s11689-008-9001-8.

- Hessl D, Sansone S, Berry-Kravis E, Riley K, Widaman K, Abbeduto L, … Gershon R. The NIH Toolbox Cognitive Battery for intellectual disabilities: Three preliminary studies and future directions. Journal of Neurodevelopmental Disorders. 2016;8. doi: 10.1186/s11689-016-9167-4.

- Hickman L, Stackhouse TM, Scharfenaker SK. Sensory diet suggested activities. Consensus of the Fragile X Clinical & Research Consortium on Clinical Practices. 2008 Retrieved from https://fragilex.org/wpcontent/uploads/2012/01/Sensory_Diet_Activity_List_by_Mouse_and_Tracy.pdf.

- Hyman SL, Levy SE. Autism spectrum disorders. In: Batshaw M, Roizen N, Lotrecchiano G, editors. Children with disabilities. 7th ed. Baltimore, MD: Paul H. Brookes Publishing Co; 2013. pp. 345–368.

- Individuals With Disabilities Education Act, 20 U.S.C. § 1400 (2004).

- Kasari C, Brady N, Lord C, Tager-Flusberg H. Assessing the minimally verbal school-aged child with autism spectrum disorder. Autism Research. 2013;6:479–493. doi: 10.1002/aur.1334.

- Kenworthy L, Anthony LG. In: Batshaw M, Roizen N, Lotrecchiano G, editors. Children with disabilities. 7th ed. Baltimore, MD: Paul H. Brookes Publishing Co; 2013. pp. 307–319.

- Koegel LK, Koegel RL, Smith A. Variables related to differences in standardized test outcomes for children with autism. Journal of Autism and Developmental Disorders. 1997;27(3):233–243. doi: 10.1023/a:1025894213424.

- Kylliäinen A, Jones EJH, Gomot M, Warreyn P, Falck-Ytter T. Practical guidelines for studying young children with autism spectrum disorder in psychophysiological experiments. Review Journal of Autism and Developmental Disorders. 2014;1:373–386.

- Leong HM, Carter M, Stephenson J. Systematic review of sensory integration therapy for individuals with disabilities: Single case design studies. Research in Developmental Disabilities. 2015;47:334–351. doi: 10.1016/j.ridd.2015.09.022.

- Linder T. Administration guide for transdisciplinary play-based assessment-2 and transdisciplinary play-based intervention-2. Baltimore, MD: Brookes Publishing; 2008.

- Mastoras SM, Climie EA, McCrimmon AW, Schwean VL. A C.L.E.A.R. approach to report writing: A framework for improving the efficacy of psychoeducational reports. Canadian Journal of School Psychology. 2011;26(2):127–147.

- Mather N, Wendling BJ. Woodcock-Johnson IV Tests of Cognitive Abilities: Examiner’s manual. Rolling Meadows, IL: Riverside Publishing; 2014.

- Munger KM, Gill CJ, Ormond KE, Kirschner KL. The next exclusion debate: Assessment technology, ethics, and intellectual disability after the Human Genome Project. Mental Retardation and Developmental Disabilities Research Reviews. 2007;13(2):121–128. doi: 10.1002/mrdd.20146.

- National Association of School Psychologists. Principles for professional ethics [PDF document]. 2010. Retrieved from https://www.nasponline.org/Documents/Standards%20and%20Certification/Standards/1_%20Ethical%20Principles.pdf.

- Neely L, Rispoli M, Camargo S, Davis H, Boles M. The effect of instructional use of an iPad on challenging behavior and academic engagement for two students with autism. Research in Autism Spectrum Disorders. 2013;7:509–516.

- New York State Department of Health, Division of Family Health, Bureau of Early Intervention. Down syndrome assessment and intervention for young children (age 0–3 years). Clinical practice guideline: Report of the recommendations. n.d. Retrieved from https://www.health.ny.gov/community/infants_children/early_intervention/docs/guidelines_down_syndrome_assessment_and_intervention.pdf.

- Noland RM. Intelligence testing using a tablet computer: Experiences with using Q-Interactive. Training and Education in Professional Psychology. 2017;11(3):156–163.

- Perlman SL. Down’s syndrome. Encyclopedia of the Neurological Sciences. 2014:1.

- Perry A, Condillac RA, Freeman NL. Best practices and practical strategies for assessment and diagnosis of autism. Journal on Developmental Disabilities. 2002;9(2):61–75.

- Phillips SE. High-stakes testing accommodations: Validity versus disabled rights. Applied Measurement in Education. 1994;7(2):93–120.

- Riley K. Report writing: Structure, process, and case studies. In: Linder T, editor. Administration guide for transdisciplinary play-based assessment-2 and transdisciplinary play-based intervention-2. Baltimore, MD: Paul H. Brookes Publishing; 2008. pp. 171–184.

- Roid GH. Interpretive manual: Expanded guide to the interpretation of SB5 test results, Stanford-Binet Intelligence Scales, Fifth Edition. Rolling Meadows, IL: Riverside Publishing; 2003.

- Roizen N. Down syndrome (trisomy 21). In: Batshaw M, Roizen N, Lotrecchiano G, editors. Children with disabilities. 7th ed. Baltimore, MD: Paul H. Brookes Publishing Co; 2013. pp. 307–319.

- Salvia J, Ysseldyke J, Witmer S. Assessment in special and inclusive education. 12th ed. Belmont, CA: Wadsworth, Cengage Learning; 2013.

- Sansone S, Schneider A, Bickel E, Berry-Kravis E, Prescott C, Hessl D. Improving IQ measurement in intellectual disabilities using true deviation from population norms. Journal of Neurodevelopmental Disorders. 2014;6:16. doi: 10.1186/1866-1955-6-16.

- Sattler JM. Assessment of children: Cognitive foundations. 5th ed. San Diego, CA: Author; 2008.

- Scerif G, Karmiloff-Smith A, Campos R, Elsabbagh M, Driver J, Cornish K. To look or not to look? Typical and atypical development of oculomotor control. Journal of Cognitive Neuroscience. 2005;17:591–604. doi: 10.1162/0898929053467523.

- Scharfenaker S, Stackhouse T. Adapting autism interventions for Fragile X syndrome. National Fragile X Foundation. 2015. Retrieved from https://fragilex.org/2015/treatment-and-intervention/coffee-talk-with-mouse-and-tracy/adapting-autism-interventions-for-fragile-x-syndrome/

- Schneider A, Hagerman R, Hessl D, Ross J, Hoeft F. Fragile X syndrome: From genes to cognition. Developmental Disabilities Research Reviews. 2009;15(4):333–342. doi: 10.1002/ddrr.80.

- Shapiro BK, Batshaw ML. Developmental delay and intellectual disability. In: Batshaw M, Roizen N, Lotrecchiano G, editors. Children with disabilities. 7th ed. Baltimore, MD: Paul H. Brookes Publishing; 2013. pp. 291–306.

- Silverman W, Miezejeski C, Ryan R, Zigman W, Krinsky-McHale S, Urv T. Stanford-Binet and WAIS IQ differences and their implications for adults with intellectual disability (aka mental retardation). Intelligence. 2010;38(2):242–248. doi: 10.1016/j.intell.2009.12.005.

- Skwerer DP, Jordan SE, Brukilacchio BH, Tager-Flusberg H. Comparing methods for assessing receptive language skills in minimally verbal children and adolescents with autism spectrum disorders. Autism. 2016;20(5):591–604. doi: 10.1177/1362361315600146.

- Snow K. People first language. Disability is Natural. 2009. Retrieved from http://www.disabilityisnatural.com/peoplefirstlanguage.htm.

- Soodak LC, Erwin EJ. Valued member or tolerated participant: Parents’ experiences in inclusive early childhood settings. Journal of the Association for Persons with Severe Handicaps. 2000;25:29–41.

- Stackhouse T, Scharfenaker S. Welcome to the FX MAX: A helpful Fragile X intervention planning tool. PowerPoint slides. 2015. Retrieved from workshop on November 2, 2015.

- Sudhalter V. Hyperarousal in Fragile X syndrome. Consensus of the Fragile X Clinical & Research Consortium on Clinical Practices. 2012. Retrieved from https://fragilex.org/wp-content/uploads/2012/08/Hyperarousal-in-Fragile-X-Syndrome2012-Oct.pdf.

- Szarko JE, Brown AJ, Watkins MW. Examiner familiarity effects for children with autism spectrum disorders. Journal of Applied School Psychology. 2013;29:37–51.

- Tharinger DJ, Finn SE, Hersh B, Wilkinson A, Christopher GB, Tran A. Assessment feedback with parents and preadolescent children: A collaborative approach. Professional Psychology: Research and Practice. 2008;39(6):600–609.

- Utley CA, Obiakor FE. Special issue: Research perspectives on multi-tiered system of support. Learning Disabilities: A Contemporary Journal. 2015;13(1):1–2.

- Whitaker S, Gordon S. Floor effects on the WISC-IV. International Journal of Developmental Disabilities. 2012;58(1):111–119.

- Wolf-Schein EG. Considerations in assessment of children with severe disabilities including deaf-blindness and autism. International Journal of Disability, Development and Education. 1998;45(1):35–55.