Abstract

Shannon information theory provides various measures of so-called syntactic information, which reflect the amount of statistical correlation between systems. By contrast, the concept of ‘semantic information’ refers to those correlations which carry significance or ‘meaning’ for a given system. Semantic information plays an important role in many fields, including biology, cognitive science and philosophy, and there has been a long-standing interest in formulating a broadly applicable and formal theory of semantic information. In this paper, we introduce such a theory. We define semantic information as the syntactic information that a physical system has about its environment which is causally necessary for the system to maintain its own existence. ‘Causal necessity’ is defined in terms of counter-factual interventions which scramble correlations between the system and its environment, while ‘maintaining existence’ is defined in terms of the system's ability to keep itself in a low entropy state. We also use recent results in non-equilibrium statistical physics to analyse semantic information from a thermodynamic point of view. Our framework is grounded in the intrinsic dynamics of a system coupled to an environment, and is applicable to any physical system, living or otherwise. It leads to formal definitions of several concepts that have been intuitively understood to be related to semantic information, including ‘value of information’, ‘semantic content’ and ‘agency’.

Keywords: information theory, semantic information, agency, autonomy, non-equilibrium, entropy

1. Introduction

The concept of semantic information refers to information which is in some sense meaningful for a system, rather than merely correlational. It plays an important role in many fields, including biology [1–9], cognitive science [10–14], artificial intelligence [15–17], information theory [18–21] and philosophy [22–24].1 Given the ubiquity of this concept, an important question is whether it can be defined in a formal and broadly applicable manner. Such a definition could be used to analyse and clarify issues concerning semantic information in a variety of fields, and possibly to uncover novel connections between those fields. A second, related question is whether one can construct a formal definition of semantic information that applies not only to living beings but also to any physical system, whether a rock, a hurricane or a cell. A formal definition which can be applied to the full range of physical systems may provide novel insights into how living and non-living systems are related.

The main contribution of this paper is a definition of semantic information that positively answers both of these questions, following ideas publicly presented at the FQXi's 5th International Conference [31] and explored by Carlo Rovelli [32]. In a nutshell, we define semantic information as ‘the information that a physical system has about its environment that is causally necessary for the system to maintain its own existence over time’. Our definition is grounded in the intrinsic dynamics of a system and its environment, and, as we will show, it formalizes existing intuitions while leveraging ideas from information theory and non-equilibrium statistical physics [33,34]. It also leads to a non-negative decomposition of information measures into ‘meaningful bits’ and ‘meaningless bits’, and provides a coherent quantitative framework for expressing a constellation of concepts related to ‘semantic information’, such as ‘value of information’, ‘semantic content’ and ‘agency’.

1.1. Background

Historically, semantic information has been contrasted with syntactic information, which quantifies various kinds of statistical correlation between two systems, with no consideration of what such correlations ‘mean’. Syntactic information is usually studied using Shannon's well-known information theory and its extensions [35,36], which provide measures that quantify how much knowledge of the state of one system reduces statistical uncertainty about the state of the other system, possibly at a different point in time. When introducing his information theory, Shannon focused on the engineering problem of accurately transmitting messages across a telecommunication channel, and explicitly sidestepped questions regarding what meaning, if any, the messages might have [35].

How should we fill in the gap that Shannon explicitly introduced? One kind of approach—common in economics, game theory and statistics—begins by assuming an idealized system that pursues some externally assigned goal, usually formulated as the optimization of an objective function, such as utility [37–41], distortion [36] or prediction error [19,42–44]. Semantic information is then defined as information which helps the system to achieve its goal (e.g. information about tomorrow's stock market prices would help a trader increase their economic utility). Such approaches can be quite useful and have lent themselves to important formal developments. However, they have the major shortcoming that they specify the goal of the system exogenously, meaning that they are not appropriate for grounding meaning in the intrinsic properties of a particular physical system. The semantic information they quantify has meaning for the external scientist who imputes goals to the system, rather than for the system itself.

In biology, the goal of an organism is often considered to be evolutionary success (i.e. the maximization of fitness), which has led to the so-called teleosemantic approach to semantic information. Loosely speaking, teleosemantics proposes that a biological trait carries semantic information if the presence of the trait was ‘selected for’ because, in the evolutionary past, the trait correlated with particular states of the environment [1–7]. To use a well-known example, when a frog sees a small black spot in its visual field, it snaps out its tongue and attempts to catch a fly. This stimulus–response behaviour was selected for, since small black spots in the visual field correlated with the presence of flies and eating flies was good for frog fitness. Thus, a small black spot in the visual field of a frog has semantic information, and refers to the presence of flies.

While in-depth discussion of teleosemantics is beyond the scope of this paper, we note that some of its central features make it deficient for our purposes. First, it is only applicable to physical systems that undergo natural selection. Thus, it is not clear how to apply it to entities like non-living systems, protocells or synthetically designed organisms. Moreover, teleosemantics is ‘etiological’ [45,46], meaning that it defines semantic information in terms of the past history of a system. Our goal is to develop a theory of semantic information that is based purely on the intrinsic dynamics of a system in a given environment, irrespective of the system's origin and past history.

Finally, another approach to semantic information comes from literature on so-called autonomous agents [11,12,14,45–49]. An autonomous agent is a far-from-equilibrium system which actively maintains its own existence within some environment [11–14,25,50–54]. A prototypical example of an autonomous agent is an organism, but in principle, the notion can also be applied to robots [55,56] and other non-living systems [57,58]. For an autonomous agent, self-maintenance is a fundamentally intrinsic goal, which is neither assigned by an external scientist analysing the system, nor based on past evolutionary history.

In order to maintain themselves, autonomous agents must typically observe (i.e. acquire information about) their environment, and then respond in different and ‘appropriate’ ways. For instance, a chemotactic bacterium senses the direction of chemical gradients in its particular environment and then moves in the direction of those gradients, thereby locating food and maintaining its own existence. In this sense, autonomous agents can be distinguished from ‘passive’ self-maintaining structures that emerge whenever appropriate boundary conditions are provided, such as Bénard cells [59] and some other well-known non-equilibrium systems.

Research on autonomous agents suggests that information about the environment that is used by an autonomous agent for self-maintenance is intrinsically meaningful [10–14,25,26,48,49,60]. However, until now, such ideas have remained largely informal. In particular, there has been no formal proposal in the autonomous agents literature for quantifying the amount of semantic information possessed by any given physical system, nor for identifying the meaning (i.e. the semantic content) of particular system states.

1.2. Our contribution

We propose a formal, intrinsic definition of semantic information, applicable to any physical system coupled to an external environment, whether a rock, a hurricane, a bacterium, or a sample from an alien planet.2

We assume the following set-up: there is a physical world which can be decomposed into two subsystems, which we refer to as ‘the system’ and ‘the environment’, respectively. We suppose that at some initial time t = 0, the system and environment are jointly distributed according to some initial distribution p(x0, y0). They then undergo coupled (possibly stochastic) dynamics until time τ, where τ is some timescale of interest.

Our goal is to define the semantic information that the system has about the environment. To do so, we make use of a viability function, a real-valued function which quantifies the system's ‘degree of existence’ at a given time. While there are several possible ways to define a viability function, in this paper we take inspiration from statistical physics [61–63] and define the viability function as the negative Shannon entropy of the distribution over the states of the system. This choice is motivated by the fact that Shannon entropy provides an upper bound on the probability that the system occupies any small set of ‘viable’ states [64–67]. We are also motivated by the connection between Shannon entropy and thermodynamics [33,34,68–71], which allows us to connect our framework to results in non-equilibrium statistical physics. Further discussion of this viability function, as well as other possible viability functions, is found in §4.

Information theory provides many measures of the syntactic information shared between the system and its environment. For any particular measure of syntactic information, we define semantic information to be that syntactic information between the system and the environment that causally contributes to the continued existence of the system, i.e. to maintaining the value of the viability function. To quantify the causal contribution, we define counter-factual intervened distributions in which some of the syntactic information between the system and its environment is scrambled. This approach is inspired by the framework of causal interventions [72,73], in which causal effects are measured by counter-factually intervening on one part of a system and then measuring the resulting changes in other parts of the system.

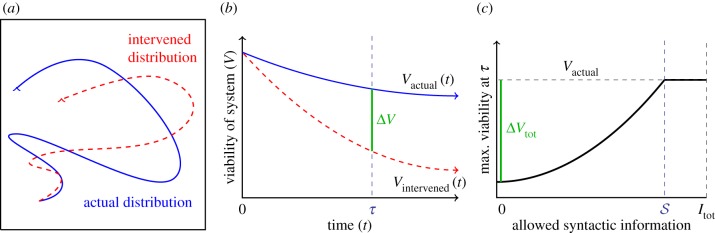

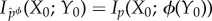

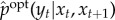

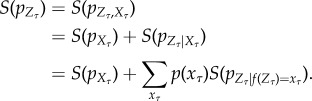

The trajectories of the actual and intervened distributions are schematically illustrated in figure 1a. We define the (viability) value of information as the difference between the system's viability after time τ under the actual distribution, versus the system's viability after time τ under the intervened distribution (figure 1b). A positive difference means that at least some of the syntactic information between the system and environment plays a causal role in maintaining the system's existence. The difference can also be negative, which means that the syntactic information decreases the system's ability to exist. This occurs if the system behaves ‘pathologically’, i.e. it takes the wrong actions given available information (e.g. consider a mutant ‘anti-chemotactic’ bacterium, which senses the direction of food and then swims away from it).

Figure 1. Schematic illustration of our approach to semantic information. (a) The trajectory of the actual distribution (within the space of distributions over joint system–environment states) is in blue. The trajectory of the intervened distribution, where some syntactic information between the system and environment is scrambled, is in dashed red. (b) The viability function computed for both the actual and intervened trajectories. ΔV indicates the viability difference between actual and intervened trajectories, at some time τ. (c) Different ways of scrambling the syntactic information lead to different values of remaining syntactic information and different viability values. The maximum achievable viability at time τ at each level of remaining syntactic information specifies the information/viability curve. The viability value of information, ΔVtot, is the total viability cost of scrambling all syntactic information. The amount of semantic information is the minimum level of syntactic information at which no viability is lost. Itot is the total amount of syntactic information between system and environment. (Online version in colour.)

To make things more concrete, we illustrate our approach using a few examples:

(1) Consider a distribution over rocks (the system) and fields (the environment) over a timescale of τ = 1 year. Rocks tend to stay in a low entropy state for long periods of time due to their very slow dynamics. If we ‘scramble the information’ between rocks and their environments by swapping rocks between different fields, this will not significantly change the propensity of rocks to disintegrate into (high entropy) dust after 1 year. Since the viability does not change significantly due to the intervention, the viability value of information is very low for a rock.

(2) Consider a distribution over hurricanes (the system) and the summertime Caribbean ocean and atmosphere (the environment), over a timescale of τ = 1 h. Unlike a rock, a hurricane is a genuinely non-equilibrium system which is driven by free energy fluxing from the warm ocean to the cold atmosphere. Nonetheless, if we ‘scramble the information’ by placing hurricanes in new surroundings that still correspond to warm oceans and cool atmospheres, after 1 h the intervened hurricanes' viability will be similar to that of the non-intervened hurricanes. Thus, like rocks, hurricanes have a low viability value of information.

(3) Consider a distribution over food-caching birds (the system) in the forest (the environment), over a timescale of τ = 1 year. Assume that at t = 0 the birds have cached their food and stored the location of the caches in some type of neural memory. If we ‘scramble the information’ by placing birds in random environments, they will not be able to locate their food and will be more likely to die, which decreases their viability. Thus, a food-caching bird exhibits a high value of information.
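The recipe shared by these three examples can be sketched numerically. The following is a minimal illustration under stated assumptions, not the formal construction given later in the paper: it assumes that ‘scrambling all information’ means replacing the initial joint distribution by the product of its marginals, it uses a single time step (τ = 1), and the two-state ‘memory matches environment’ dynamics and all function names are hypothetical.

```python
import numpy as np

def entropy_bits(p):
    """Shannon entropy (in bits) of a probability vector, skipping zero entries."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]
    return float(-(nz * np.log2(nz)).sum())

def viability_after_step(joint, kernel):
    """Negative entropy of the system marginal after one step of dynamics.

    joint[x0, y0]      : initial joint distribution of system and environment
    kernel[x0, y0, x1] : P(x1 | x0, y0), a hypothetical update rule for the system
    """
    p_x1 = np.einsum("xy,xyz->z", joint, kernel)
    return -entropy_bits(p_x1)

# Hypothetical toy: the system bit x0 is a perfect memory of the environment bit y0.
actual = np.array([[0.5, 0.0],
                   [0.0, 0.5]])

# Assumed full scrambling: keep both marginals, destroy all correlation.
scrambled = np.outer(actual.sum(axis=1), actual.sum(axis=0))

# Toy dynamics: a correct memory drives the system to state 0;
# an incorrect memory leaves it uniformly random.
kernel = np.zeros((2, 2, 2))
for x0 in range(2):
    for y0 in range(2):
        kernel[x0, y0] = [1.0, 0.0] if x0 == y0 else [0.5, 0.5]

# Viability under the actual distribution minus viability under the intervention.
delta_v = viability_after_step(actual, kernel) - viability_after_step(scrambled, kernel)
```

In this toy, the difference is positive because the correlation between memory and environment is what keeps the system in a low entropy state, which mirrors the food-caching bird rather than the rock or the hurricane.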

So far, we have spoken of interventions in a rather informal manner. In order to make things rigorous, we require a formal definition of how to transform an actual distribution into an intervened distribution. While we do not claim that there is a single best choice for defining interventions, we propose to use information-theoretic ‘coarse-graining’ methods to scramble the channel between the system and environment [74–79]. Importantly, such methods allow us to choose different coarse-grainings, which lets us vary the syntactic information that is preserved under different interventions, and the resulting viability of the system at time τ. By considering different interventions, we define a trade-off between the amount of preserved syntactic information versus the resulting viability of the system at time τ. This trade-off is formally represented by an information/viability curve (figure 1c), which is loosely analogous to the rate-distortion curves in information theory [36].

Note that some intervened distributions may achieve the same viability as the actual distribution but have less syntactic information. We call the (viability-) optimal intervention that intervened distribution which achieves the same viability as the actual distribution while preserving the smallest amount of syntactic information. Using the optimal intervention, we define a number of interesting measures. First, by definition, any further scrambling of the optimal intervention leads to a change in viability of the system, relative to its actual (non-intervened) viability. We interpret this to mean that all syntactic information in the optimal intervention is semantic information. Thus, we define the amount of semantic information possessed by the system as the amount of syntactic information preserved by the optimal intervention. We show that the amount of semantic information is upper bounded by the amount of syntactic information under the actual distribution, meaning that having non-zero syntactic information is a necessary, but not sufficient, condition for having non-zero semantic information. Moreover, we can decompose the total amount of syntactic information into ‘meaningful bits’ (the semantic information) and the ‘meaningless bits’ (the rest), and define the semantic efficiency of the system as the ratio of the semantic information to the syntactic information. Semantic efficiency falls between 0 and 1, and quantifies how much the system is ‘tuned’ to only possess syntactic information which is relevant for maintaining its existence (see also [80]).
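As a rough illustration of how these quantities relate, the sketch below picks the optimal intervention from a finite list of candidate interventions. It is only a sketch: the candidate list, its numbers and the function name are hypothetical, and ‘achieves the same viability’ is implemented as ‘loses no viability, up to a small tolerance’.

```python
def semantic_information_bits(candidates, actual_viability, tol=1e-9):
    """Smallest preserved information among interventions that lose no viability.

    candidates: list of (preserved_information_bits, viability_at_tau) pairs,
    one per candidate intervention. The un-intervened distribution should be
    included so that at least one candidate is feasible.
    """
    feasible = [info for info, v in candidates if v >= actual_viability - tol]
    return min(feasible)

# Hypothetical numbers, chosen only for illustration.
total_information = 1.0   # syntactic information under the actual distribution
actual_viability = -0.2
candidates = [
    (1.0, -0.2),  # no scrambling
    (0.6, -0.2),  # partial scrambling, no viability lost
    (0.3, -0.5),  # further scrambling costs viability
    (0.0, -0.9),  # full scrambling
]

semantic_bits = semantic_information_bits(candidates, actual_viability)
semantic_efficiency = semantic_bits / total_information
meaningless_bits = total_information - semantic_bits
```

With these made-up numbers, 0.6 of the 1.0 bits are ‘meaningful’, so the semantic efficiency is 0.6; because the un-intervened distribution is always a candidate, the semantic information can never exceed the total syntactic information.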

Because all syntactic information in the optimal intervention is semantic information, we use the optimal intervention to define the ‘content’ of the semantic information. The semantic content of a particular system state x is defined as the conditional distribution (under the optimal intervention) of the environment's states, given that the system is in state x. The semantic content of x reflects the correlations which are relevant to maintaining the existence of the system, once all other ‘meaningless’ correlations are scrambled away. To use a previous example, the semantic content for a food-caching bird would include the conditional probabilities of different food-caching locations in the forest, given bird neural states. By applying appropriate ‘pointwise’ measures of syntactic information to the optimal intervention, we also derive measures of pointwise semantic information in particular system states (see §5 for details).

As mentioned, our framework is not tied to one particular measure of syntactic information, but rather can be used to derive different kinds of semantic information from different measures of syntactic information. In §5.1, we consider semantic information derived from the mutual information between the system and environment in the initial distribution p(x0, y0), which defines what we call stored semantic information. Note that stored semantic information does not measure semantic information which is acquired by ongoing dynamic interactions between system and environment, which is the primary kind of semantic information discussed in the literature on autonomous agents [14]. In §5.2, we derive this kind of dynamically acquired semantic information, which we call observed semantic information, from a syntactic information measure called transfer entropy [81]. Observed semantic information provides one quantitative definition of observation, as dynamically acquired information that is used by a system to maintain its own existence, and allows us to distinguish observation from the mere build-up of syntactic information between physical systems (as generally happens whenever physical systems come into contact). In §5.3, we briefly discuss other possible choices of syntactic information measures, which lead to other measures of semantic information.

Given recent work on the statistical physics of information processing, several of our measures—including value of information and semantic efficiency—can be given thermodynamic interpretations. We review these connections between semantic information and statistical physics in §2, as well as in more depth in §5 when defining stored and observed semantic information.

To summarize, we propose a formal definition of semantic information that is applicable to any physical system. Our definition depends on the specification of a viability function, a syntactic information measure, and a way of producing interventions. We suggest some natural ways of defining these factors, though we have been careful to formulate our approach in a flexible manner, allowing them to be chosen according to the needs of the researcher. Once these factors are determined, our measures of semantic information are defined relative to the choice of

(1) the particular division of the physical world into ‘the system’ and ‘the environment’;

(2) the timescale τ; and

(3) the initial probability distribution over the system and environment.

These choices specify the particular spatio-temporal scale and state-space regions that interest the researcher, and should generally be chosen in a way to be relevant to the dynamics of the system under study. For instance, if studying semantic information in human beings, one should choose timescales over which information has some effect on the probability of survival (somewhere between ≈100 ms, corresponding to the fastest reaction times, and ≈100 years). In §6, we discuss how the system/environment decomposition, timescale and initial distribution might be chosen ‘objectively’, in particular, so as to maximize measures of semantic information. We also discuss how this might be used to automatically identify the presence of agents in physical systems, and more generally the implications of our framework for an intrinsic definition of autonomous agency in physical systems.

The rest of the paper is laid out as follows. The next section provides a review of some relevant aspects of non-equilibrium statistical physics. In §3, we provide preliminaries concerning our notation and physical assumptions, while §4 provides a discussion of the viability function. In §5, we state our formal definitions of semantic information and related concepts. Section 6 discusses ways of automatically selecting systems, timescales, and initial distributions so as to maximize semantic information, and implications for a definition of agency. We conclude in §7.

2. Non-equilibrium statistical physics

The connection between the maintenance of low entropy and autonomous agents was first noted when considering the thermodynamics of living systems. In particular, the fact that organisms must maintain themselves in a low entropy state was famously proposed, in an informal manner, by Schrödinger [82], as well as Brillouin [83] and others [84,85]. This has led to an important line of work on quantifying the entropy of various kinds of living matter [86–89]. However, this research did not consider the role of organism–environment information exchanges in maintaining the organism's low entropy state.

Others have observed that organisms not only maintain a low entropy state but also constantly acquire and use information about their environment to do so [52,90–95]. Moreover, it has been suggested that natural selection can drive improvements in the mechanisms that gather and store information about the environment [96]. However, these proposals did not specify how to formally quantify the amount and content of information which contributes to the self-maintenance of any given organism.

Recently, there has been dramatic progress in our understanding of the physics of non-equilibrium processes which acquire, transform, and use information, as part of the development of the so-called thermodynamics of information [34]. It is now well understood that, as a consequence of the Second Law of Thermodynamics, any process that reduces the entropy of a system must incur some thermodynamic costs. In particular, the so-called generalized Landauer's principle [69,97,98] states that, given a system coupled to a heat bath at temperature T, any process that reduces the entropy of the system by n bits must release at least n · kBT ln 2 of energy as heat (alternatively, at most n · kBT ln 2 of heat can be absorbed by any process that increases entropy by n bits). It has also been shown that in certain scenarios, heat must be generated in order to acquire syntactic information, whether mutual information [34,99–101], transfer entropy [102–106], or other measures [107–111].
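To give a sense of scale for the bound n · kBT ln 2, the following short sketch evaluates it numerically. The function name and the choice of 300 K are illustrative assumptions; the Boltzmann constant is the exact SI value.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the 2019 SI

def landauer_min_heat_joules(bits, temperature_kelvin):
    """Minimum heat (joules) released when entropy is reduced by `bits` bits
    in a system coupled to a heat bath at the given temperature."""
    return bits * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)

# Illustrative case: reducing entropy by one bit at roughly room temperature.
heat_one_bit = landauer_min_heat_joules(1, 300.0)
```

At 300 K the bound is a little under 3 × 10⁻²¹ J per bit, and it scales linearly in both the number of bits and the temperature.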

Owing to these developments, non-equilibrium statistical physics now has a fully rigorous understanding of ‘information-powered non-equilibrium states’ [63,99–101,103,112–122], i.e. systems in which non-equilibrium is maintained by the ongoing exchange of information between subsystems. The prototypical examples of such states are ‘feedback-control’ processes, in which one subsystem acquires information about another subsystem, and then uses this information to apply appropriate control protocols so as to keep itself or the other system out of equilibrium (e.g. Maxwell's demon [121–123], feedback cooling [120], etc.). Information-powered non-equilibrium states differ from the kinds of non-equilibrium systems traditionally considered in statistical physics, which are driven by work reservoirs with (feedback-less) control protocols, or by coupling to multiple thermodynamic reservoirs (e.g. Bénard cells).

Recall that we define our viability function as the negative entropy of the system. As stated, results from non-equilibrium statistical physics show that both decreasing entropy (i.e. increasing viability) and acquiring syntactic information carry thermodynamic costs, and these costs can be related to each other. In particular, the syntactic information that a system has about its environment will often require some work to acquire. However, the same information may carry an arbitrarily large benefit [124], for instance by indicating the location of a large source of free energy, or a danger to avoid. To compare the benefit and the cost of the syntactic information to the system, below we define the thermodynamic multiplier as the ratio between the viability value of the information and the amount of syntactic information. Having a large thermodynamic multiplier indicates that the information that the system has about the environment leads to a large ‘bang-per-bit’ in terms of viability. As we will see, the thermodynamic multiplier is related to the semantic efficiency of a system: systems with positive value of information and high semantic efficiency tend to have larger thermodynamic multipliers.
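The ratio described above is simple enough to state as a one-line computation. The sketch below is only illustrative: the function name and the input numbers are hypothetical, and both quantities are assumed to be expressed in bits.

```python
def thermodynamic_multiplier(value_of_information, syntactic_information_bits):
    """Viability value of information per bit of syntactic information."""
    if syntactic_information_bits <= 0:
        raise ValueError("the multiplier needs a positive amount of syntactic information")
    return value_of_information / syntactic_information_bits

# Hypothetical system: scrambling its 1.0 bit of information costs 0.7 bits of viability.
multiplier = thermodynamic_multiplier(0.7, 1.0)
```

A system holding many bits that are irrelevant to its viability would have a large denominator and hence a small multiplier, which is the intuition behind the link to semantic efficiency.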

3. Preliminaries and physical set-up

We indicate random variables by capital letters, such as X, and particular outcomes of random variables by corresponding lower-case letters, such as x. Lower-case letters p, q, … are also used to refer to probability distributions. Where not clear from context, we use notation like pX to indicate that p is a distribution of the random variable X. We also use notation like pX,Y for the joint distribution of X and Y , and pX|Y for the conditional distribution of X given Y. We use notation like pXpY to indicate product distributions, i.e. [pXpY](x, y) = pX(x)pY(y) for all x, y.

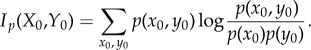

We assume that the reader is familiar with the basics of information theory [36]. We write S(pX) for the Shannon entropy of distribution pX, Ip(X; Y ) for the mutual information between random variables X and Y with joint distribution pX,Y, and Ip(X; Y|Z) for the conditional mutual information given joint distribution pX,Y,Z. We measure information in bits, except where noted.

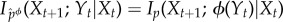

In addition to the standard measures from information theory, we also use a measure called transfer entropy [81]. Given a distribution p over a sequence of paired random variables (X0, Y0), (X1, Y1), …, (Xτ, Yτ) indexed by timestep t ∈ {0, …, τ}, the transfer entropy from Y to X at timestep t is defined as the conditional mutual information,

$$T_p(Y_t \to X_{t+1}) = I_p(X_{t+1}; Y_t \mid X_t). \tag{3.1}$$

Transfer entropy reflects how much knowledge of the state of Y at timestep t reduces uncertainty about the next state of X at the next timestep t + 1, conditioned on knowing the state of X at timestep t. It thus reflects ‘new information’ about Y that is acquired by X at time t.
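For a finite state space, this conditional mutual information can be computed directly from the joint distribution of (Xt+1, Yt, Xt). The sketch below is a minimal illustration; the function name, the array layout and the ‘system copies the environment’ toy distribution are assumptions made for this example.

```python
import numpy as np

def conditional_mutual_information(p_abc):
    """I(A; B | C) in bits for a joint distribution stored as p_abc[a, b, c]."""
    p_abc = np.asarray(p_abc, dtype=float)
    p_c = p_abc.sum(axis=(0, 1), keepdims=True)   # shape (1, 1, C)
    p_ac = p_abc.sum(axis=1, keepdims=True)       # shape (A, 1, C)
    p_bc = p_abc.sum(axis=0, keepdims=True)       # shape (1, B, C)
    mask = p_abc > 0                              # skip zero-probability outcomes
    ratio = (p_abc * p_c)[mask] / (p_ac * p_bc)[mask]
    return float((p_abc[mask] * np.log2(ratio)).sum())

# Toy case: x_now and y_now are independent fair bits, and the system
# copies the environment, x_next = y_now. Array is indexed [x_next, y_now, x_now].
p_copy = np.zeros((2, 2, 2))
for x_now in range(2):
    for y_now in range(2):
        p_copy[y_now, y_now, x_now] = 0.25

# Transfer entropy from Y to X at this step: I(X_next; Y_now | X_now).
transfer_entropy_copy = conditional_mutual_information(p_copy)

# Contrast: all three variables independent and uniform, so nothing is transferred.
transfer_entropy_independent = conditional_mutual_information(np.full((2, 2, 2), 1 / 8))
```

In the copying case the system acquires one full bit about the environment per step, while in the independent case the transfer entropy is zero.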

In our analysis below, we assume that there are two coupled systems, called ‘the system’ and ‘the environment’, with state-spaces indicated by X and Y, respectively. The system/environment X × Y may be isolated from the rest of the universe, or may be coupled to one or more thermodynamic reservoirs and/or work reservoirs. For simplicity, we assume that the joint state space X × Y is discrete and finite (in physics, such a discrete state space is often derived by coarse-graining an underlying Hamiltonian system [125,126]), though in principle our approach can also be extended to continuous state-spaces. In some cases, X × Y may also represent a space of coarse-grained macrostates rather than microstates (e.g. a vector of chemical concentrations at different spatial locations), usually under the assumption that local equilibrium holds within each macrostate (see appendix B for an example).

The joint system evolves dynamically from initial time t = 0 to final time t = τ. We assume that the decomposition into system/environment remains constant over this time (in future work, it may be interesting to consider time-inhomogeneous decompositions, e.g. for analysing growing systems). In our analysis of observed semantic information in §5.2, we assume for simplicity that the coupled dynamics of the system and the environment are stochastic, discrete-time and first-order Markovian. However, we do not assume that dynamics are time-homogeneous (meaning that, in principle, our framework allows for external driving by the work reservoir). Other kinds of dynamics (e.g. Hamiltonian dynamics, which are continuous-time and deterministic) can also be considered, though care is needed when defining measures like transfer entropy for continuous-time systems [106].

We use random variables $X_t$ and $Y_t$ to represent the state of $\mathcal{X}$ and $\mathcal{Y}$ at some particular time t ≥ 0, and random variables $X_{0..\tau} = \langle X_0, \dots, X_\tau \rangle$ and $Y_{0..\tau} = \langle Y_0, \dots, Y_\tau \rangle$ to indicate entire trajectories of $\mathcal{X}$ and $\mathcal{Y}$ from time t = 0 to t = τ.

4. The viability function

We quantify the ‘level of existence’ of a given system at any given time with a viability function $V$. Though several viability functions can be considered, in this paper we define the viability function as the negative of the Shannon entropy of the marginal distribution of system $\mathcal{X}$ at time τ,

$$V(p_{X_\tau}) := -S(p_{X_\tau}) = \sum_{x_\tau} p(x_\tau) \log_2 p(x_\tau). \tag{4.1}$$

If the state space of $\mathcal{X}$ represents a set of coarse-grained macrostates, equation (4.1) should be amended to include the contribution from ‘internal entropies’ of each macrostate (see appendix B for an example).
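As a minimal illustration of equation (4.1), the negative-entropy viability of a discrete marginal can be computed directly. The sketch below assumes the distribution is given as a plain dictionary from states to probabilities; the function names and the two example distributions are hypothetical and are not taken from the authors' implementation.

```python
import math

def shannon_entropy_bits(dist):
    """Shannon entropy (in bits) of a distribution given as {state: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def viability(dist):
    """Negative-entropy viability of a system marginal, following equation (4.1)."""
    return -shannon_entropy_bits(dist)

# Hypothetical marginals over four system states at time tau.
concentrated = {"a": 0.97, "b": 0.01, "c": 0.01, "d": 0.01}
uniform = {state: 0.25 for state in "abcd"}

print(viability(concentrated))  # close to zero: low entropy, high viability
print(viability(uniform))       # lowest possible viability over four states
```

A distribution concentrated on one state scores near the maximum of 0, while the uniform distribution scores the minimum of −log2 4 = −2 bits.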

There are several reasons for selecting negative entropy as the viability function. First, as discussed in §2, results in non-equilibrium statistical physics relate changes of the Shannon entropy of a physical system to thermodynamic quantities like heat and work [33,34,68–71]. These relations allow us to analyse our measures in terms of thermodynamic costs.

The second reason we define viability as negative entropy is that entropy provides an upper bound on the amount of probability that can be concentrated in any small subset of the state space X (for this reason, entropy has been used as a measure of the performance of a controller [61–63]). For us, this is relevant because there is often a naturally defined ‘viability set’ [64–67,127,128], which is the set of states in which the system $\mathcal{X}$ can continue to perform self-maintenance functions. Typically, the viability set will be a very small subset of the overall state space X. For instance, the total number of ways in which the atoms in an E. coli bacterium can be arranged, relative to the number of ways they can be arranged to constitute a living E. coli, has been estimated to be of the order of $2^{46\,000\,000}$ [86]. If the entropy of system $\mathcal{X}$ is large and the viability set is small, then the probability that the system state is within the viability set must be small, no matter where that viability set is in X. Thus, maintaining low entropy is a necessary condition for remaining within the viability set. (Appendix A elaborates these points, deriving a bound between Shannon entropy and the probability of the system being within any small subset of its state space.)

At the same time, negative entropy may have some disadvantages as a viability function. Most obviously, a distribution can have low entropy but still assign a low probability to being in a particular viability set. In addition, a system that maintains low entropy over time does not necessarily ‘maintain its identity’ (e.g. both a rhinoceros and a human have low entropy). Whether this is an advantage or a drawback of the measure depends partly on how the notion of ‘self-maintenance’ is conceptualized.

There are other ways to define the viability function, some of which address these potential disadvantages of using negative entropy. Given a particular viability set $\mathcal{A} \subseteq X$, a natural definition of the viability function is the probability that the system's state is in the viability set, $p(X_\tau \in \mathcal{A})$. However, this definition requires the viability set to be specified, and in many scenarios we might know that there is a viability set but not be able to specify it precisely. To use a previous example, identifying the viability set of an E. coli is an incredibly challenging problem [86].

Alternatively, it is often stated that self-maintaining systems must remain out of thermodynamic equilibrium [11,14,52]. This suggests defining the viability function in a way that captures the ‘distance from equilibrium’ of system $\mathcal{X}$. One such measure is the Kullback–Leibler divergence (in nats) between the actual distribution over $X_\tau$ and the equilibrium distribution of $\mathcal{X}$ at time τ, indicated here by $\pi_{X_\tau}$,

$$V_{\mathrm{KL}}(p_{X_\tau}) := D_{\mathrm{KL}}(p_{X_\tau} \,\|\, \pi_{X_\tau}). \tag{4.2}$$

This viability function, which is sometimes called ‘exergy’ or ‘availability’ in the literature [129,130], has a natural physical interpretation [68]: if the system were separated from environment $\mathcal{Y}$ and coupled to a single heat bath at temperature T, then up to $k_B T \cdot D_{\mathrm{KL}}(p_{X_\tau} \,\|\, \pi_{X_\tau})$ work could be extracted by bringing the system from $p_{X_\tau}$ to $\pi_{X_\tau}$.
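The work bound quoted above can be evaluated numerically once an equilibrium distribution is available. The following is a rough sketch only: the three-state distributions, the 300 K bath temperature and the function name are assumptions made for illustration, and the equilibrium distribution is assumed to have full support.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def kl_divergence_nats(p, q):
    """D_KL(p || q) in nats; q must assign non-zero probability wherever p does."""
    total = 0.0
    for state, p_s in p.items():
        if p_s > 0:
            total += p_s * math.log(p_s / q[state])
    return total

# Hypothetical three-state system: actual distribution vs. assumed equilibrium.
p_actual = {"s0": 0.90, "s1": 0.05, "s2": 0.05}
pi_equilibrium = {"s0": 1 / 3, "s1": 1 / 3, "s2": 1 / 3}

viability_kl = kl_divergence_nats(p_actual, pi_equilibrium)   # equation (4.2)
max_work_joules = K_B * 300.0 * viability_kl                  # k_B T D_KL at 300 K
print(viability_kl, max_work_joules)
```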

Unfortunately, there are difficulties in using equation (4.2) as the viability function in the general case. In statistical physics, the equilibrium distribution is defined as a stationary distribution in which all probability fluxes vanish. Since the system $\mathcal{X}$ is open (it is coupled to the environment $\mathcal{Y}$, and possibly multiple thermodynamic reservoirs), such an equilibrium distribution will not exist in the general case, and equation (4.2) may be undefined. For instance, a Bénard cell, a well-known non-equilibrium system which is coupled to both hot and cold thermal reservoirs [59], will evolve to a non-equilibrium stationary distribution, in which probability fluxes do not vanish. While it is certainly true that a Bénard cell is out of thermodynamic equilibrium, one cannot quantify ‘how far’ from equilibrium it is by using equation (4.2).

In principle, it is possible to quantify the ‘amount of non-equilibrium’ without making reference to an equilibrium distribution, in particular, by measuring the amount of probability flux in a system (e.g. instantaneous entropy production [131,132] or the norm of the probability fluxes [133,134]). However, there is not necessarily a clear relationship between the amount of probability flux and the capacity of a system to carry out self-maintenance functions [135]. We leave exploration of these alternative viability functions for future work.

It is important to re-emphasize that, in our framework, the viability function is exogenously determined by the scientist analysing the system, rather than being a purely endogenous characteristic of the system. At first glance, our approach may appear to suffer some of the same problems as do approaches that define semantic information in terms of an exogenously specified utility function (see the discussion in §1.1). However, there are important differences between a utility function and a viability function. First, we require that a viability function is well defined for any physical system, whether a rock, a human, a city, a galaxy; utility functions, on the other hand, are generally scenario-specific and far from universal. Furthermore, given an agent with an exogenously defined utility function operating in a time-extended scenario, maintaining existence is almost always a necessary (though usually implicit) condition for high utility. A reasonably chosen viability function should capture this minimal, universal component of nearly all utility functions. Finally, unlike utility functions, in principle, it may be possible to derive the viability function in some objective way (e.g. in terms of the attractor landscape of the coupled system–environment dynamics [64,128]).

5. Semantic information via interventions

As described above, we quantify semantic information in terms of the amount of syntactic information which contributes to the ability of the system to continue existing.

We use the term actual distribution to refer to the original, unintervened distribution of trajectories of the joint system–environment over time t = 0 to t = τ, which will usually be indicated with the symbol $p$. Our goal is to quantify how much semantic information the system has about the environment under the actual distribution. To do this, we define a set of counter-factual intervened distributions over trajectories, which are similar to the actual distribution except that some of the syntactic information between system and environment is scrambled, and which will usually be indicated with some variant of the symbol $\hat{p}$. We define measures of semantic information by analysing how the viability of the system at time τ changes between the actual and the intervened distributions.

Information theory provides many different measures of syntactic information between the system and environment, each of which requires a special type of intervention, and each of which gives rise to a particular set of semantic information measures. In this paper, we focus on two types of syntactic information. In §5.1, we consider stored semantic information, which is defined by scrambling the mutual information between system and environment in the actual initial distribution $p_{X_0,Y_0}$, while leaving the dynamics unchanged. In §5.2, we instead consider observed semantic information, which is defined via a ‘dynamic’ intervention in which we keep the initial distribution the same but change the dynamics so as to scramble the transfer entropy from the environment to the system. Observed semantic information identifies semantic information that is acquired by dynamic interactions between the system and environment, rather than present in the initial mutual information. An example of observed semantic information is exhibited by a chemotactic bacterium, which makes ongoing measurements of the direction of food in its environment, and then uses this information to move towards food. In §5.3, we briefly discuss other possible measures of semantic information.

5.1. Stored semantic information

5.1.1. Overview

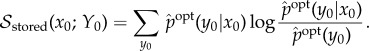

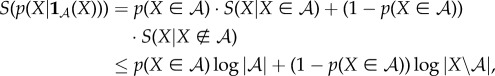

Stored semantic information is derived from the mutual information between system and environment at time t = 0. This mutual information can be written as

$$I_p(X_0; Y_0) = \sum_{x_0, y_0} p(x_0, y_0) \log_2 \frac{p(x_0, y_0)}{p(x_0)\, p(y_0)}. \tag{5.1}$$

Mutual information achieves its minimum value of 0 if and only if $X_0$ and $Y_0$ are statistically independent under $p$, i.e. when $p_{X_0,Y_0} = p_{X_0} p_{Y_0}$. Thus, we first consider an intervention that destroys all mutual information by transforming the actual initial distribution $p_{X_0,Y_0}$ to the product initial distribution,

$$\hat{p}^{\text{full}}_{X_0,Y_0}(x_0, y_0) := p(x_0)\, p(y_0). \tag{5.2}$$

(We use the superscript ‘full’ to indicate that this is a ‘full scrambling’ of the mutual information.)

To compute the viability value of stored semantic information at t = 0, we run the coupled system–environment dynamics starting from both the actual initial distribution $p_{X_0,Y_0}$ and the intervened initial distribution $\hat{p}^{\text{full}}_{X_0,Y_0}$, and then measure the difference in the viability of the system at time τ,

$$\Delta V^{\text{stored}}_{\text{tot}} := V(p_{X_\tau}) - V(\hat{p}^{\text{full}}_{X_\tau}). \tag{5.3}$$

For the particular viability function we are considering (negative entropy), the viability value is

$$\Delta V^{\text{stored}}_{\text{tot}} = S(\hat{p}^{\text{full}}_{X_\tau}) - S(p_{X_\tau}). \tag{5.4}$$
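The full-scrambling intervention and the resulting viability value can be sketched for a very small model. Everything model-specific below is an assumption made for illustration: the two food locations, the four ‘dead’ states and the one-shot kernel `toy_kernel` are hypothetical stand-ins for the coupled dynamics over [0, τ], not the authors' model.

```python
import math

def entropy_bits(dist):
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def full_scramble(joint0):
    """Product of the marginals of p(x0, y0), as in equation (5.2)."""
    p_x, p_y = {}, {}
    for (x0, y0), p in joint0.items():
        p_x[x0] = p_x.get(x0, 0.0) + p
        p_y[y0] = p_y.get(y0, 0.0) + p
    return {(x0, y0): p_x[x0] * p_y[y0] for x0 in p_x for y0 in p_y}

def final_system_marginal(joint0, kernel):
    """Marginal over the system state at time tau, given p(x0, y0) and a kernel
    mapping (x0, y0) to a distribution over final system states."""
    marginal = {}
    for (x0, y0), p0 in joint0.items():
        for x_tau, p_t in kernel(x0, y0).items():
            marginal[x_tau] = marginal.get(x_tau, 0.0) + p0 * p_t
    return marginal

def toy_kernel(x0, y0):
    # Hypothetical dynamics: the system survives only if its stored guess x0
    # matches the food location y0; otherwise it ends in one of four equally
    # likely 'dead' states.
    if x0 == y0:
        return {"alive": 1.0}
    return {f"dead{i}": 0.25 for i in range(4)}

actual = {(0, 0): 0.5, (1, 1): 0.5}   # the system knows the food location
scrambled = full_scramble(actual)

# Equation (5.4): viability value = S(intervened) - S(actual), in bits.
delta_v = (entropy_bits(final_system_marginal(scrambled, toy_kernel))
           - entropy_bits(final_system_marginal(actual, toy_kernel)))
print(delta_v)  # 2.0 bits for this toy model
```

In this toy model the single bit of initial mutual information is worth 2 bits of viability, because scrambling it sends the system into the high-entropy ‘dead’ states half of the time.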

Equation (5.3) measures the difference of viability under the ‘full scrambling’, but does not specify which part of the mutual information actually causes this difference. To illustrate this issue, consider a system in an environment where food can be in one of two locations with 50% probability each, and the system starts at t = 0 with perfect information about the food location. Imagine that the system's viability depends upon it finding and eating the food. Now suppose that the system also has 1000 bits of mutual information about the state of the environment which does not contribute in any way to the system's viability. In this case, the initial mutual information will be 1001 bits, though only 1 bit (the location of the food) is ‘meaningful’ to the system, in that it affects the system's ability to maintain high viability.

In order to find that part of the mutual information which is meaningful, we define an entire set of ‘partial’ interventions (rather than just considering the single ‘full’ intervention mentioned above). We then find the partial intervention which destroys the most syntactic information while leaving the viability unchanged, which we call the (viability-) optimal intervention. The optimal intervention specifies which part of the mutual information is meaningless, in that it can be scrambled without affecting viability, and which part is meaningful, in the sense that it must be preserved in order to achieve the actual viability value. For the example mentioned in the previous paragraph, the viability-optimal intervention would preserve the 1 bit of information concerning the location of the food, while scrambling away the remaining 1000 bits.

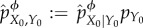

Each partial intervention in the set of possible partial interventions is induced by a particular ‘coarse-graining function’. First, consider the actual conditional probability of system given environment at t = 0, $p_{X_0|Y_0}$, as a communication channel over which the system acquires information from its environment. To define each partial intervention, we coarse-grain this communication channel $p_{X_0|Y_0}$ using a coarse-graining function ϕ(y), which specifies which distinctions the system can make about the environment. Formally, the intervened channel from $Y_0$ to $X_0$ induced by ϕ, indicated as $\hat{p}^{\phi}_{X_0|Y_0}$, is taken to be the actual conditional probability of system states $X_0$ given coarse-grained environments $\phi(Y_0)$,

$$\hat{p}^{\phi}_{X_0|Y_0}(x_0 \mid y_0) := p\big(x_0 \mid \phi(Y_0) = \phi(y_0)\big) = \frac{\sum_{y_0' : \phi(y_0') = \phi(y_0)} p(x_0, y_0')}{\sum_{y_0' : \phi(y_0') = \phi(y_0)} p(y_0')}. \tag{5.5}$$

We then define the intervened joint distribution at t = 0 as $\hat{p}^{\phi}_{X_0,Y_0} := \hat{p}^{\phi}_{X_0|Y_0}\, p_{Y_0}$. Under the intervened distribution $\hat{p}^{\phi}_{X_0,Y_0}$, $X_0$ is conditionally independent of $Y_0$ given $\phi(Y_0)$, and any two states of the environment $y_0$ and $y_0'$ which have $\phi(y_0) = \phi(y_0')$ will be indistinguishable from the point of view of the system. Said differently, $X_0$ will only have information about $\phi(Y_0)$, not $Y_0$ itself, and it can be verified that $I_{\hat{p}^{\phi}}(X_0; Y_0) = I_{\hat{p}^{\phi}}(X_0; \phi(Y_0))$. In the information-theory literature, the coarse-grained channel $\hat{p}^{\phi}_{X_0|Y_0}$ is sometimes called a ‘Markov approximation’ of the actual channel $p_{X_0|Y_0}$ [77], which is itself a special case of the so-called channel pre-garbling or channel input-degradation [77–79]. Pre-garbling is a principled way to destroy part of the information flowing across a channel, and has important operationalizations in terms of coding and game theory [78].

So far we have left unspecified how the coarse-graining function ϕ is chosen. In fact, one can choose different ϕ, in this way inducing different partial interventions. The ‘most conservative’ intervention corresponds to any ϕ which is a one-to-one function of Y, such as the identity map ϕ(y) = y. In this case, one can use equation (5.5) to verify that the intervened channel from $Y_0$ to $X_0$ will be the same as the actual channel, and the intervention will have no effect. The ‘least conservative’ intervention occurs when ϕ is a constant function, such as ϕ(y) = 0. In this case, the intervened distribution will be the ‘full scrambling’ of equation (5.2), for which $I_{\hat{p}^{\text{full}}}(X_0; Y_0) = 0$. We use Φ to indicate the set of all possible coarse-graining functions (without loss of generality, we can assume that each element of this set is $\phi : Y \to Y$).
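The two limiting cases just described (a one-to-one ϕ and a constant ϕ) can be checked numerically. The sketch below implements the coarse-grained channel of equation (5.5) for joint distributions stored as dictionaries; the four-state example and all function names are illustrative assumptions.

```python
import math

def marginals(joint):
    p_x, p_y = {}, {}
    for (x, y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p
        p_y[y] = p_y.get(y, 0.0) + p
    return p_x, p_y

def mutual_information_bits(joint):
    p_x, p_y = marginals(joint)
    return sum(p * math.log2(p / (p_x[x] * p_y[y]))
               for (x, y), p in joint.items() if p > 0)

def intervene(joint, phi):
    """Intervened joint induced by phi: p(x0 | phi(Y0) = phi(y0)) * p(y0)."""
    p_x, p_y = marginals(joint)
    block_mass, block_joint = {}, {}
    for (x, y), p in joint.items():
        b = phi(y)
        block_mass[b] = block_mass.get(b, 0.0) + p          # p(phi(Y0) = b)
        block_joint[(x, b)] = block_joint.get((x, b), 0.0) + p  # p(x0, phi(Y0) = b)
    return {(x, y): block_joint.get((x, phi(y)), 0.0) / block_mass[phi(y)] * p_y[y]
            for x in p_x for y in p_y if p_y[y] > 0}

# Hypothetical example: four equally likely environment states, and a system
# state that is a perfect copy of the environment (2 bits of mutual information).
joint = {(y, y): 0.25 for y in range(4)}

mi_identity = mutual_information_bits(intervene(joint, lambda y: y))       # no effect
mi_partial = mutual_information_bits(intervene(joint, lambda y: y // 2))   # keeps 1 bit
mi_constant = mutual_information_bits(intervene(joint, lambda y: 0))       # full scrambling
print(mi_identity, mi_partial, mi_constant)
```

The intermediate coarse-graining `y // 2` merges environment states in pairs, so the system retains only which pair the environment is in.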

We are now ready to define our remaining measures of stored semantic information. We first define the information/viability curve as the maximal achievable viability at time τ under any possible intervention,

$$D_{\text{stored}}(R) := \max_{\phi \in \Phi} V(\hat{p}^{\phi}_{X_\tau}) \quad \text{such that} \quad I_{\hat{p}^{\phi}}(X_0; Y_0) = R,$$

where R indicates the amount of mutual information that is preserved. (Note that $D_{\text{stored}}(R)$ is undefined for values of R for which there is no function ϕ such that $I_{\hat{p}^{\phi}}(X_0; Y_0) = R$.) $D_{\text{stored}}(R)$ is the curve schematically diagrammed in figure 1c.

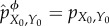

We define the (viability-) optimal intervention $\hat{p}^{\text{opt}}_{X_0,Y_0}$ as the intervention that achieves the same viability value as the actual distribution while having the smallest amount of syntactic information,

$$\hat{p}^{\text{opt}}_{X_0,Y_0} \in \operatorname*{arg\,min}_{\hat{p}^{\phi} \,:\, \phi \in \Phi} I_{\hat{p}^{\phi}}(X_0; Y_0) \quad \text{such that} \quad V(\hat{p}^{\phi}_{X_\tau}) = V(p_{X_\tau}). \tag{5.6}$$

By definition, any further scrambling of $\hat{p}^{\text{opt}}_{X_0,Y_0}$ would change system viability, meaning that in $\hat{p}^{\text{opt}}_{X_0,Y_0}$ all remaining mutual information is meaningful. Therefore, we define the amount of stored semantic information as the mutual information in the optimal intervention,

$$\mathcal{S}_{\text{stored}} := I_{\hat{p}^{\text{opt}}}(X_0; Y_0). \tag{5.7}$$
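For small state spaces, the minimization in equation (5.6) can be carried out by brute force over all coarse-graining functions ϕ: Y → Y. The sketch below does this for a hypothetical model in which the system stores two bits about the environment but only one of them (the ‘food bit’) affects survival. The model, the kernel and the numerical tolerance are assumptions for illustration, and exhaustive enumeration is used here only because the state space is tiny.

```python
import math
from itertools import product

def entropy_bits(dist):
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def marginals(joint):
    p_x, p_y = {}, {}
    for (x, y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p
        p_y[y] = p_y.get(y, 0.0) + p
    return p_x, p_y

def mutual_information_bits(joint):
    p_x, p_y = marginals(joint)
    return sum(p * math.log2(p / (p_x[x] * p_y[y]))
               for (x, y), p in joint.items() if p > 0)

def intervene(joint, phi):
    """Intervened joint induced by the coarse-graining phi (a dict on Y)."""
    p_x, p_y = marginals(joint)
    block_mass, block_joint = {}, {}
    for (x, y), p in joint.items():
        block_mass[phi[y]] = block_mass.get(phi[y], 0.0) + p
        block_joint[(x, phi[y])] = block_joint.get((x, phi[y]), 0.0) + p
    return {(x, y): block_joint.get((x, phi[y]), 0.0) / block_mass[phi[y]] * p_y[y]
            for x in p_x for y in p_y}

def viability(joint, kernel):
    """Negative entropy of the system marginal after applying the dynamics."""
    final = {}
    for (x0, y0), p0 in joint.items():
        for x_tau, p_t in kernel(x0, y0).items():
            final[x_tau] = final.get(x_tau, 0.0) + p0 * p_t
    return -entropy_bits(final)

def toy_kernel(x0, y0):
    # Hypothetical dynamics: only the low bit (the 'food bit') matters.
    if x0 % 2 == y0 % 2:
        return {"alive": 1.0}
    return {f"dead{i}": 0.25 for i in range(4)}

env_states = range(4)                          # two bits packed as 0..3
actual = {(y, y): 0.25 for y in env_states}    # system holds a perfect copy
v_actual = viability(actual, toy_kernel)

best_mi, best_phi = None, None
for values in product(env_states, repeat=len(env_states)):   # every phi: Y -> Y
    phi = dict(zip(env_states, values))
    p_hat = intervene(actual, phi)
    if abs(viability(p_hat, toy_kernel) - v_actual) < 1e-9:   # viability preserved
        mi = mutual_information_bits(p_hat)
        if best_mi is None or mi < best_mi - 1e-12:
            best_mi, best_phi = mi, phi

stored_semantic_information = best_mi
semantic_efficiency = best_mi / mutual_information_bits(actual)
print(stored_semantic_information, semantic_efficiency, best_phi)
```

In this toy model the search keeps the food bit and scrambles the irrelevant bit, so 1 of the 2 bits of initial mutual information is semantic. The number of functions ϕ grows as |Y|^|Y|, so this enumeration is not practical beyond very small environments.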

While the value of information $\Delta V^{\text{stored}}_{\text{tot}}$ can be positive or negative, the amount of stored semantic information is always non-negative. Moreover, stored semantic information reflects the number of bits that play a causal role in determining the viability of the system at time τ, regardless of whether they cause it to change positively or negatively.

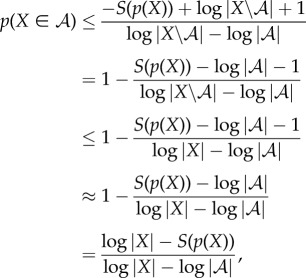

Since the actual distribution $p_{X_0,Y_0}$ is part of the domain of the minimization in equation (5.6) (it corresponds to any ϕ which is one-to-one), the amount of stored semantic information $\mathcal{S}_{\text{stored}}$ can be no greater than the actual mutual information $I_p(X_0; Y_0)$. We define the semantic efficiency as the ratio of the stored semantic information to the overall syntactic information,

$$\eta_{\text{stored}} := \frac{\mathcal{S}_{\text{stored}}}{I_p(X_0; Y_0)} \in [0, 1]. \tag{5.8}$$

Semantic efficiency measures what portion of the initial mutual information between the system and environment causally contributes to the viability of the system at time τ.

5.1.2. Pointwise measures

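As mentioned, the optimal intervention only contains semantic information, i.e. only information which affects the viability of the system at time τ. We use this to define the pointwise semantic information of individual states of the system and environment in terms of ‘pointwise’ measures of mutual information [136] under $\hat{p}^{\text{opt}}_{X_0,Y_0}$,

$$\mathcal{S}_{\text{stored}}(x_0; y_0) := \log_2 \frac{\hat{p}^{\text{opt}}(x_0, y_0)}{\hat{p}^{\text{opt}}(x_0)\, \hat{p}^{\text{opt}}(y_0)}. \tag{5.9}$$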

We similarly define the specific semantic information in system state $x_0$ as the ‘specific information’ [137] about Y given $x_0$,

$$\mathcal{S}_{\text{stored}}(x_0) := \sum_{y_0} \hat{p}^{\text{opt}}(y_0 \mid x_0) \log_2 \frac{\hat{p}^{\text{opt}}(x_0, y_0)}{\hat{p}^{\text{opt}}(x_0)\, \hat{p}^{\text{opt}}(y_0)}. \tag{5.10}$$

These measures quantify the extent to which a system state $x_0$, and a system–environment state $(x_0, y_0)$, carry correlations which causally affect the system's viability at t = τ. Note that the specific semantic information, equation (5.10), and overall stored semantic information, equation (5.7), are expectations of the pointwise semantic information, equation (5.9).

Finally, we define the semantic content of system state $x_0$ as the conditional distribution $\hat{p}^{\text{opt}}_{Y_0|X_0}(y_0 \mid x_0)$ over all $y_0 \in Y$. The semantic content of $x_0$ reflects the precise set of correlations between $x_0$ and the environment at t = 0 that causally affect the system's viability at time τ.

It is important to note that the optimal intervention may not be unique, i.e. there might be multiple minimizers of equation (5.6). In case there are multiple optimal interventions, each optimal intervention will have its own measures of semantic content, and its own measures of pointwise and specific semantic information. The non-uniqueness of the optimal intervention, if it occurs, indicates that the system possesses multiple redundant sources of semantic information, any one of which is sufficient to achieve the actual viability value at time τ. A prototypical example is when the system has information about multiple sources of food which all provide the same viability benefit, and where the system can access at most one food source during t ∈ [0, τ].

5.1.3. Thermodynamics

In this section, we use ideas from statistical physics to define the thermodynamic multiplier of stored semantic information. This measure compares the physical costs to the benefits of system–environment mutual information.

We begin with a simple illustrative example. Imagine a system coupled to a heat bath at temperature T, as well as an environment which contains a source of $10^6$ J of free energy (e.g. a hamburger) in one of two locations (A or B), with 50% probability each. Assume that the system has time to move to only one of these locations during the interval t ∈ [0, τ]. We now consider two scenarios. In the first, the system initially has 1 bit of information about the location of the hamburger, which will generally cost at least $k_B T \ln 2$ of work to acquire. The system can use this information to move to the hamburger's location and then extract $10^6$ J of free energy. In the second scenario, the system never acquires the 1 bit of information about the hamburger location, and instead starts from the ‘fully scrambled’ distribution $\hat{p}^{\text{full}}_{X_0,Y_0}$ (equation (5.2)). By not acquiring the 1 bit of information, the system can save $k_B T \ln 2$ of work, which could be used at time τ to decrease its entropy (i.e. increase its viability) by 1 bit. However, because the system has no information about the hamburger location, it only finds the hamburger 50% of the time, thereby missing out on $0.5 \times 10^6$ J of free energy on average. This amount of lost free energy could have been used to decrease the system's entropy by $0.5 \times 10^6 / (k_B T \ln 2)$ bits at time t = τ. At typical temperatures, $0.5 \times 10^6 / (k_B T \ln 2) \gg 1$, meaning that the benefit of having the bit of information about the hamburger location far outweighs the cost of acquiring that bit.
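To get a feel for the magnitudes in the hamburger example, the two quantities can be evaluated at a specific temperature. The value T = 300 K below is an assumption standing in for ‘typical temperatures’; the variable names are illustrative.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant in J/K (exact SI value)
T = 300.0            # assumed 'typical' temperature, in kelvin

work_per_bit = K_B * T * math.log(2)   # minimum cost of acquiring 1 bit, in J
lost_free_energy = 0.5 * 1e6           # expected loss without the bit, in J
bits_forgone = lost_free_energy / work_per_bit

print(f"{work_per_bit:.3e} J per bit")   # roughly 2.9e-21 J
print(f"{bits_forgone:.3e} bits")        # roughly 1.7e26 bits
```

Under this assumption the bit costs a few zeptojoules to acquire, while its absence forgoes an entropy reduction of the order of 10^26 bits.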

To make this argument formal, imagine a physical ‘measurement’ process that transforms the fully scrambled system–environment distribution $\hat{p}^{\text{full}}_{X_0,Y_0}$ to the actual joint distribution $p_{X_0,Y_0}$. Assume that during the course of this process, the interaction energy between $\mathcal{X}$ and $\mathcal{Y}$ is negligible and that a heat bath at temperature T is available. The minimum amount of work required by any such measurement process [34,100] is $k_B T \ln 2$ times the change of system–environment entropy in bits, $\Delta S = [S(p_{X_0}) + S(p_{Y_0})] - S(p_{X_0,Y_0}) = I_p(X_0; Y_0)$. We take this minimum work,

$$W_{\min} := k_B T \ln 2 \cdot I_p(X_0; Y_0), \tag{5.11}$$

to be the cost of acquiring the mutual information. If this work were not spent acquiring the initial mutual information, it could have been used at time τ to decrease the entropy of the system, and thereby increase its viability, by $I_p(X_0; Y_0)$ bits (again ignoring energetic considerations).

The benefit of the mutual information is quantified by the viability value $\Delta V^{\text{stored}}_{\text{tot}}$, which reflects the difference in entropy at time t = τ when the system is started in its actual initial distribution $p_{X_0,Y_0}$ versus the fully scrambled initial distribution $\hat{p}^{\text{full}}_{X_0,Y_0}$, as in equation (5.4).

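Combining, we define the thermodynamic multiplier of stored semantic information, $\kappa_{\text{stored}}$, as the benefit/cost ratio of the mutual information,³

$$\kappa_{\text{stored}} := \frac{\Delta V^{\text{stored}}_{\text{tot}}}{W_{\min} / (k_B T \ln 2)} = \frac{\Delta V^{\text{stored}}_{\text{tot}}}{I_p(X_0; Y_0)}. \tag{5.12}$$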

The thermodynamic multiplier quantifies the ‘bang-per-bit’ that the syntactic information provides to the system, and provides a way to compare the ability of different systems to use information to keep their viability high. $\kappa_{\text{stored}} > 1$ means that the benefit of the information outweighs its cost. The thermodynamic multiplier can also be related to semantic efficiency, equation (5.8), via

$$\kappa_{\text{stored}} = \eta_{\text{stored}} \, \frac{\Delta V^{\text{stored}}_{\text{tot}}}{\mathcal{S}_{\text{stored}}}.$$

If the value of information is positive, then having a low semantic efficiency $\eta_{\text{stored}}$ translates into having a low thermodynamic multiplier. Thus, there is a connection between ‘paying attention to the right information’, as measured by semantic efficiency, and being thermodynamically efficient.

It is important to emphasize that we do not claim that the system actually spends $k_B T \ln 2 \cdot I_p(X_0; Y_0)$ of work to acquire the mutual information in $p_{X_0,Y_0}$. The actual cost could be larger, or it could be paid by the environment $\mathcal{Y}$ rather than the system, or by an external agent that prepares the joint initial condition of $\mathcal{X}$ and $\mathcal{Y}$, etc. Instead, the above analysis provides a way to compare the thermodynamic cost of acquiring the initial mutual information to the viability benefit of that mutual information. In situations where the actual cost of measurements performed by a system can be quantified (e.g. by counting the number of used ATPs), one could define the thermodynamic multiplier in terms of this actual cost.

Finally, we also emphasize that we ignore all energetic considerations in the above operationalization of the thermodynamic multiplier, in part by assuming a negligible interaction energy between system and environment. We have similarly ignored all energetic consequences in our analysis of interventions, as described above. It is not clear whether this approach is always justified. For instance, imagine that the system and environment have a large interaction energy at t = 0. In this case, a ‘measurement process’ that performs the transformation $p_{X_0} p_{Y_0} \mapsto p_{X_0,Y_0}$ (or alternatively an ‘intervention process’ that performs the full scrambling $p_{X_0,Y_0} \mapsto p_{X_0} p_{Y_0}$) may involve a very large (positive or negative) change in expected energy. Assuming the system–environment Hamiltonian is specified, one may consider defining a thermodynamic multiplier that takes into account changes in expected energy. Furthermore, one may also consider defining interventions in a way that obeys energetic constraints, so that interventions scramble information without injecting a large amount of energy into, or extracting it from, the system and environment. Exploring such extensions remains for future work.

5.1.4. Example: food-seeking agent

We demonstrate our framework using a simple model of a food-seeking agent. In this model, the environment $\mathcal{Y}$ contains food in one of five locations (initially uniformly distributed). The agent $\mathcal{X}$ can also be located in one of these five locations, and has internal information about the location of the food (i.e. its ‘target’). The agent always begins in location three (the middle of the world). Under the actual initial distribution, the agent has exact information about the location of the food. In each timestep, the agent moves towards its target and if it ever finds itself within one location of the food, it eats the food. If the agent does not eat food for a certain number of timesteps, it enters a high-entropy ‘death’ macrostate, which it can only exit with an extremely small probability (of the order of $10^{-34}$).

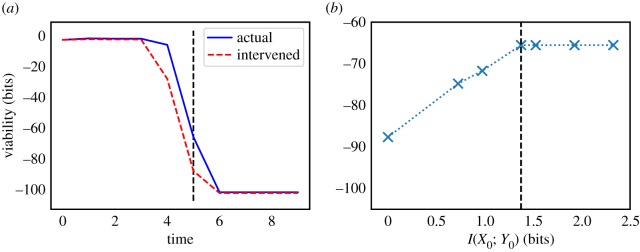

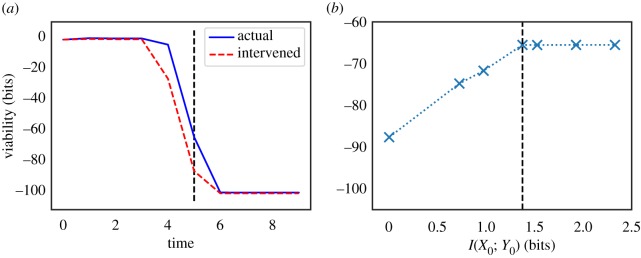

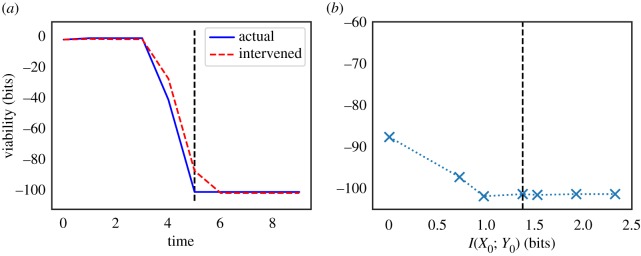

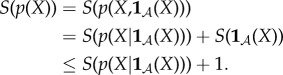

Figure 2 shows the results for timescale τ = 5. The initial mutual information is $\log_2 5 \approx 2.32$ bits, corresponding to the five possible locations of the food. However, the total amount of stored semantic information is only ≈1.37 bits, giving a semantic efficiency of $\eta_{\mathrm{stored}} \approx 0.6$. This occurs because if the food is initially in locations {2, 3, 4}, the agent is close enough to eat it immediately. From the point of view of the agent, differences between these three locations are ‘meaningless’ and can be scrambled with no loss of viability. Formally, the (unique) optimal intervention $\hat{p}^{\mathrm{opt}}_{X_0,Y_0}$ is induced by the following coarse-graining function (the output labels are arbitrary):

$$\phi^{\mathrm{opt}}(y) = \begin{cases} 1 & \text{if } y = 1, \\ 2 & \text{if } y \in \{2, 3, 4\}, \\ 3 & \text{if } y = 5, \end{cases}$$

which is neither one-to-one nor a constant function (thus, it is a strictly partial intervention). Its output takes three values with probabilities 1/5, 3/5 and 1/5, whose entropy is ≈1.37 bits. The value of information is $\Delta V^{\mathrm{stored}}_{\mathrm{tot}} \approx 22.1$ bits, giving a thermodynamic multiplier of $\kappa_{\mathrm{stored}} \approx 9.5$ (the food is ‘worth’ about 9.5 times more than the possible cost of acquiring information about its location).
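The information-theoretic figures quoted for this example can be checked with a few lines of Python. The sketch below is not the authors' implementation. It assumes only what the text states: a uniform prior over five food locations, exact initial knowledge of the food location, and a coarse-graining that merges locations {2, 3, 4}. It further assumes that the thermodynamic multiplier is the ratio of the value of information to the initial mutual information, which reproduces the quoted value. The 22.1 bits is taken from the text rather than recomputed, and all variable names are illustrative.

```python
from math import log2

def entropy_bits(probs):
    """Shannon entropy, in bits, of a discrete distribution."""
    return -sum(p * log2(p) for p in probs if p > 0)

# Food location is uniform over five sites and the agent initially knows it
# exactly, so the initial mutual information I(X0; Y0) equals H(Y0).
food_prior = [1 / 5] * 5
initial_mi = entropy_bits(food_prior)

# Coarse-graining that merges locations {2, 3, 4} and keeps 1 and 5 distinct
# (output labels are arbitrary).
phi = {1: 1, 2: 2, 3: 2, 4: 2, 5: 3}
block_probs = {}
for location, p in zip(range(1, 6), food_prior):
    block_probs[phi[location]] = block_probs.get(phi[location], 0.0) + p

# After the intervention the agent only knows phi(Y0), so the preserved
# mutual information is H(phi(Y0)).
stored_semantic_info = entropy_bits(block_probs.values())
efficiency = stored_semantic_info / initial_mi

# Value of information as reported in the text (assumption: not recomputed).
reported_value_bits = 22.1
multiplier = reported_value_bits / initial_mi

print(f"I(X0;Y0) = {initial_mi:.2f} bits")
print(f"stored semantic information = {stored_semantic_info:.2f} bits")
print(f"semantic efficiency = {efficiency:.2f}")
print(f"thermodynamic multiplier = {multiplier:.1f}")
```

The check makes explicit why the efficiency is below 1: three of the five equally likely locations collapse into a single block, so only the entropy of the three-block partition survives the intervention.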

Figure 2.

Illustration of our approach using a simple model of a food-seeking agent. (a) We plot viability values over time under both the actual and (fully scrambled) intervened distributions. The vertical dashed line corresponds to our timescale of interest (τ = 5 timesteps). (b) We plot the information/viability curve for τ = 5 (×'s are actual points on the curve; the dashed line is an interpolation). The vertical dashed line indicates the amount of stored semantic information. See text for details. (Online version in colour.)

In appendix B, we describe this model in detail, as well as a variation in which the system moves away from food rather than towards it, and thus has negative value of information. A Python implementation can be found at https://github.com/artemyk/semantic_information/.

5.2. Observed semantic information

To identify dynamically acquired semantic information, which we call observed semantic information, we define interventions in which we perturb the dynamic flow of syntactic information from environment to system, without modifying the initial system–environment distribution. While there are many ways of quantifying such information flow, here we focus on a widely used measure called transfer entropy [81]. Transfer entropy has several attractive features: it is directed (the transfer entropy from environment to system is not necessarily the same as the transfer entropy from system to environment), it captures common intuitions about information flow, and it has undergone extensive study, including in non-equilibrium statistical physics [102–106].

Observed semantic information can be illustrated with the following example. Imagine a system coupled to an environment in which the food can be in one of two locations (A or B), each of which occurs with 50% probability. At t = 0, the system has no information about the location of the food, but the dynamics are such that it acquires and internally stores this location in transitioning from t = 0 to t = 1. If we intervene and ‘fully’ scramble the transfer entropy, then in transitioning from t = 0 to t = 1 the system would ‘measure’ location A or B with 50% probability each, independently of the actual food location. Thus, if the system used its measurements to move towards food, it would find food with only 50% probability, and its viability would suffer. In this case, the transfer entropy from environment to system would contain observed semantic information.

Our approach is formally and conceptually similar to the one used to define stored semantic information (§5.1), and we proceed in a more cursory manner.

The transfer entropy from Y to X over t ∈ [1..τ] under the actual distribution can be expressed as a sum of conditional mutual information terms (see equation (3.1)),

$$\mathcal{T}_p(Y \to X) = \sum_{t=0}^{\tau-1} I_p(X_{t+1}; Y_t \mid X_t). \tag{5.13}$$
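As a numerical illustration of equation (5.13), the following sketch computes a single conditional mutual information term $I(X_{t+1}; Y_t \mid X_t)$ from a joint distribution and applies it to the two-location example described above. The dictionary-based representation and the function names are assumptions made for illustration; they are not taken from the authors' code.

```python
from collections import defaultdict
from math import log2

def conditional_mutual_information(joint):
    """I(X_next; Y | X) in bits, from a dict {(x, y, x_next): probability}."""
    p_x = defaultdict(float)    # p(x)
    p_xy = defaultdict(float)   # p(x, y)
    p_xn = defaultdict(float)   # p(x, x_next)
    for (x, y, x_next), p in joint.items():
        p_x[x] += p
        p_xy[(x, y)] += p
        p_xn[(x, x_next)] += p
    total = 0.0
    for (x, y, x_next), p in joint.items():
        if p > 0:
            # The ratio equals p(x_next | x, y) / p(x_next | x).
            total += p * log2(p * p_x[x] / (p_xy[(x, y)] * p_xn[(x, x_next)]))
    return total

def transfer_entropy(step_joints):
    """Sum of per-step terms; step_joints[t] is the joint of (X_t, Y_t, X_{t+1})."""
    return sum(conditional_mutual_information(j) for j in step_joints)

def success_probability(joint):
    """Probability that the stored measurement matches the food location."""
    return sum(p for (x, y, x_next), p in joint.items() if x_next == y)

# Actual dynamics: the system starts uninformed and copies the food location.
actual = {("none", "A", "A"): 0.5, ("none", "B", "B"): 0.5}
# Fully scrambled dynamics: the 'measurement' is independent of the food.
scrambled = {("none", y, m): 0.25 for y in "AB" for m in "AB"}

te_actual = transfer_entropy([actual])
te_scrambled = transfer_entropy([scrambled])
print(te_actual, success_probability(actual))
print(te_scrambled, success_probability(scrambled))
```

Under these assumptions, the actual dynamics carry one bit of transfer entropy and always locate the food, whereas the scrambled dynamics carry none and locate it half of the time.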

Note that the overall stochastic dynamics of the system and environment at time t can be written as $p_{X_{t+1},Y_{t+1}|X_t,Y_t} = p_{X_{t+1}|X_t,Y_t}\, p_{Y_{t+1}|X_t,Y_t,X_{t+1}}$, where $p_{X_{t+1}|X_t,Y_t}$ represents the response of the system to the previous state of itself and the environment, while $p_{Y_{t+1}|X_t,Y_t,X_{t+1}}$ represents the response of the environment to the previous state of itself and the system, as well as the current state of the system. Observe that the conditional mutual information at time t depends only on $p_{X_{t+1}|X_t,Y_t}$, not on $p_{Y_{t+1}|X_t,Y_t,X_{t+1}}$. Thus, we define a set of partial interventions in which we partially scramble the conditional distribution $p_{X_{t+1}|X_t,Y_t}$, while keeping the conditional distribution $p_{Y_{t+1}|X_t,Y_t,X_{t+1}}$ undisturbed. This ensures that our interventions only perturb the information flow from the environment to the system, and not vice versa.4

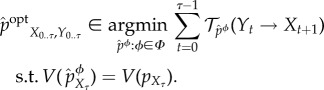

We now define our intervention procedure formally. As mentioned, the conditional distribution $p_{X_{t+1}|X_t,Y_t}$ specifies how information flows from the environment to the system at time t. Each partial intervention is defined by using a coarse-graining function ϕ(y), which is used to produce an intervened ‘coarse-grained’ version of this conditional distribution at all times t. The intervened conditional distribution induced by ϕ at time t, indicated as $\hat{p}^{\phi}_{X_{t+1}|X_t,Y_t}$, is defined to be the same as the conditional distribution of $X_{t+1}$ given $X_t$ and the coarse-grained environment $\phi(Y_t)$,

$$\hat{p}^{\phi}_{X_{t+1}|X_t,Y_t}(x_{t+1} \mid x_t, y_t) := \hat{p}^{\phi}\big(x_{t+1} \mid x_t, \phi(Y_t) = \phi(y_t)\big) \tag{5.14}$$

$$= \frac{\sum_{y'_t : \phi(y'_t) = \phi(y_t)} p_{X_{t+1}|X_t,Y_t}(x_{t+1} \mid x_t, y'_t)\, \hat{p}^{\phi}_{X_t,Y_t}(x_t, y'_t)}{\sum_{y'_t : \phi(y'_t) = \phi(y_t)} \hat{p}^{\phi}_{X_t,Y_t}(x_t, y'_t)}. \tag{5.15}$$

Note that this definition depends on both the actual dynamics, $p_{X_{t+1}|X_t,Y_t}$, and on the intervened system–environment distribution at time t, $\hat{p}^{\phi}_{X_t,Y_t}$. Under the intervened distribution, $X_{t+1}$ is guaranteed to only have conditional information about $\phi(Y_t)$, not $Y_t$ itself; formally, one can verify that $I_{\hat{p}^{\phi}}(X_{t+1}; Y_t \mid X_t, \phi(Y_t)) = 0$. These definitions are largely analogous to the ones defined for stored semantic information, and the reader should consult that section for more motivation of such coarse-graining procedures.

Under the intervened distribution, the joint system–environment dynamics at time t are computed as $\hat{p}^{\phi}_{X_{t+1},Y_{t+1}|X_t,Y_t} = \hat{p}^{\phi}_{X_{t+1}|X_t,Y_t}\, p_{Y_{t+1}|X_t,Y_t,X_{t+1}}$. Then, the overall intervened dynamical trajectory from time t = 0 to t = τ, indicated by $\hat{p}^{\phi}_{X_{0..\tau},Y_{0..\tau}}$, is computed via the following iterative procedure:

(1) At t = 0, the intervened system–environment distribution is equal to the actual one, $\hat{p}^{\phi}_{X_0,Y_0} = p_{X_0,Y_0}$.

(2) Using $\hat{p}^{\phi}_{X_t,Y_t}$ and the above definitions, compute $\hat{p}^{\phi}_{X_{t+1},Y_{t+1}|X_t,Y_t}$.

(3) Using $\hat{p}^{\phi}_{X_{t+1},Y_{t+1}|X_t,Y_t}$, update $\hat{p}^{\phi}_{X_{0..t},Y_{0..t}}$ to $\hat{p}^{\phi}_{X_{0..t+1},Y_{0..t+1}}$.

(4) Set t = t + 1 and repeat the above steps if t < τ.
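The iterative procedure can be sketched in code as follows. This is a minimal illustration rather than the authors' implementation. It assumes finite state spaces, represents distributions as dictionaries, and, as a simplification, tracks only the time-t marginals $\hat{p}^{\phi}(X_t, Y_t)$ instead of the full trajectory distribution (the marginals suffice to evaluate any quantity that depends only on the state at time τ). The three-location toy model, the function names and the ‘hit probability’ diagnostic are all hypothetical.

```python
from collections import defaultdict

def coarse_grained_kernel(joint_xy, system_kernel, phi):
    """Intervened p_hat(x_next | x, y): the system responds to phi(y) only."""
    numer = defaultdict(float)  # (x, block, x_next) -> sum over y' in the block
    denom = defaultdict(float)  # (x, block) -> p_hat(x, phi(Y) = block)
    for (x, y), p_xy in joint_xy.items():
        block = phi(y)
        denom[(x, block)] += p_xy
        for x_next, p in system_kernel(x, y).items():
            numer[(x, block, x_next)] += p * p_xy

    def kernel(x, y):
        block = phi(y)
        return {x_next: value / denom[(x, block)]
                for (x0, b0, x_next), value in numer.items()
                if (x0, b0) == (x, block)}
    return kernel

def intervened_marginals(initial_joint, system_kernel, env_kernel, phi, tau):
    """Return [p_hat(X_t, Y_t) for t = 0..tau] under the coarse-graining phi."""
    joint_xy = dict(initial_joint)      # step 1: start from the actual distribution
    history = [joint_xy]
    for _ in range(tau):
        kernel = coarse_grained_kernel(joint_xy, system_kernel, phi)  # step 2
        next_joint = defaultdict(float)
        for (x, y), p_xy in joint_xy.items():                         # step 3
            if p_xy == 0:
                continue
            for x_next, p_x in kernel(x, y).items():
                for y_next, p_y in env_kernel(x, y, x_next).items():
                    next_joint[(x_next, y_next)] += p_xy * p_x * p_y
        joint_xy = dict(next_joint)                                   # step 4
        history.append(joint_xy)
    return history

# Hypothetical toy model: static food in one of three locations; an idle
# system moves to the location it observes and then stays there.
initial_joint = {("idle", y): 1 / 3 for y in "ABC"}

def system_kernel(x, y):
    return {y: 1.0} if x == "idle" else {x: 1.0}

def env_kernel(x, y, x_next):
    return {y: 1.0}  # the food does not move

def hit_probability(joint_xy):
    return sum(p for (x, y), p in joint_xy.items() if x == y)

interventions = {
    "one-to-one": lambda y: y,
    "partial": lambda y: "A" if y == "A" else "BC",
    "constant": lambda y: 0,
}
hit = {name: hit_probability(
           intervened_marginals(initial_joint, system_kernel, env_kernel, phi, tau=1)[-1])
       for name, phi in interventions.items()}
print(hit)
```

In this toy model, a one-to-one ϕ leaves the dynamics unchanged, a constant ϕ makes the system's move independent of the food location, and the partial ϕ lies in between, because the system can still distinguish location A from the merged block {B, C}.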

We define Φ to be the set of all possible coarse-graining functions. By choosing different coarse-graining functions ϕ ∈ Φ, we can produce different partial interventions. One can verify from equation (5.14) that the intervened distribution $\hat{p}^{\phi}_{X_{0..\tau},Y_{0..\tau}}$ will be equal to the actual $p_{X_{0..\tau},Y_{0..\tau}}$ whenever ϕ is a one-to-one function. When ϕ is a constant function, the intervened distribution will be a ‘fully scrambled’ one, in which $X_{t+1}$ is conditionally independent of $Y_t$ given $X_t$ for all times t,

$$\hat{p}^{\mathrm{full}}_{X_{t+1}|X_t,Y_t}(x_{t+1} \mid x_t, y_t) = \hat{p}^{\mathrm{full}}_{X_{t+1}|X_t}(x_{t+1} \mid x_t). \tag{5.16}$$

In this case, the transfer entropy at every time step will vanish.

We are now ready to define our measures of observed semantic information, which are analogous to the definitions in §5.1, but now defined for transfer entropy rather than initial mutual information. The viability value of transfer entropy is the difference in viability at time τ between the actual distribution and the fully scrambled distribution,

$$\Delta V^{\mathrm{obs}}_{\mathrm{tot}} := V(p_{X_\tau}) - V(\hat{p}^{\mathrm{full}}_{X_\tau}), \tag{5.17}$$

where $\hat{p}^{\mathrm{full}}_{X_\tau}$ is the distribution over X at time τ induced by the fully scrambled intervention. The viability value measures the overall impact of scrambling all transfer entropy on viability. We define the information/viability curve as the maximal achievable viability for any given level of preserved transfer entropy,

$$D_{\mathrm{obs}}(R) := \max_{\phi \in \Phi} V(\hat{p}^{\phi}_{X_\tau}) \quad \text{s.t.} \quad \mathcal{T}_{\hat{p}^{\phi}}(Y \to X) = R.$$

The (viability-) optimal intervention $\hat{p}^{\mathrm{opt}}_{X_{0..\tau},Y_{0..\tau}}$ is defined as the intervened distribution that achieves the same viability value as the actual distribution while having the smallest amount of transfer entropy,

$$\hat{p}^{\mathrm{opt}}_{X_{0..\tau},Y_{0..\tau}} \in \operatorname*{arg\,min}_{\hat{p}^{\phi} \,:\, \phi \in \Phi} \mathcal{T}_{\hat{p}^{\phi}}(Y \to X) \quad \text{s.t.} \quad V(\hat{p}^{\phi}_{X_\tau}) = V(p_{X_\tau}). \tag{5.18}$$

Under the optimal intervention, $\hat{p}^{\mathrm{opt}}_{X_{0..\tau},Y_{0..\tau}}$, all meaningless bits of transfer entropy are scrambled while all remaining transfer entropy is meaningful. We use this to define the amount of observed semantic information as the amount of transfer entropy under the optimal intervention,

$$S_{\mathrm{obs}} := \mathcal{T}_{\hat{p}^{\mathrm{opt}}}(Y \to X). \tag{5.19}$$

Finally, we define the semantic efficiency of observed semantic information as the ratio of the amount of observed semantic information to the overall transfer entropy,

$$\eta_{\mathrm{obs}} := \frac{S_{\mathrm{obs}}}{\mathcal{T}_p(Y \to X)} \in [0, 1].$$

Semantic efficiency quantifies which portion of transfer entropy determines the system's viability at time τ. It is non-negative due to the non-negativity of transfer entropy. It is upper bounded by 1 because the actual distribution over system–environment trajectories, $p_{X_{0..\tau},Y_{0..\tau}}$, is part of the domain of the minimization in equation (5.18) (corresponding to any ϕ which is a one-to-one function); thus the amount of observed semantic information $S_{\mathrm{obs}}$ can never exceed the actual amount of transfer entropy $\mathcal{T}_p(Y \to X)$.

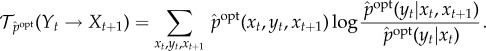

We now use the fact that $\hat{p}^{\mathrm{opt}}_{X_{0..\tau},Y_{0..\tau}}$ contains only meaningful bits of transfer entropy to define both the semantic content and pointwise measures of observed semantic information. Note that transfer entropy at time t can be written as

$$I_p(X_{t+1}; Y_t \mid X_t) = \sum_{x_t, x_{t+1}} p(x_t, x_{t+1})\, D_{\mathrm{KL}}\big(p_{Y_t|x_t,x_{t+1}} \,\big\|\, p_{Y_t|x_t}\big).$$

We define the semantic content of the transition $x_t \mapsto x_{t+1}$ as the conditional distribution $\hat{p}^{\mathrm{opt}}_{Y_t|x_t,x_{t+1}}(y_t)$ for all $y_t \in Y$. This conditional distribution captures only those correlations between $(x_t, x_{t+1})$ and $Y_t$ that contribute to the system's viability. Similarly, we define pointwise observed semantic information using ‘pointwise’ measures of transfer entropy [138,139] under $\hat{p}^{\mathrm{opt}}$. In particular, the pointwise observed semantic information for the transition $x_t \mapsto x_{t+1}$ can be defined as

$$D_{\mathrm{KL}}\big(\hat{p}^{\mathrm{opt}}_{Y_t|x_t,x_{t+1}} \,\big\|\, \hat{p}^{\mathrm{opt}}_{Y_t|x_t}\big).$$
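The per-transition view of transfer entropy can be illustrated numerically. The sketch below computes, for each transition $x_t \mapsto x_{t+1}$, the Kullback–Leibler divergence between $p(Y_t \mid x_t, x_{t+1})$ and $p(Y_t \mid x_t)$, and checks that the probability-weighted average of these terms recovers the conditional mutual information. It is written for an arbitrary one-step joint distribution; to obtain pointwise observed semantic information in the sense used here, one would pass in the joint distribution under the optimal intervention, which this sketch does not compute. The noisy-sensor example and all names are assumptions made for illustration.

```python
from collections import defaultdict
from math import log2

def pointwise_transfer_terms(joint):
    """Per-transition KL terms, in bits, and their weights.

    `joint` is a dict {(x, y, x_next): probability} for one time step.
    Returns (terms, weights), both keyed by the transition (x, x_next), where
    terms[k] = KL(p(Y | x, x_next) || p(Y | x)) and weights[k] = p(x, x_next).
    """
    p_x = defaultdict(float)
    p_xy = defaultdict(float)
    p_xn = defaultdict(float)
    for (x, y, x_next), p in joint.items():
        p_x[x] += p
        p_xy[(x, y)] += p
        p_xn[(x, x_next)] += p
    terms = defaultdict(float)
    for (x, y, x_next), p in joint.items():
        if p > 0:
            posterior = p / p_xn[(x, x_next)]   # p(y | x, x_next)
            prior = p_xy[(x, y)] / p_x[x]       # p(y | x)
            terms[(x, x_next)] += posterior * log2(posterior / prior)
    return dict(terms), dict(p_xn)

# Hypothetical noisy sensor: the food is at A or B with equal probability and
# the system records the wrong location with probability eps.
eps = 0.1
other = {"A": "B", "B": "A"}
joint = {}
for y in "AB":
    joint[("none", y, y)] = 0.5 * (1 - eps)
    joint[("none", y, other[y])] = 0.5 * eps

terms, weights = pointwise_transfer_terms(joint)
average = sum(weights[k] * terms[k] for k in terms)
# Closed form for a binary symmetric channel with a uniform input.
closed_form = 1 + eps * log2(eps) + (1 - eps) * log2(1 - eps)
print(terms)
print(average, closed_form)
```

Because the sensor is symmetric, both transitions carry the same pointwise value here; with an asymmetric sensor or a non-uniform prior the terms would differ, which is exactly the information a pointwise measure is meant to expose.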

It is of interest to define the thermodynamic multiplier for observed semantic information, so as to compare the viability value of transfer entropy to the cost of acquiring that transfer entropy. However, there are different ways of quantifying the thermodynamic cost of acquiring transfer entropy, which depend on the particular way that the measurement process is operationalized [102–106]. Because this thermodynamic analysis is more involved than the one for stored semantic information, we leave it for future work.

5.3. Other kinds of semantic information

We have discussed semantic information defined relative to two measures of syntactic information: mutual information at t = 0, and transfer entropy incurred over the course of t ∈ [0..τ]. In future work, a similar approach can be used to define the semantic information relative to other measures of syntactic information. For example, one could consider the semantic information in the transfer entropy from the system to the environment, which would reflect how much ‘observations by the environment’ affect the viability of the system (an example of a system with this kind of semantic information is a human coupled to a so-called ‘artificial pancreas’ [140], a medical device which measures a person's blood glucose and automatically delivers necessary levels of insulin). Alternatively, one might evaluate how mutual information (or transfer entropy, etc.) between internal subsystems of system $\mathcal{X}$ affects the viability of the system. This would uncover ‘internal’ semantic information which would be involved in internal self-maintenance processes, such as homeostasis.

6. Automatic identification of initial distributions, timescales and decompositions of interest

Our measures of semantic information depend on: (1) the decomposition of the world into the system $\mathcal{X}$ and the environment $\mathcal{Y}$; (2) the timescale τ; and (3) the initial distribution over joint states of the system and environment. These factors generally represent ‘subjective’ choices of the scientist, indicating for which systems, temporal scales, and initial conditions the scientist wishes to quantify semantic information.

However, it is also possible to select these factors in a more ‘objective’ manner, in particular by choosing decompositions, timescales, and initial distributions for which semantic information measures—such as the value of information or the amount of semantic information—are maximized.