Abstract

The present study sought to determine whether scalp electroencephalogram (EEG) signals contain decodable information about the direction of motion in random dot kinematograms (RDKs), in which the motion information is spatially distributed and mixed with random noise. Any direction of motion from 0–360° was possible, and observers reported the precise direction of motion at the end of a 1500-ms stimulus display. We decoded the direction of motion separately during the motion period (during which motion information was being accumulated) and the report period (during which a shift of attention was necessary to make a fine-tuned direction report). Machine learning was used to decode the precise direction of motion (within ±11.25°) from the scalp distribution of either alpha-band EEG activity or sustained event-related potentials (ERPs). We found that ERP-based decoding was above chance (1/16) during both the stimulus and the report periods, whereas alpha-based decoding was above chance only during the report period. Thus, sustained ERPs contain information about the direction of spatially distributed motion, providing a new method for observing the accumulation of sensory information with high temporal resolution. By contrast, the scalp topography of alpha-band EEG activity appeared to mainly reflect spatially focused attentional processes rather than sensory information.

Keywords: Decoding, motion perception, EEG, ERPs, alpha-band oscillations

1. Introduction

The nature of the brain’s representation of visual stimuli is one of the most fundamental issues for understanding human cognitive processing. Substantial effort has been devoted to understanding the neural coding of visual stimuli using invasive methods in animals and functional magnetic resonance imaging (fMRI) in humans, and the recent development of pattern classification methods has begun to make it possible to decode visual representations with high temporal resolution in humans using scalp EEG recordings. Several recent studies have shown that representations of visual stimuli can be decoded, during both perception and working memory, using the spatial pattern of alpha-band EEG oscillations (Bae & Luck, 2018a; Foster et al., 2016, 2017a, 2017b; van Ede et al., 2018; van Moorselaar et al., 2018) and the spatial pattern of transient and sustained event-related potentials (ERPs) (Bae & Luck, 2018a; Fahrenfort et al., 2017; LaRocque et al., 2013; Nemrodov et al., 2016, 2018; Rose et al., 2016; Wolff et al., 2017). Some of these studies decoded stimulus categories (e.g., faces versus words), whereas others decoded feature values along continuous dimensions (e.g., orientation). Thus, scalp EEG signals contain information about both high-level categories and low-level continuous features that can be decoded with high temporal resolution using multivariate analysis techniques.

In many studies of continuous dimensions, however, it is not clear whether the decoding was based on a representation of the stimulus per se or the spatial distribution of attention. This stems from the fact that most studies that attempted to decode continuous feature values have used tasks in which stimulus identity and stimulus location were correlated. Both ERPs and alpha-band EEG oscillations are known to vary according to the location being attended (Rihs et al., 2007; Sauseng et al., 2005; Luck, 2012), and these signals could easily underlie the decoding of stimulus location in spatial working memory tasks (Foster et al., 2016). Similarly, decoding of orientation could potentially reflect the focusing of spatial attention onto a key part of the object (e.g., the tip of the longest line within a Gabor grating) (van Ede et al., 2018; Wolff et al., 2017).

Bae and Luck (2018a) were able to distinguish between spatial attention and true orientation representations by independently manipulating the orientation and location of an object in a working memory task. They found that location information was present in oscillatory alpha-band activity, but location-independent orientation information was not, consistent with prior evidence that alpha-band activity is closely tied to spatial attention (Rihs et al., 2007; Sauseng et al., 2005). By contrast, both the location and the orientation of the object could be independently decoded from the scalp distribution of sustained ERPs during the delay period of the working memory task, indicating that ERPs contain information about both stimulus identity and the spatial locus of attention.

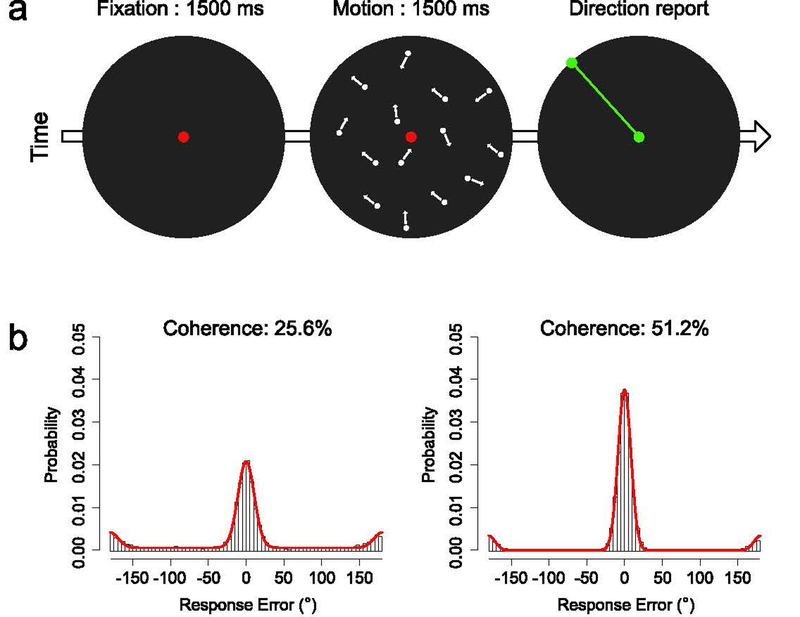

The present study asked whether alpha-band EEG activity and sustained ERPs¹ could be used to decode the direction of motion in a widely used motion display. As illustrated in Figure 1a, participants attempted to perceive the precise direction of a coherent motion signal embedded within noise in a random dot kinematogram (RDK) (Roitman & Shadlen, 2002). Motion at one of two coherence levels (25.6% or 51.2%) was presented in any direction between 0° and 360° within a circular aperture, and observers estimated the exact motion direction at the end of each 1500-ms motion period by adjusting a ray to match the perceived direction. We used the scalp distribution of the EEG signals to decode the stimulus direction at each moment in time during the motion period and during the following report period, taking advantage of the high temporal resolution of these signals.

Figure 1.

(a) Overview of procedure. On each trial, the observer fixated the central red dot for 1500 ms and then saw a random dot kinematogram (RDK) for 1500 ms. Motion coherence was either 25.6% or 51.2%, and the coherent motion could be in any direction within the full 360° range. At the end of the RDK, the central dot turned green, indicating that the observer should report the motion direction by adjusting a green line to match the perceived motion direction. (b) Probability distribution of response errors for each coherence level, collapsed across observers. The red curve in each panel represents the maximum likelihood estimate of the simple model of task performance described in the main text.

RDKs have been widely used to study both low-level motion perception and the mechanisms by which perceptual systems make decisions about gradually accumulating sensory information. A noninvasive method that can track this accumulation in the human brain with high temporal resolution would therefore be very useful. Previous research has shown that a P3-like ERP response tracks the decision process (Kelly & O’Connell, 2013), and a central goal of the present research was to determine whether fine-grained information about the direction of motion is also present in the scalp distribution of EEG signals.

The task used in the present study has two important features. First, because the motion information was distributed across the entire RDK aperture, participants were motivated to attend broadly, and they had no reason to attend to different locations for different directions of motion during the motion period. Indeed, restricting attention to one part of the aperture would decrease the availability of relevant motion information. Second, the nature of the response (see Figure 1a) encouraged participants to shift their covert attention to a point on the circumference of the aperture during the report period. Consequently, once participants began to prepare the response, spatial attention was correlated with the direction of motion. Given that the scalp topography of ERPs reflects both spatial attention and location-independent stimulus properties (Bae & Luck, 2018a), we predicted that we could decode the direction of motion from the sustained ERPs during both the stimulus and report periods. In contrast, given that the scalp topography of alpha-band EEG activity appears mainly to reflect the direction of spatial attention rather than the identity of the stimulus, we predicted that we could decode the direction of motion from the alpha-band signal mainly during the report period. Such findings would provide converging evidence about the types of information that are present in the scalp topography of EEG and ERP signals and would also make it possible for future research to use these signals to track the processes involved in accumulating sensory evidence in humans with high temporal resolution.

2. Materials and Methods

2.1. Participants

Sixteen college students between the ages of 18 and 30 with normal or corrected-to-normal visual acuity participated for monetary compensation (10 female, 6 male). The sample size was determined a priori on the basis of similar decoding studies (Bae & Luck, 2018a; Foster et al., 2016). The study was approved by the UC Davis Institutional Review Board.

2.2. Stimuli & Task

Stimuli were generated in Matlab (The Mathworks, Inc.) using PsychToolbox (Brainard, 1997; Pelli, 1997) and were presented on an LCD monitor (Dell U2412M) at a refresh rate of 60 Hz, with a white background (87.6 cd/m²) and a continuously visible black circular aperture (diameter 5°, <0.1 cd/m²), at a viewing distance of 100 cm. The motion stimulus was generated using a standard random dot kinematogram (RDK) algorithm (Roitman & Shadlen, 2002; Gold & Shadlen, 2003). Briefly, a set of white dots was presented at random locations inside the circular aperture. Each dot remained stationary for one video frame (16.67 ms) and was then replotted two frames later (different dots appeared in different frames so that 1/3 of the dots were visible in any given frame). When replotted, a given dot had a 25.6% or 51.2% chance (depending on the coherence level) of moving in the coherent direction of motion (by being offset from its current location according to the direction and the speed of motion). Otherwise, the dot was replotted at a random location within the aperture. Thus, even at the higher coherence level, a given dot rarely moved in the direction of coherent motion for more than a few cycles, making it difficult to perceive the direction by tracking an individual dot. When the offset position of a dot was outside the aperture, that dot was replotted at a random location on the circumference of the aperture to maintain the dot density. The dot density was set to 16.7 dots per deg²/s, and the speed of motion was set to 6°/s.
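The dot-update rule described above can be sketched in Python as follows (the original stimuli were generated in Matlab with PsychToolbox). This is a minimal illustration, not the authors' stimulus code: the helper names `random_points_in_disk` and `replot_dots` are hypothetical, the direction convention (0° = rightward, counterclockwise positive) is assumed, and the per-replot displacement `step_deg` is a placeholder that in practice would follow from the 6°/s speed and the interval between replots.

```python
import numpy as np


def random_points_in_disk(n, radius, rng):
    """Uniformly distributed points inside a disk centred on the origin."""
    r = radius * np.sqrt(rng.random(n))  # sqrt gives uniform density per unit area
    theta = rng.uniform(0.0, 2.0 * np.pi, n)
    return np.column_stack((r * np.cos(theta), r * np.sin(theta)))


def replot_dots(xy, coherence, direction_deg, step_deg, radius, rng):
    """One replot of an RDK dot set (hypothetical helper; units are deg of visual angle).

    Each dot is offset by step_deg in the coherent direction with probability
    `coherence`; otherwise it is redrawn at a random location in the aperture.
    Coherent dots that would leave the aperture are redrawn at a random point
    on the circumference, keeping dot density constant.
    """
    n = len(xy)
    new_xy = random_points_in_disk(n, radius, rng)  # default: random replot
    coherent = rng.random(n) < coherence
    theta = np.deg2rad(direction_deg)
    shifted = xy[coherent] + step_deg * np.array([np.cos(theta), np.sin(theta)])
    outside = np.hypot(shifted[:, 0], shifted[:, 1]) > radius
    phi = rng.uniform(0.0, 2.0 * np.pi, outside.sum())
    shifted[outside] = radius * np.column_stack((np.cos(phi), np.sin(phi)))
    new_xy[coherent] = shifted
    return new_xy, coherent


# Usage: 200 dots in a 5-deg aperture, 51.2% coherence, 45-deg motion,
# with a hypothetical 0.3-deg step per replot.
rng = np.random.default_rng(0)
dots = random_points_in_disk(200, 2.5, rng)
dots, moved = replot_dots(dots, coherence=0.512, direction_deg=45.0,
                          step_deg=0.3, radius=2.5, rng=rng)
```

Because non-coherent dots are redrawn anywhere in the aperture, a dot's chance of staying on the coherent path for k successive replots falls off as coherence to the power k, which is why single-dot tracking is ineffective.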

The task is depicted in Figure 1a. Each trial began with a 1500-ms presentation of a red fixation dot (RGB = [200, 0, 0]) at the center of the circular aperture, and this was followed by a 1500-ms presentation of the RDK. Participants were instructed to attend carefully to the direction of motion during the entire motion period. At the end of the motion display, the dots disappeared, and the fixation dot turned green (RGB = [0, 200, 0]) to indicate that a response should be made. Once the participant started moving the mouse to respond, a green probe dot appeared at a point on the circumference of the aperture that was in line with the position of the mouse cursor. A green line connecting the central dot and the probe dot was presented to indicate the direction. Participants were instructed to adjust the dot and line so that they matched the perceived direction of motion. The orientation of the line was continuously updated while the mouse moved so that participants could report the direction of motion in a continuous manner. Once participants were satisfied with the direction, they finalized the report by clicking a mouse button. The display then blanked completely, and the next trial started after a 1000-ms delay.

The coherence level (25.6% or 51.2%) varied unpredictably from trial to trial. The net direction of motion on a given trial was selected unpredictably from sixteen bins of discrete motion directions (0°, 22.5°, 45°, 67.5°, 90°, 112.5°, 135°, 157.5°, 180°, 202.5°, 225°, 247.5°, 270°, 292.5°, 315°, and 337.5°). On each trial, the actual direction of motion was selected at random from within the ±11.25° range of the selected bin. Thus, we were guaranteed to have exactly the same number of trials (40) in each bin at each coherence level, but any direction across the entire 360° space was possible.

Each session began with at least 16 practice trials, during which feedback indicating the true motion direction was provided after each report. Each participant completed a total of 1280 trials (40 trials for each combination of the 16 direction bins and the two coherence levels, in random order). These trials were divided into 20 blocks of 64 trials.
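The balanced design described above (16 bins × 2 coherence levels × 40 trials, with each trial's exact direction jittered uniformly within ±11.25° of its bin center) can be sketched as follows. The function name and the use of a uniform jitter distribution are assumptions made for illustration; the text states only that the direction was "selected at random" within the bin.

```python
import numpy as np

BIN_CENTERS = np.arange(16) * 22.5  # 0, 22.5, ..., 337.5 deg
COHERENCES = (0.256, 0.512)


def make_trial_list(trials_per_cell=40, rng=None):
    """Hypothetical helper: a shuffled, fully balanced list of trials.

    Returns (bin index, coherence, exact direction in deg) for every trial.
    """
    rng = np.random.default_rng() if rng is None else rng
    bin_idx, coh_idx = np.meshgrid(np.arange(16), np.arange(len(COHERENCES)),
                                   indexing="ij")
    bin_idx = np.repeat(bin_idx.ravel(), trials_per_cell)
    coh_idx = np.repeat(coh_idx.ravel(), trials_per_cell)
    order = rng.permutation(bin_idx.size)  # random trial order
    bin_idx, coh_idx = bin_idx[order], coh_idx[order]
    jitter = rng.uniform(-11.25, 11.25, bin_idx.size)  # assumed uniform within the bin
    direction = (BIN_CENTERS[bin_idx] + jitter) % 360.0
    coherence = np.asarray(COHERENCES)[coh_idx]
    return bin_idx, coherence, direction


bins, coherence, direction = make_trial_list(rng=np.random.default_rng(1))
blocks = np.arange(bins.size).reshape(20, 64)  # trial indices for 20 blocks of 64
```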

2.3. Eye-movement calibration

At the beginning of the session, we conducted an eye-movement calibration procedure to measure the scalp voltages associated with eye movements directed toward the location corresponding to each of the 16 motion directions on the perimeter of the RDK aperture. Each trial started with a central presentation of a white bulls-eye object (1° diameter) for 500 ms. The bulls-eye then moved to one of the 16 locations on the perimeter of the aperture, and participants were instructed to move their eyes to that location. The bulls-eye remained visible at that location until the participant pressed the spacebar to terminate the trial. Each of the 16 locations was tested three times in random order (48 total trials). The recordings from this session were included in the artifact correction procedure (see Section 2.4) to increase our ability to isolate and remove signals that were related to systematic eye movements toward the 16 locations.

2.4. EEG Recording & Preprocessing

The recording and analysis procedures were nearly identical to those of Bae and Luck (2018a), and here we provide a brief summary. The EEG was recorded (500 Hz sampling rate, 130 Hz half-power antialiasing filter) from a broad set of electrodes (FP1, FP2, F3, F4, F7, F8, C3, C4, P3, P4, P5, P6, P7, P8, P9, P10, PO3, PO4, PO7, PO8, O1, O2, Fz, Cz, Pz, POz, Oz, Lm, Rm, VEOG, HEOG-L, HEOG-R), similar to that used in prior decoding studies (Bae & Luck, 2018a; Foster et al., 2016). Signal processing and analysis were performed in Matlab using the EEGLAB Toolbox (Delorme & Makeig, 2004) and the ERPLAB Toolbox (Lopez-Calderon & Luck, 2014). The scalp sites were re-referenced offline to the average of the left and right mastoids. Bipolar horizontal and vertical electrooculogram (HEOG and VEOG) signals were computed. The data were band-pass filtered (non-causal Butterworth impulse response function, half-amplitude cutoffs at 0.1 and 80 Hz, 12 dB/oct roll-off) and resampled at 250 Hz. EEG segments with large artifacts (e.g., movement artifacts during breaks) were removed. Independent component analysis (ICA) was then performed to identify and remove components associated with blinks (Jung et al., 2000) and eye movements (Drisdelle et al., 2017).
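The two derivation steps mentioned above (average-mastoid re-referencing and a bipolar EOG channel) reduce to simple channel arithmetic, sketched below with hypothetical helper names. The sketch assumes every channel, including both mastoids, was recorded against one common online reference, and the left-minus-right sign convention for HEOG is an assumption; the actual work was done with EEGLAB/ERPLAB.

```python
import numpy as np


def rereference_average_mastoids(scalp, left_mastoid, right_mastoid):
    """Re-reference scalp channels to the mean of the two mastoids.

    scalp: (n_channels, n_samples); mastoids: (n_samples,).
    Assumes all channels share the same online reference.
    """
    return scalp - 0.5 * (left_mastoid + right_mastoid)


def bipolar_heog(heog_left, heog_right):
    """Bipolar horizontal EOG (left minus right; sign convention assumed)."""
    return heog_left - heog_right
```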

The ICA-corrected EEG data were segmented from −500 to +3000 ms. This included 500 ms of prestimulus baseline (which was used to perform subtractive baseline correction), the 1500-ms duration of the motion stimulus, and the first 1500 ms of the direction report. The report period varied in duration depending on the participant’s response time, and we used the first 1500 ms of the report period to match the duration of the stimulus period. Later time points in this window could be contaminated by post-report processes, so we focused on overall decodability during the first 1500 ms of the report period rather than on point-by-point decodability.
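Segmentation with subtractive baseline correction can be sketched as below, assuming continuous data at 250 Hz, known RDK-onset sample indices, and an epoch that includes both endpoints (876 samples from −500 to +3000 ms). The helper name and the choice to average only the strictly prestimulus samples are assumptions.

```python
import numpy as np

FS = 250.0                           # Hz, sampling rate after resampling
EPOCH_START, EPOCH_END = -0.5, 3.0   # s relative to RDK onset


def epoch_and_baseline(data, onset_samples, fs=FS, start=EPOCH_START, end=EPOCH_END):
    """Hypothetical helper: cut epochs and subtract the prestimulus mean.

    data: (n_channels, n_samples) continuous EEG.
    Returns an array of shape (n_trials, n_channels, n_times).
    """
    first = int(round(start * fs))  # -125 samples
    last = int(round(end * fs))     # +750 samples (included)
    offsets = np.arange(first, last + 1)
    epochs = np.stack([data[:, onset + offsets] for onset in onset_samples])
    baseline = epochs[:, :, offsets < 0].mean(axis=2, keepdims=True)
    return epochs - baseline
```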

To verify that eye movements did not impact the decoding results, we also conducted a set of decoding analyses in which trials with eye movements were excluded and ICA-based correction was not applied (see Section 3.4). In addition, because a moving stimulus can elicit systematic eye movements (e.g., optokinetic nystagmus) that might impact the scalp EEG, we also conducted decoding analyses using only the EOG channels (without ICA-based correction) to test whether the pattern of eye movements was systematic with respect to stimulus direction (see Figure S7).

2.5. Decoding analyses

All analyses were performed in Matlab (The Mathworks, Natick, MA). The data and analysis scripts are available online at osf.io/2h6w9.

2.5.1. Basic decoding procedure

Using the same approach as in our previous study (Bae & Luck, 2018a), we attempted to decode the direction of motion on the basis of the scalp distribution of two different signals, the phase-independent alpha-band EEG power and the phase-locked ERP voltage. To ensure that these two analyses were independent, the ERP decoding procedure was limited to frequencies below 6 Hz, and the alpha-band decoding procedure was limited to frequencies between 8 and 12 Hz. In an exploratory analysis, we confirmed that no clear decoding could be obtained with the data from any other frequency band (4 Hz bands between 4 and 40 Hz; see Supplementary Figure S1). We used a decoding approach rather than an inverted encoding approach (Brouwer & Heeger, 2013; Fahrenfort et al., 2017; Foster et al., 2016; Serences et al., 2009) because the goal was to determine whether the EEG signals contain information about the motion direction without making assumptions about the nature of the underlying neural representation.

We used the same decoding procedure for the alpha-band and ERP analyses, except for the initial steps used to isolate the signal of interest (see Bae & Luck, 2018a, for a more detailed description). For the alpha-band decoding, the segmented EEG was bandpass filtered at 8–12 Hz, and these EEG segments were then submitted to a Hilbert transform to compute the magnitude of the complex analytic signal (squared to compute total 8–12 Hz power at each time point). For the ERP decoding, the segmented EEG was low-pass filtered at 6 Hz. Filtering was performed with the EEGLAB eegfilt() routine to produce maximally steep rolloffs. In both cases, the filtered data were then resampled at 50 Hz (20 ms sample period) to increase analysis speed. For each of the two signals, this gave us a 4-dimensional data matrix for each participant, with dimensions of time (176 time points), motion direction (16 different direction bins), trial (40 individual trials for each direction), and electrode site (the 27 scalp sites).
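The two signal-isolation steps can be illustrated with the NumPy sketch below. It substitutes an FFT brick-wall filter for EEGLAB's eegfilt() and plain decimation for Matlab resampling, so it shows the logic rather than the exact filters used; all function names are hypothetical. Assuming 876-sample epochs at 250 Hz (−500 to +3000 ms inclusive), keeping every fifth sample yields the 176 time points at 50 Hz described above.

```python
import numpy as np


def fft_bandpass(x, fs, low, high):
    """Zero-phase brick-wall band-pass along the last axis (stand-in for eegfilt)."""
    n = x.shape[-1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spec = np.fft.rfft(x, axis=-1)
    spec[..., (freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spec, n=n, axis=-1)


def analytic_signal(x):
    """Analytic signal via the FFT (the standard Hilbert-transform construction)."""
    n = x.shape[-1]
    spec = np.fft.fft(x, axis=-1)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spec * h, axis=-1)


def alpha_power(x, fs):
    """Total 8-12 Hz power: squared magnitude of the analytic signal."""
    return np.abs(analytic_signal(fft_bandpass(x, fs, 8.0, 12.0))) ** 2


def erp_lowpass(x, fs):
    """Phase-locked ERP signal: keep frequencies at or below 6 Hz."""
    return fft_bandpass(x, fs, 0.0, 6.0)


def downsample(x, fs, target_fs=50.0):
    """Plain decimation (assumed safe here because both signals are already band-limited)."""
    step = int(round(fs / target_fs))  # 250 Hz -> every 5th sample
    return x[..., ::step]
```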

Motion direction was decoded on the basis of the spatial distribution of the signal over the 27 scalp electrodes. We decoded the direction separately for each of the 176 time points from −500 ms to +3000 ms (relative to stimulus onset), which made it possible to use the high temporal resolution of the EEG to track the time course of processing. The decoding procedure classified the motion direction using support vector machines (SVMs) with error-correcting output codes (ECOC; Dietterich & Bakiri, 1995), implemented through the Matlab fitcecoc() function.

As in other EEG/ERP decoding studies (Bae & Luck, 2018a; Foster et al., 2016; 2017a; 2017b), the decoding was not performed on single-trial data (which tends to be ineffective given the low signal-to-noise ratio of single-trial EEG data). Instead, sets of single-trial epochs with the same direction of motion were averaged together (after the above-described preprocessing) for the decoding. In the training phase, 16 different SVMs were trained, one for each of the 16 directions of motion. A one-versus-all coding design was used, in which each SVM was trained to distinguish between one specific direction and all the other directions. In the test phase, new data from each of the 16 directions were fed into all 16 SVMs, and the direction that minimized the average binary loss across the set of 16 SVMs was selected (see below). This procedure was used to classify the test data for each of the 16 directions.
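fitcecoc() performs the one-versus-all decoding step internally; the sketch below only makes that rule explicit. It assumes the 16 SVM scores for each test observation are already available (training is omitted) and assumes the hinge binary loss, max(0, 1 − y·s)/2; the text does not say which binary loss was used. Function names are hypothetical.

```python
import numpy as np


def one_vs_all_coding(k=16):
    """Coding matrix M (classes x learners): +1 on the diagonal, -1 elsewhere."""
    return 2.0 * np.eye(k) - 1.0


def hinge_loss(y, s):
    """Binary hinge loss for code y in {-1, +1} and learner score s."""
    return np.maximum(0.0, 1.0 - y * s) / 2.0


def ecoc_predict(scores, coding=None):
    """scores: (n_obs, n_learners). Returns, per observation, the class whose
    codeword has the smallest average binary loss across learners."""
    coding = one_vs_all_coding(scores.shape[1]) if coding is None else coding
    # loss[i, k] = mean over learners j of g(M[k, j], s[i, j])
    loss = hinge_loss(coding[None, :, :], scores[:, None, :]).mean(axis=2)
    return loss.argmin(axis=1)
```

For example, if learner 5 returns a positive score and all other learners return negative scores, direction 5 is the only codeword with zero loss and is therefore selected.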

We used a 3-fold cross-validation procedure in which the data from 2/3 of the trials (selected at random) were averaged together to provide the training data, and the performance of the classifier was then assessed with the averaged data from the remaining 1/3 of trials. This procedure was iterated multiple times with different randomly chosen trials in the training and test data, which provides a more robust estimate of decoding accuracy. For each iteration, the data from a given motion direction were divided into three equal-sized groups of trials (three groups of 13 trials for each of the 16 directions; because the 40 trials for a given direction could not be evenly divided into 3 sets, one randomly selected trial was omitted from each direction). The trials for a given direction in each group were averaged together, producing a scalp distribution for the time point being analyzed (a matrix of 3 groups × 16 directions × 27 electrodes). The averaged data from two of the three groups served as the training dataset, and the averaged data from the remaining group served as the testing dataset. The two training datasets were simultaneously submitted to the ECOC model with known direction labels to train the 16 SVMs. Each SVM corresponded to one of the 16 directions of motion and was trained to classify a given scalp distribution as either belonging to that direction or belonging to any of the other 15 directions at the current time point (a binary classification). Note that a separate set of 16 SVMs was trained and subsequently tested for each time point.
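The grouping-and-averaging step for one time point can be sketched as follows, assuming the single-trial data for that time point are stored as a (directions × trials × electrodes) array. The helper names are hypothetical; the classifier itself is not shown.

```python
import numpy as np


def make_cv_averages(trials, n_groups=3, rng=None):
    """trials: (n_dirs, n_trials, n_electrodes) data for one time point.

    Returns (n_groups, n_dirs, n_electrodes) group-averaged scalp distributions.
    With 40 trials per direction, each group gets 13 trials and the one
    leftover trial (chosen at random) is dropped.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_dirs, n_trials, n_elec = trials.shape
    per_group = n_trials // n_groups  # 40 // 3 = 13
    out = np.empty((n_groups, n_dirs, n_elec))
    for d in range(n_dirs):
        order = rng.permutation(n_trials)[: per_group * n_groups]
        groups = order.reshape(n_groups, per_group)
        for g in range(n_groups):
            out[g, d] = trials[d, groups[g]].mean(axis=0)
    return out


def cv_splits(n_groups=3):
    """Yield (training groups, test group) for each of the 3 folds."""
    for test in range(n_groups):
        yield [g for g in range(n_groups) if g != test], test
```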

After the training phase, the set of 16 SVMs for a given time point was used to predict the direction of motion for each set of (unlabeled) data in the test set at that time point. Specifically, the Matlab predict() function was used to predict the direction of motion for each observation in the test set by minimizing the average binary loss over the 16 SVMs. Decoding accuracy was then computed by comparing the true direction with the predicted direction. A prediction was counted as correct only if the predicted direction exactly matched the true direction, so chance performance was 0.0625 (1/16). We also used confusion matrices to examine the distribution of errors.

This procedure was repeated three times, once with each of the three groups of data serving as the testing dataset, and the entire procedure was then iterated 10 times with new random assignments of trials to the three groups. Decoding accuracy was collapsed across the 16 directions, producing a decoding percentage for a given time point that was based on 480 decoding attempts (16 directions × 3 cross-validations × 10 iterations). After this procedure was applied to each time point, the averaged decoding accuracy values were smoothed across time points to minimize noise using a 5-point moving window (equivalent to a time window of ±40 ms). As described previously (Bae & Luck, 2018a), the temporal precision resulting from the entire EEG processing and decoding pipeline was approximately ±50 ms. In other words, if decoding is significantly above chance at a given time point, we can conclude that the scalp distribution of the EEG contained information about the direction of motion at some moment within ±50 ms of this point. The entire procedure was applied separately to the 25.6% and 51.2% coherence levels.
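The accuracy computation and temporal smoothing described above are sketched below. At the 20-ms sample period, a 5-point window spans ±2 samples, i.e., ±40 ms. How the original smoothing treated the first and last two time points is not stated; this sketch assumes the window simply shrinks at the edges. Function names are hypothetical.

```python
import numpy as np


def accuracy(true_labels, predicted_labels):
    """Proportion of exact matches (chance = 1/16 with 16 balanced classes)."""
    return np.mean(np.asarray(true_labels) == np.asarray(predicted_labels))


def moving_average(x, width=5):
    """Centered moving average; the window shrinks at the edges (assumption)."""
    half = width // 2
    x = np.asarray(x, dtype=float)
    out = np.empty_like(x)
    for i in range(x.size):
        lo, hi = max(0, i - half), min(x.size, i + half + 1)
        out[i] = x[lo:hi].mean()
    return out
```

In this scheme, the accuracy at one time point would be computed over the 480 (true, predicted) pairs pooled across directions, folds, and iterations, and `moving_average` would then be applied to the resulting 176-point time course.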

2.5.2. Decoding analysis for the reported direction

The reported direction of motion could be quite different from the actual direction, especially on the low-coherence trials. To investigate whether the EEG signals contained information about the reported direction of motion, we conducted an additional analysis in which we attempted to decode the reported direction rather than the stimulus direction. In this analysis, we discretized the reported direction of motion into 16 bins, each 22.5° wide (±11.25° around its center), centered at 0°, 22.5°, 45°, 67.5°, 90°, 112.5°, 135°, 157.5°, 180°, 202.5°, 225°, 247.5°, 270°, 292.5°, 315°, and 337.5°. We then combined the trials using these labels instead of the true stimulus labels and performed the same SVM-ECOC decoding procedure that was used to decode the stimulus direction. However, whereas we had exactly 40 trials for each stimulus direction at each coherence level, the number of trials was not guaranteed to be the same for each of the 16 reported directions. To avoid overrepresenting commonly reported directions of motion and to prevent biases in the classification, we subsampled the available trials as necessary so that the number of trials was equal across bins for a given participant. On average, we used three groups of 5.4 trials (SEM = 0.385) for the decoding of 25.6% coherence trials and three groups of 7.12 trials (SEM = 0.384) for the decoding of 51.2% coherence trials. This was less than half the number of trials in our main decoding analysis, which likely reduced the reliability of decoding. Thus, we focused on the overall decodability during the motion period or report period rather than the decodability at each time point.
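Binning the reports and equalizing the bin counts might look like the sketch below. Which bin a report falling exactly on a boundary (e.g., 11.25°) belongs to is not specified in the text; this sketch assigns it to the upper bin. Helper names are hypothetical.

```python
import numpy as np


def report_bin(direction_deg):
    """Map reported directions (deg) to 16 bins of 22.5 deg centred on 0, 22.5, ..., 337.5."""
    d = np.asarray(direction_deg, dtype=float)
    return np.floor((d + 11.25) / 22.5).astype(int) % 16


def equalize_bins(bin_labels, rng=None, n_bins=16):
    """Randomly subsample trial indices so that every bin keeps the count of the smallest bin."""
    rng = np.random.default_rng() if rng is None else rng
    bin_labels = np.asarray(bin_labels)
    n_keep = np.bincount(bin_labels, minlength=n_bins).min()
    keep = [rng.choice(np.flatnonzero(bin_labels == b), n_keep, replace=False)
            for b in range(n_bins)]
    return np.concatenate(keep), n_keep
```

With n_keep trials per bin, each of the three cross-validation groups would then contain n_keep // 3 trials, which is how group sizes like those reported above can fall well below 13.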

2.5.3. Statistical analysis of decoding accuracy

We conducted statistical analyses of the decoding accuracy on each individual time point and also aggregated over time points. To aggregate across time points, we simply averaged the decoding accuracy across all time points during the motion period (the 1500-ms RDK presentation) or all points during the report period (the first 1500 ms after the offset of the RDK). The aggregated decoding accuracy was computed separately for each participant, and we compared the group accuracy to chance (1/16) using one-sample t tests. Below-chance decoding performance is meaningless in this context, so we used one-tailed tests.
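The aggregated test can be sketched as follows: average each participant's accuracy time course within a window, then compute a one-sample t statistic against 1/16. The window bounds (including the start point and excluding the end point) are an assumption. If SciPy is available, `scipy.stats.ttest_1samp(values, 1/16, alternative="greater")` returns the same statistic together with the one-tailed p value.

```python
import numpy as np

CHANCE = 1.0 / 16
TIMES_MS = np.arange(-500, 3001, 20)  # 176 time points at 50 Hz


def window_mean(acc, times_ms, start_ms, end_ms):
    """acc: (n_subjects, n_times). Mean accuracy per subject within [start_ms, end_ms)."""
    mask = (times_ms >= start_ms) & (times_ms < end_ms)
    return acc[:, mask].mean(axis=1)


def one_sample_t(values, mu=CHANCE):
    """One-sample t statistic of the subject means against mu."""
    values = np.asarray(values, dtype=float)
    return (values.mean() - mu) / (values.std(ddof=1) / np.sqrt(values.size))


# Usage: motion period = 0-1500 ms, report period = 1500-3000 ms.
# motion_acc = window_mean(acc, TIMES_MS, 0, 1500)
# t_motion = one_sample_t(motion_acc)
```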

The point-by-point statistical analyses were more complicated because of the need to control for multiple comparisons. To accomplish this, we used a nonparametric cluster-based permutation technique that is analogous to the cluster-based mass univariate approach that is commonly used in EEG research (Maris & Oostenveld, 2007; Groppe et al., 2011). This method looks for temporal clusters of individually significant time points, taking advantage of the fact that information is typically distributed over adjacent time points, but it does not require any assumptions about normality. Note that this is slightly different from the approach used by Bae and Luck (2018a), which used a Monte Carlo approach rather than a permutation approach and did not fully account for autocorrelation in the EEG data.

To implement this approach, we first used one-sample t tests to determine whether decoding accuracy at each individual time point was significantly greater than chance (1/16). Again, these tests were one-tailed because below-chance decoding accuracy was not meaningful. We then found clusters of contiguous time points for which the single-point t tests were significant (p < .05) and computed the cluster-level t mass (the sum of the t scores within each cluster). We then asked whether a given cluster mass was greater than the mass that would be expected by chance, as determined via permutation tests (see below). This controls the Type I error rate at the cluster level, yielding a probability of .05 that one or more clusters would be significant if true decoding accuracy were at chance (Groppe et al., 2011).
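Finding clusters and their t mass can be sketched as below. The threshold 1.753 is the one-tailed critical t for α = .05 with 15 degrees of freedom (16 participants); the helper names are hypothetical.

```python
import numpy as np

T_CRIT = 1.753  # one-tailed p < .05, df = 15


def pointwise_t(acc, mu=1.0 / 16):
    """acc: (n_subjects, n_times). One-sample t statistic at each time point."""
    n = acc.shape[0]
    return (acc.mean(axis=0) - mu) / (acc.std(axis=0, ddof=1) / np.sqrt(n))


def cluster_masses(t_values, t_crit=T_CRIT):
    """Sum of t within each run of contiguous supra-threshold time points."""
    masses, current = [], 0.0
    for t in t_values:
        if t > t_crit:
            current += t           # extend the current cluster
        elif current > 0:
            masses.append(current)  # cluster ended
            current = 0.0
    if current > 0:
        masses.append(current)      # cluster running to the last time point
    return masses
```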

To determine whether a cluster mass was larger than expected by chance, we constructed a null distribution of cluster-level t mass values using permutation tests. We used an approach similar to that used in previous EEG decoding studies (Foster et al., 2016; 2017a; 2017b; Fahrenfort et al., 2017), but to reduce computing time (which is a major issue for our SVM-ECOC approach), we permuted at the stage of testing the decoder output rather than at the stage of training the decoder.

Specifically, for each iteration of the permutation procedure, we shuffled the labels of the 16 motion directions before determining whether the decoder output was correct. This was designed to yield the accuracy values that would be obtained by chance if the decoder had no information about the true direction of motion. Importantly, we used the same shuffled target labels for all the time points in a given trial, instead of using different shuffled target labels for each time point independently, to reflect the temporal autocorrelation of the continuous EEG data (Linkenkaer-Hansen et al., 2001). As in our main statistical procedure, decoding accuracy for a given permutation iteration was computed 30 times (3 cross-validations × 10 iterations), and thus produced 480 decoding scores (16 directions × 30 repetitions) for a time point, which were then aggregated to compute the decoding accuracy at that time point for that permutation iteration. The time course of the permutation-based decoding accuracy was then smoothed with the same 5-point running average filter that was applied to the real decoding accuracy values. This procedure was performed for each of the 16 participants. We then used the decoding accuracy values to compute the cluster-level t mass (with a mass of zero if there were no significant t values). If there was more than one cluster of individually significant t values, we took the mass of the largest cluster.
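One reading of this test-stage permutation is sketched below for a single participant: within each of the 30 test sets (3 folds × 10 iterations), the 16 target labels are shuffled once and that same shuffled vector is reused at every time point. The array layout and helper name are assumptions, not the authors' code.

```python
import numpy as np


def permuted_accuracy(predicted, rng):
    """predicted: (n_sets, 16, n_times) decoder outputs, where row d of each
    test set came from true direction d.

    Returns the chance-level accuracy time course (n_times,) obtained by
    scoring against one shuffled label vector per test set, reused at
    every time point to preserve temporal autocorrelation.
    """
    n_sets, n_dirs, _ = predicted.shape
    hits = np.empty(predicted.shape, dtype=bool)
    for s in range(n_sets):
        shuffled = rng.permutation(n_dirs)  # one relabeling per test set
        hits[s] = predicted[s] == shuffled[:, None]
    return hits.mean(axis=(0, 1))  # 30 sets x 16 directions = 480 scores
```

Each permuted time course would then be smoothed and passed, together with those of the other participants, through the same t-test and cluster-mass steps as the real data, keeping the largest cluster mass.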

This procedure was iterated 1000 times to produce a null distribution for the cluster mass (with a resolution of p = 10⁻³). To compute the p value corresponding to a cluster-level t mass in the actual data set, we simply found where this value fell within the null distribution. The p value for a given cluster was set based on the nearest percentiles of the null distribution (using linear interpolation; we report p < 10⁻³ if the observed mass was greater than all masses in the null distribution). We rejected the null hypothesis and concluded that decoding was above chance for a given observed cluster if its t mass exceeded the 95th percentile of the null distribution (i.e., fell within the top 5%). Separate null distributions were computed for the 1500-ms motion period and the 1500-ms report period, for each coherence level, and for both alpha-based and ERP-based decoding.
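A simplified version of this test is sketched below. It uses the plain empirical proportion of null masses at least as large as the observed mass, rather than the interpolated-percentile rule described above, and compares the observed mass with the 95th percentile of the null distribution. Helper names are hypothetical.

```python
import numpy as np


def cluster_p_value(observed_mass, null_masses):
    """Empirical p: proportion of null (largest-cluster) masses >= observed mass."""
    null_masses = np.asarray(null_masses, dtype=float)
    return np.mean(null_masses >= observed_mass)


def is_significant(observed_mass, null_masses, alpha=0.05):
    """True if the observed mass exceeds the (1 - alpha) percentile of the null."""
    return observed_mass > np.percentile(null_masses, 100 * (1 - alpha))
```

With 1000 permutations, an observed mass larger than every null value gives an empirical p of 0, which corresponds to reporting p < 10⁻³.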

3. Results

3.1. Behavioral performance

The mean amount of time to finalize the report by clicking the mouse button after the offset of the RDK was 1998 ms (with a single-trial range of 816–4854 ms, excluding the fastest and slowest 2.5% of trials).

On each trial, behavioral performance was quantified as the response error (the angular difference between the true motion direction and the reported motion direction). Figure 1b shows the distributions of response errors for the two coherence levels. The vast majority of response errors were clustered around 0°, especially for the 51.2% coherence level, and large response errors (>30°) were more common at the 25.6% coherence level than at the 51.2% coherence level. As we have observed previously (Bae & Luck, 2018b), there was a cluster of response errors near ±180°, indicating a tendency for observers to perceive the motion in the direction opposite to the true direction. There were also occasional errors at intermediate directions, which may reflect random guesses.

Although behavioral performance was not the main focus of this study, we conducted a preliminary quantification using a 3-parameter model that characterized the distribution of response errors as a mixture of a von Mises distribution centered at 0° error (true motion perception), another von Mises distribution centered at 180° error (opposite-direction perception), and a uniform distribution (random guesses) (see red curves in Figure 1b). This leads to one parameter for precision (the kappa parameter from the von Mises distributions, which was assumed to be the same for both true and opposite-direction motion perception), one parameter indicating the probability of an opposite-direction error, and one parameter indicating the probability of a random guess. We are not claiming that this model characterizes the processes that determine behavioral performance in this task, but these three parameters provide a useful preliminary description of the observed data.
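The three-parameter mixture can be written out as a likelihood, as in the sketch below (errors in radians; response error taken as reported minus true direction, wrapped to ±180°). The function names are hypothetical, and the fitting routine itself is not shown; a generic optimizer such as `scipy.optimize.minimize` could be applied to `negative_log_likelihood`. `np.i0` is adequate for the precision values reported here, but very large kappa values would need a scaled Bessel function to avoid overflow.

```python
import numpy as np


def wrap_deg(err):
    """Wrap angular differences (deg) to [-180, 180)."""
    return (np.asarray(err, dtype=float) + 180.0) % 360.0 - 180.0


def von_mises_pdf(x, mu, kappa):
    return np.exp(kappa * np.cos(x - mu)) / (2.0 * np.pi * np.i0(kappa))


def mixture_pdf(err_rad, kappa, p_opposite, p_guess):
    """Shared-kappa von Mises at 0 and at pi, plus a uniform guess component."""
    p_target = 1.0 - p_opposite - p_guess
    return (p_target * von_mises_pdf(err_rad, 0.0, kappa)
            + p_opposite * von_mises_pdf(err_rad, np.pi, kappa)
            + p_guess / (2.0 * np.pi))


def negative_log_likelihood(params, err_rad):
    kappa, p_opposite, p_guess = params
    return -np.sum(np.log(mixture_pdf(err_rad, kappa, p_opposite, p_guess)))
```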

We found that the precision was higher for the 51.2% coherence level (M = 45.13, SEM = 4.45) than for the 25.6% coherence level (M = 27.89, SEM = 2.60) (t(15) = 6.684, p < .001), and the guess rate was lower for the 51.2% coherence level (M = .08, SEM = .02) than for the 25.6% coherence level (M = .32, SEM = .05) (t(15) = 9.213, p < .001). We also found a non-zero proportion of opposite-direction reports for both coherence levels (25.6%: M = .10, SEM = .01; 51.2%: M = .08, SEM = .02), but these proportions were not significantly different from each other (t(15) = 1.548, p = .143). Because opposite-direction reports were rare (≤10% of total trials), it was not feasible to separately decode those trials, and this interesting phenomenon will not be considered further in the present article.

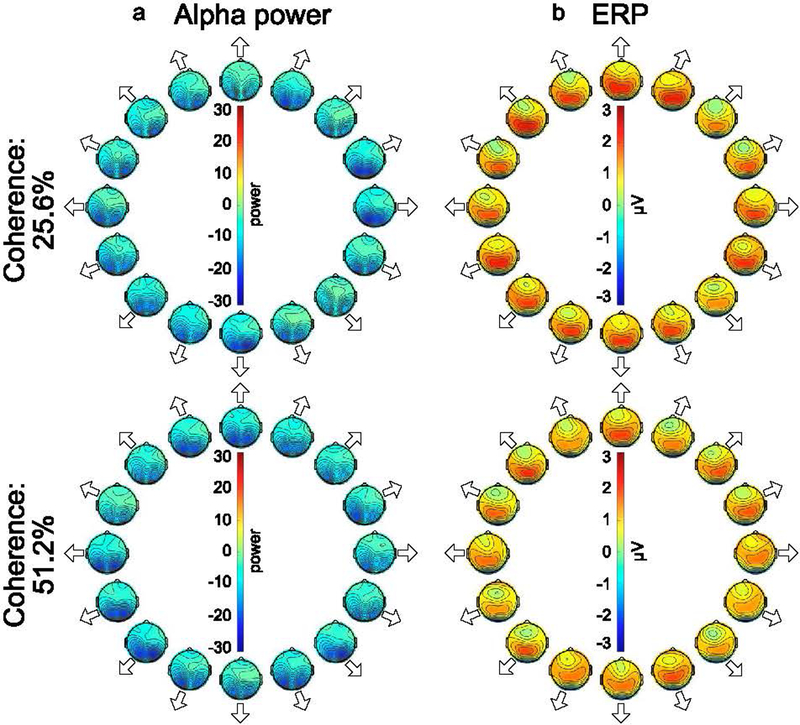

3.2. Scalp distributions

Our decoding methods rely on differences in scalp distributions among the 16 motion directions, and Figure 2 shows the grand average alpha-band and ERP scalp maps for each direction. For the sake of simplicity, these maps were averaged across the entire motion period, but they do not change much after the initial sensory response (see Supplementary Figure S6, which shows the ERP scalp distribution for the 51.2% coherence condition at a single time point). Alpha-band activity was suppressed over occipital scalp sites during the stimulus period relative to the prestimulus baseline, consistent with prior research demonstrating that alpha-band activity is suppressed in response to an attended stimulus (Hanslmayr et al., 2011; Ray & Cole, 1985). The ERP maps show a positive voltage over posterior scalp sites, which reflects many ERP components that were present during the period of the stimulus (as shown in Supplementary Figure S2, which displays the ERP waveforms at key electrode sites). Note, however, that the maps shown in Figure 2 were averaged across participants and time points, whereas the decoding was performed individually for each participant at each time point. A key advantage of decoding methods is that they do not typically assume consistency across participants and across time points in the spatial pattern of neural activity.

Figure 2.

Topography of (a) alpha power and (b) ERP activity for each combination of motion direction and motion coherence during the stimulus period. The data were averaged across observers and the entire 1500-ms stimulus duration. The arrow adjacent to each topographic map indicates the direction of motion corresponding to that map.

Figure 3 shows the grand average alpha-band and ERP scalp maps for each direction during the report period. Alpha-band suppression was stronger during the report period than during the stimulus period, but the overall distribution was similar across periods. The ERP distributions show a strong frontal negativity that was less prominent in the ERPs from the stimulus period. The ERP distributions during the report period also show positive voltages at the frontal pole, which may reflect ocular activity that was not completely removed by the ICA artifact correction process. Ocular activity was even more likely during the report period because participants were allowed to make eye movements during this period. Because eye movements could potentially affect decoding during the report period, we conducted additional decoding analyses that excluded all the frontal and central electrodes (as described in more detail in Section 3.4).

Figure 3.

Topography of (a) instantaneous alpha power and (b) ERP activity for each combination of motion direction and motion coherence during the report period. The data were averaged across observers and the first 1500 ms of the report period. The position of each scalp map corresponds to the direction of motion.

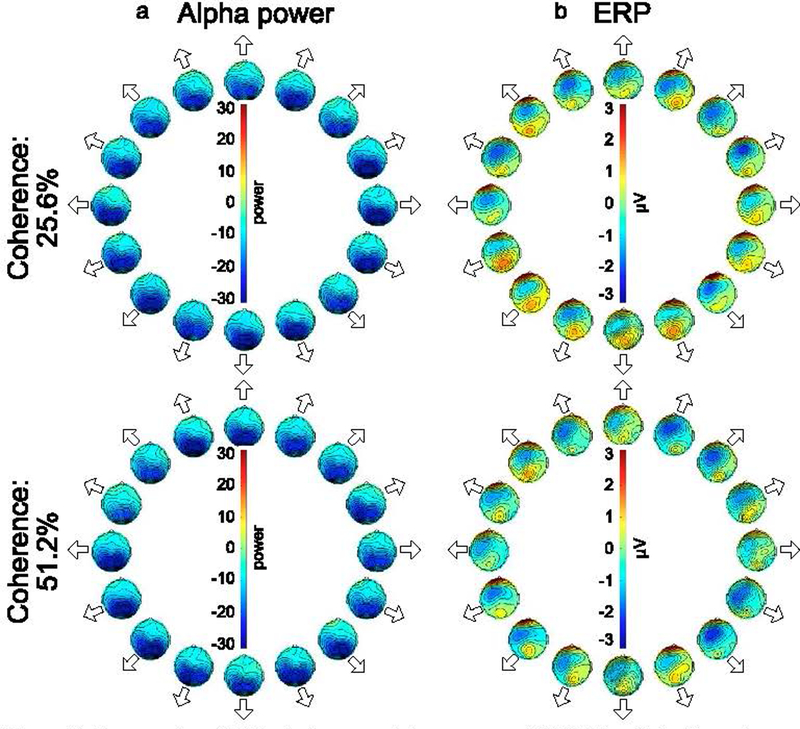

3.3. Decoding of stimulus direction

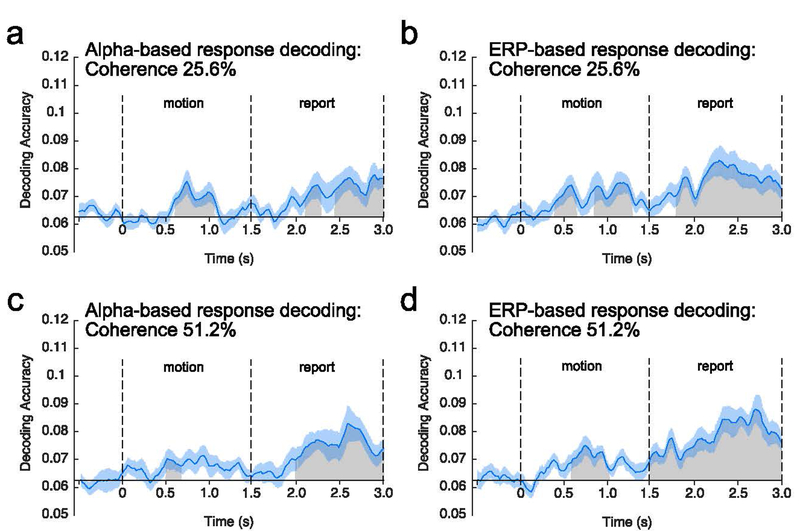

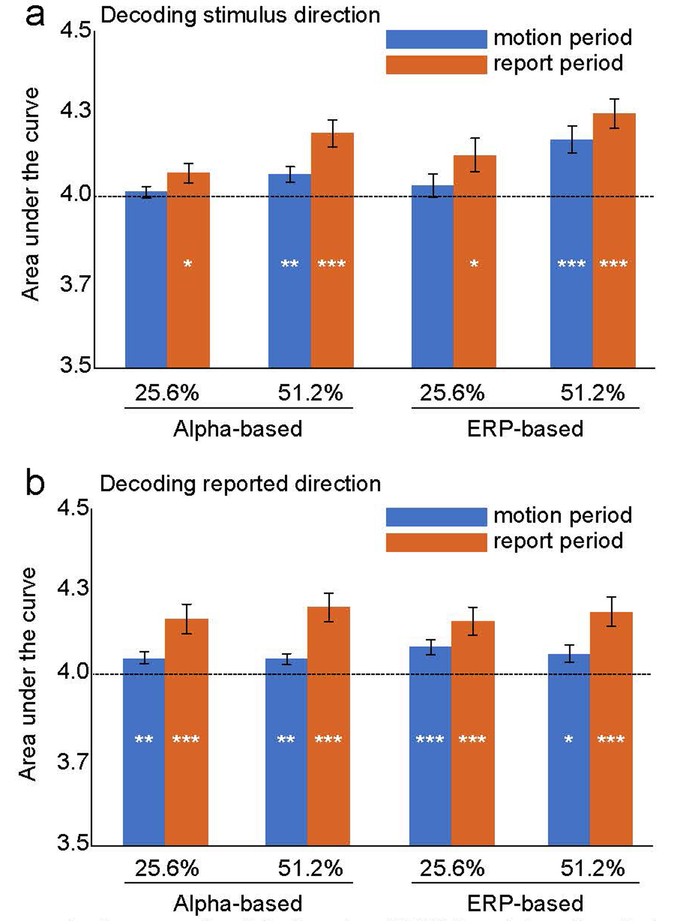

Figure 4 shows average decoding accuracy at each time point for alpha-based and ERP-based decoding for each motion coherence level. Table 1 summarizes average decoding accuracy for the alpha-based and ERP-based decoding during the motion and the report period for each coherence level. Because these different combinations yielded 8 different decoding accuracy values, each of which was compared to chance with a one-sample t test, we performed a false discovery rate (FDR) correction (Benjamini & Hochberg, 1995) for this family of 8 tests. All of the decoding accuracy values that were significantly above chance without correction survived correction, so we report uncorrected p values in the text.
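For readers unfamiliar with the correction, the Benjamini-Hochberg step-up rule can be sketched in a few lines of Python. This is a generic textbook version written for illustration. The function name and the example p values are hypothetical and are not the study's values. Because it is a step-up rule, a p value that misses its own rank threshold can still be rejected when a larger p value passes its threshold.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return booleans marking which hypotheses are rejected at FDR level q
    (Benjamini & Hochberg, 1995 step-up procedure)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    # Largest 1-based rank k such that p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Reject every hypothesis whose rank is at most k_max.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            rejected[idx] = True
    return rejected

# Hypothetical p values for a family of five tests.
print(benjamini_hochberg([0.001, 0.004, 0.008, 0.2, 0.5]))  # [True, True, True, False, False]
```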

Figure 4.

Average decoding accuracy at each time point relative to motion onset. Decoding was applied either to alpha-band activity (a, c) or sustained event-related potential (ERP) activity (b, d), and the decoding was performed separately for the 25.6% motion coherence (a, b) and for the 51.2% motion coherence (c, d). Time 0 represents motion onset, and time 1.5 represents motion offset. The gray regions represent clusters of time points for which the decoding accuracy was greater than chance after correction for multiple comparisons. The orange shading indicates ±1 SEM.

Table 1.

Mean accuracy for decoding of the stimulus direction and the reported direction averaged across the motion and the report period for alpha-based and ERP-based decoding at each coherence level. Chance level decoding is .0625 (1/16). Numbers in parentheses indicate SEM. The right column shows the results of a statistical comparison of the two types of decoding (uncorrected for multiple comparisons). Asterisks indicate statistical significance after false discovery rate correction for the family of tests within a given column.

| Coherence | Signal | Time period | Decoding of actual direction | Decoding of reported direction | Actual direction vs. reported direction |
|---|---|---|---|---|---|
| 25.6% | Alpha | Motion | .0639 (.0018) | .0653 (.0010) ** | t(15) = .632, p = .537 |
| | | Report | .0662 (.0027) | .0705 (.0026) ** | t(15) = 1.474, p = .161 |
| | ERP | Motion | .0603 (.0025) | .0689 (.0027) * | t(15) = 2.236, p = .041 |
| | | Report | .0675 (.0035) | .0757 (.0031) *** | t(15) = 1.751, p = .100 |
| 51.2% | Alpha | Motion | .0633 (.0019) | .0673 (.0013) *** | t(15) = 1.949, p = .070 |
| | | Report | .0707 (.0027) ** | .0730 (.0028) *** | t(15) = 1.616, p = .127 |
| | ERP | Motion | .0745 (.0037) ** | .0670 (.0017) ** | t(15) = −1.947, p = .071 |
| | | Report | .0824 (.0048) *** | .0779 (.0033) *** | t(15) = −1.121, p = .280 |

Note. * = p < .05; ** = p < .01; *** = p < .001.

For the 51.2% coherence level, the direction of motion could be decoded from the sustained ERP activity during both the motion and report periods, whereas the direction could be decoded from the alpha-band activity only during the report period. For the 25.6% coherence level, decoding was near chance during both the motion and report periods for both the ERP and alpha-band signals. These general observations were supported by the following statistical analyses.

Alpha-based decoding for the 25.6% coherence level was near chance (1/16 = .0625) when averaged across the motion period (t(15) = .736, p = .237, one-tailed) and when averaged across the report period (t(15) = 1.373, p = .095, one-tailed). When point-by-point decoding accuracy was examined, the cluster-based permutation test yielded no significant time clusters. Alpha-based decoding for the 51.2% coherence level was also near chance when averaged across the motion period (t(15) = .430, p = .337, one-tailed), but it was significantly above chance when averaged across the report period (t(15) = 3.017, p = .004, one-tailed). The point-by-point analyses yielded 2 significant clusters of time points (p = .0220 and p < 10⁻³) during the report period. A follow-up test indicated that average alpha-based decoding accuracy for the 51.2% coherence level was significantly greater during the report period than during the motion period (t(15) = 2.750, p = .015, paired t test, two-tailed). As described earlier, we used one-tailed statistical tests when comparing decoding to chance (because below-chance decoding is meaningless in this context), but comparisons of accuracy across conditions or time periods were two-tailed.
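The sketch below shows one common variant of a cluster-based permutation test applied to time-resolved decoding accuracy. A one-sample t statistic against chance is computed at each time point, and contiguous supra-threshold time points form clusters. Each cluster's mass is its summed t. The null distribution of the maximum cluster mass is built by randomly flipping the sign of each participant's deviation from chance. This is an illustration under stated assumptions: a sign-flip null, a fixed cluster-forming threshold of t = 1.753 (the one-tailed critical value for df = 15 at α = .05), hypothetical function names, and simulated data. The procedure actually used in this study is specified in the Methods and may construct the null distribution differently.

```python
import math
import random

def t_statistics(rows):
    """One-sample t statistic across participants at each time point.
    rows: one list per participant of deviations from chance."""
    n = len(rows)
    stats = []
    for j in range(len(rows[0])):
        col = [row[j] for row in rows]
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / (n - 1)
        se = math.sqrt(var / n)
        stats.append(mean / se if se > 0 else 0.0)
    return stats

def find_clusters(stats, threshold):
    """Contiguous runs with t > threshold, as (start, stop, summed t)."""
    clusters, start = [], None
    for i, t in enumerate(stats + [-math.inf]):  # sentinel closes a trailing run
        if t > threshold and start is None:
            start = i
        elif t <= threshold and start is not None:
            clusters.append((start, i, sum(stats[start:i])))
            start = None
    return clusters

def cluster_permutation_test(accuracy, chance=1 / 16, threshold=1.753,
                             n_perm=1000, seed=0):
    """accuracy: participants x time points. Returns (start, stop, mass, p) per cluster."""
    rng = random.Random(seed)
    dev = [[a - chance for a in row] for row in accuracy]
    observed = find_clusters(t_statistics(dev), threshold)
    null_max = []
    for _ in range(n_perm):
        # Under the null, each participant's deviation from chance is equally likely to be +/-.
        signs = [rng.choice((-1, 1)) for _ in dev]
        flipped = [[s * x for x in row] for s, row in zip(signs, dev)]
        masses = [c[2] for c in find_clusters(t_statistics(flipped), threshold)]
        null_max.append(max(masses, default=0.0))
    return [(start, stop, mass, (1 + sum(m >= mass for m in null_max)) / (1 + n_perm))
            for start, stop, mass in observed]

# Simulated example: 16 participants, 20 time points, with accuracy raised by an
# arbitrary 0.02 above chance from time point 10 onward.
sim = random.Random(1)
acc = [[1 / 16 + (0.02 if j >= 10 else 0.0) + sim.gauss(0, 0.005) for j in range(20)]
       for _ in range(16)]
results = cluster_permutation_test(acc)
```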

ERP-based decoding was also near chance for the 25.6% coherence level when averaged across the motion period (t(15) = −.853, p = .797, one-tailed) and when averaged across the report period (t(15) = 1.432, p = .086, one-tailed). The point-by-point analyses yielded no significant clusters in either time period. In contrast, ERP-based decoding for the 51.2% coherence level gradually increased from the onset of the stimulus and remained well above chance until the end of the RDK. This led to significantly above-chance decoding when averaged across the motion period (t(15) = 3.352, p = .002, one-tailed) and a large cluster of significant time points that started approximately 500 ms after stimulus onset and continued through the end of the motion period (1 cluster, p < 10⁻³). ERP-based decoding for the 51.2% coherence level was also significantly above chance when averaged across the report period (t(15) = 4.116, p < .001, one-tailed), with one large significant cluster (p < 10⁻³). Although ERP decoding accuracy was numerically greater during the report period than during the motion period for the 51.2% coherence level, this difference was not statistically significant (t(15) = 1.895, p = .078, two-tailed).

Decoding accuracy for the 51.2% coherence level was generally better for the ERP-based decoding than the alpha-based decoding. A statistical comparison showed that this difference was significant during both the motion period (t(15) = 3.351, p = .004, two-tailed) and the report period (t(15) = 2.574, p = .021, two-tailed).

Together, these results demonstrate that the scalp distribution of sustained ERP activity contains information about the direction of motion during both the motion and report periods, whereas the scalp distribution of alpha-band activity contains decodable information about the direction of motion only during the report period (which presumably involves shifting attention to the line and dot used to report the direction).

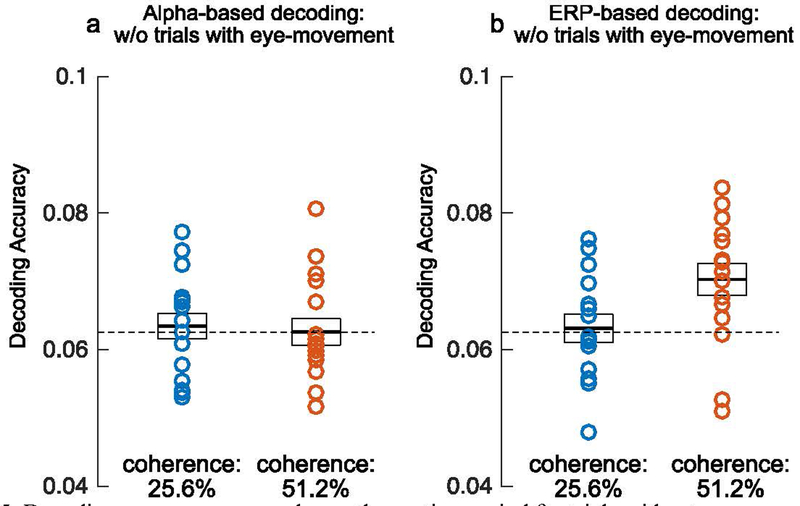

3.4. Ruling out contributions from eye movements

Although the electrical potentials produced by eye movements were estimated and removed from the data prior to the decoding analyses, it is possible that some EOG activity remained in the data. To ensure that our decoding results did not arise from residual EOG activity or as a downstream consequence of changes in eye position, we conducted additional decoding analyses in which we excluded trials with eye movements during the motion period (see supplementary materials for methodological details). Because this procedure resulted in many fewer trials (especially once we equated the number of trials per direction bin), decoding at individual time points would be expected to be less reliable. We therefore focused on decoding accuracy averaged across the motion period. Figure 5 shows the average accuracy of alpha-based and ERP-based decoding for the two motion coherence levels after excluding trials with eye movement artifacts. Alpha-based decoding accuracy was near chance for both coherence levels. In contrast, ERP-based decoding was significantly greater than chance for the 51.2% coherence level (t(15) = 3.347, p = .002, one-tailed), and more than 80% of participants showed above-chance decoding in this condition. In addition, ERP-based decoding was significantly more accurate than alpha-based decoding at this coherence level (t(15) = 2.814, p = .013, two-tailed). Note that this analysis was performed on the motion period, not the report period, because participants were allowed to make eye movements while making the report. Thus, although we can rule out eye movements as a contributor to decoding accuracy during the motion period, we are relying solely on the ICA-based ocular correction to eliminate contributions of eye movements during the report period.

Figure 6.

Average accuracy for decoding of the reported direction, plotted as in Figure 4. In these analyses, each trial was binned in terms of the reported direction rather than the stimulus direction. Time 0 represents motion onset and time 1.5 represents motion offset. The gray regions represent clusters of time points for which the decoding accuracy was greater than chance after correction for multiple comparisons. The blue shading indicates ±1 SEM.

As mentioned earlier, positive voltages were observed during the report period at frontal pole electrode sites, which may reflect ocular activity that was not fully eliminated by the ICA-based correction procedure. This creates the possibility that decoding during the report period was at least partly based on differences in eye movements across the different directions of motion. To assess this possibility, we conducted additional decoding analyses using only the parietal, posterior temporal, and occipital electrodes (i.e., excluding FP1, FP2, Fz, F3, F4, F7, F8, C3, Cz, and C4 from the analyses). We found that the decoding during the report period was at least as great when the frontal and central electrodes were excluded as when they were included (see Supplementary Figure S3). This suggests that the above-chance decoding during the report period in our main analysis was not a direct consequence of differences in ocular voltages across directions of motion.

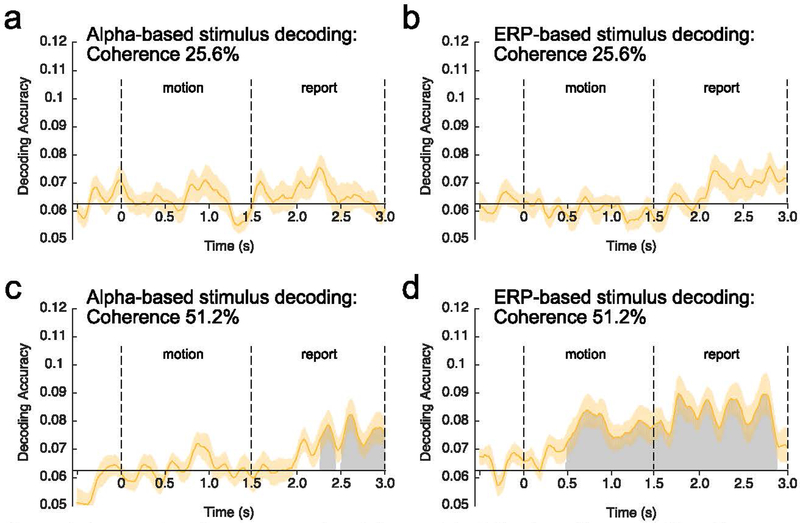

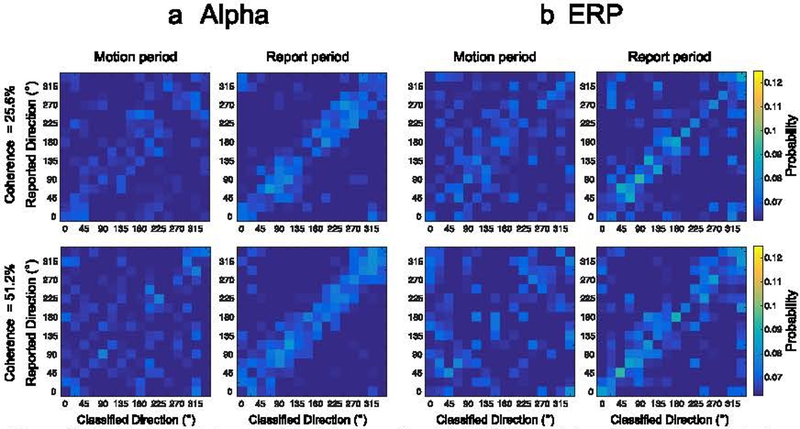

3.5. Decoding of reported direction

The reported direction of motion was often quite different from the true direction of coherent motion, especially at the lower coherence level (Figure 1b). For the high coherence trials, 68% of the responses were within the bin width used for decoding (±11.25°), whereas only 43% of the responses were within this range for the low coherence trials. In the next analyses, we therefore tested whether we could decode the reported direction (which may more closely reflect the observer’s decision). We did this during both the motion period and the report period. In other words, the previous analyses asked how well we could decode the actual stimulus direction during each of these periods, and the new analyses asked how well we could decode the reported stimulus direction during each of these periods. During the motion period, for example, the actual direction of motion might be misperceived, and the direction that was later reported during the report period may reflect the perceived direction during the motion period.
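The ±11.25° criterion follows from dividing the 360° circle into 16 bins of 22.5°. The Python sketch below illustrates the two computations involved: wrapping a response error into [−180°, 180°) and assigning a direction to one of 16 bins. Several details are assumptions. Bins are taken to be centered on 0°, 22.5°, 45°, and so on. A response counts as within the bin when |error| ≤ 11.25°. The function names and example values are hypothetical.

```python
def angular_error(reported_deg, true_deg):
    """Signed response error, wrapped into [-180, 180) degrees."""
    return (reported_deg - true_deg + 180.0) % 360.0 - 180.0

def direction_bin(direction_deg, n_bins=16):
    """Bin index, assuming bins are centered on multiples of 360 / n_bins degrees."""
    width = 360.0 / n_bins
    return int(((direction_deg + width / 2.0) % 360.0) // width)

def proportion_within(errors_deg, half_width=11.25):
    """Fraction of errors no larger than half a bin (+/-11.25 degrees for 16 bins)."""
    return sum(abs(e) <= half_width for e in errors_deg) / len(errors_deg)

# Hypothetical (reported, true) pairs in degrees.
errors = [angular_error(r, t) for r, t in [(355, 5), (30, 10), (190, 10), (12, 3)]]
print(errors)                     # [-10.0, 20.0, -180.0, 9.0]
print(proportion_within(errors))  # 0.5
```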

Figures S4 and S5 show scalp maps for each reported direction of motion. These maps are very similar to the maps based on the actual stimulus direction (Figures 2 and 3). However, these maps were constructed from grand averages, whereas the decoding analyses were performed on single-participant data and can pick up on regularities that are not obvious in the maps.

In the new analyses, each trial was binned according to the reported motion direction rather than the stimulus direction, and we asked whether the decoder could learn to predict the reported direction of motion. However, this binning produced different numbers of trials in different bins for a given participant, so we subsampled from the available trials to equate the number of trials per bin (overrepresented bins could otherwise distort the decoding accuracy). Because subsampling reduces the number of trials, this tends to make the report-based decoding less reliable than the stimulus-based decoding (all else being equal).
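The subsampling step can be illustrated as follows. Trial indices are grouped by direction bin, and the size of the smallest bin is drawn at random, without replacement, from every bin. This is a hedged sketch, and several choices in it are assumptions: the function name, the fixed random seed, and drawing a single subsample. The study's procedure, described in the Methods, may differ in these respects.

```python
import random

def equalize_trials(bin_labels, n_bins=16, seed=0):
    """Return sorted trial indices of a subsample with equal trials in every bin.

    bin_labels: one integer bin index (0..n_bins-1) per trial.
    A bin with no trials forces the subsample size to zero.
    """
    by_bin = {b: [] for b in range(n_bins)}
    for trial, label in enumerate(bin_labels):
        by_bin[label].append(trial)
    n_per_bin = min(len(trials) for trials in by_bin.values())
    rng = random.Random(seed)
    keep = []
    for b in range(n_bins):
        keep.extend(rng.sample(by_bin[b], n_per_bin))  # sampling without replacement
    return sorted(keep)

labels = [0, 0, 0, 1, 1, 2, 2, 2, 2]
kept = equalize_trials(labels, n_bins=3)
print([labels[i] for i in kept].count(2))  # 2
```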

Figure 6 shows average decoding accuracy at each time point for the reported motion direction. Table 1 summarizes average decoding accuracy of alpha-based and ERP-based decoding during the motion and the report period for each coherence level. We performed FDR correction for this family of eight tests. All of the decoding accuracy values that were significantly above chance without correction survived correction, so we report uncorrected p values in the text.

Averaged across the motion period, alpha-based decoding was slightly but significantly above chance for both the 25.6% coherence level (t(15) = 2.674, p = .009, one-tailed) and the 51.2% coherence level (t(15) = 3.827, p < .001, one-tailed). The cluster-based permutation analyses yielded a small but significant cluster of time points for both the 25.6% coherence level (p < 10⁻³) and the 51.2% coherence level (p = .031). During the report period, alpha-based decoding was significantly above chance for both coherence levels (25.6%: t(15) = 3.011, p = .004, one-tailed; 51.2%: t(15) = 3.769, p < .001, one-tailed). The cluster-based permutation analyses yielded significant time clusters for both coherence levels (25.6%: 2 clusters, p = .017, p < 10⁻³; 51.2%: 1 cluster, p < 10⁻³). Overall decoding accuracy was significantly greater during the report period than during the motion period for both coherence levels (25.6%: t(15) = 2.181, p = .046, two-tailed; 51.2%: t(15) = 2.196, p = .044, two-tailed).

ERP-based decoding was significantly greater than chance for both coherence levels when averaged across the motion period (25.6%: t(15) = 2.370, p = .016, one-tailed; 51.2%: t(15) = 2.690, p = .008), and when averaged across the report period (25.6%: t(15) = 4.193, p < .001; 51.2%: t(15) = 4.729, p < .001). For the 25.6% coherence level, the cluster-based permutation analyses indicated that ERP-based decoding was significantly greater than chance for more than half of the time points during the motion period (2 clusters, p = .007, p < 10⁻³) and for most of the time points during the report period (1 cluster, p < 10⁻³). For the 51.2% coherence level, the cluster-based permutation analyses showed that ERP-based decoding was significantly greater than chance for a cluster of time points during the motion period (p < 10⁻³) and for a cluster that contained the entire report period (p < 10⁻³).

Next, we asked whether the decoding of the reported direction was significantly more accurate than the decoding of the actual stimulus direction (averaged across the entire stimulus or report period). These results can be visualized by comparing Figure 4 (decoding of the stimulus) and Figure 6 (decoding of the report), and the statistical comparisons are provided in Table 1. As described in Section 2.5.2, fewer trials were available for decoding the reported direction than for decoding the actual direction, so one would expect poorer report decoding than stimulus decoding (all else being equal). Therefore, we focus here on the cases where decoding was more accurate for the reported direction than for the actual direction. At the low coherence level, decoding of the reported direction was numerically more accurate than decoding of the actual direction for both ERP-based and alpha-based decoding during both the motion period and the report period. The difference between ERP-based decoding of the reported direction and ERP-based decoding of the actual direction during the motion period reached statistical significance. However, this effect did not survive a familywise FDR correction for the eight comparisons between decoding accuracy for the actual and reported directions (see Table 1), so it should be treated with caution.

At the high coherence level, decoding of the reported direction was not significantly different from decoding of the actual direction for either ERP-based or alpha-based decoding during either the motion or the report period. Thus, decoding of the reported direction tended to be superior to decoding of the actual direction mainly for the low coherence level, which matches the behavioral finding that the reported direction deviated from the true direction more for the low coherence level than for the high coherence level. However, given the restricted number of trials available for decoding the report, we urge caution in interpreting these results.

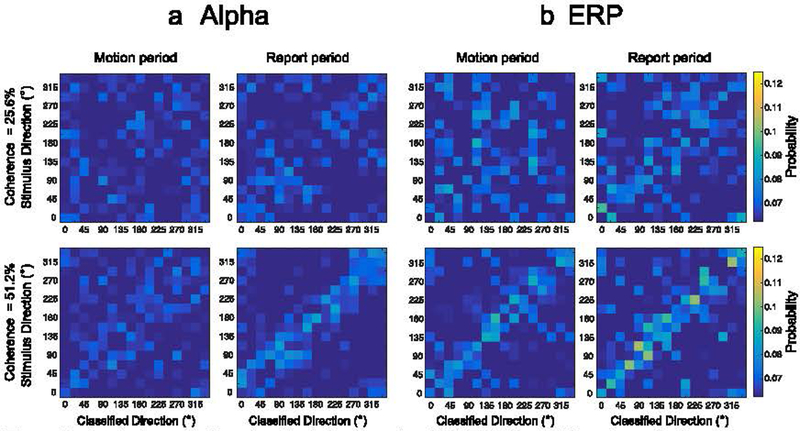

3.6. Pattern of decoding errors

Our main decoding analyses focused on the ability to exactly decode the stimulus direction, and we averaged the decoding accuracy across the 16 different directions. This creates the possibility that the decoding was based on a few unusual directions of motion (e.g., the cardinal directions) rather than reflecting continuous information about direction of motion. This can be assessed by examining the pattern of classification errors for each individual direction of motion (the confusion matrix). Figure 7 shows confusion matrices indicating the probability of each possible classification for each possible motion direction. That is, each cell in a matrix indicates the probability that 1 of the 16 directions was classified as falling into 1 of the 16 decoding categories. Separate confusion matrices are provided for each combination of signal type (alpha or sustained ERP), motion coherence (25.6% and 51.2%), and general time period (stimulus and report). Exact decoding lies on the diagonal moving from the lower-left to the upper-right of the confusion matrix, and small errors are values near this diagonal.
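A confusion matrix of this kind can be computed by counting, for each true direction bin, how often the classifier predicted each of the 16 bins, and then normalizing each row to proportions. The Python sketch below assumes that rows index the true direction and columns the predicted direction (Figure 7 may orient the axes differently). The function name and example labels are hypothetical.

```python
def confusion_matrix(true_bins, predicted_bins, n_bins=16):
    """Row-normalized confusion matrix: entry [t][p] is the proportion of
    trials with true bin t that the classifier assigned to bin p."""
    counts = [[0] * n_bins for _ in range(n_bins)]
    for t, p in zip(true_bins, predicted_bins):
        counts[t][p] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix

cm = confusion_matrix([0, 0, 0, 0, 1, 1], [0, 0, 1, 15, 1, 2])
print(cm[0][0], cm[0][1], cm[0][15], cm[1][1])  # 0.5 0.25 0.25 0.5
```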

Figure 7.

Confusion matrices for (a) alpha-based and (b) ERP-based decoding of stimulus direction during the motion period (left column) and the report period (right column) for the two coherence levels. The proportion of classification responses for a given stimulus direction was averaged over the entire motion period (0–1.5 s) and report period (1.5–3.0 s).

For the 25.6% coherence level, classification responses for both alpha-based and ERP-based decoding were broadly distributed rather than being clustered tightly around the diagonal, with some weak clustering around the diagonal during the report period. This is consistent with the near-chance decoding performance during the motion period and the slightly above-chance decoding during the report period. Decoding was not very uniform across the different directions, but this may reflect chance given the low overall decoding accuracy. For the 51.2% coherence level, ERP-based classification responses were clearly clustered around the target diagonal during the motion period, with evidence of above-chance decoding across the entire range of stimulus directions and no obvious sign that the decoding was restricted to a few unusual directions. For alpha-based decoding, there was only a hint of clustering around the target diagonal during the motion period, but there was a clear cluster around the diagonal during the report period. This result is consistent with the near-chance decoding accuracy that was obtained during the motion period and the above-chance decoding accuracy during the report period for alpha-band activity.

The confusion matrices also provide important information about the nature of the information being decoded. In particular, it is theoretically possible that the participants perceived a general axis of motion and that we were decoding the orientation of this axis. For example, both leftward and rightward motion create a horizontal axis, whereas both upward and downward motion create a vertical axis. If the EEG contained information about the axis, but not the direction of motion along the axis, this could potentially lead to above-chance decoding. However, it would be expected to lead to a large number of decoding confusions between the true direction of motion and the opposite direction of motion (180° errors). There is no obvious sign of such 180° errors in the confusion matrices, indicating that the signal being decoded represents the actual direction of motion rather than the orientation of the overall axis of motion.
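One way to summarize the axis-versus-direction question is to average the confusion matrix along its circular diagonals. This gives the proportion of classifications at each offset (predicted bin minus true bin, modulo 16). A decoder carrying only axis information would place roughly equal mass at offset 0 and at offset 8 (180°). The Python sketch below illustrates this summary, which is not an analysis reported in this article. The function name and the idealized axis-only matrix are hypothetical.

```python
def offset_profile(conf):
    """Mean proportion of classifications at each circular offset
    (predicted bin minus true bin, modulo the number of bins)."""
    n = len(conf)
    return [sum(conf[t][(t + k) % n] for t in range(n)) / n for k in range(n)]

# Idealized axis-only decoder: half of the trials in each bin are assigned to the
# true bin and half to the opposite bin (8 bins, i.e. 180 degrees, away).
axis_only = [[0.5 if (p - t) % 16 in (0, 8) else 0.0 for p in range(16)]
             for t in range(16)]
profile = offset_profile(axis_only)
print(profile[0], profile[8])  # 0.5 0.5
```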

For the sake of completeness, we provide confusion matrices for the decoding of reported direction during both the motion period and the report period (Figure 8). For the 25.6% coherence level, classification responses for both alpha-based and ERP-based decoding were weakly clustered around the diagonal during the motion period, but they were clearly clustered around the diagonal during the report period. For the 51.2% coherence level, there was a hint of clustering around the target diagonal for both alpha-based and ERP-based decoding during the motion period, but there was clear clustering around the target diagonal during the report period.

Figure 8.

Confusion matrices for (a) alpha-based and (b) ERP-based decoding of the reported direction of motion (rather than the actual direction of motion), plotted as in Figure 7.

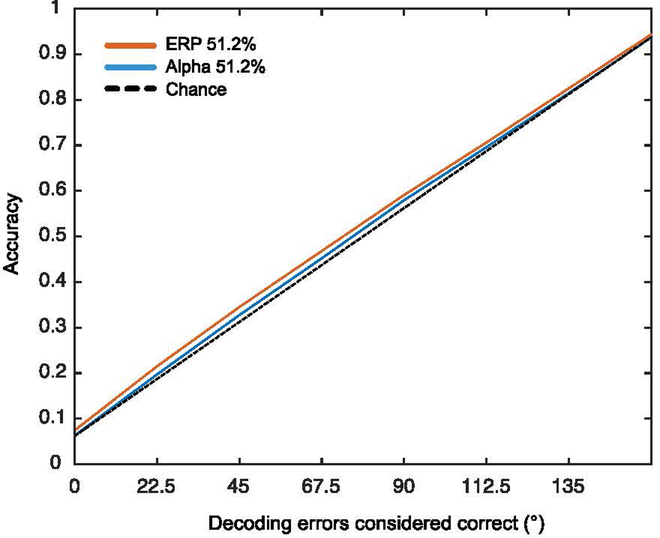

3.7. Effects of varying the criterion for correct classification

In our main analyses, decoding was considered correct only when the direction predicted by the classifier exactly matched the actual motion direction. However, as indicated by the confusion matrices shown in Figures 7 and 8, the classifier often produced “near misses” that also indicate the presence of motion direction information. Although it is valuable to quantify the ability of the classifier to predict the exact direction of motion (because our human observers were very good at reporting the exact direction), it is also useful to quantify the overall amount of information about direction of motion in the EEG. To accomplish this, we conducted an analysis that is analogous to the use of receiver operating characteristic (ROC) curves in signal detection experiments. Specifically, we systematically varied our criterion for considering a prediction as correct, and we examined how decoding accuracy changed as the criterion varied (taking into account that the criterion also changes chance performance). We varied the criterion in steps of 22.5°. As illustrated in Figure 9 for the 51.2% coherence condition, we examined accuracy when a prediction was exactly correct (0° error), within ±22.5° of being correct, within ±45° of being correct, and so on. The decoding accuracy that would be expected by chance is indicated by the broken line that falls on the diagonal.
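Under a varying criterion, a prediction counts as correct when it falls within k bins (k × 22.5°) of the true bin on the circle. Chance then rises to (2k + 1)/16, because 2k + 1 of the 16 bins are accepted. A minimal Python sketch under that reading follows. The function names and example labels are hypothetical.

```python
def circular_bin_distance(a, b, n_bins=16):
    """Distance between two bin indices around the circle, in bins."""
    d = abs(a - b) % n_bins
    return min(d, n_bins - d)

def accuracy_within(true_bins, predicted_bins, k, n_bins=16):
    """Proportion of predictions within k bins (k * 360/n_bins degrees) of the truth."""
    hits = sum(circular_bin_distance(t, p, n_bins) <= k
               for t, p in zip(true_bins, predicted_bins))
    return hits / len(true_bins)

def chance_within(k, n_bins=16):
    """Chance accuracy for criterion k: 2k + 1 of the n_bins bins count as correct."""
    return min(2 * k + 1, n_bins) / n_bins

true_bins = [0, 0, 0, 0]
predicted = [0, 1, 15, 8]
for k in (0, 1, 2):
    print(k, accuracy_within(true_bins, predicted, k), chance_within(k))
# 0 0.25 0.0625
# 1 0.75 0.1875
# 2 0.75 0.3125
```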

Figure 9.

Decoding accuracy as a function of the width of the criterion window for the 51.2% coherence level (decoding of the stimulus direction during the stimulus period).

As can be seen in Figure 9, decoding accuracy was slightly above the chance line at every criterion level, with a greater deviation from chance for the ERP-based decoding than for the alpha-based decoding. The point at which the X axis is zero reflects the exact decoding described in our previous analyses. The difference between the observed decoding accuracy and chance increased somewhat as the criterion for correctness increased, but the decoding was never very far above chance (which is not surprising for the decoding of a difficult-to-perceive feature from the pattern of voltage on the scalp). ERP-based decoding was also more accurate than alpha-based decoding for each criterion level. Thus, these results are largely consistent with our main analyses.

We tested whether the decoding was significantly greater than chance across the whole set of criterion levels by comparing the area under the curve relative to the area under the chance curve (much as ROC curves are compared to chance). These analyses were collapsed across all time points within a given time period (i.e., within the motion period or within the report period). Figure 10 shows the mean area under the curve for alpha-based and ERP-based decoding of stimulus direction and reported direction during the stimulus and report periods. Note that the area under the chance diagonal is 4.0 for this set of criterion values, and we used one-sample t tests to compare the observed area to this chance area. Below-chance decoding would not be meaningful in these analyses, so one-tailed tests were used. We performed familywise FDR corrections for the family of stimulus direction tests and the family of reported direction tests.
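The stated chance area of 4.0 is reproduced under one reading, which is inferred and not stated in the text. In that reading, the area is the sum of accuracy over the eight criterion levels from exact decoding (k = 0) through ±157.5° (k = 7), with one unit of width per 22.5° step and chance accuracy at level k equal to (2k + 1)/16:

$$
A_{\text{chance}} = \sum_{k=0}^{7} \frac{2k+1}{16} = \frac{1 + 3 + 5 + \cdots + 15}{16} = \frac{64}{16} = 4.0,
\qquad
A_{\text{observed}} = \sum_{k=0}^{7} \mathrm{acc}_k .
$$

Under this reading, each participant's $A_{\text{observed}}$ would be compared with 4.0 in the one-sample t test.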

Figure 10.

Mean area under the curve for alpha-based and ERP-based decoding during the stimulus and report periods for decoding of the actual stimulus direction (a) and the reported direction (b). Asterisks indicate statistical significance after application of the false discovery rate correction for multiple comparisons (* = p < .05; ** = p < .01; *** = p < .001).

When the actual stimulus direction was decoded during the stimulus period (blue bars in Figure 10a), the strongest decoding was observed for ERP-based decoding of the 51.2% motion coherence stimulus, with weaker but statistically significant alpha-based decoding of this stimulus as well. During the report period, decoding was stronger for the 51.2% coherence than for the 25.6% coherence (for both ERP-based and alpha-based decoding), but the decoding was significantly above chance in all cases.

When the reported direction was decoded, the area under the curve was significantly greater than chance for all combinations of coherence and decoding period for both ERP-based and alpha-based decoding. Numerically, this decoding tended to be stronger during the report period than during the stimulus period but was largely independent of the coherence level.

In general, these ROC-like analyses match the pattern observed for the main analyses, in which the output of the classifier was considered correct only if it exactly matched the direction of motion. The only case that differed in terms of significance was that alpha-based decoding of stimulus direction during the motion period was significantly above chance in the ROC-like but not in the main analysis. However, care must be taken when interpreting this slightly-but-significantly above-chance decoding because it may reflect a shift of spatial attention to the location used to report the direction of motion.

4. Discussion

4.1. Decoding of direction-of-motion information

The present study sought to determine whether spatially distributed, noisy sensory information about direction of motion can be decoded from the topography of human scalp EEG/ERP signals. Such a finding would have implications for the nature of the EEG/ERP signals and would provide a noninvasive method that could be used to track the accumulation of sensory evidence in humans with high temporal resolution.

We used random dot kinematograms (RDKs), in which coherently moving dots were intermixed with random noise. Whereas most RDK studies use only two directions of motion and require a binary decision (for a review, see Gold & Shadlen, 2007), we used all possible directions across the 360° space and required participants to report the precise direction of motion at the end of the motion display (see also Emrich et al., 2013; Ester et al., 2014). In this task, observers had no motivation to focus attention on different locations for different directions of motion, increasing the likelihood that we were decoding a representation of the direction of motion rather than decoding the direction of spatial attention (at least during the motion period). In addition, because all motion directions across the 360° space were equally likely, it is likely that we were decoding true direction-of-motion information rather than the outcome of a binary decision.

In this setting, we tested whether alpha-band EEG activity and sustained ERP activity contained information about the direction of motion using a multivariate classification method. For the higher coherence level, we found that motion directions could be decoded from the ERP activity during most of the motion period, with less evidence of sustained alpha-band decoding during this period. The ERP-based decoding gradually increased over the course of the motion period, significantly exceeding chance (1/16) by approximately 500 ms after motion onset. This time course is very different from that observed for orientation decoding (Bae & Luck, 2018a; van Ede et al., 2018; Wolff et al., 2017), in which accuracy increases sharply within ~200 ms of stimulus onset and then declines over time. This difference likely reflects the fact that the motion direction information in the present study was embedded in random noise, so it took substantial time to accumulate evidence about the direction of motion. By contrast, the previous orientation decoding studies used noise-free stimuli that could be perceived very rapidly. These results suggest that ERPs are sensitive to the time course of perceptual evidence accumulation.

However, motion decoding in the present study became significant somewhat later than the time at which the direction of motion can be decoded from single-unit recordings (150–200 ms; see Gold & Shadlen, 2001). This later onset may reflect the fact that the present task required precise encoding of the direction of motion, whereas single-unit studies have mainly used binary choice tasks (e.g., leftward versus rightward). Future research will be needed to determine whether ERP-based decoding begins earlier when there are only two possible directions of motion.

Neither alpha-based nor ERP-based decoding of stimulus direction was significantly above chance for the 25.6% coherence level during the motion period. This likely reflects two factors. First, the direction of motion is difficult to perceive at this coherence level, which presumably led to weaker and more variable neural representations of the direction of motion. Second, the reported direction of motion was sometimes quite different from the actual direction of motion. Correct decoding required classification of the motion direction within ±11.25°, and the reported motion direction was within 11.25° of the true motion direction on only 43% of trials at the 25.6% coherence level (see Figure 1b). By contrast, the reported direction was within 11.25° of the true direction on 68% of trials at the 51.2% coherence level. Consequently, it is not surprising that motion decoding was weak for the 25.6% coherence level. Stimulus decoding might succeed at low coherence levels with a larger number of trials, a smaller number of possible motion directions, or a higher electrode density (Robinson et al., 2017).
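The percentages above (43% and 68% of reports within 11.25° of the true direction) depend on measuring error around the circle, so that, for example, a report of 5° for a true direction of 350° counts as a 15° error rather than a 345° error. A minimal sketch of that computation follows. The function names and the example trials are hypothetical, and counting an error of exactly 11.25° as "within" tolerance is an assumption.

```python
from typing import Sequence


def circular_error(reported_deg: float, true_deg: float) -> float:
    """Signed angular error (reported minus true), wrapped into [-180, 180) degrees."""
    return (reported_deg - true_deg + 180.0) % 360.0 - 180.0


def proportion_within(reported: Sequence[float], true: Sequence[float],
                      tolerance_deg: float = 11.25) -> float:
    """Fraction of trials whose absolute circular error is at most tolerance_deg."""
    if len(reported) != len(true) or not reported:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(abs(circular_error(r, t)) <= tolerance_deg for r, t in zip(reported, true))
    return hits / len(reported)


if __name__ == "__main__":
    # Hypothetical trials: the errors are +10, +30, +15 and -10 degrees.
    true_dirs = [0.0, 90.0, 350.0, 180.0]
    reports = [10.0, 120.0, 5.0, 170.0]
    print([circular_error(r, t) for r, t in zip(reports, true_dirs)])
    print(proportion_within(reports, true_dirs))
```

Wrapping into [-180, 180) keeps the sign of the error, which is useful if one also wants to look at systematic clockwise or counterclockwise biases.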

For the 51.2% coherence level, both alpha-based and ERP-based decoding were well above chance during the report period. During this period, participants adjusted a line and dot to match the direction of motion, which presumably required focusing spatial attention on a different spatial region for each direction of motion (see Figure 1a). Thus, decoding during this period likely reflected, at least in part, the locus of spatial attention, and the alpha-band decoding during this period is therefore consistent with the hypothesis that alpha-band EEG activity is related to the spatial distribution of attention. There was also some evidence of alpha-band decoding during the motion period, which became visible when we used a ROC-like analysis that included both precise and imprecise decoding. However, because the direction could not be decoded precisely during the motion period, this small but significant effect may have resulted from preparatory shifts of attention toward the location of the response rather than from coding of direction per se. Additional research will be needed to resolve this question.
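The details of the ROC-like analysis are not given in this section. As an illustration only, one way to credit both precise and imprecise decoding is to compute, for each tolerance k (in bins), the proportion of trials decoded within k bins of the true direction and to compare that cumulative curve with its chance counterpart, (2k + 1)/16 for k < 8. The functions below are hypothetical and may differ from the procedure actually used in the study.

```python
from typing import List, Sequence


def bin_distance(a: int, b: int, n_bins: int = 16) -> int:
    """Smallest number of steps between two bins on a circular axis."""
    d = abs(a - b) % n_bins
    return min(d, n_bins - d)


def cumulative_accuracy(decoded: Sequence[int], true: Sequence[int],
                        n_bins: int = 16) -> List[float]:
    """Proportion of trials decoded within k bins of the true bin, for k = 0 .. n_bins // 2."""
    dists = [bin_distance(d, t, n_bins) for d, t in zip(decoded, true)]
    n = len(dists)
    return [sum(x <= k for x in dists) / n for k in range(n_bins // 2 + 1)]


def chance_cumulative_accuracy(n_bins: int = 16) -> List[float]:
    """Expected curve for uniform random guessing (assumes an even number of bins)."""
    half = n_bins // 2
    return [(2 * k + 1) / n_bins for k in range(half)] + [1.0]


if __name__ == "__main__":
    # Hypothetical decoder output for four trials whose true bin is 0.
    observed = cumulative_accuracy(decoded=[0, 1, 15, 8], true=[0, 0, 0, 0])
    chance = chance_cumulative_accuracy()
    for k, (obs, ch) in enumerate(zip(observed, chance)):
        print(f"within {k} bins: observed {obs:.2f}, chance {ch:.4f}")
```

The k = 0 entry is the precise (±11.25°) accuracy used elsewhere in the paper; larger k progressively accept near misses.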

It should also be noted that we cannot rule out the possibility that eye movements contributed to decoding during the report period, when participants were allowed to move their eyes freely. We used ICA to estimate and remove ocular potentials, and ERP-based decoding remained well above chance during the report period when the electrodes at the front of the head were excluded from the analyses (see Figure S3). However, eye movements may indirectly affect activity in the visual system, so we cannot completely exclude the possibility that decoding during the report period was influenced by eye movements. By contrast, the analyses presented in Section 3.4 provided strong evidence against the possibility that decoding during the motion period was a direct or indirect result of eye movements.

4.2. Decoding of the reported direction of motion

Because the reported direction often deviated substantially from the true direction, especially at the 25.6% coherence level, we also attempted to decode the reported direction. These analyses were limited by the fact that we could not control the number of trials for each reported direction. To avoid biasing decoding accuracy, we subsampled the available trials so that the same number of trials was used for each of the 16 reported directions. As a result, report-based decoding was based on fewer than half as many trials as stimulus-based decoding. Nonetheless, both ERP-based and alpha-based decoding of the reported direction of motion were slightly but significantly above chance during the motion period at both the 25.6% and 51.2% coherence levels (see Figure 6). This finding may be related to recent evidence that prestimulus alpha-band activity is associated with subjective measures of awareness and confidence rather than with objective visual performance (Benwell et al., 2017; Samaha, Iemi, & Postle, 2017). Further research is necessary to elucidate the relationship between decodable alpha-band EEG activity and subjective experience.
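The equal-trials subsampling described above can be sketched as follows: trials are grouped by reported direction, and each group is randomly reduced to the size of the smallest group, so that no direction is over-represented in training or testing. The function name, the seeded random generator, and the choice to return no trials when some direction is never reported are assumptions for illustration, not the study's code.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence


def balanced_subsample(reported_bins: Sequence[int], n_bins: int = 16,
                       seed: int = 0) -> List[int]:
    """Return sorted trial indices with the same number of trials for every reported bin.

    Each bin keeps min(count over bins) trials, drawn at random without replacement.
    If any bin has no trials, nothing can be kept and an empty list is returned.
    Labels outside range(n_bins) are ignored.
    """
    rng = random.Random(seed)
    by_bin: Dict[int, List[int]] = defaultdict(list)
    for trial_index, b in enumerate(reported_bins):
        by_bin[b].append(trial_index)
    n_keep = min(len(by_bin[b]) for b in range(n_bins))
    selected: List[int] = []
    for b in range(n_bins):
        selected.extend(rng.sample(by_bin[b], n_keep))
    return sorted(selected)


if __name__ == "__main__":
    labels = [0, 0, 0, 1, 1, 2]        # hypothetical reports, using only 3 bins
    keep = balanced_subsample(labels, n_bins=3)
    print(keep, [labels[i] for i in keep])
```

Because the smallest group sets the size of every group, a single rarely reported direction can sharply reduce the total trial count, which is consistent with the large drop in trials described in the text.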

In our report-based decoding, we used the direction reported during the report period to decode activity during the preceding motion period. The perceived direction may sometimes have changed between a given moment in the motion period and the time of the report, so some of the “errors” in decoding the reported direction may reflect these changes in perception. In other words, decoding accuracy likely underestimates the decoder's true ability to classify the perceived direction of motion at a given moment. Future decoding studies may therefore be able to track how the perceived direction of motion varies from moment to moment and to determine when a “change of mind” has occurred (Resulaj et al., 2009).

It should also be noted that the report-based decoding may reflect shifts of spatial attention as the participants prepare to report the perceived direction of motion. Consequently, the fact that alpha-based decoding of the reported direction was slightly but significantly above chance during the motion period should not be used as evidence that the alpha-band EEG signal contains direction-of-motion information per se. Additional research will be needed to determine the precise nature of the report-based decoding.

4.3. Alpha-band oscillations versus sustained ERPs

In the present study, decoding of the actual direction of motion during the stimulation period was generally more accurate for the sustained ERP signals than for the alpha-band oscillations. This matches our previous study of working memory for orientation, in which location-independent orientation could be decoded more accurately from sustained ERPs than from alpha-band oscillations (Bae & Luck, 2018a). However, the sustained ERPs in both studies could arise from modulations of ongoing low-frequency oscillations (Bastiaansen, Mazaheri, & Jensen, 2012), so these results do not imply any intrinsic difference between phase-locked ERPs and phase-random oscillations. Nonetheless, the present results suggest that it is worth examining phase-locked ERPs in addition to phase-random oscillations when asking whether the EEG contains information about a particular stimulus.
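The distinction between phase-locked ERPs and phase-random oscillations can be made concrete with simulated data. In the sketch below, a 10-Hz oscillation whose phase varies randomly across trials nearly vanishes from the trial-averaged waveform (the ERP) but remains fully visible in single-trial alpha-band power; when the phase is identical on every trial, both measures show it. The 8–12 Hz band, the 250-Hz sampling rate, and the brick-wall FFT band-pass used to obtain the analytic signal are assumptions chosen for brevity, not the filter settings used in the study.

```python
import numpy as np


def band_power(trials: np.ndarray, fs: float, band=(8.0, 12.0)) -> np.ndarray:
    """Single-trial instantaneous power in `band` (Hz), shape (n_trials, n_samples).

    FFT brick-wall filter: positive-frequency components inside the band are doubled
    and everything else is zeroed, which yields the analytic signal of the band-passed
    data; its squared magnitude is the instantaneous power.
    """
    n_samples = trials.shape[-1]
    freqs = np.fft.fftfreq(n_samples, d=1.0 / fs)
    keep = (freqs >= band[0]) & (freqs <= band[1])
    analytic = np.fft.ifft(np.fft.fft(trials, axis=-1) * (2.0 * keep), axis=-1)
    return np.abs(analytic) ** 2


def erp(trials: np.ndarray) -> np.ndarray:
    """Trial-averaged voltage: only activity phase-locked to the event survives."""
    return trials.mean(axis=0)


def simulate(phase_locked: bool, n_trials: int = 200, fs: float = 250.0,
             seed: int = 0) -> np.ndarray:
    """Hypothetical unit-amplitude 10-Hz oscillation lasting 2 s, fixed or random phase per trial."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(2 * fs)) / fs
    phases = np.zeros(n_trials) if phase_locked else rng.uniform(0, 2 * np.pi, n_trials)
    return np.cos(2 * np.pi * 10.0 * t[None, :] + phases[:, None])


if __name__ == "__main__":
    for locked in (True, False):
        x = simulate(locked)
        erp_rms = np.sqrt(np.mean(erp(x) ** 2))
        mean_power = band_power(x, fs=250.0).mean()
        print(f"phase-locked={locked}: ERP RMS={erp_rms:.3f}, mean alpha power={mean_power:.3f}")
```

Averaging power across trials (rather than averaging voltage and then computing power) is what preserves the phase-random activity; the two measures therefore capture partly different aspects of the same EEG.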

4.4. EEG decoding as a tool for studying perceptual decision making

Numerous studies using invasive methods in animals have identified important neural signatures of perceptual decision-making processes (for reviews, see Gold & Shadlen, 2001, 2007). However, relatively little is known about the neural signatures associated with perceptual decision making in humans at the whole-brain level. There have been several attempts to understand human perceptual decision making using fMRI (Ahlfors et al., 1999; Kamitani & Tong, 2006), but the poor temporal resolution of this method and the complex relationship between the BOLD signal and specific neural responses have made it difficult to isolate the processes involved in perception, decision, and response (Mulder et al., 2014). In contrast, EEG signals are recorded with millisecond resolution and primarily reflect summed postsynaptic potentials (Buzsáki et al., 2012), allowing us to measure the formation of perceptual decisions at the whole-brain level. Indeed, recent studies have identified a P3-like ERP component, the centro-parietal positivity (CPP), that covaried with the strength of motion in RDKs in a binary discrimination task and mirrored the firing rates observed in LIP neurons (Kelly & O’Connell, 2013), suggesting that it reflects the operation of the decision process (e.g., left versus right). The present study complements this finding by showing that the precise direction of motion (within ±11.25°) can be decoded from ERP signals. The period during which the direction of motion can be decoded appears to be longer than the duration of the CPP (compare, e.g., Figure 4D with Figure S2), but additional research will be needed to determine the relationship between the CPP and the EEG signals underlying the decoding of motion direction.
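To illustrate what it means to decode one of 16 directions from the scalp distribution of a signal, the sketch below trains a simple nearest-centroid classifier on simulated topographies and scores it with stratified cross-validation. This is a deliberately simplified stand-in that may differ from the classifier used in the study; the electrode count, trial counts, noise level, and three-fold scheme are all hypothetical choices.

```python
import numpy as np


def nearest_centroid_predict(train_x, train_y, test_x, n_classes=16):
    """Assign each test topography to the class with the closest mean training topography."""
    centroids = np.stack([train_x[train_y == c].mean(axis=0) for c in range(n_classes)])
    sq_dist = ((test_x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return sq_dist.argmin(axis=1)


def cross_validated_accuracy(x, y, n_classes=16, n_folds=3, seed=0):
    """Stratified k-fold accuracy: each class is split as evenly as possible across folds."""
    rng = np.random.default_rng(seed)
    fold = np.empty(len(y), dtype=int)
    for c in range(n_classes):
        idx = np.flatnonzero(y == c)
        fold[idx] = rng.permutation(len(idx)) % n_folds
    correct = 0
    for f in range(n_folds):
        train, test = fold != f, fold == f
        pred = nearest_centroid_predict(x[train], y[train], x[test], n_classes)
        correct += int((pred == y[test]).sum())
    return correct / len(y)


def simulate_topographies(signal=1.0, n_classes=16, trials_per_class=30,
                          n_electrodes=27, seed=1):
    """Hypothetical data: a fixed random topography per direction plus Gaussian noise."""
    rng = np.random.default_rng(seed)
    patterns = rng.normal(size=(n_classes, n_electrodes))
    y = np.repeat(np.arange(n_classes), trials_per_class)
    x = signal * patterns[y] + rng.normal(size=(len(y), n_electrodes))
    return x, y


if __name__ == "__main__":
    for signal in (1.0, 0.0):
        x, y = simulate_topographies(signal=signal)
        print(f"signal={signal}: accuracy={cross_validated_accuracy(x, y):.3f} "
              f"(chance={1 / 16:.4f})")
```

With no direction-specific signal, accuracy falls to roughly 1/16, which is why chance-level comparisons are the natural benchmark for decoding accuracy.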

Supplementary Material

Figure 5.

Decoding accuracy averaged over the motion period for trials without eye movements for (a) alpha-band activity and for (b) sustained event-related potential (ERP) activity. The black horizontal line inside the white box represents average decoding accuracy, and the top and bottom edges of the box represent ±1 SEM. Each circle represents the decoding accuracy for an individual observer.

Acknowledgements

This research was made possible by NIH grants R01MH076226 and R01MH065034. We thank Aaron Simmons for assistance with data collection.

Footnotes

Conflict of Interest: The authors declare no competing financial interests.

We are using the term ERP to refer to any activity that is phase-locked to the event of interest and therefore survives the conventional signal-averaging process. Such activity could reflect either phase-resetting of an ongoing oscillation or a transient change in voltage.

References

- Ahlfors SP, Simpson GV, Dale AM, Belliveau JW, Liu AK, Korvenoja A, & Ilmoniemi RJ (1999). Spatiotemporal activity of a cortical network for processing visual motion revealed by MEG and fMRI. Journal of Neurophysiology, 82(5), 2545–2555.

- Bae GY, & Luck SJ (2018a). Dissociable decoding of spatial attention and working memory from EEG oscillations and sustained potentials. Journal of Neuroscience, 38, 409–422.

- Bae GY, & Luck SJ (2018b). Motion perception in 360°. Vision Science Society annual meeting, St Pete Beach, FL.

- Bastiaansen M, Mazaheri A, & Jensen O (2012). Beyond ERPs: Oscillatory neuronal dynamics. In Luck SJ & Kappenman ES (Eds.), The Oxford Handbook of ERP Components (pp. 31–49). New York: Oxford University Press.

- Benjamini Y, & Hochberg Y (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B (Methodological), 289–300.

- Benwell CSY, Tagliabue CF, Veniero D, Cecere R, Savazzi S, & Thut G (2017). Prestimulus EEG power predicts conscious awareness but not objective visual performance. eNeuro, 4(6).

- Brouwer GJ, & Heeger DJ (2013). Categorical clustering of the neural representation of color. Journal of Neuroscience, 33(39), 15454–15465.

- Buzsáki G, Anastassiou CA, & Koch C (2012). The origin of extracellular fields and currents-EEG, ECoG, LFP and spikes. Nature Reviews Neuroscience, 13(6), 407–420.

- Delorme A, & Makeig S (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21.

- Dietterich TG, & Bakiri G (1994). Solving multiclass learning problems via error-correcting output codes. Journal of Artificial Intelligence Research, 2, 263–286.

- Drisdelle BL, Aubin S, & Jolicoeur P (2017). Dealing with ocular artifacts on lateralized ERPs in studies of visual-spatial attention and memory: ICA correction versus epoch rejection. Psychophysiology, 54(1), 83–99.

- Emrich SM, Riggall AC, LaRocque JJ, & Postle BR (2013). Distributed patterns of activity in sensory cortex reflect the precision of multiple items maintained in visual short-term memory. Journal of Neuroscience, 33(15), 6516–6523.

- Ester EF, Ho TC, Brown SD, & Serences JT (2014). Variability in visual working memory ability limits the efficiency of perceptual decision making. Journal of Vision, 14(4):2, 1–12.

- Fahrenfort JJ, Grubert A, Olivers CN, & Eimer M (2017). Multivariate EEG analyses support high-resolution tracking of feature-based attentional selection. Scientific Reports, 7(1), 1886.

- Foster JJ, Sutterer DW, Serences JT, Vogel EK, & Awh E (2016). The topography of alpha-band activity tracks the contents of spatial working memory. Journal of Neurophysiology, 115, 168–177. doi: 10.1152/jn.00860.2015

- Foster JJ, Bsales EM, Jaffe RJ, & Awh E (2017a). Alpha-band activity reveals spontaneous representations of spatial position in visual working memory. Current Biology, 27(20), 3216–3223.

- Foster JJ, Sutterer DW, Serences JT, Vogel EK, & Awh E (2017b). Alpha-band oscillations enable spatially and temporally resolved tracking of covert spatial attention. Psychological Science, 28(7), 929–941.

- Gold JI, & Shadlen MN (2001). Neural computations that underlie decisions about sensory stimuli. Trends in Cognitive Sciences, 5, 10–16.

- Gold JI, & Shadlen MN (2003). The influence of behavioral context on the representation of a perceptual decision in developing oculomotor commands. Journal of Neuroscience, 23(2), 632–651.

- Gold JI, & Shadlen MN (2007). The neural basis of decision making. Annual Review of Neuroscience, 30, 535–574.

- Hanslmayr S, Gross J, Klimesch W, & Shapiro KL (2011). The role of alpha oscillations in temporal attention. Brain Research Reviews, 67(1–2), 331–343.