Abstract

Neural circuits are able to perform computations under very diverse conditions and requirements. The required computations impose clear constraints on their fine-tuning: a rapid and maximally informative response to stimuli in general requires decorrelated baseline neural activity. Such network dynamics is known as asynchronous-irregular. In contrast, spatio-temporal integration of information requires maintenance and transfer of stimulus information over extended time periods. This can be realized at criticality, a phase transition where correlations, sensitivity and integration time diverge. Being able to flexibly switch, or even combine the above properties in a task-dependent manner would present a clear functional advantage. We propose that cortex operates in a “reverberating regime” because it is particularly favorable for ready adaptation of computational properties to context and task. This reverberating regime enables cortical networks to interpolate between the asynchronous-irregular and the critical state by small changes in effective synaptic strength or excitation-inhibition ratio. These changes directly adapt computational properties, including sensitivity, amplification, integration time and correlation length within the local network. We review recent converging evidence that cortex in vivo operates in the reverberating regime, and that various cortical areas have adapted their integration times to processing requirements. In addition, we propose that neuromodulation enables a fine-tuning of the network, so that local circuits can either decorrelate or integrate, and quench or maintain their input depending on task. We argue that this task-dependent tuning, which we call “dynamic adaptive computation,” presents a central organization principle of cortical networks and discuss first experimental evidence.

Keywords: adaptation, collective dynamics, neural network, cognitive states, neuromodulation, criticality, balanced state, hierarchy

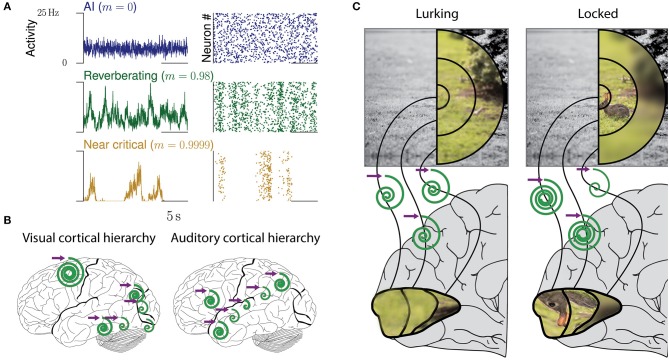

Cortical networks are confronted with ever-changing conditions, whether these are imposed on them by a natural environment, or induced by the actions of the subjects themselves. For example, when a predator is lurking for prey it should detect the smallest movement in the bushes anywhere in the visual field, but as soon as the prey is in full view and the predator moves to strike, visual attention should focus on the prey (Figure 1C). Optimal adaptation to these changing tasks requires a precise and flexible adjustment of input amplification and other properties within the local, specialized circuits of primary visual cortex: strong amplification of small input while lurking, but quenching of any irrelevant input when chasing. These are changes from one task to another. However, even the processing within a single task may require the joint contributions of networks with diverse computational properties. For example, listening to spoken language involves the integration of phonemes at the timescale of milliseconds to words and whole sentences lasting for seconds. Such temporal integration might be realized by a hierarchy of temporal receptive fields, a prime example of adaptation to the different processing requirements of each brain area (Murray et al., 2014; Hasson et al., 2015; Figure 1B).

Figure 1.

Collective dynamics of cortical networks. (A) Examples of collective spiking dynamics representing either irregular and uncorrelated activity (blue), reverberations (green), or dynamics close to a critical state (yellow). Population spiking activity and raster plots of 50 neurons are shown. (B) Hierarchical organization of collective cortical dynamics. In primary sensory areas, input is maintained and integrated only for tens of milliseconds, whereas higher areas show longer reverberations and integration. The purple arrow represents any input to the respective area, the spirals the maintenance of the input over time (inspired by Hasson et al., 2015). (C) Dynamic adaptation of collective dynamics in local circuits. When a predator is lurking for prey, the whole field of view needs to be represented equally in cortex. Upon locking on prey, attention focuses on the prey. This could be realized by local adaptation of the network dynamics, which amplifies the inputs from the receptive fields representing the rabbit (“tuning in”), while quenching others (“tuning out”).

Basic network properties like sensitivity, amplification, and integration timescale optimize different aspects of computation, and hence a generic input-output relation can be used to infer signatures of the computational properties, and changes thereof (Kubo, 1957; Wilting and Priesemann, 2018a). Throughout this manuscript, we refer to computation capability in the following two, high-level senses. First, the integration timescale determines the capability to process sequential stimuli. If small inputs are quenched away rapidly, the network may quickly be ready to process the next input. In contrast, networks that maintain input for long timescales may be slow at responding to novel input, but instead they can integrate information and input over extended time periods (Bertschinger and Natschläger, 2004; Lazar, 2009; Boedecker et al., 2012; Del Papa et al., 2017). This is at the heart of reservoir computing in echo state networks or liquid state machines (Buonomano and Merzenich, 1995; Maass et al., 2002; Jaeger and Haas, 2004; Schiller and Steil, 2005; Jaeger et al., 2007; Boedecker et al., 2012). Second, the detection of small stimuli relies on a sufficient amplification (Douglas et al., 1995). However, increased sensitivity to weak stimuli can lead to increased trial-to-trial variability (Gollo, 2017).

These examples show that local networks that are tuned to one task may perform worse at a different one, and there is no one-type-fits-all network for every environmental and computational demand. How does a neural network manage to both react quickly to new inputs when needed, but also maintain memory of the recent input, e.g., when a human listens to language? Did the brain evolve a large set of specialized circuits, or did it develop a manner to fine-tune its circuits quickly to the computational needs? A flexible tuning of response properties would be desirable in the light of resource and space constraints. Indeed, in experiments one of the most prominent features of cortical responses is their strong dependence on cognitive state and context. For example, the cognitive state clearly impacts the strength, delay and duration of responses, the trial-to-trial variability, the network synchrony, and the cross-correlation between units (Kisley and Gerstein, 1999; Massimini et al., 2005; Poulet and Petersen, 2008; Curto et al., 2009; Goard and Dan, 2009; Kohn et al., 2009; Harris and Thiele, 2011; Marguet and Harris, 2011; Priesemann et al., 2013; Scholvinck et al., 2015). Transitions between different cognitive states have been described by phase transitions (Galka et al., 2010; Steyn-Ross and Steyn-Ross, 2010; Steyn-Ross et al., 2010).

While a phase transition can be very useful to realize cognitive state changes, we here want to emphasize a particular property of systems close to phase transitions: without actually crossing the critical point, already small parameter changes can have a large impact on the network dynamics and function. Hence a classical phase transition may not be necessary for adaptation. In addition to the well-established phase transitions, adaptation could be realized as a dynamic process that regulates the proximity to a phase transition and allows cortical networks to fine-tune their sensitivity, amplification, and integration timescale within one cognitive state, depending on the specific requirements. In order to allow efficient adaptation, cortical networks must evidently satisfy the following requirements. (i) The network properties are easily tunable to changing requirements, e.g., the required synaptic or neural changes should be small. (ii) The network is fully functional in its ground state, and also in the entire vicinity, i.e., the adaptive tuning does not destabilize or dysfunctionalize it. (iii) The network receives, modifies and transfers information according to its needs, e.g., it amplifies or quenches the input depending on task. (iv) The network's ground state in general should enable integration of input over any specific past window, as required by a given task.

We propose that cortex operates in a particular dynamic regime, the “reverberating regime”, because in this regime small changes in neural efficacy can tune computational properties over a wide range – a mechanism that we propose to call dynamic adaptive computation. In this regime a cortical circuit can interpolate between two states described below, which both have been hypothesized to govern cortical dynamics and optimize different aspects of computation (Burns and Webb, 1976; Softky and Koch, 1993; Vreeswijk and Sompolinsky, 1996; Brunel, 2000; Beggs and Plenz, 2003; Stein et al., 2005; Beggs and Timme, 2012; Plenz and Niebur, 2014; Tkačik et al., 2015; Humplik and Tkačik, 2017; Muñoz, 2017; Wilting and Priesemann, 2018a,b). In the following, we recapitulate the computational properties of these two states and then identify recent converging evidence that in fact the reverberating regime governs cortical dynamics in vivo. We then show how specifically the reverberating regime can combine the computational properties of the two extreme states while maintaining stability and thereby satisfies all requirements for cortical network function postulated above. Finally, we outline future theoretical challenges and experimental predictions.

One hypothesis suggests that spiking statistics in the cortical ground state is asynchronous and irregular (Burns and Webb, 1976; Softky and Koch, 1993; Stein et al., 2005), i.e., neurons spike independently of each other and in a Poisson manner (Figure 1A). Such dynamics may be generated by a “balanced state,” which is characterized by weak recurrent excitation compared to inhibition (Vreeswijk and Sompolinsky, 1996; Brunel, 2000). The typical balanced state minimizes redundancy, has maximal entropy in its spike patterns, and supports fast network responses (Vreeswijk and Sompolinsky, 1996; Denève and Machens, 2016). The other hypothesis proposes that neuronal networks operate at criticality (Bienenstock and Lehmann, 1998; Beggs and Plenz, 2003; Levina et al., 2007; Beggs and Timme, 2012; Plenz and Niebur, 2014; Tkačik et al., 2015; Humplik and Tkačik, 2017; Muñoz, 2017; Kossio et al., 2018), and thus in a particularly sensitive state at a phase transition. This state is characterized by long-range correlations in space and time, and in models optimizes performance in tasks that profit from extended reverberations of input in the network (Bertschinger and Natschläger, 2004; Haldeman and Beggs, 2005; Kinouchi and Copelli, 2006; Wang et al., 2011; Boedecker et al., 2012; Shew and Plenz, 2013; Del Papa et al., 2017). These two hypotheses, asynchronous-irregular and critical, can be interpreted as the two extreme points on a continuous spectrum of response properties to minimal perturbations, the first quenching any rate perturbation quickly within milliseconds, the other maintaining it for much longer. Hence the two hypotheses clearly differ already in the basic response properties they imply.

A general first approach to characterize the response properties of any dynamical system is based on linear response theory: When applying a minimal perturbation or stimulation, e.g., adding a single extra spike to neuron i, the basic response is characterized by $m_i$, the number of additional spikes triggered in all postsynaptic neurons (London et al., 2010), which can be interpreted as the efficacy of that neuron. If the efficacy is sufficiently homogeneous across neurons, then the average neural efficacy m represents a control parameter, and quantifies the impact of any single extra spike in a neuron on its postsynaptic neurons, and thus the basic network response properties to small input. In the next step, any of these triggered spikes in turn can trigger spikes in a similar manner, and thereby the small stimulation may cascade through the network. The network response may vary from trial to trial and from neuron to neuron, depending on excitation-inhibition ratio, synaptic strength, and membrane potential of the postsynaptic neurons. Thus m does not describe each single response, but the expected (average) response of the network, and thereby enables an assessment of the network's stability and computational properties. The magnitude of the neural efficacy m defines two different response regimes: If one spike triggers on average fewer than one spike in the next time step (m < 1), then any stimulation will die out in finite time. For m > 1, stimuli can be amplified infinitely, and m = 1 marks precisely the transition between stable and unstable dynamics (Figure 2B). In addition, m directly determines the amplification of the stimulus, the duration of the response, the intrinsic network timescale and the response variability, among others in the framework of autoregressive processes (Harris, 1963; Wilting and Priesemann, 2018a,b).
Although details may depend on the specific process or model, many results presented in the following are qualitatively universal across diverse models that show a (phase) transition from stable to unstable, from ordered to chaotic, or from non-oscillatory to oscillatory activity. These include for example AR(1), Kesten, branching, and Ornstein-Uhlenbeck processes, systems that show a Hopf bifurcation, or systems at the transition to chaos (Harris, 1963; Camalet et al., 2000; Boedecker et al., 2012; Huang and Doiron, 2017; Wilting and Priesemann, 2018a,b). Hence the principle of dynamic adaptive computation detailed below can be implemented and exploited in very diverse types of neural networks, and thus presents a general framework.
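To make the role of m concrete, the following minimal sketch assumes the simplest branching-process picture, in which every spike triggers on average m spikes in the next time step, so that the expected response t steps after a single extra spike is m^t. The function names are illustrative and are not taken from the cited work.

```python
def expected_response(m, steps):
    """Expected number of extra spikes at each time step after one added spike.

    Assumes a homogeneous branching process: every spike triggers on
    average m spikes in the next step, hence the expectation is m**t.
    """
    return [m ** t for t in range(steps)]


def total_expected_response(m):
    """Expected total number of spikes in the cascade (including the seed).

    The geometric series sum_t m**t converges to 1 / (1 - m) only for m < 1.
    """
    if not 0 <= m < 1:
        raise ValueError("the cascade only stays finite on average for 0 <= m < 1")
    return 1.0 / (1.0 - m)


if __name__ == "__main__":
    for m in (0.5, 0.98, 1.05):
        trace = expected_response(m, steps=50)
        print(f"m = {m}: expected extra spikes after 49 steps = {trace[-1]:.3g}")
```

In this picture the expected response decays geometrically for m < 1 and grows without bound for m > 1, which is the distinction between the stable and unstable regimes drawn above.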

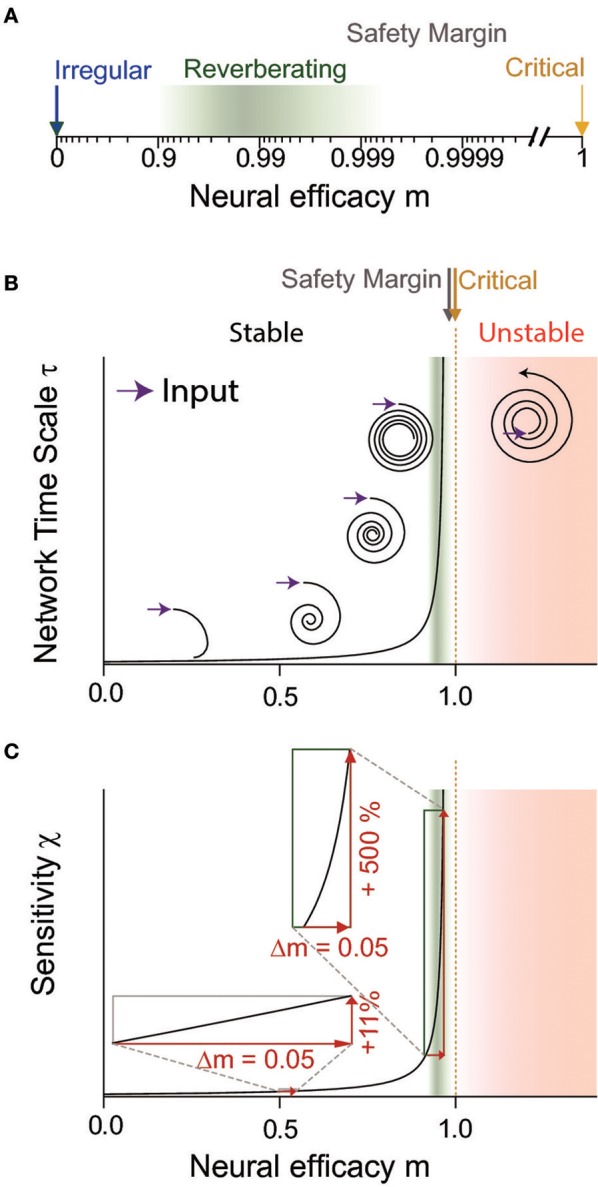

Figure 2.

The neural efficacy m determines the average impact any spike has on the network. Depending on m, network dynamics can range from irregular (m = 0) to critical (m = 1) and unstable (m > 1) dynamics. (A) In a logarithmic depiction of m, the “reverberating regime” (green) observed for cortex in vivo is clearly visible. It clearly has a larger m than the irregular state (blue), but maintains a safety margin to criticality (yellow) and the instability associated with the supercritical regime (red in B,C). (B,C) Sketch to illustrate the divergence of dynamical and computational properties at a critical phase transition, using the example of the network timescale and the sensitivity, respectively. (B) The network timescale determines how long input is maintained in the network. While any rate change is rapidly quenched close to the irregular state (m = 0), input “reverberates” in the network activity for increasingly long timescales when approaching criticality (m = 1). In the reverberating regime, the network timescale is tens to hundreds of milliseconds. For m > 1, input is amplified by the network, implying instability (assuming a supercritical Hopf bifurcation here for illustration). The reverberating regime keeps a sufficient safety margin from this instability. (C) The reverberating regime found in vivo allows large tuning of the sensitivity by small changes of the neural efficacies (e.g., synaptic strength or excitation-inhibition balance), in contrast to states further away from criticality (insets).

The neural efficacy m is a statistical description of the effective recurrent activation, which takes into account both excitatory and inhibitory contributions. We here use it to focus on the mechanism of dynamic adaptive computation in an isolated setting, instead of including as many details as possible. We take this approach for two reasons. First, this abstraction allows us to discuss possible generic principles for adaptation. Second, our approach enables us to assess the network state m and possible adaptation m(t) from experiments. The abstract adaptation principles we consider here can be implemented by numerous physiological mechanisms, including top-down attention, adaptation of synaptic strengths or neuronal excitability, dendritic processing, disinhibition, changes of the local gain, or up-and-down states (Hirsch and Gilbert, 1991; London and Häusser, 2005; Wilson, 2008; Piech et al., 2013; Ramalingam et al., 2013; Karnani et al., 2016).

Inferring the neural efficacy m experimentally is challenging, because only a tiny fraction of all neurons can be recorded with the required millisecond precision (Priesemann et al., 2009; Ribeiro et al., 2014; Levina and Priesemann, 2017). In fact, such spatial subsampling can lead to strong underestimation of correlations in networks, and subsequently of m (Wilting and Priesemann, 2018a,b). However, recently, a subsampling-invariant method has been developed that enables a precise quantification of m even from only tens of recorded neurons (Wilting and Priesemann, 2018a,b). Together with complementary approaches, either derived from the distribution of covariances or from a heuristic estimation, evidence is mounting that m is between ≈0.9 and ≈0.995, consistently for visual, somatosensory, motor and frontal cortices as well as hippocampus (Priesemann et al., 2014; Dahmen et al., 2016; Wilting and Priesemann, 2018a,b). Hence, collective spiking activity is neither fully asynchronous nor critical, but in a reverberating regime between the two, and any input persists for tens to hundreds of milliseconds (Murray et al., 2014; Priesemann et al., 2014; Hasson et al., 2015; Dahmen et al., 2016; Wilting and Priesemann, 2018a,b). In more detail, both Dahmen and colleagues as well as Wilting & Priesemann estimated the neural efficacy to be about m = 0.98, ranging from about 0.9 to 0.995 when assessing spiking activity in various cortical areas (Figures 1A, 2A). This magnitude of neural efficacy m implies intrinsic timescales of tens to hundreds of milliseconds. Such intrinsic timescales were directly estimated from cortical recordings in macaque by Murray and colleagues, who identified a hierarchical organization of timescales across somatosensory, medial temporal, prefrontal, orbitofrontal, and anterior cingulate cortex—indicating that every cortical area has adapted its response properties to its role in information processing (Murray et al., 2014). 
These findings are also in agreement with the experiments by London and colleagues, who directly probed the neural efficacy by stimulating a single neuron in barrel cortex. They found the response to last at least 50 ms in primary sensory cortex, implying m ≳ 0.92 (London et al., 2010; Wilting and Priesemann, 2018a,b).
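The effect of subsampling on estimates of m can be illustrated with a small simulation. The sketch below is not the authors' implementation; it only assumes a driven branching process with Poisson-distributed activity, observes each spike with a fixed probability, and then fits the relation r_k = b·m^k to the regression slopes r_k at several time lags k, in the spirit of a multistep regression. All names and parameter values (h, p_obs, the number of lags) are illustrative assumptions.

```python
import math
import random


def poisson(lam, rng):
    """Draw a Poisson number with mean lam (Knuth's method, fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_subsampled(m, h, p_obs, steps, seed=0):
    """Branching process with drive h; each spike is observed with probability p_obs."""
    rng = random.Random(seed)
    activity = round(h / (1.0 - m))  # start near the stationary mean
    observed = []
    for _ in range(steps):
        activity = poisson(m * activity + h, rng)
        observed.append(sum(rng.random() < p_obs for _ in range(activity)))
    return observed


def regression_slope(x, lag):
    """Least-squares slope of x[t + lag] regressed on x[t]."""
    a, b = x[:-lag], x[lag:]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var = sum((u - mean_a) ** 2 for u in a)
    return cov / var


def multistep_estimate(x, max_lag=10):
    """Fit r_k = b * m**k to the slopes at lags 1..max_lag and return m.

    The fit is a straight line through log(r_k) versus k, which assumes
    that all slopes are positive.
    """
    lags = list(range(1, max_lag + 1))
    logs = [math.log(regression_slope(x, k)) for k in lags]
    mean_k, mean_l = sum(lags) / len(lags), sum(logs) / len(logs)
    slope = sum((k - mean_k) * (l - mean_l) for k, l in zip(lags, logs))
    slope /= sum((k - mean_k) ** 2 for k in lags)
    return math.exp(slope)


if __name__ == "__main__":
    data = simulate_subsampled(m=0.9, h=1.0, p_obs=0.1, steps=50_000)
    print("naive one-step slope:", round(regression_slope(data, 1), 3))
    print("multistep estimate:  ", round(multistep_estimate(data), 3))
```

In this toy setting the one-step slope is pulled well below the true m, because subsampling adds uncorrelated variance to the observed counts, whereas the decay of the slopes across lags still reflects m. The log-linear fit is a simplification chosen for brevity and would fail if noise made any slope non-positive.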

We will show that this reverberating regime with median m = 0.98 (0.9 < m < 0.995) observed in vivo is optimal in preparing cortical networks for flexible adaptation to a given task, and meets all the requirements for dynamic adaptive computation we postulated above.

The reverberating regime allows tuning of computational properties by small, physiologically plausible changes of network parameters. This is because the closer a system is to criticality (m = 1), the more sensitive its properties are to small changes in the neural efficacy m, e.g., to any change of synaptic strength or excitation-inhibition ratio (Figure 2C). In the reverberating regime the network can draw on this sensitivity: inducing small overall synaptic changes allows the network to adapt its properties to task requirements over a wide range. Assuming for example an AR(1), Ornstein-Uhlenbeck, or branching process, increasing m from 0.94 to 0.99 leads to a six-fold increase in the sensitivity of the network (Figure 2C). Here, the sensitivity $\partial r/\partial h \sim (1-m)^{-1}$ describes how the average network rate r responds to changes of the input h (Wilting and Priesemann, 2018a). In contrast, the same absolute change from m = 0.5 to m = 0.55 only increases the sensitivity by about 11%. Similar relations apply to the network's amplification and intrinsic timescale (Wilting and Priesemann, 2018b). Thereby, any mechanism that increases or decreases the overall likelihood that a spike excites a postsynaptic neuron can mediate the change in neural efficacy m. Such mechanisms may act on the synaptic strength of many neurons in a given network, including neuromodulation that can change the response properties of a small population within a few hundred milliseconds, and homeostatic plasticity or long term potentiation or depression, which adapt the network over hours (Rang et al., 2003; Turrigiano and Nelson, 2004; Zierenberg et al., 2018).
An alternative target could be the excitability of neurons, either rapidly by modulatory input, dendritic processing, disinhibition, changes of the local gain, or up-and-down states (Hirsch and Gilbert, 1991; London and Häusser, 2005; Wilson, 2008; Larkum, 2013; Piech et al., 2013; Ramalingam et al., 2013; Karnani et al., 2016); or by changes of the intrinsic conductance properties over hours to days (Turrigiano et al., 1994).
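The two numerical comparisons above follow directly from the scaling of the sensitivity with 1/(1 − m). The short sketch below reproduces them; it drops the constant prefactor, which cancels in the ratio, and the function names are chosen here for illustration only.

```python
def sensitivity(m):
    """Sensitivity dr/dh up to a constant prefactor, assuming dr/dh ~ 1 / (1 - m)."""
    if not 0 <= m < 1:
        raise ValueError("the relation is only meaningful for 0 <= m < 1")
    return 1.0 / (1.0 - m)


def relative_gain(m_from, m_to):
    """Factor by which the sensitivity changes when m moves from m_from to m_to."""
    return sensitivity(m_to) / sensitivity(m_from)


if __name__ == "__main__":
    print(f"0.94 -> 0.99: factor {relative_gain(0.94, 0.99):.2f}")
    print(f"0.50 -> 0.55: factor {relative_gain(0.50, 0.55):.2f}")
```

The same absolute step of 0.05 in m therefore changes the sensitivity by a factor of six near criticality, but only by a factor of about 1.11 far from it.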

While states even closer to the phase transition than m = 0.98 would imply even stronger sensitivity to changes in m, being too close to the phase transition comes with the risk of crossing over to instability (m > 1), because synapses are altered continuously by a number of processes, ranging from depression and facilitation to long-term plasticity. Hence, placing a system too close to a critical phase transition may lead to instabilities and potentially cause epileptic seizures (Meisel et al., 2012; Priesemann et al., 2014; Wilting and Priesemann, 2018a). In the following, we estimate that the typical synaptic variability limits the precision of network tuning, and thereby defines an optimal regime for functional tuning that is about one percent away from the phase transition. Typically, single synapses exhibit about 50% variability in their strengths w, i.e., $\sigma_w \approx 0.5\,w$ over the course of hours and days (Statman et al., 2014). If these fluctuations are not strongly correlated across synapses, the variance of the single neuron efficacy scales with the number k of outgoing synapses, and gives

$$\frac{\sigma_m}{m} = \frac{1}{\sqrt{k}}\,\frac{\sigma_w}{w} \approx \frac{0.5}{\sqrt{k}}. \tag{1}$$

If the network keeps a “safety margin” of three standard deviations from instability (m > 1), it remains stable about 99.9% of the time. The remaining, transient excursions into the unstable regime may be tolerable, because even in a slightly supercritical regime (m ⪆ 1), runaway activity occurs only rarely (Harris, 1963; Zierenberg et al., 2018). Thus assuming on average $k \approx 10^4$ synapses per neuron (DeFelipe et al., 2002) yields a relative variability of the efficacy of about 0.5%, so that a safety margin of about 1.5% from criticality is sufficient to establish stability. The safety margin can be even smaller if one assumes furthermore that the variability of $m_i$ among neurons in a local network is not strongly correlated, because the stability of network dynamics is determined by the average neural efficacy m, not by the individual efficacies $m_i$. Furthermore, network structure might also contribute to stabilizing network activity (Kaiser and Simonotto, 2010). The resulting margin from criticality is compatible with the m observed in vivo.
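The arithmetic behind this estimate can be sketched as follows. The sketch assumes that a neuron's efficacy is the sum of k independent synaptic weights with 50% relative variability each, and that the resulting fluctuations are approximately Gaussian. The value k = 10^4 is an assumption chosen here because it reproduces the 1.5% margin quoted in the text; the function names are illustrative.

```python
import math


def relative_efficacy_spread(k, synaptic_cv=0.5):
    """Relative fluctuation sigma_m / m of a neuron's efficacy.

    Assumes m_i is the sum of k independent synaptic weights, each with
    coefficient of variation synaptic_cv = sigma_w / w.
    """
    return synaptic_cv / math.sqrt(k)


def safety_margin(k, n_sigma=3.0, synaptic_cv=0.5):
    """Distance from m = 1 that keeps n_sigma fluctuations below instability."""
    return n_sigma * relative_efficacy_spread(k, synaptic_cv)


def one_sided_coverage(n_sigma):
    """Probability that a Gaussian fluctuation stays below n_sigma standard deviations."""
    return 0.5 * (1.0 + math.erf(n_sigma / math.sqrt(2.0)))


if __name__ == "__main__":
    k = 10_000  # assumed number of synapses per neuron
    print(f"sigma_m / m   = {relative_efficacy_spread(k):.4f}")
    print(f"3-sigma margin = {safety_margin(k):.4f}")
    print(f"stable fraction = {one_sided_coverage(3.0):.4f}")
```

Because the spread scales as 1/√k, the required margin would shrink further for neurons with more synapses and grow for sparsely connected ones.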

The generic model reproduces statistical properties of networks where excitatory and inhibitory dynamics can be described by an effective excitation, e.g., because of a tight balance between excitation and inhibition (Sompolinsky et al., 1988; Vreeswijk and Sompolinsky, 1996; Ostojic, 2014; Kadmon and Sompolinsky, 2015; Huang and Doiron, 2017). In general, models should operate in a regime with m < 1 to maintain long-term stability and a safety-margin. However, transient and strong stimulus-induced activation is key for certain types of computation, such as direction selectivity, or sub- and supra-linear summation, e.g., in networks with non-saturating excitation and feedback inhibition (Douglas et al., 1995; Suarez et al., 1995; Murphy and Miller, 2009; Lim and Goldman, 2013; Hennequin et al., 2014, 2017; Rubin et al., 2015; Miller, 2016). These networks show transient instability (i.e., m(t) > 1) until inhibition stabilizes the activity. Whether such transient, large changes in m(t) on a millisecond scale should be considered a “state” is an open question. Nonetheless, experimentally a time resolved m(t) can be estimated with high temporal resolution, e.g., in experiments with a trial-based design. This measurement could then give insight into the state changes required for computation.

Besides the sensitivity, a number of other network properties also diverge or are maximized at the critical point and are hence equally tunable under dynamic adaptive computation. They include the spatial correlation length, amplification, active information storage, trial-to-trial variability, and the intrinsic network timescale (Harris, 1963; Sethna, 2006; Boedecker et al., 2012; Barnett et al., 2013; Wilting and Priesemann, 2018a). Some of these properties, which diverge at the critical point as $(1-m)^{-\beta}$ (with a specific scaling exponent β), are advantageous for a given task; others, in contrast, may be detrimental. For example, at criticality the trial-to-trial variability diverges and undermines reliable responses. Moreover, in the vicinity of the critical point convergence to equilibrium slows down (Scheffer et al., 2012; Shriki and Yellin, 2016). Thus, network fine-tuning most likely is not based on optimizing one single network response property alone, but represents a trade-off between desirable and detrimental aspects. This trade-off can be represented in the simplest case by a goal function

$$\Phi = \Phi_+ - \alpha\,\Phi_-, \tag{2}$$

which weighs the desired (Φ+) and detrimental (Φ−) aspects by a task dependent weight factor α, and might be called free energy in the sense of Friston (2010). Close to a phase transition, the desired and detrimental aspects diverge and depend on the critical scaling exponents β+ and β−. In this case, the normalization constants of Φ+ and Φ− are taken into account by the rescaled weight α′. Maximizing the goal function then yields an optimal neural efficacy m*, which is here given by

$$m^{*} = 1 - \left(\frac{\alpha'\,\beta_-}{\beta_+}\right)^{\frac{1}{\beta_- - \beta_+}}. \tag{3}$$

This optimal neural efficacy (i) is in a subcritical regime unless α = 0 (i.e., detrimental aspects do not matter) and (ii) depends on the weight α′ and the exponents β+ and β−. In the simplified picture of branching processes, the resulting m* determines a large set of response properties, which can thus only be varied simultaneously. An “ideal” network should combine the capability of dynamic adaptive computation with the ability to tune many response properties independently. To what extent such a network is conceivable at all and how it would have to be designed is an open question.
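As a worked sketch of how an optimum of this kind can arise, assume that close to the critical point both aspects follow pure power laws, with the desired aspect scaling as $(1-m)^{-\beta_+}$ and the detrimental aspect as $(1-m)^{-\beta_-}$, and that the goal function is their difference with all prefactors absorbed into the rescaled weight α′ > 0. These functional forms are assumptions made for illustration. Writing $x = 1 - m$ for the distance to criticality:

$$
\Phi(x) = x^{-\beta_+} - \alpha'\,x^{-\beta_-},
\qquad
\frac{d\Phi}{dx} = -\beta_+\,x^{-\beta_+ - 1} + \alpha'\beta_-\,x^{-\beta_- - 1} = 0
\;\Longrightarrow\;
x^{*} = \left(\frac{\alpha'\,\beta_-}{\beta_+}\right)^{\frac{1}{\beta_- - \beta_+}} .
$$

For β− > β+ the detrimental term dominates as x → 0, so this stationary point is a maximum, and it lies in the subcritical range 0 < x* < 1 as long as α′β− < β+. The optimum m* = 1 − x* approaches the critical point as α′ → 0, that is, when detrimental aspects carry no weight.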

One particularly important network property is the intrinsic network timescale τ. In many processes this intrinsic network timescale emerges from recurrent activation and is connected to the neural efficacy as τ = −Δt/log(m) ≈ Δt/(1 − m) (Wilting and Priesemann, 2018a), where Δt is a typical lag of spike propagation from the presynaptic to the postsynaptic neuron. Input reverberates in the network over this timescale τ, and can thereby enable short-term memory without any changes in synaptic strength (Figure 2B). It has been proposed before that cortical computation relies on reverberating activity (Buonomano and Merzenich, 1995; Herz and Hopfield, 1995; Wang, 2002). Reverberations are also at the core of reservoir computing in echo state networks and liquid state machines (Maass et al., 2002; Jaeger et al., 2007; Boedecker et al., 2012). Here, we extend this concept and propose that cortical networks not only rely on reverberations, but specifically harness the reverberating regime in order to change their computational properties, in particular the specific τ, amplification, and sensitivity depending on needs.
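The relation between m and τ can be evaluated in both directions. The sketch below assumes a propagation lag of Δt = 4 ms, which is an illustrative value and is not fixed by the text above; the function names are likewise chosen here for illustration.

```python
import math


def intrinsic_timescale(m, dt_ms=4.0):
    """Intrinsic timescale tau = -dt / ln(m) in milliseconds, valid for 0 < m < 1."""
    if not 0 < m < 1:
        raise ValueError("tau is finite and positive only for 0 < m < 1")
    return -dt_ms / math.log(m)


def efficacy_from_timescale(tau_ms, dt_ms=4.0):
    """Invert the relation: m = exp(-dt / tau)."""
    return math.exp(-dt_ms / tau_ms)


if __name__ == "__main__":
    for m in (0.9, 0.98, 0.995):
        print(f"m = {m}: tau = {intrinsic_timescale(m):.0f} ms")
    print(f"tau = 50 ms corresponds to m = {efficacy_from_timescale(50.0):.3f}")
```

Under this assumed lag, the range of m reported for cortex maps onto timescales from tens to several hundreds of milliseconds, and a response lasting 50 ms corresponds to m slightly above 0.92.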

We expect dynamic adaptive computation to fine-tune computational properties when switching from one task to the next, potentially mediated by neuromodulators, but we also expect that with development every brain area or circuit has developed computational properties that match their respective role in processing. Experimentally, evidence for a developmental or evolutionary tuning has been provided by Murray and colleagues, who showed that cortical areas developed a hierarchical organization as detailed above, with somatosensory areas showing fast responses (τ ≈ 100 ms), and frontal slower ones (τ ≈ 300 ms) (Murray et al., 2014). This hierarchy indicates that the ground-state dynamics of cortical circuits is indeed precisely tuned, and it is hypothesized that the hierarchical organization provides increasingly larger windows for information integration for example across the visual hierarchy (Hasson et al., 2008, 2015; Badre and D'Esposito, 2009; Chaudhuri et al., 2015; Chen et al., 2015). In addition to that backbone of hierarchical cortical organization, dynamic adaptive computation enables the fine-tuning of a given local circuit to specific task conditions. Indeed, experimental studies have shown that the response properties of cortical networks clearly change with task condition and cognitive state (Kisley and Gerstein, 1999; Massimini et al., 2005; Poulet and Petersen, 2008; Curto et al., 2009; Goard and Dan, 2009; Kohn et al., 2009; Harris and Thiele, 2011; Marguet and Harris, 2011; Priesemann et al., 2013; Scholvinck et al., 2015). Relating these changes to specific functional task requirements remains a theoretical and experimental challenge for the future.

Author contributions

All authors were involved in the conception and revision of this study. JW, JZ, and VP wrote the manuscript. JW, JZ, and VP calculated the presented derivations. JW, JP, and VP drafted the figures.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Figure 1C was partly derived under CC BY-SA 4.0 license, creative credit to Jaygandhi786.

Footnotes

Funding. All authors received support from the Max-Planck-Society. JZ and VP received financial support from the German Ministry of Education and Research (BMBF) via the Bernstein Center for Computational Neuroscience (BCCN) Göttingen under Grant No. 01GQ1005B. JW was financially supported by Gertrud-Reemtsma-Stiftung. JP received financial support from the Brazilian National Council for Scientific and Technological Development (CNPq) under grant 206891/2014-8.

References

- Badre D., D'Esposito M. (2009). Is the rostro-caudal axis of the frontal lobe hierarchical? Nat. Rev. Neurosci. 10, 659–669. doi: 10.1038/nrn2667

- Barnett L., Lizier J. T., Harré M., Seth A. K., Bossomaier T. (2013). Information flow in a kinetic Ising model peaks in the disordered phase. Phys. Rev. Lett. 111, 1–4. doi: 10.1103/PhysRevLett.111.177203

- Beggs J. M., Plenz D. (2003). Neuronal avalanches in neocortical circuits. J. Neurosci. 23, 11167–11177. doi: 10.1523/JNEUROSCI.23-35-11167.2003

- Beggs J. M., Timme N. (2012). Being critical of criticality in the brain. Front. Physiol. 3:163. doi: 10.3389/fphys.2012.00163

- Bertschinger N., Natschläger T. (2004). Real-time computation at the edge of chaos in recurrent neural networks. Neural Comput. 16, 1413–1436. doi: 10.1162/089976604323057443

- Bienenstock E., Lehmann D. (1998). Regulated criticality in the brain? Adv. Complex Syst. 1, 361–384. doi: 10.1142/S0219525998000223

- Boedecker J., Obst O., Lizier J. T., Mayer N. M., Asada M. (2012). Information processing in echo state networks at the edge of chaos. Theory Biosci. 131, 205–213. doi: 10.1007/s12064-011-0146-8

- Brunel N. (2000). Dynamics of networks of randomly connected excitatory and inhibitory spiking neurons. J. Physiol. Paris 94, 445–463. doi: 10.1016/S0928-4257(00)01084-6

- Buonomano D., Merzenich M. (1995). Temporal information transformed into a spatial code by a neural network with realistic properties. Science 267, 1028–1030. doi: 10.1126/science.7863330

- Burns B. D., Webb A. C. (1976). The spontaneous activity of neurones in the cat's cerebral cortex. Proc. R. Soc. B Biol. Sci. 194, 211–223. doi: 10.1098/rspb.1976.0074

- Camalet S., Duke T., Julicher F., Prost J. (2000). Auditory sensitivity provided by self-tuned critical oscillations of hair cells. Proc. Natl. Acad. Sci. U.S.A. 97, 3183–3188. doi: 10.1073/pnas.97.7.3183

- Chaudhuri R., Knoblauch K., Gariel M. A., Kennedy H., Wang X. J. (2015). A large-scale circuit mechanism for hierarchical dynamical processing in the primate cortex. Neuron 88, 419–431. doi: 10.1016/j.neuron.2015.09.008

- Chen J., Hasson U., Honey C. J. (2015). Processing timescales as an organizing principle for primate cortex. Neuron 88, 244–246. doi: 10.1016/j.neuron.2015.10.010

- Curto C., Sakata S., Marguet S., Itskov V., Harris K. D. (2009). A simple model of cortical dynamics explains variability and state dependence of sensory responses in urethane-anesthetized auditory cortex. J. Neurosci. 29, 10600–10612. doi: 10.1523/JNEUROSCI.2053-09.2009

- Dahmen D., Diesmann M., Helias M. (2016). Distributions of covariances as a window into the operational regime of neuronal networks. Available online at: http://arxiv.org/abs/1605.04153

- DeFelipe J., Alonso-Nanclares L., Arellano J. I. (2002). Microstructure of the neocortex: comparative aspects. J. Neurocytol. 31, 299–316. doi: 10.1023/A:1024130211265

- Del Papa B., Priesemann V., Triesch J. (2017). Criticality meets learning: criticality signatures in a self-organizing recurrent neural network. PLoS ONE 12:e0178683. 10.1371/journal.pone.0178683 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Denève S., Machens C. K. (2016). Efficient codes and balanced networks. Nat. Neurosci. 19, 375–382. 10.1038/nn.4243 [DOI] [PubMed] [Google Scholar]

- Douglas R., Koch C., Mahowald M., Martin K., Suarez H. (1995). Recurrent excitation in neocortical circuits. Science 269, 981–985. 10.1126/science.7638624 [DOI] [PubMed] [Google Scholar]

- Friston K. (2010). The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11, 127–138. 10.1038/nrn2787 [DOI] [PubMed] [Google Scholar]

- Galka A., Wong K., Ozaki T. (2010). Generalized state-space models for modeling nonstationary EEG time-series, in Modeling Phase Transitions in the Brain, eds Steyn-Ross A., Steyn-Ross M. (New York, NY: Springer; ), 27–52. 10.1007/978-1-4419-0796-7_2 [DOI] [Google Scholar]

- Goard M., Dan Y. (2009). Basal forebrain activation enhances cortical coding of natural scenes. Nat. Neurosci. 12, 1444–1449. 10.1038/nn.2402 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gollo L. L. (2017). Coexistence of critical sensitivity and subcritical specificity can yield optimal population coding. J. R. Soc. Interface 14:20170207. 10.1098/rsif.2017.0207 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haldeman C., Beggs J. (2005). Critical branching captures activity in living neural networks and maximizes the number of metastable states. Phys. Rev. Lett. 94:058101. 10.1103/PhysRevLett.94.058101 [DOI] [PubMed] [Google Scholar]

- Harris K. D., Thiele A. (2011). Cortical state and attention. Nat. Rev. Neurosci. 12, 509–523. 10.1038/nrn3084 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harris T. E. (1963). The Theory of Branching Processes. Berlin: Springer. [Google Scholar]

- Hasson U., Chen J., Honey C. J. (2015). Hierarchical process memory: memory as an integral component of information processing. Trends Cogn. Sci. 19, 304–313. 10.1016/j.tics.2015.04.006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hasson U., Yang E., Vallines I., Heeger D. J., Rubin N. (2008). A hierarchy of temporal receptive windows in human cortex. J. Neurosci. 28, 2539–2550. 10.1523/JNEUROSCI.5487-07.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hennequin G., Agnes E. J., Vogels T. P. (2017). Inhibitory plasticity: balance, control, and codependence. Annu. Rev. Neurosci. 40, 557–579. 10.1146/annurev-neuro-072116-031005 [DOI] [PubMed] [Google Scholar]

- Hennequin G., Vogels T. P., Gerstner W. (2014). Optimal control of transient dynamics in balanced networks supports generation of complex movements. Neuron 82, 1394–1406. 10.1016/j.neuron.2014.04.045 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Herz A. V. M., Hopfield J. J. (1995). Earthquake cycles and neural reverberations: collective oscillations in systems with pulse-coupled threshold elements. Phys. Rev. Lett. 75, 1222–1225. 10.1103/PhysRevLett.75.1222 [DOI] [PubMed] [Google Scholar]

- Hirsch J., Gilbert C. (1991). Synaptic physiology of horizontal connections in the cat's visual cortex. J. Neurosci. 11, 1800–1809. 10.1523/JNEUROSCI.11-06-01800.1991 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huang C., Doiron B. (2017). Once upon a (slow) time in the land of recurrent neuronal networks…. Curr. Opin. Neurobiol. 46, 31–38. 10.1016/j.conb.2017.07.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Humplik J., Tkačik G. (2017). Probabilistic models for neural populations that naturally capture global coupling and criticality. PLoS Comput. Biol. 13:e1005763. 10.1371/journal.pcbi.1005763 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jaeger H., Haas H. (2004). Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science 304, 78–80. 10.1126/science.1091277 [DOI] [PubMed] [Google Scholar]

- Jaeger H., Maass W., Principe J. (2007). Special issue on echo state networks and liquid state machines. Neural Netw. 20, 287–289. 10.1016/j.neunet.2007.04.001 [DOI] [Google Scholar]

- Kadmon J., Sompolinsky H. (2015). Transition to chaos in random neuronal networks. Phys. Rev. X 5, 1–28. 10.1103/PhysRevX.5.041030 [DOI] [Google Scholar]

- Kaiser M., Simonotto J. (2010). Limited spreading: how hierarchical networks prevent the transition to the epileptic state, in Modeling Phase Transitions in the Brain eds Steyn-Ross A., Steyn-Ross M. (New York, NY: Springer; ), 99–116. 10.1007/978-1-4419-0796-7_5 [DOI] [Google Scholar]

- Karnani M. M., Jackson J., Ayzenshtat I., Hamzehei Sichani A., Manoocheri K., Kim S., et al. (2016). Opening holes in the blanket of inhibition: localized lateral disinhibition by VIP interneurons. J. Neurosci. 36, 3471–3480. 10.1523/JNEUROSCI.3646-15.2016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kinouchi O., Copelli M. (2006). Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2, 348–351. 10.1038/nphys289 [DOI] [Google Scholar]

- Kisley M. A., Gerstein G. L. (1999). Trial-to-trial variability and state-dependent modulation of auditory-evoked responses in cortex. J. Neurosci. 19, 10451–10460. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kohn A., Zandvakili A., Smith M. A. (2009). Correlations and brain states: from electrophysiology to functional imaging. Curr. Opin. Neurobiol. 19, 434–438. 10.1016/j.conb.2009.06.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kossio F. Y. K., Goedeke S., van den Akker B., Ibarz B., Memmesheimer R. -M. (2018). Growing critical: self-organized criticality in a developing neural system. Phys. Rev. Lett. 121:058301. 10.1103/PhysRevLett.121.058301 [DOI] [PubMed] [Google Scholar]

- Kubo R. (1957). Statistical-mechanical theory of irreversible processes. I. General theory and simple applications to magnetic and conduction problems. J. Phys. Soc. Japan 12, 570–586. [Google Scholar]

- Larkum M. (2013). A cellular mechanism for cortical associations: an organizing principle for the cerebral cortex. Trends Neurosci. 36, 141–151. 10.1016/j.tins.2012.11.006 [DOI] [PubMed] [Google Scholar]

- Lazar A. (2009). SORN: a self-organizing recurrent neural network. Front. Comput. Neurosci. 3:23. 10.3389/neuro.10.023.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Levina A., Herrmann J. M., Geisel T. (2007). Dynamical synapses causing self-organized criticality in neural networks. Nat. Phys. 3, 857–860. 10.1038/nphys758 [DOI] [Google Scholar]

- Levina A., Priesemann V. (2017). Subsampling scaling. Nat. Commun. 8, 1–9. 10.1038/ncomms15140 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lim S., Goldman M. S. (2013). Balanced cortical microcircuitry for maintaining information in working memory. Nat. Neurosci. 16, 1306–1314. 10.1038/nn.3492 [DOI] [PMC free article] [PubMed] [Google Scholar]

- London M., Häusser M. (2005). Dendritic computation. Annu. Rev. Neurosci. 28, 503–532. 10.1146/annurev.neuro.28.061604.135703 [DOI] [PubMed] [Google Scholar]

- London M., Roth A., Beeren L., Häusser M., Latham P. E. (2010). Sensitivity to perturbations in vivo implies high noise and suggests rate coding in cortex. Nature 466, 123–127. 10.1038/nature09086 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Maass W., Natschläger T., Markram H. (2002). Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560. 10.1162/089976602760407955 [DOI] [PubMed] [Google Scholar]

- Marguet S. L., Harris K. D. (2011). State-dependent representation of amplitude-modulated noise stimuli in rat auditory cortex. J. Neurosci. 31, 6414–6420. 10.1523/JNEUROSCI.5773-10.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Massimini M., Ferrarelli F., Huber R., Esser S. K., Singh H., Tononi G. (2005). Breakdown of cortical effective connectivity during sleep. Science 309, 2228–2232. 10.1126/science.1117256 [DOI] [PubMed] [Google Scholar]

- Meisel C., Storch A., Hallmeyer-Elgner S., Bullmore E., Gross T. (2012). Failure of adaptive self-organized criticality during epileptic seizure attacks. PLoS Comput. Biol. 8:e1002312. 10.1371/journal.pcbi.1002312 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miller K. D. (2016). Canonical computations of cerebral cortex. Curr. Opin. Neurobiol. 37, 75–84. 10.1016/j.conb.2016.01.008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Muñoz M. A. (2017). Colloquium: criticality and dynamical scaling in living systems. Rev. Mod. Phys. 90:31001 10.1103/RevModPhys.90.031001 [DOI] [Google Scholar]

- Murphy B. K., Miller K. D. (2009). Balanced amplification: a new mechanism of selective amplification of neural activity patterns. Neuron 61, 635–648. 10.1016/j.neuron.2009.02.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Murray J. D., Bernacchia A., Freedman D. J., Romo R., Wallis J. D., Cai X., et al. (2014). A hierarchy of intrinsic timescales across primate cortex. Nat. Neurosci. 17, 1661–1663. 10.1038/nn.3862 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ostojic S. (2014). Two types of asynchronous activity in networks of excitatory and inhibitory spiking neurons. Nat. Neurosci. 17, 594–600. 10.1038/nn.3658 [DOI] [PubMed] [Google Scholar]

- Piech V., Li W., Reeke G. N., Gilbert C. D. (2013). Network model of top-down influences on local gain and contextual interactions in visual cortex. Proc. Natl. Acad. Sci. U.S.A. 110, E4108–E4117. 10.1073/pnas.1317019110 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Plenz D., Niebur E. (eds.) (2014). Criticality in Neural Systems. Weinheim: Wiley-VCH Verlag GmbH & Co. KGaA; ). 10.1002/9783527651009 [DOI] [Google Scholar]

- Poulet J. F. A., Petersen C. C. H. (2008). Internal brain state regulates membrane potential synchrony in barrel cortex of behaving mice. Nature 454, 881–885. 10.1038/nature07150 [DOI] [PubMed] [Google Scholar]

- Priesemann V., Munk M. H. J., Wibral M. (2009). Subsampling effects in neuronal avalanche distributions recorded in vivo. BMC Neurosci. 10:40. 10.1186/1471-2202-10-40 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Priesemann V., Valderrama M., Wibral M., Le Van Quyen M. (2013). Neuronal avalanches differ from wakefulness to deep sleep–evidence from intracranial depth recordings in humans. PLoS Comput. Biol. 9:e1002985. 10.1371/journal.pcbi.1002985 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Priesemann V., Wibral M., Valderrama M., Pröpper R., Le Van Quyen M., Geisel T., et al. (2014). Spike avalanches in vivo suggest a driven, slightly subcritical brain state. Front. Syst. Neurosci. 8:108. 10.3389/fnsys.2014.00108 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ramalingam N., McManus J. N. J., Li W., Gilbert C. D. (2013). Top-down modulation of lateral interactions in visual cortex. J. Neurosci. 33, 1773–1789. 10.1523/JNEUROSCI.3825-12.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rang H. P., Dale M. M., Ritter J. M., Moore P. K. (2003). Pharmacology, 5th Edn. Edinburgh: Churchill Livingstone. [Google Scholar]

- Ribeiro T. L., Ribeiro S., Belchior H., Caixeta F., Copelli M. (2014). Undersampled critical branching processes on small-world and random networks fail to reproduce the statistics of spike avalanches. PLoS ONE 9:e94992. 10.1371/journal.pone.0094992 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rubin D. B., VanHooser S. D., Miller K. D. (2015). The stabilized supralinear network: a unifying circuit motif underlying multi-input integration in sensory cortex. Neuron 85, 402–417. 10.1016/j.neuroN.2014.12.026 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scheffer M., Carpenter S. R., Lenton T. M., Bascompte J., Brock W., Dakos V., et al. (2012). Anticipating critical transitions. Science 338, 344–348. 10.1126/science.1225244 [DOI] [PubMed] [Google Scholar]

- Schiller U. D., Steil J. J. (2005). Analyzing the weight dynamics of recurrent learning algorithms. Neurocomputing 63, 5–23. 10.1016/j.neucom.2004.04.006 [DOI] [Google Scholar]

- Scholvinck M. L., Saleem A. B., Benucci A., Harris K. D., Carandini M. (2015). Cortical state determines global variability and correlations in visual cortex. J. Neurosci. 35, 170–178. 10.1523/JNEUROSCI.4994-13.2015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sethna J. (2006). Statistical Mechanics: Entropy, Order Parameters, and Complexity. Oxford: Oxford University Press. [Google Scholar]

- Shew W. L., Plenz D. (2013). The functional benefits of criticality in the cortex. Neuroscientist 19, 88–100. 10.1177/1073858412445487 [DOI] [PubMed] [Google Scholar]

- Shriki O. and Yellin, D. (2016). Optimal information representation and criticality in an adaptive sensory recurrent neuronal network. PLoS Comput. Biol. 12:e1004698. 10.1371/journal.pcbi.1004698 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Softky W. R., Koch C. (1993). The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. J. Neurosci. 13, 334–350. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sompolinsky H., Crisanti A., Sommers H. J. (1988). Chaos in random neural networks. Phys. Rev. Lett. 61, 259–262. 10.1103/PhysRevLett.61.259 [DOI] [PubMed] [Google Scholar]

- Statman A., Kaufman M., Minerbi A., Ziv N. E., Brenner N. (2014). Synaptic size dynamics as an effectively stochastic process. PLoS Comput. Biol. 10:e1003846. 10.1371/journal.pcbi.1003846 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stein R. B., Gossen E. R., Jones K. E. (2005). Neuronal variability: noise or part of the signal? Nat. Rev. Neurosci. 6, 389–397. 10.1038/nrn1668 [DOI] [PubMed] [Google Scholar]

- Steyn-Ross A., Steyn-Ross M. (2010). Modeling Phase Transitions in the Brain. New York, NY: Springer; 10.1007/978-1-4419-0796-7 [DOI] [Google Scholar]

- Steyn-Ross D., Steyn-Ross M., Wilson M., Sleigh J. (2010). Phase transitions in single neurons and neural populations: critical slowing, anesthesia, and sleep cycles in Model. Phase Transitions Brain, eds Steyn-Ross A., Steyn-Ross M. (New York, NY: Springer; ), 1–26. [Google Scholar]

- Suarez H., Koch C., Douglas R. (1995). Modeling direction selectivity of simple cells in striate visual cortex within the framework of the canonical microcircuit. J. Neurosci. 15, 6700–6719. 10.1523/JNEUROSCI.15-10-06700.1995 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tkačik G., Mora T., Marre O., Amodei D., Palmer S. E., Berry M. J., et al. (2015). Thermodynamics and signatures of criticality in a network of neurons. Proc. Natl. Acad. Sci. U.S.A. 112, 11508–11513. 10.1073/pnas.1514188112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Turrigiano G., Abbott L. F., Marder E. (1994). Activity-dependent changes in the intrisic properties of cultured neurons. Science 264, 974–977. [DOI] [PubMed] [Google Scholar]

- Turrigiano G. G., Nelson S. B. (2004). Homeostatic plasticity in the developing nervous system. Nat. Rev. Neurosci. 5, 97–107. 10.1038/nrn1327 [DOI] [PubMed] [Google Scholar]

- Vreeswijk C. V., Sompolinsky H. (1996). Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science 274, 1724–1726. 10.1126/science.274.5293.1724 [DOI] [PubMed] [Google Scholar]

- Wang X. J. (2002). Probabilistic decision making by slow reverberation in cortical circuits. Neuron 36, 955–968. 10.1016/S0896-6273(02)01092-9 [DOI] [PubMed] [Google Scholar]

- Wang X. R., Lizier J. T., Prokopenko M. (2011). Fisher information at the edge of chaos in random Boolean networks. Artif. Life (Oxford: Oxford University Press; ), 17, 315–329. 10.1162/artl_a_00041 [DOI] [PubMed] [Google Scholar]

- Wilson C. (2008). Up and down states. Scholarpedia 3:1410. 10.4249/scholarpedia.1410 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wilting J., Priesemann V. (2018a). Inferring collective dynamical states from widely unobserved systems. Nat. Commun. 9:2325. 10.1038/s41467-018-04725-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wilting J., Priesemann V. (2018b). On the ground state of spiking network activity in mammalian cortex. Available online at: http://arxiv.org/abs/1804.07864

- Zierenberg J., Wilting J., Priesemann V. (2018). Homeostatic plasticity and external input shape neural network dynamics. Phys. Rev. X 8:031018 10.1103/PhysRevX.8.031018 [DOI] [Google Scholar]