Abstract

Driver fatigue is attracting increasing attention because it is a major cause of traffic accidents, which bring great harm to society and families. This paper proposes using deep convolutional neural networks and deep residual learning to predict the mental states of drivers from electroencephalography (EEG) signals. Accordingly, we have developed two mental state classification models, EEG-Conv and EEG-Conv-R. In both intra- and inter-subject tests, both models outperform the traditional LSTM- and SVM-based classifiers. Our major findings are: (1) both EEG-Conv and EEG-Conv-R yield very good classification performance for mental state prediction; (2) EEG-Conv-R is more suitable for inter-subject mental state prediction; (3) EEG-Conv-R converges more quickly than EEG-Conv. In summary, our proposed classifiers have better predictive power and are promising for application in practical brain-computer interaction.

Keywords: Driver fatigue, Electroencephalography (EEG), Residual learning, EEG-Conv, EEG-Conv-R

Introduction

Fatigue is a complex mental state, often accompanied by drowsiness (Kar et al. 2010) and usually manifesting as lack of vigilance and reduced attention. It has become one of the major causes of motor vehicle accidents (Sahayadhas et al. 2012; Khushaba et al. 2010), which bring serious physical injuries, psychological distress, and significant economic loss to drivers and their families. Driver fatigue is reported to account for 35–45% of all vehicle accidents (Idogawa 2006). Therefore, detecting drivers’ cognitive state during driving, especially the fatigue state, has great potential for reducing vehicle accidents.

Many methods for driver fatigue detection are based on physiological signals, such as electroencephalography (EEG), electrooculography (EOG), electromyography (EMG), and electrocardiography (ECG) (Khushaba et al. 2010; Kong et al. 2017; Hu and Zheng 2009; Fu and Wang 2014; Ahn et al. 2016; Lin et al. 2014), or their combination. Most studies report a strong correlation between these signals and drivers’ cognitive state, and that the signals can be used to detect driver fatigue accurately. For instance, some studies (Khushaba et al. 2010; Brookhuis and De 1993; Jap et al. 2009) report that a change in cognitive state is usually accompanied by significant changes in the EEG frequency bands, such as delta (0.5–3.5 Hz), theta (4–7 Hz), alpha (8–12 Hz), and beta (13–30 Hz). Eye movement and eye closure are also considered two important indicators of driver fatigue (Kar et al. 2010). When a person is in a fatigue state, eye movement decreases and blink rate increases (Lal and Craig 2001). In addition, it is reported that heart rate variability, derived from the ECG power spectrum, can be used to distinguish fatigue from other cognitive states (Tsuchida et al. 2009). Heart rate variability (Jeong et al. 2007) decreases when a person is in a fatigue or drowsiness state.

EEG records the electrical potentials generated by the nerve cells of the cerebral cortex (Liang et al. 2010), provides rich sample data with high temporal resolution (Zeng et al. 2017; Stein et al. 2013), and contains abundant physiological and psychological information. EEG-based methods are considered to be the most convenient and effective among these physiology-based methods. In general, most EEG-based methods for driver fatigue detection utilize waveform information, power spectrum, nonlinear analysis and some modeling techniques (Kong et al. 2017; Chen et al. 2017). For example, Correa et al. (2014) developed an automatic method to detect the drowsiness stage in EEG records using time, spectral and wavelet analysis, and obtained 87.4 and 83.6% accuracy in detecting alertness and drowsiness, respectively. Khushaba et al. (2010) proposed a fuzzy mutual-information (MI)-based wavelet packet transform feature-extraction method to predict drowsiness levels. Pal et al. (2008) found that the power spectrum of the alpha band in the EEG is related to the loss of alertness. Similar work is also reported in Jap et al. (2009) and Lin et al. (2010). Mu et al. (2017) used four types of entropy, namely spectrum entropy, approximation entropy, sample entropy and fuzzy entropy, to extract EEG features for driver fatigue detection. In addition, some modeling techniques are used to detect driver state. Hu (2017) developed an AdaBoost classifier for automated detection of driver fatigue with EEG signals. Wali et al. (2013) fused discrete wavelet packet transformation (DWPT) and fast Fourier transformation (FFT) to classify the driver distraction level, and achieved up to 85% classification accuracy. In Zhao et al. (2010), a KPCA-SVM classifier was employed to differentiate the normal and mental fatigue states, and reached a higher accuracy of 98.7%. Fu et al. (2016) presented a dynamic fatigue detection model based on a hidden Markov model (HMM) to estimate driver fatigue, and obtained 92.5% accuracy.

Despite these advances, robust and accurate detection of driver cognitive performance by EEG remains a challenge. First, it is well known that EEG is highly non-stationary, and varies over time within a single subject (intra-subject) and between different subjects (inter-subject) (Thodoroff et al. 2016). It is challenging to identify general patterns from non-stationary EEG signals. Second, the above-mentioned methods generally separate the detection process into two steps: feature extraction and classification. Feature extraction usually requires hand-crafted operations, which may cause the loss of useful information in EEG (Tang et al. 2017). Third, the low signal-to-noise ratio (SNR) of EEG also affects the detection accuracy.

Deep learning (DL) (LeCun et al. 2015) has been applied in various domains, such as computer vision, speech recognition and natural language processing. Convolutional neural networks (CNNs) represent one of the most significant advances in DL due to their success in many challenging classification tasks (He et al. 2016; Abdel-Hamid et al. 2014; Domhan et al. 2015). CNNs are feed-forward neural networks, usually including feature extraction layers and feature mapping layers, and can learn local patterns in data by convolution. A distinctive property of CNNs is that they are suitable for end-to-end learning without any a priori feature selection (Schirrmeister et al. 2017), which avoids information loss and makes them especially well suited to raw EEG data with low SNR and task-irrelevant content. Hence, many EEG-based studies and applications have emerged in recent years, such as P300 feature detection (Cecotti and Graser 2011; Puanhvuan et al. 2017), motor imagery classification (Sakhavi et al. 2015), seizure detection (Page et al. 2016; Raghu et al. 2017), cognitive therapy in depressive disorder (Bornas et al. 2015; Schoenberg and Speckens 2015), drowsy and alert state prediction (Hajinoroozi et al. 2016), momentary mental workload recognition and classification (Zhang et al. 2017, 2017), an emergent visual attention model for identifying the possible cause of autism (Gravier et al. 2016) and brain-computer interface communication (Lawhern et al. 2016; Manor and Geva 2015).

In this work, we construct two novel classifiers, EEG-Conv and EEG-Conv-R, where EEG-Conv is based on the traditional CNN and EEG-Conv-R combines the CNN with recent deep residual learning. We study the prediction performance of our proposed classifiers in both intra- and inter-subject settings with raw EEG data. We also compare EEG-Conv and EEG-Conv-R with a support vector machine (SVM) and an existing deep learning method, LSTM (long short-term memory).

The rest of the paper is organized as follows: the “Materials” section introduces the experiment design, EEG data acquisition, and data preprocessing. The “Methods” section provides a detailed description of our proposed classifiers, including the design of EEG-Conv and EEG-Conv-R. The results and discussion of the experiments are presented in the “Results and discussion” section. Finally, conclusions are given in the “Conclusion” section.

Materials

Driving simulation platform

We construct a driving simulation platform, as shown in Fig. 1. The platform is made up of 1) simulated driving operation devices, including a racing seat, steering wheel, liquid crystal display (LCD), loudspeaker, and projector; 2) physiological signal collection instruments, including a Neuroscan system with 64 electrodes for EEG collection, a camera for eye-blink detection, and a heart-rate sensor for counting the heart rate, with all physiological signals acquired simultaneously; 3) one computer for data recording, on which the driving simulation software Need For Speed-Shift 2 Unleashed (NFS-S2U) and the ‘WorldRecord’ software for recording all the parameters during driving are installed; 4) another computer for the alert tasks, such as image and sound stimuli, and for processing physiological signals. Eye-blink and heart-rate detection is used to determine the rank of mental states. For instance, if the number of eye blinks is fewer than 20 per minute and the heart rate is greater than 70 beats per minute, we define the state as sober, which we call ‘TAV’. Correspondingly, if the number of eye blinks exceeds 30 per minute and the heart rate is less than 60 beats per minute, we define the state as fatigue, which we call ‘DROWS’.
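The threshold rule quoted above can be sketched as a small function. This is only an illustration: the function name and the `None` return value for readings that match neither rule are assumptions, since the text does not say how intermediate readings are ranked.

```python
def label_mental_state(blinks_per_min, heart_rate_bpm):
    """Return 'TAV', 'DROWS', or None using the thresholds quoted in the text."""
    if blinks_per_min < 20 and heart_rate_bpm > 70:
        return "TAV"      # sober state
    if blinks_per_min > 30 and heart_rate_bpm < 60:
        return "DROWS"    # fatigue state
    return None           # assumption: readings matching neither rule stay unlabeled


print(label_mental_state(12, 85))
print(label_mental_state(35, 55))
```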

Fig. 1.

Driving simulation experiment platform

Experiment protocol

Ten healthy subjects aged 23–25 participate in the experiment for driving data collection. All of them hold C1 (Manual Transmission, MT) driving licenses and know the whole experimental procedure in advance. They are asked to get adequate sleep the day before the experiment, and are told not to consume stimulating or sedating drinks such as coffee, alcohol or tea, and to avoid strenuous exercise on the day of the experiment. The study is approved by a local ethics committee, and all participants voluntarily sign a written consent form before the experiment. The experiment is performed in a quiet and isolated room between 18:00 and 21:00. In addition, the facial expression is recorded by the camera in front of the driver, and the heart rate is collected by the corresponding electrode attached to the subject’s right wrist.

Experiment setup

The experiment consists of two stages, the practice stage and the experimental stage (Kong et al. 2017), which are performed on two successive days. The aim of the practice stage is to make sure all subjects become familiar with the simulated driving environment and are able to respond correctly to various stimuli. After training, every subject is asked to drive two laps on the specified track without deviating from it, to ensure safe driving.

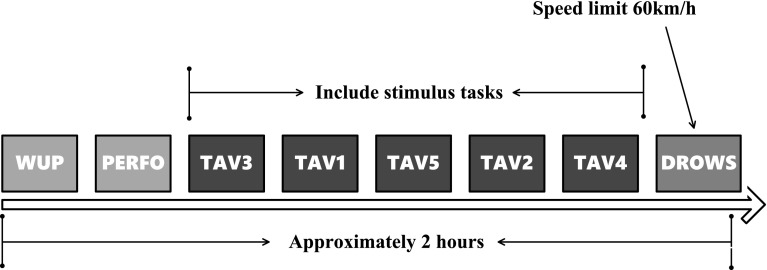

When collecting EEG data, we simultaneously record the number of eye blinks per minute of the subject. Combining this with the heart rate collected by EKG, we divide the mental states into 8 phases: WUP, PERFO, TAV3, TAV1, TAV5, TAV2, TAV4, and DROWS (Kong et al. 2017), as shown in Table 1. The schematic diagram of the experimental procedure is shown in Fig. 2. WUP corresponds to the initial stage of the experiment, in which the subject drives for about 10 min as practice without any stimuli. PERFO is similar to WUP, except that the subject is required to finish the laps in 2% less than the baseline time of the WUP state. From TAV1 to TAV5, the subject is given video and auditory tasks (which we call alert and vigilance stimuli, respectively) to increase the workload, and responds to these stimuli by pressing the ‘RIGHT’ or ‘LEFT’ button on the steering wheel. The ‘RIGHT’ button is for the video task with alert stimuli, and the ‘LEFT’ button is for the auditory task with vigilance stimuli. That is, under alert stimuli, a traffic jam is simulated, and the subject should press the ‘RIGHT’ button with the right index finger when an ‘X’ appears on the screen 1 m ahead of the subject. Under vigilance stimuli, the subject should press the ‘LEFT’ button with the left index finger when two consecutive beeps sound. This keeps the subject alert or vigilant, so that EEG signals of the wakeful condition can be collected. The TAV states differ in stimulus frequency. From TAV1 to TAV5, the stimulus intervals are 9800–10,200, 7700–8100, 5900–6300, 4100–4500 and 2300–2700 ms, respectively (Kong et al. 2017). DROWS is a monotonous driving condition at a speed of about 60 km/h without any extra video or auditory stimuli, in which the subject is apt to become drowsy.

Table 1.

Blink and heart rate times of eight mental states

| Mental state | Eye-blinks avg (times/min) | Eye-blinks min (times/min) | Eye-blinks max (times/min) | Heart rate avg (times/min) | Heart rate min (times/min) | Heart rate max (times/min) |
|---|---|---|---|---|---|---|
| WUP | 24 | 13 | 36 | 86 | 78 | 92 |
| PERFO | 20 | 11 | 27 | 89 | 79 | 100 |
| TAV3 | 12 | 7 | 15 | 85 | 76 | 94 |
| TAV1 | 18 | 15 | 24 | 83 | 75 | 91 |
| TAV5 | 16 | 13 | 24 | 82 | 75 | 90 |
| TAV2 | 20 | 13 | 30 | 78 | 73 | 85 |
| TAV4 | 16 | 12 | 24 | 79 | 73 | 88 |
| DROWS | 22 | 12 | 33 | 73 | 70 | 78 |

Fig. 2.

Schematic diagram of experiment procedure

In the present experiment, TAV3 is the first stage in which video and sound stimuli appear, so the subject pays closer attention to these tasks and is in the most sober state. DROWS is the last stage of the experiment. After nearly 2 h of driving, the subject is prone to fatigue. Moreover, driving at a constant speed of 60 km/h is monotonous and makes fatigue more likely. Also, as shown in Table 1, the clear difference in eye blinks and heart rate between TAV3 and DROWS supports our experiment design. Therefore, in this paper, the EEG data collected in TAV3 and DROWS are selected for the prediction of driver fatigue.

EEG data acquisition

EEG is collected by a gUSBamp amplifier with 16 channels (g.Tec Medical Engineering GmbH), and is continuously sampled at 256 Hz with impedance below 5 kΩ. The electrodes are placed in accordance with the international 10/20 standard. Fifteen channels, Fz, Pz, Oz, Fp1, Fp2, F7, F3, F4, F8, C3, C4, P7, P3, P4, and P8, are used to record EEG signals. An EKG electrode is placed on the chest for recording the heart rate, and an additional electrode is attached to the left ear lobe as the reference.

EEG data preprocessing

First, independent component analysis (ICA) (Jung et al. 2000) is applied, and all trials that contain ocular artifacts are discarded. Then, EEG data between 1 and 40 Hz are retained by a band-pass filter. Second, we convert the EEG data of the 15 channels, excluding the EKG channel, into the format {SP*CH*TR}. Here, SP refers to the sample rate, which is 256 Hz in the experiment, CH is the corresponding sample channel, and TR is the event. The EKG channel is used to record ECG data. This data format does not fit well with the DL structure, so we segment the EEG data into 0.5-second epochs. Because the sample rate is 256 Hz and there are 15 channels, each epoch can be represented as a matrix of 128 samples by 15 channels. We label the DROWS state as ‘0’ and TAV3 as ‘1’. In total, 28,176 epochs are obtained, including 18,672 DROWS epochs and 9504 TAV3 epochs, as shown in Table 2. Our purpose is to train a classifier using these epochs to better predict the cognitive performance. The last step in the preprocessing is the normalization of the EEG data to reduce the effect of inter-subject differences. We adopt the z-score function for normalization, which can be denoted by:

$$X' = \frac{X - \mu}{\sigma} \tag{1}$$

where $X$ is the amplitude of the raw EEG data and $X'$ is the value after normalization. $\mu$ and $\sigma$ are the mean and standard deviation of all EEG data, respectively.

Table 2.

DROWS and TAV3 epochs of subjects

| Subject | Samples of DROWS | Samples of TAV3 | Total |
|---|---|---|---|
| s1 | 2136 | 974 | 3110 |
| s2 | 1970 | 784 | 2754 |
| s3 | 1824 | 994 | 2818 |
| s4 | 1648 | 1102 | 2750 |
| s5 | 1798 | 894 | 2692 |
| s6 | 2136 | 908 | 3044 |
| s7 | 1906 | 1078 | 2984 |
| s8 | 1822 | 1004 | 2826 |
| s9 | 1930 | 976 | 2906 |
| s10 | 1502 | 790 | 2292 |
| Total | 18672 | 9504 | 28176 |
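As a quick check on Table 2, the short script below recomputes the totals and derives two figures that help put the reported accuracies in context: the accuracy of a trivial classifier that always predicts the majority class, and the total EEG duration implied by 0.5 s epochs. The majority-class baseline is not reported in the paper; it is added here only as a reading aid.

```python
# (DROWS epochs, TAV3 epochs) per subject, copied from Table 2.
EPOCHS = {
    "s1": (2136, 974), "s2": (1970, 784), "s3": (1824, 994), "s4": (1648, 1102),
    "s5": (1798, 894), "s6": (2136, 908), "s7": (1906, 1078), "s8": (1822, 1004),
    "s9": (1930, 976), "s10": (1502, 790),
}

total_drows = sum(d for d, _ in EPOCHS.values())
total_tav3 = sum(t for _, t in EPOCHS.values())
total = total_drows + total_tav3

# Accuracy of a trivial classifier that always predicts the majority class (DROWS).
majority_baseline = total_drows / total

# Each epoch is 0.5 s long, so the data set covers this many hours of EEG.
hours_of_eeg = total * 0.5 / 3600

print(total_drows, total_tav3, total)
print(f"majority baseline: {majority_baseline:.1%}, EEG duration: {hours_of_eeg:.2f} h")
```

Because DROWS epochs outnumber TAV3 epochs by roughly two to one, always predicting DROWS would already be right about two thirds of the time.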

Methods

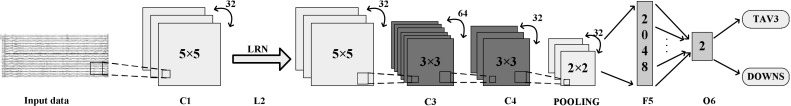

Construction of EEG-Conv classifier

The architecture of our CNN-based EEG classifier (hereinafter referred to as EEG-Conv) is illustrated in Fig. 3. It contains eight layers: the input layer, three convolutional layers, a pooling layer, an LRN (Local Response Normalization) layer, a fully connected layer and the output layer.

Fig. 3.

The overall architecture of EEG-Conv classifier

Conv1 The input data is a matrix of . The first convolutional layer convolves the input with a kernel of . The stride is 1, and the bias is set to 0. After convolution, 32 feature maps of size are generated.

LRN2 A local response normalization layer after Conv1 applies local normalization to the output of the previous layer. This type of normalization implements a form of lateral inhibition inspired by a phenomenon observed in biological neurons, creating competition for large activities among neuron outputs calculated using different kernels. In the EEG-Conv classifier, we employ the local response normalization layer to inhibit outputs from activation functions and highlight the peak value of the corresponding local region. In the EEG domain, the highlighted high-frequency features are more important for detecting driver cognitive states.

Conv3 The second convolutional layer convolves data generated by the previous layer with kernel of . The stride is 1 and the initial bias is set to 0. After this convolution, 64 feature maps of size are generated.

Conv4 The third convolutional layer convolves data generated by the previous layer with kernel of . The stride is 1 and the initial bias is set to 0. After this convolution, 32 feature maps of size are generated.

Pool5 A max pooling layer is placed after the third convolutional layer. The kernel size in Pool5 is and the stride is 2. The pooling layer lowers the computational burden by reducing the number of connections between the hidden layers in EEG-Conv. By stacking three convolutional layers and a pooling layer, a relative concise EEG signal feature representation is extracted.

FC6 The fully connected layer aims to perform high level reasoning on EEG signal feature representation. FC6 takes all neurons in Pool5 and connects them to every single neuron of current layer to generate global semantics of EEG signals. FC6 is composed of 2048 neurons. The dropout strategy is applied to prevent overfitting. The output of each hidden neuron in FC6 is set to 0 with probability 0.5. The dropout strategy forces EEG-Conv to learn more robust EEG signal features.

Out7 Logistic regression is put on top of the previous hidden layers as the output layer of the EEG-Conv classifier. A single logistic regression layer is itself a linear, probabilistic classifier. Detecting driver cognitive states is done by projecting data points onto a set of hyperplanes, the distances to which reflect a class membership probability. Out7 is parameterized by a weight matrix $W$ and a bias vector $b$. The logistic regression layer can be calculated by:

$$P(Y = i \mid x, W, b) = \frac{e^{W_i x + b_i}}{\sum_j e^{W_j x + b_j}} \tag{2}$$

where $P(Y = i \mid x, W, b)$ is the output of layer Out7 for class $i$ and $x$ is the input from the preceding layer. The output of the EEG-Conv classifier is then generated by taking the argmax of the vector whose i-th element is $P(Y = i \mid x, W, b)$. It can be calculated by Eq. 3, where the output result is denoted by $y_{pred}$.

$$y_{pred} = \arg\max_i P(Y = i \mid x, W, b) \tag{3}$$
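The output layer described above, a softmax (multinomial logistic regression) followed by an argmax, can be sketched as follows. The weights, bias and three-feature input are hypothetical toy values; in EEG-Conv the input would be the 2048 activations of FC6.

```python
import math


def softmax_output(x, W, b):
    """Return P(Y=i | x, W, b) for every class i of a softmax (multinomial logistic) layer."""
    scores = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]
    shift = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x, W, b):
    """Return the index of the most probable class (the argmax step)."""
    probs = softmax_output(x, W, b)
    return max(range(len(probs)), key=probs.__getitem__)


# Toy 3-feature input and hypothetical weights; class 0 = DROWS, class 1 = TAV3.
x = [0.2, -1.0, 0.5]
W = [[0.1, 0.4, -0.3], [-0.2, -0.5, 0.6]]
b = [0.0, 0.1]
probs = softmax_output(x, W, b)
label = predict(x, W, b)
print(probs, label)
```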

Activation function Each neuron in a deep CNN combines a linear part (an affine transformation unit) with a nonlinearity (the activation function). Choosing proper activation functions according to EEG signal domain knowledge is very important for the performance of the network. An activation function should satisfy the following requirements: nonlinearity, saturability, continuity, smoothness and monotonicity. The nonlinear activation function is generally chosen to be the sigmoid function, the tanh function, or the ReLU (Rectified Linear Unit) function. We chose ReLU as the activation function in the convolutional layers and the fully connected layer because of the following advantages: 1) it is more efficient than the sigmoid or tanh functions; 2) it induces sparsity in the hidden units and allows the EEG classifier to easily obtain sparse brain signal feature representations. The ReLU function used in EEG-Conv is defined as:

$$a_{i,j,k} = \max(z_{i,j,k}, 0) \tag{4}$$

where $z_{i,j,k}$ is the input of the activation at location (i, j) on the k-th channel. ReLU works better than the logistic sigmoid and tanh functions in our experiments.
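The sparsity argument for ReLU can be made concrete with a small comparison. The pre-activation values below are arbitrary illustrative numbers, not taken from the trained network.

```python
import math


def relu(z):
    """Rectified linear unit: pass positive inputs through, clamp the rest to zero."""
    return max(z, 0.0)


def sigmoid(z):
    """Logistic sigmoid, shown only for comparison."""
    return 1.0 / (1.0 + math.exp(-z))


pre_activations = [-2.0, -0.5, 0.0, 0.5, 2.0]
relu_out = [relu(z) for z in pre_activations]
sigmoid_out = [sigmoid(z) for z in pre_activations]
tanh_out = [math.tanh(z) for z in pre_activations]

# Fraction of exactly-zero outputs: ReLU silences every non-positive input,
# whereas sigmoid never outputs zero and tanh does so only at z = 0.
relu_sparsity = sum(a == 0.0 for a in relu_out) / len(relu_out)
print(relu_out, relu_sparsity)
```

ReLU also needs only a comparison per unit, while sigmoid and tanh each need an exponential, which is one way to read the efficiency claim.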

Training of the EEG-Conv classifier

Training the EEG-Conv classifier can be regarded as solving a non-convex optimization problem, because the loss function is not a convex function of the network parameters. Hence it is necessary to combine several strategies in the training phase. In this subsection we describe the practical strategies used during training. Choosing a proper learning rate brings the learned weights as close as possible to the global optimal solution, and the dropout method is used to prevent overfitting.

Learning rate The BP (back propagation) algorithm provides an approximation of the trajectory calculated by using the steepest descent in the weight parameter space. The learning rate is initialized to 0.01 at the beginning, and changed throughout the training phase. Step strategy is adopted so that the learning rate is adjusted after a fixed number of iterations in the training phase to prevent the network from oscillating. The learning rate is adjusted according to the below formula:

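$$lr = lr_{0} \times \gamma^{\lfloor iter / stepsize \rfloor} \tag{5}$$

where $lr$ is the current learning rate, $lr_{0}$ is the initial learning rate (0.01), $\gamma$ is a fixed hyperparameter which is set to 0.1 in the experiments, $iter$ is the current number of iterations, $stepsize$ indicates the number of iterations after which the learning rate is changed (it has been set to 20000 in our experiments), and $\lfloor \cdot \rfloor$ is the rounding-down operation.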
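The step strategy can be written as a one-line function using the values quoted in the text (initial rate 0.01, decay factor 0.1, step size 20000). The function and parameter names are illustrative.

```python
def step_learning_rate(iteration, base_lr=0.01, gamma=0.1, stepsize=20000):
    """Step decay: multiply base_lr by gamma once every `stepsize` iterations."""
    return base_lr * gamma ** (iteration // stepsize)


for it in (0, 19999, 20000, 45000):
    print(it, step_learning_rate(it))
```

The rate stays at 0.01 for the first 20000 iterations, then drops by a factor of ten at each further multiple of 20000.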

Dropout In order to prevent overfitting, we apply the dropout strategy to the fully connected layer FC6. That is, we drop the neurons of FC6 with probability 0.5. The dropout strategy prevents the neurons in FC6 from co-adapting with other nodes during the training phase, since any hidden node may be discarded. Each time an input is presented, the EEG-Conv classifier samples a different architecture. This training strategy reduces complex co-adaptations of neurons, since a neuron cannot rely on the presence of particular other neurons.
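A minimal sketch of dropout with probability 0.5 on a 2048-unit layer is given below. It uses the common "inverted" variant, in which surviving activations are rescaled by 1/(1 - p) during training; the paper does not state which variant was used, so the rescaling is an assumption.

```python
import random


def dropout(activations, p_drop=0.5, training=True, rng=random):
    """Inverted dropout: zero each unit with probability p_drop and rescale the survivors."""
    if not training:
        return list(activations)  # at test time every unit is kept unchanged
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)          # fixed seed so the example is repeatable
fc6 = [1.0] * 2048              # stand-in for the 2048 FC6 activations
dropped = dropout(fc6, rng=rng)
print(dropped.count(0.0), "of", len(dropped), "units dropped")
```

Calling the function again draws a new random mask, which is the sense in which each input sees a different sampled architecture.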

Improved EEG classifier with residual learning

Our EEG-Conv classifier has good prediction accuracy on the test set. To further improve accuracy, we develop an EEG-Conv-R classifier by combining EEG-Conv with residual learning.

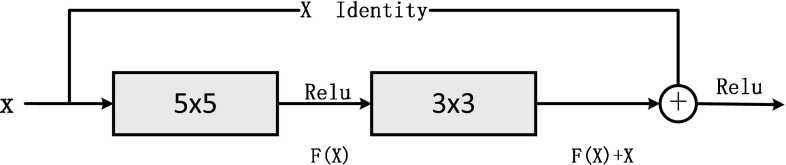

Residual learning explicitly reformulates the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions (He et al. 2016; Qin et al. 2018). In other words, the residual layer learns the change, or perturbation, relative to its input. As shown in Fig. 4, we add a shortcut from the input X, and the output of the stacked layers is superimposed upon the input. Hence the output of the block becomes F(X) + X, and what the network weight parameters need to learn is F(X). In the EEG-Conv-R classifier, the residual block is defined as:

$$Y = F(X) + X \tag{6}$$

where X and Y are the input and output vectors of the layers considered. The function F(X) describes the residual mapping to be learned.
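The shortcut connection can be illustrated with a toy residual block. The residual function here is a hypothetical scaled ReLU that stands in for the stacked convolutional layers; it is not the layer configuration used in EEG-Conv-R.

```python
def relu(z):
    return max(z, 0.0)


def residual_block(x, residual_fn):
    """Return Y = F(X) + X, where residual_fn plays the role of F."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        raise ValueError("F(X) must have the same shape as X for the identity shortcut")
    return [f + xi for f, xi in zip(fx, x)]


def toy_residual_fn(x, weight=0.1):
    """Stand-in for the stacked convolutions: a scaled ReLU (hypothetical)."""
    return [weight * relu(xi) for xi in x]


x = [1.0, -2.0, 3.0]
y = residual_block(x, toy_residual_fn)
# If F outputs zeros, the block reduces to the identity mapping.
identity = residual_block(x, lambda v: [0.0] * len(v))
print(y, identity)
```

The second call shows why such a block is easy to optimize: when the residual function outputs zeros, the input passes through unchanged.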

Fig. 4.

The residual block

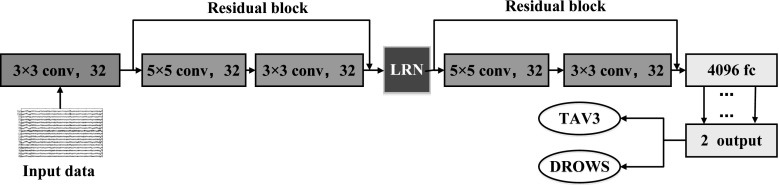

Currently we add two residual blocks to EEG-Conv classifier to improve its performance. The architecture of EEG-Conv-R classifier is shown in Fig. 5.

Fig. 5.

The architecture of EEG-Conv-R classifier with residual learning

Results and discussion

Here we evaluate the predictive performance of EEG-Conv and EEG-Conv-R in both intra-subject and inter-subject settings. Intra-subject prediction means that the training and test data come from the same subject, whereas inter-subject prediction means that the training and test data come from different subjects.

Intra-subject classification performance

We randomly take 80% of the TAV3 and DROWS samples of each subject to form that subject’s training set, and use the remaining 20% as that subject’s test set. To avoid loss of generality, the TAV3 and DROWS samples are drawn at random for each subject. During the training step, each subject’s EEG data are used to train individual classification models. Thus, each subject’s training set is used as input to train EEG-Conv and EEG-Conv-R, and each subject’s test set is used to test both classifiers. Furthermore, we randomly extract 10% of the samples from each training set as the validation set for cross validation.
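The per-subject split can be sketched as below. Two points are assumptions: the validation samples are taken out of the 80% training portion (the text does not say whether they are also kept for training), and the split is a plain random shuffle without class stratification.

```python
import random


def split_subject(epochs, test_frac=0.2, val_frac=0.1, seed=0):
    """Shuffle one subject's epochs and return (train, validation, test) lists."""
    rng = random.Random(seed)
    shuffled = list(epochs)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_frac)
    test, train_pool = shuffled[:n_test], shuffled[n_test:]
    n_val = round(len(train_pool) * val_frac)  # assumption: validation comes out of training
    val, train = train_pool[:n_val], train_pool[n_val:]
    return train, val, test


# Subject s1 has 3110 epochs in Table 2; integers stand in for the epoch matrices.
train, val, test = split_subject(range(3110))
print(len(train), len(val), len(test))
```

For the inter-subject experiment the same function could be applied once to the pooled epochs of all subjects.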

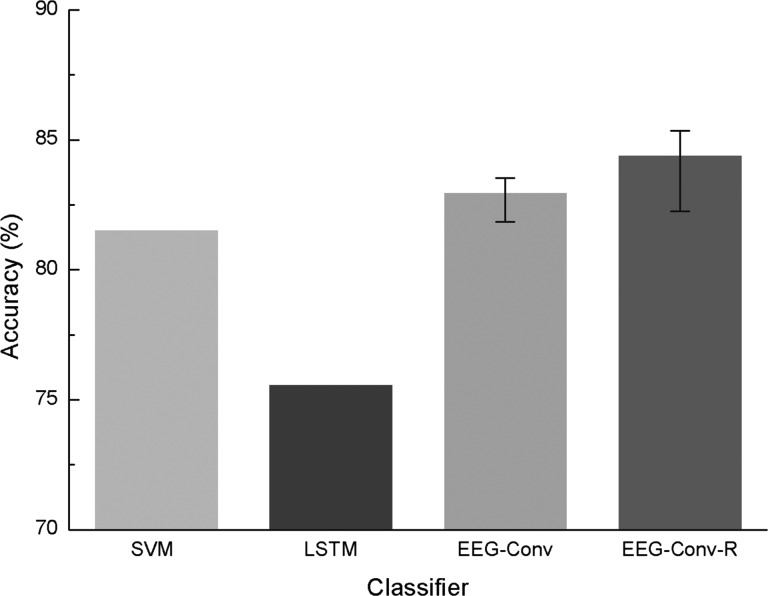

The experimental results are shown in Fig. 6. For intra-subject mental state detection, EEG-Conv and EEG-Conv-R have similar performance: their average accuracy reaches 91.788 and 92.682%, respectively.

Fig. 6.

Accuracy of EEG-Conv, EEG-Conv-R, LSTM and SVM in intra-subject test

As a control, a common LSTM (long short-term memory) neural network model and an SVM classifier are used for performance comparison with our proposed models. For SVM, a Gaussian kernel function is used, the penalty factor is set to 9.6, and probability estimation is not enabled. For LSTM, the number of stacked layers is 2, the time step is set to 128, the learning rate is 0.01, and stochastic gradient descent is used for optimization. In addition, we refer to Chang and Lin (2011) and Hochreiter and Schmidhuber (1997) for the training of SVM and LSTM, respectively.

LSTM yields an average accuracy of 85.132%, lower than EEG-Conv and EEG-Conv-R. SVM has an average accuracy of 88.070% with CSP (common spatial pattern) feature extraction. Among the 10 subjects, SVM outperforms EEG-Conv and EEG-Conv-R on only two, namely s2 and s4. The average classification accuracy of the four models is shown in Table 3.

Table 3.

Average classification accuracy of EEG-Conv, EEG-Conv-R, LSTM and SVM in intra-subject

| Model | EEG-Conv | EEG-Conv-R | SVM | LSTM |
|---|---|---|---|---|
| Average accuracy (%) | 91.788 | 92.682 | 88.070 | 85.132 |

The above results show that, despite the significant variance of EEG signals among different subjects, our proposed models, especially EEG-Conv-R, learn the features of EEG data better and yield excellent intra-subject classification results.

We also perform variance analysis of SVM, LSTM, EEG-Conv, and EEG-Conv-R in the intra-subject setting, as shown in Table 4. The stability of EEG-Conv and EEG-Conv-R is close to or slightly lower than that of SVM, but much higher than that of LSTM.

Table 4.

Variance analysis of EEG-Conv, EEG-Conv-R, SVM and LSTM in intra-subject

| Model | EEG-Conv | EEG-Conv-R | SVM | LSTM |
|---|---|---|---|---|
| Variance | 0.0028 | 0.0046 | 0.0023 | 0.0109 |

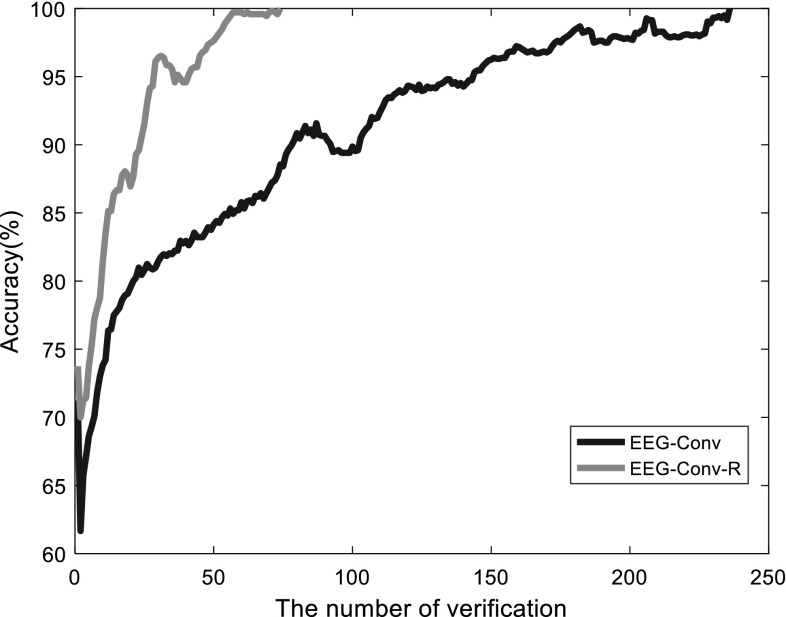

Furthermore, we use cross validation to compare EEG-Conv and EEG-Conv-R, as shown in Fig. 7. The accuracy of EEG-Conv-R quickly approaches 100%, although its validation accuracy fluctuates slightly. The main reason for the fluctuations is the insufficient number of samples for each subject (between 2000 and 3200 samples per subject, as shown in Table 2). Nevertheless, the residual blocks in the EEG-Conv-R classifier make training much faster than for EEG-Conv. Overall, EEG-Conv-R exhibits a significant improvement in training speed over EEG-Conv.

Fig. 7.

Accuracy of EEG-Conv-R versus EEG-Conv during the training process

Inter-subject classification performance

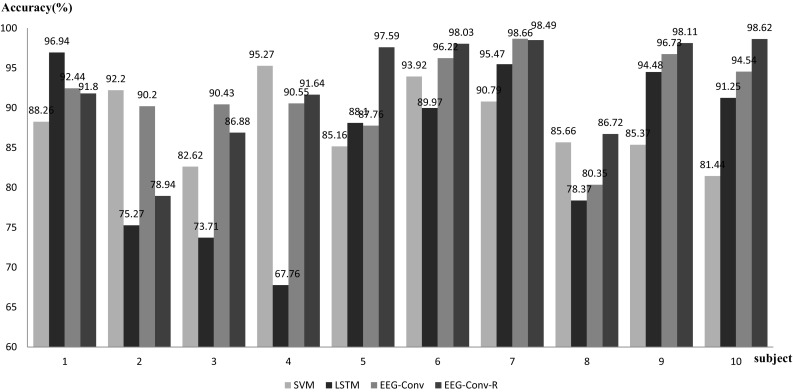

To test the inter-subject classification performance of EEG-Conv and EEG-Conv-R, we mix the TAV3 and DROWS samples of all the subjects together. As before, 80% of the samples are extracted as the training set, and the remaining 20% as the test set. We also randomly choose 10% of the training set for cross validation.

As shown in Fig. 8, our EEG-Conv and EEG-Conv-R classifiers achieve higher classification accuracy than SVM and LSTM for inter-subject mental state recognition. The average accuracy of our EEG-Conv and EEG-Conv-R is 82.95 and 84.38%, respectively, while those of SVM and LSTM are 81.85 and 75.55%, respectively. This result suggests that our methods generalize better to the mental state detection among different subjects.

Fig. 8.

Accuracy of SVM, LSTM, EEG-Conv and EEG-Conv-R on inter-subject

The convergence of EEG-Conv-R versus EEG-Conv

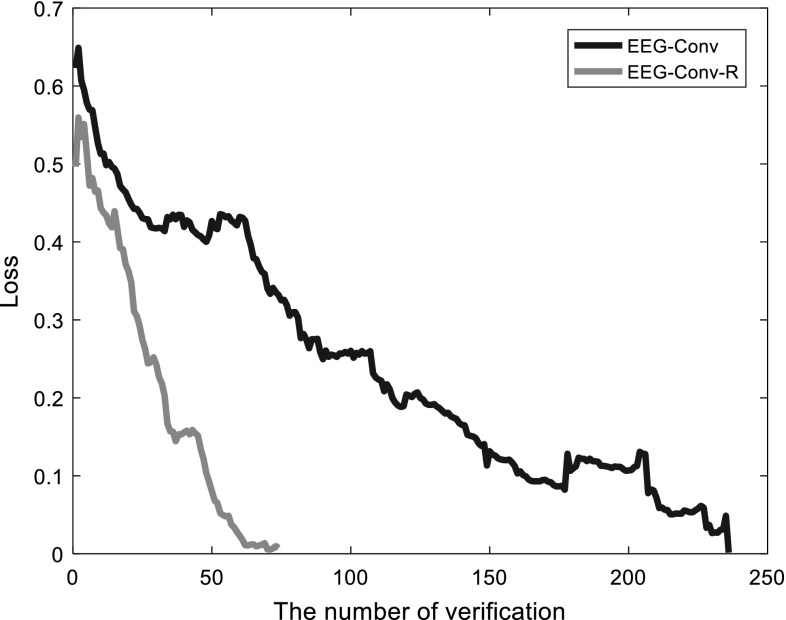

Figure 9 depicts the decrease in the loss of EEG-Conv and EEG-Conv-R during the training process. The loss of EEG-Conv decreases slowly and shows larger fluctuations; EEG-Conv needs nearly 250 batches to reach convergence. EEG-Conv-R converges quickly, achieving better convergence than EEG-Conv within 70 batches. That is, it takes less time to train EEG-Conv-R. The underlying reason is that EEG-Conv-R adds two convolution layers, each with its own convolution kernel, to the depth of the model, and introduces the idea of residual learning into the model design. The added convolution kernels give a greater receptive field and cover more parameters, which reduces the number of convolution layers needed. Residual learning is effective for non-linear EEG signals and can capture the difference between the output of the basic map and the real-world Gaussian response, so that the output of EEG-Conv-R is closer to the true value and disturbances of the signals are detected more easily. In our experiments, the learned residual function usually has a small response and fits faster.

Fig. 9.

The convergence comparison between EEG-Conv and EEG-Conv-R

Conclusion

In this paper, we have described two deep learning-based models, EEG-Conv and EEG-Conv-R, to predict the mental state of drivers. A 5-layer convolutional neural network is built to classify the mental states related to driver fatigue, and both classifiers are tested on raw EEG data. The classification performance of these two models is compared with that of the classical SVM classifier and the LSTM deep learning model on the same EEG data.

Our experimental results suggest the following findings: 1) for intra-subject mental state detection, both EEG-Conv and EEG-Conv-R achieve better classification performance than traditional classifiers such as SVM and LSTM; 2) for inter-subject mental state detection, EEG-Conv-R performs better than EEG-Conv and the LSTM- and SVM-based classifiers; 3) EEG-Conv-R converges faster than EEG-Conv, and takes less time for feature extraction at the training stage.

However, the insufficient number of samples for each subject limits the performance improvement of EEG-Conv-R. We will collect more EEG data to further validate EEG-Conv-R. Currently, we study only binary classification. In future work, we will apply the proposed deep learning methods to multi-label classification of EEG signals.

Acknowledgements

The authors would like to thank the anonymous referees for their valuable comments and helpful suggestions. The work is supported by the National Natural Science Foundation of China under Grant Nos. {61671193, 61633010, 61473110, 61502129}, the Key Research and Development Plan of Zhejiang Province under Grant No. 2018C04012, the Zhejiang Provincial Natural Science Foundation of China under Grant No. LQ16F020004, and the Science and Technology Platform Construction Project of the Fujian Science and Technology Department under No. 2015Y2001.

References

- Abdel-Hamid O, Mohamed AR, Jiang H, Deng L, Penn G, Yu D. Convolutional neural networks for speech recognition. IEEE/ACM Trans Audio Speech Lang Process. 2014;22(10):1533–1545. doi: 10.1109/TASLP.2014.2339736.

- Ahn S, Nguyen T, Jang H, Kim JG, Jun SC. Exploring neuro-physiological correlates of drivers’ mental fatigue caused by sleep deprivation using simultaneous EEG, ECG, and FNIRS data. Front Hum Neurosci. 2016;10:219. doi: 10.3389/fnhum.2016.00219.

- Bornas X, Fiolveny A, Balle M, Morillasromero A, Tortellafeliu M. Long range temporal correlations in EEG oscillations of subclinically depressed individuals: their association with brooding and suppression. Cognit Neurodyn. 2015;9(1):53–62. doi: 10.1007/s11571-014-9313-1.

- Brookhuis KA, De WD. The use of psychophysiology to assess driver status. Ergonomics. 1993;36(9):1099. doi: 10.1080/00140139308967981.

- Cecotti H, Graser A. Convolutional neural networks for P300 detection with application to brain-computer interfaces. IEEE Trans Pattern Anal Mach Intell. 2011;33(3):433–445. doi: 10.1109/TPAMI.2010.125.

- Chang CC, Lin CJ. LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol. 2011;2(3):1–27. doi: 10.1145/1961189.1961199.

- Chen LL, Zhao Y, Ye PF, Zhang J, Zou JZ. Detecting driving stress in physiological signals based on multimodal feature analysis and kernel classifiers. Expert Syst Appl. 2017;85(C):279–291. doi: 10.1016/j.eswa.2017.01.040.

- Correa AG, Orosco L, Laciar E. Automatic detection of drowsiness in EEG records based on multimodal analysis. Med Eng Phys. 2014;36(2):244. doi: 10.1016/j.medengphy.2013.07.011.

- Domhan T, Springenberg JT, Hutter F (2015) Speeding up automatic hyperparameter optimization of deep neural networks by extrapolation of learning curves. In: IJCAI, pp 3460–3468

- Fu R, Wang H. Detection of driving fatigue by using noncontact EMG and ECG signals measurement system. Int J Neural Syst. 2014;24(03):1450006. doi: 10.1142/S0129065714500063.

- Fu RR, Wang H, Zhao WB. Dynamic driver fatigue detection using hidden Markov model in real driving condition. Expert Syst Appl. 2016;63(C):397–411. doi: 10.1016/j.eswa.2016.06.042.

- Gravier A, Quek C, Duch W, Wahab A, Gravier-Rymaszewska J. Neural network modelling of the influence of channelopathies on reflex visual attention. Cognit Neurodyn. 2016;10(1):49–72. doi: 10.1007/s11571-015-9365-x.

- Hajinoroozi M, Mao Z, Huang Y (2016) Prediction of driver’s drowsy and alert states from EEG signals with deep learning. In: IEEE international workshop on computational advances in multi-sensor adaptive processing, pp 493–496

- He KM, Zhang XY, Ren SQ, Sun J (2016) Deep residual learning for image recognition. In: Computer vision and pattern recognition, pp 770–778

- Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9(8):1735–1780. doi: 10.1162/neco.1997.9.8.1735.

- Hu JF. Automated detection of driver fatigue based on AdaBoost classifier with EEG signals. Front Comput Neurosci. 2017;11:72. doi: 10.3389/fncom.2017.00072.

- Hu SH, Zheng GT. Driver drowsiness detection with eyelid related parameters by support vector machine. Expert Syst Appl. 2009;36(4):7651–7658. doi: 10.1016/j.eswa.2008.09.030.

- Idogawa K. On the brain wave activity of professional drivers during monotonous work. Behaviormetrika. 2006;18(30):23–34. doi: 10.2333/bhmk.18.30_23.

- Jap BT, Lal S, Fischer P, Bekiaris E. Using EEG spectral components to assess algorithms for detecting fatigue. Expert Syst Appl. 2009;36(2):2352–2359. doi: 10.1016/j.eswa.2007.12.043.

- Jeong IC, Lee DH, Park SW, Ko JI, Yoon HR (2007) Automobile driver’s stress index provision system that utilizes electrocardiogram. In: Intelligent vehicles symposium, 2007 IEEE. IEEE, pp 652–656

- Jung TP, Makeig S, Humphries C, Lee TW, Mckeown MJ, Iragui V, Sejnowski TJ. Removing electroencephalographic artifacts by blind source separation. Psychophysiology. 2000;37(2):163–178. doi: 10.1111/1469-8986.3720163.

- Kar S, Bhagat M, Routray A. EEG signal analysis for the assessment and quantification of drivers fatigue. Transp Res Part F Traffic Psychol Behav. 2010;13(5):297–306. doi: 10.1016/j.trf.2010.06.006.

- Khushaba RN, Kodagoda S, Lal S, Dissanayake G. Driver drowsiness classification using fuzzy wavelet-packet-based feature-extraction algorithm. IEEE Trans Biomed Eng. 2010;58(1):121–131. doi: 10.1109/TBME.2010.2077291.

- Kong WZ, Zhou ZP, Jiang B, Babiloni F, Borghini G. Assessment of driving fatigue based on intra/inter-region phase synchronization. Neurocomputing. 2017;219(5):474–482. doi: 10.1016/j.neucom.2016.09.057.

- Lal SK, Craig A. A critical review of the psychophysiology of driver fatigue. Biol Psychol. 2001;55(3):173–194. doi: 10.1016/S0301-0511(00)00085-5.

- Lawhern VJ, Solon AJ, Waytowich NR, Gordon SM, Hung CP, Lance BJ (2016) EEGNet: a compact convolutional network for EEG-based brain-computer interfaces. arXiv preprint arXiv:1611.08024

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- Liang SF, Wang HC, Chang WL. Combination of EEG complexity and spectral analysis for epilepsy diagnosis and seizure detection. EURASIP J Adv Signal Process. 2010;2010(1):1–15.

- Lin CT, Huang KC, Chao CF, Chen JA, Chiu TW, Ko LW, Jung TP. Tonic and phasic EEG and behavioral changes induced by arousing feedback. NeuroImage. 2010;52(2):633–642. doi: 10.1016/j.neuroimage.2010.04.250.

- Lin CT, Wang YK, Chen SA. An EEG-based brain-computer interface for dual task driving detection. Neurocomputing. 2014;129(4):85–93.

- Manor R, Geva AB. Convolutional neural network for multi-category rapid serial visual presentation BCI. Front Comput Neurosci. 2015;9:146. doi: 10.3389/fncom.2015.00146.

- Mu ZD, Hu JF, Min JL. Driver fatigue detection system using electroencephalography signals based on combined entropy features. Appl Sci. 2017;7(2):150. doi: 10.3390/app7020150.

- Page A, Shea C, Mohsenin T (2016) Wearable seizure detection using convolutional neural networks with transfer learning. In: IEEE international symposium on circuits and systems (ISCAS). IEEE, pp 1086–1089

- Pal NR, Chuang CY, Ko LW, Chao CF, Jung TP, Liang SF, Lin CT. EEG-based subject- and session-independent drowsiness detection: an unsupervised approach. EURASIP J Adv Signal Process. 2008;2008(1):519480. doi: 10.1155/2008/519480.

- Puanhvuan D, Khemmachotikun S, Wechakarn P, Wijarn B, Wongsawat Y. Navigation-synchronized multimodal control wheelchair from brain to alternative assistive technologies for persons with severe disabilities. Cognit Neurodyn. 2017;11(2):117–134. doi: 10.1007/s11571-017-9424-6.

- Qin FW, Gao NN, Peng Y, Wu ZZ, Shen SY, Grudtsin A. Fine-grained leukocyte classification with deep residual learning for microscopic images. Comput Methods Programs Biomed. 2018;162(8):243–252. doi: 10.1016/j.cmpb.2018.05.024.

- Raghu S, Sriraam N, Kumar GP. Classification of epileptic seizures using wavelet packet log energy and norm entropies with recurrent Elman neural network classifier. Cognit Neurodyn. 2017;11(1):51–66. doi: 10.1007/s11571-016-9408-y.

- Sahayadhas A, Sundaraj K, Murugappan M. Detecting driver drowsiness based on sensors: a review. Sensors. 2012;12(12):16937. doi: 10.3390/s121216937.

- Sakhavi S, Guan CT, Yan SC (2015) Parallel convolutional-linear neural network for motor imagery classification. In: Signal processing conference (EUSIPCO). IEEE, pp 2736–2740

- Schirrmeister RT, Springenberg JT, Fiederer LDJ, Glasstetter M, Eggensperger K, Tangermann M, Hutter F, Burgard W, Ball T (2017) Deep learning with convolutional neural networks for brain mapping and decoding of movement-related information from the human EEG. arXiv preprint arXiv:1703.05051

- Schoenberg PLA, Speckens AEM. Multi-dimensional modulations of alpha and gamma cortical dynamics following mindfulness-based cognitive therapy in major depressive disorder. Cognit Neurodyn. 2015;9(1):13–29. doi: 10.1007/s11571-014-9308-y.

- Stein D, Orbach ISM, Har ED, Yaruslasky A, Roth D, Meged S, Apter A. EEG alpha band synchrony predicts cognitive and motor performance in patients with ischemic stroke. Behav Neurol. 2013;26(3):187. doi: 10.1155/2013/109764.

- Tang ZC, Li C, Sun SQ. Single-trial EEG classification of motor imagery using deep convolutional neural networks. Opt Int J Light Electron Opt. 2017;130:11–18. doi: 10.1016/j.ijleo.2016.10.117.

- Thodoroff P, Pineau J, Lim A (2016) Learning robust features using deep learning for automatic seizure detection. In: Machine learning for healthcare conference, pp 178–190

- Tsuchida A, Bhuiyan M, Oguri K (2009) Estimation of drowsiness level based on eyelid closure and heart rate variability. In: EMBC 2009 international conference of the IEEE Engineering in Medicine and Biology Society, 2009, pp 2543–2546

- Wali MK, Murugappan M, Ahmmad B. Wavelet packet transform based driver distraction level classification using EEG. Math Probl Eng. 2013;2013(3):841–860.

- Zeng H, Dai GJ, Kong WZ, Chen FY, Wang LY. A novel nonlinear dynamic method for stroke rehabilitation effect evaluation using EEG. IEEE Trans Neural Syst Rehabil Eng. 2017;25(12):2488–2497. doi: 10.1109/TNSRE.2017.2744664.

- Zhang JH, Li SN, Wang RB. Pattern recognition of momentary mental workload based on multi-channel electrophysiological data and ensemble convolutional neural networks. Front Neurosci. 2017;11:310. doi: 10.3389/fnins.2017.00310.

- Zhang JH, Cui XQ, Li JR, Wang RB. Imbalanced classification of mental workload using a cost-sensitive majority weighted minority oversampling strategy. Cognit Technol Work. 2017;19(4):633–653. doi: 10.1007/s10111-017-0447-x.

- Zhao CL, Zheng CX, Zhao M, Liu JP. Physiological assessment of driving mental fatigue using wavelet packet energy and random forests. Am J Biomed Sci. 2010;2(3):262–274. doi: 10.5099/aj100300262.