Abstract

Background:

The institutions which comprise the Clinical and Translational Science Award consortium and the National Center for Advancing Translational Sciences continue to explore and develop community engaged research strategies and to study the role of community academic partnerships in advancing the science of community engagement.

Objectives:

To explore Clinical and Translational Science institutions (CTSA) in relation to an Institute of Medicine recommendation that community engagement occur in all stages of translational research and be defined and evaluated consistently.

Methods:

A sequential multi-methods study starting with an online pilot survey, followed by interviews with survey respondents and site informants. A revised survey was sent to the community engagement and evaluation leads at each CTSA institution, requesting a single institutional response about definitions, indicators and metrics of community engagement and community-engaged research.

Results:

A plurality of CTSA institutions selected the definition of community engagement from the Principles of Community Engagement. While respondents claim that unique institutional priorities create barriers to developing shared metrics, responses indicate an overall lack of attention to the development and deployment of metrics to assess community engagement in and contributions to research.

Conclusions:

Although definitions of community engagement differ among CTSAs, there appear to be more similarities than differences in the indicators and measures tracked and reported on across all definitions, perhaps due to commonalities among program infrastructures and goals. Metrics will likely need to be specific to translational research stages. Assessment of community engagement within translational science will require increased institutional commitment.

Keywords: Community engagement, community based participatory research, community health partnerships, metrics and outcomes, outcomes research evaluation, Clinical and translational science

The role of community voices within clinical research and translational science continues to evolve for institutions participating in the National Institutes of Health (NIH) Clinical and Translational Science consortium (CTSA). Historically, the Centers for Disease Control and Prevention made federal funds available for community capacity building in the 1980s through the Racial/Ethnic Approaches to Community Health (REACH) program. In 1995, the National Institute of Environmental Health Sciences launched a program to fund community-based participatory research (CBPR). Other NIH Institutes and Centers, most notably the National Center (later the Institute) for Minority Health and Health Disparities, provided funding in support of community-academic research partnerships, augmenting support from the CDC and private foundations.1 Encouraged by a 1998 Institute of Medicine call to formalize “public” participation in the NIH funding allocation process,2 the NIH established the Director’s Council of Public Representatives. The now-defunct Council provided input on NIH priorities, on allocating research dollars and on public participation.3 The Council’s Public in Research Work Group recommended the NIH adopt a community-engaged research framework for the CTSA program.4,5

A 2013 Institute of Medicine report commented directly on the role of community voices within clinical research and translational science. The report recommended the CTSA consortium engage communities across the full spectrum of translational research. The IOM encouraged development of new community-academic research partnerships that would focus on the discovery and assessment of new treatments and procedures characteristic of earlier stage translational research. The report also recommended developing a broad definition of community engagement and consistent use of the definition by NCATS in funding announcements and CTSA program communications.6 Such a definition would advance science by serving as a foundation for assessing community involvement in research partnerships and in achieving a primary translational research outcome of improving community health.7

Over the past few decades, community-based participatory research (CBPR), a well-defined form of participatory social action that integrates community members as partners in prioritizing, developing and implementing research, emerged as the prominent approach to community-engaged research.8 CBPR recognizes community members as experts in their own right and as key participants in knowledge creation. Other community engagement approaches that share CBPR characteristics include community action research,9 participatory action research,10 and community-partnered participatory research.11 In addition to specific engagement methods, the intensity of engagement can be situated along a continuum from outreach through shared leadership. The continuum organizes increases in intensity as indicative of advances in the relational dynamics of community-academic partnerships.12 Productive partnerships should function as “communities of practice” recognizing, addressing and learning from challenges, barriers and successes across research questions and contexts.13

This manuscript reports on a survey of community engagement directors and evaluators at CTSA consortium institutions. The survey was designed to assess the consortium as a community of practice, specifically to learn how CTSA institutions define and measure community engagement and community-engaged research (CEnR). Reporting on the survey responses provides insights into Academic Health and Science Center perspectives on their community engagement activities and on how to define and measure community engagement and community-engaged research.

METHODS

This study was organized and conducted by a voluntary group of researchers and community partners associated with CTSA institutions. Many research team members were previously involved in developing a community engagement Logic Model, which articulated a common framework emerging at CTSA institutions.14 The study team represented multiple institutions and communities. Work was initially conducted through a series of regularly scheduled conference calls with agendas focusing discussion on identifying study domains and creating and refining the survey questions, data collection and data analysis, and overall study progress. The preparation of this manuscript largely occurred through email. All study team members could participate in any study activity and most contributed to the writing of this manuscript. Between April and October 2014, study team members variously contributed to the design of the survey, to implementing a pilot test, to analyzing responses and conducting key informant interviews with respondents to the pilot survey. These activities contributed to the development of a survey instrument, designed to address three ambitious goals:

identify definitions of community engagement and community-engaged research in use at CTSAs;

learn about the indicators and metrics of community engagement used by individual CTSAs; and

share ideas about how clinical research partnerships can advance the science of community engagement within the CTSA consortium.

The finalized survey was used to collect data in support of a descriptive, cross-sectional key informant study of all institutions within the CTSA consortium.

Participants and Sampling

Community engagement and evaluation program directors and managers at every CTSA were sent an email invitation in January 2015 to complete the online survey. This census of all sites (n=62) requested that the leaders of the community engagement and evaluation cores collaborate and submit one response to the survey per institution. While the respondents or key informants were prompted to identify their institution, no response was mandatory for advancing through the questions or submitting the survey. University of California team members downloaded the institutional responses into spreadsheet format from the Formstack website for analysis.

Instrument

A mixed-methods survey was created using Survey Monkey© to collect responses from participants. The survey was divided into five broad categories: (1) Definition of community engagement – participants were asked to provide the definitions that guided community engagement efforts at their CTSAs. (2) Representation of community stakeholders – the survey collected data on the breadth and range of community stakeholders with whom each CTSA institution was partnering on translational research efforts. (3) Process and outcome measures – responses indicated the types of data each hub institution was collecting to measure the operational progress and impact of its CTSA research enterprise. (4) Institutional transformation – the CTSA program sought to provoke a transformation in institutional approaches to clinical research. Following the IOM Report and the work of the NCATS Advisory Council Working Group on the IOM Report (May 2014), each CTSA was deemed a “hub.” Hubs would create and support collaboration within the institution; between the institution and local community organizations and environments; and with other CTSA institutions regionally and nationally. CTSA institutions managing a complex network of relationships were also expected to engage in self-reflective learning. The survey framed inquiry into institutional transformation by asking informants about similarities and differences in community engagement activities and goals between the Academic Health and Science Center and the CTSA hub. (5) Community advisory boards – individual CTSAs invest substantial human capital to develop Community Advisory Boards (CABs) for engaging community stakeholders in the research process.15–18 The survey sought responses related to the number, types, composition and purpose of community advisory boards at each CTSA.

Given the study’s focus on institutional definitions, metrics and infrastructure, the voluntary nature of providing a response to blast email invitations, the opportunity to respond anonymously and the absence of personal health information, institutional review board oversight of this study was not sought.

RESULTS

Respondents

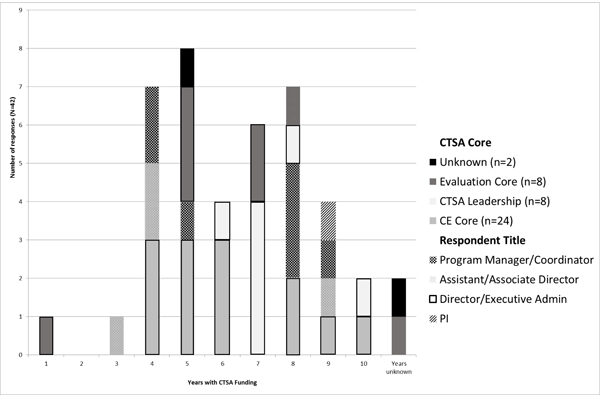

The 44 total responses included 39 from unique institutions. Three surveys were returned anonymously and two sites submitted two responses. The majority of the results are based upon a 68% response rate (n=42). Respondents indicated primary community engagement responsibilities (57%), evaluation responsibilities (19%) or other CTSA leadership positions (19%). Half of all respondents occupied leadership positions, primarily director or co-director. Two submissions identified multiple individuals as contributing to the response. No submission indicated community member involvement in responding to the survey (Figure 1). The survey team sought and received feedback from key informants.

Figure 1.

Survey Respondent Position/Title and number of years institution had CTSA funding
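The response tallies reported in the Respondents paragraph can be checked with a short calculation. The sketch below is illustrative only: the variable names are hypothetical, and it assumes that the three anonymous surveys are counted as separate institutions and that one submission from each of the two double-responding sites is set aside, which is one reading of how n=42 was reached.

```python
# Figures copied from the Respondents paragraph and the Participants and Sampling section.
sites_invited = 62          # census of all CTSA sites
unique_institutions = 39    # responses from identified, unique institutions
anonymous_responses = 3     # surveys returned without an institution name
extra_submissions = 2       # two sites each submitted a second response

# Assumption: total responses = identified + anonymous + second submissions.
total_responses = unique_institutions + anonymous_responses + extra_submissions

# Assumption: one response per institution is retained for most analyses.
analyzed_responses = total_responses - extra_submissions

response_rate = analyzed_responses / sites_invited
print(total_responses, analyzed_responses, f"{response_rate:.0%}")
# prints: 44 42 68%
```

Under these assumptions the arithmetic reproduces the 44 total responses, the analytic n of 42, and the 68% response rate stated in the text.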

Survey Part 1 – Definitions, Goals and Activities

Defining community engagement:

To create an initial context, the survey reproduced three widely used definitions of community engagement (i.e., NIH Director’s Council of Public Representatives (2010);4 Principles of Community Engagement (2011);10 and the Kellogg Foundation CBPR definition (2001)14) and the community engagement Logic Model.14 The first question asked respondents to select the definition that best described the community engagement program operations of their CTSA. Informants were also asked if their institution used a different definition of community engagement and to explain how their institutional approach compared to their selected definition. Informants could also select ‘no formal or agreed upon definition’ (Table 1).

Table 1.

Survey Framing: Definitions, Goals and Activities

1. Among the following definitions and alternative options, please select ONE BEST response describing your CTSA’s community engagement (CE) program operations.

| Definition | Count (#) | % Total |
|---|---|---|
| a. “Community Engagement in Research (CEnR) is a core element of any research effort involving communities which requires academic members to become part of the community and community members to become part of the research team, thereby creating a unique working and learning environment before, during, and after the research.” (from: NIH Council of Public Representatives, in Ahmed and Palermo, 2010). | 5 | 11% |
| b. “Community engagement is the process of working collaboratively with and through groups of people affiliated by geographic proximity, special interest, or similar situations to address issues affecting the well-being of those people... It often involves partnerships and coalitions that help mobilize resources and influence systems, change relationships among partners, and serve as catalysts for changing policies, programs, and practices” (from: Principles of Community Engagement, 2nd edition, 2011). | 17 | 39% |
| c. “A collaborative approach to research that equitably involves all partners in the research process and recognizes the unique strengths that each brings. CBPR begins with a research topic of importance to the community with the aim of combining knowledge and action for social change to improve community health and eliminate health disparities.” (from: W.K. Kellogg Community Scholar’s Program, 2001) | 5 | 11% |
| d. We don’t have a definition† | 7 | 16% |
| e. Our definition has some similarities but also differences from those listed above. If Our definition has some similarities…The differences are as follows: [Enter free text] | 10 | 23% |
| f. Other | 0 | 0% |
| Total | 44 | 100% |

Note: One respondent from the two institutions submitting two responses selected “We don’t have a definition.” Eliminating either institutional response changes the totals by approximately two percentage points. This table includes all responses.

† Two responses did not select a definition and were included in “d. We don’t have a definition.”

A plurality of CTSAs chose the definition from the Principles of Community Engagement: “Community engagement is the process of working collaboratively with and through groups of people affiliated by geographic proximity, special interest, or similar situations to address issues affecting the well‐being of those people.... It often involves partnerships and coalitions that help mobilize resources and influence systems, change relationships among partners, and serve as catalysts for changing policies, programs, and practices.”10 A smaller but equal number (n=5) chose the NIH COPR definition, the Kellogg Foundation CBPR definition19 or indicated they had no definition. Ten respondents selected “similarities but also differences” with all the definitions. Finally, one institution uniquely defined community and community-based organizations according to the NIH’s Program Announcement (PA)-08–074 ‘Community Participation in Research (R01)’ without selecting “other” as a response.

Providing respondents an opportunity to comment on their responses complicates survey analyses and reporting. A comment such as “Our definition does not go as far, or as ‘deep’ as the definition in the Principles of Community Engagement…, we do not feel that our partnerships and coalitions have, thus far, served as catalysts for changing policies, programs, and practices” makes it difficult to determine if that indicates a rejection or perhaps a lack of complete alignment with a particular definition. Similarly, another respondent explained they “may or may not include community partners in every phase of the research process,” and a third noted “many similarities, especially to the NIH, but we also emphasize community partnered participatory research, which focuses on the partnership.” While Table 1 records actual responses, it is important to recognize that some comments suggest agreement with a definition that was not selected.

Institutional Partners:

Survey respondents also identified all their community stakeholders. Table 2 groups CTSA institutional stakeholder partners according to the institutions that selected more than 75%, 50% to 75%, and less than 50% of the specific stakeholders, beginning with the most common definitions. Community stakeholders appear similarly distributed across definitions with very few exceptions (e.g., industry representation among institutions using the NIH Council 2010 definition). “Other” stakeholders include “members of the media” and health system leadership.

Table 2.

Community Representation: Stakeholder and Advisory Boards by Definitional Groups (Select all that apply)

| Definition \ Community stakeholders | n = 44* | Health care providers and clinicians | Health department or other public department employees | Representatives of community organizations | Individual community members and leaders | Faith community | Policy-makers | Local business | Disease interest groups | Neighborhood associations | Industry | Other |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| b. Principles 2011 | 17 | 17 (12) | 17 (8) | 17 (12) | 16 (10) | 14 (13) | 12 | 12 | 10 | 10 | 7 | 1 |
| e. Similarities & Differences | 10 | 9 (8) | 10 (9) | 10 (9) | 7 (6) | 9 (6) | 10 | 7 | 8 | 8 | 4 | 2 |
| d. We don’t have a definition | 7† | 7 (4) | 4 (2) | 6 (4) | 7 (1) | 6 (2) | 3 | 3 | 5 | 3 | 2 | 0 |
| a. NIH Council 2010 | 5 | 5 (4) | 4 (3) | 5 (3) | 5 (2) | 4 (2) | 4 | 3 | 4 | 2 | 5 | 0 |
| c. Kellogg 2001 | 5 | 4 (2) | 5 (2) | 5 (3) | 5 (3) | 5 (3) | 3 | 4 | 3 | 4 | 1 | 1 |

* Including all responses creates a <5% to <1% margin of error, depending on the unit of analysis.

† Two responses did not select a definition and were included in “d. We don’t have a definition.”

Parentheses = number of institutions indicating CAB membership representation.

While a large proportion of community stakeholders are involved in healthcare related activities, informants were not asked to distinguish external professionals from lay community members. Table 2 also includes data on the CAB representation for the most often represented community stakeholders. Of note is the high variability of CAB membership by community members and leaders among definitions. The principle of equitable participation in specific research collaborations may help explain lower CAB representation among institutions using a CBPR definition. CTSA support of CABs will be further discussed below.

Institutional transformation:

Two thirds of the key informants across all definitions reported that goals of the larger institution influenced their CTSA community engagement program by increasing the number and intensity of local collaborations and partnerships as well as the institutional resources available to support community engagement. Respondents reported that CTSA community engagement activities contributed to the overall institution’s relationship with local communities and that their institutions considered service programs more important than research projects. Respondents also credited CTSAs with advancing institutional understanding of and work in the community by renewing, enhancing or developing connections to new communities (e.g., military, area youth). Almost all respondents credited their CTSA community engagement component for increasing institutional awareness and action to address health disparities.

Survey Part 2 – Measures and Indicators

About three quarters of the key informants indicated collecting process measures, including tracking community member and faculty training in research. A slightly higher percentage of institutions track funded grants and publications (Table 3, Part I). CTSA hubs report information on grants and publications more often than other measures in their Annual Performance Reports (APR) and to their External Advisory Board/Committee (EAB). Sixty percent of all respondents count the number of basic science projects that use a community engagement core or consultation service.20 Fewer institutions track the number of basic science research projects that seek input from community sources. The survey development team considered project consultations an appropriate metric for CTSA infrastructure.

Table 3.

Selected Indicators and Measures by Definition

Part I

| Definition \ Indicator | n = 42* | # community members trained for research | Report on APR | Report to EAB | # faculty trained | Report on APR | Report to EAB | Tracking # of collaborative grants | Report on APR | Report to EAB | Tracking # of publications | Report on APR | Report to EAB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| b. Principles 2011 | 16 | 81% | 25% | 25% | 75% | 25% | 31% | 75% | 19% | 25% | 69% | 31% | 6% |
| e. Similarities & Differences | 10 | 60% | 20% | 30% | 70% | 30% | 40% | 90% | 60% | 40% | 100% | 50% | 40% |
| d. We don’t have a definition | 7† | 86% | 29% | 0% | 71% | 14% | 0% | 71% | 29% | 29% | 57% | 29% | 29% |
| a. NIH Council 2010 | 5 | 80% | 0% | 20% | 60% | 0% | 20% | 100% | 20% | 20% | 100% | 40% | 20% |
| c. Kellogg 2001 | 4 | 75% | 75% | 0% | 75% | 75% | 0% | 100% | 100% | 25% | 100% | 100% | 25% |
| Average of all responses | | 76% | 26% | 19% | 71% | 26% | 24% | 83% | 38% | 29% | 81% | 43% | 21% |

Part II

| Definition \ Indicator | n = 42* | Number of basic science research projects that seek input or advice from community engagement core or internal consultation service | Report on APR | Report to EAB | Increased level of trust between university and community members | Report on APR | Report to EAB | Increased collaboration of community partners in basic science research | Report on APR | Report to EAB | Number of basic science research projects that seek input or advice from external community stakeholders | Report on APR | Report to EAB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| b. Principles 2011 | 16 | 63% | 13% | 19% | 56% | 0% | 13% | 50% | 6% | 13% | 44% | 0% | 0% |
| e. Similarities & Differences | 10 | 70% | 30% | 20% | 20% | 0% | 10% | 30% | 0% | 0% | 50% | 0% | 10% |
| d. We don’t have a definition | 7† | 43% | 14% | 14% | 0% | 0% | 0% | 57% | 29% | 14% | 14% | 0% | 14% |
| a. NIH Council 2010 | 5 | 40% | 20% | 20% | 60% | 0% | 40% | 80% | 0% | 20% | 40% | 0% | 20% |
| c. Kellogg 2001 | 4 | 75% | 50% | 25% | 25% | 25% | 0% | 25% | 25% | 0% | 75% | 0% | 0% |
| Average of all responses | | 60% | 21% | 19% | 36% | 2% | 12% | 48% | 10% | 10% | 43% | 0% | 7% |

* Recorded only shared responses for the two institutions with two submissions.

† Two responses did not select a definition and were included in “d. We don’t have a definition.”
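The “average of all responses” row in Table 3 appears consistent with an average weighted by the number of respondents in each definitional group, rather than a simple mean of the five group percentages. The following sketch illustrates that reading for one column, “Tracking # of collaborative grants” (Part I). The function and variable names are hypothetical, and the weighting scheme is an inference from the table values, not a method stated by the study team.

```python
# Group sizes (n) and percentages for "Tracking # of collaborative grants",
# copied from Table 3, Part I.
group_sizes = {
    "b. Principles 2011": 16,
    "e. Similarities & Differences": 10,
    "d. We don't have a definition": 7,
    "a. NIH Council 2010": 5,
    "c. Kellogg 2001": 4,
}
tracking_grants_pct = {
    "b. Principles 2011": 75,
    "e. Similarities & Differences": 90,
    "d. We don't have a definition": 71,
    "a. NIH Council 2010": 100,
    "c. Kellogg 2001": 100,
}


def weighted_mean(pcts, sizes):
    """Average group percentages, weighting each group by its respondent count."""
    total = sum(sizes.values())
    return sum(pcts[group] * sizes[group] for group in sizes) / total


weighted = weighted_mean(tracking_grants_pct, group_sizes)
simple = sum(tracking_grants_pct.values()) / len(tracking_grants_pct)

print(f"weighted: {weighted:.0f}%  simple: {simple:.0f}%")
# prints: weighted: 83%  simple: 87%
```

The weighted figure matches the 83% shown in the table, while the unweighted mean does not, which suggests that small groups such as the four Kellogg respondents should not be given equal weight when comparing group percentages with the overall row.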

There appear to be more similarities than differences in the indicators and measures tracked and reported on across all definitions. Fewer institutions assess level of trust between community-academic partners than community involvement in basic science, although both measures are among the least reported. Other measures infrequently reported on include aggregate counts of pilot studies funded and community partners involved, the number of community-academic interactions during project development, and overall involvement of racial and ethnic minorities. Taking into account all key informant responses regarding the seventeen indicators and measures queried in the survey, sharing research findings or results within community contexts receives the least attention.

Key informants were also provided an opportunity to recommend new metrics. Respondents shared interests in assessing the value of training programs for subsequent research interactions, the value of community input on translational science projects, and the impact of community-engaged research on local health outcomes over time. Additional recommendations for metrics include partnership dynamics and trust, and assessment of team science by counting projects with multiple principal investigators. In addition, social network inquiry was proposed as a way to study community engagement and team science. Table 4 contains a complete list of the suggested metrics.

Table 4.

Suggested Metrics for CTSA Institutions

| Counts based upon program activities |

| Number of early career (KL2) scholars trained for Community-engaged Research |

| Areas of CEnR partnerships (e.g., pediatrics, geriatrics, health disparities) |

| Use of Community Engagement consulting service by a) basic scientists, b) individual CTSA-funded studies |

| Researchers and projects seeking community stakeholder input |

| Community members involved in all individual CTSA-supported partnerships |

| Community member recruitment to clinical trials |

| Time to completion of Community-engaged Research projects |

| Repeat grant submissions, awards, publications by partnership – (assess longevity) |

| Contribution of community-engaged research to outcome measures |

| Outcomes of training for Translational stage 3 and Translational stage 4 research |

| Changes in research due to community-engaged activities |

| Shared-decision making in developing, conducting and reporting on research |

| Social network analysis to assess, for example, investigator collaboration with and input from community partner at key process points (e.g., scientific review, proposal submission and award, IRB submission, project implementation…). |

| Change in the community sense of accountability on the part of researchers |

| Changed community perception of academic research in the University and community |

| Community partner perception of benefit |

| Dissemination and implementation of research findings |

| Policy changes |

| Grants to communities informed by but independent of research |

| Counts of interactions among individual CTSAs |

| Number of institutions requesting measurement and evaluation information |

| Collaborative projects |

Community advisory boards

Eighty percent of the CTSAs responding maintain at least one Board, with many CTSAs maintaining two or more. Only one CTSA among those without a definition of community engagement supports multiple CABs, which is far below the overall average. Community Advisory Boards most frequently include between 11 and 20 members; two institutions reported Boards of 50 members or more. Variability in the number and size of boards is one reason an average of community members on all boards was not calculated. Some CABs are populated primarily by professionals and funders from outside the institution (e.g., clinicians, independent research organizations, and pharmaceutical stakeholders). A few institutions report their CABs have developed principles for governance and decision-making to facilitate partnership capacity-building and group solidarity.21–23 Respondents indicate that CTSAs regularly seek CAB input on prioritizing diseases to research and on allocating pilot funds, but infrequently on institutional strategy or leadership.

Key informants indicated that multiple CABs may possess distinct responsibilities: CABs may be involved in distinct institutional program areas, may be developed for distinct research projects, may represent specific geographical areas, or may be organized around specific stakeholder interests. CABs variously advise both CTSA and Academic Health Center leadership.

Study Limitations

In seeking to understand the role of community voices within clinical research and translational science, the study team acknowledges a small universe of eligible participants, while also observing that the Academic Health and Science Centers within the CTSA consortium are not inconsequential in terms of clinical research scope and total NIH funding. This study accepted a single self-report per institution to develop an initial understanding of current definitions and metrics, allowing real or perceived institutional variation to go unreported. Additionally, while requesting a single institutional response, the survey neither encouraged nor discouraged input from community partners. Finally, the survey did not assess the extent or adequacy of the resources available to support community engagement, community-engaged research or evaluation activities.

DISCUSSION

1. The selection of survey data highlights the institutional development of infrastructure to support translational science and the assessment of the institutional changes involved. The dates of the definitions used to frame the survey point to previously established approaches to community engagement within some translational research areas. In terms of assessment, some widely used metrics and indicators have been appropriated from established academic measures of success. It is clear that traditional measures do not identify or reward the development of community-academic research partnerships. It is also clear that current, prominent metrics and indicators are unlikely to provide insights into the benefits made possible by translational research.

The CTSA program was launched under the auspices of the National Center for Research Resources to transform how institutions with significant NIH support conduct research. CTSAs would study and refine their management of research and become more efficient. Among the components needed to support translational science, CTSAs were charged with engaging communities to overcome the medical ecology of academic research centers; engaging more diverse individuals in clinical research is just good science. CTSAs began to diversify involvement in clinical trials while also developing the technology and capacity to engage large populations in clinical research.

Translational science on a population scale challenges an ethical program designed to provide oversight for research conducted through face-to-face encounters. With the capacity to encode markers onto specimens and into data on an industrial scale, translational research is now confronting questions about broad-based consent and the voluntary nature of research participation. It seems unlikely that transformations at CTSA institutions were projected to be so disruptive.

2. Although CTSA institutions function within unique local contexts and pursue distinct site priorities in terms of communities to engage and for what purpose,24 many CTSA institutions define community engagement similarly. Even when definitions differ, similar programmatic characteristics are evident in the alignment of partners, stakeholders and Community Advisory Board members. Similarities in infrastructure lend themselves to the development and use of shared metrics for reports.

The limited attention given to assessing CAB advice, combined with a lack of attention to reporting research results in community contexts, suggests that CTSAs have not genuinely explored bi-directional communication within their translational science programs. The metrics currently in use within CTSAs and academic health and science centers support traditional academic expectations. A lack of appropriate indicators and metrics challenges individual researchers and research teams to define and demonstrate satisfactory progress and productivity.

Determining whether CTSAs are able to achieve community goals through translational research will require additional metrics. New metrics must provide insights into partnership dynamics. Initially, case studies of community-academic partnerships may prove more valuable than quantitative work to advance our capacity to engage with participant and public perceptions of clinical research activities. Altmetrics offer researchers an approach to examining the reach of research information within multiple communities;25–26 translational science similarly needs to understand and measure the capacity of community-academic research partnerships to address the willingness, particularly among communities under-represented in research, to partner with academic medical center researchers and/or to participate in research.27–29

3. Multiple CBPR teams across the U.S. and Canada have conducted evaluations of partnership practices and contributions to individual research projects. Evaluations of individual programs have also helped us understand the community capacity to participate in research and influence health outcomes. We reiterate a recommendation and encourage the CTSA consortium to assess trust and synergy as indicators of bi-directional communication within community-academic research partnerships and the broader public trust.15,30–38 Bi-directionality can be studied as a partnership process by looking at how information moves through communities and with attention to the communication modalities used by community and academic partners and the broader public. In addition to the current capacity to assess Internet views, downloads of presentations, videos, and web pages, and information sharing through social media, CTSAs must develop the capacity to assess public engagement with the information shared. Bi-directional communication within translational science should also be informed by interpersonal studies that address health literacy and numeracy issues, that examine therapeutic relationships (particularly those beyond the clinical encounter), and that improve informed consent while avoiding therapeutic misconception.

4. Even with a common definition and metrics, it is unrealistic to expect researchers to develop community research partnerships, conduct research and also develop complex, innovative evaluation approaches that assess synergy within partnership dynamics and the influence of research partnerships on public trust. Incorporating these evaluation questions will require expertise in systems science methods (e.g., system dynamics, network analysis, and agent-based modeling).39–41 CTSAs will need to support professionals skilled in customizing measurement and in evaluating team science and community-academic partnerships in order to begin to understand the benefits of community-engaged research within translational science.

CONCLUSION

Claims about the uniqueness of specific institutional-community contexts and relationships must not be allowed to further delay the development and deployment of a common definition and metrics for studying community and stakeholder engagement in translational research and science. CTSAs rely on a few definitions of community engagement and community-engaged research that could be combined to meet the Institute of Medicine’s call for a shared definition. Combining definitions, as in a dictionary, would indicate variations in approach such as exist between the CBPR and Principles definitions, contrasting self-identification and social construction. More importantly, combining definitions adds the necessary flexibility to develop metrics and indicators across the clinical translational research spectrum.

This study of community engagement programs within complex institutional systems reveals common community engagement and community-academic research partnerships and activities, as well as similarities across program definitions and evaluation approaches. A viable response to the IOM’s recommendation to develop a shared definition and metrics must be accompanied by a more robust evaluation component within CTSA hubs in order to more directly assess the role of translational research in improving health outcomes locally and the consortium as a sustainable community of research practice nationally.

Supplementary Material

Table 5.

CTSA Support for Community Advisory Boards

| Community Definition\Stakeholders | n=42* | Do you have a community advisory board (CAB) (s) connected to your CTSA? | Does your institution have more than one CAB? | Are the functions of the boards distinct? | Average percent of community members on CAB |
|---|---|---|---|---|---|
| b. Principles of Community Engagement, 2nd edition, 2011. | 16 | 94% | 50% | 50% | 88% |
| e. Definition has some similarities but also differences | 10 | 90% | 50% | 60% | 61% |
| d. We don’t have a definition | 7† | 71% | 14% | 0% | 58% |
| a. NIH Council of Public Representatives (Ahmed and Palermo, 2010). | 5 | 80% | 60% | 80% | 73% |
| c. CBPR - W.K. Kellogg Community Scholar’s Program, 2001 | 4 | 50% | 50% | 25% | 73% |
| average of all responses | | 83% | 45% | 45% | |

† Two responses that did not select a definition were included in d. We don’t have a definition

* Recorded only shared responses for the two institutions with two submissions
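As a check on the "average of all responses" row, the short sketch below recomputes the three yes/no columns of Table 5. It assumes, since the table does not say so, that each average is weighted by the number of institutions (n) selecting each definition; the variable and function names are illustrative only. Because the published percentages are already rounded, the reconstruction is approximate.

```python
# Rows of Table 5: (definition label, n, has CAB, more than one CAB, distinct functions).
# Percentages are entered as published (already rounded), expressed as fractions.
rows = [
    ("b", 16, 0.94, 0.50, 0.50),
    ("e", 10, 0.90, 0.50, 0.60),
    ("d", 7, 0.71, 0.14, 0.00),
    ("a", 5, 0.80, 0.60, 0.80),
    ("c", 4, 0.50, 0.50, 0.25),
]


def weighted_mean(table_rows, col):
    """Mean of column `col`, weighted by each row's n (an assumed weighting)."""
    total_n = sum(r[1] for r in table_rows)
    return sum(r[1] * r[col] for r in table_rows) / total_n


# Columns 2, 3 and 4 hold the three yes/no survey questions.
summary = [round(100 * weighted_mean(rows, c)) for c in (2, 3, 4)]
print(summary)  # [83, 45, 45]
```

Under this assumption the recomputed values agree with the 83%, 45% and 45% reported in the table, whereas an unweighted mean of the five rows would give 77% for the first question.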

ACKNOWLEDGEMENTS:

The authors would like to thank all the key site informants for responding to the surveys; Meryl Sufian, Division of Clinical Innovation, National Center for Advancing Translational Sciences, National Institutes of Health, and Adina Black (Research Assistant), North Carolina Translational and Clinical Sciences Institute, UNC Chapel Hill, for their encouragement and contributions to this project.

Financial Support

This study received no direct funding. The authors were provided partial salary support through the following grants:

NIH National Center for Advancing Translational Sciences. Grant Numbers: UL1TR000114, UL1TR000130, UL1TR000055, UL1TR000443, UL1TR001111, UL1TR002240, UL1RR029887 and UL1TR000041

NIH National Heart, Lung, and Blood Institute. Grant Number: K24HL105493

Agency for Healthcare Research and Quality. Grant Number: U18HS020518

Native American Research Centers for Health (NARCH V). Grant Numbers: U261HS300293 and U261IHS0036–04-00 (funded by Indian Health Service, National Institute of General Medical Sciences, Health Resources and Services Administration, National Cancer Institute, National Center for Research Resources, National Institute on Drug Abuse, National Institute on Minority Health and Health Disparities, and Office of Behavioral and Social Sciences Research)

Footnotes

The authors declare that they have no conflicts of interest in relation to the design, conduct or dissemination of the study reported on in this manuscript.

References

- 1. Mercer SL, Green LW. Federal funding and support for participatory research in public health and health care. In: Minkler M, Wallerstein N, eds. Community-based participatory research for health: From process to outcomes. 2nd ed. San Francisco, CA: Jossey-Bass; 2008:399–406.

- 2. Institute of Medicine. Scientific Opportunities and Public Needs: Improving Priority Setting and Public Input at the National Institutes of Health. Washington, DC: National Academies Press; 1998.

- 3. Burgin E. Dollars, disease, and democracy: Has the Director’s Council of Public Representatives improved the National Institutes of Health? Politics and the Life Sciences. 2005;24(1 & 2):43.

- 4. National Institutes of Health (U.S.) Director’s Council of Public Representatives. (National Institutes of Health Center for Information Technology). (2008). National Institutes of Health Director’s Council of Public Representatives - October 2008 [Video file]. Retrieved from https://videocast.nih.gov/Summary.asp?file=14737&bhcp=1

- 5. Ahmed SM, Palermo A. Community Engagement in Research: Frameworks for Education and Peer Review. American Journal of Public Health. 2010;100(8):1380–1387.

- 6. Institute of Medicine Committee to Review the CTSA Program at NCATS. The CTSA Program at NIH: Opportunities for Advancing Clinical and Translational Research. Washington, DC: Institute of Medicine; 2013.

- 7. National Institutes of Health. PAR-15–304: Clinical and Translational Science Award (U54). http://grants.nih.gov/grants/guide/pa-files/PAR-15-304.html. August 10, 2015.

- 8. Israel BA, Schulz AJ, Parker EA, Becker AB. Review of community-based research: Assessing partnership approaches to improve public health. Annu Rev Public Health. 1998;19(1):173–202.

- 9. Senge P, Scharmer O. Community Action Research. In: Reason P, Bradbury H, eds. Handbook of Action Research. Thousand Oaks, CA: Sage Publications; 2001:238–50.

- 10. Baum F, MacDougall C, Smith D. Participatory action research. J Epidemiol Community Health. 2006;60(10):854–857.

- 11. Wells K, Jones L. Commentary: “Research” in Community-Partnered, Participatory Research. JAMA. 2009;302(3):320–321.

- 12. Clinical and Translational Science Awards Community Engagement Key Function Committee Task Force on the Principles of Community Engagement. Principles of Community Engagement. 2nd ed. Bethesda, MD: National Institutes of Health; 2011.

- 13. Wenger E. Communities of practice: learning, meaning, and identity. New York, N.Y.: Cambridge University Press; 1998.

- 14. Eder M, Carter-Edwards L, Hurd TC, Rumala BB, Wallerstein N. A Logic Model for Community Engagement Within the Clinical and Translational Science Awards Consortium: Can We Measure What We Model? Acad Med. 2013;88(10):1430–1436.

- 15. Yarborough M, Sharp RR. Restoring and preserving trust in biomedical research. Acad Med. 2002;77(1):8–14.

- 16. Quinn SC. Ethics in public health research: protecting human subjects: the role of community advisory boards. Am J Public Health. 2004;94(6):918–22.

- 17. Morin SF, Morfit S, Maiorana A, et al. Building community partnerships: case studies of Community Advisory Boards at research sites in Peru, Zimbabwe, and Thailand. Clin Trials. 2008;5(2):147–156.

- 18. Newman SD, Andrews JO, Magwood GS, Jenkins C, Cox MJ, Williamson DC. Community Advisory Boards in Community-Based Participatory Research: A Synthesis of Best Processes. Prev Chronic Dis. 2011;8(3):12.

- 19. W.K. Kellogg Foundation Community Health Scholars Program. Stories of Impact. Ann Arbor, MI: W.K. Kellogg Foundation Community Health Scholars Program; 2001. Print.

- 20. Pelfrey CM, Cain KD, Lawless ME, Pike E, Sehgal AR. A consult service to support and promote community-based research: Tracking and evaluating a community-based research consult service. Journal of Clinical and Translational Science. Published online: 29 December 2016, 1–7.

- 21. Brieland D. Community advisory boards and maximum feasible participation. Am J Public Health. 1971;61(2):292–296.

- 22. Norris KC, Brusuelas R, Jones L, Miranda J, Duru OK, Mangione CM. Partnering with community-based organizations: an academic institution’s evolving perspective. Ethn Dis. 2007;17(1 Suppl 1):S27–32.

- 23. Aguilar-Gaxiola S, Allen A III, Brown J, et al. Community Engagement Program External Review Report. University of Michigan Institute for Clinical and Health Research; February 15, 2015.

- 24. Kane C, Alexander A, Hogle JA, Parsons HM, Phelps L. Heterogeneity at Work: Implications of the 2012 Clinical Translational Science Award Evaluators Survey. Eval Health Prof. 2013;36(4):447–463.

- 25. Piwowar H. Altmetrics: Value all research products. Nature. 2013;493(7431):159.

- 26. Galligan F, Dyas-Correia S. Altmetrics: Rethinking the Way We measure. Serials Review. 2013;39(1):56–61.

- 27. Hicks S, Duran B, Wallerstein N, et al. Evaluating community-based participatory research to improve community-partnered science and community health. Prog Community Health Partnersh. 2012;6(3):289–299.

- 28. Lasker RD, Weiss ES, Miller R. Partnership synergy: a practical framework for studying and strengthening the collaborative advantage. Milbank Q. 2001;79(2):179–205, III-IV.

- 29. Braun KL, Nguyen TT, Tanjasiri SP, et al. Operationalization of community-based participatory research principles: assessment of the national cancer institute’s community network programs. Am J Public Health. 2012;102(6):1195–1203.

- 30. Jagosh J, Macaulay AC, Pluye P, et al. Uncovering the benefits of participatory research: implications of a realist review for health research and practice. Milbank Q. 2012;90(2):311–346.

- 31. Lucero J, Wallerstein N, Duran B, et al. Development of a mixed methods investigation of process and outcomes of community based participatory research. Journal of Mixed Methods, first published on-line, 2016. DOI: 10.1177/1558689816633309S.

- 32. Israel BA, Lantz PM, McGranaghan RJ, Guzman JR, Rowe Z, Parker EA. Documentation and evaluation of CBPR partnerships: The use of in-depth interviews and closed-ended questionnaires. In: Israel BA, Eng E, Schulz AJ, eds. Methods for Community-Based Participatory Research for Health. 2nd ed. San Francisco, CA: Jossey-Bass; 2012:369–404.

- 33. Sandoval JA, Lucero J, Oetzel J, et al. Process and outcome constructs for evaluating community-based participatory research projects: a matrix of existing measures. Health Educ Res. 2012;27(4):680–690.

- 34. Khodyakov D, Stockdale S, Jones A, Mango J, Jones F, Lizaola E. On measuring community participation in research. Health Educ Behav. 2013;40(3):346–354.

- 35. Oetzel JG, Zhou C, Duran B, et al. Establishing the psychometric properties of constructs in a community-based participatory research conceptual model. Am J Health Promot. 2015;29(5):e188–202.

- 36. Aguilar-Gaxiola S, Ahmed S, Franco Z, et al. Towards a unified taxonomy of health indicators: academic health centers and communities working together to improve population health. Acad Med. 2014;89(4):564–572.

- 37. Butterfoss FD. Coalitions and Partnerships in Community Health. San Francisco, CA: Jossey-Bass; 2007.

- 38. Becker AB, Israel BA, Gustat J, Reyes AG, Allen AJ III. Strategies and techniques for effective group process in CBPR partnerships. In: Israel BA, Eng E, Schulz AJ, Parker EA, eds. Methods for Community-Based Participatory Research for Health. 2nd ed. San Francisco, CA: Jossey-Bass; 2012:69–96.

- 39. Hawe P. Lessons from complex interventions to improve health. Annu Rev Public Health. 2015;36:307–323.

- 40. Luke DA, Stamatakis KA. Systems science methods in public health: dynamics, networks, and agents. Annual Review of Public Health. 2012;33:357–376. doi: 10.1146/annurev-publhealth-031210-101222.

- 41. Franco ZE, Ahmed SM, Maurana CA, DeFino MC, Brewer DD. A social network analysis of 140 community-academic partnerships for health: examining the healthier Wisconsin partnership program. Clin Transl Sci. 2015;8(4):311–9.
