Abstract

Self‐motion induces spontaneous eye movements which serve the purpose of stabilizing the visual image on the retina. Previous studies have mainly focused on their reflexive nature and how the perceptual system disentangles visual flow components caused by eye movements and self‐motion. Here, we investigated the role of eye movements in distance reproduction (path integration). We used bimodal (visual‐auditory)‐simulated self‐motion: visual optic flow was paired with an auditory stimulus whose frequency was scaled with simulated speed. The task of the subjects in each trial was, first, to observe the simulated self‐motion over a certain distance (Encoding phase) and, second, to actively reproduce the observed distance using only visual, only auditory, or bimodal feedback (Reproduction phase). We found that eye positions and eye speeds were strongly correlated between the Encoding and the Reproduction phases. This was the case even when reproduction relied solely on auditory information and thus no visual stimulus was presented. We believe that these correlations are indicative of a contribution of eye movements to path integration.

Keywords: Auditory, distance reproduction, Eye movements, multi‐modal, self‐motion, visual

Introduction

Movement of an observer through an environment generates a visual flow field on the retina. The properties of this flow field were shown to provide important cues for the estimation of parameters of self‐motion, such as direction (heading), speed, and traveled distance, i.e., path integration (Gibson 1950; Andersen et al. 1993; Lappe and Rauschecker 1994; Lappe et al. 1996, 1999; Bossard et al. 2016). Radial optic flow elicits a nystagmus‐like pattern of slow and fast conjugate eye movements (Niemann et al. 1999). One function of these eye movements is to stabilize the visual image on the retina and thereby improve the visibility of potential targets (Warren and Rushton 2009). On the other hand, the extraction of self‐motion information from optic flow is complicated by these eye movements which by themselves induce large‐scale shifts of the retinal image (Warren and Hannon 1990; Bremmer et al. 2017). Thus, successful navigation critically depends on the ability to distinguish those components of the optic flow that are caused by self‐motion from those caused by eye movements (Royden et al. 1992, 1994; for a review see Angelaki and Hess 2005).

It is well‐known, that path integration, i.e., the extraction and integration of motion information to guide spatial behavior, involves sensory and motor processing (Etienne and Jeffery 2004). On the sensory side, visual (Bremmer and Lappe 1999; Lappe et al. 2007), auditory (von Hopffgarten & Bremmer 2011), tactile (Churan et al. 2017), and vestibular (Berthoz et al. 1995; Glasauer et al. 2002) signals have been unequivocally shown to contribute to estimating traveled distance. On the motor side, as an example, efference copy signals of walking have been implicated in path integration behavior (Mittelstaedt and Mittelstaedt 2001). While eye movements as reactions to an actual visual flow field are well described (e.g., Lappe et al. 1998; Angelaki and Hess 2005; Bremmer 2011); not much is known on how they contribute to behavioral tasks like path integration.

In our experiments, we investigated eye movements during a distance reproduction task (von Hopffgarten & Bremmer 2011) based on visual and/or auditory cues. In natural environments, auditory cues can provide information about self‐motion by changes e.g., of loudness, the Doppler effect or interaural time differences (Lutfi and Wang 1999). Here, we used differences in the frequency of a tone as an indicator of the speed of self‐motion. This association was rather learned than natural – although it occurs frequently, for example during operation of motor powered vehicles. The task of the subjects was (1) to monitor the distance of a simulated self‐motion based on visual and auditory cues (Encoding phase) and then (2) to reproduce the perceived distance actively while using only auditory, only visual or bimodal (visual‐auditory) information (Reproduction phase). Like during real self‐motion, subjects were free to move their eyes in both, the Encoding and the Reproduction phase. We focused on analyzing the role of slow eye movements, since fast eye movements recently have been shown to compromise encoding of (visually simulated) self‐motion (Bremmer et al. 2017). In particular, we were interested in the oculomotor behavior during the Reproduction phase when no visual stimulus was available, and the subjects had to rely only on auditory information.

Methods

Behavioral aspects of this study concerning the ability of participants to reproduce travel distance have been published before (von Hopffgarten & Bremmer 2011). Here, we focused on the oculomotor behavior of the subjects from the same dataset, which was not analyzed in this previous work.

Subjects

Six human subjects (two male and four female, mean age 23 years) took part in the experiment. The subjects had normal or corrected‐to‐normal vision and normal hearing. All subjects gave informed written consent prior to the testing and were reimbursed after the experiment with 8 €/h. All procedures in this study conformed to the Declaration of Helsinki and were approved by a local ethics committee.

Apparatus

Experiments were conducted in a darkened (but not completely dark) sound attenuated room. Subjects were seated at a distance of 114 cm from a tangential screen (70° x 55° visual angle) and their head‐position was stabilized by a chin‐rest. Visual stimuli were generated on a windows PC using an in‐house built stimulus package and were back‐projected onto the screen by a CRT‐Projector (Electrohome Marquee 8000) at a resolution of 1152 x 864 pixels and a frame rate of 100 Hz. The auditory stimuli were also generated using MATLAB and presented to the subjects by head‐phones (Philips SHS390). The eye position was recorded by a video‐based eye‐tracker (EyeLink II, SR Research) at a sampling rate of 500 Hz and an average accuracy of ~0.5°. During the distance reproduction, the subjects controlled the speed of simulated self‐motion using an analog joystick (Logitech ATK3) that was placed on a desk in front of them. The speed of the simulated self‐motion was proportional to the inclination angle of the joystick. The data from the joystick were acquired at a rate of 100 Hz and minimal change in speed of simulated self‐motion that could be triggered by the joystick was 1/1000 of the maximum range of speeds used in the experiments.

Stimuli

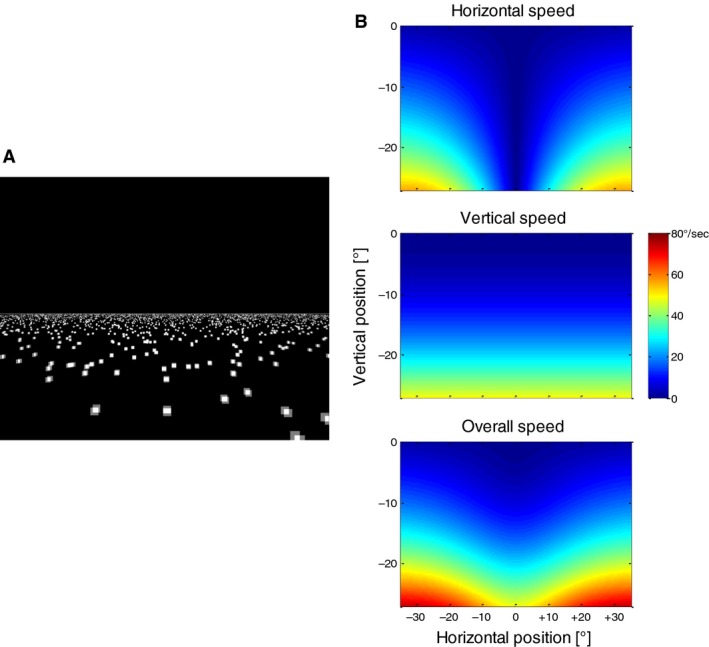

The visual stimulus consisted of a horizontal plane of white (luminance: 90 cd/m2) randomly placed small squares (Fig. 1A) on a dark (<0.1 cd/m2) background that filled the lower half of the screen. The size of the squares was scaled between 0.2° and 1.9° in order to simulate depth. The direction of the simulated self‐motion was always straight‐ahead (orthogonal relative to the orientation of the horizon within the stimulus). Since the subjects did not know the absolute size of the squares and the absolute altitude of the observer above the plane, they could not determine the absolute speed of simulated self‐motion from the stimulus display. Thus, here, the distances are always quantified in arbitrary units (AU) and the speed of simulated self‐motion in AU/s. The local horizontal and vertical speeds of the optic flow depended on the position on the screen (Fig. 1B), with lowest speeds close to the focus of expansion (in the center of the screen) and increasing with increasing eccentricity. The auditory stimuli were sinusoidal tones (SPL approximately 80 dB) with a frequency proportional to the simulated speed. The frequencies were in a range between 220 and 440 Hz and changed linearly as a function of the speed of the simulated self‐motion, which was in the range of 0–20 AU/s.

Figure 1.

(A) Screenshot of the stimulus simulating self‐motion across a ground plane of randomly placed squares. (B) Distribution of visual speeds in different parts of the optic flow pattern. Note that only the lower half of the screen is depicted in the colored displays since no stimulus was present in the upper half.

Procedure

Each trial consisted of two phases. During the “Encoding phase” the subjects were presented with a simulated self‐motion at one of the three speeds (8, 12 or 16 AU/s). The presentation lasted 4 seconds each which resulted in three different traveled distances (32, 48, 64 AU). The presentation was always bimodal, i.e., visual motion was accompanied by a sound representing the respective speed. The sound frequencies corresponding to the three speeds used during the Encoding phase were 308, 354, and 396 Hz, respectively. The task of the subjects in this phase was to monitor the distance covered for later reproduction. After the Encoding phase, a dark screen was presented for 500 msec and then the subjects had to reproduce the previously observed distance using a joystick. In different conditions of this “Reproduction phase,” either only the visual display was presented (visual condition) or only the auditory stimulus was presented while the screen was dark (auditory condition) or both sources of information were available at the same time (bimodal condition). During reproduction, the subjects were able to change the simulated speed by changing the inclination of the joystick. After the subjects had reached the distance they perceived to be identical to that during the Encoding phase, they had to press a joystick button to complete the trial. The subjects were allowed to move their eyes freely during the Encoding and the Reproduction phases. There were thus nine different experimental conditions: three different speeds in three different modalities. In each experimental condition, 80 trials were recorded. All conditions were presented in a pseudo‐randomized order and the subjects were not informed in advance about the sensory modality of the Reproduction phase. The duration of the experiment for each subject was ~4 h, including breaks.

Data analysis

Eye position as well as the speed of the simulated self‐motion were recorded at a sampling rate of 500 Hz. As detailed above, we focused on the slow eye movements in the different experimental conditions. To this end, we removed the parts of the data in which the subjects made saccades, fast resetting eye movements, or blinks. In addition, we removed all other parts of the data in which the eye‐speed exceeded 50°/sec. These de‐saccaded data were used to calculate the average position, speed, and direction of the slow eye movements per trial. All calculations and data transformations were done using MATLAB (The MathWorks). Some statistical analyses were performed using the SPSS package (IBM).

The spatial distribution of eye positions and the relationship between eye position and eye speed were determined for each subject and experimental condition by binning the horizontal and vertical eye positions in bins of 0.1 × 0.1° and then calculating the average horizontal and vertical eye speeds in each bin. We used Pearson correlations to calculate the dependence between position and eye speed; however, the results were not different when a nonparametric measure (Spearman correlation) was used. Preferred eye positions were calculated by fitting a two‐dimensional Gaussian function to the frequency distribution of eye positions by a least squares algorithm.

Results

Eye‐position during the encoding‐ and reproduction phases

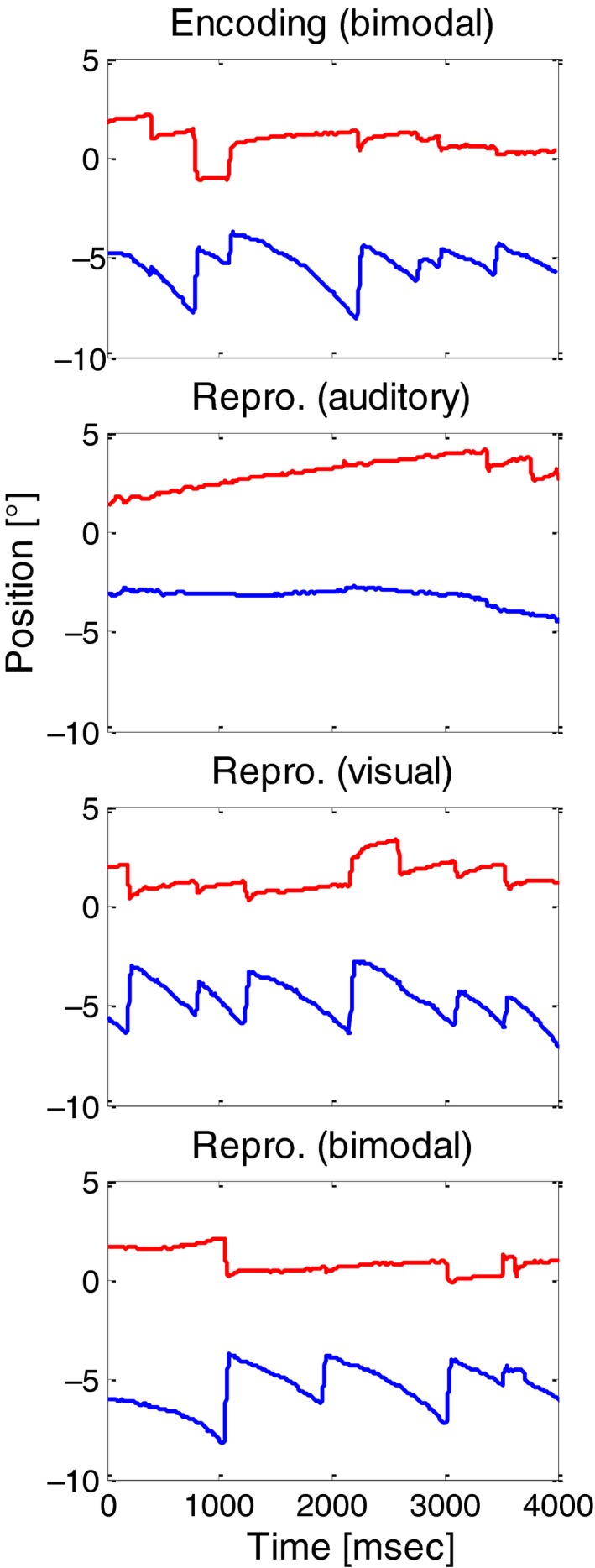

Figure 2 shows example of eye traces during the Encoding phase (bimodal) and the three different modality conditions of the Reproduction phase. When a visual stimulus was present (visual and bimodal conditions), a nystagmus‐like eye movement pattern emerged consisting of slow eye movements in the direction of the optical flow (mostly vertical) and fast resetting eye movements in the opposite direction. In the purely auditory condition (i.e., on a dark screen), the eye traces revealed mostly prolonged drifts with fewer saccadic eye movements in between.

Figure 2.

Example horizontal (red) and vertical (blue) eye traces (from subject S4) for the Encoding phase (upper panel) and for the three different modality conditions in the Reproduction phase. The time axes represent the time from the start of the stimulus motion. The speed of the simulated self‐motion was 16 AU/sec.

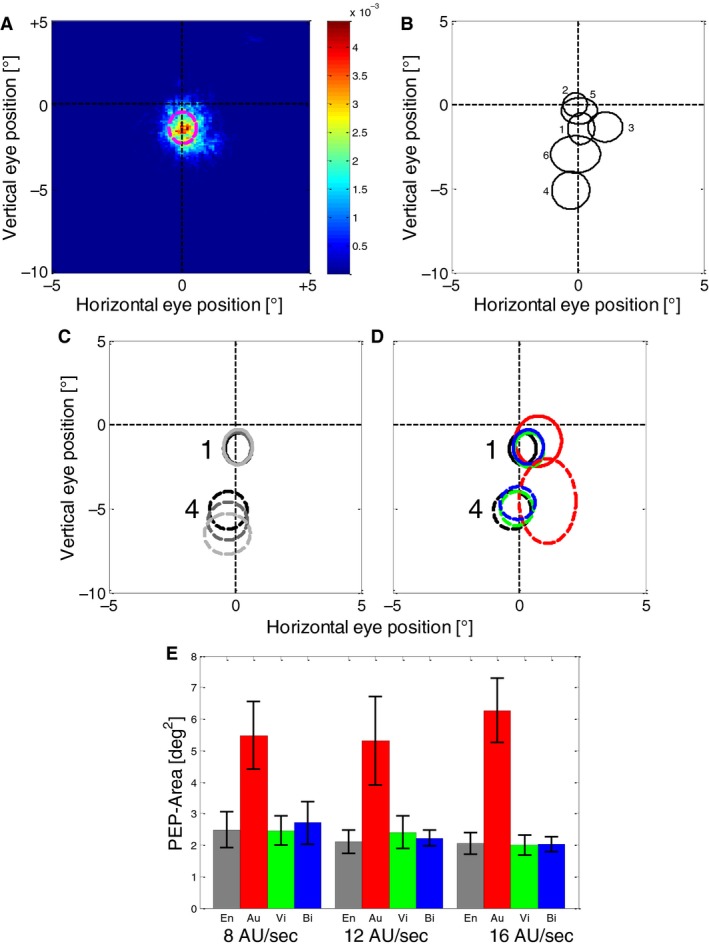

As shown in Figure 3A for one example subject (S1), eye positions during the Encoding phase covered only a very small portion of the stimulus display. We fitted two‐dimensional Gaussians to the spatial distributions of eye‐position for each subject and condition and found that each subject used a very localized – but also highly individual part of the stimulus to obtain information. An example is shown in Figure 3B for all subjects in the Encoding phase at a simulated speed of 16 AU/s. In the following we name the area within one standard deviation around the center of the Gaussian fit the Preferred Eye Position (PEP). The PEP depended to some extent on the speed of the stimulus – in particular for those subjects that preferred to sample higher stimulus speeds (i.e., positioned their eyes lower on the screen, subject 4 in Fig. 3C). However, significant overall changes in the position of the PEP were not found in the Encoding phase neither in the horizontal (repeated‐measures ANOVA, F = 2.61, P = 0.12) nor in the vertical (repeated‐measures ANOVA, F = 1.21, P = 0.32) dimension. The subjects adhered to the PEP they showed during the Encoding phase, even during the Reproduction phase (Fig. 3D). When no visual stimulus was present on the screen (auditory Reproduction phase, red ellipses in Fig. 3D), the PEP was slightly shifted relative to the other conditions but the average shift was only ~0.5° in the horizontal and ~1° in the vertical direction. Also the variability of eye‐positions and thus the size of the PEP was higher when no visual stimulus was present as shown in Figures 3D and E, while for all other conditions it remained largely the same. To further investigate these effects we conducted three 2‐way repeated measures ANOVAs with the factors “Speed of simulated self‐motion” and “Condition” (Encoding, Reproduction auditory, Reproduction visual, Reproduction bimodal) for the variables “horizontal PEP position,” “vertical PEP position,” and “PEP size.” The factor “Speed of simulated self‐motion” was not significant (P > 0.05) for each of the investigated variables, while the factor “Condition” was significant for each (Horizontal position: F = 7.1, P = 0.04, Vertical position: F = 12.7, P = 0.01, Size: F = 23.4, P < 0.01). Post‐hoc t‐tests revealed only significant (P < 0.05) differences between the auditory condition and all other conditions for each simulated speed, confirming that the “Reproduction auditory” condition induced different effects from all other conditions.

Figure 3.

(A) The distribution of eye positions on the screen during the Encoding phase (speed = 16 AU/sec, 240 trials) for another example subject (S1). The colors show the probability of eye position to be in a certain spatial bin with a resolution of 0.1 × 0.1°. The dashed magenta line shows one standard deviation of a 2D Gaussian fitted to the distribution data. Vertical position 0° represents the horizon of the simulated ground plane. Note that the figure does not show the entire screen (that was 70 × 55° large) but only a central part of 10 × 15°. (B) 2D Gaussian fits of position distribution (preferred eye positions, PEPs) of all six subjects in the Encoding phase at a simulated speed of 16 AU/sec. Like in 3A, the lines represent one standard deviation of a 2D Gaussian distribution fitted to the data of each subject. (C) PEPs during the Encoding phase at different speeds for two example subjects (distinguished by different line styles). Light grey lines show the PEPs at a simulated speed of 8 AU/sec, dark grey at 12 AU/sec, and black at 16 AU/s. (D) Comparison of PEPs in the Encoding phase and different modality conditions of the Reproduction phase for two example subjects (the same as in C) at a simulated speed of 16 AU/sec. Data from (i) the Encoding phase (which was always bimodal) is shown in black, (ii) auditory Reproduction in red, (iii) visual Reproduction in green, and (iv) bimodal Reproduction in blue. (E) Means and standard errors of the PEP‐size for all subjects. Different bars show different conditions (combinations of simulated speed, Encoding and Reproduction phase, and modality used in the Reproduction phase). Grey bars represent eye positions during the Encoding phase (bimodal), red bars eye positions during auditory Reproduction (empty screen), green bars during visual Reproduction and blue bars during bimodal Reproduction, respectively.

In the next step, we evaluated the eye positions on trial‐by‐trial basis by averaging the eye‐positions along each spatial dimension during the Encoding and during the following Reproduction phases. Then we calculated a Pearson correlation to determine how much the oculomotor behavior was related during encoding and subsequent reproduction (see example subject in Fig. 4A). We found that when a visual stimulus was present on the screen (visual and bimodal conditions), the eye positions were highly correlated (P < 0.001 for each subject and condition, Fig. 4B, C, green and blue bars). Remarkably, this was also the case when only auditory feedback was given during the reproduction (red bars in Fig. 4B and C). Here, the correlations between encoding and reproduction were lower than in cases when visual feedback was present, but still highly significant (P < 0.001 in each subject and condition except for one condition in one subject, with P < 0.05 and one condition in one subject with P > 0.05).

Figure 4.

(A) Trial‐by‐trial correlations between the average eye position (horizontal on left panel, vertical on right panel) during encoding and the subsequent reproduction for one example subject (S1) and one example stimulus speed (16 AU/sec). In each condition, 80 trials were performed. Red markers indicate data from auditory, green markers from visual and blue markers from bimodal reproduction. (B) Averages and standard errors (over six subjects) of the correlations between the horizontal eye position during the Encoding and during the Reproduction phases. Different colors indicate which modalities were available during the reproduction. Red bars indicate data from auditory trials, green bars show those from visual trials and blue bars those from bimodal trials. Black horizontal lines indicate the correlation value that was required to reach significance (dashed line, P = 0.05; solid line P = 0.01) for one subject (based on 80 trials that were performed in each condition). (C) Same conventions as in panel b, but for vertical eye positions.

Eye movements during the encoding and reproduction phases

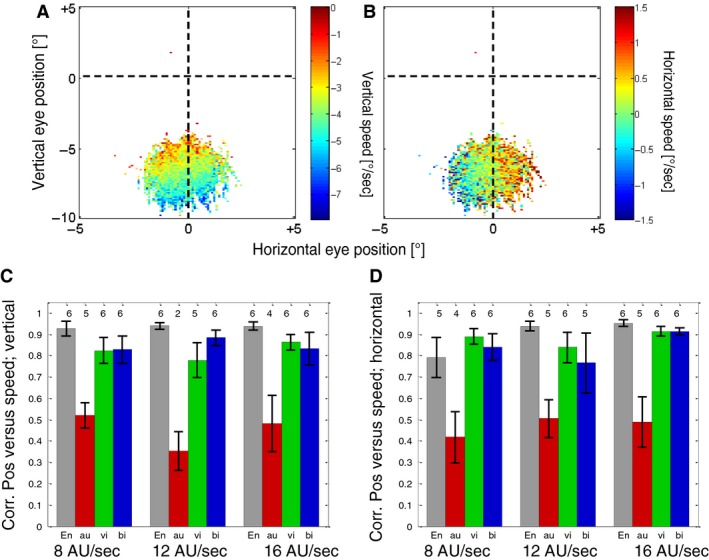

In the next step, we investigated the velocity of slow eye movements during the different conditions of the reproduction task. Figure 5 shows the velocity of the slow phases of eye movements (see Methods) depending on the eye position during the Encoding phase for one example subject. Both the vertical (Fig. 5A) and the horizontal (Fig. 5B) velocities were highly dependent on the position of the eye on the screen and hence the motion vector of the underlying motion stimulus (Fig. 1B). As expected, the downward eye‐velocity increased when the eye position was lower in the display (this is where the vertical stimulus speeds within the stimulus field were highest). The same was true for the horizontal component as shown in Figure 5B, where the direction and the speed of the horizontal component of the eye movements depended strongly on the horizontal eye position. To quantify this relationship, we calculated for each subject a Pearson correlation between the (vertical or horizontal) eye position and the corresponding eye velocity. For the vertical eye movements, we only used the eye positions that were within the area of the visual motion stimulus (y < 0°, see Fig. 1A). The results are summarized in Figure 5C and D. They show that when a visual stimulus was present on the screen (Encoding phase, Reproduction visual, Reproduction bimodal), there was a robust correlation between eye position and eye speed in both, the horizontal and the vertical dimensions as expected when the eye movement is driven by the movement of the stimulus. When no visual stimulus was present (Reproduction auditory, red bars), the correlations were weaker, but still significant (P < 0.05) in the majority of cases. Interestingly, for one of the subjects the sign of the (significant) correlation reversed in the reproduction auditory condition compared to the visual condition.

Figure 5.

(A) Average vertical speed of the eyes at different positions for one example subject (S4) and condition (Encoding phase, simulated speed of 16 AU/sec). Blue colors indicate fast downward speeds while red and yellow colors indicate slower speeds. (B) Average horizontal speed of the eyes at different positions for the same subject and condition as in 5A. Blue colors indicate data for leftward motion while red colors indicate data for rightward eye movements. (C) Average Pearson correlations between the vertical eye position and vertical eye speed over all subjects. Different bars show the results of different conditions (grey, Encoding phase; red, Reproduction auditory; green Reproduction visual; blue, Reproduction bimodal). Numbers above the bars show the number of subjects (out of six) for whom the correlation was significant (P < 0.05). Error bars show SEMs. (D) Average Pearson correlations between the horizontal eye position and horizontal eye speed over all subjects. Conventions are the same as in Figure 5C.

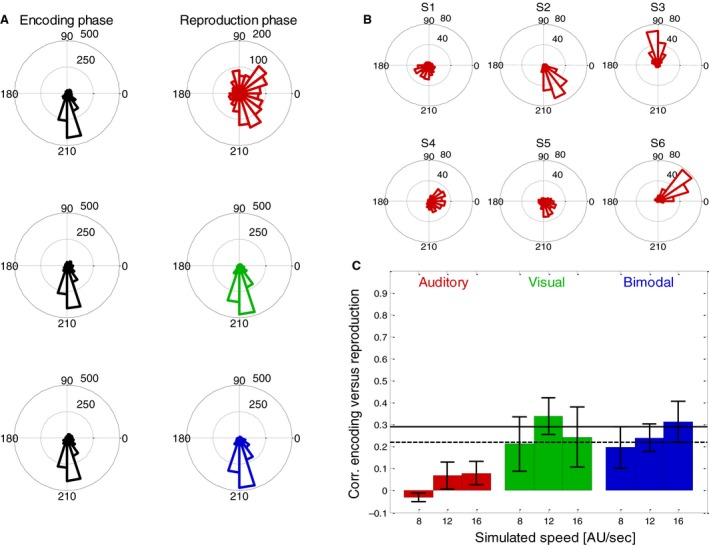

When we compared the relative speeds of eye movements in vertical and horizontal directions for the example subject in Figure 5A and B, we found the vertical component to be stronger – which is also a result of the PEPs being placed mostly around the vertical midline (see Fig. 3B), where the horizontal component of the stimulus motion is smallest (see Fig. 1B). Thus, we expected (at least in presence of a visual stimulus) the main component of the eye movements to be downward. This was confirmed when the mean direction of the eye movement was calculated in each trial. Figure 6A shows the distribution of eye movement directions for all conditions. For simplicity, we pooled data from different stimulus speeds and different subjects since the speed had no major effect on the distribution of directions and the subjects were (with an exception that we describe in detail later) quite uniform. As expected, in the presence of a visual stimulus, the main component of the eye movement was downward. The exception is the eye movement during auditory Reproduction phase that shows a broader distribution of directions. When we looked at the results of individual subjects (Fig. 6B), we found that even in the absence of a visual stimulus each subject had a narrow but also very individual distribution of eye movement directions. In the next step, we calculated the circular correlation (Berens 2009) between the mean eye movement direction in the Encoding and the following Reproduction phases. We obtained low correlations in conditions when visual stimuli were present and no significant correlations when a visual stimulus was absent in the Reproduction phase (Fig. 6C).

Figure 6.

(A) Distribution of average directions of eye movements in all trials of six subjects. The left column shows results from the (always bimodal) Encoding phase and right column results from the different modality conditions (red for auditory, green for visual, blue for bimodal) of the Reproduction phase. (B) Individual distributions of eye movement directions during the Reproduction phase in the auditory condition for each of the six subjects. (C) Averages and standard errors (over six subjects) of the correlations between average motion direction during the Encoding and during the Reproduction phases. Different colors indicate which modality was available during the reproduction. Red bars indicate data from auditory trials, green bars those from visual trials and blue bars those from bimodal trials. Black horizontal lines indicate the correlation that was required to reach significance (dashed line, P = 0.05; solid line P = 0.01) for each subject (based on 80 trials that were performed in each condition).

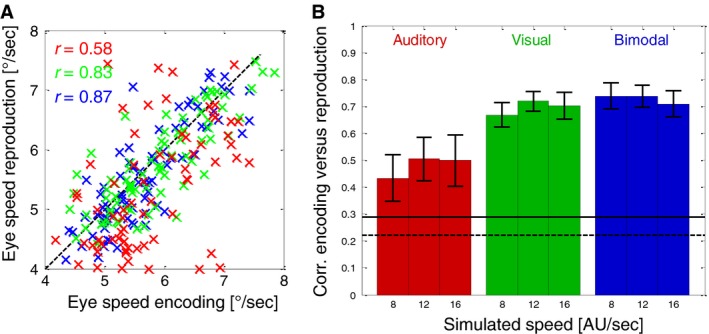

From the vertical and horizontal velocity components of the eye movements, we calculated the average absolute speed in each trial, i.e., combining horizontal and vertical components of the eye movements. We then compared the absolute speed of eye movements during Encoding with those during the following Reproduction phases. Figure 7A shows the results for one example subject in all conditions at a simulated speed of 16 AU/sec. It shows significant (P < 0.01) correlations for the visual (green marks) and bimodal (blue marks) conditions but as well a somewhat weaker but still highly significant (P < 0.01) correlation for the auditory condition. The average correlations for all subjects for all conditions are shown in Figure 7B. Again, visual and bimodal conditions show the highest average correlations while the correlations during auditory reproduction are lower but still clearly significant (significance levels of 5% and 1% are depicted as horizontal black lines in Fig. 7B).

Figure 7.

(A) Trial‐by‐trial correlations between the average total eye speed during encoding and the subsequent reproduction for one example subject (S4) and one example stimulus speed (16 AU/sec). The subject performed 80 trials in each condition. Different colors indicate which modalities were available during reproduction: Red markers indicate auditory, green markers visual and blue markers bimodal reproduction. (B) Averages and standard errors (over six subjects) of the correlations between the total eye‐speed during the Encoding and during the Reproduction phases. Different colors indicate which modalities were available during reproduction. Red bars indicate auditory trials, green bars show visual trials and blue bars bimodal trials. Black horizontal lines indicate the correlation that was required to reach significance (dashed line, P = 0.05; solid line P = 0.01) for one subject (based on 80 trials that were performed in each condition).

Discussion

Our results have shown that different aspects of the eye movements in a distance reproduction task correlate between the Encoding and the Reproduction phases. The correlation was weaker ‐ but still highly significant ‐ when no visual stimuli were shown during the Reproduction phase and thus no visual information about self‐motion parameters could be extracted. Our results are consistent with what is known about the role of eye movements in multisensory path integration.

Eye positions during encoding and reproduction of distance

All our participants have observed a very restricted area of the visual field during the Encoding phase. Since the heading of the stimulated motion was always straight ahead, the main task of the subjects during the Encoding phase was an integration of the self‐motion information to obtain an estimate of traveled distance. Since the speed in the flow field was different at different positions in the visual field, it appears as a useful strategy to utilize a restricted area to reduce the variability of observed visual speeds. If, participants have to estimate the heading for navigation, different parts of the flow field are differently informative. For example, the area around the focus of expansion of the visual flow can become very informative for judgment of heading (Lappe et al. 1999) and the changing positions of this informative area may also be reflected by a higher variability of eye positions. Under natural conditions, factors such as the curvature of a self‐motion trajectory also contribute to the variability of chosen eye positions (Land and Lee 1994; Wilson et al. 2008). A similar behavior as in our experiment was observed at least in a part of subjects during a headway reproduction task in a more naturalistic environment using a driving simulator (Bian et al. 2011). Here, too, as in our study, the task did not involve any heading judgments or requirements for orientation. However, during a real driving task involving steering, it was shown that the subjects covered a much larger area of the visual field with their eye movements (Land and Lee 1994; Crundall and Underwood 1998). This is consistent with the fact that in real driving, not only the speed of the self‐motion but also the heading is important. The changing heading of self‐motion may induce a larger variability of eye movements by generating a larger variability of optic flow. Each of our subjects used a different, idiosyncratic area of the optic flow field during the task. To our surprise, two of our subjects chose an area close to the focus of expansion where the speed of the optic flow was very slow. This may indicate the use of extra‐foveal vision in assessing of the speed of simulated self‐motion.

During reproduction of the previously encoded distance (path integration), the observed area of the visual field did not change. In cases where a visual stimulus was present on the screen, this appears to be a useful strategy as it ensures that the visual motion patterns during reproduction can be compared more easily than those observed during encoding. If only the auditory stimulus was used during reproduction, this behavior appears unnecessary as no visual information can be compared with the encoding. This finding may be interpreted in different ways dependent on whether or not a causal effect of eye movements on path integration is assumed. On the one hand, it can be related to a recent result which shows that eye movements are able to influence the perception of self‐motion even without visual stimuli (Clemens et al. 2017). Thus, the reproduction of the eye movements generated in the encoding phase could be a useful strategy during reproduction to keep this influencing factor constant between the two phases. On the other hand, it may also reflect the functions of memory and imagery in the distance reproduction task.

Memory and mental imagery during path integration

In the reproduction task, the subjects had to monitor and memorize the distance observed in the Encoding phase and then actively reproduce it. It has been shown that mental imagery is related to visual and auditory memory (e.g., Wheeler et al. 2000) and especially to memory retrieval (Slotnick et al. 2005, 2012; Albers et al. 2013). These previous findings might suggest that mental imagery of visually and auditorily simulated self‐motion information was used in the reproduction phase in particular in cases where no visual information was provided. There is ample evidence showing that visual mental imagery activates areas of the brain that are regularly involved in visual perception (Kosslyn et al. 1995; O'Craven and Kanwisher 2000; Ganis et al. 2004; for a review Kosslyn et al. 2001; Pearson and Kosslyn 2015). In particular, the mental imagery of motion was often shown to activate the same neuronal substrate that is activated during perception of motion (Goebel et al. 1998; Kourtzi and Kanwisher 2000; Emmerling et al. 2016). These motion sensitive areas are also critically involved in the generation of smooth eye movements (Komatsu and Wurtz 1989; for review see: Thier and Ilg 2005). Consequently, it has also been shown earlier that imagery of motion has some of the same consequences as the perception of motion. In particular it is capable of generating a motion aftereffect (Winawer et al. 2010) and slow eye movements (Brown 1968). In line with these previous results, our data show that the (slow) eye movements during the perception of a specific motion stimulus and the recollection/imagery of the same stimulus are strongly correlated.

Role of after‐nystagmus

Earlier research has shown that a prolonged optokinetic nystagmus is followed by a so‐called optokinetic after‐nystagmus (OKAN, Ohm 1921; Brantberg 1992; Büttner et al. 1976; Cohen et al. 1977) when the subject is placed in complete darkness. OKAN consists of two phases (OKAN I and OKAN II) that are observed at different times after the stimulus is extinguished. In the first phase, the direction of OKAN is the same as the original direction of the nystagmus, while in the second phase the direction is reversed. One may wonder whether the observed eye movements in the absence of the visual stimulus during the reproduction phase might be due to OKAN. We believe that the OKAN did not play a major role in our experiments, since the direction of OKAN in its different phases is either identical to or opposite to the direction of the nystagmus (Büttner et al. 1976). In contrast, the directions of the slow phases of eye movement varied strongly between our subjects (see Fig. 6B). Some of them have even shown directions that were perpendicular to the direction of the original nystagmus which is not consistent with the oculomotor properties of an OKAN.

Role of eye movements in path integration

Path integration includes the estimation of traveled distance. Mechanisms of path integration were investigated in many different species from insects (Wehner and Srinivasan 1981; Müller and Wehner 1988; Esch and Burns 1996) to humans (Bremmer and Lappe 1999). The main scope of this previous research was the question which internal and external cues are used for path integration and how information from different sources is combined.

During simulated self‐motion, optic flow was identified as one of the important sources of information (bees: Esch and Burns 1996; humans: Bremmer and Lappe 1999; Churan et al. 2017). During active walking without vision, the assessment of path length has been shown to rely on proprioceptive feedback and efference copies. This has been observed, for example in rodents, which update their position much more accurately during actively performed translations than during passive shifts (Mittelstaedt and Mittelstaedt 1982; Etienne et al. 1988). Similar conclusions can be drawn from experiments on normal (Mittelstaedt and Mittelstaedt 2001) and labyrinthine‐defective (Glasauer et al. 1994, 1999) human subjects who had to estimate and reproduce the length of a path or to cover the distance to a previously seen visual target.

During a distance reproduction task, subjects can apply different strategies, such as imitating speed and duration of the Encoding phase. We discussed these strategies in more detail in an earlier publication (Churan et al. 2017) on visuo‐tactile distance reproduction. We concluded that while the possibility for using this strategy cannot be fully discarded, the subjects were able to adjust the duration of individual reproduction trials to account for differences in chosen speed. This indicates that the subjects rather processed an integrated combination of duration and speed than reproducing them independently.

In our experiments, participants were allowed to freely move their eyes. The control of eye movements involves proprioception and efference copies alike (Bremmer et al. 2009; Sun and Goldberg 2016; Zimmermann and Bremmer 2016), also in the context of self‐motion processing (Bremmer et al. 2017). Our results emphasize the role of eye movements and nonvisual processing in path integration and reproduction. This might suggest that oculomotor activity is used as a pacemaker in both distance encoding and reproduction. More research, however, is needed to test this idea and to show to what degree eye movement strategies causally influence performance during path integration. This could be tested, for example by instructing the subjects to fixate a stationary spot during the reproduction task (e.g., Johansson et al. 2012).

Conflict of Interest

None declared.

Churan J., von Hopffgarten A., Bremmer F.. Eye movements during path integration. Physiol Rep, 6 (22), 2018, e13921, 10.14814/phy2.13921

Funding Information

This work was supported by Deutsche Forschungsgemeinschaft (CRC/TRR‐135).

References

- Albers, A. M. , Kok P., Toni I., Dijkerman H. C., and de Lange F. P.. 2013. Shared representations for working memory and mental imagery in early visual cortex. Curr. Biol. 23:1427–1431. [DOI] [PubMed] [Google Scholar]

- Andersen, R. A. , Treue S., Graziano M., Snowden R. J., and Qian N.. 1993. From direction of motion to patterns of motion: hierarchies of motion analysis in the visual cortex Pp. 183–189 in Ono T., Squire L. R., Raichle M. E., Perrett D. I., Fukuda M., eds. Brain mechanisms of perception and memory from neuron to behavior. Oxford University Press, New York. [Google Scholar]

- Angelaki, D. E. , and Hess B. J. M.. 2005. Self‐motion‐induced eye movements: effects on visual acuity and navigation. Nat. Rev. Neurosci. 6:966–976. [DOI] [PubMed] [Google Scholar]

- Berens, P . 2009. CircStat: a MATLAB toolbox for circular statistics. J. Stat. Softw. 31:1–21. [Google Scholar]

- Berthoz, A. , Israël I., Georges‐François P., Grasso R., and Tsuzuku T.. 1995. Spatial memory of body linear displacement: what is being stored?. Science. 269:95–98. [DOI] [PubMed] [Google Scholar]

- Bian, Z. , Pierce R., and Andersen G.. 2011. Eye movement patterns and driving performance. In PROCEEDINGS of the Sixth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design (pp. 503–509).

- Bossard, M. , Goulon C., and Mestre D. R.. 2016. Viewpoint oscillation improves the perception of distance travelled based on optic flow. J. Vis. 16:4. [DOI] [PubMed] [Google Scholar]

- Brantberg, K. 1992. Human optokinetic after‐nystagmus: variability in serial test results. Acta Otolaryngol. 112:7–13. [DOI] [PubMed] [Google Scholar]

- Bremmer, F. 2011. Multisensory space: from eye‐movements to self‐motion. J. Physiol. 589:815–823. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bremmer, F. , and Lappe M.. 1999. The use of optical velocities for distance discrimination and reproduction during visually simulated self motion. Exp. Brain Res. 127:33–42. [DOI] [PubMed] [Google Scholar]

- Bremmer, F. , Kubischik M., Hoffmann K.‐P., and Krekelberg B.. 2009. Neural dynamics of saccadic suppression. J. Neurosci. 29:12374–12383. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bremmer, F. , Churan J., and Lappe M.. 2017. Heading representations in primates are compressed by saccades. Nat. Commun. 8:920. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brown, B. B. 1968. Visual recall ability and eye movements. Psychophysiology 4:300–306. [DOI] [PubMed] [Google Scholar]

- Büttner, U. , Waespe W., and Henn V.. 1976. Duration and direction of optokinetic after‐nystagmus as a function of stimulus exposure time in the monkey. Arch. Psychiatr. Nervenkr. 222:281–291. [DOI] [PubMed] [Google Scholar]

- Churan, J. , Paul J., Klingenhoefer S., and Bremmer F.. 2017. Integration of visual and tactile information in reproduction of traveled distance. J. Neurophysiol. 118:1650–1663. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clemens, I. A. H. , Selen L. P. J., Pomante A., MacNeilage P. R., and Medendorp W. P.. 2017. Eye movements in darkness modulate self‐motion perception. ENeuro 4:ENEURO.0211–ENEURO16.2016. 10.1523/eneuro.0211-16.2016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen, B. , Matsuo V., and Raphan T.. 1977. Quantitative analysis of the velocity characteristics of optokinetic nystagmus and optokinetic after‐nystagmus. J. Physiol. 270:321–344. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Crundall, D. E. , and Underwood G.. 1998. Effects of experience and processing demands on visual information acquisition in drivers. Ergonomics 41:448–458. [Google Scholar]

- Emmerling, T. C. , Zimmermann J., Sorger B., Frost M. A., and Goebel R.. 2016. Decoding the direction of imagined visual motion using 7 T ultra‐high field fMRI. NeuroImage 125:61–73. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Esch, H. , and Burns J.. 1996. Distance estimation by foraging honeybees. J. Exp. Biol.. 199:155–162. [DOI] [PubMed] [Google Scholar]

- Etienne, A. S. , and Jeffery K. J.. 2004. Path integration in mammals. Hippocampus 14:180–192. [DOI] [PubMed] [Google Scholar]

- Etienne, A. S. , Maurer R., and Saucy F.. 1988. Limitations in the assessment of path dependent information. Behaviour 106:81–110. [Google Scholar]

- Ganis, G. , Thompson W. L., and Kosslyn S. M.. 2004. Brain areas underlying visual mental imagery and visual perception: an fMRI study. Brain Res. Cogn. Brain Res. 20:226–241. [DOI] [PubMed] [Google Scholar]

- Gibson, J. J. 1950. The perception of the visual world. Houghton Mifflin, Boston. [Google Scholar]

- Glasauer, S. , Amorim M. A., Vitte E., and Berthoz A.. 1994. Goal‐directed linear locomotion in normal and labyrinthine‐defective subjects. Exp. Brain Res. 98:323–335. [DOI] [PubMed] [Google Scholar]

- Glasauer, S. , Dieterich M., and Brandt T.. 1999. Simulation of pathological ocular counter‐roll and skew‐torsion by a 3‐D mathematical model. NeuroReport 10:1843–1848. [DOI] [PubMed] [Google Scholar]

- Glasauer, S. , Amorim M.‐A., Viaud‐Delmon I., and Berthoz A.. 2002. Differential effects of labyrinthine dysfunction on distance and direction during blindfolded walking of a triangular path. Exp. Brain Res. 145:489–497. [DOI] [PubMed] [Google Scholar]

- Goebel, R. , Khorram‐Sefat D., Muckli L., Hacker H., and Singer W.. 1998. The constructive nature of vision: direct evidence from functional magnetic resonance imaging studies of apparent motion and motion imagery. Eur. J. Neuorsci. 10:1563–1573. [DOI] [PubMed] [Google Scholar]

- Johansson, R. , Holsanova J., Dewhurst R., and Holmqvist K.. 2012. Eye movements during scene recollection have a functional role, but they are not reinstatements of those produced during encoding. J. Exp. Psychol. Hum. Percept. Perform. 38:1289–1314. [DOI] [PubMed] [Google Scholar]

- Komatsu, H. , and Wurtz R. H.. 1989. Modulation of pursuit eye movements by stimulation of cortical areas MT and MST. J. Neurophysiol. 62:31–47. [DOI] [PubMed] [Google Scholar]

- Kosslyn, S. M. , Thompson W. L., Klm I. J., and Alpert N. M.. 1995. Topographical representations of mental images in primary visual cortex. Nature 378:496–498. [DOI] [PubMed] [Google Scholar]

- Kosslyn, S. M. , Ganis G., and Thompson W. L.. 2001. Neural foundations of imagery. Nat. Rev. Neurosci. 2:635–642. [DOI] [PubMed] [Google Scholar]

- Kourtzi, Z. , and Kanwisher N.. 2000. Activation in human MT/MST by static images with implied motion. J. Cogn. Neurosci. 12:48–55. [DOI] [PubMed] [Google Scholar]

- Land, M. F. , and Lee D. N.. 1994. Where we look when we steer. Nature 369:742–744. [DOI] [PubMed] [Google Scholar]

- Lappe, M. , and Rauschecker J. P.. 1994. Heading detection from optic flow. Nature 369:712–713. [DOI] [PubMed] [Google Scholar]

- Lappe, M. , Bremmer F., Pekel M., Thiele A., and Hoffmann K. P.. 1996. Optic flow processing in monkey STS: a theoretical and experimental approach. J. Neurosci. 16:6265–6285. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lappe, M. , Pekel M., and Hoffmann K.‐P.. 1998. Optokinetic eye movements elicited by radial optic flow in the macaque monkey. J. Neurophysiol. 79:1461–1480. [DOI] [PubMed] [Google Scholar]

- Lappe, M. , Bremmer F., and Van Den Berg A. V.. 1999. Perception of self‐motion from visual flow. Trends Cogn. Sci. 3:329–336. [DOI] [PubMed] [Google Scholar]

- Lappe, M. , Jenkin M., and Harris L. R.. 2007. Travel distance estimation from visual motion by leaky path integration. Exp. Brain Res. 180:35–48. [DOI] [PubMed] [Google Scholar]

- Lutfi, R. A. , and Wang W.. 1999. Correlational analysis of acoustic cues for the discrimination of auditory motion. J. Acoust. Soc. Am. 106:919–928. [DOI] [PubMed] [Google Scholar]

- Mittelstaedt, H. , and Mittelstaedt M.‐L.. 1982. Homing by path integration. Springer, Berlin, Heidelberg: Pp. 290–297. [Google Scholar]

- Mittelstaedt, M. L. , and Mittelstaedt H.. 2001. Idiothetic navigation in humans: estimation of path length. Exp. Brain Res. 139:318–332. [DOI] [PubMed] [Google Scholar]

- Müller, M. , and Wehner R.. 1988. Path integration in desert ants, Cataglyphis fortis . Proc. Natl Acad. Sci. USA 85:5287–5290. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Niemann, T. , Lappe M., Büscher A., and Hoffmann K. P.. 1999. Ocular responses to radial optic flow and single accelerated targets in humans. Vision. Res. 39:1359–1371. [DOI] [PubMed] [Google Scholar]

- O'Craven, K. M. , and Kanwisher N.. 2000. Mental imagery of faces and places activates corresponding stiimulus‐specific brain regions. J. Cogn. Neurosci. 12:1013–1023. [DOI] [PubMed] [Google Scholar]

- Ohm, J. 1921. Über Optischen Drehnystagmus. Klin Mbl Augenheilk 68:234–235. [Google Scholar]

- Pearson, J. , and Kosslyn S. M.. 2015. The heterogeneity of mental representation: ending the imagery debate: Fig. 1.Proc. Natl Acad. Sci. 112:10089–10092. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Royden, C. S. , Banks M. S., and Crowell J. A.. 1992. The perception of heading during eye movements. Nature 360:583–585. [DOI] [PubMed] [Google Scholar]

- Royden, C. S. , Crowell J. A., and Banks M. S.. 1994. Estimating heading during eye movements. Vision. Res. 34:3197–3214. [DOI] [PubMed] [Google Scholar]

- Slotnick, S. D. , Thompson W. L., and Kosslyn S. M.. 2005. Visual mental imagery induces retinotopically organized activation of early visual areas. Cereb. Cortex 15:1570–1583. [DOI] [PubMed] [Google Scholar]

- Slotnick, S. D. , Thompson W. L., and Kosslyn S. M.. 2012. Visual memory and visual mental imagery recruit common control and sensory regions of the brain. Cogn. Neurosci. 3:14–20. [DOI] [PubMed] [Google Scholar]

- Sun, L. D. , and Goldberg M. E.. 2016. Corollary discharge and oculomotor proprioception: cortical mechanisms for spatially accurate vision. Annu. Rev. Vis. Sci. 2:61–84. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thier, P. , and Ilg U. J.. 2005. The neural basis of smooth‐pursuit eye movements. Curr. Opin. Neurobiol. 15:645–652. [DOI] [PubMed] [Google Scholar]

- von Hopffgarten, A. , and Bremmer F.. 2011. Self‐motion reproduction can be affected by associated auditory cues. Seeing Perceiving 24:203–222. [DOI] [PubMed] [Google Scholar]

- Warren, W. H. , and Hannon D. J.. 1990. Eye movements and optical flow. J. Opt. Soc. Am. A 7:160–169. [DOI] [PubMed] [Google Scholar]

- Warren, P. A. , and Rushton S. K.. 2009. Optic flow processing for the assessment of object movement during ego movement. Curr. Biol. 19:1555–1560. [DOI] [PubMed] [Google Scholar]

- Wehner, R. , and Srinivasan M. V.. 1981. Searching behaviour of desert ants, genus Cataglyphis (Formicidae, Hymenoptera). J. Comp. Physiol. 142:315–338. [Google Scholar]

- Wheeler, M. E. , Petersen S. E., and Buckner R. L.. 2000. Memory's echo: vivid remembering reactivates sensory‐specific cortex. Proc. Natl Acad. Sci. USA 97:11125–11129. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wilson, M. , Chattington M., and Marple‐Horvat D. E.. 2008. Eye movements drive steering: reduced eye movement distribution impairs steering and driving performance. J. Mot. Behav. 40:190–202. [DOI] [PubMed] [Google Scholar]

- Winawer, J. , Huk A. C., and Boroditsky L.. 2010. A motion aftereffect from visual imagery of motion. Cognition 114:276–284. [DOI] [PubMed] [Google Scholar]

- Zimmermann, E. , and Bremmer F.. 2016. Visual neuroscience: the puzzle of perceptual stability. Curr. Biol. 26:R199–R201. [DOI] [PubMed] [Google Scholar]