Abstract

Hypothesis:

Significant variability in speech recognition persists among post-lingually deafened adults with cochlear implants (CIs). We hypothesize that scores of nonverbal reasoning predict sentence recognition in adult CI users.

Background:

Cognitive functions contribute to speech recognition outcomes in adults with hearing loss. These functions may be particularly important for CI users who must interpret highly degraded speech signals through their devices. This study used a visual measure of reasoning (the ability to solve novel problems), the Raven’s Progressive Matrices (RPM), to predict sentence recognition in CI users.

Methods:

Participants were 39 post-lingually deafened adults with CIs and 43 age-matched normal-hearing (NH) controls. CI users were assessed for recognition of words in sentences in quiet, and NH controls listened to 8-channel noise-vocoded versions of the same sentences to simulate the degraded signal delivered by a CI. A computerized visual task of the RPM, requiring participants to identify the correct missing piece in a 3×3 matrix of geometric designs, was also performed. Particular items from the RPM were examined for their associations with sentence recognition abilities, and a subset of items on the RPM was tested for the ability to predict degraded sentence recognition in the NH controls.

Results:

The overall number of items answered correctly on the 48-item RPM significantly correlated with sentence recognition in CI users (r = 0.35 – 0.47) and NH controls (r = 0.36 – 0.57). An abbreviated 12-item version of the RPM was created and performance also correlated with sentence recognition in CI users (r = 0.40 – 0.48) and NH controls (r = 0.49 – 0.56).

Conclusions:

Nonverbal reasoning skills correlated with sentence recognition in both CI and NH subjects. Our findings provide further converging evidence that cognitive factors contribute to speech processing by adult CI users and can help explain variability in outcomes. Our abbreviated version of the RPM may serve as a clinically meaningful assessment for predicting sentence recognition outcomes in CI users.

Keywords: Cochlear implants, cognition, nonverbal reasoning, Raven’s Progressive Matrices, speech perception

Introduction:

Cochlear implantation continues to be the most successful treatment approach to restore auditory sensation to individuals with severe-to-profound sensorineural hearing loss (SNHL). Nonetheless, variability in post-operative cochlear implant (CI) outcomes is well established and remains a pressing issue, both for evidence-based clinical practice and for theoretical research into speech perception after implantation. This outcome variability is difficult to explain because numerous factors contribute to speech recognition, the endpoint measure usually assessed in both clinical settings and research studies (1). This creates challenges in predicting outcomes and in identifying specific areas to target in post-operative rehabilitation. Furthermore, standard audiometric and demographic factors have been shown to be only weak predictors of post-operative performance.

Obtaining a better understanding of the post-operative variability in speech recognition outcomes in CI users will require an examination of the interplay between the quality of the sensory input received by individuals’ peripheral auditory systems – their “bottom-up” sensory processing – and those listeners’ cognitive skills – their “top-down” processing. Together, these processes are thought to be the overwhelming contributors to speech recognition under degraded listening conditions (2), such as in CI users (3–7). Briefly, bottom-up processes have received a significant amount of attention in the literature, and are related to the CI or speech processor itself, health of the peripheral auditory system, and/or surgical variables (e.g., trauma during insertion) (8–10). While these factors are certainly critical for speech recognition, they cannot account for all the variability in outcomes, even after optimization of the device and fitting parameters.

The primary issue regarding bottom-up processes in CI users is that the signal from a CI is underspecified regarding spectral and temporal information, and is thus inherently degraded relative to unprocessed speech signals (11–13). These signal degradations require the listener to use a variety of neurocognitive top-down processes to successfully interpret speech. Top-down processes generally include linguistic skills and fundamental cognitive processes that underlie the perceptual processing of speech, such as working memory, information-processing speed, inhibition-concentration, and perceptual closure (5). In general, though, these factors have primarily been examined in relation to speech recognition abilities in patients with milder forms of SNHL (14–16), with a few recent explorations in CI users (6,7).

Further understanding the contributions of top-down processes in CI user outcomes has proven to be difficult due to the mixed results of previous studies and the heterogeneity of methods. For example, general tests of intelligence (IQ) or cognitive abilities have not been shown to correlate well with sentence or speech recognition in populations with hearing loss (14,17–20). Nonverbal reasoning tests have also been suggested as a means to predict speech recognition in adult CI users, although findings in the literature have been mixed regarding this conclusion (9,17). Intuitively, the ability to solve novel reasoning tasks involving new information without relying on an explicit prior knowledge base should relate to one’s ability to piece together a degraded auditory sentence into a meaningful percept (21). Moreover, most reasoning tasks tap into more basic underlying cognitive functions, like working memory, information-processing speed, and inhibitory control, which are known to relate to speech recognition abilities in patients with hearing loss (14).

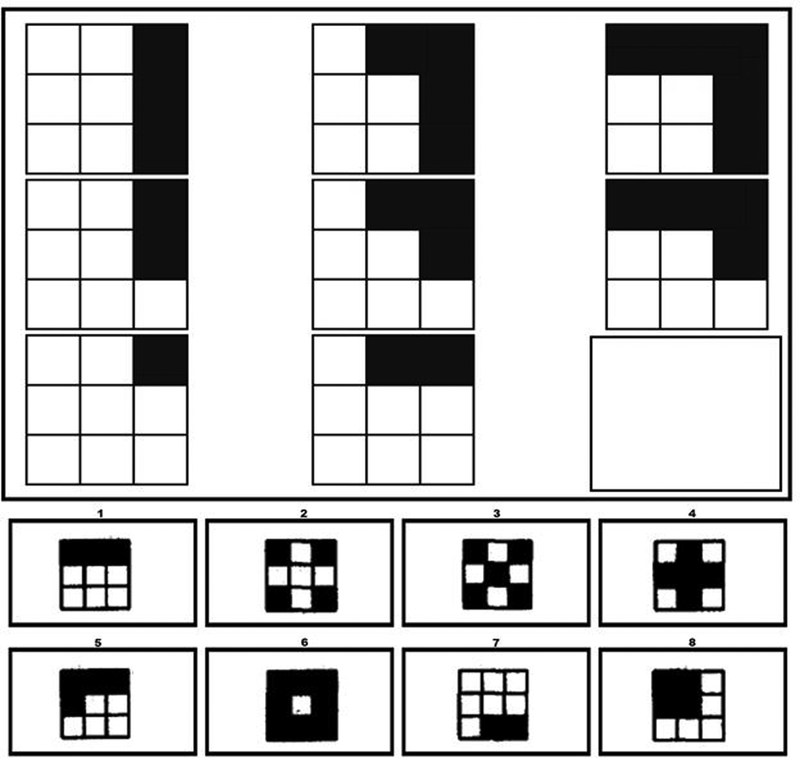

We sought to expand upon these results in a group of post-lingually deafened adults with CIs. The goal of this study was to evaluate relations between performance on a visual nonverbal reasoning test and auditory sentence recognition post-operatively in experienced CI users. A classic test of nonverbal reasoning was selected, the Raven’s Progressive Matrices (RPM), which was developed in the 1950s (21). This test uses a series of progressively difficult 3×3 matrices of geometric designs for which the participant must identify the missing piece of the pattern (see Figure 1 for an example similar to an item on the RPM). Participants in the current study completed a 48-item RPM task and were tested using several assessments of sentence recognition in quiet. We further sought to identify particular items from the RPM that drove any correlations with speech recognition outcomes, and to test the predictive power of this subset of RPM items in a second population: normal-hearing (NH), age-matched controls listening to speech that has been degraded using noise-vocoding, a process similar to the speech processing performed by a CI. Findings from this analysis could result in an abbreviated version of the RPM that could be easily implemented in a clinical setting to predict speech recognition outcomes for adults considering cochlear implantation.

Figure 1.

Example similar to an item from the Raven’s Progressive Matrices. The correct response in this scenario is item #1.

Methods:

Participants

The Institutional Review Board of The Ohio State University approved the study protocol. All participants provided informed, written consent, and were reimbursed $15 per hour for their participation. Testing was completed over a single 2-hour session, with frequent breaks to prevent fatigue; only a subset of the measures collected is reported here. During testing, CI users used their typical hearing prostheses, including any contralateral hearing aid, except during the unaided audiogram. Prior to the start of testing, examiners checked the integrity of the individual’s hearing prostheses by administering a brief vowel and consonant repetition task, and all participants passed this integrity check.

Participants included 39 post-lingually deafened adults with CIs and 43 age-matched normal-hearing (NH) controls. Inclusion criteria for the CI group included being a native English speaker and being post-lingually deaf. All CI users self-reported early hearing aid intervention, typical auditory-only spoken language development during childhood, inclusion in mainstream, conventional education programs, and progressive hearing loss into adulthood. Exclusion criteria for participation in the study included pre-lingual deafness, inner ear malformation on pre-operative imaging (either CT or MRI), history of stroke or neurological disorder that might impact CI functioning (e.g., multiple sclerosis), or history of diagnosed cognitive impairment. All NH participants were screened for age-normal hearing, meaning they demonstrated pure-tone averages (PTA) across the speech frequencies (0.5, 1, 2, and 4 kHz) of better than 25 dB HL. This criterion was relaxed to a PTA of 35 dB HL for participants age 65 years or older, but only five participants demonstrated PTAs worse than 25 dB HL.
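The NH screening rule described above can be illustrated with a minimal Python sketch. The function names and the example thresholds are hypothetical, and the strict "less than" comparison at the cutoff is an assumption, because the text does not state how a PTA exactly equal to the criterion was handled.

```python
# Minimal sketch of the NH hearing screen (names and boundary handling are assumptions).
SPEECH_FREQUENCIES_HZ = (500, 1000, 2000, 4000)  # 0.5, 1, 2, and 4 kHz


def pure_tone_average(thresholds_db_hl):
    """Mean threshold (dB HL) across the four speech frequencies.

    thresholds_db_hl maps frequency in Hz to the measured threshold in dB HL.
    """
    values = [thresholds_db_hl[f] for f in SPEECH_FREQUENCIES_HZ]
    return sum(values) / len(values)


def passes_nh_screen(pta_db_hl, age_years):
    """Apply the 25 dB HL criterion, relaxed to 35 dB HL at age 65 or older."""
    cutoff = 35.0 if age_years >= 65 else 25.0
    # Assumption: "better than" the criterion is read as strictly below it.
    return pta_db_hl < cutoff


# Hypothetical audiogram for one ear.
example = {500: 15, 1000: 20, 2000: 25, 4000: 30}
pta = pure_tone_average(example)
print(pta, passes_nh_screen(pta, age_years=60))
```

The same averaging applies to the better-ear unaided PTA values listed for CI users in Table 1; only the pass/fail rule is specific to the NH controls.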

All CI and NH participants were screened for vision using a basic near-vision test and were required to have better than 20/40 near vision, because all of the cognitive measures were presented visually. Two CI participants had vision scores of 20/50; however, they still displayed normal reading scores, suggesting sufficient visual abilities to include their data in analyses. A screening task for cognitive impairment was completed, using a written version of the Mini Mental State Examination (MMSE) (22), with an MMSE raw score ≥ 26 required; all participants met this criterion, suggesting no evidence of cognitive impairment. A final screening test of basic word reading was completed, using the Wide Range Achievement Test (WRAT) (23). Participants were required to have a word reading standard score ≥ 75, suggesting reasonably normal general language proficiency. Socioeconomic status (SES) of participants was also collected because it may be a proxy for speech and language abilities. SES was quantified using a metric developed by Nittrouer and Burton (24), consisting of occupational and educational levels. There were two scales, one for occupational level and one for educational level, each ranging from 1–8, with eight being the highest level. These two numerical scores were then multiplied, resulting in scores between 1 and 64. Lastly, a screening audiogram of unaided residual hearing was performed for each ear separately for all participants.
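As a small illustration of the SES composite described above, the following Python sketch multiplies the two scale ratings. The function name is hypothetical. Table 1 contains non-integer SES scores (for example, 10.5), which suggests that fractional ratings were possible, so the sketch accepts any value from 1 to 8 rather than integers only; that reading is an assumption.

```python
# Minimal sketch of the SES composite (function name is hypothetical).
def ses_score(occupation_level, education_level):
    """Product of occupational and educational levels, each on a 1-8 scale."""
    for name, level in (("occupation", occupation_level), ("education", education_level)):
        if not 1 <= level <= 8:
            raise ValueError(f"{name} level must be between 1 and 8, got {level}")
    return occupation_level * education_level


print(ses_score(8, 8))    # highest possible composite
print(ses_score(3.5, 3))  # fractional rating, assumed to be allowed
```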

CI users were between the ages of 50 and 83 years (M = 67.47), and were post-lingually deafened. Duration of hearing loss ranged from 4 to 76 years (M = 40.0, SD = 18.2), and the duration of CI use ranged from 18 months to 34 years (M = 7.4, SD = 6.8). See Table 1 for individual CI participant details. NH controls were between the ages of 50 and 81 years (M = 67.4, SD = 6.9).

Table 1.

Participant demographics for individual cochlear implant (CI) users.

| Participant | Gender | Age (years) | Implantation Age (years) | Duration of Hearing Loss (years) | SES | Side of Implant | Hearing Aid | Etiology of Hearing Loss | Better ear PTA (dB HL) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | F | 65 | 54 | 24 | 24 | Bilateral | No | Genetic/Progressive as adult | 120 |
| 2 | F | 66 | 62 | 31 | 35 | Right | Yes | Sudden, otosclerosis, progressive as adult | 78.75 |
| 3 | F | 67 | 58 | 42 | 12 | Right | Yes | Genetic, progressive as adult | 103.75 |
| 4 | F | 55 | 44 | 30 | 15 | Left | No | Measles | 120 |
| 5 | M | 70 | 65 | 53 | 30 | Right | No | Genetic, progressive as adult | 88.75 |
| 6 | M | 60 | 52 | 54 | 36 | Bilateral | No | Genetic, progressive as adult, noise | 105 |
| 7 | F | 57 | 48 | 50 | 25 | Right | Yes | Otosclerosis, progressive as adult | 82.5 |
| 8 | M | 79 | 76 | 19 | 48 | Right | Yes | Progressive loss as an adult | 70 |
| 9 | F | 69 | 56 | 54 | 10.5 | Bilateral | No | Progressive as adult, autoimmune | 112.5 |
| 10 | M | 55 | 50 | 34 | 30 | Bilateral | No | Unknown | 120 |
| 11 | F | 76 | 68 | 31 | 30 | Left | No | Progressive as adult, sudden hearing loss | 108.75 |
| 12 | M | 79 | 74 | 76 | 10 | Left | No | Sudden hearing loss | 108.75 |
| 13 | F | 81 | 71 | 20 | 30 | Right | No | Progressive as adult | 88.75 |
| 14 | M | 59 | 57 | 4 | 24 | Bilateral | No | Genetic, progressive as child | 120 |
| 15 | M | 78 | 72 | 22 | 12.5 | Bilateral | No | Progressive as child | 120 |
| 16 | M | 69 | 62 | 55 | 56 | Bilateral | No | Progressive as adult | 120 |
| 17 | F | 50 | 35 | 50 | 32.5 | Bilateral | No | Genetic, Meniere’s | 117.5 |
| 18 | F | 64 | 61 | 10 | 30 | Right | No | Progressive as adult, noise | 103.75 |
| 19 | F | 67 | 58 | 31 | 9 | Bilateral | No | Progressive as adult | 120 |
| 20 | M | 83 | 76 | 33 | 42 | Right | Yes | Progressive as adult, noise | 68.75 |
| 21 | F | 73 | 67 | 73 | 15 | Right | No | Progressive as adult | 98.75 |
| 22 | M | 76 | 73 | 16 | 49 | Left | Yes | Progressive as child | 72.5 |
| 23 | F | 79 | 45 | 49 | 15 | Right | Yes | Meniere’s disease | 57.5 |
| 24 | M | 74 | 72 | 74 | 64 | Right | No | Chronic ear infections | 92.5 |
| 25 | M | 66 | 60 | 52 | 18 | Left | No | Genetic, progressive as adult | 80 |
| 26 | M | 77 | 75 | 65 | 9 | Bilateral | No | Unknown | 105 |
| 27 | F | 65 | 63 | 59 | 36 | Right | No | Genetic, progressive as adult | 86.25 |
| 28 | M | 61 | 55 | 16 | 24 | Right | No | Progressive as child | 111.25 |
| 29 | M | 60 | 55 | 46 | 10.5 | Left | Yes | Genetic | 115 |
| 30 | F | 64 | 59 | 55 | 24 | Left | No | Genetic | 95 |
| 31 | M | 59 | 54 | 44 | 6 | Right | Yes | Progressive as adult, noise | 108.75 |
| 32 | M | 57 | 55 | 40 | 6 | Right | No | Genetic | 101.25 |
| 33 | M | 65 | 38 | 50 | 12 | Right | No | Progressive as adult | 92.5 |
| 34 | M | 50 | 35 | 34 | 25 | Bilateral | Yes | Progressive as adult | 120 |
| 35 | M | 81 | 80 | 13 | 49 | Left | No | Progressive as adult | 75 |
| 36 | M | 70 | 68 | 30 | 42 | Left | Yes | Progressive as adult | 93.75 |
| 37 | M | 53 | 36 | 50 | 20 | Bilateral | Yes | Progressive as adult, noise | 120 |
| 38 | F | 74 | 72 | 56 | 35 | Left | No | Progressive as adult | 60 |
| 39 | M | 68 | 65 | 23 | 30 | Right | Yes | Meniere’s disease | 100 |
| Mean (SD) | | 67.2 (9.1) | 59.6 (12.1) | 40.2 (18.4) | 26.4 (14.6) | | | | 99.0 (18.6) |

Notes: SES: socioeconomic status; PTA: pure-tone average; HL: hearing level

Equipment and Materials

All testing took place at the Eye and Ear Institute of The Ohio State University Wexner Medical Center using sound-proof booths and acoustically insulated rooms for testing. Sentence recognition test responses were audio-visually recorded for later scoring. Participants wore FM transmitters using specially designed vests. This allowed their responses to have direct input into the camera, permitting later off-line scoring of tasks. Each task was scored by two separate individuals for 25% of responses to ensure reliable results. Reliability was determined to be >95% for all measures.

Visual stimuli were presented on paper or a touch screen monitor made by KEYTEC, INC., placed two feet in front of the participant. Auditory stimuli were presented via a Roland MA-12C speaker placed one meter in front of the participant at zero degrees azimuth. Prior to the testing session, the speaker was calibrated to 68 dB SPL using a sound level meter positioned one meter in front of the speaker at zero degrees azimuth. After the screening measures were completed, the measures outlined below were collected.

Measures of Sentence Recognition

Sentence recognition tasks were presented in the clear over a loudspeaker for CI users. NH participants listened to 8-channel noise-vocoded versions of the stimuli. Two sentence recognition measures were included to assess perception of speech under the different conditions. These research measures were selected instead of using clinical stimuli (e.g., AzBio sentences or CNC words) because participants were not familiar with these materials, and because they are challenging enough to avoid ceiling effects for CI users during testing. Sentence recognition tasks were scored for both percent correct words and percent correct whole sentences.

Perceptually Robust English Sentence Test Open-Set (PRESTO) sentences were chosen from the TIMIT (Texas Instruments/Massachusetts Institute of Technology) speech collection, which were created to balance talker gender, keywords, frequency, and familiarity, with sentences varying broadly in speaker dialect and accent (25). Sentences were presented via loudspeaker, and participants were asked to repeat as much of the sentence as they could. PRESTO sentences are high-variability, complex sentences, which would be more challenging for listeners to recognize, but also more ecologically valid, representing everyday listening conditions. An example of a sentence is “A flame would use up air.” Participants were instructed to repeat 32 sentences.

Harvard Standard sentence recognition was also tested. Thirty sentences from the Harvard Standard lists were used, which were spoken and recorded by a single male talker (26). These sentences are long, complex, and semantically meaningful, consisting of an imperative or declarative structure. An example is “A pot of tea helps to pass the evening.”

The RPM was administered as a measure of nonverbal reasoning (27). The RPM was presented visually on a touch screen computer monitor and consisted of displays of geometric designs in a 3 × 3 matrix. Each design contains a missing piece. Below the matrix are eight possible response boxes, each containing a design. Participants were instructed to select a response box on the touch screen monitor to complete the pattern. Two practice trials were presented to familiarize participants with the touch screen monitor, followed by 46 test trials. Participants completed as many trials as possible and the assessment was terminated after 10 minutes. The total number of correct items on the RPM served as the primary dependent variable.
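The RPM summary scores can be sketched in Python as follows. The function name and data layout are hypothetical. The denominator of 48 items is an assumption inferred from Table 2, where the percentage scores correspond to item counts divided by 48; items not reached within the 10-minute limit are represented as `None`.

```python
# Minimal sketch of RPM scoring (names and data layout are assumptions).
def rpm_summary(responses, total_items=48):
    """Summarize one participant's RPM responses.

    responses: list with True (correct), False (incorrect), or None
    (item not reached before the task was terminated).
    """
    attempted = [r for r in responses if r is not None]
    n_attempted = len(attempted)
    n_correct = sum(1 for r in attempted if r)
    return {
        "items_completed": n_attempted,
        "items_completed_pct": 100.0 * n_attempted / total_items,
        "items_correct": n_correct,  # primary dependent variable
        "items_correct_pct": 100.0 * n_correct / total_items,
    }


# Hypothetical participant: 21 items attempted, 10 of them correct.
example = [True] * 10 + [False] * 11 + [None] * 27
print(rpm_summary(example))
```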

Analyses

To examine differences in RPM scores between groups, two-tailed independent-samples t-tests were performed. Pearson bivariate correlations were performed to examine the relations between RPM scores and speech recognition scores. CI participants were then divided into high vs. low performers based on PRESTO scores, and individual RPM items were examined for their ability to differentiate high-PRESTO vs. low-PRESTO performers. Those items that differentiated these two groups were then used in further analyses as a “Subset RPM score,” described in detail below.
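For readers who wish to see the correlation step concretely, the following is a minimal Python sketch of a Pearson bivariate correlation and its conversion to a t statistic with n − 2 degrees of freedom. It is not the authors' analysis code (the statistical software used is not stated), and the example scores are hypothetical.

```python
# Minimal sketch of a Pearson correlation (standard library only).
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("x and y must have the same length (at least 3)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


# Hypothetical RPM items correct and percent words correct for five listeners.
rpm_correct = [5, 9, 12, 14, 20]
words_correct = [30, 45, 50, 62, 80]
r = pearson_r(rpm_correct, words_correct)
print(round(r, 3), round(r_to_t(r, len(rpm_correct)), 2))
```

The t statistic would then be compared against a two-tailed critical value for the stated degrees of freedom to obtain the significance level.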

Results

Group Differences

CI and NH samples did not differ on age (t(81) = 0.14, p = .89), gender (p = .13 by Fisher’s Exact Test), or reading scores (t(81) = −1.58, p = .12). CI and NH controls did differ on SES (t(81) = −2.98, p = .004), with CI users demonstrating lower SES than NH controls. Two-tailed independent samples t-tests were conducted to assess differences in RPM scores, with results shown in Table 2. Despite CI and NH controls attempting a similar number of items on the RPM, CI users answered significantly fewer items correctly than NH controls. On average, CI users attempted to answer 21 (44%) items and NH controls attempted to answer 23 (48%) items on the RPM, suggesting that the 48-item RPM may be too long to complete within the 10-minute time constraint (see footnote 1).

Table 2.

Raven’s Progressive Matrices scores and speech recognition scores for cochlear implant (CI) and normal-hearing (NH) participants

| | CI (N = 39) | | NH (N = 43) | | | |
|---|---|---|---|---|---|---|
| Raven’s Progressive Matrices | Mean | (SD) | Mean | (SD) | t value | p value |
| Items completed (number) | 21.1 | (8.7) | 22.8 | (8.6) | .93 | .355 |
| Items completed (% of total) | 43.9 | (18.2) | 47.6 | (18.0) | .93 | .355 |
| Items correct (number) | 9.9 | (5.0) | 13.0 | (5.8) | 2.61 | .011 |
| Items correct (% of total) | 20.6 | (10.4) | 27.1 | (12.0) | 2.61 | .011 |
| Speech Recognition | | | | | | |
| Harvard Standard Sentences (% words correct) | 71.5 | (18.4) | 65.7 | (12.3) | | |
| Harvard Standard Sentences (% whole sentences correct) | 41.1 | (23.3) | 27.8 | (14.4) | | |
| PRESTO Sentences (% words correct) | 55.9 | (24.1) | 54.5 | (11.9) | | |

Note: Speech recognition scores were not directly compared between groups because stimuli were presented in the clear for CI users and after 8-channel noise-vocoding for NH controls
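As a rough check on the group comparison of RPM items correct in Table 2, an independent-samples t statistic can be computed from the summary statistics alone. The Python sketch below assumes a pooled-variance (Student's) t-test; the source does not state whether equal variances were assumed, and the function name is hypothetical. Because the tabled means and SDs are rounded, the result (approximately 2.58) differs slightly from the reported value of 2.61.

```python
# Minimal sketch: pooled-variance t statistic from summary statistics.
from math import sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t for two independent groups, assuming equal variances.

    Returns (t, degrees_of_freedom), with t computed as group 2 minus group 1.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    std_err = sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (mean2 - mean1) / std_err, df


# RPM items correct (number), taken from Table 2: CI vs. NH.
t_value, df = pooled_t(9.9, 5.0, 39, 13.0, 5.8, 43)
print(round(t_value, 2), df)
```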

Mean (and SD) speech recognition scores are also demonstrated for both groups in Table 2. For the CI group, side of implantation (left, right, or bilateral) did not influence any speech recognition or cognitive performance scores. Also, no differences in any scores were found for CI users who wore only CIs versus a CI plus hearing aid. Therefore, the data were collapsed across all CI users in all subsequent analyses reported below.

To consider demographic and audiologic factors that might contribute to speech recognition outcomes in CI users, correlation analyses were performed among speech recognition scores and measures of age, duration of hearing loss, age at onset of hearing loss, and residual hearing (considered as the better-ear unaided PTA) in the CI users. None of these correlations were significant. Next, bivariate correlations between speech recognition measures and RPM scores were performed and are summarized in Table 3. In both groups of participants, RPM scores (items correct) were positively correlated with speech recognition measures (see footnote 2).

Table 3.

Pearson’s bivariate correlation r values for analyses among scores on Raven’s Progressive Matrices and speech recognition scores for cochlear implant (CI) and normal-hearing (NH) participants

| | RPM (# items correct) | |
|---|---|---|
| Pearson’s r | CI (N = 39) | NH (N = 43) |
| Speech Recognition | | |
| Harvard Standard Sentences (% words correct) | .35* | .57** |
| Harvard Standard Sentences (% whole sentences correct) | .46** | .47** |
| PRESTO Sentences (% words correct) | .41** | .36* |
| PRESTO Sentences (% whole sentences correct) | .47** | .46** |

Notes: * p value < 0.05; ** p value < 0.01

CI Individual Differences

Performance on the PRESTO whole sentences, our most ecologically relevant sentence test and the measure that was most strongly correlated with RPM scores, was used to categorize CI users as low vs. high performers based on a median split. CI users with PRESTO sentence scores higher than 21.67% were categorized as high-performing CI users, and those who scored lower were categorized as low-performing CI users. Fisher’s Exact Tests were used to compare low- vs. high-performing CI users on individual RPM items (correct vs. incorrect). Items that were not administered to specific participants because the 10-minute time limit was reached were treated as missing data and were not included in the Fisher’s Exact Tests of the RPM items. Low-performing CI users scored significantly worse than high-performing CI users on 12 test items of the RPM. A new variable, total number of correct items out of these twelve RPM trials, was then computed for all CI users and will be referred to as the “Subset RPM score.” These Subset RPM scores were positively correlated with the percent correct on Harvard Standard whole sentences (r = .40, p = .01) and PRESTO whole sentences (r = .48, p = .002). Correlations between CI users’ performance on sentence recognition tests and nonverbal reasoning skills were similar across the 48-item RPM and the 12-item RPM (z-test comparing the magnitude of these correlations = −0.23, p = .82 for Harvard Standard; z-test = −0.25, p = .80 for PRESTO sentences). These findings suggest that the abbreviated 12-item version of the RPM has comparable predictive validity.
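The item-level comparison described above can be sketched in Python as a median split followed by a two-sided Fisher's Exact Test on a 2×2 table (group by item correctness). This is an illustrative sketch, not the authors' code: the function names and example data are hypothetical, and the two-sided p value uses the common convention of summing all table probabilities no larger than that of the observed table, which the source does not specify.

```python
# Minimal sketch: median split plus Fisher's Exact Test for one RPM item.
from math import comb
from statistics import median


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided p value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-9))


def item_table(presto_scores, item_responses):
    """Return [high_correct, high_incorrect, low_correct, low_incorrect].

    item_responses holds True/False, or None when the item was not reached
    (treated as missing and skipped).
    """
    cutoff = median(presto_scores)
    table = {"high": [0, 0], "low": [0, 0]}
    for score, response in zip(presto_scores, item_responses):
        if response is None:
            continue
        group = "high" if score > cutoff else "low"
        table[group][0 if response else 1] += 1
    return table["high"] + table["low"]


# Hypothetical data for six CI users on a single RPM item.
presto = [10, 15, 20, 30, 40, 50]
responses = [False, False, None, True, True, True]
cells = item_table(presto, responses)
print(cells, fisher_exact_two_sided(*cells))
```

Repeating this test for each RPM item and retaining the items with significant group differences would yield the item subset; the Subset RPM score is then the count of correct responses on those items.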

As a form of replication and to test the ability of this subset of RPM items to predict speech recognition in a different population, Subset RPM scores were computed for our NH controls, using the same 12 test items as used above for CI participants. In NH controls, Subset RPM scores were also positively correlated with the percent correct on Harvard Standard sentences (r = .56, p < .001) and PRESTO sentences (r = .49, p = .001) (see footnote 3). Correlations between NH controls’ performance on sentence recognition tests and nonverbal reasoning skills were similar across the 48-item RPM and the 12-item RPM (z-test comparing the magnitude of these correlations = −0.09, p = .93 for Harvard Standard; z-test = −0.20, p = .84 for PRESTO sentences).

Discussion

Variability in outcomes for CI users continues to be an unresolved issue. Until recently, evaluation of performance has focused on the implant itself (bottom-up processes), and once the device has been deemed functional and optimally programmed, minimal options exist for further rehabilitation. This lack of an organized structure to help identify particular patient deficits and then to improve outcomes can be very frustrating for patients and clinicians alike (28). This also leads to difficulties in establishing improved criteria for implantation or pre-operative prognostication of performance for patients considering implantation.

The goal in the current study was to determine if a visual RPM measure of nonverbal reasoning could predict speech recognition in a group of post-lingually deaf CI patients, and whether an abbreviated subset of items from the RPM could be identified to develop a shorter version of the RPM for clinical use. Our findings demonstrated that in CI users there was a moderate correlation between performance on RPM and both sentence recognition measures used. Findings were similar in the NH group listening to noise-vocoded speech. This finding of a moderate correlation between tests of nonverbal reasoning, such as RPM, and sentence or speech recognition in CI users is similar to previous studies. Moberly et al. (unpublished data) recently identified RPM as a cognitive mediator of the detrimental effects of advancing age on degraded speech recognition in this population. Holden et al. examined factors that influence CI outcomes, including cognitive abilities, and found a correlation between a composite cognitive score (consisting of measures of short-term and working memory, language, reasoning and executive function, and verbal learning) and speech recognition (9). Knutson et al. also reported findings similar to ours, showing a moderate correlation (r = 0.44) of RPM and speech recognition outcomes in CI users using multiple tests of speech (17). The authors of that study supported the notion that psychological variables could play a role in CI outcomes (17). However, they concluded that RPM is a measure of generalized intelligence and, therefore, did not rely upon rapid responses, which are thought to be critical components of cognitive information-processing skills necessary for successful outcomes with a CI. This consideration of rapid responses mentioned by Knutson et al. requires further exploration. 
It is quite possible that the difference in interpretation between the results of Knutson et al. (17) and those presented in the current manuscript involves the time constraints imposed during testing (40 minutes vs. 10 minutes). Each participant in the current study was given only 10 minutes to complete the testing, which is too short a time to get through all items, and the majority of participants completed only about half of the matrices. It could be that part of the explanation for the relationship between RPM and speech recognition in our groups was related to how quickly and accurately (i.e., how efficiently) the cognitive task could be completed. This was previously postulated by Salthouse, who examined elderly subjects, whose performance on the RPM appeared to be constrained by their information-processing speed (29). This factor needs to be further explored, specifically what role underlying cognitive skills, such as information-processing speed, play in performance on tests of nonverbal reasoning. It should be noted that our groups were not made aware of any time constraints during testing; nonetheless, efficiency of responses may have contributed to their resulting RPM scores.

To expand on our initial analyses, sub-item analyses were also performed in our CI group. A goal of sub-item analyses was to determine if an abridged version of the RPM could be developed, which could be completed, scored, and interpreted rapidly in busy clinical settings. To perform these analyses, the CI group was categorized as low- vs. high-performers on speech testing, and RPM items were identified for which differences existed between the high- and low-performing groups. Twelve items were significantly different between these groups of CI users. As a form of replication using a different sample of participants, Subset RPM scores were then computed for NH controls using the same set of items. In this group, Subset RPM scores were also positively correlated with both sentence recognition tasks. Thus, this abridged version of the RPM, consisting of only twelve test items instead of the standard 48 items, should be able to be completed in only three to four minutes and may have clinical utility in predicting post-operative performance.

Identifying strong pre-operative non-auditory predictors of post-operative speech recognition is an ultimate goal of this work. Specifically, predicting CI patients’ outcome could support identification of particular individuals who are at risk for poor post-operative outcomes, improve patient counseling regarding expected post-operative outcomes, and provide the opportunity to target particular weaknesses that could be addressed in a rehabilitative program. It should be acknowledged that these bottom-up and top-down processes likely interact in a complex fashion: for example, there may be a limit to the severity of the incoming signal degradation for which top-down cognitive processes can compensate. Nonetheless, RPM appears to be a reasonable method, likely in combination with other measures, to predict speech outcomes post-operatively, and an abridged version would be much more clinically feasible. Additional studies are needed to determine this test’s prognostic utility in CI users.

Conclusion

Variability in speech recognition outcomes in CI users continues to be an ongoing issue. Our findings suggest that RPM, a visual test of nonverbal reasoning, correlates with post-operative speech recognition performance. Further analyses of the sub-items within the test revealed twelve items that predicted sentence recognition, and these items were similarly predictive in a separate NH group listening to noise-vocoded speech. An abridged version of RPM may be more useful in a busy clinical setting, and further testing using this shorter version will ideally shed light on the predictive power and feasibility of RPM. Additionally, as RPM is a complex cognitive task, additional studies are warranted to examine if more basic cognitive functions, such as information-processing speed, underlie RPM’s ability to predict speech recognition outcomes.

Acknowledgements

None.

Funding:

This work was supported by the American Otological Society Clinician-Scientist Award and the National Institutes of Health, National Institute on Deafness and Other Communication Disorders (NIDCD) Career Development Award 5K23DC015539–02 to Aaron C. Moberly.

Footnotes

Conflict of Interest Statement: ACM receives grant funding support from Cochlear Americas for an unrelated investigator-initiated research study.

No significant correlation was found between number of RPM items completed and age (r = −.10, p = .383), but a significant negative correlation was found between RPM items correct and age (r = −.45, p < .001).

Partial correlations between speech recognition measures and RPM scores remained significant after controlling for age for all speech recognition measures in both participant groups.

Partial correlations between speech recognition measures and 12-item RPM scores remained significant after controlling for age for all speech recognition measures in both participant groups.
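The age-controlled partial correlations described in these notes can be illustrated with a minimal sketch. It assumes the standard first-order partial correlation formula; the software and exact procedure used in the study are not stated here, so this is not a reproduction of the authors' analysis. The function names (`pearson_r`, `partial_r`), the variable names, and all data values are hypothetical and purely illustrative.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)


def partial_r(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    r_xy = pearson_r(x, y)
    r_xz = pearson_r(x, z)
    r_yz = pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Made-up values for six hypothetical participants (not study data).
rpm_correct = [30, 25, 35, 20, 28, 33]   # RPM items answered correctly
sentence_pct = [70, 55, 80, 40, 65, 60]  # percent words correct in sentences
age_years = [60, 70, 55, 75, 65, 58]     # covariate to control for

print("zero-order r:", round(pearson_r(rpm_correct, sentence_pct), 3))
print("partial r (age controlled):",
      round(partial_r(rpm_correct, sentence_pct, age_years), 3))
```

If the partial correlation stays close to the zero-order correlation, age accounts for little of the shared variance between the two measures; a large drop would suggest that age explains much of the association.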

References

- 1. Moberly AC, Castellanos I, Vasil KJ et al. “Product” Versus “Process” Measures in Assessing Speech Recognition Outcomes in Adults With Cochlear Implants. Otol Neurotol 2018.

- 2. Norris D, McQueen JM. Shortlist B: a Bayesian model of continuous speech recognition. Psychol Rev 2008;115:357–95.

- 3. Bhargava P, Gaudrain E, Baskent D. Top-down restoration of speech in cochlear-implant users. Hear Res 2014;309:113–23.

- 4. Poeppel D, Idsardi WJ, van Wassenhove V. Speech perception at the interface of neurobiology and linguistics. Philos Trans R Soc Lond B Biol Sci 2008;363:1071–86.

- 5. Moberly AC, Bates C, Harris MS et al. The Enigma of Poor Performance by Adults With Cochlear Implants. Otol Neurotol 2016;37:1522–8.

- 6. Moberly AC, Houston DM, Castellanos I. Non-auditory neurocognitive skills contribute to speech recognition in adults with cochlear implants. Laryngoscope Investig Otolaryngol 2016;1:154–62.

- 7. Moberly AC, Houston DM, Harris MS et al. Verbal working memory and inhibition-concentration in adults with cochlear implants. Laryngoscope Investig Otolaryngol 2017;2:254–61.

- 8. Fitzpatrick DC, Campbell AP, Choudhury B et al. Round window electrocochleography just before cochlear implantation: relationship to word recognition outcomes in adults. Otol Neurotol 2014;35:64–71.

- 9. Holden LK, Finley CC, Firszt JB et al. Factors affecting open-set word recognition in adults with cochlear implants. Ear Hear 2013;34:342–60.

- 10. Blamey P, Arndt P, Bergeron F et al. Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants. Audiol Neurootol 1996;1:293–306.

- 11. Guerit F, Santurette S, Chalupper J et al. Investigating interaural frequency-place mismatches via bimodal vowel integration. Trends Hear 2014;18.

- 12. Svirsky MA, Fitzgerald MB, Sagi E et al. Bilateral cochlear implants with large asymmetries in electrode insertion depth: implications for the study of auditory plasticity. Acta Otolaryngol 2015;135:354–63.

- 13. Won JH, Clinard CG, Kwon S et al. Relationship between behavioral and physiological spectral-ripple discrimination. J Assoc Res Otolaryngol 2011;12:375–93.

- 14. Akeroyd MA. Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. Int J Audiol 2008;47 Suppl 2:S53–71.

- 15. Pisoni DB. Cognitive factors and cochlear implants: some thoughts on perception, learning, and memory in speech perception. Ear Hear 2000;21:70–8.

- 16. Arehart KH, Souza P, Baca R et al. Working memory, age, and hearing loss: susceptibility to hearing aid distortion. Ear Hear 2013;34:251–60.

- 17. Knutson JF, Hinrichs JV, Tyler RS et al. Psychological predictors of audiological outcomes of multichannel cochlear implants: preliminary findings. Ann Otol Rhinol Laryngol 1991;100:817–22.

- 18. Jerger J, Jerger S, Pirozzolo F. Correlational analysis of speech audiometric scores, hearing loss, age, and cognitive abilities in the elderly. Ear Hear 1991;12:103–9.

- 19. Collison EA, Munson B, Carney AE. Relations among linguistic and cognitive skills and spoken word recognition in adults with cochlear implants. J Speech Lang Hear Res 2004;47:496–508.

- 20. Heydebrand G, Hale S, Potts L et al. Cognitive predictors of improvements in adults’ spoken word recognition six months after cochlear implant activation. Audiol Neurootol 2007;12:254–64.

- 21. Carpenter PA, Just MA, Shell P. What one intelligence test measures: a theoretical account of the processing in the Raven Progressive Matrices Test. Psychol Rev 1990;97:404–31.

- 22. Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res 1975;12:189–98.

- 23. Wilkinson G, Robertson G. Wide Range Achievement Test-4th Ed. Lutz, FL: Psychological Assessment Resources, 2006.

- 24. Nittrouer S, Burton LT. The role of early language experience in the development of speech perception and phonological processing abilities: evidence from 5-year-olds with histories of otitis media with effusion and low socioeconomic status. J Commun Disord 2005;38:29–63.

- 25. Gilbert JL, Tamati TN, Pisoni DB. Development, reliability, and validity of PRESTO: a new high-variability sentence recognition test. J Am Acad Audiol 2013;24:26–36.

- 26. IEEE. IEEE recommended practice for speech quality measurements: IEEE Report, 1969.

- 27. Raven J. Advanced Progressive Matrices, Set II. London: H. K. Lewis, 1962.

- 28. Harris MS, Capretta NR, Henning SC et al. Postoperative Rehabilitation Strategies Used by Adults With Cochlear Implants: A Pilot Study. Laryngoscope Investig Otolaryngol 2016;1:42–8.

- 29. Salthouse TA. Influence of working memory on adult age differences in matrix reasoning. Br J Psychol 1993;84 (Pt 2):171–99.