Summary

Objectives: Evidence-based clinical scores are used frequently in clinical practice, but data collection and data entry can be time consuming and hinder their use. We investigated the programmability of 168 common clinical calculators for automation within electronic health records.

Methods: We manually reviewed and categorized variables from 168 clinical calculators as being extractable from structured data, unstructured data, or both. Advanced data retrieval methods from unstructured data sources were tabulated for diagnoses, non-laboratory test results, clinical history, and examination findings.

Results: We identified 534 unique variables, of which 202/534 (37.8%) were extractable from structured data and 269/534 (50.4%) were potentially extractable using structured data supplemented by advanced techniques. Nearly half (265/534, 49.6%) of all variables were not retrievable. Only 26/168 (15.5%) of scores were completely programmable using only structured data and 43/168 (25.6%) could potentially be programmable using widely available advanced information retrieval techniques. Scores relying on clinical examination findings or clinical judgments were most often not completely programmable.

Conclusion: Complete automation is not possible for most clinical scores because of the high prevalence of clinical examination findings or clinical judgments – partial automation is the most that can be achieved. The effect of fully or partially automated score calculation on clinical efficiency and clinical guideline adherence requires further study.

Citation: Aakre C, Dziadzko M, Keegan MT, Herasevich V. Automating clinical score calculation within the electronic health record: A feasibility assessment. Appl Clin Inform 2017; 8: 369–380 https://doi.org/10.4338/ACI-2016-09-RA-0149

Keywords: Automation, decision support algorithm, clinical score, knowledge translation, workflow, clinical practice guideline

1. Background and Significance

Scoring is a part of modern medical practice. In general, scores have been created to predict clinical outcomes, perform risk stratification, aid in clinical decision making, assess disease severity or assist diagnosis. Methods used for scoring range from simple summation to complex mathematical models. Several factors that limit the widespread use of scores after their creation have been identified: lack of external validation, failure to provide clinically useful predictions, time-consuming data collection, complex mathematical computations, arbitrary categorical cutoffs for clinical predictors, imprecise predictor definitions, use of non-routinely collected data elements, and poor accuracy in real practice [1]. Even among scores accepted by clinicians in clinical practice guidelines, these same weaknesses can still be barriers to consistent, widespread use [2–5]. Identifying methods to overcome these weaknesses may help improve evidence-based clinical practice guideline adherence and patient care [1].

Score complexity can be a barrier to manual score calculation, especially given the time constraints of modern clinical practice. For example, the Acute Physiology and Chronic Health Evaluation (APACHE) score, widely accepted in critical care practice, consisted of 34 physiologic variables in its original iteration. Because data collection and calculation were time-consuming, subsequent APACHE scoring models (APACHE II and III) were simplified to include fewer variables to increase usage [6–8]. Fewer variables also reduced the risk of missing data elements. Other popular scores, such as CHADS2 and HAS-BLED, have employed mnemonics and point-based scoring systems for ease of use at the point of care [9, 10]. Despite these simplifications to support manual calculation, many popular and useful clinical scores have been translated to mobile and web-based calculators for use at the bedside [11–13]. The use of mobile clinical decision support tools at the point of care is a promising development; however, these tools largely remain isolated from the clinical data present in the Electronic Health Record (EHR) [14].
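To make the point-based design concrete, the following is a minimal Python sketch of CHADS2 summation: 1 point each for congestive heart failure, hypertension, age 75 years or older and diabetes, and 2 points for prior stroke or transient ischemic attack. The `Chads2Inputs` container and its field names are hypothetical; in an EHR-integrated calculator they would be filled from coded diagnoses and demographics rather than typed in by hand.

```python
from dataclasses import dataclass


@dataclass
class Chads2Inputs:
    # Hypothetical field names; in an EHR these would be populated from
    # coded diagnoses (ICD-9-CM/ICD-10-CM) and demographic data.
    congestive_heart_failure: bool
    hypertension: bool
    age_years: int
    diabetes: bool
    prior_stroke_or_tia: bool


def chads2(p: Chads2Inputs) -> int:
    """Additive CHADS2 score (range 0 to 6)."""
    points = 0
    points += 1 if p.congestive_heart_failure else 0  # C
    points += 1 if p.hypertension else 0              # H
    points += 1 if p.age_years >= 75 else 0           # A: age >= 75 years
    points += 1 if p.diabetes else 0                  # D
    points += 2 if p.prior_stroke_or_tia else 0       # S2: prior stroke or TIA
    return points


patient = Chads2Inputs(congestive_heart_failure=False, hypertension=True,
                       age_years=78, diabetes=False, prior_stroke_or_tia=True)
print(chads2(patient))  # 4
```

Because every input here is a coded diagnosis or a demographic value, no manual step remains once the fields are mapped, which is why simple additive scores of this kind tend to be straightforward to automate.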

In 2009, Congress passed the Health Information Technology for Economic and Clinical Health Act, which aimed to stimulate EHR adoption by hospitals and medical practices. As a result, by the end of 2014, 96.9% of hospitals were using a certified EHR and 75.5% were using an EHR with basic capabilities [15]. Concurrent with widespread EHR adoption, there has been a renewed emphasis on clinical quality and safety and the practice of evidence-based medicine. Integrating useful evidence-based clinical score models into the EHR, with automated score calculation based on real-time data, is a logical step towards meaningful use of EHRs to improve patient care. Time-motion studies of hospitalists and emergency department physicians have shown that they spend about 34% and 56% of their time interacting with the EHR, respectively [16, 17]. Automation of common, recurrent clinical tasks may reduce the time clinicians spend in front of the computer performing data retrieval or data entry. The potential benefits are twofold: allowing clinicians to spend more time at the bedside and reducing errors associated with data entry [18].

2. Objectives

The goal of this study was to quantify the “programmability” (defined as the opportunity to automate calculation through computerized extraction of clinical score components) of validated clinical scores.

3. Methods

3.1 Calculator identification

This study was performed at Mayo Clinic in Rochester, Minnesota and was deemed exempt by the Institutional Review Board. One hundred sixty-eight externally validated clinical scores published in the medical literature were identified as described previously [19]. In brief, we extracted online clinical calculators from twenty-eight dedicated online medical information resources [11–13, 20]. A total of 371 calculators were identified; two hundred three calculators were excluded from this analysis because they consisted of simple formulas or conversions. Only validated clinical scores published in PubMed were included in our analysis.

3.2 Score variable classification

Data variables for the remaining 168 calculators were tabulated and categorized as being theoretically retrievable from structured or unstructured EHR data sources. We defined structured EHR data sources as objective data present in the EHR in a retrievable, structured format. Examples include laboratory values (e.g. “creatinine”), ICD-9-CM or ICD-10-CM coded diagnoses (e.g. “atrial fibrillation”), medications (e.g. “Preoperative treatment with insulin”), demographic values (e.g. “age”), vital signs (e.g. “heart rate”), and regularly charted structured data (e.g. “stool frequency”). Unstructured EHR data sources included subjective variables, such as descriptive elements of clinical history, examination findings (e.g. “withdraws to painful stimuli”), non-laboratory test results (e.g. “echocardiogram findings”), and clinical judgments entered into the EHR (e.g. “cancer part of presenting problem”). Variable categorization was not exclusive: some data elements could potentially be found in both structured and unstructured data sources, depending on the clinical context.
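The non-exclusive categorization can be represented by mapping each variable to a set of possible sources rather than to a single label. The Python sketch below is illustrative only: the `Source` enum, the `CATALOGUE` entries (which reuse the examples named above) and the `partition` helper are assumptions, not the study's actual tabulation.

```python
from enum import Enum


class Source(Enum):
    STRUCTURED = "structured"      # e.g. lab values, coded diagnoses, vital signs
    UNSTRUCTURED = "unstructured"  # e.g. notes, reports, examination findings


# Illustrative catalogue (not study data). Each variable maps to the SET of
# sources that may hold it, because categorization is not exclusive.
CATALOGUE = {
    "creatinine": frozenset({Source.STRUCTURED}),
    "heart rate": frozenset({Source.STRUCTURED}),
    "atrial fibrillation": frozenset({Source.STRUCTURED, Source.UNSTRUCTURED}),
    "echocardiogram findings": frozenset({Source.UNSTRUCTURED}),
    "withdraws to painful stimuli": frozenset({Source.UNSTRUCTURED}),
}


def partition(catalogue: dict) -> dict:
    """Split variables into structured-only, unstructured-only and both."""
    both = {Source.STRUCTURED, Source.UNSTRUCTURED}
    groups = {"structured_only": [], "unstructured_only": [], "both": []}
    for name, sources in sorted(catalogue.items()):
        if sources == both:
            groups["both"].append(name)
        elif Source.STRUCTURED in sources:
            groups["structured_only"].append(name)
        else:
            groups["unstructured_only"].append(name)
    return groups


print(partition(CATALOGUE)["both"])  # ['atrial fibrillation']
```

Keeping a set per variable, rather than forcing a single label, lets the same catalogue answer both the structured-only question and the structured-plus-unstructured question without re-reviewing each variable.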

3.3 Programmability analysis

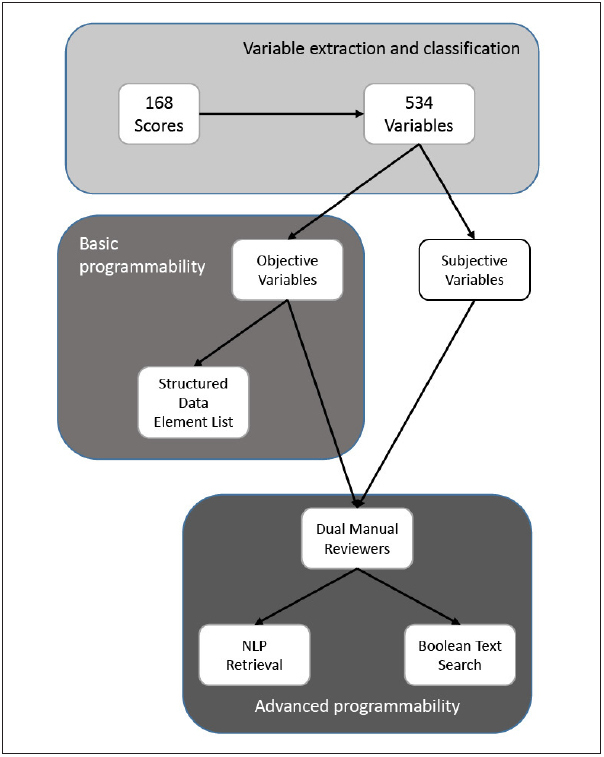

Two definitions of programmability were used in our analysis. We defined basic programmability as the proportion of variables in each score that can be retrieved using only structured or numerical data found in the EHR. Advanced programmability was defined as the proportion of variables within each score potentially retrievable by structured data, advanced information retrieval techniques, or a combination of the two. A summary of our review process is shown in Figure 1.

Fig. 1 Study Methodology.

Variables were extracted from all 168 scores and tabulated. The list of variables was compared against a list of structured variables available in the EHR. Additionally, two reviewers analyzed the theoretical extractability of each variable from the EHR with either NLP or Boolean text search.
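The two definitions can be written as simple proportions. In this Python sketch, `Variable`, `pct` and `programmability` are hypothetical names, the example score is made up rather than taken from the study, and whole-number rounding with halves rounded up is assumed to match the percentages shown in Table 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variable:
    name: str
    structured: bool  # present as a structured EHR data element
    advanced: bool    # theoretically retrievable by Boolean text search or NLP


def pct(part: int, whole: int) -> int:
    """Whole-number percentage, rounding halves up (integer math avoids float ties)."""
    return (200 * part + whole) // (2 * whole)


def programmability(variables: list) -> tuple:
    """Return (basic %, advanced %) for one score."""
    if not variables:
        raise ValueError("a score needs at least one variable")
    n = len(variables)
    basic = sum(v.structured for v in variables)
    advanced = sum(v.structured or v.advanced for v in variables)
    return pct(basic, n), pct(advanced, n)


# Hypothetical seven-variable score: six structured items plus one history item.
score = [Variable(name, True, False) for name in
         ("age", "sex", "total cholesterol", "HDL cholesterol",
          "systolic blood pressure", "diabetes")]
score.append(Variable("current smoker", False, True))
print(programmability(score))  # (86, 100)
```

Adding an examination finding such as "wheezing present", which the study treated as unextractable, lowers both figures (to 75% and 88% for eight variables) and takes the score out of the fully programmable groups.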

First, to assess the basic programmability of each score, we compared each variable against a list of structured data elements available in our local EHR. Next, two reviewers with local experience in automated score calculator creation (CA and MD) manually reviewed each variable to assess whether two advanced information retrieval techniques, Boolean logic text search or natural language processing (NLP) [21], could theoretically be used to abstract the following specific clinical variable categories when structured data were not present: diagnoses, non-laboratory test results (e.g. procedure reports, radiology reports), and clinical history. We interpreted the availability of each variable in the clinical context of each score’s target population; clinical examination findings were assumed to be unextractable. All disagreements on the ability to capture each score’s variables using advanced information retrieval techniques were resolved by adjudication between the two reviewers. Descriptive statistical methods were used. All statistical analyses were performed with R version 3.1.1 [22].
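As an illustration of the simpler of the two techniques, a Boolean text search can be sketched as an inclusion/exclusion term query over note text. The Python below is a toy under stated assumptions: the `contains` and `boolean_search` helpers and the term lists for a "history of angina" variable are hypothetical, and the exclusion list is only a blunt stand-in for real negation handling.

```python
import re


def contains(text: str, term: str) -> bool:
    """Case-insensitive whole-word or whole-phrase match."""
    pattern = r"\b" + re.escape(term) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def boolean_search(note: str, any_of: list, none_of: list) -> bool:
    """(term1 OR term2 ...) AND NOT (exclusion1 OR exclusion2 ...)."""
    return (any(contains(note, t) for t in any_of)
            and not any(contains(note, t) for t in none_of))


# Hypothetical query for a "history of angina" variable.
ANY_OF = ["angina"]
NONE_OF = ["no history of angina", "denies angina"]

print(boolean_search("Reports exertional angina for 3 months.", ANY_OF, NONE_OF))  # True
print(boolean_search("Denies angina or dyspnea.", ANY_OF, NONE_OF))                 # False
```

A note such as "No history of angina; now reports angina at rest" would be wrongly excluded by this query, which illustrates why extractability judgments for text search remain theoretical and why NLP with explicit negation detection may be needed for some variables.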

4. Results

We identified five hundred thirty-four unique variables in 168 clinical scores. The five most frequently used variables were “Age” (n = 83), “Heart rate” (n = 34), “Systolic blood pressure” (n = 34), “Creatinine/eGFR” (n = 31) and “Sex” (n = 27). Variables were categorized as follows: 25/534 (4.7%) vital signs, 7/534 (1.3%) demographic variables, 97/534 (18.2%) coded diagnoses or procedures, 131/534 (24.5%) history of present illness, 91/534 (17.0%) laboratory values, 20/534 (3.7%) medications, 133/534 (24.9%) clinical examination findings, 106/534 (19.9%) clinical judgments, 29/534 (5.4%) other clinical scores, 75/534 (14.0%) non-laboratory test results, and 40/534 (7.5%) non-vital-sign regularly charted variables. Categorization was not mutually exclusive, so these percentages sum to more than 100%: 360/534 (67.4%) variables were assigned to one category, 136/534 (25.5%) to two categories and 38/534 (7.1%) to three or more categories.
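Because a variable may carry several categories, the category counts above sum to 754 rather than 534. The one/two/three-or-more breakdown can be tabulated with a short Python sketch; the `multiplicity_summary` helper and the placeholder category sets (sized only to reproduce the reported 360/136/38 split, with made-up labels) are assumptions for illustration.

```python
from collections import Counter


def multiplicity_summary(categories_per_variable: list) -> dict:
    """Count variables assigned to 1, 2, or 3+ categories, with % to 1 decimal."""
    bins = Counter()
    for cats in categories_per_variable:
        k = len(cats)
        if k == 0:
            raise ValueError("every variable needs at least one category")
        bins["1" if k == 1 else "2" if k == 2 else "3+"] += 1
    n = len(categories_per_variable)
    return {b: (bins[b], round(100 * bins[b] / n, 1)) for b in ("1", "2", "3+")}


# Placeholder category sets sized to match the reported split (not study data).
variables = ([{"laboratory"}] * 360
             + [{"diagnosis", "history"}] * 136
             + [{"diagnosis", "history", "clinical judgment"}] * 38)
print(multiplicity_summary(variables))
# {'1': (360, 67.4), '2': (136, 25.5), '3+': (38, 7.1)}
```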

Structured data sources were available for 202/534 (37.8%) variables. Using a combination of structured data and advanced information retrieval techniques, the proportion of theoretically retrievable variables increased to 269/534 (50.4%). The remaining half of variables, 265/534 (49.6%), rely on data existing outside the EHR and cannot be reliably extracted. Basic and advanced programmability assessments for all 168 scores can be found in Table 1 and Supplemental Table 1; for brevity, Table 1 lists only scores with advanced programmability greater than 85%. Only 26/168 (15.5%) of scores were 100% programmable using solely structured data, and 43/168 (25.6%) were potentially 100% programmable when supplemented with advanced information retrieval methods. Non-programmable data elements included clinical examination findings, clinical judgments, radiology findings, and planned procedures; representative examples can be found in Table 2. The individual scores evaluated in our study are described further in Supplemental Table 1, which includes the PubMed ID, clinical outcomes predicted, applicable populations extracted from the derivation study, variables, and programmability assessments for each score.
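The three groups used in Table 1 follow directly from the two percentages. This Python sketch encodes that grouping and the >85% display cutoff; the helper names are hypothetical, while the three example rows and the 26/168 and 43/168 counts are taken from this section.

```python
STRUCTURED_ONLY = "Completely programmable using only structured data sources"
WITH_ADVANCED = "Completely programmable with advanced information retrieval methods"
PARTIAL = "Partial automation, some manual input required"


def tier(basic_pct: int, advanced_pct: int) -> str:
    """Assign a score to one of the three Table 1 groups."""
    if basic_pct == 100:
        return STRUCTURED_ONLY
    if advanced_pct == 100:
        return WITH_ADVANCED
    return PARTIAL


def listed_in_table_1(advanced_pct: int) -> bool:
    """Table 1 shows only scores with advanced programmability above 85%."""
    return advanced_pct > 85


# Three rows taken from Table 1: (basic %, advanced %).
rows = {"SOFA": (100, 100), "MELD score": (80, 100), "PESI": (91, 91)}
for name, (basic, advanced) in rows.items():
    print(f"{name}: {tier(basic, advanced)}")

# Headline proportions of the 168 scores.
print(f"{100 * 26 / 168:.1f}% structured only, {100 * 43 / 168:.1f}% with advanced methods")
# 15.5% structured only, 25.6% with advanced methods
```

Note that the 43 scores in the second figure include the 26 in the first, since any score that is fully programmable from structured data is also fully programmable when advanced methods are added.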

Table 1.

Programmability of clinical scores.

| Score name | Number of variables | Basic programmability (%) | Advanced programmability (%) |
|---|---|---|---|
| Completely programmable using only structured data sources | | | |
| ABIC score | 4 | 100 | 100 |
| ATRIA bleeding risk score | 5 | 100 | 100 |
| ATRIA stroke risk score | 8 | 100 | 100 |
| Bleeding risk score | 4 | 100 | 100 |
| CHA2DS2-VASc | 7 | 100 | 100 |
| CHADS2 | 5 | 100 | 100 |
| Charlson Comorbidity index | 16 | 100 | 100 |
| CRIB II | 5 | 100 | 100 |
| Glasgow alcoholic hepatitis score | 5 | 100 | 100 |
| JAMA kidney failure risk equation | 8 | 100 | 100 |
| LODS score | 11 | 100 | 100 |
| LRINEC Score for Necrotizing STI | 6 | 100 | 100 |
| MOD score | 6 | 100 | 100 |
| Oxygenation index | 3 | 100 | 100 |
| Pancreatitis outcome prediction score | 6 | 100 | 100 |
| PELD score | 5 | 100 | 100 |
| Ranson’s criteria | 11 | 100 | 100 |
| RAPS | 4 | 100 | 100 |
| REMS | 6 | 100 | 100 |
| Renal risk score | 6 | 100 | 100 |
| Revised Trauma score | 3 | 100 | 100 |
| Rockall score | 3 | 100 | 100 |
| Rotterdam score | 4 | 100 | 100 |
| SOFA | 6 | 100 | 100 |
| sPESI | 6 | 100 | 100 |
| TIMI risk index | 3 | 100 | 100 |
| Completely programmable with advanced information retrieval methods | | | |
| APACHE II | 14 | 93 | 100 |
| EHMRG | 10 | 90 | 100 |
| ASCVD pooled cohort equations | 9 | 89 | 100 |
| HAS-BLED | 9 | 89 | 100 |
| IgA nephropathy score | 8 | 88 | 100 |
| Framingham coronary heart disease risk score | 7 | 86 | 100 |
| SIRS, Sepsis, and Septic Shock criteria | 7 | 86 | 100 |
| RIETE score | 6 | 83 | 100 |
| MELD score | 5 | 80 | 100 |
| SWIFT score | 5 | 80 | 100 |
| Lung Injury score | 4 | 75 | 100 |
| Malnutrition universal screening tool | 4 | 75 | 100 |
| PIRO score | 8 | 75 | 100 |
| Panc 3 score | 3 | 67 | 100 |
| Surgical Apgar score | 3 | 67 | 100 |
| GAP risk assessment score | 4 | 50 | 100 |
| Clinical Pulmonary Infection Score | 7 | 43 | 100 |
| Partial automation, some manual input required | | | |
| Qstroke score | 16 | 88 | 94 |
| SAPS II | 16 | 94 | 94 |
| PRISM score | 14 | 93 | 93 |
| MPMII – 24–48–72 | 13 | 85 | 92 |
| QRISK2 | 13 | 85 | 92 |
| PELOD score | 12 | 92 | 92 |
| 30 day PCI readmission risk | 11 | 82 | 91 |
| PESI | 11 | 91 | 91 |
| QMMI score | 11 | 82 | 91 |
| SNAP-PE | 31 | 90 | 90 |
| Cardiac surgery score | 10 | 60 | 90 |
| PORT/PSI score | 20 | 85 | 90 |
| SNAP | 28 | 89 | 89 |
| Hemorr2hages score | 9 | 89 | 89 |
| SNAP-PE II | 9 | 89 | 89 |
| CRUSADE score | 8 | 88 | 88 |
| SHARF score | 8 | 88 | 88 |
| MPMII – admission | 15 | 67 | 87 |
| Mayo Clinic Risk Score – inpatient death after CABG | 7 | 71 | 86 |
| Mayo Clinic Risk Score – inpatient death after PCI | 7 | 71 | 86 |
| Mayo Clinic Risk Score – inpatient MACE after PCI | 7 | 71 | 86 |
| Meningococcal septic shock score | 7 | 71 | 86 |
| MPM – 24hr | 14 | 86 | 86 |
| SCORETEN scale | 7 | 86 | 86 |

Table 2.

Representative sample of non-programmable variables.

| Score variable | Score containing variable |
|---|---|
| Clinical examination | |
| Wheezing present | Clinical asthma evaluation score – 2, Pulmonary score, Modified pulmonary index score (MPIS), Pediatric asthma severity score (PASS) |
| Clinical judgment | |
| Alternative diagnosis as or more likely than deep venous thrombosis | Wells’ criteria for DVT |
| Cancer part of the presenting problem | Mortality probability model (MPM-0) |
| Clinically unstable pelvic fracture | TASH score |
| Another clinical score | |
| MMRC dyspnea index | BODE score |
| MMSE-KC | Multidimensional frailty score |
| Non-laboratory test result | |
| Progression of chest radiographic abnormalities | Clinical pulmonary infection score (CPIS) |
| Pancreatic necrosis | CT severity index |
| Clinical history | |
| Previous ICU admission in last 6 months | MPM-0 |
| History of angina | TIMI risk score |
| History of being hurt in a fall in the last year | Mayo Ambulatory Geriatric Evaluation |

5. Discussion

In this study, we analyzed the theoretical programmability of 168 common, evidence-based clinical score calculators available online to explore the feasibility of automated score calculators integrated into the EHR. In general, variable values can be extracted from either structured or unstructured data sources. Methods of information retrieval from unstructured data sources, such as Boolean text search and NLP, may not be available in all EHR systems and success may depend on local expertise. Consequently, we dichotomized our programmability evaluation of each variable into information retrieval methods that either (1) used only structured data (“basic programmability”) or (2) used structured data supplemented with information from unstructured data sources (“advanced programmability”). Data elements categorized as structured variables included laboratory values, diagnoses, medications, vital signs and demographic parameters. These parameters are commonly available as structured EHR data elements; availability as structured data is likely to be similar in other settings. Therefore, we would expect wide generalizability of automation methods for clinical scores that utilize only structured data sources.

Although the list of clinical score calculators we analyzed was not comprehensive, all of the included scores (1) have been externally validated in the medical literature, (2) are found on commonly used medical reference web portals or calculator repositories, and (3) have been paired with calculators to assist score computation and interpretation. Calculator availability through these online sites and apps is driven by consumer demand; most calculator repositories allow users to request the inclusion of additional calculators. Therefore, we believe that the calculators examined in this study reflect most of the in-demand scores used in clinical practice. The programmability of other important scores not included in this study could be determined through a similar process. We also noted a non-significant trend towards increased basic programmability of newer clinical scores (data not shown), potentially reflecting the use of readily available EHR data for model development and validation. If this trend is real, future evidence-based clinical scores can be expected to be highly programmable, which would facilitate automation.

Using the score calculators analyzed in our study requires manual data collection and entry into a web-based service or mobile application. Automating the data collection and entry processes would save time; these savings may be larger for scores with cognitively demanding calculations, scores requiring extensive data collection and entry, or scores that are frequently recalculated [23]. Many of the scores evaluated in our analysis, including APACHE II, SOFA, and PESI, have already been successfully automated with minimal modification [24–28]. In our analysis, we found these scores to be highly programmable; they were also clinically important at the time of automation. We propose that future efforts towards clinical score automation should be directed towards clinical scores that are both highly utilized and highly programmable. To accomplish this goal, the results of our programmability analysis should be paired with a user-needs assessment to prioritize future work.
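One way to operationalize "both highly utilized and highly programmable" is a two-step filter-then-rank, sketched below in Python. The 85% threshold, the `Candidate` and `prioritize` names, and the utilization figures are hypothetical placeholders for the output of a local user-needs assessment; a weighted composite or a clinician-ranked list would be equally defensible designs.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    advanced_programmability: int  # percent, from an analysis like Table 1
    monthly_uses: int              # HYPOTHETICAL local utilization estimate


def prioritize(candidates: list, min_programmability: int = 85) -> list:
    """Keep highly programmable scores, then rank by utilization (ties: programmability)."""
    eligible = [c for c in candidates if c.advanced_programmability >= min_programmability]
    return sorted(eligible,
                  key=lambda c: (c.monthly_uses, c.advanced_programmability),
                  reverse=True)


candidates = [
    Candidate("Score A", 100, 40),
    Candidate("Score B", 90, 300),
    Candidate("Score C", 60, 500),  # heavily used but mostly manual input
    Candidate("Score D", 100, 300),
]
print([c.name for c in prioritize(candidates)])  # ['Score D', 'Score B', 'Score A']
```

Filtering first keeps heavily used but largely manual scores (like the hypothetical Score C) out of the automation queue; such scores may instead be candidates for the semi-automated interfaces discussed below.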

Our programmability analysis has several limitations. First, the advanced programmability assessments were theoretical and based on extensive experience with these specific advanced information retrieval methods at our institution. Score calculators requiring advanced information retrieval methods for full automation will likely need further study prior to local implementation.

Second, retrieval of certain variable types may require special considerations. Diagnosis variables can be found in both structured and unstructured data sources within the EHR and linked information systems. The accuracy of diagnosis identification using diagnosis codes (ICD-9-CM, ICD-10-CM), either alone or supplemented with text search or NLP, can be suspect, producing many false positives and false negatives [29]. More comprehensive terminologies such as SNOMED CT® (Systematized Nomenclature of Medicine – Clinical Terms) may improve the accuracy of diagnosis identification as their use becomes more widespread, by virtue of their comprehensive biomedical vocabulary. Laboratory test values can have multiple naming variations that require reconciliation (e.g. samples measured from serum or plasma). Reconciliation of laboratory test data may be more difficult when an EHR retrieves results from remote sources that use different laboratory naming conventions.
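A minimal form of laboratory name reconciliation is a synonym table normalized into a reverse index, sketched below in Python. The `LAB_SYNONYMS` entries and helper names are hypothetical; a production system would more likely map results to a standard laboratory code system such as LOINC, and whether serum and plasma results may be pooled for a given score is a local clinical decision, not something this code settles.

```python
import re
from typing import Optional

# HYPOTHETICAL local synonym table: canonical concept -> raw names seen in feeds.
LAB_SYNONYMS = {
    "creatinine": ["creatinine, serum", "creatinine, plasma", "serum creatinine", "creat"],
    "potassium": ["potassium, serum", "potassium, plasma", "k+"],
}


def normalize(name: str) -> str:
    """Lower-case, trim, and collapse internal whitespace."""
    return re.sub(r"\s+", " ", name.strip().lower())


# Reverse index: normalized raw name -> canonical concept.
INDEX = {normalize(raw): concept
         for concept, raws in LAB_SYNONYMS.items()
         for raw in [concept, *raws]}


def canonical_lab(raw_name: str) -> Optional[str]:
    """Map a raw laboratory name to its canonical concept, or None if unmapped."""
    return INDEX.get(normalize(raw_name))


print(canonical_lab("Creatinine,  Plasma"))  # creatinine
print(canonical_lab("Creatine kinase"))      # None
```

Returning None for unmapped names, rather than guessing by partial string match, keeps a look-alike test such as creatine kinase from silently feeding a creatinine-based score.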

Third, many clinical scores rely on time-sensitive clinical data that may be missing from the EHR at the time of score calculation, such as clinical examination findings and clinical history. Furthermore, extraction of subjective variables by advanced information retrieval techniques is not 100% accurate. Consequently, calculator interfaces will need to allow manual entry to correct erroneous data and to input missing data or data from external sources. To give users confidence in the veracity of the data, users may want a hyperlink to the source data or a timestamp indicating when the source data were obtained.
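The manual-override and provenance requirements can be captured in a small per-input record. This Python sketch is an assumption-laden illustration: the `ScoreInput` class, its fields and the `vitals/12345` source reference are hypothetical and not tied to any particular EHR interface.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ScoreInput:
    name: str
    extracted_value: Optional[float] = None  # value pulled from the EHR, if any
    source_ref: Optional[str] = None         # HYPOTHETICAL link/identifier of the source record
    observed_at: Optional[datetime] = None   # when the source data were obtained
    manual_value: Optional[float] = None     # clinician entry; overrides extraction

    def effective_value(self) -> Optional[float]:
        """Manual entry wins over extracted data; None means still missing."""
        return self.manual_value if self.manual_value is not None else self.extracted_value

    def provenance(self) -> str:
        """Short label a calculator interface could show next to the value."""
        if self.manual_value is not None:
            return "manual entry"
        if self.extracted_value is None:
            return "missing: manual entry required"
        when = self.observed_at.isoformat() if self.observed_at else "time unknown"
        return f"{self.source_ref} @ {when}"


hr = ScoreInput("heart rate", 112, "vitals/12345",
                datetime(2016, 4, 21, 8, 30, tzinfo=timezone.utc))
print(hr.provenance())  # vitals/12345 @ 2016-04-21T08:30:00+00:00
hr.manual_value = 98    # clinician corrects a stale or erroneous reading
print(hr.effective_value(), hr.provenance())  # 98 manual entry
```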

Lastly, about half of all variables extracted from the clinical scores evaluated in our study are likely not retrievable using structured data, either alone or supplemented with advanced information retrieval techniques. Other advanced information retrieval techniques, such as machine learning and data mining, could be used to extract score variables from the EHR or clinical images [30–34]. The application of these techniques may expand the list of fully programmable calculators and increase calculation accuracy. However, even these techniques will likely not be able to retrieve many variables representing clinical history items, clinical examination findings or clinical judgments, which are the largest categories of data elements used in the selected clinical scores. Score calculators containing these data elements may require alternative strategies to incorporate these non-programmable elements. One potential strategy for semi-automated calculation of scores with non-programmable data elements would be an interface with pre-populated checkboxes or radio buttons for expected inputs. Other strategies may be needed for scores requiring raw data input, such as patient-generated family history information.
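A minimal sketch of that checkbox strategy, assuming a simple additive point score: auto-extracted items arrive pre-populated, the remaining items are presented as unanswered inputs, and until they are answered the calculator can report the range of possible totals. The item names, point values and the `partial_score` helper below are hypothetical and do not correspond to any score in this study.

```python
# HYPOTHETICAL additive score: item -> points awarded when the item is present.
ITEMS = {
    "age_over_65": 1,          # structured, auto-filled
    "creatinine_elevated": 1,  # structured, auto-filled
    "wheezing_present": 2,     # examination finding, needs a checkbox
    "clinician_concern": 1,    # clinical judgment, needs a checkbox
}


def partial_score(answers: dict) -> tuple:
    """Return (minimum total, maximum total, unanswered items).

    answers maps item -> True/False; None (or absence) means awaiting manual input.
    """
    known = sum(pts for item, pts in ITEMS.items() if answers.get(item) is True)
    pending = [item for item in ITEMS if answers.get(item) is None]
    return known, known + sum(ITEMS[item] for item in pending), pending


auto_filled = {"age_over_65": True, "creatinine_elevated": False}
print(partial_score(auto_filled))
# (1, 4, ['wheezing_present', 'clinician_concern'])
print(partial_score({**auto_filled, "wheezing_present": True, "clinician_concern": False}))
# (3, 3, [])
```

Reporting a range rather than a single number makes the effect of the missing manual inputs explicit; if the whole range falls within one risk category, the clinician may not need to complete the remaining items at all.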

6. Conclusion

We assessed one hundred sixty-eight commonly used clinical scores for programmability to facilitate EHR-based automated calculation. Only 26/168 (15.5%) of scores were completely programmable using solely structured data extractable from the EHR and 43/168 (25.6%) could potentially be programmable using widely available advanced information retrieval techniques. Partial automation with manual entry of non-programmable data elements may be necessary for many important clinical scores. The effect of fully or partially automated score calculation on clinical efficiency and clinical guideline adherence requires further study.

Multiple Choice Question

In addition to programmability, which of the following factors is most important to guide the prioritization of automated clinical score calculator development?

(A) Disease prevalence

(B) Clinician needs

(C) Frequency of score calculation

(D) Incorporation into clinical practice guidelines

Answer

All of these factors are important considerations when choosing a clinical score to automate. A score calculator for a disease with high prevalence may be used frequently, but only if the predicted outcome is useful to the clinician. Automating a score that requires frequent recalculation would save time, especially if the information retrieval and score calculation tasks are time-consuming. Incorporation of a score into clinical practice guidelines can both elevate the importance of a score and drive utilization. Clinicians are best suited to understand the local prevalence of disease, the clerical burden of recalculation, and the relative importance of the score to their practice. Therefore, (B) is the best answer.

Funding Statement

This publication was made possible by CTSA Grant Number UL1 TR000135 from the National Center for Advancing Translational Sciences (NCATS), a component of the National Institutes of Health (NIH). Its contents are solely the responsibility of the authors and do not necessarily represent the official view of NIH.

Conflict of Interest Statement

The authors declare that they have no conflicts of interest in the research.

Clinical Relevance Statement

Automated calculation of commonly used clinical scores within the EHR could reduce cognitive workload, improve practice efficiency, and facilitate clinical guideline adherence.

Human Subjects Protections

Human subjects were not included in the project.


Online Supplementary Material (XLS)

References

- 1. Wyatt JC, Altman DG. Commentary: Prognostic models: clinically useful or quickly forgotten? BMJ. 1995;311(7019):1539–1541.

- 2. January CT, Wann LS, Alpert JS, Calkins H, Cigarroa JE, Cleveland JC, Conti JB, Ellinor PT, Ezekowitz MD, Field ME, Murray KT, Sacco RL, Stevenson WG, Tchou PJ, Tracy CM, Yancy CW. American College of Cardiology/American Heart Association Task Force on Practice Guidelines. 2014 AHA/ACC/HRS guideline for the management of patients with atrial fibrillation: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines and the Heart Rhythm Society. J Am Coll Cardiol. 2014;64(21):e1–e76. doi: 10.1016/j.jacc.2014.03.022.

- 3. Kahn SR, Lim W, Dunn AS, Cushman M, Dentali F, Akl EA, Cook DJ, Balekian AA, Klein RC, Le H, Schulman S, Murad MH. Prevention of VTE in Nonsurgical Patients. Chest. 2012;141(02):e195S–e226S. doi: 10.1378/chest.11-2296.

- 4. Konstantinides SV, Torbicki A, Agnelli G, Danchin N, Fitzmaurice D, Galiè N, Gibbs JSR, Huisman MV, Humbert M, Kucher N, Lang I, Lankeit M, Lekakis J, Maack C, Mayer E, Meneveau N, Perrier A, Pruszczyk P, Rasmussen LH, Schindler TH, Svitil P, Vonk Noordegraaf A, Zamorano JL, Zompatori M. Task Force for the Diagnosis and Management of Acute Pulmonary Embolism of the European Society of Cardiology (ESC). 2014 ESC guidelines on the diagnosis and management of acute pulmonary embolism. Eur Heart J. 2014;35(43):3033–3069, 3069a–3069k. PMID: 25173341.

- 5. Mandell LA, Wunderink RG, Anzueto A, Bartlett JG, Campbell GD, Dean NC, Dowell SF, File TM, Musher DM, Niederman MS, Torres A, Whitney CG. Infectious Diseases Society of America/American Thoracic Society Consensus Guidelines on the Management of Community-Acquired Pneumonia in Adults. Clin Infect Dis. 2007;44(02):S27–S72. doi: 10.1086/511159.

- 6. Knaus WA, Wagner DP, Draper EA, Zimmerman JE, Bergner M, Bastos PG, Sirio CA, Murphy DJ, Lotring T, Damiano A, Harrell FE. The APACHE III Prognostic System. Chest. 1991;100(06):1619–1636. doi: 10.1378/chest.100.6.1619.

- 7. Knaus WA, Draper EA, Wagner DP, Zimmerman JE. APACHE II. Crit Care Med. 1985;13(10):818–829.

- 8. Knaus WA, Zimmerman JE, Wagner DP, Draper EA, Lawrence DE. APACHE—acute physiology and chronic health evaluation: a physiologically based classification system. Crit Care Med. 1981;9(08):591–597. doi: 10.1097/00003246-198108000-00008.

- 9. Gage BF, Waterman AD, Shannon W, Boechler M, Rich MW, Radford MJ. Validation of Clinical Classification Schemes for Predicting Stroke. JAMA. 2001;285(22):2864. doi: 10.1001/jama.285.22.2864.

- 10. Pisters R, Lane DA, Nieuwlaat R, de Vos CB, Crijns HJGM, Lip GYH. A Novel User-Friendly Score (HAS-BLED) To Assess 1-Year Risk of Major Bleeding in Patients With Atrial Fibrillation. Chest. 2010;138(05):1093–1100. doi: 10.1378/chest.10-0134.

- 11. Walker G. MDCalc. New York, NY: MD Aware, LLC; 2016 [cited 2016 Apr 21]. Available from: http://www.mdcalc.com

- 12. Schwartz D, Kruse C. QxMD. Vancouver, BC: QxMD Medical Software Inc.; 2016 [cited 2016 Apr 21]. Available from: http://www.qxmd.com

- 13. Topol EJ. Medscape. New York, NY: Medscape, LLC; 2016 [cited 2016 Apr 21]. Available from: http://www.medscape.com

- 14. Fleischmann R, Duhm J, Hupperts H, Brandt SA. Tablet computers with mobile electronic medical records enhance clinical routine and promote bedside time: a controlled prospective crossover study. J Neurol. 2015;262(03):532–540. doi: 10.1007/s00415-014-7581-7.

- 15. Charles D, Gabriel M, Searcy T. Adoption of Electronic Health Record Systems among U.S. Non-Federal Acute Care Hospitals: 2008–2014. ONC Data Brief, Vol. 23. Office of the National Coordinator for Health Information Technology; 2015.

- 16. Tipping MD, Forth VE, O’Leary KJ, Malkenson DM, Magill DB, Englert K, Williams MV. Where did the day go? A time-motion study of hospitalists. J Hosp Med. 2010;5(06):323–328. doi: 10.1002/jhm.790.

- 17. Hill RG, Sears LM, Melanson SW. 4000 Clicks: a productivity analysis of electronic medical records in a community hospital ED. Am J Emerg Med. 2013;31(11):1591–1594. doi: 10.1016/j.ajem.2013.06.028.

- 18. Bates DW. Using information technology to reduce rates of medication errors in hospitals. BMJ. 2000;320(7237):788–791.

- 19. Dziadzko MA, Gajic O, Pickering BW, Herasevich V. Clinical calculators in hospital medicine: Availability, classification, and needs. Comput Methods Programs Biomed. 2016;133:1–6. doi: 10.1016/j.cmpb.2016.05.006.

- 20. Post TW. UpToDate. Waltham, MA: UpToDate, Inc.; 2016. Available from: http://www.uptodate.com

- 21. Nadkarni PM, Ohno-Machado L, Chapman WW. Natural language processing: an introduction. J Am Med Inform Assoc. 2011;18(05):544–551. doi: 10.1136/amiajnl-2011-000464.

- 22. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria; 2016.

- 23. Sintchenko V, Coiera EW. Which clinical decisions benefit from automation? A task complexity approach. Int J Med Inform. 2003;70(2–3):309–316.

- 24. Harrison AM, Yadav H, Pickering BW, Cartin-Ceba R, Herasevich V. Validation of Computerized Automatic Calculation of the Sequential Organ Failure Assessment Score. Crit Care Res Pract. 2013;2013:1–8. doi: 10.1155/2013/975672.

- 25. Thomas M, Bourdeaux C, Evans Z, Bryant D, Greenwood R, Gould T. Validation of a computerised system to calculate the sequential organ failure assessment score. Intensive Care Med. 2011;37(03):557. doi: 10.1007/s00134-010-2083-2.

- 26. Mitchell WAL. An automated APACHE II scoring system. Intensive Care Nurs. 1987;3(01):14–18. doi: 10.1016/0266-612x(87)90005-8.

- 27. Gooder VJ, Farr BR, Young MP. Accuracy and efficiency of an automated system for calculating APACHE II scores in an intensive care unit. Proc AMIA Annu Fall Symp. 1997:131–135.

- 28. Vinson DR, Morley JE, Huang J, Liu V, Anderson ML, Drenten CE, Radecki RP, Nishijima DK, Reed ME. The Accuracy of an Electronic Pulmonary Embolism Severity Index Auto-Populated from the Electronic Health Record: Setting the stage for computerized clinical decision support. Appl Clin Inform. 2015;6(02):318–333. doi: 10.4338/ACI-2014-12-RA-0116.

- 29. Li L, Chase HS, Patel CO, Friedman C, Weng C. Comparing ICD9-encoded diagnoses and NLP-processed discharge summaries for clinical trials pre-screening: a case study. AMIA Annu Symp Proc. 2008:404–408.

- 30. Jiang M, Chen Y, Liu M, Rosenbloom ST, Mani S, Denny JC, Xu H. A study of machine-learning-based approaches to extract clinical entities and their assertions from discharge summaries. J Am Med Inform Assoc. 2011;18(05):601–606. doi: 10.1136/amiajnl-2011-000163.

- 31. Deo RC. Machine Learning in Medicine. Circulation. 2015;132(20):1920–1930. doi: 10.1161/CIRCULATIONAHA.115.001593.

- 32. Austin PC, Tu JV, Ho JE, Levy D, Lee DS. Using methods from the data-mining and machine-learning literature for disease classification and prediction: a case study examining classification of heart failure sub-types. J Clin Epidemiol. 2013;66(04):398–407. doi: 10.1016/j.jclinepi.2012.11.008.

- 33. de Bruijn B, Cherry C, Kiritchenko S, Martin J, Zhu X. Machine-learned solutions for three stages of clinical information extraction: the state of the art at i2b2 2010. J Am Med Inform Assoc. 2011;18(05):557–562. doi: 10.1136/amiajnl-2011-000150.

- 34. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, Venugopalan S, Widner K, Madams T, Cuadros J, Kim R, Raman R, Nelson PC, Mega JL, Webster DR. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA. 2016;316(22):2402. doi: 10.1001/jama.2016.17216.
