Abstract

A new diffusion model of decision making in continuous space is presented and tested. The model is a sequential sampling model in which both spatially continuously distributed evidence and noise are accumulated up to a decision criterion (a 1D line or a 2D plane). There are two major advances represented in this research. The first is to use spatially continuously distributed Gaussian noise in the decision process (Gaussian process or Gaussian random field noise) which allows the model to represent truly spatially continuous processes. The second is a series of experiments that collect data from a variety of tasks and response modes to provide the basis for testing the model. The model accounts for the distributions of responses over position and response time distributions for the choices. The model applies to tasks in which the stimulus and the response coincide (moving eyes or fingers to brightened areas in a field of pixels) and ones in which they do not (color, motion, and direction identification). The model also applies to tasks in which the response is made with eye movements, finger movements, or mouse movements. This modeling offers a wide potential scope of applications including application to any device or scale in which responses are made on a 1D continuous scale or in a 2D spatial field.

Keywords: Diffusion model, spatially continuous scale, response time, Gaussian process noise, distributed representations

Stimuli in laboratory research and in the real world are often continuous in space and, in the real world, responses to them are often made on continuous scales, sometimes one dimensional (1D) and sometimes two dimensional (2D). I present a new spatially continuous diffusion model (SCDM), a quantitative sequential-processing model, and show that it can explain how decisions are made about such stimuli, how decisions are expressed on continuous scales, and how decisions evolve over the time between onset of a stimulus and execution of a response.

Continuous response scales may be better suited than discrete ones in some situations for clinical patients, children, or older adults in that they remove the requirement of dividing the knowledge on which their decisions are based into discrete categories. There is also a broad range of potential applications in cognitive psychology including visual search and coordinate systems (e.g., Golomb et al., 2014), psychometric item response theory (e.g., Noel & Dauvier, 2007; Muller, 1987; Ferrando, 1999), working memory (e.g., Hardman et al., 2017; van den Berg et al., 2014), number line tasks in numerical cognition (e.g., Thompson & Siegler, 2010), relationships between binary responses, confidence judgments, and responses on continuous scales in perception and memory (e.g., Province & Rouder, 2012), fuzzy set theory (e.g., Smithson & Verkuilen, 2006), visual attention (e.g., Itti & Koch, 2001), and dynamical systems models of movements (e.g., Klaes et al., 2012; Wilimzig et al., 2006). Many of this list of studies used representations of stimuli and responses on continuous scales but did not examine or model the time course of processing, something that is essential to understanding decision making. This modeling approach also fits naturally with models of neural population codes (e.g., Beck et al., 2008; Deneve et al., 1999; Georgopoulos et al., 1986; Jazayeri & Movshon, 2006; Liu & Wang, 2008; Nichols & Newsome, 2002; see the review and challenge for diffusion modeling in Pouget et al., 2013). The SCDM can also be seen as an extension of dynamical systems and population code models that allows them to account for both response choices and the distributions of response times (RTs).

The SCDM is also an extension of one of the most successful models of simple decision making, the sequential sampling, diffusion decision model for two-choice decisions (Ratcliff, 1978; Ratcliff & McKoon, 2008; Ratcliff, Smith, Brown, & McKoon, 2016). That model explains the choices individuals make and the time taken to make them by assuming that noisy evidence is accumulated over time to one of two decision criteria. This and related models have been influential in many domains, including clinical research (Ratcliff & Smith, 2015; White, Ratcliff, Vasey, & McKoon, 2010), neuroscience research, and neuroeconomics research (Gold & Shadlen, 2001, 2007; Krajbich, Armel, & Rangel, 2010; Smith & Ratcliff, 2004). There is also a growing body of evidence that diffusion models provide a reasonable account of the mappings between behavioral measures and neurophysiological measures (e.g., EEG, fMRI, and single-cell recordings in animals; see the review by Forstmann, Ratcliff, & Wagenmakers, 2016). The model is also being used as a psychometric tool in studies of differences among individuals (e.g., Ratcliff, Thapar & McKoon, 2010, 2011; Ratcliff, Thompson, & McKoon, 2015: Schmiedek et al., 2007; Pe, Vandekerckhove, & Kuppens, 2013).

Historically, the earliest models for two-choice decisions were random walk models or counter models (LaBerge, 1962; Laming, 1968; Link & Heath, 1975; Stone, 1960; Smith & Vickers, 1988; Vickers, Caudrey, & Willson, 1971) in which evidence entered the decision process at discrete times (see Ratcliff & Smith, 2004, for an evaluation of model architectures). The advance from discrete random walk processes to continuous diffusion processes resulted in an explosion of theoretical and applied research (much of it in the last 15 to 20 years). I believe that the advance from modeling the time course of discrete decisions to the time course of decisions in continuous space could have the same theoretical and applied impact.

Diffusion models have also been used for multi-choice decisions. For example, Roe, Busemeyer, & Townsend (2001; Busemeyer & Townsend, 1993) developed decision field theory and applied it to tasks with multi-alternative decisions and multi-attribute stimuli. According to the theory, at each moment in time, options are compared in terms of advantages and disadvantages with respect to an attribute and these evaluations are accumulated across time until a threshold is reached. The first option to cross the threshold determines the choice that is made. The theory accounts for a number of findings that seem paradoxical from the perspective of rational choice theory. Another domain that has been studied intensively involves confidence judgments. When individuals are asked to indicate how confident they are in the correctness of a decision, they typically do so by choosing one of several categorical responses (e.g., very confident, somewhat confident, etc.). Like the two-choice model, multi-choice diffusion models have provided a detailed explanation of choices and RTs (Leite & Ratcliff, 2010; Niwa & Ditterich, 2008; Pleskac & Busemeyer, 2011; Ratcliff & Starns, 2009, 2013; Voskuilen & Ratcliff, 2016). However, despite the tradition in which confidence judgments are measured in discrete categories, confidence should be seen as a continuous dimension in some situations, not a discrete categorical one, and the modeling presented here might apply to such confidence judgments made on a continuous scale.

The Spatially Continuous Diffusion Model

The core of the SCDM is conceptually simple: It is a sequential sampling model in which information from a stimulus is represented on a continuous line or plane and evidence from it is accumulated up to a decision criterion, which is also a continuous line or plane. Key to the model’s success is that the noise added to the accumulation process is spatially continuously distributed. To demonstrate the potential of the model, the experiments below tested it across a range of tasks, stimuli, and response modalities. The tasks were brightness, color, and direction-of-motion discriminations with static and dynamic displays with responses made on the same scale as stimuli were displayed or with responses and stimuli decoupled. Responses were made on 1D circles, arcs, and lines and 2D planes and they were given by eye, finger, and mouse movements. The effects of each independent variable were measured in at least two experiments to address the replicability of the effects.

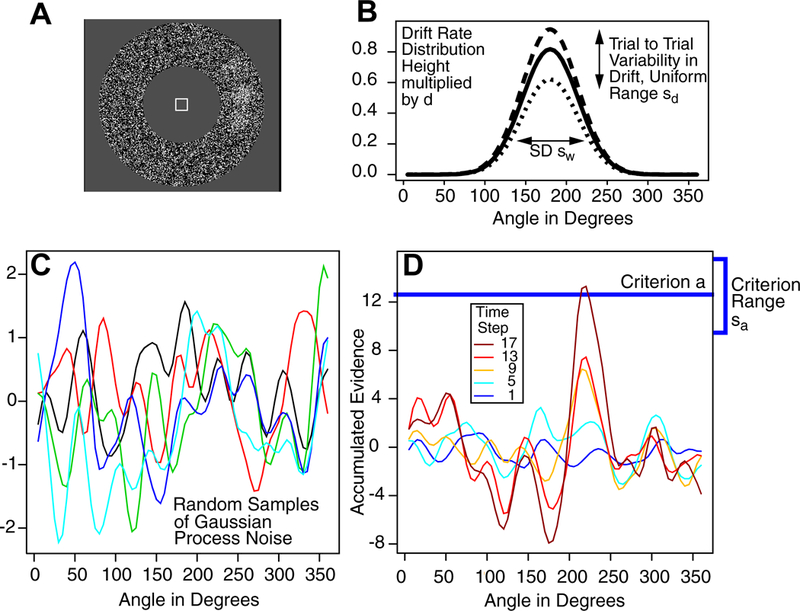

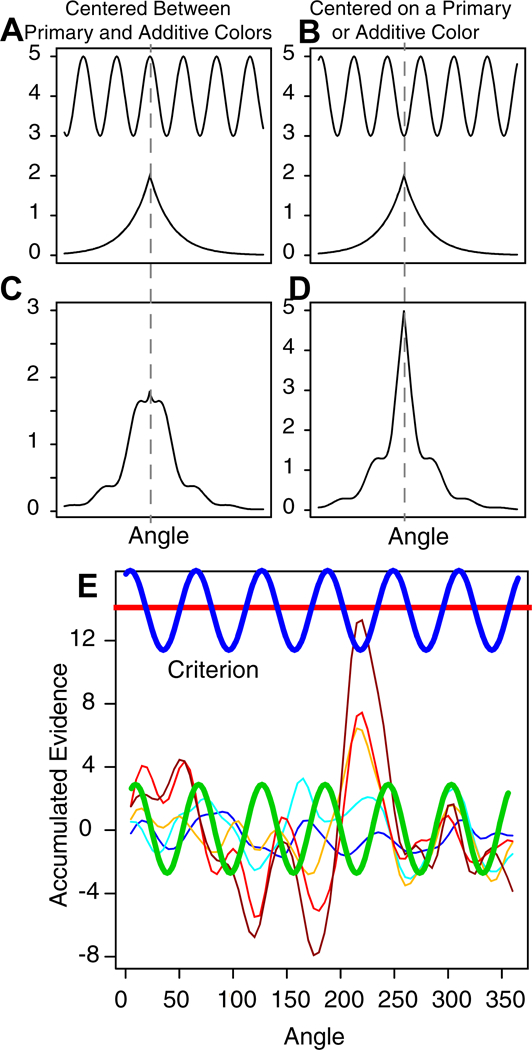

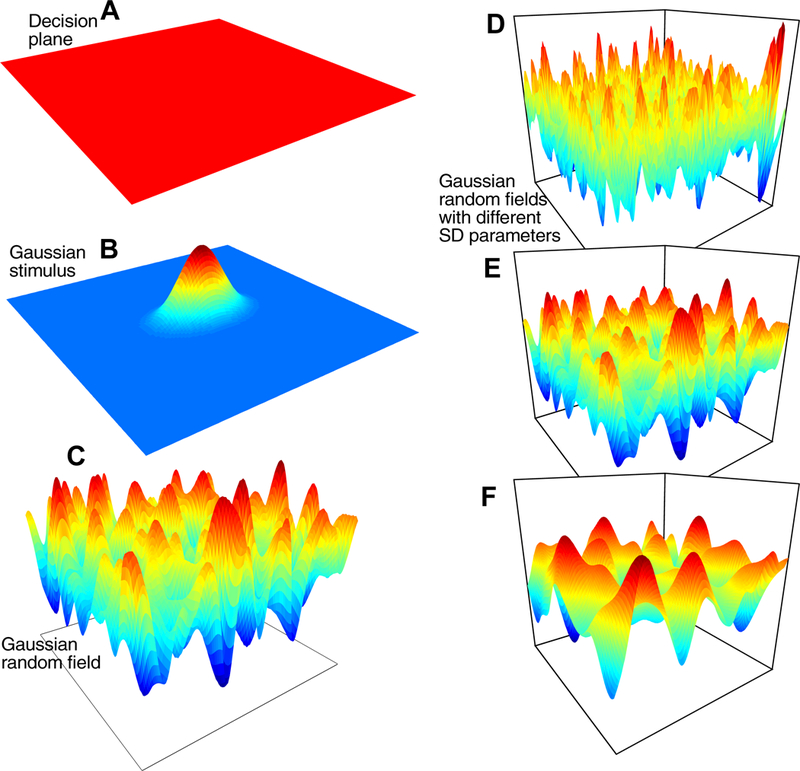

Figure 1 illustrates the model for a 1D task for which subjects move their eyes from a central fixation point to the location on a circle (actually an annulus) that is the brightest, that is, the greatest concentration of white pixels (Figure 1A). The heavy line in Figure 1B shows the representation of a stimulus for which the center of a bright patch is at an angle of 180 degrees from an arbitrary zero point. The dashed and dotted lines show variability across trials, which is discussed later. A Gaussian distribution is used for the representation because 2D Gaussian distributions were used to generate the patches, but a circular Gaussian von Mises distribution, traditionally used in modeling tasks with circular response fields (Smith, 2016; Zhang & Luck, 2008), could also be used. Gaussian and von Mises distributions are probably indistinguishable in the applications presented here. The representation of the stimulus determines the rate (drift rate) at which evidence is accumulated toward a criterion, with the highest drift rates at and near the center of the distribution and decreasing with distance from the center. A response is executed when the amount of accumulated evidence reaches the criterion. In tasks with more than one stimulus (e.g., two or more patches of bright pixels, or more than one motion direction), the stimulus distribution has two or more Gaussian distributions, one for each stimulus.

Figure 1.

(A) An example stimulus display for a task in which the subject moves his or her eyes from the central fixation square to the brightest area on the surrounding annulus. (B) A representation of the normally distributed stimulus representation. (C) Six examples of random Gaussian process noise. (D) Five samples of accumulated information with the last reaching the decision criterion (the blue horizontal line).

Noise in the accumulation of evidence for a 1D stimulus is represented by a spatially continuously distributed Gaussian process. For a Gaussian process, at any point on the spatial dimension, noise in the evidence dimension has a Gaussian distribution. There is a correlation between nearby points on the spatial dimension and there is a kernel parameter of the model that determines the range of this correlation. The SD in the evidence dimension (within-trial variability or diffusion coefficient) is set to 1 per 10 ms step and it acts as a scaling parameter in the same way as within-trial noise in the two-choice diffusion model. For tasks with circular displays, I have not attempted to make the Gaussian process noise continuous over the 360 degree to 0 degree boundary that is present in the current modeling. Until this becomes an issue that is critical in modeling data, it is left for future modeling.

Figure 1C shows examples of Gaussian process noise for 1D stimuli; the five lines show noise across angles, horizontally, and across time, vertically. The distribution of noise is added to the evidence from the representation of the stimulus (Figure 1D) and the evidence from the sum of the two proceeds through time until it reaches criterion at some location on the circle (the blue line in 1D). For 2D stimuli, the idea is the same except that variability is represented by Gaussian random field noise. Gaussian processes and Gaussian random fields are active areas of research in machine learning (Lord, Powell, & Shardlow, 2014; Powell, 2014). For example, because random Gaussian processes are summed (along with the signal), the accumulation process can be seen as a time autoregressive spatial model (Storvik, Frigessi, & Hirst, 2002).

The accumulation process is assumed to be continuous in space and time but to simulate it, discrete time steps and discrete spatial locations are used. For any simulation of a continuous process on a digital computer, the continuous process must be approximated by a discrete one. A later section in this article presents a discussion of how to scale the process to change the sizes of the steps in space and time to approach continuous processes with smaller time steps and more points on the continuous spatial dimension.

It is assumed that evidence for one location is evidence against the others such that the total amount of accumulated evidence is constant across time (normalized to zero at each time step; e.g., Audley & Pike, 1965; Bogacz et al., 2006; Ditterich, 2006; Niwa & Ditterich, 2008; Ratcliff & Starns, 2013; Roe, Busemeyer, & Townsend, 2001; Shadlen & Newsome, 2001). Ratcliff and Starns (2013), in their confidence and multichoice model, showed that normalization of the evidence (so that the mean over all the accumulators was zero) on each time step allowed the model to account for shifts in RT distributions that occur for about half of the subjects in their experiments.

It is also assumed that the amounts of accumulated evidence for nearby angles are correlated (because the angles are close together). Because of the noise in the accumulation process, the time it takes for evidence to reach the criterion varies and sometimes the accumulated evidence reaches the wrong location. Total RT is the time to reach criterion plus the time to encode the stimulus into decision-relevant information and the time to execute a response. The latter two, which are outside the decision process itself, are added together in one component of the model that is called nondecision time.

The assumptions that there is noise in the process of accumulating evidence and that the amount of accumulated evidence is constant across time are shared with the two-choice diffusion model. There are three other shared assumptions: One is that the three components of processing (drift rate, criterion, and nondecision time) are independent of each other. Another is that the value of the criterion is under an individual’s control; setting it higher means longer RTs and better accuracy and setting it lower means shorter RTs and lower accuracy. The independence of drift rate and criterion means that an individual can set the criterion to value speed over accuracy (or accuracy over speed), no matter what his or her drift rate, and an individual with high drift rate (or low drift rate) can respond more or less quickly, depending on where he or she sets the criterion. The third shared assumption is that there is variability across trials in drift rate, criterion setting, and nondecision time, reflecting individuals’ inability to hold processing exactly constant from one trial of a stimulus to another. Variability in drift rate is represented by random variation in the height of the drift-rate distribution, illustrated by the three lines in Figure 1B. When there is more than one stimulus in the display, trial to trial variability in the height of the drift rate distributions can act to make the internal representation of a weaker stimulus stronger than a strong stimulus. This acts like an attentional mechanism with a focus on a weaker stimulus on some trials (though random noise is the major determinant of choices of weaker stimuli and random responding away from any stimuli).

The most important feature of the SCDM is that the stimulus representation (which determines drift rates), the noise in the accumulation of evidence, and the response criteria are all continuous in space. That representations of stimuli have a Gaussian distribution is straightforward. However, the assumption about noise is less so because theoretical assumptions about continuously distributed noise across space have received almost no attention in psychology. Earlier versions of my approach, since discarded, assumed multiple accumulators, but this always raised the issue of granularity (e.g., how many accumulators for a circle, 36, 360, 3600?) and the question of scaling the number of accumulators. Moving to continuous noise makes accumulation in continuous space possible. As mentioned, for fitting the model to data, the continuous functions are approximated with discrete functions but there is a simple transformation of model parameters to vary the number of discrete points.

In Figure 1C, for any angle, a straight vertical line drawn through samples for that angle (five are shown) would produce a Gaussian distribution on the vertical line. A smooth continuous function across angles (on the x-axis) is generated by a kernel function; a standard one was used here, a squared exponential, (K(x,x’)=exp(−(x-x’)2/(2r2)), where x and x’ are two points, K is a matrix, and r is a (kernel) length parameter that determines how smooth the function is. If r is varied from small to large, the correlation in noise between nearby points starts small and becomes larger. A small value of r would give a function with more peaks and troughs and larger value of r would give a function with fewer peaks and troughs. (The precise form of the kernel function is likely to be unimportant as long as it is unimodal because samples are accumulated.)

To obtain random numbers from the Gaussian process, the square root (R) of the kernel matrix, K (K=R’R, where R is an upper triangular matrix), is multiplied by a vector of independent Gaussian distributed random numbers (with SD 1) to produce the smooth random function (Lord et al., 2014). If r is relatively small, the matrix R will have only a few values off the diagonal and only points close together in the random vector will be smoothed together resulting in a jagged Gaussian process function. If r is relatively large, the matrix R will have many off-diagonal elements that are not small and the Gaussian process function will be smooth with few peaks and troughs. In Figure 1C, r is 10 degrees.

Figure 1D shows the amounts of accumulated evidence at each angle for time steps from 1 to 17, with the process terminating at the 17th time step at an angle of about 215 degrees. The peak emerges gradually with the spread of activity around the peak determined by the standard deviation (SD) of the drift-rate distribution and the kernel length parameter.

The parameters of the model that are common across tasks are nondecision time (Ter), the range of nondecision times (st, uniformly distributed), criterion (or boundary) setting (a), the range of the boundary setting (sa, uniformly distributed), the Gaussian process kernel parameter (r), the across-trial range in the height of the drift-rate distribution (sd, uniformly distributed), and the standard deviation in the drift-rate normal distribution (sw). In addition, there is one parameter for each of the conditions in an experiment that differ in difficulty, where the parameter (di) represents the mean height of the drift-rate distribution. The appendix shows how each of these parameters affects RT and accuracy. The parameters of the 2D model are described in the section on that model.

Fitting the Model to Data

I do not know of any exact solutions for DIXCthe probabilities of responses across the criterion line (i.e., the probabilities of responses at each angle) or for the distributions of RTs, so simulations are used (usually 10,000 simulated trials) to generate predictions. The data generated from the simulations are compared to the empirically obtained data and then the generating parameters are adjusted with a SIMPLEX fitting routine to obtain the best match between simulated and empirical data. The data for all the conditions of an experiment are fit to the model simultaneously and the data for each subject are fit individually. In this article, my aim was not to explore model fitting methods to find an optimal method but rather to use a fairly straightforward and robust method to show that the model can fit the data. Other methods might produce better fits but this is a topic for future investigation.

In order to generate predictions and fit the model, both time and space have to be made discrete. We fit the model using 10 ms time steps and 5 degree spatial divisions. The model parameters are presented in terms of 10 ms time steps and 1 degree spatial divisions. The equation for the update to a spatial position at each 10 ms time step is the standard Δxi=viΔt + σηi √Δt, where σ(=1) is the SD in within-trial noise, vi is the height of the drift rate location at spatial position i, xi is the evidence, and ηi is a normally-distributed random variable with mean zero and SD 1. Note that the samples of noise are not correlated across time steps, but they are correlated across spatial position (as in Gaussian process noise). This means that the samples of noise are not independent across spatial locations.

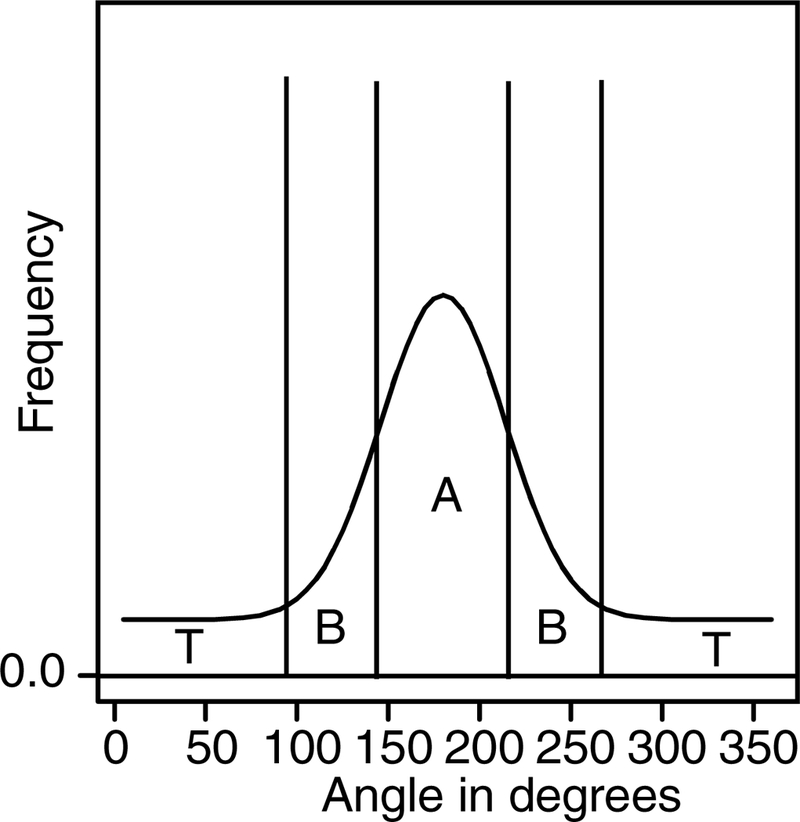

To fit the model, the data are grouped into three categories: the area around the central peak (the A area in Figure 2); the two areas just outside the central peak (B’s in Figure 2), and the combination of all the other areas (T’s - for tail - in Figure 2). Two areas, A and B, were needed for the center because when only one area was used, there was an identifiability problem because a high narrow central peak mimicked the lower wider peak shown in Figure 2. Using the two areas (A and B) solved the problem because the two areas constrained the fitting method to produce responses across the range around the peak. In experiments with more than one response location, two areas A and B were used for the strongest peak but only one area (C, D, etc.) was needed for each of the weaker peaks. The cutoffs that defined the areas A and B were selected based on the mean experimental results with a check that each individual had a B area that contained some of the tail of the distribution around the central peak.

Figure 2.

A plot of a hypothetical distribution of responses showing how they are divided into the central proportion (A), the side lobes (B), and responses outside the stimulus range (T). This division is used to group the data to provide RT distributions for model fitting. When there were two or more possible targets, areas C, D, etc. were added for the weaker targets and these represented areas A and B combined for those stimuli.

For fitting the model and for displaying data and model predictions, RT distributions are represented by 5 quantiles, the .1, .3, .5, .7, and .9 quantiles. The quantiles and the probabilities of responses for each region for each condition of an experiment are entered into a minimization routine and the model is used to generate the predicted cumulative probability of a response occurring by each quantile RT. Subtracting the cumulative probabilities for each successive quantile from the next higher quantile gives the proportion of responses between adjacent quantiles. For a G-square computation, these are the expected proportions, to be compared to the observed proportions of responses between the quantiles (i.e., the proportions between 0, .1, .3, .5, .7, .9, and 1.0, which are .1, .2, .2, .2, .2, and .1). The proportions for the observed (po) and expected (pe) frequencies and summing over 2Npolog(po/pe) for all conditions gives a single G-square (log multinomial likelihood) value to be minimized (where N is the number of observations for the condition). A standard SIMPLEX minimization routine was used to adjust the model parameters to minimize G-square. To avoid the possibility that the fitting process ended up in a local minimum, the SIMPLEX routine was restarted 8 times with 40 iterations per run and then finally run with 200 iterations (for the last 100 to 150 iterations, usually there was no change in the model parameters).

Besides the possibility of local minima in fitting the model to data, there were some other problems. In fitting the model (and in two-choice modeling), if nondecision time is too large and across-trial variability in nondecision time is small, it is possible for there to be no overlap between the predicted and data distributions at the lower quantiles. This means that a probability cannot be assigned to the lower quantile RTs and this produces numerical overflow in the programs. To deal with this, a value of nondecision time at the low end of the range for successful fits to data was selected along with a large value of across-trial variability in nondecision time. These were fixed for the first two runs of the SIMPLEX routine. This allowed other parameters to move to values nearer their best-fitting values. For the third iteration, all the parameters were free to vary which allowed nondecision time to move to a value near the best-fitting value for those data. The fourth iteration started with the across-trial range in nondecision time divided by 2.5 to counteract the large value used in the initial runs (without this adjustment, it took a lot of restarts of the SIMPLEX routine for this parameter to move to a stable lower value). Also, nondecision time was not allowed to become shorter than 175 ms because a value much lower than this is implausible given the encoding and response output processes and translation between the stimulus representation and the decision variable processes that were needed (this is discussed later). Initial values of the parameters were near the mean of those from a first run of the model fitting program and were the same for each subject, i.e., they were not adjusted for each subject. The method described above was robust to moderate changes in the initial values (e.g., a 30–50% change in them).

Experiments

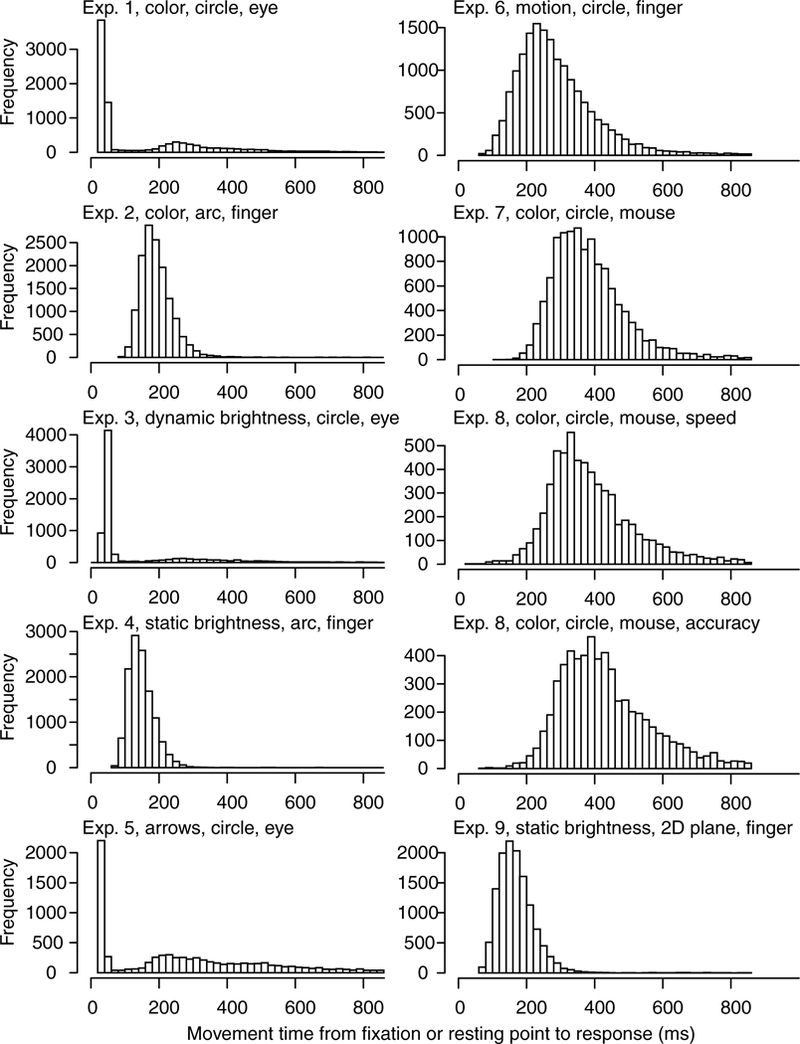

There is little guidance in the literature on how to design experiments to examine performance on tasks with responses on continuous scales while at the same time measuring RTs. There are paradigms with responses on continuous scales but none that I know of that are designed to provide RT measures, especially with the constraint that decision processing should be completed prior to initiating a response.

RTs were measured from the onset of a stimulus until subjects’ eyes, finger, or mouse left a resting location. Reducing the possibility of movement before a decision was a major constraint on the development of the paradigms used here. To do this, in all the tasks, subjects were instructed to make movements only after they had made their decision. Furthermore, they were instructed to move directly to the response area to make their response. Feedback was provided if the movement from the resting point to the response location was too slow. There were some false starts with experiments that did not control or give feedback on the duration of the movement. In these, some subjects clearly lifted their finger or moved their eyes very quickly after stimulus presentation and before they made their decision, and then moved to make the response (often with slow movement times). In tasks with eye movements, eye position was recorded every millisecond and this allowed tracks to be examined. In some cases, tracks to intermediate points with a fixation at that earlier point were recorded (Kowler & Pavel, 2013). Then the eyes moved to make a response. The experiments reported here eliminated the majority of these behaviors with careful instructions, monitoring, and feedback if movement times were too long.

Recently there has been concern about the lack of replicability of studies in psychology and, historically, there has been concern that models or empirical results apply only to the specific design of a single experiment. To address these concerns, nine experiments were performed with four kinds of tasks. Each major empirical and modeling result was replicated at least once. The tasks allowed generalization over response modes, types of stimuli, and types of decisions.

Data and model fits are presented from a series of experiments with manipulations that involve the task, the stimulus, the response mode, and the mapping from stimulus to response. The first six experiments use different response methods, namely, eye fixations and touch-screen finger movements. The question was whether these modalities produce qualitatively similar or different patterns of results. The first two experiments present a patch of colored pixels in a central location, with one color dominant, and the task is to move the eyes or finger (usually the index finger) to the position on a color annulus or color half annulus that matches the dominant color in the stimulus. The second two experiments present an annulus or half annulus of black and white pixels with some areas brighter or darker than the background (more white or more dark pixels respectively) and the task is to move the eyes or finger to the brightest or darkest area (alternating from trial block to trial block). In the experiment with black and white pixels, the stimulus and response are physically the same whereas in the color experiment, the subject has to map between a degraded central color stimulus and the non-degraded response area. The next two experiments use quite different stimuli and the task is to respond by moving hand or eyes to a point on a surrounding annulus that best matches the stimulus. The fifth experiment uses stimuli that are a collection of arrows with some proportion pointing in the same direction. The task is to move the eyes to a position on the response annulus corresponding to the dominant direction. The sixth experiment is a moving dots experiment with three directions of motion and with one stronger than the others. The task is to move the finger to the position on a response annulus that corresponds to the dominant direction of motion. The next two experiments are mouse based versions of Experiment 1 and the last experiment is a version of Experiment 3 but with stimuli presented in a rectangular 2D array and requiring a touch screen response in the 2D space.

All the subjects were Ohio State University students in an introductory psychology class who participated for class credit. A small proportion (less than 5%) finished only a few trials in an experiment before deciding to leave and were eliminated. A few others had trouble with the eye-movement apparatus (e.g., excessive blinking, inability of the system to provide accurate eye fixation data) and were also eliminated. The aim was to collect data from 16 subjects in each experiment but in a few cases, the number who signed up for an experiment was more than 16 and data from all of them was used.

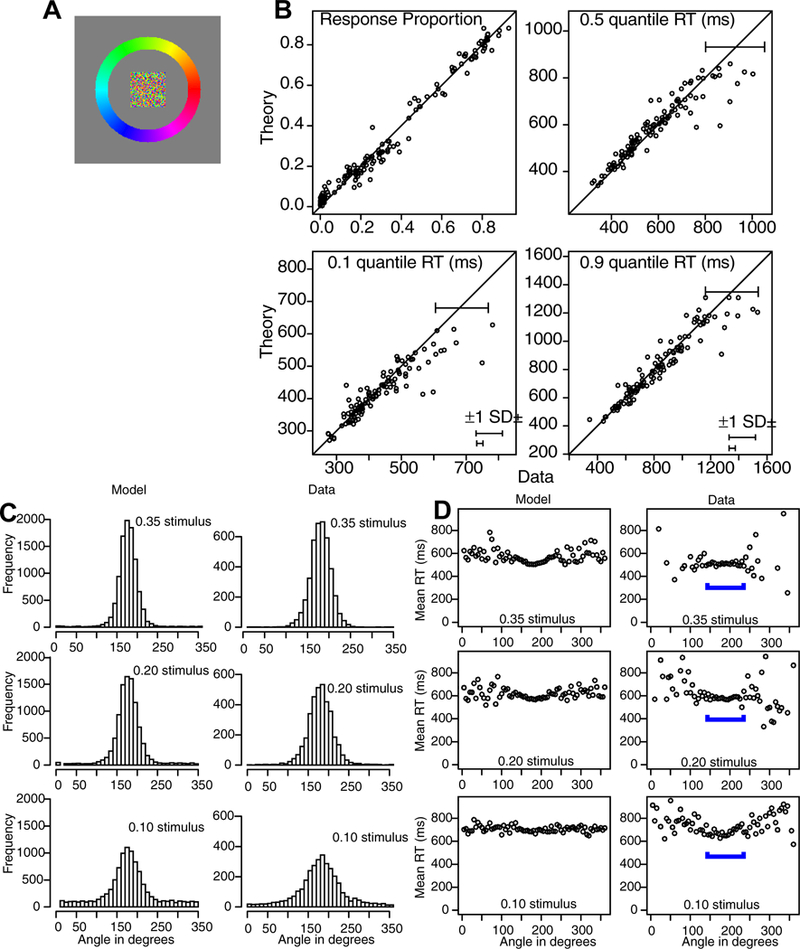

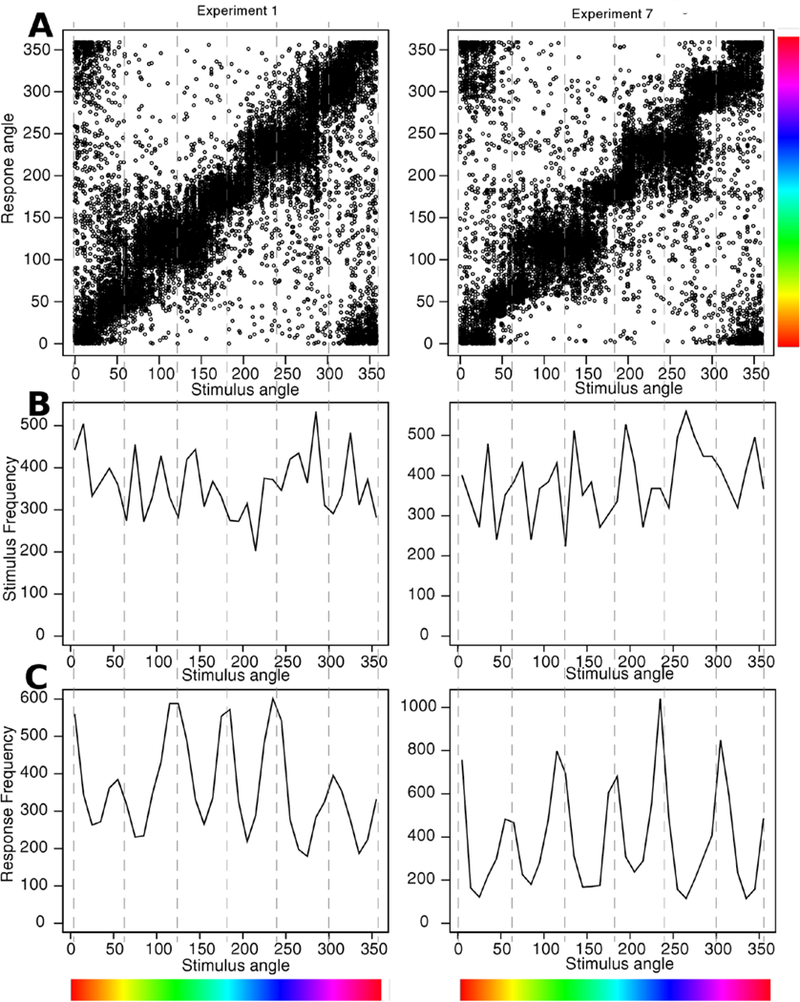

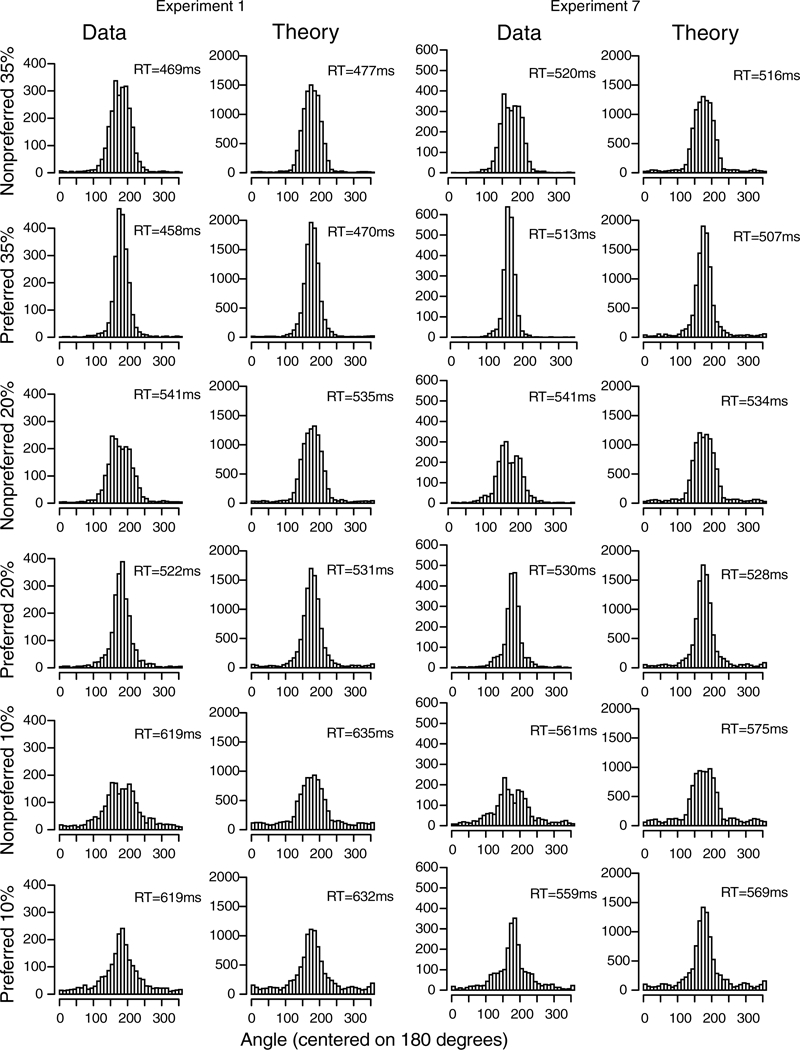

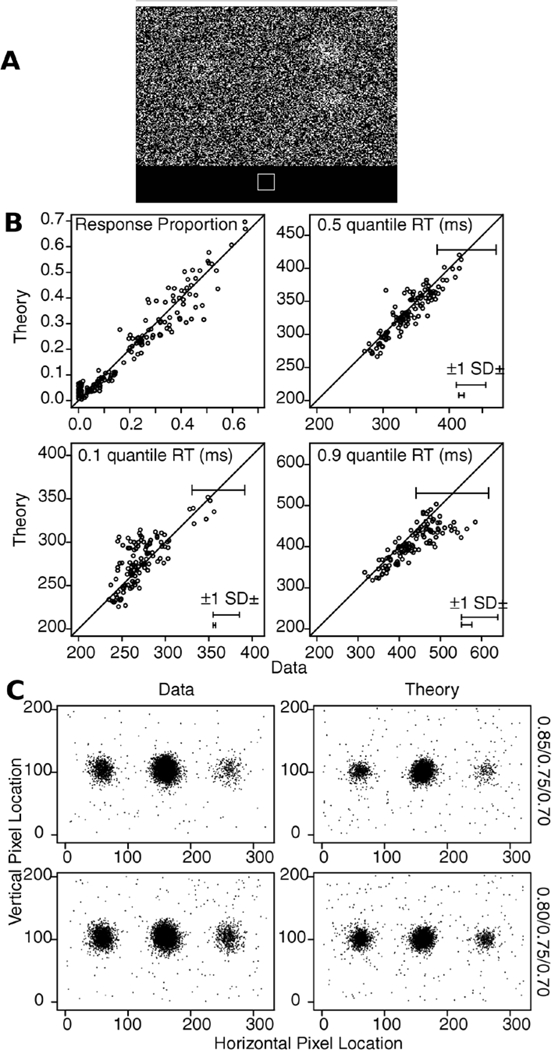

The data and predictions of the model for them are displayed in two ways, illustrated with the data from Experiment 1. The stimuli (Figure 3A) were center patches surrounded by a circular annulus and a subject’s task was to move his or her eyes from the center patch to the location on the annulus that matched the most dominant color in the center patch. The data were aligned so that the correct response was at 180 degrees. Then, as in Figure 2, the annulus was divided into the region around the (180 degree) location that best matched the center patch (the A region, Figure 2), the regions immediately on each side of it (the B regions), and all the other regions (the T regions).

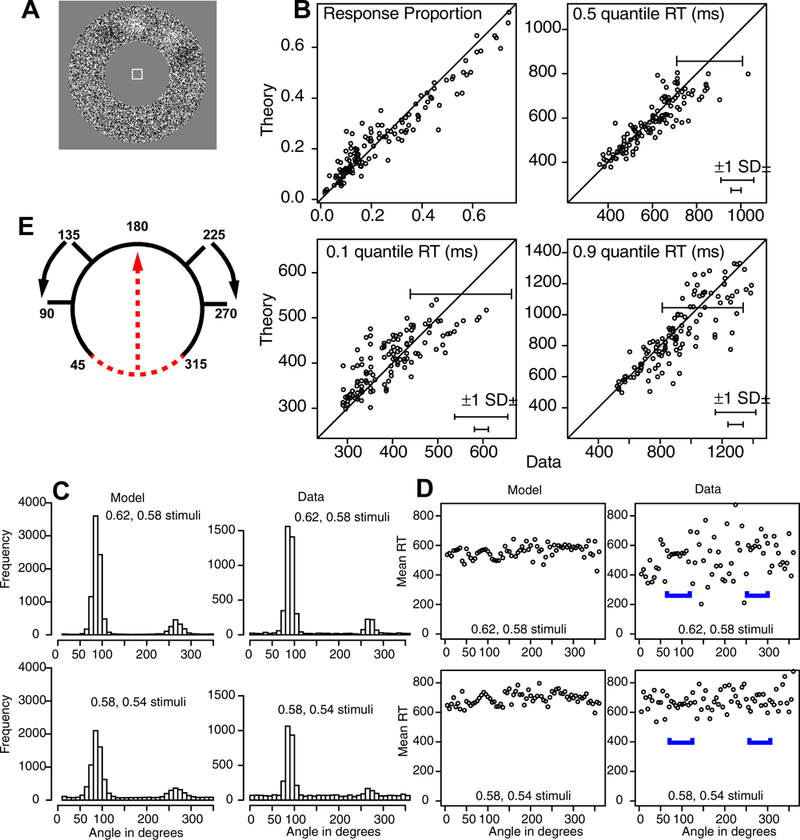

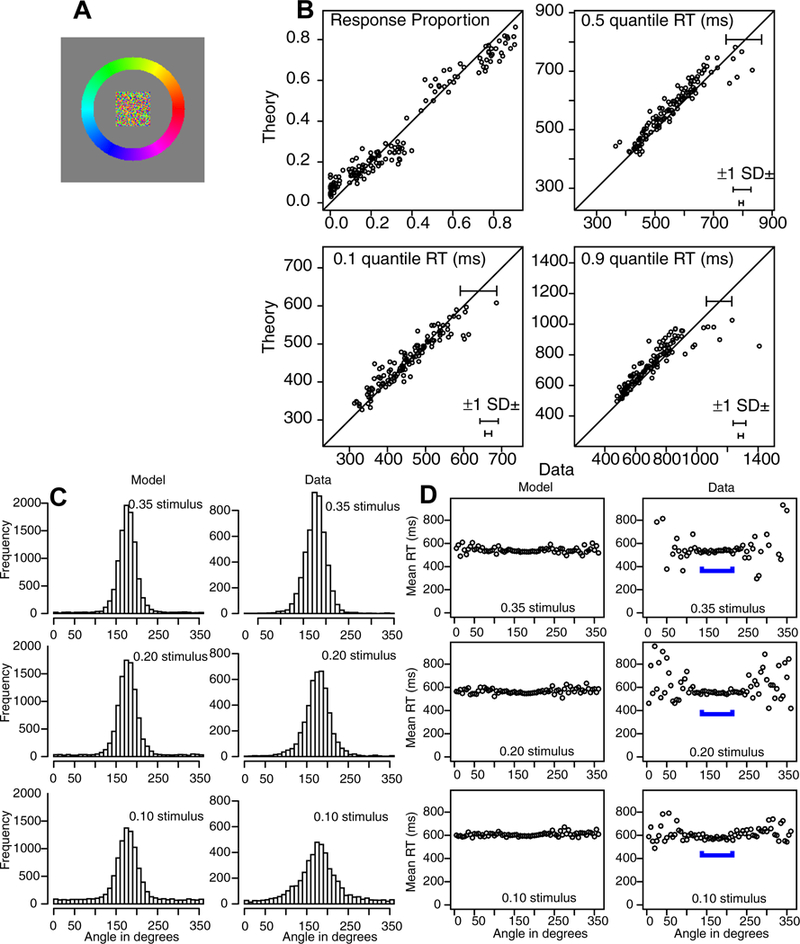

Figure 3.

Stimulus and results for Experiment 1. A: An example of the stimulus and response configuration. B: Plots of model predictions plotted against data of the proportion of A, B, and T responses (see Figure 2) and the 0.1, 0.5 (median) and 0.9 quantile RTs for all the conditions for data from each individual subject. The horizontal error bars in the bottom right corner represent the minimum and maximum 1 SDs in the quantile RTs derived from a bootstrap analysis. The error bars in the top right show a 2 SD error bar from the maximum of the error bars in the bottom right corner. This provides an upper bound of the variability in the quantile RTs. C: Histograms of responses for the model and data as a function of angle for all data from subjects combined with the stimulus aligned on 180 degrees. D: Plots of mean RT for theory and data as a function of angle averaged over subjects. The blue brackets show the angles with most responses as shown in Panel C (and hence with lowest variability).

Figure 3B shows the data and the predictions plotted against each other. The upper left panel shows response probabilities, ranging from 0.0 (for T regions) to 0.8 (for A regions). There are 144 points on the function: the three regions for each of the three conditions (different levels of difficulty, defined later) in the experiment for each of the 16 subjects. The fact that the data and predictions fall tightly around the straight line indicates a reasonably good fit of the model to the data. The other three panels of Figure 3B show predictions against data for the 0.1, 0.5, and 0.9 quantile RTs (quantiles with less than 10 observations are not shown). Again, the tight fit of predications to data indicates a reasonably good fit.

The second way predictions and data are displayed is to plot response probabilities and RTs across angles. Figure 3C shows histograms for response probabilities for the three conditions for all the data from all the subjects and the predictions match the data well. Figure 3D shows similar plots for mean RTs.

Apparatus

For the eye-movement experiments, stimuli and response fields were presented on a CRT monitor 40 cm wide (640 pixels) and 30 cm high (480 pixels). At the standard viewing distance used (69.5 cm), the whole screen subtends a visual angle of 32×24 degrees. For the touch screen experiments, the CRT monitor was 32 cm wide and 24 cm high, which, at a standard viewing distance of 55.8 cm, gave a visual angle of 32×24 degrees. The screen phosphors for the CRT monitors are not known so the precise decay characteristics of the displays are not known and the relative intensities of the three color guns are not known. However, most of the manipulations were within-subjects with moderately short presentation durations (250–300 ms) and differences among individual subjects were so large that any assumptions about stimulus duration that might be affected by slow decay of a stimulus on the screen over 20 or 40 ms was not important.

For the eye-movement experiments, the eye tracker was an EyeLink 1000 from SR Research. The system was desktop-mounted with a chin and forehead rest. The measurements were monocular (left eye) sampling at a rate of 1000 Hz. Every trial began with a fixation point (details are presented for each experiment). After some amount of time (e.g., 500 ms) of fixation, the trial began. A response was recorded when the eyes moved from the fixation position to a response location and remained fixated at the response location for 500 ms. Response time was defined as the time from stimulus presentation to the time at which the eyes moved from the fixation position.

A few times in an experiment, calibration in the eye tracker drifted, that is, the eye tracker recorded a location systematically away from the location to which the eyes were looking. The first part of each experimental trial involved the subject fixating on a box prior to stimulus presentation (usually for 500 ms). During this time, and only this time, the position of the eye was shown on the screen by a dot drawn at every screen refresh. Both experimenter and subject could see the dots as they were drawn and the experimenter could hit a game controller button during the fixation period to tell the system that the subject was fixated in the box if the system was recording fixations outside the box. The eye tracker was then recalibrated. This happened no more than 5 or 10 times per experimental session.

The touch screen (CRT) was an ELO Entuitive 1725C with dimensions 40 cm wide and 30 cm high. Because there is considerable arm fatigue in using a touch screen horizontally on a desk, a mount was constructed so that the screen was at almost horizontal and was located between the knees of subject. This eliminated arm fatigue. A trial began when subjects hit a starting box on the screen (details are presented in each experiment). They were required to lift their finger and place it at the response location and not slide it.

There were some complicating factors with the touch-screen system used in these experiments. It uses a “surface wave” technology that detects an event based on detection of an ultrasonic wave that is generated when a finger hits or leaves the glass of the touch screen. The first measurements of the duration of the movement from finger lift to response placement produced some delays of only 20–40 ms, too short to be legitimate measurements of movement time.

In light of this, calibration tests were conducted. A piezoelectric sensor was used to measure the time at which the finger lifted, which gave an immediate measurement of lift time. This was compared to the time at which the touch screen recorded the lift. In the calibration procedure, the screen was programmed to turn an all-black screen display to an all-white one when the finger lift was recorded. A photodiode was used to record the time of this all-black to all-white change. An oscilloscope displayed the two events and results showed a 110 ms delay from when the piezoelectric sensor detected the change (finger lift) until the screen turned white. The same setup was used to record the time from a finger press to detection of the finger press event from the touch screen. As before, the finger press turned the black screen white and the photodiode was used to record this change. A delay between the finger press and recording the event of about 48 ms was found. These numbers were verified by recording the events on a video camera at a 60Hz frame rate in a completely independent set of measurements. The number of frames between lift of the finger to the screen changing from black to white replicated the 110 ms measurement (as did the finger press measurement). These delays were added into the measurements in Experiments 2, 4, 6, and 9. It is important to perform such measurements if touch-screen devices, such as tablets, phones, or laptop screens, are to be used in RT experiments.

In the mouse-based experiments, subjects placed the mouse pointer in a starting box on the screen and after some delay (so long as the pointer remained in the box), the trial began. The position of the mouse was displayed on the screen every 16.7 ms as a small dot to subjects.

RTs were measured from the onset of the stimulus/response display to the eye leaving the fixation point, a finger leaving the resting box, or the mouse leaving the starting box. Responses that were too slow (specific to each task) and movements off the response dimension (e.g., off an arc) were considered spoiled trials. To minimize visual search of the display, the stimulus was usually presented for only 250 ms for finger and mouse movements. For eye movements, the stimulus and response field remained on the screen until the eye left the fixation point, at which time the screen was blanked. To provide feedback to participants, correct responses were defined as responses within a 50-pixel square box of the peak of the target; responses outside that box were followed by an error message.

The eye tracker experiments were more finicky than the others. With touch or mouse responses, when a trial ended, the next trial could begin. With the eye tracker, the next trial began only when the camera sensed the subject’s eyes on the central fixation point. For some subjects this was a smooth process, but for others it took a few extra seconds for the fixation point to register. Over the course of the entire experiment, if this happened with regularity then those subjects were not able to complete the full sessions of trials. Additionally, some subjects calibrated easily in the initial calibration process and for others, there were problems that required the experimenter to perform the calibration process several times. Sometimes the subjects also had difficulty in fixating on the target (without moving back to the central location). Also, for some subjects, there were problems in calibration due to glasses and contact lens. A session was planned to be the number of trials that could be completed in 50 minutes if everything ran without problems, but sometimes only a little more than half the trials could be obtained from a subject.

Experiment 1

The stimuli were central patches of colored pixels surrounded by a circular annulus also made up of colored pixels (Figure 3A). Subjects responded by moving their eyes from the central patch to the location on the annulus that best matched the dominant color in the patch. There were three levels of difficulty; the proportion of pixels of the dominant color in the central patch was 0.35, 0.20, or 0.10. Subjects were instructed to make their decisions as quickly and accurately as possible and to move their eyes only after they had made their decision.

Method

At the beginning of each trial of the experiment, subjects were asked to fixate on a 20×20 pixel white box at the center of the CRT screen. After 500 ms of fixation, the central patch replaced the box and simultaneously the annulus was displayed. RTs were measured from the onset of the display to when the eyes moved outside a 30 pixel (1.5 degree) radius from the fixation point.

The central patches were 44×44 pixels. They were created by placing pixels of random colors (out of 253 possible colors) at random locations on the patch. One color was selected randomly and then a proportion of the pixels, 0.35, 0.20, or 0.10, was changed so that each pixel was changed to the target color or one within 10 of the target. The color selected was one of 21 from a uniform distribution with range minus 10 to plus 10 of the target.

The annulus contained all 253 colors. Its central radius was 60 pixels (3.0 degrees) from the center of the screen and it was 16 pixels (0.75 degrees) wide. The pixels changed from red (at angle zero, horizontal right) counter-clockwise through all 253 colors with yellow at 60 degrees, green at 120 degrees, teal at 180 degrees, dark blue at 240 degrees, and violet at 300 degrees (Figure 3A).

There were 16 subjects in the experiment. They were instructed to move their eyes away from the central patch only when they had made a decision about where the central patch’s dominant color was located on the surround. To discourage moving the eyes in more than a single step, the display of the central patch and surround was blanked when the eye moved outside two degrees from the fixation point. This meant that once the eyes moved, there was no more stimulus or response information available from the display.

There were 10 blocks of 72 trials each, preceded by two practice blocks. Each block contained 24 trials for each of the conditions, randomly ordered. A session was 50 min. long. Many subjects did not finish all 10 blocks: There was an average of 560 observations per subject out of a possible 720.

Target locations were measured in terms of their x/y coordinates in the 640 by 480 screen of pixels. The center of a target location was at a radius midway between the inner and outer radii of the surrounding annulus and its size was a 2-degree-by-2-degree (40-by-40 pixel) invisible box centered on the target color. The box was not rotated based on stimulus angle, so the box had a narrower angular extent at 0, 90, 180, 270 degrees than at other angles.

To indicate whether a response was correct, if a subject’s eyes moved to a location in the box, a “1” was displayed in the invisible box location; if not, a “0” was displayed at the position to which the eyes had moved. “1”s and “0”s remained on the screen for 300 ms. If the movement started later than 1250 ms after the onset of the display, “TOO SLOW” was presented for 500 ms in the center of the display. If it started earlier than 150 ms, “TOO FAST” was presented. There was a 40 ms blank screen between the feedback messages and the fixation point for the next trial. Generally, the task became routine and subjects rarely received “TOO SLOW” or “TOO FAST” messages after the practice blocks.

Results

The stimuli were aligned to set the zero point at 180 degrees. The A area corresponded to 150–210 degrees, the B area to 100–150 and 210–260 degrees, and the T area to the rest. Figure 3B, top left panel, shows the data and predictions from the model for response probabilities for the three difficulty conditions for the three response categories for the 16 subjects (144 points). There were a few misses as large as 10%, but misses of this size are only a little larger than the maximum expected. (If there were 200 observations per difficulty level, then for a proportion of 0.2, the SD is sqrt(.2*.8/200)=0.028, which means that 2SD’s are almost plus or minus 0.06.)

The other panels of the figure show the 0.1, 0.5 (median) and 0.9 quantile RTs. There are only 111 points on these plots because only data for which there were more than 10 observations per condition per response category are plotted. In the bottom right corner of each quantile plot are two plus and minus 1 SD error bars (horizontal because the data are on the x-axis). To construct the error bars, a bootstrap method was used. For each condition and response category for each subject, a bootstrap sample was obtained by sampling with replacement from all the responses for that condition. This was repeated for 100 samples and then the SDs in the RT quantiles were obtained for that subject, condition, and response category from the 100 bootstrap data sets. The SDs for the three conditions and the three response categories with the largest and smallest SDs were then averaged across subjects and it is these two SDs that are at the bottom right corners (this excluded T quantiles from the two easier conditions, 0.35 and 0.20, because most subjects had less than 10 responses in those categories, and sometime zero, with 10 the minimum number of observations we used for displaying quantiles). On the diagonal line of equality, a 2-SD error bar computed from the larger SD at the bottom right is shown. This 2-SD error bar provides an upper bound on deviations that would be expected between the predictions and data if the model fit the data perfectly.

The results for the quantile RTs show a good match between predictions and data; almost all of the data points fall within the two-SD error bars. The largest misses are four values of the 0.1 quantile that show longer RTs than predicted (by about 100–200 ms). These are for single conditions and single response categories for single subjects. If they represented a systematic miss, then the 0.1 quantile RTs should miss for all the A, B, and T responses (and perhaps all the conditions) but they do not.

Tables 1 and 2 show the values of the parameters that produced the best fits of the model to data for all the experiments, averaged over subjects. The SDs in the model parameters across subjects are shown in Tables 3 and 4. For this experiment, the height of the normal distribution of drift rates decreased with difficulty, as would be expected. Discussion of the other parameters is presented after Experiment 1–8.

Table 1:

SCDM parameters

| Task | Exp. | Ter | St | a | sa | sw | r | Sd | G2 | df | χ2 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Color 72 eye | 1 | 177.5 | 33.1 | 15.4 | 5.9 | 37.0 | 14.0 | 0.859 | 89.2 | 41 | 56.9 |

| Color 72 touch | 2 | 272.4 | 25.1 | 11.0 | 3.0 | 24.9 | 11.8 | 1.082 | 98.8 | 41 | 56.9 |

| Dynamic bright eye | 3 | 177.7 | 48.1 | 12.3 | 5.5 | 26.0 | 34.0 | 0.821 | 102.0 | 35 | 49.8 |

| Static bright touch | 4 | 221.5 | 59.3 | 7.5 | 2.7 | 17.5 | 18.1 | 0.761 | 92.6 | 35 | 49.8 |

| Arrows 72 eye | 5 | 186.0 | 22.6 | 11.4 | 5.7 | 26.5 | 24.1 | 0.839 | 82.4 | 41 | 56.9 |

| Moving dots touch | 6 | 301.6 | 34.4 | 9.3 | 3.4 | 31.0 | 21.7 | 0.925 | 185.2 | 71 | 91.7 |

| Color 72 mouse | 7 | 235.9 | 26.3 | 15.4 | 2.9 | 32.0 | 5.4 | 1.047 | 93.0 | 41 | 56.9 |

| Color sp/acc mouse | 8 | 225.6 | 23.4 | 13.6 15.3 |

2.4 | 33.7 | 9.8 | 0.897 | 133.6 | 58 | 76.7 |

| 2D brightness | 9 | 179.1 | 26.0 | 8.8 | 4.0 | 16.4 | 18.2 | 0.528 | 282.4 | 48 | 65.2 |

Ter is nondecision time, st is the range in nondecision time, a is the boundary setting, sa is the range in the boundary setting, sw is the SD in the drift rate distribution, r is the Gaussian process kernel parameter, sd is the range in the height of the drift rate distribution, G2 is the multinomial maximum likelihood statistic, df is the number of degrees of freedom, and χ2 is the critical chi-square value. Exp. means Experiment.

Table 2:

SCDM drift rates

| Task | Exp. | Conditions | d1 | d2 | d3 |

|---|---|---|---|---|---|

| Color 72 eye | 1 | 0.35, 0.20, 0.10 | 42.7 | 31.3 | 16.7 |

| Color 72 touch | 2 | 0.35, 0.20, 0.10 | 29.3 | 25.8 | 19.4 |

| Dynamic bright eye | 3 | 0.62, 0.58 | 26.2 | 12.2 | |

| 0.58, 0.54 | 14.6 | 5.1 | |||

| Static bright touch | 4 | 0.75, 0.65 | 26.7 | 13.4 | |

| 0.65, 0.60 | 20.6 | 12.2 | |||

| Arrows 72 eye | 5 | 0.60, 0.40, 0.20 | 31.1 | 23.4 | 13.2 |

| Moving dots touch | 6 | 0.5, 0.1,0.1 | 33.6 | 5.6 | 6.0 |

| 0.4, 0.2, 0.1 | 25.3 | 9.6 | 3.3 | ||

| 0.4, 0.2, 0.2 | 21.9 | 6.9 | 5.6 | ||

| Color 72 mouse | 7 | 0.35, 0.20, 0.10 | 30.0 | 25.8 | 18.0 |

| Color sp/acc mouse | 8 | 0.25, 0.10 | 32.1 | 21.4 | |

| 2D brightness touch | 9 | 0.70, 0.50, 0.40 | 18.1 | 10.6 | 6.6 |

| 0.60, 0.50, 0.40 | 16.3 | 12.1 | 8.3 |

di is the height of the drift rate distribution. Exp. means Experiment.

Table 3:

SDs in SCDM parameters

| Task | Exp. | Ter | St | a | sa | sw | r | Sd |

|---|---|---|---|---|---|---|---|---|

| Color 72 eye | 1 | 12.4 | 13.2 | 2.6 | 2.5 | 2.4 | 10.0 | 0.47 |

| Color 72 touch | 2 | 81.8 | 3.8 | 1.3 | 1.9 | 2.9 | 6.0 | 0.46 |

| Dynamic bright eye | 3 | 20.7 | 15.7 | 1.8 | 0.9 | 2.2 | 5.5 | 0.25 |

| Static bright touch | 4 | 39.3 | 6.6 | 1.4 | 0.7 | 3.5 | 5.7 | 0.11 |

| Arrows 72 eye | 5 | 12.6 | 2.0 | 1.3 | 0.6 | 1.7 | 4.9 | 0.13 |

| Moving dots touch | 6 | 57.4 | 14.2 | 1.5 | 0.6 | 2.9 | 5.8 | 0.22 |

| Color 72 mouse | 7 | 41.7 | 6.3 | 2.6 | 1.3 | 3.9 | 0.3 | 0.46 |

| Color sp/acc mouse | 8 | 30.6 | 1.8 | 2.3 3.2 |

0.8 | 3.7 | 0.9 | 0.28 |

| 2D brightness | 9 | 6.9 | 4.0 | 0.9 | 0.4 | 1.8 | 17.2 | 0.05 |

Table 4:

SDs in SCDM drift rates

| Task | Exp. | Conditions | d1 | d2 | d3 |

|---|---|---|---|---|---|

| Color 72 eye | 1 | 0.35, 0.20, 0.10 | 11.2 | 5.9 | 3.9 |

| Color 72 touch | 2 | 0.35, 0.20, 0.10 | 4.6 | 3.5 | 3.7 |

| Dynamic bright eye | 3 | 0.62, 0.58 | 3.2 | 2.6 | |

| 3 | 0.58, 0.54 | 2.8 | 2.4 | ||

| Static bright touch | 4 | 0.75, 0.65 | 2.4 | 1.3 | |

| 4 | 0.65, 0.60 | 2.3 | 1.8 | ||

| Arrows 72 | 5 | 0.60, 0.40, 0.20 | 3.1 | 2.7 | 1.9 |

| Moving dots | 6 | 0.5, 0.1,0.1 | 4.3 | 2.8 | 3.4 |

| 6 | 0.4, 0.2, 0.1 | 4.4 | 2.4 | 1.9 | |

| 6 | 0.4, 0.2, 0.2 | 4.3 | 1.8 | 1.8 | |

| Color 72 mouse | 7 | 0.35, 0.20, 0.10 | 5.4 | 5.1 | 4.5 |

| Color sp/acc mouse | 8 | 0.25, 0.10 | 5.3 | 4.4 | |

| 2D brightness | 9 | 0.70, 0.50, 0.40 | 1.4 | 1.1 | 1.1 |

| 9 | 0.60, 0.50, 0.40 | 1.3 | 1.1 | 0.9 |

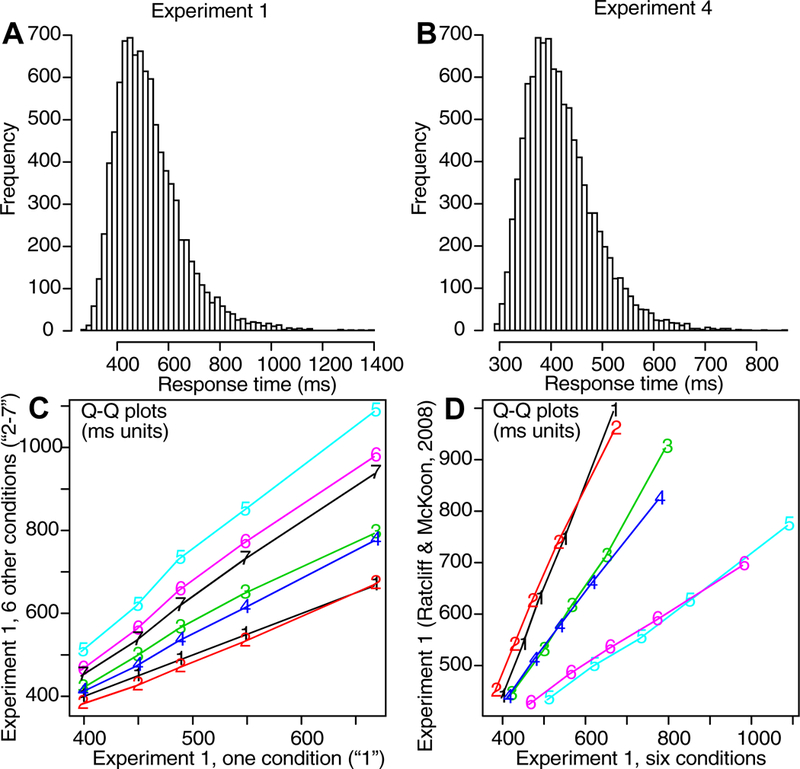

For Figures 3C and 3D, predictions and data are plotted as a function of angle, with the target locations aligned at 180 degrees. The predictions were generated by simulation using the parameter values in Table 1 with 10,000 simulations for each condition. For Figure 3C, response probabilities, the data and predictions were grouped into bins of 10 degrees. The predicted and data distribution peaks and spreads qualitatively match each other. This is especially impressive because the predictions were generated from the model parameters derived from fits to the response probabilities and quantiles (Figure 2B) and not from fits to the distributions of data directly. One deviation between predictions and data is the wider distribution of responses for the data relative to the model for the 0.1 stimulus. This suggests that a weak stimulus has greater variability (less precision) and this could be accommodated by assuming the SD in the drift rate distribution is larger for weak stimuli. Another deviation between theory and data is in the response proportions for conditions with values near zero for the data. The model predicts values larger than zero and there are systematic misses for some of these. In the discussion the possibility that the Gaussian process noise is stimulus location dependent and larger near the stimulus is considered.

For Figure 3D, RTs, the data and predictions also match well (5 degree angles per bin). The responses nearest the target angle (180 degrees) represent the A category, responses a little farther away represent the B categories, and all the others represent the T category. The blue bars in the figure show the responses in the A and B categories. The data show higher variability in the tails away from 180 degrees for the data than the predictions because there were low numbers of observations for the data but 10,000 observations in the simulated values. There was little difference in RTs across angles (i.e., across the A, B, and T response categories). However, RTs increased with difficulty, from a mean of 507 ms for the 0.35 condition to 576 ms for the 0.20 condition to 693 for the 0.10 condition (these means represent the vertical shifts from responses in one condition to another in Figure 3D). These are large effects and ones that the model captures well.

Experiment 2

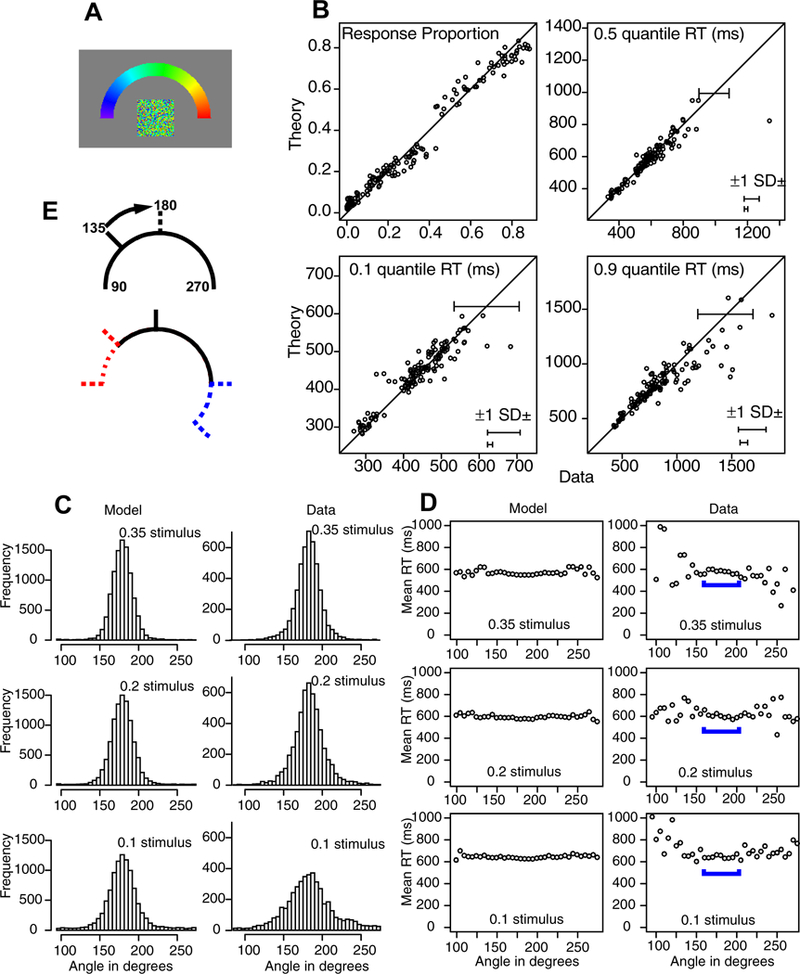

This experiment was similar to Experiment 1 except that the display used a half annulus surround and the response modality was a finger movement to the target color. Apart from this, the geometry of the display was the same as for Experiment 1. Figure 4A shows the central patch and the response half annulus. Responses were made by finger movements on the touch screen system. A half annulus was used because the resting position of the arm would have obscured part of a full annulus. Difficulty was manipulated in the same way as for Experiment 1. To anticipate, response modality (eye movements or finger movements) did not affect the patterns of results.

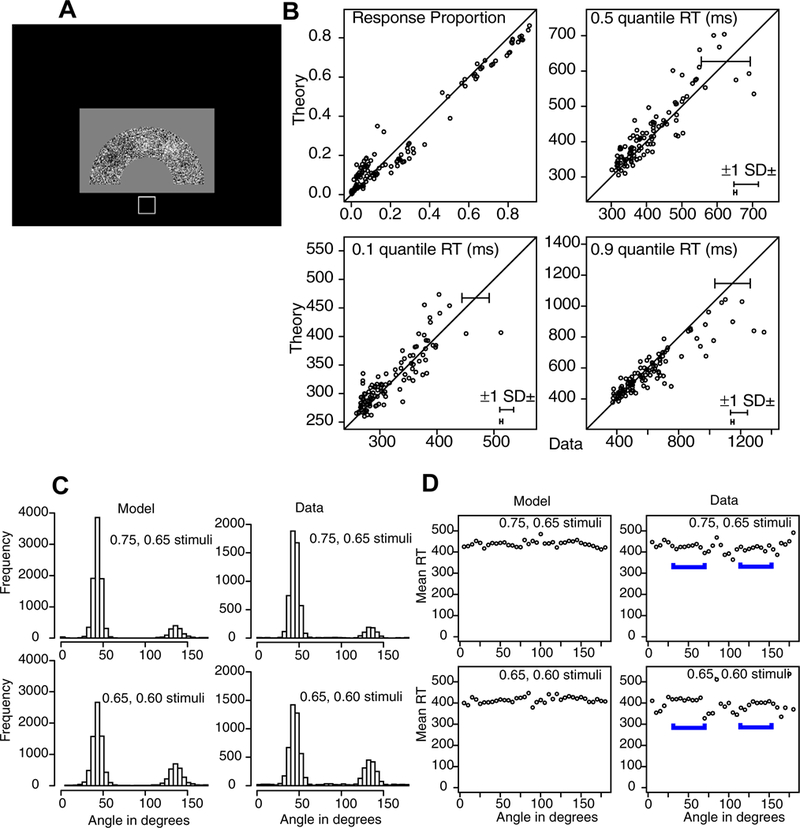

Figure 4.

The same analysis as in Figure 3 for Experiment 2. Panel E shows the result of aligning the stimuli at a common angle (180 degrees). Responses are lost (blue dashed line to the right) and areas contain no responses (red dashed line to the right). For full details, see the text.

Method

There were 12 blocks of 72 trials each, 24 of each condition in each block ordered randomly. Like Experiment 1, a session was 50 min. long but, without the need for eyetracker calibration, there were more responses, an average of 823 out of a possible 864. There were 16 subjects.

The central patches were constructed in the same way as for Experiment 1 except that only colors between 10 degrees and 170 degrees were used in order to avoid end effects. The central patch was displayed at the center of the half annulus (Figure 4A) and it was 16 pixels (.75 degrees) square. The half annulus contained 190 out of the possible 253 colors and its colors began at purple on the far left and ended at red on the right.

Subjects began each trial by touching their index fingers to a square that was located 9.5 degrees below the central patch. 250 ms after the touch, a plus sign appeared at the location at which the central patch would be displayed and it remained on the screen for 500 ms. 240 ms after that, the central patch and the half annulus were displayed. Subjects were instructed to move their fingers and touch the location on the half annulus that best matched the dominant color in the central patch. To discourage subjects from changing the target locations they had chosen during the finger movement, the central patch was turned off as soon as the finger was lifted but, unlike Experiment 1, the half annulus remained on the screen until the finger touch. This seemed to be more natural than turning it off as in the eye movement experiments. In the eye movement experiments, many of the eye movements were ballistic and turning off the response annulus did not affect the response. Following a response, feedback of the same kind as for Experiment 1 was presented for 300 ms, followed by the 40-ms blank screen. Response time was measured from onset of the patch and half-annulus to the time at which the finger lifted from the resting square.

Results

The stimuli were aligned to set the zero point at 180 degrees. The A area corresponded to 70–110 degrees, the B area to 45–70 and 110–145, and the T area to the rest. The results are quite similar to those of Experiment 1. The best-fitting parameter values are shown in Tables 1 and 2, with the heights of the distributions of drift rates decreasing with difficulty.

Figure 4B shows response probabilities and the 0.1, 0.5, and 0.9 quantile RTs for the three difficulty conditions for the three response categories for each subject, and for the quantiles, SDs constructed by the same method as for Experiment 1. There are 144 points on the probability plot and only 124 for the quantiles because conditions with fewer than 10 observations were excluded. There are no remarkable deviations between predictions and data for the response probabilities. For RTs, there are perhaps two large deviations in the 0.1 quantile, one in the 0.5 quantile and four or five in the 0.9 quantile. As in Experiment 1, these deviations are not consistent across conditions for an individual subject.

Figures 4C and 4D show predictions and data as a function of angle. The predictions were generated in the same manner as for Experiment 1 and they match the data well. As for Experiment 1, there was little change in RTs across angles, RTs increased with difficulty, and there was more variability in the tails away from 180 degrees for the predictions than the data. RTs increased from a mean of 536 ms for the 0.35 condition to 555 ms for the 0.20 condition to 579 ms for the 0.10 condition and the model fit these differences well.

There is one issue to note and that is that to produce the plots in Figures 4C and 4D, the stimulus location was repositioned to 180 degrees (with a range from 100 to 260 degrees). In Experiment 1, this was accomplished by simple rotation, but in this experiment there is a problem because there are separate end points for the half annulus. To illustrate this problem, suppose the central patch had a color that was at 135 degrees (with the center of the half annulus at 180 degrees) and the stimulus location was rotated by 45 degrees from 135 to 180 degrees as in Figure 4E. Then responses that were at 270 degrees move to 270+45=315 degrees which is outside the 100–260 degree range (the blue dashed line to the right in the bottom panel). Stimuli at 90 degrees rotate to 135 degrees which means that are no responses in the range from 90 to 135 (red dashed line to the left in the bottom panel).

For the responses in Figure 4C, frequencies at 135 degrees are out of 75% of the possible frequencies at 180 degrees. This means that the histograms in Figure 4C (for the data) underestimate the frequencies in the tails away from 180 degrees. This does not change the results for the 0.35 and 0.20 stimulus conditions because there are few responses in the tails, but for the 0.1 stimulus condition, the extreme tails are lower than they would be with equiprobable histograms. In the model predictions, the stimulus position is set to 180 degrees only so all the other angles from 100–260 degrees are equiprobable.

In the later experiments, two or more stimuli occur at random positions and in order to provide plots of the responses around the peak of both stimuli and between them, it is necessary to compensate for missing responses by moving responses that are outside the range to inside the range that would otherwise contain no responses, i.e., responses in regions that do not correspond to stimuli are filled in with responses that would be discarded.

The width of the histogram for the 0.10 stimulus condition is larger than that for the predictions. As for Experiment 1, one way to address this is to assume more variability (less precision) in weak stimuli which would require increasing the SD in the drift rate distribution (instead of keeping it constant as it was done in the fits).

Experiment 3

The aim for this experiment was to examine a task in which stimulus and response coincided, as they might for many devices such as cell phones and touch screens. Each stimulus was an annulus made up of black and white pixels (Figure 5A). The pixels were randomly distributed across the annulus except that there were two patches with more white than black pixels, one with a higher proportion of white pixels than the other, and two patches with more black than white, one with a higher proportion of black pixels than the other. On some blocks of trials, subjects were instructed to move their eyes from a center fixation point to the location on the annulus that was the brightest (the largest proportion of white pixels) and on the other blocks of trials, to the darkest (largest proportion of black pixels). It turned out that dark responses to dark patches were symmetric with bright responses to bright targets and so the two were collapsed. Also, when responding to bright targets there was no evidence that subjects were avoiding dark patches (and vice versa) and so the status of the patches of the other polarity was ignored in the method and analyses presented below. Collapsing conditions produced two conditions at different levels of difficulty with two stimulus patches for each condition. The circular annulus was dynamic: a new randomly generated annulus was displayed on each frame of the display with the same brighter and darker locations.

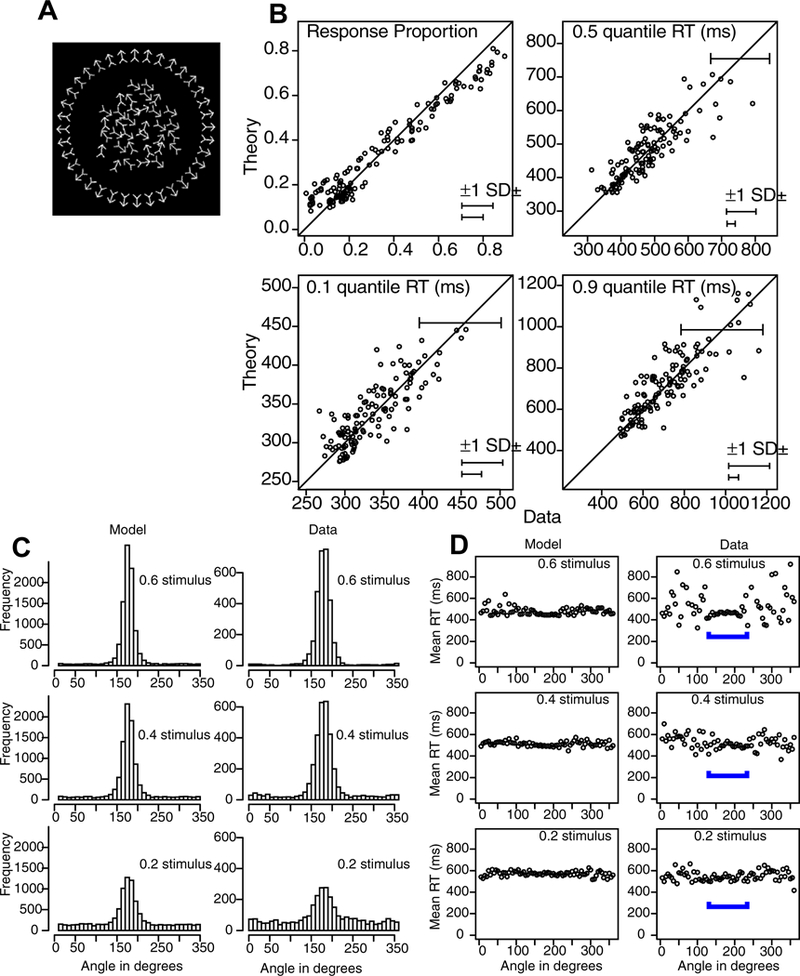

Figure 5.

The same analysis as in Figure 3 for Experiment 3. Panel E shows the result of aligning the stimuli at common angles (90 and 270 degrees). When stimuli are moved apart, areas are left with no responses (e.g., 135 to 225 degrees - what was at 179 moves to 134 and what was at 181 moves to 226), and to compensate, responses in areas in which there may be responses but disappear, e.g., 45–0-315, the red dashed area, are moved to the 135–225 range.

Method

The circular annulus stimuli were constructed in the following way: first, pixels were randomly set so that 50% of them were white and 50% black. Then four locations on the annulus (at the center radius) were randomly selected with the limitation that they were at least 36 pixels apart (about 1.8 degrees of visual angle and 21 radial degrees around the annulus). Then some proportion of the pixels at two of the locations were changed to white and at the other two, changed to black. These locations served as the centers of 2D normal distributions with SD 6 pixels. The proportions that were flipped to white or to black in the patches were obtained from the height of the normal distributions. The proportions of white or black pixels at the peak of the normal distribution (center of the patch) were 0.62 and 0.58 for the easier condition and 0.58 and 0.54 for the more difficult condition. The radius of the center of the annulus was 100 pixels (5 degrees of visual angle) and it was 72 pixels wide.

The displays were dynamic. Every 10 ms (determined by the refresh rate of the CRT monitors), a new random sample of noise was generated and new patches were generated in the same locations with different random samples of pixels changed from black to white or white to black.

There were 12 blocks of 72 trials, preceded by 45 practice trials. In each block, there were two conditions for the bright or dark targets, one with the easier proportions and one with the more difficult ones, in random order. For half of the blocks, subjects were instructed at the beginning of the block to move their eyes to the brightest location and for the other half, to the darkest location. These blocks alternated through the experiment. Subjects found this task more difficult than that for Experiment 1 in terms of staying on task and so very few subjects completed the experiment. This produced an average of 457 observations per subject out of 819 total trials.

At the beginning of a trial, subjects fixated on a white square (20 pixels square - about 1 degree) at the center of where the annulus was to appear (Figure 5A); then the annulus appeared with random assignment of 50% black and 50% white pixels for 500 ms with a new random assignment of black and white pixels presented every 10 ms (the frame rate of the display); then as a signal that the stimulus appeared, the fixation rectangle changed to all black pixels and the pixels at the four locations on the annulus changed to the appropriate proportions of black and white pixels. RTs were recorded from the onset of the bright and dark patches to when eyes moved 30 pixels from the center of the fixation box. When the eyes had moved 70 pixels from the center of the fixation box, the screen blanked. Feedback was provided with “2” presented for a response at the strongest peak, “1” at the weaker peak, and “0” for the other locations. The regions around the peak used to determine the feedback were boxes that had side lengths of 40 pixels. During the period that feedback was presented, the next set of images for the stimuli was loaded into the computer memory and this took about 1.4 seconds.

Results

“Bright” responses to bright stimuli were collapsed with “dark” responses to dark stimuli because there was less than a 1% difference in accuracy between them and only a 21 ms difference in mean RTs between them. Furthermore, subjects did not avoid responding to the opposite parity (e.g., they were not less likely to respond to a dark region when the task was to respond to a bright region). Therefore the data were collapsed across dark and bright conditions and so modeling and data are in terms of two conditions, stronger (higher proportion of black or white pixels) and weaker (lower proportions).

Because the locations of the bright and dark patches were randomly generated, it was necessary to align their positions in order to group data appropriately for model fitting. The peaks of the locations were aligned such that the stronger peak was rotated to 90 degrees and the weaker rotated to 270 degrees. The two peaks were usually closer than 180 degrees which means that after aligning the peaks, the numbers of observations between the two would be under-represented. For example, if the locations were at 135 and 225 degrees and responses were moved along with the locations, then responses 135–180 would move to 90–135 and responses 180–225 would move to 225–270. Thus there would be a gap between 135 and 225 degrees. To compensate, these positions were filled with responses from 45–0-315 degree range on the opposite side (the red dashed line moving up as in Figure 5E). An analysis showed that there were no differences in response proportions from those in the shorter distance between two peaks versus those in the larger distance between two peaks which shows that this alignment method did not distort results.

Responses were divided into four areas (cf., Figure 4): the A area was 83–97 degrees with the center of the strongest location at 90 degrees, the B area was from 69–82 and from 98–111 degrees, the C area was from 249–291 degrees (with the center of the weaker location at 270 degrees), and the T area was all the rest.

Figure 5B shows response probabilities and the 0.1, 0.5, and 0.9 quantile RTs for the two levels of difficulty and the four categories of responses (A, B, C, and T) for each subject. For the quantiles, only conditions and areas with more than 10 observations per subject are plotted. The number of points for response probabilities was 128 and the number for quantiles was 123.

There is a good match between predictions and data. The SD bars in the lower right of the panels and the SD bar around the diagonal line were computed as for the other experiments. For the response probabilities, there were few large non-systematic outliers. For the quantiles, there were fewer observations than for the earlier experiments, so variability was larger and there were deviations in the 0.1 quantile RTs between theory and data as large as 100 ms. But the large variability in the data made these large deviations less than 2 SD’s outside the predictions. There seems to be less deviation between theory and data in the median RTs (0.5 quantiles), but the x-axis and y-axis scales are twice that for the 0.1 quantiles and the deviations are as large numerically. As before, the deviations in the 0.9 quantiles (tails of the distributions) are large also.

Figure 5C shows the proportions of responses combined over subjects plotted as a function of angle. To construct these plots from the data, the data are aligned as described above with the strongest stimulus at 90 degrees and the weaker one at 270 degrees then all responses are combined over subjects and plotted. Predictions are generated from the mean parameter values from Table 1 and then plotted in the same way as for the data. The comparisons between data and the model predictions are good especially keeping in mind that the details of the shape of the function are not fitted, rather only the A, B, C, and T groups of trials as in Figure 2.

Figure 5D shows mean RTs as a function of angle. There was little difference in mean RTs across the angles, but there was a large effect of difficulty with the mean RT 549 ms for the easier condition and 665 ms for the more difficult one.

Experiment 4

The display was a half annulus of black and white pixels (Figure 6A) and subjects were to move their index finger to the brightest or darkest area. Like Experiment 3, there were two locations with more white than black pixels, one with a higher proportion of white than the other, and two locations with more black than white pixels, one with a higher proportion of black than the other. As for Experiment 3, bright responses to bright stimuli were symmetric with dark responses to dark stimuli and when responding to bright stimuli, the dark patches were not avoided and vices versa (and so patches of the opposite polarity could be ignored). This led to two conditions with two levels of difficulty (brightness levels) with two patches in each of them. Unlike Experiment 3, the displays were static.

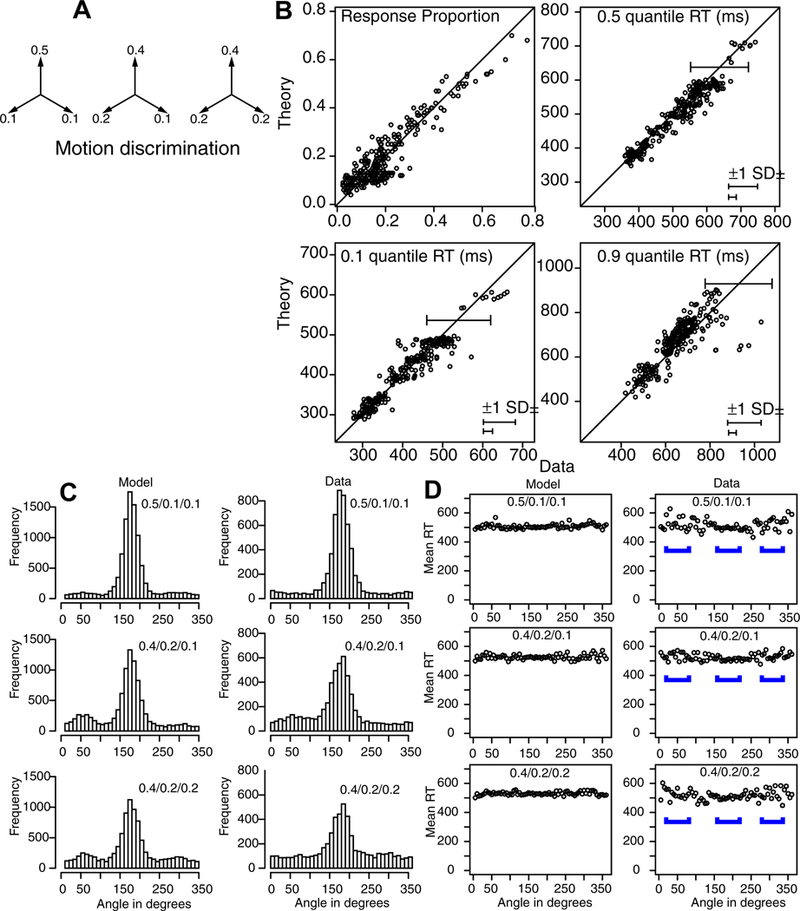

Figure 6.

The same analysis as in Figure 3 for Experiment 4.

Method

The half annulus stimuli were constructed in the same way as for the annulus stimuli in Experiment 3. To construct the stimuli, a half annulus of 50% randomly placed black and white pixels was constructed with a center radius of 100 pixels (5 degrees of visual angle) and width of 72 pixels. The patches were 2D Gaussians with SD of 12 pixels. The patches were constrained to be at least 36 pixels apart (about 1.8 degrees of visual angle). There were two levels of difficulty with 0.75 and 0.65 proportions of white (or black) pixels at the strongest peak of the Gaussian and with 0.65 and 0.60 proportions at the weaker peak (the shorthand strong and weak stimuli within the easy and difficult conditions is used below). In the task, subjects placed a finger in a start box 190 pixels (9.5 degrees of visual angle) below the center of the half annulus (Figure 6A), the stimulus appeared and then the task was to move the finger to the brightest patch (or the darkest patch in different blocks of trials).

The proportions of pixels changed were much larger and the SDs in the Gaussians were twice as large as those for Experiment 3. This is because Experiment 3 used dynamic stimuli which tended to average out variability leading to more visible differences at lower values of the proportions (e.g., Ratcliff & Smith, 2010).

The apparatus was the same as in Experiment 2 and there were 16 undergraduate subjects. Subjects touched their finger in a square corresponding to the fixation square in Experiment 3 (as in Experiment 2) which was located at the center of the screen. After the touch there was a 250 ms period where the square remained on, then it was erased and a + sign came on for 500 ms in the center of the half annulus, then it went off and 250 ms later the stimulus in the response annulus was presented. The annulus remained on until a finger lift was detected. After the response, feedback was presented for 250 ms with “2” presented for the stronger peak, “1” for the weaker peak, and “0” otherwise. The regions around the peak used to determine the feedback were boxes that had side lengths of 50 pixels. Response time was measured from stimulus presentation to the time at which the finger lifted from the resting square to move to the target.

For modeling and data analysis, the strong and weak stimuli were aligned on 45 degrees and 135 degrees respectively on the half annulus (which subtended 180 degrees). The stimuli were randomly placed in the display which leads to the problems discussed in Experiments 2 and 3: how to align responses and deal with those that were outside the patches. Also, because the stimuli were presented on the half annulus, there was the same problem as for Experiment 2 with alignment outside the 180 degree range. In the design of the experiment, first, no patch could be more than 90 degrees away from the others, second, patches were 3 SDs away from the ends of the half annulus (i.e., between 21 and 159 radial degrees), and third, patches could be no closer than 3 SDs, i.e., 21 radial degrees.

To align the stimuli, the stimuli were moved in a way analogous to Figure 5E and areas analogous to the blue area in Figure 4E and the red area in Figure 5E were moved to fill in the empty ranges. As for Experiment 3, there was no systematic difference between the proportions of responses in the areas that were moved from those that were rotated (Figure 4E).

As for Experiments 1 and 2, the touch screen task was easier to perform than Experiment 3 with eye movements. There were 12 blocks of 72 trials as for Experiment 3 preceded by 45 practice trials. Trials proceeded more quickly than in Experiment 1 and many subjects completed all trials. There was a mean of 812 observations per subject out of 819 total.

Results

As in Experiment 3, bright responses to bright stimuli were reasonably symmetric with dark responses to dark stimuli (for strong stimuli, the difference is 3% in accuracy and 9 ms in mean RT and for weak stimuli the difference is 4% in accuracy and 5 ms in mean RT) and so they were grouped into strong versus weak. The angles used to specify the areas used to group data to produce quantile RTs as in Figure 2 are: A 36–54 degrees, B 18–35 and 55–72 degrees, C (the area for the second peak) 126–172 degrees, and T the rest. Also, as in Experiment 3, when the task was to respond to bright areas, responses at dark areas were no lower than the background (and vice versa).

Figure 6B shows plots of the model fit to the response proportions and 0.1, 0.5, and 0.9 quantile RTs for each individual subject for each condition of the experiment (there are 168 points in the response proportion plot and 154 in the quantile plots, i.e., those with greater than 10 observations in the condition.

Figures 6C and 6D show plots of the data and model for response proportions and mean RTs. As for the other experiments, these show good matches between theory and data. There are responses to the brightest and next brightest peak in the stimulus and the model matches the data as for Experiment 3. The RT results show little effect of difficulty on performance with only a 10 ms effect of difficulty (0.75/0.65 versus 0.65/0.60 stimuli). Also, the weak peak has mean RT only 5 ms shorter than the strong peak. This lack of any difference as a function of difficulty is quite different from the results from Experiments 1 and 2 which show quite large differences in RTs for easy and difficult stimuli. However, the model captures all these accuracy and RT effects.

Experiment 5

In Experiments 1 and 2, there was a direct 1–1 correspondence between the dominant color in the central patch and the target location in the surrounding annulus. In other words, if red was the dominant color in the patch, then red was the target location. In Experiments 3 and 4, there was also a 1–1 correspondence: the brightest (or darkest) location on the circular or half-circular annulus was the location to which eyes or fingers moved. Experiment 5 broke these direct perceptual correspondences. The central patches were made up of arrows (Figure 7A) with a proportion of them pointing in the same general direction and the others in random directions. The surrounding response annulus was also made up of arrows, with their directions moving around the circle from pointing upward at the top of the circle to downward at the bottom. Subjects were to move their eyes from the central patch to the location on the circle that matched the dominant direction of the arrows in the patch. This requires determining the dominant direction in the patch and only after that has been accomplished can the target location on the surround be determined. There were three different conditions with different proportions of arrows pointing in the target direction.

Figure 7.

The same analysis as in Figure 3 for Experiment 5.

Method

At the beginning of each trial, subjects were asked to fixate on a white 20×20 pixel square at the center of the screen. After 500 ms of fixation, the central patch and the circular annulus surrounding it were displayed; these remained on the screen until a subject’s eyes moved 40 pixels (2 degrees) away from the fixation point, at which point the screen cleared.

Feedback was presented for 300 ms (“1” for a response in the target location which was a box 40 pixels around the target location and “0” otherwise). Feedback for fast or slow responses or slow movement time was the same as for Experiment 1.

The central patch was a disk with radius 50 pixels (2.5 degrees of visual angle) and contained 32 non-overlapping arrows. The circular response annulus was 4 degrees of visual angle (80 pixels) from the fixation point with a width of 0.65 degrees. In both the central patch and the surrounding annulus, the arrows were 13 pixels (0.65 degrees) long and 7 pixels (0.35 degrees) wide. The arrows had heads and tails to minimize the possibility of using the density of the head relative to the tail if only heads were used. There were 36 arrows in the response annulus, one for each 10 degrees of rotation, as in Figure 7A.

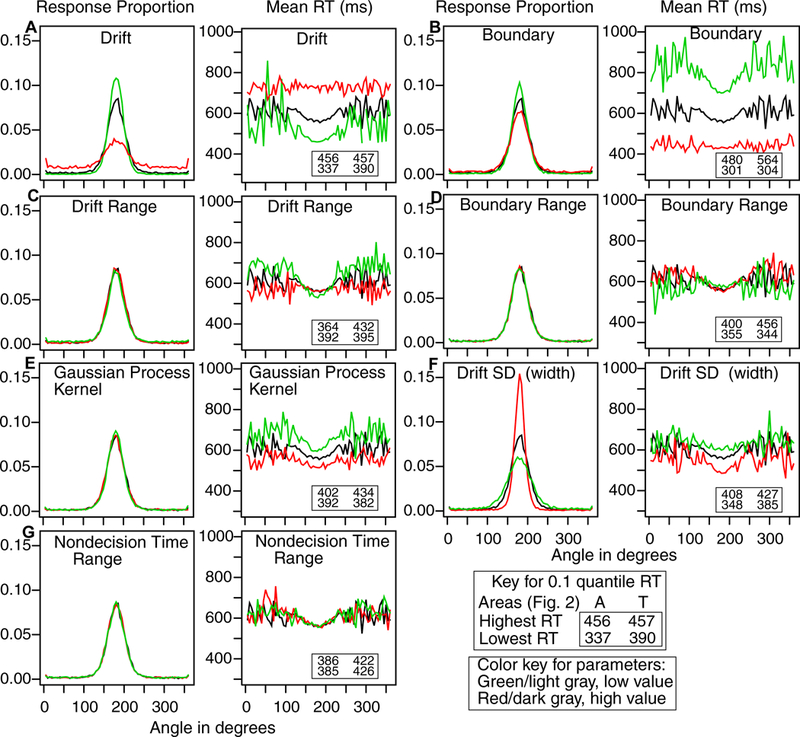

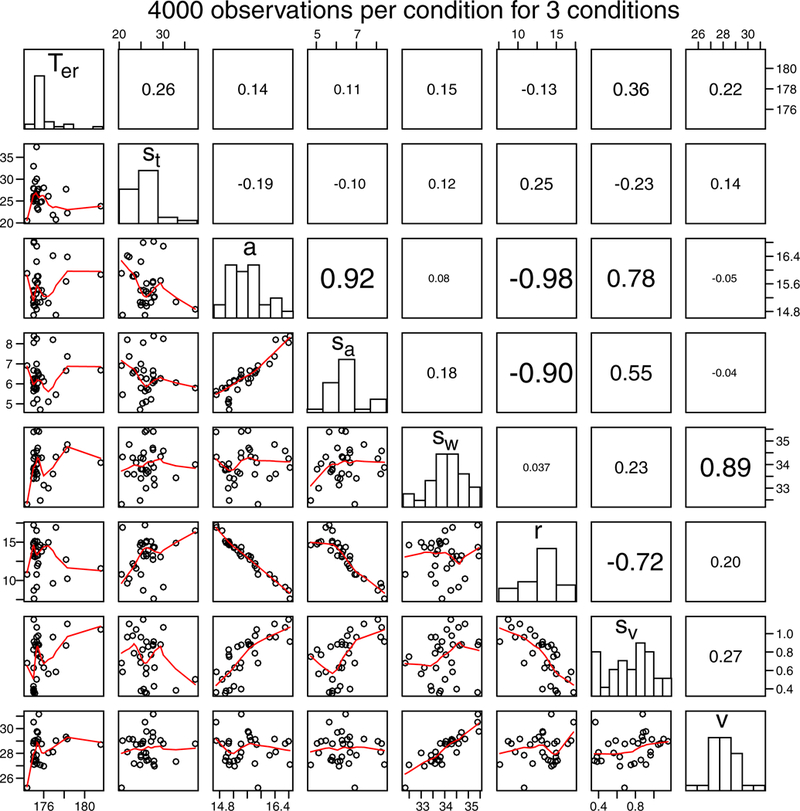

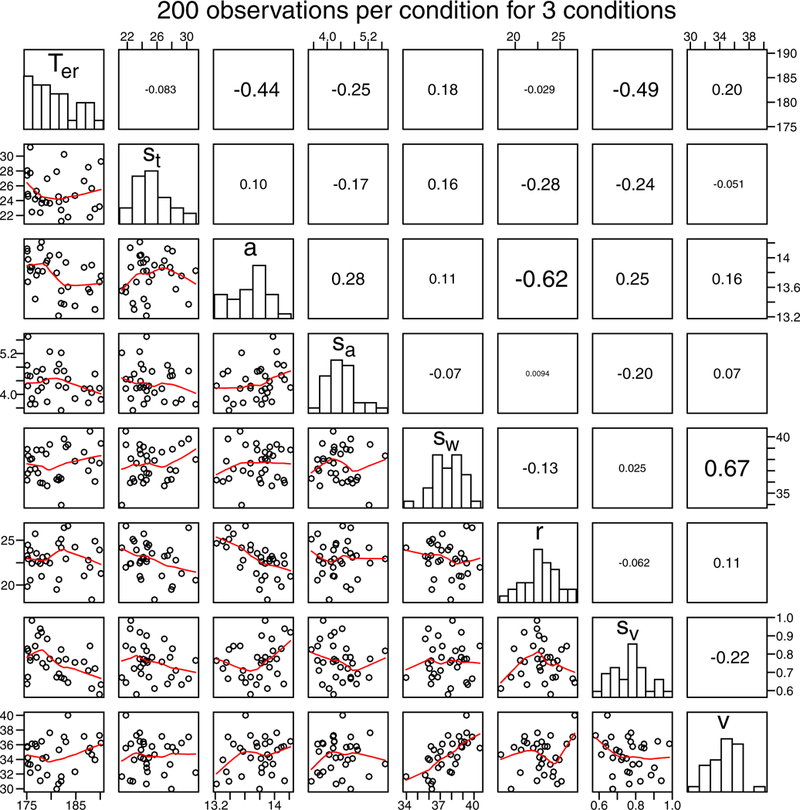

In the stimulus patch, some proportion of the arrows pointed within plus or minus 10 degrees of the target direction. Difficulty was manipulated by the proportion that pointed in the same direction: the proportions were 0.6, 0.4, and 0.2.