Abstract

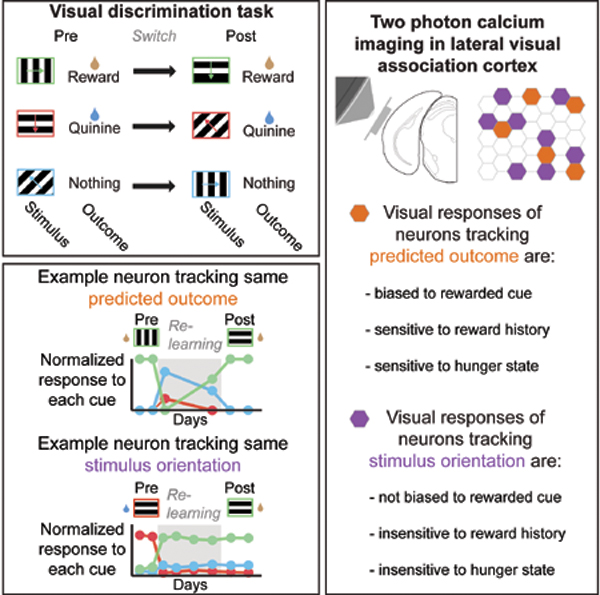

The response of a cortical neuron to a motivationally salient visual stimulus can reflect a prediction of the associated outcome, a sensitivity to low-level stimulus features, or a mix of both. To distinguish between these alternatives, we monitored responses to visual stimuli in the same lateral visual association cortex neurons across weeks, both prior to and after reassignment of the outcome associated with each stimulus. We observed correlated ensembles of neurons with visual responses that either tracked the same predicted outcome, the same stimulus orientation, or that emerged only following new learning. Visual responses of outcome-tracking neurons encoded “value,” as they demonstrated a response bias to salient, food-predicting cues and sensitivity to reward history and hunger state. Strikingly, these attributes were not evident in neurons that tracked stimulus orientation. Our findings suggest a division of labor between intermingled ensembles in visual association cortex that encode predicted value or stimulus identity.

Graphical abstract

Introduction

Our brains track not only the identity of sensory stimuli, but also the possible outcomes associated with these stimuli. Due to a constantly changing environment, learned cue-outcome associations are frequently made, broken, and re-made. Accordingly, the neural representation of a given cue-outcome association must include both reliable encoding of the stimulus identity and flexible, context-dependent encoding of the predicted outcome and its motivational salience. However, representations of stimuli and of predicted outcomes are often considered in separate studies focused on different brain regions. For example, studies in early visual cortical areas have described correlated ensembles of neurons that encode low-level information about stimulus identity (Cossell et al., 2015; Hofer et al., 2011; Ko et al., 2011; Kohn and Smith, 2005; Lee et al., 2016), while studies in subcortical regions such as the amygdala have identified ensembles that respond to those sensory cues that become associated with a given salient outcome (Grewe et al., 2017; Paton et al., 2006; Schoenbaum et al., 1999; Zhang et al., 2013). Within intervening brain areas, it remains unclear whether representations of stimulus identity and of predicted outcome are largely encoded by a common group of neurons or are separately encoded by different groups of neurons.

A natural region in which to address this question is the visual postrhinal cortex (POR; Wang and Burkhalter, 2007) and neighboring regions of lateral visual association cortex (LVAC). Behavioral studies suggest that lateral association cortex may play a key role in linking representations of sensory stimuli and predicted outcomes (e.g., Parker and Gaffan, 1998; Sacco and Sacchetti, 2010). Anatomical evidence points to LVAC as a putative integration site, as it receives feedforward projections from early visual cortex and visual thalamus (Wang and Burkhalter, 2007; Zhou et al., 2018) and feedback projections from lateral amygdala (Burgess et al., 2016). Evidence that LVAC contains a faithful representation of the visual world comes from observations that some LVAC neurons are retinotopically organized and can encode low-level stimulus features such as stimulus orientation, even in naïve mice (Burgess et al., 2016). Evidence that LVAC also contains a representation of the value of predicted outcomes comes from observations that, following training, LVAC neurons show a hunger-dependent response bias towards learned cues that predict food delivery, as well as sensitivity to reward history (Burgess et al., 2016).

While the above study in mice and many neuroimaging studies in humans demonstrate enhanced responses to salient cues in LVAC (reviewed in Burgess et al., 2017), these studies were fundamentally limited in their ability to determine whether a given neuron is responsive to a visual cue because it is sensitive to the low-level features of the stimulus, to the outcome predicted by the stimulus, or to a combination of these factors. At one extreme, the observed population-level sensitivity to food cues, hunger state, and reward history could be a result of individual neurons that are tuned to low-level visual stimulus features but that also demonstrate changes in response gain across behavioral contexts. At the other extreme, one subset of neurons might track whichever cues predict a given salient outcome, while another intermingled set of neurons might track low-level visual features and maintain the same high-fidelity response tuning independent of context.

To address this question, we used two-photon calcium imaging to record in LVAC of mice performing a Go-NoGo visual discrimination task. Critically, we tracked the same neurons across days prior to, during, and following learning of a reassignment of cue-outcome associations (similar to classic reversal learning paradigms; Paton et al., 2006). We identified multiple ensembles of correlated LVAC neurons, each of which tracked the identity of a specific visual stimulus. Strikingly, we found that visual responses in these identity-coding ensembles were not sensitive to motivational salience. Instead, we identified an additional ensemble of correlated neurons that tracked predicted outcome and whose visual responses were sensitive to cue saliency, reward history, and hunger state. Surprisingly, relatively few neurons demonstrated appreciable joint tracking of stimulus identity and of predicted outcome. We propose that intermingled ensembles encoding stimulus identity or predicted outcome in visual association cortex may achieve dual goals of maintaining a faithful representation of a visual stimulus while enabling flexible encoding of predicted outcomes and their motivational salience.

Results

Mice learn changes in cue-outcome associations in a visual discrimination task

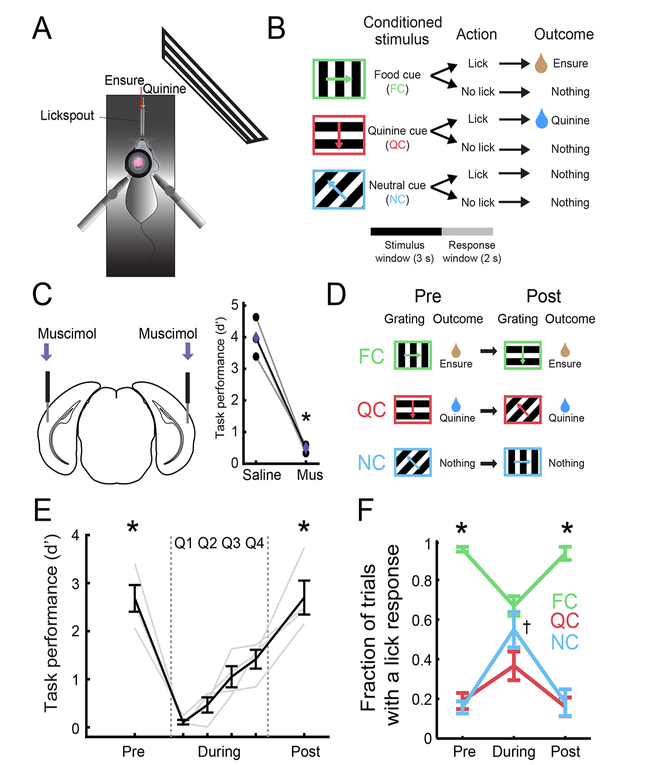

We trained food-restricted mice to perform a Go-NoGo visual discrimination task (Figure 1A–B). Mice were presented with visual cues for 3 s, followed by a 2-s response window and a 6-s inter-trial interval. Mice were trained to discriminate between a food-predicting cue (FC; 0° in Figure 1B; orientations were counterbalanced across animals), a quinine-predicting cue (QC; 270°), and a neutral cue (NC; 135°). Licking during the response window following the FC, QC, or NC resulted in delivery of liquid food (5 μL of Ensure), an aversive bitter solution (5 μL of 0.1 mM quinine), or nothing, respectively.

Figure 1. Mice learn changes in cue-outcome associations in a visual discrimination task.

A. Head-restrained setup for visual stimulation, delivery of Ensure or quinine, and two-photon calcium imaging.

B. Mice were trained on a Go-NoGo task.

C. Bilateral injection of muscimol (Mus) to silence lateral visual association cortex resulted in decreased task performance.

D. Following initial learning, we switched cue-outcome associations by changing the orientation of the visual grating that predicted each specific outcome.

E. Mice performed poorly immediately following the switch in cue-outcome associations, but gradually improved until they attained high performance. Pre: pre-Reversal; During: during-Reversal; Post: post-Reversal. “During” period shown in quartiles Q1-Q4. * p < 0.001 vs. during-Reversal (combined across quartiles; see text).

F. Mice selectively licked in response to the food cue (FC) during pre- and post-Reversal epochs but licked indiscriminately to all cues during-Reversal. * p < 0.05; † denotes significant increase in licking to the neutral cue (NC) during- vs. pre- and post-Reversal (p < 0.01); 2-way ANOVA, Tukey-Kramer post hoc test. Error bars: s.e.m. See also Figure S1.

Lateral visual association cortex (LVAC) was necessary for task performance, as bilateral silencing (centered on visPOR) using the GABAA agonist muscimol (125 ng in 50 nl) disrupted performance (p = 0.006, paired t-test; n = 3 mice; Figure 1C and S1A). Silencing of LVAC did not result in general cessation of licking (as occurs when silencing insular cortex during this task, Livneh et al., 2017). Instead, it caused mice to lick indiscriminately in response to all cues (Figure S1A), suggesting a perceptual rather than a motivational deficit.

Once mice stably performed the Go-NoGo task, we switched the three cue-outcome contingencies via a clockwise rotation of the outcome associated with each stimulus (Figure 1D; e.g. FC: 0°→270°; QC: 270° →135°; NC: 135° →0°). Discrimination returned to high levels in as few as 3 days following this “Reversal” (Figure 1E–F; d’ pre- vs. during- vs. post-Reversal: p < 0.001, 1-way repeated measures ANOVA, Tukey-Kramer method). We used a behavioral performance threshold (d’ > 2 for ≥ 2 consecutive days) to divide our training paradigm into 3 epochs: (i) prior to switching of cue-outcome associations (pre-Reversal), (ii) poor performance immediately after switching of cue-outcome associations (during-Reversal), and (iii) following learning of the new associations (post-Reversal; Figure 1E–F). Following the switch, mice began licking indiscriminately to all cues, and then gradually increased licking to the new food cue (Figures 1F and S1B; p < 0.05, 2-way ANOVA, Tukey-Kramer method) and decreased licking to other cues. This switching of cue-outcome associations also caused an increase in pre-stimulus licking. Food cue-evoked and pre-stimulus licking gradually returned to pre-Reversal levels and became more stereotyped (Figure S1C–D; Jurjut et al., 2017). The fraction of all trials with lick responses did not change (Figure S1E), suggesting similar levels of task engagement throughout the Reversal.
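The epoch definition above can be illustrated with a minimal sketch. It assumes the standard signal-detection definition of d’ (z-transformed hit rate minus z-transformed false-alarm rate) and assumes that the post-Reversal epoch begins on the first day of the first run of qualifying sessions; neither detail is specified in the text. The function names, the rate-clipping convention, and the example values are hypothetical.

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate, n_go, n_nogo):
    """Return d' = z(hit rate) - z(false-alarm rate)."""
    def clip(rate, n):
        # Keep rates off 0 and 1 so the inverse normal CDF stays finite
        # (assumed convention: clip to [1/(2n), 1 - 1/(2n)]).
        return min(max(rate, 1 / (2 * n)), 1 - 1 / (2 * n))

    z = NormalDist().inv_cdf
    return z(clip(hit_rate, n_go)) - z(clip(false_alarm_rate, n_nogo))


def label_epochs(daily_d_prime, switch_day, threshold=2.0, run_length=2):
    """Label each session 'pre', 'during', or 'post' relative to the switch.

    Assumption: 'post' starts on the first day of the first run of
    `run_length` consecutive sessions with d' above `threshold`.
    """
    n_days = len(daily_d_prime)
    post_start = n_days  # criterion never reached: no 'post' sessions
    for day in range(switch_day, n_days - run_length + 1):
        if all(d > threshold for d in daily_d_prime[day:day + run_length]):
            post_start = day
            break
    labels = []
    for day in range(n_days):
        if day < switch_day:
            labels.append("pre")
        elif day < post_start:
            labels.append("during")
        else:
            labels.append("post")
    return labels


# Hypothetical daily d' values; cue-outcome associations switch on day 2.
example = [2.8, 3.0, 0.3, 0.9, 2.1, 1.5, 2.4, 2.6, 3.1]
print(label_epochs(example, switch_day=2))
```

In this example, the single session with d’ > 2 on day 4 does not end the during-Reversal epoch, because the following session falls back below threshold.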

Different subsets of LVAC neurons track stimulus identity or predicted outcome

Throughout daily imaging sessions (14 ± 5 sessions/mouse in 4 mice), we tracked visual responses in the same population of neurons in layer 2/3 of lateral visual association cortex (LVAC) using two-photon calcium imaging. Recordings were from a region of LVAC centered around visPOR (Figure 2A), which was delineated using widefield intrinsic autofluorescence imaging of retinotopy, and subsequently injected with AAV1-hSyn-GCaMP6f (Burgess et al., 2016).

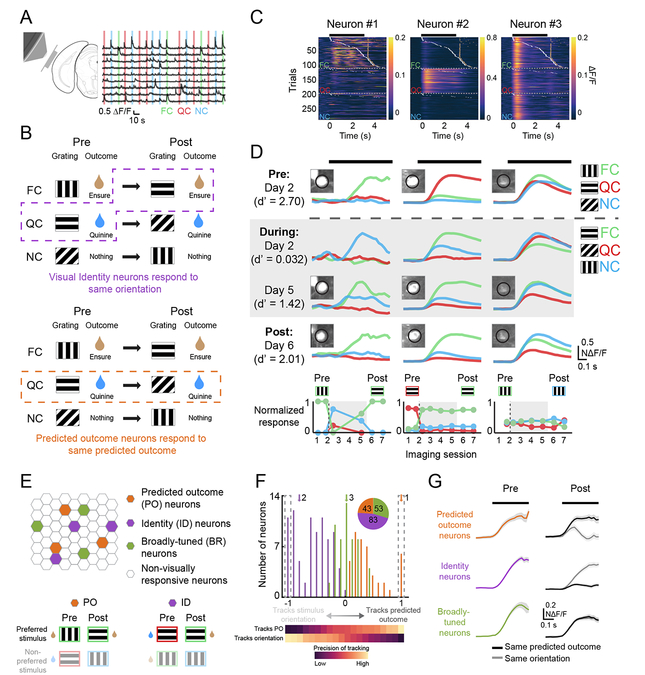

Figure 2. Different subsets of lateral visual association cortex neurons track stimulus identity or predicted outcome.

A. Left: schematic demonstrating location of cranial window. Right: example traces showing varied responses to food cue (FC), quinine cue (QC), and neutral cue (NC) trials.

B. A schematic demonstrating the hypothetical responses of two neurons whose early visual responses consistently track either stimulus identity (ID, top; same stimulus orientation) or predicted outcome (PO, bottom) across a Reversal.

C. Heatmaps from one session for three simultaneously recorded neurons. White ticks denote the first lick on a given trial and gold ticks denote delivery of Ensure.

D. Average cue-evoked responses across sessions for the same example neurons as in C. Dashed gray line indicates when cue-outcome associations were switched. Neuron #1 tracked the cue that predicted food delivery. Neuron #2 tracked the 270° drifting grating, regardless of the predicted outcome. Neuron #3 was broadly responsive to visual stimuli. Parentheses: behavioral performance, d’. Insets: images of the GCaMP6-expressing cell bodies. Bottom: normalized cue response magnitudes for all imaging sessions in which the neuron was visually responsive.

E. Top: schematic of neurons within an imaging field of view, including those classified as PO, ID, or Broadly-tuned (BR) neurons (e.g. example Neurons #1, #2, and #3, respectively). Bottom: illustration of preferred stimulus orientation across a switch in cue-outcome contingencies for a PO neuron and an ID neuron.

F. The distribution of all neurons recorded both pre- and post-Reversal, ordered from those that precisely tracked the same stimulus orientation (leftmost on x-axis) to those that precisely tracked whichever stimulus predicted the same outcome (rightmost on x-axis). Inset: pie chart indicating numbers of imaged neurons in each category. Arrows denote example neurons from C-D.

G. Mean normalized responses for each category, demonstrating the degree to which neurons in each category either responded preferentially to the same predicted outcome or to the same orientation pre- vs. post-Reversal. See also Figure S2.

We tracked 731 neurons across multiple imaging sessions, of which 179 were significantly visually responsive both pre- and post-Reversal. We hypothesized that some neurons would respond selectively to the same low-level stimulus feature (orientation) regardless of changes in associated outcome (e.g. Figure 2B, top: neural response preference tracks the 270° grating), while other neurons would respond selectively to visual cues predicting the same outcome regardless of stimulus orientation (see hypothetical example neuron in Figure 2B, bottom, which responds to the stimulus that predicts quinine, regardless of stimulus orientation; Figure S2A).

Diverse tuning properties were observed across simultaneously recorded neurons, both in single-trial visual responses (Figure 2C) and in mean responses (Figure 2D). Neuron #1 responded to the food-predicting cue (FC) both pre- and post-Reversal, regardless of stimulus orientation (FC pre-Reversal: 0°; FC post-Reversal: 270°). Neuron #2 responded to the same orientation (270°) across the Reversal. Neuron #3 responded to all 3 cues across all sessions.

We assigned all neurons that were visually responsive both pre- and post-Reversal to one of three categories (Figure 2E): “Predicted Outcome (PO)” neurons had visual responses that predominantly tracked the same predicted outcome, “Identity (ID)” neurons maintained visual responses to the same preferred stimulus orientation, and “Broadly-tuned (BR)” neurons had non-selective visual responses. To categorize a neuron, we constructed a 3-point tuning curve of its responses to the 3 stimuli pre-Reversal (Figure S2A, left panel; net cue preference: black arrow). We then estimated how this tuning curve and net preference would have “rotated” post-Reversal (Figure S2A, right panels), had the neuron been purely tuned to stimulus identity (no rotation, as ID neurons respond consistently to the same orientation; dashed purple arrow), or predicted outcome (clockwise rotation to match the rotation of outcomes relative to the 3 stimuli; dashed orange arrow). Using this categorization, we found 83 ID neurons and 43 PO neurons (Figure 2F; Figure S2A–E, right). We also found 53 BR neurons that showed similar responses to all cues, and thus equally poorly “tracked” stimulus orientation, predicted outcome, and an artificial, counter-clockwise “null” rotation across Reversal (Figure S2B). The tracking of predicted outcome did not occur due to noisy estimates: while many LVAC neurons showed surprisingly pure tracking of the same predicted outcome (Figure 2F, right dashed box) or the same stimulus orientation (Figure 2F, left dashed box; Figure 2G), control analyses confirmed that almost no neurons showed similarly pure tracking of the artificial null rotation (Figure S2C).
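The logic of this categorization can be sketched as follows. This is a simplified illustration, not the procedure used for the analyses above: it compares the observed post-Reversal tuning curve with three predictions derived from the pre-Reversal tuning curve (no rotation, rotation with the outcomes, and the opposite "null" rotation) using squared error on peak-normalized responses. The error metric, the `margin` parameter, and all function names are assumptions introduced for illustration.

```python
def predicted_post_tuning(pre_resp, pre_outcomes, post_outcomes):
    """Predict post-Reversal tuning under ID, PO, and null-rotation hypotheses.

    Responses and outcome labels are ordered by stimulus orientation.
    Assumes three stimuli and that every outcome moves to a new orientation.
    """
    identity, outcome, null = [], [], []
    for j, label in enumerate(post_outcomes):
        i = pre_outcomes.index(label)  # orientation that carried this outcome
        if i == j:
            raise ValueError("every outcome must move to a new orientation")
        identity.append(pre_resp[j])      # same orientation, no rotation
        outcome.append(pre_resp[i])       # follows the predicted outcome
        null.append(pre_resp[3 - i - j])  # remaining orientation: opposite rotation
    return {"ID": identity, "PO": outcome, "null": null}


def peak_normalize(resp):
    """Scale responses by their peak (responses assumed non-negative)."""
    peak = max(resp) or 1.0
    return [r / peak for r in resp]


def squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify_neuron(pre_resp, post_resp, pre_outcomes, post_outcomes, margin=0.5):
    """Return 'ID', 'PO', or 'BR' for one neuron (hypothetical criterion)."""
    predictions = predicted_post_tuning(pre_resp, pre_outcomes, post_outcomes)
    observed = peak_normalize(post_resp)
    error = {name: squared_error(observed, peak_normalize(pred))
             for name, pred in predictions.items()}
    best = min(("ID", "PO"), key=error.get)
    # A neuron that tracks ID or PO no better than the null rotation
    # (e.g. flat tuning) is treated as broadly tuned.
    if error[best] >= margin * error["null"]:
        return "BR"
    return best


# Orientations ordered as (0 deg, 135 deg, 270 deg), following Figure 1D.
pre_outcomes = ["FC", "NC", "QC"]
post_outcomes = ["NC", "QC", "FC"]
print(classify_neuron([1.0, 0.1, 0.2], [0.1, 0.2, 1.0], pre_outcomes, post_outcomes))
```

The example neuron responds to the food cue at 0° pre-Reversal and at 270° post-Reversal, so its tuning curve matches the outcome-rotated prediction.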

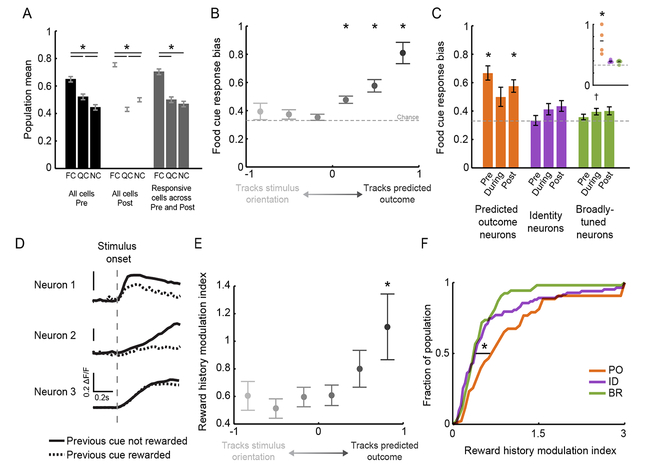

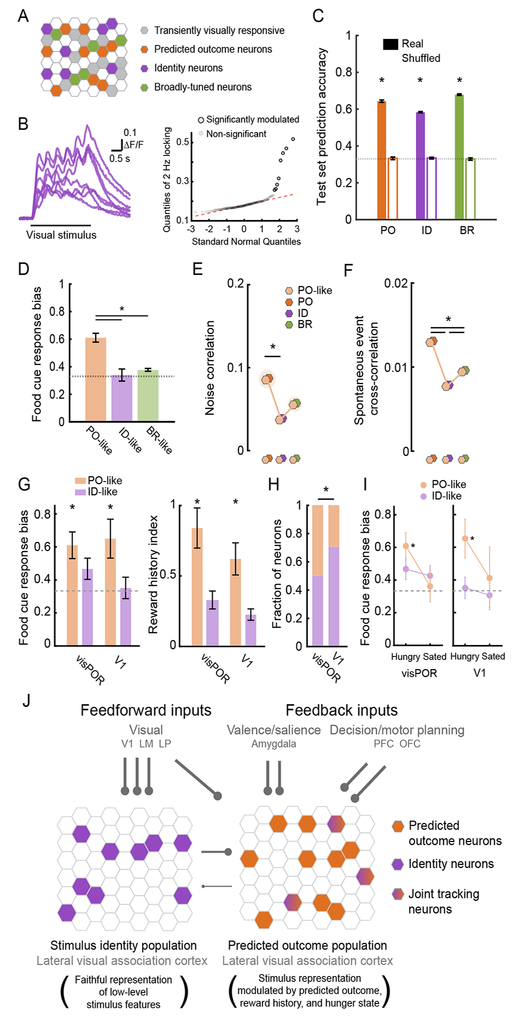

Enhanced sensitivity to food cues and reward history in Predicted Outcome but not Identity neurons

Neural responses in LVAC of food-restricted mice were biased to food cues vs. other visual cues (Figure 3A), consistent with previous work in humans and mice (Burgess et al., 2016; Huerta et al., 2014; LaBar et al., 2001). Here, we asked whether this food cue response bias is more prevalent in any one category of LVAC neurons. The set of 179 neurons responsive to visual cues both pre- and post-Reversal showed a similar net food cue response enhancement as the total population (i.e. neurons driven either pre- and/or post-Reversal; Figure 3A and Figure S3A). Surprisingly, this food cue response bias was absent in the subset of neurons that preferentially tracked stimulus orientation (left half of Figure 3B; cf. Figure 2F; food cue bias = FCresponse / [FCresponse + QCresponse + NCresponse]; no bias: 0.33). This was true both for those neurons that purely tracked stimulus orientation (leftmost datapoint) and for those neurons that weakly tracked stimulus orientation (2nd and 3rd datapoints from left). Instead, the food cue bias only existed in those neurons that predominantly tracked the predicted outcome (right half of Figure 3B; p < 0.001, Wilcoxon Sign-Rank test against 0.33, Bonferroni corrected). Furthermore, those neurons that purely tracked the predicted outcome demonstrated the strongest food cue response bias.
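The food cue bias index defined above can be written out directly. The sketch below assumes non-negative mean cue-evoked response magnitudes; the function name and the example values are hypothetical.

```python
def food_cue_bias(fc_response, qc_response, nc_response):
    """Food cue bias = FC / (FC + QC + NC); 1/3 indicates no bias."""
    total = fc_response + qc_response + nc_response
    if total <= 0:
        raise ValueError("summed response must be positive")
    return fc_response / total


# Hypothetical neuron with a food cue response twice as large as its
# responses to the quinine cue and the neutral cue.
print(food_cue_bias(2.0, 1.0, 1.0))
```

A neuron responding equally to all three cues has an index of 1/3, which is the chance level used in the statistical comparisons.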

Figure 3. Enhanced sensitivity to food cues and reward history in PO but not ID neurons.

A. In hungry mice, lateral visual association cortex showed a response bias to the motivationally-salient food cue both pre- and post-Reversal (black and white bars). A similar bias was observed when only including those neurons that were visually responsive both pre- and post-Reversal (gray bars). * p < 0.001; FCPrePost vs. FCPre, FCPost: p > 0.05, 2-way ANOVA, Tukey-Kramer test. Pre: pre-Reversal; Post: post-Reversal. FC: food cue; QC: quinine cue; NC: neutral cue.

B. Food cue response bias across a range of neurons, from those that mostly tracked stimulus orientation (left; see Figure 2F) to those that mostly tracked predicted outcome. * p < 0.001, Wilcoxon Sign-Rank test against 0.33, Bonferroni corrected.

C. Analysis by category showed that PO neurons, but not ID or BR neurons, were significantly biased to the food cue. * p < 0.0001, Wilcoxon Sign-Rank test against 0.33. Inset: only PO neurons showed a significant food cue bias when performing statistics across mice, rather than across neurons. * p < 0.025, 1-way RM ANOVA, Tukey-Kramer method.

D. Example neurons exhibiting modulation of cue-evoked response depending on whether the previous trial was a rewarded trial (dashed) or not (solid).

E. Only those neurons that strongly tracked the same predicted outcome showed significantly elevated modulation of visual responses by reward history. * p < 0.01 bootstrap permutation test.

F. PO neurons were more sensitive to recent reward history than ID or BR neurons. Error bars: s.e.m. (see text). See also Figure S3.

We confirmed that the group of PO neurons showed a response bias to the food cue (Figure 3C). This bias persisted both pre- and post-Reversal, and thus across associations of different stimulus orientations with the food reward (Figure 3C; inset shows per animal; Figure S3A–B; PO neurons pre- and post-Reversal: p < 0.0001, Wilcoxon Sign-Rank test against chance, Bonferroni corrected within group). Critically, the group of ID neurons did not exhibit this bias (p > 0.05). Thus, while on average there exists a food cue response bias when considered across all neurons, a subgroup of neurons exists that faithfully encodes stimulus identity irrespective of motivational salience. These data suggest the co-existence of largely distinct sets of LVAC neurons that either faithfully represent the same low-level sensory features across time, or that show more plastic representations that track whichever stimulus predicts a given salient outcome.

We previously showed that the magnitude of food cue responses in LVAC neurons varied in their sensitivity to reward history (Burgess et al., 2016). We asked whether such short-timescale variation in expected value of predictive cues differed across functional categories. We estimated the magnitude of a neuron’s average response to its preferred stimulus, either for trials preceded by a rewarded food cue (RFC→Pref) or those preceded by one or more non-rewarded cues (RnonFC→Pref), and calculated a reward history modulation index (abs[RnonFC→Pref-RFC→Pref]/RPref; see example cells in Figure 3D). We found that those neurons that tracked the orientation of the stimulus across the Reversal were relatively insensitive to recent reward history (left half of Figure 3E). In contrast, those neurons that purely tracked the predicted outcome (darkest gray circle) were more sensitive to reward history (bootstrap permutation test: p < 0.01; Figure 3E). Similarly, when analyzing neurons by category, we found that PO neurons were more sensitive to recent reward history than ID or BR neurons (Figure 3F and Figure S3C; PO vs. ID or BR neurons: p < 0.02; ID vs. BR neurons: p > 0.05; Kruskal-Wallis, Bonferroni corrected). As response bias towards the motivationally relevant food cue and sensitivity to reward history both reflect encoding of the expected value of predicted outcomes, these findings further support the conclusion that the subset of LVAC neurons that encode low-level stimulus features do not additionally encode expected value.
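The reward history modulation index can be sketched as follows. The sketch assumes that RPref is the mean response to the preferred stimulus across all trials, which the text does not state explicitly; the function name and the example values are hypothetical.

```python
from statistics import mean


def reward_history_index(responses, prev_rewarded):
    """Return abs(R_nonFC->Pref - R_FC->Pref) / R_Pref for one neuron.

    responses:     single-trial responses to the preferred stimulus
    prev_rewarded: True where the preceding trial was a rewarded food cue
    """
    after_reward = [r for r, p in zip(responses, prev_rewarded) if p]
    after_no_reward = [r for r, p in zip(responses, prev_rewarded) if not p]
    if not after_reward or not after_no_reward:
        raise ValueError("need trials of both history types")
    overall = mean(responses)  # assumed definition of R_Pref
    return abs(mean(after_no_reward) - mean(after_reward)) / overall


# Hypothetical neuron responding more strongly after rewarded trials.
print(reward_history_index([1.0, 1.0, 0.5, 0.5], [True, True, False, False]))
```

Because the index uses an absolute difference, it captures sensitivity to reward history regardless of whether responses increase or decrease following a rewarded trial.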

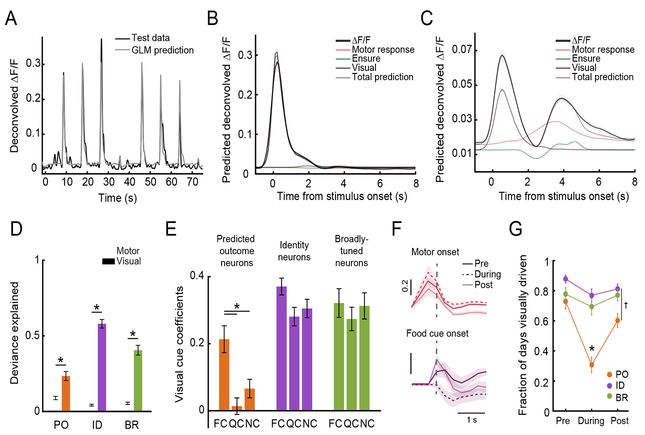

Dissecting sensory, motor, and reward-related responses in visual association cortex

We next used a generalized linear model (GLM; Figure 4A–C) to estimate the relative contributions of visual cues, reward delivery, and other task-relevant events to the overall responses of each group of neurons. Notably, visual cue coefficients, which captured activity tightly locked to stimulus onsets, explained the largest proportion of task-modulated activity in PO neurons as well as in ID and BR neurons (deviance explained using visual coefficients vs. motor coefficients such as those locked to the first lick following cue onset: p < 0.01, Wilcoxon Rank-Sum, Bonferroni corrected; Figure 4A–D; Figure S4A–B). We confirmed that a selective bias to the food cue was present in PO neurons, but not in ID or BR neurons, even when considering only the visual cue response coefficients from the GLM (p < 0.05, Kruskal-Wallis, Bonferroni corrected; Figure 4E).
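One way to attribute explained deviance to individual task variables is sketched below. It assumes a Gaussian deviance (sum of squared residuals) and a leave-one-component-out comparison, in which each component's share is the loss in explained deviance when that component is removed, normalized by the explained deviance of the full model. The GLM family, the fitting step (not shown), and all names are assumptions for illustration rather than a description of the model used here.

```python
from statistics import mean


def gaussian_deviance(observed, predicted):
    """Deviance under a Gaussian model: sum of squared residuals."""
    return sum((o - p) ** 2 for o, p in zip(observed, predicted))


def fraction_deviance_explained(observed, predicted):
    """1 - D(model) / D(null), where the null model predicts the mean."""
    null_prediction = [mean(observed)] * len(observed)
    null_deviance = gaussian_deviance(observed, null_prediction)
    return 1 - gaussian_deviance(observed, predicted) / null_deviance


def component_shares(observed, full_prediction, reduced_predictions):
    """Share of explained deviance attributed to each task variable.

    reduced_predictions maps a component name to the prediction of a
    model refit without that component (the refitting is not shown).
    """
    full = fraction_deviance_explained(observed, full_prediction)
    shares = {}
    for name, prediction in reduced_predictions.items():
        reduced = fraction_deviance_explained(observed, prediction)
        shares[name] = max(full - reduced, 0.0) / full
    return shares


# Hypothetical activity trace that is fully explained by the visual component.
activity = [0.0, 1.0, 2.0, 3.0]
shares = component_shares(
    activity,
    full_prediction=[0.0, 1.0, 2.0, 3.0],
    reduced_predictions={
        "visual": [1.5, 1.5, 1.5, 1.5],  # dropping visual terms loses the fit
        "motor": [0.0, 1.0, 2.0, 3.0],   # dropping motor terms changes nothing
    },
)
print(shares)
```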

Figure 4. Dissecting sensory, motor, and reward-related responses in visual association cortex.

A. A generalized linear model (GLM) could predict the neural activity of an example ID neuron using task variables.

B. The mean response of this neuron was mostly explained by the visual response component (dark purple). Contributions to peri-stimulus responses by activity locked to the motor response (red) or to Ensure delivery (green) were minimal.

C. An example PO neuron demonstrated a visual response component (dark purple), a motor response component (red), and a component related to Ensure delivery (green).

D. We quantified the fraction of the deviance explained by each task variable included in the GLM (normalized by the total deviance explained for each neuron). The deviance explained by the visual response component was significantly greater than for the motor component in PO, ID, and BR neurons. * p < 0.01, Wilcoxon Rank-Sum.

E. Critically, the response bias to the food cue in Figure 3A–C was not the result of motor or premotor activity, as we still observed this bias when only considering coefficients reflecting the visual response component for food cue (FC), quinine cue (QC), and neutral cue (NC) trials.

F. Top: responses of PO neurons contained a motor component that peaked prior to the onset of licking (dashed gray line), and that remained stable Pre-, During- and Post-Reversal. Bottom: responses of PO neurons contained a visual response component for the food cue (dashed gray line) Pre-, and Post-, but not During-Reversal.

G. Average fraction of all days that cells in each category were responsive to at least one stimulus, for each task epoch. PO neurons were not reliably visually responsive during-Reversal, when behavioral performance was poor. * p < 0.001, PO fraction during- vs. pre- and vs. post-Reversal, as well as PO vs. ID neurons or PO vs. BR neurons during-Reversal. † p < 0.01, PO vs. ID neurons post-Reversal. 2-way ANOVA, Tukey-Kramer test. Error bars: s.e.m. See also Figure S4.

PO neurons did not show responses tightly locked to any visual cue in sessions during-Reversal, when the animal was performing the task poorly (potentially due to decreased confidence in cue predictions). We therefore quantified the likelihood of significant visual responses in all three categories of neurons as previously assessed pre- and post-Reversal, but now for during-Reversal sessions. We found that while ID and BR neurons were consistently visually responsive during-Reversal, this was not the case for PO neurons (Figure 4G and Figure S4C–D; PODuring vs. IDDuring or BRDuring: p < 0.0001, 2-way ANOVA with Tukey-Kramer method). This was true despite reliable activity prior to motor initiation (Figure 4F and Figure S4A), implying that this pre-motor neural signal can be decoupled from the visual component of responses in PO neurons. Thus, this GLM analysis reveals that PO neurons lose their short-latency visual response to the stimulus previously predictive of reward, and only display a short-latency response to the new visual stimulus predicting reward after re-learning, once the mouse has developed confident predictions of the new outcomes predicted by each cue.

Taken together, the above results are consistent with a model in which LVAC neurons that track the same outcome across a Reversal are differentially influenced by common top-down inputs, such as amygdala feedback projections that exhibit strong food cue bias, sensitivity to trial/reward history, and encoding of both learned visual cues and associated outcomes (Burgess et al., 2016). In contrast, ID neurons may be differentially influenced by bottom-up input from early visual cortex and thalamus.

Correlated ensembles of Predicted Outcome, Identity, and Broadly-tuned neurons

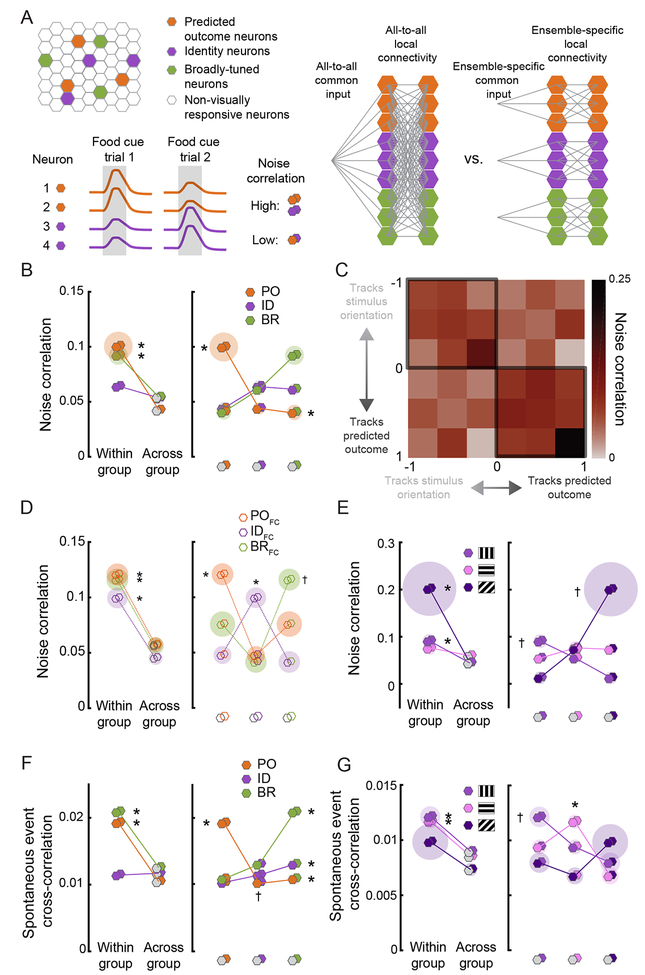

Previously, higher within- vs. across-group co-fluctuations in cue-evoked neural activity (i.e. noise correlations) have been used to identify distinct functional “ensembles” that may reflect increased common input (Figure 5A; Cumming and Nienborg, 2016; Shadlen and Newsome, 1998) and/or increased local connectivity (Cossell et al., 2015; Ko et al., 2011). Thus, we tested whether the largely distinct functional groups of neurons encoding stimulus identity or predicted outcome showed increased within- vs. across-group functional connectivity, thereby supporting the notion of distinct ensembles. To this end, we assessed trial-to-trial co-fluctuations in cue-evoked responses across pairs of neurons during food cue presentations (Figure 5A). Indeed, PO neurons showed higher noise correlations with other PO neurons than with ID or BR neurons (Figure 5B; PO-PO vs. PO-Other: p < 0.001, Wilcoxon Rank-Sum, Bonferroni corrected). BR neurons also demonstrated higher within- vs. across-group noise correlations (p < 0.001). Similar results were observed when separately considering neurons that either weakly or strongly tracked predicted outcome (right half of Figure 5C, cf. Figure 2F). These elevated within- vs. across-group noise correlations were not due to differences in response preferences across groups: restricting analyses to those neurons in each group that preferred the orientation associated with food reward yielded similar results (p < 0.0001; Figure 5D, Wilcoxon Rank-Sum, Bonferroni corrected). Thus, even neurons that preferentially respond to the same stimulus can belong to different ensembles based on whether they track stimulus orientation vs. predicted outcome across a Reversal.
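The within- vs. across-group comparison can be illustrated with a minimal sketch. It assumes that the noise correlation for a pair of neurons is the Pearson correlation of their single-trial responses to repeated presentations of the same cue; the function names, group labels, and response values are hypothetical, and the statistical tests are not reproduced.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation of two equal-length response vectors."""
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]  # trial-to-trial fluctuation around the mean
    dy = [b - my for b in y]
    sxx = sum(a * a for a in dx)
    syy = sum(b * b for b in dy)
    if sxx == 0 or syy == 0:
        raise ValueError("responses must vary across trials")
    return sum(a * b for a, b in zip(dx, dy)) / sqrt(sxx * syy)


def within_vs_across(responses, groups):
    """Mean pairwise noise correlation within and across functional groups.

    responses: neuron id -> single-trial responses to the same cue
    groups:    neuron id -> functional category (e.g. 'PO', 'ID', 'BR')
    """
    within, across = [], []
    for a, b in combinations(sorted(responses), 2):
        r = pearson(responses[a], responses[b])
        (within if groups[a] == groups[b] else across).append(r)
    return mean(within), mean(across)


# Hypothetical food cue responses on four trials: PO neurons respond more
# on trials 1 and 3, ID neurons respond more on trials 2 and 4.
responses = {
    "n1": [2.0, 1.0, 2.0, 1.0],
    "n2": [1.8, 1.1, 2.1, 0.9],
    "n3": [1.0, 2.0, 1.0, 2.0],
    "n4": [1.1, 1.9, 0.8, 2.2],
}
groups = {"n1": "PO", "n2": "PO", "n3": "ID", "n4": "ID"}
within, across = within_vs_across(responses, groups)
print(within, across)
```

As in the schematic of Figure 5A, mean responses are similar across the four example neurons, yet trial-to-trial fluctuations are shared only within each group.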

Figure 5. Correlated ensembles of PO, ID, and BR neurons.

A. Top left: schematic of PO, ID, and BR neurons within a field of view. Right: hypothetical profiles of local and long-range connectivity, were the network of PO, ID, and BR neurons to show either full connectivity or selective connectivity within functionally-distinct ensembles. Bottom left: hypothetical pairwise food cue response co-fluctuation (noise correlation) in simultaneously recorded PO and ID neurons (bottom; gray shaded area: cue presentation). Even though the mean response of all neurons is identical, PO neurons (1 & 2) have larger responses on trial 1, and ID neurons (3 & 4) have larger responses on trial 2, resulting in higher cue-evoked noise correlations within- vs. across-group. For the pairwise correlation analyses below, hexagon pairs denote the functional identity of each neuron in the pair.

B. PO and BR neurons showed higher noise correlations within-group vs. across-group. Left: pairwise correlations between two neurons in the same group (same color) vs. in any other group (gray). Right: all pairwise comparisons. Shaded discs indicate s.e.m. * p < 0.01, Wilcoxon Rank-Sum (left) and Kruskal-Wallis (right).

C. Noise correlation heatmap for all neurons, ranging from those that mostly tracked the orientation of a stimulus to those that mostly tracked the predicted outcome. Pairs of neurons with similar tracking properties had higher pairwise noise correlations.

D. Same analyses as in B, but restricted to the subsets of PO, ID, and BR neurons that preferred the food cue (FC). Right: Pairwise noise correlations were higher within-group (same color) vs. across-group (different colors). * significantly higher mean correlation for neurons in the same vs. in different groups (gray): p < 0.01, Wilcoxon Rank-Sum (left) and Kruskal-Wallis (right), Bonferroni corrected. † significantly higher mean correlation of BRFC neurons with other BRFC neurons than with IDFC neurons: p < 0.001, Kruskal-Wallis, Bonferroni corrected. All other p values > 0.05. Shaded disc radius: s.e.m.

E. Subgroups of ID neurons preferentially responsive to a specific stimulus orientation showed higher noise correlations within- vs. across-subgroup. Left: within-subgroup vs. across-subgroup comparisons. Right: all pairwise comparisons. * p < 0.01, within- vs. across-subgroup. † p < 0.01, highest vs. lowest pairs; Kruskal-Wallis/Wilcoxon Rank-Sum, Bonferroni corrected.

F. PO and BR neurons showed higher spontaneous event cross-correlations within-group vs. across-group. * denotes p < 0.001 for within- vs. across-group comparison or vs. all other groups; Kruskal-Wallis/Wilcoxon Rank-Sum, Bonferroni corrected. † p < 0.05, PO-ID vs. BR-ID pairs.

G. Subgroups of ID neurons showed higher spontaneous event cross-correlations within-subgroup vs. across-subgroup. * p < 0.01, within- vs. across-subgroups. † p < 0.01, highest vs. lowest pairs; Kruskal-Wallis/Wilcoxon Rank-Sum, Bonferroni corrected. See also Figures S5-S6.

We additionally hypothesized that within the set of ID neurons in LVAC, there might exist highly correlated sub-ensembles, each preferring a unique stimulus orientation, as in primary visual cortex (Cossell et al., 2015; Ko et al., 2011). Indeed, we confirmed the presence of different sub-ensembles of ID neurons preferring each of the three stimulus orientations, with increased noise correlations within vs. across sub-ensembles (Figure 5E; FCori-FCori vs. FCori-Otherori: p = 0.009, NCori-NCori vs. NCori-Otherori: p = 0.004; QCori-QCori vs. QCori-Otherori: p = 0.27).

Analysis of spontaneous co-activity of neurons provided additional evidence for the existence of distinct ensembles in LVAC. As with trial-to-trial noise correlations of stimulus-evoked activity, we observed higher within-group vs. across-group correlations in spontaneous activity, during moments in which no stimulus was present (Figure 5F and Figure S5A–B; p < 0.001, Wilcoxon Rank-Sum, Bonferroni corrected). Pairs of ID neurons with the same orientation preference also showed higher spontaneous co-activity than those with different orientation preferences (Figure 5G; FCori-FCori vs. FCori-Otherori: p < 0.01, QCori-QCori vs. QCori-Otherori: p < 0.0001, NCori-NCori vs. NCori-Otherori: p = 0.17, Wilcoxon Rank-Sum, Bonferroni corrected). These results were evident even in individual recording sessions (e.g. Figure S5C–E). Altogether, these data suggest the existence of multiple ID sub-ensembles of correlated neurons encoding distinct stimulus features in LVAC (as in V1, Cossell et al., 2015; Ko et al., 2011), as well as at least one additional intermingled ensemble that encodes predicted outcome.

While PO, BR, and ID neurons were generally intermingled, PO and BR neurons did show weak spatial clustering relative to ID neurons (Figure S6A). However, functional properties did not differ between neurons in the center or in the periphery of our fields of view (Figure S6B–F): both demonstrated selective food cue response enhancement in PO neurons (Figure S6D) and higher correlations within vs. across functional categories (Figure S6E–F). This suggests that our findings are not limited to the retinotopically-defined area in the center of our field of view – visual postrhinal cortex (visPOR) – but instead may generalize to neighboring regions of LVAC, possibly due to innervation by common sources of input (Burgess et al., 2016).

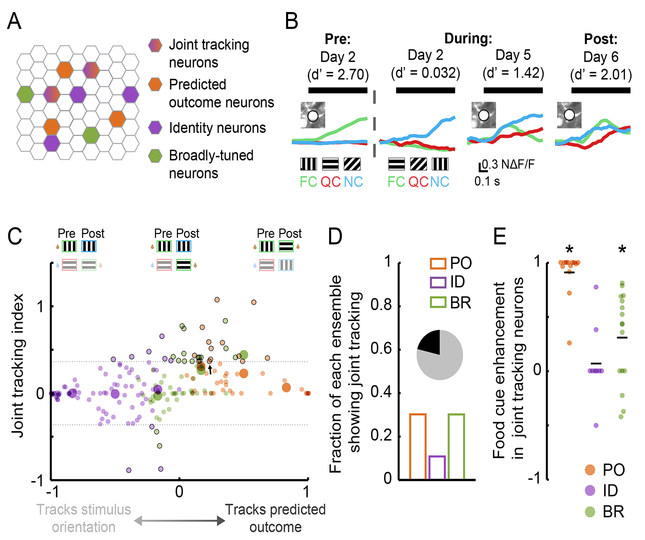

Joint tracking of stimulus identity and predicted outcome in single neurons

We sought to distinguish neurons that might exhibit true ‘joint tracking’ (i.e. those demonstrating a visual cue-evoked response both to a specific stimulus orientation and to whichever stimulus predicts the same outcome pre- and post-Reversal) from those that were simply broadly responsive to all stimuli. An example joint tracking neuron is shown in Figure 6B. To assess the strength of joint tracking, we used an index that equals 0 for those neurons that purely track the stimulus orientation (Figure 6C; x-axis value of −1) or the predicted outcome (Figure 6C; x-axis value of 1) across the Reversal, and that is positive for those neurons that partially track both stimulus orientation and predicted outcome (Figure 6C; small black circles: neurons with significant joint tracking; large circles: averages for each category; dashed gray lines: 95% confidence intervals). Only a minority of neurons showed significant joint tracking (Figure 6D). PO and BR neurons with significant joint tracking demonstrated enhanced food cue responses post- vs. pre-Reversal (p < 0.01, Wilcoxon Sign-Rank test against 0, Bonferroni corrected; Figure 6E). This suggests that, in addition to encoding stimulus orientation, these neurons shifted their tuning curves post-Reversal to also encode the new food cue. In contrast, the small minority of ID neurons exhibiting joint tracking showed no significant enhancement in food cue responses post-Reversal, further supporting the notion that visual responses of ID neurons are largely insensitive to predicted value (p > 0.05, Wilcoxon Sign-Rank test against 0, Bonferroni corrected; Figure 6E).

Figure 6. Joint tracking of stimulus identity and predicted outcome in single neurons.

A. Schematic demonstrating the possible existence of neurons that partially track both stimulus identity and predicted outcome.

B. An example neuron that demonstrates joint tracking. This neuron initially responded to the orientation associated with the food cue (FC). Following new learning, it continued to respond to this orientation but now also responded to the new FC. QC: quinine cue; NC: neutral cue.

C. Joint tracking index across neurons, ordered from those that precisely tracked the same stimulus orientation (left end of x-axis) to those that precisely tracked whichever stimulus predicted the same outcome (right end of x-axis). A minority of neurons showed significant joint tracking of both stimulus identity and predicted outcome (dashed lines: 95% confidence intervals). Black outlines denote neurons with significant joint tracking. Arrow: example neuron from B.

D. Fraction of neurons in each category showing significant joint tracking. 38/179 showed significant joint tracking (inset).

E. Joint tracking neurons from the PO category showed a response enhancement to the food cue, while those from the ID category did not.

Neurons recruited during new learning encode predicted value

During Reversal, Predicted Outcome neurons lose their short-latency response to the visual stimulus that previously predicted food reward, and subsequently develop a response to the new visual stimulus now predicting reward (Figure 4F–G). We therefore hypothesized that an additional subset of neurons might exist that develop a similar response to the new visual stimulus now predicting reward, yet that were unresponsive to any visual stimulus pre-Reversal. Further, we predicted that such “Recruited” neurons would exhibit functional properties similar to those of PO neurons. We identified 472 neurons that were either not visually responsive or that were not identifiable pre-Reversal, but that became visually responsive post-Reversal (Figure 7A–C). Visual responses in these neurons showed a similar bias to the food cue as in PO neurons (Figure 7D; p < 0.025 for all comparisons, Kruskal-Wallis, Bonferroni corrected) and a similarly strong sensitivity to reward history (Figure 7E; p > 0.05, Wilcoxon Rank-Sum).

Figure 7. Neurons recruited during new learning encode predicted value.

A. Schematic demonstrating the possible existence of neurons that are “recruited” by new learning.

B. Example neuron that developed cue-evoked responses as the mouse learned the new cue-outcome associations. FC: food cue; QC: quinine cue; NC: neutral cue.

C. Fraction of visually-responsive Recruited neurons and Predicted Outcome (PO) neurons pre-, during- and post-Reversal.

D. Recruited neurons showed a population response bias to the food cue.

E. Recruited neurons and PO neurons both had similarly strong sensitivity to recent reward history. n.s.: p > 0.05, Wilcoxon Rank-Sum.

F. Recruited neurons and PO neurons showed similar noise correlations with PO, ID, and BR neurons, suggesting that Recruited neurons might become integrated with the PO ensemble. * p < 0.05, Kruskal-Wallis.

G. Recruited neurons showed higher spontaneous correlations with PO neurons than with ID or BR neurons post-Reversal and, surprisingly, pre-Reversal (an epoch when Recruited neurons were not visually responsive). * p < 0.05, 2-way ANOVA, Tukey-Kramer test. Shaded disc radius: s.e.m.

H. We quantified the similarity in the patterns of noise and spontaneous correlations for neurons in each category. PO neurons showed higher similarity to Recruited neurons, while ID neurons showed higher similarity to BR neurons. See also Figure S7.

We found that Recruited neurons were integrated with the correlated ensemble of PO neurons. Specifically, both Recruited neurons and PO neurons showed higher post-Reversal noise correlations with PO neurons than with ID or BR neurons (Figure 5C; p < 0.002, Kruskal-Wallis, Bonferroni corrected). Recruited neurons also showed higher post-Reversal spontaneous event cross-correlations with PO neurons than with ID or BR neurons (Figure 7G; post-Reversal: p < 0.01, 2-way ANOVA, Tukey-Kramer method). Overall, when combining noise and spontaneous correlations together post-Reversal, Recruited neurons were generally more co-active with PO neurons than with ID or BR neurons (Figure 7H). Remarkably, in those Recruited neurons for which we could measure spontaneous activity pre-Reversal, we also observed a significantly higher pre-Reversal correlation in spontaneous activity with PO neurons than with other groups (right of Figure 7G; p < 0.05, 2-way ANOVA, Tukey-Kramer method), even though Recruited neurons were not significantly visually driven by any cue during this pre-Reversal epoch. These data suggest that Recruited neurons become integrated into the PO ensemble and may be predisposed to this integration even prior to the emergence of visual responses during new learning.

These Recruited neurons were mirrored by a population of neurons that ceased to be visually responsive when cue-outcome contingencies were reassigned (“Offline” neurons; n = 232 neurons; Figure S7A–B). Pre-Reversal, these Offline neurons were also biased to the food cue (Figure S7C; FC vs. QC and NC: p < 0.001, Kruskal-Wallis, Bonferroni corrected) and showed similar sensitivity to reward history as PO neurons (p > 0.05, Wilcoxon Rank-Sum test). However, Offline neurons did not demonstrate the same pattern of functional connectivity as the Recruited population (Figure S7F–G), as they did not show higher noise correlations or spontaneous correlations with PO than with ID or BR neurons. Thus, while Offline neurons exhibit similar properties to PO neurons, they may be predisposed to go ‘offline’ due to a lack of strong functional connectivity with PO neurons.

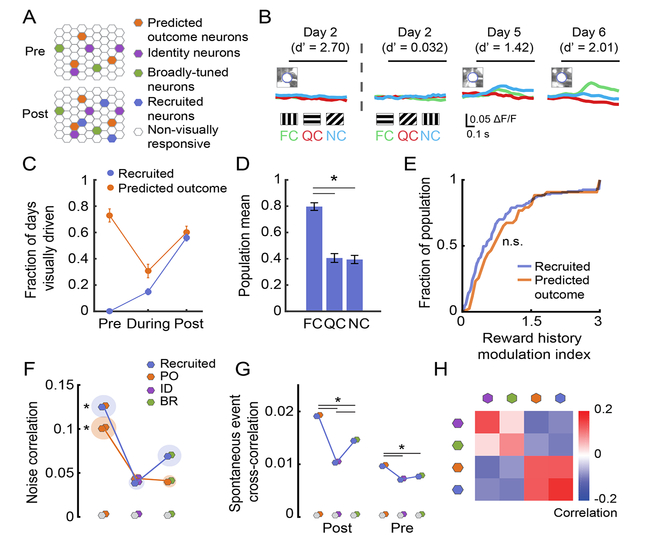

Single-session visual response dynamics can predict which neurons will track stimulus identity or predicted outcome across Reversal

The above findings regarding Recruited and Offline neurons led us to conduct a more comprehensive assessment of the functional similarity and correlations between the small set of neurons driven pre- and post-Reversal and thus classifiable as PO, ID, or BR neurons (n = 179) and the larger group of transiently visually responsive neurons driven on at least 2 sessions pre- or post-Reversal, but not both (n = 543 neurons; Figure 8A). To this end, we tested whether response dynamics measured during a single recording session were sufficient to correctly classify neurons responsive pre- and post-Reversal as PO, ID, or BR (i.e. whether single-session responses could predict if a neuron would track stimulus orientation or predicted outcome across Reversal). If successful, this same classifier could then be applied to the larger pool of transiently responsive neurons, and thus serve as a useful tool for relating our across-day findings to single-session measurements from both current and previous studies. Initial observations suggested that low-level response features might indeed be predictive of membership in an across-Reversal category. For example, certain neurons showed a temporally locked response to the 2 Hz temporal frequency of the drifting gratings (Figure 8B; p < 0.05; see STAR Methods), and these neurons were all ID neurons that tracked the same stimulus orientation across the Reversal (note that 2 Hz locking was not used during initial categorization in Figure S2A–B).

Figure 8. Single-session visual response dynamics can predict which neurons will track stimulus identity or predicted outcome across Reversal.

A. Many neurons were transiently visually responsive and were not tracked across the Reversal.

B. Left: some neurons displayed visual responses that followed the 2 Hz temporal frequency of the drifting grating. Right: quantile plot. Neurons that were significantly modulated at 2 Hz (rightmost circles, outlined in black; all plotted in left panel) were all ID neurons.

C. A Random Forests classifier could use single-session, low-level visual response features to distinguish PO, ID, or BR neurons at levels significantly above chance. * p < 0.01, Wilcoxon Rank-Sum, real vs. permuted shuffle of category labels during classifier training.

D. Neurons that shared the same low-level visual response features as PO neurons (“PO-like neurons”) showed a stronger bias to the motivationally relevant food cue than “ID-like” or “BR-like” neurons.

E. PO-like neurons showed higher noise correlations with PO neurons than with ID neurons.

F. PO-like neurons showed higher spontaneous correlations with PO neurons than with ID or BR neurons.

G. Unlike ID-like neurons, PO-like neurons in both visual postrhinal cortex (visPOR) and primary visual cortex (V1) showed a significant response bias to the food cue (left). PO-like neurons also demonstrated greater sensitivity to reward history than ID-like neurons (right).

H. While PO-like neurons were present in V1, they were significantly less common than in visPOR. * p < 0.05, Tukey’s HSD post hoc test among proportions.

I. In both V1 and visPOR, PO-like neurons showed a strong response bias to the food cue while ID-like neurons did not. This bias was abolished following satiation. * p < 0.01, Wilcoxon Sign-Rank vs. chance (0.33). Error bars: s.e.m.

J. We hypothesize that within the same region of lateral visual association cortex, PO, PO-like and Recruited neurons (right) receive inputs from brain regions involved in assigning valence/salience to learned cues (e.g. LA/BLA), in task-dependent decision-making and/or motor action (e.g. PFC/OFC), as well as input from visual sources, including LP, and local neurons encoding stimulus identity. By contrast, ID and ID-like neurons (left) may predominantly receive input from earlier visual areas including LP, V1 and LM. LA: lateral nucleus of the amygdala; BLA: basolateral nucleus of the amygdala; PFC: prefrontal cortex; OFC: orbitofrontal cortex; LP: lateral posterior nucleus of the thalamus; LM: lateromedial visual area. See also Figure S8.

To attempt to distinguish PO, ID, and BR neurons using single-session data, we extracted a large set of basic visual response features collected from a single imaging session (2 Hz locking, trial-to-trial response variability, mean response latency, response selectivity, response magnitude, response latency variability, ramp index, and time to peak). We trained a Random Forests classifier to label neurons as PO, ID or BR based on these sensory response features. Strikingly, this approach showed that within-session data was sufficient to classify neurons as PO, ID, and BR at well above chance levels (Figure 8C; Figure S8B–D; PO-, Identity-, and Broadly-tuned vs. Shuffle: p < 0.0001, Wilcoxon Rank-Sum; Breiman, 2001; Geng et al., 2004).
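The logic of the label-shuffle control can be sketched in a few lines of Python. To keep the sketch dependency-free, a nearest-centroid classifier with leave-one-out evaluation stands in for the Random Forests classifier, and a one-sided permutation p-value stands in for the Wilcoxon Rank-Sum comparison; both substitutions, along with the function names and default parameters, are assumptions made for illustration only.

```python
import random


def centroid(rows):
    """Column-wise mean of a list of feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def loo_accuracy(features, labels):
    """Leave-one-out accuracy of a nearest-centroid classifier (stand-in model)."""
    correct = 0
    for i, (x, true_label) in enumerate(zip(features, labels)):
        by_class = {}
        for j, (f, lab) in enumerate(zip(features, labels)):
            if j != i:  # hold out neuron i when computing class centroids
                by_class.setdefault(lab, []).append(f)
        centroids = {lab: centroid(rows) for lab, rows in by_class.items()}
        predicted = min(
            centroids,
            key=lambda lab: sum((a - b) ** 2 for a, b in zip(x, centroids[lab])),
        )
        correct += predicted == true_label
    return correct / len(labels)


def shuffle_control(features, labels, n_shuffles=50, seed=0):
    """Compare real accuracy with accuracies obtained after permuting labels."""
    rng = random.Random(seed)
    real = loo_accuracy(features, labels)
    shuffled = []
    for _ in range(n_shuffles):
        permuted = labels[:]
        rng.shuffle(permuted)  # break the feature-to-category relationship
        shuffled.append(loo_accuracy(features, permuted))
    # One-sided permutation p-value with the standard +1 correction.
    p = (1 + sum(s >= real for s in shuffled)) / (1 + n_shuffles)
    return real, shuffled, p
```

Each row of `features` would hold one neuron's single-session response features (for example, response latency and selectivity), and `labels` its across-Reversal category. If the features carry category information, accuracy with the real labels should exceed the distribution of accuracies obtained with shuffled labels.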

Having validated the classifier, we applied it to transiently visually responsive neurons. We defined “PO-like,” “ID-like,” or “BR-like” neurons as those that demonstrated a high level of classifier confidence (> 0.6) that their response characteristics were similar to those of PO, ID, or BR neurons, respectively (Figure S8F). We found that, as with PO neurons, PO-like neurons showed a stronger food cue response bias than ID-like or BR-like neurons (Figure 8D; PO-like vs. ID-like or BR-like: p < 0.0001; Kruskal-Wallis, Bonferroni corrected).
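The confidence criterion can be expressed as a simple thresholding rule, sketched below in Python. The sketch assumes that "classifier confidence" is a per-class probability (for a Random Forests classifier, this could be the fraction of trees voting for each class); the function name and input format are hypothetical.

```python
def assign_like_label(class_probs, threshold=0.6):
    """Return 'PO-like', 'ID-like', or 'BR-like' if the top class probability
    exceeds the threshold; otherwise return None (neuron left unlabeled).

    class_probs: {"PO": p_po, "ID": p_id, "BR": p_br}  (hypothetical format)
    """
    best = max(class_probs, key=class_probs.get)
    if class_probs[best] > threshold:
        return f"{best}-like"
    return None


# A confidently classified neuron and an ambiguous one (values invented).
print(assign_like_label({"PO": 0.7, "ID": 0.2, "BR": 0.1}))
print(assign_like_label({"PO": 0.5, "ID": 0.3, "BR": 0.2}))
```

Under this rule, transiently responsive neurons whose top class probability does not exceed 0.6 are left unlabeled rather than forced into a category.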

We then tested if these transiently visually responsive neurons were integrated into previously defined PO, ID, or BR ensembles. Indeed, PO-like neurons showed higher noise correlations and spontaneous correlations with PO neurons than with ID or BR neurons (Figure 8E–F; noise correlations with PO vs. ID neurons: p = 0.001; spontaneous cross-correlations with PO vs. ID or BR neurons: p < 0.01, Kruskal-Wallis, Bonferroni corrected). Thus, neurons that are transiently visually responsive and share single-session visual response properties with PO neurons appear to be integrated with the PO ensemble.

Predicted Outcome-like neurons in both LVAC and V1 are differentially sensitive to hunger state

We next applied this classifier to imaging datasets from a previous study of neurons in visPOR (an area within lateral visual association cortex, LVAC) and in primary visual cortex (V1; n = 8 mice; Burgess et al., 2016). In previous studies, neurons in both V1 and LVAC of naïve mice often responded to specific oriented gratings (Burgess et al., 2016; Niell and Stryker, 2008) and maintained stable orientation tuning curves across multiple days (Burgess et al., 2016; Mank et al., 2008). Further, in trained mice, we previously found that satiety abolished the population-wide average food cue response bias across visPOR neurons, while a similar response bias and hunger sensitivity was not observed in V1 (Burgess et al., 2016). These previous analyses averaged across all responsive neurons, due to our previous inability to define ID, PO, and BR categories in the absence of Reversal learning. Now, by training the classifier on our current dataset and classifying neurons from this previous dataset into functional categories, we could test (i) if PO-like neurons were also present in V1, and (ii) if the selective reduction in food cue responses in visPOR neurons following satiation occurred predominantly in PO-like neurons, consistent with their proposed role in encoding motivationally-salient predicted outcomes.

The Random Forests classifier identified PO-like neurons as well as ID-like neurons in our previous recordings in visPOR and, surprisingly, in V1. Consistent with the findings from PO and PO-like LVAC neurons in the current dataset, PO-like neurons in this previous dataset also showed a response bias to the food cue and enhanced sensitivity to reward history relative to ID-like neurons in visPOR and, surprisingly, in V1 (Figure 8G; p < 0.05, Wilcoxon Sign-Rank test against 0.33 and Wilcoxon Rank-Sum test, PO-like vs. ID-like). Nevertheless, the fraction of PO-like neurons was significantly higher in visPOR than in V1 (Figure 8H; p < 0.05, Tukey’s HSD method).

Predicted Outcome (PO)-like neurons in V1 and visPOR were strongly biased to the food cue, and this bias was abolished by satiation in both regions of cortex (Figure 8I). In contrast, ID-like neurons in V1 and visPOR from this previous dataset did not show a food cue response bias, regardless of hunger state (Figure 8I; p > 0.05, Wilcoxon Rank-Sum). Moreover, even the subset of ID-like neurons that preferred the stimulus associated with food reward was not modulated by changes in hunger state or reward history (Figure S8G–H). These data further support our finding that the encoding of either stimulus identity or predicted value is carried out by largely distinct, intermingled ensembles of cortical neurons. More generally, these findings illustrate the utility of training a classifier to use single-session data to predict a neuron’s response plasticity and dynamics during longitudinal recordings, as a means to bridge chronic functional imaging datasets with single-session recordings across studies and brain areas.

Discussion

Using two-photon calcium imaging, we tracked visual responses in the same layer 2/3 neurons of mouse lateral visual association cortex (LVAC) across sessions prior to, during and after a reassignment of cue-outcome associations. We identified intermingled ensembles of neurons that mostly tracked either stimulus identity or predicted outcome. Neurons that tracked stimulus identity (ID neurons) encoded a low-level stimulus feature – stimulus orientation – across a switch in cue-outcome associations. Strikingly, ID neurons did not encode stimulus value, as they showed no response bias to the salient food cue and little sensitivity to reward history or hunger state. In this way, these neurons maintained a faithful representation of stimulus identity. In contrast, neurons that tracked the same predicted outcome irrespective of the stimulus orientation (PO neurons) exhibited short-latency visual responses that were biased to the motivationally relevant food cue and sensitive to reward history. Both PO and ID groups of neurons showed higher within- vs. across-group noise correlations and spontaneous correlations, suggesting that these groups represent largely distinct functional ensembles. Other neurons in LVAC were initially unresponsive to visual cues but developed cue-evoked responses after new learning. These recruited neurons had visual responses that were strongly biased to the food cue and were selectively correlated with those of the PO ensemble. Together, these findings suggest that lateral visual association cortex maintains a faithful neuronal representation of visual stimuli, together with a largely separate, motivation-dependent representation of salient predicted outcomes.

Ensembles encoding visual stimulus identity or predicted value in other brain regions

Historically, sensory representations of stimulus identity and predicted value have most commonly been considered in separate studies in different brain areas (but see Paton et al., 2006; Schoenbaum et al., 1998). For example, the existence of ensembles of excitatory neurons preferring distinct stimulus features has been extensively documented in primary visual cortex (V1; Ko et al., 2011; Kohn and Smith, 2005; Lee et al., 2016). Groups of V1 neurons with common stimulus preferences and with high pairwise trial-to-trial noise correlations (Cossell et al., 2015; Ko et al., 2011) and/or high correlations in spontaneous activity (Ch’ng and Reid, 2010; Okun et al., 2015; Tsodyks et al., 1999) are often defined as belonging to the same ensemble. Such ensembles may be activated together due to increased probability of within-ensemble connections (Cossell et al., 2015; Ko et al., 2011; Lee et al., 2016), and/or due to common sources of feedforward or feedback input (Cohen and Maunsell, 2009; Cumming and Nienborg, 2016; Shadlen and Newsome, 1998; Smith and Kohn, 2008). Due to their robust responses even during passive viewing of visual stimuli, these ensembles in primary visual cortex are thought to encode low-level features of the visual stimulus.

In contrast to the important role of V1 ensembles in encoding the identity of visual stimuli, regions such as lateral and basolateral amygdala have been shown to strongly encode cues predicting salient outcomes (Grewe et al., 2017; Morrison and Salzman, 2010; Paton et al., 2006). Intermingled neurons in the rodent and primate amygdala encode cues associated with positive or negative outcomes (Beyeler et al., 2018; Morrison and Salzman, 2010; O’Neill et al., 2018; Schoenbaum et al., 1998), and silencing or lesioning the amygdala affects behavioral responses to learned cues (Baxter and Murray, 2002; Sparta et al., 2014). Furthermore, recent studies using cross-correlation analyses show that positive value-coding and negative value-coding amygdala neurons form distinct ensembles (Zhang et al., 2013). Our findings show that, in a region of cortex between early visual areas and amygdala, there exist intermingled ensembles of functionally distinct neurons whose visual responses mostly track either stimulus orientation or predicted outcome, thereby separately encoding stimulus identity and stimulus value.

Different afferent inputs may target ensembles encoding stimulus identity or predicted outcome

How might these largely distinct functional ensembles come about in lateral visual association cortex? We hypothesize that different afferent inputs target PO vs. ID ensembles, and that these inputs could contribute to the higher within-ensemble vs. across-ensemble correlations (Figure 8J). Previous studies have shown that visPOR receives feedforward input from areas important for basic visual processing, including primary visual cortex (V1; Niell and Stryker, 2008; Wang et al., 2012), secondary visual cortex (LM; Wang et al., 2012), and the lateral posterior nucleus in the thalamus (LP; Zhou et al., 2018). We predict that these inputs more strongly target the ID ensemble and provide information predominantly regarding low-level stimulus features (Figure 8J). In contrast, PO neurons could receive stronger input from brain regions that exhibit sensitivity to motivational context (e.g. hunger-dependent food cue biases and sensitivity to reward history) such as the lateral amygdala (LA; Burgess et al., 2016; Saez et al., 2017). Supporting this, amygdala silencing impairs neuronal responses to salient learned cues in many cortical areas (Livneh et al., 2017; Samuelsen et al., 2012; Schoenbaum et al., 2003; Yang et al., 2016). PO neurons may also receive input from areas conveying information about decision making and motor planning that varies with task context (e.g. PFC: Otis et al. 2017; OFC: Schoenbaum et al. 2003; PPC: Pho et al. 2018).

We found a minority of neurons whose visual responses significantly tracked both stimulus identity and predicted outcome across a Reversal. These “joint tracking” neurons could potentially arise locally due to cross-talk between PO and ID neurons, as we observed lower but non-zero noise and spontaneous correlations between these groups. Alternatively, joint tracking neurons could inherit their tuning (e.g. from LP or LM inputs; Wang et al., 2012; Zhou et al., 2018). Critically, only a small fraction of ID neurons demonstrated significant joint tracking, and those that did showed no response enhancement to the motivationally relevant food cue (Figure 6). These findings further suggest largely separate LVAC populations representing stimulus identity or predicted value.

The hierarchical organization of value representations in the visual system

Human neuroimaging studies consistently report strong hunger-dependent enhancement of neural responses to food cues in lateral visual association cortex (LVAC), but not in primary visual cortex (V1; Huerta et al., 2014; LaBar et al., 2001). Similarly, our previous study in mice showed stronger hunger modulation in LVAC than in V1 (Burgess et al., 2016). Here, we identified a small number of neurons in V1 that had similar stimulus response features to PO neurons in LVAC. Visual responses in these “PO-like” V1 neurons were strongly biased to the food cue and sensitive to hunger state, similar to previous studies showing increased coding of cues predicting rewards in rodent V1 (Poort et al., 2015; Shuler and Bear, 2006). Value-related activity in PO-like V1 neurons may arise from top-down feedback projections from PO neurons in LVAC (Gilbert and Li, 2013; Wang et al., 2012), or from other higher visual areas and non-visual inputs (Buffalo et al., 2010; Burgess et al., 2016; Makino and Komiyama, 2015; Zhang et al., 2014).

The overall increase in food cue response enhancement in LVAC vs. V1 appears mainly due to a larger fraction of LVAC neurons with short-latency visual responses that encode predicted value vs. identity. Consistent with these findings, a recent study found that many neurons in mouse parietal association cortex (PPC) tracked sensorimotor contingencies associated with the “Go” response across a reversal in cue-outcome contingencies, while only a few PPC neurons tracked stimulus identity, whereas the converse was observed in V1 (Pho et al., 2018).

What might be the purpose of identity-coding neurons in lateral visual association cortex? A recent study found that while visual and non-visual information is present in rostral visual association cortex, neurons in this area that project to secondary motor cortex preferentially deliver “pure” visual information, while reciprocal feedback to this area delivers “pure” motor information (Itokazu et al., 2018). Delivery of low-level visual information to downstream brain areas may guide motor performance or provide prediction error signals during learning. We speculate that a similar loop exists between LVAC and lateral amygdala (LA), in which inputs from LVAC to LA encode low-level stimulus features, while inputs from LA to LVAC carry information regarding motivational context (Burgess et al., 2016) and target PO neurons. Thus, LVAC may deliver high-fidelity sensory information to limbic regions important for encoding of motivational salience, while providing context-dependent estimates of the motivational salience of a stimulus to earlier visual areas, thereby biasing bottom-up processing.

At first blush, the finding that ID neurons in mouse LVAC do not demonstrate sensitivity to cue saliency might appear inconsistent with studies of attentional gain modulation of orientation-tuned neurons in macaque higher visual cortical areas (McAdams and Maunsell, 1999; Reynolds and Chelazzi, 2004). However, the magnitude of attentional modulation in these studies is often reduced when using high contrast and easily discriminable visual stimuli (see Reynolds and Chelazzi, 2004) similar to those employed in our task.

Visual responses emerge with learning in a subset of cortical neurons

We additionally identified a subset of neurons that were not initially visually responsive in well-trained mice, but that subsequently developed cue-evoked visual responses and hence were “recruited” with new learning. Previous work in the amygdala, hippocampus, and cortex has shown that neurons with increased excitability are more likely to be incorporated into the memory representation of a salient experience (Cai et al., 2016; Josselyn et al., 2015; Sano et al., 2014). We suggest that Recruited neurons may be more excitable at the time of new learning, and thus predisposed to integrate into newly formed cue-outcome associative representations. In support of this, Recruited neurons showed a strong response bias to the new food cue, and became increasingly integrated into the PO ensemble, as they showed stronger correlations with PO neurons post- and even pre-Reversal.

In summary, we have identified separate but intermingled ensembles in lateral visual association cortex that predominantly encode stimulus identity or predicted outcome across a switch of cue-outcome associations. Our chronic imaging approach sets the stage for testing the circuit and synaptic mechanisms by which this brain region maintains a faithful representation of the visual features of the external world as well as a flexible representation of the predicted value of learned cues.

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Mark Andermann (manderma@bidmc.harvard.edu).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

All animal care and experimental procedures were approved by the Beth Israel Deaconess Medical Center Institutional Animal Care and Use Committee. Mice (n=15, male C57BL/6) were housed with standard mouse chow and water provided ad libitum, unless specified otherwise. Mice used for in vivo two-photon imaging (age at surgery: 9–15 weeks) were instrumented with a headpost and a 3 mm cranial window, centered over lateral visual association cortex including postrhinal cortex (window centered at 4.5 mm lateral and 1 mm anterior to lambda; the exact retinotopic location of visual postrhinal association cortex (visPOR) was determined via intrinsic signal mapping; see below and Goldey et al., 2014). Portions of the data in Figure 8 involve new analyses of a previous dataset (n=8 mice; Burgess et al., 2016).

METHOD DETAILS

Behavioral training

After at least one week of recovery post-surgery, animals were food-restricted to 85–90% of their free-feeding body weight. Animals were head-fixed on a 3D printed running wheel for habituation prior to any behavioral training (10 minutes to 1 hour over 3–4 days). If mice displayed any signs of stress, they were immediately removed from head-fixation, and additional habituation days were added until mice tolerated head-fixation without visible signs of stress. On the final day of habituation to head-fixation, mice were delivered Ensure (a high calorie liquid meal replacement) by hand via a syringe as part of the acclimation process. Subsequently, we trained the animals to associate licking a lickspout with delivery of Ensure, by initially triggering delivery of Ensure (5 μL, 0.0075 calories) to occur with every lick (with a minimum inter-reward-interval of 2.5 s). We tracked licking behavior via a custom 3D-printed, capacitance-based lickspout positioned directly in front of the animal’s mouth. All behavioral training was performed using MonkeyLogic (Asaad and Eskandar, 2008; Burgess et al., 2016).

For the Go-NoGo visual discrimination task, food-restricted mice were trained to discriminate square-wave drifting gratings of different orientations (2 Hz and 0.04 cycles/degree, full-screen square-wave gratings at 80% contrast; the same 3 orientations were used for all mice; for example: food cue (FC): 0°, quinine cue (QC): 270°, neutral cue (NC): 135°; grating orientations were counterbalanced across mice). All visual stimuli were designed in Matlab and presented in pseudorandom order on a calibrated LCD monitor positioned 20 cm from the mouse’s right eye. All stimuli were presented for 3 s, followed by a 2-s window in which the mouse could respond with a lick and a 6-s inter-trial-interval (ITI). The first lick occurring during the response window triggered delivery of Ensure or quinine during FC or QC trials, respectively. Licking during the visual stimulus presentation was not punished, but also did not trigger delivery of the Ensure/quinine. The lickspout was designed with two lick tubes (one for quinine and one for Ensure) positioned such that the tongue contacted both tubes on each lick.
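The trial contingencies described above can be summarized in a short Python sketch. The constants follow the timings given in the text; the function name, the treatment of window boundaries (start inclusive, end exclusive), and the convention that lick times are measured in seconds from stimulus onset are assumptions for illustration, not details of the MonkeyLogic implementation.

```python
STIM_S, RESPONSE_S, ITI_S = 3.0, 2.0, 6.0  # durations from the task description

# Outcome delivered by the first lick in the response window, per cue type.
OUTCOME_BY_CUE = {"FC": "Ensure", "QC": "quinine", "NC": None}


def trial_outcome(cue, lick_times):
    """Return (outcome, delivery_time) for one trial.

    Licks during the stimulus (0 to 3 s) have no consequence; only the first
    lick inside the response window (3 to 5 s) can trigger an outcome.
    """
    window_start, window_end = STIM_S, STIM_S + RESPONSE_S
    in_window = [t for t in sorted(lick_times) if window_start <= t < window_end]
    if not in_window or OUTCOME_BY_CUE[cue] is None:
        return None, None
    return OUTCOME_BY_CUE[cue], in_window[0]


# Early licks during the stimulus are ignored; the lick at 3.4 s triggers reward.
print(trial_outcome("FC", [1.0, 3.4, 4.0]))
```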

Training in the Go-NoGo visual discrimination task progressed through multiple stages. The first stage was Pavlovian FC introduction, followed by FC trials in which reward delivery depended on licking during the response window (‘go’ trials in which licking during the response window led to delivery of 5 μL of Ensure), and finally by the introduction of QC and NC trials (‘no-go’ trials in which licking during the response window led to delivery of 5 μL of 0.1 mM quinine and nothing, respectively) as described in Burgess et al. (2016). Mice were deemed well-trained (d’ > 2; loglinear approach, Stanislaw and Todorov, 1999) following ~2–3 weeks of training. Sessions in well-trained mice included equal numbers of FC, QC, and NC trials. We began all imaging and behavior sessions with presentation of 2–5 Pavlovian FC trials involving automatic delivery of Ensure reward, as a behavioral reminder. Pavlovian FC trials also occurred sporadically during imaging (0–15% of trials) to help maintain engagement. None of these Pavlovian FC presentations were included in subsequent data analyses, unless specifically mentioned.

Pharmacological silencing experiments proceeded as follows: experiments took place once mice were fully trained, and only for mice weighing ~85% of free-feeding weight. We removed the dummy cannulae and inserted stainless steel cannulae to target visual association cortex centered on POR (internal: 33-gauge; Plastics One). Each day, mice were tested on 100 trials of the task to ensure good performance. We then injected 50 nL of muscimol solution (2.5 ng/nL) or saline at a rate of 50 nL/min. After infusion, the injection cannulae were replaced with the dummy cannulae, and behavioral testing started 20 min later. Mice performed 400 trials post-injection. Each run started with 5 Pavlovian food cue trials, then 10 operant food cue trials. Pavlovian food cue trials also occurred sporadically (5% of trials) throughout training. None of these Pavlovian food cue presentations were included in the behavioral or neural data analyses. We verified cannula location for every animal and included in subsequent analyses all animals with verified locations of cannulae in POR.

‘Reversal’ training paradigm involving a switch in cue-outcome contingencies

Once mice stably performed the Go-NoGo visual discrimination task with high behavioral performance (d’ > 2) for at least two days, we switched the cue-outcome associations. The switching of cue-outcome associations consisted of a clockwise rotation of the outcome associated with each of the three visual oriented drifting gratings (Figure 1D). If the initial cue-outcome associations were: FC: 0°, QC: 270°, and NC: 135°, then switching the cue-outcome associations would involve switching the FC from 0° → 270°, the QC from 270° → 135°, and the NC from 135° → 0°.
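
The rotation of outcomes across the three orientations can be written out as a short Python sketch. This is an illustration only, not the task code used in the study (which ran in MonkeyLogic); the function name `rotate_outcomes` and the dictionary representation are hypothetical.

```python
# Hypothetical representation: each outcome label maps to a grating orientation (degrees).
def rotate_outcomes(cue_map):
    """Return the post-Reversal mapping described in the text:
    FC takes the old QC orientation, QC takes the old NC orientation,
    and NC takes the old FC orientation."""
    return {
        "FC": cue_map["QC"],
        "QC": cue_map["NC"],
        "NC": cue_map["FC"],
    }


pre_reversal = {"FC": 0, "QC": 270, "NC": 135}
post_reversal = rotate_outcomes(pre_reversal)
print(post_reversal)  # {'FC': 270, 'QC': 135, 'NC': 0}
```

Because the switch is a three-way rotation rather than a two-way swap, applying it three times returns the original assignment.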

As mentioned above, we classified sessions as containing high behavioral performance when the discriminability, d’, had a value greater than 2. To calculate d’, we pooled false-alarm trials containing a QC or a NC. Separately, we pooled correct-reject trials containing a QC or a NC. This metric allowed us to divide up the behavioral performance across sessions into 3 different epochs: (i) “pre-Reversal” – before the cue-outcome associations have been changed and while the mice exhibit high behavioral performance (d’ > 2); (ii) “during-Reversal” – after the cue-outcome associations have been switched and while the mice exhibit poor behavioral performance (d’ < 2), and (iii) “post-Reversal” – an epoch in which the mice again exhibit high behavioral performance (d’ > 2). We use the term “Reversal” to refer to the switch in cue-outcome associations, even though it is a rotation of the outcome associated with each stimulus orientation, rather than a strict Reversal of the food-associated stimulus and the quinine-associated stimulus. The Reversal could take as little as 3 days and as long as 2 weeks.
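
As an illustration of the d’ calculation, the following Python sketch applies the loglinear correction described by Stanislaw and Todorov (1999): 0.5 is added to the hit and false-alarm counts and 1 to the corresponding trial counts before converting rates to z-scores. The function name, argument names, and example counts are hypothetical, and hits are assumed to be FC trials with a lick in the response window.

```python
from statistics import NormalDist


def d_prime_loglinear(hits, n_go, false_alarms, n_nogo):
    """d' = z(hit rate) - z(false-alarm rate), with the loglinear correction.

    hits / n_go: FC trials with a lick in the response window / all FC trials.
    false_alarms / n_nogo: pooled QC and NC trials with a lick / all QC and NC trials.
    """
    hit_rate = (hits + 0.5) / (n_go + 1)
    fa_rate = (false_alarms + 0.5) / (n_nogo + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Made-up session: 90/100 FC hits; false alarms pooled across QC (10/100) and NC (6/100).
d = d_prime_loglinear(hits=90, n_go=100, false_alarms=10 + 6, n_nogo=100 + 100)
print(f"d' = {d:.2f}, high performance: {d > 2}")
```

The correction keeps d’ finite even when a session contains no misses or no false alarms, which would otherwise give rates of exactly 1 or 0.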

During initial training following Reversal, more FC trials were given in order to facilitate learning and maintain task engagement. Over the subsequent days of the during-Reversal epoch, we increased the number of QC and NC trials until equal numbers of FC, QC, and NC trials were presented (all pre-Reversal and post-Reversal sessions contained equal numbers of each trial type).

Intrinsic signal mapping

To delineate visual cortical areas, we used epifluorescence imaging to measure stimulus-evoked changes in the intrinsic autofluorescence signal (Andermann et al., 2011) in awake mice. Autofluorescence produced by blue excitation (470 nm center, 40 nm band, Chroma) was measured through a longpass emission filter (500 nm cutoff). Images were collected using an EMCCD camera (Rolera EM-C2 QImaging, 251 × 250 pixels spanning 3 × 3 mm; 4 Hz acquisition rate) through a 4x air objective (0.28 NA, Olympus) using the Matlab Image Acquisition toolbox. For retinotopic mapping, we presented Gabor-like patches at 6–9 retinotopic locations for 8 s each (20 degree disc, 2 Hz, 0.04 cycles/degree, 45° or noise patch), with an 8 s inter-stimulus interval. Analysis was performed in ImageJ and Matlab (as in Andermann et al., 2011; Burgess et al., 2016). We isolated POR from LI/LM most easily using stimuli centered at varying vertical locations (from high-to-low stimulus elevation) in the medial/nasal visual field, which translated to medial-to-lateral locations of peak neuronal responses, respectively. We isolated area POR from area P by comparing responses to stimuli positioned at nasal vs. lateral locations in visual space, corresponding to posterior-to-anterior locations of peak neuronal responses in POR but not in P, as expected from Wang and Burkhalter (2007). Following retinotopic mapping for identification of POR, the cranial window was removed, AAV1-Syn-GCaMP6f was injected into POR (100–150 nL into layer 2/3; UPenn Vector Core), and the window was replaced (Goldey et al., 2014). We centered our imaging field of view on POR (visPOR, Allen Brain Atlas), as opposed to cytoarchitectonic POR (Beaudin et al., 2013).

Two-photon calcium imaging

Two-photon calcium imaging was performed using a resonant-scanning two-photon microscope (Neurolabware; 31 frames/second; 787×512 pixels/frame). All imaging was performed with a 16x 0.8 NA objective (Nikon) at 1x zoom (~1200 × 800 μm²). All imaged fields of view (FOV) were at a depth of 110–250 μm below the pial surface. Laser power measured below the objective was 25–60 mW using a Mai Tai DeepSee laser at 960 nm (Newport Corp.). Neurons were confirmed to be within a particular cortical area by comparison of two-photon images of surface vasculature above the imaging site with surface vasculature in widefield intrinsic autofluorescence maps, aligned to widefield retinotopic maps (Andermann et al., 2011; Burgess et al., 2016).

QUANTIFICATION AND STATISTICAL ANALYSIS

For analysis of behavior, n refers to the number of mice, and for comparisons of neural activity, n refers to the number of neurons. Where appropriate, statistical tests were performed across mice in addition to across neurons. These n values are reported in the results and in the figure legends.

Statistical analyses are described in the results, figure legends, and below in this section. In general, we used non-parametric statistical analyses (Wilcoxon sign-rank test, rank-sum test, Kruskal-Wallis tests) or permutation tests so that we would make no assumptions about the distributions of the data. All statistical analyses were performed in Matlab and p < 0.05 was considered significant (with Bonferroni correction for the number of tests where applicable). Quantitative approaches were not used to determine if the data met the assumptions of the parametric tests.

Lick behavior analysis

We additionally quantified behavioral performance by assessing the fraction of trials in which the animal licked to each cue (Figure 1F; Figure S1B). Following the change in cue-outcome associations, we observed a decrease in cue-evoked licking and an increase in levels of “baseline” or non-specific licking. We quantified this by using a lick-learning index (Jurjut et al., 2017) that compares the number of licks in the one-second period prior to cue presentation (LicksPreCue) and the number of licks in the last second of cue presentation (LicksCue; Figure S1C; lick-learning index = [LicksCue – LicksPreCue]/[LicksCue + LicksPreCue]; a value of 1 means that, for every trial, there were zero licks prior to cue onset and there is anticipatory licking before cue offset). We also quantified how stereotyped the licking behavior was at various stages prior to and following Reversal. We smoothed the lick raster plot with a Gaussian filter (σ: 1 second) and then calculated the correlation of each trial’s smoothed lick timecourse with the mean across all smoothed lick timecourses for all trials of a given type. Importantly, we only included the timecourse on each trial up to delivery of the outcome, so that we would not be correlating periods with consummatory licking. The value plotted is the mean correlation value across trials. A value of 1 would indicate that the animal demonstrated identical lick dynamics in every trial that included a behavioral response (Figure S1D).
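
The lick-learning index defined above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: lick times are given in seconds relative to cue onset, the cue lasts 3 s, and lick counts are pooled across trials before the index is computed (whether pooling or per-trial averaging was used is not stated here). The function and argument names are hypothetical.

```python
def lick_learning_index(trials, cue_duration=3.0):
    """(LicksCue - LicksPreCue) / (LicksCue + LicksPreCue), pooled over trials.

    trials: list of per-trial lists of lick times (s), with cue onset at t = 0.
    LicksPreCue counts licks in the 1 s before cue onset;
    LicksCue counts licks in the last 1 s of cue presentation.
    """
    licks_pre = 0
    licks_cue = 0
    for lick_times in trials:
        licks_pre += sum(1 for t in lick_times if -1.0 <= t < 0.0)
        licks_cue += sum(1 for t in lick_times if cue_duration - 1.0 <= t < cue_duration)
    total = licks_pre + licks_cue
    if total == 0:
        return float("nan")  # index is undefined when there are no licks in either window
    return (licks_cue - licks_pre) / total


# Made-up example: purely anticipatory licking gives an index of 1.
print(lick_learning_index([[2.1, 2.5, 2.9], [2.3, 2.8]]))  # 1.0
```

An index near 0 indicates that licking late in the cue is no more frequent than baseline licking, and negative values indicate more licking before the cue than during it.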

Image registration and timecourse extraction

To correct for motion along the imaged plane (x-y motion), each frame was cropped to account for edge effects (cropping removed outer ~10% of image) and registered to an average field-of-view (cropped) using efficient subpixel registration methods (Bonin et al., 2011). Within each imaging session (one session/day), each 30-minute run (4–6 runs/session) was registered to the first run of the day. Slow drifts of the image along the z-axis were typically < 5 μm within a 30-minute run, and z-plane was adjusted between runs by eye or by comparing a running average field-of-view to an imaged volume ± 10 μm above and below our target field-of-view. Cell region-of-interest (ROI) masks and calcium activity timecourses (F(t)) were extracted using a custom implementation of common methods (Burgess et al., 2016; Mukamel et al., 2009). To avoid use of cell masks with overlapping pixels, we only included the top 75% of pixel weights generated by the algorithm for a given mask (Ziv et al., 2013) and excluded any remaining pixels identified in multiple cell masks.

Fluorescence timecourses were extracted by (non-weighted) averaging of fluorescence values across pixels within each ROI mask. Fluorescence timecourses for neuropil within an annulus surrounding each ROI (outer diameter: 50 μm; not including pixels belonging to adjacent ROIs) were also extracted (F_neuropil(t): median value from the neuropil ring on each frame). Neuropil-corrected fluorescence timecourses were calculated as F_neuropil_corrected(t) = F_ROI(t) − w × F_neuropil(t). The neuropil weight, w, was calculated by maximizing the skewness of the difference between the raw fluorescence and the neuropil per day (Bonin et al., 2011), imposing a minimum of 0 and a maximum of 1.5. Most neuropil weights ranged between 0.4 and 1.4. A running estimate of fractional change in fluorescence timecourses was calculated by subtracting a running estimate of baseline fluorescence (F_0(t)) from F_neuropil_corrected(t), then dividing by F_0(t): ΔF/F(t) = (F_neuropil_corrected(t) − F_0(t)) / F_0(t). F_0(t) was estimated as the 10th percentile of a trailing 32-s sliding window (Burgess et al., 2016; Petreanu et al., 2012). For visualization purposes, all example cue-evoked timecourses shown were re-zeroed by subtracting the mean activity in the 1 s prior to visual stimulus onset.
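
The neuropil correction and ΔF/F steps can be sketched in Python as below. This is not the analysis code used in the study (which was written in Matlab). It assumes a simple grid search over w in steps of 0.05, a nearest-rank percentile, and a window that is shorter at the start of the recording. All function names are hypothetical.

```python
def skewness(x):
    """Sample skewness (third central moment over variance**1.5); 0 for a constant trace."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    return m3 / m2 ** 1.5 if m2 > 0 else 0.0


def neuropil_weight(f_roi, f_neuropil, w_min=0.0, w_max=1.5, step=0.05):
    """Grid search (an assumption) for the w in [w_min, w_max] that maximizes
    the skewness of f_roi - w * f_neuropil."""
    best_w, best_skew = w_min, float("-inf")
    for i in range(int(round((w_max - w_min) / step)) + 1):
        w = w_min + i * step
        s = skewness([r - w * n for r, n in zip(f_roi, f_neuropil)])
        if s > best_skew:
            best_w, best_skew = w, s
    return best_w


def running_baseline(f, window_frames, percentile=10):
    """Percentile of a trailing window ending at each frame (nearest-rank estimate)."""
    f0 = []
    for i in range(len(f)):
        window = sorted(f[max(0, i - window_frames + 1): i + 1])
        k = int(round(percentile / 100 * (len(window) - 1)))
        f0.append(window[k])
    return f0


def delta_f_over_f(f_roi, f_neuropil, frame_rate=31.0, window_s=32.0):
    """Return (dF/F timecourse, neuropil weight) for one ROI."""
    w = neuropil_weight(f_roi, f_neuropil)
    f_corr = [r - w * n for r, n in zip(f_roi, f_neuropil)]
    f0 = running_baseline(f_corr, int(round(frame_rate * window_s)))
    # Assumes the baseline stays positive; a real pipeline would need to guard against f0 <= 0.
    return [(fc - b) / b for fc, b in zip(f_corr, f0)], w
```

Re-sorting the window on every frame is slow for long recordings. It is used here only to keep the sketch short and dependency-free.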

Normalization of traces

For all traces shown in Figure 2D and Figure 6B, we normalized within day to the largest overall mean response from 0–1 seconds post-stimulus onset. This was done in order to illustrate the dynamics of the neuron’s response to all 3 cues, and to control for differences in response magnitude and/or in z-sectioning of the cell across sessions. For the across-day response plots in Figure 2D, bottom, and the timecourses in Figure 2G, we normalized the mean response for each cue by the sum of all three responses, thereby focusing on the relative magnitude of each response. For all mean timecourse plots, we smoothed individual trials by 3 frames. For the single trial heatmaps (Figure 2C), we smoothed each trial by 500 ms.

To plot the timecourses of all visually driven neurons on a single day (Figure S5C), we used an auROC (area under the receiver operating characteristic) timecourse (Burgess et al., 2016). We calculated this timecourse each day by binning (93 ms bins) the ΔF/F response of single trials and comparing each bin with a baseline distribution (binned data in the 1 s prior to stimulus onset, for all trials of a given cue type within a session) using an ROC analysis. For this analysis, we included all trials regardless of behavioral performance. This analysis quantifies how discriminable the distribution of activity in a given bin is relative to the baseline activity distribution. For example, if the two distributions are completely non-overlapping, the auROC yields an estimate of 1 (clear increase in activity on every trial; all post-baseline firing rate values are larger than all baseline firing rate values; gold) or 0 (clear decrease in activity; all post-baseline firing rate values are smaller than all baseline firing rate values; light blue), while an auROC estimate of 0.5 indicates that the distributions of baseline activity and of post-baseline activity are indistinguishable (white).
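
A minimal Python sketch of the auROC timecourse follows. It computes the area under the ROC curve directly as the probability that a value drawn from a post-stimulus bin exceeds a value drawn from the baseline distribution, with ties counted as one half (equivalent to the normalized Mann-Whitney U statistic). The data layout, in which each trial is a list of binned ΔF/F values with the pre-stimulus bins first, and the function names are assumptions made for illustration.

```python
def auroc(response, baseline):
    """P(response value > baseline value), counting ties as 0.5.

    1.0: every response value exceeds every baseline value;
    0.0: every response value is below every baseline value;
    0.5: the two distributions are indistinguishable by rank.
    """
    score = 0.0
    for r in response:
        for b in baseline:
            if r > b:
                score += 1.0
            elif r == b:
                score += 0.5
    return score / (len(response) * len(baseline))


def auroc_timecourse(trials, baseline_bins):
    """auROC for each post-baseline bin, for all trials of one cue type in a session.

    trials: list of per-trial lists of binned dF/F values (equal length);
    the first `baseline_bins` entries of each trial precede stimulus onset.
    """
    baseline = [v for trial in trials for v in trial[:baseline_bins]]
    n_bins = len(trials[0])
    return [
        auroc([trial[b] for trial in trials], baseline)
        for b in range(baseline_bins, n_bins)
    ]


# Made-up example: two trials, two baseline bins, two post-stimulus bins.
example = [[0.0, 0.1, 1.0, 0.0], [0.1, 0.0, 2.0, 0.05]]
print(auroc_timecourse(example, baseline_bins=2))
```

Pooling the baseline bins across all trials of a cue type follows the description above, and each post-stimulus bin is then compared against that single pooled distribution.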

Alignment of cell masks across days

For imaging of cell bodies in POR, we chose one set of cell masks for each day. All analyses for the alignment of cell masks across days were semi-automated with the aid of custom Matlab GUIs. To align masks across any pair of daily sessions, we first aligned the mean image from each day by estimating the displacement field using the Demons algorithm (Thirion; Vercauteren et al., 2009). This was done for all pairwise combinations of days. This displacement field was then applied to each individual mask for each pair of days. If two cell masks overlapped by at least a single pixel, we calculated a local 2D correlation coefficient to obtain candidate masks of the same cell across multiple days. We then used a custom Matlab GUI to edit these suggestions, and another GUI to confirm each cell across days (Figures 2D and 6B). Thus, each cell aligned across days was inspected by eye twice for confirmation. Note that the image registration and warping techniques were applied only to masks for alignment suggestion purposes and were never applied to cell masks for fluorescence timecourse estimation. To visualize neurons tracked across days for Figures 2 and 6, we took the mean image of the 200 frames where that neuron had the highest activity (from extracted timecourses).
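
The candidate-matching step can be illustrated with the Python sketch below. The text does not specify exactly what the local 2D correlation was computed on. Here we assume, purely for illustration, that it is the Pearson correlation between two binary masks (already warped into a common frame) within a padded bounding box around their union. The mask representation (sets of (row, column) pixels), the padding, and the function names are all hypothetical.

```python
from math import sqrt


def masks_overlap(mask_a, mask_b):
    """True if the two masks (sets of (row, col) pixels) share at least one pixel."""
    return not mask_a.isdisjoint(mask_b)


def local_mask_correlation(mask_a, mask_b, pad=2):
    """Pearson correlation of two binary masks inside a padded bounding box
    around their union (an assumed definition of 'local')."""
    pixels = mask_a | mask_b
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    a, b = [], []
    for r in range(min(rows) - pad, max(rows) + pad + 1):
        for c in range(min(cols) - pad, max(cols) + pad + 1):
            a.append(1.0 if (r, c) in mask_a else 0.0)
            b.append(1.0 if (r, c) in mask_b else 0.0)
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


# Made-up masks: a 2 x 2 cell on day 1 and the same shape shifted by one pixel on day 2.
day1 = {(5, 5), (5, 6), (6, 5), (6, 6)}
day2 = {(5, 6), (5, 7), (6, 6), (6, 7)}
if masks_overlap(day1, day2):
    print(round(local_mask_correlation(day1, day2), 3))
```

In a semi-automated workflow such as the one described, a score like this would only rank candidate matches, with the final decision left to manual confirmation.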

Criteria for visually responsive neurons