Significance

We show that the orbitofrontal cortex contains cells that first discriminate face from nonface stimuli, then categorize faces by their intrinsic sociodemographic and emotional content. In view of the role of the orbitofrontal cortex in reward processing, decision making, and social behavior, our detailed characterization of these cells sheds light on mechanisms by which social categories are represented in this region. These data articulate a more comprehensive view of the neural architecture for processing face information, one including areas far beyond the core occipitotemporal regions.

Keywords: orbitofrontal cortex, face, macaque, social, single cell

Abstract

Perceiving social and emotional information from faces is a critical primate skill. For this purpose, primates evolved dedicated cortical architecture, especially in occipitotemporal areas, utilizing face-selective cells. Less well understood are the face-selective neurons present in the orbitofrontal cortex (OFC), which are our object of study. We examined 179 face-selective cells in the lateral sulcus of the OFC by characterizing their responses to a rich set of photographs of conspecific faces varying in age, gender, and facial expression. Principal component analysis and unsupervised cluster analysis of stimulus space both revealed that face cells encode face dimensions for social categories and emotions. Categories represented strongly were facial expressions (grin and threat versus lip smack), juvenile, and female monkeys. Cluster analyses of a control population of nearby cells lacking face selectivity did not categorize face stimuli in a meaningful way, suggesting that only face-selective cells directly support face categorization in OFC. Time course analyses of face cell activity from stimulus onset showed that faces were discriminated from nonfaces early, followed by within-face categorization for social and emotion content (i.e., young and facial expression). Face cells revealed no response to acoustic stimuli such as vocalizations and were poorly modulated by vocalizations added to faces. Neuronal responses remained stable when paired with positive or negative reinforcement, implying that face cells encode social information but not learned reward value associated with faces. Overall, our results shed light on a substantial role of the OFC in the characterization of facial information bearing on social and emotional behavior.

A face typically conveys a range of sociodemographic information pertaining to a person: her age, gender, and state of mind. The ability to readily interpret facial features and their emotions is central to human interaction, and the human brain has evolved with areas dedicated to face processing (1). This neural architecture is shared among primates including humans and embodied in several face-selective areas in the occipitotemporal cortical regions (2–5). Neurons in these areas respond almost exclusively to face stimuli (6–10). The distribution of these face areas along a posterior–anterior axis suggests a functional hierarchy along this axis with differentiated roles in face processing and face identification. Occipital areas appear to perform early face processing that yields basic low-level properties of faces. Progressing from posterior to anterior areas, regions in the middle superior temporal sulcus (STS) appear to link features to form face view selectivity, while these are further combined to achieve view invariance and face identification in the anterior regions (1, 11). In parallel, cells sensitive to facial expressions are present in STS (8, 12), although it is not yet clear whether facial expressions are processed in dedicated patches or in conjunction with other attributes such as identity or gaze direction (12–14).

In parallel with temporal areas, face-selective neuronal activity has been observed in ventrolateral prefrontal areas. Face-selective cells were discovered in the inferior convexity below the principal sulcus [n = 37 neurons (15)], in the lower limb of the arcuate sulcus [n = 3 neurons (15)], and in the lateral orbital cortex [n = 4 neurons (15) and 65 visually selective cells among which there are some face-selective cells (16)].

The presence of face-responsive areas in the prefrontal cortex was further confirmed below the principal sulcus (17, 18) and in orbitofrontal cortex (19). In line with this, three small regions selective for face were revealed in the frontal cortex by functional imaging: area PL, below the principal sulcus; PA in the anterior bank of the arcuate sulcus; and PO, in the lateral orbital sulcus (20). In these areas the hemodynamic response was stronger for face expression compared with neutral faces. This difference was stronger in the orbitofrontal cortex, suggesting that this region is involved in processing the emotional content of faces. The presence of this face-selective area among the heterogeneous functions of the surrounding orbitofrontal cortex may set the stage for multidimensional information processing related to face, person, and emotion. Specifically, surrounding areas are linked to computing reward values or preference (21–26) and to processing social information. With respect to the latter, orbitofrontal cortex (OFC) cells are modulated by the viewing of a live monkey receiving a shared reward, suggesting that cells code the value of the reward as modulated by the identity of the monkey with whom reward was shared (27). In addition, neurons in the OFC are more sensitive to rewards when they are not shared, suggesting that they code the social context of a given reward (28). Further, other neurons therein encode the motivational values of social stimuli such as dominant faces or perinea (29). In addition, there are findings from human imaging and lesion studies showing that the OFC is involved in social judgments (30, 31).

In sum, we infer from this literature that neurons specifically coding faces in the orbitofrontal cortex represent social attributes of the faces. To test this hypothesis, we operationally defined social attributes as elements providing information about (i) identity or status such as gender and youth and (ii) emotions as indicated by face expressions. These categories were chosen to represent a large demographic scope of a social population. The categories were not based on the monkey’s prior experience with the stimuli but were inherent to the properties of the facial attributes. To our knowledge, only one electrophysiological study (19) described the response properties of selective face cells in the orbitofrontal cortex to attributes such as view, identity, facial expression, and movement. This study reported that some face cells responded to face expression, and others responded to face identity. However, the small sample size of cells (n = 14 for which a characterization was possible out of 32 face-selective cells) permits only tentative conclusions.

Here we systematically characterized the properties of a large number of face-selective cells (n = 179) recorded in the lateral orbitofrontal sulcus by addressing three questions: Are these face cells coding social dimensions of faces, such as age, gender, or expressions? Are the responses of these face-selective cells also triggered by a vocal stimulus, such as a call which can be considered the acoustic counterpart of the face? Are these responses modified by a learned positive or negative Pavlovian association? We also compared the results obtained with the selective face cell population to a control population of non–face-selective cells to rule out responses based on low-level stimulus properties. Our findings reveal that only OFC face-selective cells encode faces in different categories such as facial expression, age, and gender. The cells appear to be primarily visual and are not sensitive to learned associations between a face and a reward or a punisher. In combination, these data imply that the cells provide an independent matrix within the OFC to represent socially and emotionally relevant categories.

Results

Identifying a Face-Selective Area.

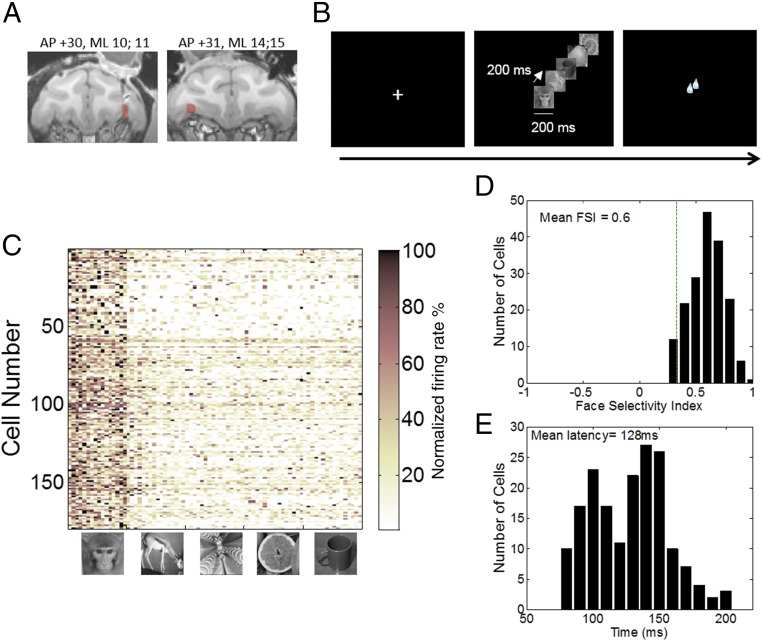

To localize the stereotaxic coordinates of the lateral orbital sulcus along which lies a robust face-selective area [PO (19, 20)], we used MRI scans on each monkey. Then we placed a recording chamber above those coordinates (for monkey Y, AP is +27, ML is +12, right hemisphere; for monkey D, AP is +31, ML is −10, left hemisphere; Materials and Methods and Fig. 1A) and acquired activity in daily recording tracks in two rhesus macaques performing a visual fixation task (Fig. 1B). An electrode was advanced in the brain until activity was detected at the depth corresponding to PO. Cells were then isolated online with a template matching program [multispike detector (MSD), Alpha Omega Engineering], and the activity of each isolated cell was examined with an online peristimulus time histogram (PSTH) that plotted rasters and cumulative activity to face stimuli (16 pictures of different macaque faces unknown to the monkeys we recorded from) and to nonface stimuli (animals, fruits, objects, and fractals, 16 items per category; Materials and Methods), 80 stimuli in all. The activity of every cell to each stimulus category was examined, and we classified online as putative face-selective cells the ones that exhibited a mean response to face stimuli of at least twice the mean of nonface stimuli. Only cells that passed this criterion were kept for further testing; otherwise, the electrode was moved until another cell could be isolated. Using this method, a circumscribed region (Fig. 1A) was identified exhibiting a low, albeit reliable, proportion of face-specific responses: while not all of the cells on these electrode tracks were face cells, we found on average 1 out of 10 cells to respond to faces (130 out of 1,072 cells for monkey Y and 49 out of 730 for monkey D). Note that this sampling is biased because further testing was not performed once a cell not selective to faces was found and, conversely, we stopped sampling within a track when a face cell was found.
The population of face cells we tested further consisted of 179 neurons (Fig. 1C; 130 from monkey Y and 49 from monkey D) that (i) exhibited a mean response to face stimuli of at least twice the mean of nonface stimuli and (ii) showed a significant difference between face and nonface stimuli (P < 0.05, one-way ANOVAs with stimulus category as a factor). This second criterion was used offline to discriminate between cells that exhibited a response to the face category and the animal category (which also included animal faces). We also tested 39 cells that were not face selective. The cells were recorded concurrently with face cells because of either their proximity to a face cell on the same electrode or their presence on a different contact from the face cells. These cells were used as a control population. In both monkeys, the face cells were located in the lateral orbital sulcus (Fig. 1A, red area), with one set of recordings slightly more lateral than the other (for monkey Y, AP is +30, ML is 10, 11; for monkey D, AP is 31, ML is 14, 15). Fig. 1C shows the normalized response selectivity of all face-selective neurons recorded from the two monkeys. Face items (images 1–16) elicited stronger responses across the population than did nonface ones (images 17–80) with an average 23 spikes per second (±1.77 SEM) to faces compared with 11.49 (±0.9) to animals, 8.59 (±0.7) to fractals, 9.05 (±0.8) to fruits, and 8.54 (±0.75) to objects [F(4, 712) = 310, P < 0.0001]. This is even more clearly shown by the distribution of the face-selectivity index (FSI; response to face stimuli minus response to nonface stimuli, all divided by their sum) shown in Fig. 1D. Most cells had firing rates to face stimuli that were three or more times greater than the mean response to the nonface stimuli, with a mean FSI of 0.60, with an overall distribution significantly different from 0 (one-sample t test, P < 0.001). The distribution of the latencies of face-selective neurons (Fig. 1E) to face images ranged from 80 to 196 ms with a mean of 128 ms, which is consistent with previously reported latencies in the OFC (19). Given the restricted localization of our recordings and the very selective nature of the cells we identified, we conclude that we recorded cells from the previously identified patch PO (20).
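The two selection measures described above can be illustrated with a minimal Python sketch. It assumes the FSI is computed exactly as stated in the text (face minus nonface, divided by their sum) and that the online criterion is a simple twofold ratio of mean rates; the function names are hypothetical and this is not the authors' analysis code. The population means are the values reported above.

```python
def face_selectivity_index(face_rate, nonface_rate):
    """FSI = (face - nonface) / (face + nonface), as defined in the text."""
    total = face_rate + nonface_rate
    if total == 0:
        raise ValueError("FSI is undefined when both rates are zero")
    return (face_rate - nonface_rate) / total


def passes_online_criterion(face_rate, nonface_rate, ratio=2.0):
    """Twofold criterion: mean face response at least `ratio` times the nonface mean."""
    return face_rate >= ratio * nonface_rate


# Population mean rates reported in the text (spikes per second).
face_mean = 23.0
nonface_means = [11.49, 8.59, 9.05, 8.54]  # animals, fractals, fruits, objects
nonface_mean = sum(nonface_means) / len(nonface_means)

# FSI of the population means (not the same as the mean of per-cell FSIs).
population_fsi = face_selectivity_index(face_mean, nonface_mean)
print(round(population_fsi, 3), passes_online_criterion(face_mean, nonface_mean))
```

Under this definition, a cell firing twice as much to faces has an FSI of 1/3, and a threefold ratio gives 0.5. The FSI of the population means computed here need not equal the reported mean of per-cell FSIs (0.60), because averaging per-cell ratios is not the same as taking the ratio of averages.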

Fig. 1.

Localization of recording sites in OFC and task description. (A) Coronal view of anatomical MRI scans of monkey Y (Left) and monkey D (Right) showing the area of recording (coordinates above figure) of face-selective cells in color. (B) Basic task design. Face and nonface stimuli were displayed randomly in sequences of five images (presented for 200 ms, separated by 200-ms blank intervals). (C) Population response matrices of all 179 face-selective neurons to a single trial with each of 80 images of faces, animals, fractals, fruits, and objects (16 images per category). Rows represent cells. Images in columns are sorted by category. For each cell, responses to images were averaged over the period starting from the face-response latency onset to the end of the face response and were normalized. (D) Distribution of face-selectivity indexes (FSI) across all face-selective neurons. (E) Distribution of latencies across all face-selective neurons. Latencies were computed for face images only.

Characteristics of the Information Coded by OFC Cells.

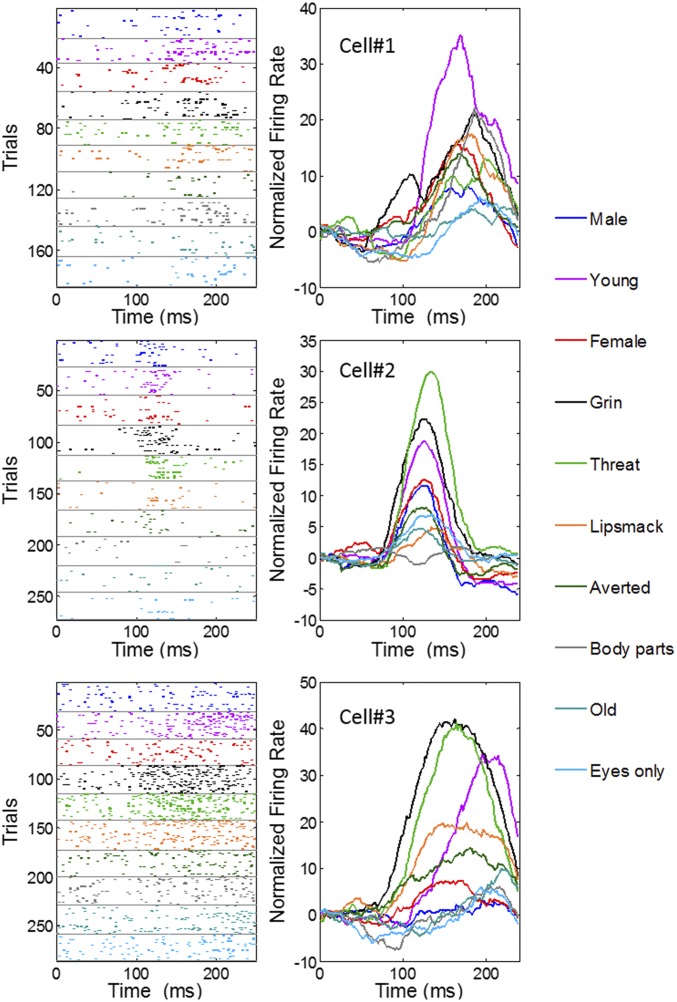

We tested whether 106 of the 179 face-selective cells (66 from M1 and 40 from M2) were sensitive to facial attributes such as gender, age, expression, and gaze (see SI Appendix, Fig. S1, for a representation of all stimuli in the set). To do this, we recorded while monkeys viewed pictures of the full faces of other monkeys unknown to them but bearing different facial expressions or characteristics. We included two other social stimuli that did not represent a full face or possessed no face at all (eyes only and body parts). Photographs of monkeys were assigned to social categories (gender and age) or facial expression following previous assessment (32–34). For example, a threatening face has an open mouth, without showing teeth; a distressed grin has an open mouth with the teeth bared and averted gaze; and a lip smacking affiliative expression has protruding lips and a direct gaze. Photographs were not homogeneous in head position, brightness, or identity. We reasoned that if any representation of the categories was present, it would be observed despite the diversity in the images (e.g., different individuals for each category). In previous studies testing identity representation versus facial expressions, authors used up to five different monkey identities bearing three facial expressions (35, 36). Here we used more individuals (n = 64 individuals) to encompass larger social categories but excluded working on identity. Thus, results we obtain can only reveal categories perceived directly from the images. Each image was flashed for 200 ms, and animals were required to maintain their gaze in the center of the image and were not allowed explorative eye movements. Although we did not assess whether animals perceived and categorized these stimuli, we found nonetheless that 71% of the cells (76 of 106) showed a significant difference in the response to these categories, as assessed by a one-way ANOVA performed on each individual cell (P < 0.05). Fig. 2 illustrates how the activity in three example cells reflected face category (see SI Appendix, Fig. S2, for more examples). Each of these cells shows tuning of its response to categories, sometimes responding much more strongly to one or two categories relative to others.

Fig. 2.

Activity of face cells to the social categories. Example of three cells with selective activity to the stimulus categories. (Left) The raster histograms for each category, shown color-coded, and (Right) the spike density function for each category. x axis represents time from stimulus onset.

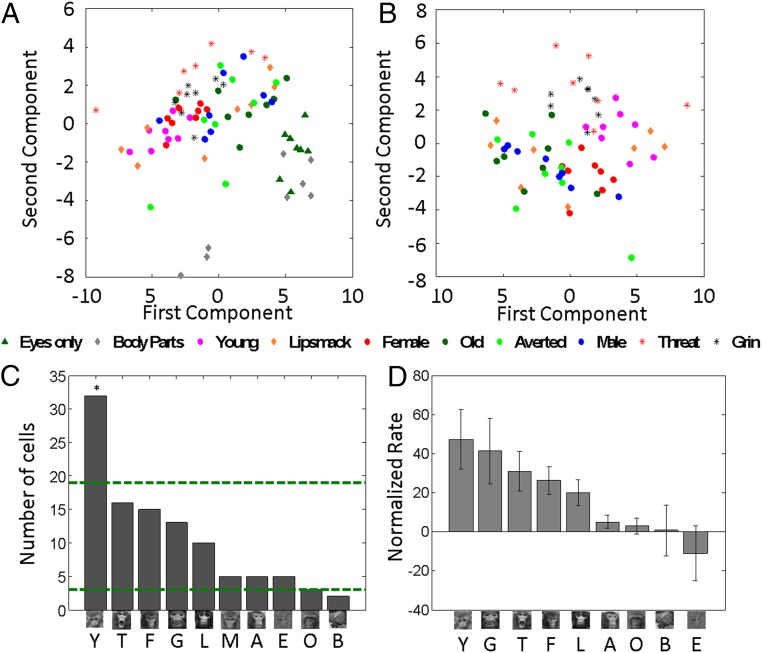

To evaluate whether such facial information is coded across the population of cells, we performed a principal component analysis (PCA) on the normalized firing rates (Materials and Methods). The projection of the three first components against each other for each stimulus reveals different activity patterns for different categories: stimuli belonging to the same category appear close together in the scatterplots (Fig. 3A and SI Appendix, Fig. S3). To test whether the distributions of scores for each category differ from chance, we performed Hotelling T-squared tests with a Bonferroni correction. We found that a large number of categories differed from chance: eyes only [T² = 677.68, df = (3, 5), P < 0.001], body parts (T² = 798.4644, P < 0.001), young (T² = 227.65, P < 0.01), female (T² = 95.2732, P < 0.05), male (T² = 517.7198, P < 0.001), threat (T² = 114.11, P < 0.05), and grin (T² = 84.38, P < 0.05). Pairwise comparisons [Hotelling T-squared for dependent samples, df = (3, 12), with Bonferroni correction, P < 0.05] revealed that categories eyes and body parts differed from all other categories, confirming that these stimuli probably did not engage face-selective cells sufficiently. The category of young stimuli differed from all other full face categories except lip smack and grin. Females differed from young, threat, and grin. Old, averted, and male categories differed from young, threats, and grin. Threat differed from all categories but lip smack and grin, while grin did not differ from young, threat, and lip smack.

Fig. 3.

Principal component analysis and rates per category. (A) Score plots of the first vs. second principal components for PCA including all categories. (B) Score plots of the first vs. second principal components for PCA conducted on face categories only. (C) Number of cells sorted by their best category (n = 106). The green dotted lines represent the upper and lower chance level for the proportions of cells per category with a Bonferroni correction. A, averted; B, body parts; E, eyes only; F, female; G, grin; L, lip smack; M, male; O, old; T, threat; Y, young. (D) Population firing rate plotted relative to the response to neutral male faces (which then represents zero) and sorted in decreasing order.

Because stimuli such as body parts and eyes only contributed substantially to the distribution of the principal components, and may thus distort the statistical tests, we also assessed the robustness of the categorization by performing the analysis without those two categories (Fig. 3B and SI Appendix, Fig. S3). We found that young (T² = 60.64, P = 0.05), female (T² = 249.97, P < 0.01), male (T² = 539.69, P < 0.01), and grin (T² = 90.5, P < 0.05) differed from the distribution of other stimuli. This latter analysis shows that even within a space exclusively composed of faces, stimuli belonging to the same social categories are represented by the cells closely together and differ from other face categories.

A Response Gradient Across Stimuli Categories.

Earlier imaging studies showed that BOLD activity in OFC was more pronounced for expressive than neutral faces (20). To assess this here, we next examined the average firing rates for each category of stimuli. Fig. 3C shows the number of cells classified by the stimulus category eliciting each cell’s strongest response. Young faces were associated with the highest number of cells, whereas body parts, averted faces, males, and older monkeys were linked to the fewest cells. This distribution is different from chance (χ² = 68.40, df = 9, P < 0.001). Further, the number of cells for which young stimuli were the best stimulus was above chance (two-tailed binomial test, Bonferroni corrected, P < 0.05). This outcome indicates that certain stimuli are strongly represented and others poorly so (e.g., older monkey stimuli, which are at chance level). This was confirmed by an analysis of the firing rates across the population of cells for each category (Fig. 3D): young monkeys elicited the strongest firing rates, followed by threats and grins; averted face and older monkeys elicited the weakest rates [F(9, 1,049) = 2.36, P < 0.05].

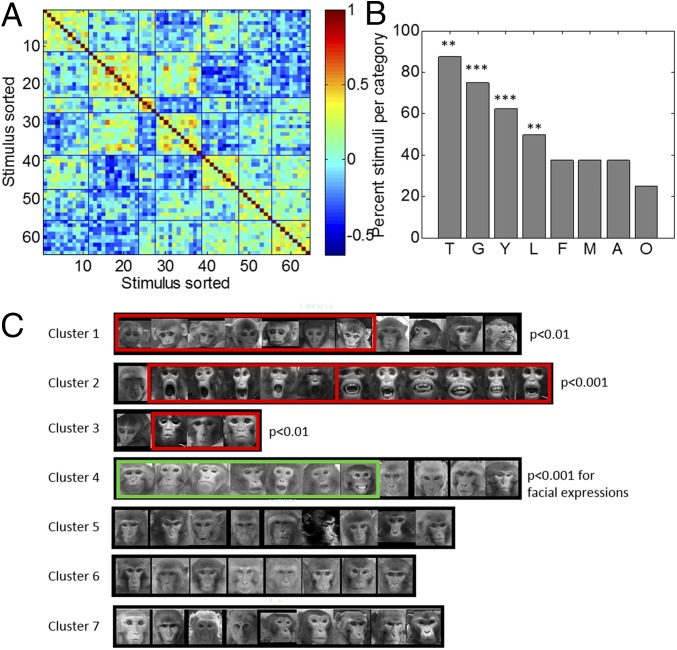

Unsupervised Categorization Along Social Dimensions.

The PCA analysis showed that stimuli from different categories are in some cases adjacent in representational space, possibly because of shared features or high saliency. Thus, threat and grin faces share open mouths, and threat, grin, and young faces may be salient because they differ either from all other faces or from a putative prototypical face (e.g., adult monkey with a neutral expression). To explore such possibilities, we conducted an unsupervised k-means clustering of the stimulus correlation matrix derived from the responses of the population of neurons (Materials and Methods). This analysis also tests whether responses to stimuli cluster without using our assigned labels. We conducted this analysis with the stimulus space consisting of only faces (but see SI Appendix, Fig. S4, for stimulus space including all stimuli). The number of clusters used (seven) was determined as the minimum number that explained at least 90% of variance on the first two components of the 64 × 64 stimulus cross-correlation matrix. Fig. 4 presents the cross-correlation matrix for the seven clusters of stimuli (Fig. 4A) and the actual stimuli corresponding to each cluster (Fig. 4C). Silhouette values (a measure of within cluster coherence with respect to other clusters) for each cluster are presented in SI Appendix, Fig. S4B. Visual examination of the stimuli within each cluster shows that the neuronal population captures a natural feature-based grouping of the stimuli: lip smack and young are each grouped into separate clusters, while threats and grins appear grouped together. Together, this shows that features of salient stimuli such as youth and facial expression are well represented by the population, probably by virtue of their distinctiveness.

Fig. 4.

Unsupervised cluster analysis on the face stimuli only. (A) Cross-correlation matrix with stimuli sorted with respect to stimuli cluster assignment. (B) Percent of stimuli for each prelabeled category that were grouped together in the unsupervised analysis. (C) Actual photos corresponding to the stimuli (1–64) for each cluster shown in A. The red rectangles highlight the stimuli groupings that are above chance with eight categories of eight stimuli. The green rectangle highlights the stimuli grouping above chance when all facial expressions are grouped. Black rectangles surround images whose cluster was not significant.

We further examined the extent to which the unsupervised clustering fits our labels. We calculated the exact probability via Monte Carlo methods of each possible number of pictures of the same category (1–8) to be together in a cluster, given the number of clusters and their size (Materials and Methods). Four clusters out of seven consisted of more images of the prelabeled categories than would be expected by chance (Fig. 4B). Cluster 1 comprised seven pictures of young monkeys of the eight pictures total (P < 0.001). Cluster 2 was almost exclusively composed of threats and grins stimuli (five threats, six grins) (P < 0.01), cluster 3 was composed of lip smacks (P < 0.01), and cluster 4 contained several mouth expressions (three lip smack, three threats, and one grin). Although female monkeys were separated in the PCA analyses above, they were dispersed among clusters that included males, old, and averted faces in this analysis. A similar classification was obtained when the analysis was performed including the nonface stimuli (body parts and eyes only) in the stimulus space. The results show that both classes of stimuli separated into two distinct clusters (SI Appendix, Fig. S4). These latter results also indicate that the cells discriminate nonfacial content to some extent because stimuli showing only body parts clustered separately from eyes only stimuli.
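The chance-level logic described above can be sketched with a small Monte Carlo simulation in Python. This is an illustrative approximation, not the procedure from Materials and Methods: it assumes that category labels are randomly assigned to the 64 stimulus slots and asks how often at least k of the eight pictures of one category land in the same cluster. The cluster sizes below are hypothetical placeholders that merely sum to 64, and the function name is an assumption.

```python
import random
from collections import Counter


def chance_of_grouping(cluster_sizes, category_size, k, n_sim=10000, seed=0):
    """Estimate P(at least k stimuli of one category share a cluster) under random labels."""
    rng = random.Random(seed)
    # One entry per stimulus slot, holding the index of the cluster that owns the slot.
    slots = [c for c, size in enumerate(cluster_sizes) for _ in range(size)]
    hits = 0
    for _ in range(n_sim):
        # Place the category's stimuli into slots at random, without replacement.
        drawn = rng.sample(slots, category_size)
        if max(Counter(drawn).values()) >= k:
            hits += 1
    return hits / n_sim


# Hypothetical sizes for seven clusters covering 64 face stimuli.
hypothetical_sizes = [8, 11, 8, 7, 10, 10, 10]
p_values = {k: chance_of_grouping(hypothetical_sizes, 8, k) for k in range(1, 9)}
for k, p in p_values.items():
    print(k, p)
```

Because the same seed is reused for every k, the estimated probabilities are non-increasing in k by construction. With cluster sizes in this range, finding seven of eight pictures of one category in a single cluster is very unlikely under random assignment, which is the intuition behind the reported significance of the young cluster.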

In sum, this unsupervised analysis supports the results of the PCA analysis, showing that young monkeys are categorized separately from threats and grins. Finally, the cluster findings suggest that the cells are sensitive to information pertaining to the mouth: lip smacks (cluster 4), mixed mouth expressions (cluster 5), or threats and grins (cluster 6).

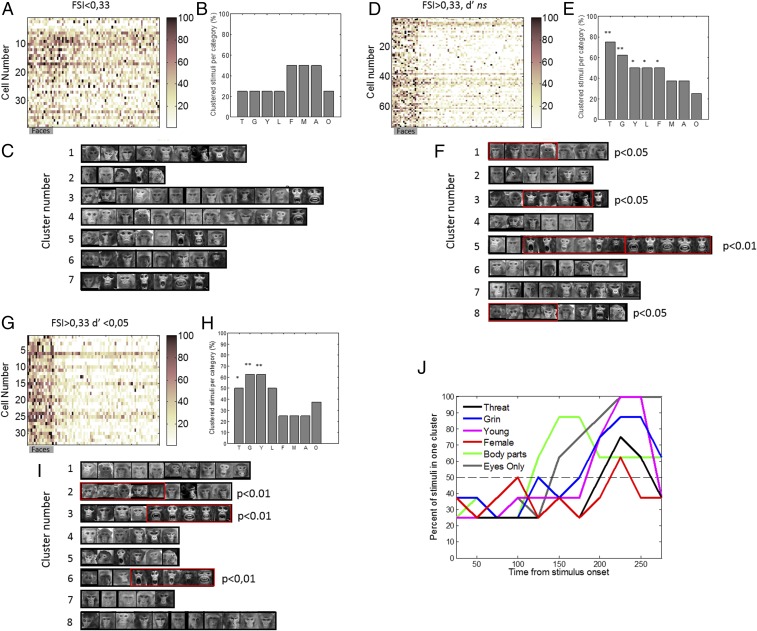

The Categorization Along Social Categories Is Specific to Face Cells.

Our previous sample consisted of face cells only. Next, we assessed whether the properties we described in the previous sections are specific to face cells. We examined whether those properties could be obtained by conducting the same analysis on other cells. We compared results obtained with face cells to ones obtained with a population of 39 cells recorded in the vicinity of face cells but which did not exhibit face-selective responses (Fig. 5A). Because the population of non–face-selective cells is small (n = 39 cells), we compared results to the ones obtained from the population of face cells split in two subsets: face-selective cells with a low face selectivity (Fig. 5 D–F; n = 73) and face-selective cells with high selectivity (n = 33; Fig. 5 G–I). To separate face-selective cells into these high and low populations, we computed d′ and classified cells as highly selective when the d′ was greater than 1.65, corresponding to P < 0.05 one-tailed (Materials and Methods). The distribution of d′ of all three populations as well as their respective face-selectivity indices are shown in SI Appendix, Fig. S5. Then we performed the unsupervised cluster analysis on the cross-correlation matrices (SI Appendix, Fig. S5 D, H, and L) and calculated the probability for each category of stimuli to be in one cluster (Materials and Methods). Results show that clusters obtained with the cells that were not face selective did not significantly separate stimuli into meaningful categories. There were no more images from one of our prelabeled categories in any single cluster than would be expected by chance (Fig. 5 B and C). In contrast, clusters obtained with face-selective cells with a low d′ showed significant categories: there were significantly more pictures of the categories grin, threat, young, lip smack, and females than would be expected by chance for eight clusters (Fig. 5 E and F). Next, the eight clusters obtained with cells that had a high d′ (Fig. 5 H and I) further discriminated pictures of grins from pictures of threats into different clusters. Moreover, pictures of grin were clustered into the same cluster as lip smacks, suggesting that these cells separated the stimuli further along ecologically meaningful categories, rather than only on physical properties.
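The split into high and low selectivity can be illustrated with the following Python sketch. The exact d′ formula is given in Materials and Methods and is not reproduced here, so the code assumes one common definition (difference of means divided by the root of the average variance); the function names and the example firing rates are hypothetical. The sketch also shows where the 1.65 threshold comes from, namely the one-tailed 5% point of the standard normal distribution.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def d_prime(face_rates, nonface_rates):
    """Assumed d' definition: mean difference over the root of the averaged variances."""
    pooled_sd = sqrt((variance(face_rates) + variance(nonface_rates)) / 2)
    if pooled_sd == 0:
        raise ValueError("d' is undefined when both samples have zero variance")
    return (mean(face_rates) - mean(nonface_rates)) / pooled_sd


def is_highly_selective(face_rates, nonface_rates, threshold=1.65):
    """Apply the threshold from the text (d' greater than 1.65)."""
    return d_prime(face_rates, nonface_rates) > threshold


# One-tailed P = 0.05 corresponds to z of about 1.645, rounded to 1.65 in the text.
z_crit = NormalDist().inv_cdf(0.95)

# Hypothetical per-stimulus firing rates (spikes per second) for two example cells.
strong_cell = ([20, 22, 24, 26], [8, 10, 9, 11])
weak_cell = ([10, 14, 9, 13], [9, 12, 8, 13])
print(round(z_crit, 3), is_highly_selective(*strong_cell), is_highly_selective(*weak_cell))
```

Other d′ variants (for example, with a different pooling of variances) would shift individual values slightly, so the classification of cells near the threshold depends on the definition actually used.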

Fig. 5.

Analysis as a function of face selectivity. (A) Population response matrices of non–face-selective neurons (39 cells) to images of faces, animals, fractals, fruits, and objects (16 images per category; conventions are the same as in Fig. 1C). (B) Percent of stimuli for each prelabeled category that were grouped together in the unsupervised analysis. A, averted; F, female; G, grin; L, lip smack; M, male; O, old; T, threat; Y, young. None of the categories showed a significant number of stimuli grouped together. (C) Pictures corresponding to each cluster in the unsupervised cluster analysis performed on the non–face-selective rates to the 64 face stimuli. (D) Population response matrices of the intermediate face-selective population (n = 73 cells). Conventions are as in A. (E) Percent of stimuli for each prelabeled category that were grouped together in the unsupervised analysis. **P < 0.01 for threat and grin, and *P < 0.05 for young, lip smack, and females. (F) Pictures corresponding to each cluster in the unsupervised cluster analysis performed on the intermediate face-selective rates to the 64 face stimuli. (G) Population response matrices of the highly face-selective population (n = 33 cells). Conventions as in A. (H) Percent of stimuli for each prelabeled category that were grouped together in the unsupervised analysis. **P < 0.01 for grin and young, and *P < 0.05 for threat. (I) Pictures corresponding to each cluster in the unsupervised cluster analysis performed on the highly face-selective rates to the 64 face stimuli. (J) Percent of stimuli grouped together by category as a function of time for all face-selective cells. Only categories with a significant number of clustered stimuli are represented. The black dotted line represents a conservative probability threshold of at least 50% of stimuli of each category (four out of eight) to be assigned to the same cluster.

Emergence of Categorization as a Function of Time from Stimulus Onset.

Our results show that the face-selective cells display a large range of latencies, as can be appreciated in Figs. 1E and 2. Moreover, some cells like cell 3 in Fig. 2 appear to increase their firing rate at different times from stimulus onset for different categories. To evaluate whether the OFC face cell population discriminated some categories at earlier times than others, we conducted the cluster analysis on 11 successive, partially overlapping 50-ms bins using a sliding window by increments of 25 ms, starting from stimulus onset until 100 ms after stimulus extinction. To contrast the timing for the emergence of within-face categories to that of face versus nonface discrimination, we also conducted this analysis on nonface stimuli. The results, presented in Fig. 5J, indicate the number of stimuli for categories for which the stimuli clustered together above chance. The analysis shows that cells discriminated between body parts and faces early in the trial starting from 125 ms after stimulus onset. This was followed by a discrimination between faces and eyes only at 150 ms after stimulus onset. Finally, categorization for within-face stimuli emerged later with young and grin clustered from 200 ms onward from stimulus onset, followed closely by threat and female clustering. In general, the number of stimuli clustered together increased as a function of time. This suggests that categorization was higher when the firing rate was the highest. Taken together, our results show that faces are first discriminated from nonfaces. Then, a second process permits a finer-grained categorization of faces within emotional and demographic categories.
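The windowing scheme described above can be made concrete with a short Python sketch. It assumes that the analysis interval runs from stimulus onset (0 ms) to 300 ms, that is, the 200-ms presentation plus 100 ms, and that windows are half-open intervals; the helper names and the example spike times are hypothetical. Under these assumptions, 50-ms windows stepped by 25 ms yield exactly the 11 bins mentioned in the text.

```python
def sliding_windows(start_ms, end_ms, width_ms, step_ms):
    """Return (start, end) pairs of overlapping windows that fit inside [start_ms, end_ms]."""
    windows = []
    t = start_ms
    while t + width_ms <= end_ms:
        windows.append((t, t + width_ms))
        t += step_ms
    return windows


def rate_in_window(spike_times_ms, window):
    """Firing rate (spikes per second) within a half-open window [start, end)."""
    start, end = window
    count = sum(start <= t < end for t in spike_times_ms)
    return count * 1000.0 / (end - start)


# 200-ms stimulus plus 100 ms after extinction, 50-ms bins, 25-ms increments.
windows = sliding_windows(0, 300, 50, 25)
print(len(windows), windows[0], windows[-1])

# Hypothetical spike times (ms from stimulus onset) for one trial.
example_spikes = [12, 130, 141, 155, 160, 210]
print([rate_in_window(example_spikes, w) for w in windows])
```

Each window's population response vector would then feed the same clustering step used for the full response period, so the time course in Fig. 5J reflects how category structure evolves from one window to the next.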

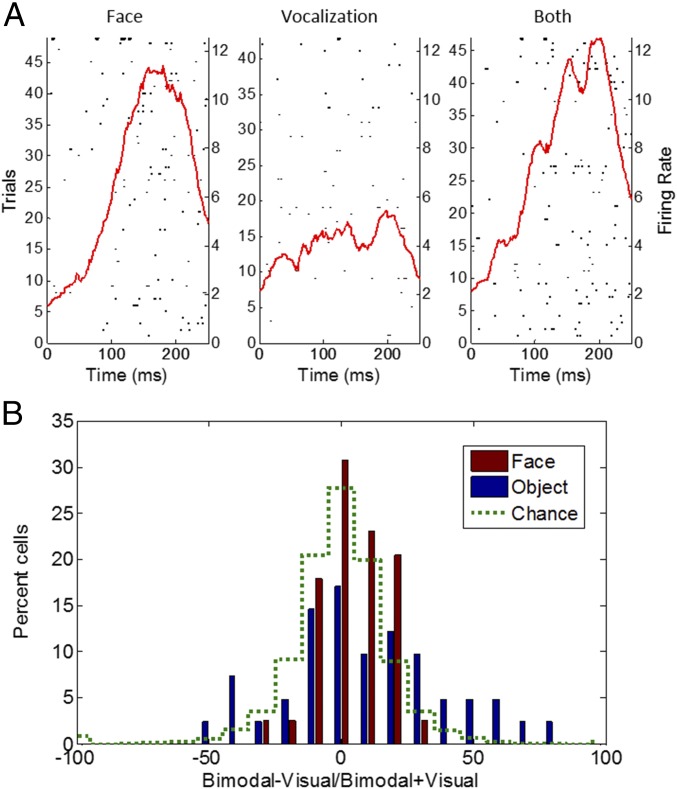

Very Little Modulation of Face Responses by Vocal Stimuli.

To assess whether face cell responses were modulated by voices, we recorded from 43 face cells while photos of faces were presented alone or with a vocal stimulus (grunt, coo, and alarm call playback). This does not test a real multimodal integration such as what could be obtained with a video but rather tests whether the cells responded to an acoustic stimulus or whether the playback of a call modulated the response to the face. The affect or valence content of the photo was congruent with the nature of the call; that is, a face of a monkey cooing was presented together with the recording of a monkey cooing, an alarm call was presented together with a face bearing a grin, and a picture of a monkey emitting a grunt was presented together with a grunt audio sample. Because monkeys in the pictures or in the audio samples were not familiar to the monkeys tested, this setup does not test specific individual identities. We also presented the vocalization alone and nonface stimuli paired with nonvocal auditory stimuli. There was very little modulation of the responses to the visual stimuli because only 2 out of the 43 cells showed a significant difference between face alone and the face paired with a vocalization (t test; Fig. 6A). This proportion rose to six significant cells when an object stimulus was paired with an audio stimulus, but this remains equal to chance overall. Moreover, only four cells had a significant response to vocal stimuli (face or object). To assess whether there was a global modulation at the population level, we calculated an index measuring the effect of the bimodal stimulus compared with the unimodal visual stimulus for each cell (bimodal minus visual, divided by their sum). The results illustrated in Fig. 6B show that there is a shift of the distribution toward the right for the indices calculated on face/call stimuli and object/audio stimuli. This suggests that the activity to a visual stimulus is enhanced by the presentation of a joint auditory stimulus. However, this shift was not significant (sign test, P < 0.07) when comparing the distributions to that obtained with permuted data representing chance. Thus, only a weak effect was observed.

Fig. 6.

Multisensory testing. (A) An example of a cell’s response during the presentation of a face, a vocalization only, and both stimuli. (B) Distribution of the bimodality indices (bimodal minus visual/sum).

Very Little Modulation by Learning of Face–Reward or Face–Punishment Associations.

To evaluate whether face cells encode the learning of face and outcome associations, we recorded 30 face cells as the monkey underwent classical conditioning of face–reward and face–air puff associations. Two new photos of male monkeys unknown to the subject monkeys, with a neutral expression, were presented each day. One face was paired with a positive outcome (fruit juice), the other with a negative outcome (air puff directed to the eyelid). On every recording session, two new pictures were used. We monitored the animal’s behavior, expecting anticipatory licking after acquisition of the face–reward association and an anticipatory eye blink after learning of the face–air puff association (SI Appendix, Fig. S6). To delineate mechanisms more specific to faces, we also used two new nonface images every day.

To assess whether there was an interaction between the face–outcome association and the value of this association (i.e., reward or air puff), we used a two-way ANOVA on different epochs of the trial (SI Appendix) with learning period (the first 10 trials compared with the last 10 trials of the session, each comprising five positive and five negative outcome stimuli) and value (positive or negative) as main factors. There was a main effect of period for 5 of the 30 cells on the activity recorded during the image presentation (P < 0.05).

This modulation was observed only for face stimuli, except for one cell which showed significant difference for both face and nonface stimuli (P < 0.05). A main effect of value was observed for four cells (P < 0.05) implying that they discriminated between the faces associated with positive or negative outcome. If the cells assigned a value to each face and modified their firing rate accordingly, we would expect an interaction between the value and the period. On the contrary, there was a significant interaction for 2 of 30 cells only. In combination, these findings indicate that there is very little effect of learning measured by classical conditioning with taste and air puff reinforcers in face-selective neurons in the OFC.

Discussion

Our findings indicate that face cells in OFC categorize a rich set of photographs of conspecifics into emotion and social categories despite differences in pictures, identity, lighting, or position of head within a category and without familiarity with the individuals portrayed. Thus, the most parsimonious interpretation is that the cells encoded intrinsic properties conveyed by the faces and their expressions. At the single-cell and population levels, faces are discriminated from nonface stimuli and into categories such as young, female, old, and facial expressions involving the mouth. Images bearing physical similarities are grouped together as body parts, eyes only, open mouth expressions (threat/grin), lip smack, and juvenile monkeys. We showed that this categorization was not obtained with a control population that did not respond more to faces than to other stimuli. In addition, cells with a very high selectivity discriminated between stimuli bearing very similar physical traits such as grin and threat. Together, these results indicate that the social categorization of stimuli was specific to face cells and that the processing of the stimuli along social categories did not only depend on low-level properties of pictures but expressed a fine-grained sensitivity to the content of the pictures. Juvenile monkeys and faces with expressions involving an open mouth recruit the greatest number of cells, with each kind mapped into distinct clusters at the population level. However, the cells showed virtually no modulation to faces accompanied by vocalization, indicating their reliance on visual input, nor were they affected by learning in a classical conditioning paradigm involving faces. Taken together, the results suggest that the face cells code intrinsic physical and expressive information conveyed by faces.

Orbitofrontal Face Cells Are Sensitive to Features Conveying Emotions.

The facial expressions in our stimuli are mainly distinguished by modulations of the physical features of the mouth. Affiliative lip smacks are characterized by protruding lips, whereas threat and grin bear a similar open mouth. Our data reveal clearly that OFC face cells are sensitive to a set of features that are specific to facial expressions. There seems to be a gradient in the degree to which face-selective cells can discriminate between facial expressions bearing close similarity. Threat and grin are of interest in this respect because, despite their similarity, they are very different in an ethological context (34). We found that these two categories of stimuli were separated by cells that were highly selective (Fig. 5) but not by other face cells with intermediate selectivity. Further, grin stimuli were clustered together with lip smack stimuli by the cells with a high selectivity. This is an interesting result given that a grin teeth-chatter can be intermixed with a lip smack when produced in an affiliative context (37). These results suggest that cells may be able to represent the emotional implication of the stimuli per se, beyond the physical features.

One puzzling result was that in our learning condition, despite the development of a behavioral expectancy associated with positive or negative outcome (a taste reward or an air puff), the neuronal responses to the faces were unchanged. This implies that there is a stable representation of the intrinsic physical aspect of faces, independent of the learned variables associated with the face. This result fits observations that the selectivity profiles of face cells in the anterior fundus face patch of the temporal lobe did not change over months of recordings (38). In general, the scope of our conclusions is limited to the assertion that face cells do not associate faces with primary reinforcers and nonrewards such as fruit juice and air puff. It is conceivable that more ecologically relevant social reinforcers such as positive and negative facial expressions would change the neural representation of a neutral face when frequently associated with that face. This line of inquiry remains to be pursued.

In sum, our findings indicate that the OFC face cells provide a matrix to code physical attributes of the faces, including the variations of the features during expressions. This matrix can support an effective interpretation of the emotions conveyed by faces. This process could emerge from the orbitofrontal face cells directly or result from interaction with the orbitofrontal cortex surrounding the face patches. In support of this hypothesis, patients with OFC lesions are impaired in the identification of facial expressions or emotions (39–41). Medial OFC lesions in particular are associated with profound changes in personality, with traits close to sociopathy (42, 43), and with a decrease in the subjective feelings of negative emotions (44, 45). Likewise, fMRI studies of healthy adults report activation of the OFC for facial expressions per se (46–48). Overall, then, emotion recognition is likely the result of a functional network (49), and the prominent representation of the facial expressions that we uncovered here may act as a scaffold for the interpretation of the emotions.

Orbitofrontal Face Cells as a Support for Socially Relevant Information.

Another key finding here is the prominent categorization of face stimuli along demographic features. Juvenile faces were robustly separated from other stimuli and yielded the largest firing rate response. Female faces were also represented separately in the PCA analysis. By contrast, several categories elicited low activation such as older monkeys, males, and monkeys with averted gaze. Together, these results provide nearly unique evidence that different types of demographic categories are encoded as distinct in the primate OFC. The encoding of these categories echoes fMRI studies in healthy humans implying that this region bears on adaptive social behavior. For example, fMRI studies in healthy adults observe activity in OFC for viewing infants (50, 51) and attractive female faces (52–54). Further, a variety of findings indicate that there is a special processing of infant faces that engenders an increased perceived pleasantness for their viewing (55) and for their prioritization (56, 57). The increased activity to young faces in OFC observed here may support findings in humans for a dedicated processing of infants.

Another interpretation of these results is related to social dominance. A morphometric imaging study in the macaque showed that brain organization reflects several aspects of social dominance such as social status or social network size (58). Specifically, social status recruited an amygdala-centered network, while the size of the social group recruited cortical regions in the middle superior temporal sulcus and rostral prefrontal cortex. These findings imply the existence of a neuronal substrate specifically involved in social dominance. Consistent with this, neurons in the orbitofrontal cortex, which is connected to the amygdala, were shown to discriminate dominant and nondominant faces of known conspecifics (29). In our data, the representations of young faces were close to female faces in the PCA analysis and were opposed to the much less represented category of older monkeys. Possibly this could denote a safe, nondominant social category that can be inferred from the images. In this framework, our results contrast with the recent ones (21), which reported a lack of a coding of social hierarchy in OFC. However, these authors did not test face-selective cells specifically. Further, this study differs from ours because it searched for a fine representation of hierarchical ranking of known familiar males. Our result, in contrast, relates to perceived categories based on physical characteristics, rather than on a learned history of interactions. Human ratings of faces, for example, show that dominance evaluation is sensitive to features signaling strength and masculinity (59), so baby and feminine faces, which lack masculine features, may be perceived as nonthreatening or as dominant in the matriarchal rhesus hierarchy. Recent studies in humans imply that the dorsomedial prefrontal cortex may encode social dominance (31), so the activity of the cells studied here could contribute to such representations of social hierarchy.

Comparing Properties of the OFC Face Cells with Face Cells in Other Areas.

Our face cells were in a small area just above the lateral orbital sulcus, at the boundary between areas 13l and 12o (60), and corresponded to the orbitofrontal face patch described in a majority of monkeys in an fMRI study (20). This confirms earlier preliminary reports of face cells in the lateral orbital cortex (15, 19). This confirmation across three different experimental methods and laboratories affirms the robustness of the selectivity effects.

The latencies of these face cells, ranging from 80 to 200 ms (mean of 127 ms), are consistent with the previous reports (15, 19). Our findings on the emergence of categorization as a function of time showed that cells discriminated faces from nonfaces before they performed a finer categorization within the face stimulus space. This is consistent with findings showing a decoding of different stimulus categories as a function of time from stimulus onset in the macaque inferotemporal cortex (61). Specifically, the authors found an earlier representation of midlevel categories (e.g., face vs. body and human vs. monkey) compared with subordinate-level categories (such as person identity). The latencies of the OFC face cells are generally above the ones reported in the temporal cortex, ranging from 80 to 120 ms using similar methods for computing the latency, i.e., a threshold on the instantaneous spike density function (61–64). How the OFC face cells may combine or multiplex input from the temporal regions remains to be determined.

The orbitofrontal face cells did not respond to vocalization and performed very little multisensory integration. While auditory neurons responding to vocalization have been found in the orbitofrontal cortex (19), we confirmed that face-selective cells did not appear to be sensitive to acoustic stimuli (19), whether these stimuli were presented separately or together with a face. This is similar to face-responsive cells in the medial temporal lobe, which, while responsive to faces, did not show any modulation by acoustic stimuli (64). This functional organization contrasts strongly with cells in the prefrontal cortex (17, 65–67). Overall, these results would be consistent with the possibility that either (i) the OFC and the lateral prefrontal cortex receive parallel input from the ventral stream (68) or (ii) the OFC provides input to the prefrontal cortex, which in turn integrates this input with other acoustic input (69).

The patterns of selectivity of the OFC cells are similar to those in (i) the amygdala (35, 70) and (ii) the lower bank of the STS (8, 12), both of which possess neurons selective to facial expression and facial identity. However, we did not test whether OFC cells coded identity directly. Nonetheless, every category in our set was composed of eight different identities, and the rates within a category were not uniform. This variation may reflect sensitivity to identities, but clarification must await the completion of studies aimed at this issue.

For further comparison, consider that the latencies in the OFC (130 ms) were longer than the ones in both the amygdala (70–100 ms) and the STS (80–120 ms). Therefore, it is conceivable that the OFC cells receive input from cells in the STS or the amygdala that encode facial expressions, thus multiplexing the information in a local module relevant to prefrontal function. Given the tight anatomical connections between OFC and the amygdala (60, 71, 72) and the role of the amygdala in emotion (73, 74), studying how they influence each other during emotional processing would be a timely, fruitful pursuit.

Materials and Methods

Subjects and Surgical Procedures.

Two adult male rhesus monkeys (Macaca mulatta) (denoted Y and D, 8.5 kg and 7.5 kg, respectively) were used. All studies were carried out in accordance with European Communities Council Directive of 2010 (2010/63/UE) as well as the recommendations of the French National Committee (2013/113). All procedures were examined and approved by the ethics committee CELYNE (Comité d’Ethique Lyonnais pour les Neurosciences Expérimentales #42). Animals were prepared for chronic recording of single-neuron activity in the orbitofrontal cortex. Anesthesia was induced with Zoletil 20 (15 mg/kg) and maintained under isoflurane (2.5%) during positioning of a cilux head-restraint post and recording chamber (Crist Instruments). The position of the recording chamber for each animal was determined using stereotaxic coordinates derived from presurgical anatomical magnetic resonance images (MRI) at 0.6-mm isometric resolution (1.5 T). We used postsurgical MR images with an electrode of known depth inserted in the middle section of the chamber to finely monitor recording locations.

Behavioral Testing.

All experiments were performed in a dark room where monkeys were head restrained and sat in front of an LCD screen situated 26 cm from their eyes. The animal’s gaze positions were monitored with an infrared eye tracker (ISCAN) at a frame rate of 250 Hz. Eye movements were sampled and stored with REX Software (75), which was also used for stimulus presentation and outcome delivery. Visual stimuli subtended a visual angle of 9 × 9° and were presented randomly. Auditory stimuli were played at ∼70 dB from two speakers located 45 cm in front of the subject and symmetrically 60 cm apart. The monkey had to look at a fixation point at the center of the screen to start the trial and from then on had to maintain its gaze on the images displayed (five images per block, each presented for 200 ms and separated by 200 ms of blank display).

Visual Stimuli.

Visual stimuli consisted of several blocks tested separately for the screening and the social categorization part of the study. Screening stimuli contained nonface (fruits, objects, fractals, and nonprimate animals) and face images (with unfamiliar neutral monkey faces) (16 images per category). For the social characterization, 10 categories were used (male monkey faces, female monkey faces, juvenile monkey faces, old monkey faces, lip smacking, threatening, fear-expressing faces, averted-gaze monkey faces, eyes, and monkey body parts) with eight exemplars per category. The stimuli were photographs of monkeys unfamiliar to the monkeys we recorded from. Given the very diverse nature of the stimulus categories, we used pictures taken from various sources: colonies in other laboratories but also occasionally from the internet. Within each category, no two stimuli portrayed the same individual.

Neuronal Recordings.

We targeted area 13, in the lateral orbital sulcus, corresponding to the prefrontal orbital face patch PO described by Tsao et al. (20). Monkeys were first implanted with a recording chamber based on anatomical information acquired with MRI. Single-neuron activity was recorded extracellularly with a tungsten electrode (1–2 MΩ; Frederick Haer Company) or 16-contact U-probes with contacts spaced 300 µm apart (Plexon Inc.). The electrodes were introduced into the brain daily using stainless steel guide tubes set inside a delrin grid (Crist Instruments) and a NAN microdrive (Plexon Inc.). Most cells (n = 150) were recorded with an Alpha Omega real-time spike sorter [amplified using a Neurolog system (Digitimer) and digitized for online spike discrimination using the MSD software]. Behavioral control, visual display, and data storage were under the control of a PC running the REX system (75). REX was used to display PSTHs online. For the remainder of the sessions, neuronal signals were band-pass filtered from 150 Hz to 8 kHz and preamplified using a Plexon system (Plexon Inc.). Signals were digitized and sampled at 20 kHz via a Spike 2 system, which also displayed PSTHs.

Face-Selectivity Screening Procedure and Testing Design.

Once a single unit was isolated, we used a screening block set of stimuli (Fig. 1B) which contained images of faces and nonfaces presented at random for 200 ms. We used a custom-made online PSTH display to identify face-selective cells during recording. In the laboratory, a cell was accepted as a face cell if its activity to face stimuli was at least twice that to nonface stimuli. The population of face cells was further examined with one of the following tests: screening for categories (106 cells), multimodal sensitivity (43 cells), and face–reward associations (30 cells). These tests were performed in different sessions because the monkeys were only tested for a limited amount of time in the laboratory, during which they earned liquid reward, and they would not perform all of the tasks during one session. We also gathered a total of 39 cells that were not face selective and were recorded concurrently with some of the face cells. These cells served as a control for the analysis.

Face Category Testing.

The majority of face-selective cells (106 out of 179 cells) were tested with a block of face stimuli differing in age, gender, and facial expressions (see SI Appendix, Fig. S1, for a complete representation of the stimulus set). The stimuli were presented for 200 ms in the same way as described for the screening test.

Multisensory Testing.

Forty-three cells (31 cells from monkey Y and 12 cells from monkey D) were tested for their response to face, vocalization, or face plus vocalization. The auditory stimuli contained monkey vocalizations or nonvocal sounds (birdcall, trumpet, or computer-generated noises) and were presented either alone or in combination with a congruent visual stimulus (face or object). Vocalization stimuli were divided into three categories (coo, scream, and grunt) with five different identities per category.

Data Analysis.

Analyses were performed with custom-written scripts and the Statistics Toolbox in MATLAB (MathWorks).

Latencies.

We smoothed spike trains for face stimuli by convolution with a Gaussian kernel (σ = 10 ms) to obtain the spike density function (SDF). The baseline activity was defined as the mean discharge rate during the period from 100 ms before image onset until 50 ms after image onset. The latency of the neuronal response was determined to be the time point when the SDF for the face stimuli first exceeded a level +2.5 SDs from the baseline activity for 30 consecutive ms. This was defined as the “latency on.” We defined the time at which neuronal activity returned to baseline as the time at which neuronal discharge was below threshold for 50 consecutive ms. This was defined as the “activity off.”
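The latency criterion can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code: it assumes a trial-averaged rate binned at 1 ms, a Gaussian kernel truncated at ±3σ, a baseline SD computed across the smoothed baseline samples, and a search that starts at the end of the baseline window. The function names and the toy rate profile are hypothetical, and the "activity off" criterion is not implemented.

```python
import math

def gaussian_kernel(sigma_ms, n_sigmas=3):
    """Discrete Gaussian on a 1-ms grid, truncated at n_sigmas and normalized to sum to 1."""
    half = int(n_sigmas * sigma_ms)
    weights = [math.exp(-0.5 * (k / sigma_ms) ** 2) for k in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def spike_density(rate_hz, sigma_ms=10):
    """Smooth a 1-ms binned rate; near the edges only the available samples are used."""
    kernel = gaussian_kernel(sigma_ms)
    half = len(kernel) // 2
    n = len(rate_hz)
    sdf = []
    for i in range(n):
        acc = norm = 0.0
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < n:
                acc += w * rate_hz[j]
                norm += w
        sdf.append(acc / norm)
    return sdf

def latency_on(sdf, t0_ms, baseline=(-100, 50), n_sd=2.5, run_ms=30):
    """First time (ms from image onset) at which the SDF stays above
    baseline mean + n_sd * SD for run_ms consecutive samples; None if never."""
    b0, b1 = baseline[0] - t0_ms, baseline[1] - t0_ms
    base = sdf[b0:b1]
    mean = sum(base) / len(base)
    sd = math.sqrt(sum((x - mean) ** 2 for x in base) / (len(base) - 1))
    threshold = mean + n_sd * sd
    run = 0
    for i in range(b1, len(sdf)):
        run = run + 1 if sdf[i] > threshold else 0
        if run == run_ms:
            return t0_ms + (i - run_ms + 1)  # time of the first sample in the run
    return None

# Toy rate (hypothetical): about 10 spikes/s with a small ripple, stepping to about 60 spikes/s at 120 ms.
t0 = -100  # time of the first sample relative to image onset
rate = [(60.0 if t >= 120 else 10.0) + math.sin(2 * math.pi * t / 40) for t in range(t0, 300)]
latency = latency_on(spike_density(rate), t0)
print(latency)
```

Because the smoothing kernel spreads the step over tens of milliseconds, the detected latency falls somewhat before the nominal step time, which is one reason latencies are best compared across studies that use the same kernel and threshold.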

Rates per stimulus.

For each cell, firing rates were calculated during image presentation, from the latency-on to the activity-off time points, and selectivity was assessed with ANOVAs comparing the mean response to the different image categories (face, fruits, animals, fractals, and objects).

Face-selectivity index.

The face-selectivity index was calculated using the average response to face and nonface stimuli during the screening test. Rates were not normalized; they were averaged for the time window between latency on and activity off, as described just above. The same time window was used for the nonface stimuli. FSI was then calculated as follows:
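A widely used contrast form of such an index, assumed here for illustration rather than taken from the text, divides the difference between the mean face and nonface responses by their sum. The Python sketch below uses that assumed definition; the function name and the example rates are hypothetical.

```python
def face_selectivity_index(face_rate, nonface_rate):
    """Contrast index (face - nonface) / (face + nonface); an assumed, commonly used definition."""
    total = face_rate + nonface_rate
    if total == 0:
        raise ValueError("index is undefined when both mean rates are zero")
    return (face_rate - nonface_rate) / total

# Hypothetical mean rates in spikes/s: a face response twice the nonface response.
fsi = face_selectivity_index(20.0, 10.0)
print(fsi)
```

Under this assumed definition, the screening criterion of a face response at least twice the nonface response corresponds to an index of at least 1/3.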

Computation of the sensitivity index or d′.

A finer estimation of the face selectivity was obtained by computing the d′ for the 16 face stimuli with respect to the 64 nonface stimuli (fruits, objects, fractals, and animals). The sensitivity index or d′ provides the separation between the means of the signal and the noise distributions, compared against the SD of the signal or noise distribution. For normally distributed signal and noise with mean and SDs µS and σS, and µN and σN, respectively, d′ is defined as

$$d' = \frac{\mu_S - \mu_N}{\sqrt{\tfrac{1}{2}\left(\sigma_S^{2} + \sigma_N^{2}\right)}} \qquad [1]$$

The significance level for d′ was determined with respect to the normal distribution.
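The computation of d′ can be sketched in Python with the standard library. This sketch assumes the usual equal-weight form, d′ = (µS − µN) / sqrt((σS² + σN²) / 2), and uses sample variances; both choices, the function name, and the toy rates are assumptions made for illustration.

```python
import math
import statistics

def d_prime(signal, noise):
    """Sensitivity index: difference of means over the root of the mean of the two variances."""
    mu_s, mu_n = statistics.mean(signal), statistics.mean(noise)
    var_s, var_n = statistics.variance(signal), statistics.variance(noise)  # sample variances
    return (mu_s - mu_n) / math.sqrt(0.5 * (var_s + var_n))

# Hypothetical responses (spikes/s) to three face and three nonface stimuli.
face_rates = [18.0, 20.0, 22.0]
nonface_rates = [8.0, 10.0, 12.0]
print(d_prime(face_rates, nonface_rates))
```

In the study itself the signal would be the responses to the 16 face stimuli and the noise the responses to the 64 nonface stimuli.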

Principal component analysis.

For our PCA, we used the activity of 106 of the 179 cells examined with the second set of face stimuli, which included the various social and emotional categories (SI Appendix, Fig. S1). The rate of each of the 106 cells for each stimulus was calculated with the discharge between the latency-on and the activity-off time points (see above). Then in the matrix, each stimulus is represented by a vector of 106 cells. We first transformed the rates for each cell into z scores. To do this, the rate of each cell for each stimulus was normalized by the variance of the cell calculated for all stimuli. This adjusted for differences in firing rates across cells, while preserving the discriminability across stimuli within a cell. We then performed a standard PCA using the MATLAB Statistics Toolbox. To test whether the scores for each category were different from chance, we performed a Hotelling’s T² test with a Bonferroni correction for the number of categories. Pairwise comparisons were also tested with a Hotelling’s T² test with a Bonferroni correction.

Cross-correlation matrix and clustering.

We performed a cross-correlation of the stimulus matrix for the 106 cells and their average rate for the 80 stimuli. The firing rate was normalized in z scores as above. The correlation matrix of the stimuli was then used for clustering using k-means methods. We determined the optimal number of clusters to use, via the minimal number of clusters that explained at least 90% of the variance (best elbow method). The cross-correlation matrix was then sorted by stimulus and cluster to show the different clusters in Fig. 5A and the corresponding images in Fig. 5C.
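The construction of the stimulus correlation matrix can be sketched in Python. This is a minimal illustration with toy numbers, assuming the conventional z score (mean subtracted, divided by the sample SD across stimuli) and Pearson correlation between stimulus vectors; the function names and the 3-cell by 4-stimulus matrix are hypothetical, and the k-means step is not shown.

```python
import math

def zscore(values):
    """z-score one cell's rates across stimuli (sample SD)."""
    mean = sum(values) / len(values)
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (len(values) - 1))
    return [(v - mean) / sd for v in values]

def pearson(x, y):
    """Pearson correlation between two equal-length vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def stimulus_correlation_matrix(rates):
    """rates[cell][stimulus] -> correlations between stimulus vectors (one entry per cell)."""
    z = [zscore(cell) for cell in rates]
    n_stim = len(rates[0])
    vectors = [[z[c][s] for c in range(len(z))] for s in range(n_stim)]
    return [[pearson(vectors[i], vectors[j]) for j in range(n_stim)] for i in range(n_stim)]

# Toy data: 3 cells x 4 stimuli; stimuli 0 and 1 drive the cells similarly, as do stimuli 2 and 3.
rates = [
    [1.0, 2.0, 9.0, 8.0],
    [2.0, 1.0, 7.0, 9.0],
    [5.0, 6.0, 1.0, 2.0],
]
corr = stimulus_correlation_matrix(rates)
print(corr[0][1] > corr[0][2])
```

In the full analysis the matrix would be 80 × 80 (one row per stimulus, built from 106 cells), and its rows would then be passed to a clustering routine.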

Test of significance for the clustering by k-means.

We computed the probability of each possible number of labels belonging to the same category for a cluster, given the number of clusters used in the k-means. To do this, we performed 1,000 permutations of the labels and formed n clusters on each of these permutations; these n clusters were the same size as the actual clusters (n = 4–13 stimuli). Then, we derived the probability that one to eight labels of the same category were present in each single cluster. We performed identical computations for the number of stimuli with facial expressions (24 stimuli) to be present in the actual cluster size (n = 4–13 stimuli).
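One reading of this permutation procedure can be sketched in Python. The sketch assumes 80 stimuli in 10 categories of 8, shuffles the category labels, treats the first n shuffled labels as a chance cluster of size n, and records the largest number of same-category labels in it. The function names, the fixed seed, and the choice of the maximum count as the statistic are assumptions made for illustration.

```python
import random
from collections import Counter

def null_max_same_label(labels, cluster_size, n_perm=1000, seed=0):
    """Null distribution of the largest same-category count in a random cluster."""
    rng = random.Random(seed)
    shuffled = list(labels)
    counts = Counter()
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        top = max(Counter(shuffled[:cluster_size]).values())
        counts[top] += 1
    return counts

def p_at_least(null_counts, k):
    """Fraction of permutations in which the largest same-category count was >= k."""
    total = sum(null_counts.values())
    return sum(c for value, c in null_counts.items() if value >= k) / total

# 10 categories x 8 exemplars = 80 labelled stimuli.
labels = [category for category in range(10) for _ in range(8)]
null = null_max_same_label(labels, cluster_size=8)
print(p_at_least(null, 4))
```

An observed cluster would then be called significant for a category when the chance probability of seeing at least its observed same-category count falls below the chosen threshold.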

Multisensory integration.

We defined a significant multisensory integration to be when there was a significant difference (unpaired t test) between the bimodal response and the response evoked by the unimodal face stimulus. We calculated an integration index as the response to the bimodal stimulus minus the response to the face stimulus, divided by their sum. We tested whether the distribution of the integration indices was different from chance by performing the calculation of the index on data that were permuted 500 times. Then we tested the difference between actual and permuted data with a Wilcoxon test.
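The index and its chance distribution can be sketched in Python. This sketch follows the definition given in Results (bimodal minus visual, divided by their sum) and assumes that permutation means shuffling trials between the bimodal and visual conditions; that shuffling scheme, the function names, and the toy rates are assumptions, and the final Wilcoxon comparison is not shown.

```python
import random
from statistics import mean

def bimodality_index(bimodal_rate, visual_rate):
    """(bimodal - visual) / (bimodal + visual); positive values indicate enhancement."""
    total = bimodal_rate + visual_rate
    if total == 0:
        raise ValueError("index is undefined when both rates are zero")
    return (bimodal_rate - visual_rate) / total

def permuted_indices(bimodal_trials, visual_trials, n_perm=500, seed=0):
    """Indices recomputed after shuffling trials between the two conditions."""
    rng = random.Random(seed)
    pooled = list(bimodal_trials) + list(visual_trials)
    n_bimodal = len(bimodal_trials)
    indices = []
    for _ in range(n_perm):
        rng.shuffle(pooled)
        indices.append(bimodality_index(mean(pooled[:n_bimodal]), mean(pooled[n_bimodal:])))
    return indices

# Hypothetical single-trial rates (spikes/s) for one cell.
bimodal_trials = [12.0, 14.0, 13.0, 15.0]
visual_trials = [10.0, 11.0, 9.0, 10.0]
observed = bimodality_index(mean(bimodal_trials), mean(visual_trials))
chance = permuted_indices(bimodal_trials, visual_trials)
print(observed, len(chance))
```

With this sign convention, a rightward shift of the index distribution corresponds to a larger response when the sound accompanies the image.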

Supplementary Material

Acknowledgments

We thank Lawrence Parsons for editing with the English language, Gilles Reymond for designing the infrared video analysis software, and Stéphane Bugeon for help on editing the stimuli dataset. This work was supported by the LABEX (Laboratory of Excellence) CORTEX (ANR-11—LABEX-0042) of University de Lyon within the program Investissement d’Avenir and by grants from the Agence Nationale de la Recherche (BLAN-SVSE4-023-01 and BS4-0010-01 to J.-R.D.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1806165115/-/DCSupplemental.

References

- 1.Freiwald W, Duchaine B, Yovel G. Face processing systems: From neurons to real-world social perception. Annu Rev Neurosci. 2016;39:325–346. doi: 10.1146/annurev-neuro-070815-013934. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Ku SP, Tolias AS, Logothetis NK, Goense J. fMRI of the face-processing network in the ventral temporal lobe of awake and anesthetized macaques. Neuron. 2011;70:352–362. doi: 10.1016/j.neuron.2011.02.048. [DOI] [PubMed] [Google Scholar]

- 3.Pinsk MA, DeSimone K, Moore T, Gross CG, Kastner S. Representations of faces and body parts in macaque temporal cortex: A functional MRI study. Proc Natl Acad Sci USA. 2005;102:6996–7001. doi: 10.1073/pnas.0502605102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Tsao DY, Freiwald WA, Knutsen TA, Mandeville JB, Tootell RB. Faces and objects in macaque cerebral cortex. Nat Neurosci. 2003;6:989–995. doi: 10.1038/nn1111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Tsao DY, Moeller S, Freiwald WA. Comparing face patch systems in macaques and humans. Proc Natl Acad Sci USA. 2008;105:19514–19519. doi: 10.1073/pnas.0809662105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Baylis GC, Rolls ET, Leonard CM. Selectivity between faces in the responses of a population of neurons in the cortex in the superior temporal sulcus of the monkey. Brain Res. 1985;342:91–102. doi: 10.1016/0006-8993(85)91356-3. [DOI] [PubMed] [Google Scholar]

- 7.Bell AH, et al. Relationship between functional magnetic resonance imaging-identified regions and neuronal category selectivity. J Neurosci. 2011;31:12229–12240. doi: 10.1523/JNEUROSCI.5865-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Hasselmo ME, Rolls ET, Baylis GC. The role of expression and identity in the face-selective responses of neurons in the temporal visual cortex of the monkey. Behav Brain Res. 1989;32:203–218. doi: 10.1016/s0166-4328(89)80054-3. [DOI] [PubMed] [Google Scholar]

- 9.Hung CC, et al. Functional mapping of face-selective regions in the extrastriate visual cortex of the marmoset. J Neurosci. 2015;35:1160–1172. doi: 10.1523/JNEUROSCI.2659-14.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Tsao DY, Freiwald WA, Tootell RB, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Freiwald WA, Tsao DY. Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science. 2010;330:845–851. doi: 10.1126/science.1194908. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Morin EL, Hadj-Bouziane F, Stokes M, Ungerleider LG, Bell AH. Hierarchical encoding of social cues in primate inferior temporal cortex. Cereb Cortex. 2015;25:3036–3045. doi: 10.1093/cercor/bhu099. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Baseler HA, Harris RJ, Young AW, Andrews TJ. Neural responses to expression and gaze in the posterior superior temporal sulcus interact with facial identity. Cereb Cortex. 2014;24:737–744. doi: 10.1093/cercor/bhs360. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Hadj-Bouziane F, Bell AH, Knusten TA, Ungerleider LG, Tootell RB. Perception of emotional expressions is independent of face selectivity in monkey inferior temporal cortex. Proc Natl Acad Sci USA. 2008;105:5591–5596. doi: 10.1073/pnas.0800489105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.O Scalaidhe SP, Wilson FA, Goldman-Rakic PS. Areal segregation of face-processing neurons in prefrontal cortex. Science. 1997;278:1135–1138. doi: 10.1126/science.278.5340.1135. [DOI] [PubMed] [Google Scholar]

- 16.Thorpe SJ, Rolls ET, Maddison S. The orbitofrontal cortex: Neuronal activity in the behaving monkey. Exp Brain Res. 1983;49:93–115. doi: 10.1007/BF00235545. [DOI] [PubMed] [Google Scholar]

- 17.Diehl MM, Romanski LM. Responses of prefrontal multisensory neurons to mismatching faces and vocalizations. J Neurosci. 2014;34:11233–11243. doi: 10.1523/JNEUROSCI.5168-13.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Romanski LM, Diehl MM. Neurons responsive to face-view in the primate ventrolateral prefrontal cortex. Neuroscience. 2011;189:223–235. doi: 10.1016/j.neuroscience.2011.05.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Rolls ET, Critchley HD, Browning AS, Inoue K. Face-selective and auditory neurons in the primate orbitofrontal cortex. Exp Brain Res. 2006;170:74–87. doi: 10.1007/s00221-005-0191-y. [DOI] [PubMed] [Google Scholar]

- 20.Tsao DY, Schweers N, Moeller S, Freiwald WA. Patches of face-selective cortex in the macaque frontal lobe. Nat Neurosci. 2008;11:877–879. doi: 10.1038/nn.2158. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Munuera J, Rigotti M, Salzman CD. Shared neural coding for social hierarchy and reward value in primate amygdala. Nat Neurosci. 2018;21:415–423. doi: 10.1038/s41593-018-0082-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Rolls ET. The orbitofrontal cortex and emotion in health and disease, including depression. Neuropsychologia. September 24, 2017 doi: 10.1016/j.neuropsychologia.2017.09.021. [DOI] [PubMed] [Google Scholar]

- 24. Saez RA, Saez A, Paton JJ, Lau B, Salzman CD. Distinct roles for the amygdala and orbitofrontal cortex in representing the relative amount of expected reward. Neuron. 2017;95:70–77.e3. doi: 10.1016/j.neuron.2017.06.012.

- 25. Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- 26. Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur J Neurosci. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- 27. Azzi JC, Sirigu A, Duhamel JR. Modulation of value representation by social context in the primate orbitofrontal cortex. Proc Natl Acad Sci USA. 2012;109:2126–2131. doi: 10.1073/pnas.1111715109.

- 28. Chang SW, Gariépy JF, Platt ML. Neuronal reference frames for social decisions in primate frontal cortex. Nat Neurosci. 2013;16:243–250. doi: 10.1038/nn.3287.

- 29. Watson KK, Platt ML. Social signals in primate orbitofrontal cortex. Curr Biol. 2012;22:2268–2273. doi: 10.1016/j.cub.2012.10.016.

- 30. Cicerone KD, Tanenbaum LN. Disturbance of social cognition after traumatic orbitofrontal brain injury. Arch Clin Neuropsychol. 1997;12:173–188.

- 31. Ligneul R, Girard R, Dreher JC. Social brains and divides: The interplay between social dominance orientation and the neural sensitivity to hierarchical ranks. Sci Rep. 2017;7:45920. doi: 10.1038/srep45920.

- 32. Partan SR. Single and multichannel signal composition: Facial expressions and vocalizations of rhesus macaques (Macaca mulatta). Behaviour. 2002;139:993–1027.

- 33. Maestripieri D. Gestural communication in macaques: Usage and meaning of nonvocal signals. Evol Commun. 1997;1:193–222.

- 34. Maestripieri D, Wallen K. Affiliative and submissive communication in rhesus macaques. Primates. 1997;38:127–138.

- 35. Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG. Neural responses to facial expression and face identity in the monkey amygdala. J Neurophysiol. 2007;97:1671–1683. doi: 10.1152/jn.00714.2006.

- 36. Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–873. doi: 10.1038/23703.

- 37. Ferrari PF, Paukner A, Ionica C, Suomi SJ. Reciprocal face-to-face communication between rhesus macaque mothers and their newborn infants. Curr Biol. 2009;19:1768–1772. doi: 10.1016/j.cub.2009.08.055.

- 38. McMahon DB, Jones AP, Bondar IV, Leopold DA. Face-selective neurons maintain consistent visual responses across months. Proc Natl Acad Sci USA. 2014;111:8251–8256. doi: 10.1073/pnas.1318331111.

- 39. Damasio AR, Tranel D, Damasio H. Individuals with sociopathic behavior caused by frontal damage fail to respond autonomically to social stimuli. Behav Brain Res. 1990;41:81–94. doi: 10.1016/0166-4328(90)90144-4.

- 40. Heberlein AS, Padon AA, Gillihan SJ, Farah MJ, Fellows LK. Ventromedial frontal lobe plays a critical role in facial emotion recognition. J Cogn Neurosci. 2008;20:721–733. doi: 10.1162/jocn.2008.20049.

- 41. Willis ML, Palermo R, McGrillen K, Miller L. The nature of facial expression recognition deficits following orbitofrontal cortex damage. Neuropsychology. 2014;28:613–623. doi: 10.1037/neu0000059.

- 42. Eslinger PJ, Damasio AR. Severe disturbance of higher cognition after bilateral frontal lobe ablation: Patient EVR. Neurology. 1985;35:1731–1741. doi: 10.1212/wnl.35.12.1731.

- 43. Schneider B, Koenigs M. Human lesion studies of ventromedial prefrontal cortex. Neuropsychologia. 2017;107:84–93. doi: 10.1016/j.neuropsychologia.2017.09.035.

- 44. Hornak J, et al. Changes in emotion after circumscribed surgical lesions of the orbitofrontal and cingulate cortices. Brain. 2003;126:1691–1712. doi: 10.1093/brain/awg168.

- 45. Hornak J, Rolls ET, Wade D. Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia. 1996;34:247–261. doi: 10.1016/0028-3932(95)00106-9.

- 46. Blair RJ, Morris JS, Frith CD, Perrett DI, Dolan RJ. Dissociable neural responses to facial expressions of sadness and anger. Brain. 1999;122:883–893. doi: 10.1093/brain/122.5.883.

- 47. Nakamura K, et al. Activation of the right inferior frontal cortex during assessment of facial emotion. J Neurophysiol. 1999;82:1610–1614. doi: 10.1152/jn.1999.82.3.1610.

- 48. Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: An event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- 49. Adolphs R. Neural systems for recognizing emotion. Curr Opin Neurobiol. 2002;12:169–177. doi: 10.1016/s0959-4388(02)00301-x.

- 50. Kringelbach ML, et al. A specific and rapid neural signature for parental instinct. PLoS One. 2008;3:e1664. doi: 10.1371/journal.pone.0001664.

- 51. Parsons CE, Stark EA, Young KS, Stein A, Kringelbach ML. Understanding the human parental brain: A critical role of the orbitofrontal cortex. Soc Neurosci. 2013;8:525–543. doi: 10.1080/17470919.2013.842610.

- 52. Aharon I, et al. Beautiful faces have variable reward value: fMRI and behavioral evidence. Neuron. 2001;32:537–551. doi: 10.1016/s0896-6273(01)00491-3.

- 53. Ishai A. Sex, beauty and the orbitofrontal cortex. Int J Psychophysiol. 2007;63:181–185. doi: 10.1016/j.ijpsycho.2006.03.010.

- 54. Winston JS, O’Doherty J, Kilner JM, Perrett DI, Dolan RJ. Brain systems for assessing facial attractiveness. Neuropsychologia. 2007;45:195–206. doi: 10.1016/j.neuropsychologia.2006.05.009.

- 55. Little A. Manipulation of infant-like traits affects perceived cuteness of infant, adult and cat faces. Ethology. 2012;118:775–782.

- 56. DeBruine LM, Hahn AC, Jones BC. Perceiving infant faces. Curr Opin Psychol. 2016;7:87–91.

- 57. Hodsoll J, Quinn KA, Hodsoll S. Attentional prioritization of infant faces is limited to own-race infants. PLoS One. 2010;5:e12509. doi: 10.1371/journal.pone.0012509.

- 58. Noonan MP, et al. A neural circuit covarying with social hierarchy in macaques. PLoS Biol. 2014;12:e1001940. doi: 10.1371/journal.pbio.1001940.

- 59. Oosterhof NN, Todorov A. The functional basis of face evaluation. Proc Natl Acad Sci USA. 2008;105:11087–11092. doi: 10.1073/pnas.0805664105.

- 60. Carmichael ST, Price JL. Limbic connections of the orbital and medial prefrontal cortex in macaque monkeys. J Comp Neurol. 1995;363:615–641. doi: 10.1002/cne.903630408.

- 61. Dehaqani MR, et al. Temporal dynamics of visual category representation in the macaque inferior temporal cortex. J Neurophysiol. 2016;116:587–601. doi: 10.1152/jn.00018.2016.

- 62. Mruczek RE, Sheinberg DL. Stimulus selectivity and response latency in putative inhibitory and excitatory neurons of the primate inferior temporal cortex. J Neurophysiol. 2012;108:2725–2736. doi: 10.1152/jn.00618.2012.

- 63. Naya Y, Yoshida M, Miyashita Y. Backward spreading of memory-retrieval signal in the primate temporal cortex. Science. 2001;291:661–664. doi: 10.1126/science.291.5504.661.

- 64. Sliwa J, Planté A, Duhamel JR, Wirth S. Independent neuronal representation of facial and vocal identity in the monkey hippocampus and inferotemporal cortex. Cereb Cortex. 2016;26:950–966. doi: 10.1093/cercor/bhu257.

- 65. Romanski LM. Integration of faces and vocalizations in ventral prefrontal cortex: Implications for the evolution of audiovisual speech. Proc Natl Acad Sci USA. 2012;109:10717–10724. doi: 10.1073/pnas.1204335109.

- 66. Romanski LM, Hwang J. Timing of audiovisual inputs to the prefrontal cortex and multisensory integration. Neuroscience. 2012;214:36–48. doi: 10.1016/j.neuroscience.2012.03.025.

- 67. Sugihara T, Diltz MD, Averbeck BB, Romanski LM. Integration of auditory and visual communication information in the primate ventrolateral prefrontal cortex. J Neurosci. 2006;26:11138–11147. doi: 10.1523/JNEUROSCI.3550-06.2006.

- 68. Ungerleider LG, Gaffan D, Pelak VS. Projections from inferior temporal cortex to prefrontal cortex via the uncinate fascicle in rhesus monkeys. Exp Brain Res. 1989;76:473–484. doi: 10.1007/BF00248903.

- 69. Saleem KS, Miller B, Price JL. Subdivisions and connectional networks of the lateral prefrontal cortex in the macaque monkey. J Comp Neurol. 2014;522:1641–1690. doi: 10.1002/cne.23498.

- 70. Hoffman KL, Gothard KM, Schmid MC, Logothetis NK. Facial-expression and gaze-selective responses in the monkey amygdala. Curr Biol. 2007;17:766–772. doi: 10.1016/j.cub.2007.03.040.

- 71. Aggleton JP, Burton MJ, Passingham RE. Cortical and subcortical afferents to the amygdala of the rhesus monkey (Macaca mulatta). Brain Res. 1980;190:347–368. doi: 10.1016/0006-8993(80)90279-6.

- 72. Amaral DG, Price JL. Amygdalo-cortical projections in the monkey (Macaca fascicularis). J Comp Neurol. 1984;230:465–496. doi: 10.1002/cne.902300402.

- 73. Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- 74. Pessoa L, Adolphs R. Emotion processing and the amygdala: From a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat Rev Neurosci. 2010;11:773–783. doi: 10.1038/nrn2920.

- 75. Hays AV, Richmond BJ, Optican LM. A UNIX-based multiple process system for real-time data acquisition and control. WESCON Conference Proceedings. Vol 2. El Segundo, CA: Electron Conventions; 1982. pp. 1–10.
