ABSTRACT

In conducting its practice analysis, the National Commission on Certification of Physician Assistants incorporated new approaches in 2015. Twelve groups of PAs identified knowledge, tasks, and skills required for practice not only in primary care, but also in 11 practice focus areas (specialties). In addition, a list of diseases and disorders likely to be encountered was identified for each specialty. A representative sample of 15,771 certified PAs completed an online algorithm-driven survey, and data were analyzed to determine how practice is the same for all PAs and how it differs depending on the practice focus. Larger differences between PAs in different specialties were seen in the frequency ratings of diseases and disorders encountered than in the ratings of task, knowledge, and skill statements. Distinctions between PAs in primary care and those in other specialties were more pronounced the more the specialty diverged from primary care.

Keywords: physician assistant, practice focus, disorders, NCCPA, certification, blueprint

A practice analysis identifies and documents the major tasks performed in a particular profession, and often includes the knowledge and skills required to conduct those tasks.1,2 This type of analysis is used to validate certification examinations and provide a basis for defending the appropriateness of examination content.3 Practice analysis of the physician assistant (PA) profession helps the National Commission on Certification of Physician Assistants (NCCPA) ensure that examination content is realistic, relevant, and reflects current practice. In complying with best practices for the development and validation of high-stakes certification examinations, most healthcare professions—for example, physical therapy or occupational therapy—base their examination content on data gathered through a practice analysis.4-7 The NCCPA is responsible not only to the PAs it certifies, but to other stakeholders as well for the preparation of psychometrically sound and defensible certification examinations. In turn, certifications are the quality disclosures healthcare professionals put forth to the public to indicate that they have been examined in accordance with contemporary healthcare practice standards of care.8 Those relying on the validity of NCCPA certification include patients, employers (such as physicians, hospitals, or healthcare systems), those responsible for credentialing professionals (including state licensing boards), external accreditors, and insurers.

Contemporary professional standards for certification testing require that the examination content reflect the actual practice of the profession in question.3 Because the US healthcare industry is rapidly changing, practice analysis results help to focus examination content and to meet standards established by external accreditation organizations.9

The NCCPA was created in the early 1970s to provide a certification program that reflects standards for clinical knowledge and skills required for PAs. The first certification examination was administered in 1975.10 Since then, a PA practice analysis has been undertaken about once every 5 years. A major goal of these studies centers on documentation development and content validation to link NCCPA's examination specifications or blueprints to the practice of PAs in the healthcare environment. Examination specifications enable the construction of consistent test forms. The specifications also serve other purposes, such as providing guidance for test question authors in reviewing and classifying examination questions to comply with current medical standards and contemporary medical practice. The blueprint serves as the framework for building examination forms, informing score reports, and providing part of the documentation required to support the validity of examination scores. Furthermore, the compilation of practice analyses provides an archival record of the evolution and trends of the PA profession.11,12

NCCPA practice analyses before 2015 focused on general tasks, knowledge, and skills that were performed by all PAs. One list of tasks, knowledge, and skills was used to evaluate the entire PA profession. By contrast, in the 2015 practice analysis, 12 different PA groups developed a list of tasks, knowledge, and skills in primary care, as well as listings of tasks, knowledge, and skills in 11 specialty areas. Lists also were developed of diseases and disorders encountered in primary care and in the 11 specialty areas. These 12 specialties represented at least 88% of all certified PAs. Specialty is self-reported.

PAs were asked how often they encountered the diseases and disorders (frequency) and the extent to which inappropriate diagnosis or management of that disease or disorder was likely to result in harm to the patient (criticality). This was the first PA practice analysis that collected data on specific diseases and disorders encountered in practice, and the first time the ratings were analyzed by considering the specialty practice of the PA and then comparing those ratings with the ratings of PAs practicing in primary care. Results also were analyzed separately for PAs certified for 6 or fewer years compared with those certified for more than 6 years.

Data from 12 PA focus groups were used to develop an online survey, which was made available to all certified PAs. All PA respondents reviewed and evaluated the primary care elements, and then were routed to specific specialty elements to evaluate if they indicated they practiced in a specialty area for which additional survey components were available. Results from this national survey were reviewed by additional PAs who participated in revising the Physician Assistant National Certifying Examination (PANCE) content outline or blueprint.

METHODS

In 2015, 72 clinically active and currently certified PAs were convened as subject matter experts at various meetings to delineate the major tasks, knowledge, and skills required for PA practice in primary/general medical care as well as 11 other medical and surgical specialties. Using NCCPA data, currently certified PAs (PA-Cs) were recruited to construct groups balanced by medical area of practice or specialization, sex, geographic region, race/ethnicity, and years of certification. Electronic inquiries were sent to a balanced sample of certified PAs who listed their practice focus in one of the 12 areas of interest. The initial inquiry was to determine interest in and availability for specific meeting dates. Depending on initial responses, additional inquiries were sent until a representative group of certified PAs was constructed. These PA-Cs were invited to meet as a committee at the NCCPA office in Johns Creek, Ga. The expertise sought from subject matter experts who participated in these meetings was their clinical experience as PAs. The specialties chosen included the seven practice areas for which NCCPA offers Certificates of Added Qualifications, as well as the four next most populous specialties (dermatology, cardiology, neurosurgery, and general surgery). The intent was to explore how PA practice is similar and different across a broad spectrum of medical and surgical specialties.

A group of 17 PAs was convened in January 2015 to delineate the major knowledge and skills required for PA practice in eight task areas of primary/general medical care as well as a listing of diseases and disorders likely to be encountered. Demographics used in selecting the participants included age, sex, geographic region, years of certification, and practice discipline. In February 2015, 11 specialty-focused committees consisting of five PAs each reviewed the general lists developed by the first committee, and then developed listings of additional knowledge and skills used in each medical and surgical specialty represented. The committees also developed a list of additional disorders commonly seen in these specialty areas. After the two lists were established, the participants were asked to discuss how PA practice has changed over the past decade and where practice remained similar since previous practice analyses.

From this series of steps, a survey was created, evaluated, piloted, and refined for the 2015 NCCPA practice analysis study. The pilot survey was administered to 90 PAs, some of whom were current or previous NCCPA test committee members, and their comments were used to improve the survey. The instrument was delivered online using SurveyGizmo software and formatted for multistage delivery, with participants being routed to different sections of the survey based on their self-identified specialty. All participants were promised confidentiality with regard to their involvement and their responses. The study protocol was reviewed and approved by an independent institutional review board for compliance with organizational guidelines for research studies involving human subjects.

Similar to the then-current PANCE content outline, the 2015 practice analysis was based on two dimensions: diseases and disorders organized by organ systems and knowledge and skills related to task areas. Drawing on previous studies, the eight major task areas included 67 skill statements and 75 knowledge statements, as well as 14 organ systems. New to the 2015 practice analysis was the inclusion of 142 diseases or disorders linked to those organ systems.

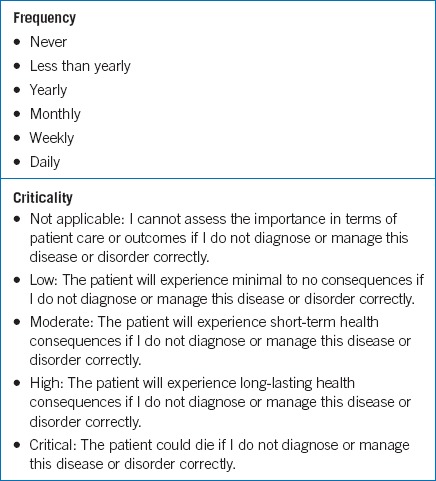

All respondents were asked to rate each knowledge, skill, and disease or disorder item on two independent scales, considering their current practice in their principal clinical position. The first was the Frequency Scale, which rated how often the diseases and disorders are encountered in their practice. The second was the Criticality Scale, a rating of the criticality of applying the knowledge or skills related to these diseases and disorders in terms of patient safety. The Criticality Scale was defined in terms of the potential negative effect on patient care or outcomes if the diseases or disorders were not diagnosed or managed appropriately. The two scales are summarized in Table 1.

TABLE 1.

Frequency and criticality scales

In addition to primary care/general medicine, survey respondents who worked in one of 11 identified nonprimary care specialties also were routed to an additional survey component to rate tasks, knowledge, and skills, as well as diseases and disorders specifically for that specialty. If the respondent did not select one of these specialty practice areas, the survey concluded for that PA. The other specialties were cardiology, cardiovascular/thoracic surgery, dermatology, emergency medicine, general surgery, hospital medicine, nephrology, neurosurgery, orthopedic surgery, pediatrics, and psychiatry.
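The routing rule described above can be sketched in a few lines. This is only an illustration of the branching logic, not the survey platform's actual configuration; the function name `route_sections` and the section labels are hypothetical.

```python
# Hypothetical sketch of the survey routing; names are illustrative only.
SPECIALTY_SECTIONS = frozenset({
    "cardiology",
    "cardiovascular/thoracic surgery",
    "dermatology",
    "emergency medicine",
    "general surgery",
    "hospital medicine",
    "nephrology",
    "neurosurgery",
    "orthopedic surgery",
    "pediatrics",
    "psychiatry",
})


def route_sections(self_identified_specialty):
    """Return the ordered survey sections a respondent would be shown."""
    # Every respondent rates the primary care/general medicine elements first.
    sections = ["primary care/general medicine"]
    specialty = self_identified_specialty.strip().lower()
    # Respondents in one of the 11 listed specialties get one extra component;
    # for everyone else the survey ends after the first section.
    if specialty in SPECIALTY_SECTIONS:
        sections.append(specialty)
    return sections
```

Under these assumptions, a respondent who selects dermatology sees two sections, while a respondent in an unlisted specialty sees only the primary care section.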

The survey was available for 2 months starting in April 2015. All certified PAs were invited to participate, and each invited PA was issued a unique link so that participation could be tracked. To encourage participation, PAs were sent multiple email reminders, and those who completed the survey were provided 1.5 hours of CME credit and entered into a drawing for five $1,000 gift cards.

The review of data was intended to draw on a variety of techniques to identify meaningful relationships in the results. Demographic characteristics of the respondent sample were compared with characteristics of the certified PA population as identified in NCCPA's database for all PAs, as well as data from NCCPA's PA Professional Profile, an online tool that certified PAs may access and update whenever they log into their secure, personal certification portal.13 The profile includes fields to gather practice-specific information and other demographic data. In 2015, 93.5% of certified PAs had completed the profile.

Analyses of the two primary scales used in the practice analysis survey included frequencies, means, and standard deviations of respondent ratings of the frequency and criticality of skill and knowledge statements organized by task area, as well as ratings of frequency and criticality for various diseases and disorders, organized by organ system, that might be encountered in practice. Each specialty cohort was compared with the primary care cohort, consisting of PAs practicing in family medicine, general internal medicine, and general pediatrics. A second comparison was made between newly certified PAs (6 or fewer years in practice) and those certified more than 6 years, contrasting PAs who had only taken the PANCE with those who had also taken the Physician Assistant National Recertifying Examination (PANRE) as part of their 6-year certification maintenance cycle. Because the primary purpose of this study included linking survey data to practice and using the results to inform discussions on test content outline revision, more sophisticated data analysis was reserved for separate analyses of these data. Descriptive statistics were used and no inferential statistics were incorporated.
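As a minimal sketch of the descriptive statistics named above (frequencies, means, and standard deviations), the following uses only the Python standard library. The ratings are made up for illustration, the integer scale is an assumption (the actual scale points are those in Table 1), and the use of the sample standard deviation is also an assumption, because the text does not say which form was reported.

```python
from collections import Counter
from statistics import mean, stdev


def describe(ratings):
    """Summarize the ratings for one statement within one cohort."""
    return {
        "n": len(ratings),
        # How many respondents chose each scale point
        "frequencies": dict(sorted(Counter(ratings).items())),
        "mean": mean(ratings),
        # Sample standard deviation (assumption; see lead-in)
        "sd": stdev(ratings),
    }


# Made-up ratings on an assumed integer scale, for illustration only
example_ratings = [3, 4, 2, 4, 3, 1, 4]
summary = describe(example_ratings)
```

The same function could be applied separately to the frequency and criticality ratings of each statement and each cohort.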

RESULTS

In midyear 2015, the survey invitation was distributed electronically to 101,252 certified PAs. Of those invitations, 7,617 emails were returned because of inactive or incorrect email addresses or because they were identified as spam, leaving 93,365 potential respondents with a valid email address. Of these, 15,771 PAs responded to the 90-minute survey about the content of their medical practice, years of experience, location, and specialty (response rate: 16.9%) and self-selected into one of 11 specialty domains or general medicine. Characteristics of the respondents mirrored those found in the PA Professional Profile and those of the universe of clinically active PAs. Because the results were considered representative of the profession of certified PAs, weighting of the sample was not required for matching purposes.
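The reported response rate follows directly from the respondent count and the number of PAs with a valid email address. The short calculation below simply reproduces that arithmetic; the variable names are illustrative.

```python
# Figures as reported in the text
valid_email = 93_365   # potential respondents with a valid email address
respondents = 15_771   # PAs who responded to the survey

response_rate = respondents / valid_email
print(f"Response rate: {response_rate:.1%}")  # prints "Response rate: 16.9%"
```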

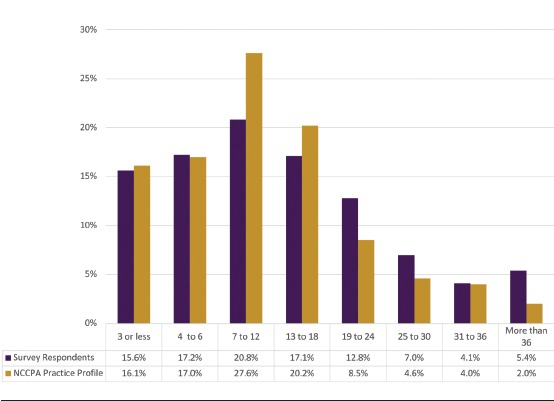

The majority (63%) of the 15,771 respondent PAs had been certified for more than 6 years, consistent with NCCPA profile data for 86,363 PAs, of whom 66% had been certified more than 6 years (Figure 1). The length of time PA respondents were certified compared with the NCCPA data varied 5 percentage points or less for each category (for example, certified 3 or fewer years 21.1% and 16.1%, respectively). The 1:6 ratio of first-time test takers to those with two or more certification cycles also reflected the certified PA population.

FIGURE 1.

Survey respondents compared with all certified PAs by years of practice
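The representativeness check described above amounts to comparing two percentage distributions category by category. A minimal sketch follows, using only the two pairs of percentages quoted in the text; the remaining categories appear in Figure 1 and are not reproduced here. The function name `point_gaps` is hypothetical.

```python
# (survey respondents %, NCCPA profile %) as quoted in the text
reported = {
    "certified 3 or fewer years": (21.1, 16.1),
    "certified more than 6 years": (63.0, 66.0),
}


def point_gaps(pairs):
    """Absolute gap, in percentage points, between respondents and profile."""
    # Rounded to one decimal place to guard against floating-point noise
    return {
        label: round(abs(resp - prof), 1)
        for label, (resp, prof) in pairs.items()
    }


gaps = point_gaps(reported)
within_five = all(gap <= 5.0 for gap in gaps.values())
```

For these two categories the gaps are 5.0 and 3.0 percentage points, so `within_five` is `True`.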

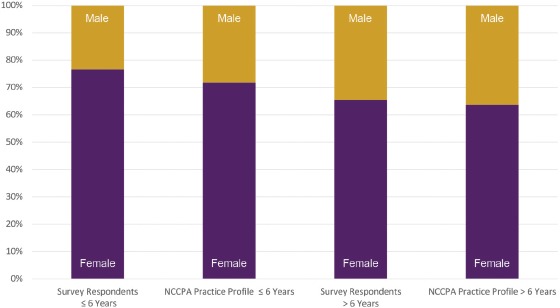

Differences between survey respondents and the NCCPA profile were examined by sex and by years of practice (Figure 2). Nearly 77% of the survey respondents practicing 6 or fewer years were female, compared with nearly 72% in the NCCPA profile. Among PAs practicing for more than 6 years, women accounted for nearly 66% of the survey respondents and nearly 64% of the NCCPA profile.

FIGURE 2.

Sex differences in survey respondents compared with all certified PAs by years of practice

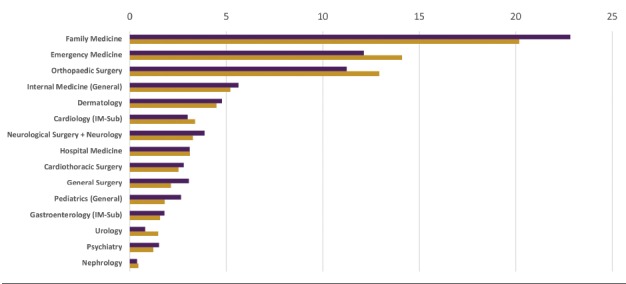

The specialty areas of PAs responding to the survey were compared with those in the profile (Figure 3). The top 15 specialties were cardiology, cardiothoracic surgery, dermatology, emergency medicine, family medicine, gastroenterology, general surgery, hospital medicine, internal medicine, nephrology, neurological surgery and neurology, orthopedic surgery, general pediatrics, psychiatry, and urology. For each of the top 15 specialties, the share of survey respondents (n = 13,365) was within 2 percentage points of the share in the corresponding NCCPA profile cohort (n = 58,801).

FIGURE 3.

Survey respondents compared with all PAs by specialty

In general, the ratings of tasks, knowledge, and skills of newer PAs (6 or fewer years in practice) were similar to ratings of PAs in practice for more than 6 years in terms of criticality and frequency. This was especially true for tasks, knowledge, and skills in the clinical areas. A few differences appeared in professional skills. For example, recently certified PAs were more likely to spend time accessing and applying medical informatics and recognizing professional and clinical limitations, while PAs certified for more than 6 years spent more time negotiating contracts, conducting clinical research, precepting students, and advocating for the profession.

Comparing PAs in specialty practice with PAs in general primary care practice by examining differences in the frequency and criticality ratings of task, knowledge, and skill statements yielded small differences in clinical practice areas and larger differences in only a few professional practice areas. However, more dramatic differences were seen when examining their ratings of specific diseases and disorders.

DISEASES AND DISORDERS LIST

Combining ratings from family medicine, general internal medicine, and general pediatrics created a primary care cohort. Means were calculated for PA respondent ratings of each task, knowledge, and skill statement for the various cohorts, and corresponding values were subtracted, one from the other (for example, the mean frequency rating for knowledge 1 by PAs in dermatology was subtracted from the mean frequency rating for knowledge 1 by all PAs in the primary care cohort to form a comparison). Examining the differences between the mean ratings of two groups provided an overview of how similarly or differently the groups rated the same knowledge or skill as well as diseases and disorders.

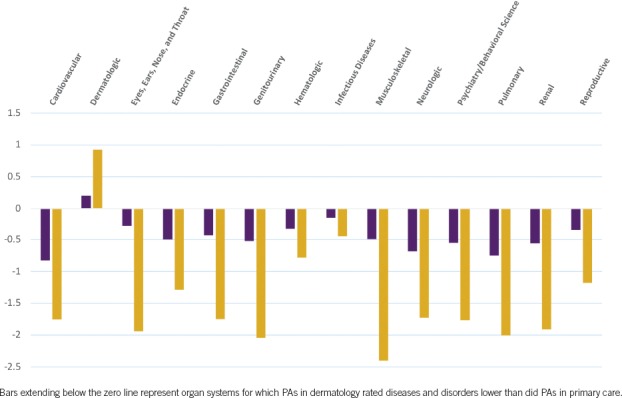

Differences between ratings of PA cohorts are easier to visualize when reviewing a chart that displays differences between the two groups across all the ratings grouped by category. As an example, Figure 4 shows the average difference between means for criticality and frequency for diseases and disorders grouped by organ system. Bars extending below the zero line depict organ systems for which the dermatology PAs rated diseases and disorders, on average, lower than ratings of the same diseases and disorders by primary care PAs.

FIGURE 4.

Mean differences for disease and disorder ratings (grouped by organ systems) of criticality and frequency by PAs in dermatology and those in primary care
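The comparison summarized in Figure 4 can be sketched as a two-step calculation: a mean rating per item for each cohort, then the average of the item-level differences within each organ system. The code below is an illustrative sketch on made-up ratings, not the study's analysis code. The item names, the function names, and the sign convention (specialty minus primary care, so that negative values correspond to bars below the zero line) are all assumptions.

```python
from collections import defaultdict
from statistics import mean


def mean_by_item(rows):
    """rows: iterable of (item, rating) pairs -> {item: mean rating}."""
    grouped = defaultdict(list)
    for item, rating in rows:
        grouped[item].append(rating)
    return {item: mean(values) for item, values in grouped.items()}


def system_differences(specialty_rows, primary_rows, organ_system_of):
    """Average (specialty - primary care) mean difference per organ system."""
    specialty_means = mean_by_item(specialty_rows)
    primary_means = mean_by_item(primary_rows)
    per_system = defaultdict(list)
    # Only items rated by both cohorts can be compared
    for item in specialty_means.keys() & primary_means.keys():
        difference = specialty_means[item] - primary_means[item]
        per_system[organ_system_of[item]].append(difference)
    return {system: mean(diffs) for system, diffs in per_system.items()}


# Made-up frequency ratings for hypothetical items
organ_system_of = {
    "skin disorder A": "dermatologic",
    "skin disorder B": "dermatologic",
    "cardiac disorder A": "cardiovascular",
}
dermatology = [("skin disorder A", 4), ("skin disorder A", 4),
               ("skin disorder B", 3), ("skin disorder B", 4),
               ("cardiac disorder A", 0), ("cardiac disorder A", 1)]
primary_care = [("skin disorder A", 2), ("skin disorder A", 3),
                ("skin disorder B", 1), ("skin disorder B", 2),
                ("cardiac disorder A", 3), ("cardiac disorder A", 3)]

differences = system_differences(dermatology, primary_care, organ_system_of)
```

With these made-up numbers, the dermatologic system has a positive average difference (1.75) and the cardiovascular system a negative one (-2.5), which is the pattern a chart like Figure 4 would display as bars above and below the zero line.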

Differences between frequency and criticality ratings of diseases and disorders by PAs in the primary care cohort versus those in nonprimary care specialties tended to be larger if the nonprimary care specialty was more dissimilar from primary care. As the example in Figure 4 shows, frequency and criticality ratings of diseases and disorders in dermatologic systems were higher by dermatology PAs than they were by the primary care cohort. By contrast, ratings of diseases and disorders by PAs in emergency medicine were closer to those same ratings from the primary care cohort. PA cohorts that tended to be more similar to the primary care cohort included hospital medicine and emergency medicine; cohorts that were more dissimilar included cardiovascular/thoracic surgery, dermatology, orthopedic surgery, and psychiatry.

DISCUSSION

The 2015 practice analysis provided specific data on a wide range of diseases and disorders that PAs are treating and diverse roles that PAs are filling in US medicine. These data were used to inform the PANCE blueprint and PANRE blueprint revisions. The subject matter experts, working with psychometricians, recommended modifications in test content outlines based on a review of the practice analysis data. For example, they recommended that professional practice content be increased and that the renal/genitourinary section be separated into two categories on the PANCE content outline. In addition, data gathered from this practice analysis were used in conjunction with additional studies to inform a new assessment format and separate content blueprint for the PANRE. The revised PANCE and PANRE blueprints become effective January 1, 2019, and appear on the NCCPA website.

The 15,771 PA respondents reported their primary job, but other NCCPA survey work has revealed that 14% may have a different secondary clinical job.14 Thus, the number of certified PAs reported in any of the specialty areas could be underreported. However, a review of the demographic characteristics of the PA respondents compared with the NCCPA profile data suggests that the respondents are representative of the certified PA population in the United States.

When analyzing the broad tasks performed by all PAs, the practices appear similar: all conduct patient histories; order and interpret laboratory results; and diagnose, treat, and manage patients. However, the knowledge and skills required to perform those tasks can vary significantly, for example, between PAs in orthopedic surgery and those in psychiatry. For the first time, this study examines the practice of not only primary care PAs but also 11 different specialty groups, and contrasts entry-level practice with that of the more experienced PA. This dataset can be used to help inform discussions on the knowledge, skills, and tasks PAs need from the classroom to clinical rotations to graduation in order to practice at entry level and beyond, as well as to support PAs' practice mobility and role change.15,16

Secondary goals of this study included examining how nonprimary care specialty practice may differ from primary care specialty practice and examining how entry-level practice may differ from beyond entry-level practice. In general, the ratings of tasks, knowledge, and skills of newer PAs (6 or fewer years in practice) were similar to ratings of PAs in practice for more than 6 years in terms of criticality and frequency. This was especially true for tasks, knowledge, and skills in clinical medicine.

A few differences appeared in professional skills. For example, recently certified PAs were more likely to spend time accessing and applying medical informatics and recognizing professional and clinical limitations, while PAs certified for more than 6 years spent more time negotiating contracts, conducting clinical research, precepting students, and advocating for the profession. Regarding primary care and nonprimary care specialty practice, many clinical tasks performed for patients are similar across all specialties, but the knowledge and skills required to perform those tasks can be specific to particular patient populations. An important secondary use of this dataset has been to inform the modification of test content outlines for existing examinations for the Certificates of Added Qualifications. A full exploration of PA specialty practice is beyond the scope of this paper.

In looking at recertification redesign, NCCPA considered how entry-level PA practice compares with beyond entry-level practice. What do all practicing PAs need to continue to practice, and what knowledge and skills do they need in order to change their practice specialty? An additional use of the 2015 practice analysis data is as a basis for the study and development of core medical knowledge and skills. NCCPA has used these data extensively in conducting focus groups involving PA subject matter experts to help better define the medical content that all PAs should know regardless of their specialty practice. Completion of this work led to a redesign of the PANRE test blueprint and contributed to the redesign of the assessment process for recertification. The results of this study have educational implications. Training for purpose is at the heart of PA pedagogy if new PAs are to be well equipped to serve all clinical settings. The broad list of diagnoses and conditions across medical specialty settings revealed by this study informs the student being prepared for 21st-century healthcare needs. Detailed discussion of blueprint redesigns for PANCE and PANRE is beyond the scope of this paper but remains an important product of this research.

As the healthcare landscape changes domestically and globally, the need for a practice analysis every 5 years is more important than ever. In determining how often to conduct a practice analysis, NCCPA considers the expenditure of resources required to undertake such analyses, the rate of change in medical practice and the PA profession, and accepted industry standards in test development. Additional considerations include time commitments for PAs to complete the surveys and effect on PA educators and students when test blueprints are modified. Revisiting the practice analysis every 5 years strikes a balance between these competing interests and comports with current professional standards.

LIMITATIONS

This study provides a snapshot of PA practice in 2015 and, as such, can be outdated within a relatively short time. More detailed information about diagnoses and disorders as rated by PAs practicing in different specialty areas will be reported in subsequent publications.

Almost all survey research relies heavily on self-report, which represents a source of bias. What people report at the time of query depends on interpretation of the question and the willingness to answer fully or candidly. The quality of self-reported data when compared with more objective administrative data can differ depending on the study and circumstances.17

Another potential limitation was that subject matter experts self-declared their skill level or degree of expertise. The degree of self-report bias in the survey or in the NCCPA profile was not assessed.

The challenge of a contemporary PA practice analysis is to detail the best and most realistic picture of PA clinical activity based on time, staff assignment, cost, and resources. Engaging the support of external data to supplement this clinical picture is challenging at best. Reaching out to other sources, including the National Center for Health Statistics, Kaiser Permanente, or VA databases, to illustrate the broad range of PA practice has limitations. In those cases, the available dataset may be limited to a particular region or population, is often confounded with data collected for physicians, or is unavailable because of privacy restrictions or proprietary concerns. Any data available are inherently biased by a variety of variables, such as insurance, billing, population, urbanity, and provider age.

CONCLUSION

Respondents to the 2015 PA Practice Analysis found the activities listed in the survey to be representative of the work they performed in their practice settings. Because the survey results were similar to what the PA subject matter experts deemed important, the results seemed appropriate and the refined process meaningful. This study advances new information about the diseases and disorders encountered in primary care and in a wide range of other specialties in which PAs practice. With this research, the role of PAs in US society is better understood. Such focused findings serve as a foundation for continuing the investigation and understanding of the PA profession and its role in the diverse delivery of American healthcare services. The stage is set for the next practice analysis. In turn, more practice analyses are needed to continue to measure the rapidly evolving and complex facets of medicine and the roles of PAs in the production and maintenance of healthcare.

REFERENCES

- 1. Raymond MR. A practical guide to practice analysis for credentialing examinations. Educational Measurement: Issues & Practice. 2002;21:25–37.

- 2. American Educational Research Association, American Psychological Association, National Council on Measurement in Education. Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association; 2014:11.

- 3. Kane M. Model-based practice analysis and test specifications. Appl Measurement Educ. 1997;10(1):5–18.

- 4. Lane S, Raymond MR, Haladyna TM. Evaluating tests. Handbook of Test Development. 2016:725–738.

- 5. National Organization for Competency Assurance. Certification: A NOCA Handbook. Washington, DC: National Organization for Competency Assurance; 1996.

- 6. Federation of State Boards of Physical Therapy. Analysis of practice for the physical therapy profession: entry-level physical therapists. www.fsbpt.org/Portals/0/documents/free-resources/PA2011_PTFinalReport20111109.pdf. Accessed September 11, 2018.

- 7. National Board for Certification in Occupational Therapy, Inc. Practice analysis of the occupational therapist registered. www.nbcot.org/-/media/NBCOT/PDFs/2012-Practice-Analysis-Executive-OTR.ashx?la=en. Accessed September 11, 2018.

- 8. Dranove D, Jin GZ. Quality disclosure and certification: theory and practice. J Economic Literature. 2010;48(4):935–963.

- 9. Institute for Credentialing Excellence. ICE National Commission for Certifying Agencies (NCCA) Standards for the Accreditation of Certification Programs. https://cdn.ymaws.com/www.napo.net/resource/resmgr/Docs/2016_NCCA_Standards.pdf. Accessed October 10, 2018.

- 10. Hooker RS, Carter R, Cawley JF. The National Commission on the Certification of Physician Assistants: history and role. Perspective on Physician Assist Educ. 2004;15(1):8–15.

- 11. Arbet S, Lathrop J, Hooker RS. Using practice analysis to improve the certifying examinations for PAs. JAAPA. 2009;22(2):31–36.

- 12. Hess B, Subhiyah R. Confirmatory factor analysis of the NCCPA Physician Assistant National Recertifying Examination. J Physician Assist Educ. 2004;15(1):55–61.

- 13. Glicken AD. Physician assistant profile tool provides comprehensive new source of PA workforce data. JAAPA. 2014;27(2):47–49.

- 14. Jeffery C, Morton-Rias D, Rittle M, et al. Physician assistant dual employment. JAAPA. 2017;30(7):35–38.

- 15. Rizzolo D, Cavanagh K, Ziegler O, et al. A retrospective review of physician assistant education association end of rotation examinations. J Physician Assist Educ. 2018;29(3):158–161.

- 16. Hooker RS, Cawley JF, Leinweber W. Career flexibility of physician assistants and the potential for more primary care. Health Aff (Millwood). 2010;29(5):880–886.

- 17. Halbesleben JR, Whitman MV. Evaluating survey quality in health services research: a decision framework for assessing nonresponse bias. Health Serv Res. 2013;48(3):913–930.