Abstract

Background

Surgical navigation may prove useful in pelvic bone cancer surgery, but research supporting its adoption remains relatively preliminary, and navigation devices are physically large, occupying considerable space in already crowded operating rooms. To address this issue, we developed and tested a navigation system for pelvic bone cancer surgery that incorporates augmented reality (AR) technology and simplifies the system by embedding the navigation software in a tablet personal computer (PC).

Questions/purposes

Using simulated tumors and resections in a pig pelvic model, we asked: Can AR-assisted resection reduce errors in terms of planned bone cuts and improve the ability to achieve the planned margin around a tumor in pelvic bone cancer surgery?

Methods

We developed an AR-based navigation system for pelvic bone tumor surgery that runs on a tablet PC. We created 36 bone tumor models for simulation of tumor resection in pig pelves and assigned 18 each to an AR-assisted resection group and a conventional resection group. To simulate a bone tumor, bone cement was inserted into the acetabular dome of the pig pelvis. Tumor resection was simulated in two scenarios: AR-assisted resection by an orthopaedic resident and resection using conventional methods by an orthopaedic oncologist. For both groups, resection was planned with a 1-cm safety margin around the bone cement. Resection margins were evaluated by an independent orthopaedic surgeon who was blinded to the type of resection. All specimens were sectioned twice: first through a plane parallel to the medial wall of the acetabulum and second through a plane perpendicular to the first. On each plane, the distance from the resection margin to the bone cement was measured at four locations, and the error was defined as the difference between the measured margin and the planned 10-mm margin. The largest of the four errors on a plane was used for evaluation, so each specimen yielded two error values, one from each of the two perpendicular planes. The resection errors were classified into four grades: ≤ 3 mm; 3 to 6 mm; 6 to 9 mm; and > 9 mm or any tumor violation. Student's t-test was used to compare the mean resection errors of the two groups.

Results

The mean of the 36 resection errors from the 18 pelves in the AR-assisted resection group was 1.59 mm (SD, 4.13 mm; 95% confidence interval [CI], 0.24-2.94 mm), and the mean error in the conventional resection group was 4.55 mm (SD, 9.7 mm; 95% CI, 1.38-7.72 mm; p < 0.001). All 36 errors in the AR-assisted resection group were < 6 mm, whereas 78% (28 of 36) of errors in the conventional group were < 6 mm.

Conclusions

In this in vitro simulated tumor model, we demonstrated that AR assistance could help to achieve the planned margin. Our model was designed as a proof of concept; although our findings do not justify a clinical trial in humans, they do support continued investigation of this system in a live animal model, which will be our next experiment.

Clinical Relevance

The AR-based navigation system provides additional information on the tumor extent and may help surgeons during pelvic bone cancer surgery without the need for more complex and cumbersome conventional navigation systems.

Introduction

Even with improvements in cancer treatment, patients with a malignant bone tumor in the pelvis are at a higher risk of treatment failure (eg, recurrence, metastasis, death) than patients with cancer of the extremities [8]. The goal of surgery is to obtain a wide margin, because marginal or intralesional resection is an important adverse prognostic factor for overall survival in patients with bone cancer of the pelvic girdle [14]. However, because of the complexity of pelvic anatomy and the proximity of internal organs and large neurovascular structures, adequate margins can be difficult to achieve. Recently, several preliminary reports have suggested that surgical navigation in pelvic bone cancer surgery may improve oncologic and functional outcomes [6, 8, 9]. Nevertheless, the size of the navigation unit in an already crowded operating room, the somewhat cumbersome process, and the additional time and cost all are impediments to adopting surgical navigation in bone cancer surgery [10].

To address this issue, we developed an augmented reality (AR)-based navigation system for bone tumor resection that runs on a tablet personal computer (PC). Augmented reality overlays information on real-world imagery; in the medical field, this information is usually radiologic data. Our goal in developing this system was to simplify its use by unifying the workstation and position tracker in a single tablet PC. In this study, we evaluated AR-based navigation assistance in pelvic bone tumor resection by simulating bone tumors in a pig pelvic model.

In this report, we specifically asked: Can AR-assisted resection reduce errors in terms of planned bone cuts and improve the ability to achieve the planned margin around a tumor in pelvic bone cancer surgery?

Materials and Methods

Development of the AR-based Navigation System

A conventional navigation system is composed of an optical position tracker, a display, and a workstation. Our AR-based navigation system used a tablet PC (Surface Pro 3; Microsoft, Redmond, WA, USA) that served simultaneously as the workstation/display and the position tracker (Fig. 1). A single camera embedded in the tablet PC was used as the tracker, and the positions of the target object were calculated from the images it captured. To track the positions of the target object and surgical instruments, we used cuboidal reference markers featuring square binary-coded patterns [13]. Because the camera must be calibrated before it can be used for pose estimation, we used Zhang's camera calibration algorithm [20] to obtain the camera parameters, which were then used to estimate the pose of the cuboidal reference marker relative to the camera. The segmentation and registration methods of this study were the same as those of a typical navigation system, except that our system performed paired-point registration between the radiologic data and images captured by a single camera, whereas a typical navigation system registers the radiologic data to the patient's body itself.

Our AR-assisted navigation system can show tumor extent by overlaying radiologic data on a real-world image. In addition, we developed a method to display the safety margin and to visualize and guide the resection plane in real time using a three-dimensional (3-D) dilation technique, which expands the volume of an object while preserving its original contour. The distance between the dilated model and the original tumor volume represented the planned safety margin.
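The margin display can be illustrated with a minimal sketch of binary 3-D dilation. It assumes that the segmented tumor is stored as a set of CT voxel indices with known voxel spacing in millimeters and uses a spherical structuring element whose radius equals the planned margin; the function names and the voxel representation are hypothetical and are not the software used in this study. Voxels that lie in the dilated set but not in the original tumor form the safety-margin shell that the resection plane should follow.

```python
from itertools import product
from math import floor

Voxel = tuple[int, int, int]  # hypothetical (i, j, k) CT voxel index


def spherical_offsets(margin_mm: float, spacing_mm: tuple[float, float, float]) -> list[Voxel]:
    """Voxel offsets whose physical distance from the origin is <= margin_mm."""
    reach = [floor(margin_mm / s) for s in spacing_mm]  # largest index step per axis
    offsets = []
    for di, dj, dk in product(*(range(-r, r + 1) for r in reach)):
        dist2 = (di * spacing_mm[0]) ** 2 + (dj * spacing_mm[1]) ** 2 + (dk * spacing_mm[2]) ** 2
        if dist2 <= margin_mm ** 2:
            offsets.append((di, dj, dk))
    return offsets


def dilate(tumor: set[Voxel], margin_mm: float, spacing_mm: tuple[float, float, float]) -> set[Voxel]:
    """Binary 3-D dilation: every voxel whose center lies within margin_mm of a tumor voxel center."""
    offsets = spherical_offsets(margin_mm, spacing_mm)
    return {(i + di, j + dj, k + dk) for (i, j, k) in tumor for (di, dj, dk) in offsets}


def margin_shell(tumor: set[Voxel], margin_mm: float, spacing_mm: tuple[float, float, float]) -> set[Voxel]:
    """Safety-margin voxels: inside the dilated volume but outside the tumor itself."""
    return dilate(tumor, margin_mm, spacing_mm) - set(tumor)
```

For full CT volumes, a library routine such as `scipy.ndimage.binary_dilation` with a spherical `structure` array would be much faster than this set-based loop; the set version is used here only to keep the sketch dependency-free.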

Fig. 1.

A schematic diagram illustrates the workflows of AR-assisted and conventional navigation surgery.

Production of the Bone Tumor Model and Simulation of Resection

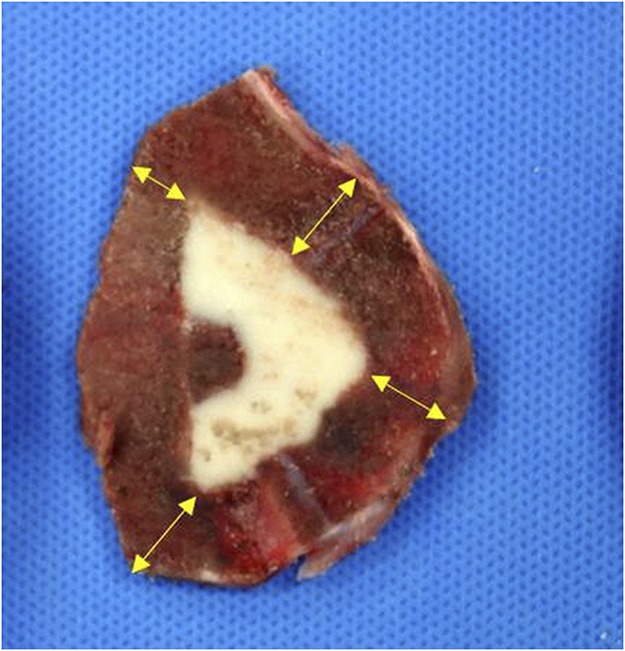

Tumor resection was simulated in two scenarios: AR-assisted resection by an orthopaedic resident, and resection using conventional methods without AR assistance by an experienced orthopaedic oncologist (H-SK), who has performed more than five internal hemipelvectomies per year for > 10 years. Thirty-six pig pelves of unknown age were purchased from a butcher. This study was approved by the institutional animal care and use committee of our hospital (No. BA1506-178/036-01). To simulate a bone tumor, a cortical window was created on the medial wall of the acetabular dome of each pig pelvis, and bone cement (DePuy CMW, Blackpool, UK) was inserted; CT was used to evaluate the extent of the bone cement. The amount of bone cement inserted ranged from 3 to 10 mL (Fig. 2).

Fig. 2 A-C.

A bone tumor model was created using bone cement around the acetabular dome of a pig pelvis (A), and CT images (B-C) were used to evaluate the extent of the inserted bone cement.

Resection was planned with a 1-cm safety margin around the bone cement. The 36 pig pelves were assigned to the AR-assisted resection group (n = 18) and the conventional resection group (n = 18). In the AR-assisted resection group, resection was performed with AR assistance along the contour of the tumor dilated by 1 cm. In the conventional resection group, resection was performed by an experienced orthopaedic oncologist (H-SK), who judged the tumor extent from the CT images as in routine practice.

Evaluation of Resection and Comparison of AR-assisted and Conventional Groups

All specimens were sectioned twice: once through a plane parallel to the medial wall of the acetabulum and once through a plane perpendicular to the first. Resection margins were evaluated by another orthopaedic surgeon (MSP) who was blinded to the type of resection. On each plane, the distance from the resection margin to the bone cement was measured at four separate locations (Fig. 3). The difference between the obtained surgical margin and the planned surgical margin (1 cm) was regarded as the resection error, and the largest of the four errors was taken as the error for that plane. Therefore, each specimen had two error values, one from each of the two perpendicular planes. The resection errors were classified into four grades: ≤ 3 mm; 3 to 6 mm; 6 to 9 mm; and > 9 mm or any tumor violation.
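The error definition and grading described above can be written as two short functions. This is a sketch that assumes the error is the absolute difference between a measured margin and the planned 10-mm margin and that the grade boundaries are half-open intervals (for example, an error of exactly 6 mm falls in the 3-to-6-mm grade); the study does not state how boundary values were assigned, and the measurements in the example specimen are hypothetical.

```python
PLANNED_MARGIN_MM = 10.0  # planned 1-cm safety margin


def plane_error(margins_mm, planned_mm=PLANNED_MARGIN_MM):
    """Largest absolute deviation from the planned margin among one plane's measurements."""
    return max(abs(m - planned_mm) for m in margins_mm)


def grade(error_mm, tumor_violated=False):
    """Assign one of the four error grades (boundary handling is an assumption)."""
    if tumor_violated or error_mm > 9:
        return "> 9 mm or tumor violation"
    if error_mm > 6:
        return "6 to 9 mm"
    if error_mm > 3:
        return "3 to 6 mm"
    return "<= 3 mm"


# Hypothetical specimen: four margin measurements (mm) on each of the two planes.
specimen = {
    "parallel": [9.0, 11.5, 10.2, 8.1],
    "perpendicular": [14.5, 10.0, 9.5, 12.0],
}
for plane, margins in specimen.items():
    err = plane_error(margins)
    print(plane, round(err, 1), grade(err))
```

Because a measured margin of 0 mm already corresponds to a 10-mm error, the explicit `tumor_violated` flag only matters if violation is recorded separately from the distance measurements.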

Fig. 3.

Resection error was measured at four locations.

Statistical Analysis

A sample size calculation showed that 36 pig pelves would be required to detect a 2-mm difference in resection error at a significance level of 0.05 with 85% power. This was the difference we wished to be able to detect between the study groups (mean ± SD, 2 ± 2 mm for the AR group compared with 4 ± 2 mm for the conventional resection group). Student's t-test was used for statistical comparison of the mean resection errors of the two groups. Differences in the distribution of errors were assessed with Fisher's exact test.
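The stated sample size can be checked with the standard normal-approximation formula for comparing two means, n per group = 2(z_(1-α/2) + z_(1-β))² σ² / δ². The sketch below assumes a two-sided test with δ = 2 mm and σ = 2 mm; it yields 18 per group, or 36 in total, which matches the figure above, although the study does not state which formula or software was used. An exact calculation based on the t distribution typically gives a slightly larger number.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.85):
    """Per-group n for a two-sided, two-sample comparison of means (normal approximation)."""
    z = NormalDist().inv_cdf  # standard normal quantile function
    z_alpha = z(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z(power)           # about 1.04 for 85% power
    return ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


n = n_per_group(delta=2.0, sd=2.0)
print(n, 2 * n)  # 18 36
```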

Results

AR assistance could help to achieve the planned margin. The mean of the 36 resection errors from the 18 pelves in the AR-assisted resection group (resections by an orthopaedic resident) was 1.59 mm (SD, 4.13 mm; 95% confidence interval [CI], 0.24-2.94 mm), and the mean of the 36 errors in the conventional resection group (resections by an orthopaedic oncologist) was 4.55 mm (SD, 9.7 mm; 95% CI, 1.38-7.72 mm; p < 0.001). In the AR-assisted resection group, 34 (94%) of 36 resection errors were ≤ 3 mm and two (6%) were 3 to 6 mm; none exceeded 6 mm. In the conventional resection group, 15 of 36 errors (42%) were ≤ 3 mm, 13 (36%) were 3 to 6 mm, three (8%) were 6 to 9 mm, and five (14%) were > 9 mm or had tumor violation (Fig. 4). The proportion of errors within a 6-mm tolerance of the planned 10-mm safety margin was 100% (36 of 36) in the AR-assisted resection group and 78% (28 of 36) in the conventional resection group (p = 0.005). Three of 18 pelves in the conventional resection group had tumor violation, compared with none in the AR-assisted resection group, even though all of the AR-assisted resections were performed by an orthopaedic resident.
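The reported 95% CIs are consistent with a normal approximation computed from the summary statistics, mean ± 1.96 × SD/√n with n = 36 errors per group. The sketch below assumes that formula (the study does not describe how the intervals were obtained) and, like that formula, treats the two plane errors from each pelvis as independent observations.

```python
from math import sqrt
from statistics import NormalDist


def mean_ci(mean, sd, n, level=0.95):
    """Normal-approximation confidence interval for a mean from summary statistics."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # 1.96 for a 95% interval
    half_width = z * sd / sqrt(n)
    return mean - half_width, mean + half_width


for label, mean, sd in [("AR-assisted", 1.59, 4.13), ("Conventional", 4.55, 9.7)]:
    lo, hi = mean_ci(mean, sd, n=36)
    print(f"{label}: {lo:.2f}-{hi:.2f} mm")
```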

Fig. 4 A-B.

Thirty-four (94%) of 36 resection errors were ≤ 3 mm and two were 3 to 6 mm in the AR-assisted resection group (A), whereas 22% (8 of 36) of errors in the conventional resection group (B) were > 6 mm or involved tumor violation.
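The p value for the 6-mm tolerance comparison (36 of 36 versus 28 of 36 errors within tolerance) can be reproduced with a two-sided Fisher's exact test on the corresponding 2 × 2 table. The sketch below is a plain-Python implementation based on hypergeometric probabilities; summing every table that is no more probable than the observed one is a common two-sided convention and is an assumption here. In practice, `scipy.stats.fisher_exact` provides the same test.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2 x 2 table [[a, b], [c, d]]."""
    row1 = a + b          # size of the first group
    col1 = a + c          # total "within tolerance" count
    n = a + b + c + d
    denom = comb(n, row1)

    def prob(x):
        # Hypergeometric probability that cell (1, 1) equals x with all margins fixed.
        return comb(col1, x) * comb(n - col1, row1 - x) / denom

    p_obs = prob(a)
    lo = max(0, row1 - (n - col1))
    hi = min(row1, col1)
    # Sum the probabilities of all tables at least as extreme as the observed one.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1)) if p <= p_obs * (1 + 1e-9))


# Rows: AR-assisted, conventional; columns: within 6-mm tolerance, outside it.
p = fisher_exact_two_sided(36, 0, 28, 8)
print(f"{p:.3f}")  # 0.005
```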

Discussion

Despite the seemingly favorable attributes of surgical navigation in orthopaedic oncology, it has not been as widely adopted as one might expect. These systems are large and expensive, so experienced surgeons may be reluctant to crowd an already busy operating room with another piece of equipment [10], particularly given that research on the efficacy of these approaches is best described as nascent [8, 9]. To address the first concern (that is, to reduce the physical presence of these systems in the operating room), we developed an AR-based navigation system embedded in a tablet PC and evaluated its usefulness for pelvic bone cancer surgery in an in vitro experimental study with a pig pelvic bone tumor model. In the AR-assisted resection group, all (100%) of the resection errors were within a 6-mm tolerance, whereas only 78% (28 of 36) were in the conventional resection group.

The study had a number of limitations. First, this was an ex vivo experimental study with pig pelvic bones. All pelvic bones used in this study were purchased from a butcher in skeletonized condition. Our model was designed as a proof of concept; although our findings do not justify a clinical trial in humans, they do support testing this system in a live animal model, which will be our next experiment. Second, the bone tumor model was produced with bone cement. Most bone cancers have an aggressive growth pattern with edema around the lesion, which makes the extent of the tumor hard to define. In contrast, the bone cement tumors had well-defined borders. Although determining the tumor border is a planning concern in an actual operation, this study aimed to evaluate the usefulness of AR assistance in performing a planned resection, and we believe bone cement worked well to allow us to measure margins. Third, the AR-assisted resections were performed by a trainee, whereas the conventional resections were performed by an experienced surgeon. The comparison would have been more rigorous with the same operator in both groups, which would have controlled for variation in technique. However, pelvic bone cancer surgery is performed infrequently, so few surgeons gain extensive experience with it. Two senior authors of this study have performed pelvic bone cancer surgery for > 10 years. Only one of them was blinded during the experiment, because the other designed and directed the study. Comparisons of AR-assisted resection with conventional navigation-assisted resection and freehand resection by experienced surgeons will be needed to address this issue.

Augmented reality has been used in many fields, including medicine, education, and entertainment [2, 15, 17]. AR is defined as a direct or indirect view of a real-world environment whose elements are augmented by computer-generated information; in a surgical field, this information is usually radiologic data. The adoption of AR technology has been reported to be successful and promising in neurosurgery, otolaryngology, and maxillofacial surgery [1, 11, 12]. One might therefore expect the same technology to be useful in orthopaedic oncology, where the potential utility of surgical navigation has been demonstrated in many studies [5-9, 18, 19], although most of them are uncontrolled observational studies. An experimental study by Cartiaux et al. [4] demonstrated that only approximately 50% of experienced surgeons performed tumor resection within a 5-mm tolerance in pelvic bone cancer surgery with conventional methods. They suggested that navigation-assisted technology could be helpful in pelvic ring cancer surgery. Nevertheless, most experienced surgeons are reluctant to use a navigation system, reasoning that most limb-preserving surgery can be performed without navigation and that the additional time, cost, and effort are not justified [16]. In addition, the size of the navigation unit in an already crowded operating room and the somewhat cumbersome process may hinder its use in bone cancer surgery [10]. We developed an AR-based system housed in a portable tablet PC, which, in this pig model with simulated tumors, seemed to reduce the size and number of resection errors. In addition, with the system, a less experienced surgeon made more accurate resections than a more experienced surgeon did with conventional methods.

The AR system in this study consists of a tablet PC, instruments (a reference frame and a probe), and the AR software. The reference frame and probe were produced by 3-D printing, and the AR software was developed by two authors of this study (HC, JH) using open-source software, so it incurred no additional cost. Like a conventional navigation system, our AR system requires CT images. The basic principles of AR navigation in this study were the same as those of a conventional navigation system, except that our system used CT data and images captured by a tablet PC, whereas a typical navigation system uses CT data and the patient's body itself. The main difference is that the AR system projects two-dimensional images onto a 3-D object, which may introduce image distortion. Besharati and Mahvash [3] compared the accuracy of an AR system with that of a conventional navigation system for localization and size measurement of a brain tumor. They reported that any projection of an image causes distortion in an AR system, particularly at the outer border of the projection, with the least distortion at the center of the projection axis. Despite this distortion, they concluded that it was possible to visualize the brain tumor with a mean projection error of 0.8 ± 0.25 mm. Future studies may further reduce the mean projection error in AR systems by compensating for this distortion.
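How lens distortion displaces an overlay, and how it can be compensated, can be illustrated with the pinhole camera model with radial distortion that is used in Zhang's calibration method [20]. This is a sketch only: the intrinsic parameters (fx, fy, cx, cy) and radial coefficients (k1, k2) are made-up values, the function names are hypothetical, and the points are assumed to be already expressed in camera coordinates (that is, after the marker pose has been applied). It is not the software used in this study or in the study by Besharati and Mahvash [3].

```python
def project(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3-D point in camera coordinates (mm) to pixel coordinates."""
    X, Y, Z = point_cam
    x, y = X / Z, Y / Z                    # ideal normalized image coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2  # radial distortion factor
    return fx * x * radial + cx, fy * y * radial + cy


def undistort_normalized(xd, yd, k1=0.0, k2=0.0, iterations=20):
    """Invert the radial model by fixed-point iteration (adequate for mild distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / radial, yd / radial
    return x, y


# Illustrative (hypothetical) calibration values.
INTRINSICS = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
K1, K2 = -0.2, 0.0

# Horizontal pixel shift caused by distortion, near the image center and farther out.
for point in [(10.0, 0.0, 500.0), (100.0, 0.0, 500.0)]:
    u_ideal, _ = project(point, **INTRINSICS)
    u_dist, _ = project(point, **INTRINSICS, k1=K1, k2=K2)
    print(point, round(u_ideal - u_dist, 4))
```

With these illustrative values, the point near the optical axis moves by a small fraction of a pixel, whereas the point farther from the axis moves by more than a pixel, mirroring the observation that distortion is least at the center of the projection axis.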

In summary, we developed a new navigation system for pelvic bone cancer surgery that incorporates AR technology and simplifies the system by embedding the navigation software in a tablet PC. The AR-based navigation system provided additional information on the tumor extent and may help surgeons during pelvic bone cancer surgery without the need for complex and cumbersome conventional navigation systems. Our model was designed as a proof of concept; although our findings do not justify a clinical trial in humans, they do support testing this system in a live animal model, which will be our next experiment.

Acknowledgments

We thank Chan Yoon MD for participating in the experimental study.

Footnotes

One of the authors (HSC) received funding from Seoul National University Bundang Hospital (02-2017-016).

All ICMJE Conflict of Interest Forms for authors and Clinical Orthopaedics and Related Research® editors and board members are on file with the publication and can be viewed on request.

Clinical Orthopaedics and Related Research® neither advocates nor endorses the use of any treatment, drug, or device. Readers are encouraged to always seek additional information, including FDA approval status, of any drug or device before clinical use.

Each author certifies that his or her institution approved the animal protocol for this investigation and that all investigations were conducted in conformity with ethical principles of research.

This work was performed at Seoul National University Bundang Hospital, Seoul, Korea.

References

- 1. Arora A, Lau LYM, Awad Z, Darzi A, Singh A, Tolley N. Virtual reality simulation training in otolaryngology. Int J Surg. 2014;12:87–94.

- 2. Baker DK, Fryberger CT, Ponce BA. The emergence of AR in orthopaedic surgery and education. Orthop J Harv Med Sch. 2015;16:8–16.

- 3. Besharati TL, Mahvash M. Augmented reality-guided neurosurgery: accuracy and intraoperative application of an image projection technique. J Neurosurg. 2015;123:206–211.

- 4. Cartiaux O, Docquier P-L, Paul L, Francq BG, Cornu OH, Delloye C, Raucent B, Dehez B, Banse X. Surgical inaccuracy of tumor resection and reconstruction within the pelvis: an experimental study. Acta Orthop. 2008;79:695–702.

- 5. Cheong D, Letson GD. Computer-assisted navigation and musculoskeletal sarcoma surgery. Cancer Control. 2011;18:171–176.

- 6. Cho HS, Kang HG, Kim H-S, Han I. Computer-assisted sacral tumor resection. A case report. J Bone Joint Surg Am. 2008;90:1561–1566.

- 7. Cho HS, Oh JH, Han I, Kim H-S. Joint-preserving limb salvage surgery under navigation guidance. J Surg Oncol. 2009;100:227–232.

- 8. Cho HS, Oh JH, Han I, Kim H-S. The outcomes of navigation-assisted bone tumour surgery: minimum three-year follow-up. J Bone Joint Surg Br. 2012;94:1414–1420.

- 9. Hüfner T, Kfuri M, Galanski M, Bastian L, Loss M, Pohlemann T, Krettek C. New indications for computer-assisted surgery: tumor resection in the pelvis. Clin Orthop Relat Res. 2004;426:219–225.

- 10. Lanfranco AR, Castellanos AE, Desai JP, Meyers WC. Robotic surgery: a current perspective. Ann Surg. 2004;239:14–21.

- 11. Mason TP, Applebaum EL, Rasmussen M, Millman A, Evenhouse R, Panko W. Virtual temporal bone: creation and application of a new computer-based teaching tool. Otolaryngol Head Neck Surg. 2000;122:168–173.

- 12. Masutani Y, Dohi T, Yamane F, Iseki H, Takakura K. Augmented reality visualization system for intravascular neurosurgery. Comput Aided Surg. 1998;3:239–247.

- 13. Nicolau SA, Pennec X, Soler L, Buy X, Gangi A, Ayache N, Marescaux J. An augmented reality system for liver thermal ablation: design and evaluation on clinical cases. Med Image Anal. 2009;13:494–506.

- 14. Ozaki T, Flege S, Kevric M, Lindner N, Maas R, Delling G, Schwarz R, von Hochstetter AR, Salzer-Kuntschik M, Berdel WE, Jürgens H, Exner GU, Reichardt P, Mayer-Steinacker R, Ewerbeck V, Kotz R, Winkelmann W, Bielack SS. Osteosarcoma of the pelvis: experience of the Cooperative Osteosarcoma Study Group. J Clin Oncol. 2003;21:334–341.

- 15. Ponce BA, Jennings JK, Clay TB, May MB, Huisingh C, Sheppard ED. Telementoring: use of augmented reality in orthopaedic education. J Bone Joint Surg Am. 2014;96:e84.

- 16. Randall RL. Metastatic Bone Disease: An Integrated Approach to Patient Care. New York, NY, USA: Springer-Verlag; 2016.

- 17. Shuhaiber JH. Augmented reality in surgery. Arch Surg. 2004;139:170–174.

- 18. So TYC, Lam Y-L, Mak K-L. Computer-assisted navigation in bone tumor surgery: seamless workflow model and evolution of technique. Clin Orthop Relat Res. 2010;468:2985–2991.

- 19. Wong KC, Kumta SM. Joint-preserving tumor resection and reconstruction using image-guided computer navigation. Clin Orthop Relat Res. 2013;471:762–773.

- 20. Zhang Z. A flexible new technique for camera calibration. IEEE Trans Pattern Anal Mach Intell. 2000;22:1330–1334. Available at: https://www.microsoft.com/en-us/research/publication/a-flexible-new-technique-for-camera-calibration/. Accessed December 17, 2017.