Abstract

Background

The American Joint Replacement Registry (AJRR) Total Joint Risk Calculator uses demographic and clinical parameters to provide risk estimates for 90-day mortality and 2-year periprosthetic joint infection (PJI). The tool is intended to help surgeons counsel their Medicare-eligible patients about their risk of death and PJI after total joint arthroplasty (TJA). However, for a predictive risk model to be useful, it must be accurate when applied to new patients; this has yet to be established for this calculator.

Questions/purposes

To produce accuracy metrics (ie, discrimination, calibration) for the AJRR mortality calculator using data from Medicare-eligible patients undergoing TJA in the Veterans Health Administration (VHA), the largest integrated healthcare system in the United States, where more than 10,000 TJAs are performed annually.

Methods

We used the AJRR calculator to predict risk of death within 90 days of surgery among 31,214 VHA patients older than 64 years of age who underwent primary TJA; data were drawn from the Veterans Affairs Surgical Quality Improvement Program (VASQIP) and VA Corporate Data Warehouse (CDW). We then used VHA mortality data to evaluate the extent to which the AJRR calculator estimates distinguished individuals who died from those who did not (C-statistic), and graphically depicted the relationship between estimated risk and observed mortality (calibration). As a secondary evaluation of the calculator, a sample of 39,300 patients younger than 65 years old was assigned to the youngest age group available to the user (65-69 years) as might be done in real-world practice.

Results

The C-statistic for 90-day mortality was 0.62 (95% CI, 0.60–0.64) in the older sample and 0.46 (95% CI, 0.43–0.49) in the younger sample, suggesting poor discrimination. Calibration analysis revealed poor correspondence between deciles of predicted risk and observed mortality rates. Poor discrimination and calibration mean that patients who died will frequently have a lower estimated risk of death than surviving patients.

Conclusions

For Medicare-eligible patients receiving TJA in the VA, the AJRR risk calculator performed poorly in predicting 90-day mortality. There are several possible reasons for the model’s poor performance. Veterans Health Administration patients, 97% of whom were men, represent only a subset of the broader Medicare population. However, applying the calculator to a subset of the target population should not affect its accuracy. Other reasons for poor performance include the lack of an underlying statistical model in the calculator’s implementation and simply the challenge of predicting rare events. External validation in a more representative sample of Medicare patients should be conducted before assuming this tool is accurate for its intended use.

Level of Evidence

Level I, diagnostic study.

Introduction

Accurate statistical models that predict patients’ risk of death and major complications after total joint arthroplasty (TJA) could improve the quality of preoperative management, including informed consent, shared decision making, and risk stratification. The American Joint Replacement Registry (AJRR) Total Joint Risk Calculator (https://teamwork.aaos.org/ajrr/SitePages/Risk%20Calculator.aspx), developed by members of our team (KJB and EL) with data from a sample of 65,499 Medicare patients who underwent primary THA and 137,546 Medicare patients who underwent primary TKA, takes 30 demographic and clinical variables as inputs and returns risk estimates for 90-day mortality and periprosthetic joint infection (PJI) within 2 years [2, 3]. The tool is expressly intended to help surgeons counsel their Medicare-eligible patients (65 years or older) on their individual risk of death or PJI after THA and TKA.

For a predictive risk tool to be useful, however, it must be accurate [5]. No accuracy metrics were calculated when the AJRR risk calculator was developed, nor has it been internally or externally validated [10]. The accuracy of predictive tools is evaluated by determining the extent to which individuals who experience the outcome have higher predicted risk of that outcome compared with those who do not (ie, discrimination) and by plotting the relationship between predicted risk and observed outcome rates (calibration), which ideally is positive and monotonic. The Veterans Health Administration (VHA) is the largest integrated healthcare system in the United States; more than 10,000 TJAs are performed at VHA facilities annually. Patients who are eligible for both Medicare and VA health benefits often use both options when seeking and receiving medical care. Because no healthcare system is representative of the entire Medicare population, it is important to evaluate the accuracy of the calculator in subsets of the target population. As part of our ongoing efforts to develop and validate predictive models for VA TJA patients [8], we constructed databases that offered an opportunity to externally validate the AJRR risk calculator with an entirely new set of Medicare-eligible patients.

The purpose of this study was to produce accuracy metrics (ie, discrimination, calibration) for the AJRR mortality calculator using data from Medicare-eligible patients undergoing TJA at the VHA.

Patients and Methods

Definitions

Assessing predictive model performance is complex and multidimensional, but three concepts are essential: discrimination, calibration, and internal/external validation.

Discrimination refers to the ability of a model to distinguish patients who experience an outcome from those who do not. Discrimination is often quantified by the C-statistic, which represents the probability that a randomly selected patient who experienced an outcome (eg, 90-day mortality) would have a higher predicted probability than a randomly selected patient who did not experience that outcome. Generally, C-statistics can be interpreted as excellent (0.9–1), good (0.8–0.89), fair (0.7–0.79), poor (0.6–0.69), or fail/no discriminatory capacity (0.5–0.59) [7, 9]. However, because the C-statistic is based on rank, it has limitations and should be accompanied by other metrics of model accuracy, such as calibration.
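As an illustration of the definition above, the C-statistic can be computed by comparing every patient who had the outcome with every patient who did not. The following is a minimal sketch in Python, not the code used in this study; the function names `c_statistic` and `band`, the handling of ties (counted as one-half), the use of lower bounds as cut points for the interpretation bands, and the toy data are all assumptions made for illustration.

```python
def c_statistic(predicted, died):
    """Share of (died, survived) patient pairs in which the patient who died
    has the higher predicted risk; tied predictions count as one-half."""
    cases = [p for p, d in zip(predicted, died) if d]
    controls = [p for p, d in zip(predicted, died) if not d]
    if not cases or not controls:
        raise ValueError("need at least one patient with and one without the outcome")
    concordant = 0.0
    for risk_case in cases:
        for risk_control in controls:
            if risk_case > risk_control:
                concordant += 1.0
            elif risk_case == risk_control:
                concordant += 0.5
    return concordant / (len(cases) * len(controls))


def band(c):
    """Map a C-statistic to the interpretation bands quoted in the text.
    Using each band's lower bound as the cut point is an assumption."""
    if c >= 0.9:
        return "excellent"
    if c >= 0.8:
        return "good"
    if c >= 0.7:
        return "fair"
    if c >= 0.6:
        return "poor"
    if c >= 0.5:
        return "fail/no discriminatory capacity"
    return "below 0.5 (outside the quoted bands)"


# Toy, invented data (not from the study): predicted risks and 0/1 death flags.
toy_risk = [0.002, 0.004, 0.004, 0.010, 0.030]
toy_died = [0, 0, 1, 0, 1]
print(c_statistic(toy_risk, toy_died))  # 4.5 concordant pairs out of 6 -> 0.75
print(band(0.62))                       # poor
```

Because only the ordering of predictions matters, rescaling every predicted risk by a constant leaves the result unchanged, which is why the C-statistic says nothing about whether the predicted probabilities themselves are correct.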

A model’s calibration compares predicted and observed outcomes across the entire range of the data. Calibration is commonly visually represented and assessed by plotting observed versus predicted outcomes over equally sized deciles of risk. If outcome rates generally track monotonically along with predicted probabilities, calibration is considered good.
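A decile-based calibration check of the kind described above can be sketched as follows. This is a hedged illustration rather than the study's code: the function names, the rule for cutting sorted patients into ten nearly equal groups, and the toy data are assumptions.

```python
def calibration_by_decile(predicted, died, n_groups=10):
    """Sort patients by predicted risk, cut them into n_groups nearly equal
    groups, and return (mean predicted risk, observed rate, size) per group."""
    pairs = sorted(zip(predicted, died), key=lambda pair: pair[0])
    n = len(pairs)
    if n < n_groups:
        raise ValueError("need at least one patient per group")
    rows = []
    for g in range(n_groups):
        start = g * n // n_groups
        stop = (g + 1) * n // n_groups
        group = pairs[start:stop]
        mean_predicted = sum(p for p, _ in group) / len(group)
        observed_rate = sum(d for _, d in group) / len(group)
        rows.append((mean_predicted, observed_rate, len(group)))
    return rows


def is_non_decreasing(values):
    """True when each value is at least as large as the one before it."""
    return all(a <= b for a, b in zip(values, values[1:]))


# Toy, invented data: 20 patients with rising predicted risk; the last
# three patients and one other in the top two groups died.
toy_risk = [i / 1000 for i in range(1, 21)]
toy_died = [0] * 17 + [1, 1, 1]
rows = calibration_by_decile(toy_risk, toy_died)
for mean_predicted, observed_rate, size in rows:
    print(f"{mean_predicted:.4f}  {observed_rate:.2f}  n={size}")
print(is_non_decreasing([observed for _, observed, _ in rows]))
```

One practical caveat: when many patients share the same predicted value, the boundary between two groups can fall inside a run of ties, so group membership near the boundary depends on sort order.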

Finally, predictive models and tools must be validated. Most predictive models are developed with regression analysis, using demographic, clinical, and other preoperative variables to generate outcome estimates. When the number of predictors and interaction terms is large, and the number of events is small, it is possible to overfit the model to the data in which it was developed. Overfitting, a well-known issue in predictive modeling, occurs when a statistical model or other means of generating predictions is too specific to the data in which it was developed, which leads to less-reliable predictions for new patients.

To protect against overfitting, developers often split their data into a learning sample in which the model is developed, and a test sample in which the model is validated. When developers hold some of the same dataset aside for testing, that is called internal validation. When developers or others test their models with an entirely different data source, that is called external validation. External validation provides more rigorous protection against overfitting and evaluates the generalizability of the model to new contexts and populations. In either case, the discrimination and calibration of the models when validated are the metrics by which prediction tools should be judged.

Study Design and Setting

Calculator Specifications and Implementation

The development of the AJRR online risk calculator and its implementation into a related app is described elsewhere [4]. Although the original model development involved logistic regression, the AJRR risk calculator is implemented with a lookup table, not with regression coefficients, to accommodate a very large number of interactions. The lookup table includes observed rates of 90-day mortality for patients matching more than 30,000 combinations of inputs that existed in the original Medicare datasets. For patterns of inputs without a match in the lookup table, the highest rate for mortality based on available combinations of conditions is returned [4].
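To make the lookup-table design concrete, the sketch below shows one hypothetical way such a table could behave. It is not the AJRR implementation: the table keys, the rates, the function name `lookup_risk`, and in particular the fallback rule (take the highest rate among same-demographic entries whose conditions are all present in the patient) are assumptions, because the exact rule is not spelled out here.

```python
# Hypothetical table: (age group, sex, set of conditions) -> observed
# 90-day mortality rate. Every key and rate is invented for illustration.
LOOKUP = {
    ("65-69", "M", frozenset()): 0.004,
    ("65-69", "M", frozenset({"obesity"})): 0.005,
    ("65-69", "M", frozenset({"dementia"})): 0.012,
    ("65-69", "M", frozenset({"obesity", "dementia"})): 0.015,
}


def lookup_risk(age_group, sex, conditions, table=LOOKUP):
    """Return the stored rate for an exact match; otherwise fall back to the
    highest rate among stored combinations contained in the patient's
    conditions (an assumed reading of the fallback rule)."""
    conditions = frozenset(conditions)
    exact = table.get((age_group, sex, conditions))
    if exact is not None:
        return exact
    candidates = [
        rate
        for (age, s, stored), rate in table.items()
        if age == age_group and s == sex and stored <= conditions
    ]
    if not candidates:
        raise KeyError("no entry for this demographic group")
    return max(candidates)


print(lookup_risk("65-69", "M", {"obesity"}))                          # exact match
print(lookup_risk("65-69", "M", {"obesity", "dementia", "lymphoma"}))  # fallback
print(lookup_risk("65-69", "M", {"lymphoma", "HIV"}))                  # fallback
```

Under this reading, a condition that appears in no stored combination contributes nothing to the estimate: the last call returns the same rate as a patient with no comorbidities.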

Participants/Study Subjects

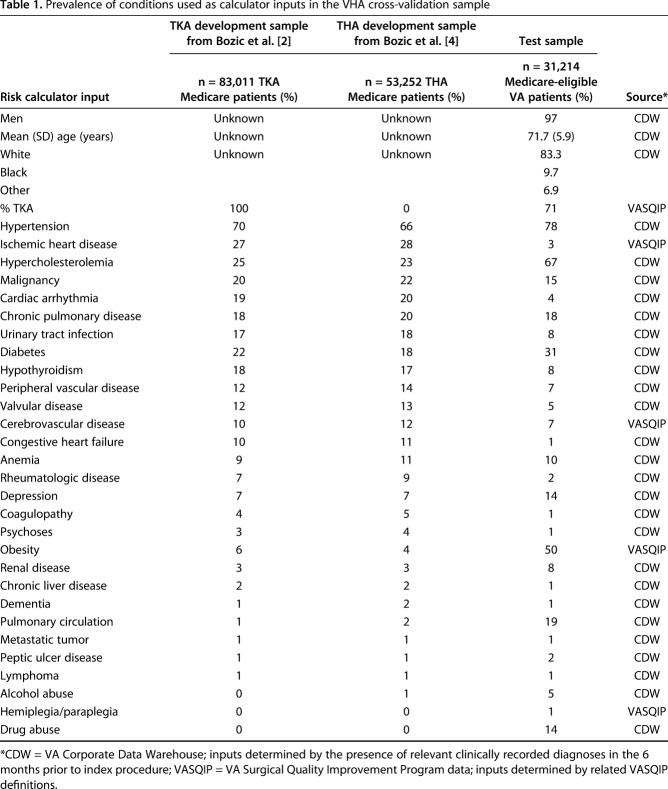

The Veterans Affairs Surgical Quality Improvement Program (VASQIP) collects preoperative, perioperative, and postoperative data on annual samples of high-volume surgical procedures in VHA medical centers across the United States [8]. All primary THAs and TKAs in the VASQIP for fiscal years 2008-2015 were included in the initial sample. The initial sample was then restricted to patients with clinically documented osteoarthritis in the year before the index procedure and stratified into Medicare-eligible patients (n = 31,214) and patients younger than 65 years (n = 39,300). We described the characteristics of the VHA validation sample and the previously reported development samples for reference. The VHA sample was 97% men (30,277 of 31,214 patients) and had a different comorbidity profile than the original development sample (Table 1).

Table 1.

Prevalence of conditions used as calculator inputs in the VHA cross-validation sample

Variables, Outcome Measures, Data Sources

Data for risk calculator inputs were determined by the presence of relevant clinically recorded diagnoses in the VA’s Corporate Data Warehouse (CDW) in the 6 months prior to the index procedure or by using relevant VASQIP data and definitions (Table 1). Death within 90 days, the primary outcome, was determined from the VA Vital Status File using a method that has 98.3% sensitivity and 97.6% exact agreement with dates from the National Death Index [12].

Statistical Analysis

Discrimination and Calibration

We calculated C-statistics using the calculator’s outputted risk probabilities and 90-day mortality data from VASQIP. Calibration was assessed visually by examining the relationship between predicted and observed mortality across equally sized deciles of risk.

Sensitivity and Secondary Analyses

We did not have data on one of the calculator inputs: state buy-in status, which is described on the website (http://riskcalc.aaos.org/input.html) as a “socioeconomic status proxy; state buy-in indicates receipt of state subsidies for Medicare insurance premium.” This refers to patients who have dual eligibility for Medicare and Medicaid. For the primary analyses, because many VA patients have lower socioeconomic status, we assigned all patients in the sample to “state buy-in = yes.” To check the impact of this assumption, we changed the response to “no” in the sensitivity analyses. The AJRR risk calculator is expressly intended to estimate risk for Medicare-eligible patients older than 64 years. However, anticipating that some users might want to generate estimates for younger patients, we conducted a secondary analysis using data from our younger cohort and assigned them to the youngest age group allowed by the calculator (65–69 years), then repeated the analyses described above. We conducted all statistical analyses using R version 3.1.3 (R Core Team, Vienna, Austria) [11].

Results

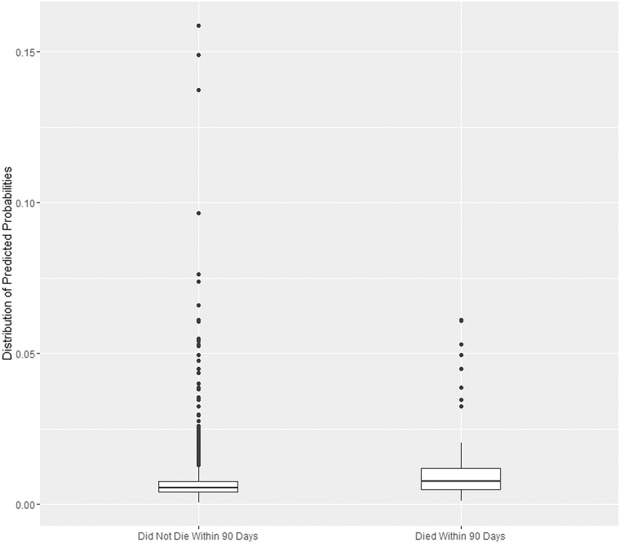

The AJRR risk calculator had poor discrimination in terms of identifying those patients at risk of death within 90 days of surgery. The C-statistic for 90-day mortality in the validation sample was 0.62 (95% CI, 0.60–0.64), meaning that a person who died had only a 62% chance of having a higher estimated risk than a person who did not die. The 90-day mortality rate in the VA cohort was 0.0072 (ie, 0.72%, n = 224), similar to the mean predicted probability for the sample of 0.0078 (range, 0.0007–0.1587). Ideally, prediction tools generate distributions of predicted probabilities that do not overlap, such that those who experienced the outcome have higher predicted probabilities than those who did not. The mean predicted probability for the group of 224 patients who died within 90 days was higher than the mean predicted probability for the group of 30,990 patients who did not die (0.0116 versus 0.0077; p < 0.001), but the two distributions overlapped substantially (Fig. 1).

Fig. 1.

The mean of the predicted probability from the calculator is higher for patients who died, but the distributions overlap substantially. The horizontal centerline is the median; the “hinges” (upper and lower limits of the box) represent the first and third quartiles; and each whisker extends from the hinge to the most extreme value no further than 1.5 times the interquartile range from the hinge. Values beyond the whiskers are represented by dots.

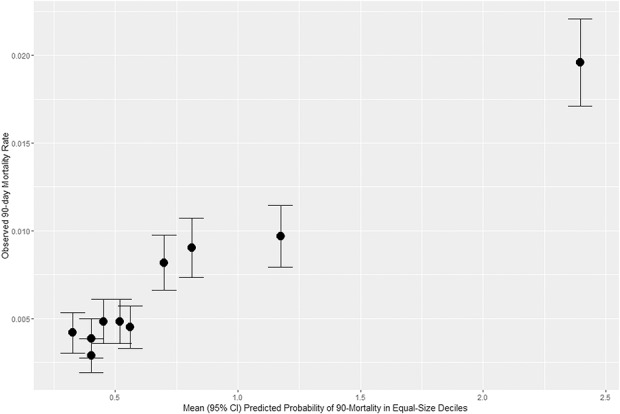

Regarding calibration, mortality rates generally increased with predicted probabilities (Fig. 2). There was a stair-step quality rather than a monotonic increase with increasing predicted probability.

Fig. 2.

Calibration plot of observed mortality rate across deciles of predicted probability of 90-day mortality; CI = confidence interval.

For the sensitivity analyses regarding “state buy-in status,” which did not have an exact counterpart in the VA data, changing the assumptions of our primary analyses did not affect the results. In the secondary analysis, for the sample of patients younger than 65 whom we assigned to the youngest age group available for the calculator (65–69 years), which is what a clinician might do when using the calculator for a younger patient, the C-statistic for 90-day mortality in the VHA validation sample was 0.46 (95% CI, 0.43–0.49), which is worse than random chance.

Discussion

Risk estimation is becoming increasingly important as physician performance and quality of care become publicly reportable. Other than our recently published models for use within the VA [8], to our knowledge, there are currently no other published, validated, and accurate predictive models of short-term mortality or other complications specifically for THA or TKA. For a predictive risk tool such as the AJRR risk calculator to be useful for informed consent, patient selection, and risk adjustment, it must be accurate upon validation [5]. Using data from a large sample of mostly male, Medicare-eligible VA patients, who were included in our ongoing study to develop and validate predictive models for VA TJA patients, we sought to externally validate the AJRR risk calculator. However, we found poor discrimination and calibration. Therefore, at least for the subsample of Medicare-eligible patients represented in VA data, the AJRR risk calculator cannot be recommended as a tool to estimate risk of postoperative mortality or for counseling patients before TJA. The tool’s performance in the wider Medicare patient population is unknown.

This study has several limitations. We did not have data on 2-year PJI in the VA sample and could not evaluate the risk calculator’s performance for that outcome. Also, we used a combination of VA medical record diagnoses and VASQIP variables to operationalize calculator inputs, which depend on the accuracy of clinical coding and abstraction, and which may have differed from the definitions used by the developers. However, our method of operationalizing variables was at least internally consistent and likely more reliable than most real-world use of the calculator. Furthermore, the quality of VA mortality data (vital status files), which pulls from many sources, including CMS data, has excellent agreement with the National Death Index [12]. Also, although we used a subsample of Medicare eligible patients, many of whom are dual users of VA and Medicare, the sample was not representative of all Medicare patients. Nevertheless, no surgeon has patients who are representative of all Medicare patients. Ideally, applying the calculator to a subset of the target sample should not affect its accuracy.

The poor performance of the AJRR risk calculator may have resulted from several factors. First, mortality and complications after TJA are rare, and predicting rare events is very difficult. It is important to note that predictive models of surgical outcomes depend on data from people who underwent surgery. They do not include data from people who did not undergo the procedures. Therefore, the AJRR calculator and other surgical risk models try to predict risk for patients who have been otherwise cleared for surgery. In other words, they are trying to screen patients for risk who may have already been screened for risk. Although this makes it harder to produce accurate models, the intent of surgical risk calculators is to give surgeons and patients information that they do not already have. Thus, many surgical risk models of complications and mortality have poor-to-modest discrimination statistics [10]. One single-site validation of the American College of Surgeons National Surgical Quality Improvement Program (ACS-NSQIP) universal risk calculator in a small sample of THA and TKA patients found a C-statistic of 0.67 for 90-day mortality, indicating poor discrimination [6]. Another single-site study found the ACS-NSQIP model to have fair discrimination for 90-day PJI (C-statistic = 0.71) [13]. Models that we recently developed for use in VA TJA patients had fair discrimination for cardiac complications and 30-day mortality (0.75 and 0.73, respectively), but very poor C-statistics for deep vein thrombosis (0.59) and return to the operating room (0.60).

Second, the tool was developed with a more representative sample of Medicare patients, which differed in several ways from the validation sample, especially because the VA sample was predominantly men (about 97%). In addition to sex distribution, the patient cohort in the VA sample had a different distribution of comorbidities than the Medicare sample used to develop the tool (Table 1). It is important to understand that all the patients in our VA sample were Medicare-eligible, meaning that they should be appropriate for the AJRR risk calculator. This external validation admittedly focused on the risk calculator’s statistical performance in a subset of the overall Medicare-eligible population. However, most surgeons and systems will treat only a subset of Medicare patients, highlighting the importance of the calculator being accurate for the entire population, or as we have done, identifying subpopulations for which it is substandard. It could be that the tool is more accurate for other subgroups of Medicare patients, but that should not be assumed until rigorously evaluated.

Third, to capture the potential predictive power of interaction effects, the AJRR risk calculator was implemented with a lookup table, not a statistical model. Patients with inputs that have matching counterparts in the lookup table are assigned the observed mortality rate in that group. Patients for whom an exact match is lacking are assigned the observed mortality rate of another group. The limitations of this method are highlighted when we consider an elderly patient with cardiac arrhythmia, dementia, drug abuse, HIV, lymphoma, and obesity, who, not surprisingly, has no match in the lookup table. As a result, this patient is assigned the observed risk of patients with the same demographic with no comorbidities (and a 2.51% risk of 90-day mortality). This method assigns no added risk for comorbidities in patients without an exact match in the lookup tables. This appears to be a weakness. By contrast, a statistical model with coefficients for each of these inputs would have added risk for this patient’s comorbidities. Furthermore, the Medicare data used in the tool development only includes in-hospital mortality. There may have been an under-ascertainment of mortality in the development sample.
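The contrast drawn above between a lookup table and a coefficient-based model can be illustrated with a small logistic sketch. The intercept, the coefficients, and the function name `logistic_risk` are invented for illustration and are not estimates from the AJRR development data or from this study; the point is only that, with one coefficient per input, every recorded comorbidity shifts the estimate.

```python
import math

# Hypothetical log-odds coefficients; all values are invented for illustration.
INTERCEPT = -5.5
COEFFICIENTS = {
    "cardiac arrhythmia": 0.4,
    "dementia": 0.9,
    "drug abuse": 0.5,
    "HIV": 0.3,
    "lymphoma": 0.7,
    "obesity": 0.2,
}


def logistic_risk(conditions, intercept=INTERCEPT, coefficients=COEFFICIENTS):
    """Predicted probability from an additive logistic model: each condition
    present adds its coefficient to the log-odds. Unknown conditions raise
    KeyError rather than being silently ignored."""
    log_odds = intercept + sum(coefficients[c] for c in set(conditions))
    return 1.0 / (1.0 + math.exp(-log_odds))


baseline = logistic_risk([])
all_six = logistic_risk(COEFFICIENTS.keys())
print(f"no comorbidities: {baseline:.4f}")
print(f"all six comorbidities: {all_six:.4f}")
```

Unlike a table that falls back to a stored group, this form produces an estimate for any combination of inputs, at the cost of assuming that the effects add on the log-odds scale unless interaction terms are specified.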

The AJRR risk calculator had poor predictive value for estimating 90-day mortality risk, at least for Medicare-eligible VA patients. Its accuracy for predicting 2-year PJI and its applicability in other subgroups of Medicare patients are unknown and should not be assumed. There remains a need for accurate models for mortality, major complications, and long-term outcomes after TJA. The recent Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) statement and the Consensus Statement on Electronic Health Predictive Analytics describe the methodological and reporting standards for the development of clinical risk calculators [1, 5]. Healthcare providers and patients should consider these standards and limitations when using risk calculators to predict rare events after surgical procedures such as TJA. Researchers should continue efforts to develop and improve clinical prediction models, perhaps by including nonstandard or patient-collected inputs, and by predicting longer-term, patient-centered outcomes such as improvements in pain, functioning, and satisfaction.

Footnotes

This work was funded by grants from the Veterans Administration Health Services Research and Development Service (I01-HAX002314-01A1 [AHSH]; RCS14-232 [AHSH]). One author (EL) is an employee of Exponent Inc (Menlo Park, CA, USA). Each author certifies that neither he or she, nor any member of his or her immediate family, have funding or commercial associations (consultancies, stock ownership, equity interest, patent/licensing arrangements, etc) that might pose a conflict of interest in connection with the submitted article.

All ICMJE Conflict of Interest Forms for authors and Clinical Orthopaedics and Related Research® editors and board members are on file with the publication and can be viewed on request.

Clinical Orthopaedics and Related Research® neither advocates nor endorses the use of any treatment, drug, or device. Readers are encouraged to always seek additional information, including FDA approval status, of any drug or device before clinical use.

Each author certifies that his or her institution approved the human protocol for this investigation and that all investigations were conducted in conformity with ethical principles of research.

This work was performed at the VA Palo Alto Healthcare System, Center for Innovation to Implementation, Menlo Park, CA, USA.

References

- 1. Amarasingham R, Audet AM, Bates DW, Glenn Cohen I, Entwistle M, Escobar GJ, Liu V, Etheredge L, Lo B, Ohno-Machado L, Ram S, Saria S, Schilling LM, Shahi A, Stewart WF, Steyerberg EW, Xie B. Consensus statement on electronic health predictive analytics: a guiding framework to address challenges. EGEMS (Wash DC). 2016;4:1163.

- 2. Bozic KJ, Lau E, Kurtz S, Ong K, Berry DJ. Patient-related risk factors for postoperative mortality and periprosthetic joint infection in Medicare patients undergoing TKA. Clin Orthop Relat Res. 2012;470:130–137.

- 3. Bozic KJ, Lau E, Kurtz S, Ong K, Rubash H, Vail TP, Berry DJ. Patient-related risk factors for periprosthetic joint infection and postoperative mortality following total hip arthroplasty in Medicare patients. J Bone Joint Surg Am. 2012;94:794–800.

- 4. Bozic KJ, Ong K, Lau E, Berry DJ, Vail TP, Kurtz SM, Rubash HE. Estimating risk in Medicare patients with THA: an electronic risk calculator for periprosthetic joint infection and mortality. Clin Orthop Relat Res. 2013;471:574–583.

- 5. Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD). Ann Intern Med. 2015;162:735–736.

- 6. Edelstein AI, Kwasny MJ, Suleiman LI, Khakhkhar RH, Moore MA, Beal MD, Manning DW. Can the American College of Surgeons risk calculator predict 30-day complications after knee and hip arthroplasty? J Arthroplasty. 2015;30:5–10.

- 7. Fischer JE, Bachmann LM, Jaeschke R. A readers' guide to the interpretation of diagnostic test properties: clinical example of sepsis. Intensive Care Med. 2003;29:1043–1051.

- 8. Harris A, Kuo A, Bowe T, Gupta S, Nordin D, Giori NJ. Prediction models for 30-day mortality and complications after total knee and hip arthroplasties for Veteran Health Administration patients with osteoarthritis. J Arthroplasty. 2018;33:1539–1545.

- 9. Hosmer DW, Lemeshow S. Applied Logistic Regression. New York: John Wiley & Sons, Inc.; 2000.

- 10. Manning DW, Edelstein AI, Alvi HM. Risk prediction tools for hip and knee arthroplasty. J Am Acad Orthop Surg. 2016;24:19–27.

- 11. R Development Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing. Vienna, Austria; 2015. URL http://www.R-project.org/. Accessed May 18, 2018.

- 12. Sohn MW, Arnold N, Maynard C, Hynes DM. Accuracy and completeness of mortality data in the Department of Veterans Affairs. Popul Health Metr. 2006;4:2.

- 13. Wingert NC, Gotoff J, Parrilla E, Gotoff R, Hou L, Ghanem E. The ACS NSQIP Risk calculator is a fair predictor of acute periprosthetic joint infection. Clin Orthop Relat Res. 2016;474:1643–1648.