Abstract

Primates have evolved to rapidly detect and respond to danger in their environment. However, the mechanisms involved in attending to threatening stimuli are not fully understood. The dot-probe task is one of the most widely used experimental paradigms for investigating these mechanisms in humans, but to date few studies have used it with non-human primates. The aim of this study was to investigate whether the dot-probe task can measure attentional biases towards threatening faces in chimpanzees. Eight adult chimpanzees participated in a series of touchscreen dot-probe tasks. We predicted faster response times towards threatening chimpanzee faces than towards neutral faces, and faster response times towards faces of high threat intensity (scream) than of low threat intensity (bared teeth). Contrary to our predictions, response times did not differ between threatening and neutral faces. In addition, we found no difference in response times between faces of high and low threat intensity. In conclusion, we found no evidence that the touchscreen dot-probe task can measure attentional biases specifically towards threatening faces in our chimpanzees. Methodological limitations of using the task to measure emotional attention in human and non-human primates, including stimulus threat intensity, emotional state, stimulus presentation duration and manual responding, are discussed.

Introduction

Faces are among the most important and salient social stimuli for primates. They convey information about identity, age, sex, attention and emotion [1]. Humans display a wide range of facial expressions to communicate their emotions, including anger, fear, disgust, sadness, surprise and happiness [2, 3]. Similarities between human and animal facial expressions have long been thought to reflect similarities in basic emotions [4, 5]. The animal fear system has evolved to rapidly detect and respond to danger in the environment and is particularly sensitive to threatening stimuli such as snakes and angry faces [6, 7]. Thus, information from threatening stimuli is given attentional priority over other information [8]. This attentional bias refers to “differential attentional allocation towards threatening stimuli relative to neutral stimuli” [9] (p. 204).

To investigate the mechanisms involved in attending to threatening stimuli in humans, several experimental paradigms have been developed. These include the emotional Stroop, visual search, attentional probe, spatial cueing and rapid serial visual presentation tasks [9, 10]. One of the most widely used spatial cueing tasks is the visual dot-probe task, originally developed by MacLeod et al. [11]. Two stimuli (words or images) are presented simultaneously on a screen for a short duration (traditionally 500 ms). Typically, one of the stimuli is threatening (e.g., an angry face) and the other is neutral (e.g., a non-expressive face). The stimuli then disappear and a dot (probe) appears randomly in the spatial location of either the threatening stimulus (congruent trial) or the neutral stimulus (incongruent trial). Response times to detect the dot are recorded via key presses. The task assumes that if attention is already directed to the spatial location of one stimulus, a dot appearing in that same location will be detected faster [10]. Faster response times on congruent trials therefore indicate an attentional bias towards threatening faces (vigilance), whereas faster response times on incongruent trials indicate an attentional bias away from threatening faces (avoidance) [12].
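The congruent/incongruent logic is often summarised as a single bias score: mean incongruent response time minus mean congruent response time. The Python sketch below is illustrative only; the function names are hypothetical, response times are assumed to be in milliseconds, and the sign of a score on its own says nothing about whether the difference is statistically reliable.

```python
from statistics import mean


def attentional_bias_score(congruent_rts, incongruent_rts):
    """Mean incongruent RT minus mean congruent RT, in ms.

    Positive: faster when the dot replaces the threatening stimulus (vigilance).
    Negative: faster when the dot replaces the neutral stimulus (avoidance).
    """
    if not congruent_rts or not incongruent_rts:
        raise ValueError("need at least one congruent and one incongruent trial")
    return mean(incongruent_rts) - mean(congruent_rts)


def interpret(score_ms):
    """Label the direction of a bias score (direction only, not significance)."""
    if score_ms > 0:
        return "vigilance"
    if score_ms < 0:
        return "avoidance"
    return "no bias"


if __name__ == "__main__":
    score = attentional_bias_score([400, 410, 420], [430, 440, 450])
    print(score, interpret(score))
```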

The dot-probe task has been used extensively to investigate the relationship between emotion and attention in humans. Vigilance towards threatening faces is more consistently found in anxious than non-anxious people. However, whilst in some studies anxious participants show greater vigilance towards threatening faces compared with non-anxious participants [13–18], in other studies they show avoidance [19, 20] or no attentional bias [21–23]. Several methodological differences likely account for these inconsistencies. Two of the most important moderating variables for measuring vigilance towards threatening faces are the duration between the start of the stimulus and the start of the dot presentation (stimulus onset asynchrony; SOA) and stimulus threat intensity [9, 10, 24].

Several human studies have found vigilance towards threatening faces at short SOAs. Shorter SOAs are thought to facilitate threat detection by the amygdala and involve more automatic processing, whereas longer SOAs facilitate strategic processing by the prefrontal cortex and involve greater attentional control [9, 25]. Stevens et al. [18] found vigilance towards threatening faces at 175 ms but not 600 ms, Cooper and Langton [26] at 100 ms but not 500 ms, and Holmes et al. [27] at 30 ms or 100 ms but not 500 ms or 1,000 ms. Vigilance towards threatening faces has also been found at the longer SOA of 500 ms but not at 1,250 ms [15, 28]. Overall, SOAs of less than 500 ms appear more suitable for measuring vigilance towards threatening faces. In addition, shorter SOAs prevent attention from switching between the two stimuli in the dot-probe task [25]. Eye saccades are “rapid, ballistic eye movements that move the fovea (the region of highest visual acuity) towards the target stimulus” [29] (p. 381) and can occur within 200 ms. Therefore, the SOA should ideally be no longer than 150 ms, to prevent attention from switching during stimulus presentation [29].

Regarding stimulus threat intensity, faces expressing anger are often used as stimuli, as they are more salient, threatening and ecologically valid than words [30, 31]. An interesting study by Wilson and MacLeod [32] investigated attentional bias to faces of different threat intensity in the dot-probe task. Low-anxiety participants showed vigilance towards morphed faces expressing very high anger, but not moderate anger. High-anxiety participants showed vigilance towards both very high and moderate anger faces. Overall, vigilance towards threatening faces increased with threat intensity. In a simplified dot-probe task in humans, de Valk et al. [33] found faster response times to touch angry faces than fearful or neutral faces. Although both angry and fearful faces signal danger in the environment, angry faces signal a more direct threat to the observer [34]. Therefore, angry faces likely have a higher threat intensity than fearful faces.

Despite extensive use of the dot-probe task to investigate emotional attention in humans, few studies have been conducted with animals [35]. Recently, a handful of dot-probe studies in non-human primates have been conducted using touchscreens. In monkeys, Koda et al. [36] presented two Japanese macaques with conspecific infant and adult faces for 100 ms. Attention was captured by visual cues, but no bias was found towards infant faces. In six rhesus monkeys, King et al. [37] found vigilance towards threatening conspecific faces presented for 1,000 ms. Also in six rhesus monkeys, Parr et al. [38] found vigilance towards threatening conspecific faces (bared-teeth and open-mouth threat expressions) presented for 500 ms. In great apes, Tomonaga and Imura [39] conducted the first visuo-spatial cueing (dot-probe) experiment, with three chimpanzees. Neutral chimpanzee and human faces and random objects were presented for 200 ms. Attentional biases were observed towards chimpanzee and human faces versus objects, but not towards bananas versus objects, indicating a face-specific bias. More recently, Kret et al. [40] presented four bonobos with images of conspecifics, and of other animals as control stimuli, for 300 ms. The bonobo images, which included the whole body, depicted either emotional scenes (i.e. distress, groom, sex, yawn, play, food, pant hoot) or neutral scenes. Attention was biased towards emotional scenes, with the strongest biases towards affiliative and protective behaviours such as sex, yawning and grooming. However, when Kret et al. [41] presented eight chimpanzees with threatening whole-body images of conspecifics (fearful and display expressions) paired with neutral images for 33 ms and 300 ms, no vigilance towards the threatening stimuli was found.

Overall, evidence from non-human primate studies suggests the dot-probe task can successfully measure attentional biases to emotional stimuli in monkeys and great apes. However, many methodological inconsistencies exist in both the human and non-human primate literature, including but not limited to SOA duration, stimulus threat intensity, stimulus pair combination and response method. Nevertheless, the task offers a promising method for investigating attentional processes in emotional perception in non-human primates. Furthermore, the task is implicit and requires little training, making it ideal for use with non-human primates and other animal species [24].

The aim of the present study was to investigate whether the dot-probe task can measure attentional biases towards threatening faces in chimpanzees. We predicted faster response times to touch the dot replacing threatening faces than neutral faces or scrambled faces. In addition, we predicted faster response times towards faces of higher than lower threat intensity [33, 34].

Materials and methods

Ethics statement

This study was approved by the Animal Welfare and Care Committee of the Primate Research Institute, Kyoto University, and the Animal Research Committee of Kyoto University (2016–064, 2017–106), and followed the Guidelines for the Care and Use of Laboratory Primates of the Primate Research Institute, Kyoto University (Version 3, 2010). No food or water deprivation was used in the study.

Participants and housing

Eight adult chimpanzees (Pan troglodytes), six females and two males, participated in the study at the Primate Research Institute, Kyoto University, Japan (Table 1) [42]. The chimpanzees were members of a social group of 11 individuals living in an environmentally enriched facility consisting of two outdoor enclosures (250 m² and 280 m²), an open-air outdoor enclosure (700 m²) and indoor living rooms linked to testing rooms. The open-air outdoor enclosure was equipped with 15-m-high climbing frames and included trees [43, 44]. The chimpanzees had extensive experience participating in touchscreen cognitive tasks.

Table 1. Basic information about the eight chimpanzees.

| Name | GAIN ID numberᵃ | Sex | Age in years (at study start) |
|---|---|---|---|
| Ai | 0434 | Female | 41 |
| Ayumu | 0608 | Male | 16 |
| Chloe | 0441 | Female | 37 |
| Cleo | 0609 | Female | 17 |
| Gon | 0437 | Male | 51 |
| Pal | 0611 | Female | 16 |
| Pendensa | 0095 | Female | 39 |
| Popo | 0438 | Female | 34 |

ᵃ Identification number (ID) for each chimpanzee listed in the database of the Great Ape Information Network (GAIN): https://shigen.nig.ac.jp/gain/ [42].

Apparatus

Experiments were conducted in an experimental booth (1.80 × 2.15 × 1.75 m) inside a testing room. Each chimpanzee voluntarily walked to the booth through an overhead walkway connected to the living rooms. Two 17-inch touch-sensitive LCD monitors (1280 × 1024 pixels) encased in Plexiglas were used to present visual stimuli at a viewing distance of approximately 40 cm. Food rewards (8 mm apple cubes) were delivered via a universal feeder device. All experimental events were controlled by a PC, and the computer task was programmed using Microsoft Visual Basic 2010 (Express Edition).
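As a consistency check on the display geometry, the pixel sizes reported in the Stimuli section can be converted to millimetres and degrees of visual angle. The Python sketch below is an illustration, not the authors' calibration procedure; it assumes that the 17-inch figure is the diagonal of the active display area, that pixels are square, and that the 40 cm viewing distance is measured from the eyes to the screen. The function names are hypothetical.

```python
import math

# Assumed display specification, taken from the Apparatus description.
DIAGONAL_MM = 17 * 25.4          # 17-inch diagonal, assumed to be the active area
WIDTH_PX, HEIGHT_PX = 1280, 1024
VIEWING_DISTANCE_MM = 400        # "approximately 40 cm"


def pixel_pitch_mm(diagonal_mm=DIAGONAL_MM, w=WIDTH_PX, h=HEIGHT_PX):
    """Physical size of one pixel, assuming square pixels."""
    return diagonal_mm / math.hypot(w, h)


def visual_angle_deg(size_mm, distance_mm=VIEWING_DISTANCE_MM):
    """Full visual angle of an object centred on the line of sight."""
    return math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))


def eccentricity_deg(offset_mm, distance_mm=VIEWING_DISTANCE_MM):
    """Angle between straight ahead and a point offset_mm away on a flat screen."""
    return math.degrees(math.atan(offset_mm / distance_mm))


if __name__ == "__main__":
    pitch = pixel_pitch_mm()
    width_mm = 200 * pitch               # stimulus width (200 px)
    separation_mm = 432 * pitch          # centre-to-centre distance (432 px)
    inner_edge_mm = separation_mm / 2 - width_mm / 2
    print(f"pitch {pitch:.3f} mm; stimulus {width_mm:.1f} mm wide "
          f"({visual_angle_deg(width_mm):.1f} deg)")
    print(f"inner edge {eccentricity_deg(inner_edge_mm):.1f} deg from the midline")
```

Under these assumptions the pitch is about 0.263 mm, so 200 × 250 pixels corresponds to roughly 53 × 66 mm and 432 pixels to roughly 114 mm, matching the reported sizes. The horizontal offset of each stimulus's inner edge from the screen midline comes out at about 4.4°; this ignores the vertical offset of the fixation cue below the stimuli.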

Stimuli

Facial stimuli consisted of cropped photographs (200 × 250 pixels, 53 × 66 mm) of unfamiliar chimpanzee (Pan troglodytes), orangutan (Pongo pygmaeus and Pongo abelii) and olive baboon (Papio anubis) faces. Stimuli were obtained from photographs taken of chimpanzees at Kumamoto Sanctuary, Kyoto University, or from personal collections. All faces were presented in greyscale, and the average luminance of each face was scaled to the average luminance of all faces in each experiment. This was done to control for differences in colour hue, luminance and other low-level features that might inadvertently bias attention. Chimpanzee threatening faces were categorised into ‘scream’ (higher threat intensity) and ‘bared teeth’ (lower threat intensity) expressions. A chimpanzee scream expression was defined as “a raised upper lip with lip corners pulled back exposing the upper teeth, lower lip depressed also exposing the lower teeth, and mouth stretched wide open with lips parted”, which is thought to reflect “general agonism” [45] (p. 176). A chimpanzee bared-teeth expression was defined as “an open mouth with lips parted, a raised upper lip, and retracted lip corners functioning to expose the teeth”, which occurs “in response to aggression” [45] (p. 175) and likely reflects fear. The scream and bared-teeth facial expression stimulus set was reviewed by a certified Chimp Facial Action Coding System (ChimpFACS) coder (http://www.chimpfacs.com/), who found the expressions consistent with our categorisation. Colour stimuli consisted of two shades of red: ‘light red’ and ‘dark red’. Object stimuli consisted of colour images of two chairs against a nondescript background. Control stimuli consisted of scrambled images. Scrambled chair images were created by randomly moving each pixel of the original images to a new position (box scrambling). This removed content-related information while maintaining average brightness levels.
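The luminance equalisation and pixel-shuffling steps described above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' code: images are assumed to be float arrays on a 0 to 255 scale, "scaled to the average luminance" is read as multiplicative scaling of each image to the grand mean of one experiment's image set, and the function names are hypothetical.

```python
import numpy as np


def match_mean_luminance(images):
    """Scale each greyscale image so its mean equals the grand mean of all images.

    Assumes 2-D float arrays on a 0-255 scale. Clipping to 0-255 can shift the
    mean slightly if scaling pushes bright pixels out of range.
    """
    target = float(np.mean([img.mean() for img in images]))
    matched = []
    for img in images:
        current = img.mean()
        if current == 0:
            raise ValueError("cannot rescale an all-black image")
        matched.append(np.clip(img * (target / current), 0.0, 255.0))
    return matched


def shuffle_pixels(image, rng):
    """Move every pixel to a random new position.

    Works for greyscale (H, W) and colour (H, W, C) arrays: each pixel's channel
    values travel together, so the set of pixel values, and hence the mean
    brightness, is unchanged while spatial structure is destroyed.
    """
    h, w = image.shape[:2]
    pixels = image.reshape(h * w, -1).copy()  # one row per pixel
    rng.shuffle(pixels)                       # permute rows, i.e. pixel positions
    return pixels.reshape(image.shape)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    faces = [rng.random((250, 200)) * 200 + 20 for _ in range(3)]
    equalised = match_mean_luminance(faces)
    print([round(float(f.mean()), 2) for f in equalised])
```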
Scrambled face images were generated by phase randomisation (phase scrambling), based on the average power spectrum of the original images. This preserved the spatial frequency spectrum of the original images [46]. Stimulus pairs were presented 114 mm (432 pixels) apart, measured between their centre points. The inside edge of each stimulus was presented at 4° from central fixation, so that each stimulus was presented unilaterally to the left or right visual field [47, 48].
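The exact phase-scrambling procedure is not reported in detail. One common implementation, sketched below in NumPy under our own assumptions, keeps the Fourier amplitude spectrum of an image and adds the phase of a white-noise image's Fourier transform to the image's own phase; because that noise phase is conjugate-symmetric, the inverse transform stays real. The optional `amplitude` argument lets the mean amplitude spectrum of a stimulus set be used instead, which may be closer to the "average power spectrum" wording. All function names are hypothetical.

```python
import numpy as np


def amplitude_spectrum(image):
    """Magnitude of the 2-D Fourier transform of a greyscale image."""
    return np.abs(np.fft.fft2(image))


def average_amplitude(images):
    """Mean amplitude spectrum across equally sized images."""
    return np.mean([amplitude_spectrum(img) for img in images], axis=0)


def phase_scramble(image, rng, amplitude=None):
    """Return a real image with the given amplitude spectrum and randomised phase.

    amplitude defaults to the image's own spectrum, so its spatial-frequency
    content is preserved while its structure is destroyed.
    """
    spectrum = np.fft.fft2(image)
    if amplitude is None:
        amplitude = np.abs(spectrum)
    # Phase of a real noise image's FFT is conjugate-symmetric, keeping the output real.
    noise_phase = np.angle(np.fft.fft2(rng.random(image.shape)))
    noise_phase[0, 0] = 0.0  # zero phase at DC, so mean luminance is preserved
    scrambled = amplitude * np.exp(1j * (np.angle(spectrum) + noise_phase))
    return np.real(np.fft.ifft2(scrambled))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    face = rng.random((250, 200)) * 255  # stand-in for a greyscale face image
    scrambled = phase_scramble(face, rng)
    print(round(float(face.mean()), 3), round(float(scrambled.mean()), 3))
```

Unlike the pixel shuffling used for the chairs, phase scrambling leaves the distribution of spatial frequencies intact, which is why it is often preferred as a low-level control for faces.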

Procedure

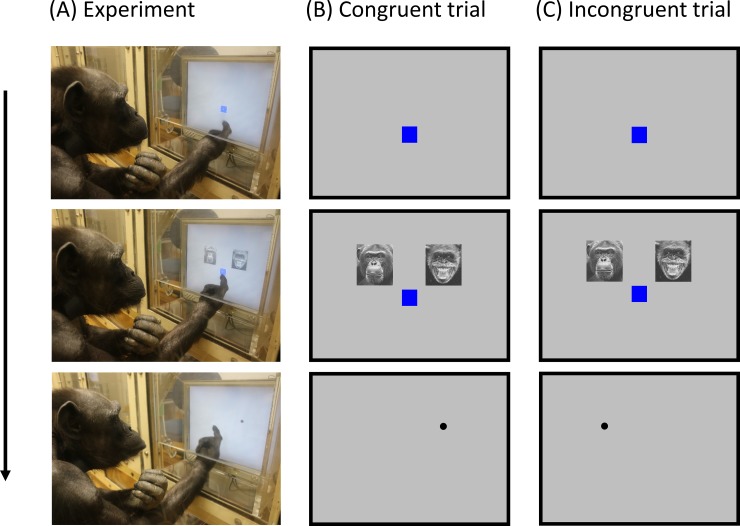

The chimpanzees participated in a series of touchscreen dot-probe tasks (Fig 1). All chimpanzees participated in all experiments, which took place on weekdays (one session per day). Experiments ran consecutively. To begin each trial, the chimpanzee touched a fixation cue (blue square) just below the centre of the screen. Two stimuli were then presented simultaneously, directly above the fixation cue. The stimuli and fixation cue then disappeared together, and a black dot (probe) immediately appeared in the spatial location of one of the stimuli. The stimulus onset asynchrony (SOA) was 150 ms [29]. When the dot was touched, a chime was played and a food reward was delivered. If the dot was not touched, it remained on the screen. Response times (ms) to touch the dot were recorded by a PC. The inter-trial interval was 2 s. For each stimulus pair, the dot replaced either the stimulus predicted to produce faster response times (congruent trials) or the stimulus predicted to produce slower response times (incongruent trials). In each session, half the trials were congruent and half were incongruent. Stimulus position (left or right) and congruency (congruent or incongruent dot presentation) were randomised across trials.
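The paper does not describe how the randomisation was implemented (the task itself was written in Visual Basic). The Python sketch below shows one way to build a session with exactly half congruent trials and random stimulus sides; the balancing scheme, the `Trial` record and `make_session` are hypothetical, and `None` stands for an empty location in "no stimulus" pairings.

```python
import random
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Trial:
    pair: Tuple[str, Optional[str]]  # (predicted-faster stimulus, comparison stimulus)
    left: Optional[str]              # stimulus shown on the left (None = empty)
    right: Optional[str]             # stimulus shown on the right (None = empty)
    congruent: bool                  # True: dot replaces the predicted-faster stimulus
    dot_side: str                    # "left" or "right"


def make_session(pairs, n_trials, rng):
    """One session: exactly half congruent trials, random sides, shuffled order.

    pairs is a list of (target, comparison) stimulus identifiers, reused cyclically.
    """
    if n_trials % 2:
        raise ValueError("n_trials must be even to split congruent/incongruent equally")
    congruency = [True] * (n_trials // 2) + [False] * (n_trials // 2)
    rng.shuffle(congruency)
    trials = []
    for i, congruent in enumerate(congruency):
        target, other = pairs[i % len(pairs)]
        target_left = rng.random() < 0.5
        left, right = (target, other) if target_left else (other, target)
        # The dot goes where the target is on congruent trials, opposite otherwise.
        dot_side = "left" if congruent == target_left else "right"
        trials.append(Trial((target, other), left, right, congruent, dot_side))
    rng.shuffle(trials)
    return trials


if __name__ == "__main__":
    pairs = [("dark red", "light red"), ("dark red", None), ("light red", None)]
    for trial in make_session(pairs, 36, random.Random(42))[:3]:
        print(trial)
```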

Fig 1. Schematic diagram of the dot-probe task.

(A) Experiment: Chimpanzee ‘Ai’ participating in the threatening face experiment. (B) Congruent trial: dot replaces threatening face. (C) Incongruent trial: dot replaces neutral face. Response times (ms) to touch the dot were recorded.

Preliminary experiments

Before conducting the main experiment to examine vigilance towards threatening faces, we conducted three preliminary experiments. These experiments aimed (a) to examine the sensitivity of the dot-probe task to attentional biases towards stimuli of increasing visual, social and emotional complexity and (b) to serve as control experiments, to determine whether any attentional biases measured towards threatening faces are threat-specific rather than also found towards non-social or non-threatening stimuli. In Experiment 1 (Colour), we presented two shades of red (two images: dark red and light red), either paired with each other or presented alone with no stimulus in the opposite location. Red was chosen because evidence in humans demonstrates that red captures attention and facilitates faster motor responses than non-red cues in the dot-probe task, an effect that is enhanced further for images with an emotional context [49]. We predicted faster response times towards dark red than light red, because dark red contrasted more strongly than light red with the grey background screen. In Experiment 2 (Object), we presented two chairs (two images), either paired with scrambled chairs or presented alone. Chairs were chosen because they are not social, threatening or novel for our chimpanzees. We predicted faster response times towards chairs than scrambled chairs, as scrambled chairs lack content-related information. In Experiment 3 (Primate faces), we presented chimpanzee faces (36 images) paired with orangutan (12 images), baboon (12 images) or scrambled chimpanzee (36 images) faces. Primate faces were chosen because they are social but not overtly threatening. In addition, we wanted to build on Tomonaga and Imura’s [39] dot-probe study in chimpanzees, which found face-specific attentional biases, by examining whether the task is sensitive to differences in perceptual similarity and familiarity between faces.
Chimpanzees are faster at discriminating perceptually different than perceptually similar primate faces [50] and show better performance for discriminating familiar than unfamiliar primate species faces (e.g. [51, 52]). Therefore, we predicted a significant difference in response times for perceptually different faces (chimpanzee paired with baboon) but not for perceptually similar faces (chimpanzee paired with orangutan), with faster response times towards highly familiar faces (chimpanzee) than unfamiliar faces (orangutan or baboon). For Experiment 1, 36 trials × 6 sessions were completed, for Experiment 2, 36 trials × 6 sessions were completed and for Experiment 3, 36 trials × 12 sessions were completed. Trial order was randomised within and across sessions.

Main experiment

In Experiment 4 (Threatening faces), chimpanzee bared teeth faces (12 images) and scream faces (12 images) were paired with either neutral faces (12 novel chimpanzee face images) or scrambled faces (12 scrambled bared teeth and 12 scrambled scream images). For Experiment 4, 48 trials × 12 sessions were completed. Trial order was randomised within and across sessions.

Statistical analysis

Response times shorter than 150 ms or longer than 5,000 ms were excluded from the analysis, as very fast responses may have reflected anticipatory responding and very slow responses distraction [53, 54]. In addition, for each chimpanzee, condition and session, response times more than two standard deviations above the mean were excluded, resulting in the elimination of a total of 513 trials (4.3% of trials). We used R version 3.4.3 [55] and the ‘lme4’ package to perform a Generalized Linear Mixed Model (GLMM) analysis of the relationship between response times and congruency for each stimulus pair comparison. Models were fitted with the ‘glmer’ function, from which Z values were obtained. Congruency and stimulus pair comparison, and their interaction, were entered as fixed effects. Because baseline response times may differ across chimpanzees and sessions, random intercepts for chimpanzee and session were included. Visual inspection of residual plots revealed that the data were skewed, so a gamma probability distribution with a log link function was selected. We chose the model with the smallest AIC value. SPSS (version 24) was also used to analyse response times with repeated measures analysis of variance (ANOVA). Effect sizes are reported as partial eta squared (ηp²).
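The two-step exclusion rule can be sketched with the Python standard library as follows. This is a hypothetical illustration: trials are assumed to be dictionaries with `chimp`, `condition`, `session` and `rt` keys, and because the text does not say whether the per-cell mean and standard deviation were computed before or after the 150 to 5,000 ms filter, this sketch computes them afterwards.

```python
from collections import defaultdict
from statistics import mean, stdev


def exclude_outliers(trials, low=150, high=5000, n_sd=2.0):
    """Drop out-of-range RTs, then RTs more than n_sd SDs above their cell mean.

    A cell is one chimpanzee x condition x session combination. Cells with a
    single trial have no sample SD, so nothing is trimmed from them in step 2.
    """
    # Step 1: absolute limits (anticipatory or distracted responses).
    kept = [t for t in trials if low <= t["rt"] <= high]

    # Step 2: relative upper limit within each cell.
    cells = defaultdict(list)
    for t in kept:
        cells[(t["chimp"], t["condition"], t["session"])].append(t["rt"])
    cutoffs = {
        key: mean(rts) + n_sd * stdev(rts) if len(rts) > 1 else float("inf")
        for key, rts in cells.items()
    }
    return [t for t in kept if t["rt"] <= cutoffs[(t["chimp"], t["condition"], t["session"])]]


if __name__ == "__main__":
    rts = [400] * 9 + [1000, 100, 6000]
    data = [{"chimp": "Ai", "condition": "congruent", "session": 1, "rt": rt} for rt in rts]
    print(len(exclude_outliers(data)), "of", len(data), "trials kept")
```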

Results

Preliminary experiments

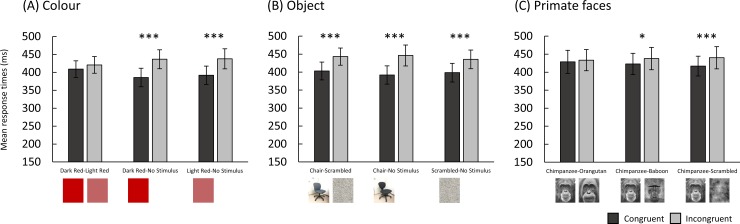

Fig 2A shows the mean response times in Experiment 1 (Colour). Individual chimpanzee data are shown in S1 Dataset. Response times to touch the dot were significantly faster for dark red (congruent, M = 386 ms) than no stimulus (incongruent, M = 437 ms), (β = 0.13, SE = 0.02, Z = 5.65, p < 0.001), and for light red (congruent, M = 392 ms) than no stimulus (incongruent, M = 438 ms), (β = 0.12, SE = 0.02, Z = 5.19, p < 0.001). No significant difference in response times was found for dark red (congruent, M = 409 ms) versus light red (incongruent, M = 421 ms), (β = 0.02, SE = 0.02, Z = 0.98, p = 0.329). No other pair comparisons were significant.

Fig 2B shows the mean response times in Experiment 2 (Object). Response times were significantly faster for chairs (congruent, M = 403 ms) than scrambled chairs (incongruent, M = 443 ms), (β = 0.09, SE = 0.02, Z = 5.54, p < 0.001), for chairs (congruent, M = 392 ms) than no stimulus (incongruent, M = 446 ms), (β = 0.12, SE = 0.02, Z = 7.06, p < 0.001) and for scrambled chairs (congruent, M = 398 ms) than no stimulus (incongruent, M = 435 ms), (β = 0.09, SE = 0.02, Z = 5.24, p < 0.001). No other pair comparisons were significant.

Fig 2C shows the mean response times in Experiment 3 (Primate faces). Response times were significantly faster for chimpanzee faces (congruent, M = 423 ms) than baboon faces (incongruent, M = 438 ms), (β = 0.04, SE = 0.01, Z = 2.49, p = 0.013) and for chimpanzee faces (congruent, M = 417 ms) than scrambled chimpanzee faces (incongruent, M = 440 ms), (β = 0.05, SE = 0.01, Z = 3.79, p < 0.001). No significant difference in response times was found for chimpanzee faces (congruent, M = 429 ms) versus orangutan faces (incongruent, M = 433 ms), (β = 0.01, SE = 0.01, Z = 1.03, p = 0.304). No other pair comparisons were significant.
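Because the GLMM uses a log link, each coefficient acts on the log scale: exp(β) is a multiplicative ratio, so β = 0.13 corresponds to incongruent responses roughly 14% slower than congruent ones. The text does not state which congruency level was the reference category, but the positive β values are consistent with β approximating log(incongruent / congruent). The Python sketch below compares that log ratio of the reported cell means with the reported β values; it is only a rough check, since model estimates are conditional on the random effects and the means are rounded.

```python
import math

# (comparison, congruent mean RT in ms, incongruent mean RT in ms, reported beta),
# copied from the preliminary-experiment results above.
comparisons = [
    ("dark red vs no stimulus", 386, 437, 0.13),
    ("light red vs no stimulus", 392, 438, 0.12),
    ("dark red vs light red", 409, 421, 0.02),
    ("chair vs scrambled chair", 403, 443, 0.09),
    ("chair vs no stimulus", 392, 446, 0.12),
    ("scrambled chair vs no stimulus", 398, 435, 0.09),
    ("chimpanzee vs baboon", 423, 438, 0.04),
    ("chimpanzee vs scrambled chimpanzee", 417, 440, 0.05),
    ("chimpanzee vs orangutan", 429, 433, 0.01),
]


def log_ratio(congruent_ms, incongruent_ms):
    """log(incongruent / congruent): the scale on which a log-link coefficient acts."""
    return math.log(incongruent_ms / congruent_ms)


if __name__ == "__main__":
    for label, con, inc, beta in comparisons:
        print(f"{label:36s} log ratio {log_ratio(con, inc):.3f}  reported beta {beta:.2f}")
```

All nine log ratios fall within 0.01 of the reported coefficients.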

Fig 2.

Mean response times (ms) for congruent and incongruent trials in (A) colour, (B) object, and (C) primate face experiments. Error bars indicate the standard error of the mean.

Main experiment

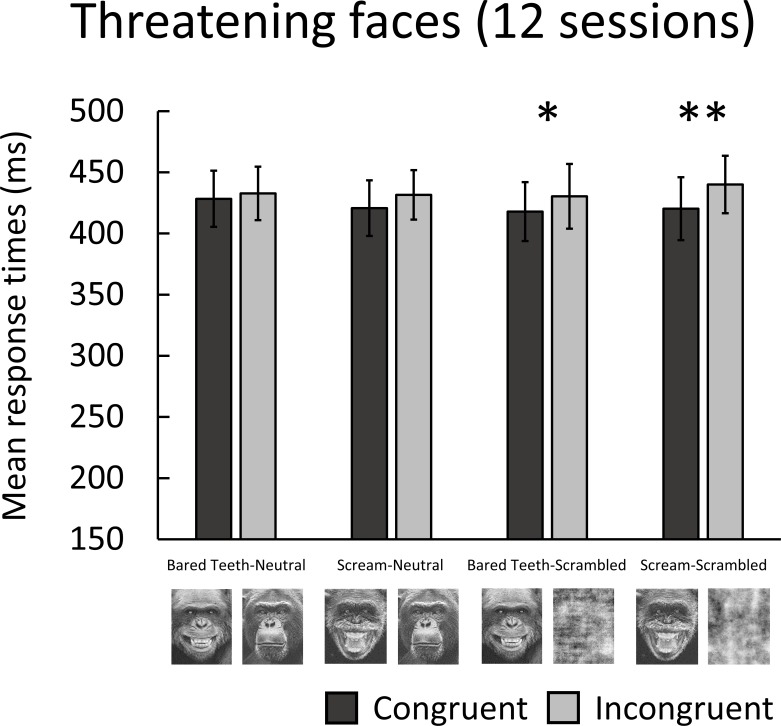

Fig 3 shows the mean response times in the threatening faces experiment. Response times were significantly faster for bared teeth faces (congruent, M = 418 ms) than scrambled bared teeth faces (incongruent, M = 430 ms), (β = 0.03, SE = 0.01, Z = 2.34, p = 0.019) and for scream faces (congruent, M = 420 ms) than scrambled scream faces (incongruent, M = 440 ms), (β = 0.05, SE = 0.01, Z = 3.28, p = 0.001). No significant difference in response times was found for bared teeth faces (congruent, M = 428 ms) versus neutral faces (incongruent, M = 433 ms), (β = 0.01, SE = 0.01, Z = 0.61, p = 0.544), or for scream faces (congruent, M = 421 ms) versus neutral faces (incongruent, M = 432 ms), (β = 0.03, SE = 0.01, Z = 1.83, p = 0.067). No other pair comparisons were significant. Finally, response times did not differ significantly between bared teeth faces (congruent, M = 418 ms) and scream faces (congruent, M = 420 ms) when each was paired with scrambled faces (β = 0.01, SE = 0.01, Z = 0.42, p = 0.677).

Fig 3. Mean response times (ms) for congruent and incongruent trials in the threatening face experiment.

Error bars indicate the standard error of the mean.

To investigate the possibility that vigilance towards threatening faces decreased across sessions, we analysed the relationship between session number and overall response times using Pearson’s correlation coefficient. There was no significant relationship between session and response times (M = 427 ms, SE = 23 ms), (r = 0.27, t (10) = 0.87, p = 0.403), indicating no systematic change in overall response times across sessions.
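The reported t value follows from r and the number of sessions through the standard test of a Pearson correlation against zero, t = r√(n − 2) / √(1 − r²), with n − 2 degrees of freedom. A minimal Python sketch (the function name is ours):

```python
import math


def t_from_r(r, n):
    """t statistic (df = n - 2) for testing whether a Pearson correlation differs from zero."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# 12 sessions, so df = 10; r is rounded to two decimals in the text.
print(round(t_from_r(0.27, 12), 2))  # 0.89
```

Plugging in the rounded r = 0.27 gives t ≈ 0.89; an unrounded r near 0.265 gives t ≈ 0.87, so the reported statistics are consistent with each other.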

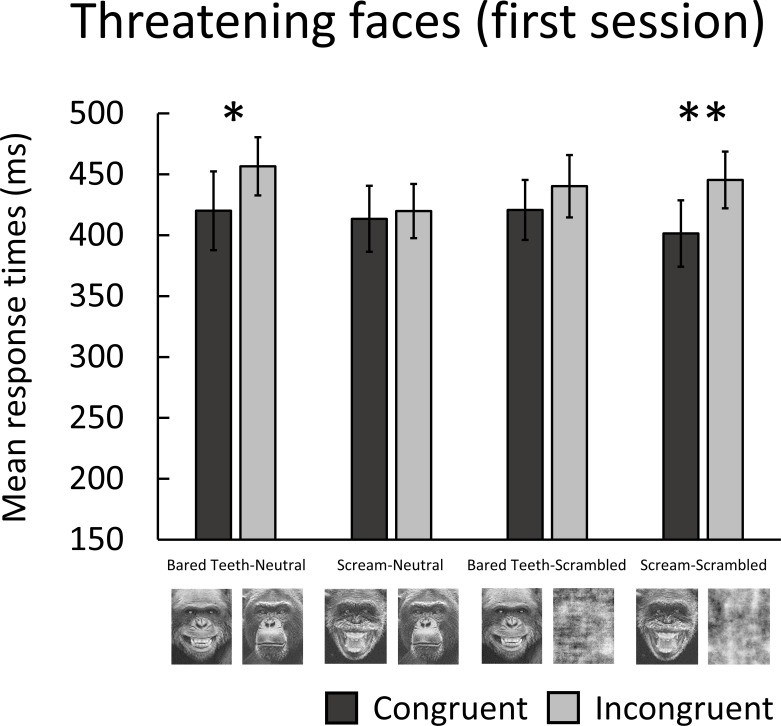

We also explored whether attentional bias effects were more evident at an early stage of the experiment by analysing response times in the first session using a GLMM, with congruency and stimulus pair comparison as fixed effects and chimpanzee as a random effect. Fig 4 shows the mean response times in the first session of the threatening faces experiment. Response times were significantly faster for bared teeth faces (congruent, M = 420 ms) than neutral faces (incongruent, M = 457 ms), (β = 0.09, SE = 0.04, Z = 2.52, p = 0.012), and for scream faces (congruent, M = 401 ms) than scrambled scream faces (incongruent, M = 445 ms), (β = 0.10, SE = 0.04, Z = 2.72, p = 0.006). No significant differences in response times were found for scream faces (congruent, M = 413 ms) versus neutral faces (incongruent, M = 420 ms), (β = 0.02, SE = 0.04, Z = 0.55, p = 0.584), or for bared teeth faces (congruent, M = 421 ms) versus scrambled bared teeth faces (incongruent, M = 440 ms), (β = 0.04, SE = 0.04, Z = 1.21, p = 0.228). No other pair comparisons were significant. Finally, response times did not differ significantly between bared teeth faces paired with neutral faces (congruent, M = 420 ms) and scream faces paired with scrambled scream faces (congruent, M = 401 ms), (β = -0.03, SE = 0.04, Z = -0.91, p = 0.365).

Fig 4. Mean response times (ms) for congruent and incongruent trials in the first session of the threatening face experiment.

Error bars indicate the standard error of the mean.

To examine emotional laterality effects, specifically a right-hemisphere advantage for processing emotional stimuli, which would be reflected in faster response times for threatening faces presented in the left visual field [14, 56–58], we conducted a 2 × 2 repeated measures ANOVA on response times, with threatening face position (left or right visual field; bared teeth and scream faces combined) and dot position (left or right) as independent variables. Response times did not differ between threatening faces presented in the left visual field (M = 427 ms, SE = 28 ms) and the right visual field (M = 428 ms, SE = 27 ms), (F (1,7) = 0.21, p = 0.664, ηp² = 0.028). In addition, response times did not differ significantly between dots in the left position (M = 457 ms, SE = 30 ms) and the right position (M = 397 ms, SE = 25 ms), (F (1,7) = 4.45, p = 0.073, ηp² = 0.389), and the interaction was not significant. Overall, no laterality effects were found.

Comparison of response times across experiments

We further analysed the possibility of increasing or decreasing trends in response times across the preliminary and main experiments. A 2 × 3 repeated measures ANOVA was conducted on response times, with congruency (congruent or incongruent trials) and experiment (Experiments 2, 3 and 4) as independent variables. To make equivalent comparisons across experiments, only stimuli paired with scrambled control images were analysed: Experiment 2 (chair–scrambled chair), Experiment 3 (chimpanzee face–scrambled chimpanzee face) and Experiment 4 (bared teeth face–scrambled bared teeth face and scream face–scrambled scream face, combined). In addition, only the first six sessions of each experiment were analysed. A significant main effect of congruency was found, with faster response times for congruent trials (M = 410 ms, SE = 26 ms) than incongruent trials (M = 439 ms, SE = 27 ms) across experiments, (F (1,7) = 13.72, p = 0.008, ηp² = 0.662). However, response times did not differ significantly between experiments, (F (2, 14) = 0.29, p = 0.756, ηp² = 0.039), and the interaction was not significant. Our chimpanzees therefore showed no systematic trends in response times across experiments.
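Partial eta squared is defined as ηp² = SS_effect / (SS_effect + SS_error). Since F = (SS_effect / df_effect) / (SS_error / df_error), it can be recovered from the F ratio and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The short Python sketch below (function name ours) recomputes the effect sizes from the laterality and cross-experiment ANOVAs above; small differences in the third decimal place reflect rounding of F.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# (F, df_effect, df_error, reported partial eta squared), copied from the text.
reported = [
    (0.21, 1, 7, 0.028),
    (4.45, 1, 7, 0.389),
    (13.72, 1, 7, 0.662),
    (0.29, 2, 14, 0.039),
]

if __name__ == "__main__":
    for f_value, df1, df2, eta in reported:
        recomputed = partial_eta_squared(f_value, df1, df2)
        print(f"F({df1}, {df2}) = {f_value}: recomputed {recomputed:.3f}, reported {eta}")
```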

Discussion

This study was the first to investigate attentional bias specifically towards threatening faces in chimpanzees using the dot-probe task. In the preliminary experiments, significant biases were found towards chairs and neutral chimpanzee faces when they were paired with scrambled images. In addition, a significant bias towards highly familiar chimpanzee faces paired with unfamiliar baboon faces was found, but not when chimpanzee faces were paired with unfamiliar orangutan faces. This result is likely explained by the closer perceptual similarity between chimpanzee and orangutan faces than between chimpanzee and baboon faces. Wilson and Tomonaga [50] found faster responses for discriminating perceptually different chimpanzee and human faces, or baboon and capuchin monkey faces, than perceptually similar gorilla and orangutan faces. The present study suggests that detection of perceptually different faces is possible even at very short presentation times. In the same chimpanzee group, Tomonaga and Imura [39] found attentional biases towards chimpanzee or human faces paired with random objects, but not towards bananas paired with objects, indicating a face-specific bias. Furthermore, Tomonaga and Imura [59] found that chimpanzees rapidly detected faces amongst non-face distractors in a visual search task. Together, these results suggest the dot-probe task is sensitive to a general bias towards faces, as well as to larger perceptual differences between faces.

In the main experiment, significant biases towards chimpanzee threatening faces paired with scrambled faces were found. However, there were no significant biases towards threatening faces paired with neutral faces, and response times towards threatening faces of higher threat intensity (scream) and lower threat intensity (bared teeth) did not differ. In rhesus monkeys, Parr et al. [38] found a significant bias towards threatening faces versus scrambled images at an SOA of 500 ms. However, these biases might not have been observed if the threatening faces had been paired with neutral faces, so it is unclear whether they are threat-specific. Interestingly, when we analysed the data from the first session, the chimpanzees showed an attentional bias towards bared teeth faces (but not scream faces) versus neutral faces. However, this bias disappeared when response times from all sessions were analysed. Similarly, King et al. [37] found a significant bias towards threatening versus neutral faces at an SOA of 1,000 ms in rhesus monkeys, which disappeared over time. It is possible that a threat-specific attentional bias occurred early in our experiment and that repeated exposure to the stimuli weakened this bias, although our shorter SOA of 150 ms may have limited exposure to some extent. Alternatively, this bias could be explained by individual variation in response times between chimpanzees in the first session. Indeed, biases appear to become more reliable when the dot-probe task is repeated daily over a number of weeks [24]. Overall, in combination with the biases found towards chairs and neutral chimpanzee faces paired with scrambled images in the preliminary experiments, these results suggest that faster response times towards threatening faces reflect a general bias towards faces rather than towards threatening faces specifically.

Although we found no convincing evidence for threat-specific attentional biases, it is premature to conclude that they do not exist. Several methodological issues may account for our results. One issue may be that the threat intensity of the stimuli was too weak to elicit vigilance. In humans, Wilson and MacLeod [32] found that morphing angry facial expressions to increase their threat intensity enhanced vigilance. This would be an interesting manipulation to explore in future chimpanzee studies. Another possibility is that the stimuli lacked threat intensity because they were presented in greyscale rather than colour. Whilst some evidence suggests chimpanzees can recognise conspecific emotions from greyscale images (e.g., [60]), other evidence suggests effects are only obtained in colour (e.g., [61]). Therefore, it would be useful to test threatening stimuli in colour in future dot-probe studies. In addition, although we were unable to verify the sex of the chimpanzees depicted in our stimuli, male chimpanzee faces may be more threatening than female faces, as male chimpanzees are more aggressive [62, 63]. Finally, testing chimpanzees with limited prior exposure to facial stimuli may help to maintain the emotional salience of the stimuli. Our chimpanzees have extensive experience participating in cognitive tasks using facial stimuli, and so may be habituated to faces in general.

Another issue is the influence of emotional state on the task. Emotional state has been shown to influence attention in non-human primates [64–66]. Rhesus macaques in a stressful state showed greater avoidance and slower responses to threatening conspecific faces than when in a neutral state [64, 65]. However, these studies presented stimuli for 10–60 seconds and were not restricted to measuring initial threat detection at very short presentation times, as in the present study. In human dot-probe studies, failure to observe vigilance towards threatening faces is often attributed to low trait anxiety or failure to induce a high state of anxiety experimentally [21–23]. Behaviourally, our chimpanzees appeared to be in a relaxed state during the tasks, so anxiety levels may have been too low to facilitate threat-specific biases.

Conversely, attentional training has been shown to influence emotional state in humans (for a review see [67]). In a modified version of the dot-probe task, the dot location is systematically manipulated to increase the proportion of dots appearing in the location of either the threatening or the neutral stimuli. Repeated training leads to an implicitly learned bias towards or away from threat, and subsequently to an increase or decrease in anxiety, respectively (e.g., [68, 69]). In chimpanzees, increasing the predictability of visual social precues (gaze) and non-social precues leads to stronger cueing effects [70, 71]. Therefore, it may be possible to induce a learned bias towards threatening faces, and subsequently an anxious state, in chimpanzees using a modified dot-probe task.
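The trial structure of such a modified (attention-training) dot-probe task can be sketched as a simple trial-list generator. This is a minimal sketch under stated assumptions, not the procedure used in [68, 69]: the names `Trial` and `make_training_block`, the left/right screen layout and the `p_probe_at_threat` parameter are hypothetical. A proportion above 0.5 would train attention towards threat, a proportion below 0.5 would train it away from threat, and 0.5 corresponds to the unbiased assessment version of the task.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Trial:
    threat_side: str  # side of the screen showing the threatening face (hypothetical layout)
    probe_side: str   # side on which the dot appears

    @property
    def probe_at_threat(self) -> bool:
        # True when the dot replaces the threatening face
        return self.threat_side == self.probe_side


def make_training_block(n_trials: int, p_probe_at_threat: float,
                        seed: Optional[int] = None) -> List[Trial]:
    """Hypothetical helper: a fixed proportion of dots replace the threatening face."""
    if n_trials <= 0:
        raise ValueError("n_trials must be positive")
    if not 0.0 <= p_probe_at_threat <= 1.0:
        raise ValueError("p_probe_at_threat must lie in [0, 1]")
    rng = random.Random(seed)
    # Exact number of dots at the threat location, then shuffle their order.
    n_at_threat = round(n_trials * p_probe_at_threat)
    flags = [True] * n_at_threat + [False] * (n_trials - n_at_threat)
    rng.shuffle(flags)
    trials = []
    for at_threat in flags:
        # Threat side drawn at random here; a real design would counterbalance it.
        threat_side = rng.choice(("left", "right"))
        neutral_side = "right" if threat_side == "left" else "left"
        trials.append(Trial(threat_side, threat_side if at_threat else neutral_side))
    return trials


# Usage: a 100-trial block training attention towards threat.
block = make_training_block(100, 0.8, seed=1)
print(sum(t.probe_at_threat for t in block))  # 80
```

Fixing the count of dots at the threat location, rather than drawing each trial independently with probability `p_probe_at_threat`, keeps the contingency identical across blocks, which may matter when training effects are compared between sessions.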

A third issue is that the stimulus presentation duration may not have been optimal for facilitating vigilance towards threatening faces. Although we presented stimuli at an SOA of 150 ms based on a review of the human literature (e.g., [18, 26, 27, 29]), this duration may not be optimal for chimpanzees. In humans, vigilance towards threatening faces has been found at SOAs as short as 17 ms (i.e., subliminal presentation) [72]. However, in chimpanzees, Kret et al. [41] failed to find vigilance towards threatening whole-body images presented at either 33 ms (also subliminal presentation) or 300 ms. Together, these results suggest that the optimal SOA for facilitating vigilance may fall within a narrow window, so it would be useful to test additional SOAs in future studies.
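When planning additional SOAs, it may help to note that a conventional display can only show a stimulus for a whole number of refresh cycles, so short SOAs are quantised by the refresh rate (17 ms and 33 ms correspond to roughly one and two frames at 60 Hz). The sketch below converts a requested SOA into the nearest achievable frame count. The function name `soa_to_frames` and the refresh rates of 60 and 100 Hz are illustrative assumptions, not the specifications of our apparatus.

```python
from typing import Tuple


def soa_to_frames(soa_ms: float, refresh_hz: float = 60.0) -> Tuple[int, float]:
    """Hypothetical helper: nearest whole number of frames for a requested SOA.

    Returns (number of frames, SOA actually achieved in ms).
    """
    if soa_ms <= 0 or refresh_hz <= 0:
        raise ValueError("soa_ms and refresh_hz must be positive")
    frame_ms = 1000.0 / refresh_hz  # duration of one refresh cycle
    n_frames = max(1, round(soa_ms / frame_ms))  # at least one frame is always shown
    return n_frames, n_frames * frame_ms


# Usage: SOAs mentioned in the text at two assumed refresh rates.
for soa in (17, 33, 150, 300):
    for hz in (60, 100):
        n, achieved = soa_to_frames(soa, hz)
        print(f"{soa} ms at {hz} Hz -> {n} frame(s) = {achieved:.1f} ms")
```

For example, a nominal 17 ms SOA is one frame on an assumed 60 Hz display but is rounded to two frames (20 ms) at 100 Hz, so the same nominal SOA can differ across set-ups.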

Finally, manual response dot-probe tasks may not be sensitive enough to detect biases towards threatening faces in chimpanzees. In non-human primates, touchscreen response times are used as an indirect measure of attention. This method assumes that gaze location corresponds directly to motor responses. However, more direct measures such as eye tracking and event-related potentials may reveal biases otherwise masked by hand movements, and are generally considered more reliable [73–75]. In one example of eye tracking in non-human primates, Pine et al. [76] found that monkeys looked significantly longer at threatening than at neutral human faces. Similarly, using video recordings, Bethell et al. [64] observed that rhesus macaques were faster to direct their initial gaze towards threatening than towards neutral conspecific faces. In future studies of chimpanzees, it would be useful to use eye tracking to examine the extent to which initial gaze location corresponds to manual responses in the dot-probe task.
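A combined eye-tracking and touchscreen analysis of this kind could be summarised per chimpanzee as sketched below. This is a minimal sketch under stated assumptions: the record fields (`first_fixation`, `probe_at`, `rt_ms`) stand for hypothetical eye-tracker and touchscreen outputs, and the trial values in the usage example are made-up illustrative numbers, not data from this study. The manual bias score uses the conventional dot-probe formulation (mean response time when the dot replaces the neutral face minus mean response time when it replaces the threatening face), so positive values indicate vigilance.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass(frozen=True)
class GazeTrial:
    first_fixation: str  # "threat" or "neutral": face fixated first (hypothetical eye-tracker field)
    probe_at: str        # "threat" or "neutral": face location replaced by the dot
    rt_ms: float         # touch response time to the dot, in milliseconds


def _mean_rt(trials: List[GazeTrial], label: str) -> float:
    rts = [t.rt_ms for t in trials]
    if not rts:
        raise ValueError(f"no trials in cell: {label}")
    return mean(rts)


def summarise(trials: List[GazeTrial]) -> Dict[str, float]:
    if not trials:
        raise ValueError("no trials")
    # Gaze measure: proportion of trials with the first fixation on the threatening face.
    gaze_bias = sum(t.first_fixation == "threat" for t in trials) / len(trials)
    # Manual measure: conventional dot-probe bias score (positive = vigilance).
    rt_threat = _mean_rt([t for t in trials if t.probe_at == "threat"], "probe at threat")
    rt_neutral = _mean_rt([t for t in trials if t.probe_at == "neutral"], "probe at neutral")
    # Correspondence: are touches faster when gaze was already on the dot's location?
    rt_on = _mean_rt([t for t in trials if t.first_fixation == t.probe_at], "gaze on probe")
    rt_off = _mean_rt([t for t in trials if t.first_fixation != t.probe_at], "gaze off probe")
    return {
        "first_fixation_threat": gaze_bias,
        "rt_bias_ms": rt_neutral - rt_threat,
        "rt_gaze_on_probe_ms": rt_on,
        "rt_gaze_off_probe_ms": rt_off,
    }


# Usage with made-up illustrative trials (not data from this study).
trials = [
    GazeTrial("threat", "threat", 400),
    GazeTrial("threat", "neutral", 480),
    GazeTrial("neutral", "neutral", 420),
    GazeTrial("threat", "threat", 380),
]
print(summarise(trials))
```

Reporting the gaze measure alongside the manual bias score would show whether a null manual effect coincides with a gaze bias, which is the dissociation the hand-movement account above predicts.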

In conclusion, this study found that the touchscreen dot-probe task can measure attentional biases in chimpanzees not only towards faces but also towards perceptually different faces. However, we found no evidence for attentional biases specific to threatening faces. More research investigating stimulus threat intensity, emotional state, stimulus presentation duration and direct measures of attention is needed to fully explore the potential of the dot-probe task for assessing emotional attention in non-human primates.

Supporting information

(XLSX)

Acknowledgments

We sincerely thank Prof. Tetsuro Matsuzawa and Drs. Ikuma Adachi, Yuko Hattori and Misato Hayashi of the Language and Intelligence Section, Primate Research Institute, Kyoto University, for their helpful comments and generous support of this research. We also thank Dr. Chloe Gonseth, Ms. Yuri Kawaguchi and Ms. Etsuko Ichino of the Language and Intelligence Section, Primate Research Institute, Kyoto University, for assistance with conducting the experiments, and staff at the Center for Human Evolution Modelling Research for their daily care of the chimpanzees. In addition, we thank Prof. Satoshi Hirata, Dr. Naruki Morimura, Dr. Yumi Yamanashi and Ms. Etsuko Nogami at Kumamoto Sanctuary, Kyoto University, and Dr. Renata Mendonça, Primate Research Institute, Kyoto University, for permission to use their photographs of chimpanzees and orangutans. Finally, we thank Dr. Sarah-Jane Vick, University of Stirling, for assistance in categorising chimpanzee facial expression images.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

This research was financially supported by the Japanese Ministry of Education, Culture, Sports, Science and Technology (MEXT: http://www.mext.go.jp/en/) (#150528) to DAW; the Japan Society for the Promotion of Science (JSPS)-MEXT KAKENHI (https://www.jsps.go.jp/english/e-grants/) (#15H05709, #16H06283, #18H04194, #20002001, #23220006 and #24000001 to MT, and #18H05811 to DAW); and JSPS-CCSN, the National Bio Resource Project-Great Ape Information Network (GAIN, https://shigen.nig.ac.jp/gain/index.jsp) and the JSPS Leading Graduate Program in Primatology and Wildlife Science (U04) to MT. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Adachi I, Tomonaga M. Face perception and processing in nonhuman primates. In: Call J, Burghardt GM, Pepperberg IM, Snowdon CT, Zentall T, editors. APA Handbook of Comparative Psychology: Perception, Learning, and Cognition. Washington: American Psychological Association; 2017. pp. 141–161.

- 2. Ekman P. Cross-cultural studies of facial expressions. In: Ekman P, editor. Darwin and facial expressions: A Century of Research in Review. New York: Academic Press; 1973. pp. 169–222.

- 3. Ekman P, Friesen WV. Constants across cultures in the face and emotion. Journal of personality and social psychology. 1971. February; 17(2): 124–129.

- 4. Chevalier-Skolnikoff S. Facial expression of emotion in nonhuman primates. In: Ekman P, editor. Darwin and facial expressions: A Century of Research in Review. New York: Academic Press; 1973. pp. 11–89.

- 5. Darwin C. The Expression of the Emotions in Man and Animals. 1st ed. London, UK: John Murray; 1872.

- 6. LeDoux JE. The emotional brain: The mysterious underpinnings of emotional life. New York: Simon and Schuster; 1996.

- 7. Morris JS, Öhman A, Dolan RJ. Conscious and unconscious emotional learning in the human amygdala. Nature. 1998. June; 393(6684): 467–470. 10.1038/30976

- 8. Koster E, Crombez G, Van Damme S, Verschuere B, De Houwer J. Signals for threat modulate attentional capture and holding: Fear-conditioning and extinction during the exogenous cueing task. Cognition & Emotion. 2005. August 1; 19(5): 771–780.

- 9. Cisler JM, Koster EH. Mechanisms of attentional biases towards threat in anxiety disorders: An integrative review. Clinical psychology review. 2010. March 1; 30(2): 203–216. 10.1016/j.cpr.2009.11.003

- 10. Yiend J. The effects of emotion on attention: A review of attentional processing of emotional information. Cognition and Emotion. 2010. January 1; 24(1): 3–47.

- 11. MacLeod C, Mathews A, Tata P. Attentional bias in emotional disorders. Journal of abnormal psychology. 1986. February; 95(1): 15–20.

- 12. Staugaard SR. Threatening faces and social anxiety: a literature review. Clinical Psychology Review. 2010. August 1; 30(6): 669–690. 10.1016/j.cpr.2010.05.001

- 13. Klumpp H, Amir N. Examination of vigilance and disengagement of threat in social anxiety with a probe detection task. Anxiety, Stress & Coping. 2009. May 1; 22(3): 283–296.

- 14. Mogg K, Bradley BP. Selective orienting of attention to masked threat faces in social anxiety. Behaviour research and therapy. 2002. December 1; 40(12): 1403–1414.

- 15. Mogg K, Philippot P, Bradley BP. Selective attention to angry faces in clinical social phobia. Journal of abnormal psychology. 2004. February; 113(1): 160–165. 10.1037/0021-843X.113.1.160

- 16. Pishyar R, Harris LM, Menzies RG. Attentional bias for words and faces in social anxiety. Anxiety, Stress & Coping. 2004. March 1; 17(1): 23–36.

- 17. Sposari JA, Rapee RM. Attentional bias toward facial stimuli under conditions of social threat in socially phobic and nonclinical participants. Cognitive Therapy and Research. 2007. February 1; 31(1): 23–37.

- 18. Stevens S, Rist F, Gerlach AL. Influence of alcohol on the processing of emotional facial expressions in individuals with social phobia. British Journal of Clinical Psychology. 2009. June 1; 48(2): 125–140.

- 19. Chen YP, Ehlers A, Clark DM, Mansell W. Patients with generalized social phobia direct their attention away from faces. Behaviour research and therapy. 2002. June 1; 40(6): 677–687.

- 20. Mansell W, Clark DM, Ehlers A, Chen YP. Social anxiety and attention away from emotional faces. Cognition & Emotion. 1999. October 1; 13(6): 673–690.

- 21. Bradley BP, Mogg K, Millar N, Bonham-Carter C, Fergusson E, Jenkins J, et al. Attentional biases for emotional faces. Cognition & Emotion. 1997. January 1; 11(1): 25–42.

- 22. Gotlib IH, Kasch KL, Traill S, Joormann J, Arnow BA, Johnson SL. Coherence and specificity of information-processing biases in depression and social phobia. Journal of abnormal psychology. 2004. August; 113(3): 386–398. 10.1037/0021-843X.113.3.386

- 23. Pineles SL, Mineka S. Attentional biases to internal and external sources of potential threat in social anxiety. Journal of Abnormal Psychology. 2005. May; 114(2): 314–318. 10.1037/0021-843X.114.2.314

- 24. van Rooijen R, Ploeger A, Kret ME. The dot-probe task to measure emotional attention: A suitable measure in comparative studies? Psychonomic bulletin & review. 2017. December 1; 24(6): 1686–1717.

- 25. Weierich MR, Treat TA, Hollingworth A. Theories and measurement of visual attentional processing in anxiety. Cognition and Emotion. 2008. September 1; 22(6): 985–1018.

- 26. Cooper RM, Langton SR. Attentional bias to angry faces using the dot-probe task? It depends when you look for it. Behaviour research and therapy. 2006. September 1; 44(9): 1321–1329. 10.1016/j.brat.2005.10.004

- 27. Holmes A, Green S, Vuilleumier P. The involvement of distinct visual channels in rapid attention towards fearful facial expressions. Cognition & Emotion. 2005. September 1; 19(6): 899–922.

- 28. Bradley BP, Mogg K, Falla SJ, Hamilton LR. Attentional bias for threatening facial expressions in anxiety: Manipulation of stimulus duration. Cognition & Emotion. 1998. November 1; 12(6): 737–753.

- 29. Bourne VJ. The divided visual field paradigm: Methodological considerations. Laterality. 2006. July 1; 11(4): 373–393. 10.1080/13576500600633982

- 30. Schmukle SC. Unreliability of the dot probe task. European Journal of Personality. 2005. December 1; 19(7): 595–605.

- 31. Staugaard SR. Reliability of two versions of the dot-probe task using photographic faces. Psychology Science Quarterly. 2009. July 1; 51(3): 339–350.

- 32. Wilson E, MacLeod C. Contrasting two accounts of anxiety-linked attentional bias: selective attention to varying levels of stimulus threat intensity. Journal of abnormal Psychology. 2003. May; 112(2): 212–218.

- 33. de Valk JM, Wijnen JG, Kret ME. Anger fosters action. Fast responses in a motor task involving approach movements toward angry faces and bodies. Frontiers in psychology. 2015. September 3; 6: 1240. 10.3389/fpsyg.2015.01240

- 34. Grillon C, Charney DR. In the face of fear: anxiety sensitizes defensive responses to fearful faces. Psychophysiology. 2011. December 1; 48(12): 1745–1752. 10.1111/j.1469-8986.2011.01268.x

- 35. Coleman K, Pierre PJ. Assessing anxiety in nonhuman primates. ILAR Journal. 2014. January 1; 55(2): 333–346. 10.1093/ilar/ilu019

- 36. Koda H, Sato A, Kato A. Is attentional prioritisation of infant faces unique in humans?: Comparative demonstrations by modified dot-probe task in monkeys. Behavioural processes. 2013. September 1; 98: 31–36. 10.1016/j.beproc.2013.04.013

- 37. King HM, Kurdziel LB, Meyer JS, Lacreuse A. Effects of testosterone on attention and memory for emotional stimuli in male rhesus monkeys. Psychoneuroendocrinology. 2012. March 1; 37(3): 396–409. 10.1016/j.psyneuen.2011.07.010

- 38. Parr LA, Modi M, Siebert E, Young LJ. Intranasal oxytocin selectively attenuates rhesus monkeys’ attention to negative facial expressions. Psychoneuroendocrinology. 2013. September 1; 38(9): 1748–1756. 10.1016/j.psyneuen.2013.02.011

- 39. Tomonaga M, Imura T. Faces capture the visuospatial attention of chimpanzees (Pan troglodytes): evidence from a cueing experiment. Frontiers in zoology. 2009. December; 6(1): 14.

- 40. Kret ME, Jaasma L, Bionda T, Wijnen JG. Bonobos (Pan paniscus) show an attentional bias toward conspecifics’ emotions. Proceedings of the National Academy of Sciences. 2016. April 5; 113(14): 3761–3766.

- 41. Kret ME, Muramatsu A, Matsuzawa T. Emotion processing across and within species. A comparison between humans and chimpanzees (Pan troglodytes). Journal of comparative psychology. Forthcoming 2018. 10.1037/com0000108

- 42. Watanuki K, Ochiai T, Hirata S, Morimura N, Tomonaga M, Idani G, et al. Review and long-term survey of the status of captive chimpanzees in Japan in 1926–2013. Primate Res. 2014; 30: 147–156.

- 43. Matsuzawa T, Tomonaga M, Tanaka M. Cognitive Development in Chimpanzees. Tokyo: Springer; 2006.

- 44. Yamanashi Y, Hayashi M. Assessing the effects of cognitive experiments on the welfare of captive chimpanzees (Pan troglodytes) by direct comparison of activity budget between wild and captive chimpanzees. American journal of primatology. 2011. December 1; 73(12): 1231–1238. 10.1002/ajp.20995

- 45. Parr LA, Waller BM, Vick SJ, Bard KA. Classifying chimpanzee facial expressions using muscle action. Emotion. 2007. February; 7(1): 172–181. 10.1037/1528-3542.7.1.172

- 46. Ales JM, Farzin F, Rossion B, Norcia AM. An objective method for measuring face detection thresholds using the sweep steady-state visual evoked response. Journal of vision. 2012. September 1; 12(10): 1–18. 10.1167/12.10.1

- 47. Bourne VJ, Hole GJ. Lateralized repetition priming for familiar faces: Evidence for asymmetric interhemispheric cooperation. The Quarterly Journal of Experimental Psychology. 2006. June 1; 59(6): 1117–1133. 10.1080/02724980543000150

- 48. Young AW. Methodological and theoretical bases of visual hemifield studies. In: Beaumont JG, editor. Divided visual field studies of cerebral organisation. London: Academic Press; 1982. pp. 11–27.

- 49. Kuniecki M, Pilarczyk J, Wichary S. The color red attracts attention in an emotional context. An ERP study. Frontiers in human neuroscience. 2015. April 29; 9: 212. 10.3389/fnhum.2015.00212

- 50. Wilson DA, Tomonaga M. Visual discrimination of primate species based on faces in chimpanzees. Primates. 2018. May; 59(3): 243–251. 10.1007/s10329-018-0649-8

- 51. Dahl CD, Rasch MJ, Tomonaga M, Adachi I. Developmental processes in face perception. Scientific reports. 2013. January 9; 3: 1044. 10.1038/srep01044

- 52. Tanaka M. Development of the visual preference of chimpanzees (Pan troglodytes) for photographs of primates: effect of social experience. Primates. 2007. October 1; 48(4): 303–309. 10.1007/s10329-007-0044-3

- 53. Koster EH, Crombez G, Verschuere B, Van Damme S, Wiersema JR. Components of attentional bias to threat in high trait anxiety: Facilitated engagement, impaired disengagement, and attentional avoidance. Behaviour research and therapy. 2006. December 1; 44(12): 1757–1771. 10.1016/j.brat.2005.12.011

- 54. Lacreuse A, Schatz K, Strazzullo S, King HM, Ready R. Attentional biases and memory for emotional stimuli in men and male rhesus monkeys. Animal cognition. 2013. November 1; 16(6): 861–871. 10.1007/s10071-013-0618-y

- 55. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria; 2015. Available: http://www.R-project.org/.

- 56. Fox E. Processing emotional facial expressions: The role of anxiety and awareness. Cognitive, Affective & Behavioral Neuroscience. 2002. March 1; 2(1): 52–63.

- 57. Mogg K, Bradley BP. Orienting of attention to threatening facial expressions presented under conditions of restricted awareness. Cognition & Emotion. 1999. October 1; 13(6): 713–740.

- 58. Wilson DA, Tomonaga M, Vick SJ. Eye preferences in capuchin monkeys (Sapajus apella). Primates. 2016. July 1; 57(3): 433–440. 10.1007/s10329-016-0537-z

- 59. Tomonaga M, Imura T. Efficient search for a face by chimpanzees (Pan troglodytes). Scientific reports. 2015. July 16; 5: 11437. 10.1038/srep11437

- 60. Parr LA. The discrimination of faces and their emotional content by chimpanzees (Pan troglodytes). Annals of the New York Academy of Sciences. 2003. December 1; 1000(1): 56–78.

- 61. Kret ME, Tomonaga M, Matsuzawa T. Chimpanzees and humans mimic pupil-size of conspecifics. PLoS One. 2014. August 20; 9(8): e104886. 10.1371/journal.pone.0104886

- 62. Wilson ML, Wrangham RW. Intergroup relations in chimpanzees. Annual Review of Anthropology. 2003. October; 32(1): 363–392.

- 63. Muller MN, Mitani JC. Conflict and cooperation in wild chimpanzees. Advances in the Study of Behavior. 2005. January 1; 35: 275–331.

- 64. Bethell EJ, Holmes A, MacLarnon A, Semple S. Evidence that emotion mediates social attention in rhesus macaques. PLoS One. 2012. August 30; 7(8): e44387. 10.1371/journal.pone.0044387

- 65. Bethell EJ, Holmes A, MacLarnon A, Semple S. Emotion evaluation and response slowing in a non-human primate: New directions for cognitive bias measures of animal emotion? Behavioral Sciences. 2016. January 11; 6(1): 2.

- 66. Marzouki Y, Gullstrand J, Goujon A, Fagot J. Baboons' response speed is biased by their moods. PloS one. 2014. July 25; 9(7): e102562. 10.1371/journal.pone.0102562

- 67. Bar-Haim Y. Research review: attention bias modification (ABM): a novel treatment for anxiety disorders. Journal of Child Psychology and Psychiatry. 2010. August 1; 51(8): 859–870. 10.1111/j.1469-7610.2010.02251.x

- 68. Eldar S, Ricon T, Bar-Haim Y. Plasticity in attention: Implications for stress response in children. Behaviour Research and Therapy. 2008. April 1; 46(4): 450–461. 10.1016/j.brat.2008.01.012

- 69. MacLeod C, Rutherford E, Campbell L, Ebsworthy G, Holker L. Selective attention and emotional vulnerability: assessing the causal basis of their association through the experimental manipulation of attentional bias. Journal of abnormal psychology. 2002. February; 111(1): 107–123.

- 70. Tomonaga M. Precuing the target location in visual searching by a chimpanzee (Pan troglodytes): Effects of precue validity. Japanese Psychological Research. 1997. September 1; 39(3): 200–211.

- 71. Tomonaga M. Is chimpanzee (Pan troglodytes) spatial attention reflexively triggered by gaze cue? Journal of Comparative Psychology. 2007. May; 121(2): 156–170. 10.1037/0735-7036.121.2.156

- 72. Monk CS, Telzer EH, Mogg K, Bradley BP, Mai X, Louro HM, et al. Amygdala and ventrolateral prefrontal cortex activation to masked angry faces in children and adolescents with generalized anxiety disorder. Archives of general psychiatry. 2008. May 1; 65(5): 568–576. 10.1001/archpsyc.65.5.568

- 73. Fashler SR, Katz J. Keeping an eye on pain: Investigating visual attention biases in individuals with chronic pain using eye-tracking methodology. Journal of pain research. 2016; 9: 551–561. 10.2147/JPR.S104268

- 74. Hirata S, Matsuda G, Ueno A, Fukushima H, Fuwa K, Sugama K, et al. Brain response to affective pictures in the chimpanzee. Scientific reports. 2013. February 26; 3: srep01342.

- 75. Torrence RD, Troup LJ. Event-related potentials of attentional bias toward faces in the dot-probe task: A systematic review. Psychophysiology. 2017. December 20. 10.1111/psyp.13051

- 76. Pine DS, Helfinstein SM, Bar-Haim Y, Nelson E, Fox NA. Challenges in developing novel treatments for childhood disorders: lessons from research on anxiety. Neuropsychopharmacology. 2009. January; 34(1): 213–228. 10.1038/npp.2008.113
