Abstract

Problem

Formal instruction in requesting consultations is inconsistent across medical education. To address this gap, the authors developed the Consultation Observed Simulated Clinical Experience (COSCE), a simulation-based curriculum for interns using Kessler et al’s 5Cs of Consultation model to teach and assess consultation communication skills.

Approach

In June 2016, 127 interns entering 12 University of Chicago Medicine residency programs participated in the COSCE pilot. The COSCE featured an online training module on the 5Cs and an in-person simulated consultation. Using specialty-specific patient cases, interns requested telephone consultations from faculty, who evaluated their performance using validated checklists. Interns were surveyed on their preparedness to request consultations before and after the module and after the simulation. Subspecialty fellows serving as consultants were surveyed regarding consultation quality before and after the COSCE.

Outcomes

After completing the online module, 84% of interns (103/122) were prepared to request consultations compared with 52% (63/122) at baseline (P < .01). After the COSCE, 96% (122/127) were prepared to request consultations (P < .01). Neither preparedness nor simulation performance differed by prior experience or training. Over 90% (115/127) indicated they would recommend the COSCE for future interns. More consultants described residents as prepared to request consultations after the COSCE (54%; 21/39) than before (27%; 11/41, P = .01).

Next Steps

The COSCE was well-received and effective for preparing entering interns with varying experience and training to request consultations. Future work will emphasize consultation communication specific to training environments and evaluate skills via direct observation of clinical performance.

Problem

Communication is recognized as a core competency across the continuum of medical education. One of the most common types of communication in medicine is requesting a consultation, which occurs across nearly all specialties and clinical settings. Communication failures are major contributors to medical error, and the complexity and ubiquity of consultation communication likely amplify this risk.1,2 Suboptimal consultation communication strategies, such as “curbside” consultations, carry a high risk of error yet remain common.3

Despite the universality of consultations and concern about lapses in communication, training in requesting a consultation varies widely.1,2,4 One framework, Kessler et al’s 5Cs of Consultation, has been successfully used in undergraduate medical education (UME) and graduate medical education (GME) settings.1,2,4 However, many trainees still receive little or no formal education regarding consultations. Prior work4 at our institution demonstrated that while nearly all third-year students reported calling consultations during clerkships, only 75% reported having been taught how to do so, almost exclusively by other trainees. Furthermore, few reported receiving feedback on their skills.

The transition between UME and GME offers a unique opportunity to train, assess, and give feedback to incoming interns. GME orientation is a well-described educational strategy to maximize the potential of this period, particularly through “boot camps” incorporating simulation and objective structured clinical examinations (OSCEs).5 Boot camps traditionally emphasize procedural skills, but they are also effective in teaching communication skills.6 In this report, we describe the development, implementation, and preliminary evaluation of the Consultation Observed Simulated Clinical Experience (COSCE), which pairs web-based training on the 5Cs model1 with an interactive, high-fidelity consultation simulation OSCE during GME orientation.

Approach

Setting and participants

The COSCE was piloted in June 2016 as part of the required Advanced Communication Skills (ACS) Boot Camp within GME orientation at the University of Chicago Medicine, an urban, academic medical center. Conducted at the simulation center, ACS Boot Camp included 3 OSCEs: (1) requesting a consultation (as part of the COSCE), (2) conducting a handoff,6 and (3) acquiring informed consent. All 127 interns entering 12 clinical residency programs participated. The University of Chicago Institutional Review Board granted educational exemption.

COSCE curriculum design

We designed the COSCE using Kessler et al’s 5Cs model.1,2 Developed in emergency medicine, the 5Cs provide a structured communication framework for requesting a consultation. Learners are prompted to provide introductory information (Contact); relay a concise clinical story (Communicate); highlight the reason and timeframe for the consultation (Core Question); foster an open and dynamic conversation (Collaborate); and politely ensure all parties understand the next steps (Close the Loop).1,2

Following Kern et al’s model of curriculum development,7 we conducted a targeted needs assessment. In March 2016 we sent an electronic survey, via REDCap,8 to medical subspecialty fellows serving as consultants at our institution, asking them to rate their satisfaction with consultations received from residents (using a 5-point Likert-type scale, from strongly disagree = 1 to strongly agree = 5). We also asked them to rate consultation quality by each of the 5Cs, identify common barriers to effective communication, and report how often they received requests for curbside consultations. We planned this survey both to serve as a needs assessment and to be repeated following COSCE implementation. The results of the needs assessment highlighted common problems in consultation communication (e.g., lacking a specific question for consultation), and we used this information in developing the COSCE curriculum.

We designed the COSCE to have 2 parts: an online training module and an in-person simulated consultation OSCE. The online module began with a 2.5-minute video illustrating poor consultation communication: An intern requests a cardiology consultation, but disorganized communication leads to a curbside consultation and delay in care.9 Next, a 12-minute didactic screencast introduced the 5Cs model, with video examples demonstrating each of the 5Cs using the same consultation scenario with improved communication.9 The module concluded with a 7-question multiple-choice quiz. Before viewing the videos, interns also completed a 3-item pre-survey within the module asking whether they had prior experience requesting consultations during medical school (yes/no), whether they had prior training in consultation communication (yes/no), and whether they felt prepared to request a consultation (using a 5-point Likert-type scale from 1 = “strongly disagree” to 5 = “strongly agree”).

For the simulated consultation OSCE, we developed 3 specialty-specific cases detailing a clinical problem requiring consultation (available as Supplemental Digital Appendix 1). These cases were presented in the format of a written signout document. The cases used in this simulation were the same as those used during the concurrent OSCEs in the ACS Boot Camp and modeled after previous work (i.e., performing a handoff using a patient signout6). In the COSCE simulation, the intern read the case and then telephoned a faculty standardized consultant (SC) to request the consultation. The SC received the consultation and offered case-specific recommendations (available as Supplemental Digital Appendix 2). The SC assessed the intern’s performance, completing 2 checklists during the consultation: a 12-item checklist evaluating adherence to the 5Cs4,10 and a 7-item Global Rating Scale (GRS) evaluating overall consultation effectiveness on a 5-point scale (from 1 = “not effective” to 5 = “extremely effective”).1,4 These validated checklists have been previously used in multiple settings.1,4,10 Following the consultation call, the SC met with the intern to provide in-person feedback.

COSCE implementation

ACS Boot Camp occurred on June 20–21, 2016. Two weeks prior, the 127 interns received the online module to review; it was distributed via Oracle Learning Management (Oracle, Redwood Shores, California). Three days prior, they were emailed the COSCE clinical cases, along with 5Cs model pocket cards for reference.4 During the boot camp, interns rotated through the OSCEs in 15-minute timeslots. The COSCE room contained a telephone and a written description of one clinical case with instructions to telephone the SC for the consultation.

Seventeen program directors and core faculty, recruited from the interns’ residency programs, served as the SCs. Two weeks prior to orientation, they were emailed training packets containing the clinical cases, faculty instructions, and checklists. They also viewed the online module. On the day of their participation in the boot camp, SCs were oriented again, in person in the simulation center, by two of the authors (S.K.M. and K.C.), who were immediately available to answer questions. This 5-minute session demonstrated use of the telephones and the checklists for assessment. It also included orientation to the rooms the SCs would use to receive the telephone consultations as well as the OSCE room in which SCs would give in-person feedback to the interns.

COSCE evaluation

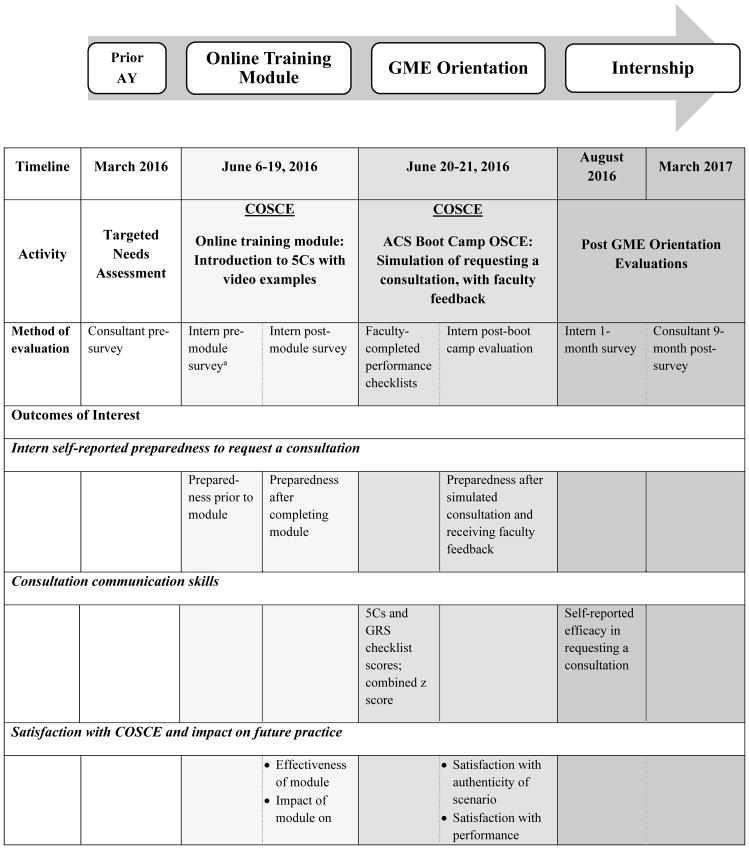

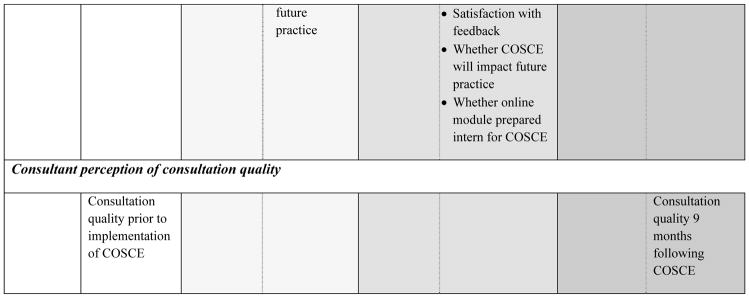

To evaluate the COSCE, we gathered data at several points throughout the 2016–2017 academic year: before and after the online module, during and after the boot camp, and during internship (details provided in Chart 1). We were interested in 4 outcomes: (1) self-reported preparedness to request a consultation; (2) communication skills performance in the simulated consultation; (3) satisfaction with the COSCE and impact on future practice; and (4) consultant perception of consultation quality. For preparedness and satisfaction, interns were surveyed at various points and were asked to rate these items on 5-point Likert-type scales. Skills performance was measured via the 5Cs and GRS checklists completed by SCs during the simulation, and via intern self-report on a survey administered 1 month into internship. Finally, we resurveyed the consultants 9 months after the COSCE to compare perceived consultation quality with our needs assessment.

Chart 1. Timeline of Consultation Observed Simulated Clinical Experience (COSCE) Components and Evaluation, University of Chicago Medicine, 2016–2017.

Abbreviations: AY indicates academic year; GME, graduate medical education; ACS, Advanced Communication Skills; OSCE, objective structured clinical examination; consultant, subspecialty fellow serving as a consultant; 5Cs, 5Cs of Consultation checklist10; GRS, Global Rating Scale checklist.1

ᵃAlso collected intern baseline characteristics (i.e., prior experience requesting consultations, prior training on requesting consultations, satisfaction with prior training).

Data analysis

We analyzed data using Stata 14 (StataCorp, College Station, Texas). We used descriptive statistics to summarize all data, including demographics, survey item responses, and checklist scores. Interns who responded “agree” or “strongly agree” (4 or 5, respectively, on the 5-point Likert-type scale) to the statement “I am prepared to request consultations” were considered prepared. For checklist scores, to account for rater differences, we created a composite score averaging the 5Cs and GRS checklist scores for each intern and normalized it by rater to a z score (mean = 0, SD = 1). We defined poor and outstanding performance as < −1 SD and > 1 SD, respectively. We used Wilcoxon signed-rank tests to compare paired pre- and post-test Likert-type ratings, Wilcoxon rank-sum tests to compare Likert-type ratings between groups, Pearson’s chi-square tests to compare proportions, and Student’s t tests to compare means. We considered P < .05 to be statistically significant.
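A minimal Python sketch of the rater normalization described above follows. Two details are assumptions because the text does not specify them: the 5Cs count (0 to 12 items) and the GRS mean (1 to 5) are each rescaled to 0 to 1 before averaging, and each rater's scores are standardized with the sample standard deviation. The record layout, function names, and example data are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev

N_5CS_ITEMS = 12  # items on the 5Cs checklist

def composite_score(items_completed, grs_mean):
    """Average of the two checklist scores.

    ASSUMPTION: both scores are rescaled to 0-1 before averaging
    (5Cs: items completed / 12; GRS: (mean rating - 1) / 4).
    """
    return (items_completed / N_5CS_ITEMS + (grs_mean - 1) / 4) / 2

def z_scores_by_rater(records):
    """records: iterable of (intern_id, rater_id, items_completed, grs_mean).

    Returns {intern_id: z}, where each composite score is standardized
    against the mean and sample SD of the interns scored by the same rater.
    """
    by_rater = defaultdict(list)
    for intern_id, rater_id, items, grs in records:
        by_rater[rater_id].append((intern_id, composite_score(items, grs)))

    z = {}
    for scored in by_rater.values():
        values = [score for _, score in scored]
        mu = mean(values)
        sd = stdev(values) if len(values) > 1 else 0.0
        for intern_id, score in scored:
            # A rater with one intern or identical scores has no spread; treat as average.
            z[intern_id] = (score - mu) / sd if sd > 0 else 0.0
    return z

def performance_category(z):
    """Poor: z < -1; outstanding: z > 1; otherwise average."""
    if z < -1:
        return "poor"
    if z > 1:
        return "outstanding"
    return "average"

# Hypothetical example: rater A scored 3 interns, rater B scored 4.
records = [
    ("i1", "A", 12, 5.0), ("i2", "A", 6, 3.0), ("i3", "A", 0, 1.0),
    ("i4", "B", 12, 5.0), ("i5", "B", 0, 1.0), ("i6", "B", 0, 1.0), ("i7", "B", 0, 1.0),
]
z = z_scores_by_rater(records)
categories = {intern: performance_category(score) for intern, score in z.items()}
```

Standardizing within each rater compares an intern only with the other interns scored by the same standardized consultant, which is what removes systematic differences in rater leniency.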

Outcomes

The 127 interns participating in the ACS Boot Camp were from 55 different medical schools. Ninety-six percent (n = 122) completed the online module. Most (78%; 95/122) had prior UME experience requesting consultations, two-thirds (67%; 82/122) had prior training in requesting consultations, and more than half (52%; 63/122) were prepared to request consultations (Table 1). Prior experience and training were associated with higher baseline preparedness as self-reported on the online module pre-survey (P < .01, Wilcoxon rank-sum).
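The dichotomization defined in the data analysis (a response of 4, “agree,” or 5, “strongly agree,” counts as prepared) can be sketched in Python as below. The ratings are illustrative, not study data, and the helper names are hypothetical. A half-up rounding helper is used because Python's built-in round() rounds exact halves to the nearest even number.

```python
PREPARED_RESPONSES = {4, 5}  # 4 = "agree", 5 = "strongly agree"

def is_prepared(rating):
    """True if a 1-5 Likert-type response counts as 'prepared'."""
    if rating not in (1, 2, 3, 4, 5):
        raise ValueError(f"rating must be 1-5, got {rating!r}")
    return rating in PREPARED_RESPONSES

def percent_half_up(k, n):
    """Whole-number percent, rounding halves up (e.g., 33/40 -> 83)."""
    return int(100 * k / n + 0.5)

def percent_prepared(ratings):
    """Return (number prepared, number of respondents, percent prepared)."""
    n_prepared = sum(is_prepared(r) for r in ratings)
    return n_prepared, len(ratings), percent_half_up(n_prepared, len(ratings))

# Illustrative responses only (not study data): 63 of 122 are 4 or 5.
ratings = [4] * 40 + [5] * 23 + [3] * 30 + [2] * 29
print(percent_prepared(ratings))  # (63, 122, 52)
```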

Table 1.

Intern Demographic and Baseline Data From the June 2016 Pilot of the Consultation Observed Simulated Clinical Experience (COSCE), University of Chicago Medicine

| Intern characteristics | No. (%) |
|---|---|
| Gender (n = 127) | |
| Female | 63 (50) |
| Location of undergraduate medical educationᵃ (n = 127) | |
| Home institution (i.e., University of Chicago) | 14 (11) |
| Midwest (not including home institution) | 53 (42) |
| Northeast | 21 (17) |
| Southern | 21 (17) |
| Western | 14 (11) |
| International | 4 (3) |
| Residency program (n = 127) | |
| Internal medicine | 41 (32) |
| Pediatrics | 23 (18) |
| Emergency medicine | 16 (13) |
| General surgery | 11 (9) |
| Anesthesiology | 8 (6) |
| Obstetrics/Gynecology | 7 (6) |
| Psychiatry | 6 (5) |
| Orthopedic surgery | 5 (4) |
| Internal medicine/Pediatrics | 4 (3) |
| Neurosurgery | 2 (2) |
| Plastic surgery | 2 (2) |
| Otolaryngology | 2 (2) |
| Prior consultation experience (n = 122)ᵇ | |
| No experience | 27 (22) |
| Experience in medical school | 95 (78) |
| Prior consultation training (n = 122)ᵇ | |
| No training | 40 (33) |
| Prior training in medical school | 82 (67) |
| Satisfied with prior consultation training (n = 82)ᶜ | 54 (65) |
| Baseline self-reported preparedness (n = 122)ᵇ | |
| Prepared to request a consultationᵈ | 63 (52) |

ᵃU.S. regions defined using U.S. Census definitions.

ᵇSelf-reported by the interns who completed the online training module.

ᶜResponded “agree” or “strongly agree” to the following item on the pre-module survey: “I am satisfied with the training I received related to requesting consultations in medical school.”

ᵈResponded “agree” or “strongly agree” to the following item on the pre-module survey: “I am prepared to request consultations.”

Self-reported preparedness to request consultations

Following completion of the online module, 84% of interns (103/122) were prepared to request consultations, compared with 52% (63/122) at baseline (P < .01, Wilcoxon signed-rank test). Despite baseline differences, after the module interns without prior experience or training rated themselves as similarly prepared to request consultations as experienced and trained interns (P > .20, Wilcoxon rank-sum). After the boot camp, 96% (122/127) of interns were prepared to request consultations (compared with 84% post module, P < .01, Wilcoxon signed-rank test); this proportion did not differ by prior experience or training (P > .20, Wilcoxon rank-sum).
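For readers unfamiliar with the paired test used here, the following Python sketch computes the Wilcoxon signed-rank statistic with the large-sample normal approximation, using only the standard library. It drops zero differences and averages tied ranks, with the usual variance correction for ties. Statistical packages, including Stata, may treat zeros, ties, and continuity corrections differently, so this illustrates the method rather than reproducing the study's analysis. The function name and ratings are hypothetical.

```python
import math

def wilcoxon_signed_rank(before, after):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (W+, z, p). Zero differences are dropped; tied absolute
    differences receive the average of the ranks they span.
    """
    diffs = [b - a for a, b in zip(before, after) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_correction = 0
    i = 0
    while i < n:
        # Find the run of equal absolute differences starting at position i.
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_correction += t ** 3 - t
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_correction / 48
    z = (w_plus - expected) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return w_plus, z, p

# Hypothetical pre/post preparedness ratings (1-5) for 8 interns.
pre = [2, 3, 3, 2, 4, 3, 2, 3]
post = [4, 4, 5, 4, 4, 5, 3, 4]
w_plus, z, p = wilcoxon_signed_rank(pre, post)
```

A signed-rank test suits paired before-and-after ratings from the same interns, whereas the rank-sum test compares independent groups such as trained versus untrained interns.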

Consultation communication skills

All 127 interns completed the consultation simulation OSCE. Table 2 presents results of skills performance assessments. Completion of the 5Cs checklist items ranged from 32% (identifies supervising attending; n = 40) to 99% (states name; n = 126). The mean GRS rating was 3.78 (SD 0.71). Based on their z scores, 20 interns (16%) had outstanding performance and 24 (19%) had poor performance. Performance did not differ by prior experience or training. Nearly all interns (126/127) completed the 1-month internship survey, with 76% (95/126) reporting they retained knowledge of the 5Cs and were more effective at calling a consultation because of the COSCE.
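The mean GRS comparison between untrained and trained interns uses Student's t test. As a sketch, the pooled-variance t statistic can be recomputed from the published summary statistics (untrained 3.68, SD 0.77, n = 40; trained 3.86, SD 0.68, n = 82). Python's standard library has no t distribution, so this sketch approximates the two-sided P value with the normal distribution, which is close at 120 degrees of freedom; a statistics package would use the exact t distribution. The function names are hypothetical.

```python
import math

def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's (pooled-variance) two-sample t statistic and its degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean2 - mean1) / se, df

def normal_two_sided_p(stat):
    """Two-sided P value from the standard normal (large-df approximation to t)."""
    return math.erfc(abs(stat) / math.sqrt(2))

# Mean GRS rating, untrained vs trained interns (Table 2 summary statistics).
t, df = pooled_t_from_summary(3.68, 0.77, 40, 3.86, 0.68, 82)
p = normal_two_sided_p(t)
print(f"t = {t:.2f}, df = {df}, P ~ {p:.2f}")  # t = 1.31, df = 120, P ~ 0.19
```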

Table 2.

Intern Consultation Communication Skills Performance Results From the June 2016 Pilot of the Consultation Observed Simulated Clinical Experience (COSCE), University of Chicago Medicine

| COSCE skills performance measure | All interns (n = 127) | Untrained interns (n = 40/122)ᵃ | Trained interns (n = 82/122)ᵃ | Comparison P valueᵇ |
|---|---|---|---|---|
| 5Cs checklist, no. (%) completing itemᶜ | | | | |
| Contact | | | | |
| States name | 126 (99) | 40 (100) | 81 (99) | |
| States service | 101 (80) | 33 (83) | 66 (81) | |
| Identifies supervising attending | 40 (32) | 11 (28) | 27 (33) | |
| Identifies name of consultant | 106 (83) | 32 (80) | 69 (84) | |
| Communicate | | | | |
| Presents a concise story | 110 (87) | 34 (85) | 71 (87) | |
| Presents an accurate account of information and case detail | 103 (81) | 31 (78) | 68 (83) | |
| Speaks clearly | 125 (98) | 40 (100) | 80 (98) | |
| Core question | | | | |
| Specifies reason for consultation | 121 (95) | 39 (98) | 77 (94) | |
| Specifies timeframe for consultation | 98 (77) | 31 (78) | 64 (78) | |
| Collaborate | | | | |
| Is open to and incorporates consultant recommendations | 123 (97) | 39 (98) | 79 (96) | |
| Close the loop | | | | |
| Is open to and incorporates consultant recommendations | 100 (79) | 33 (83) | 62 (76) | |
| Thanks consultant | 125 (98) | 40 (100) | 80 (98) | |
| No. of checklist items completed, median (IQR) | 10 (9, 11) | 10 (9, 11) | 10 (9, 11) | > .20ᵈ |
| Global Rating Scale (GRS), mean rating (SD)ᵉ | | | | |
| Introduction of involved parties | 3.81 (0.93) | 3.78 (0.89) | 3.85 (0.97) | |
| Patient case presentation | 3.50 (0.97) | 3.38 (1.00) | 3.57 (0.97) | |
| Specified consultation objective | 3.81 (0.80) | 3.73 (0.82) | 3.87 (0.80) | |
| Case discussion | 3.72 (0.95) | 3.53 (0.96) | 3.83 (0.95) | |
| Confirmation and closing | 3.93 (0.90) | 3.75 (0.95) | 4.01 (0.88) | |
| Interpersonal skills | 4.09 (0.78) | 4.00 (0.85) | 4.14 (0.76) | |
| Overall global rating | 3.66 (0.83) | 3.58 (0.90) | 3.72 (0.81) | |
| Mean GRS rating | 3.78 (0.71) | 3.68 (0.77) | 3.86 (0.68) | .19ᶠ |
| Z score, no. (%)ᵍ | | | | |
| Outstanding (> 1 SD) | 20 (16) | 8 (20) | 12 (15) | > .20ʰ |
| Average (−1 SD to 1 SD) | 83 (65) | 22 (55) | 57 (70) | |
| Poor (< −1 SD) | 24 (19) | 10 (25) | 13 (16) | |

ᵃFive interns did not complete the online training module and had missing baseline characteristic data. The 122 interns who completed the module self-reported on the pre-module survey whether they had received prior consultation training in medical school.

ᵇP values represent comparisons between trained and untrained interns. The same comparisons between experienced and inexperienced interns showed no significant differences (data not shown).

ᶜ5Cs checklist source: Kessler et al.10

ᵈWilcoxon rank-sum.

ᵉGRS checklist items were rated on a 5-point scale from 1 = “not effective” to 5 = “extremely effective.” Source: Kessler et al.1

ᶠStudent’s t test.

ᵍThe z score was a composite score averaging the 5Cs and GRS checklist scores for each intern and normalizing by rater to account for rater differences (mean = 0, SD = 1).

ʰChi-square.
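Assuming each intern's mean GRS rating is the unweighted average of the 7 GRS items with no missing items (the text does not state this explicitly), each group's mean GRS rating in Table 2 should equal the average of its 7 item means, up to rounding of the published values. A quick Python check, with a hypothetical dictionary holding the published item means:

```python
from statistics import mean

# Published GRS item means from Table 2, in row order:
# introduction, case presentation, objective, discussion,
# confirmation/closing, interpersonal skills, overall rating.
grs_item_means = {
    "all interns": [3.81, 3.50, 3.81, 3.72, 3.93, 4.09, 3.66],
    "untrained": [3.78, 3.38, 3.73, 3.53, 3.75, 4.00, 3.58],
    "trained": [3.85, 3.57, 3.87, 3.83, 4.01, 4.14, 3.72],
}

for group, items in grs_item_means.items():
    # ASSUMPTION: equal item weights and complete data for every intern.
    print(f"{group}: {mean(items):.2f}")
# all interns: 3.79
# untrained: 3.68
# trained: 3.86
```

The untrained and trained results match their published means, and the all-intern result (about 3.79) agrees with the 3.78 reported in the text within the rounding of the item means.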

Satisfaction and impact on future practice

Nearly all interns rated the online module as effective (89%; 108/122) and the COSCE as realistic (95%; 120/127). Interns were highly satisfied with their boot camp performance (90%; 113/127) and with feedback received in the consultation OSCE (98%; 125/127). Their free-text comments about the COSCE were positive, for example, “great exercise … honing skills that don’t often get formally taught.” Almost all rated the COSCE as useful to their future practice (96%; 122/127) and indicated they would recommend it for future interns (91%; 115/127).

Consultant surveys

The results of the consultant surveys are presented in Supplemental Digital Appendix 3. Response rates were 44% (41/94) and 39% (39/99) for the pre- and post-COSCE surveys, respectively. Following the COSCE, more consultants described residents as prepared to request consultations (54%; 21/39) than before the COSCE (27%; 11/41; P = .01, Chi-square). Consultants also rated consultation quality for most of the 5Cs significantly higher following the intervention. The reported frequency of curbside consultations and the perceived barriers to effective communication were unchanged.
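The consultant comparison uses Pearson's chi-square test on a 2 × 2 table. The Python sketch below, using only the standard library, computes the uncorrected statistic from the counts reported above (11/41 before and 21/39 after the COSCE). Its closed-form P value covers only 1 or 2 degrees of freedom, and the function names are hypothetical; it illustrates the arithmetic rather than reproducing the authors' analysis.

```python
import math

def pearson_chi_square(table):
    """Pearson chi-square statistic (no continuity correction) and degrees
    of freedom for a table given as a list of rows of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df

def chi_square_p(chi2, df):
    """Upper-tail P value; closed forms exist for df = 1 and df = 2 only."""
    if df == 1:
        return math.erfc(math.sqrt(chi2 / 2))
    if df == 2:
        return math.exp(-chi2 / 2)
    raise NotImplementedError("use a statistics library for df > 2")

# Consultants describing residents as prepared: rows are pre and post COSCE,
# columns are (prepared, not prepared).
survey = [[11, 41 - 11], [21, 39 - 21]]
chi2, df = pearson_chi_square(survey)
print(f"chi-square = {chi2:.2f}, df = {df}, P = {chi_square_p(chi2, df):.3f}")
# chi-square = 6.08, df = 1, P = 0.014
```

The result is consistent with the reported P = .01; applying Yates's continuity correction would give a somewhat larger P value.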

Next Steps

The COSCE, which couples online training with interactive, in-person simulation, was well-received by interns and successful in preparing incoming interns to request consultations. It was also effective for interns with varying levels of UME experience and training in requesting a consultation. Training and feedback exercises like the COSCE may help level the playing field for interns with different backgrounds.

Remarkably, 22% of interns responding to the pre-survey in our pilot reported having no UME experience requesting consultations. Even among interns with prior experience, over one-third (37%; 35/95) felt unprepared to request a consultation upon entering residency. These findings from a sample of interns from 55 different medical schools confirm and strengthen prior work suggesting inconsistency in UME consultation training and reinforce calls to improve education in this critical skill.2,4 The benefits of the COSCE noted for interns without prior training and experience were particularly encouraging: After completing the online module, they not only felt as prepared to request consultations as interns with previous training and experience but also had similar skills performance during the simulated consultation.

Interns were highly satisfied with the COSCE both in terms of practicing consultation communication skills and receiving feedback on their skills. Though most felt the simulation was realistic, the challenge of requesting a consultation using a signout document was noted during feedback sessions, as interns felt unfamiliar with these patients. Interestingly, this sentiment echoed one of the needs assessment findings: Consultants perceived lack of familiarity with the patient, often due to cross-cover or float rotations, to be a common barrier to effective consultation communication (see Supplemental Digital Appendix 3). Duty hours, shift work, and coverage models in GME create training environments in which this challenge is commonplace for residents. We found the COSCE to be an ideal strategy to raise awareness of this issue, and faculty directed their feedback accordingly to stress the importance of structured communication to help mitigate this potential barrier. We plan to explicitly highlight this skill in future implementations of the COSCE by calling attention to clinical situations such as cross-cover, shift-change, or night float rotations in which residents may need to call consultations on patients with whom they are not familiar.

Our innovation has several limitations and areas for future work. The COSCE was piloted at a single academic institution and our model of consultation may not be generalizable. Many outcomes were self-reported, and we did not measure actual performance in a clinical environment. Directly observing interns requesting consultations during clinical rotations would be ideal for assessing this skill, and we are considering this strategy for selected residency programs in the future. Our intervention was incorporated into GME orientation, which facilitated faculty recruitment, but the high levels of faculty involvement required could be a barrier for institutions without similar structures. However, we believe the COSCE can be adapted to meet other needs and varying resource levels, and we aim to reproduce it at other institutions moving forward.

While we strove to evaluate long-term impact by surveying consultants 9 months after the COSCE, the quality of these data is limited. The response rate was low, presumably due to the busy clinical schedules of subspecialty fellows. These data also reflect fellows’ assessments of consultation quality rather than attendings’. The results were mixed: While some elements of consultation quality improved following the COSCE, others, such as the frequency of curbside consultation requests, were unchanged. We hope the positive changes suggest the COSCE fostered lasting improvement, but we recognize the limitations of a single intervention and the potential need for future longitudinal training.

In sum, the COSCE was effective in training and assessing incoming interns on consultation communication skills as well as providing them with feedback. The combination of online training with in-person simulation was helpful for a diverse group of interns with varying prior UME experience. Pairing training experiences such as the COSCE with a systematic assessment could provide baseline data for interns entering residency and may assist programs in determining early entrustment decisions and personalized learning plans for further development.

Supplementary Material

Acknowledgments

The authors wish to thank the University of Chicago GME Office, the Pritzker School of Medicine, and John F. McConville, MD, and the University of Chicago Internal Medicine Residency Program for their invaluable assistance with this work. The authors are also grateful to all of the residents, fellows, and faculty who participated in this educational innovation.

Funding/Support: This work was supported in part by a Medical Education Grant from the Academy of Distinguished Medical Educators from the University of Chicago Pritzker School of Medicine. The University of Chicago REDCap project is hosted and managed by the Center for Research Informatics and funded by the Biological Sciences Division and by the Institute for Translational Medicine, CTSA grant number UL1 TR000430 from the National Institutes of Health. Finally, this work was also supported in part by the NIH NIA Grant #4T35AG029795-10.

Footnotes

Other disclosures: None reported.

Ethical approval: This program was granted exemption by the University of Chicago Institutional Review Board (IRB16-0480).

Previous presentations: Preliminary results of this work were presented at the Alliance for Academic Internal Medicine Week, Baltimore, Maryland, March 2017; the Society of Hospital Medicine Annual Meeting, Las Vegas, Nevada, May 2017; and the Midwest Society of General Internal Medicine Meeting, Chicago, Illinois, September 2017.

Supplemental digital content for this article is available at [LWW INSERT LINK].

Contributor Information

Shannon K. Martin, Assistant professor of medicine and associate program director, Internal Medicine Residency Program, University of Chicago Pritzker School of Medicine, Chicago, Illinois.

Keme Carter, Associate professor of medicine and assistant dean of admission, University of Chicago Pritzker School of Medicine, Chicago, Illinois.

Noah Hellermann, Student, College of the University of Chicago, Chicago, Illinois, at the time of writing. The author is now a research assistant, Veterans Affairs Medical Center New York–Manhattan Campus, New York, New York.

Laura R. Glick, Third-year student, University of Chicago Pritzker School of Medicine, Chicago, Illinois.

Samantha Ngooi, Research coordinator, Department of Medicine, University of Chicago Pritzker School of Medicine, Chicago, Illinois.

Marika Kachman, Second-year student, University of Chicago Pritzker School of Medicine, Chicago, Illinois.

Jeanne M. Farnan, Associate professor of medicine and associate dean, evaluation and continuous quality improvement, University of Chicago Pritzker School of Medicine, Chicago, Illinois.

Vineet M. Arora, Professor of medicine, assistant dean for scholarship and discovery, and director of clinical learning environment innovation, University of Chicago Pritzker School of Medicine, Chicago, Illinois.

References

1. Kessler CS, Afshar Y, Sardar G, Yudkowsky R, Ankel F, Schwartz A. A prospective, randomized, controlled study demonstrating a novel, effective model of transfer of care between physicians: The 5 Cs of consultation. Acad Emerg Med. 2012;19(8):968–74. doi: 10.1111/j.1553-2712.2012.01412.x.

2. Kessler CS, Chan T, Loeb JM, Malika ST. I’m clear, you’re clear, we’re all clear: Improving consultation communication skills in undergraduate medical education. Acad Med. 2013;88(6):753–8. doi: 10.1097/ACM.0b013e31828ff953.

3. Burden M, Sarcone E, Keniston A, et al. Prospective comparison of curbside versus formal consultations. J Hosp Med. 2013;8(1):31–5. doi: 10.1002/jhm.1983.

4. Carter KC, Golden A, Martin SK, et al. Results from the first year of implementation of CONSULT: Consultation with Novel Method and Simulation for UME Longitudinal Training. West J Emerg Med. 2015;16(6):845–50. doi: 10.5811/westjem.2015.9.25520.

5. Blackmore C, Austin J, Lopushinsky SR, Donnon T. Effects of postgraduate medical education “boot camps” on clinical skills, knowledge and confidence: A meta-analysis. J Grad Med Educ. 2014;6(4):643–52. doi: 10.4300/JGME-D-13-00373.1.

6. Gaffney S, Farnan JM, Hirsch K, McGinty M, Arora VM. The Modified, Multi-patient Observed Simulated Handoff Experience (M-OSHE): Assessment and feedback for entering residents on handoff performance. J Gen Intern Med. 2016;31(4):438–41. doi: 10.1007/s11606-016-3591-8.

7. Kern D, Thomas P, Howard D, Bass E. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore, MD: Johns Hopkins University Press; 1998.

8. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010.

9. The University of Chicago MERITS Lab. Consultation gone poorly [video]; Beyond “appreciate recs”: A standardized approach to consultation communication for new residents [video]. 2016. https://www.youtube.com/user/MergeLab. Accessed May 24, 2018.

10. Kessler CS, Kalapurayil PS, Yudkowsky R, et al. Validity evidence for a new checklist evaluating consultations, the 5Cs model. Acad Med. 2012;87(10):1408–12. doi: 10.1097/ACM.0b013e3182677944.
