Abstract

Background

Health information systems with applications in patient care planning and decision support depend on high-quality data. A postacute care hospital in Ontario, Canada, conducted data quality assessment and focus group interviews to guide the development of a cross-disciplinary training programme to reimplement the Resident Assessment Instrument–Minimum Data Set (RAI-MDS) 2.0 comprehensive health assessment into the hospital’s clinical workflows.

Methods

A hospital-level data quality assessment framework based on time series comparisons against an aggregate of Ontario postacute care hospitals was used to identify areas of concern. Focus groups were used to evaluate assessment practices and the use of health information in care planning and clinical decision support. The data quality assessment and focus groups were repeated to evaluate the effectiveness of the training programme.

Results

The initial data quality assessment and focus groups indicated that knowledge, practice and cultural barriers prevented both the collection and use of high-quality clinical data. Following the implementation of the training, there was an improvement in both data quality and the culture surrounding the RAI-MDS 2.0 assessment.

Conclusions

It is important for facilities to evaluate the quality of their health information to ensure that it is suitable for decision-making purposes. This study demonstrates the use of a data quality assessment framework that can be applied for quality improvement planning.

Keywords: decision support, clinical; health professions education; continuous quality improvement; implementation science; information technology

Background

The widespread implementation of standardised comprehensive assessment tools has helped to give clinicians, organisation administrators and policymakers the capacity to make evidence-informed decisions.1 Healthcare organisations making use of health information systems to aid in clinical decision-making should have confidence in their data quality. First, high-quality data ensure that clinicians are responsive to the clinical needs of patients and are able to detect change in clinical status over time. Second, for hospital administrators under case mix-based funding algorithms, high-quality data ensure appropriate allocation of economic resources proportionate with hospital case mix and provide the opportunity to perform well on quality measures.

Data quality concerns at the hospital level may arise from several sources of error, bias and poor assessment practice. A thorough review of this topic is provided by Hirdes et al.2 Systematic error as a result of financial incentives to inflate patient case mix scores or ‘gaming’ is a strong concern when facilities are competing for funding.3 Assessments that do not accurately represent true patient characteristics may also stem from an assessor’s desire to avoid negative impressions of the clinical staff or the hospital itself. Less intentional sources of poor data quality include errors as a result of inadequate training and ongoing education or a lack of clinical expertise by staff resulting in a failure to detect subtler patient characteristics. Further, ascertainment bias may prevent staff from detecting clinical characteristics they believe are uncommon among particular patient groups. Poor data collection and coding strategies, for example, single assessor implementations, may also be responsible for poor assessment data quality. Finally, a lack of staff buy-in or a lack of feedback to staff may result in a ‘black box’ effect where staff disregard the importance of the assessment.2

A 120-bed hospital in Ontario, Canada, that provides postacute complex medical care and rehabilitation services embarked on an initiative to improve the quality of its patient assessment data by training all clinical staff on the use of a comprehensive health assessment as a decision support and care planning tool. The interRAI Resident Assessment Instrument–Minimum Data Set (RAI-MDS) 2.0 is a comprehensive health assessment that is used in long-term care facilities and postacute care hospitals to assess patients across a broad range of domains of health and well-being including physical function, cognition, mood and behaviour, social function and health service utilisation.4–7 The information that is collected with the RAI-MDS 2.0 assessment is used for patient care planning and decision support, quality assessment, case mix-based funding, research and policy development.1 8–12 These various applications rely on high-quality health information collected with a reliable assessment.13 A study of RAI-MDS 2.0 assessments completed in Ontario postacute care hospitals and residential long-term care facilities found that the data that were collected were of high quality.2 Similarly, the quality of the data collected using the Resident Assessment Instrument-Home Care, the counterpart assessment of the RAI-MDS 2.0 for use in home care settings, was also found to be strong.14

Beginning in 1996, postacute ‘Complex Continuing Care’ hospitals in Ontario, Canada were required to complete an RAI-MDS 2.0 assessment at admission and 90-day intervals for all patients with an expected length of stay greater than 14 days.1 Despite its applications in decision support and patient care planning, the hospital detailed in this report recognised that there were opportunities to improve their assessment practice to further integrate the assessment into their clinical practice. In collaboration with hospital practice leads and a group of interRAI researchers and educators, a training programme was developed to augment the clinical use of the instrument and integrate the Clinical Assessment Protocols (CAPs) and clinical outcome scales into all care planning and clinical team activities. The goals of the training programme were to educate staff on the assessment theory and processes that are conducive to quality assessment data and provide them with necessary knowledge and tools to incorporate the RAI-MDS 2.0-derived outcome measures, scales and CAPs into patient care planning activities. Given the significance of this change to clinical workflows, this initiative was framed as a reimplementation of the RAI-MDS 2.0.

This article outlines how hospital-level data quality assessment and focus groups were used to evaluate the effectiveness of an RAI-MDS 2.0 training programme that was designed to improve data quality and clinical use of the comprehensive health assessment. Successes, challenges and lessons learnt are discussed for the benefit of other facilities seeking to evaluate their own data quality and improve the clinical relevance of their routinely collected patient data.

Methods

This study employed a non-experimental pretest/post-test design to evaluate change in assessment data quality and staff knowledge of the use of the RAI-MDS 2.0 assessment as a decision support and care planning tool. This was accomplished through the use of focus groups and data quality analysis performed before and after the administration of a cross-disciplinary education and training programme for nursing, allied health professions and medical staff. Given that the RAI-MDS 2.0 was mandated for use many years prior to the start of this evaluation study, the pretraining evaluation methods were used to establish a baseline measure of data quality, staff knowledge and current use of the assessment outputs in clinical practice. Change in data quality following the training programme was measured using time series comparisons against a benchmark measure. Historical performance on these measures was used to tailor the training programme to emphasise areas of data quality concern. The evaluation measures were repeated following the training programme to evaluate change and identify opportunities for continuing education.

The training programme was designed to facilitate a better understanding of the RAI-MDS 2.0 assessment process, care planning applications and the use of the electronic health record system for RAI-MDS 2.0 documentation. In order to address concerns that the RAI-MDS 2.0 was being completed by staff in isolation of other disciplines and that information contained in completed assessments was not being used for care planning, this training programme was delivered to all front-line staff and managers to foster interdisciplinary interaction. The hospital committed to providing full staff coverage where needed, enabling staff to participate in the 2-day training session as part of the organisation’s professional development programme. Training sessions used a variety of learning strategies focused on interdisciplinary collaboration such as small group discussions using case studies, panel debates with facilitators, practice enablers (summary sheets) and wall charts.

Data quality analysis

The data quality analysis was performed according to the analytic framework established by Hirdes et al.2 Using time series comparisons, the hospital’s performance was benchmarked against an aggregate of other Ontario postacute care hospitals. Four indicators of data quality were evaluated: (1) trends in convergent validity for scales and items over time; (2) trends in internal consistency for scales over time; (3) trends in logical inconsistencies, improbable coding and autopopulation over time; and finally (4) patterns of association in clinical variables between facilities. Performance on trend line indicators was ascertained by visual comparison against the aggregate postacute care hospital benchmark. Since patterns of association in clinical variables between facilities serve as an overall measure of data quality, this analysis was also completed for 49 other comparably sized Ontario postacute care hospitals to determine rank among peers.

Two sources of RAI-MDS 2.0 assessment data from 2005 to 2014 were used for the hospital data quality assessment. Deidentified RAI-MDS 2.0 patient assessment data collected from 1 April 2005 to 30 June 2014 (n=3583) were obtained from the postacute care hospital completing the data quality assessment. Comparisons were made using RAI-MDS 2.0 assessments from the Continuing Care Reporting System that were received from the Canadian Institute for Health Information with encrypted patient and hospital identifiers. A total of 268 246 RAI-MDS 2.0 assessments completed between 31 March 2005 and 31 March 2014 were used. Patients in coma were removed from the sample. All analyses were completed using SAS V.9.4 (SAS Institute).

Focus groups

Before and after implementing the RAI-MDS 2.0 training programme, focus group sessions were conducted with the hospital’s nursing and allied health professional staff. These focus groups were conducted by researchers who were independent of the hospital. All clinical staff except unit managers were invited to participate in the focus group sessions; unit managers were excluded to ensure that accurate results were obtained. Initial focus group sessions, held prior to the development and implementation of the training programme, focused primarily on the organisation’s current assessment practices and approaches to gathering clinical information, including the use of the RAI-MDS 2.0 assessment instrument and the day-to-day use of outcome measures and CAPs. A second round of focus groups was conducted 4 months after the training programme to evaluate the reintroduction of the RAI-MDS 2.0 as a clinical tool. This included the day-to-day use of the instrument and its clinical applications. Feedback from staff on the delivery of the training programme was also sought, including opportunities for implementation and further education needs.

Results

Data quality assessment

Convergent validity

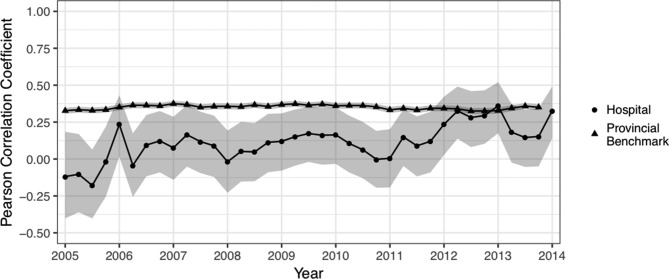

Prior to the implementation of the RAI-MDS 2.0 training programme, performance on measures of convergent validity between the Cognitive Performance Scale and the bowel continence item, Activities of Daily Living (ADL) Long Scale and Pain Scale was good. The benchmark of the aggregate of Ontario postacute care hospitals demonstrates that scale scores on the Aggressive Behaviour Scale and Cognitive Performance Scale are weakly positively correlated. However, as demonstrated in figure 1, hospital performance on this measure of data quality was poor and inconsistent over time, indicating potential data quality concerns with Aggressive Behaviour Scale items. There was little change in Aggressive Behaviour Scale and Cognitive Performance Scale convergence in the quarters immediately following the implementation of the staff training programme; however, 1 year later, the hospital’s data quality for the Aggressive Behaviour Scale items was in line with the provincial benchmark.

Figure 1.

Pearson’s r correlation coefficient for the Aggressive Behaviour Scale and Cognitive Performance Scale over time.
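As an illustration of how a trend line of this kind could be produced, the following minimal sketch groups assessments by quarter and computes Pearson's correlation between two scale scores. The study itself used SAS V.9.4; the record layout (`quarter`, `abs`, `cps`) and the sample values here are hypothetical and are not study data.

```python
from collections import defaultdict
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences; None if undefined."""
    n = len(x)
    if n < 2:
        return None
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None  # no variance in one scale: r cannot be computed
    return sxy / sqrt(sxx * syy)


def quarterly_correlation(records, x_key, y_key):
    """Return {quarter: r} for records given as dicts with a 'quarter' key."""
    by_quarter = defaultdict(list)
    for rec in records:
        by_quarter[rec["quarter"]].append((rec[x_key], rec[y_key]))
    return {
        q: pearson_r([p[0] for p in pairs], [p[1] for p in pairs])
        for q, pairs in sorted(by_quarter.items())
    }


# Hypothetical assessments (illustrative values only).
records = [
    {"quarter": "2013Q1", "abs": 0, "cps": 1},
    {"quarter": "2013Q1", "abs": 2, "cps": 3},
    {"quarter": "2013Q1", "abs": 4, "cps": 5},
    {"quarter": "2013Q2", "abs": 0, "cps": 2},
    {"quarter": "2013Q2", "abs": 0, "cps": 4},
]
trend = quarterly_correlation(records, "abs", "cps")
```

Running the same function on the benchmark sample would give the second series to plot alongside the hospital's. Quarters in which one scale has no variance return `None` rather than a misleading value.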

Reliability based on internal consistency of scales

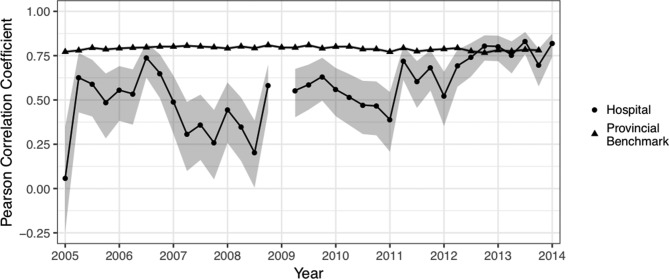

Internal consistency for the ADL Long and the Depression Rating Scale was comparable to the Ontario postacute care hospital benchmark. However, as demonstrated in figure 2, the internal consistency of the Aggressive Behaviour Scale was poor. Again, this raises concerns about the data quality of items used in the Aggressive Behaviour Scale. In the time since the RAI-MDS 2.0 training programme, internal reliability for the Aggressive Behaviour Scale has improved to be in line with the provincial benchmark.

Figure 2.

Cronbach’s alpha for the Aggressive Behaviour Scale over time. Due to a lack of variance in one Aggressive Behaviour Scale item in all assessments in Q1 2009, Cronbach’s alpha based on standardised items could not be computed for this quarter.
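To make the note about Q1 2009 concrete, the sketch below computes Cronbach's alpha based on standardised items from the mean inter-item correlation, using the standard formula alpha = k·r̄ / (1 + (k − 1)·r̄). It is an illustrative Python version, not the SAS procedure used in the study, and the item scores are hypothetical. When any item has zero variance, its correlations are undefined and the function returns `None`.

```python
from itertools import combinations
from math import sqrt


def _pearson(x, y):
    """Pearson correlation; None when either sequence has zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sqrt(sxx * syy)


def standardised_alpha(items):
    """items: list of k item-score columns, each a list over assessments."""
    k = len(items)
    if k < 2:
        return None
    rs = [_pearson(a, b) for a, b in combinations(items, 2)]
    if any(r is None for r in rs):
        return None  # an item with no variance makes alpha incomputable
    r_bar = sum(rs) / len(rs)  # mean inter-item correlation
    denom = 1 + (k - 1) * r_bar
    if denom == 0:
        return None
    return k * r_bar / denom


# Hypothetical item scores across four assessments.
item_a = [0, 1, 2, 3]
item_b = [0, 1, 3, 2]
constant_item = [0, 0, 0, 0]  # coded identically on every assessment

alpha_two_items = standardised_alpha([item_a, item_b])
alpha_with_constant = standardised_alpha([item_a, item_b, constant_item])
```

In this toy example the two varying items correlate at 0.8, giving a standardised alpha of about 0.89, while adding the constant item makes the statistic undefined.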

Logical inconsistencies, improbable coding and possible autopopulation over time

Data quality issues attributable to logical inconsistencies and improbable coding such as the number of therapy minutes surpassing the number of minutes in a day or the presence of mood persistence despite no individual mood item indicators present were very low for both the Ontario postacute care hospital benchmark and the hospital performing the data quality analysis.
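Checks of this kind can be expressed as simple rules applied to each assessment. The sketch below encodes the two examples named above; the field names (`therapy_minutes_per_day`, `mood_persistence`, `mood_items`) and the sample records are hypothetical and do not reflect the actual RAI-MDS 2.0 item codes.

```python
MINUTES_PER_DAY = 24 * 60  # 1440


def flag_inconsistencies(assessment):
    """Return the names of the logic checks that an assessment fails."""
    flags = []
    # Improbable coding: more therapy minutes than a day contains.
    if assessment["therapy_minutes_per_day"] > MINUTES_PER_DAY:
        flags.append("therapy_minutes_exceed_day")
    # Logical inconsistency: mood persistence coded with no mood indicator.
    if assessment["mood_persistence"] > 0 and not any(assessment["mood_items"]):
        flags.append("mood_persistence_without_indicators")
    return flags


def inconsistency_rate(assessments):
    """Share of assessments that fail at least one check; None if empty."""
    if not assessments:
        return None
    flagged = sum(1 for a in assessments if flag_inconsistencies(a))
    return flagged / len(assessments)


# Hypothetical records (illustrative values only).
assessments = [
    {"therapy_minutes_per_day": 45, "mood_persistence": 0, "mood_items": [0, 0, 0]},
    {"therapy_minutes_per_day": 1500, "mood_persistence": 0, "mood_items": [0, 1, 0]},
    {"therapy_minutes_per_day": 30, "mood_persistence": 1, "mood_items": [0, 0, 0]},
    {"therapy_minutes_per_day": 60, "mood_persistence": 2, "mood_items": [1, 0, 2]},
]
rate = inconsistency_rate(assessments)
```

Computing this rate per quarter for both the hospital and the benchmark would yield the trend comparison described in the Methods.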

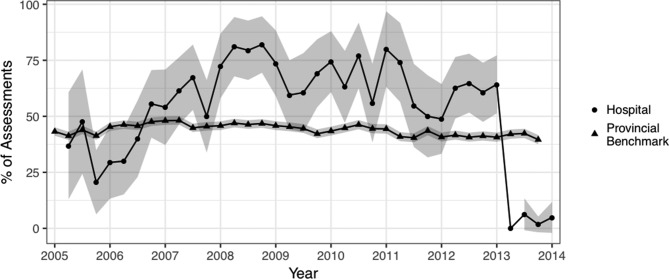

Autopopulation is used to describe situations when several items in a particular assessment domain are unchanged on follow-up assessments. While it is possible that some patients may not demonstrate clinical change over consecutive assessments, high rates of autopopulation indicate that assessors may be carrying forward previous assessment scores without reassessing patients. The data quality analysis showed strong and consistent evidence of autopopulation by the hospital performing the data quality analysis. In as many as 80% of assessments, all 16 mood items and all 20 ADL items were unchanged from the previous assessment. Following the staff training programme, ADL item autopopulation rates fell to 0% (figure 3). Although the training programme focused on reducing autopopulation over consecutive assessments, it is important that the assessment is an accurate reflection of the patient. A dramatic reduction in autopopulation rates to well below the provincial average may also be an indication of poor data quality.

Figure 3.

Percentage of Resident Assessment Instrument-Minimum Data Set (RAI-MDS) 2.0 assessments with no change in all 16 mood items from the previous assessment.
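One way to compute an autopopulation rate of this kind is to compare each follow-up assessment with the patient's previous assessment and count those in which every item in the domain is identical. The following sketch assumes a hypothetical structure in which each patient's assessments are already sorted by date; the item names and values are illustrative only.

```python
def autopopulation_rate(patient_histories, item_keys):
    """Fraction of follow-up assessments with all listed items unchanged.

    patient_histories: {patient_id: [assessment dicts in date order]}
    item_keys: names of the items in the domain (e.g. the mood items)
    """
    unchanged = 0
    followups = 0
    for history in patient_histories.values():
        # Pair each assessment with the one immediately before it.
        for prev, curr in zip(history, history[1:]):
            followups += 1
            if all(prev[k] == curr[k] for k in item_keys):
                unchanged += 1
    return unchanged / followups if followups else None


# Hypothetical histories with two mood items per assessment.
histories = {
    "A": [
        {"mood1": 0, "mood2": 1},
        {"mood1": 0, "mood2": 1},  # unchanged from previous
        {"mood1": 1, "mood2": 1},  # changed
    ],
    "B": [
        {"mood1": 2, "mood2": 0},
        {"mood1": 2, "mood2": 0},  # unchanged from previous
    ],
}
mood_rate = autopopulation_rate(histories, ["mood1", "mood2"])
```

Here two of the three follow-up assessments are unchanged. Admission assessments have no predecessor and so do not enter the denominator.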

Patterns of association in clinical variables

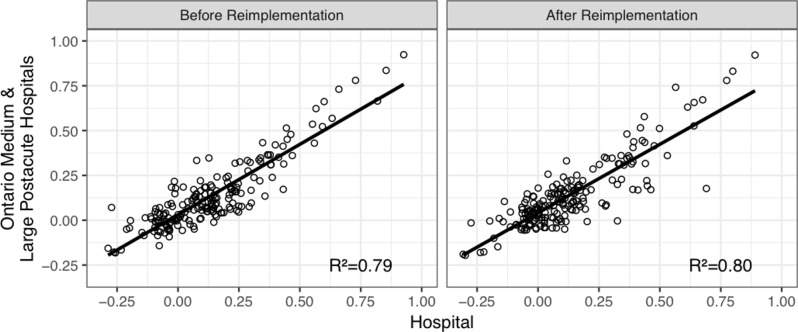

From 31 March 2012 to 31 March 2013, one year prior to the implementation of the staff training programme, the overall association between the hospital and the Ontario postacute care hospital benchmark for 189 different statistical tests including validity and reliability of outcome scales and assessment item correlations resulted in R²=0.79. Completing the same analysis for 47 other medium and large postacute care hospitals yielded a mean R²=0.77 (SD=0.08), ranking the hospital in question 20th in the province on this measure of data quality. This analysis was replicated for assessments completed from 31 March 2013 to 31 March 2014 to measure data quality following the staff training. The hospital R² improved to 0.80 compared with the provincial average of 0.74 (SD=0.9), improving its rank among peers by seven positions. Figure 4 presents the change in overall data quality before and after the reimplementation of the RAI-MDS 2.0.

Figure 4.

Change in overall data quality before and after Resident Assessment Instrument-Minimum Data Set (RAI-MDS) 2.0 reimplementation based on the association between the hospital and the provincial benchmark of medium and large postacute care facilities.
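The overall association measure can be sketched as follows, under the assumption that it is the squared Pearson correlation between a hospital's results on each statistical test and the benchmark's results on the same tests, with peers then ranked by that value. The exact computation is not specified beyond the description above, so this is an interpretation; the function names and numbers are hypothetical.

```python
def r_squared(hospital_stats, benchmark_stats):
    """Squared Pearson correlation between paired test results."""
    n = len(hospital_stats)
    if n != len(benchmark_stats) or n < 2:
        return None
    mx = sum(hospital_stats) / n
    my = sum(benchmark_stats) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(hospital_stats, benchmark_stats))
    sxx = sum((a - mx) ** 2 for a in hospital_stats)
    syy = sum((b - my) ** 2 for b in benchmark_stats)
    if sxx == 0 or syy == 0:
        return None
    return (sxy * sxy) / (sxx * syy)


def rank_among_peers(own_r2, peer_r2s):
    """Rank 1 is the strongest association with the benchmark."""
    return 1 + sum(1 for r in peer_r2s if r > own_r2)


# Hypothetical results on three tests (the study used 189).
hospital = [0.1, 0.5, 0.9]
benchmark = [0.2, 0.4, 0.9]
overall = r_squared(hospital, benchmark)

# Hypothetical peer values, for illustration of the ranking step only.
rank = rank_among_peers(0.79, [0.85, 0.80, 0.70])
```

Because each hospital is compared with the same benchmark, the resulting values can be ranked directly, which is how a position among peers could be derived.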

Focus groups

Preimplementation

A total of 10 clinical staff elected to participate in the focus group sessions prior to the implementation of the RAI-MDS 2.0 training programme. It was apparent from the focus groups that little was known about the RAI-MDS 2.0 assessment system as a whole. Allied health professionals reported that they were primarily concerned with completing their portion of the RAI-MDS 2.0 assessment and did not use other sections of the assessment as possible information sources for patient care. Nursing staff reported that a single registered nurse per floor was responsible for completing the RAI-MDS 2.0 assessment and did so primarily by means of chart review. Focus group participants viewed the completion of the RAI-MDS 2.0 assessment as a burdensome activity that took time away from patient care. They were largely unaware of outcome measures and scales, and were not aware of the CAPs used in care planning as decision support tools. Feedback pertaining to the RAI-MDS 2.0 was not provided to staff completing the assessments. Focus group participants believed that the purpose of completing the assessment was to generate the patient case mix index, a relative value used in determining the allocation of resources to care for patients under case mix funding algorithms. It was perceived that low case mix index values reflected poorly on the hospital, and staff reported being questioned when patients had a low case mix index value. Further, it was reported that unit managers would often discuss case mix index when care planning, suggesting that decision-making was guided in part by remuneration.

Postimplementation

Twelve clinical staff participated in the second round of focus groups following the implementation of the RAI-MDS 2.0 training programme. A primary source of staff concern involved the mechanics of using the assessment software. Staff described challenges in navigating the software (eg, being unable to ‘find’ the CAPs) in addition to broader computer-related issues such as software timeouts and difficulties in obtaining access to a computer to complete the assessment. With respect to the clinical use of the RAI-MDS 2.0, staff feedback suggested that following the training programme, the outcome measures, scales and CAPs were not yet consistently used as part of the clinical process. Staff said that the use of assessment outputs in meetings was inconsistent across patient care teams, but that the outputs were of value when they were used as a core platform for patient discussion.

Between the first and second rounds of focus groups, there was a noticeable increase in the use of the terms ‘CAPs’ and ‘outcome measures’, with fewer references to the case mix index. While most participants acknowledged limited understanding and use of CAPs and outcome measures, some focus group members reported accessing the outcome scales and the assessment as part of their evaluation and clinical review. There was an expressed desire for more information or a refresher course about CAPs and scales in order to increase comfort levels in working with these applications of the assessment process, as well as education on how to create patient goals. Few participants reported using available manuals or software help functions.

Discussion

Insights for training programme development

Based on information obtained through the focus group sessions, the training programme was designed to demonstrate the value of the assessment and its role in patient care planning. In contrast to past practice where patients had numerous discipline-specific care plans, during the training sessions staff were given opportunities to model care planning best practices using interdisciplinary discussion based on outcome measures and CAPs. A strong emphasis was placed on the clinical applications of the RAI-MDS 2.0 as opposed to only deriving case mix index values.

As supported by findings from both the data quality assessment and focus group activities, a decision to adopt a multiassessor model was made. Unlike the previous model where one staff member per floor completed the bulk of the assessment, the multiassessor model requires all nursing and allied health professional staff to participate in the completion of the assessment. Workstation-on-wheels systems located outside of patient rooms were purchased, allowing staff to complete RAI-MDS 2.0 assessment activities following direct patient interaction, reducing reliance on chart audits for completion of the assessment.

Using results from the data quality analysis and in consultation with the research team, assessment items associated with poor data quality were identified, including the domains of communication, mood and behaviour. During the training programme, additional emphasis and focus was placed on these areas of data quality concern.

Training successes and challenges

Overall, improvements in both data quality and the culture surrounding the RAI-MDS 2.0 assessment were detected based on results from the second round of focus groups and data quality assessment. Focus group discussions revealed that following the training, staff saw the assessment and the associated outputs for care planning as a platform for ongoing interdisciplinary discussion that could be used in patient rounds, case conferences and family meetings, suggesting a change in their perception of the assessment’s utility. Members of the interdisciplinary team continue to work to integrate and sustain the use of outcome measures and CAPs in care planning activities, and this has become a focus of continuous professional development throughout the organisation.

Feedback about the 2-day education session suggested that although participants recognised the value of the training programme, they may have experienced information ‘overload’. This is likely because the training programme addressed assessment practices, clinical applications and the use of a new electronic health record software system as the assessment platform. While there was a desire to present these multiple components as a unified system, separating RAI-MDS 2.0 assessment from the use of the electronic health record software may have reduced the cognitive load of the training programme. Encouragingly, staff reported that they felt comfortable approaching a manager with assessment coding questions.

Following the training programme, it became apparent that issues related to the electronic health record software were a challenge to the reimplementation. It was necessary to differentiate software versus user knowledge concerns in order to identify the root cause of difficulties and the impact on acceptance of the RAI-MDS 2.0 as an assessment system. For example, staff voiced a concern that the software would often time out while they were completing an assessment. These software challenges were addressed with the electronic health record vendor and changes were made to meet the needs of the hospital. Feedback from management staff suggested that poor computer skills may have hindered the learning process and acceptance of the reimplementation programme. As such, it may have been helpful to complete a staff computer competency scan prior to commencing reimplementation to ensure that computer literacy skills were not a barrier to the goals of the programme.

Only a subset of the data quality analyses was presented in this report; however, the plots that were selected provide an opportunity to discuss the use of time series comparisons for quality improvement. A large aggregated sample provides a stable benchmark against which to compare performance over time. However, when computing measures for a single hospital, some random quarter-to-quarter variation is expected because of the small sample size. When evaluating data quality against a benchmark, caution should be taken to differentiate sustained trends from time-limited variation. While a year-long trend may suggest systematic data quality concerns, improvement or decline within a single quarter may not warrant intervention. When using time series plots for continuous data quality monitoring, organisations should consider plotting moving averages to visualise trends and reduce short-term fluctuations.
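A trailing moving average of the kind suggested here can be computed in a few lines. The sketch below uses a four-quarter window as an assumed default and hypothetical quarterly percentages; it also assumes there are no missing quarters in the series.

```python
def moving_average(values, window=4):
    """Trailing moving average over `window` consecutive quarters.

    Positions without a full window of history are returned as None.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(values)):
        if i + 1 < window:
            smoothed.append(None)
        else:
            chunk = values[i + 1 - window : i + 1]
            smoothed.append(sum(chunk) / window)
    return smoothed


# Hypothetical quarterly indicator values (percentages, illustrative only).
quarterly = [80, 76, 84, 40, 36]
smoothed = moving_average(quarterly, window=4)
```

A four-quarter window averages over one year, so a sharp change in a single quarter moves the smoothed line only gradually, while a sustained shift becomes visible within a few quarters.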

Future directions

Looking forward, efforts to increase knowledge about clinical applications of the assessment and further integrate it into clinical practice might take a number of paths such as additional education sessions, online learning, development of a hospital-wide Community of Practice (for both internal community building and external linkages), providing information to staff based on aggregate-level analysis of the patient population and building incentives or rewards for using the information from the RAI-MDS 2.0 assessment system (eg, recognition in newsletters, staff awards, conference attendance).

Although the framework employed to evaluate change in data quality was used here as the foundation for a large overhaul in assessment workflow, data quality assessment of this nature may also have a role in regular quality improvement planning to ensure that high-quality data are collected for clinical decision-making purposes. As demonstrated, areas of data quality concern may reveal opportunities for change in practice or additional staff training in assessing particular domains of health and well-being. From a broader system perspective, hospital ranking based on measures of data quality may be used as a means of encouraging growth through competition.

Conclusion

Data quality analysis and focus groups revealed that knowledge, practice and cultural barriers prevented the collection of quality data and the clinical use of the RAI-MDS 2.0 assessment system. In today’s data-driven healthcare environment, quality improvement projects aimed at augmenting assessment knowledge and practice for better data quality allow clinicians to have confidence in the output of clinical decision support tools and ensure that facilities receive proportionate compensation for services delivered. Further, such projects help to ensure that assessments are a true reflection of the patient, providing a fair opportunity to perform on quality measures. Data quality assessment and focus group activities of this nature may be used both in the development of staff training programmes and in regular quality improvement practice.

Acknowledgments

The authors acknowledge the participation of the numerous Toronto Grace Health Centre (TGHC) clinical staff who volunteered to take part in the focus group sessions. They also acknowledge the assistance of Jonathan (Xi) Chen in assembling the data sets used in this study.

Footnotes

Contributors: JPH conceived the study and its design. JAT, JM, NCT and LE developed and delivered the reimplementation training programme. NCT and LE conducted the focus groups and analysed the results. LT conducted the data quality analysis and drafted the manuscript. Critical revisions were performed by all coauthors.

Funding: Funding for this study was provided by Toronto Grace Health Centre.

Competing interests: JAT and JM are employed by Toronto Grace Health Centre. JPH is a board member of interRAI, and chairs both the interRAI Network of Excellence in Mental Health and the interRAI Network of Canada.

Patient consent: Not required.

Ethics approval: University of Waterloo Office of Research Ethics (ORE 18132 and 18228) and the Joint Bridgepoint Health–West Park Healthcare Centre–Toronto Central Community Care Access Centre (CCAC)–Toronto Grace Health Centre Research Ethics Board (JREB).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: The data that support the findings of this study are available from the Canadian Institute for Health Information and Toronto Grace Health Centre, but restrictions apply to the availability of these data. Continuing Care Reporting System data were used under license, and so are not publicly available. Toronto Grace Health Centre RAI-MDS 2.0 data contain sensitive patient health information and are also not publicly available.

References

- 1. Hirdes JP, Sinclair DG, King J, et al. From anecdotes to evidence: complex continuing care at the dawn of the information age in Ontario. Milbank Memorial Fund, 2003.

- 2. Hirdes JP, Poss JW, Caldarelli H, et al. An evaluation of data quality in Canada’s Continuing Care Reporting System (CCRS): secondary analyses of Ontario data submitted between 1996 and 2011. BMC Med Inform Decis Mak 2013;13:27. doi:10.1186/1472-6947-13-27

- 3. Ikegami N. Games policy makers and providers play: introducing case-mix-based payment to hospital chronic care units in Japan. J Health Polit Policy Law 2009;34:361–80. doi:10.1215/03616878-2009-003

- 4. Bernabei R, Landi F, Onder G, et al. Second and third generation assessment instruments: the birth of standardization in geriatric care. J Gerontol A Biol Sci Med Sci 2008;63:308–13.

- 5. Gray LC, Berg K, Fries BE, et al. Sharing clinical information across care settings: the birth of an integrated assessment system. BMC Health Serv Res 2009;9:71. doi:10.1186/1472-6963-9-71

- 6. Hirdes JP, Ljunggren G, Morris JN, et al. Reliability of the interRAI suite of assessment instruments: a 12-country study of an integrated health information system. BMC Health Serv Res 2008;8:277. doi:10.1186/1472-6963-8-277

- 7. Ikegami N, Hirdes JP, Carpenter I. Measuring the quality of long-term care in institutional and community settings. In: Measuring up: improving health system performance in OECD countries. OECD Publishing, 2002:277–93.

- 8. Fries BE, Morris JN, Bernabei R, et al. Rethinking the resident assessment protocols. J Am Geriatr Soc 2007;55:1139–40. doi:10.1111/j.1532-5415.2007.01207.x

- 9. Mor V. Improving the quality of long-term care with better information. Milbank Q 2005;83:333–64. doi:10.1111/j.1468-0009.2005.00405.x

- 10. Zimmerman DR, Karon SL, Arling G, et al. Development and testing of nursing home quality indicators. Health Care Financ Rev 1995;16:107–27.

- 11. Morris JN, Fries BE, Morris SA. Scaling ADLs within the MDS. J Gerontol A Biol Sci Med Sci 1999;54:M546–53.

- 12. Hawes C, Phillips CD, Mor V, et al. MDS data should be used for research. Gerontologist 1992;32:563–4. doi:10.1093/geront/32.4.563b

- 13. Poss JW, Jutan NM, Hirdes JP, et al. A review of evidence on the reliability and validity of minimum data set data. Healthc Manage Forum 2008;21:33–9. doi:10.1016/S0840-4704(10)60127-5

- 14. Hogeveen SE, Chen J, Hirdes JP. Evaluation of data quality of interRAI assessments in home and community care. BMC Med Inform Decis Mak 2017;17:150. doi:10.1186/s12911-017-0547-9