Abstract

Baer, Wolf, and Risley (1968) indicated “technological” was one of seven core dimensions of applied behavior analysis (ABA). They described this dimension as being met if interventions were described well enough to be implemented correctly. In applied settings, a behavior plan is often the method by which interventions are communicated to staff and parents for implementation. For the last 25 years, the necessary components of a behavior plan have been discussed in relation to compliance with regulations (e.g., Vollmer, Iwata, Zarcone, & Rodgers, Research in Developmental Disabilities 13:429–441, 1992), in school settings (e.g., Horner, Sugai, Todd, & Lewis-Palmer, Exceptionality: A Special Education Journal 8:205–215, 2000), and in other applied settings (e.g., Tarbox et al., Research in Autism Spectrum Disorders 7:1509–1517, 2013). The purpose of this research is to review the literature regarding components of behavior plans and synthesize it with a recent survey of behavior analysts regarding essential components of behavior plans. The results are discussed in light of training, treatment fidelity implications (i.e., Registered Behavior Technician Task List), public policy development (e.g., state initiative for a single behavior plan template), and research opportunities (e.g., comparison of different visual structures).

Keywords: Behavior plans, Reports, Documentation, Behavior analysis

Baer et al. (1968) stated that one dimension of applied behavior analysis (ABA) is that applied interventions are technological. Technological has been described as the process of specifying the components of applied interventions well enough to be implemented correctly by caregivers. Specifically, “all the salient ingredients of (therapy) must be described as a set of contingencies between child response, therapist response, and play materials, before a statement of technique has been approached” (Baer, Wolf, & Risley, 1968, p. 95). In today’s ABA world, written behavior plans are a key example of attempts to satisfy the technological dimension.¹

The role of behavior plans is further elevated as a necessity by the Behavior Analyst Certification Board’s (BACB) Registered Behavior Technician (RBT) Task List (2013). Specifically, the RBT Task List indicates that an RBT can “identify the essential components of a well written skills acquisition plan/behavior reduction plan” (tasks C-01 and D-01, 2013). Behavior analysts conducting the required 40-h RBT training, therefore, should also identify essential components of behavior plans. RBTs work directly with the individuals served through behavior plans and are therefore often responsible for the majority of direct implementation of the plan. Furthermore, if individuals have a better understanding of the essential components of a behavior plan, they can begin to measure the changes in the quality of services provided to the clients.

Carr (2008) described three main activities for writing a behavior plan: identifying appropriate treatments, content of the plan (e.g., reinforcement schedule), and visual structure and layout of the plan. Multiple publications have considered identification of appropriate treatments (e.g., function-based treatments that are matched to challenging behavior function; Kroeger & Phillips, 2007) and content of the plan (e.g., goals, objectives; Vollmer et al., 1992). These recommendations fall well within our guidelines as behavior analysts, such as the need to be analytical in understanding the function of behavior within context and remaining behavioral in developing written definitions of the behavior of concern. Yet, our experience has indicated a lack of literature to meet the technological dimension. This is emphasized by the fact that plan content varies substantially from three broad domains (i.e., Tarbox et al., 2013) to a higher number of specific items (i.e., Vollmer et al., 1992). It appears a consensus of the “essential components of a written skill acquisition/behavior reduction plan” has not been achieved. Additionally, some commonly identified components are vague and might benefit from clarification (e.g., communication). The purpose of this study is to provide summary information regarding essential components of written behavior plans. This information was obtained via a literature review and a recent survey of behavior analysts.

Method

Survey Rationale and Creation

Due to the paucity of research, there is a need to align current opinion of essential components with historical research. Further research could then include experimental analyses of application and utilization of behavior plans with and without essential components, visual display characteristics, and users (e.g., parents, paraprofessionals, professionals). For the purposes of this paper, we focused on establishing a consensus of essential components via historical literature and professional opinion. We also discuss possible future research opportunities to refine the identified essential components. We have done this recognizing that experimental analyses of relationships between variables (e.g., written behavior plans and treatment outcomes) are a hallmark of behavior analysis, but also recognizing that survey data and literature summaries are useful in guiding future development of experimental analyses (Kazdin, 2003).

Three of the authors created a survey that consisted of 56 questions. Six questions were demographic (e.g., credential, practice setting), 48 questions were potential components of behavior plans, and 2 questions were open ended allowing respondents to suggest additional behavior plan components. The survey items were based upon a review of the previous literature (Brinkman, Segool, Pham, & Carlson, 2007; Browning-Wright, Mayer, & Saren, 2013; Carr, 2008; Horner et al., 2000; Kroeger & Phillips, 2007; Tarbox et al., 2013; Vollmer et al., 1992) and author experiences with various behavior plan formats. The survey was developed in an online survey system that allowed for data analysis.

Survey Process

The survey was distributed via email to all Board Certified Behavior Analysts (BCBAs) and Board Certified Behavior Analysts-Doctoral (BCBA-Ds) credentialed through the BACB. Behavior analysts were targeted, as writing behavior plans is a common daily job task for practitioners and researchers alike. The email briefly explained the nature of the survey and contained a link to the online survey. Respondents rated possible components of a behavior plan on a scale of 1 to 5, where 1 indicated “not necessary to include,” 2 indicated “useful but not essential,” 3 indicated “should be included, but if omitted the plan could still be effective,” 4 indicated “must be included,” and 5 indicated “must be included, but on a separate document.” Respondents could also write in and rate additional components not specified in the survey.

Dependent Variable

The average ratings per question were used to determine a consensus as to whether a possible behavior plan component was essential or not. Average ratings of 3.0 or higher were considered essential, whereas average ratings of 2.99 or lower were considered non-essential. Averages were computed separately for BCBA and BCBA-D respondents. Computing the averages separately allowed for a comparison of different response patterns across credentials.
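The decision rule above can be illustrated with a minimal Python sketch. Only the 3.0 cutoff and the separate averaging by credential come from the text; the function names, the data layout, and the sample ratings are hypothetical and do not reflect the online survey system the authors used.

```python
from statistics import mean

ESSENTIAL_CUTOFF = 3.0  # from the text: averages of 3.0 or higher are essential


def classify(avg):
    """Label an average rating using the cutoff described in the text."""
    return "essential" if avg >= ESSENTIAL_CUTOFF else "non-essential"


def summarize(ratings_by_group):
    """Average each credential group's ratings and flag disagreement.

    ratings_by_group maps a group label (e.g., "BCBA") to a list of
    1-5 ratings for one candidate component. This layout is an assumption.
    """
    averages = {group: mean(r) for group, r in ratings_by_group.items()}
    labels = {group: classify(avg) for group, avg in averages.items()}
    # Groups disagree when their labels are not all the same.
    disagree = len(set(labels.values())) > 1
    return averages, labels, disagree


# Hypothetical ratings for one component (illustration only, not survey data).
example = {"BCBA-D": [2, 3, 3, 3], "BCBA": [3, 3, 4, 3, 3]}
averages, labels, disagree = summarize(example)
print(averages, labels, disagree)
```

Under this rule, two group averages that fall on opposite sides of 3.0 (for example, 2.8 and 3.1) would be labeled differently and flagged as a disagreement between credentials.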

Literature Summary

The content of previously published literature was reviewed to further establish a consensus of essential components of written behavior plans and to provide a summary of previous literature for readers. The literature was determined via database searches (i.e., ERIC, PsycINFO, Google Scholar) using the terms “written behavior plan,” “behavior plan,” and “positive behavior support plan.” Results varied widely (i.e., approximately 5000 hits to 0 hits). We attribute this to the generic use of the term (e.g., behavior plan for a student being mentioned, but not part of the research protocol). Most of the articles reviewed were located based upon author experience with the literature (e.g., Carr, 2008; Tarbox et al., 2013) and performing reverse searches of the references of these articles. The contents of each publication were summarized by one of the authors. Specifically, the essential components were categorized into five content areas that aligned with the questionnaire (i.e., client information, previous evaluations—non-behavioral, previous evaluations—behavioral, treatment components, consent components). The literature review is organized in this manner to allow for comparison between previous literature and the current survey data (see Table 1). The five content areas and process for review are discussed below.

Table 1.

Summary of previously published components of written behavior plans. Information is presented using the same content components as the survey of this study for ease of comparison

| Publication | Content identification | Client information | Previous evaluations (non-behavioral) | Previous evaluations (behavioral) | Treatment components | Consent components |
|---|---|---|---|---|---|---|
| Brinkman et al. (2007) | Present | Present | Absent | Present | Present | Present |
| Browning-Wright et al. (2013) | Present | Absent | Absent | Present | Present | Absent |
| Horner et al. (2000) | Absent | Absent | Present | Present | Present | Absent |
| Kroeger and Phillips (2007) | Present | Absent | Absent | Absent | Present | Absent |
| Tarbox et al. (2013) | Present | Absent | Absent | Absent | Present | Absent |
| Vollmer et al. (1992) | Present | Absent | Present | Present | Present | Present |

Tool Development Process (i.e., Column 1)

This category was scored as “present” or “absent.” If the procedures described by the authors would allow for a reasonable replication of the tool (a subjective and arbitrary distinction), it was scored present. If sufficient information was not provided (e.g., scale used was not defined, clear definition of component absent, information regarding dissemination and population missing), it was scored absent.

Behavior Plan Content Areas (i.e., Columns 2–6)

Each of the behavior plan content areas was scored as present or absent. The present and absent score indicates whether the authors discussed at least one of the identified items within that content area on the survey.

Results

A narrative summary of each identified article is provided first, followed by the survey results. The literature review is provided in chronological order. Each summary describes the essential components discussed and procedures for determining those components. For ease of review, the survey questions and results are presented in groups based upon content. Each content area is discussed below. Average ratings for each component are presented. If BCBA-Ds and BCBAs disagreed on the rating for a component (i.e., one group rated it as essential and the other did not), the average ratings for each group are provided and noted on the visual representations. Visual representations of the results are also presented in Figures 1, 2, 3, 4, and 5. A summary of essential components from the survey and previous literature is provided in Table 1.

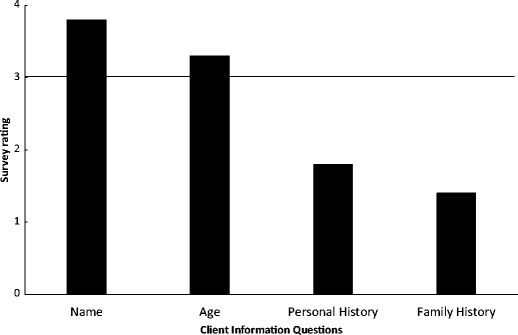

Fig. 1.

Average BCBA-D and BCBA ratings for client information components of a behavior plan

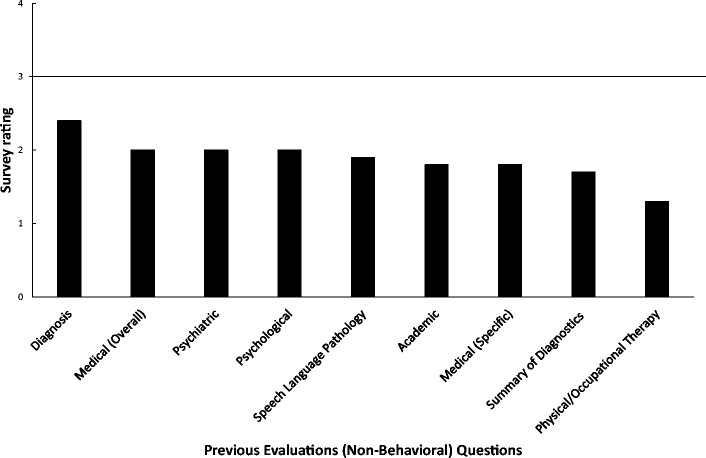

Fig. 2.

Average BCBA-D and BCBA ratings for previous evaluation (non-behavioral) components of a behavior plan

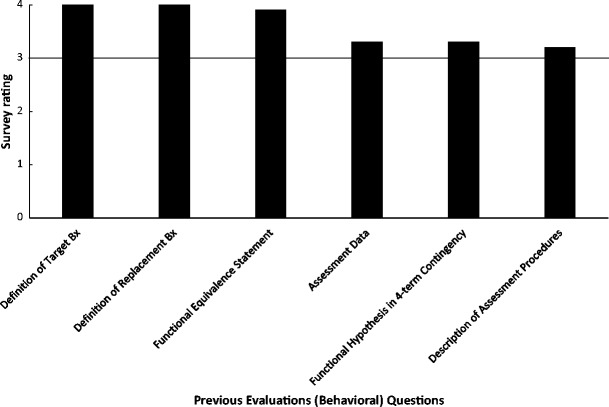

Fig. 3.

Average BCBA-D and BCBA ratings for previous evaluation (behavioral) components of a behavior plan

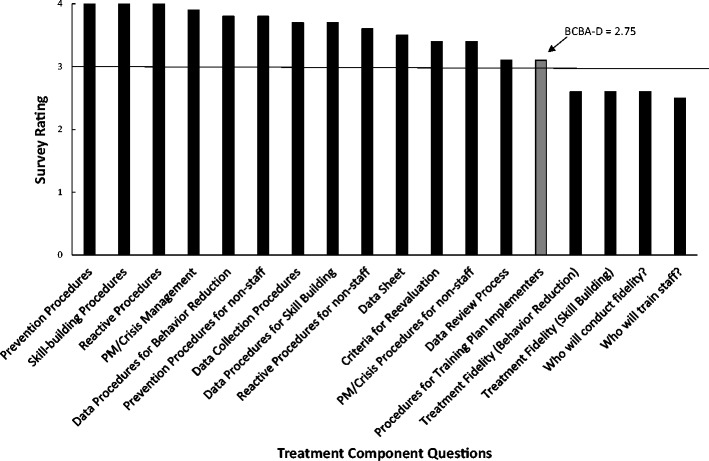

Fig. 4.

Average BCBA-D and BCBA ratings for treatment components of a behavior plan

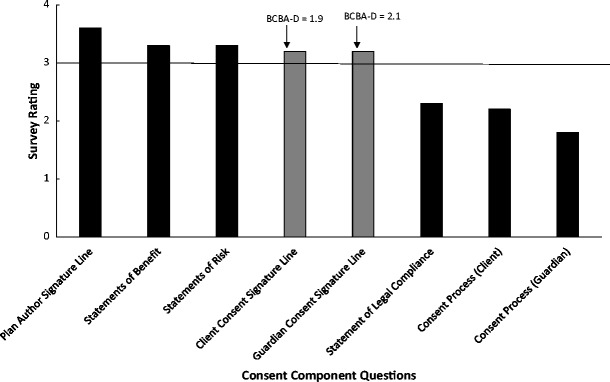

Fig. 5.

Average BCBA-D and BCBA ratings for consent components of a behavior plan

Literature Review

In response to controversy associated with decreasing challenging behavior, Vollmer et al. (1992) systematically reviewed the content of behavior plans. Specifically, the authors noted difficulty demonstrating compliance with clinical oversight of behavior reduction programs in the absence of specific guidelines and processes. The authors utilized published books, published research, and regulatory standards during the review. The authors identified 24 components to be included in written behavior plans. The identified components were then utilized as a quality assurance measure to review previously written behavior plans.

Horner et al. (2000) suggested that school-based behavior plans detail what will be done differently to evoke desirable changes in behavior and how outcomes of a behavior plan are monitored. The authors proposed six key elements of behavior plans to meet the stated purpose: learn how the student experiences events in the environment, identify prevention strategies, identify teaching strategies, avoid rewarding the problem behavior, reward desirable behaviors, and know what to do in the most difficult situations. These six key elements are buoyed by formal monitoring and evaluation of the effects of the plan. The authors concluded their description of behavior plans by providing a rating form to guide the behavior plan development process. The authors do not discuss a specific process for determining the components of the behavior plan. They do, however, refer readers to the broader positive behavior support literature for further information regarding the content of the article.

Brinkman et al. (2007) describe critical elements of a comprehensive behavioral consultation report. The identified critical elements were determined after a review of traditional psychology literature, the goals of the behavioral consultation model (e.g., Bergman & Kratochwill, 1990), and the understanding that behavior plans convey information for future service providers to work with the individual. There are 18 identified critical elements: identifying information (i.e., name, age, date of birth, dates of consultation and consultation sponsor), reason for referral, consent, problem-solving techniques (i.e., data gathering process), background information (e.g., developmental history, current functioning), problem identification (e.g., hypotheses of function), data collection procedures (i.e., define the target behavior, type of data collection), problem analysis (i.e., functional relationship statement), baseline data, problem definition (i.e., difference between current behavior and expected behavior), goal definition, treatment implementation (i.e., who will do what, when and where with data collection and fidelity procedures), summative treatment evaluation, progress monitoring data, formative treatment evaluation, summary, recommendations, and signature (consultant only). At the conclusion of the document, the authors provide an exemplar report.

Kroeger and Phillips (2007) indicated that educational professionals are creating more and more behavior plans to help support students at school, but the plans are not complete (e.g., too much focus on punitive strategies). The authors developed a guide to help support the assessment process and subsequent development of behavior plans in the academic setting and evaluated its social validity with educators. The guide included eight descriptors: focused, functional, instructional and preventive, positive, ecological, individuality effectiveness, long term, and comprehensive. Each of the eight descriptors could be scored “strong, moderate/partial, or weak” to help determine areas of the behavior plan that needed to be completed or strengthened. The guide was based upon a previous version (i.e., Weigle, 1997) and was modified based upon feedback from educator use across multiple state sites.

Browning-Wright et al. (2013) created the Behavior Support Plan Quality Evaluation Tool (BSP-QEII). The BSP-QEII contains 12 components: problem behavior, predictors/triggers of problem behavior, analysis of what supports the problem behavior is logically related to predictors, environmental change is logically related to what supports the problem behavior, predictors related to function of behavior, function related to replacement behavior, teaching strategies, reinforcers, reactive strategies, goals and objectives, team coordination in implementation, and communication. The 12 components were determined through literature review of articles and published texts specific to ABA. The utility and application of this tool have been evaluated (e.g., McVilly, Webber, Paris, & Sharp, 2013; McVilly, Webber, Sharp, & Paris, 2013).

Tarbox et al. (2013) evaluated a web-based tool for designing function-based plans. The tool guides practitioners through behavior plan development via a series of questions. Based upon answers to the questions, a behavior plan with standard treatments (although there is ability to individualize) is generated. The interventions are based upon function, evidence base (see Smith (2013) and Spring (2007) for further discussion regarding evidence-based practice), and positive reinforcement, to the greatest degree possible. Therefore, essential components of plans generated by this tool consider function, evidence base, and reinforcement strategies. The authors cited previous literature (experimental and opinion) as the method for determining the essential components.

Respondent Demographics

A total of 54 individuals (9 BCBA-Ds and 45 BCBAs) responded to the survey request. Thirty-seven of the respondents indicated that they spend 50% or more of their time in a practitioner role, 4 respondents indicated that they spend 50% or more of their time in an academic role, and 13 respondents indicated that they spend 50% or more of their time in an administrative role. In addition to a BACB credential, respondents had education, special education, educational diagnostician, educational administration, social work, school psychology, psychology, and business credentials.

Client Information Questions

Client information questions consisted of name, age, personal history, and family history (see Fig. 1). Doctoral- and master's-level behavior analysts rated name and age as essential (3.8 and 3.2, respectively). Personal and family history did not meet the criteria for essential (2.3 and 1.9, respectively).

Previous Evaluations (Non-Behavioral) Questions

The non-behavioral previous evaluations consisted of diagnosis, general medical history, psychiatric, psychological, speech-language pathology, academic, specific medical information, summary of diagnostic information, and physical/occupational therapy (see Fig. 2). None of the components in this content area met the criteria for essential. The range of average ratings was 2.4 (i.e., diagnosis) to 1.3 (i.e., physical/occupational therapy).

Previous Evaluations (Behavioral) Questions

The behavioral previous evaluations consisted of definition of target behaviors, definition of replacement behaviors, functional equivalence statements, assessment data, four-term contingency functional hypothesis statement, and description of assessment procedures (see Fig. 3). Each of the components in this content area met the cutoff for essential. The range of average ratings was 4.0 (i.e., definitions of target and replacement behaviors) to 3.2 (i.e., description of assessment procedures).

Treatment Component Questions

This content area had the most components possible for evaluation (see Fig. 4). All components but four (i.e., treatment fidelity for behavior reduction, treatment fidelity for skill building, who will conduct fidelity measurements, and who will train staff) met the criteria for essential. BCBAs and BCBA-Ds disagreed on a fifth component (i.e., procedures for training plan implementers): BCBA-Ds rated this component 2.8, and BCBAs rated it 3.1. The range of average ratings was 4.0 (i.e., prevention, skill-building, and reactive procedures) to 2.5 (i.e., who will train staff).

Consent Questions

This content area consisted of plan author signature line, statement of benefits, statement of risks, client consent signature line, guardian consent signature line, statement of legal compliance, and consent processes for the client and guardian (see Fig. 5). The plan author signature line and statements of benefit/risk met the essential criteria, whereas the statement of legal compliance and consent processes for the client and guardian did not. BCBAs and BCBA-Ds disagreed on the signature lines for the client and guardian. BCBA-Ds rated these components below the criterion (1.9 and 2.1, respectively). The range of average ratings was 3.6 (i.e., plan author signature line) to 1.8 (i.e., guardian consent process).

Discussion

The purpose of this article was to provide a summary of professional opinion, based upon previous publications and a brief survey, regarding the essential components of a written behavior plan. Understanding the essential components of a written behavior plan is vital to fulfill the technological dimension of ABA (i.e., Baer et al., 1968), to effectively train and supervise RBTs (BACB, 2013), and to potentially increase the quality of services through more integrative behavior plans. This paper is considered an initial step to guide further efforts to improve the level of guidance for practitioners and outcomes for consumers. It is hoped that this information will stimulate many more studies evaluating different aspects of behavior plans, now that potential essential components have been identified. The implications of this research are discussed below.

Several publications have been identified that, when taken together, create a foundation for understanding essential components. The inclusion of data from this survey, almost 25 years after Vollmer et al. (1992), provides an updated view from behavior analysts regarding the essential components of a written behavior plan. The survey data indicate that much of what has been considered essential for 25 years is still considered essential by behavior analysts today. This consistency of opinion over time could help facilitate practice guidelines for essential components of written behavior plans. Tools such as practice guidelines can support training and practice duties (e.g., creating behavior plans, training, and supervising RBTs regarding essential components).

One interesting outcome of the survey was the view that non-behavioral evaluations were not essential components of a behavior plan. The BCBA Fourth Edition Task List (BACB, 2012) indicates that trainees learn how to review records and available data, consider biological/medical variables, and provide behavior-analytic services in collaboration with other service providers (i.e., tasks G-01, G-02, and G-06), to name a few. Much of the time, these tasks are learned through collaboration with other disciplines (e.g., reviewing assessments, coordinating services). It is unclear if exclusion of non-behavior evaluations hinders these learning tasks (and later professional skills) or if it further contributes to the perception that behavior analysts do not play well with others (see Brodhead (2015) for an example). It might be possible to fulfill these expectations without including them in a written behavior plan.

Another interesting outcome of the survey was that treatment fidelity procedures and staff training procedures were both rated as non-essential. Given the effects that training and subsequent feedback (via fidelity monitoring) have on client outcomes (see Lerman, LeBlanc, and Valentino (2015) for further discussion), it would seem that inclusion of this information is important. However, it is the experience of these authors that lack of inclusion as an essential component does not mean these practices are not happening. Training and fidelity of implementation are typically outlined as a broader clinical practice that does not need to be reiterated on individual behavior plans. A future research question would be to evaluate whether inclusion on the behavior plan, either in a limited form (e.g., statement of frequency of fidelity evaluations on the plan) or comprehensive form (e.g., full description of fidelity protocols), affects actual fidelity practices.

A potential limitation of standardized behavior plans is lack of individualization. It is our belief that essential components act to standardize the structure of the behavior plan and not the structure of the behavior plan contents or procedures. The specific contents and interventions of the behavior plan are still left to the behavior analyst to individualize. Many of the essential components fit within the broad categories of the prevention, teaching, and reinforcing or responding to the target behavior model (Dunlap et al., 2010). These categories are meant to signal the behavior analyst to individualize content and interventions for each child and not to serve as a “cookbook” model with unvarying content across individuals. Take, for example, child A and child B. Child A had an assessment completed, and aggressive behavior was identified as maintained specifically by access to adult attention in the form of reprimands. Child B had the same form of assessment completed, and property destruction was identified as maintained by access to a preferred technological device. The behavior plans for both children would include prevention strategies (prevent), functional communication training (teach), and reactive strategies (respond). Child A is completely non-verbal with fine motor difficulties, so the behavior plan might include environmental modifications including non-contingent delivery of praise (prevent), teaching a full-hand functional communication response of tapping a card to ask for attention (teach), and finally extinguishing aggression by ignoring the target behavior and redirecting to the functional communication response (respond/reinforce). Child B is verbal and uses vocal language, and the target behavior is only observed in the classroom setting where the device is not always available. Again there would be preventative strategies, but this time perhaps in the form of a visual prompting system (prevent), teaching of a functional communication response to ask for breaks to play with the device (an iPad) on a thinned schedule (teach), and reinforcing these appropriate responses and ignoring/blocking the property destruction while prompting the individual to communicate appropriately (respond/reinforce).

Although there is much to be pleased with in relation to consistency of opinion over the last 25 years, there are still areas of research that can affect practice and training. The majority of these publications are tools for determining the quality of the written behavior plan. However, many of the publications equated quality with inclusion of certain components (e.g., statement of function), but neglected an evaluation of the accuracy of the component or other potential aspects of quality. That is, a plan that includes a function-based intervention is scored as higher quality than one that omits it, but there is no evaluation of whether the function-based intervention matches the needs of the client or whether the intervention was implemented with fidelity. Ultimately, evaluations of how essential components correlate with actual treatment outcomes will be required. As indicated by Carr (2008), determining the contents of a behavior plan is only one third of the process. Additionally, while the inclusion of these identified components may be of benefit to practitioners, their presence or absence within a behavior plan does not assure implementation or meaningfulness of the intervention. Although we still have much to learn with respect to the impact and quality of these agreed upon dimensions, there remain additional benefits in standardization (i.e., practice standards outlining essential components). One benefit is the ability for other fields or service entities (e.g., therapy providers, review committees, insurance agencies) to engage with our service in a consistent and reliable manner, allowing for efficient and consistent oversight of our widely expanding field of practice.

Further research should evaluate whether the process for identifying treatments and visual structure affects quality of interventions including fidelity, training time, and client outcomes, to name a few. The process for determining content and visual structure could also be evaluated across behavior analytic professionals (i.e., RBT, BCaBA, BCBA, BCBA-D), non-behavior analytic professionals (e.g., educator), non-professionals (e.g., parents), setting of service delivery, and goals of the behavior plan (e.g., behavior reduction versus skill acquisition). Each of these factors might mediate outcomes for the client and affect fidelity or social validity. Each of these individuals may require separate implementation guidelines, perhaps in the form of job aids as a supplement to the original lengthy behavior plan. For example, a registered behavior technician might best benefit from access to a one- to two-page document on the procedures relevant only to the clinic setting in which he/she works and perhaps not require other academic or home information.

Another reason for guidelines outlining components of behavior plans and research to further the guidelines is policy development. Standardization of the components may expedite evaluation of behavior plans for mental health agency approval and insurance funding. For example, the state of Michigan is currently developing a behavior plan template and rubric to be used by providers implementing behavior analytic services (J. Frieder, personal communication, September 22, 2016). The involvement of behavior analysts in such endeavors is critical to ensuring that templates being used by or imposed upon behavior analysts are designed in such a way as to maximize treatment efficacy and socially valid consumer outcomes and are not primarily philosophy-based documents. As mentioned previously, a template such as this provides a general outline for the necessary components of a behavior plan while leaving room for individualization of specific interventions under the broad categories. Behavior analytic interventions must be individualized based on behavior function, client preference, setting, etc. (BACB, 2014). State and organizational guidelines along with the current research may dictate the essential components (e.g., proactive, reactive, and restrictive/intrusive interventions), and then, the practitioner could detail the specifics of the assessment or treatment within each component. Development of practice guidelines might also help reduce idiosyncrasies of written behavior plans across funders and employers.

Some limitations of this research include the low number of respondents and type of research (i.e., survey versus experimental evaluation). At the time of this survey, there were approximately 12,000 to 15,000 BCBAs (J. Carr, personal communication, December 7, 2016). Fifty-four respondents represent a very small percentage of the total population on which to base consensus opinion. Additionally, survey research might be criticized as not evaluating the true relationship between variables. This is true, yet survey research allows for the development of research agendas, helps identify key variables to be researched, and can increase awareness of needed research. Survey research can support the development of a larger literature base, which often takes much more time and effort to develop. It is our hope that this information, despite its limitations, will lead to further discussion and efforts to more comprehensively develop a consensus and formal practice guidelines in the realm of essential behavior plan components.
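To make the sample-size limitation concrete, the short calculation below estimates the share of BCBAs represented by the 54 respondents, using the population range quoted above. It is a rough sketch: the variable names are illustrative, and it assumes the 12,000 to 15,000 figure approximates the number of people who received the survey.

```python
respondents = 54
population_low, population_high = 12_000, 15_000  # approximate range from the text

# A larger population gives a smaller share, so the high estimate yields the minimum.
share_min = respondents / population_high * 100
share_max = respondents / population_low * 100

print(f"{share_min:.2f}% to {share_max:.2f}%")
```

Under these assumptions, the respondents amount to roughly 0.36% to 0.45% of the population.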

Compliance with Ethical Standards

Conflict of Interest

The Behavior Analyst Certification Board distributed the survey to all registrants allowing solicitation for no cost. One author, Alissa A. Conway, is part of a team consulting with the state of Michigan to develop a uniform behavior plan template. She is not compensated for her consultation time.

Ethical Approval

The Beacon Services internal review committee reviewed this research protocol and indicated that it did not pose any significant risks to participants.

Footnotes

The authors recognize that there are many different names for behavior plans (e.g., behavior support plan, behavior intervention plan), and the name often reflects conceptual differences (e.g., positive behavior plan versus behavior plan) or different applications (e.g., skill acquisition plan versus behavior reduction plan). Behavior plan is used here as a generic reference to a document detailing intervention procedures that fits Baer et al.’s (1968) definition of technological.

This research was supported in part by the Behavior Analyst Certification Board (BACB).

References

- Baer DM, Wolf MM, Risley TR. Some current dimensions of applied behavior analysis. Journal of Applied Behavior Analysis. 1968;1:91–97. doi: 10.1901/jaba.1968.1-91.

- Behavior Analyst Certification Board (2012). Board Certified Behavior Analyst (BCBA) Task List.

- Behavior Analyst Certification Board (2013). Registered Behavior Technician (RBT) Task List.

- Behavior Analyst Certification Board (2014). Professional and Ethical Compliance Code for Behavior Analysts. Retrieved from the website: https://bacb.com/ethics-code/

- Bergman JR, Kratochwill TR. Behavioral consultation and therapy. New York: Plenum Press; 1990.

- Brinkman TM, Segool NK, Pham AV, Carlson JS. Writing comprehensive behavioral consultation reports: critical elements. International Journal of Behavioral Consultation and Therapy. 2007;3:372–383. doi: 10.1037/h0100812.

- Brodhead, M. T. (2015). Maintaining professional relationships in an interdisciplinary setting: strategies for navigating non-behavioral treatment recommendations for individuals with autism. Behavior Analysis in Practice, 8, 70–78. doi: 10.1007/s40617-015-0042-7.

- Browning-Wright, D., Mayer, G. R., & Saren, D. (2013). The behavior support plan-quality evaluation guide. Available at: http://www.pent.ca.gov Retrieved 22 June 2016

- Carr, J.E. (2008). Practitioners notebook #2—acknowledging the multiple functions of written behavior plans. APBA Reporter, 1(2). Retrieved from http://www.apbahome.net/newsletters.php

- Dunlap, G., Carr, E. G., Horner, R. H., Koegel, R. L., Sailor, W., Clarke, S., et al. (2010). A descriptive, multiyear examination of positive behavior support. Behavioral Disorders, 35, 259–279.

- Horner RH, Sugai G, Todd AW, Lewis-Palmer T. Elements of behavior support plans: a technical brief. Exceptionality: A Special Education Journal. 2000;8:205–215. doi: 10.1207/S15327035EX0803_6.

- Kazdin AE. Research designs in clinical psychology. Boston, MA: Allyn & Bacon; 2003.

- Kroeger SD, Phillips LJ. Positive behavior support assessment guide: creating student-centered behavior plans. Assessment for Effective Intervention. 2007;32:100–112. doi: 10.1177/15345084070320020101.

- Lerman, D. C., LeBlanc, L. A., & Valentino, A. L. (2015). Evidence-based application of staff and care-provider training procedures. In H. S. Roane, J. E. Ringdahl, & T. S. Falcomata (Eds.), Clinical and organizational applications of applied behavior analysis (pp. 321–352). New York: Elsevier, Inc.

- McVilly K, Webber L, Sharp G, Paris M. The content validity of the Behavior Support Plan Quality Evaluation tool (BSP-QEII) and its potential application in accommodation and day-support services for adults with intellectual disability. Journal of Intellectual Disability Research. 2013;57:703–715. doi: 10.1111/j.1365-2788.2012.01602.x.

- McVilly K, Webber L, Paris M, Sharp G. Reliability and utility of the Behavior Support Plan Quality Evaluation tool (BSP-QEII) for auditing and quality development in services for adults with intellectual disability and challenging behavior. Journal of Intellectual Disability Research. 2013;57:716–727. doi: 10.1111/j.1365-2788.2012.01603.x.

- Smith T. What is evidence-based behavior analysis? The Behavior Analyst. 2013;36:7–33. doi: 10.1007/BF03392290.

- Spring B. Evidence-based practice in clinical psychology: what it is, why it matters, what you need to know. Journal of Clinical Psychology. 2007;63:611–631. doi: 10.1002/jclp.20373.

- Tarbox J, Najdowski AC, Bergstrom R, Wilke A, Bishop M, Kenzer A, Dixon D. Randomized evaluation of a web-based tool for designing function-based behavioral intervention plans. Research in Autism Spectrum Disorder. 2013;7:1509–1517. doi: 10.1016/j.rasd.2013.08.005.

- Vollmer TR, Iwata BA, Zarcone JR, Rodgers TA. A content analysis of written behavior management programs. Research in Developmental Disabilities. 1992;13:429–441. doi: 10.1016/0891-4222(92)90001-M.

- Weigle K. Positive behavior support as a model for promoting educational inclusion. Journal of the Association for Persons with Severe Handicaps. 1997;22:36–48. doi: 10.1177/154079699702200104.