Abstract

Automated measurement of affective behavior in psychopathology has been limited primarily to screening and diagnosis. While these applications are useful, clinicians are more often concerned with whether patients are improving in response to treatment. Are symptoms abating, is affect becoming more positive, are unanticipated side effects emerging? When treatment includes neural implants, the need for objective, repeatable biometrics tied to neurophysiology becomes especially pressing. We used automated face analysis to assess treatment response to deep brain stimulation (DBS) in two patients with intractable obsessive-compulsive disorder (OCD). One was assessed intraoperatively following implantation and activation of the DBS device. The other was assessed three months post-implantation. Both were assessed during DBS on and off conditions. Positive and negative valence were quantified using a CNN trained on normative data of 160 non-OCD participants. Thus, a secondary goal was domain transfer of the classifiers. In both contexts, DBS-on resulted in marked positive affect. In response to DBS-off, affect flattened in both contexts and alternated with increased negative affect in the outpatient setting. Mean AUC for domain transfer was 0.87. These findings suggest that parametric variation of DBS is strongly related to affective behavior and may introduce vulnerability for negative affect in the event that DBS is discontinued.

Keywords: Obsessive compulsive disorder, Deep brain stimulation, Facial expression, Body expression, Action units, Behavioral dynamics, Social signal processing

1. INTRODUCTION

Obsessive compulsive disorder (OCD) is a persistent, oftentimes disabling psychiatric disorder that is characterized by obsessive thoughts and compulsive behavior. Obsessive thoughts are intrusive and unwanted and can be highly disturbing. Compulsions are repetitive behaviors that an individual feels driven to perform. The obsessive thoughts are recognized as irrational, yet they remain highly troubling and are relieved if only temporarily by compulsive rituals, such as repetitive hand washing or repeated checking to see whether a particular event (e.g., shutting a door) was performed [1]. When severe, individuals may be home-bound and highly impaired personally and in their ability to function in family or work settings. While cognitive behavioral therapy or medication often are successful in providing relief, about 25% of patients fail to respond to them. Their OCD is treatment resistant, unrelenting.

Electrical stimulation of the ventral striatum (VS) has proven effective in about 60% of otherwise treatment-resistant (i.e., intractable) cases [16]. Deep brain stimulation (DBS) entails implanting electrodes into the VS for continuous stimulation. The VS is part of a reward circuit that is involved in appetitive behavior and emotion processes more generally. A potential side effect of DBS for treatment of OCD is hypomania or mania, which can have serious consequences that can necessitate hospitalization. To avoid this potential side effect and maximize treatment efficacy, optimal programming of DBS is essential.

A mirth response of intense positive affect frequently occurs during initial DBS and signals good prognosis. The mirth response is related to the affective circuitry of the VS. Programming adjustments of DBS for OCD are made largely on the basis of subjectively reported emotion over a period of several months. While useful, subjective judgments are idiosyncratic and difficult to standardize. To maximize treatment efficacy while minimizing potential side effects, objective, quantifiable, repeatable, and efficient biomarkers of treatment response to DBS are needed.

A promising option is automated measurement of facial expression. Automated emotion recognition from facial expression is an active area of research [26, 29]. In clinical contexts, investigators have detected occurrence of depression, autism, conflict, and PTSD from visual features (i.e., face and body expression or movement) [7, 10, 18, 22, 25, 27]. In the current pilot study, we explored the feasibility of detecting changes in affect in response to time-locked changes in neurophysiological challenge. We evaluated intraoperative variation in DBS in relation to discrete facial actions and emotion valence in response to DBS.

Most previous work in relation to psychological disorders has focused on detecting presence or absence of a clinical diagnosis (e.g., depression). In contrast, our focus is synchronized variation between deep brain stimulation and affective behavior in a clinical disorder, OCD. We ask how closely affect varies with parametric changes in DBS of the ventral striatum in clinical patients. The goal is to evaluate a new measure of DBS, objective measurement of facial expression, in relation to brain stimulation. The long-term goal is to evaluate the potential efficacy of objective measurement of behavior for modulating DBS to optimize treatment outcome.

Video was available from two patients who were treated with DBS for intractable OCD. One was recorded intraoperatively. The other was recorded during interviews at about three months post-implantation of DBS electrodes. He was recorded when DBS was on and then three hours after DBS had been turned off. In both patients, our focus was on change in affect as indicated by objective facial measurement in response to variation in DBS.

Two contributions may be noted. One is exploration of a novel, objective, repeatable, efficient measure of emotion-relevant behavior in response to parametric variation in brain activity. Emotion-relevant behavior is quantified using both discrete facial action units [14] and positive and negative valence. Action units are anatomically based facial movements that individually or in combination can describe nearly all possible facial expressions. Using automated face analysis, we measured facial action units (AU) relevant to emotion. Affective valence was measured using a priori combinations of AU. Valence is informed by circumplex models of emotion and RDoC (Research Domain Criteria) constructs of positive and negative affect. We explored an approach that maps specific AU to each of these constructs. Two, we found strong support for the relation between variation in VS stimulation and affective behavior. The findings suggest the hypothesis that DBS is related to increased positive affect and vulnerability to negative affect if withdrawn.

2. METHODS

2.1. Participants and observational procedures

Patient A was a 29-year-old woman with severe, treatment-resistant early childhood onset OCD. Prior to implantation and treatment with DBS, she experienced nearly constant unwanted intrusive thoughts accompanied by the urge to perform neutralizing rituals, such as repeatedly snapping the shoulder straps of her clothing. She was video-recorded intraoperatively. While lightly sedated, she was alert and able to engage in conversation with medical personnel.

The camera was oriented about 45 degrees above her face. Her head was secured in a stereotaxic frame for surgery. The duration of the recording was about 9 minutes, of which about 4 minutes were analyzable. During the remainder of the recording, the camera was zoomed out and face size proved too small for analysis.

Patient B was a 23-year-old man with severe OCD since early childhood. His OCD was characterized by extreme, recurrent doubt and checking. He would take hours to leave his house due to checking. He also suffered from perfectionism, particularly with shaving, which could take up to 2 hours to complete. Always late to everything, he missed large amounts of school as a child and young adult. He tried some college classes but could rarely manage to get there.

He was implanted with bilateral VC/VS stimulation in 2002, which dramatically reduced his obsessive-compulsive behavior and improved his quality of life. Approximately three months following the start of DBS treatment, he was interviewed in each of two conditions. One was with DBS on. The other was after DBS had been turned off for about three hours. The video sample from the on condition was about 1 minute in duration; video from the off condition was about 43 seconds in duration. We thus were able to compare his emotion expression both with and without DBS.

2.2. Video

For Patient A, video resolution was 640×426 with an interocular distance (IOD) of about 130 pixels. The stereotaxic frame occluded the lateral eye corners and cheeks. For Patient B, video resolution was 320×240 with IOD of about 36 pixels, which is less than optimal. Using bicubic interpolation, IOD was increased to about 80 pixels for facial action unit detection.
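The arithmetic behind the upscaling for Patient B can be sketched as follows. This is a minimal illustration that assumes the whole 320×240 frame was resized uniformly; the text does not say whether the full frame or only a face crop was interpolated, and the function name `upscale_plan` is hypothetical.

```python
def upscale_plan(width, height, iod_px, target_iod_px):
    """Return the uniform scale factor and output size needed to reach a target IOD."""
    scale = target_iod_px / iod_px
    return scale, round(width * scale), round(height * scale)


# Patient B: 320x240 video, IOD of about 36 pixels, target IOD of about 80 pixels.
scale, new_w, new_h = upscale_plan(320, 240, 36, 80)
print(f"scale={scale:.2f}, size={new_w}x{new_h}")  # scale=2.22, size=711x533
```

Interpolation enlarges the face to the scale the detector expects but adds no image detail, which is consistent with the source resolution being described as less than optimal.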

2.3. Face tracking and registration

Faces in the video were tracked and normalized using real-time face alignment software that accomplishes dense 3D registration from 2D videos and images without requiring person-specific training [20]. Faces were centered, scaled, and normalized to the average interocular distance (IOD), both in the training data for the classifiers and in the patient videos to which the classifiers were then applied.

2.4. Action Unit Detection

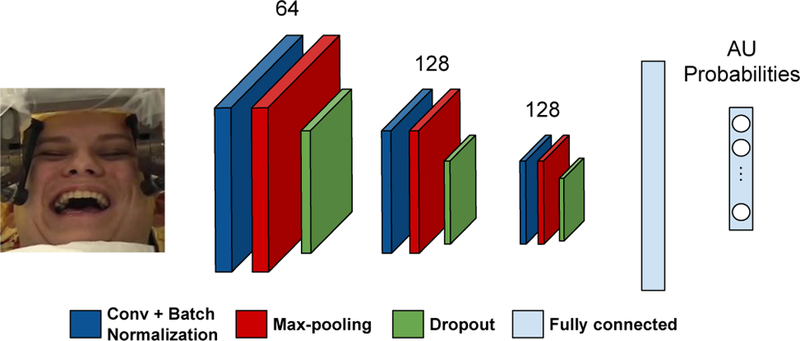

We trained a convolutional neural network (CNN) on data from 160 participants from the BP4D and BP4D+ databases [30, 31]. Subsets of these data have been used in FERA 2017 [29] and 3DFAW [21]. The CNN contains three convolutional layers and two fully connected layers (see Figure 1). Normalized frames are converted into grayscale images and fed as inputs to the network. We employ 64, 128, and 128 filters of 5 × 5 pixels in the three convolutional layers, respectively. After convolution, a rectified linear unit (ReLU) is applied to the output of the convolutional layers in order to add non-linearity to the model. We apply batch normalization to the outputs of all convolutional layers. Our network contains three max-pooling layers, which are applied after batch normalization. We apply max-pooling with a 2 × 2 window such that the output of each max-pooling layer is downsampled by a factor of 2. We add dropout layers after max-pooling and set the dropout rate to 0.25 for regularization. The output of the last dropout layer is connected to a fully connected layer of size 400. Finally, the output of the first fully connected layer is connected to the final layer of 12 neurons, each corresponding to the probability of one of 12 AUs: AU1, AU2, AU4, AU6, AU7, AU10, AU12, AU14, AU15, AU17, AU23, and AU24. The AUs were selected based on their relevance to positive and negative affect [8, 9, 12, 24].

Figure 1: Overview of the deep network trained on the BP4D+ dataset used to provide AU probabilities for OCD patients.
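To make the layer arithmetic of Section 2.4 concrete, the sketch below traces tensor shapes through the three convolution and pooling blocks. The input size (200 × 200 pixels), the absence of padding, and a stride of 1 are assumptions chosen for illustration; none of them is stated in the text, and `trace_shapes` is a hypothetical helper, not the authors' code.

```python
def trace_shapes(input_size, conv_filters=(64, 128, 128), kernel=5, pool=2):
    """Trace (channels, height, width) through conv -> ReLU -> batch norm -> max-pool -> dropout blocks."""
    channels, size = 1, input_size  # grayscale input, square frame (assumed)
    shapes = [(channels, size, size)]
    for filters in conv_filters:
        size = size - kernel + 1  # unpadded, stride-1 5x5 convolution (assumed)
        size = size // pool       # 2x2 max-pooling downsamples by a factor of 2
        channels = filters        # ReLU, batch norm, and dropout keep the shape
        shapes.append((channels, size, size))
    flattened = channels * size * size  # input width of the 400-unit dense layer
    return shapes, flattened


shapes, flattened = trace_shapes(200)
for shape in shapes:
    print(shape)
print("flattened:", flattened, "-> dense 400 -> 12 AU outputs")
```

Under these assumptions the spatial size shrinks from 200 to 98, 47, and 21 pixels, so the first fully connected layer receives 128 × 21 × 21 = 56,448 values. A different input size or padding choice changes only these numbers, not the structure.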

We performed multi-label AU classification so that the model learns to represent and discriminate multiple AUs simultaneously. For this reason, we used binary cross-entropy loss. For optimization we used the Adam optimizer [23] with a learning rate of 0.001 and a learning-rate decay weight of 0.9. We performed 5-fold cross-validation to measure the success of our model in detecting AUs.
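Binary cross-entropy suits the multi-label setting because each of the 12 outputs is scored as its own present/absent decision, so several AUs can be active in the same frame. The following is a minimal single-frame sketch in plain Python; the averaging over AUs and the clipping constant `eps` are illustrative assumptions rather than details given in the text.

```python
import math

AUS = ["AU1", "AU2", "AU4", "AU6", "AU7", "AU10",
       "AU12", "AU14", "AU15", "AU17", "AU23", "AU24"]


def binary_cross_entropy(probs, labels, eps=1e-7):
    """Mean binary cross-entropy over independent per-AU outputs for one frame."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


# Hypothetical frame in which only AU6 and AU12 (a Duchenne smile) are present.
labels = [1 if au in ("AU6", "AU12") else 0 for au in AUS]
confident = [0.9 if y else 0.1 for y in labels]
uninformative = [0.5] * len(AUS)

print(round(binary_cross_entropy(uninformative, labels), 4))  # 0.6931 (= ln 2)
print(binary_cross_entropy(confident, labels) < binary_cross_entropy(uninformative, labels))  # True
```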

Intersystem agreement between the CNN and manual FACS coding (i.e., ground truth) was quantified using AUC, F1 (positive agreement, PA), negative agreement (NA), and free-marginal kappa [3, 4] (the S score), which estimates chance agreement by assuming that each category is equally likely to be chosen at random [32]. Results are reported in Table 1.

Table 1: AU classification results on the BP4D+ dataset.

| AU | Base Rate | S score | AUC | F1-Score (PA) | NA |
|---|---|---|---|---|---|
| AU1 | 0.09 | 0.75 | 0.79 | 0.37 | 0.93 |
| AU2 | 0.07 | 0.79 | 0.80 | 0.32 | 0.94 |
| AU4 | 0.08 | 0.84 | 0.79 | 0.45 | 0.96 |
| AU6 | 0.45 | 0.70 | 0.93 | 0.84 | 0.86 |
| AU7 | 0.65 | 0.63 | 0.88 | 0.86 | 0.72 |
| AU10 | 0.60 | 0.73 | 0.93 | 0.89 | 0.82 |
| AU12 | 0.52 | 0.73 | 0.94 | 0.87 | 0.86 |
| AU14 | 0.52 | 0.43 | 0.79 | 0.73 | 0.69 |
| AU15 | 0.11 | 0.76 | 0.85 | 0.43 | 0.93 |
| AU17 | 0.14 | 0.67 | 0.80 | 0.42 | 0.90 |
| AU23 | 0.15 | 0.68 | 0.82 | 0.49 | 0.91 |
| AU24 | 0.04 | 0.91 | 0.85 | 0.18 | 0.98 |
| Average | 0.29 | 0.72 | 0.85 | 0.57 | 0.87 |
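The agreement indices in Table 1 can all be derived from a per-AU confusion matrix. The sketch below uses the standard definitions of positive agreement (F1), negative agreement, and the two-category free-marginal kappa, for which chance agreement is fixed at 0.5. The counts are hypothetical and were chosen only to mimic a rare AU; they are not data from the study.

```python
def agreement(tp, fp, fn, tn):
    """Agreement indices between classifier output and manual coding for one AU."""
    n = tp + fp + fn + tn
    observed = (tp + tn) / n  # proportion of frames on which the two sources agree
    return {
        "base_rate": (tp + fn) / n,           # how often manual coding marks the AU present
        "S": (observed - 0.5) / 0.5,          # free-marginal kappa with two categories
        "PA": 2 * tp / (2 * tp + fp + fn),    # positive agreement, identical to F1
        "NA": 2 * tn / (2 * tn + fp + fn),    # negative agreement
    }


# Hypothetical counts for an AU with a 4% base rate over 1000 frames.
for name, value in agreement(tp=20, fp=30, fn=20, tn=930).items():
    print(f"{name}: {value:.2f}")
```

With these counts the S score is 0.90 and NA is 0.97, while PA is only 0.44. The same pattern appears in the rows of Table 1 for low base-rate AUs such as AU24: agreement on absence dominates S and NA, so F1 is the more demanding index when an AU is rare.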

2.5. Positive and Negative Affect

To quantify intensity of positive and negative affect (PA and NA, respectively), linear combinations of AU intensities were used. In this section, PA and NA denote positive and negative affect, not the positive and negative agreement reported in Table 1. Positive affect was defined as intensity of AU 1+2 and AU 6+12. AU 6+12 signals positive affect and is moderately to highly correlated with dimensional subjective ratings of the same [2, 15, 17]. AU 1+2 was included in PA for its relationship to interest, engagement, and surprise [9, 13, 19]. Negative affect was defined as intensity of AU 4 and AU 7. AU 4 is common in holistic expressions of negative emotions (e.g., anger and sadness) [9, 13], is a primary or often sole measure of negative affect in facial EMG studies of emotion [5, 6, 11], and is highly correlated with dimensional subjective ratings of negative affect [2, 17]. Because AU 7 can occur secondarily to strong AU 6 [14], it was omitted from NA in the presence of AU 6 and AU 12. Additional AU were considered as indices of negative affect (e.g., AU 15) but failed to occur and so were not included in NA. PA and NA were obtained from AU probabilities as given in Algorithm 1.

Algorithm 1. Obtaining PA and NA from AU probabilities
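As an illustration only, and not a reproduction of the authors' Algorithm 1, the mapping described in Section 2.5 might be sketched as below. The equal weighting of AUs, the 0.5 threshold used to decide that AU 6 and AU 12 are present, and the function name `affect_from_aus` are all assumptions introduced for this example.

```python
def affect_from_aus(p, threshold=0.5):
    """Map per-frame AU probabilities (dict of AU name -> probability) to (PA, NA).

    Assumed for illustration: equal weights and a 0.5 presence threshold.
    """
    # Positive affect: brow raise (AU 1+2) and Duchenne smile (AU 6+12).
    pa = (p["AU1"] + p["AU2"] + p["AU6"] + p["AU12"]) / 4

    # AU 7 can occur secondarily to strong AU 6, so it is dropped from
    # negative affect whenever AU 6 and AU 12 are both present.
    smile_present = p["AU6"] >= threshold and p["AU12"] >= threshold
    au7 = 0.0 if smile_present else p["AU7"]
    na = (p["AU4"] + au7) / 2
    return pa, na


# Hypothetical smiling frame with lid tightening that accompanies the smile.
smiling = {"AU1": 0.1, "AU2": 0.1, "AU4": 0.1, "AU6": 0.9, "AU7": 0.8, "AU12": 0.9}
# Hypothetical frame with brow furrowing and lid tightening but no smile.
tense = {"AU1": 0.1, "AU2": 0.1, "AU4": 0.7, "AU6": 0.1, "AU7": 0.8, "AU12": 0.1}

print(affect_from_aus(smiling))
print(affect_from_aus(tense))
```

In the smiling frame the high AU 7 probability does not raise NA, whereas in the tense frame it does, which is the behavior the exclusion rule is meant to produce.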

3. RESULTS

The CNN was used to automatically detect AUs and positive and negative affect for each patient. Because the classifiers were trained and tested on BP4D and not on OCD patient video, we assessed their generalizability for use in the patient videos. CNN multilabel output was compared with manual FACS coding of the videos. AUC for the intraoperative, DBS-off, and DBS-on videos was 0.93, 0.77, and 0.92, respectively, which suggests good generalizability to the target domain.
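For readers unfamiliar with the metric, AUC can be read as the probability that a randomly chosen frame in which an AU is present receives a higher classifier score than a randomly chosen frame in which it is absent. The sketch below computes it by pairwise comparison on hypothetical scores and then averages the three reported values; how the per-video AUCs were aggregated across AUs is not stated in the text, so only the final averaging uses figures from the paper.

```python
def auc(scores, labels):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical classifier scores and manual codes for five frames.
scores = [0.9, 0.8, 0.4, 0.35, 0.1]
labels = [1, 1, 0, 1, 0]
print(round(auc(scores, labels), 3))  # 0.833

# Reported AUCs for the intraoperative, DBS-off, and DBS-on videos.
reported = [0.93, 0.77, 0.92]
print(round(sum(reported) / len(reported), 2))  # 0.87
```

The mean of the three reported values, 0.87, matches the domain-transfer figure given in the abstract.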

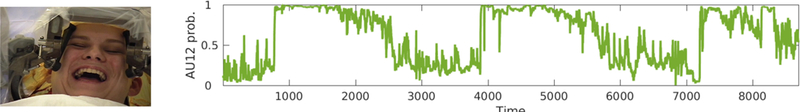

Time series for classifier outputs for AU 12 (smile) in Patient A are shown in Figure 2. Outputs for AU 6 (cheek raise, the Duchenne marker for positive affect) were highly congruent with those for AU 12 and, in the interest of brevity, are not shown. In Patient A, smiling shows a dramatic on/off cycling that maps onto the variation in DBS. Onsets of AU 12 are especially steep, rising from baseline to maximum intensity in very few frames. Each of these onsets occurs immediately after DBS is triggered. The decreases in AU 12 follow ramping down of the DBS. AU associated with negative affect were not observed. However, during the DBS-off segments, facial tone decreased dramatically. Affect drained from her face.

Figure 2: Intraoperative tracking of positive affect in Patient A. The pronounced cycling corresponds closely to variation in DBS parameters. It is high and sustained during stimulation and low and sustained when stimulation is off.

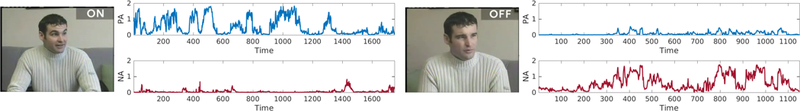

Figure 3 shows outputs for positive and negative affect (PA and NA, respectively) during On and Off segments for Patient B. When DBS was on, PA cycled on and off with variable cycle duration and with more variable and moderate onset phase dynamics. Specific AU included both illustrators (AU 1+2, which can be seen in the example frame) and strong AU 6+12. NA was infrequent and of low intensity.

Figure 3: Positive and negative affect (PA and NA, respectively) in Patient B. In the left panels, DBS is on. In the right panels, DBS has been off for three hours.

When DBS was off, PA was greatly attenuated. PA signal strength was at chance levels. NA by contrast was frequent and cycled on and off over the entire segment. This activity was primarily due to AU 7 (lower eyelid tightening, which is common in anger) and AU 4 (brow furrowing), which is common to anger and other negative emotions. AU 7 and low-intensity AU 4 are visible in the example.

4. DISCUSSION

DBS was associated with marked variation in affect, especially intraoperatively. In that context, steep increases in DBS produced dramatic increases in positive affect. Smiling was intense and protracted. Two of three smiles were about a minute in duration, which is unusual. When smiles ended, muscle tone appeared to drain from the face rather than return to a baseline. Loss of tone is not described in the Facial Action Coding System and to our knowledge has not been reported previously in emotion research. Experience with DBS suggests that facial actions alone may be insufficient to annotate facial affect.

Unlike Patient A, for whom DBS was novel, Patient B was three months post-implantation and had accommodated to chronic DBS. When his DBS was discontinued for three hours, the effects were less pronounced than in the intraoperative case but were dramatic nevertheless. While DBS was on, Patient B was highly interactive; he frequently used brow raises as illustrators or indices of surprise and frequently displayed Duchenne smiles. Large arm and movements were integrated with his facial affect. Cycles of positive affect were well organized, showed more gradual acceleration in smile onsets, and were variable in intensity and duration.

When DBS was off for three hours, Patient B’s behavior changed markedly. Gone were the paralinguistic brow raises, smiling was infrequent and failed to include the Duchenne marker (AU 6), lower eyelid tightening (AU 7) occurred, and brow furrowing (AU 4) was common. While these indicators of NA were not severe, affect was consistently flattened and mildly negative.

The findings in both patients suggest VS stimulation has a strong influence on both positive and negative affect. The relation between PA and the VS has been noted previously; the relation between NA and VS has been less appreciated. Further work will be needed to explore the full range of affective experience in relation to DBS. This will be increasingly important with the advent of “closed-loop” DBS in which level of stimulation is titrated continuously in response to neurophysiology and affective behavior. Experimental investigations of closed-loop DBS are underway.

DBS as a treatment for OCD raises essential questions about personality. It would be important to know about Patient B’s levels of extraversion and neuroticism prior to DBS. Was he reserved, inhibited, slightly negative, as he was in the off phase? Or was he more outgoing, positive, and engaging, at least when his OCD allowed? To what extent might DBS alter qualities of being? Does habituation occur [28]? What might be the consequences of withdrawing DBS? Questions such as these will require longitudinal designs.

Several limitations are noted. In common with most previous reports of DBS for OCD and depression, the number of subjects was small. Neurosurgery for psychiatric disorders is in its early stages, and cost and availability of subjects are limiting factors. Video in the current study was available from only two patients, was relatively brief, and was not recorded with analysis in mind. Self-reported emotion and personality measures were not obtained, and in the intraoperative case, the timing of DBS offsets was unavailable. Further research using a range of behavioral measures, larger samples, and longitudinal designs will be needed to understand the relation between neural stimulation, affect, and personality. We recently initiated two studies that have these goals.

CCS CONCEPTS.

Applied computing → Psychology

ACKNOWLEDGEMENT

This research was supported in part by NIH awards NS100549 and MH096951 and NSF award 1418026. Zakia Hammal assisted with the algorithm for valence.

Contributor Information

Jeffrey F. Cohn, University of Pittsburgh, Pittsburgh, PA

Michael S. Okun, University of Florida, Gainesville, Florida

Laszlo A. Jeni, Carnegie Mellon University, Pittsburgh, PA

Itir Onal Ertugrul, Carnegie Mellon University, Pittsburgh, PA

David Borton, Brown University, Providence, Rhode Island

Donald Malone, Cleveland Clinic, Cleveland, Ohio

Wayne K. Goodman, Baylor College of Medicine, Houston, Texas

REFERENCES

- [1] American Psychiatric Association. 2015. Diagnostic and statistical manual of mental disorders (DSM-5®). American Psychiatric Pub.

- [2] Baker Jason K, Haltigan John D, Brewster Ryan, Jaccard James, and Messinger Daniel. 2010. Non-expert ratings of infant and parent emotion: Concordance with expert coding and relevance to early autism risk. International Journal of Behavioral Development 34, 1 (2010), 88–95.

- [3] Bennett Edward M, Alpert R, and Goldstein AC. 1954. Communications through limited-response questioning. Public Opinion Quarterly 18, 3 (1954), 303–308.

- [4] Brennan Robert L and Prediger Dale J. 1981. Coefficient kappa: Some uses, misuses, and alternatives. Educational and psychological measurement 41, 3 (1981), 687–699.

- [5] Cacioppo John T, Martzke Jeffrey S, Petty Richard E, and Tassinary Louis G. 1988. Specific forms of facial EMG response index emotions during an interview: From Darwin to the continuous flow hypothesis of affect-laden information processing. Journal of personality and social psychology 54, 4 (1988), 592.

- [6] Cacioppo John T, Petty Richard E, Losch Mary E, and Kim Hai Sook. 1986. Electromyographic activity over facial muscle regions can differentiate the valence and intensity of affective reactions. Journal of personality and social psychology 50, 2 (1986), 260.

- [7] Campbell Kathleen, Carpenter Kimberly LH, Hashemi Jordan, Espinosa Steven, Marsan Samuel, Borg Jana Schaich, Chang Zhuoqing, Qiu Qiang, Vermeer Saritha, Adler Elizabeth, et al. 2018. Computer vision analysis captures atypical attention in toddlers with autism. Autism (2018), 1362361318766247.

- [8] Camras Linda A. 1992. Expressive development and basic emotions. Cognition & Emotion 6, 3–4 (1992), 269–283.

- [9] Charles Darwin. 1872/1998. The expression of the emotions in man and animals (3rd ed.). Oxford University Press, New York.

- [10] Dibeklioğlu Hamdi, Hammal Zakia, and Cohn Jeffrey F. 2018. Dynamic multimodal measurement of depression severity using deep autoencoding. IEEE journal of biomedical and health informatics 22, 2 (2018), 525–536.

- [11] Dimberg Ulf, Thunberg Monika, and Grunedal Sara. 2002. Facial reactions to emotional stimuli: Automatically controlled emotional responses. Cognition & Emotion 16, 4 (2002), 449–471.

- [12] Ekman Paul, Davidson Richard J, and Friesen Wallace V. 1990. The Duchenne smile: Emotional expression and brain physiology: II. Journal of personality and social psychology 58, 2 (1990), 342.

- [13] Ekman Paul and Friesen Wallace V. 2003. Unmasking the face: A guide to recognizing emotions from facial clues. Ishk.

- [14] Ekman Paul, Friesen Wallace V., and Hager Joseph C. 2002. Facial Action Coding System. Manual and Investigator’s Guide (2002).

- [15] Girard Jeffrey M. and Cohn Jeffrey F. 2018. Correlation of facial action units with observers’ ratings of affect from video and audio recordings. (Unpublished) (2018).

- [16] Greenberg BD, Gabriels LA, Malone DA Jr, Rezai AR, Friehs GM, Okun MS, Shapira NA, Foote KD, Cosyns PR, Kubu CS, et al. 2010. Deep brain stimulation of the ventral internal capsule/ventral striatum for obsessive-compulsive disorder: worldwide experience. Molecular psychiatry 15, 1 (2010), 64.

- [17] Haines Nathaniel. 2017. Decoding facial expressions that produce emotion valence ratings with human-like accuracy. Ph.D. Dissertation. The Ohio State University.

- [18] Hammal Zakia, Cohn Jeffrey F, and George David T. 2014. Interpersonal co-ordination of head motion in distressed couples. IEEE transactions on affective computing 5, 2 (2014), 155–167.

- [19] Izard Carroll E. 1993. Four systems for emotion activation: Cognitive and noncognitive processes. Psychological review 100, 1 (1993), 68.

- [20] Jeni László A, Cohn Jeffrey F, and Kanade Takeo. 2017. Dense 3d face alignment from 2d video for real-time use. Image and Vision Computing 58 (2017), 13–24.

- [21] Jeni László A, Tulyakov Sergey, Yin Lijun, Sebe Nicu, and Cohn Jeffrey F. 2016. The first 3d face alignment in the wild (3dfaw) challenge. In European Conference on Computer Vision. Springer, 511–520.

- [22] Joshi Jyoti, Dhall Abhinav, Goecke Roland, and Cohn Jeffrey F. 2013. Relative body parts movement for automatic depression analysis. In Affective Computing and Intelligent Interaction (ACII), 2013 Humaine Association Conference on. IEEE, 492–497.

- [23] Kingma Diederik P and Ba Jimmy. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

- [24] Lucey Patrick, Cohn Jeffrey F, Kanade Takeo, Saragih Jason, Ambadar Zara, and Matthews Iain. 2010. The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In Computer Vision and Pattern Recognition Workshops (CVPRW), 2010 IEEE Computer Society Conference on. IEEE, 94–101.

- [25] Martin Katherine B, Hammal Zakia, Ren Gang, Cohn Jeffrey F, Cassell Justine, Ogihara Mitsunori, Britton Jennifer C, Gutierrez Anibal, and Messinger Daniel S. 2018. Objective measurement of head movement differences in children with and without autism spectrum disorder. Molecular autism 9, 1 (2018), 14.

- [26] Sariyanidi Evangelos, Gunes Hatice, and Cavallaro Andrea. 2015. Automatic analysis of facial affect: A survey of registration, representation, and recognition. IEEE transactions on pattern analysis and machine intelligence 37, 6 (2015), 1113–1133.

- [27] Scherer Stefan, Stratou Giota, Lucas Gale, Mahmoud Marwa, Boberg Jill, Gratch Jonathan, Morency Louis-Philippe, et al. 2014. Automatic audiovisual behavior descriptors for psychological disorder analysis. Image and Vision Computing 32, 10 (2014), 648–658.

- [28] Springer Utaka S, Bowers Dawn, Goodman Wayne K, Shapira Nathan A, Foote Kelly D, and Okun Michael S. 2006. Long-term habituation of the smile response with deep brain stimulation. Neurocase 12, 3 (2006), 191–196.

- [29] Valstar Michel F, Sánchez-Lozano Enrique, Cohn Jeffrey F, Jeni László A, Girard Jeffrey M, Zhang Zheng, Yin Lijun, and Pantic Maja. 2017. Fera 2017-addressing head pose in the third facial expression recognition and analysis challenge. In Automatic Face & Gesture Recognition (FG 2017), 2017 12th IEEE International Conference on. IEEE, 839–847.

- [30] Zhang Xing, Yin Lijun, Cohn Jeffrey F, Canavan Shaun, Reale Michael, Horowitz Andy, Liu Peng, and Girard Jeffrey M. 2014. Bp4d-spontaneous: a high-resolution spontaneous 3d dynamic facial expression database. Image and Vision Computing 32, 10 (2014), 692–706.

- [31] Zhang Zheng, Girard Jeff M, Wu Yue, Zhang Xing, Liu Peng, Ciftci Umur, Canavan Shaun, Reale Michael, Horowitz Andy, Yang Huiyuan, et al. 2016. Multimodal spontaneous emotion corpus for human behavior analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 3438–3446.

- [32] Zhao Xinshu, Liu Jun S, and Deng Ke. 2013. Assumptions behind intercoder reliability indices. Annals of the International Communication Association 36, 1 (2013), 419–480.

- [33] Cohn Jeffrey F., Jeni Laszlo A., Ertugrul Itir Onal, Malone Donald, Okun Michael S., Borton David, and Goodman Wayne K. 2018. Automated Affect Detection in Deep Brain Stimulation for Obsessive-Compulsive Disorder: A Pilot Study. In 2018 International Conference on Multimodal Interaction (ICMI ’18), October 16–20, 2018, Boulder, CO, USA. ACM, New York, NY, USA, 5 pages. https://doi.org/10.1145/3242969.3243023