Abstract

Simulation models of facial expressions propose that sensorimotor regions may increase the clarity of facial expression representations in extrastriate areas. We monitored the event-related potential marker of visual working memory (VWM) representations, namely the sustained posterior contralateral negativity (SPCN), also termed contralateral delay activity, while participants performed a change detection task including to-be-memorized faces with different intensities of anger. In one condition participants could freely use their facial mimicry during the encoding/VWM maintenance of the faces while in a different condition participants had their facial mimicry blocked by a gel. Notably, SPCN amplitude was reduced for faces in the blocked mimicry condition when compared to the free mimicry condition. This modulation interacted with the empathy levels of participants such that only participants with medium-high empathy scores showed such reduction of the SPCN amplitude when their mimicry was blocked. The SPCN amplitude was larger for full expressions when compared to neutral and subtle expressions, while subtle expressions elicited lower SPCN amplitudes than neutral faces. These findings provide evidence of a functional link between mimicry and VWM for faces and further shed light on how this memory system may receive feedback from sensorimotor regions during the processing of facial expressions.

Keywords: facial mimicry, facial expressions, visual working memory, empathy, event-related potentials

Introduction

Humans are incredibly efficient in understanding the affective states of others and in empathizing with them, and in particular they are exceptionally capable of inferring others’ affective states by extracting this information from a single look at their face. Among the stimuli able to convey information on the emotions of others, faces indeed occupy a place of prime importance and for this reason faces in general, and facial expressions in particular, are of paramount importance for individuals. According to recent models aimed at explaining facial expression recognition, the understanding of others’ emotions expressed through their faces would be carried out through a simulation of those emotions by the observer (Carr et al., 2003; Wicker et al., 2003; Goldman and Sripada, 2005; Niedenthal, 2007; Pitcher et al., 2008; Goldman and de Vignemont, 2009; Gallese and Sinigaglia, 2011; Borghi et al., 2013; see also Mastrella and Sessa, 2017, for a review on this topic). This simulation process would also involve the facial mimicry of the observer, and depending on the specific simulation model, this mimicry would have a different role in extracting the meaning of the emotion from a visual pattern linked to a facial expression. Some models assign to mimicry an absolutely central role in this process; others indicate it as an accessory element (see Goldman and Sripada, 2005, for a review of different simulation models; see also Hess and Fischer, 2014, for a review on emotional mimicry) or a sort of spillover of simulation by sensorimotor areas (Wood et al., 2016). In favor of the link between mimicry and simulative processes, emotional mimicry has been associated with the activation of the mirror neuron system such that congruent facial reactions to angry and happy expressions correlate significantly with activations in the inferior frontal gyrus, supplementary motor area and cerebellum (see Likowski et al., 2012).

To note, the concept of mimicry has also been closely linked to emotional contagion in particular and to the construct of empathy more generally (see Prochazkova and Kret, 2017, for a recent review on this topic). Empathy is a multifaceted construct that consists of at least two different aspects: a ‘neural resonance’ process, which is the automatic tendency to simulate the states of others, including sensory, motor and emotional components; and a mentalizing process, through which it would be possible to explicitly assign affective states to others (see e.g. Decety and Lamm, 2006; Lamm et al., 2011; Decety and Svetlova, 2012; Zaki and Ochsner, 2012; Sessa et al., 2014; Bruneau et al., 2015; Kanske et al., 2015; Meconi et al., 2015; Kanske et al., 2016; Vaes et al., 2016; Marsh, 2018; Meconi et al., 2018). For instance, in a study by Sonnby-Borgström (2002), the participants were exposed to angry, neutral and happy facial expressions, while their facial muscle contractions were recorded using electromyography. The author aimed at investigating how facial mimicry behavior in ‘face-to-face interaction’ situations was related to individual differences in empathy. The results of this study demonstrated that individual differences in empathy correspond to differences in zygomaticus muscle reactions. Specifically, the high-empathy group was characterized by a significantly higher correspondence between facial expressions and self-reported feelings; on the contrary, the low-empathy group showed inverse zygomaticus muscle reactions, namely ‘smiling’ when exposed to angry expressions. Interestingly, no differences were found between the high- and low-empathy participants in their (verbally) reported feelings when exposed to happy or angry faces. Thus, the differences between the groups in empathy appeared to be related to differences in automatic somatic reactions to facial stimuli rather than to differences in their conscious interpretation of the emotional situation.

The objective of the present investigation was to test whether the mimicry of the observer is a critical element for the construction of visual working memory (VWM) representations of facial expressions of emotions, monitoring whether this process can also depend on the degree of empathy of the observer. VWM constitutes a pivotal buffer for human cognition and a critical hub between early processing stages and the manifest behavior of the individuals (see e.g. Luck, 2005). Thus, demonstrating that the observer’s facial mimicry could have an impact on the functioning of this buffer is of fundamental importance for understanding how facial expression recognition occurs and, in particular, how the VWM buffer operates when coordinating online behavior.

The coordination of processing stages/activities in different brain regions necessary for an understanding of others’ emotions is well delineated by a recent theoretical model proposed by Wood et al. (2016), which considers the recognition/discrimination of others’ emotions as a complex process involving the parallel activation of two different systems, one for the visual analysis of faces and facial expressions and a second one for sensorimotor simulation of facial expressions. This second system would trigger the activation of the emotion system, that is, the set of additional brain regions involved in emotional processing, including the limbic areas. The combination of these processing steps, in continuous iterative interaction with each other, would allow us to understand the emotion expressed by others’ faces, assigning an affective state to the others and possibly producing appropriate behavioral responses. Within this theoretical framework, a large body of studies supports the central role of the observer’s facial mimicry during emotion recognition and discrimination, such that, for instance, when mimicry is blocked/altered either mechanically (Niedenthal et al., 2001; Oberman et al., 2007; Stel and van Knippenberg, 2008; Baumeister et al., 2015; Wood et al., 2015) or chemically (e.g. by the botulinum toxin A-BTX; Baumeister et al., 2016), or in patients with facial paralysis (Keillor et al., 2002; Korb et al., 2016), the recognition of emotional faces is impaired.

A crucial aspect of Wood et al.’s (2016) model is that the sensorimotor simulation process (which, according to the authors, may or may not involve the facial mimicry of the observer depending on the intensity of the simulation) ‘feeds back to shape the visual percept itself’. This aspect of the model therefore implies that interfering with the simulation mechanism may have an effect on the quality of the representation of facial expressions. However, at the moment there is no direct evidence in the literature of this specific fascinating aspect of Wood et al.’s model.

The present experimental investigation aimed at testing the aspect of the model by Wood et al. (2016) that hypothesized a feedback process from simulation to facial percept representation. In particular, the main research question that led the present study was whether altering/blocking facial mimicry by using a hardening gel mask could interfere with VWM representations of emotional facial expressions. This would first of all allow us to demonstrate the feedback processing postulated by the model, in which the simulation has an effect on the construction of facial expression representations. It would also allow a better understanding of the functioning of the VWM buffer, as an effect of blocking/altering mimicry on this buffer would demonstrate that the simulation process normally contributes to its functioning when faces are represented/stored.

The decision to use a gel mask to block/alter the participants’ mimicry was based on some considerations: first, each electroencephalographic (EEG) session is particularly long, making it impractical to require subjects to voluntarily contract some facial muscles by using other tools (such as pens to hold between the lips, see e.g. Niedenthal, 2007 and Oberman et al., 2007). Furthermore, although the emotion of anger mainly involves the supercilii corrugator, it is known that other facial muscles are involved, including the nasalis and the levator labii superioris, and in this view the mask could have simultaneously blocked a set of muscles potentially in action during the recognition of anger expressions. In addition, the mask has also been successfully used in previous studies by Baumeister et al. (2015) and Wood et al. (2015), encouraging us to use this tool to interfere with participants’ mimicry. Finally, we would like to briefly make a further consideration that we believe to be of fundamental importance for the whole research in this field. Some of the methods used to manipulate participants’ mimicry in the previous studies employed techniques that required voluntary muscle contraction by the subjects, e.g. by holding a Chinese chopstick in the mouth and constantly pressing with the teeth (Ponari et al., 2012), by holding a pen between the lips (Niedenthal et al., 2001) or by clenching their teeth (Stel and van Knippenberg, 2008). However, it is known that voluntary and involuntary facial expressions depend on different neural tracts (Rinn, 1991) with the first controlled by impulses from the motor strip through the pyramidal tract and the latter depending on subcortical impulses through the extrapyramidal tract. The blocking of facial muscles in the case of the mask occurs in a ‘passive way’, i.e. it does not require an active voluntary contraction of the muscles by the participants. It therefore allows us to observe not so much an interference mechanism on emotion recognition due to an active muscle contraction but rather the impact of the absence/alteration of mimicry during the recognition of others’ emotions.

For our purposes, we implemented a variant of the classic change detection task (Vogel and Machizawa, 2004; Vogel et al., 2005; Luria et al., 2010; Sessa et al., 2011, 2012; Meconi et al., 2014; Sessa and Dalmaso, 2016) in which participants, who wore the gel mask for half of the experiment (manipulation of the gel within subjects), were asked, in each trial, to memorize a face (‘memory array’) with a facial expression of three possible intensities (neutral, subtle, intense) for a short time interval of ∼1 s and to decide, at the presentation of a ‘test array’, if the expression of the presented face was the same or different from that of the memorized face. The identity of the faces did not change within the same trial. In order to monitor the event-related potential (ERP) named sustained posterior contralateral negativity (SPCN; Jolicœur et al., 2010; Luria et al., 2010; Sessa et al., 2011, 2012; Meconi et al., 2014; Sessa and Dalmaso, 2016) or also contralateral delay activity (CDA; Vogel and Machizawa, 2004), the presentation of the stimuli was lateralized in the visual field and a distractor face was presented on the opposite side. An arrow placed in the center of the screen immediately above the fixation cross indicated to the participants, for each trial, whether they had to memorize the face on the left or the right side of the memory array. The participants then had to compare this memorized face with the face that appeared in the same position in the test array.

The SPCN/CDA is a well-known marker of VWM representations (see Luria et al., 2016, for a review). It is defined as the difference between the activity recorded at posterior sites (PO7/PO8, O1/O2, P3/P4) contralaterally to the presentation of one or more to-be-memorized stimuli and the activity recorded ipsilaterally to the presentation of one or more to-be-memorized stimuli. SPCN/CDA amplitude tends to increase as the amount of information to be memorized or the quality (in terms of resolution) of the representation increases (e.g. Vogel and Machizawa, 2004; Sessa et al., 2011, 2012) until an asymptotic limit is reached that corresponds to the saturation of the VWM capacity (e.g. Vogel and Machizawa, 2004).
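The definition above can be illustrated with a minimal Python sketch. It assumes that the activity of the left and right posterior sites has already been averaged into one waveform per hemisphere; the function and argument names are hypothetical and are not taken from the analysis pipeline used in this study.

```python
import numpy as np

def contralateral_minus_ipsilateral(left_hemi, right_hemi, cued_side):
    """Return the contralateral-minus-ipsilateral difference wave.

    left_hemi, right_hemi: 1-D arrays of amplitudes over time (in microvolts),
        e.g. the pooled left sites (PO7, O1, P3) and right sites (PO8, O2, P4).
    cued_side: "left" or "right", the side of the to-be-memorized stimulus.
    """
    left_hemi = np.asarray(left_hemi, dtype=float)
    right_hemi = np.asarray(right_hemi, dtype=float)
    if cued_side == "left":
        # A stimulus on the left is contralateral to the right hemisphere.
        contra, ipsi = right_hemi, left_hemi
    elif cued_side == "right":
        contra, ipsi = left_hemi, right_hemi
    else:
        raise ValueError("cued_side must be 'left' or 'right'")
    return contra - ipsi
```

A more negative difference wave during the retention interval corresponds to a larger SPCN/CDA.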

In one of our previous studies (Sessa et al., 2011) we have shown that faces with intense facial expressions (fear) elicit larger SPCN/CDA than faces with the same identity but with a neutral expression. These findings suggest either that emotions are represented in VWM, such that VWM representations may include emotion information, or that faces with an emotional expression are represented with higher resolution than neutral faces (see also Stout et al., 2013, for a replication of these findings).

The SPCN proves therefore to be a very useful marker in the present experimental context, since it offers us the opportunity to test the role of observer’s facial mimicry on the construction of facial expression representations in VWM. As a secondary goal, we also wanted to provide an extension of our previous results to a different negative facial expression (i.e. anger).

We expected to observe lower SPCN/CDA amplitude values for facial expressions memorized when participants wore the hardening gel compared to the condition in which participants’ facial mimicry was not blocked/altered; i.e. as suggested by Wood et al.’s (2016) model, we hypothesized that mimicry may increase the clarity of visual representations of facial expressions, and, as a consequence, we expected a higher resolution for facial expressions memorized when participants’ mimicry could be freely used than when blocked/altered. We also expected larger SPCN/CDA amplitude values for intense expressions of anger when compared to both neutral and subtle expressions of anger (i.e. higher resolution for intense angry expressions than for neutral and subtle expressions).

Finally, as mentioned above, the literature strongly suggests that mimicry is strictly linked to empathy (see Prochazkova and Kret, 2017). On the basis of the evidence provided by the literature on the link between mimicry and empathy, we included the empathy variable in our experimental design by measuring the participants’ empathic quotient (Baron-Cohen and Wheelwright, 2004) in order to evaluate whether the interference on the simulation process by blocking/altering participants’ mimicry may affect VWM representations of faces differently in high- and low-empathic individuals.

Method

Participants

Before starting data collection, we decided to analyze a sample of about 30 participants, as existing studies monitoring the SPCN amplitude suggest that this is an appropriate sample size (e.g. Sessa et al., 2011, 2012).

The analyses were conducted only after completing the data collection. Data were collected from 36 healthy volunteer students of the University of Padova. Because of an excess of electrophysiological artifacts, especially eye movements, data from seven participants were discarded from the analysis. All participants reported normal or corrected-to-normal vision and no history of neurological disorders. Twenty-nine participants (18 males, average age in years = 24, s.d. = 2.73; 2 left-handed) were included in the final sample.

Stimuli

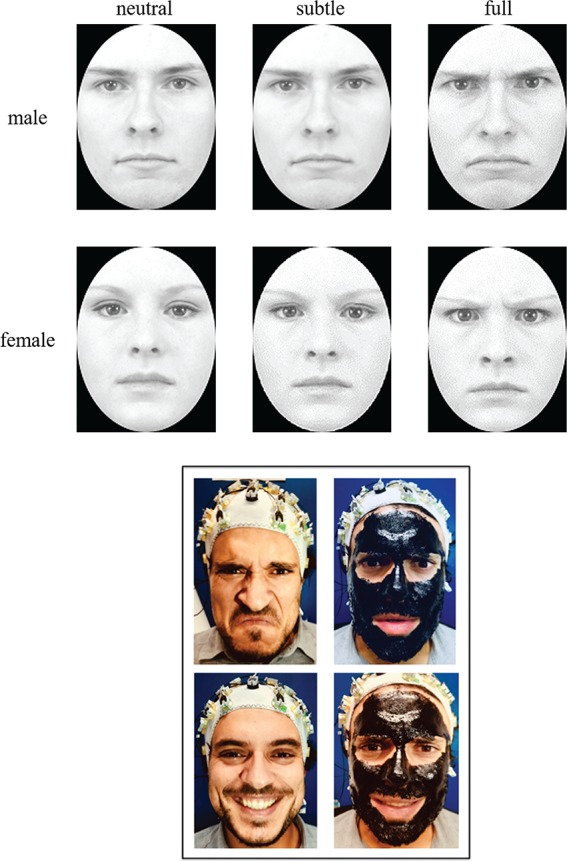

The stimuli were grayscale digital photographs of faces of eight individuals (four females and four males) expressing three different levels of emotional facial expression (neutral, subtle and full). These face stimuli were modified by Vaidya et al. (2014) from the original images taken from the Karolinska database (Lundqvist et al., 1998) that included neutral and angry facial expressions. The subtle facial expressions were generated by a morphing procedure between the neutral and angry expressions of the same individual from Vaidya et al. (2014) and were 30–40% along the morph continuum. Figure 1A shows the three levels of facial expression intensity for two individual faces (one of a female and one of a male). All images were resized to subtend a visual angle between 10 and 12 degrees. The participants were seated at 70 cm from the screen. The stimuli were presented on a 17 in CRT monitor of a computer with E-prime software.
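As a rough check on the stimulus geometry, the physical size corresponding to a given visual angle follows from the standard relation size = 2 · distance · tan(angle / 2). The sketch below applies it to the 70 cm viewing distance reported above; the function name is hypothetical and the computation is only an illustration, not part of the original procedure.

```python
import math

def size_for_visual_angle(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at a viewing distance of distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

# Extent of a face subtending 10 and 12 degrees at 70 cm.
small_cm = size_for_visual_angle(10, 70)
large_cm = size_for_visual_angle(12, 70)
print(f"{small_cm:.2f} cm to {large_cm:.2f} cm")
```

Under these assumptions, each face would span roughly 12.2 to 14.7 cm on the screen.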

Fig. 1.

(A) Examples of the stimuli used in the change detection task, one for each of the three levels of facial expression (neutral, subtle, full) for two individual faces (one of a female and one of a male). (B) The pictures show how the gel mask limited the facial movements of a participant for two different facial expressions (of anger at the top and happiness at the bottom).

Procedure

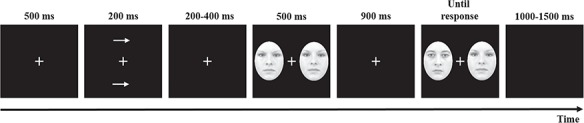

We used a variant of the change detection task (e.g. Vogel and Machizawa, 2004; Sessa et al., 2011). Each trial began with a fixation cross, presented alone for 500 ms, that remained in the center of the screen throughout the trial. It was followed by two arrow cues, one above and one below the fixation cross, both pointing in the same direction (i.e. both to the left or both to the right). The two arrows were shown for 200 ms and followed by a blank screen of variable duration (200−400 ms). Then a memory array of faces appeared, presented for 500 ms. The memory array consisted of two faces with a neutral, subtle or full emotional facial expression of anger.

Following the memory array, a blank screen with a duration of 900 ms preceded the test array onset. The test array also contained two faces, one on the right and one on the left of the fixation cross, and was shown until an answer was provided by the participant. In the memory and in the test array, faces of the same identity were presented. Participants were instructed to maintain their gaze on the fixation cross throughout the trial and to memorize only the face of the memory array shown on the side indicated by the arrows, and were also explicitly informed that the face shown on the opposite side was not relevant for the task at hand. The task was to compare the memorized face with the one presented on the same side of the test array, in order to indicate if the facial expression of the face had changed or not. In 50% of the trials, the facial expressions in the memory array and the test array were identical. In the remaining 50% of the trials, the facial expression was replaced in the test array with a different facial expression. When a change occurred, the face stimulus was replaced with a face stimulus of the same individual but with a different intensity of facial expression.

Half of the participants were instructed to press the ‘F’ key to indicate a change between the memory array and the test array and the ‘J’ key to indicate that there was no change between the memory array and the test array. The other half of the participants responded on the basis of an inverted response mapping. The responses had to be given without any time pressure; the participants were informed in this regard that the speed of response would not be taken into account for the evaluation of their performance. Following the response, a variable interval of 1000–1500 ms (in 100 ms steps) elapsed before the presentation of the fixation cross indicating the beginning of the next trial. The experiment started with a block of 12 practice trials. The participants performed 4 experimental blocks, each of 144 trials (i.e. 576 trials in total). Figure 2 shows the trial structure of the change detection task.
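The sequence of events within a trial can be summarized in a short Python sketch. The durations are those reported in this section; the event labels and function names are hypothetical, and the sampling of the variable blank interval (uniform over whole milliseconds) is an assumption, since the text only gives its range.

```python
import random

def sample_trial_timeline(rng):
    """Return (event, duration_ms) pairs for one trial; None means 'until response'."""
    return [
        ("fixation", 500),                 # fixation cross alone; it then stays on screen
        ("arrow_cues", 200),
        ("blank", rng.randint(200, 400)),  # assumption: uniform over 200-400 ms
        ("memory_array", 500),
        ("retention_blank", 900),
        ("test_array", None),              # shown until the participant responds
        ("inter_trial_interval", rng.choice(range(1000, 1501, 100))),
    ]

def memory_to_test_ms(timeline):
    """Time from memory array onset to test array onset."""
    durations = dict(timeline)
    return durations["memory_array"] + durations["retention_blank"]

timeline = sample_trial_timeline(random.Random(0))
print(memory_to_test_ms(timeline))  # 1400
```

The 1400 ms between memory array onset and test array onset matches the post-stimulus portion of the EEG epochs (−200/1400 ms) described in the recording section.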

Fig. 2.

Timeline of each trial of the change detection task.

Each participant performed the task in two different conditions (order counterbalanced across participants). In the gel condition a gel mask was applied to the participant’s whole face, so as to create a thick and uniform layer, excluding the areas near the eyes and upper lip. The product used as a gel was a removable cosmetic mask (BlackMask Gobbini©, Gabrini Cosmetics©, Bibbiano (RE), Italy) that dries 10 min after application and becomes a sort of plastified and rigid mask. The participants perceived that the gel prevented the wider movements of face muscles. In the other half of the experiment (no-gel condition) nothing was applied to the participants’ faces. Figure 1 (B) shows how the gel mask limited the facial movements of a participant for two different facial expressions (of anger at the top and happiness at the bottom).

As in the study by Wood et al. (2015), at the beginning of the experimental session participants were told that the experiment involved ‘the role of skin conductance in perception’ and that they would be asked to spread a gel on their face in order to ‘block skin conductance’ before completing a computer task.

EEG/ERP recording

The EEG was recorded during the task by means of 64 active electrodes distributed on the scalp according to the extended 10/20 system, positioning an elastic Acti-Cap with reference to the left ear lobe. The high viscosity of the gel used allowed the impedance to be kept <10 kΩ. The EEG was re-referenced offline to the mean activity recorded at the left and right ear lobes. The EEG was segmented into epochs lasting 1600 ms (−200/1400). Following the baseline correction, trials contaminated by ocular artifacts (i.e. those in which the participants blinked or moved the eyes, eliciting activity higher than ±30 or ±60 μV, respectively) or by other types of artifacts (greater than ±80 μV) were removed. Finally, the contralateral waveforms were computed by averaging the activity recorded by the electrodes of the right hemisphere when the participants were required to encode and memorize the face stimulus presented on the left side of the memory array (pooling of the electrodes O2, PO8 and P4) with the activity recorded by the electrodes positioned on the left when the participants were required to encode and memorize the face stimulus presented on the right side of the memory array (pooling of electrodes O1, PO7 and P3). The SPCN was quantified as the difference in mean amplitude between the contralateral and ipsilateral waveforms in a time window of 300–1300 ms time-locked to the presentation of the memory array for each experimental condition (facial expression: neutral, subtle and full; condition: gel and no-gel).
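The quantification steps described above (baseline correction, amplitude-based rejection and mean amplitude in the 300–1300 ms window) might be sketched as follows. This is an illustration under stated assumptions, not the software actually used: the 500 Hz sampling rate and all function names are hypothetical, only the ±80 μV criterion for non-ocular artifacts is shown, and the ocular criteria would require the ocular channels.

```python
import numpy as np

def baseline_correct(epoch, times_ms):
    """Subtract the mean of the pre-stimulus interval (times < 0 ms) from the epoch."""
    return epoch - epoch[times_ms < 0].mean()

def exceeds_threshold(epoch, limit_uv=80.0):
    """True if any sample exceeds +/- limit_uv (the non-ocular artifact criterion)."""
    return bool(np.max(np.abs(epoch)) > limit_uv)

def window_mean(wave, times_ms, start_ms=300, end_ms=1300):
    """Mean amplitude of a waveform within the SPCN measurement window."""
    mask = (times_ms >= start_ms) & (times_ms <= end_ms)
    return float(wave[mask].mean())

# Hypothetical time axis: -200 to 1400 ms sampled at 500 Hz (one sample every 2 ms).
times_ms = np.arange(-200, 1400, 2)

# Toy contralateral-minus-ipsilateral wave: flat until 300 ms, then -1 microvolt.
difference_wave = np.where(times_ms >= 300, -1.0, 0.0)
print(window_mean(difference_wave, times_ms))  # -1.0
```

In practice the window mean would be computed on the difference wave averaged over the artifact-free trials of each condition.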

At the end of the EEG session involving the change detection task, the participants were given the empathy quotient questionnaire (EQ; Baron-Cohen and Wheelwright, 2004). The EQ measures the empathic skills of the individual through 60 items (20 of which are control items). Individuals have to express their agreement on a 4-point Likert scale: ‘very much agree’, ‘partially agree’, ‘partially disagree’ and ‘very much disagree’. The analysis of the scores is carried out on the basis of a scale ranging from 0 (almost null empathy) to 80 (exceptional empathy).

The EQ values were then sorted in ascending order and the participants were divided into two groups, one with a medium-low average EQ value (N = 15) and one with a medium-high average EQ value (N = 14). The rationale for this procedure was based on the assumption that individuals with higher empathic abilities are more likely to use their facial mimicry when recognizing and discriminating other people’s facial expressions than individuals with lower empathic abilities (e.g. Sonnby-Borgström, 2002; Sonnby-Borgström et al., 2003). From this point of view it is possible that blocking/altering facial mimicry by means of the gel could compromise the representations of facial expressions more in the participants with medium-high EQ than in the participants with medium-low EQ.
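The grouping procedure can be sketched in a few lines of Python. The participant labels and scores below are made up for illustration, the function name is hypothetical, and the handling of tied scores is not specified in the text, so the sketch simply keeps the order produced by a stable sort.

```python
def split_by_sorted_score(eq_scores, n_low):
    """Sort participants by EQ score (ascending) and split them into two groups.

    eq_scores: dict mapping a participant label to an EQ score.
    n_low: number of participants assigned to the lower-scoring group.
    """
    ranked = sorted(eq_scores, key=eq_scores.get)
    return ranked[:n_low], ranked[n_low:]

# Made-up scores for five hypothetical participants.
scores = {"p1": 50, "p2": 30, "p3": 42, "p4": 61, "p5": 38}
medium_low, medium_high = split_by_sorted_score(scores, n_low=3)
print(medium_low, medium_high)  # ['p2', 'p5', 'p3'] ['p1', 'p4']
```

With the 29 participants of the final sample, calling the function with `n_low=15` would yield groups of 15 and 14, as reported above.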

Results

Behavior

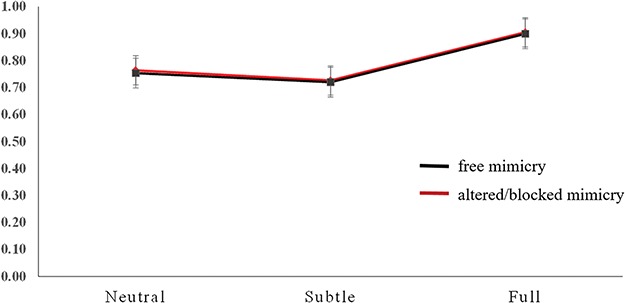

The mean proportion of correct responses was submitted to an analysis of variance (ANOVA) considering the within-subject factors emotion (neutral, subtle and full) and mimicry condition (free vs blocked/altered by the presence of the gel) and the between-subject factor EQ (medium-low EQ, medium-high EQ). The only statistically significant effect was that of emotion [F(2,26) = 325.937, P < 0.001, ηp2 = 0.923]. Subsequent planned comparisons indicated that participants were more accurate when they had to memorize faces with full expressions than when they had to memorize faces with neutral expressions (P < 0.001, SEM = 0.008, 95% CI [0.123, 0.163]) or subtle expressions (P < 0.001, SEM = 0.007, 95% CI [0.160, 0.196]). In addition, the participants were more accurate when they had to memorize faces with neutral expressions than faces with subtle expressions (P < 0.001, SEM = 0.007, 95% CI [0.016, 0.054]). The effect of the mimicry condition and the interaction between emotion and mimicry conditions were not statistically significant (F = 1.463, and F < 1, respectively). See Figure 3.

Fig. 3.

Mean proportion of correct responses in the change detection task for each facial expression condition (neutral, subtle and full).

Sustained posterior contralateral negativity

An ANOVA of the mean SPCN amplitude values was performed including the within-subject factors emotion (neutral, subtle and full) and mimicry condition (free vs blocked/altered by the presence of the gel) and the between-subject factor EQ (medium-low EQ, medium-high EQ). Wherever appropriate the Greenhouse–Geisser correction was used.

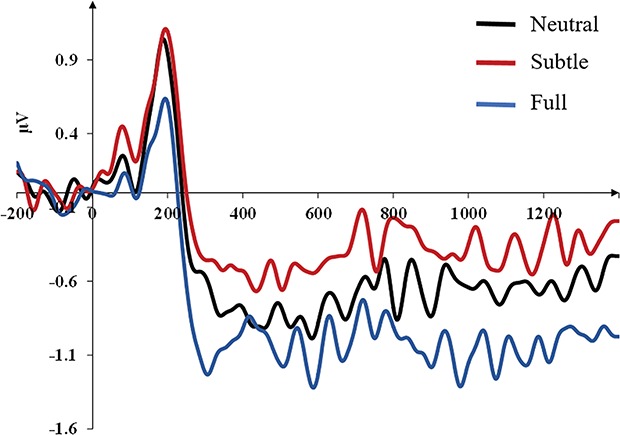

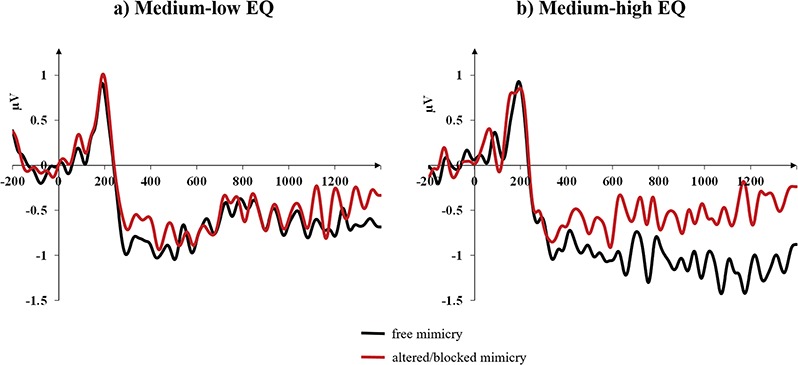

The ANOVA revealed a significant main effect of the emotion [F(2,26) = 9.841, P = 0.001, ηp2 = 0.267], the mimicry condition [F(1,27) = 5.189, P = 0.031, ηp2 = 0.161] and an interaction between the mimicry condition and the EQ [F(1,27) = 4.617, P = 0.041, ηp2 = 0.146]. The other interactions were not statistically significant (F < 1). Pairwise comparisons revealed that facial expressions of full anger elicited larger SPCN amplitude values (mean SPCN amplitude for full expressions = −1.03 μV) when compared to both neutral expressions (P = 0.004, SEM = 0.104, 95% CI [−0.540, −0.112]; mean SPCN amplitude for neutral expressions = −0.71 μV) and subtle expressions (P = 0.001, SEM = 0.166, 95% CI [−0.956, −0.275]; mean SPCN amplitude for subtle expressions = −0.42 μV). Interestingly, subtle expressions elicited reduced SPCN amplitude values when compared to neutral expressions (P = 0.047, SEM = 0.139, 95% CI [0.004, 0.575]). In brief, the whole pattern of SPCN mean amplitudes elicited by the different levels of emotions nicely mirrored participants’ accuracy in the change detection task. The differential waveforms (contralateral minus ipsilateral) time-locked to the presentation of the memory array for each level of facial expression (neutral, subtle, full) are presented in Figure 4.
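For readers who wish to relate the reported effect sizes to the F statistics, partial eta squared can be recovered from an F value and its degrees of freedom with the standard identity ηp2 = (F · df_effect) / (F · df_effect + df_error). The sketch below applies it to the main effect of the mimicry condition; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Main effect of the mimicry condition: F(1,27) = 5.189.
print(round(partial_eta_squared(5.189, 1, 27), 3))  # 0.161
```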

Fig. 4.

Grand averages of the face-locked ERP waveforms time-locked to the presentation of the memory array as a function of the facial expression conditions (neutral, subtle and full) collapsed across the mimicry conditions (free vs altered/blocked).

Pairwise comparisons (Bonferroni corrected) for participants with medium-low EQ did not highlight any effect of the mimicry condition (F < 1, SEM = 0.183, 95% CI [−0.391, 0.358]; mean SPCN amplitude for the blocked/altered mimicry condition = −0.73 μV, for the free mimicry condition = −0.75 μV). Importantly, in participants with medium-high EQ, blocking/altering mimicry significantly reduced the SPCN amplitude (F = 9.471, P = 0.005; SEM = 0.189, 95% CI [−0.969, −0.194]; ηp2 = 0.260; mean SPCN amplitude for the blocked/altered mimicry condition = −0.40 μV, for the free mimicry condition = −0.98 μV).

Figure 5 shows the differential waveforms (contralateral minus ipsilateral) time-locked to the presentation of the memory array for the two mimicry conditions (free vs blocked/altered) for medium-low EQ participants (Figure 5A) and medium-high EQ participants (Figure 5B) separately.

Fig. 5.

Grand averages of the face-locked ERP waveforms time-locked to the presentation of the memory array as a function of the mimicry conditions (free vs altered/blocked) and collapsed across facial expression conditions (neutral, subtle and full) for medium-low EQ participants (A) and medium-high EQ participants (B) separately.

These results therefore suggest that VWM representations of facial expressions appear to be impaired by the gel in the more empathic participants, but not in the less empathic participants.

Discussion

The present experimental investigation, based on the theoretical background offered by the simulation models of facial expressions and, more specifically, on the model recently proposed by Wood et al. (2016), had the objective of testing whether the facial mimicry of an observer could be an element able to modulate the visual representations of facial expressions, as predicted by this latter model that proposes a feedback processing from simulation in sensorimotor areas to processing in the extrastriate areas, such that the simulation process is able to increase the clarity of visual representations of facial expressions.

With the aim to test this hypothesis, we implemented a variant of the classic change detection task in order to monitor the electrophysiological marker of VWM representations, namely the SPCN/CDA ERP component, in two critical experimental conditions in a within-subject design. In one experimental condition the participants performed the change detection task that included faces with different intensities of facial expression of anger (neutral, subtle and full) while being able to freely use their facial mimicry during the encoding and VWM maintenance of the face stimuli; in a different experimental condition, critical to test the main hypothesis of the present investigation, the participants performed the same task but their facial mimicry was blocked/altered by a facial gel that, hardening, greatly limited their facial movements.

In line with our hypotheses, the results with regard to the gel manipulation showed reduced SPCN/CDA amplitude values for the face representations stored when participants wore the facial gel compared to when their mimicry was not blocked/altered thus suggesting that the information maintained in VWM under the blocked/altered mimicry condition was poorer than that maintained in the condition in which participants could naturally use their facial muscles during the exposure to facial expressions.

We did not observe differences between the two conditions of anger expressions (subtle vs full) as a function of the mimicry condition in terms of modulation of the SPCN amplitude. One possible explanation is that mimicry is not selectively sensitive to different levels of negative expressions, as suggested for instance by a study by Fujimura et al. (2010). These authors provided experimental evidence that the intensity level (arousal) of facial expressions induces in the observers a modulation of their facial mimicry (measured as the electromyographic reactions of the zygomatic major and supercilii corrugator muscles) only in the case of expressions with positive valence, but not in the case of negative expressions, which instead induced the same level of mimicry in the observers, as indicated by the activity of their supercilii corrugator muscle. On the other hand, another possibility that we cannot disregard is that the statistical power of our study was sufficient to detect an overall effect of mimicry but not subtler modulations related to the different levels of facial expressions.

A second main objective of our work was to investigate whether the level of empathy of the observer could be an important variable for understanding the role of mimicry in the construction and maintenance of facial expression representations in VWM. The evidence in the literature that guided this hypothesis strongly suggests that individuals with higher levels of empathy are more likely to use their facial mimicry during exposure to facial expressions such as happiness and anger (Sonnby-Borgström, 2002; Sonnby-Borgström et al., 2003; Dimberg et al., 2011) and to activate their corrugator muscle even when exposed to fear (Balconi and Canavesio, 2016) and disgust (Balconi and Canavesio, 2013; see also Rymarczyk et al., 2018). Together, these findings constitute an important body of knowledge supporting the view that mimicry is an important component of emotional empathy (see e.g. Preston and de Waal, 2002; Prochazkova and Kret, 2017). Our results agree with this previous evidence: the participants whose VWM representations of facial expressions were most impaired (in terms of SPCN amplitude) by the block/alteration of their facial mimicry were those with higher levels of empathy (as measured by the EQ).

A recent study by de la Rosa et al. (2018) used a clever paradigm of visual and motor adaptation to explore the effects of each on the recognition of facial expressions. Notably, their results showed that visual adaptation (through the repeated visual presentation of facial expressions) and motor adaptation (through the repeated execution of facial expressions) had opposite effects on expression recognition. These findings support a dual system for the recognition of others’ facial expressions, one ‘purely’ visual and one based on simulation. Our results, nevertheless, suggest that this dissociability does not imply that the two systems cannot influence each other, at least as regards the effect of the simulation system on the visual system. Furthermore, they suggest that there might be important inter-individual variability in the connectivity of the two systems, such that the influence of the simulation system on the visual system (in the present work, in terms of VWM representations) is particularly relevant for individuals with higher levels of empathy, who likely tend to recognize others’ emotional expressions through the synergy of the two systems. Moreover, in the light of these observations, it should be emphasized that these findings inform us about the recognition/discrimination of emotions under conditions of interference with simulation, but tell us nothing about the observer’s subjective experience, which might differ depending on whether facial expression processing is accomplished on a visual basis, on a simulation basis or through an integration of the two systems. We believe that answering this question is one of the most ambitious challenges that future research will have to face.

The present study also allowed us to replicate our previous findings (Sessa et al., 2011) regarding the effect of facial expressions on VWM representations, extending them to a different negative facial expression, i.e. anger. In our previous work we demonstrated that faces with an intense expression of fear elicit larger SPCN amplitudes than faces with a neutral expression, suggesting either that fearful faces are represented in greater detail (i.e. high-resolution representations) or that the emotion is also represented in VWM in some kind of additional visual format. In the present study, we replicated these results with angry faces compared to neutral faces: facial expressions of intense anger elicited an SPCN of greater amplitude than neutral and subtle facial expressions and, moreover, were associated with greater accuracy in the change detection task than the other two levels of expression intensity (neutral and subtle). This overall pattern of findings is in line with the benefit observed for negative and angry facial expressions in previous studies in terms of behavioral indices of sensitivity (Jackson et al., 2008; Langeslag et al., 2009; Jackson et al., 2014; Simione et al., 2014; Xie and Zhang, 2016).

An unexpected result was that faces with subtle anger elicited an SPCN of lower amplitude not only than faces with full expressions of anger but also than faces with neutral expressions. This electrophysiological pattern entirely parallels accuracy in the change detection task: accuracy for trials with subtle facial expressions was lower than accuracy for faces with both full and neutral expressions. A possible account for this result could take into consideration the concept of ‘distinctiveness’, originally developed in the context of long-term memory studies (see e.g. Eysenck, 1979) and later considered a variable of great importance also in the context of short-term memory studies (Hunt, 2006). Distinctiveness refers to the ability of an item to produce a reliable representation in memory relative to the other items in a certain (experimental) context. At the stage of information retrieval, distinctiveness implies that memories/representations that are sparsely represented within the representational space are more likely to be recovered than those that are densely represented. In this perspective, neutral and full expressions are two prototypical categories characterized by high distinctiveness, while subtle facial expressions of anger might be characterized by low distinctiveness and, as a consequence, might elicit smaller SPCN amplitudes and be associated with reduced accuracy in the change detection task when compared to full expressions of anger and neutral expressions.

Finally, we want to examine the lack of an impact of the mimicry manipulation on overt behavior. This discrepancy between the neural and behavioral levels of our investigation could originate from at least two possible sources. On the one hand, it is possible that the neural measure is more sensitive to the mimicry manipulation than accuracy, at least in the context of the present change detection paradigm. In line with this reasoning, the effect size of the mimicry effect on SPCN amplitude values was smaller than that of the emotion effect (ηp2 = 0.161 vs ηp2 = 0.267), an observation that could suggest that accuracy captured only the larger of the two effects. The literature offers several examples of this discrepancy between neural and behavioral findings (e.g. Luck et al., 1996; Heil et al., 2004), also in the context of the change detection task (e.g. Sessa et al., 2011, 2012). Moreover, the effects related to mimicry tend to be very small and usually require, in behavioral studies, rather large samples (with N even larger than 90–100 in some cases) to be observed (e.g. Niedenthal et al., 2001; Wood et al., 2015). An alternative explanation of this incongruity could be that SPCN and accuracy provide estimates of two different aspects of VWM functioning: while the SPCN can be considered a pure index of VWM representation, accuracy also reflects the processes of retrieval, deployment of attention on the test array stimuli and the comparison between the stored representation and the to-be-compared stimulus in the test array (i.e. ‘comparison process’; Awh et al., 2007; Hyun et al., 2009; Dell’Acqua et al., 2010). Therefore, in light of these observations, we believe that the most relevant result of the present investigation, related to a modulation of the SPCN amplitude as a function of the mimicry manipulation, is entirely reliable.
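As a rough illustration of the effect-size comparison above, partial eta squared can be converted to Cohen's f through the standard relation f = sqrt(ηp2 / (1 − ηp2)). The sketch below is not part of the original analysis: the function name `cohens_f` and the dictionary labels are illustrative assumptions, and only the two ηp2 values are taken from the text.

```python
import math


def cohens_f(partial_eta_sq: float) -> float:
    """Convert partial eta squared to Cohen's f (hypothetical helper)."""
    if not 0.0 <= partial_eta_sq < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(partial_eta_sq / (1.0 - partial_eta_sq))


# Effect sizes reported in the text for the SPCN amplitude analysis.
effects = {"mimicry": 0.161, "emotion": 0.267}

# The conversion is monotonic, so the ordering of the two effects is preserved.
f_values = {name: cohens_f(eta) for name, eta in effects.items()}

for name, f in f_values.items():
    print(f"{name}: partial eta squared = {effects[name]:.3f}, Cohen's f = {f:.2f}")
```

Under this conversion the mimicry effect corresponds to f of about 0.44 and the emotion effect to f of about 0.60, which expresses the same ordering on a scale commonly used for power calculations.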

An additional and, in our opinion, fascinating consideration is that the present task, unlike most of the tasks used in previous studies, required a delayed response, so it is possible that this facilitated the engagement of additional cognitive resources that compensated for the simulation deficit. In this vein, the SPCN, which is an online index of visual processing (albeit of a high level), could be particularly sensitive to the simulation deficit, while accuracy could reflect the recruitment of these additional cognitive resources. This interpretative framework (which of course requires further investigation to be confirmed) would therefore suggest that simulation is the privileged automatic and fast mechanism through which we recognize others' emotions, but that, in the case of a simulation deficit or when prolonged processing time is available, other resources can be recruited for the recognition of others' facial expressions.

Finally, we would like to briefly draw attention to a possible limitation of our study concerning the condition in which participants could freely use their facial mimicry. It is possible that a simple moisturizing facial mask could have been a better control, especially as regards the possible role of somatosensory feedback. At the moment we cannot therefore exclude that a small part of the effect of the hardening gel on VWM representations of faces may depend on an alteration of the somatosensory feedback as well as an alteration of the muscular feedback.

In conclusion, the present investigation showed that VWM representations of facial expressions are sensitive to the observer’s mimicry and, more specifically, that mimicry, when it can be used freely, is able to enhance the clarity of these representations, as predicted by the model of Wood et al. (2016). In neural terms, this translates into greater SPCN amplitude values than when mimicry is prevented or altered. These results therefore support this aspect of Wood et al.’s model by providing direct evidence of the relationship between mimicry and VWM representations, and they further clarify that the specific stage of VWM is involved in this feedback processing from simulation to visual analysis. Moreover, our findings also represent progress for studies on VWM, as they provide further knowledge on how this memory system operates and on the sources of input information able to modulate its functioning. Specifically, our results suggest that VWM receives feedback from sensorimotor regions during the processing of faces and facial expressions, including emotional information. We therefore believe, overall, that the present results represent valuable progress toward the definition of a simulation model for the recognition and understanding of others’ emotions.

Author contributions

Paola Sessa developed the study concept. All authors contributed to the study design. Arianna Schiano Lomoriello performed testing and data collection. Paola Sessa and Arianna Schiano Lomoriello performed the data analysis and all the authors interpreted the data. Paola Sessa drafted the manuscript. Roy Luria provided critical revision. All authors approved the final version of the manuscript for submission.

Competing financial interests

The authors declare no competing financial interests.

Acknowledgments

We would like to thank Prof. Lesley Fellows for kindly providing us with the stimuli used in the present study (see Vaidya et al., 2014). We also want to thank Chiara Cantoni, Giulia Crosara, Irene Rinaldi, Stefano Santacroce and Valeria Tornabene for their valuable contribution with the EEG data collection. Finally, we also want to thank Dr Giulio Caperna for the pictures that portray him in Figure 1 (Panel B).

References

- Awh E., Barton B., Vogel E.K. (2007). Visual working memory represents a fixed number of items regardless of complexity. Psychological Science, 18(7), 622–8. doi: 10.1111/j.1467-9280.2007.01949.x.

- Balconi M., Canavesio Y. (2013). High-frequency rTMS improves facial mimicry and detection responses in an empathic emotional task. Neuroscience, 236, 12–20. doi: 10.1016/j.neuroscience.2012.12.059.

- Balconi M., Canavesio Y. (2016). Is empathy necessary to comprehend the emotional faces? The empathic effect on attentional mechanisms (eye movements), cortical correlates (N200 event-related potentials) and facial behaviour (electromyography) in face processing. Cognition & Emotion, 30(2), 210–24. doi: 10.1080/02699931.2014.993306.

- Baron-Cohen S., Wheelwright S. (2004). The empathy quotient: an investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. Journal of Autism and Developmental Disorders, 34(2), 163–75. doi: 10.1023/B:JADD.0000022607.19833.00.

- Baumeister J.C., Papa G., Foroni F. (2016). Deeper than skin deep – the effect of botulinum toxin-A on emotion processing. Toxicon, 118, 86–90. doi: 10.1016/j.toxicon.2016.04.044.

- Baumeister J.C., Rumiati R.I., Foroni F. (2015). When the mask falls: the role of facial motor resonance in memory for emotional language. Acta Psychologica, 155, 29–36. doi: 10.1016/j.actpsy.2014.11.012.

- Borghi A.M., Scorolli C., Caligiore D., Baldassarre G., Tummolini L. (2013). The embodied mind extended: using words as social tools. Frontiers in Psychology, 4, 214. doi: 10.3389/fpsyg.2013.00214.

- Bruneau E.G., Jacoby N., Saxe R. (2015). Empathic control through coordinated interaction of amygdala, theory of mind and extended pain matrix brain regions. NeuroImage, 114, 105–19. doi: 10.1016/j.neuroimage.2015.04.034.

- Carr L., Iacoboni M., Dubeau M.C., Mazziotta J.C., Lenzi G.L. (2003). Neural mechanisms of empathy in humans: a relay from neural systems for imitations to limbic areas. Proceedings of the National Academy of Sciences of the United States of America, 100(9), 5497–502.

- de la Rosa S., Fademrecht L., Bülthoff H.H., Giese M.A., Curio C. (2018). Two ways to facial expression recognition? Motor and visual information have different effects on facial expression recognition. Psychological Science, 29(8), 1257–69. doi: 10.1177/0956797618765477.

- Decety J., Jackson P.L. (2006). A social–neuroscience perspective on empathy. Current Directions in Psychological Science, 15(2), 54–8. doi: 10.1111/j.0963-7214.2006.00406.x.

- Decety J., Svetlova M. (2012). Putting together phylogenetic and ontogenetic perspectives on empathy. Developmental Cognitive Neuroscience, 2(1), 1–24. doi: 10.1016/j.dcn.2011.05.003.

- Dell’Acqua R., Sessa P., Peressotti F., Mulatti C., Navarrete E., Grainger J. (2010). ERP evidence for ultra-fast semantic processing in the picture–word interference paradigm. Frontiers in Psychology, 1, 177. doi: 10.3389/fpsyg.2010.00177.

- Dimberg U., Andréasson P., Thunberg M. (2011). Emotional empathy and facial reactions to facial expressions. Journal of Psychophysiology, 25, 26–31. doi: 10.1027/0269-8803/a000029.

- Eysenck M.W. (1979). The feeling of knowing a word’s meaning. British Journal of Psychology, 70(2), 243–51. doi: 10.1111/j.2044-8295.1979.tb01681.x.

- Fujimura T., Sato W., Suzuki N. (2010). Facial expression arousal level modulates facial mimicry. International Journal of Psychophysiology, 76(2), 88–92. doi: 10.1016/j.ijpsycho.2010.02.008.

- Gallese V., Sinigaglia C. (2011). What is so special about embodied simulation? Trends in Cognitive Sciences, 15(11), 512–9. doi: 10.1016/j.tics.2011.09.003.

- Goldman A.I., de Vignemont F. (2009). Is social cognition embodied? Trends in Cognitive Sciences, 13(4), 154–9. doi: 10.1016/j.tics.2009.01.007.

- Goldman A.I., Sripada C.S. (2005). Simulationist models of face-based emotion recognition. Cognition, 94(3), 193–213. doi: 10.1016/j.cognition.2004.01.005.

- Heil M., Rolke B., Pecchinenda A. (2004). Automatic semantic activation is no myth: semantic context effects on the N400 in the letter-search task in the absence of response time effects. Psychological Science, 15(12), 852–7.

- Hess U., Fischer A. (2014). Emotional mimicry: why and when we mimic emotions. Social and Personality Psychology Compass, 8(2), 45–57. doi: 10.1111/spc3.12083.

- Hunt R.R. (2006). The concept of distinctiveness in memory research. In: Hunt R.R., Worthen J.B., editors. Distinctiveness and Memory, New York, NY: Oxford University Press, 3–25.

- Hyun S., Lee J.H., Jin H., et al. (2009). Conserved microRNA miR-8/miR-200 and its target USH/FOG2 control growth by regulating PI3K. Cell, 139(6), 1096–108. doi: 10.1016/j.cell.2009.11.020.

- Jackson M.C., Linden D.E., Raymond J.E. (2014). Angry expressions strengthen the encoding and maintenance of face identity representations in visual working memory. Cognition & Emotion, 28(2), 278–97. doi: 10.1080/02699931.2013.816655.

- Jackson M.C., Wolf C., Johnston S.J., Raymond J.E., Linden D.E. (2008). Neural correlates of enhanced visual short-term memory for angry faces: an fMRI study. PLoS One, 3(10), e3536. doi: 10.1371/journal.pone.0003536.

- Jolicoeur P., Dell’Acqua R., Brisson B., et al. (2010). Visual spatial attention and visual short-term memory: electro-magnetic explorations of the mind. In: Coltheart V., editor. Tutorials in Visual Cognition, Hove, UK: Psychology Press, 143–85.

- Kanske P., Böckler A., Trautwein F.M., Lesemann F.H., Singer T. (2016). Are strong empathizers better mentalizers? Evidence for independence and interaction between the routes of social cognition. Social Cognitive and Affective Neuroscience, 11(9), 1383–92. doi: 10.1093/scan/nsw052.

- Kanske P., Böckler A., Trautwein F.M., Singer T. (2015). Dissecting the social brain: introducing the EmpaToM to reveal distinct neural networks and brain-behavior relations for empathy and Theory of Mind. NeuroImage, 122, 6–19.

- Keillor J.M., Barrett A.M., Crucian G.P., Kortenkamp S., Heilman K.M. (2002). Emotional experience and perception in the absence of facial feedback. Journal of the International Neuropsychological Society, 8(1), 130–5. doi: 10.1017/S1355617702811134.

- Korb S., Wood A., Banks C.A., Agoulnik D., Hadlock T.A., Niedenthal P.M. (2016). Asymmetry of facial mimicry and emotion perception in patients with unilateral facial paralysis. JAMA Facial Plastic Surgery, 18(3), 222–7. doi: 10.1001/jamafacial.2015.2347.

- Lamm C., Decety J., Singer T. (2011). Meta-analytic evidence for common and distinct neural networks associated with directly experienced pain and empathy for pain. NeuroImage, 54(3), 2492–502. doi: 10.1016/j.neuroimage.2010.10.014.

- Langeslag S.J., Morgan H.M., Jackson M.C., Linden D.E., Van Strien J.W. (2009). Electrophysiological correlates of improved short-term memory for emotional faces. Neuropsychologia, 47(3), 887–96. doi: 10.1016/j.neuropsychologia.2008.12.024.

- Likowski K.U., Mühlberger A., Gerdes A.B., Wieser M.J., Pauli P., Weyers P. (2012). Facial mimicry and the mirror neuron system: simultaneous acquisition of facial electromyography and functional magnetic resonance imaging. Frontiers in Human Neuroscience, 6(July), 1–10. doi: 10.3389/fnhum.2012.00214.

- Luck S.J. (2005). An Introduction to the Event-Related Potential Technique. Cambridge, Mass: MIT Press.

- Luck S.J., Vogel E.K., Shapiro K.L. (1996). Word meanings can be accessed but not reported during the attentional blink. Nature, 383, 616–8. doi: 10.1038/383616a0.

- Lundqvist D., Flykt A., Öhman A. (1998). The Karolinska Directed Emotional Faces – KDEF [CD-ROM]. Department of Clinical Neuroscience, Psychology section. Stockholm, Sweden: Karolinska Institutet.

- Luria R., Balaban H., Awh E., Vogel E.K. (2016). The contralateral delay activity as a neural measure of visual working memory. Neuroscience and Biobehavioral Reviews, 62, 100–8.

- Luria R., Sessa P., Gotler A., Jolicoeur P., Dell’Acqua R. (2010). Visual short-term memory capacity for simple and complex objects. Journal of Cognitive Neuroscience, 22(3), 496–512. doi: 10.1162/jocn.2009.21214.

- Marsh A.A. (2018). The neuroscience of empathy. Current Opinion in Behavioral Sciences, 19, 110–5. doi: 10.1016/j.cobeha.2017.12.016.

- Mastrella G., Sessa P. (2017). Facial expression recognition and simulative processes. Giornale Italiano di Psicologia, 44(4), 877–902. doi: 10.1421/88772.

- Meconi F., Doro M., Lomoriello A.S., Mastrella G., Sessa P. (2018). Neural measures of the role of affective prosody in empathy for pain. Scientific Reports, 8(1), 1–13. doi: 10.1038/s41598-017-18552-y.

- Meconi F., Luria R., Sessa P. (2014). Individual differences in anxiety predict neural measures of visual working memory for untrustworthy faces. Social Cognitive and Affective Neuroscience, 9(12), 1872–9. doi: 10.1093/scan/nst189.

- Meconi F., Vaes J., Sessa P. (2015). On the neglected role of stereotypes in empathy toward other-race pain. Social Neuroscience, 10(1), 1–6. doi: 10.1080/17470919.2014.954731.

- Niedenthal P.M. (2007). Embodying emotion. Science, 316(5827), 1002–5. doi: 10.1126/science.1136930.

- Niedenthal P.M., Brauer M., Halberstadt J.B., Innes-Ker Å.H. (2001). When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cognition and Emotion, 15(6), 853–64. doi: 10.1080/02699930143000194.

- Oberman L.M., Winkielman P., Ramachandran V.S. (2007). Face to face: blocking facial mimicry can selectively impair recognition of emotional expressions. Social Neuroscience, 2(3–4), 167–78. doi: 10.1080/17470910701391943.

- Pitcher D., Garrido L., Walsh V., Duchaine B.C. (2008). Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. Journal of Neuroscience, 28(36), 8929–33. doi: 10.1523/JNEUROSCI.1450-08.2008.

- Ponari M., Conson M., D’Amico N.P., Grossi D., Trojano L. (2012). Mapping correspondence between facial mimicry and emotion recognition in healthy subjects. Emotion, 12(6), 1398.

- Preston S.D., de Waal F.B. (2002). Empathy: its ultimate and proximate bases. Behavioral and Brain Sciences, 25(1), 1–20. doi: 10.1017/S0140525X02000018.

- Prochazkova E., Kret M.E. (2017). Connecting minds and sharing emotions through mimicry: a neurocognitive model of emotional contagion. Neuroscience and Biobehavioral Reviews, 80, 99–114. doi: 10.1016/j.neubiorev.2017.05.013.

- Rinn W.E. (1991). Neuropsychology of facial expression. In: Feldman R.S., Rimé B., editors. Fundamentals of Nonverbal Behavior, Studies in Emotion & Social Interaction, New York, NY, USA: Cambridge University Press, 3–30.

- Rymarczyk K., Żurawski Ł., Jankowiak-Siuda K., Szatkowska I. (2018). Neural correlates of facial mimicry: simultaneous measurements of EMG and BOLD responses during perception of dynamic compared to static facial expressions. Frontiers in Psychology, 9(February), 1–17. doi: 10.3389/fpsyg.2018.00052.

- Sessa P., Dalmaso M. (2016). Race perception and gaze direction differently impair visual working memory for faces: an event-related potential study. Social Neuroscience, 11(1), 97–107. doi: 10.1080/17470919.2015.1040556.

- Sessa P., Luria R., Gotler A., Jolicœur P., Dell’Acqua R. (2011). Interhemispheric ERP asymmetries over inferior parietal cortex reveal differential visual working memory maintenance for fearful versus neutral facial identities. Psychophysiology, 48(2), 187–97. doi: 10.1111/j.1469-8986.2010.01046.x.

- Sessa P., Meconi F., Han S. (2014). Double dissociation of neural responses supporting perceptual and cognitive components of social cognition: evidence from processing of others' pain. Scientific Reports, 4, 7424. doi: 10.1038/srep07424.

- Sessa P., Tomelleri S., Luria R., Castelli L., Reynolds M., Dell’Acqua R. (2012). Look out for strangers! Sustained neural activity during visual working memory maintenance of other-race faces is modulated by implicit racial prejudice. Social Cognitive and Affective Neuroscience, 7(3), 314–21. doi: 10.1093/scan/nsr011.

- Simione L., Calabrese L., Marucci F.S., Belardinelli M.O., Raffone A., Maratos F.A. (2014). Emotion based attentional priority for storage in visual short-term memory. PLoS One, 9(5), e95261. doi: 10.1371/journal.pone.0095261.

- Sonnby-Borgström M. (2002). Automatic mimicry reactions as related to differences in emotional empathy. Scandinavian Journal of Psychology, 43(5), 433–43. doi: 10.1111/1467-9450.00312.

- Sonnby-Borgström M., Jönsson P., Svensson O. (2003). Emotional empathy as related to mimicry reactions at different levels of information processing. Journal of Nonverbal Behavior, 27. doi: 10.1023/A:1023608506243.

- Stel M., van Knippenberg A. (2008). The role of facial mimicry in the recognition of affect. Psychological Science, 19(10), 984–5. doi: 10.1111/j.1467-9280.2008.02188.x.

- Stout D.M., Shackman A.J., Larson C.L. (2013). Failure to filter: anxious individuals show inefficient gating of threat from working memory. Frontiers in Human Neuroscience, 7, 58. doi: 10.3389/fnhum.2013.00058.

- Vaes J., Meconi F., Sessa P., Olechowski M. (2016). Minimal humanity cues induce neural empathic reactions towards non-human entities. Neuropsychologia, 89, 132–40.

- Vaidya A.R., Jin C., Fellows L.K. (2014). Eye spy: the predictive value of fixation patterns in detecting subtle and extreme emotions from faces. Cognition, 133(2), 443–56.

- Vogel E.K., Machizawa M.G. (2004). Neural activity predicts individual differences in visual working memory capacity. Nature, 428(6984), 748–51. doi: 10.1038/nature02447.

- Vogel E.K., McCollough A.W., Machizawa M.G. (2005). Neural measures reveal individual differences in controlling access to working memory. Nature, 438(7067), 500–3. doi: 10.1038/nature04171.

- Wicker B., Keysers C., Plailly J., Royet J.P., Gallese V., Rizzolatti G. (2003). Both of us disgusted in my insula: the common neural basis of seeing and feeling disgust. Neuron, 40(3), 655–64. doi: 10.1016/S0896-6273(03)00679-2.

- Wood A., Lupyan G., Sherrin S., Niedenthal P. (2015). Altering sensorimotor feedback disrupts visual discrimination of facial expressions. Psychonomic Bulletin & Review, 23(4), 1150–6. doi: 10.3758/s13423-015-0974-5.

- Wood A., Rychlowska M., Korb S., Niedenthal P. (2016). Fashioning the face: sensorimotor simulation contributes to facial expression recognition. Trends in Cognitive Sciences, 20(3), 227–40. doi: 10.1016/j.tics.2015.12.010.

- Xie W., Zhang W. (2016). Negative emotion boosts quality of visual working memory representation. Emotion, 15(5), 760–74. doi: 10.1037/emo0000159.

- Zaki J., Ochsner K. (2012). The neuroscience of empathy: progress, pitfalls and promise. Nature Neuroscience, 15, 675–80. doi: 10.1038/nn.3085.