Abstract

The 2016 US Presidential Election brought considerable attention to the phenomenon of “fake news”: entirely fabricated and often partisan content that is presented as factual. Here we demonstrate one mechanism that contributes to the believability of fake news: fluency via prior exposure. Using actual fake news headlines presented as they were seen on Facebook, we show that even a single exposure increases subsequent perceptions of accuracy, both within the same session and after a week. Moreover, this “illusory truth effect” for fake news headlines occurs despite a low level of overall believability, and even when the stories are labeled as contested by fact checkers or are inconsistent with the reader’s political ideology. These results suggest that social media platforms help to incubate belief in blatantly false news stories, and that tagging such stories as disputed is not an effective solution to this problem. Interestingly, however, we also find that prior exposure does not impact entirely implausible statements (e.g., “The Earth is a perfect square”). These observations indicate that although extreme implausibility is a boundary condition of the illusory truth effect, only a small degree of potential plausibility is sufficient for repetition to increase perceived accuracy. As a consequence, the scope and impact of repetition on beliefs is greater than previously assumed.

Keywords: fake news, news media, social media, fluency, illusory truth effect

The ability to form accurate beliefs, particularly about issues of great importance, is key to our success as individuals as well as the functioning of our societal institutions (and, in particular, democracy). Across a wide range of domains, it is critically important to correctly assess what is true and what is false: Accordingly, differentiating real from unreal is at the heart of our societal constructs of rationality and sanity (Corlett, 2009; Sanford, Veckenstedt, & Moritz, 2014). Yet the ability to form and update beliefs about the world sometimes goes awry – and not just in the context of inconsequential, small-stakes decisions.

The potential for systematic inaccuracy in important beliefs has been particularly highlighted by the widespread consumption of disinformation during the 2016 US Presidential Election. This is most notably exemplified by so-called “fake news” – that is, news stories that were fabricated (but presented as if from legitimate sources) and promoted on social media in order to deceive the public for ideological and/or financial gain (Lazer et al., 2018). An analysis of the top performing news articles on Facebook in the months leading up to the election revealed that the top fake news articles actually outperformed the top real news articles in terms of shares, likes, and comments (Silverman, Strapagiel, Shaban, & Hall, 2016). Although it is unclear to what extent fake news influenced the outcome of the Presidential Election (Allcott & Gentzkow, 2017), there is no question that many people were deceived by entirely fabricated (and often quite fanciful) fake news stories – including, for example, high-ranking government officials, such as Pakistan’s defense minister (Goldman, 2016). How is it that so many people came to believe stories that were patently and demonstrably untrue? What mechanisms underlie these false beliefs that might be called mass delusions?

Here, we explore one potential answer: prior exposure. Given the ease with which fake news can be created and distributed on social media platforms (Shane, 2017), combined with our increasing tendency to consume news via social media (Gottfried & Shearer, 2016), it is likely that we are being exposed to fake news stories with much greater frequency than in the past. Might exposure per se help to explain people’s tendency to believe outlandish political disinformation?

The Illusory Truth Effect

There is a long tradition of work in cognitive science demonstrating that prior exposure to a statement (e.g., “The capybara is the largest of the marsupials”) increases the likelihood that participants will judge it to be accurate (Arkes, Boehm, & Xu, 1991; Bacon, 1979; Begg, Anas, & Farinacci, 1992; Dechene, Stahl, Hansen, & Wanke, 2010; Fazio, Brashier, Payne, & Marsh, 2015; Hasher, Goldstein, & Toppino, 1977; Polage, 2012; Schwartz, 1982). The dominant account of this “illusory truth effect” is that repetition increases the ease with which statements are processed (i.e., processing fluency), which in turn is used heuristically to infer accuracy (Alter & Oppenheimer, 2009; Begg et al., 1992; Reber, Winkielman, & Schwarz, 1998; Unkelbach, 2007; Wang, Brashier, Wing, Marsh, & Cabeza, 2016; Whittlesea, 1993, but see Unkelbach & Rom, 2017). Past studies have shown this phenomenon using a range of innocuous and plausible statements, such as obscure trivia questions (Bacon, 1979) or assertions about consumer products (Hawkins & Hoch, 1992; Johar & Roggeveen, 2007). Repetition can even increase the perceived accuracy of plausible but false statements among participants who are subsequently able to identify the correct answer (Fazio et al., 2015).

Here we ask whether illusory truth effects will extend to fake news. Given that the fake news stories circulating on social media are quite different from the stimuli that have been employed in previous illusory truth experiments, finding such an effect for implausible and highly partisan fake news extends the scope (and real-world relevance) of the effect and, as we will argue, informs theoretical models of the effect. Indeed, there are numerous reasons to think that the effect of simple prior exposure will not extend to fake news.

Implausibility as a potential boundary condition of the illusory truth effect

Fake news stories are constructed with the goal of drawing attention, and are therefore often quite fantastical and implausible. For example, Pennycook and Rand (2018) gave participants a set of politically partisan fake news headlines collected from online websites (e.g., “Trump to Ban All TV Shows that Promote Gay Activity Starting with Empire as President”), and found that they were only judged as accurate 17.8% of the time. To contrast this figure with the existing illusory truth literature, Fazio et al. (2015) found that false trivia items were judged to be true around 40% of the time, even when restricting the analysis to participants who were subsequently able to recognize the statement as false. Thus, these previous statements (such as “chemosynthesis is the name of the process by which plants make their food”), despite being untrue, are much more plausible than typical fake news headlines. This may have consequences for whether repetition increases perceived accuracy of fake news: When it is completely obvious that a statement is false, it may be perceived as inaccurate regardless of how fluently it is processed. Although such an influence of plausibility is not explicitly part of the Fluency-Conditional Model of illusory truth proposed by Fazio and colleagues (under which knowledge only influences judgment when people do not rely on fluency), the possibility of such an effect is acknowledged in their discussion when they state that they “expect that participants would draw on their knowledge, regardless of fluency, if statements contained implausible errors” (p. 1000). Similarly, when summarizing a meta-analysis of illusory truth effects, Dechene et al. (2010) argued that: “Statements have to be ambiguous, that is, participants have to be uncertain about their truth status because otherwise the statements’ truthfulness will be judged on the basis of their knowledge” (p. 239).
Thus, investigating the potential for an illusory truth effect for fake news is not simply important because it helps us understand the spread of fake news, but also because it allows us to test heretofore untested (but common) intuitions about the boundary conditions of the effect.

Motivated reasoning as a potential boundary condition of the illusory truth effect

Another striking feature of fake news that may counteract the effect of repetition – and which is absent from prior studies of the illusory truth effect – is the fact that fake news stories are not only political in nature, but are often extremely partisan. Although prior work has shown the illusory truth effect on average for (relatively innocuous) social-political opinion statements (Arkes, Hackett, & Boehm, 1989), the role of individual differences in ideological discordance has not been examined. Importantly, people have a strong motivation to reject the veracity of stories that conflict with their political ideology (Flynn, Nyhan, & Reifler, 2016; Kahan, 2013; Kahan et al., 2012), and the hyper-partisan nature of fake news makes such conflicts virtually assured for roughly half the population. Furthermore, the fact that fake news stories are typically of immediate real-world relevance – and therefore, presumably, more impactful on a person’s beliefs and actions than the relatively trivial pieces of information considered in previous work on the illusory truth effect – should make people more inclined to think carefully about the accuracy of such stories, rather than relying on simple heuristics when making accuracy judgments. Thus, there is reason to expect that people may be resistant to illusory truth effects for partisan fake news stories that they have politically motivated reasons to reject.

The current work

Although there are many reasons that, in theory, people should not believe fake news (even if they have seen it before), it is clear that many people do in fact find such stories credible. If repetition increases perceptions of accuracy even for highly implausible and partisan content, then increased exposure may (at least partly) explain why fake news stories have recently proliferated. Here we assess this possibility with a set of highly powered and preregistered experiments. In a first study, we explore the impact of extreme implausibility on the illusory truth effect in the context of politically neutral statements. We find that implausibility does indeed present a boundary condition for illusory truth, such that repetition does not increase perceived accuracy of statements which essentially no one believes at baseline. In two more studies, however, we find that – despite being implausible, partisan, and provocative – fake news headlines that are repeated are in fact perceived as more accurate. Taken together, these results shed light on how people come to have patently false beliefs, help to inform efforts to reduce such beliefs, and extend our understanding of the basis of illusory truth effects.

Study 1 – Extreme Implausibility Boundary Condition

Although existing models of the illusory truth effect do not explicitly take plausibility into account, we hypothesized that prior exposure should not increase perceptions of accuracy for statements that are prima facie implausible – that is, statements for which individuals hold extremely certain prior beliefs. That is, when strong internal reasons exist to reject the veracity of a statement, it should not matter how fluently the statement is processed.

To assess implausibility as a boundary condition for the illusory truth effect, we created statements that participants would certainly know to be false (i.e., extremely implausible statements such as “The Earth is a perfect square”) and manipulated prior exposure using a standard illusory truth paradigm (via Fazio et al., 2015). We also included unknown (but plausible) true and false trivia statements from a set of general knowledge norms (Tauber, Dunlosky, & Rawson, 2013). To balance out the set, participants were also given obvious known truths (see Table 1 for example items from each set). Participants first rated the “interestingness” of half of the items and, following an unrelated intervening questionnaire, they were asked to assess the accuracy of all items. Thus, half of the items in the assessment stage were previously presented (i.e., familiarized) and half were novel. If implausibility is a boundary condition for the illusory truth effect, there should be no significant effect of repetition on extremely implausible (known) falsehoods. We expect to replicate the standard illusory truth effect for unknown (but plausible) trivia statements. For extremely plausible known true statements, there may be a ceiling effect on accuracy judgments that precludes an effect of repetition (cf. results for fluency on known truths, Unkelbach, 2007).

Table 1.

Example items from Study 1.

| Knowledge | Truth status | Example item |
|---|---|---|
| Known | True | There are more than fifty stars in the universe. |
| Known | False (Implausible) | The earth is a perfect square. |
| Unknown | True | Billy the Kid’s last name was Bonney. |
| Unknown | False | Angel Falls is located in Brazil. |

Method

All data are available online (https://osf.io/txf46/). We preregistered our hypotheses, primary analyses, and sample size (https://osf.io/txf46/). Although one-tailed tests are justified in the case of pre-registered directional hypotheses, here we follow conventional practices and use two-tailed tests throughout (the use of one-tailed versus two-tailed tests does not qualitatively alter our results). All participants were recruited from Amazon’s Mechanical Turk (Horton, Rand, & Zeckhauser, 2011), which has been shown to be a reliable resource for research on political ideology (Coppock, 2016; Krupnikov & Levine, 2014; Mullinix, Leeper, Druckman, & Freese, 2015). These studies were approved by the Yale Human Subject Committee.

Participants

Our target sample was 500. In total, 566 participants completed some portion of the study. We had complete data for 515 participants (51 participants dropped out). Participants were removed if they indicated responding randomly (N = 50), or searching online for any of the claims (N = 24; 1 of whom did not respond), or going through the familiarization stage without doing the task (N = 32). These exclusions were preregistered. The final sample (N = 409; Mean age = 35.8) included 171 males and 235 females (3 did not indicate sex).
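The participant flow reported above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the three exclusion categories do not overlap (the text does not state this, but the reported counts only reconcile under that assumption); the function name `final_sample` and the dictionary keys are hypothetical labels, not part of the original materials.

```python
def final_sample(started, dropped_out, exclusions):
    """Return (complete, final) sample sizes.

    Assumes each excluded participant is counted in exactly one
    exclusion category (an assumption, not stated in the paper).
    """
    complete = started - dropped_out
    final = complete - sum(exclusions.values())
    return complete, final


# Counts as reported for Study 1.
study1 = final_sample(
    started=566,
    dropped_out=51,
    exclusions={
        "random_responding": 50,
        "searched_online": 24,
        "skipped_familiarization": 32,
    },
)
print(study1)  # (complete data, final sample)
```

Under the non-overlap assumption, the result matches the 515 participants with complete data and the final N of 409.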

Materials

We created 4 known falsehoods (i.e., extremely implausible statements) and 4 known truths (see Supplementary Information, SI, for full list). We also used 10 true and 10 false trivia questions framed as statements (via Tauber, Dunlosky, & Rawson, 2013). Trivia items were sampled from largely unknown facts (see Table 1).

Procedure

We used a parallel procedure to Fazio et al. (2015). Participants were first asked to rate the “interestingness” of the items on a 6-point scale from 1) very uninteresting to 6) very interesting. Half of the items were presented in this familiarization stage (counterbalanced). Participants then completed a few demographic questions and the Positive and Negative Affect Schedule (PANAS; Watson, Clark, & Tellegen, 1988). This filler stage consisted of 25 questions and took approximately two minutes. Demographic questions consisted of age (“What is your age?”), sex (“What is your sex?”), education (“What is the highest level of school you have completed or the highest degree you have received” with 8 typical education level options), English fluency (“Are you fluent in English”), and zip code (“Please enter the ZIP code for your primary residence. Reminder: This survey is anonymous”). Finally, participants were asked to assess the accuracy of the statements on a 6-point scale from 1) definitely false to 6) definitely true. At the end of the survey, participants were asked about random responding (“Did you respond randomly at any point during the study?”) and use of search engines (“Did you search the internet (via Google or otherwise) for any of the news headlines?”). Both were accompanied by a “yes/no” response option and the following clarification: “Note: Please be honest! You will get your HIT regardless of your response.”

Results

Following our preregistration, the key comparison was between familiarized and novel implausible items. As predicted, repetition did not increase perceptions of accuracy for implausible (known false) statements, p = .462 (see Table 2), while there was a significant effect of repetition for both true and false trivia (unknown) statements, p’s < .001. There was no significant effect of repetition on very plausible (known true) statements (p = .078). These results were supported by a significant interaction between knowledge (known, unknown) and exposure (familiarized, novel), F(1, 408) = 82.17, MSE = .35, p < .001, η2 = .17. Specifically, there was no significant overall effect of repetition for known items, F(1, 408) = .91, MSE = .30, p = .341, η2 = .002, but a highly significant overall effect for unknown items, F(1, 408) = 107.99, MSE = .47, p < .001, η2 = .21.
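The effect sizes reported alongside each F test can be recovered from the F value and its degrees of freedom. The text labels them only as η2, so the sketch below assumes they are partial eta squared, computed as F·df_effect / (F·df_effect + df_error); the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom.

    Assumption: the paper's "η2" is partial eta squared.
    """
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F tests as reported for Study 1 (df = 1, 408).
for label, f_value in [
    ("knowledge x exposure interaction", 82.17),
    ("repetition, known items", 0.91),
    ("repetition, unknown items", 107.99),
]:
    print(label, round(partial_eta_squared(f_value, 1, 408), 3))
```

Rounded as in the text, these give .17, .002, and .21, which agrees with the reported values under this assumption.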

Table 2.

Means, standard deviations, and significance tests (comparing familiarized and novel items) for known or unknown true and false statements in Study 1.

| Knowledge | Type | Familiarized | Novel | Difference | t (df) | p |
|---|---|---|---|---|---|---|
| Known | True | 5.59 (0.8) | 5.66 (0.6) | −0.07 | 1.77 (408) | .078 |
| Known | False (Implausible) | 1.13 (0.6) | 1.11 (0.5) | 0.02 | 0.74 (408) | .462 |
| Unknown | True | 4.12 (0.7) | 3.79 (0.8) | 0.33 | 6.65 (408) | < .001 |
| Unknown | False | 3.77 (0.7) | 3.39 (0.7) | 0.38 | 9.44 (408) | < .001 |

Discussion

While we replicated prior results indicating a positive effect of repetition on ambiguously plausible statements, regardless of their correctness, we observed no significant effect of repetition on accuracy judgments for statements that are patently false.

Study 2 – Fake News

Study 1 establishes that, at least, extreme implausibility is a boundary condition for the illusory truth effect. Nonetheless, given that fake news stories are highly (but not entirely) implausible (Pennycook & Rand, 2017a), it is unclear whether their level of plausibility will be sufficient to allow prior exposure to inflate the perceived accuracy of fake news. It is also unclear what impact the highly partisan nature of fake news stimuli, and the motivated reasoning to which this partisanship may lead (i.e., reasoning biased toward conclusions that are concordant with previous opinion; Kahan, 2013; Kunda, 1990; Mercier & Sperber, 2011; Redlawsk, 2002), will have on any potential illusory truth effect. Motivated reasoning may cause people to see politically discordant stories as disproportionally inaccurate, such that the illusory truth effect may be diluted (or reversed) when headlines are discordant. We assess these questions in Study 2.

In addition to assessing the baseline impact of repetition on fake news, we also investigated the impact of explicit warnings about a lack of veracity on the illusory truth effect, given that warnings have been shown to be effective tools for diminishing (although not abolishing) the memorial effects of misinformation (Ecker, Lewandowsky, & Tang, 2010). Furthermore, such warnings are a key part of efforts to combat fake news – for example, Facebook’s first major intervention against fake news consisted of flagging stories shown to be false with a caution symbol and the text “Disputed by 3rd Party Fact-Checkers” (Mosseri, 2016). To this end, half of the participants were randomly assigned to a Warning condition in which this caution symbol and “Disputed” warning were applied to the fake news headlines.

Prior work has shown that participants rate repeated trivia statements as more accurate than novel statements, even when they were told that the source was inaccurate (Begg et al., 1992). Specifically, Begg and colleagues attributed statements in the familiarization stage to people with either male or female names, and then told participants that either all males or all females were lying. Participants were then presented with repeated and novel statements – all without sources – and they rated previously presented statements as more accurate even if they had been attributed to the lying gender in the familiarization stage. This provides evidence that the illusory truth effect survives manipulations that decrease belief in statements at first exposure. Nonetheless, Begg and colleagues employed a design that was different in a variety of ways from our warning manipulation. Primarily, Begg and colleagues provided information about veracity indirectly: for any given statement presented during their familiarization phase, participants had to complete the additional step at encoding of mapping the source’s gender into the information provided about which gender was unreliable in order to inform their initial judgment about accuracy. The “Disputed” warnings we test here, conversely, do not involve this extra mapping step. Thus, by assessing their impact on the illusory truth effect, we test whether the scope of Begg and colleagues’ findings extends to this more explicit warning, while also generating practically useful insight into the efficacy of this specific fake news intervention.

Method

Participants

We had an original target sample of 500 participants in our preregistration. We then completed a full replication of the experiment with another 500 participants. Given the similarity across the two samples, the datasets were combined for the main analysis (the results are qualitatively similar when examining the two experiments separately, see SI). The first wave was completed on January 16th and the second wave was completed on February 3rd (both in 2017). In total, 1069 participants from Mechanical Turk completed some portion of the survey. However, 64 did not finish the study and were removed (33 from the no warning condition and 31 from the warning condition). A further 32 participants indicated responding randomly at some point during the study and were removed. We also removed participants who reported searching for the headlines (N = 18) or skipping through the familiarization stage (N = 6). These exclusions were preregistered for Studies 1 and 3, but accidentally omitted from the preregistration for Study 2. The results are qualitatively identical with the full sample, but we report analyses with participants removed to retain consistency across our studies. The final sample (N = 949; Mean age = 37.1) included 449 males and 489 females (11 did not respond).

Materials and Procedure

Participants engaged in a 3-stage experiment. In the familiarization stage, participants were shown six news headlines that were factually accurate (real news) and six others that were entirely untrue (fake news). The headlines were presented in an identical format to that of Facebook posts (i.e., a headline with an associated photograph above it and a byline below it; see Figure 1a; fake and real news headlines can be found in the Appendix – images for each item, as presented to participants, can be found at the following link: https://osf.io/txf46/). Participants were randomized into two conditions: 1) The warning condition where all of the fake news headlines (but none of the real news headlines) in the familiarization stage were accompanied by a “Disputed by 3rd Party Fact-Checkers” tag (see Figure 1b), or 2) The control condition where fake and real news headlines were displayed without warnings. In the familiarization stage, participants engaged with the news headlines in an ecologically valid way: they indicated whether they would share each headline on social media. Specifically, participants were asked “Would you consider sharing this story online (for example, through Facebook or Twitter)?” and were given three response options (“No, Maybe, Yes”). For purposes of data analysis, “no” was coded as 0 and “maybe” and “yes” were coded as 1 (see footnote 1).
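The binary coding of the sharing question can be sketched as follows. The mapping itself comes from the text; the function name `share_rate`, the per-participant percentage it returns, and the example responses are illustrative assumptions rather than the authors' analysis code.

```python
# Coding described in the text: "no" -> 0, "maybe" and "yes" -> 1.
SHARE_CODES = {"no": 0, "maybe": 1, "yes": 1}


def share_rate(responses):
    """Percentage of headlines a participant would consider sharing."""
    coded = [SHARE_CODES[r.strip().lower()] for r in responses]
    return 100 * sum(coded) / len(coded)


# Made-up responses to six headlines, for illustration only.
example = ["No", "Maybe", "Yes", "No", "No", "No"]
print(share_rate(example))
```

Collapsing “maybe” and “yes” treats any stated openness to sharing as willingness, so the resulting percentages are best read as an upper bound on actual sharing intent.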

Figure 1.

Sample fake news headline without (A) and with (B) “Disputed” warning, as presented in Studies 2 and 3. Note: The original image (but not the headline) has been replaced with a stock military image (under a CC0 license) for copyright purposes.

The participants then advanced to the distractor stage, in which they completed a set of filler demographic questions. These included: age, sex, education, proficiency in English, political party (Democratic, Republican, Independent, other), social and economic conservatism (separate items; see footnote 2), and two questions about the 2016 election. For these election-related questions, participants were first asked to indicate who they voted for (given the following options: Hillary Clinton, Donald Trump, Other Candidate (such as Jill Stein or Gary Johnson), I did not vote for reasons outside my control, I did not vote but I could have, and I did not vote out of protest). Participants were then asked “If you absolutely had to choose between only Clinton and Trump, who would you prefer to be the next President of the United States”. This binary was then used as our political ideology variable for the concordance/discordance analysis. Specifically, for participants who indicated a preference for Trump, Pro-Republican stories were scored as politically concordant and Pro-Democrat stories were scored as politically discordant; for participants who indicated a preference for Clinton, Pro-Democrat stories were scored as politically concordant and Pro-Republican stories were scored as politically discordant. The filler stage took approximately one minute.
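The concordance scoring rule described above amounts to a simple lookup. The sketch below is one way to express it; the function name, the string labels, and the error handling are hypothetical choices, and only the mapping itself is taken from the text.

```python
# Which headline slant counts as concordant for each forced-choice preference.
PREFERRED_LEAN = {"Trump": "Pro-Republican", "Clinton": "Pro-Democrat"}
VALID_LEANS = set(PREFERRED_LEAN.values())


def score_concordance(preferred_candidate, headline_lean):
    """Return 'concordant' or 'discordant' for one participant-headline pair."""
    if preferred_candidate not in PREFERRED_LEAN:
        raise ValueError(f"unknown candidate: {preferred_candidate!r}")
    if headline_lean not in VALID_LEANS:
        raise ValueError(f"unknown headline lean: {headline_lean!r}")
    if headline_lean == PREFERRED_LEAN[preferred_candidate]:
        return "concordant"
    return "discordant"


print(score_concordance("Trump", "Pro-Republican"))
print(score_concordance("Clinton", "Pro-Republican"))
```

Because the preference question is a forced choice between two candidates, every participant who answers it is assigned exactly one concordance status per headline.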

Finally, participants entered the assessment stage, where they were presented with 24 news headlines – the 12 headlines they saw in the familiarization stage and 12 new headlines (6 fake news, 6 real news) – and rated each for familiarity and accuracy. Which headlines were presented in the familiarization stage was counterbalanced across participants, and headline order was randomized for every participant in both Stage 1 and Stage 3. Moreover, the items were balanced politically, with half being Pro-Democrat and half Pro-Republican. The fake news headlines were selected from Snopes.com, a third-party website that fact-checks news stories. The real headlines were contemporary stories from mainstream news outlets. For each item, participants were first asked “Have you seen or heard about this story before?” and were given three response options (“No, Unsure, Yes”). For the purposes of data analysis, “no” and “unsure” were combined (this was preregistered in Study 3 but not Study 2). As in other work on perceptions of news accuracy (Pennycook & Rand, 2017a, 2017b; Pennycook & Rand, 2018), participants were then asked “To the best of your knowledge, how accurate is the claim in the above headline?” and they rated accuracy on the following 4-point scale: 1) not at all accurate, 2) not very accurate, 3) somewhat accurate, 4) very accurate. We focus on judgments about news headlines, as opposed to full articles, because much of the public’s engagement with news on social media involves only reading story headlines (Gabielkov, Ramachandran, & Chaintreau, 2016).

At the end of the survey, participants were asked about random responding, use of search engines to check accuracy of the stimuli, and whether they skipped through the familiarization stage (“At the beginning of the survey (when you were asked whether you would share the stories on social media), did you just skip through without reading the headlines?”). All were accompanied by a “yes/no” response option.

Our preregistration specified the comparison between familiarized and novel fake news, separately in the warning and no warning conditions, as the key analyses. However, for completeness, we report the full set of analyses that emerge from our mixed design ANOVA. Our political concordance analysis deviates somewhat from the analysis that was preregistered (see footnote 3), and our follow-up analysis that focuses on unfamiliar headlines was not preregistered. Our full preregistration is available at the following link: https://osf.io/txf46/.

Results

As a manipulation check for our familiarization procedure, we submitted familiarity ratings (recorded during the assessment stage) to a 2 (Type: fake, real) x 2 (Exposure: familiarized, novel) x 2 (Warning: warning, no warning) mixed design ANOVA. Critically, there was a main effect of exposure such that familiarized headlines were rated as more familiar (M = 44.7%, SD = 35.6) than novel headlines (M = 16.2%, SD = 15.5), F(1, 947) = 578.76, MSE = .13, p < .001, η2 = .38, and a significant simple effect was present within every combination of news type and warning condition, all t’s > 14.0, all p’s < .001. This indicates that our social media sharing task in the familiarization stage was sufficient to capture participants’ attention. Further analysis of familiarity judgments can be found in supplementary information.

As a manipulation check for attentiveness to the “Disputed by 3rd party fact-checkers” warning, we submitted the willingness to share news articles on social media measure (from the familiarization stage) to a 2 (Type: fake, real) x 2 (Condition: warning, no warning) mixed design ANOVA. This analysis revealed a significant main effect of type, such that our participants were more willing to share real stories (M = 41.6%, SD = 31.8) than fake stories (M = 29.7%, SD = 29.8), F(1, 947) = 131.16, MSE = 0.05, p < .001, η2 = .12. More importantly, there was a significant main effect of condition, F(1, 947) = 15.33, MSE = 0.13, p < .001, η2 = .016, which was qualified by an interaction between type and condition, F(1, 947) = 19.65, MSE = 0.05, p < .001, η2 = .020, such that relative to the No Warning condition, participants in the Warning condition reported being less willing to share fake news headlines (which actually bore the warnings in the Warning condition; Warning: M = 23.9%, SD = 28.3; No Warning: M = 35.2%, SD = 30.2), t(947) = 5.93, p < .001, d = 0.39, whereas there was no significant difference across conditions in sharing of real news (which did not have warnings in either condition; Warning: M = 40.6%, SD = 32.2; No Warning: M = 42.6%, SD = 31.5), t < 1. Thus, participants clearly paid attention to the warnings.

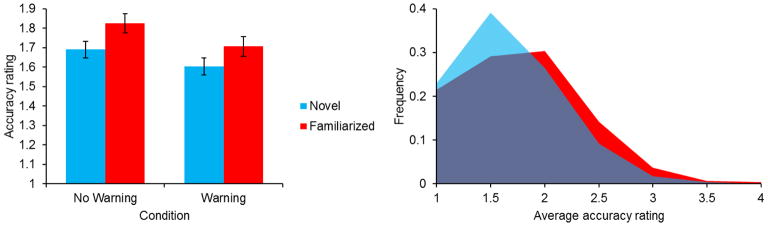

We now turn to perceived accuracy, our main focus. Perceived accuracy was entered into a 2 (Type: fake, real) x 2 (Exposure: familiarized, novel) x 2 (Warning: warning, no warning) mixed design ANOVA (see Table 3 for means and standard deviations). Demonstrating the presence of an illusory truth effect, there was a significant main effect of exposure, F(1, 947) = 93.65, MSE = .12, p < .001, η2 = .09, such that headlines presented in the familiarization stage (M = 2.24, SD = .42) were rated as more accurate than novel headlines (M = 2.13, SD = .39). There was also a significant main effect of headline type, such that real news headlines (M = 2.67, SD = .48) were rated as much more accurate than fake news headlines (M = 1.71, SD = .46), F(1, 945) = 2424.56, MSE = .36, p < .001, η2 = .72. However, there was no significant interaction between exposure and type of news headline, F < 1. In particular, prior exposure increased accuracy ratings even when only considering fake news headlines (see Figure 2; Familiarized: M = 1.77, SD = .56; Novel: M = 1.65, SD = .48), t(948) = 7.60, p < .001, d = 0.25. For example, nearly twice as many participants (92.1% increase, from 38 to 73 out of 949 total) judged the fake news headlines presented to them during the familiarization stage as accurate (mean accuracy rating above 2.5), compared to the stories presented to them for the first time in the assessment stage. Although both of these participant counts are only a small fraction of the total sample, the fact that a single exposure to the fake stories doubled the number of credulous participants suggests that repetition effects may have a substantial impact in daily life, where people can see fake news headlines cycling many times through their social media newsfeeds.
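The “nearly twice as many” figure follows directly from the two participant counts. The sketch below reproduces that arithmetic and the classification rule (mean accuracy rating above 2.5 on the 4-point scale); the function names are hypothetical.

```python
def percent_increase(before, after):
    """Relative increase from `before` to `after`, in percent."""
    return 100 * (after - before) / before


def judged_accurate(mean_rating, threshold=2.5):
    """True if a participant's mean rating is strictly above the threshold."""
    return mean_rating > threshold


# Counts as reported: 38 participants (novel) vs. 73 (familiarized), out of 949.
print(round(percent_increase(38, 73), 1))
print(round(100 * 38 / 949, 1), round(100 * 73 / 949, 1))  # share of the sample
```

The second line of output puts the relative increase in context: both groups are a small fraction of the 949 participants, which is why the text describes the absolute counts as small.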

Table 3.

Means, standard deviations, and significance tests (comparing familiarized and novel items) for politically concordant and discordant items in the warning and no warning conditions. Politically concordant items consisted of Pro-Democrat items for Clinton supporters and Pro-Republican items for Trump supporters (and vice versa for politically discordant items).

| Political Concordance | Type | Warning | Familiarized, M (SD) | Novel, M (SD) | t (df) | p |
|---|---|---|---|---|---|---|
| Politically Concordant | Fake News | No Warning | 1.93 (0.7) | 1.78 (0.6) | 5.46 (486) | < .001 |
| Politically Concordant | Fake News | Warning | 1.81 (0.7) | 1.68 (0.6) | 4.69 (459) | < .001 |
| Politically Concordant | Real News | No Warning | 2.98 (0.6) | 2.83 (0.7) | 5.45 (486) | < .001 |
| Politically Concordant | Real News | Warning | 2.92 (0.7) | 2.86 (0.7) | 2.17 (459) | .031 |
| Politically Discordant | Fake News | No Warning | 1.72 (0.6) | 1.60 (0.5) | 3.91 (486) | < .001 |
| Politically Discordant | Fake News | Warning | 1.60 (0.6) | 1.53 (0.5) | 2.66 (459) | .008 |
| Politically Discordant | Real News | No Warning | 2.50 (0.6) | 2.39 (0.6) | 3.85 (486) | < .001 |
| Politically Discordant | Real News | Warning | 2.49 (0.6) | 2.40 (0.6) | 3.03 (459) | .003 |

Figure 2.

Exposing participants to fake news headlines in Study 2 increased accuracy ratings, even when the stories were tagged with a warning indicating that they had been disputed by third-party fact checkers. (a) Mean accuracy ratings for fake news headlines as a function of repetition (familiarized stories were shown previously during the familiarization stage; novel stories were shown for the first time during the assessment stage) and presence or absence of a warning that fake news headlines had been disputed. Error bars indicate 95% confidence intervals. (b) Distribution of participant-average accuracy ratings for the fake news headlines, comparing the six familiarized stories shown during the familiarization stage (red) with the six novel stories shown for the first time in the assessment stage (blue). We collapse across warning and no warning conditions as the repetition effect did not differ significantly by condition.

What effect did the presence of warnings on fake news in the familiarization stage have on later judgments of accuracy and, potentially, the effect of repetition? The ANOVA described above revealed a significant main effect of the warning manipulation, F(1, 947) = 5.39, MSE = .53, p = .020, η2 = .005, indicating that the warning decreased perceptions of news accuracy. However, this was qualified by an interaction between warning and type, F(1, 947) = 5.83, MSE = .36, p = .016, η2 = .006. Whereas the presence of warnings on fake news in the assessment stage had no effect on perceptions of real news accuracy (Warning: M = 2.67, SD = .49; No Warning: M = 2.67, SD = .48), t < 1, participants rated fake news as less accurate in the warning condition (Warning: M = 1.66, SD = .46; No Warning: M = 1.76, SD = .46), t(947) = 3.40, p = .001, d = 0.22. Furthermore, there was a marginally significant interaction between exposure and warning, F(1, 947) = 3.32, MSE = .12, p = .069, η2 = .004, such that the decrease in overall perceptions of accuracy was significant for familiarized items (Warning: M = 2.21, SD = .41; No Warning: M = 2.28, SD = .43), t(947) = 2.77, p = .006, d = 0.18, but not for novel items (Warning: M = 2.12, SD = .38; No Warning: M = 2.15, SD = .39), t(947) = 1.36, p = .175, d = 0.09. That is, the warning decreased perceptions of accuracy for items that were presented in the familiarization stage – both fake stories that were labeled with warnings and the real stories presented without warnings (see footnote 3) – but not for items that were not presented in the familiarization stage.

There was no significant three-way interaction, however, between headline type, exposure, and warning condition, F < 1. As a consequence, the repetition effect was evident for fake news headlines in the Warning condition, t(460) = 4.89, p < .001, d = 0.23, as well as the No Warning condition, t(487) = 5.81, p < .001, d = 0.26 (see Figure 2). That is, participants rated familiarized fake news headlines that they were explicitly warned about as more accurate than novel fake news headlines that they were not warned about (despite the significant negative effect of warnings on perceived accuracy of fake news reported above). In fact, there was no significant interaction between the exposure and warning manipulations when isolating the analysis to fake news headlines, F(1, 947) = 1.00, MSE = .12, p = .317, η2 = .001. Thus, the warning seems to have created a general sense of distrust – thereby reducing perceived accuracy for both familiarized and novel fake news headlines – rather than making people particularly distrust the stories that were labeled as disputed.
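The between-condition effect sizes for the warning manipulation can be approximated from the reported t statistics. The sketch below rests on two assumptions that the text does not confirm: that the group sizes equal the paired-test degrees of freedom plus one (461 in the Warning condition, 488 in the No Warning condition), and that d was obtained as t · √(1/n₁ + 1/n₂). The function name is hypothetical.

```python
import math


def independent_d_from_t(t: float, n1: int, n2: int) -> float:
    """Cohen's d recovered from an independent-samples t statistic.

    Uses d = t * sqrt(1/n1 + 1/n2); whether the reported values were
    computed this way is an assumption.
    """
    return t * math.sqrt(1.0 / n1 + 1.0 / n2)


# Group sizes inferred from the within-condition tests, t(460) and t(487).
n_warning, n_no_warning = 460 + 1, 487 + 1

comparisons = [
    ("fake news overall", 3.40),
    ("familiarized items", 2.77),
    ("novel items", 1.36),
]
for label, t_value in comparisons:
    d = independent_d_from_t(t_value, n_warning, n_no_warning)
    print(label, round(d, 2))
```

With these inputs the sketch yields 0.22, 0.18, and 0.09, which agree with the effect sizes reported for the corresponding comparisons.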

As a secondary analysis (see footnote 4), we also investigated whether the effect of prior exposure is robust to political concordance (i.e., whether headlines were congruent or incongruent with one’s political stance). Mean perceptions of news accuracy for politically concordant and discordant items as a function of type, exposure, and warning condition can be found in Table 3. Perceived accuracy was entered into a 2 (Political valence: concordant, discordant) x 2 (Type: fake, real) x 2 (Exposure: familiarized, novel) x 2 (Warning: warning, no warning) mixed design ANOVA. First, as a manipulation check, politically concordant items were rated as far more accurate than politically discordant items overall (see Table 3), F(1, 945) = 573.08, MSE = .34, p < .001, η2 = .38. Nonetheless, we observed no significant interaction between the repetition manipulation and political valence, F(1, 945) = 2.24, MSE = .15, p = .135, η2 = .002. The illusory truth effect was evident for fake news headlines that were politically discordant, t(946) = 4.70, p < .001, d = 0.15, as well as concordant, t(946) = 7.19, p < .001, d = 0.23. Political concordance interacted significantly with type of news story, F(1, 945) = 138.91, MSE = .23, p < .001, η2 = .13, such that the difference between perceptions of real and fake news (i.e., discernment) was greater for politically concordant headlines (Real: M = 2.90, SD = .59; Fake: M = 1.80, SD = .56) than for politically discordant headlines (Real: M = 2.44, SD = .53; Fake: M = 1.61, SD = .48), t(946) = 11.8, p < .001, d = 0.38 (see Pennycook & Rand, 2018 for a similar result). All other interactions with political concordance were not significant, all F’s < 1.5, p’s > .225.

The illusory truth effect also persisted when analyzing only news headlines that the participants marked as unfamiliar (i.e., in the same mixed ANOVA as above but only analyzing stories the participants were not consciously aware of having seen in the familiarization stage or at some point prior to the experiment) (Familiarized: M = 1.90, SD = .53; Novel: M = 1.83, SD = .49), F(1, 541) = 11.82, MSE = .17, p = .001, η2 = .02 (see footnote 5). See SI for details and further statistical analysis.

Discussion

The results of Study 2 indicate that a single prior exposure is sufficient to increase perceived accuracy for both fake and real news. This occurs even 1) when fake news is labeled as “Disputed by 3rd party fact-checkers” during the familiarization stage (i.e., during encoding at first exposure), 2) among fake (and real) news headlines that are inconsistent with one’s political ideology, and 3) when isolating the analysis to news headlines that participants were not consciously aware of having seen in the familiarization stage.

Study 3 – Fake News, One Week Interval

We next sought to assess the robustness of our finding that repetition increases perceptions of fake news accuracy by making two important changes to the design of Study 2. First, we assessed the persistence of the repetition effect by inviting participants back after a week-long delay (following previous research which has shown illusory truth effects to persist over substantial periods of time, e.g., Hasher, Goldstein, & Toppino, 1977; Schwartz, 1982). Second, we restricted our analyses to only those items that were unfamiliar to participants when entering the study, which allows for a cleaner ‘novel’ baseline.

Method

Participants

Our target sample was 1000 participants from Mechanical Turk. This study was completed on February 1st and 2nd, 2017. Participants who completed Study 2 were not permitted to complete Study 3. In total, 1032 participants completed the study, 40 of whom dropped out or had missing data (14 from the no warning condition, 27 from the warning condition). Participants who reported responding randomly (N = 29), skipping over the familiarization phase (N = 1), or searching online for the headlines (N = 22) were removed. These exclusions were preregistered. The final sample (N = 940; Mean age = 36.8) included 436 males and 499 females (5 did not respond).
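The final sample size follows from the totals reported above, provided the exclusion categories do not overlap (an assumption; the text does not say whether any participant fell into more than one category). A minimal check:

```python
completed = 1032

# Exclusion counts as reported; assumed here to be mutually exclusive.
exclusions = {
    "dropped out or missing data": 40,
    "reported responding randomly": 29,
    "skipped the familiarization phase": 1,
    "searched online for the headlines": 22,
}

final_n = completed - sum(exclusions.values())
print(final_n)  # 940

# The reported gender breakdown should account for every retained participant.
gender_counts = {"male": 436, "female": 499, "no response": 5}
assert sum(gender_counts.values()) == final_n
```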

Materials and Procedure

The design was identical to Study 2 (including the Warning and No Warning conditions), with a few exceptions. First, the length of the distractor stage was increased by adding 20 unrelated questionnaire items to the demographics questions (namely, the PANAS, as in Study 1). This filler stage took approximately two minutes to complete. Furthermore, participants were invited to return for a follow-up session one week later in which they were presented with the same headlines they had seen in the assessment stage plus a set of novel headlines not included in the first session (N = 566 participants responded to the follow-up invitation). To allow full counterbalancing, we presented participants with 8 headlines in the familiarization phase, 16 headlines in the accuracy judgment phase (of which 8 were those shown in the familiarization phase), and 24 headlines in the follow-up session a week later (of which 16 were those shown in the assessment phase of the first session), again maintaining an equal number of real/fake and Pro-Democrat/Pro-Republican headlines within each block. The design of Study 3 therefore allowed us to assess the temporal stability of the repetition effect both within session 1 (over the span of a distractor task) and session 2 (over the span of a week).
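The block structure described above (8, 16, and 24 headlines, balanced on news type and political valence) can be illustrated with a short sketch. The rotation index, the placeholder headline identifiers, and the function name are hypothetical; the actual counterbalancing procedure may have differed.

```python
from itertools import product

HEADLINE_TYPES = ("fake", "real")
VALENCES = ("pro_democrat", "pro_republican")
BLOCKS = ("familiarized", "novel_session1", "novel_session2")


def assign_blocks(headlines, rotation):
    """Split each type-by-valence cell evenly across the three blocks.

    `headlines` maps (type, valence) -> list of 6 headline ids.
    `rotation` (0, 1, or 2) is a hypothetical counterbalancing index that
    shifts which pair of headlines lands in which block.
    """
    assignment = {block: [] for block in BLOCKS}
    for cell in product(HEADLINE_TYPES, VALENCES):
        ids = headlines[cell]
        for i, block in enumerate(BLOCKS):
            start = 2 * ((i + rotation) % 3)
            assignment[block].extend((cell, h) for h in ids[start:start + 2])
    return assignment


# Placeholder ids; the real headlines are not reproduced here.
headlines = {
    cell: [f"{cell[0]}_{cell[1]}_{k}" for k in range(6)]
    for cell in product(HEADLINE_TYPES, VALENCES)
}
blocks = assign_blocks(headlines, rotation=1)

familiarization = blocks["familiarized"]                 # shown first
assessment = familiarization + blocks["novel_session1"]  # 8 repeated + 8 new
follow_up = assessment + blocks["novel_session2"]        # 16 repeated + 8 new
print(len(familiarization), len(assessment), len(follow_up))  # 8 16 24
```

Each block of eight contains four fake and four real headlines, split evenly between Pro-Democrat and Pro-Republican items, so a headline in the follow-up session has been seen two times, one time, or not at all.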

Second, during the familiarization stage participants were asked to indicate whether each headline was familiar, instead of whether they would share the story on social media (the social media question was moved to the assessment stage). This modification allowed us to restrict our analyses to only those items that were unfamiliar to participants when entering the study (i.e., they said “no” when asked about familiarity; see footnote 6), allowing for a cleaner assessment of the causal effect of repetition (903 of the 940 participants in the first session were previously unfamiliar with at least one story of each type and thus included in the main text analysis, as were 527 out of the 566 participants in the second session; see SI for analyses with all items and all participants). Fake and real news headlines as presented to participants can be found at the following link: https://osf.io/txf46/
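The restriction to previously unfamiliar items amounts to a filter followed by a per-participant completeness check. The sketch below is one possible reading, not the analysis code: the record keys are hypothetical, and "at least one story of each type" is interpreted here as at least one unfamiliar item in every type-by-exposure cell, which is an assumption.

```python
from collections import defaultdict
from statistics import mean

CELLS = [(t, e) for t in ("fake", "real") for e in ("familiarized", "novel")]


def unfamiliar_cell_means(ratings):
    """Mean accuracy per participant and cell, using unfamiliar items only.

    Items marked familiar are dropped; participants left without data in
    any of the four cells are excluded (assumed inclusion rule).
    """
    by_participant = defaultdict(lambda: defaultdict(list))
    for r in ratings:
        if not r["familiar"]:
            cell = (r["type"], r["exposure"])
            by_participant[r["participant"]][cell].append(r["accuracy"])
    return {
        pid: {cell: mean(cells[cell]) for cell in CELLS}
        for pid, cells in by_participant.items()
        if all(cell in cells for cell in CELLS)
    }


def row(pid, kind, exposure, familiar, accuracy):
    """Build one made-up rating record."""
    return {"participant": pid, "type": kind, "exposure": exposure,
            "familiar": familiar, "accuracy": accuracy}


ratings = [
    row("p1", "fake", "familiarized", False, 2),
    row("p1", "fake", "novel", False, 1),
    row("p1", "fake", "novel", False, 3),
    row("p1", "real", "familiarized", False, 3),
    row("p1", "real", "novel", False, 3),
    row("p2", "fake", "familiarized", True, 4),  # already familiar: dropped
    row("p2", "fake", "novel", False, 2),
    row("p2", "real", "familiarized", False, 3),
    row("p2", "real", "novel", False, 2),
]
result = unfamiliar_cell_means(ratings)
print(sorted(result))  # ['p1']
```

In this toy data, the second participant is excluded because their only familiarized fake headline was already familiar, leaving that cell empty.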

As in Study 2, our preregistration specified the comparison between familiarized and novel fake news in both warning and no warning conditions (and for both sessions) as the key analyses, although in this case we preregistered the full 2 (Type: fake, real) x 2 (Exposure: familiarized, novel) x 2 (Warning: warning, no warning) mixed design ANOVA. We also preregistered the political concordance analysis. Finally, we preregistered the removal of cases where participants were familiar with the news headlines as a secondary analysis, but we will focus on it as a primary analysis here as this is the novel feature relative to Study 2 (primary analyses including all participants are discussed in footnote 8). Our preregistration is available at the following link: https://osf.io/txf46/.

Results

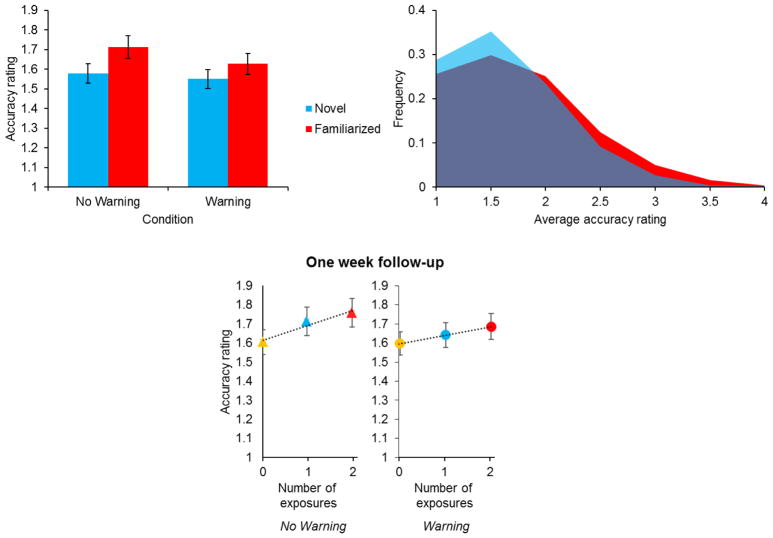

Perceived accuracy was entered into a 2 (Type: fake, real) x 2 (Exposure: familiarized, novel) x 2 (Warning: warning, no warning) mixed design ANOVA. Replicating the illusory truth effect from Study 2, there was a clear causal effect of prior exposure on accuracy in the first session of Study 3 despite the longer distractor stage: Headlines presented in the familiarization stage (M = 2.01, SD = .54) were rated as more accurate than novel headlines (M = 1.92, SD = .49), F(1, 721) = 22.52, MSE = .23, p < .001, η2 = .03. Again replicating the results of Study 2, there was a significant main effect of type, such that real stories (M = 2.31, SD = .63) were rated as much more accurate than fake stories (M = 1.63, SD = .52), F(1, 721) = 934.57, MSE = .36, p < .001, η2 = .57, but there was no significant interaction between exposure and type of news headline, F(1, 721) = 2.65, MSE = .20, p = .104, η2 = .004. Accordingly, prior exposure increased perceived accuracy even when only considering fake news headlines (see Figure 3a,b), t(902) = 5.99, p < .001, d = 0.20 (see footnote 7). This corresponds to an 89.5% increase in the number of participants judging familiarized fake news headlines as accurate compared to novel fake news headlines, from 38 to 72 participants out of 903.

Figure 3.

The illusory truth effect for fake news is persistent, lasting over a longer filler stage in Study 3 and continuing to be observed in a follow-up session one week later. (a) Mean accuracy ratings for fake news headlines in the initial session of Study 3 as a function of repetition and presence or absence of a warning that fake news headlines had been disputed. Error bars indicate 95% confidence intervals. (b) Distribution of participant-average accuracy ratings for the fake news headlines in Study 3, comparing the four headlines shown during the familiarization stage (red) with the four novel headlines shown for the first time in the assessment stage (blue). We collapse across warning and no warning conditions as the repetition effect did not differ significantly by condition. (c) Mean accuracy ratings for fake news headlines in the follow-up session conducted one week later, as a function of number of exposures to the story (2 times for headlines previously presented in the familiarization and assessment stage of the first session; 1 time for headlines previously presented only in the assessment stage of the first session; and 0 times for headlines introduced for the first time in the follow-up session) and presence or absence of warning tag. Error bars indicate 95% confidence intervals based on robust standard errors clustered by participant, and trend line shown in dotted black.

Unlike Study 2, there was no main effect of the warning manipulation on overall perceptions of accuracy (i.e., across the aggregate of fake and real news), F < 1. However, there was a marginally significant interaction between type of news story and warning condition, F(1, 721) = 2.95, MSE = .36, p = .086, η2 = .004. Regardless, the fake news warnings in the familiarization stage had no significant overall effect on perceptions of fake news accuracy in the assessment stage (Warning: M = 1.61, SD = .50; No Warning: M = 1.66, SD = .54), t(932) = 1.54, p = .123, d = 0.10 (see footnote 8). There was also no significant effect of the warning on perceptions of real news accuracy (Warning: M = 2.32, SD = .63; No Warning: M = 2.30, SD = .63), t < 1, no significant interaction between the repetition and warning manipulations, F(1, 721) = 1.89, MSE = .23, p = .169, η2 = .003, and no significant three-way interaction between warning, exposure, and type of news story, F < 1 (see footnote 9). Nonetheless, it should be noted that familiarized fake news headlines (i.e., the fake news headlines that were warned about in the familiarization stage) were rated as less accurate (M = 1.64, SD = .59) than the same headlines in the control (no warning) condition (M = 1.73, SD = .63), t(925) = 2.14, p = .032, d = 0.14, suggesting that the warning did have some effect on accuracy judgments. However, this effect was smaller than in Study 2 and did not extend to non-warned (and not familiarized) fake news. This is perhaps due to the smaller number of items in the familiarization stage of Study 3.

Following our preregistration, we also analyzed the effect of exposure for fake news headlines separately in the warning and no warning conditions. The repetition effect was evident for fake news headlines in both the Warning condition (Familiarized: M = 1.63, SD = .58; Novel: M = 1.55, SD = .52), t(447) = 3.07, p = .002, d = 0.14, and the No Warning condition (Familiarized: M = 1.71, SD = .61; Novel: M = 1.58, SD = .54), t(454) = 5.41, p < .001, d = 0.25. Furthermore, familiarized fake news headlines were judged as more accurate than novel ones for both politically discordant (Familiarized: M = 1.60, SD = .67; Novel: M = 1.51, SD = .63), t(858) = 3.41, p = .001, d = 0.12, and concordant items (Familiarized: M = 1.72, SD = .77; Novel: M = 1.59, SD = .67), t(801) = 4.93, p < .001, d = 0.18 (an ANOVA including concordance indicated that there was no significant interaction between repetition and political concordance for fake news, F(1, 769) = 1.46, MSE = .32, p = .228, η2 = .002; see footnote 10).

Following up one week later, we continued to find a clear causal effect of repetition on accuracy ratings: Perceived accuracy of a story increased linearly with the number of times the participants had been exposed to that story. Using linear regression with robust standard errors clustered on participant (see footnote 11), we found a significant positive relationship between number of exposures and accuracy overall (Familiarized twice: M = 2.00, SD = 0.53; Familiarized once: M = 1.94, SD = 0.53; Novel: M = 1.90, SD = 0.51), b = .046, t(537) = 3.68, p < .001, and when only considering fake news headlines (see Figure 3c) (Familiarized twice: M = 1.70, SD = .58; Familiarized once: M = 1.66, SD = .58; Novel: M = 1.60, SD = .53), b = .048, t(526) = 3.66, p < .001 (64% increase in number of participants judging fake news headlines as accurate among stories seen twice compared to novel fake news headlines, from 25 to 41 participants out of 527). Once again, this relationship was evident for fake news in both the Warning condition (Familiarized twice: M = 1.67, SD = .59; Familiarized once: M = 1.63, SD = .56; Novel: M = 1.60, SD = .52), b = .036, t(276) = 1.97, p = .050, and the No Warning condition (Familiarized twice: M = 1.73, SD = .57; Familiarized once: M = 1.70, SD = .59; Novel: M = 1.61, SD = .53), b = .061, t(249) = 3.27, p = .001 (there was no significant interaction between the repetition and warning manipulations, b = −.025, t(526) = 0.96, p = .337; see Figure 3c); and for fake news headlines that were politically discordant (Familiarized twice: M = 1.62, SD = .72; Familiarized once: M = 1.61, SD = .68; Novel: M = 1.54, SD = .62), b = .041, t(525) = 2.28, p = .023, as well as concordant (Familiarized twice: M = 1.78, SD = .77; Familiarized once: M = 1.71, SD = .75; Novel: M = 1.66, SD = .70), b = .061, t(523) = 3.24, p = .001.
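For readers unfamiliar with the regression approach used above, the following sketch shows how a slope and its cluster-robust standard error can be computed for a single predictor. It is a simplified illustration on made-up data, not the analysis code: it uses the basic sandwich estimator without the finite-sample correction that statistical packages typically apply, and the function name is hypothetical.

```python
import math
from collections import defaultdict


def clustered_slope(x, y, cluster):
    """OLS slope of y on x (with intercept) and its cluster-robust SE.

    Uses the uncorrected sandwich estimator: residual scores are summed
    within each cluster before squaring, so repeated ratings from the
    same participant are not treated as independent.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar

    # Sum the score (centered x times residual) within each cluster.
    scores = defaultdict(float)
    for xi, yi, g in zip(x, y, cluster):
        residual = yi - (intercept + slope * xi)
        scores[g] += (xi - x_bar) * residual

    variance = sum(s ** 2 for s in scores.values()) / sxx ** 2
    return slope, math.sqrt(variance)


# Made-up ratings for two participants at 0, 1, and 2 prior exposures.
exposures = [0, 1, 2, 0, 1, 2]
accuracy = [1, 2, 3, 2, 2, 2]
participant = ["p1", "p1", "p1", "p2", "p2", "p2"]

slope, se = clustered_slope(exposures, accuracy, participant)
print(round(slope, 3), round(se, 3))
```

The slope plays the role of b in the text: the estimated change in perceived accuracy per additional exposure. Clustering on participant widens or narrows the standard error relative to ordinary least squares, depending on how strongly a participant's ratings covary.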

Discussion

The results of Study 3 further demonstrated that prior exposure increases perceived accuracy of fake news. This occurred regardless of political discordance and among previously unfamiliar headlines that were explicitly warned about during familiarization. Crucially, the effect of repetition on perceived accuracy persisted after a week and increased with an additional repetition. This suggests that fake news credulity compounds with increasing exposures and persists over time.

General Discussion

While repetition did not impact accuracy judgments of totally implausible statements, across two preregistered experiments with a total of more than 1,800 participants we found consistent evidence that repetition did increase the perceived accuracy of fake news headlines. Indeed, a single prior exposure to fake news headlines was sufficient to measurably increase subsequent perceptions of their accuracy. Although this effect was relatively small (d = .20–.21), it increased with a second exposure, thereby suggesting a compounding effect of repetition across time. Explicitly warning individuals that the fake news headlines had been disputed by third-party fact-checkers (which was true in every case) did not abolish or even significantly diminish this effect. The illusory truth effect was also evident even among news headlines that were inconsistent with the participants’ stated political ideology.

Mechanisms of illusory truth

First, it is important to note that repetition increased accuracy even for items that the participants were not consciously aware of having been exposed to. This supports the broad consensus that repetition influences accuracy through a low-level fluency heuristic (Alter & Oppenheimer, 2009; Begg et al., 1992; Reber et al., 1998; Unkelbach, 2007; Whittlesea, 1993). These findings indicate that our repetition effect is likely driven, at least in part, by automatic (as opposed to strategic) memory retrieval (Diana, Yonelinas, & Ranganath, 2007; Yonelinas, 2002; Yonelinas & Jacoby, 2012). More broadly, these effects correspond with prior work demonstrating the power of fluency to influence a variety of judgments (Schwarz, Sanna, Skurnik, & Yoon, 2007) – for example, subliminal exposure to a variety of stimuli (e.g., Chinese characters) increases associated positive feelings (i.e., the mere exposure effect; see Zajonc, 1968, 2001). Our evidence that the illusory truth effect extends to implausible and even politically inconsistent fake news stories expands the scope of these effects. That perceptions of fake news accuracy can be manipulated so easily despite being highly implausible (only 15–22% of the headlines were judged to be accurate) has substantial practical implications (discussed below) – but what implications do these results have for our understanding of the mechanisms that underlie the illusory truth effect (and, potentially, a broader array of fluency effects observed in the literature)?

For decades, it has been assumed that repetition only increases accuracy for statements that are ambiguous (e.g., Dechene et al., 2010) because, otherwise, individuals will simply use prior knowledge to determine truth. However, recent evidence indicates that repetition can even increase the perceived accuracy of plausible but false statements (e.g. “chemosynthesis is the name of the process by which plants make their food”) among participants who were subsequently able to identify the correct answer (Fazio et al., 2015). However, it may be that the illusory truth effect is only robust to the presence of conflicting prior knowledge when statements are plausible enough that individuals fail to detect the conflict (for a perspective on conflict detection during reasoning, see Pennycook, Fugelsang, & Koehler, 2015). Indeed, as noted earlier, Fazio and colleagues speculated that “participants would draw on their knowledge, regardless of fluency, if statements contained implausible errors” (p. 1000). On the contrary, our findings indicate that implausibility is only a boundary condition of the illusory truth effect in the extreme: It is possible to use repetition to increase the perceived accuracy even for entirely fabricated and, frankly, outlandish fake news stories that, given some reflection (Pennycook & Rand, 2017b, 2018), people probably know are untrue. This observation substantially expands the purview of the illusory truth effect, and suggests that external reasons for disbelief (such as direct prior knowledge and implausibility) are no safe-guard against the fluency heuristic.

Motivated reasoning

Our results also have implications for a broad debate about the scope of motivated reasoning, which has been taken to be a fundamental aspect of how individuals interact with political misinformation and disinformation (Swire, Berinsky, Lewandowsky, & Ecker, 2017) and has been used to explain the spread of fake news (Allcott & Gentzkow, 2017; Beck, 2017; Calvert, 2017; Kahan, 2017; Singal, 2017). While Trump supporters were indeed more skeptical about fake news headlines that were anti-Trump relative to Clinton supporters (and vice versa), our results show that repetition increases perceptions of accuracy even in such politically discordant cases. Take, for example, the item “BLM Thug Protests President Trump with Selfie… Accidentally Shoots Himself In The Face,” which is politically discordant for Clinton supporters and politically concordant for Trump supporters. While on first exposure Clinton supporters were less likely (11.7%) to rate this headline as accurate than Trump supporters (18.5%), suggesting the potential for motivated reasoning, a single prior exposure to this headline increased accuracy judgements in both cases (to 17.9% and 35.5%, for Clinton and Trump supporters respectively). Thus, fake news headlines were positively affected by repetition even when there was a strong political motivation to reject them. This observation complements the results of Pennycook and Rand (2018), who find – in contrast to common motivated reasoning accounts (Kahan, 2017) – that analytic thinking leads to disbelief in fake news regardless of political concordance. Taken together, this suggests that motivated reasoning may play less of a role in the spread of fake news than is often argued.

These results also bear on a recent debate about whether corrections might actually make false information more familiar, thereby increasing the incidence of subsequent false beliefs (i.e., the familiarity backfire effect; Berinsky, 2015; Nyhan & Reifler, 2010; Schwarz et al., 2007; Skurnik et al., 2005). In contrast to the backfire account, the latest research in this domain indicates that explicit warnings or corrections of false statements actually have a small positive (and certainly not negative) impact on subsequent perceptions of accuracy (Ecker, Hogan, & Lewandowsky, 2017; Lewandowsky et al., 2012; Pennycook & Rand, 2017b; Swire, Ecker, et al., 2017). In our data, the positive effect of a single prior exposure (d = .20 in Study 2) was effectively equivalent to the negative effect of the “Disputed” warning (d = .17 in Study 2). Thus, although any benefit arising from the disputed tag is immediately wiped out by the prior exposure effect, we also do not find any evidence of a meaningful backfire. Our findings therefore support recent skepticism about the robustness and importance of the familiarity backfire effect.

Societal implications

Our findings have important implications for the functioning of democracy, which relies on an informed electorate. Specifically, our results shed some light on what can be done to combat belief in fake news. We employed a warning that was developed by Facebook to curb the influence of fake news on their social media platform (“Disputed by 3rd Party Fact-Checkers”). We found that this warning did not disrupt the illusory truth effect, an observation that resonates with previous work demonstrating that, for example, explicitly labelling consumer claims as false (Skurnik et al., 2005) or retracting pieces of misinformation in news articles (Berinsky, 2015; Ecker, Lewandowsky, & Tang, 2010; Lewandowsky, Ecker, Seifert, Schwarz, & Cook, 2012; Nyhan & Reifler, 2010) are not necessarily effective strategies for decreasing long-term misperceptions (but see Swire, Ecker, & Lewandowsky, 2017). Nonetheless, it is important to note that the warning did successfully decrease subsequent overall perceptions of the accuracy of fake news headlines; the warning’s effect was just not specific to the particular fake news headlines that the warning was attached to (and so the illusory truth effect survived the warning). Thus, the warning appears to have increased general skepticism, which increased the overall sensitivity to fake news (i.e., the warning decreased perceptions of fake news accuracy without affecting judgments for real news). The warning also successfully decreased people’s willingness to share fake news headlines on social media. However, neither of these warning effect sizes were particularly large – for example, as described above, the negative impact of the warning on accuracy was entirely canceled out by the positive impact of repetition. 
That result, coupled with the persistence of the illusory truth effect we observed and the possibility of an “implied truth” effect whereby tagging some fake headlines may increase the perceived accuracy of untagged fake headlines (Pennycook & Rand, 2017a), suggests that larger solutions are needed that prevent people from ever seeing fake news in the first place, rather than qualifiers aimed at making people discount the fake news that they do see.

Finally, our findings have implications beyond just fake news on social media. They suggest that politicians who continuously repeat false statements will be successful, at least to some extent, in convincing people those statements are in fact true. Indeed, the word “delusion” derives from a Latin term conveying the notion of mocking, defrauding, and deception. That the illusory truth effect is evident for highly salient and impactful information suggests that repetition may also play an important role in domains beyond politics, such as the formation of religious and paranormal beliefs where claims are difficult to either validate or reject empirically. When the truth is hard to come by, fluency is an attractive stand-in.

Context

In this research program, we use cognitive psychological theory and techniques to illuminate issues that have clear consequences for everyday life, with the hope of generating insights that are both practically and theoretically relevant. The topic of fake news – and disinformation more broadly – is of great relevance to current public discourse and policy making, and fits squarely in the domain of cognitive psychology. Plainly, this topic is something that cognitive psychologists should be able to say something specific and illuminating about!

Supplementary Material

Acknowledgments

This research was supported by a Social Sciences and Humanities Council of Canada Banting Postdoctoral Fellowship (to G. P.), a grant from the National Institute of Mental Health (MH081902) (to T.D.C.), and grants from the Templeton World Charity Foundation and DARPA (to D.G.R.).

Appendix

Study 1 Items

| Knowledge | Truth Value | Statement |
|---|---|---|
| Known | False (Extremely Implausible) | Smoking cigarettes is good for your lungs. |
| Known | False (Extremely Implausible) | The earth is a perfect square. |
| Known | False (Extremely Implausible) | Across the United States, only a total of 452 people voted in the last election. |
| Known | False (Extremely Implausible) | A single elephant weighs less than a single ant. |
| Known | True | More people live in the United States than in Malta. |
| Known | True | Cows are larger than sheep. |
| Known | True | Coffee is a more popular drink in America than goat milk. |
| Known | True | There are more than fifty stars in the universe. |
| Unknown | False | George was the name of the goldfish in the story of Pinocchio. |
| Unknown | False | Johnson was the last name of the man who killed Jesse James. |
| Unknown | False | Charles II was the first ruler of the Holy Roman Empire. |
| Unknown | False | Canopus is the name of the brightest star in the sky, excluding the sun. |
| Unknown | False | Tirpitz was the name of Germany’s largest battleship that was sunk in World War II. |
| Unknown | False | John Kenneth Galbraith is the name of a well-known lawyer. |
| Unknown | False | Huxley is the name of the scientist who discovered radium. |
| Unknown | False | The Cotton Bowl takes place in Auston, Texas. |
| Unknown | False | The drachma is the monetary unit for Macedonia. |
| Unknown | False | Angel Falls is located in Brazil. |
| Unknown | True | The thigh bone is the largest bone in the human body. |
| Unknown | True | Bolivia borders the Pacific Ocean. |
| Unknown | True | The largest dam in the world is in Pakistan. |
| Unknown | True | Mexico is the world’s largest producer of silver. |
| Unknown | True | More presidents of the United States were born in Virginia than any other state. |
| Unknown | True | Helsinki is the capital of Finland. |
| Unknown | True | Marconi is name of the inventor of the wireless radio. |
| Unknown | True | Billy the Kid’s last name was Bonney. |
| Unknown | True | Tiber is the name of the river that runs through Rome. |
| Unknown | True | Canberra is the capital of Australia. |

Note: Fake and real news headlines as presented to participants can be found at the following link: https://osf.io/txf46/

Study 2 ‘Fake News’ Items

| Political Valence | Headline | Source |
|---|---|---|
| Pro-Republican | Election Night: Hillary Was Drunk, Got Physical With Mook and Podesta | dailyheadlines.net |
| Pro-Republican | Obama Was Going to Castro’s Funeral – Until Trump Told Him This… | thelastlineofdefense.org |
| Pro-Republican | Donald Trump Sent His Own Plane To Transport 200 Stranded Marines | uconservative.com |
| Pro-Republican | BLM Thug Protests President Trump With Selfie… Accidentally Shoots Himself In The Face | freedomdaily.com |
| Pro-Republican | NYT David Brooks: “Trump Needs To Decide If He Prefers To Resign, Be Impeached Or Get Assassinated” | unitedstates-politics.com |
| Pro-Republican | Clint Eastwood Refuses to Accept Presidential Medal of Freedom From Obama, Says “He is not my president” | incredibleusanews.com |
| Pro-Democrat | Mike Pence: Gay Conversion Therapy Saved My Marriage | ncscooper.com |
| Pro-Democrat | Pennsylvania Federal Court Grants Legal Authority To REMOVE TRUMP After Russian Meddling | bipartisanreport.com |
| Pro-Democrat | Trump on Revamping the Military: We’re Bringing Back the Draft | realnewsrightnow.com |
| Pro-Democrat | FBI Director Comey Just Proved His Bias By Putting Trump Sign On His Front Lawn | countercurrentnews.com |
| Pro-Democrat | Sarah Palin Calls To Boycott Mall of America Because “Santa Was Always White In The Bible” | politicono.com |
| Pro-Democrat | Trump to Ban All TV Shows that Promote Gay Activity Starting with Empire as President | colossil.com |

Study 2 ‘Real News’ Items

| Political Valence | Headline | Source |
|---|---|---|
| Pro-Republican | Dems scramble to prevent their own from defecting to Trump | foxnews.com |
| Pro-Republican | Majority of Americans Say Trump Can Keep Businesses, Poll Shows | bloomberg.com |
| Pro-Republican | Donald Trump Strikes Conciliatory Tone in Meeting With Tech Executives | wsj.com |
| Pro-Republican | Companies are already canceling plans to move U.S. jobs abroad | msn.com |
| Pro-Republican | She claimed she was attacked by men who yelled “Trump” and grabbed her hijab. Police say she lied. | washingtonpost.com |
| Pro-Republican | At GOP Convention Finale, Donald Trump Vows to Protect LGBTQ Community | fortune.com |
| Pro-Democrat | North Carolina Republicans Push Legislation to Hobble Incoming Democratic Governor | huffingtonpost.com |
| Pro-Democrat | Vladimir Putin ‘personally involved’ in US hack, report claims | theguardian.com |
| Pro-Democrat | Trump Lashes Out At Vanity Fair, One Day After It Lambastes His Restaurant | npr.org |
| Pro-Democrat | Donald Trump Says He’d ‘Absolutely’ Require Muslims to Register | nytimes.com |
| Pro-Democrat | The Small Businesses Near Trump Tower Are Experiencing A Miniature Recession | slate.com |
| Pro-Democrat | Trump Questions Russia’s Election Meddling on Twitter - Inaccurately | nytimes.com |

Study 3 ‘Fake News’ Items

| Political Valence | Headline | Source |
|---|---|---|
| Pro-Republican | Election Night: Hillary Was Drunk, Got Physical With Mook and Podesta | dailyheadlines.net |
| Pro-Republican | Donald Trump Protester Speaks Out: “I Was Paid $3,500 To Protest Trump’s Rally” | abcnews.com.co |
| Pro-Republican | NYT David Brooks: “Trump Needs To Decide If He Prefers To Resign, Be Impeached Or Get Assassinated” | unitedstates-politics.com |
| Pro-Republican | Clint Eastwood Refuses to Accept Presidential Medal of Freedom From Obama, Says “He is not my president” | incredibleusanews.com |
| Pro-Republican | Donald Trump Sent His Own Plane To Transport 200 Stranded Marines | uconservative.com |
| Pro-Republican | BLM Thug Protests President Trump With Selfie… Accidentally Shoots Himself In The Face | freedomdaily.com |
| Pro-Democrat | FBI Director Comey Just Proved His Bias By Putting Trump Sign On His Front Lawn | countercurrentnews.com |
| Pro-Democrat | Pennsylvania Federal Court Grants Legal Authority To REMOVE TRUMP After Russian Meddling | bipartisanreport.com |
| Pro-Democrat | Sarah Palin Calls To Boycott Mall of America Because “Santa Was Always White In The Bible” | politicono.com |
| Pro-Democrat | Trump to Ban All TV Shows that Promote Gay Activity Starting with Empire as President | colossil.com |
| Pro-Democrat | Mike Pence: Gay Conversion Therapy Saved My Marriage | ncscooper.com |
| Pro-Democrat | Trump on Revamping the Military: We’re Bringing Back the Draft | realnewsrightnow.com |

Study 3 ‘Real News’ Items

| Political Valence | Headline | Source |
|---|---|---|
| Pro-Republican | House Speaker Ryan praises Trump for maintaining congressional strength | cnbc.com |
| Pro-Republican | Donald Trump Strikes Conciliatory Tone in Meeting With Tech Executives | wsj.com |
| Pro-Republican | At GOP Convention Finale, Donald Trump Vows to Protect LGBTQ Community | fortune.com |
| Pro-Republican | Companies are already canceling plans to move U.S. jobs abroad | msn.com |
| Pro-Republican | Majority of Americans Say Trump Can Keep Businesses, Poll Shows | bloomberg.com |
| Pro-Republican | She claimed she was attacked by men who yelled “Trump” and grabbed her hijab. Police say she lied. | washingtonpost.com |
| Pro-Democrat | North Carolina Republicans Push Legislation to Hobble Incoming Democratic Governor | huffingtonpost.com |
| Pro-Democrat | Vladimir Putin ‘personally involved’ in US hack, report claims | theguardian.com |
| Pro-Democrat | Trump Lashes Out At Vanity Fair, One Day After It Lambastes His Restaurant | npr.org |
| Pro-Democrat | Trump Questions Russia’s Election Meddling on Twitter - Inaccurately | nytimes.com |
| Pro-Democrat | The Small Businesses Near Trump Tower Are Experiencing A Miniature Recession | slate.com |
| Pro-Democrat | Donald Trump Says He’d ‘Absolutely’ Require Muslims to Register | nytimes.com |

Footnotes

This was not preregistered for Study 2; however, it was for Study 3. Hence, we use this analysis strategy to retain consistency across the two fake news studies. The results are qualitatively similar if the social media question is scored continuously.

Participants were asked “On social issues I am: Strongly Liberal, Somewhat Liberal, Moderate, Somewhat Conservative, Strongly Conservative”. The same was true for the economic conservatism item except the prompt was “On economic issues I am:”.

However, it should be noted that when examining simple effects, there was only a significant negative effect of warning condition on perceived accuracy of familiarized (i.e., warned) fake news (Warning: M = 1.71, SD = .55; No Warning: M = 1.82, SD = .56), t(947) = 3.25, p = .001, d = 0.21, and not familiarized real news (Warning: M = 2.71, SD = .54; No Warning: M = 2.74, SD = .52), t < 1.
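As a rough check on the effect size in this footnote, Cohen's d can be approximated from the reported means and standard deviations alone. The sketch below assumes equal group sizes (the per-condition sample sizes are not given here) and uses the simple pooled standard deviation; the function name `cohens_d` is illustrative and not taken from the authors' analysis code. Under those assumptions the result is about 0.20, close to the reported d = 0.21; the small gap is plausibly due to rounding in the reported summary statistics.

```python
import math

def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d with a simple pooled SD (assumes equal group sizes)."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd

# Familiarized fake news, as reported in the footnote:
# No Warning: M = 1.82, SD = .56; Warning: M = 1.71, SD = .55
d_fake = cohens_d(1.82, 0.56, 1.71, 0.55)
print(round(d_fake, 2))
```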

These analyses were not preregistered, although we did preregister a parallel analysis where pro-Democrat and pro-Republican items would be analyzed separately while comparing liberals and conservatives. The present analysis simply combines the data into a more straightforward analysis and uses the binary Clinton/Trump choice to distinguish liberals and conservatives. The effect of prior exposure was significant for fake news when political concordance was determined based on Democrat/Republican party affiliation: Politically concordant: t(609) = 4.8, p < .001; politically discordant: t(609) = 2.9, p = .004.

Degrees of freedom are lower here because this analysis only includes individuals who were unfamiliar with at least one item in each cell of the design (familiarized/novel and fake/real).
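The inclusion rule described in this footnote can be sketched as a simple filter. The data layout, field names (`pid`, `exposure`, `veracity`, `familiar`), and the two example participants below are all hypothetical, chosen only to illustrate the rule; they are not drawn from the study data.

```python
from collections import defaultdict

# The four cells of the design: familiarized/novel crossed with fake/real.
CELLS = {(e, v) for e in ("familiarized", "novel") for v in ("fake", "real")}

def eligible_participants(ratings):
    """Return IDs of participants with at least one unfamiliar item in every cell."""
    cells_covered = defaultdict(set)
    for r in ratings:
        if not r["familiar"]:  # only previously unfamiliar headlines count
            cells_covered[r["pid"]].add((r["exposure"], r["veracity"]))
    return {pid for pid, cells in cells_covered.items() if cells >= CELLS}

# Made-up ratings: p1 covers all four cells; p2 already knew its only novel real item.
ratings = [
    {"pid": "p1", "exposure": "familiarized", "veracity": "fake", "familiar": False},
    {"pid": "p1", "exposure": "familiarized", "veracity": "real", "familiar": False},
    {"pid": "p1", "exposure": "novel", "veracity": "fake", "familiar": False},
    {"pid": "p1", "exposure": "novel", "veracity": "real", "familiar": False},
    {"pid": "p2", "exposure": "familiarized", "veracity": "fake", "familiar": False},
    {"pid": "p2", "exposure": "familiarized", "veracity": "real", "familiar": False},
    {"pid": "p2", "exposure": "novel", "veracity": "fake", "familiar": False},
    {"pid": "p2", "exposure": "novel", "veracity": "real", "familiar": True},
]
print(eligible_participants(ratings))
```

Participants dropped by a filter of this kind no longer contribute to the analysis, which is why the degrees of freedom shrink.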

Whereas participants indicated their familiarity with familiarized items prior to completing the accuracy judgments, they indicated their familiarity with novel items after completing the accuracy judgments. Thus, it is possible that seeing the news headlines in the accuracy judgment phase would increase perceived familiarity. There was no evidence for this, however, as mean familiarity judgment (scored continuously) did not significantly differ based on whether the judgment was made before or after the accuracy judgment phase, t(939) = 0.68, SE = .01, p = .494, d = 0.02. Participants were unfamiliar with 81.2% of the fake news headlines and 49.2% of the real news headlines.

Only unfamiliar headlines are included, so some participants have missing data in one or more cells of the design. Degrees of freedom vary throughout because the maximum number of participants is included in each analysis.

Degrees of freedom change here because this analysis includes the maximum number of individuals who were unfamiliar with at least one fake news item.

In our (also) preregistered analysis that includes both previously familiar and unfamiliar items, there is a main effect of repetition, F(1, 938) = 18.98, MSE = .16, p < .001, η² = .02, but (unlike in Study 2) a significant interaction between exposure and warning condition, F(1, 938) = 7.81, MSE = .16, p = .005, η² = .01. There was a significant repetition effect for fake news in the no warning condition, t(475) = 5.31, SE = .03, p < .001, d = 0.24, but no significant effect in the warning condition, t(463) = 1.30, SE = .03, p = .193, d = 0.06. It is possible that prior knowledge of the items facilitated explicit recall of the warning, which may have mitigated the illusory truth effect. See SI for means and further analyses.
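The two within-condition effect sizes in this footnote are consistent with the usual conversion for a paired (within-subject) t-test, d = t / √n with n = df + 1. The sketch below assumes that each test compares familiarized and novel items within the same participants; the helper name `d_from_paired_t` is illustrative only. With the reported values it gives roughly 0.24 and 0.06.

```python
import math

def d_from_paired_t(t_value, df):
    """Standardized mean difference for a paired t-test: t / sqrt(n), where n = df + 1."""
    n = df + 1
    return t_value / math.sqrt(n)

d_no_warning = d_from_paired_t(5.31, 475)  # no warning condition
d_warning = d_from_paired_t(1.30, 463)     # warning condition
print(round(d_no_warning, 2), round(d_warning, 2))
```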

We focus on fake news headlines here because the political concordance manipulation cuts the number of items in half. Including real news in this analysis decreases the number of participants markedly because the ANOVA requires each participant to contribute at least one observation to each cell of the design. Nonetheless, the full ANOVA reveals a significant main effect of repetition, F(1,312) = 8.94, p = .003, η² = .03, and no interaction with political concordance, F < 1. The effect of prior exposure was also significant for fake news when political concordance was determined based on Democrat/Republican party affiliation: Politically concordant: t(494) = 4.1, p < .001; politically discordant: t(529) = 2.3, p = .020.

This specific analysis was not preregistered. Rather, the preregistration called for a comparison of the full 16 items from session 1 with the 8 novel items in session 2. This, too, revealed a significant main effect of repetition (using the same ANOVA as in the session 1 analysis), F(1,453) = 12.91, p < .001, η² = .03. However, such an analysis does not tell us about the increasing effect of exposure, hence our deviation from the preregistration. See SI for further details and analyses.

All data are available online (https://osf.io/txf46/).

A working paper version of the manuscript was posted online via the Social Sciences Research Network (https://ssrn.com/abstract=2958246) and on ResearchGate (https://www.researchgate.net/publication/317069544_Prior_Exposure_Increases_Perceived_Accuracy_of_Fake_News).

Portions of this research were presented in 2017 and 2018 at the following venues: 1) Conference for Combating Fake News: An Agenda for Research and Action. Harvard Law School & Northeastern University, 2) Canadian Society for Brain, Behaviour and Cognitive Science Annual Conference, 3) Brown University, Department of Psychology, 4) Harvard University, Department of Psychology, 5) Yale University, Institute for Network Science and Technology and Ethics Study Group, 6) University of Connecticut, Cognitive Science Colloquium, 7) University of Regina, Department of Psychology, 8) University of Saskatchewan, Department of Psychology, and 9) The Society of Personality and Social Psychology 2018 Annual Conference.

References

- Allcott H, Gentzkow M. Social Media and Fake News in the 2016 Election. NBER Working Paper No. 23089. 2017. Retrieved from http://www.nber.org/papers/w23089

- Alter AL, Oppenheimer DM. Uniting the tribes of fluency to form a metacognitive nation. Personality and Social Psychology Review. 2009;13(3):219–235. https://doi.org/10.1177/1088868309341564

- Arkes HR, Boehm LE, Xu G. Determinants of judged validity. Journal of Experimental Social Psychology. 1991;27(6):576–605. https://doi.org/10.1016/0022-1031(91)90026-3

- Arkes HR, Hackett C, Boehm L. The generality of the relation between familiarity and judged validity. Journal of Behavioral Decision Making. 1989;2(2):81–94. https://doi.org/10.1002/bdm.3960020203

- Bacon FT. Credibility of repeated statements: Memory for trivia. Journal of Experimental Psychology: Human Learning & Memory. 1979;5(3):241–252. https://doi.org/10.1037/0278-7393.5.3.241

- Beck J. This article won’t change your mind: The facts on why facts alone can’t fight false beliefs. The Atlantic. 2017. Retrieved from https://www.theatlantic.com/science/archive/2017/03/this-article-wont-change-your-mind/519093/

- Begg IM, Anas A, Farinacci S. Dissociation of processes in belief: Source recollection, statement familiarity, and the illusion of truth. Journal of Experimental Psychology: General. 1992;121(4):446–458. https://doi.org/10.1037/0096-3445.121.4.446

- Berinsky AJ. Rumors and Health Care Reform: Experiments in Political Misinformation. British Journal of Political Science. 2017;47:241–246. https://doi.org/10.1017/S0007123415000186

- Calvert D. The Psychology Behind Fake News. 2017. Retrieved August 2, 2017, from https://insight.kellogg.northwestern.edu/article/the-psychology-behind-fake-news

- Coppock A. Generalizing from Survey Experiments Conducted on Mechanical Turk: A Replication Approach. 2016. Retrieved from https://alexandercoppock.files.wordpress.com/2016/02/coppock_generalizability2.pdf

- Corlett PR. Why do delusions persist? Frontiers in Human Neuroscience. 2009;3. https://doi.org/10.3389/neuro.09.012.2009

- Dechene A, Stahl C, Hansen J, Wanke M. The Truth About the Truth: A Meta-Analytic Review of the Truth Effect. Personality and Social Psychology Review. 2010;14(2):238–257. https://doi.org/10.1177/1088868309352251

- Diana R, Yonelinas A, Ranganath C. Imaging recollection and familiarity in the medial temporal lobe: a three-component model. Trends in Cognitive Sciences. 2007. https://doi.org/10.1016/j.tics.2007.08.001. Retrieved from http://www.sciencedirect.com/science/article/pii/S1364661307001878

- Ecker U, Hogan J, Lewandowsky S. Reminders and Repetition of Misinformation: Helping or Hindering Its Retraction? Journal of Applied Research in Memory and Cognition. 2017;6:185–192.

- Ecker UKH, Lewandowsky S, Tang DTW. Explicit warnings reduce but do not eliminate the continued influence of misinformation. Memory & Cognition. 2010;38(8):1087–1100. https://doi.org/10.3758/MC.38.8.1087