Abstract

Oral cancer is a growing health issue in a number of low- and middle-income countries (LMIC), particularly in South and Southeast Asia. The described dual-modality, dual-view, point-of-care oral cancer screening device, developed for high-risk populations in remote regions with limited infrastructure, implements autofluorescence imaging (AFI) and white light imaging (WLI) on a smartphone platform, enabling early detection of pre-cancerous and cancerous lesions in the oral cavity with the potential to reduce morbidity, mortality, and overall healthcare costs. Using a custom Android application, this device synchronizes external light-emitting diode (LED) illumination and image capture for AFI and WLI. Data is uploaded to a cloud server for diagnosis by a remote specialist through a web app, with the ability to transmit triage instructions back to the device and patient. Finally, with the on-site specialist’s diagnosis as the gold-standard, the remote specialist and a convolutional neural network (CNN) were able to classify 170 image pairs into ‘suspicious’ and ‘not suspicious’ with sensitivities, specificities, positive predictive values, and negative predictive values ranging from 81.25% to 94.94%.

1 Introduction

Oral cancer incidence and death rates are rising in low- and middle-income countries (LMIC) [1–5]. As of 2012, 65% of new oral cancer cases and 77% of oral cancer deaths occurred in LMIC [6], with five-year survival rates under 50% in some countries [7].

The risk of developing oral cancer is increased by a number of lifestyle choices, including tobacco [8, 9] and alcohol use [10]. Particularly in Asia, betel quid (or paan) chewing (with or without tobacco [11, 12]) increases rates of oral squamous cell carcinoma (OSCC) and oral submucous fibrosis (OSMF) [13–21]. Betel quid (typically consisting of betel leaf, areca nut, slaked lime, and possibly tobacco [22]) was identified as a contributor to increased oral cancer incidence as early as 1902 [23]. Despite the risk of developing oral cancer, its psychostimulating qualities keep betel quid popular [22, 24–26].

High-risk populations living in remote areas with limited access to healthcare infrastructure need low-cost, easy-to-use medical imaging devices that enable early diagnosis with increased sensitivity, as early diagnosis is well correlated with higher survival rates [7]. Conventional visual examinations achieve sensitivities around 60% with specificity over 98.5% [27] but require visible lesions, possibly delaying diagnosis.

Autofluorescence imaging (AFI) is an alternative detection technique that uses changes in the radiant exitance of oral tissue fluorescence under 400–410 nm illumination [28–30] to discriminate potentially malignant oral lesions, removing the requirement that the lesion be visible [19, 28, 31–41]. Increasing dysplasia results in a decreased fluorescence signal, due to changes in endogenous fluorophores and increased absorption by hemoglobin [29, 33, 34, 42–44]. Carcinogenesis affects cellular structure, breaking down collagen and elastin cross-links and reducing the fluorescence signal [29, 33, 35, 44]. Additionally, changes in mitochondrial metabolism decrease fluorescence from flavin adenine dinucleotide (FAD) [43]. Increased microvascularization results in higher hemoglobin content [42, 45], increasing absorption at both excitation and emission wavelengths [46]. Lastly, in addition to decreased green fluorescence, a 635 nm emission peak appears due to increased porphyrin uptake in cancerous cells [47, 48], and the ratio of the signal at 635 nm to that at 500 nm can indicate possible cancerous lesions [30, 39, 40].

Previous studies of AFI systems have typically achieved sensitivities greater than 71% and specificities of 15.3%–100% [30, 42, 49–54], though a few studies have achieved sensitivities of only 30%–50% [45, 55]. Increased sensitivity will lead to earlier diagnosis of oral cancer, enabling prompt treatment of the disease, while the specificity of an AFI device needs to remain high to avoid unneeded, invasive biopsies.

In high-risk, remote populations with low doctor-to-patient ratios, the ideal AFI system can be operated by frontline health workers in primary health centers, dentists, nurses, or any community member, including those without formal healthcare training. Where a trained specialist is not present, a remote specialist can be integrated into the clinical environment through the internet, allowing for informed diagnosis. Smartphones provide portable image collection, computation, and data transmission controlled by a simple touchscreen interface, meeting the need for a cancer screening device that is simple to use and connected to the internet. Using the smartphone’s data transmission capabilities, the collected data can be uploaded to a cloud server, where a remote specialist can access the images and make a diagnosis. Additionally, deep-learning tools such as a convolutional neural network (CNN) can be implemented in the cloud and used for automatic image analysis and classification [56].

2 Materials

2.1 Hardware

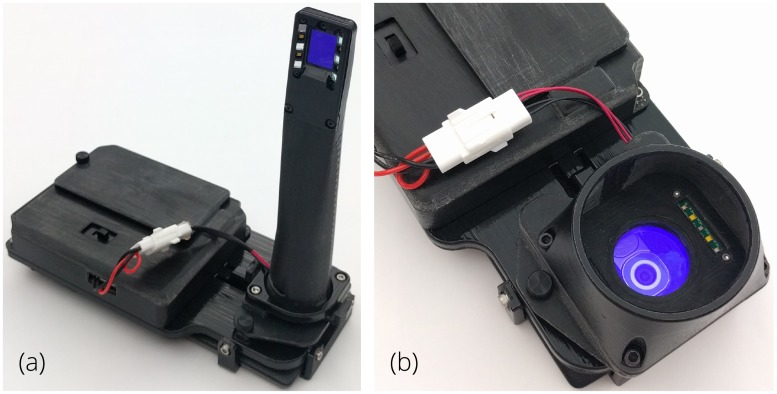

To address the need for oral cancer screening in high-risk populations, we have developed a low-cost, point-of-care smartphone-based system (Fig 1). The dual-view, oral cancer screening device augments a commercially available Android smartphone (LG G4, LG, Seoul, South Korea) for AFI and white light imaging (WLI) both internal to the oral cavity with an intraoral probe, and external with a whole mouth imaging module [57]. The whole cavity imaging module provides a wide field of view (FOV) image for assessment of the patient’s overall oral health.

Fig 1. Smartphone-based oral cancer screening device using both WLI and AFI.

Interchangeable modules installed on a common platform allow for both (a) intraoral imaging and (b) whole cavity imaging.

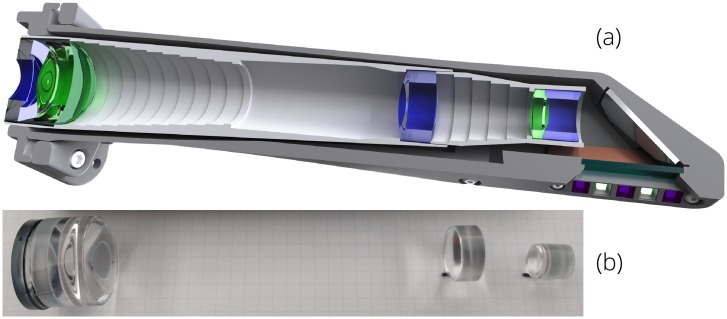

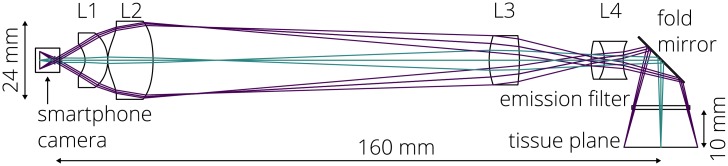

The intraoral probe’s custom optical system (Figs 2 and 3) extends the entrance pupil away from the smartphone camera aperture and allows close-focus imaging of the oral tissues. A hygienic sleeve (TIDI Products, Neenah, WI) is used with the intraoral probe for infection prevention. Smartphone cameras are designed to capture a wide field of view from a relatively long distance, so modifying this optical system to (a) decrease the field of view by ∼90%, (b) focus on a close object, (c) use the entire image sensor, and (d) fit in a package that sits comfortably in the oral cavity and reaches the base of the tongue and the cheek pockets is challenging. During the design process, the smartphone camera lenses were modeled as a single paraxial surface to ensure compatibility with any smartphone whose camera can be set to focus at infinity. The prescription of the optical system is provided in Table 1. The sag of the aspheric surfaces is defined using an even polynomial [58]

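$$ z(r) = \frac{c\,r^{2}}{1 + \sqrt{1 - (1 + k)\,c^{2} r^{2}}} + \alpha_{1} r^{2} + \alpha_{2} r^{4} + \alpha_{3} r^{6} + \cdots \tag{1} $$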

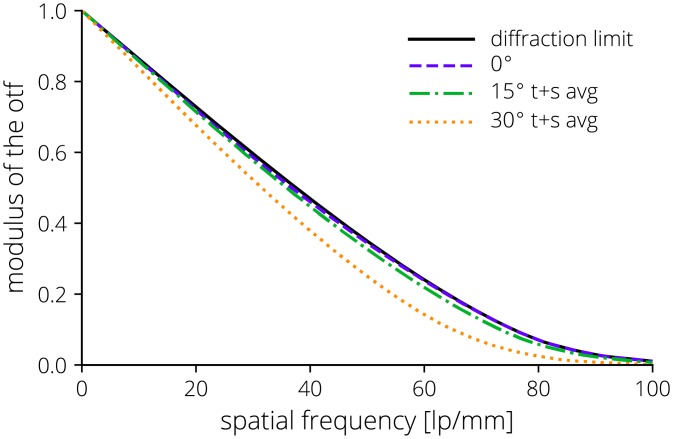

where r is the radial distance from the optical axis, c is the curvature (1/R), k is the conic constant, and the α’s define the coefficients of the even r-polynomial. The lenses were designed using poly(methyl methacrylate) (PMMA) and OKP4HT (Osaka Gas Chemicals, Osaka, Japan) and fabricated using single point diamond turning (Moore Nanotechnology Systems, Swanzey, NH). A rendered sectioned view of the intraoral probe assembly and the manufactured lenses are shown in Fig 2. A layout of the optical system is shown in Fig 3 and the nominal modulation transfer function (MTF) is provided in Fig 4.
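As a worked illustration of Eq (1), the short Python sketch below evaluates the sag of an even asphere. It assumes the common optical-design convention in which α1, α2, α3, … multiply r², r⁴, r⁶, …, so the α2 and α3 columns of Table 1 are taken as the r⁴ and r⁶ coefficients; α1 and any higher-order terms not listed in Table 1 are assumed to be zero, and lengths are assumed to be in mm. The function name is hypothetical.

```python
import math

def even_asphere_sag(r, R, k, alphas):
    """Sag z(r) of an even asphere (conic base plus even polynomial).

    r      -- radial distance from the optical axis (mm, assumed)
    R      -- radius of curvature (mm); curvature c = 1/R
    k      -- conic constant
    alphas -- [alpha1, alpha2, alpha3, ...] multiplying r**2, r**4, r**6, ...
    """
    c = 1.0 / R
    arg = 1.0 - (1.0 + k) * c**2 * r**2
    if arg < 0:
        raise ValueError("r lies outside the valid aperture of the conic term")
    conic = c * r**2 / (1.0 + math.sqrt(arg))
    # alphas[i] multiplies r**(2*(i+1)): alpha1*r^2, alpha2*r^4, alpha3*r^6, ...
    poly = sum(a * r ** (2 * (i + 1)) for i, a in enumerate(alphas))
    return conic + poly

# Surface 1 of Table 1 (OKP4HT) at r = 1 mm; alpha1 is not listed, so 0 is assumed.
s1 = even_asphere_sag(1.0, R=-20.585, k=12.222, alphas=[0.0, -3.086e-4, 8.902e-7])
# s1 is approximately -0.0248 mm
```

With k = 0 and no polynomial terms, the function reduces to the sag of a sphere, R − √(R² − r²) for R > 0, which is a convenient sanity check.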

Fig 2.

(a) Intraoral probe section view showing the mechanical structure, lenses, and illumination LEDs; and (b) the diamond turned lenses.

Fig 3. Layout of the intraoral probe optical design.

Table 1. Intraoral probe optical system prescription.

| Surface | Material | Radius (mm) | Thickness (mm) | Conic | α2 | α3 |
|---|---|---|---|---|---|---|
| Obj | air | infinity | 32.6 | | | |
| 1 | OKP4HT | -20.585 | 5.0 | 12.222 | -3.086·10⁻⁴ | 8.902·10⁻⁷ |
| 2 | PMMA | 9.862 | 3.5 | | | |
| STOP | air | -20.904 | 19.0 | 15.340 | -5.511·10⁻⁵ | 5.041·10⁻⁶ |
| 4 | PMMA | 46.623 | 8.0 | 11.035 | -4.636·10⁻⁵ | -8.567·10⁻⁸ |
| 5 | air | -20.564 | 89.0 | -1.795 | -2.443·10⁻⁵ | -5.850·10⁻⁸ |
| 6 | PMMA | 33.722 | 12.0 | 4.506 | 5.408·10⁻⁵ | 1.450·10⁻⁷ |
| 7 | air | -7.986 | 0.0 | -4.612 | 7.972·10⁻⁵ | 4.231·10⁻⁷ |
| 8 | OKP4HT | 10.437 | 6.0 | 0.397 | 3.454·10⁻⁵ | 1.445·10⁻⁶ |
| 9 | air | 4.480 | 8.0 | -3.363 | 4.041·10⁻⁴ | 1.126·10⁻⁵ |
| 10 | smartphone camera | | | | | |

Fig 4. Nominal MTF of the intraoral probe at the tissue plane with the sagittal and tangential data averaged.

The system uses six 405 nm Luxeon UV U1 LEDs (Lumileds, Amsterdam, Netherlands) for AFI and four 4000 K Luxeon Z ES LEDs (Lumileds) for WLI and general screening. The LEDs are placed in a plane-symmetric pattern on either side of the optical axis (Figs 1 and 2). In the intraoral probe, the LEDs are angled toward the object plane to increase illumination uniformity. An emission filter (Asahi Spectra, Tokyo, Japan) with a 470 nm cut-on wavelength is installed in the imaging channel for AFI, and an excitation filter (Asahi Spectra) is installed in front of the violet LEDs to limit their output in the passband of the emission filter. The whole mouth module uses the unmodified smartphone camera optics to provide wide-FOV imaging and includes LEDs at both illumination wavelengths, with an emission filter for AFI in the imaging channel.

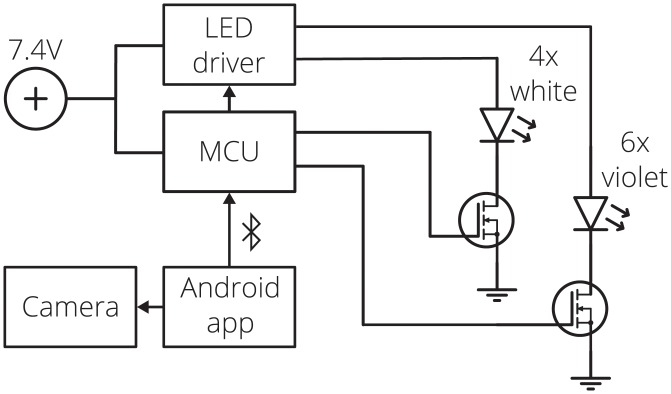

The illumination LEDs are driven by a switching boost voltage regulator (Linear Technology, Milpitas, CA), which is controlled by the custom Android application (Sec 2.2) through a Bluetooth-connected microcontroller unit (MCU, SparkFun Electronics, Niwot, CO). Two 3.7 V 18650 Li-ion batteries (Orbtronic, Saint Petersburg, FL) power the MCU and LED driver. The MCU sets the LED current through a digital potentiometer (Analog Devices, Norwood, MA) and switches between the LED strings using signal voltages applied to MOSFETs. The smartphone application synchronizes the LED illumination with image capture, minimizing LED on-time and thereby reducing power consumption and heat generation. A block diagram is shown in Fig 5.

Fig 5. Block diagram of the oral screening system electronics.

Finally, the phone and electronics are mounted to a low-cost, 3D-printed mechanical structure of VeroBlackPlus RGD875 plastic (Stratasys, Eden Prairie, MN). This structure also provides a universal mount for the interchangeable imaging modules. A simple redesign of the mechanical structure could allow for a variety of smartphone sizes and camera locations on the backside of the smartphone.

2.2 Software

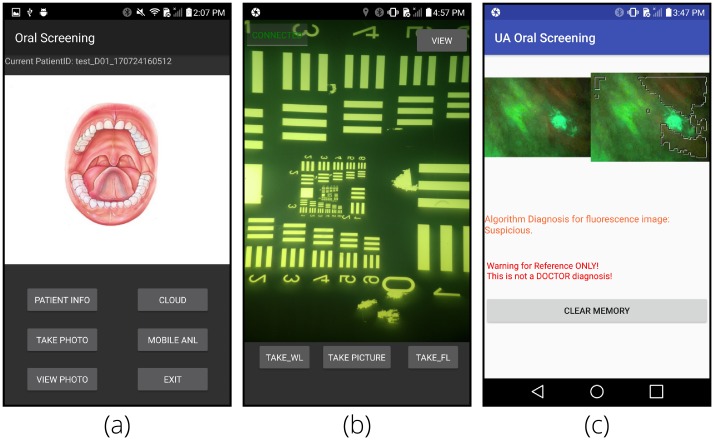

A custom Android application (app) was developed to guide the user through the data collection process. When first opened, the app prompts the user to create a new case ID or select an ID from a previous session, storing all the data from a single patient under the same ID. From the main menu, the user can enter relevant patient data (age, history of tobacco or paan use, etc.), collect and view AFI and WLI images, run on-phone image processing (Fig 6), or upload data to the cloud. During image capture, the smartphone communicates with the MCU over its Bluetooth connection to synchronize image capture and LED illumination. After capture, the images can be viewed within the app, or the AFI images can be processed on the phone using the red-to-green signal ratio [30, 59] to produce a ‘suspicious’ or ‘not suspicious’ classification.
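The on-phone red-to-green ratio analysis is not specified in detail here. The following is a minimal sketch, assuming an RGB AFI image stored as a NumPy array and a single image-wide decision threshold; the function names and the threshold of 1.0 are hypothetical placeholders, not the app's calibrated values. A higher ratio corresponds to relatively more red (porphyrin) emission and less green autofluorescence, which the Introduction associates with possible cancerous lesions.

```python
import numpy as np

def red_green_ratio_map(afi_rgb, eps=1e-6):
    """Per-pixel red/green ratio of an AFI image (H x W x 3 array, RGB order)."""
    rgb = np.asarray(afi_rgb, dtype=np.float64)
    red, green = rgb[..., 0], rgb[..., 1]
    return red / (green + eps)  # eps avoids division by zero in dark pixels

def classify_afi(afi_rgb, threshold=1.0):
    """Label an AFI image 'suspicious' if its mean R/G ratio exceeds a threshold.

    Hypothetical rule: the threshold value and the use of the image-wide mean
    are illustrative assumptions; a real classifier would be calibrated on
    labeled data and would likely work on a region of interest.
    """
    ratio = red_green_ratio_map(afi_rgb)
    return "suspicious" if ratio.mean() > threshold else "not suspicious"
```

A per-pixel ratio map (rather than a single number) also lets the app highlight where the red/green balance shifts, which is closer to what a specialist would want to review.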

Fig 6. Screenshots of the custom Android application.

(a) shows the main menu of the app where buttons allow for navigation to image capture, image viewing, image upload to the cloud, and mobile analysis. (b) shows the image capture interface for both WLI (using the ‘TAKE_WL’ button) and AFI (using the ‘TAKE_FL’ button) individually or sequentially using the ‘TAKE PICTURE’ button. (c) shows a sample result for on-phone image processing of AFI images.

The Android Camera2 API [60] gives the app low-level control of the camera, including exposure, gain, focus, ISO, color conversion, and white balance. The LG G4 runs Android 6.0 Marshmallow, which supports most Camera2 API features. Because the Camera2 API is available on Android 5.0 Lollipop and newer, 84.7% of Android devices can run the app [61]. Additionally, the app could be ported to other popular smartphone operating systems, though the device cost could increase significantly.

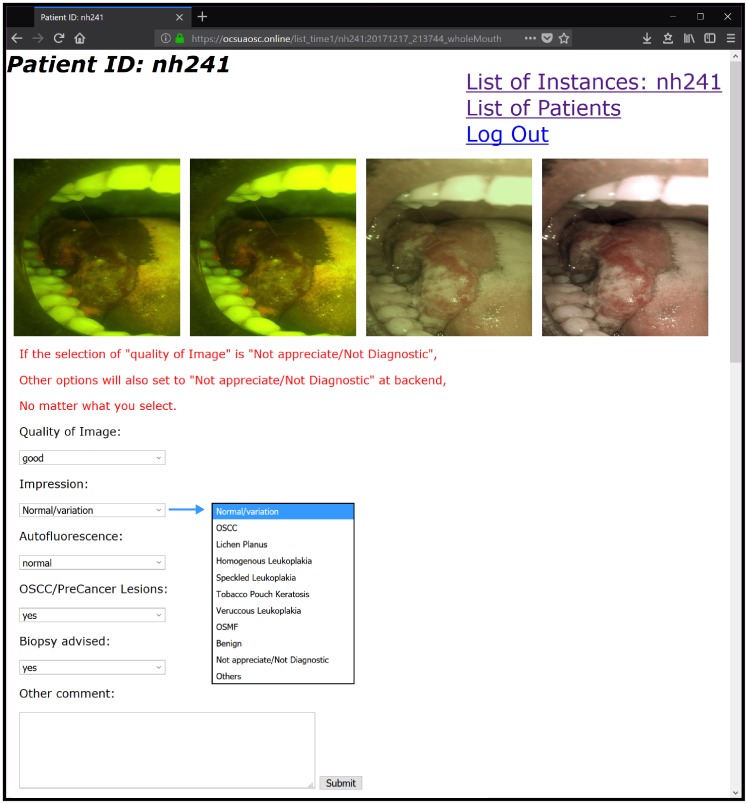

The patient data, images, and location data (for further spatio-temporal analysis) are uploaded to a cloud server over Wi-Fi and can be accessed remotely from anywhere with an internet connection through a web app deployed on the server (Fig 7). When viewing images, the specialist is presented with the original, full-resolution images along with sliders to adjust contrast and brightness. On the same web page, the specialist uses dropdown menus to select a diagnosis from a list (normal, lichen planus, leukoplakia, erythroplakia, etc.) and a text box to provide triage instructions to the patient.

Fig 7. Sample web portal screen for remote viewing and diagnosis of images.

The four images presented from left to right are: (i) original AFI, (ii) AFI with histogram equalization, (iii) original WLI, (iv) WLI with color correction.
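Panel (ii) of Fig 7 shows AFI with histogram equalization. The web app's exact implementation is not described; one standard choice is global histogram equalization, sketched below for a single 8-bit channel (for example, the green channel of an AFI image). The function name and the choice of channel are assumptions.

```python
import numpy as np

def equalize_histogram(gray):
    """Global histogram equalization of an 8-bit single-channel image."""
    img = np.asarray(gray, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)][0]  # CDF value of the darkest occupied level
    total = img.size
    if total == cdf_min:  # constant image: nothing to stretch
        return img.copy()
    # Map each gray level through the normalized CDF onto the full 0..255 range.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]
```

For dim AFI images whose pixel values occupy a narrow band, this spreads the occupied levels across the full display range, which makes small fluorescence differences easier to see.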

The cloud server hosts a virtual machine, configured on Google Compute Engine, that automatically classifies uploaded images with a pre-trained convolutional neural network (CNN), estimating the likelihood that suspicious lesions are present in each image.

A reminder email is automatically sent to the remote specialists whenever a new case is uploaded to the cloud. Once a remote specialist diagnoses a waiting case, a summary report is generated from the uploaded smartphone data, the CNN results, and the diagnoses. The reports can be viewed at any time in the web app and downloaded to the smartphone through the Android app.

3 Methods

3.1 System characterization

3.1.1 Imaging

Performance of the intraoral imaging system was characterized by (a) measuring the MTF without the smartphone camera, (b) measuring the MTF with the smartphone camera, (c) evaluating the predicted assembled performance with a Monte Carlo analysis, (d) measuring the cutoff frequency, and (e) evaluating the field of view.

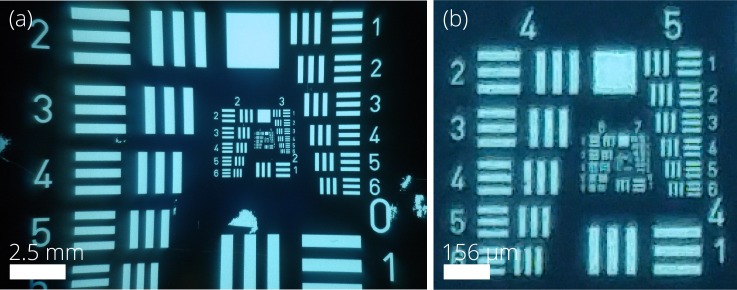

The cutoff frequency and field of view of the intraoral probe optical system were validated by imaging a 1951 USAF resolution test chart.

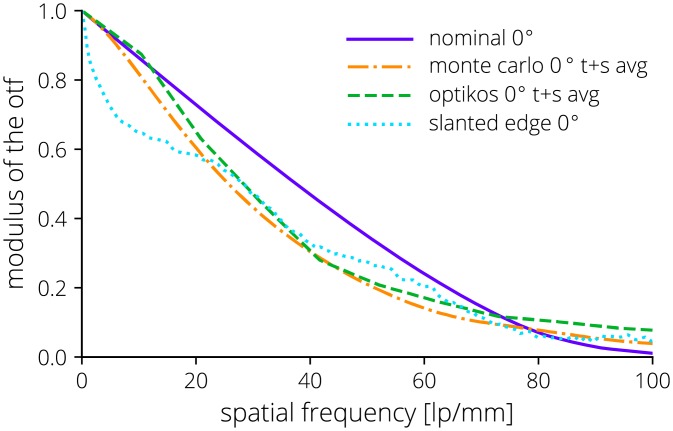

The MTF of the optical system was directly measured using both an Optikos LensCheck (Optikos, Wakefield, MA) instrument and the slanted edge method [62, 63]. The LensCheck system directly measures the point-spread function (PSF) of the intraoral lens system without the smartphone camera lens or image sensor and the MTF is calculated from the normalized Fourier transform of the PSF. The slanted edge method was used to measure the entire optical system including the external lens system, the smartphone camera, and the image sensor. The slanted edge method measures an edge-spread function (ESF) of which the derivative is the line-spread function (LSF). The normalized Fourier transform of the LSF is the one-dimensional MTF. The results from multiple regions of interest across the slanted edge in the central field of view were averaged. The spatial frequency limit was then scaled by the limiting spatial frequency of the added intraoral optical system. Both MTF measurements were compared to representative assembled performance of the passively aligned intraoral probe optics modeled using a Monte Carlo analysis in Zemax OpticStudio (Zemax, Kirkland, WA).
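The ESF → LSF → MTF chain described above can be sketched in a few lines. This simplified version assumes that a 1-D edge profile has already been extracted and that the edge lies near the center of that profile; it omits the edge-angle estimation and oversampled binning that give the slanted-edge method its sub-pixel sampling. The function name and the Hann window are illustrative choices, not the authors' processing.

```python
import numpy as np

def mtf_from_esf(esf, pixel_pitch_mm):
    """Estimate a 1-D MTF from a sampled edge-spread function (ESF).

    Simplified sketch: `esf` is a 1-D profile across the edge sampled every
    `pixel_pitch_mm` mm, with the edge roughly centered in the profile.
    Returns (spatial frequencies in cycles/mm, normalized MTF).
    """
    esf = np.asarray(esf, dtype=np.float64)
    lsf = np.gradient(esf)              # LSF = derivative of the ESF
    lsf *= np.hanning(lsf.size)         # taper the tails (assumes a centered edge)
    spectrum = np.abs(np.fft.rfft(lsf))
    mtf = spectrum / spectrum[0]        # normalize so that MTF(0) = 1
    freqs = np.fft.rfftfreq(lsf.size, d=pixel_pitch_mm)
    return freqs, mtf
```

For an ideal step edge, the LSF collapses to two adjacent samples and the resulting MTF falls from 1 at zero frequency to 0 at the Nyquist frequency, 1/(2 × pixel pitch).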

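Because the emission filter in the imaging channel distorts the color space, more accurate color representation, which is important for image evaluation by a remote specialist, is achieved by applying a custom color matrix

$$ \begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix} \begin{bmatrix} R \\ G \\ B \end{bmatrix} \tag{2} $$

defined by the $a_{mn}$ values, which maps the camera RGB values to the CIEXYZ color space [64]. After imaging a standard 24-patch color checker board (X-Rite, Grand Rapids, MI) with known CIEXYZ values, the matrix A composed of the $a_{mn}$ coefficients can be calculated by

$$ A = T\,C^{\mathsf{T}} \left( C\,C^{\mathsf{T}} \right)^{-1} \tag{3} $$

where T is the matrix of known CIEXYZ values and C is the matrix of measured RGB camera values (each 3 × 24, with one column per patch).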
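The least-squares fit of the color matrix can be sketched with NumPy, assuming T and C are stored as 3 × N arrays with one column per color-checker patch (N = 24 here). Rather than forming the inverse explicitly, the sketch solves the equivalent least-squares problem with `numpy.linalg.lstsq`; the helper names are hypothetical.

```python
import numpy as np

def fit_color_matrix(T, C):
    """Least-squares 3 x 3 matrix A such that T ≈ A @ C.

    T -- 3 x N known CIEXYZ values of the N color-checker patches
    C -- 3 x N measured camera RGB values of the same patches
    Mathematically equal to A = T C^T (C C^T)^-1 when C C^T is invertible.
    """
    T = np.asarray(T, dtype=np.float64)
    C = np.asarray(C, dtype=np.float64)
    # Solve C^T A^T ≈ T^T column by column, avoiding an explicit inverse.
    A_T, *_ = np.linalg.lstsq(C.T, T.T, rcond=None)
    return A_T.T

def apply_color_matrix(A, rgb_image):
    """Map an H x W x 3 camera RGB image to CIEXYZ with the fitted matrix."""
    return np.einsum("mn,hwn->hwm", A, np.asarray(rgb_image, dtype=np.float64))
```

Fitting A once per device and storing it lets every subsequent image be corrected with a single 3 × 3 matrix multiply per pixel.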

3.1.2 Illumination

The white light and violet light illumination uniformity was measured by imaging a matte white surface without the emission filter in place. For this test, the violet LEDs were replaced with white LEDs with similar radiance characteristics from the same product series (Luxeon Z) to avoid exciting fluorescence from the measurement surface. The uniformity measurements are corrected by the relative illumination (RI) of the imaging system. The RI of the combined intraoral lens system and the smartphone camera was measured using a liquid light guide coupled source diffused by multiple plates of ground glass. The measured uniformity is compared to a non-sequential raytracing model (FRED, Photon Engineering, Tucson, AZ) using LED rayfiles from the manufacturer. Uniformity is quantified using the coefficient of variation (cv) [65] on normalized data,

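$$ c_{v} = \frac{\sigma}{\bar{x}}, \qquad \sigma = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( x_{i} - \bar{x} \right)^{2}} \tag{4} $$

where $x_i$ is the luminance value of each pixel, $\bar{x}$ is the mean of the N pixels in the image, and σ is the standard deviation of the pixel values.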
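A minimal sketch of the uniformity calculation, assuming the image and the relative illumination (RI) map are 2-D arrays of the same shape and that the RI correction is a per-pixel division. It computes the coefficient of variation as σ divided by the mean, using the population standard deviation; the function name is hypothetical. Because σ/x̄ is scale-invariant, the normalization step does not change the result and is kept only to mirror the description above.

```python
import numpy as np

def illumination_cv(image, relative_illumination):
    """Coefficient of variation of an illumination image after RI correction.

    image                 -- 2-D luminance image of the matte white target
    relative_illumination -- 2-D RI map of the imaging system (same shape),
                             e.g. normalized to 1 on axis (assumed convention)
    """
    corrected = np.asarray(image, dtype=float) / np.asarray(relative_illumination, dtype=float)
    normalized = corrected / corrected.max()     # normalize the data to a peak of 1
    return normalized.std() / normalized.mean()  # cv = sigma / mean (ddof = 0)
```

A perfectly uniform field, once divided by the system RI, gives cv = 0; the value grows as the illumination pattern departs from uniform.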

3.2 Field testing and CNN classification

A pilot human subjects study was performed at KLE Society’s Institute of Dental Sciences (Bangalore, India), Mazumdar Shaw Medical Centre (Bangalore, India), and the Christian Institute of Health Sciences & Research (Dimapur, India) to demonstrate the feasibility of the oral cancer screening hardware, remote clinical diagnosis workflow, and classification algorithms. This study received institutional review board (IRB) approval from Mazumdar Shaw Cancer Centre (NNH/MEC-CL-2016-394) and University of California, Irvine (HS#2002-2805). All subjects provided informed written and oral consent.

Inclusion criteria included clinically suspicious oral lesions, a history of previously treated OSCC with no current evidence of cancer recurrence at least six months after cessation of treatment, or the presence of recently diagnosed, untreated OSCC or pre-cancerous lesions. Exclusion criteria included being 18 years of age or younger, currently undergoing treatment for malignancy, pregnancy, undergoing treatment for tuberculosis, or suffering from any acute illness.

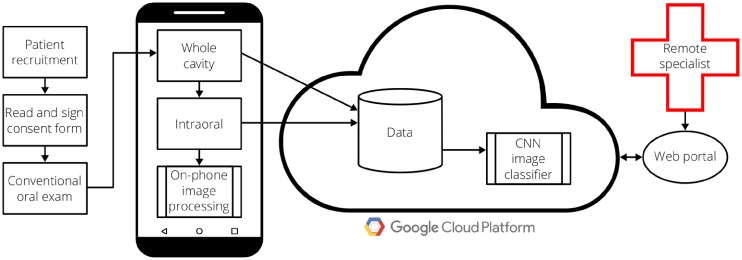

The full field testing workflow is shown in Fig 8. When patients arrived for their visit, they first read, understood, and signed a consent form. After consent was obtained, a general dentist or oral oncology specialist performed a conventional visual oral exam. Next, the general dentist performed the smartphone-based imaging exam, collecting both AFI and WLI with both the whole cavity imaging module and the intraoral probe module. Finally, the oral oncology specialist clinically diagnosed each lesion site, with the clinical diagnosis serving as the gold standard.

Fig 8. Field testing workflow for smartphone-based oral screening.

Based on the gold-standard diagnosis, the images were assigned to either the normal class or the suspicious class. Diagnoses of oral squamous cell carcinoma, lichen planus, homogeneous leukoplakia, speckled leukoplakia, tobacco pouch keratosis, verrucous leukoplakia, and oral submucous fibrosis were included in the suspicious class. Diagnoses of normal/variation were included in the normal class, where variation covers normal variations of the oral mucosa such as fissured tongue, Fordyce granules, leukoedema, physiological pigmentation, and linea alba buccalis [66–68]. Lesions diagnosed as benign were not included in either class.

The captured images were uploaded to the cloud server for diagnosis by a remote specialist and, for the intraoral images, for classification by the convolutional neural network (CNN). Image pairs (WLI and AFI) were screened by the remote specialist for sufficient image quality (minimal motion blur, in focus) to make a diagnosis.

The intraoral images were then classified with a trained CNN. For the CNN training, methods commonly used in network training were applied including transfer learning [69] and data augmentation [70–72]. For data augmentation, the original images were rotated and flipped to feed the network more data for training. Additionally, transfer learning was applied by using a VGG-M [70] network pre-trained on the ImageNet dataset [73]. The network was modified for our task by replacing the final dense layer and softmax layer and then training the network with our dataset.
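The text states that the images were rotated and flipped, and Section 4.2 reports that augmentation increased the dataset 8×. One transform set that yields exactly eight variants per image is the four 90° rotations, each with and without a mirror flip (the symmetries of a square); the sketch below assumes that set, which may differ from the authors' exact choice. Applying identical transforms to the WLI and AFI of a pair keeps the two images co-registered. Function names are hypothetical.

```python
import numpy as np

def dihedral_augment(image):
    """Return the 8 rotations/reflections of an image (H x W or H x W x C).

    Four 90-degree rotations, each with and without a left-right flip,
    give exactly 8 variants, including the original image.
    """
    variants = []
    for k in range(4):
        rotated = np.rot90(image, k)  # rotates the first two axes
        variants.append(rotated)
        variants.append(np.fliplr(rotated))
    return variants

def augment_pair(wli, afi):
    """Apply the same 8 transforms to a WLI/AFI pair so they stay aligned."""
    return list(zip(dihedral_augment(wli), dihedral_augment(afi)))
```

Under this assumption, 170 original pairs become 170 × 8 = 1360 pairs, matching the dataset size reported in Section 4.2.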

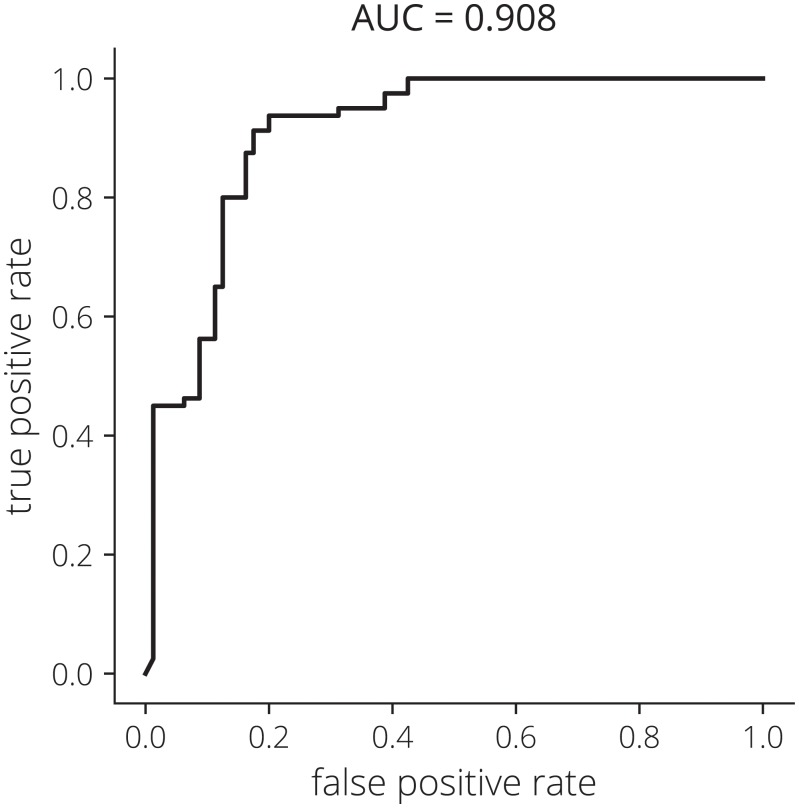

Sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) [74, 75] were calculated to compare the remote specialist diagnosis and the CNN result with the gold-standard on-site specialist diagnosis. Lastly, a receiver operating characteristic (ROC) curve was generated to characterize the performance of the classifier, and the area under the ROC curve (AUC) was calculated to provide a single value for comparison with other devices [76, 77].
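For reference, the four screening metrics and the AUC can be computed from per-image labels and scores as sketched below (1 = suspicious, 0 = normal). The AUC here uses the rank-based (Mann–Whitney) formulation, which equals the area under the empirical ROC curve with ties counted as one half; the function names are hypothetical.

```python
import numpy as np

def screening_metrics(y_true, y_pred):
    """Sensitivity, specificity, PPV, and NPV for binary labels (1 = suspicious)."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = np.sum(y_true & y_pred)    # suspicious, called suspicious
    tn = np.sum(~y_true & ~y_pred)  # normal, called normal
    fp = np.sum(~y_true & y_pred)   # normal, called suspicious
    fn = np.sum(y_true & ~y_pred)   # suspicious, called normal
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }

def roc_auc(y_true, scores):
    """AUC = P(score of a random positive > score of a random negative); ties count 1/2."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)
```

For example, 3 true positives, 1 false negative, 3 true negatives, and 1 false positive give 0.75 for all four metrics; these counts are illustrative, not study data.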

4 Results

4.1 System performance

4.1.1 Imaging

Fig 9 shows the resulting image of a 1951 USAF resolution test chart, demonstrating a resolution limit of 71.8 lp/mm as well as the full field of view of the intraoral probe.

Fig 9.

Image of a 1951 USAF resolution test chart showing the (a) full field of view of the intraoral probe and (b) contrast limit. The zoomed contrast limit image (b) shows group 6–2 is resolvable, a cutoff frequency of 71.8 lp/mm.
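The cutoff frequency follows from the standard 1951 USAF target relation, frequency = 2^(group + (element − 1)/6) lp/mm. The sketch below reproduces the 71.8 lp/mm for group 6, element 2 and converts it to the period of one line pair, about 13.9 μm, consistent with the ~14 μm resolution quoted in the Discussion. Function names are hypothetical.

```python
def usaf_lp_per_mm(group, element):
    """Spatial frequency of a 1951 USAF target element, in line pairs per mm."""
    return 2 ** (group + (element - 1) / 6)

def resolvable_period_um(group, element):
    """Period of one line pair (one bar plus one space), in micrometres."""
    return 1000.0 / usaf_lp_per_mm(group, element)

print(round(usaf_lp_per_mm(6, 2), 1))        # 71.8
print(round(resolvable_period_um(6, 2), 1))  # 13.9
```

Each bar in that element is half the period wide, so the individual bars are roughly 7 μm across.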

The measured MTF, along with the performance of an average system from the Monte Carlo analysis with representative tolerances, is shown in Fig 10. A sensitivity analysis shows that decenter of the outer concave surface of L4, decenter of L3, and decenter of L4 have the greatest effect on performance.

Fig 10. Comparison of the nominal, Monte Carlo, and measured on-axis MTF performance.

The Monte Carlo result is the output of an average system. Measured MTF data are from an Optikos LensCheck instrument and from a slanted edge test. The sagittal and tangential data have been averaged where noted.

Lastly, the color mapping A matrix was calculated to be

| (5) |

4.1.2 Illumination uniformity

Data for the modeled and measured uniformity for the intraoral and whole mouth modules are provided in Table 2.

Table 2. Measured and modeled uniformity for white light and violet light for the intraoral probe and whole mouth module.

The measured uniformity is adjusted by the relative illumination of each optical system.

| Color | Intraoral, modeled | Intraoral, measured | Whole cavity, modeled | Whole cavity, measured |
|---|---|---|---|---|
| White | 0.85 | 0.92 | 0.94 | 0.96 |
| Violet | 0.89 | 0.93 | 0.95 | 0.96 |

4.2 Field testing and CNN classification

Data were collected from 190 patients at the three testing sites; data from 99 of these patients (demographics shown in Table 3) were used for CNN analysis and remote diagnosis.

Table 3. Study participant demographics for the image pairs used in CNN classification.

Values are counts (N) for each category, except for age, which is given in years. The “Both” health behavior represents the combination of smoking and chewing. Health behavior was not collected for all participants.

| Item | Female | Male | Total |
|---|---|---|---|
| N | 46 | 53 | 99 |
| Age, μ [yr] | 37.4 | 42.2 | 40.0 |
| Age, σ [yr] | 15.0 | 13.0 | 14.1 |
| Health behavior | | | |
| None | 8 | 6 | 14 |
| Smoking | 0 | 7 | 7 |
| Chewing | 17 | 25 | 42 |
| Both | 3 | 14 | 17 |
| Alcohol | 3 | 9 | 12 |
| Clinical diagnosis | | | |
| Normal | 24 | 9 | 33 |
| Lichen Planus | 2 | 6 | 8 |
| Homogeneous Leukoplakia | 6 | 10 | 16 |
| Speckled Leukoplakia | 1 | 2 | 3 |
| Tobacco Pouch Keratosis | 12 | 21 | 33 |
| Squamous Cell Carcinoma | 1 | 5 | 6 |

Out of 364 image pairs, 170 WLI and AFI image pairs had sufficient quality for remote diagnosis and use in the CNN, with N = 86 in the normal class and N = 84 in the suspicious class. Data augmentation increased the dataset size 8×, to 1360 image pairs. After training for 80 epochs, the four-fold cross-validation accuracy of the VGG-M network was 86.88%. The ROC curve for the CNN is provided in Fig 11; the AUC is 0.908.
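The text does not state whether augmentation was applied before or after splitting the data into folds. A common safeguard, sketched below as an assumption rather than the authors' procedure, is to assign the 170 original image pairs to stratified folds first and augment only within the training folds, so that rotated or flipped copies of a validation pair never appear in training. Function names and the random seed are hypothetical.

```python
import numpy as np

def stratified_folds(labels, n_folds=4, seed=0):
    """Assign each original image pair to one of n_folds, balancing the classes."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(labels.size, dtype=int)
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        fold_of[idx] = np.arange(idx.size) % n_folds  # deal out round-robin
    return fold_of

def split_indices(fold_of, k):
    """Original-pair indices for training and validation in fold k.

    Augmentation (e.g. 8 rotations/flips per pair) would be applied only to
    the training indices after this split, so no augmented copy of a
    validation pair leaks into training.
    """
    return np.flatnonzero(fold_of != k), np.flatnonzero(fold_of == k)
```

With 86 normal and 84 suspicious pairs, each validation fold then holds 21 or 22 normal pairs and 21 suspicious pairs.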

Fig 11. Receiver operating characteristic (ROC) curve for the CNN.

The area under the curve (AUC) equals 0.908.

Sensitivity, specificity, PPV, and NPV values comparing the remote specialist diagnosis and the CNN result to the gold-standard on-site oral oncology specialist clinical diagnosis are provided in Table 4.

Table 4. Sensitivity, specificity, PPV, and NPV values for the images of sufficient quality for remote diagnosis and CNN evaluation compared to the gold-standard on-site specialist clinical diagnosis.

| Parameter | Remote specialist | CNN |
|---|---|---|
| Sensitivity | 0.9259 | 0.8500 |
| Specificity | 0.8667 | 0.8875 |
| PPV | 0.9494 | 0.8767 |
| NPV | 0.8125 | 0.8549 |

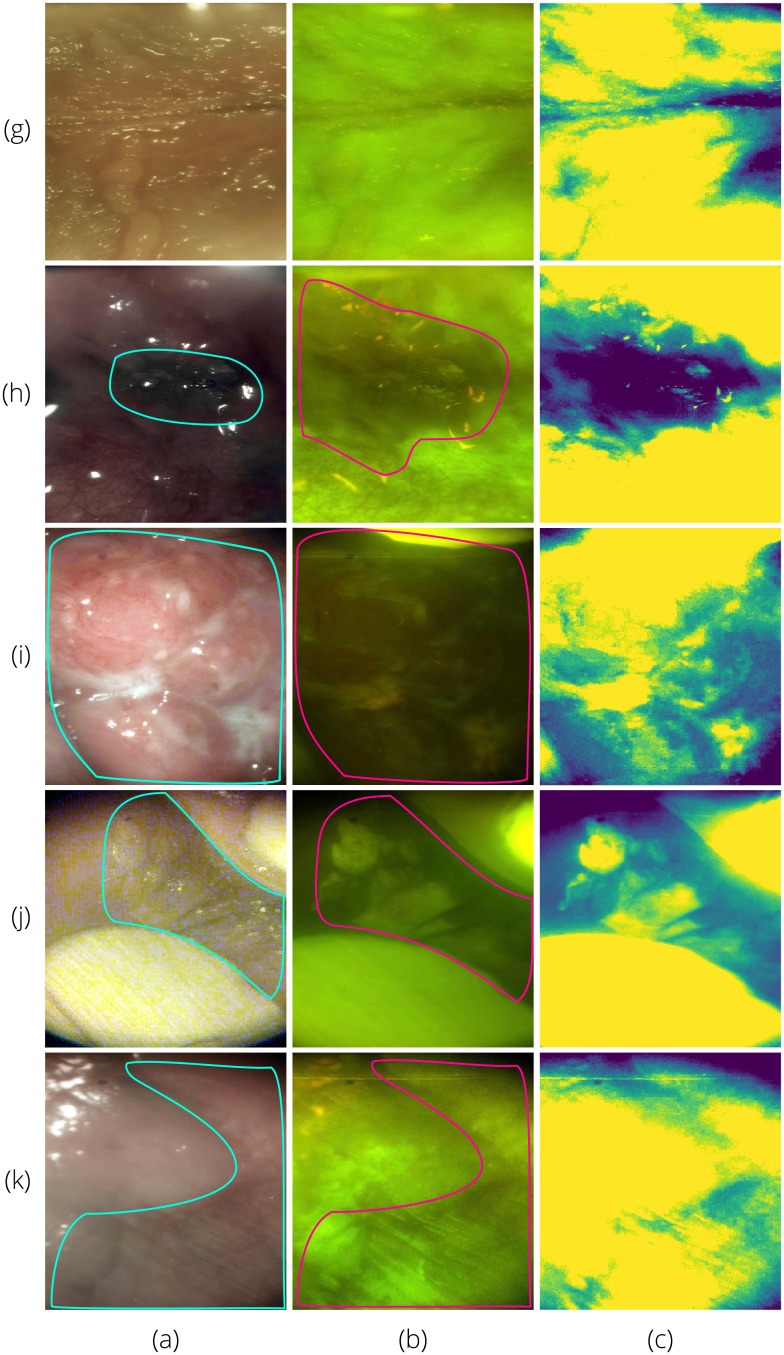

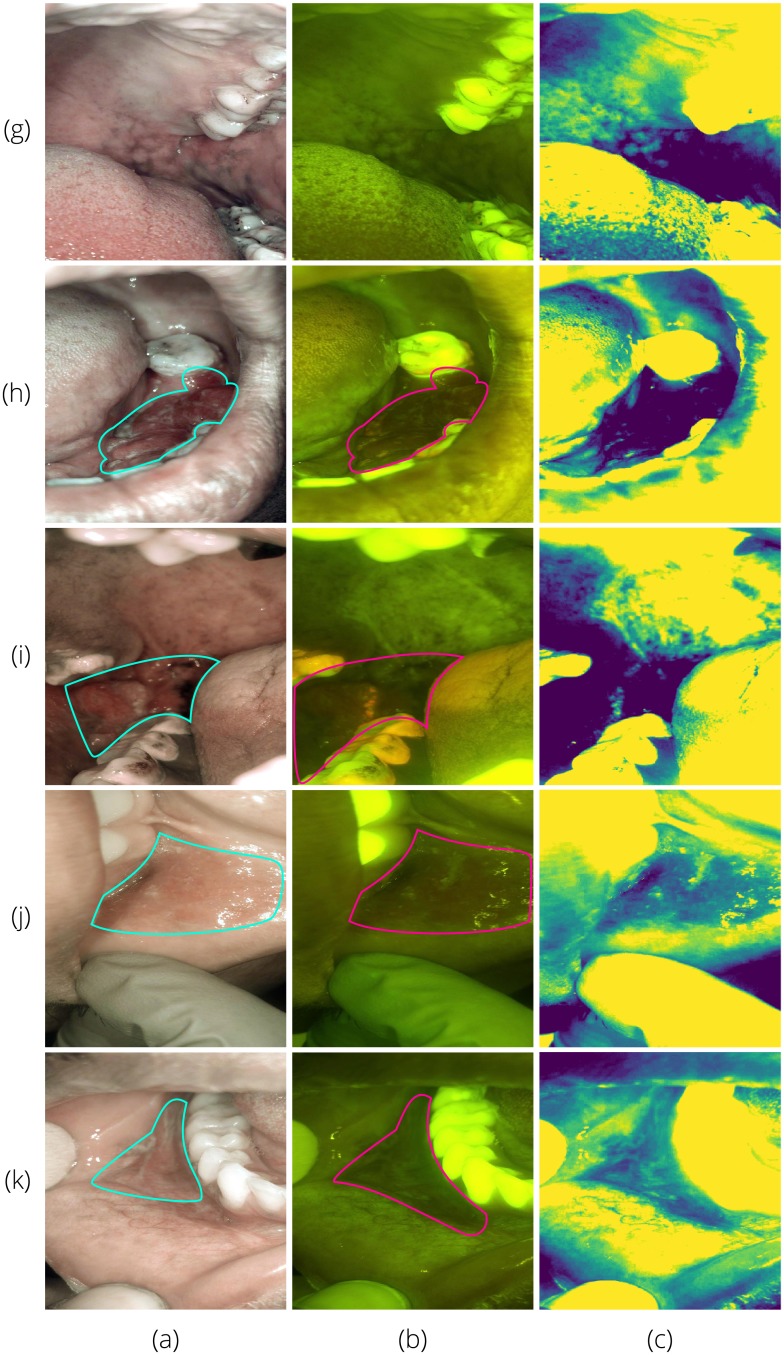

Sample images diagnosed by a remote specialist are shown in Figs 12 and 13. Fig 12 shows AFI and WLI taken with the intraoral probe. With suspect areas outlined, the combination of WLI and AFI provides the most information about the type of lesion and the size of the affected area. Fig 13 provides similar findings for the whole cavity imaging module.

Fig 12. Sample white light (column a) and autofluorescence (column b) intraoral probe field testing images with suspect areas outlined.

All rows were classified by the CNN as suspicious. On-site specialist diagnoses were: (g)—normal/variation; (h)—homogeneous leukoplakia; (i)—carcinoma of the left mandibular alveolus; (j)—tobacco pouch keratosis demonstrating increased fluorescence due to hyperkeratosis; and (k)—tobacco pouch keratosis. Column (c) shows the green intensity map with the mean subtracted as discussed in Section 5.

Fig 13. Sample white light (column a) and autofluorescence (column b) whole cavity module field testing images with suspect areas outlined.

All rows were classified by the CNN as suspicious. On-site specialist diagnoses were: (g)—normal/variation; (h)—carcinoma of the left mandibular alveolus; (i)—oral squamous cell carcinoma; (j)—tobacco pouch keratosis; and (k)—homogeneous leukoplakia. Column (c) shows the green intensity map with the mean subtracted as discussed in Section 5.

5 Discussion

The smartphone platform is a natural progression of previous autofluorescence systems targeting oral lesions [34, 41, 42, 78–83], and our device offers several improvements. Compared with previous smartphone-based systems [83], the two FOVs provide both an overview of oral cavity health and targeted imaging of problem areas. Our intraoral probe extends capability, reaching the base of the tongue and the cheek pockets in some patients, areas of increased cancer risk [4]. Our device can capture, save, review, and transmit both AFI and WLI, acquired both intraorally and externally to the oral cavity. Additionally, our intraoral imaging attachment uses a custom-designed optical system to maximize the number of pixels used on the smartphone image sensor. Operation of the system is simple through an intuitive user interface. Because the device is connected to the cloud and remote diagnosis is possible, the system does not need to be operated by a specialist; the remote specialist is integrated into the clinical environment through the internet. Importantly, the device implements a machine learning algorithm to aid both the community health workers and the remote specialists, because devices that rely on the human visual system (HVS) to make decisions based on small changes in scene or image brightness are suboptimal due to the logarithmic response of the HVS [84, 85].

The measured imaging performance of the device matches the predicted performance for a passively aligned optical system and is sufficient for an oral cancer screening device, resolving features down to 14 μm. As in the Monte Carlo result, the measured mid-spatial-frequency performance is reduced relative to the nominal design. Contributions to the reduced performance include stray light from various mechanical surfaces, chromatic aberration, and passively aligned lenses. The TIDI Products SureClear Window is specifically designed to minimally affect image quality through the sheath, though the barrier can increase aberrations and specular reflection from the white LEDs when saliva is present on it. Image sensor noise and the smartphone's proprietary image processing pipeline can reduce the resolution cutoff of the optical system. Automatic, unmodifiable image processing implemented by the smartphone manufacturer, including edge sharpening, could explain differences between the Optikos and slanted edge measurements. Additionally, single-point diamond turning tool marks cause diffraction-type scatter proportional to the power spectral density (PSD) of the surface, degrading the PSF [86]. Because the nominal distortion of the optical system is low (<0.8%), distortion is not corrected, saving computation time and power.

The measured and modeled illumination uniformity agree well for both modules and both illumination wavelengths. For the whole cavity module, the uniformity error is only 2% for white illumination and 1% for violet illumination. For the intraoral probe, the error is slightly larger, at 7% for white illumination and 4% for violet illumination. The higher measured uniformity relative to the model is likely due to inaccuracies in the modeled scattering properties of the various surfaces, including the system mechanics and the target surface.

Our initial field-testing workflow and results were positive. Through the web app, doctors were able to diagnose cases quickly and efficiently, with the AFI and WLI from two FOVs providing the needed information. Compared with the on-site specialist, the remote specialist correctly identified patients with suspicious lesions with high sensitivity, specificity, and PPV, though the remote specialist’s ability to correctly clear patients without suspicious lesions could be improved. The sensitivity and specificity of previous autofluorescence-only devices vary widely [54], and these devices must be operated by a specialist. The combination of AFI and WLI in our device should set the sensitivity floor at 60%, the value for a conventional visual exam [27].

The CNN sensitivity, specificity, PPV, NPV, and AUC results are promising given the small size of the dataset; however, future research will need to include benign cases in the training and classification processes. Our AUC value is similar to the high end of results obtained with similar systems in discriminating healthy tissue from lesions [30, 38, 45, 50, 87]; however, results have been mixed, and the addition of benign lesions decreased the AUC significantly [38].

Additionally, a study including biopsy with a histopathology gold standard is needed to fully validate the CNN result. Importantly for our small dataset, data augmentation increased the number of image pairs by 8×, and since the images have no natural orientation, flipped and rotated images are still valid. As improvements to the device are made and health providers gain additional time and training with the device, the dataset size and the percentage of quality images will increase, leading to improvements in CNN training. We hope that augmenting the WLI with AFI and the CNN classification algorithm leads to true diagnostic performance in line with our reported CNN result.

The main challenges to using AFI and WLI for cancerous and pre-cancerous lesion detection include increased autofluorescence signal caused by hyperkeratinization of pre-malignant lesions [88] and the difficulty of differentiating pre-cancerous lesions from areas of inflammation or irritation, which can confound either a human or a computer diagnosis [42], though combining WLI and AFI with longitudinal data discriminates dysplasia from short-term inflammation. The main challenges to large-scale implementation of this device will be addressing the needs of regions without cellular data or internet access, and the additional time burden on the remote specialists for diagnosing cases and monitoring lesion progress. However, the overall time burden should decrease, as other community members will be able to collect the necessary data.

Improvements for the next-generation device could include adding a simple mean subtraction from the green channel of the original AFI,

$$I_{\mathrm{ms}}(x,y) = I_{G}(x,y) - \bar{I}_{G}, \qquad (6)$$

where $I_{G}$ is the green channel of the original AFI and $\bar{I}_{G}$ is its mean over the image, to the AFI image already presented. This would provide the diagnosing specialist with an additional map of areas of decreased fluorescence signal, as shown in Figs 12 and 13. The on-phone red/green ratio image analysis could also be added to the information shown to the remote specialist (and to the on-site specialist, if present during data collection) [30]. Additionally, including the whole-cavity images in CNN training and classification would increase the amount of data available; however, these images contain many additional noise features, such as the perioral epidermis and teeth.
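
A minimal NumPy sketch of the two proposed overlays follows. It assumes the AFI is an RGB array of shape height × width × 3 with channels ordered red, green, blue, takes the mean of the green channel over the whole image, and flags pixels below that mean as decreased fluorescence. The function names, the zero threshold, and the small constant guarding against division by zero are illustrative choices, not the device's on-phone implementation, and the red/green ratio follows the general idea of [30] rather than its exact processing.

```python
import numpy as np


def green_mean_subtraction(afi_rgb: np.ndarray) -> np.ndarray:
    """Green channel minus its image-wide mean; negative values mark below-average fluorescence."""
    green = afi_rgb[..., 1].astype(np.float64)  # assumes R, G, B channel order
    return green - green.mean()


def decreased_fluorescence_mask(afi_rgb: np.ndarray, threshold: float = 0.0) -> np.ndarray:
    """Boolean map of pixels whose mean-subtracted green value falls below a (hypothetical) threshold."""
    return green_mean_subtraction(afi_rgb) < threshold


def red_green_ratio(afi_rgb: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Per-pixel red/green ratio; eps avoids division by zero in dark pixels."""
    rgb = afi_rgb.astype(np.float64)
    return rgb[..., 0] / (rgb[..., 1] + eps)


if __name__ == "__main__":
    # Tiny hypothetical 2x2 AFI patch (8-bit values); blue is left at zero.
    afi = np.zeros((2, 2, 3), dtype=np.uint8)
    afi[..., 0] = [[20, 20], [30, 80]]
    afi[..., 1] = [[10, 20], [30, 40]]
    print(green_mean_subtraction(afi))       # green mean is 25, so values are -15, -5, 5, 15
    print(decreased_fluorescence_mask(afi))  # top row flagged, bottom row not
    print(red_green_ratio(afi))              # approximately 2, 1, 1, 2
```

Converting to floating point before subtracting matters: subtracting in the original unsigned 8-bit type would wrap negative values around instead of preserving the "below average" sign.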

A smaller profile for the intraoral probe would improve access to sites deep in the oral cavity, such as the cheek pockets and base of the tongue, particularly in patients with advanced oral submucous fibrosis. A wider field of view and longer depth of field for the intraoral probe would improve anatomical-site recognition and image quality, helping to orient the remote specialist during diagnosis and better integrating the remote specialist into the clinical environment. Crossed polarizers for the white-light LEDs would reduce image noise caused by specular reflection. Lastly, as usage hours increase, user feedback on the app will be used to further streamline the user experience, making data collection easier for all types of users.

Though the targeted communities lack healthcare infrastructure, many have ample cellular data coverage, and as the cost of smartphones continues to decrease, smartphone ownership in LMIC increases (the compound annual growth rate (CAGR) of mobile subscriptions in LMIC since 2008 is 20% [89], and the CAGR of smartphone ownership from 2013–2015 is >30% [90]). Smartphone-based devices allow for a hub-and-spoke model, in which the hub houses the specialists and trained healthcare workers implementing the screening program, and the smartphones extend spokes out to the remote communities. A low system cost enables this model: the high-volume cost estimate for our system, an inexpensive medical imaging device, is ~$100 plus the cost of the smartphone, which is not included because most users will be able to use their own.
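
The growth figures above are compound annual growth rates. As a worked illustration, with hypothetical numbers that are not taken from [89] or [90], CAGR over n years is (end value / start value)^(1/n) - 1, so a quantity growing at 20% per year for 8 years increases by a factor of 1.2^8 ≈ 4.3, and 30% per year over two years gives a factor of 1.3^2 = 1.69. The helper names below are illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


if __name__ == "__main__":
    # Hypothetical figures chosen for illustration only.
    print(round(cagr(100.0, 144.0, 2), 4))  # 0.2
    print(round(project(1.0, 0.20, 8), 2))  # 4.3
    print(round(project(1.0, 0.30, 2), 2))  # 1.69
```

Because CAGR compounds, it is not the same as the average of the yearly percentage changes; two functions are kept separate so that a rate can be recovered from endpoints and then projected forward.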

6 Conclusion

This paper describes the design and implementation of a low-cost, point-of-care, smartphone-based, dual-modality imaging system for oral cancer screening in LMIC. The device enables clinicians and community members to capture AFI and WLI and upload the images to the cloud for both remote specialist diagnosis and CNN classification. We have tested the device and diagnosis workflow in three locations in India; initial feedback on the system is positive, and both the remote specialist and the CNN achieved high sensitivity, specificity, PPV, and NPV compared with the on-site specialist gold standard.

Inexpensive, high-power LED sources in white and violet wavelengths, plastic lens molding technology, and low-cost but powerful smartphones are promising developments for the creation of low-cost, portable, simple-to-use autofluorescence imaging devices for oral cancer detection. Performance should increase as additional images are collected and as the device hardware and usability improve. Enabling oral cancer detection in low-resource communities will lead to earlier detection and diagnosis, minimizing disease progression and ultimately reducing oral cancer death rates and healthcare costs.

7 Supplemental material

The design files for the LED driver have been released on GitHub under the GPL-3.0 license [91, 92], and the corresponding data repository is available at [93].

Acknowledgments

Thank you to Pier Morgan and the Center for Gamma-ray Imaging (CGRI) for use and operation of the rapid prototype printer, and to Ken Almonte for guidance on the design of the LED driver. Thank you to TIDI Products for manufacturing custom shields for our device.

Data Availability

All relevant data are deposited at doi:10.5281/zenodo.1477483. The design files for the LED driver are deposited on GitHub at doi:10.5281/zenodo.1214892; doi:10.5281/zenodo.1214890.

Funding Statement

RL received research funding from the National Institutes of Health, National Institute of Biomedical Imaging and Bioengineering award UH2EB022623 (https://www.nibib.nih.gov/). RDU received research funding from the National Institutes of Health, National Institute of Biomedical Imaging and Bioengineering, Graduate Training in Biomedical Imaging and Spectroscopy grant T32EB000809 (https://www.nibib.nih.gov/training-careers/postdoctoral/ruth-l-kirschstein-national-research-service-award-nrsa-institutional-research/supported-programs). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Petersen PE. Strengthening the Prevention of Oral Cancer: The WHO Perspective. Community Dent Oral Epidemiol. 2005;33(6):397–399. 10.1111/j.1600-0528.2005.00251.x

- 2. World Health Organization. Age-Standardized Death Rates per 100,000 by Cause. World Health Organization; 2006.

- 3. International Agency for Research on Cancer, World Health Organization. GLOBOCAN 2012: Estimated Cancer Incidence, Mortality and Prevalence Worldwide in 2012; 2017. Available from: http://globocan.iarc.fr.

- 4. Agarwal AK, Sethi A, Sareen D, Dhingra S. Treatment Delay in Oral and Oropharyngeal Cancer in Our Population: The Role of Socio-Economic Factors and Health-Seeking Behaviour. Indian J Otolaryngol Head Neck Surg. 2011;63(2):145–150. 10.1007/s12070-011-0134-9

- 5. World Health Organization. Cancer Country Profiles 2014; 2018. Available from: http://www.who.int/cancer/country-profiles/en/#P.

- 6. Ferlay J, Soerjomataram I, Dikshit R, Eser S, Mathers C, Rebelo M, et al. Cancer Incidence and Mortality Worldwide: Sources, Methods and Major Patterns in GLOBOCAN 2012: Globocan 2012. Int J Cancer. 2015;136(5):E359–E386. 10.1002/ijc.29210

- 7. Sankaranarayanan R, Swaminathan R, Brenner H, Chen K, Chia KS, Chen JG, et al. Cancer Survival in Africa, Asia, and Central America: A Population-Based Study. Lancet Oncol. 2010;11(2):165–173. 10.1016/S1470-2045(09)70335-3

- 8. Pindborg JJ, Mehta FS, Gupta PC, Daftary DK, Smith CJ. Reverse Smoking in Andhra Pradesh, India: A Study of Palatal Lesions among 10,169 Villagers. Br J Cancer. 1971;25(1):10. 10.1038/bjc.1971.2

- 9. Van der Eb MM, Leyten EMS, Gavarasana S, Vandenbroucke JP, Meera Kahn P, Cleton FJ. Reverse Smoking as a Risk Factor for Palatal Cancer: A Cross-Sectional Study in Rural Andhra Pradesh, India. Int J Cancer. 1993;54(5):754–758. 10.1002/ijc.2910540508

- 10. Reidy J, McHugh E, Stassen LFA. A Review of the Relationship between Alcohol and Oral Cancer. The Surgeon. 2011;9(5):278–283. 10.1016/j.surge.2011.01.010

- 11. Amarasinghe HK, Usgodaarachchi US, Johnson NW, Lalloo R, Warnakulasuriya S. Betel-Quid Chewing with or without Tobacco Is a Major Risk Factor for Oral Potentially Malignant Disorders in Sri Lanka: A Case-Control Study. Oral Oncol. 2010;46(4):297–301. 10.1016/j.oraloncology.2010.01.017

- 12. Song H, Wan Y, Xu YY. Betel Quid Chewing Without Tobacco: A Meta-Analysis of Carcinogenic and Precarcinogenic Effects. Asia Pac J Public Health. 2015;27(2):NP47–NP57. 10.1177/1010539513486921

- 13. Ko YC, Huang YL, Lee CH, Chen MJ, Lin LM, Tsai CC. Betel Quid Chewing, Cigarette Smoking and Alcohol Consumption Related to Oral Cancer in Taiwan. J Oral Pathol Med. 1995;24(10):450–453. 10.1111/j.1600-0714.1995.tb01132.x

- 14. Sharma DC. Indian Betel Quid More Carcinogenic than Anticipated. Lancet Oncol. 2001;2(8):464. 10.1016/S1470-2045(01)00444-2

- 15. Jeng JH, Chang MC, Hahn LJ. Role of Areca Nut in Betel Quid-Associated Chemical Carcinogenesis: Current Awareness and Future Perspectives. Oral Oncol. 2001;37:477–492. 10.1016/S1368-8375(01)00003-3

- 16. Gupta PC, Warnakulasuriya S. Global Epidemiology of Areca Nut Usage. Addict Biol. 2002;7(1):77–83. 10.1080/13556210020091437

- 17. Jacob BJ, Straif K, Thomas G, Ramadas K, Mathew B, Zhang ZF, et al. Betel Quid without Tobacco as a Risk Factor for Oral Precancers. Oral Oncol. 2004;40(7):697–704. 10.1016/j.oraloncology.2004.01.005

- 18. Chen YJ, Chang JTC, Liao CT, Wang HM, Yen TC, Chiu CC, et al. Head and Neck Cancer in the Betel Quid Chewing Area: Recent Advances in Molecular Carcinogenesis. Cancer Sci. 2008;99(8):1507–1514. 10.1111/j.1349-7006.2008.00863.x

- 19. Ponnam SR, Chandrasekhar T, Ramani P, Anuja. Autofluorescence Spectroscopy of Betel Quid Chewers and Oral Submucous Fibrosis: A Pilot Study. J Oral Maxillofac Pathol. 2012;16(1):4–9. 10.4103/0973-029X.92965

- 20. Bicknell LM, Ladizinski B, Tintle SJ, Ramirez-Fort MK. Lips as Red as Blood: Areca Nut Lip Staining. JAMA Dermatol. 2013;149(11):1288. 10.1001/jamadermatol.2013.7965

- 21. Prabhu R, Prabhu V, Chatra L, Shenai P, Suvarna N, Dandekeri S. Areca Nut and Its Role in Oral Submucous Fibrosis. J Clin Exp Dent. 2014;6(5):e569–e575. 10.4317/jced.51318

- 22. IARC Working Group on the Evaluation of Carcinogenic Risks to Humans, World Health Organization, International Agency for Research on Cancer. Betel-Quid and Areca-Nut Chewing and Some Areca-Nut-Derived Nitrosamines. vol. 85. IARC; 2004.

- 23. Ram AB. The Use of Betelnut as a Cause of Cancer in Malabar. Indian Med Gaz. 1902;37(10):414.

- 24. Boucher BJ, Mannan N. Metabolic Effects of the Consumption of Areca Catechu. Addict Biol. 2002;7(1):103–110. 10.1080/13556210120091464

- 25. Garg A, Chaturvedi P. A Review of the Systemic Adverse Effects of Areca Nut or Betel Nut. Indian J Med Paediatr Oncol. 2014;35(1):3. 10.4103/0971-5851.133702

- 26. Strickland SS. Anthropological Perspectives on Use of the Areca Nut. Addict Biol. 2002;7(1):85–97. 10.1080/13556210120091446

- 27. Sankaranarayanan R, Ramadas K, Thomas G, Muwonge R, Thara S, Mathew B, et al. Effect of Screening on Oral Cancer Mortality in Kerala, India: A Cluster-Randomised Controlled Trial. The Lancet. 2005;365(9475):1927–1933. 10.1016/S0140-6736(05)66658-5

- 28. Heintzelman DL, Utzinger U, Fuchs H, Zuluaga A, Gossage K, Gillenwater AM, et al. Optimal Excitation Wavelengths for In Vivo Detection of Oral Neoplasia Using Fluorescence Spectroscopy. Photochem Photobiol. 2000;72(1):103–113. 10.1562/0031-8655(2000)072<0103:OEWFIV>2.0.CO;2

- 29. Svistun E, Alizadeh-Naderi R, El-Naggar A, Jacob R, Gillenwater A, Richards-Kortum R. Vision Enhancement System for Detection of Oral Cavity Neoplasia Based on Autofluorescence. Head Neck. 2004;26(3):205–215. 10.1002/hed.10381

- 30. Roblyer D, Kurachi C, Stepanek V, Williams MD, El-Naggar AK, Lee JJ, et al. Objective Detection and Delineation of Oral Neoplasia Using Autofluorescence Imaging. Cancer Prev Res (Phila Pa). 2009;2(5):423–431. 10.1158/1940-6207.CAPR-08-0229

- 31. Ingrams DR, Dhingra JK, Roy K, Perrault DF, Bottrill ID, Kabani S, et al. Autofluorescence Characteristics of Oral Mucosa. Head Neck. 1997;19(1):27–32. 10.1002/(SICI)1097-0347(199701)19:1<27::AID-HED5>3.0.CO;2-X

- 32. Dhingra JK, Perrault DF Jr, McMillan K, Rebeiz EE, Kabani S, Manoharan R, et al. Diagnosis of Upper Aerodigestive Tract Cancer by Autofluorescence. Arch Otolaryngol Head Neck Surg. 1996;122:1181–1186. 10.1001/archotol.1996.01890230029007

- 33. Schwarz RA, Gao W, Daye D, Williams MD, Richards-Kortum R, Gillenwater AM. Autofluorescence and Diffuse Reflectance Spectroscopy of Oral Epithelial Tissue Using a Depth-Sensitive Fiber-Optic Probe. Appl Opt. 2008;47(6):825–834. 10.1364/AO.47.000825

- 34. Lane PM, Follen M, MacAulay C. Has Fluorescence Spectroscopy Come of Age? A Case Series of Oral Precancers and Cancers Using White Light, Fluorescent Light at 405 nm, and Reflected Light at 545 nm Using the Trimira Identafi 3000. Gend Med. 2012;9(1S):S25–35. 10.1016/j.genm.2011.09.031

- 35. Lane PM, Lam S, Follen M, Macaulay C. Oral Fluorescence Imaging Using 405-nm Excitation, Aiding the Discrimination of Cancers and Precancers by Identifying Changes in Collagen and Elastic Breakdown and Neovascularization in the Underlying Stroma. Gend Med. 2012;9(1S):S78–S82.e8. 10.1016/j.genm.2011.11.006

- 36. de Veld DCG, Skurichina M, Witjes MJH, Duin RPW, Sterenborg DJCM, Star WM, et al. Autofluorescence Characteristics of Healthy Oral Mucosa at Different Anatomical Sites. Lasers Surg Med. 2003;32(5):367–376. 10.1002/lsm.10185

- 37. de Veld DCG, Witjes MJH, Sterenborg HJCM, Roodenburg JLN. The Status of in Vivo Autofluorescence Spectroscopy and Imaging for Oral Oncology. Oral Oncol. 2005;41(2):117–131. 10.1016/j.oraloncology.2004.07.007

- 38. de Veld DCG, Skurichina M, Witjes MJH, Duin RPW, Sterenborg HJCM, Roodenburg JLN. Autofluorescence and Diffuse Reflectance Spectroscopy for Oral Oncology. Lasers Surg Med. 2005;36(5):356–364. 10.1002/lsm.20122

- 39. Zuluaga A, Utzinger U, Durkin A, Fuchs H, Gillenwater A, Jacob R, et al. Fluorescence Excitation Emission Matrices of Human Tissue: A System for in Vivo Measurement and Method of Data Analysis. Appl Spectrosc. 1999;53(3):302–311. 10.1366/0003702991946695

- 40. Mallia RJ, Thomas SS, Mathews A, Kumar RR, Sebastian P, Madhavan J, et al. Laser-Induced Autofluorescence Spectral Ratio Reference Standard for Early Discrimination of Oral Cancer. Cancer. 2008;112(7):1503–1512. 10.1002/cncr.23324

- 41. Poh CF, Ng SP, Williams PM, Zhang L, Laronde DM, Lane P, et al. Direct Fluorescence Visualization of Clinically Occult High-Risk Oral Premalignant Disease Using a Simple Hand-Held Device. Head Neck. 2007;29(1):71–76. 10.1002/hed.20468

- 42. Lane PM, Gilhuly T, Whitehead P, Zeng H, Poh CF, Ng S, et al. Simple Device for the Direct Visualization of Oral-Cavity Tissue Fluorescence. J Biomed Opt. 2006;11(2):024006. 10.1117/1.2193157

- 43. Wagnieres GA, Star WM, Wilson BC. In Vivo Fluorescence Spectroscopy and Imaging for Oncological Applications. Photochem Photobiol. 1998;68(5):603–632. 10.1111/j.1751-1097.1998.tb02521.x

- 44. Richards-Kortum R, Sevick-Muraca E. Quantitative Optical Spectroscopy for Tissue Diagnosis. Annu Rev Phys Chem. 1996;47(1):555–606. 10.1146/annurev.physchem.47.1.555

- 45. Farah CS, McIntosh L, Georgiou A, McCullough MJ. Efficacy of Tissue Autofluorescence Imaging (Velscope) in the Visualization of Oral Mucosal Lesions. Head Neck. 2012;34(6):856–862. 10.1002/hed.21834

- 46. Prahl SA. Tabulated Molar Extinction Coefficient for Hemoglobin in Water; 1998. Available from: https://omlc.org/spectra/hemoglobin/summary.html.

- 47. Batlle AdC. Porphyrins, Porphyrias, Cancer and Photodynamic Therapy—a Model for Carcinogenesis. J Photochem Photobiol B. 1993;20(1):5–22. 10.1016/1011-1344(93)80127-U

- 48. Vicente MDGH. Porphyrins and the Photodynamic Therapy of Cancer. Rev Port Quimica. 1996;3:46–57.

- 49. Messadi DV, Younai FS, Liu HH, Guo G, Wang CY. The Clinical Effectiveness of Reflectance Optical Spectroscopy for the in Vivo Diagnosis of Oral Lesions. Int J Oral Sci. 2014;6(3):162–167. 10.1038/ijos.2014.39

- 50. Awan KH, Morgan PR, Warnakulasuriya S. Evaluation of an Autofluorescence Based Imaging System (VELscope™) in the Detection of Oral Potentially Malignant Disorders and Benign Keratoses. Oral Oncology. 2011;47(4):274–277. 10.1016/j.oraloncology.2011.02.001

- 51. Scheer M, Neugebauer J, Derman A, Fuss J, Drebber U, Zoeller JE. Autofluorescence Imaging of Potentially Malignant Mucosa Lesions. Oral Surgery, Oral Medicine, Oral Pathology, Oral Radiology and Endodontics. 2011;111(5):568–577. 10.1016/j.tripleo.2010.12.010

- 52. Sawan D, Mashlah A. Evaluation of Premalignant and Malignant Lesions by Fluorescent Light (VELscope). J Int Soc Prev Community Dent. 2015;5(3):248–254. 10.4103/2231-0762.159967

- 53. Paderni C, Compilato D, Carinci F, Nardi G, Rodolico V, Lo Muzio L, et al. Direct Visualization of Oral-Cavity Tissue Fluorescence as Novel Aid for Early Oral Cancer Diagnosis and Potentially Malignant Disorders Monitoring. Int J Immunopathol Pharmacol. 2011;24(2_suppl):121–128. 10.1177/03946320110240S221

- 54. Rashid A, Warnakulasuriya S. The Use of Light-Based (Optical) Detection Systems as Adjuncts in the Detection of Oral Cancer and Oral Potentially Malignant Disorders: A Systematic Review. J Oral Pathol Med. 2015;44(5):307–328. 10.1111/jop.12218

- 55. Mehrotra R, Singh M, Thomas S, Nair P, Pandya S, Nigam NS, et al. A Cross-Sectional Study Evaluating Chemiluminescence and Autofluorescence in the Detection of Clinically Innocuous Precancerous and Cancerous Oral Lesions. The Journal of the American Dental Association. 2010;141(2):151–156. 10.14219/jada.archive.2010.0132

- 56. Shen D, Wu G, Suk HI. Deep Learning in Medical Image Analysis. Annu Rev Biomed Eng. 2017;19(1):221–248. 10.1146/annurev-bioeng-071516-044442

- 57. Uthoff RD, Song B, Birur P, Kuriakose MA, Sunny S, Suresh A, et al. Development of a Dual-Modality, Dual-View Smartphone-Based Imaging System for Oral Cancer Detection. In: Proc. SPIE 10486, Design and Quality for Biomedical Technologies XI. vol. 10486. San Francisco, California: SPIE; 2018. p. 104860V. 10.1117/12.2296435

- 58. Kingslake R, Johnson RB. Lens Design Fundamentals. Academic Press; 2009.

- 59. Pierce MC, Schwarz RA, Bhattar VS, Mondrik S, Williams MD, Lee JJ, et al. Accuracy of in Vivo Multimodal Optical Imaging for Detection of Oral Neoplasia. Cancer Prev Res (Phila Pa). 2012;5(6):801–809. 10.1158/1940-6207.CAPR-11-0555

- 60. Google Inc. Camera2 API: Reference; 2018. Available from: https://developer.android.com/reference/android/hardware/camera2/package-summary.

- 61. Google Inc. Distribution Dashboard; 2018. Available from: https://developer.android.com/about/dashboards/.

- 62. Burns PD. Slanted-Edge MTF for Digital Camera and Scanner Analysis. In: Proc. IS&T; 2000. p. 135–138.

- 63. Burns PD. Sfrmat3: SFR Analysis for Digital Cameras and Scanners; 2009. LosBurns Imaging Software. Available from: http://www.losburns.com/imaging/software/SFRedge/index.htm.

- 64. Schanda J, International Commission on Illumination, editors. Colorimetry: Understanding the CIE System. Vienna, Austria: CIE/Commission internationale de l’éclairage; Hoboken, NJ: Wiley-Interscience; 2007.

- 65. Qin Z, Wang K, Chen F, Luo X, Liu S. Analysis of Condition for Uniform Lighting Generated by Array of Light Emitting Diodes with Large View Angle. Opt Express. 2010;18(16):17460. 10.1364/OE.18.017460

- 66. Wood NK, Goaz PW, Alling CC. Differential Diagnosis of Oral Lesions. St. Louis: Mosby; 1991.

- 67. Lynch MA, Brightman VJ, Greenberg MS. Burket’s Oral Medicine: Diagnosis and Treatment. Lippincott Williams & Wilkins; 1994.

- 68. Ongole R, B N P, editors. Text Book of Oral Medicine, Oral Diagnosis and Oral Radiology. 2nd ed. Elsevier India; 2010.

- 69. Pan SJ, Yang Q. A Survey on Transfer Learning. IEEE Trans Knowl Data Eng. 2010;22(10):1345–1359. 10.1109/TKDE.2009.191

- 70. Chatfield K, Simonyan K, Vedaldi A, Zisserman A. Return of the Devil in the Details: Delving Deep into Convolutional Nets. arXiv preprint arXiv:1405.3531. 2014.

- 71. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-Level Classification of Skin Cancer with Deep Neural Networks. Nature. 2017;542(7639):115–118. 10.1038/nature21056

- 72. Aubreville M, Knipfer C, Oetter N, Jaremenko C, Rodner E, Denzler J, et al. Automatic Classification of Cancerous Tissue in Laserendomicroscopy Images of the Oral Cavity Using Deep Learning. Sci Rep. 2017;7(1):11979. 10.1038/s41598-017-12320-8

- 73. Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L. ImageNet: A Large-Scale Hierarchical Image Database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2009). IEEE; 2009. p. 248–255.

- 74. Altman DG, Bland JM. Diagnostic Tests 1: Sensitivity and Specificity. BMJ. 1994;308(6943):1552. 10.1136/bmj.308.6943.1552

- 75. Altman DG, Bland JM. Diagnostic Tests 2: Predictive Values. BMJ. 1994;309(6947):102. 10.1136/bmj.309.6947.102

- 76. Swets J. Measuring the Accuracy of Diagnostic Systems. Science. 1988;240(4857):1285–1293. 10.1126/science.3287615

- 77. Zweig MH, Campbell G. Receiver-Operating Characteristic (ROC) Plots: A Fundamental Evaluation Tool in Clinical Medicine. Clin Chem. 1993;39(4):561–577.

- 78. Carestream Health. Carestream Dental CS 1600 Intraoral Camera; 2014. Available from: http://carestreamdental.com/us/en/intraoralcamera/1600#Features%0020and%0020Benefits.

- 79. LED Apteryx, Inc. VELscope® Vx System; 2015. Available from: http://www.velscope.com/velscope-products/velscope/.

- 80. Rahman M, Chaturvedi P, Gillenwater AM, Richards-Kortum R. Low-Cost, Multimodal, Portable Screening System for Early Detection of Oral Cancer. J Biomed Opt. 2008;13(3):030502. 10.1117/1.2907455

- 81. Roblyer D, Richards-Kortum R, Sokolov K, El-Naggar AK, Williams MD, Kurachi C, et al. Multispectral Optical Imaging Device for in Vivo Detection of Oral Neoplasia. J Biomed Opt. 2008;13(2):024019. 10.1117/1.2904658

- 82. DentalEZ. How It Works; 2015. Available from: http://www.identafi.net/identafi-technology/how-it-works.

- 83. Prakash M, Prakash Lab. OScan: Screening Tool for Oral Lesions; 2012. Available from: https://web.stanford.edu/~manup/Oscan/.

- 84. Fechner GT. Elements of Psychophysics. Howes DH, Boring EG, editors. New York: Holt, Rinehart and Winston; 1966.

- 85. Awais M, Walter N, Faye I, Saad MN, Ramanathan A, Zain RM. Analysis of Auto-Fluorescence Images for Automatic Detection of Abnormalities in Oral Cavity. In: 2015 7th International Conference on Information Technology and Electrical Engineering (ICITEE); 2015. p. 209–214.

- 86. Harvey JE. Integrating Optical Fabrication and Metrology into the Optical Design Process. Appl Opt. 2015;54(9):2224. 10.1364/AO.54.002224

- 87. Rahman MS, Ingole N, Roblyer D, Stepanek V, Richards-Kortum R, Gillenwater A, et al. Evaluation of a Low-Cost, Portable Imaging System for Early Detection of Oral Cancer. Head Neck Oncol. 2010;2(10):8.

- 88. Wu Y, Qu JY. Autofluorescence Spectroscopy of Epithelial Tissues. J Biomed Opt. 2006;11(5):054023.

- 89. The World Bank. Mobile Cellular Subscriptions (per 100 People); 2017. Available from: https://data.worldbank.org/indicator/IT.CEL.SETS?end=2016&start=2000&view=chart.

- 90. Poushter J. Smartphone Ownership and Internet Usage Continues to Climb in Emerging Economies; 2016. Available from: http://www.pewglobal.org/2016/02/22/smartphone-ownership-and-internet-usage-continues-to-climb-in-emerging-economies/.

- 91. Uthoff RD. rossuthoff/Intraoral_led_driver: KiCAD Design Files. GitHub; 2018.

- 92. Uthoff RD. rossuthoff/Kicad_footprints: Custom Footprints for PCB Designs. GitHub; 2018.

- 93. Uthoff RD. rossuthoff/rossuthoff/10.1371-journal.pone.0207493: Data repository for manuscript 10.1371/journal.pone.0207493. GitHub; 2018.
