Abstract

Purpose of Study

To develop a structured observational tool, the Resident-centered Assessment of Interactions with Staff and Engagement tool (RAISE), to measure 2 critical, multi-faceted, organizational-level aspects of person-centered care (PCC) in nursing homes: (a) resident engagement and (b) the quality and frequency of staff-resident interactions.

Design and Methods

In this multi-method psychometric development study, we conducted (a) 120 hr of ethnographic observations in one nursing home and (b) a targeted literature review to enable construct development. Two constructs for which no current structured observation measures existed emerged from this phase: nursing home resident-staff engagement and interaction. We developed the preliminary RAISE to measure these constructs and used the tool in 8 nursing homes, with an average of 16 observation sessions per visit. We conducted 8 iterative psychometric testing and refinement cycles with multi-disciplinary research team members. Each cycle consisted of observations using the draft tool, results review, and tool modification.

Results

The final RAISE included a set of coding rules and procedures enabling simultaneously efficient, non-reactive, and representative quantitative measurement of the interaction and engagement components of nursing home life for staff and residents. It comprised 8 observational variables, each represented by extensive numeric codes. Raters achieved adequate to high reliability with all variables. There is preliminary evidence of face and construct validity via expert panel review.

Implications

The RAISE represents a valuable step forward in the measurement of PCC, providing objective, reliable data based on systematic observation.

Keywords: Person-centered care, Measurement, Nursing homes, Psychosocial

The 1987 Federal Nursing Home Reform Act emphasized the dual importance of quality of life (QOL) and physical health (Hawes et al., 1997), and the Institute of Medicine’s landmark publication on quality in healthcare underscored the importance of providing care consistent with patient goals, that is, providing person-centered care (PCC) (Institute of Medicine, 2001). In nursing homes, PCC should promote choice, purpose, and meaning in residents’ daily lives and ensure that residents have support to achieve their highest practicable levels of physical, mental, and psychosocial well-being (Koren, 2010; Molony, 2011). The evidence base for numerous individual components of PCC in nursing homes is growing. Positive management practices, for example, are associated with better resident outcomes (Anderson, Issel, & McDaniel, 2003). Stimulating environments are associated with resident well-being (Beerens et al., 2016; Cox, Burns, & Savage, 2004; Molony, 2011; Schreiner, Yamamoto, & Shiotani, 2005; Zeisel et al., 2003). Staff empowerment has been suggested to improve PCC (Bowers et al., 2016b; Caspar & O’Rourke, 2008; Caspar, O’Rourke, & Gutman, 2009; Scalzi et al., 2006). Consistent assignment (i.e., the same staff members caring for the same residents a majority or all of the time) has been associated with fewer deficiency citations (including in quality of care and QOL) (Castle, 2011). A recent examination of the trademarked Green House nursing homes associated PCC with lower Medicare expenditures, reduced hospitalizations, and increased staff satisfaction (Bowers et al., 2016b; Cohen et al., 2016; Grabowski et al., 2016). PCC in other settings may also result in lower nursing home staff turnover and higher staff satisfaction, although findings are mixed (Brownie & Nancarrow, 2013; Craft Morgan et al., 2007; Deutschman, 2001; Miller, Mor, & Burgess, 2016; Stone et al., 2002; Tellis-Nayak, 2007).

Multifaceted PCC adoption may include simultaneous adoption of environmental enhancements; leadership and management changes; staff empowerment; consistent staff assignment; individualized care plans; and/or engagement of residents in meaningful activities, social interactions, and relationships (Brownie & Nancarrow, 2013; Sterns, Miller & Allen, 2010)—all while achieving high-quality resident clinical outcomes. The significance and potential benefits of multifaceted adoption are well documented (Brownie & Nancarrow, 2013; Burack, Weiner, & Reinhardt, 2012; Chang, Li, & Porock, 2013; Svarstad, Mount, & Bigelow, 2001). Multifaceted PCC interventions may improve resident outcomes, including QOL, Minimum Data Set (MDS) quality indicators, and activities of daily living (Grabowski et al., 2014; Grant & The Commonwealth Fund, 2008; Hill et al., 2011; Kane et al., 2007; Petriwskyj et al., 2016). But the particular combination of PCC components that nursing homes choose to simultaneously implement varies considerably (Bowers et al., 2016a; Miller et al., 2014; Sterns, Miller, & Allen, 2010). The overall evidence base for PCC as a multifaceted intervention is growing, but the results to date have been mixed (Petriwskyj et al., 2016; Shier et al., 2014). Even within Green House homes, person-centered principles are not always uniformly operationalized and do not result in uniform outcomes (Bowers & Nolet, 2014; Bowers et al., 2016a).

As these studies show, measurement is key to identifying PCC’s critical components, trends over time, and areas and methods for improvement. A particular tool’s measurement of PCC, however, is necessarily bounded by the tool’s intent, its method of application, and the type of data collected. Many more tools are available to measure the physical than the psychosocial, subjective, or “soft” components of PCC. This reflects measurement in long-term care in general. The MDS process for clinical assessment, for example, is primarily based on physical assessment, with only a small proportion of its measures focused on QOL. The QOL measures rely on resident self-report or staff members’ reporting on behalf of or in lieu of a resident. Use of MDS data for QOL constructs is also limited due to their relatively narrow focus and the length of time between MDS assessments (Zimmerman et al., 2015). Chart review, often the gold standard for many physical measures, is limited for QOL by wide variability regarding what is available in the chart and how it is assessed and reported.

Several staff self-report measurement tools that include items assessing PCC components do exist, such as the Person-Centered Practices in Assisted Living (PC-PAL) questionnaire (Zimmerman et al., 2015), the Better Jobs Better Care Person Centered Care tool (White, Newton-Curtis, & Lyons, 2008; Sullivan et al., 2013), the Artifacts of Culture Change tool (Bowman & Schoeneman, 2006), and others as summarized in several reviews (Edvardsson & Innes, 2010; Levenson, 2009; McGilton et al., 2012; White-Chu et al., 2009). But self-report tools such as these provide only a limited, though important, view of PCC due to respondents’ potential biases and knowledge limitations (Edvardsson & Innes, 2010). Exclusive use of self-report tools also limits the scope of assessment to those areas in which respondents are willing and able to provide an accurate representation. There is also evidence that staff ratings differ from ratings obtained through observation (McCann et al., 1997).

Observational methods remedy many self-report biases by allowing raters to gather objective data about actual behaviors that occur (Curyto, Van Haitsma, & Vriesman, 2008). But there are limitations to using existing observational measures to assess PCC in nursing homes. (a) Their primary intent may be to represent and assess PCC at the individual rather than organizational level (e.g., Dementia Care Mapping tool) (Brooker, 2005). (b) They may be focused only on resident behaviors and exclude staff behaviors (e.g., Casey et al., 2014; de Boer et al., 2016). (c) Or they may be narrowly focused on just one area, such as activity (e.g., Godlove, Richard & Rodwell, 1982), interaction (e.g., Dean & Proudfoot, 1993; Grosch, Medvene & Wolcott, 2008), or dining (e.g., Gilmore-Bykovskyi, 2015).

This paper describes the development and refinement of a structured, direct observation tool designed to partially fill this gap. The Resident-centered Assessment of Interactions with Staff and Engagement tool (RAISE) enables organization-level aggregate measurement of resident engagement and the quality and frequency of staff-resident interactions, important aspects of PCC not well represented in other PCC measures. Work on the RAISE took place primarily within one of the United States’ largest healthcare systems, the Veterans Health Administration (VHA), in which nursing homes are known as community living centers (CLCs). There are 135 CLCs located throughout the United States, providing a range of short and long stay services, including but not limited to rehabilitation, skilled, palliative and hospice, mental health recovery, and dementia care. These CLCs serve approximately 40,000 veterans annually. CLCs served as an ideal setting to develop a PCC tool due to CLCs’ long (since 2004) and ongoing mandate to improve the person-centeredness of care.

Design and Methods

RAISE development and refinement was a multi-step process that took place between 2010 and 2015. It comprised (a) development of the preliminary instrument and (b) instrument refinement and finalization. All aspects of this study were reviewed and approved by the relevant institutional review boards.

Preliminary Instrument

A needs assessment and a literature review were first conducted. These provided the foundation for development of the preliminary instrument.

Needs Assessment

Investigators conducted over 120 hr of ethnographic observations of residents and staff at one CLC transitioning to a small house model of care. The sensitizing concept guiding the observations, field notes, and analysis was, “What aspects of person-centered care can be identified through observations?” Observations occurred in increments of 30 min to an hour on each nursing shift. Investigators wrote observation field notes after each observation. Researchers reviewed and discussed the field notes in an iterative process to identify observed concepts. Analysis identified numerous observable aspects of CLC life related to PCC, including the influence of the CLC environment, the quality of interactions among staff and residents, resident activity levels, and levels of resident engagement.

Literature Review

Concurrent with the needs assessment, we conducted a review of the published literature on culture change and PCC to understand what could be achieved with already existing measurement tools and methods (see final conceptual model in Hartmann et al., 2013). Within that review we collected information on (a) quantitative survey instruments that measured how nursing home staff are impacted by a transition to a more person-centered environment (e.g., psychological empowerment, job satisfaction), (b) open-ended interview guides and close-ended surveys that captured staff and other stakeholders’ impressions of PCC, and (c) structured observation tools assessing PCC constructs (e.g., interaction, activity, dining, well-being, mood).

Combining Needs Assessment and Literature Review

The research team compared the type and scope of existing PCC measurement instruments with the PCC components identified by the needs assessment. Two key PCC components identified through the needs assessment were not adequately addressed by existing tools: overall level of resident engagement and overall quantity and quality of staff-resident interactions. We concluded that (a) the potentially subjective nature of the two identified components would be most reliably measured by a structured observation instrument resulting in quantitative data and (b) it was important to simultaneously assess staff and resident interaction, emotional tone, activity type, and engagement, because the multifactorial context of the quality of resident activity and staff-resident interactions could only be understood by assessing multiple elements.

Preliminary Instrument Development

Observation Variables

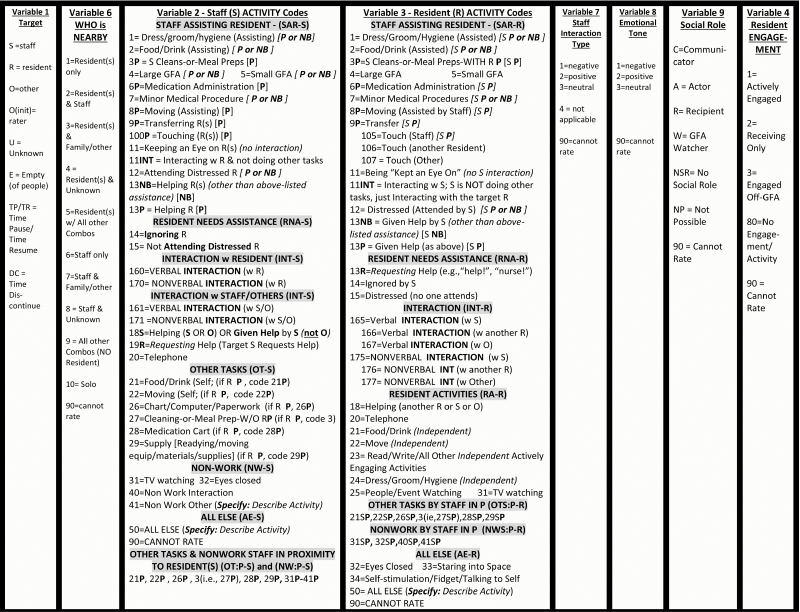

We concretized results of the needs assessment and literature review through iterative meetings in which we developed nine specific observation variables for the preliminary RAISE. One variable described the (1) target individual being observed (e.g., staff or resident). A set of six variables characterized the multi-faceted concept of staff and resident interactions: (2) who was near the observation target individual during the observation, (3) specific staff activities, (4) specific resident activities, (5) quality of staff-resident interaction from the perspective of PCC best clinical practices, (6) emotional tone of a staff-resident interaction, and (7) the observation target’s social role in the interaction. Two variables characterized the concept of resident engagement beyond the descriptive coding of specific resident activities mentioned above: (8) level of resident engagement and (9) who initiated the resident activity. The ninth variable was removed from the final instrument due to poor inter-rater reliability (IRR). See Figure 1 for the final RAISE tool and its eight remaining variables, which are represented there in the same order as described here.

Figure 1.

RAISE final version observation variables and codes. Note. S = staff; R = resident; O = other; P = proximal; NB = nearby; GFA = group formal activity. All observation variables except Variable 2 are mutually exclusive; “trump rules” guide raters in choosing a single numeric code when multiple applicable behaviors are observed within a discrete observation period. Codes are presented here in order of trump rules; e.g., Variable 7 code 1 (negative) “trumps” code 2, and code 2 trumps code 3. Variable 2 is not mutually exclusive; all applicable codes that are observed during the observation period are recorded.
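The trump-rule logic in the note above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `apply_trump_rule` and `record_all` and the example code numbers are hypothetical and are not taken from the RAISE coding manual. The sketch assumes that each mutually exclusive variable has a priority ordering of its codes and that the highest-priority observed code is the one recorded.

```python
from typing import Iterable, List, Sequence


def apply_trump_rule(observed: Iterable[int], trump_order: Sequence[int]) -> int:
    """Return the single code to record for a mutually exclusive variable.

    `trump_order` lists the variable's codes from highest to lowest priority
    (hypothetical representation of the ordering shown in Figure 1).
    """
    observed_set = set(observed)
    for code in trump_order:
        if code in observed_set:
            return code
    raise ValueError("no observed code appears in the trump order")


def record_all(observed: Iterable[int]) -> List[int]:
    """For a variable that is not mutually exclusive, keep every observed code."""
    return sorted(set(observed))


# Hypothetical three-code variable in which code 1 trumps 2, and 2 trumps 3.
print(apply_trump_rule([3, 2], trump_order=[1, 2, 3]))  # prints 2
print(record_all([4, 1, 4]))                            # prints [1, 4]
```

Keeping the priority order as data rather than as nested conditionals makes it straightforward to revise the ordering for one variable without touching the others.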

Evidence Base for Observational Variable Definitions

The RAISE requires raters to make many complex decisions, such as determining when an interaction is occurring, the emotional tone of others, and the quality of staff interactions. To achieve adequate IRR in these highly subjective areas, a comprehensive coding manual was iteratively developed that defined the observation variables and codes and provided descriptive examples (final version, 135 pages, available upon request). Design of the manual was guided by the Unmet Needs Conceptual Model (Algase et al., 1996) and Self Determination Theory (Deci & Ryan, 1985; Deci & Ryan, 2000). In the manual, interaction was defined in accordance with communication theory (Benokraitis, 2015). Emotional tone was defined in accordance with the two-dimensional model of emotion (Plutchik, 2001; Schachter & Singer, 1962). PCC best clinical practices, particularly dementia care communication practices, were defined in accordance with dementia care principles (Smith et al., 2011).

Observation Procedures

Our goal was to develop an observational method for the constructs of interest that would accurately represent daily life in a nursing home at an aggregate organizational level, combining the experiences of residents or staff. We used a time sampling approach to achieve “snapshots” in time of specific physical locations (Suen & Ary, 1989). We piloted several procedures, settling upon a method that directed users to define an assessment area and systematically and consecutively rate each resident and staff member within that area, working from left to right. We began instrument refinement by using a required time sample (observation interval) of 30 s. One complete observation cycle was set at 20 min.
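The arithmetic of this time-sampling design can be illustrated with a short Python sketch. The function name `build_scan_schedule` and the participant labels are hypothetical. The sketch also assumes that the rater returns to the leftmost person once everyone in the assessment area has been rated, which the procedure described above does not specify.

```python
from typing import List, Tuple


def build_scan_schedule(people_left_to_right: List[str],
                        interval_s: int = 30,
                        cycle_min: int = 20) -> List[Tuple[int, str]]:
    """Return (start_second, person) pairs for one observation cycle.

    People are rated consecutively from left to right; wrapping back to the
    first person after the last is an assumption made for this sketch.
    """
    if not people_left_to_right:
        raise ValueError("the assessment area contains no one to observe")
    n_intervals = (cycle_min * 60) // interval_s
    n_people = len(people_left_to_right)
    return [(i * interval_s, people_left_to_right[i % n_people])
            for i in range(n_intervals)]


schedule = build_scan_schedule(["R1", "S1", "R2"])
print(len(schedule))  # prints 40: forty 30-s intervals fit in a 20-min cycle
print(schedule[:4])   # prints [(0, 'R1'), (30, 'S1'), (60, 'R2'), (90, 'R1')]
```

Shortening the interval increases the number of snapshots per cycle proportionally; for example, `interval_s=5` yields 240 intervals in the same 20 min.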

Instrument Refinement and Finalization

Facility Sample

As part of a larger study of PCC organizational change efforts, multiple site visits were conducted at seven CLCs and one large non-VHA continuing care retirement community (CCRC). These facilities were chosen because all were actively engaging in “culture change” efforts to improve PCC practices. Two CLCs were in the Midwest (MW1, MW2); the CCRC and three CLCs were in the South (S1, S2, S3, and S4); and two CLCs were in the West (W1, W2). Facility size ranged from 50 to 250 beds. The resident population in these facilities was primarily long-term care with a smaller percentage of shorter-term rehabilitation; we did not use units that had a specialized focus such as dementia-only.

Site Visit Procedures

Instrument piloting took place during facility visits ranging from 3 to 5 days. At each facility, raters conducted observation sessions both individually and in pairs. An average of 16 observation sessions were conducted per visit (range, 8–40). Rating observations took place in public areas (e.g., hallways, communal dining, living areas). Raters completed short field notes after observation sessions, focusing particularly on difficulties with procedure, structure, or coding (Emerson, Fretz, & Shaw, 1995). A rater training protocol and manual were iteratively developed (final versions available upon request). Raters received training prior to site visits, including conducting co-ratings with the first author.

Instrument Piloting and Refinement

We conducted eight chronological but not necessarily contiguous piloting and refinement cycles (Table 1). Cycles comprised one or two facility visits, with instrument revision taking place after each visit. A new cycle began when we visited a different facility from that/those in the previous cycle. Cycles were designed to ensure that the final instrument yielded stable results across the multi-disciplinary group of raters and across the variety of facilities represented. Cycles also enabled evaluation and iterative development of tool structures and procedures, content, and coding and training manuals. The instrument was considered final after the eighth cycle, when no more substantive changes were indicated.

Table 1.

Summary of Instrument Refinement Cycles and Major Determinations

| Cycle | Site(s) at which ratings took place<sup>a</sup> | Number of raters per site, respectively | Major determinations re. tool refinement<sup>b</sup>: Domain(s)<sup>c</sup> | Major determinations re. tool refinement<sup>b</sup>: Comments |
|---|---|---|---|---|
| 1 | S1 (visit a), S1 (visit b), MW1 | 6, 2, 3 | RLP, AC | Procedural focus, particularly determination of best observation period durations; first coding rules developed and piloted |
| 2 | S1 | 6 | DD | Three psychologist raters enjoy high IRR; speech pathologist, social worker, and pre-med student join and IRR ratings significantly decrease |
| 3 | MW2, practice videos | 5, 5 | AC, RLP, RT | Novel physical environments and resident case mixes present new challenges, changes result |
| 4 | S1, S2, practice videos | 2, 2, 4 | AC, CR, DD, ED, RLP | Video IRR protocol developed, allowing for finer analysis of IRR difficulties; definitions document expanded; consensus conference convened to review expanded definitions document, changes made based on resulting recommendations; revised instrument piloted in two sites and with videos, IRR difficulties isolated to three major sections (C5, C7, C9), major changes made |
| 5 | S2, practice videos | 2, 5 | AC, CR, ED, RLP, RT | Revised instrument piloted again; C5, C7, and C9 improve, but the changes cause a cascade effect of new inconsistencies in new areas; more changes made |
| 6 | W1, practice videos | 3, 3 | AC, CR, ED, RM, RT | Changes continue to cause cascade effect, major structural revision implemented to address the difficulty; during the major revision, instrument also refined to reflect new strengths-based orientation and to reflect a “universal design” approach to more intuitive wording and structure |
| 7 | S3, S4 | 3, 3 | AD, CR, ED, RLP, RM | Novel physical environments and resident case mixes present new challenges, changes result |
| 8 | W2, S4, practice videos | 2, 3, 3 | RLP | Major changes to rater procedures that streamline the physical aspects of the rating experience; IRR scores stabilize |

Notes: <sup>a</sup>Multiple site visits were conducted at seven VHA community living centers (CLCs) and one large non-VHA continuing care retirement community (CCRC). Two CLCs were located in the Midwest (MW1, MW2); the CCRC and three CLCs were located in the South (S1, S2, S3, and S4); and two CLCs were in the West (W1, W2).

<sup>b</sup>Representative examples provided. Full Audit Trail document available on request from authors.

<sup>c</sup>Domain abbreviations:

| Domain | Description |
|---|---|
| AC: Activities Coding | Identification of the need for increased specificity of staff and resident activities code definitions. Resulted in significant revisions of coding rules, reorganization of similar activities into a number of discrete categories (e.g., staff assisting resident; resident needs assistance) and the addition of nearby and proximal specifiers within the code names to support rater memorization and application efforts. |
| CR: Social Role Definitions | Identification of the need for increased specificity of the communication role definitions within the context of the GFAs. Resulted in definition modifications and the addition of a wide variety of examples. |
| DD: Discipline Differences | Identification of systematic differences in application of coding rules by discipline. Resulted in extensive revisions to coding rules and use of multidisciplinary rater teams going forward to assure generalizability. |
| ED: Emotion Definitions | Identification of the need for increased specificity of emotion definitions to increase reliability. Resulted in adding a wide variety of examples to the coding rules and specifying four kinds of expressive behaviors that could be focused upon for behavioral indications of emotional valence and intensity. |
| RLP: Rater Logistics/Procedures | Identification of need for modifications of the observation protocol and of the materials used to record ratings during observation. Resulted in forms and procedures changes. For example, the data record form was modified from standard to legal sized paper to encourage more note taking, thus allowing raters to double-check their codes immediately after an observation session to reduce errors and increase reliability. |
| RM: ROR/MOR Definitions | Identification of the need for increased specificity of Realized Opportunity for Relationship (ROR)/Missed Opportunity for Relationship (MOR) definitions within the context of Group Formal Activities. Resulted in coding rule revisions, addition of further examples, and the addition of the NOR (Neither ROR nor MOR) code to indicate those occasions in which neither MOR nor ROR were applicable. |
| RT: Rater Training | Identification of need for modifications of procedures and materials used to train raters. Resulted in development of supplementary rater training materials such as a rater training guide and glossary. Resulted in development of an iterative evaluation method to assess each subsequent procedure and material revision (such that the quality of rater training procedures and materials was determined based on the raters’ performance in applying coding rules, and their self-reports regarding their experiences in trying to learn the material). Specific training challenges that were identified and evaluated included: raters’ ability to develop an advanced understanding of the interdependence of coding rules (e.g., if Emotional Tone = x and Resident Activity = z then Staff Interaction Type cannot be = y); raters’ efforts to gain mastery of code applications across the wide variety of physical environment scenarios that can occur; raters’ efforts to gain mastery across the wide variety of events that can occur and how these events covary with the typical behaviors of residents with certain kinds of physical and cognitive abilities; and raters’ efforts to gain mastery of the typical interaction challenges staff exhibit with other staff, as well as with residents of varying physical and cognitive abilities. |

A total of 13 raters took part in the cycles. Raters represented a wide range of health-related disciplines and educational levels: three clinical geropsychologists, two doctoral-level social workers, a geriatrician, a speech-language pathologist, a pre-med undergraduate, a doctoral-level nurse, a doctoral-level social psychologist, and three clinical geropsychology doctoral students. Not all raters participated in all cycles. The first author participated in all cycles.

Audit Trail

A detailed audit trail was maintained throughout, assuring rigor and reproducibility. All relevant information was documented: (a) development timeline; (b) rater involvement; (c) activities undertaken; (d) coding, structure, and procedural decisions; and (e) justifications. The final document was 60 pages (available upon request).

Reliability

Video Protocol

A video-based IRR testing protocol was developed and introduced in cycle 3. Video-based IRR training and testing has been used successfully in previous instrument development efforts (Chan et al., 2014). Publicly available videos portraying people interacting in circumstances representing typical nursing home scenes were chosen. Each 3-min video was divided into 5-s coding segments. Fleiss’s kappa was computed between raters for each video clip to gauge success and highlight potential areas for modification (Geertzen, 2012). An average of three raters completed an average of five videos for each revision pilot.

Preliminary and Interim IRR Analyses

IRRs were calculated at multiple points during the instrument refinement process. Frequency counts of disagreements were organized by RAISE variable. Fleiss kappas were calculated between raters.
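For readers unfamiliar with the statistic, the following Python sketch shows one way Fleiss' kappa can be computed for a single RAISE variable from a table of codes in which each row is a coding segment and each column is a rater. It is a minimal illustration, not the software used in this study; the function name `fleiss_kappa` and the example ratings are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def fleiss_kappa(ratings: Sequence[Sequence[Hashable]]) -> float:
    """Fleiss' kappa, where ratings[i][r] is the code rater r gave segment i."""
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(row) != n_raters for row in ratings):
        raise ValueError("every segment needs the same number (>= 2) of ratings")

    counts = [Counter(row) for row in ratings]

    # Observed agreement: share of agreeing rater pairs, averaged over segments.
    pairs = n_raters * (n_raters - 1)
    p_bar = sum((sum(c * c for c in cnt.values()) - n_raters) / pairs
                for cnt in counts) / n_items

    # Chance agreement from the overall proportion of each code.
    totals: Counter = Counter()
    for cnt in counts:
        totals.update(cnt)
    p_e = sum((t / (n_items * n_raters)) ** 2 for t in totals.values())

    if p_e == 1.0:
        raise ValueError("kappa is undefined when only one code is ever used")
    return (p_bar - p_e) / (1.0 - p_e)


# Made-up codes from three raters for four segments (illustration only).
example = [[1, 1, 1], [2, 2, 2], [1, 1, 2], [3, 3, 3]]
print(round(fleiss_kappa(example), 3))  # prints 0.745
```

Tallying which segments contain non-unanimous rows, variable by variable, gives the kind of disagreement frequency counts described above.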

Final IRR Analyses

After the eighth cycle, the final RAISE was evaluated. Three raters (licensed psychologist, doctoral-level social worker, and speech-language pathologist) viewed and rated 17 video coding segments using the final RAISE, representing a total of 555 rating decisions. Fleiss kappas were calculated amongst the final group of raters.

Validity

An expert panel was convened to assess the face and construct validity of the final RAISE using a three-item survey with an 11-point scale. The items assessed (a) how well the RAISE captured the engagement/activity and interaction aspects of PCC, (b) whether the RAISE was missing important components, and (c) confidence that RAISE ratings over time would reflect actual changes in the constructs measured. The panel comprised seven members, all with many years of experience in nursing homes: a geriatrician, Green House guide, geropsychologist, geriatric social worker, recreation therapist, dementia intervention expert, and nursing home organizational development consultant.

Results

Refinement Cycles

Table 1 summarizes the eight piloting and refinement cycles. Table 1 also summarizes the major challenges that surfaced in the tool development process, provides examples of these challenges, and summarizes how the development team met these challenges.

Final Instrument

The final RAISE consisted of eight variables; one of the original variables (initiation of resident activity) was removed due to failure to achieve adequate IRR. The final protocol used a 5-s observation interval, repeated over a 20-min observation period.

Final IRR

All Fleiss kappas for all eight RAISE variables were ≥.70, indicating adequate IRR (refer to Figure 1 for a summary of RAISE variables). Raters exhibited the lowest agreement, with an average of .71, for the specific staff and resident activities observed (Figure 1, variables #2 & #3). This level of IRR is in the acceptable range; indeed, it can be viewed as relatively strong, given that these categories include approximately 30 codes each. When the codes were aggregated into sub-groups (e.g., Staff Assisting Resident, Resident Needs Assistance; see sub-group headings in Figure 1), agreement was >.90. Rater agreement for emotional tone (Figure 1, variable #8), staff interaction quality (Figure 1, variable #7), and resident engagement (Figure 1, variable #4) was .76, .77, and .87, respectively. Raters exhibited perfect agreement on all remaining RAISE variables.
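The effect of aggregating fine-grained activity codes into sub-groups can be illustrated with a small Python sketch. The numeric codes, the `SUBGROUP_OF` mapping, and the ratings below are all hypothetical, and for brevity the sketch reports the share of segments on which all raters agree rather than Fleiss' kappa.

```python
from typing import Dict, Hashable, List, Sequence

# Hypothetical fine-grained codes mapped to sub-group headings.
SUBGROUP_OF: Dict[int, str] = {
    11: "Staff Assisting Resident",
    12: "Staff Assisting Resident",
    21: "Resident Needs Assistance",
    22: "Resident Needs Assistance",
}


def collapse(ratings: Sequence[Sequence[int]],
             mapping: Dict[int, str]) -> List[List[str]]:
    """Replace each fine-grained code with its sub-group label."""
    return [[mapping[code] for code in row] for row in ratings]


def unanimous_share(ratings: Sequence[Sequence[Hashable]]) -> float:
    """Proportion of segments on which every rater recorded the same value."""
    return sum(len(set(row)) == 1 for row in ratings) / len(ratings)


# Made-up codes from three raters for four segments (illustration only).
fine = [[11, 12, 11], [21, 21, 21], [22, 21, 22], [12, 12, 12]]
print(unanimous_share(fine))                         # prints 0.5
print(unanimous_share(collapse(fine, SUBGROUP_OF)))  # prints 1.0
```

In this toy example, raters who disagree about the exact activity code still agree about the broader category, which is the pattern that would produce higher agreement after aggregation.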

Validity

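The expert panel returned favorable ratings for all three survey items (for Q2, which asked whether the RAISE was missing important components, a lower rating is the more favorable result): Q1 avg rating = 8.4, range = 6–10, median = 8; Q2 avg rating = 3, range = 0–8, median = 1; Q3 avg rating = 8.83, range = 7–10, median = 9.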

Implications

The RAISE is the first quantitative PCC observational instrument that simultaneously (a) measures aspects of both resident and staff experiences across a range of contextual elements to determine interaction and activity quality, (b) enables an organization-level assessment of these experiences across multiple residents and staff, and (c) can be used in many nursing home locations and contexts. Measuring such foundational aspects of PCC is crucial for nursing homes to monitor progress, identify areas of strength, learn from successes, and intervene to improve areas that are struggling. The RAISE represents a new and unique approach to nursing home PCC measurement—structured observation from the perspectives of residents and staff of two specific aspects of PCC: engagement and interaction. The RAISE underwent rigorous development and was shown to have adequate IRR. Its inclusion in the spectrum of currently available PCC measures thus represents an important step in the journey toward more widespread adoption of resident-centered care practices.

The two PCC aspects measured by the RAISE, engagement and interaction, are necessary but not sufficient for a PCC journey. Engagement and interaction themselves, as defined and measured by the RAISE, may therefore be categorized more as “customer service” elements than truly resident-focused care. Yet the RAISE was designed to be administered by individuals with no prior knowledge of the residents and staff being observed. And use of the RAISE provides a view of nursing home life that is rarely seen: by relying on observation, the RAISE provides an important counterbalance to surveys and interviews that contain inherent potential self-report biases. The RAISE’s time sampling-based observation protocol also provides flexibility, allowing data collection to be tailored to assessment goals such that multiple “snapshots” of life can be taken across a variety of locations, times, and/or individuals. The RAISE allows for in-depth assessment of particular aspects of care quality, both through its staff activity codes (e.g., “ignoring,” “not attending distressed resident”) and through simultaneous rating of multiple observation variables (e.g., presence of staff activity code “food/drink assisting, proximal” and simultaneous absence of verbal and nonverbal interaction codes).

Limitations

The Hawthorne effect is a common limitation of observational instruments (McCambridge, Witton, & Elbourne, 2014). Staff who realize they are being observed may change their behavior during the observation period, resulting in potentially misleading assessments of, in the case of the RAISE, resident-staff interactions and engagement. This is, of course, also a danger with the currently mandated CMS nursing home surveys and is why it is always important to triangulate data. In our experience with the RAISE, the unobtrusive nature of each 5-s observation interval, the switching of observation subjects across a room during a scan, and the length of each observation period (20 min in total) all combine to reduce the intrusion upon staff and fade the researcher into the background. Any lingering Hawthorne effect is likely to result in positive changes in staff behavior, benefitting residents in the moment and serving as potential learning experiences when results are analyzed.

The RAISE was developed using a convenience sample of nursing homes from a larger study of PCC organizational change. It is therefore possible that the sampling strategy led to biased results. In particular, the majority of the study sites were VHA nursing homes, in which mostly men reside. The sample was, however, large and highly varied, including both small and large facilities and facilities from multiple areas of the country.

A limitation of the validation methods used here was that concurrent validity was not assessed. Future efforts should explore concurrent validity of the RAISE with other observational measures of PCC, wellness, and/or distress. The IRR procedures also involved only video ratings, not live observations. This may be seen as a limitation, because the tool is designed to be used primarily in a real-life setting. To calculate accurate IRR data, however, it was helpful to time multiple observations to the second, a procedure that was only possible using videos. The rating of videos has been employed before to determine reliability of an observational instrument (Chan et al., 2014). The videos were chosen to represent common aspects of nursing home life. Future efforts will need to explore real-time IRR.

Effective and reliable use of the RAISE requires approximately 40 hr of training. Research shows that raters can reliably rate observations using even more intensive measures (Godlove, Richard, & Rodwell, 1982). However, the intensity of the training required for the RAISE means that it will not be accessible to many frontline nursing home staff members. Simplified versions of the tool requiring minimal training and designed for nursing home staff to use within a quality improvement framework have been designed and are currently being tested (Hartmann et al., in press).

Conclusion

The RAISE provides researchers with a quantitative tool to measure heretofore unmeasured aspects of PCC. It thus represents an important step forward in our attempts to accurately represent PCC journeys, identify positive outliers, and build supports and interventions to further the process, to the benefit of residents and staff. The RAISE was developed in both community-based nursing homes and VHA CLCs and is thus equally applicable to both. Its possible use is not, however, confined to nursing homes. The tool could conceivably be tested and applied in any setting in which positive interactions among residents and staff are desired and engagement of residents in daily life is the ideal. Assisted living and inpatient psychiatric settings, for example, may have situations and circumstances that make the transition of the instrument smooth. With potential modifications, the instrument could also be used in other inpatient settings, as well as in community-based and other non-healthcare programs and centers, such as environments where adults are incarcerated or otherwise confined. Next steps for continued work on the RAISE include exploring the concurrent validity of particular scoring combinations.

Acknowledgments

This material is based upon work supported in part by the Department of Veterans Affairs, Veterans Health Administration, Office of Research and Development, Health Services Research and Development, project numbers I01HX000258-01 and HX000797. The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the United States government.

Conflict of Interest

None.

References

- Algase D., Beck C., Kolanowski A., Whall A., Berent S., Richards K., & Beattie E. (1996). Need-driven dementia-compromised behavior: An alternative view of disruptive behavior. American Journal of Alzheimer’s Disease and Other Dementias, 11, 10–19. doi:10.1177/153331759601100603

- Anderson R. A., Issel L. M., & McDaniel R. R. Jr. (2003). Nursing homes as complex adaptive systems: Relationship between management practice and resident outcomes. Nursing Research, 52, 12–21.

- Beerens H. C., de Boer B., Zwakhalen S. M., Tan F. E., Ruwaard D., Hamers J. P., & Verbeek H. (2016). The association between aspects of daily life and quality of life of people with dementia living in long-term care facilities: A momentary assessment study. International Psychogeriatrics, 28, 1323–1331. doi:10.1017/S1041610216000466

- Benokraitis N. V. (2015). SOC (4th ed.). Boston, MA: Cengage Learning.

- Bowers B., Nolet K., Jacobson N., & THRIVE Research Collaborative. (2016a). Sustaining culture change: Experiences in the green house model. Health Services Research, 51(Suppl. 1), 398–417. doi:10.1111/1475-6773.12428

- Bowers B., Nolet K., Roberts T., & Ryther B. (2016b). Inside the greenhouse ‘black box’: Opportunities for high-quality clinical decision making (the THRIVE research collaborative). Health Services Research, 51, 378–397. doi:10.1111/1475-6773.12427

- Bowers B. J., & Nolet K. (2014). Developing the green house nursing care team: Variations on development and implementation. The Gerontologist, 54(Suppl. 1), S53–S64. doi:10.1093/geront/gnt109

- Bowman C., & Schoeneman K. (2006). Development of the artifacts of culture change tool, Report of Contract HHSM-500-2005-00076P. Artifacts of Culture Change. Retrieved from https://www.artifactsofculturechange.org/Data/Documents/artifacts.pdf

- Brooker D. (2005). Dementia care mapping: A review of the research literature. The Gerontologist, 45(Spec No 1), 11–18. doi:10.1093/geront/45.suppl_1.11

- Brownie S., & Nancarrow S. (2013). Effects of person-centered care on residents and staff in aged-care facilities: A systematic review. Clinical Interventions in Aging, 8, 1–10. doi:10.2147/CIA.S38589

- Burack O. R., Weiner A. S., & Reinhardt J. P. (2012). The impact of culture change on elders’ behavioral symptoms: A longitudinal study. Journal of the American Medical Directors Association, 13(6), 522–528. doi:10.1016/j.jamda.2012.02.006

- Casey A. N., Low L. F., Goodenough B., Fletcher J., & Brodaty H. (2014). Computer-assisted direct observation of behavioral agitation, engagement, and affect in long-term care residents. Journal of the American Medical Directors Association, 15, 514–520. doi:10.1016/j.jamda.2014.03.006

- Caspar S., & O’Rourke N. (2008). The influence of care provider access to structural empowerment on individualized care in long-term-care facilities. The Journals of Gerontology Series B, Psychological Sciences and Social Sciences, 63, S255–S265.

- Caspar S., O’Rourke N., & Gutman G. M. (2009). The differential influence of culture change models on long-term care staff empowerment and provision of individualized care. Canadian Journal on Aging, 28, 165–175. doi:10.1017/S0714980809090138

- Castle N. G. (2011). The influence of consistent assignment on nursing home deficiency citations. The Gerontologist, 51, 750–760. doi:10.1093/geront/gnr068

- Chan D. K., Liming B. J., Horn D. L., & Parikh S. R. (2014). A new scoring system for upper airway pediatric sleep endoscopy. JAMA Otolaryngology–Head & Neck Surgery, 140, 595–602. doi:10.1001/jamaoto.2014.612

- Chang Y., Li J., & Porock D. (2013). The effect on nursing home resident outcomes of creating a household within a traditional structure. Journal of the American Medical Directors Association, 14, 293–299. doi:10.1016/j.jamda.2013.01.013

- Cohen L., Zimmerman S., Reed D., Brown P., Bowers B., Nolet K., Hudak S., & Horn S. (2016). The green house model of nursing home care in design and practice (the THRIVE research collaborative). Health Services Research, 51(Suppl. 1), 352–377. doi:10.1111/1475-6773.12418

- Cox H., Burns I., & Savage S. (2004). Multisensory environments for leisure: Promoting well-being in nursing home residents with dementia. Journal of Gerontological Nursing, 30, 37–45.

- Craft Morgan J., Haviland S. B., Woodside M. A., & Konrad T. R. (2007). Fostering supportive learning environments in long-term care: The case of WIN A STEP UP. Gerontology & Geriatrics Education, 28, 55–75. doi:10.1300/J021v28n02_05

- Curyto K. J., Van Haitsma K., & Vriesman D. K. (2008). Direct observation of behavior: A review of current measures for use with older adults with dementia. Research in Gerontological Nursing, 1, 52–76. doi:10.3928/19404921-20080101-02

- Dean R., & Proudfoot R. (1993). The quality of interactions schedule (QUIS): Development, reliability and use in the evaluation of two domus units. International Journal of Geriatric Psychiatry, 8, 819–826. doi:10.1002/gps.930081004

- de Boer B., Beerens H. C., Zwakhalen S. M., Tan F. E., Hamers J. P., & Verbeek H. (2016). Daily lives of residents with dementia in nursing homes: Development of the Maastricht electronic daily life observation tool. International Psychogeriatrics, 28, 1333–1343. doi:10.1017/S1041610216000478

- Deci E., & Ryan R. (1985). Intrinsic motivation and self-determination in human behavior. New York: Plenum.

- Deci E., & Ryan R. (2000). The “what” and “why” of goal pursuits: Human needs and the self-determination of behavior. Psychological Inquiry, 11, 227–268. doi:10.1207/S15327965PLI1104_01

- Deutschman M. (2001). Interventions to nurture excellence in the nursing home culture. Journal of Gerontological Nursing, 27, 37–43.

- Edvardsson D., & Innes A. (2010). Measuring person-centered care: A critical comparative review of published tools. The Gerontologist, 50, 834–846. doi:10.1093/geront/gnq047

- Emerson R., Fretz R., & Shaw L. (1995). Writing ethnographic fieldnotes. Chicago: University of Chicago Press.

- Geertzen J. (2012). Inter-rater agreement with multiple raters and variables. Retrieved from https://nlp-ml.io/jg/software/ira/

- Gilmore-Bykovskyi A. L. (2015). Caregiver person-centeredness and behavioral symptoms during mealtime interactions: Development and feasibility of a coding scheme. Geriatric Nursing, 36(2 Suppl), S10–S15. doi:10.1016/j.gerinurse.2015.02.018

- Godlove C., Richard L., & Rodwell G. (1982). Time for action: An observation study of elderly people in four different care environments. Sheffield, UK: Joint Unit for Social Services Research, University of Sheffield, in collaboration with Community Care.

- Grabowski D., O’Malley A., Afendulis C., Caudry D., Elliot A., & Zimmerman S. (2014). Culture change and nursing home quality of care. The Gerontologist, 54(Suppl. 1), S35–S45. doi:10.1093/geront/gnt143

- Grabowski D., Afendulis C., Caudry D., O’Malley A., Kemper P., & THRIVE Research Collaborative. (2016). The impact of green house adoption on Medicare spending and utilization. Health Services Research, 51(Suppl. 1), 433–453. doi:10.1111/1475-6773.12438

- Grant L., & The Commonwealth Fund. (2008). Culture change in a for-profit nursing home chain: An evaluation. Retrieved from http://www.commonwealthfund.org/usr_doc/Grant_culturechangefor-profitnursinghome_1099.pdf?section=4039

- Grosch K., Medvene L., & Wolcott H. (2008). Person-centered caregiving instruction for geriatric nursing assistant students: Development and evaluation. Journal of Gerontological Nursing, 34, 23–31; quiz 32.

- Hartmann C. W., Snow A. L., Allen R. S., Parmelee P. A., Palmer J. A., & Berlowitz D. (2013). A conceptual model for culture change evaluation in nursing homes. Geriatric Nursing, 34, 388–394. doi:10.1016/j.gerinurse.2013.05.008

- Hartmann C. W., Palmer J. A., Mills W. L., Pimentel C. B., Allen R. S., Wewiorski N. J., … Snow A. L. (in press). Adaptation of a nursing home culture change research instrument for frontline staff quality improvement use. Psychological Services.

- Hawes C., Mor V., Phillips C. D., Fries B. E., Morris J. N., Steele-Friedlob E., … Nennstiel M. (1997). The OBRA-87 nursing home regulations and implementation of the Resident Assessment Instrument: Effects on process quality. Journal of the American Geriatrics Society, 45, 977–985.

- Hill N. L., Kolanowski A. M., Milone-Nuzzo P., & Yevchak A. (2011). Culture change models and resident health outcomes in long-term care. Journal of Nursing Scholarship, 43, 30–40. doi:10.1111/j.1547-5069.2010.01379.x

- Institute of Medicine. (2001). Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academies Press.

- Kane R. A., Lum T. Y., Cutler L. J., Degenholtz H. B., & Yu T. C. (2007). Resident outcomes in small-house nursing homes: A longitudinal evaluation of the initial green house program. Journal of the American Geriatrics Society, 55, 832–839. doi:10.1111/j.1532-5415.2007.01169.x

- Koren M. J. (2010). Person-centered care for nursing home residents: The culture-change movement. Health Affairs, 29, 312–317. doi:10.1377/hlthaff.2009.0966

- Levenson S. A. (2009). The basis for improving and reforming long-term care. Part 3: Essential elements for quality care. Journal of the American Medical Directors Association, 10, 597–606. doi:10.1016/j.jamda.2009.08.012

- McCambridge J., Witton J., & Elbourne D. (2014). Systematic review of the Hawthorne effect: New concepts are needed to study research participation effects. Journal of Clinical Epidemiology, 67, 267–277. doi:10.1016/j.jclinepi.2013.08.015

- McCann J. J., Gilley D. W., Hebert L. E., Beckett L. A., & Evans D. A. (1997). Concordance between direct observation and staff rating of behavior in nursing home residents with Alzheimer’s disease. The Journals of Gerontology Series B, Psychological Sciences and Social Sciences, 52, P63–P72.

- McGilton K. S., Heath H., Chu C. H., Boström A. M., Mueller C., Boscart V. M., … Bowers B. (2012). Moving the agenda forward: A person-centred framework in long-term care. International Journal of Older People Nursing, 7, 303–309. doi:10.1111/opn.12010

- Miller S. C., Cohen N., Lima J. C., & Mor V. (2014). Medicaid capital reimbursement policy and environmental artifacts of nursing home culture change. The Gerontologist, 54(Suppl. 1), S76–S86. doi:10.1093/geront/gnt141

- Miller S., Mor V., & Burgess J. (2016). Studying nursing home innovation: The green house model of nursing home care. Health Services Research, 51(Suppl. 1), 335–343. doi:10.1111/1475-6773.12437

- Molony S. L., Evans L. K., Jeon S., Rabig J., & Straka L. A. (2011). Trajectories of at-homeness and health in usual care and small house nursing homes. The Gerontologist, 51, 504–515. doi:10.1093/geront/gnr022

- Petriwskyj A., Parker D., Wilson C., & Gibson A. (2016). What health and aged care culture change models mean for residents and their families: A systematic review. The Gerontologist, 56, e12–e20. doi:10.1093/geront/gnv151

- Plutchik R. (2001). The nature of emotions. American Scientist, 89, 344–349. doi:10.1511/2001.4.344

- Scalzi C. C., Evans L. K., Barstow A., & Hostvedt K. (2006). Barriers and enablers to changing organizational culture in nursing homes. Nursing Administration Quarterly, 30, 368–372.

- Schachter S., & Singer J. E. (1962). Cognitive, social, and physiological determinants of emotional state. Psychological Review, 69, 379–399.

- Shier V., Khodyakov D., Cohen L. W., Zimmerman S., & Saliba D. (2014). What does the evidence really say about culture change in nursing homes? The Gerontologist, 54(Suppl. 1), S6–S16. doi:10.1093/geront/gnt147

- Schreiner A. S., Yamamoto E., & Shiotani H. (2005). Positive affect among nursing home residents with Alzheimer’s dementia: The effect of recreational activity. Aging & Mental Health, 9, 129–134. doi:10.1080/13607860412331336841

- Smith E. R., Broughton M., Baker R., Pachana N. A., Angwin A. J., Humphreys M. S., … Chenery H. J. (2011). Memory and communication support in dementia: Research-based strategies for caregivers. International Psychogeriatrics, 23, 256–263. doi:10.1017/S1041610210001845

- Stecker M., & Stecker M. M. (2014). Disruptive staff interactions: A serious source of inter-provider conflict and stress in health care settings. Issues in Mental Health Nursing, 35, 533–541. doi:10.3109/01612840.2014.891678

- Sterns S., Miller S. C., & Allen S. (2010). The complexity of implementing culture change practices in nursing homes. Journal of the American Medical Directors Association, 11, 511–518. doi:10.1016/j.jamda.2009.11.002

- Stone R., Reinhard S., Bowers B., Zimmerman D., Phillips C., Hawes C., Fielding J., Jacobson N., & The Commonwealth Fund. (2002). Evaluation of the wellspring model for improving nursing home quality. Retrieved from http://www.commonwealthfund.org/usr_doc/stone_wellspringevaluation.pdf

- Suen H., & Ary D. (1989). Analyzing quantitative behavioral observation data. Hillsdale, NJ: Erlbaum.

- Sullivan J. L., Meterko M., Baker E., Stolzmann K., Adjognon O., Ballah K., & Parker V. A. (2013). Reliability and validity of a person-centered care staff survey in veterans health administration community living centers. The Gerontologist, 53, 596–607. doi:10.1093/geront/gns140

- Svarstad B. L., Mount J. K., & Bigelow W. (2001). Variations in the treatment culture of nursing homes and responses to regulations to reduce drug use. Psychiatric Services, 52, 666–672. doi:10.1176/appi.ps.52.5.666

- Tellis-Nayak V. (2007). A person-centered workplace: The foundation for person-centered caregiving in long-term care. Journal of the American Medical Directors Association, 8, 46–54. doi:10.1016/j.jamda.2006.09.009

- White D. L., Newton-Curtis L., & Lyons K. S. (2008). Development and initial testing of a measure of person-directed care. The Gerontologist, 48(Spec No 1), 114–123. doi:10.1093/geront/48.Supplement_1.114

- White-Chu E. F., Graves W. J., Godfrey S. M., Bonner A., & Sloane P. (2009). Beyond the medical model: The culture change revolution in long-term care. Journal of the American Medical Directors Association, 10, 370–378. doi:10.1016/j.jamda.2009.04.004

- Zeisel J., Silverstein N. M., Hyde J., Levkoff S., Lawton M. P., & Holmes W. (2003). Environmental correlates to behavioral health outcomes in Alzheimer’s special care units. The Gerontologist, 43, 697–711.

- Zimmerman S., Allen J., Cohen L., Pinkowitz J., Reed D., Coffey W., Reed D., Lepore M., Sloane P., & The University of North Carolina-Center for Excellence in Assisted Living Collaborative. (2015). A measure of person-centered practices in assisted living: The PC-PAL. Journal of the American Medical Directors Association, 16, 132–137. doi:10.1016/j.jamda.2014.07.016