Abstract

Context

Resources for medical education are becoming more constrained, whereas accountability in medical education is increasing. In this constrictive environment, medical schools need to consider and justify their selection procedures in terms of costs and benefits. To date, there have been no studies focusing on this aspect of selection.

Objectives

We aimed to examine and compare the costs and benefits of two different approaches to admission into medical school: a tailored, multimethod selection process versus a lottery procedure. Our goal was to assess the relative effectiveness of each approach and to compare these in terms of benefits and costs from the perspective of the medical school.

Methods

The study was conducted at Maastricht University Medical School, at which the selection process and a weighted lottery procedure ran in parallel for 3 years (2011–2013). The costs and benefits of the selection process were compared with those of the lottery procedure over three student cohorts throughout the Bachelor's programme. The extra costs of selection represented the monetary investment of the medical school in conducting the selection procedure; the benefits were derived from the increase in income generated by the prevention of dropout and the reductions in extra costs facilitated by decreases in the repetition of blocks and objective structured clinical examinations.

Results

The tailor‐made selection procedure cost about €139 000 when extrapolated to a full cohort of students (n = 286). The lottery procedure came with negligible costs for the medical school. However, the average benefits of selection compared with the lottery system added up to almost €207 000.

Conclusions

This study not only shows that conducting a cost–benefit comparison is feasible in the context of selection for medical school, but also that an ‘expensive’ selection process can be cost‐beneficial in comparison with an ‘inexpensive’ lottery system. We encourage other medical schools to examine the cost‐effectiveness of their own selection processes in relation to student outcomes in order to extend knowledge on this important topic.

Short abstract

It's too expensive! Although feasibility is a common concern about selection practice, this study shows through cost‐benefit comparison that ‘expensive’ admissions processes can be cheaper than ‘inexpensive’ lotteries.

Introduction

Medical schools throughout the world are confronted with high numbers of applicants for a limited number of places.1 Schools are required to select the best candidates from a pool of well‐qualified applicants, many of whom also possess the personal qualities considered desirable in a medical student and doctor.2 Although three general domains are typically assessed at the time of admission (academic achievement, aptitude for medical school or medicine, and non‐academic attributes),1, 2 how these are measured varies widely.

In the era of resource constraints, decreases in public funding and simultaneous increases in demand for accountability,3 medical schools are under increasing pressure to justify their selection processes in terms of costs and benefits.4, 5, 6 They must ensure the efficient and effective use of finances in the organisation and delivery of education.6, 7, 8, 9 Until now, research on selection has mostly focused on the predictive validity of the various selection tools.1, 10

With reference to admissions procedures, the least expensive process is probably one based on either a single selection tool referencing prior attainment (e.g. secondary school examination results) or a weighted lottery system in which admission chances increase in parallel with the applicant's pre‐university grade point average (GPA). (For more information on the weighted lottery procedure previously used in the Netherlands, see references 6, 10, 11 and 12.) These approaches are inexpensive because the required data are provided to the medical school by external bodies at minimal cost to the school. At the other end of the spectrum, selection procedures consisting of multiple time‐intensive tools are expensive. For example, conducting multiple mini‐interviews (MMIs) with large numbers of applicants is an expensive endeavour: many assessors and actors must be present for long periods of time, rooms must be booked and allocated, and staff and actors must be provided with refreshments. Furthermore, MMIs require much preparatory work to ensure high levels of reliability and validity across many stations, and the logistics and administration of organising MMIs can be challenging.4, 5, 7, 8

To date, cost–benefit analyses of multi‐tool selection procedures overall are scarce; to the best of our knowledge, this area represents a gap in the literature. At the same time, this is the focus of much discussion in terms of policy and practice. In the Netherlands, for example, a debate on admissions processes is ongoing, focusing on the question of whether the previous, relatively inexpensive weighted lottery system should be reintroduced to replace the current, more expensive selection procedures.13 We add to this debate by focusing on an economic evaluation of medical school selection to examine what is spent in relation to the value that is returned.9, 14

The aim of the current study was to determine whether the benefits of applying a tailor‐made selection process outweigh the costs this process entails in comparison with a lottery procedure, from the perspective of the medical school. To safeguard the quality of the study, we followed the CHEERS (Consolidated Health Economic Evaluation Reporting Standards) statement.15, 16 We define costs as the extra costs incurred by the medical school in applying the selection procedure rather than the lottery procedure, and benefits as the combination of preventing loss of income due to student dropout and preventing additional future costs caused by poor performance (see Methods for details). The ultimate goal of this study is to inform decision making on whether to continue investing time and money in developing and adapting selection procedures, or to (re)introduce the inexpensive lottery procedure.

Methods

Setting and population

We were able to examine our research question in a naturalistic setting, specifically that of Maastricht University Medical School (MUMS), at which a tailor‐made selection process ran in parallel with a lottery system for 3 years.17 In this context, the costs and benefits of the traditional admissions procedure (i.e. the lottery procedure) could be compared with those of a tailored selection procedure (selection is now common practice in the Netherlands).10 Up to and including 2010, all students were admitted to MUMS through a national weighted lottery. Thereafter, the admission process was gradually changed from the lottery procedure to a selection process for all students from 2014 onwards. Thus, for 3 years (2011–2013) an outcome‐based selection procedure ran in parallel with the lottery. In the first year, 111 of 286 students were admitted through this selection procedure. This number increased to 141 in 2012 and 149 in 2013. The selection ratios in these years were 6.6, 5.9 and 5.3 applicants per available study place, respectively. The remaining study places were filled through the national weighted lottery procedure. As a result, the cohorts of 2011, 2012 and 2013 consisted of combinations of selected students and students who entered via the lottery. The latter group was composed of students rejected in the selection process who then successfully entered through the lottery procedure, and students who participated in the lottery procedure only. In this study, the costs and benefits related to selected students (S, n = 401 in total) and those related to students who entered through the lottery only (L, n = 185) were determined for all three cohorts. A comparison of the two groups (S and L) on pre‐university GPA revealed no difference.

In the Netherlands, medical school is divided into two 3‐year phases: the Bachelor's programme, and the Master's programme. In the Bachelor's programme, education is mostly university‐based and pre‐clinical. The Master's programme is clinical and primarily workplace‐based. In this study, we focused on the Bachelor's programme at MUMS, in which a problem‐based learning (PBL) curriculum was offered.

Perspective and time horizon

The current study was conducted from the perspective of the medical school; all costs and benefits were therefore determined within the context of the medical school. The time span in the current study is the Bachelor's programme in medicine (3 years) for three cohorts of students (starting in 2011, 2012 and 2013). All costs and benefits were analysed in the first quarter of 2018.

Selection procedure

The selection procedure applied at MUMS consisted of two rounds, both assessing the CanMEDS competencies set out by Frank in 2005.18 In the first round, applicants completed an online portfolio. This contained information on their previous academic attainment (e.g. pre‐university GPA), distinguishing abilities gained in extracurricular activities (e.g. relating to the roles of communicator or collaborator), their reasons for choosing MUMS, and their knowledge of and self‐perceived suitability for PBL. Applicants were ranked based on their portfolio scores, and the highest ranking applicants (twice the number of available study places) were invited to the second round: a selection day at MUMS. During this selection day, two different assessment tools were used: a video‐based situational judgement test (V‐SJT), consisting of eight to ten short video clips with corresponding questions, and a written aptitude test. Both tools focused on talent for the whole set of competencies, mostly in the setting of a real‐life medical student or doctor. Finally, applicants were ranked using their mean Z‐scores on all assignments and the highest ranking students were offered places on the programme.

Rejected applicants and those who chose not to participate in the selection procedure at all could participate in the national weighted lottery procedure. Weighting was based on the applicant's pre‐university GPA.

Costs of admission procedures

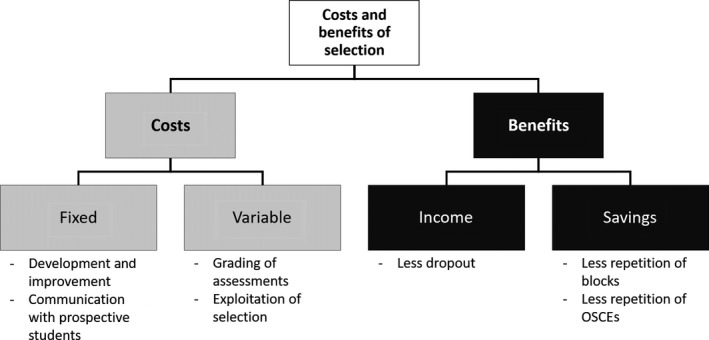

To delineate the costs of both admission procedures from the medical school's perspective, two types of cost were distinguished: fixed costs and variable costs (Fig. 1). Fixed costs are the costs accrued by the admissions process independent of the number of applicants, such as the costs of the staff hours required to develop the assignments. Variable costs are the costs directly related to the number of applicants, such as the costs of the staff hours required to evaluate assignments and to provide surveillance throughout the selection day. Both types of cost were expressed as the number of hours allocated to both scientific and support staff (converted to monetary costs) and remaining costs (e.g. costs of external staff for video‐editing and test layout) were also included. Variable costs were extrapolated to costs for a full cohort using the data for the cohorts of 2011–2013.

Figure 1.

The different kinds of costs and benefits of a selection procedure and their sources. OSCE = objective structured clinical examination

Economic benefits derived from selection

The expected monetary benefits of selection can be divided roughly into two types: (i) an increase in net income because fewer students leave the programme without graduating, and (ii) a decrease in the costs of remediation and resits because fewer students fail examinations (Fig. 1).13, 19 With respect to the first type of benefit, it is important to note that the education of medical students in the Netherlands is funded by the government; payments are received for each registered year, with a maximum of 3 years for the Bachelor's programme, as well as for graduation. This results in a yearly payment per student to the medical school. As soon as a student drops out, MUMS no longer receives these yearly payments for this student; at the same time, money is saved because the student no longer participates in educational activities.

Secondly, poor performance of students results in study delay and extra costs for remediation and resits of failed blocks and assessments. The PBL Bachelor's curriculum at MUMS consists of two 4‐week and four 8‐week blocks in each of Years 1 and 2, and of four 10‐week blocks in Year 3. In these thematic blocks, educational activities such as skills training, practical sessions, and simulated and authentic patient contacts are organised alongside tutorial group meetings. Assessment of students is either theoretical (block examinations and progress tests) or related to attitude and skills (e.g. in objective structured clinical examinations [OSCEs], of which there is one per year).

When a student failed a block (of 4, 8 or 10 weeks), he or she was required either to follow the whole block again, which carried considerable costs for the medical school, or to resit the examination only, for which the expenses were negligible. The only single assessment causing significant additional costs per student was the OSCE.20 Repetitions of other parts of the assessment programme (e.g. the progress test) caused negligible expenditures. Other costs related to poor performance, such as the costs of providing extra guidance to support individual, poorly performing students in completing their studies, were not available for ethical reasons and were therefore not included in the current analysis.

Cost–benefit analysis

In the current article, cost–benefit analysis is defined as the investigation of whether selection is ‘good value’ when its costs are compared with its monetary benefits.3, 21 Firstly, the costs of the admission procedures were determined by itemising the fixed and variable costs of the lottery and selection methods, and extrapolating the variable costs to an entire cohort of 286 students. Next, the benefits of selection over lottery were assessed by determining the average frequencies of dropout and repetition per year in each of the two student groups (S and L). The benefit attained was then calculated by extrapolating these frequencies to an entire cohort of either S or L students.

Results

Costs of the admission procedures

The costs of the national weighted lottery for the medical school itself were close to zero. An administration office within the university determined whether applicants fulfilled all legal requirements for all admission procedures, including the lottery. The selection procedure, by contrast, came with considerable fixed and variable costs. The fixed costs comprised staff hours for setting up the selection procedure, producing content for the selection procedure, and providing information on the selection procedure. The variable costs were costs related to, for example, the grading of assignments, as well as costs for provisions such as equipment, materials and facilities, all of which increase with the number of students applying. Both scientific and support staff were involved in the procedure; average salary costs were €90 000 per full‐time‐equivalent (FTE; i.e. 1650 hours per year) for scientific staff and €52 000 per FTE for support staff. These costs include on‐costs such as employer pension contributions and payroll tax.

On average, 134 students entered MUMS through selection per year in the three cohorts under study. As Table 1 shows, the average fixed costs per year represented 0.51 FTE scientific staff (€45 900) and 0.50 FTE support staff (€26 000), and a further amount of fixed costs, such as for video‐editing (€5800). These fixed costs were unrelated to the number of applicants involved. The average variable quantity amounted to 0.13 FTE for scientific staff and 0.18 FTE for support staff per year. Extrapolation of these variable costs to an entire cohort of 286 students results in sums of 0.28 FTE for scientific staff (€25 200) and 0.38 FTE for support staff (€19 760). The average costs of remaining provisions per year were €7600, which extrapolates to €16 220 for a full cohort of students. Taken together, this means that whereas the average cost of selecting 134 students at MUMS was approximately €106 360 per year, the application of the current selection procedure to an entire cohort of 286 students would have resulted in an average cost of €138 880.

Table 1.

Average yearly costs of the selection procedure at Maastricht University Medical School in the years 2011–2013, extrapolated to a full cohort

| | Scientific staff | Support staff | Remaining costs | Total |
|---|---|---|---|---|
| Fixed | | | | |
| FTE | 0.51 | 0.50 | | 1.01 |
| Costs | €45 900 | €26 000 | €5800 | €77 700 |
| Variable | | | | |
| Extrapolated FTEs, full cohort | 0.28 | 0.38 | | 0.65 |
| Extrapolated costs, full cohort | €25 200 | €19 760 | €16 220 | €61 180 |
| Total costs, full cohort | €71 100 | €45 760 | €22 020 | €138 880 |

FTE = full‐time‐equivalent (1650 hours per year; €90 000 for scientific staff, €52 000 for support staff).
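The arithmetic behind Table 1 can be retraced with a short script. The following is a minimal sketch rather than the authors' own calculation: it assumes that variable staff time scales linearly with the ratio 286/134, that FTE values are rounded to two decimals before being converted to euros (which reproduces the published figures), and it takes the extrapolated remaining costs of €16 220 directly from the text. All variable and function names are illustrative.

```python
# Salary cost per full-time equivalent (FTE), as reported in the text.
COST_PER_FTE = {"scientific": 90_000, "support": 52_000}

# Average yearly figures for the 134 students selected per cohort.
FIXED_FTE = {"scientific": 0.51, "support": 0.50}
FIXED_OTHER = 5_800            # e.g. video editing
VARIABLE_FTE = {"scientific": 0.13, "support": 0.18}
VARIABLE_OTHER = 7_600         # remaining provisions for 134 students
VARIABLE_OTHER_FULL = 16_220   # extrapolated value, copied from the text

SELECTED_PER_YEAR = 134
FULL_COHORT = 286


def staff_cost(fte_by_role):
    """Convert FTE per staff role into whole euros."""
    return sum(round(fte * COST_PER_FTE[role]) for role, fte in fte_by_role.items())


# Assumption: variable staff time scales linearly with cohort size and is
# rounded to two decimals before conversion, as the published FTEs suggest.
scale = FULL_COHORT / SELECTED_PER_YEAR
variable_fte_full = {role: round(fte * scale, 2) for role, fte in VARIABLE_FTE.items()}

fixed_costs = staff_cost(FIXED_FTE) + FIXED_OTHER
cost_134 = fixed_costs + staff_cost(VARIABLE_FTE) + VARIABLE_OTHER
cost_full = fixed_costs + staff_cost(variable_fte_full) + VARIABLE_OTHER_FULL

print(fixed_costs, cost_134, cost_full)
```

Under these assumptions the script returns fixed costs of 77 700, a cost of 106 360 for 134 selected students, and 138 880 for a full cohort, matching the figures reported above. Only the variable component grows with cohort size, which is why doubling the number of selected students raises the total by less than a third.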

Economic benefits because of selection

The medical school received an average payment from the hosting faculty of about €10 000 per registered student per year; this payment represents compensation for the educational activities provided by the medical school staff. The total cost of educating a medical student exceeds this amount by far; the additional overhead and more generic educational costs were paid to and covered by the faculty and the university (e.g. infrastructure, information technology, library, service centre and management costs and clinical workplace‐based costs). The payment of a fixed amount of money per year means that if a student drops out in Year 1, the payments for the second and third years are missed (i.e. €20 000). For dropout during Year 2, MUMS misses out on €10 000. If students drop out in Year 3 or later, this no longer affects MUMS’ budget for educational activities during the Bachelor's programme. However, it should be noted that when a student drops out, the medical school no longer has to provide this student's education. The majority of the costs of education do not change with a slight decrease in the number of students (e.g. course development and lectures). Nevertheless, a small portion of the costs of education will decline with a slight reduction in the number of students; this amounts to an estimated €2260 per student per year (four 8‐ and two 4‐week blocks and an OSCE in Year 2, and four 10‐week blocks and an OSCE in Year 3; these costs are specified later in this section). Therefore, preventing dropout in Years 1 and 2 increases the medical school's net income by about €15 480 (€20 000 − 2 × €2260) and €7740 (€10 000 − €2260) per student, respectively. As Table 2 shows, a higher percentage of lottery‐admitted than selected students dropped out in Year 1; when extrapolated to full cohorts, 20 lottery‐admitted students and seven selected students may be expected to drop out in Year 1. In Year 2, two selected students versus one lottery‐admitted student may be expected to drop out. In monetary terms, a lottery‐admitted cohort would cause a loss of income to the school of €317 340, whereas a selection‐admitted cohort would cause a loss of income of €123 840. Therefore, the reduction in dropout facilitated by selection would increase the average income for a cohort over the entire Bachelor's programme by €193 500.
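The per‐student gains and cohort‐level losses quoted above follow from a few multiplications. The sketch below retraces them using the figures stated in the text: a payment of €10 000 per registered student per year, avoidable teaching costs of €2260 per student per year, and the extrapolated dropout numbers for full cohorts. The function and variable names are hypothetical and chosen for illustration only.

```python
YEARLY_PAYMENT = 10_000   # payment per registered student per year
AVOIDABLE_COST = 2_260    # teaching costs saved per absent student per year
PROGRAMME_YEARS = 3       # length of the Bachelor's programme


def net_gain_per_prevented_dropout(dropout_year):
    """Net income retained when a dropout in the given year is prevented.

    A student who drops out in year k generates neither payments nor
    avoidable teaching costs in the remaining years k+1..3.
    """
    years_lost = PROGRAMME_YEARS - dropout_year
    return years_lost * (YEARLY_PAYMENT - AVOIDABLE_COST)


def cohort_income_loss(dropouts_by_year):
    """Total net income lost by a cohort, given dropout counts per year."""
    return sum(count * net_gain_per_prevented_dropout(year)
               for year, count in dropouts_by_year.items())


# Extrapolated dropout counts per full cohort of 286 students (from the text).
lottery = {1: 20, 2: 1}
selected = {1: 7, 2: 2}

loss_lottery = cohort_income_loss(lottery)
loss_selected = cohort_income_loss(selected)
print(loss_lottery, loss_selected, loss_lottery - loss_selected)
```

With these inputs the losses come to 317 340 for a lottery‐admitted cohort and 123 840 for a selected cohort, a difference of 193 500. Almost all of this difference stems from Year 1 dropout, because each such student forgoes two years of payments.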

Table 2.

Average yearly benefits of the selection procedure versus the national weighted lottery procedure at Maastricht University Medical School

| | Selected, average per cohort (%) | Lottery, average per cohort (%) | Selected, extrapolated number per full cohort [b] | Lottery, extrapolated number per full cohort [b] | Difference (L − S) | Gains per unit [a] | Total gains of selection |
|---|---|---|---|---|---|---|---|
| Dropout (numbers of students; gains per student) | | | | | | | |
| Dropout in Year 1 | 2.5 | 7.0 | 7 | 20 | 13 | €15 480 | €201 240 |
| Dropout in Year 2 | 0.7 | 0.5 | 2 | 1 | −1 | €7740 | −€7740 |
| Repetitions (numbers of repetitions; gains per repetition avoided) | | | | | | | |
| Repetitions of 4‐week blocks | 2.4 | 3.5 | 27 | 40 | 13 | €220 | €2860 |
| Repetitions of 8‐week blocks | 3.2 | 3.9 | 73 | 89 | 16 | €445 | €7120 |
| Repetitions of 10‐week blocks | 0.9 | 1.1 | 10 | 13 | 3 | €555 | €1665 |
| Resits of OSCEs | 9.6 | 14.2 | 82 | 122 | 40 | €40 | €1600 |
| Total | | | | | | | €206 745 |

[a] Gains can be: (i) an increase in income because the amount of dropout is decreased, or (ii) savings for the medical school because blocks or assessments (OSCEs) are repeated less often.

[b] A full cohort consists of 286 students; data from the combined cohorts of 2011–2013 (401 selected students and 185 students admitted through lottery only) were extrapolated to a full cohort of selected or lottery‐admitted students during the entire Bachelor's programme. Without repetitions, a full cohort of students (n = 286) represents 4 × 286 = 1144 4‐week blocks, 8 × 286 = 2288 8‐week blocks, 4 × 286 = 1144 10‐week blocks and 3 × 286 = 858 OSCE‐participations during the Bachelor's programme.

OSCE = objective structured clinical examination.
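The extrapolation from average percentages to full‐cohort counts in Table 2 can be illustrated as follows. This is a sketch under two assumptions that are not stated explicitly in the source: each percentage is applied to the total number of units a full cohort of 286 students would complete without repetitions, and the result is rounded to the nearest whole number. Because the published percentages are themselves rounded, an exact match with the table is not guaranteed in general, although it holds for these values. All names are illustrative.

```python
FULL_COHORT = 286

# Units completed per student over the three-year programme without
# repetitions (dropout is counted once per student).
UNITS_PER_STUDENT = {
    "dropout_year_1": 1,
    "dropout_year_2": 1,
    "4_week_block": 4,
    "8_week_block": 8,
    "10_week_block": 4,
    "osce": 3,
}

# Average percentages per cohort as (selected, lottery), copied from Table 2.
RATES = {
    "dropout_year_1": (2.5, 7.0),
    "dropout_year_2": (0.7, 0.5),
    "4_week_block": (2.4, 3.5),
    "8_week_block": (3.2, 3.9),
    "10_week_block": (0.9, 1.1),
    "osce": (9.6, 14.2),
}


def extrapolate(rate_percent, units_per_student, cohort=FULL_COHORT):
    """Expected number of events in a full cohort, rounded to whole events."""
    return round(rate_percent / 100 * units_per_student * cohort)


counts = {
    outcome: tuple(extrapolate(rate, UNITS_PER_STUDENT[outcome]) for rate in rates)
    for outcome, rates in RATES.items()
}
# Difference (L - S): events avoided by admitting a full cohort via selection.
differences = {outcome: lot - sel for outcome, (sel, lot) in counts.items()}

for outcome, (sel, lot) in counts.items():
    print(outcome, sel, lot, differences[outcome])
```

For example, 3.5% of 1144 four‐week blocks gives about 40 repetitions for a lottery‐admitted cohort, against about 27 for a selected cohort, which is the difference of 13 shown in the table.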

When students failed a block examination and were required to retake the entire block, the amount of staff hours needed for teaching increased. This led to additional costs of €220, €445 and €555 per student for 4‐, 8‐ and 10‐week blocks, respectively. The data in Table 2 show that lottery‐admitted students were required to retake all three types of block more often than selected students. Hence, when extrapolated to full cohorts, a lottery‐admitted cohort would be required to repeat these blocks more often than a selection‐admitted cohort. As a result, the average total costs of the repetition of blocks over the entire Bachelor's programme for one cohort would be €11 645 lower in a full cohort of selected students.

The OSCE is an individual test in which all costs (i.e. assessors [scientific staff], simulated patients and provisions) increase with each student. The mean total cost of an OSCE was €40 per student. As Table 2 shows, an entirely lottery‐admitted cohort would be required to complete 122 OSCE resits throughout the Bachelor's programme (€4880), whereas a full cohort of selected students would need 82 resits (€3280). This results in a difference in costs of €1600.

Combining all of these data shows that an average full cohort of selected students would be less expensive, in terms of dropout and the need to repeat blocks and OSCEs, than an average full cohort of lottery‐admitted students (Table 2). This benefit adds up to a total of €206 745. Because the yearly cost of the selection procedure, extrapolated to a full cohort, was €138 880, the applied selection procedure appears to be cost‐beneficial.
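The overall comparison can be summarised in a few lines of code. The sketch below simply multiplies the differences and unit gains listed in Table 2 and subtracts the extrapolated yearly cost of selection; the dictionary layout and names are illustrative and not part of the original analysis.

```python
# (difference L - S per full cohort, gain per unit in euros), from Table 2.
GAINS = {
    "dropout_year_1": (13, 15_480),
    "dropout_year_2": (-1, 7_740),
    "4_week_block_repetition": (13, 220),
    "8_week_block_repetition": (16, 445),
    "10_week_block_repetition": (3, 555),
    "osce_resit": (40, 40),
}
SELECTION_COST_FULL_COHORT = 138_880  # yearly cost extrapolated to 286 students

gain_by_outcome = {name: diff * gain for name, (diff, gain) in GAINS.items()}
total_benefit = sum(gain_by_outcome.values())
net_benefit = total_benefit - SELECTION_COST_FULL_COHORT

print(total_benefit, net_benefit)
```

This gives a total benefit of 206 745 and a net benefit of 67 865 per cohort. The negative entry for Year 2 dropout shows that selection did not improve every outcome; the overall result is driven by the reduction in Year 1 dropout.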

Discussion

We compared the costs and benefits of a tailor‐made selection procedure at one medical school with those of a weighted lottery system. The cost–benefit analysis indicates that, from the perspective of the medical school, the benefits of this selection procedure outweigh its costs, compared to the costs and benefits of the lottery system. This result is attributable to lower rates of dropout and failure in selected students than in lottery‐admitted students.

The selection procedure under study was more expensive than the lottery procedure. In the 3 years under investigation, the average cost of selecting almost half of the students at MUMS was approximately €106 000 per year. When we extrapolate this to a context in which all students in a cohort are admitted through selection (i.e. without a lottery entry stream), the total costs per year emerge at approximately €139 000.

Although the calculation of costs is relatively simple, estimating the monetary benefits of selection over lottery is more complex. We divided the benefits of selection into two types of gain: (i) a decrease in the number of students dropping out and a consequent increase in net income for the medical school, and (ii) a decrease in the number of repeated courses and examinations, and a consequent decrease in extra costs to the medical school (which are not reimbursed by government funding). To do this, we calculated the average numbers of selected and lottery‐admitted students who dropped out and repeated blocks and OSCEs in the years under study. These average numbers were then extrapolated to a complete selection‐admitted cohort and referenced against the outcomes of an entire cohort of lottery‐admitted students. This comparison indicated that shifting completely to selection would provide a total benefit of almost €207 000. It is important to note that this is a conservative estimate of the benefits because we did not calculate the costs of providing extra support for underperforming students or additional administrative costs; both are difficult to estimate and data on underperforming students are not available for ethical reasons.

When we compare the costs (~ €139 000) and benefits (~ €207 000) of the selection procedure under study, we can conclude that the selection procedure yields a net benefit of about €68 000 per cohort compared with the lottery. This implies that even a relatively complex and time‐consuming selection procedure can be cost‐beneficial if it has predictive value in terms of performance throughout the Bachelor's programme of medical school (Schreurs et al. ‘Selection into medicine: the predictive validity of an outcome‐based procedure’; unpublished study 2018).

It is important, however, to bear in mind that the extrapolation to a full cohort of 286 students was conducted based on data from a total number of 401 selected and 185 lottery‐admitted students in three cohorts. Our assumption was that the performance of the hypothetical students who would be added to these actual groups to obtain full cohorts of students would be equal to that of the students in the respective actual groups. We do not know if this would be the case. That the sample of selected students is representative of an entire cohort is supported by the fact that dropout remained as low as in our extrapolation in the years during which MUMS proceeded to select the entire cohort (cohorts of 2014 and later). To support our extrapolation further, we determined that there was no significant difference in any of the current outcomes between students who performed in the top 10% and those who performed in the bottom 10% of the selection procedure. This suggests that an entire cohort of selected students would be likely to perform as well as the selected students in the current study. Whether the lottery‐admitted students in the current study are representative of a full cohort of lottery‐admitted students is questionable. However, we could not carry out a retrospective comparison because the Bachelor's curriculum was completely revised in 2011. We looked to see what would happen if the full lottery‐admitted cohort were to consist of the 185 actual lottery‐admitted students used in the current study, supplemented with a hypothetical 50 : 50 mixture of students admitted through selection and lottery, respectively. In this rather conservative hypothesis, the selection procedure would still be cost‐effective (the benefits would still outweigh the costs by more than €42 000). In reality, the true gain is likely to be much closer to the extrapolation based on a full extrapolation of both groups, as students are recruited from a large pool.

Although the current study provides important insights, many questions relating to cost‐effectiveness remain unanswered. These include questions of the effect on cost‐effectiveness of: (i) other perspectives; (ii) different combinations of selection tools, and (iii) different weightings of selection tools. We focused on the medical school perspective. However, the perspectives of other stakeholders, such as students, patients and society, are at least equally important,19 and merit study. For example, from a societal perspective, the biggest gain from selection would be an increase in the quality of future doctors, which also increases cost‐effectiveness. Secondly, different universities use different ways of selecting their students.1 This makes it nearly impossible to conduct a study that would be easily generalisable to other contexts. However, the broad combination of pre‐university GPA with an aptitude test and a tool focused on (inter)personal skills is relatively common.4 It is also important to examine with more granularity which specific features of a selection procedure make it cost‐effective.13 What do the different tools cost, and what is their contribution to the predictive value of the procedure as a whole? This may help to create a selection procedure that is as ‘lean’ as it possibly can be. Lastly, cost‐effectiveness analyses can be used as outcomes to optimise the weighting of different tools or assessed features within the selection procedure. Weighting of different tools and features has been previously proposed as a new field of research,2, 8, 10, 22 but may be well combined with the more granular understanding of costs and effectiveness within selection. Finding an optimal weighting of the tools and content within the selection procedure may result in better prediction and, in turn, a more cost‐efficient selection procedure.

Like all research, the current study has limitations. Firstly, we conducted the study in a single medical school. As we have noted, medical school selection processes vary and so it is difficult to compare across contexts. Secondly, we focused on the Bachelor's programme of medical school only and limited the analysis to outcomes that were predicted to have high monetary impact.18 We may have underestimated the benefits of the selection procedure given that dropout at any point in the Bachelor's programme also affects income in the Master's programme. The follow‐up of our three cohorts as they progress through the later part of their medical degree is underway. Lastly, not all points on the CHEERS checklist were relevant to the current study (e.g. health outcomes and discount rates).15, 16 A strength of the current study is its use of three year groups, which controls for possible cohort effects.

We responded to Patterson et al.'s conclusion1 that very little research has explored the relative cost‐effectiveness of medical selection methods by carrying out a cost–benefit comparison of a tailor‐made medical school selection procedure with a lottery system. The knowledge to be derived from this kind of cost–benefit analysis can help relevant stakeholders determine the optimal use of resources when planning selection, and can help to inform decision making about resource allocation. Furthermore, in contexts in which there is debate as to whether anything more than GPA or a lottery is needed at all in medical selection, our study provides important evidence.

Contributors

SS, MGAoE, JC and KC contributed to the conception and design of the study. SS and KC collected the data. SS undertook the analysis under the supervision of AMMM, MGAoE and KC, and prepared a preliminary draft of the paper. All authors contributed to the critical revision of the paper and approved the final manuscript for submission. All authors have agreed to be accountable for the accuracy and integrity of the work.

Funding

None.

Conflicts of interest

None.

Ethical approval

This study was approved by the Ethical Review Board of the Netherlands Association for Medical Education (NVMO; file no. 332). Students were assured of confidentiality and provided signed informed consent.

Acknowledgements

The authors would like to thank Marielle Heckmann, Educational Institute, and Raymond Bastin, Department of Finance, both at Maastricht University (Maastricht, Limburg, the Netherlands), for their help in relation to the financial data.

References

- 1. Patterson F, Knight A, Dowell J, Nicholson S, Cousans F, Cleland J. How effective are selection methods in medical education? A systematic review. Med Educ 2016;50 (1):36–60.

- 2. Hecker K, Norman G. Have admissions committees considered all the evidence? Adv Health Sci Educ 2017;22 (2):573–6.

- 3. Maloney S, Cook D, Foo J, Rivers G, Golub R, Tolsgaard M, Cleland J, Abdalla M, Evans D, Walsh K. AMEE Guide no. *: An Educational Decision‐Makers Guide to Evaluating and Applying Studies of Educational Costs. Med Teach, in press.

- 4. Cleland J, Dowell J, McLachlan J, Nicholson S, Patterson F. Identifying best practice in the selection of medical students (literature review and interview survey). 2012. https://www.sgptg.org/app/download/7964849/Identifying_best_practice_in_the_selection_of_medical_students.pdf_51119804.pdf. [Accessed 02 July 2015.]

- 5. Hissbach JC, Sehner S, Harendza S, Hampe W. Cutting costs of multiple mini‐interviews – changes in reliability and efficiency of the Hamburg Medical School admission test between two applications. BMC Med Educ 2014;14 (1):54–63.

- 6. ten Cate TJ, Hendrix HL, de Fockert Koefoed KJJ, Rietveld WJ. Studieresultaten van toegelatenen binnen en buiten de loting. Tijdschr Med Onderwijs 2002;21 (6):253–60.

- 7. Dore KL, Kreuger S, Ladhani M et al. The reliability and acceptability of the multiple mini‐interview as a selection instrument for postgraduate admissions. Acad Med 2010;85 (10 Suppl):S60–3.

- 8. Patterson F, Cleland J, Cousans F. Selection methods in healthcare professions: where are we now and where next? Adv Health Sci Educ 2017;22 (2):229–42.

- 9. Maloney S. When I say…cost and value. Med Educ 2017;51 (3):246–7.

- 10. Stegers‐Jager KM. Lessons learned from 15 years of non‐grades‐based selection for medical school. Med Educ 2018;52 (1):86–95.

- 11. Schripsema NR, van Trigt AM, Borleffs JCC, Cohen‐Schotanus J. Selection and study performance: comparing three admission processes within one medical school. Med Educ 2014;48 (12):1201–10.

- 12. Schripsema NR, van Trigt AM, Lucieer SM, Wouters A, Croiset G, Themmen APN, Borleffs JCC, Cohen‐Schotanus J. Participation and selection effects of a voluntary selection process. Adv Health Sci Educ 2017;22 (2):463–76.

- 13. Foo J, Ilic D, Rivers G, Evans DJR, Walsh K, Haines TP, Paynter S, Morgan P, Maloney S. Using cost‐analyses to inform health professions education – the economic cost of pre‐clinical failure. Med Teach 2017; 10.1080/0142159x.2017.1410123 [Epub ahead of print.]

- 14. Nestel D, Brazil V, Hay M. You can't put a value on that…Or can you? Economic evaluation in simulation‐based medical education. Med Educ 2018;52 (2):139–41. [Accessed 30 January 2018.]

- 15. Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, Augustovski F, Briggs AH, Mauskopf J, Loder E. Consolidated health economic evaluation reporting standards (CHEERS) statement. BMJ 2013;346:f1049.

- 16. Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, Augustovski F, Briggs AH, Mauskopf J, Loder E. Consolidated Health Economic Evaluation Reporting Standards (CHEERS) – explanation and elaboration: a report of the ISPOR Health Economic Evaluation Publication Guidelines Good Reporting Practices Task Force. Value Health 2013;16 (2):231–50.

- 17. Schreurs S, Cleutjens KB, Muijtjens AMM, Cleland J, Oude Egbrink MGA. Selection into medicine: the predictive validity of an outcome‐based procedure. BMC Med Educ 2018;18 (1):214.

- 18. Frank JR. The CanMEDS 2005 physician competency framework: better standards, better physicians, better care. 2005. http://www.ub.edu/medicina_unitateducaciomedica/documentos/CanMeds.pdf. [Accessed 25 August 2015.]

- 19. Foo J, Rivers G, Ilic D et al. The economic cost of failure in clinical education: a multi‐perspective analysis. Med Educ 2017;51 (7):740–54.

- 20. Brown C, Ross S, Cleland J, Walsh K. Money makes the (medical assessment) world go round: the cost of components of a summative final year objective structured clinical examination (OSCE). Med Teach 2015;37 (7):653–9.

- 21. Maloney S, Haines T. Issues of cost–benefit and cost‐effectiveness for simulation in health professions education. Adv Simul 2016;1 (1):13–8.

- 22. Kreiter CD. A research agenda for establishing the validity of non‐academic assessments of medical school applicants. Adv Health Sci Educ 2016;21 (5):1081–5.