Abstract

Population heterogeneity is frequently observed among patients' treatment responses in clinical trials because of various factors such as clinical background and environmental and genetic factors. Different subpopulations defined by these baseline factors can differ in the benefit or safety profile of a therapeutic intervention. Ignoring heterogeneity between subpopulations can have a substantial impact on medical practice. One approach to addressing heterogeneity is to design and analyze clinical trials with subpopulation selection, and several types of designs have been proposed for different circumstances. In this work, we discuss a class of designs that allow selection of a predefined subgroup. Using selection based on the maximum test statistic as the worst‐case scenario, we then investigate the precision and accuracy of the maximum likelihood estimator at the end of the study via simulations. We find that the required sample size is chiefly determined by the subgroup prevalence and show in simulations that the maximum likelihood estimator for these designs can be substantially biased.

Keywords: bias, enrichment design, maximum likelihood estimator, prevalence, subgroup analysis, subpopulation selection

1. INTRODUCTION

Heterogeneity is frequently observed among patients' treatment responses in clinical trials. This is due to various factors such as age, race, disease severity, or genetic differences. The topic of heterogeneity in treatment effects has received some attention in the literature (eg, see related works1, 2, 3), and graphical methods such as forest plots are routinely used to examine heterogeneity in effects (eg, the work of Cuzick4). Ignoring heterogeneity can have a substantial impact on medical practice. For example, a treatment might work well in some patients but not in others. Naively estimating the treatment effect across all patients will then result in a diluted effect for the group that truly benefits from the treatment. At the same time, an ethical issue arises from delivering a treatment to all patients when some of them cannot expect any benefit and will potentially be exposed to harmful side effects. To address these issues, trials that consider (potential) subgroups defined by one or more biomarkers are becoming more popular. In general, a biomarker is a measurable variable that might help to identify distinct groups of patients; examples include cholesterol levels, genetic variations, and age. A biomarker is considered prognostic if it provides information about the value of some other variable of interest (eg, the primary endpoint of a study), whereas it is called predictive if its value yields information about the treatment effect. In this paper, we only consider the latter type of biomarker.

A number of different designs concerning treatment selection and subgroups within the study population have been proposed. These designs can be categorized by factors such as the design setting (confirmatory or exploratory) or the methodology (frequentist, Bayesian, or utility/decision function) (see related works5, 6, 7). Additionally, the designs can be categorized into single‐stage (fixed sample) designs and multistage (adaptive) designs. Both conventionally use multiple testing procedures to test for effects in each of the populations of interest. An overview of different multiple testing approaches for this purpose is given in the work of Alosh et al6 and the references therein. A single‐stage design with one biomarker tests, for example, two null hypotheses: $H_{0F}$, that the treatment effect in the full population is zero, and $H_{0S}$, that the treatment effect in the subgroup of interest is zero.5, 8, 9, 10, 11, 12 These designs are usually employed for exploratory subgroup analysis in phase II (ie, to identify an interesting subgroup) or for confirmatory subgroup analysis in phase III, examining the treatment benefit in prespecified subgroups. Corresponding multistage designs are constructed either as extensions of group sequential approaches13 or using combination tests.14 At the interim analysis, they can restrict the population to either the whole population or one or more subgroups, and they can allow early stopping for benefit and for lack of benefit (see, eg, other works5, 15, 16, 17, 18).

The accuracy and precision of the treatment effect estimators in subgroup analysis are also crucial for the development of novel treatments and for decisions about treatment implementation. In particular, bias is ubiquitous in designs that involve selection (see the work of Bauer et al19); in the designs considered here, bias can arise from selecting which (sub)population should be studied further, or from selectively reporting promising results even in a simple fixed sample design. A variety of papers on treatment effect estimation in the related problem of trials with treatment selection have been published. Approximate bias‐corrected estimators for single‐stage designs with normal endpoints are discussed in the works of Shen20 and Stallard et al,21 uniformly minimum variance conditional unbiased estimators for two‐stage designs have been proposed by Cohen and Sackrowitz,22 and further extensions are published in the works of Bowden and Glimm23 and Sill and Sampson.24 Shrinkage estimators have been discussed in the work of Carreras and Brannath,25 whereas approaches to construct confidence intervals are described in related works.26, 27, 28 Time‐to‐event endpoints are considered in the work of Brückner et al.29

In contrast, rather limited literature addresses estimation issues in clinical trials with subpopulation selection. For single‐stage designs, Rosenkranz30 proposed a bias‐adjustment method that employs bootstrap techniques to calibrate the estimates under general distributional assumptions on the outcomes. For multistage designs, Kimani et al31 proposed two estimators: a naive estimator using a weighted average of the per‐stage means and prevalences of each subgroup, and a uniformly minimum variance conditional unbiased estimator derived via the Rao‐Blackwell theorem. They assessed the performance of the estimators in several situations, such as different prevalences and treatment effects of one subpopulation, and also suggested which estimator should be used depending on which population is selected at Stage 1. In addition, Magnusson and Turnbull16 focused on the designs rather than on estimation, though they outlined an extended bias‐reduction algorithm proposed by Wang and Leung,32 which uses double bootstrap methods33 to adjust ML estimates and build bootstrap confidence intervals.

Despite some contributions on estimation, the aforementioned papers do not provide a complete overview of the maximum likelihood estimator (MLE) under various designs and do not explore the estimator's performance under further conditions. Rosenkranz's30 simulation work on single‐stage designs considered the MLE only implicitly, and only for a few different subgroup treatment effects and selection‐rule thresholds. Kimani et al31 considered two‐stage adaptive seamless designs that select a subpopulation based on the Stage 1 data but do not allow early stopping, and they only assessed estimators under selection, not under the reporting of promising results. The multistage designs of Magnusson and Turnbull allow the selection of multiple subpopulations if the estimated treatment effects exceed certain thresholds at Stage 1.

In this paper, we discuss a framework for single‐stage and multistage designs that select subgroups. We illustrate the design properties when selection is based on the maximum statistic and comprehensively evaluate the properties of the MLE for these designs. Note that selecting on the basis of the maximum statistic is the worst case for both the type I error (provided that the number of hypotheses remains the same) and the bias, and hence it is of particular interest. In Section 2, we derive a subgroup selection design that selects groups based on the maximum test statistic. Section 3 describes a simulation study in which different general design scenarios are evaluated and the bias and MSE of the corresponding MLEs are estimated. In Section 4, we remark on designs with different selection rules, summarize the results of the simulation study, and discuss their implications for future work.

2. DESIGNS

In this section, we first define the basic setting and notation and then provide general ideas for designs with subpopulation selection based on the maximum test statistic.

2.1. Basic setting and notation

Assume that the full study population (F) consists of J mutually disjoint subpopulations and denote the prevalence of the jth subpopulation ($S_j$) by $\lambda_j$, where $j = 1,\dots,J$ and $\sum_{j=1}^{J}\lambda_j = 1$. The sample size of each subgroup is fixed as a proportion of the total sample size determined by the respective prevalence. We use $n_j$ to denote the sample size in subgroup $S_j$ and, more generally, use subscripts to denote groups and treatments and superscripts to denote stages. We consider a normally distributed endpoint with mean $\mu_{j,l}$, where $j = 1,\dots,J$ and $l \in \{T, C\}$, with subscript T corresponding to the treatment group and C to the control group. Additionally, we assume a common variance $\sigma^2$ across subpopulations.

2.1.1. Single‐stage design

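For a single‐stage design, the test statistics used for selection and decision are distributed as

$$Z_j^{(1)} = \left(\bar{X}_{j,T}^{(1)} - \bar{X}_{j,C}^{(1)}\right)\sqrt{I_j^{(1)}} \sim N\!\left(\theta_j\sqrt{I_j^{(1)}},\ 1\right), \qquad j = 1,\dots,J.$$

Note that we use the (strictly unnecessary) superscript (1) for consistency with the multistage notation used later. $\bar{X}_{j,T}^{(1)}$ and $\bar{X}_{j,C}^{(1)}$ are the sample means of the treatment group and of the control group within $S_j$, respectively. The true treatment difference in $S_j$ is denoted as $\theta_j = \mu_{j,T} - \mu_{j,C}$, and $I_j^{(1)} = \left(\sigma^2/n_{j,T}^{(1)} + \sigma^2/n_{j,C}^{(1)}\right)^{-1}$ is the information level for $S_j$. This further simplifies to $I_j^{(1)} = n_j^{(1)}/(4\sigma^2)$ when the assumed treatment allocation ratio is 1:1, where $n_j^{(1)}$ is the total sample size of $S_j$ until the end of Stage 1.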
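As a minimal sketch of the single‐stage statistic, assuming a common known σ and taking the information level to be the inverse variance of the mean difference, $I = (\sigma^2/n_T + \sigma^2/n_C)^{-1}$ (which equals $n/(4\sigma^2)$ under 1:1 allocation), the following code computes $Z_j^{(1)}$ from raw data for one subgroup. The data, sample sizes, and helper names (`information`, `z_statistic`) are hypothetical.

```python
import math
import random
from statistics import fmean


def information(n_t: int, n_c: int, sigma: float) -> float:
    """Information for the difference of two normal means with common variance sigma^2."""
    return 1.0 / (sigma**2 / n_t + sigma**2 / n_c)


def z_statistic(x_t: list[float], x_c: list[float], sigma: float) -> float:
    """Mean difference (treatment minus control) scaled by the square root of the information."""
    info = information(len(x_t), len(x_c), sigma)
    return (fmean(x_t) - fmean(x_c)) * math.sqrt(info)


# Hypothetical subgroup S_j with 100 patients allocated 1:1, sigma = 1, theta_j = 0.5.
rng = random.Random(1)
sigma, theta_j = 1.0, 0.5
x_t = [rng.gauss(theta_j, sigma) for _ in range(50)]
x_c = [rng.gauss(0.0, sigma) for _ in range(50)]

print(information(50, 50, sigma))    # equals n_j / (4 * sigma**2) with n_j = 100
print(z_statistic(x_t, x_c, sigma))  # one draw from N(theta_j * sqrt(25), 1)
```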

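Considering a composite population $S_{\mathcal{A}\cup\mathcal{B}}$ that combines two subpopulations $S_{\mathcal{A}}$ and $S_{\mathcal{B}}$ (where $\mathcal{A}, \mathcal{B} \subseteq \{1,2,\dots,J\}$ and $\mathcal{A} \cap \mathcal{B} = \emptyset$), the test statistics are distributed as

$$Z_{\mathcal{A}\cup\mathcal{B}}^{(1)} = \left(\bar{X}_{\mathcal{A}\cup\mathcal{B},T}^{(1)} - \bar{X}_{\mathcal{A}\cup\mathcal{B},C}^{(1)}\right)\sqrt{I_{\mathcal{A}\cup\mathcal{B}}^{(1)}} \sim N\!\left(\theta_{\mathcal{A}\cup\mathcal{B}}\sqrt{I_{\mathcal{A}\cup\mathcal{B}}^{(1)}},\ 1\right),$$

where $\bar{X}_{\mathcal{A}\cup\mathcal{B},T}^{(1)}$ and $\bar{X}_{\mathcal{A}\cup\mathcal{B},C}^{(1)}$ are defined as before, but the observations come from the combined treatment group and the combined control group of the united subpopulation $S_{\mathcal{A}\cup\mathcal{B}}$. The true treatment effect size and the information level of $S_{\mathcal{A}\cup\mathcal{B}}$ are $\theta_{\mathcal{A}\cup\mathcal{B}} = (\lambda_{\mathcal{A}}\theta_{\mathcal{A}} + \lambda_{\mathcal{B}}\theta_{\mathcal{B}})/(\lambda_{\mathcal{A}} + \lambda_{\mathcal{B}})$ and $I_{\mathcal{A}\cup\mathcal{B}}^{(1)} = \left(\sigma^2/n_{\mathcal{A}\cup\mathcal{B},T}^{(1)} + \sigma^2/n_{\mathcal{A}\cup\mathcal{B},C}^{(1)}\right)^{-1}$, respectively. $I_{\mathcal{A}\cup\mathcal{B}}^{(1)}$ is also equal to $n_{\mathcal{A}\cup\mathcal{B}}^{(1)}/(4\sigma^2)$ for equal allocation. Additionally, $\lambda_{\mathcal{A}\cup\mathcal{B}} = \lambda_{\mathcal{A}} + \lambda_{\mathcal{B}}$. Note that, if $\mathcal{A}$ and $\mathcal{B}$ are complementary, their composite population is the full population F, and the subscript of the aforementioned notation is then replaced with f. If $\mathcal{A}$ and $\mathcal{B}$ each contain a single element, such as {1} and {2}, we simplify the notation $\mathcal{A}\cup\mathcal{B}$ to 1 + 2. This notation simply denotes the union of $S_{\mathcal{A}}$ and $S_{\mathcal{B}}$; it does not necessarily imply that one is nested in the other.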
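The composite quantities can be checked numerically. The sketch below assumes 1:1 allocation within every subgroup and subgroup sample sizes proportional to prevalence. Under these assumptions, pooling the arms of two disjoint subgroups gives an information level equal to the sum of the subgroup information levels and an expected effect equal to the prevalence‐weighted average of the subgroup effects. It also gives a correlation of $\sqrt{I_{\mathcal{A}}/I_{\mathcal{A}\cup\mathcal{B}}}$ between the statistic of a subgroup and that of a population containing it. The function names and the numerical configuration are illustrative only.

```python
import math


def pooled_effect(effects: list[float], prevalences: list[float]) -> float:
    """Expected effect of a union of disjoint subgroups: prevalence-weighted mean."""
    return sum(l * t for l, t in zip(prevalences, effects)) / sum(prevalences)


def pooled_information(sizes: list[int], sigma: float) -> float:
    """Information of the pooled comparison under 1:1 allocation, n / (4 sigma^2)."""
    return sum(sizes) / (4 * sigma**2)


def nested_correlation(info_sub: float, info_union: float) -> float:
    """corr(Z_sub, Z_union) when the subgroup is contained in the union."""
    return math.sqrt(info_sub / info_union)


# Hypothetical example: lambda_1 = 0.3, lambda_2 = 0.7, n_f = 200, sigma = 1,
# theta_1 = 0.5 and theta_2 = 0, so that S_{1+2} is the full population F.
lam = [0.3, 0.7]
sizes = [round(200 * l) for l in lam]        # 60 and 140 patients
theta_f = pooled_effect([0.5, 0.0], lam)     # prevalence-weighted effect in F
info_1 = pooled_information(sizes[:1], 1.0)  # information in S_1
info_f = pooled_information(sizes, 1.0)      # information in F
rho = nested_correlation(info_1, info_f)     # equals sqrt(lambda_1)
print(theta_f, info_1, info_f, rho)
```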

2.1.2. Multistage design

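For multistage designs, the test statistic for $S_{\mathcal{A}}$ based on the accumulated data at the end of stage k ($k \le K$, where K is the total number of stages) is denoted by

$$Z_{\mathcal{A}}^{(1:k)} = \left(\bar{X}_{\mathcal{A},T}^{(1:k)} - \bar{X}_{\mathcal{A},C}^{(1:k)}\right)\sqrt{I_{\mathcal{A}}^{(1:k)}} \sim N\!\left(\theta_{\mathcal{A}}\sqrt{I_{\mathcal{A}}^{(1:k)}},\ 1\right),$$

where the superscript 1:k refers to a quantity calculated from the accumulated data at the end of stage k; therefore, $I_{\mathcal{A}}^{(1:k)}$ is the accumulated information level, defined accordingly as $\left(\sigma^2/n_{\mathcal{A},T}^{(1:k)} + \sigma^2/n_{\mathcal{A},C}^{(1:k)}\right)^{-1}$.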

2.2. Designs considered

We consider designs that control the family‐wise error rate (FWER) at level α in the strong sense34 for the set of hypotheses to be tested

$$\left\{H_{0w}: \theta_w = 0,\ w \in \mathcal{W}\right\},$$

where $\mathcal{W}$ is the index set corresponding to the subpopulations considered and can index nested groups. For instance, if subgroup 1, subgroups 1 and 2 combined, and the full population are of interest, $\mathcal{W} = \{1, 1+2, f\}$.

2.2.1. Single‐stage designs

For population selection, we use the maximum of the test statistics $Z_w^{(1)}$, $w \in \mathcal{W}$; the implications of this choice and other selection rules are discussed later. In the evaluation of the operating characteristics, we consider the case where population selection is undertaken first and only subsequently is the corresponding hypothesis tested. The testing procedure rejects $H_{0w}$ if $W = w$ and $Z_W^{(1)} > C_\alpha$, where w is a realized value of the random variable W and $\{W = w\}$ refers to the event that subpopulation $S_w$ is chosen. $Z_W^{(1)}$ is the selected test statistic, and $C_\alpha$ is the corresponding critical value, chosen to ensure control of the FWER in the strong sense.

The key ingredient for finding the appropriate critical value and sample size is the joint density of the selected test statistic $Z_W^{(1)}$ and the selected population index W. While the subsequent results are derived for selection based on the maximum statistic, other selection rules can equally be implemented. A different rule results in a different density; for illustration purposes, we also provide in Supplementary Materials S.6 the resulting distribution for selecting any population whose estimated effect exceeds a prespecified value δ. The joint densities $f(z_w^{(1)}, W = w; \Theta)$, $w \in \mathcal{W}$, govern the probability of selecting $S_w$ and rejecting the null hypothesis $H_{0w}$ (where Θ is a configuration of all mutually disjoint subgroup treatment effects $\theta_1, \theta_2, \dots, \theta_J$). Each can further be decomposed as $f(z_w^{(1)}, W = w; \Theta) = f(z_w^{(1)}; \Theta)\, P(W = w \mid Z_w^{(1)} = z_w^{(1)}; \Theta)$. Consequently, the joint densities of $Z_W^{(1)}$ and W can be represented as

$$f\!\left(z_w^{(1)}, W = w; \Theta\right) = \varphi\!\left(z_w^{(1)} - \theta_w\sqrt{I_w^{(1)}}\right)\,\Phi_{|\mathcal{W}|-1}\!\left(z_w^{(1)}, \dots, z_w^{(1)} \,\middle|\, z_w^{(1)}; \Theta\right), \qquad (1)$$

where φ denotes the standard normal density and $\Phi_{|\mathcal{W}|-1}(\cdot \mid z_w^{(1)}; \Theta)$ is the cumulative distribution function of the $(|\mathcal{W}|-1)$‐dimensional normal distribution of $\{Z_v^{(1)}: v \in \mathcal{W}, v \ne w\}$ conditional on $Z_w^{(1)} = z_w^{(1)}$ under a specified configuration of treatment effects Θ, where $|\mathcal{W}|$ is the cardinality of $\mathcal{W}$. The covariance matrix depends on whether the subgroups are nested or not (see examples in Supplementary Materials S.2 and S.3). This cumulative distribution function specifies $P(Z_v^{(1)} < z_w^{(1)} \text{ for all } v \ne w \mid Z_w^{(1)} = z_w^{(1)}; \Theta)$, that is, the probability that $S_w$ is selected given its test statistic. Note that (1) is similar to the integrand of equation (4) in the work of Spiessens and Debois,9 where two coprimary analyses are performed on the full population and a subgroup, and the significance level for F is prespecified.
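For the simplest case $\mathcal{W} = \{1, f\}$ with two disjoint subgroups, $S_1$ is nested in F, the two statistics are bivariate normal with correlation $\rho = \sqrt{\lambda_1}$ (assuming 1:1 allocation and sample sizes proportional to prevalence), and the conditional distribution function in (1) reduces to a univariate normal probability. The sketch below evaluates (1) under these assumptions. It then checks numerically that the density for W = 1 integrates to $P(Z_1^{(1)} > Z_f^{(1)})$ and that the two selection probabilities sum to 1. The configuration and helper names are hypothetical, and Simpson's rule is used only as a convenient quadrature.

```python
import math
from statistics import NormalDist

STD = NormalDist()


def joint_density(z: float, m_sel: float, m_other: float, rho: float) -> float:
    """Equation (1) for two statistics: density of the selected statistic at z
    times P(other statistic < z | selected statistic = z)."""
    cond_mean = m_other + rho * (z - m_sel)
    cond_sd = math.sqrt(1.0 - rho**2)
    return STD.pdf(z - m_sel) * STD.cdf((z - cond_mean) / cond_sd)


def simpson(f, a: float, b: float, n: int = 2000) -> float:
    """Composite Simpson rule on [a, b] with an even number n of intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


# Hypothetical configuration: lambda_1 = 0.4, n_f = 200, sigma = 1, theta_1 = 0.5, theta_2 = 0.
lam1, n_f, sigma, theta1 = 0.4, 200, 1.0, 0.5
info_f = n_f / (4 * sigma**2)
m1 = theta1 * math.sqrt(lam1 * info_f)  # mean of Z_1
mf = lam1 * theta1 * math.sqrt(info_f)  # mean of Z_f, since theta_f = lam1 * theta1
rho = math.sqrt(lam1)                   # corr(Z_1, Z_f) for nested populations

p_sel_1 = simpson(lambda z: joint_density(z, m1, mf, rho), -10.0, 15.0)
p_sel_f = simpson(lambda z: joint_density(z, mf, m1, rho), -10.0, 15.0)
print(p_sel_1, p_sel_f)
```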

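Using an iterative search, $C_\alpha$ can then be found from the following inequality:

$$\sum_{w \in \mathcal{W}} \int_{C_\alpha}^{\infty} f\!\left(z_w^{(1)}, W = w; \Theta_0\right) dz_w^{(1)} \le \alpha, \qquad (2)$$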

where $\Theta = \Theta_0$ denotes the global null hypothesis $H_0$: $\theta_1 = \theta_2 = \dots = \theta_J = 0$. Note that finding the critical value under this setting implies weak control of the FWER. Following the work of Magirr et al,35 however, it can be shown that weak control implies strong control, since $\theta_1 = \theta_2 = \dots = \theta_J = 0$ maximizes the type I error when selection is based on the maximum. Similarly, assume that the alternative hypothesis is true under which exactly one subgroup (say $S_w$, $w \in \mathcal{W}$) has a positive effect size δ and all others have none. The required total sample size for the full population, $n_f$, can then be found using the aforementioned critical value, the desired effect, and a specified power level $1 - \beta$. The related equation is

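$$\int_{C_\alpha}^{\infty} f\!\left(z_w^{(1)}, W = w; \Theta_a\right) dz_w^{(1)} \ge 1 - \beta, \qquad (3)$$

where $\Theta_a$ denotes the alternative hypothesis, a vector of size J whose elements are all 0 except for the wth element, which is δ. The required $n_f$ is obtained by iteratively increasing the sample size until Equation (3) holds.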
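Continuing the two‐population case $\mathcal{W} = \{1, f\}$ under the same assumptions (nested populations, $\rho = \sqrt{\lambda_1}$, 1:1 allocation, sample sizes proportional to prevalence, σ = 1), the sketch below solves (2) for $C_\alpha$ by bisection. It then increases $n_f$ in steps of two until (3) holds for $\Theta_a = (\delta, 0)$. It is a numerical illustration under these assumptions, not the authors' implementation, and the function names are hypothetical.

```python
import math
from statistics import NormalDist

STD = NormalDist()


def joint_density(z, m_sel, m_other, rho):
    """Equation (1) for two statistics selected by the maximum."""
    cond_mean = m_other + rho * (z - m_sel)
    return STD.pdf(z - m_sel) * STD.cdf((z - cond_mean) / math.sqrt(1.0 - rho**2))


def tail_integral(c, m_sel, m_other, rho, n=1000):
    """P(W = w and Z_w > c): Simpson's rule for the integral of (1) from c upward."""
    upper = max(c, m_sel) + 10.0
    h = (upper - c) / n
    f = lambda z: joint_density(z, m_sel, m_other, rho)
    total = f(c) + f(upper) + sum((4 if i % 2 else 2) * f(c + i * h) for i in range(1, n))
    return total * h / 3


def means(lam1, n_f, theta1, theta2, sigma=1.0):
    """Expected values of Z_1 and Z_f for effects theta1 in S_1 and theta2 in S_2."""
    info_f = n_f / (4 * sigma**2)
    theta_f = lam1 * theta1 + (1 - lam1) * theta2
    return theta1 * math.sqrt(lam1 * info_f), theta_f * math.sqrt(info_f)


def critical_value(lam1, alpha=0.025):
    """Solve equation (2) under the global null by bisection (0 < lam1 < 1)."""
    rho = math.sqrt(lam1)
    # Under Theta_0 both terms of the sum in (2) are identical.
    fwer = lambda c: 2 * tail_integral(c, 0.0, 0.0, rho)
    lo, hi = 0.0, 5.0
    for _ in range(50):
        mid = (lo + hi) / 2
        lo, hi = (mid, hi) if fwer(mid) > alpha else (lo, mid)
    return hi


def sample_size(lam1, delta, alpha=0.025, power=0.80, sigma=1.0):
    """Smallest even n_f for which equation (3) holds when theta = (delta, 0)."""
    c = critical_value(lam1, alpha)
    rho = math.sqrt(lam1)
    n_f = 2
    while True:
        m1, mf = means(lam1, n_f, delta, 0.0, sigma)
        if tail_integral(c, m1, mf, rho) >= power:
            return n_f, c
        n_f += 2


n_f, c_alpha = sample_size(lam1=0.5, delta=0.5)
print(round(c_alpha, 4), n_f)
```

Because selection is based on the maximum of two positively correlated statistics, $C_\alpha$ should lie between the unadjusted one‐sided critical value and the Bonferroni critical value.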

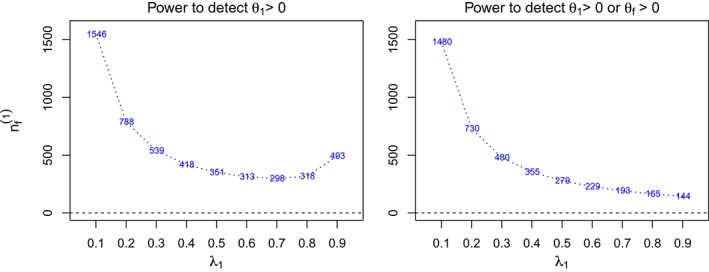

Note that only rejection of the hypothesis with the truly largest effect is considered in this power requirement. Similar considerations can be used to find the power to reject any false null hypothesis (see Figure 1 for an example).

Figure 1.

The total sample sizes of the full population F ($n_f$) across prevalences of $S_1$ ($\lambda_1$) for two different definitions of power. The design is a single‐stage design with two subpopulations, where the treatment effects $\theta_1$ and $\theta_2$ for $S_1$ and $S_2$ are 0.5 and 0, respectively. The type I error rate and power are specified at 0.025 and 80%, respectively [Colour figure can be viewed at http://wileyonlinelibrary.com]

We have derived the formula in this form for consistency with the multistage designs considered in the following, in which only the selected subgroup continues to subsequent stages.

The derivations of (2) and (3) are provided in Supplementary Materials S.1, and more specific example solutions for the single‐stage design with two and three subgroups are given in Supplementary Materials S.2 and S.3, where the index set of selectable populations is $\mathcal{W} = \{1, f\}$ and $\mathcal{W} = \{1, 1+2, f\}$, respectively.

2.2.2. Multistage designs

The multistage designs we consider follow procedures similar to those of the aforementioned single‐stage designs. Population selection is performed at the first interim analysis, and any population in $\mathcal{W}$ can be selected. We consider the case where the data after Stage 1 are enriched, so that the total sample size in the trial remains fixed but the sample size of subgroups that have not been selected is reallocated to the remaining populations. Suppose the selected population is $S_w$. The difference is that, at stage k, the testing procedure stops by rejecting $H_{0w}$ if $Z_w^{(1:k)} > u_k$, stops and retains $H_{0w}$ if $Z_w^{(1:k)} < l_k$, or continues to stage k + 1 if $l_k \le Z_w^{(1:k)} \le u_k$, where $u_k$ and $l_k$ are the corresponding upper and lower stopping boundaries at stage k.

Two elements are required to determine appropriate stopping boundaries and stage‐wise sample sizes. The first is the joint density of $Z_W^{(1)}$ and W, as shown in (1). The second element is the density of the conditional distribution of the test statistic $Z_w^{(1:k)}$ (based on the data accumulated until stage k) given its precursor $Z_w^{(1:k-1)}$ at stage k − 1. We denote this conditional density by $f\!\left(z_w^{(1:k)} \mid z_w^{(1:k-1)}; \Theta\right)$; its general mathematical form is given in Supplementary Materials S.4.
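Supplementary Materials S.4 give the general form of this conditional density. As an illustration only, the sketch below assumes the canonical joint distribution of group sequential statistics (independent normal increments of the score $Z\sqrt{I}$). Under that assumption, $Z_w^{(1:k)}$ given $Z_w^{(1:k-1)} = z$ is normal with mean $\{z\sqrt{I^{(1:k-1)}} + \theta_w(I^{(1:k)} - I^{(1:k-1)})\}/\sqrt{I^{(1:k)}}$ and variance $(I^{(1:k)} - I^{(1:k-1)})/I^{(1:k)}$, with the information levels referring to the selected population $S_w$. The helper names and numerical inputs are hypothetical, and a short Monte Carlo run checks the conditional mean.

```python
import math
import random
from statistics import NormalDist, fmean


def conditional_params(z_prev: float, info_prev: float, info_curr: float, theta: float):
    """Mean and standard deviation of Z^(1:k) given Z^(1:k-1) = z_prev,
    assuming independent normal increments (canonical joint distribution)."""
    delta_info = info_curr - info_prev
    mean = (z_prev * math.sqrt(info_prev) + theta * delta_info) / math.sqrt(info_curr)
    sd = math.sqrt(delta_info / info_curr)
    return mean, sd


def conditional_density(z_curr, z_prev, info_prev, info_curr, theta):
    """f(z^(1:k) | z^(1:k-1); theta) under the same assumption."""
    mean, sd = conditional_params(z_prev, info_prev, info_curr, theta)
    return NormalDist(mean, sd).pdf(z_curr)


# Monte Carlo check: add a simulated stage-2 score increment to a fixed stage-1 value.
rng = random.Random(3)
info1, info2, theta, z1 = 20.0, 50.0, 0.3, 1.5
draws = [
    (z1 * math.sqrt(info1) + rng.gauss(theta * (info2 - info1), math.sqrt(info2 - info1)))
    / math.sqrt(info2)
    for _ in range(200_000)
]
print(conditional_params(z1, info1, info2, theta), fmean(draws))
```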

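The stage‐wise density comprising these two elements can then be used to determine the probability of stopping for efficacy or for futility at stage k. For example, the stage‐wise densities at Stage 2 for the different values of W are specified as

$$f\!\left(z_w^{(1)}, W = w; \Theta\right) f\!\left(z_w^{(1:2)} \mid z_w^{(1)}; \Theta\right), \qquad w \in \mathcal{W}. \qquad (4)$$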

Then, given $\Theta = \Theta_0$ (ie, under the global null hypothesis), the probability of early stopping at Stage 2 for the subgroup $S_w$, here for efficacy, can be calculated as

$$\int_{l_1}^{u_1} \int_{u_2}^{\infty} f\!\left(z_w^{(1)}, W = w; \Theta_0\right) f\!\left(z_w^{(1:2)} \mid z_w^{(1)}; \Theta_0\right) dz_w^{(1:2)}\, dz_w^{(1)},$$

where the integral bounds signify that the design continues after Stage 1 but stops at Stage 2 for efficacy; the probability of stopping for lack of effect is obtained analogously with the inner integral taken over $(-\infty, l_2)$. The conditional density is used to calculate the stopping probability at Stage 2, given that the design does not stop at the preceding stage. Similarly, the stage‐wise density at stage k is the product of the expression in (1) and the factors $f(z_w^{(1:i)} \mid z_w^{(1:i-1)}; \Theta)$, $i = 2, \dots, k$. The value of the k‐fold multiple integral over the region defined by the stopping boundaries up to stage k is the early stopping probability at stage k. Each conditional density, with its respective integral bounds, controls the probability of whether the design stops or continues, given that the design has proceeded past the previous stage.

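To find boundaries that ensure FWER control, an iterative search over the stopping boundaries is conducted based on the following inequality:

$$\sum_{w \in \mathcal{W}} \sum_{k=1}^{K} \int_{A_k} f\!\left(z_w^{(1)}, W = w; \Theta_0\right) \prod_{i=2}^{k} f\!\left(z_w^{(1:i)} \mid z_w^{(1:i-1)}; \Theta_0\right) dz_w^{(1:k)} \cdots dz_w^{(1)} \le \alpha, \qquad (5)$$

where the empty product for k = 1 equals 1 and the integration region is

$$A_k = [l_1, u_1] \times \cdots \times [l_{k-1}, u_{k-1}] \times [u_k, \infty), \qquad A_1 = [u_1, \infty),$$

and $\Theta_0$ denotes the global null hypothesis. We define $l_K = u_K$, and therefore the procedure stops at stage K at the latest. Note that this yields only one inequality, whereas $u_1, \dots, u_K$ and $l_1, \dots, l_{K-1}$ are all unknown. To overcome this, we require the boundaries to follow a specific functional form for the K‐stage design. For example, when using O'Brien‐Fleming (OBF)13, 36 type stopping boundaries, $u_k = C\sqrt{K/k}$, that is, $u_k$ is a certain function of k and only the constant C has to be searched for. In addition, the calculations in (5) assume that the futility bounds are binding. For nonbinding bounds, one can simply set the lower bounds to −∞.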

As before, (5) implies weak control of the FWER but also guarantees strong control following the arguments in the work of Magirr et al.35
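Equation (5) can be cross‐checked by simulation. The sketch below is a Monte Carlo approximation, not the numerical integration described above, for a two‐stage design with $\mathcal{W} = \{1, f\}$ and selection by the maximum at the interim analysis. It assumes OBF‐type upper bounds $u_1 = C\sqrt{2}$ and $u_2 = C$, an optional binding lower bound at Stage 1, and equal total sample sizes per stage, with the Stage 2 patients recruited only from the selected population. Under these assumptions, the Stage 1 information of $S_1$ is λ1 times that of F, and Stage 2 adds an amount equal to the Stage 1 information of F. The function name and its defaults are hypothetical.

```python
import math
import random


def simulate_fwer(c: float, lam1: float, lower1: float = 0.0,
                  n_sims: int = 200_000, seed: int = 2024) -> float:
    """Monte Carlo FWER under the global null for a two-stage enrichment design
    with populations {S_1, F}, selection by the maximum after stage 1, OBF-type
    upper bounds u_1 = c*sqrt(2), u_2 = c, and a binding futility bound lower1."""
    rng = random.Random(seed)
    u1, u2 = c * math.sqrt(2.0), c
    rho = math.sqrt(lam1)  # corr(Z_1, Z_f) at stage 1
    rejections = 0
    for _ in range(n_sims):
        z1 = rng.gauss(0.0, 1.0)
        zf = rho * z1 + math.sqrt(1.0 - rho**2) * rng.gauss(0.0, 1.0)
        # Stage-1 information measured in units of the stage-1 information of F.
        z_sel, info1 = (z1, lam1) if z1 >= zf else (zf, 1.0)
        if z_sel > u1:        # early rejection of the selected hypothesis
            rejections += 1
            continue
        if z_sel < lower1:    # binding futility stop
            continue
        info2 = info1 + 1.0   # stage 2 adds one unit, all from the selected population
        z_acc = (z_sel * math.sqrt(info1) + rng.gauss(0.0, 1.0)) / math.sqrt(info2)
        if z_acc > u2:
            rejections += 1
    return rejections / n_sims


# lam1 = 1 collapses the design to a single-population two-stage OBF design.
fwer_single = simulate_fwer(1.9774, lam1=1.0, lower1=-math.inf)
fwer_select = simulate_fwer(1.9774, lam1=0.5, lower1=-math.inf)
print(fwer_single, fwer_select)
```

With λ1 = 1, the constant 1.9774 is the usual two‐stage OBF value for one‐sided α = 0.025, so the estimate should be close to 0.025. With λ1 < 1, the same constant gives a larger error rate, which is why C has to be searched for under (5).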

Suppose that an alternative hypothesis of the form $\theta_w = \delta > 0$ for exactly one element w in $\mathcal{W}$, with all other subgroup effects equal to zero, is true. Then, under this alternative hypothesis, the aforementioned critical values, and a specified power, the stage‐wise total sample sizes for the full population, $n_f^{(1:k)}$, can be found to satisfy the following inequality:

$$\sum_{k=1}^{K} \int_{A_k} f\!\left(z_w^{(1)}, W = w; \Theta_a\right) \prod_{i=2}^{k} f\!\left(z_w^{(1:i)} \mid z_w^{(1:i-1)}; \Theta_a\right) dz_w^{(1:k)} \cdots dz_w^{(1)} \ge 1 - \beta, \qquad (6)$$

where the configuration $\Theta_a$ has a positive effect δ as its wth element and zeros for the other J − 1 elements. Detailed derivations of (5) and (6) are provided in Supplementary Materials S.1, and the design details of two‐stage designs with two subgroups (considering selection of $S_1$ or F) are given in Supplementary Materials S.5.

2.2.3. An illustrative example

The Dose Ranging Efficacy And safety with Mepolizumab in severe asthma (DREAM) trial37 investigated, among other endpoints, the effect of mepolizumab on exacerbations and on forced expiratory volume in 1 second (FEV1). Subsequent secondary analyses of the trial data38, 39 find that the treatment effect of mepolizumab depends on the baseline level of eosinophils and suggest that only patients with blood eosinophil levels of more than 150 cells per μL benefit from the treatment.

Suppose that, on the basis of these exploratory findings, we wish to embark on a prospective evaluation of the claim that mepolizumab results in meaningful improvements only for patients with baseline levels of 150 or more eosinophils per μL of blood. We will use the change in FEV1 from baseline to 90 days, modeled as normally distributed, as the primary endpoint, although the same arguments hold for other endpoints such as exacerbations. Additionally, we suppose that the prevalence of each group (below and above 150 cells per μL of blood) is 50%. Following the work of Santanello et al,40 we assume that the standard deviation is 0.72 L, consider a reduction of FEV1 of 0.23 L as the minimum clinically relevant treatment difference, and consequently seek to power our evaluations for this effect.

Three different evaluation strategies are considered: (i) running two separate studies, one in each of the two subgroups, (ii) a single‐stage study with one subgroup versus the full population (see Section 2.2.1), and (iii) a two‐stage enrichment design in which the best‐performing group is selected at the halfway point and O'Brien‐Fleming bounds36 are used for early stopping (see Section 2.2.2). For each of the three designs, we consider a type I error rate per study of 2.5% and require a power of 80% to reject any false null hypothesis. Furthermore, we assume that 25 patients are recruited per month and that it takes two months to conduct the interim analysis for strategy (iii).

A summary of the characteristics of the different strategies is given in Table 1. The strategy using two separate studies requires just over 600 patients to be recruited, whereas the single‐stage design with two groups needs almost 70 more patients. The reason for this is that the first approach makes no attempt to control the FWER. If we were to correct for multiplicity in the separate‐study strategy using a Bonferroni correction, the required sample size would increase to 748 patients. Using a two‐stage selection design allows us to reduce the required sample size even further, to around 550 patients, a reduction of approximately 10% and 26% compared with the uncorrected and the multiplicity‐corrected separate‐study strategies, respectively. Additionally, the two‐stage design studies more patients in the group that truly benefits from treatment, which is one of the reasons for the reduction in the required sample size. Besides the reduction in sample size, running a single study rather than two separate ones also yields organizational advantages. The main drawback of this approach is that, compared with the separate studies, the duration of the study increases by about eight and a half months (36.12 versus 27.64 months) should the subgroup be selected, although a small reduction in duration is expected if the full population is selected at the interim analysis.
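The figures for the separate‐studies strategy can be reproduced with the usual normal‐approximation sample size formula for a one‐sided two‐sample z‐test with 1:1 allocation, rounding up to a whole number of patients per arm. The sketch below assumes this formula and rounding rule. It also takes the study duration to be the recruitment time at 25 patients per month plus 3 months of follow‐up for the 90‐day endpoint. Under these assumptions, it gives 616 patients (748 with a Bonferroni correction), a maximum FWER of $1 - 0.975^2 \approx 0.0494$, and 27.64 months, in line with Table 1. The helper name is hypothetical.

```python
import math
from statistics import NormalDist


def two_arm_total(sigma: float, delta: float, alpha: float, power: float) -> int:
    """Total size of a 1:1 two-arm trial for a one-sided z-test at level alpha,
    rounded up to a whole number of patients per arm."""
    z = NormalDist().inv_cdf
    per_arm = 2 * sigma**2 * (z(1 - alpha) + z(power)) ** 2 / delta**2
    return 2 * math.ceil(per_arm)


sigma, delta, alpha, power = 0.72, 0.23, 0.025, 0.80  # values from the example
rate, follow_up = 25, 3                                # patients/month, months (assumed)

n_separate = 2 * two_arm_total(sigma, delta, alpha, power)        # one study per subgroup
n_bonferroni = 2 * two_arm_total(sigma, delta, alpha / 2, power)  # each study at alpha / 2
max_fwer = 1 - (1 - alpha) ** 2                                  # two independent tests
duration = n_separate / rate + follow_up

print(n_separate, n_bonferroni, max_fwer, duration)
```

The same duration rule applied to N = 684 gives the 30.36 months reported for the single‐stage study.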

Table 1.

Comparison of different evaluation strategies. Max FWER is the maximum family‐wise error rate of the strategy, N is the total sample size, % superior is the percentage of patients studied in the better‐performing subgroup, and duration is the time from recruiting the first patient until the primary endpoint is available for all patients

| Strategy | Max FWER | N | % superior | Duration (months) |
|---|---|---|---|---|
| Separate studies | 0.0494 | 616 | 50% | 27.64 |
| Single‐stage study | 0.0250 | 684 | 50% | 30.36 |
| Two‐stage design | 0.0250 | 552 | 75% | 25.08 or 36.12 |

Note that, in addition to the advantages illustrated earlier, the FWER in the two‐stage enrichment design is controlled for the worst‐case selection rule, and hence other selection rules can be used without inflating the error rate.

2.2.4. Alternative designs

We have illustrated earlier how to obtain critical bounds and sample sizes for general enrichment designs. Here, we discuss alternative designs that consider different type I error and power configurations.

Significance levels and stopping boundaries.

An alternative to specifying the design and the corresponding stage‐wise α levels via the boundaries is to specify a marginal significance level $\alpha_k$ for each stage k (where $\sum_{k=1}^{K}\alpha_k = \alpha$) and use an error spending approach, as in classic group sequential designs.13 This affects the way we find the stopping boundaries, which are then shared by all the populations considered. More specifically, based on inequality (7), the critical values used in $A_{k-1}$ are first searched for under the upper limit $\alpha_{k-1}$ (with the subscript of the upper bounds changed accordingly). These critical values are then substituted for the associated bounds in $A_k$, the remaining critical values are found under the upper limit $\alpha_k$, and so on:

$$\sum_{w \in \mathcal{W}} \int_{A_k} f\!\left(z_w^{(1)}, W = w; \Theta_0\right) \prod_{i=2}^{k} f\!\left(z_w^{(1:i)} \mid z_w^{(1:i-1)}; \Theta_0\right) dz_w^{(1:k)} \cdots dz_w^{(1)} \le \alpha_k, \qquad k = 1, \dots, K. \qquad (7)$$

Note that there are several ways to determine the lower stopping boundaries; for example, one could set them symmetric to the upper critical values, or simply set them to 0.

One can further prespecify the marginal significance levels for specific populations at each stage. An example of this can be found in the work of Spiessens and Debois,9 although they only consider single‐stage designs. Such design features may lead to different stopping boundaries for the populations included in $\mathcal{W}$.

Incidentally, for two‐stage designs, if early stopping is not considered at Stage 1 (that is, the Stage 1 data are only used for population selection), then the first interval of the integration region $A_k$ in Equations (5) and (6) is $(-\infty, \infty)$ for k > 1. Meanwhile, the upper stopping boundary used in $A_1$ is defined as ∞, and therefore the corresponding integral is 0. Such designs are the same as the two‐stage adaptive seamless designs used in the work of Kimani et al.31

Power.

The power of the designs in Section 2.2 is defined as the probability of detecting the treatment effect of the population of interest under $H_a$. Alternatively, we can define power as the probability of detecting any treatment effect within a specified set of subpopulations. This change alters the total sample size for F through its influence on Equation (6), which is the basis for the search for $n_f^{(1:k)}$; the equation becomes

$$\sum_{w \in \mathcal{W}^*} \sum_{k=1}^{K} \int_{A_k} f\!\left(z_w^{(1)}, W = w; \Theta_a\right) \prod_{i=2}^{k} f\!\left(z_w^{(1:i)} \mid z_w^{(1:i-1)}; \Theta_a\right) dz_w^{(1:k)} \cdots dz_w^{(1)} \ge 1 - \beta, \qquad (8)$$

where $\mathcal{W}^* \subseteq \mathcal{W}$ contains the specified subpopulations of interest. For example, with $\mathcal{W} = \{1, f\}$ and $\mathcal{W}^* = \{1, f\}$, Figure 1 shows the resulting total sample sizes $n_f$ in a single‐stage design for different prevalences of $S_1$ under the two definitions of power. The left panel is computed to have power 1 − β for selecting the subpopulation with the largest true effect and rejecting the corresponding null hypothesis, whereas the right panel considers any correct rejection. Under the left power definition, the required sample size is large when the prevalence of the subgroup with a positive treatment effect is small, because the number of patients with that effect is (relatively) small. As the prevalence $\lambda_1$ approaches 1, $n_f$ increases again: the subgroup effect dominates the effect in the full population, so the effect sizes for $S_1$ and F become close, it is difficult to select the correct population, and large sample sizes are needed. In contrast, under the definition of power to detect $\theta_1 > 0$ or $\theta_f > 0$, $n_f$ always decreases with the prevalence, because there is no restriction on which prespecified population is selected and reported as efficacious. This decreasing pattern resembles that obtained with the closed testing procedure41 in a single‐stage design, where the total sample is available for investigating any subpopulation without selection. Note that all the patterns observed in Figure 1 also emerge for multistage designs (not shown in this paper).

3. ESTIMATION ASSESSMENT

In this section, we report a simulation study assessing the properties of MLEs. Note that, in the reported figures, different scales for the y‐axes are used to highlight patterns.

3.1. Simulation setup

In our evaluations, we specify the FWER, α, as 0.025 and set the sample size for each scenario so that the power of the design is 1 − β = 80%. Our alternative hypothesis is that the treatment has an effect of 0.5 in $S_1$, whereas the effect of the treatment is zero in all other subgroups. The power therefore refers to detecting the nonzero effect in $S_1$ (that is, rejecting $H_{01}$) once the first subgroup is selected. The common variance across subpopulations, $\sigma^2$, is set to 1, and we use 1 000 000 simulation runs.

The designs we consider are a single‐stage design with two subpopulations (Design 1), a single‐stage design with three subpopulations (Design 2), and two‐stage designs with two and three subpopulations (Design 3 and Design 4, respectively), which use an OBF upper stopping boundary and a fixed lower boundary of zero. We calculate the stopping boundaries and the total sample sizes for F based on (2) and (3) for the single‐stage designs and on (5) and (6) for the multistage designs. The sample sizes and critical values for each of the designs are given in Appendix A (Tables A1 and A2). Based on these four designs, we investigate several scenarios, altering design features such as the prevalence.

Denote by $\hat{\theta}$ the naive MLE (that is, not accounting for selection) of the parameter θ; then $\hat{\theta}_f$ and $\hat{\theta}_s$ represent the MLEs of the treatment effects of F and $S_s$, respectively. In the single‐stage scenarios, the estimates are calculated as $\hat{\theta}_s = Z_s^{(1)}/\sqrt{I_s^{(1)}}$, where s ∈ {1, f} in the scenarios for Design 1 and s ∈ {1, 1 + 2, f} in the scenarios for Design 2. In the multistage scenarios, the MLEs of $\theta_1$ and $\theta_f$ are calculated as $\hat{\theta}_s = Z_s^{(1:M)}/\sqrt{I_s^{(1:M)}}$, where s ∈ {1, f} and M is the stage at which the study stops.

We use the bias, $\text{bias}(\hat{\theta}) = E(\hat{\theta}) - \theta$, and the mean squared error (MSE), $\text{MSE}(\hat{\theta}) = E[(\hat{\theta} - \theta)^2]$, as performance measures for the estimation assessment. Because the sample size for the full population is chosen to satisfy the aforementioned power requirement and therefore varies across prevalences, a standardized scale is used in the assessments (readers are referred to Supplementary Materials S.7 for details on the standardization). In our subsequent evaluations, we consider three situations. First, we consider the treatment effect estimator regardless of which population is selected or whether the hypothesis test is significant. Second, we consider only the estimators of the selected populations, which is expected to result in selection bias. The third situation considers reporting bias: we only consider the treatment effect estimate of the selected population if the corresponding hypothesis test is significant. Implicitly, we therefore assume that the outcome of a study is only reported (published) if it is significant. In the evaluations to follow, we refer to the selection bias as Select $S_w$ and to the reporting bias as Select $S_w$ + Reject $H_{0w}$, where $w \in \mathcal{W}$ specifies the population chosen through a selection rule. In addition to the bias and MSE for each selected subgroup, we also report the family‐wise (FW) bias and MSE, ie, the bias and MSE averaged over all possible selections.
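The three situations can be illustrated with a small simulation for Design 1 ($\mathcal{W} = \{1, f\}$). The sketch below is a simplified stand‐in for the simulation study, not its implementation. It draws the subgroup MLEs directly from their normal sampling distributions, assuming 1:1 allocation and sample sizes proportional to prevalence, so that $\hat{\theta}_f = \lambda_1\hat{\theta}_1 + (1 - \lambda_1)\hat{\theta}_2$. It then selects by the maximum statistic and reports the raw bias and MSE without selection, after selection (Select $S_w$), and after selection and rejection (Select $S_w$ + Reject $H_{0w}$). The critical value of 2.2 and the other inputs are hypothetical, and the standardization of Supplementary Materials S.7 is not reproduced.

```python
import math
import random
from statistics import fmean


def design1_bias_mse(theta1, theta2, lam1, n_f, c_alpha, sigma=1.0,
                     n_sims=100_000, seed=7):
    """Monte Carlo bias and MSE of the naive MLEs of theta_1 and theta_f in a
    single-stage design with W = {1, f} and selection by the maximum statistic."""
    rng = random.Random(seed)
    info = {"1": lam1 * n_f / (4 * sigma**2), "f": n_f / (4 * sigma**2)}
    info_2 = (1 - lam1) * n_f / (4 * sigma**2)
    truth = {"1": theta1, "f": lam1 * theta1 + (1 - lam1) * theta2}
    errors = {(s, case): [] for s in ("1", "f")
              for case in ("no selection", "select", "select + reject")}
    for _ in range(n_sims):
        t1 = rng.gauss(theta1, 1 / math.sqrt(info["1"]))   # MLE in S_1
        t2 = rng.gauss(theta2, 1 / math.sqrt(info_2))      # MLE in S_2
        est = {"1": t1, "f": lam1 * t1 + (1 - lam1) * t2}  # pooled MLE for F
        z = {s: est[s] * math.sqrt(info[s]) for s in est}
        w = "1" if z["1"] >= z["f"] else "f"               # select by the maximum
        for s in est:
            errors[(s, "no selection")].append(est[s] - truth[s])
        errors[(w, "select")].append(est[w] - truth[w])
        if z[w] > c_alpha:                                 # reported only if significant
            errors[(w, "select + reject")].append(est[w] - truth[w])
    return {key: (fmean(e), fmean(x * x for x in e)) for key, e in errors.items() if e}


results = design1_bias_mse(theta1=0.0, theta2=0.0, lam1=0.3, n_f=200, c_alpha=2.2)
for (s, case), (bias, mse) in sorted(results.items()):
    print(f"theta_{s:<2} {case:<16} bias={bias:+.4f} mse={mse:.4f}")
```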

3.2. Scenarios for Design 1

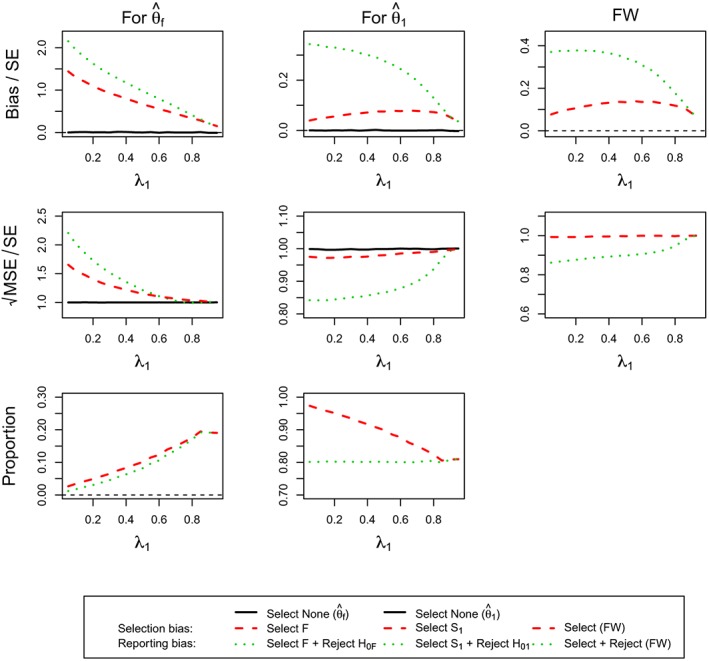

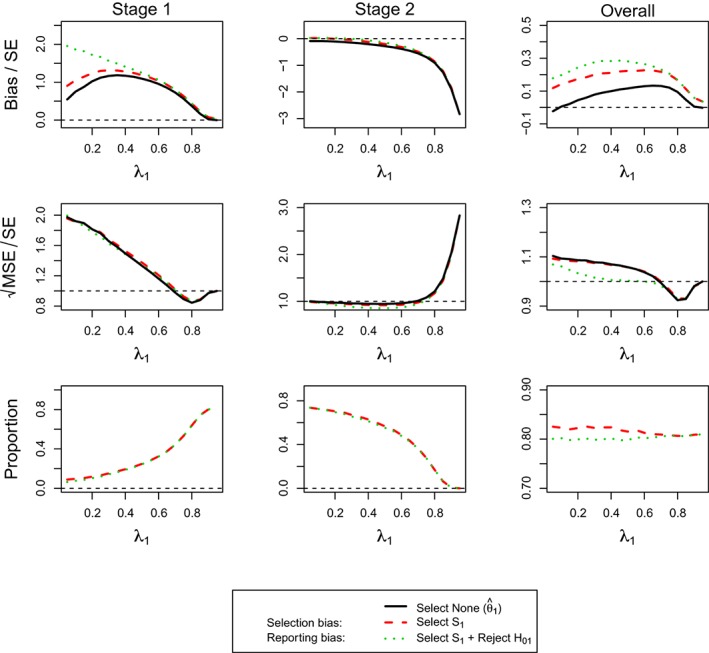

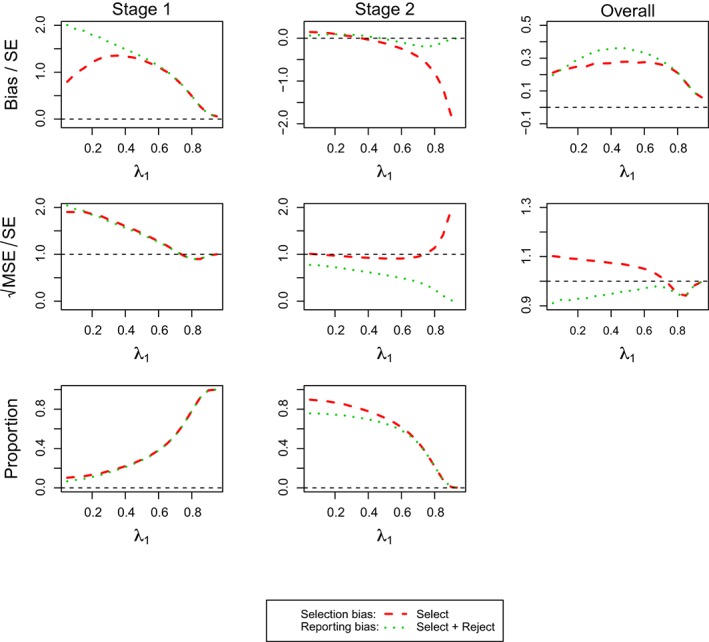

The scenarios here cover prevalences of $S_1$, $\lambda_1$, from 0.05 to 0.95 in increments of 0.05. We illustrate the assessments for these scenarios under three configurations of $\theta_1$ and $\theta_2$ in Figures 2‐4. The horizontal axes show the prevalence of $S_1$, $\lambda_1$, and the vertical axes of the row‐wise panels show the standardized bias, the standardized MSE, and the simulation proportions (%).

Figure 3.

(For Design 1, $\theta_1 = 0.5$ and $\theta_2 = 0$) The standardized bias and standardized MSE of the maximum likelihood estimators $\hat{\theta}_1$ and $\hat{\theta}_f$, and the simulation proportions for different circumstances, against the prevalence of subpopulation 1, $\lambda_1$. FW, family‐wise; MSE, mean squared error

Figure 4.

(For Design 1, θ1 = 0.5 and θ2 = 0.5) The standardized bias and standardized MSE of the maximum likelihood estimators for S1 and F, and the simulation proportions for different circumstances, against the prevalence of subpopulation 1, ie, λ1. FW, family‐wise; MSE, mean squared error [Colour figure can be viewed at http://wileyonlinelibrary.com]

Figure 2 presents the estimation assessment of the MLEs for S1 and F under the assumption θ1 = 0 and θ2 = 0. As expected, when no selection is undertaken we see no bias and a constant standardized MSE. This pattern is repeated throughout all other simulations. Additionally, the selection probability is constant at 50% because the effect is equal in both subgroups. The selection bias is largest when the prevalence of the subgroup is smallest, with a matching pattern for the standardized MSE. The reporting bias and MSE follow the same pattern, although at a markedly higher level.

Figure 2.

(For Design 1, θ1 = 0 and θ2 = 0) The standardized bias and standardized MSE of the maximum likelihood estimators for S1 and F, and the simulation proportions for different circumstances, against the prevalence of subpopulation 1, ie, λ1. FW, family‐wise; MSE, mean squared error [Colour figure can be viewed at http://wileyonlinelibrary.com]

Figure 3 considers the case θ1 = 0.5 and θ2 = 0. Considering the selection probabilities first, we find that, as per design, there is an 80% chance to select population 1 correctly and reject the corresponding hypothesis. The probability of selecting the full population increases with the prevalence, because the effect in the full population grows as subpopulation 1 contributes more to it. At the same time, the chance of also rejecting the corresponding hypothesis increases. The selection and reporting bias of the full population estimate is largest when the prevalence of the subpopulation is smallest and then steadily decreases toward zero. The bias is well over 0.5 standard errors for almost all prevalences and hence should be considered important, although incorrect selection itself is not very common in this case. For the full population, the bias dominates the MSE, so the MSE follows the same pattern.

Focusing on subpopulation 1, we find that bias is present, although it is of much smaller magnitude than for the full population. The selection bias is at most 0.1 standard errors and the reporting bias at most 0.35, compared with over 2 standard errors for the full population. The selection bias is maximized at a prevalence of around 0.75, whereas the reporting bias is largest for small prevalences.

When both subgroups have the same effect, θ1 = θ2 = 0.5 (Figure 4), the full population is almost always selected. Only for large prevalences of the subpopulation (>50%) do we obtain a notable selection probability for the subpopulation (up to 20%). As a consequence, no estimate of the bias and MSE for the subpopulation can be obtained for low prevalences. The bias of the subpopulation estimate is potentially very large (>3 standard errors) but drops quickly toward zero as the prevalence increases. In this setting, it is also notable that the selection bias is virtually identical to the reporting bias, because very large observed effects are necessary to select the subpopulation in the first place.

The patterns for the full population are somewhat different. No bias is observed for small prevalences because the full population is always selected. The bias is, however, very small even in the worst case (a prevalence of around 0.75), where the reporting bias is less than 0.1 standard errors and the selection bias is even smaller.

3.3. Scenarios for Design 2

In Design 2, a population is selected among S1, S1+2, and F under different configurations of θ1, θ2, and θ3. Our focus here is to assess the MLEs for S1, S1+2, and F under θ1 = 0.5, θ2 = 0, θ3 = 0 with the population selection rule given by

| (9) |

This rule is a variant of the maximum statistic rule that decides sequentially which population is selected. The results for other configurations of θ1, θ2, and θ3 are provided in Tables S.1‐S.3 in Supplementary Materials S.8. Note that all scenarios are simulated with the same stopping boundaries and sample sizes. These were found for Design 2 with the maximum statistics selection rule, θ1 = 0.5, θ2 = 0, θ3 = 0, and equal subgroup prevalences.

The results in Table 2 show that, in this case, the correct population is selected most of the time (>80%) because of the design constraint of 80% power. The selection bias when selecting the correct population is small (<0.1 standard errors), and even the reporting bias is only modest at 0.27 standard errors. The selection and reporting biases when selecting an incorrect population are notably larger, reaching up to 1.3 standard errors. The bias is largest for the full population. Its true underlying effect (0.167) is the smallest among all populations, so a rather unusual sample is required for it to be selected.
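The value 0.167 is consistent with taking the effect in a pooled population as the prevalence‐weighted average of its subgroup effects. Under θ1 = 0.5, θ2 = θ3 = 0 and equal prevalences, this gives θF = 0.5/3. The sketch below computes these nested effects and applies a plain maximum‐statistic rule. It does not reproduce Equation 9. The z‐statistic formula (equal allocation, known σ), the tie‐breaking order, `n_total`, and all function names are illustrative assumptions.

```python
import math

# Hypothetical labels for the nested populations S1 ⊂ S1+2 ⊂ F
POPULATIONS = ("S1", "S1+2", "F")


def nested_effects(subgroup_effects, prevalences):
    """Prevalence-weighted average effect in each nested population."""
    out = {}
    for k, name in enumerate(POPULATIONS, start=1):
        weights = prevalences[:k]
        out[name] = sum(w * t for w, t in zip(weights, subgroup_effects[:k])) / sum(weights)
    return out


def z_statistics(estimates, prevalences, n_total, sigma=1.0):
    """z = estimate / SE with SE = 2*sigma/sqrt(n in population) (equal allocation, known sigma)."""
    z = {}
    for k, name in enumerate(POPULATIONS, start=1):
        n_pop = sum(prevalences[:k]) * n_total
        z[name] = estimates[name] / (2.0 * sigma / math.sqrt(n_pop))
    return z


def select_max_statistic(z):
    """Maximum-statistic rule; ties go to the smaller population (an assumption)."""
    best = POPULATIONS[0]
    for name in POPULATIONS[1:]:
        if z[name] > z[best]:
            best = name
    return best
```

With `nested_effects([0.5, 0.0, 0.0], [1/3, 1/3, 1/3])`, the effects are 0.5, 0.25, and about 0.167 for S1, S1+2, and F. Plugging these true effects into `z_statistics` with a hypothetical `n_total = 300` gives expected z‐values of 2.5, about 1.77, and about 1.44, so S1 has the largest expected statistic.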

Table 2.

(For Design 2, θ1 = 0.5, θ2 = 0, and θ3 = 0) Standardized bias and standardized MSE of the maximum likelihood estimators when the prevalence of each of the three subgroups is 1/3. Proportion (Prop.) gives how often the corresponding circumstance occurs.

| Estimator | Circumstance | Bias/SE | Standardized MSE | Prop. (%) |
|---|---|---|---|---|
| F | Select none | -0.00186 | 0.99849 | |
| F | Select F | 0.96546 | 1.32104 | 3.74 |
| F | Select F + reject H0F | 1.31217 | 1.47472 | 2.91 |
| S1 | Select none | -0.00151 | 1.00004 | |
| S1 | Select S1 | 0.09094 | 0.97526 | 88.58 |
| S1 | Select S1 + reject H01 | 0.27068 | 0.87036 | 80.20 |
| S1+2 | Select none | -0.00118 | 0.99884 | |
| S1+2 | Select S1+2 | 0.76128 | 1.19617 | 7.68 |
| S1+2 | Select S1+2 + reject H0,1+2 | 1.02579 | 1.26021 | 6.47 |
| Family‐wise | Select | 0.17516 | 1.00518 | |
| Family‐wise | Select + reject | 0.35902 | 0.91814 | |

Abbreviations: MSE, mean squared error.

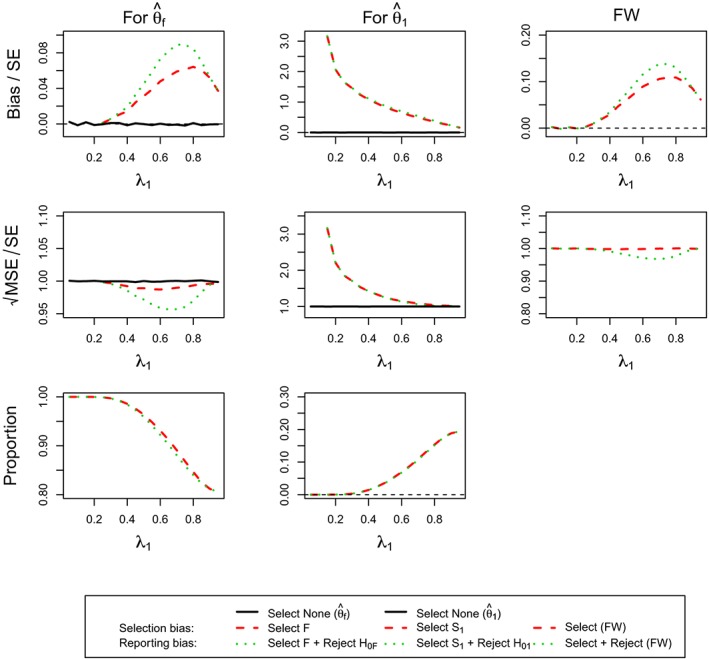

3.4. Scenarios for Design 3

The investigation presented here concerns Design 3, a two‐stage design. We focus on θ1 = 0.5 and θ2 = 0; the results for other configurations are given in Figures S.1‐S.6 of Supplementary Materials S.8.

Figure 5 shows the results for the estimator of the full population. The top row corresponds to the standardized bias, the middle row to the standardized MSE, and the bottom row to the probability of selecting the full population. The first column considers only trials that stop at Stage 1 and the second only trials that reach Stage 2. The final column considers all trials, irrespective of when they stopped. In addition to the selection bias and the reporting bias, the figure also shows the estimator irrespective of the reason for stopping (green triangle).

Figure 5.

(For Design 3, θ1 = 0.5 and θ2 = 0) Standardized bias and mean squared error (MSE) of the MLE for the full population, and simulation proportions for different circumstances at stopping stage 1, 2, and overall, against the prevalence of subpopulation 1, ie, λ1 [Colour figure can be viewed at http://wileyonlinelibrary.com]

The reporting bias is potentially very large: up to three standard errors for Stage 1 only and up to two standard errors for Stage 2. It is largest when the prevalence of the subgroup is small and decreases thereafter. For studies that select the full population and stop at Stage 1, the reporting bias approaches zero, whereas for trials that stop at the second stage it in fact becomes negative. The overall estimator is, however, always positively biased and shows a pattern very similar to that of the Stage 1 cases. The selection bias, both overall and for Stage 2 only, follows the same pattern as the reporting bias. For Stage 1 only, it instead shows an inverted U‐shape with a maximum at a prevalence of around 0.5. The bias of the estimator that considers all trials stopping at Stage 1, for any reason, follows the same pattern as the selection bias, although it is smaller. It is noteworthy that, although substantial bias arises in some situations, these situations are very rare (eg, selecting the full population and stopping at Stage 1). The standardized MSE resembles the standardized bias except at the second stage. There, the MSE (for selection, reporting, and regardless of selection) decreases at a different rate before inflating substantially at a prevalence of 0.8.
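The sign change between Stage 1 and Stage 2 can arise simply from conditioning on the stopping stage. Trials that stop early for efficacy tend to have overestimated effects, while the trials that continue exclude those with the largest interim estimates. The sketch below isolates this mechanism for a hypothetical single‐population two‐stage design with no subgroup selection. It places the interim analysis at half the information, uses a futility bound of zero (as stated for Table A2), and sets an efficacy boundary `c1 = 3.0`, of the same order as the stage‐1 boundaries in Table A2. The drift of 2.8 final‐analysis standard errors and the function name are assumptions chosen only for illustration.

```python
import math
import random
import statistics


def stagewise_bias(drift=2.8, c1=3.0, futility=0.0, reps=40_000, seed=7):
    """Hypothetical two-stage, single-population sketch (no subgroup selection).

    drift = true effect / SE of the final estimate. Half the information is
    available at the interim; the trial stops at Stage 1 if Z1 > c1
    (efficacy) or Z1 < futility, otherwise it continues to Stage 2.
    Returns the bias, in units of the final SE, of the MLE conditional on
    stopping at Stage 1 and on reaching Stage 2.
    """
    rng = random.Random(seed)
    stage1_err, stage2_err = [], []
    for _ in range(reps):
        x1 = rng.gauss(0.0, 1.0)   # standardized Stage-1 sampling error
        x2 = rng.gauss(0.0, 1.0)   # standardized Stage-2 sampling error
        z1 = drift / math.sqrt(2.0) + x1
        if z1 > c1 or z1 < futility:
            # Stage-1 MLE uses half the data, so its SE is sqrt(2) final SEs
            stage1_err.append(math.sqrt(2.0) * x1)
        else:
            # Final MLE averages two equal-sized, independent stages
            stage2_err.append((x1 + x2) / math.sqrt(2.0))
    return statistics.fmean(stage1_err), statistics.fmean(stage2_err)
```

With the default values, the Stage 1 conditional bias should be large and positive. The Stage 2 conditional bias should be small and negative (roughly -0.15 final SEs), which is qualitatively the pattern described above.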

For the estimator of the first subpopulation (Figure 6), the results exhibit patterns similar to those in Figure 5 in many circumstances. When the trial stops at the first stage, the estimator is strongly biased for prevalences up to 0.6. The reporting bias decreases from two standard errors, whereas the selection bias is more moderate at around 1 SE. In all circumstances, the MSE decreases from 2 to 0.9 SE and is close to one for prevalences larger than 0.7. The subpopulation is selected correctly most of the time, so the selection bias and the bias considering all studies that stopped at Stage 1 are very similar, and the MSE is near one standard error. The estimators considering only trials that stop at Stage 2 are almost unbiased for small and moderate prevalences but can exhibit a large negative bias when the prevalence is large. Their MSE is close to 1 SE for most prevalences but becomes very large beyond a prevalence of 0.7. The overall estimator is, however, positively biased (for both selection and reporting) for all prevalences. It shows an inverted U‐shape with a maximum bias of about 0.3 SE at a prevalence of 0.6. For prevalences below 0.7, its MSE conditional on selection (or without selection) differs from its MSE conditional on reporting. Above 0.7, the estimators perform similarly in MSE, with a shallow U‐shape below 1 SE.

Figure 6.

(For Design 3, θ1 = 0.5 and θ2 = 0) Standardized bias and mean squared error (MSE) of the MLE for subpopulation 1, and simulation proportions for different circumstances at stopping stage 1, 2, and overall, against the prevalence of subpopulation 1, ie, λ1 [Colour figure can be viewed at http://wileyonlinelibrary.com]

The FW bias and MSE for this design with θ 1 = 0.5 and θ 2 = 0 are given in Figure 7.

Figure 7.

(For Design 3, θ 1 = 0.5 and θ 2 = 0) FW bias and mean squared error and simulation proportions for different circumstances at stopping stage 1, 2, and overall, against the prevalence of subpopulation 1, ie, λ1 [Colour figure can be viewed at http://wileyonlinelibrary.com]

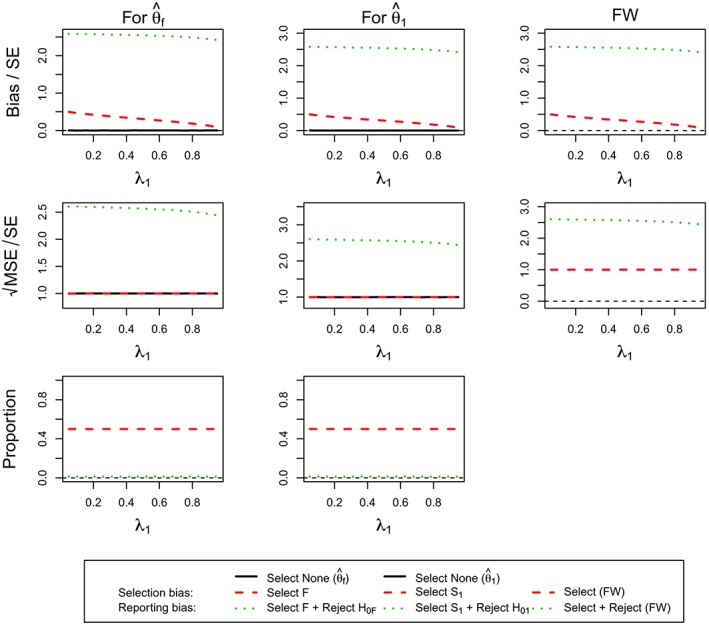

3.5. Scenarios for Design 4

Design 4 is the two‐stage counterpart of Design 2 and selects a population among S1, S1+2, and F under different configurations of θ1, θ2, and θ3. The investigation here focuses on the maximum likelihood estimators for S1, S1+2, and F under θ1 = 0.5, θ2 = 0, θ3 = 0, with the population selection rule given in Equation 9. The results for other configurations of θ1, θ2, and θ3 are provided in Tables S.4‐S.6 in Supplementary Materials S.8. All simulations use the same stopping boundaries and sample sizes. These were found for Design 4 with the maximum statistics selection rule, treatment effects θ1 = 0.5, θ2 = 0, θ3 = 0, and subgroup prevalences of 1/3.

Table 3 shows the results of the estimators for the first subgroup, the combined subgroup, and the full population. The standardized bias, standardized MSE, and simulation proportions are presented for trials that stop at Stage 1, for trials that stop at Stage 2, and for all trials irrespective of the stopping stage.

Table 3.

(For Design 4, θ1 = 0.5, θ2 = 0, and θ3 = 0) Standardized bias and standardized MSE of the MLEs when the prevalence of each of the three subgroups is 1/3. Proportion (Prop.) gives how often the corresponding circumstance occurs.

| Estimator | Circumstance | Stage 1 Bias/SE | Stage 1 Std. MSE | Stage 1 Prop. (%) | Stage 2 Bias/SE | Stage 2 Std. MSE | Stage 2 Prop. (%) | Overall Bias/SE | Overall Std. MSE | Overall Prop. (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| F | Select none | 0.67715 | 1.13140 | | -0.26340 | 0.96585 | | 0.05614 | 1.02209 | |
| F | Select F | 2.02110 | 2.14318 | 1.54 | 0.36337 | 0.92461 | 5.98 | 0.70283 | 1.17414 | 7.52 |
| F | Select F + reject H0F | 2.10568 | 2.14995 | 1.51 | 0.85268 | 1.01727 | 3.89 | 1.20283 | 1.33379 | 5.39 |
| S1 | Select none | 1.03255 | 1.22555 | | -0.29968 | 0.97840 | | 0.15293 | 1.06237 | |
| S1 | Select S1 | 1.12932 | 1.27694 | 29.13 | -0.20496 | 0.94416 | 51.17 | 0.27906 | 1.06487 | 80.29 |
| S1 | Select S1 + reject H01 | 1.14828 | 1.26420 | 28.99 | -0.20175 | 0.93853 | 51.11 | 0.28684 | 1.05639 | 80.11 |
| S1+2 | Select none | 0.81892 | 1.16958 | | -0.26809 | 0.96219 | | 0.10120 | 1.03265 | |
| S1+2 | Select S1+2 | 1.78983 | 1.88884 | 3.31 | 0.17732 | 0.89687 | 8.88 | 0.61488 | 1.16604 | 12.18 |
| S1+2 | Select S1+2 + reject H0,1+2 | 1.82834 | 1.88619 | 3.27 | 0.41744 | 0.81323 | 7.57 | 0.84338 | 1.13715 | 10.85 |
| Family‐wise | Select | 1.23403 | 1.37576 | 33.97 | -0.10207 | 0.93603 | 66.03 | 0.35185 | 1.08542 | |
| Family‐wise | Select + reject | 1.25693 | 1.36402 | 33.77 | -0.06135 | 0.92826 | 62.57 | 0.40076 | 1.08101 | |

Abbreviations: MSE, mean squared error.

Considering the trials irrespective of the stopping stage, we observe that the correct population is selected in about 80% of simulations because of the design requirement of 80% power. The bias is positive for all overall estimators and varies widely, from about 0.06 to 1.2 standard errors. The selection and reporting biases are smallest when the correct population is selected (less than 0.3 standard errors). They are larger when an incorrect population is selected, particularly the full population. All standardized MSEs are larger than one, but only moderately so, reaching at most around 1.3. When the correct population is selected (with or without rejection of its null hypothesis), the estimator for the first subgroup has a smaller standardized MSE (around 1.06) than its counterparts.

The results at the individual stages show a different picture. More trials stop at Stage 2 than at Stage 1. At each stage, the correct population is the one selected most often (in around 30% and 50% of all trials at Stage 1 and Stage 2, respectively). The bias is large at Stage 1. There, the selection and reporting biases are smaller when selecting S1 (around 1.1 standard errors) than when selecting S1+2 or F (around 1.8 and 2, respectively). At Stage 2, the bias is moderate (up to 0.85 standard errors). In particular, the selection and reporting biases of the estimator for the first subgroup are negative. The standardized MSEs of all estimators at Stage 1 are larger than one (between about 1.13 and 2.15). Those at Stage 2 are close to or below one (between about 0.81 and 1.02).

4. DISCUSSIONS AND CONCLUDING REMARKS

In this paper, we have discussed general design considerations for clinical trials with subpopulation selection and illustrated how such studies can be designed. The design framework described can be viewed as an extension of group‐sequential methods42 and therefore requires the same types of assumptions; in particular, we assume an independent increment structure of the data. In our evaluations, we have assumed that the primary endpoint is available immediately, or at least before the next patient is recruited to the trial. The general results in the paper continue to hold if the endpoint is available only after some time, but patients may then still be recruited from a subpopulation that is subsequently not selected. Different approaches to deal with delayed responses have been proposed in the context of group‐sequential trials (eg, the work of Hampson and Jennison43). As a general rule, however, the efficiency of selection is reduced if the time to observe the endpoint is long in comparison with the recruitment speed. Other assumptions made within this framework are common to most adaptive designs. Most notably, we assume that there are no differences in the population before and after the interim analysis and, in particular, that no time trends are present.

In this work, we only consider designs with normally distributed endpoints, although they can easily be extended to other types of endpoints via the efficient scores framework.42, 44 Note, however, that particular care is required when using time‐to‐event endpoints (see the work of Magirr et al45 for a more detailed discussion of the challenges of adaptive trials with time‐to‐event endpoints). Moreover, we assume that the subgroup prevalence is known, although specifying this parameter correctly at the design stage will clearly be crucial for the design's operating characteristics. Because the prevalence is assumed known, we only present the estimation assessment of the MLE with subgroup sample sizes fixed according to the respective prevalences. Further simulations (not shown), however, suggest that random population sample sizes alter the findings only marginally.

Selection based on the maximum test statistic is the main focus throughout the paper, and an R package implementing this design is currently under development. While this selection rule is simple and intuitive, it may not be optimal in certain circumstances. The rule makes sense when some subgroup treatment effects have been identified as positive and the differences between test statistics across subgroups are reasonably large. However, suppose the test statistics for S s and F are close but the former is larger. Applying this rule then raises an ethical issue: only part of the population is selected, although the whole population could benefit from the treatment. Therefore, other selection rules should be considered and investigated for such situations.

One alternative, which has also been considered for designs with treatment selection (eg, see the work of Bretz et al46), is to introduce a threshold into the selection rule. All subgroups whose effect sizes are similar to the best one (ie, whose absolute difference from it is within the threshold) are then combined, so that the pooled population can continue to the next stage. Conversely, the rule selects a single population only if its effect size exceeds those of the others by more than the threshold.
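A threshold rule of this kind can be sketched for the nested populations S1 ⊂ S1+2 ⊂ F. This is a hypothetical illustration, not the rule of Bretz et al. It retains every population whose estimate (or test statistic) lies within `delta` of the best one. Because the populations are nested, it then continues with the largest retained population, which equals their union. The function name and the dictionary keys are assumptions; setting `delta = 0` recovers the maximum rule.

```python
def select_with_threshold(estimates, delta, nested_order=("S1", "S1+2", "F")):
    """Hypothetical threshold rule for nested populations.

    Every population whose estimate lies within `delta` of the best one is
    retained. Because the populations are nested (listed smallest first),
    the union of the retained populations is the largest of them.
    """
    best = max(estimates.values())
    retained = [p for p in nested_order if best - estimates[p] <= delta]
    return retained[-1]   # largest retained population = union of nested sets
```

For example, with estimates of 0.50, 0.45, and 0.10 for S1, S1+2, and F, a threshold of 0.1 continues with S1+2, whereas a threshold of 0 selects S1 alone.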

Another option that has been used in the context of treatment selection (eg, see the works of Magnusson and Turnbull16 and Magirr et al35) is simply to select a population whose efficacy exceeds a certain value at stage 1. This selection rule was used in the work of Magnusson and Turnbull16 and integrates population selection and hypothesis testing at the first stage. Their designs assume a prior ordering of the underlying effect sizes of all individual subgroups. They are therefore related to ours, in which the target subpopulations for selection have a nested structure. Note that the corresponding mathematical expression in (1) will differ if the aforementioned selection rules are used. We provide the required modifications to the design framework in the supplementary materials for illustrative purposes.

In terms of estimation, we have assessed the bias of the MLE under various scenarios. We find that the bias is almost always positive, leading to an overenthusiastic estimate of the true treatment effect. While in some settings the size of the bias can be viewed as negligible, it can become large in others. The challenge is that one usually does not know whether one is in one of these extreme situations. We also observe that selecting the population introduces bias, and that this bias increases markedly (often more than doubling) when only significant results are reported. This highlights the effect of reporting bias, which may be even more problematic than the bias introduced by selection.

Our results suggest that the MSE of the overall MLEs is well behaved (around 1 standard error) in many circumstances and scenarios. Whether or not the correct population is selected affects the size of the MSE of the corresponding estimator. This effect can be more substantial when only significant results are reported. The same holds even in the extreme scenario where no correct population exists because none of the subgroups has an underlying effect.

Future work will consider estimators that are unbiased (or have smaller bias) while maintaining a comparable MSE. A conditional bias‐adjusted estimator following the ideas in the work of Stallard and Todd28 appears the most promising. Its extension to multistage designs, conditional on the trial continuing to the final stage, can be achieved naturally. However, whether the derived estimators have a smaller MSE needs to be verified in further investigations.

Supporting information

SIM_7925‐Supp‐0001‐SIMPaper_SM_V2.pdf

ACKNOWLEDGEMENTS

This work is independent research arising in part from Dr Jaki's Senior Research Fellowship (NIHR‐SRF‐2015‐08‐001) supported by the National Institute for Health Research. Funding for this work was also provided by the Medical Research Council (MR/M005755/1). The views expressed in this publication are those of the authors and not necessarily those of the NHS, the National Institute for Health Research, or the Department of Health. All authors have made equal contributions to this manuscript.

APPENDIX A.

DESIGN SPECIFICATIONS

A.1. Design 1

Table A1.

Design specifications for different values of λ for Design 1. c is the critical value and N is the total sample size

| λ | c | N |
|---|---|---|
| 0.05 | 2.232 | 3070 |
| 0.1 | 2.228 | 1546 |
| 0.15 | 2.223 | 1040 |
| 0.2 | 2.217 | 788 |
| 0.25 | 2.212 | 638 |
| 0.3 | 2.206 | 539 |
| 0.35 | 2.200 | 469 |
| 0.4 | 2.193 | 418 |
| 0.45 | 2.186 | 380 |
| 0.5 | 2.178 | 351 |
| 0.55 | 2.170 | 329 |
| 0.6 | 2.160 | 313 |
| 0.65 | 2.150 | 303 |
| 0.7 | 2.139 | 298 |
| 0.75 | 2.126 | 302 |
| 0.8 | 2.111 | 318 |
| 0.85 | 2.094 | 363 |
| 0.9 | 2.072 | 493 |
| 0.95 | 2.042 | 943 |
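The critical values in Table A1 appear to depend on λ only through the correlation between the two test statistics. Assuming equal allocation and fixed subgroup sizes, corr(Z1, ZF) = √λ. The tabulated values are then consistent with controlling the one‐sided family‐wise error rate at 2.5% for the maximum of two correlated standard normal statistics. The sketch below computes c under these assumptions by numerical integration and bisection. It is our reconstruction, not the authors' procedure. It does not compute N, which additionally depends on the assumed effects and outcome variance. The function names are hypothetical.

```python
import math


def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def fwer_max_two(c, rho, n_steps=4000, lower=-10.0):
    """P(max(Z1, ZF) > c) under the global null, corr(Z1, ZF) = rho (0 <= rho < 1)."""
    s = math.sqrt(1.0 - rho * rho)

    def integrand(z):
        # phi(z) * P(Z1 <= c | ZF = z)
        return norm_pdf(z) * norm_cdf((c - rho * z) / s)

    # Composite Simpson's rule for P(max <= c) = integral over z from -inf to c
    h = (c - lower) / n_steps
    total = integrand(lower) + integrand(c)
    for i in range(1, n_steps):
        total += (4 if i % 2 else 2) * integrand(lower + i * h)
    return 1.0 - total * h / 3.0


def critical_value_design1(lam, alpha=0.025):
    """Bisection for c with one-sided FWER = alpha, assuming corr(Z1, ZF) = sqrt(lam)."""
    rho = math.sqrt(lam)
    lo, hi = 1.0, 4.0
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if fwer_max_two(mid, rho) > alpha:
            lo = mid   # error rate too high: raise the boundary
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Under these assumptions, `critical_value_design1(0.25)` and `critical_value_design1(0.5)` should be close to the tabulated values 2.212 and 2.178.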

A.2. Design 2

Table A2.

Design specifications for different values of λ for Design 2 with an interim analysis after half the patients have been observed. c1 is the upper stopping boundary at stage 1, c2 the final‐stage critical value, and N the total sample size. The lower bound at stage 1 is fixed at zero for all λ.

| λ | c1 | c2 | N |
|---|---|---|---|
| 0.05 | 3.018 | 2.134 | 719 |
| 0.1 | 3.031 | 2.143 | 401 |
| 0.15 | 3.037 | 2.147 | 298 |
| 0.2 | 3.039 | 2.149 | 251 |
| 0.25 | 3.039 | 2.149 | 224 |
| 0.3 | 3.037 | 2.148 | 207 |
| 0.35 | 3.034 | 2.145 | 196 |
| 0.4 | 3.029 | 2.142 | 188 |
| 0.45 | 3.023 | 2.138 | 183 |
| 0.5 | 3.016 | 2.133 | 181 |
| 0.55 | 3.008 | 2.127 | 181 |
| 0.6 | 2.999 | 2.121 | 184 |
| 0.65 | 2.989 | 2.114 | 192 |
| 0.7 | 2.977 | 2.105 | 205 |
| 0.75 | 2.964 | 2.096 | 229 |
| 0.8 | 2.948 | 2.085 | 269 |
| 0.85 | 2.930 | 2.072 | 342 |
| 0.9 | 2.907 | 2.055 | 491 |
| 0.95 | 2.875 | 2.033 | 943 |

A.3. Design 3

For this single‐stage design with three subgroups, the prevalence of each subgroup is equal to one third, resulting in a critical value of c = 2.289 and a total sample size of N = 575.
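The same assumptions can be applied here: a one‐sided 2.5% family‐wise error rate, fixed subgroup sizes, and equal allocation. Under them, the statistics for the nested populations S1 ⊂ S1+2 ⊂ F have correlations √(Λj/Λk), where Λk is the cumulative prevalence of the first k subgroups. A Monte Carlo sketch can then check whether c = 2.289 gives an error rate close to 2.5% for equal prevalences. This is not the authors' computation, and the function name, replication count, and seed are arbitrary.

```python
import math
import random


def fwer_nested_mc(c, prevalences, reps=200_000, seed=2018):
    """Monte Carlo estimate of P(max_k Z_k > c) under the global null.

    Z_k is the standardized statistic for the population made of the first k
    subgroups: Z_k = sum_{s<=k} sqrt(lambda_s) X_s / sqrt(Lambda_k), with
    X_s iid N(0, 1), which gives corr(Z_j, Z_k) = sqrt(Lambda_j / Lambda_k).
    """
    rng = random.Random(seed)
    cumulative = [sum(prevalences[:k + 1]) for k in range(len(prevalences))]
    hits = 0
    for _ in range(reps):
        partial = 0.0
        z_max = -math.inf
        for lam_s, lam_k in zip(prevalences, cumulative):
            partial += math.sqrt(lam_s) * rng.gauss(0.0, 1.0)  # add subgroup s
            z_max = max(z_max, partial / math.sqrt(lam_k))     # statistic for S_1..k
        if z_max > c:
            hits += 1
    return hits / reps
```

Under these assumptions, `fwer_nested_mc(2.289, [1/3, 1/3, 1/3])` should return a value near 0.025.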

A.4. Design 4

The two‐stage design with three subgroups uses equal prevalence for each subgroup and an interim analysis after half the patients have been observed. The critical value at the first stage is c1 = 3.119, whereas the final critical value is c2 = 2.205. A fixed futility bound of zero is used, and the total sample size is N = 335.

Chiu Y‐D, Koenig F, Posch M, Jaki T. Design and estimation in clinical trials with subpopulation selection. Statistics in Medicine. 2018;37:4335–4352. 10.1002/sim.7925

REFERENCES

- 1. Kent DM, Rothwell PM, Ioannidis JPA, Altman DG, Hayward RA. Assessing and reporting heterogeneity in treatment effects in clinical trials: a proposal. Trials. 2010;11(1):85.

- 2. Varadhan R, Segal JB, Boyd CM, Wu AW, Weiss CO. A framework for the analysis of heterogeneity of treatment effect in patient‐centered outcomes research. J Clin Epidemiol. 2013;66(8):818‐825.

- 3. Basu S, Sussman JB, Hayward RA. Detecting heterogeneous treatment effects to guide personalized blood pressure treatment: a modeling study of randomized clinical trials. Ann Intern Med. 2017;166(5):354‐360.

- 4. Cuzick J. Forest plots and the interpretation of subgroups. Lancet. 2005;365(9467):1308.

- 5. Ondra T, Dmitrienko A, Friede T, et al. Methods for identification and confirmation of targeted subgroups in clinical trials: a systematic review. J Biopharm Stat. 2016;26(1):99‐119.

- 6. Alosh M, Huque M, Bretz F, D'Agostino RB Sr. Tutorial on statistical considerations on subgroup analysis in confirmatory clinical trials. Stat Med. 2017;36(8):1334‐1360.

- 7. Lipkovich I, Dmitrienko A, D'Agostino RB Sr. Tutorial in biostatistics: data‐driven subgroup identification and analysis in clinical trials. Stat Med. 2017;36(1):136‐196.

- 8. Placzek M, Friede T. Clinical trials with nested subgroups: analysis, sample size determination and internal pilot studies. Stat Methods Med Res. First published March 14, 2017. 10.1177/0962280217696116

- 9. Spiessens B, Debois M. Adjusted significance levels for subgroup analyses in clinical trials. Contemp Clin Trials. 2010;31(6):647‐656.

- 10. Song Y, Chi GYH. A method for testing a prespecified subgroup in clinical trials. Stat Med. 2007;26(19):3535‐3549.

- 11. Alosh M, Huque MF. A flexible strategy for testing subgroups and overall population. Stat Med. 2009;28(1):3‐23.

- 12. Graf AC, Posch M, Koenig F. Adaptive designs for subpopulation analysis optimizing utility functions. Biom J. 2015;57(1):76‐89.

- 13. Jennison C, Turnbull BW. Group Sequential Methods With Applications to Clinical Trials. Boca Raton, FL: Chapman & Hall/CRC; 2000.

- 14. Bauer P, Kieser M. Combining different phases in the development of medical treatments within a single trial. Stat Med. 1999;18:1833‐1848.

- 15. Ghosh P, Liu L, Senchaudhuri P, Gao P, Mehta C. Design and monitoring of multi‐arm multi‐stage clinical trials. Biometrics. 2017;73(4):1289‐1299. 10.1111/biom.12687

- 16. Magnusson BP, Turnbull BW. Group sequential enrichment design incorporating subgroup selection. Stat Med. 2013;32(16):2695‐2754.

- 17. Jenkins M, Stone A, Jennison C. An adaptive seamless phase II/III design for oncology trials with subpopulation selection using correlated survival endpoints. Pharm Stat. 2011;10:347‐356.

- 18. Stallard N, Hamborg T, Parsons N, Friede T. Adaptive designs for confirmatory clinical trials with subgroup selection. J Biopharm Stat. 2014;24(1):168‐187.

- 19. Bauer P, Koenig F, Brannath W, Posch M. Selection and bias–two hostile brothers. Stat Med. 2010;29(1):1‐13.

- 20. Shen L. An improved method of evaluating drug effect in a multiple dose clinical trial. Stat Med. 1999;20:1913‐1929.

- 21. Stallard N, Todd S, Whitehead J. Estimation following selection of the largest of two normal means. J Stat Plan Inference. 2008;138:1629‐1638.

- 22. Cohen A, Sackrowitz H. Two stage conditionally unbiased estimators of the selected mean. Stat Probab Lett. 1989;8:273‐278.

- 23. Bowden J, Glimm E. Unbiased estimation of selected treatment means in two‐stage trials. Biom J. 2008;50(4):515‐527.

- 24. Sill MW, Sampson AR. Extension of a two‐stage conditionally unbiased estimator of the selected population to the bivariate normal case. Commun Stat Theory Methods. 2007;36(4):801‐813.

- 25. Carreras M, Brannath W. Shrinkage estimation in two‐stage adaptive designs with midtrial treatment selection. Stat Med. 2013;32(10):1677‐1690.

- 26. Kimani PK, Todd S, Stallard N. A comparison of methods for constructing confidence intervals after phase II/III clinical trials. Biom J. 2014;56(1):107‐128.

- 27. Magirr D, Jaki T, Posch M, Klinglmueller F. Simultaneous confidence intervals that are compatible with closed testing in adaptive designs. Biometrika. 2013;100(4):985‐996.

- 28. Stallard N, Todd S. Point estimates and confidence regions for sequential trials involving selection. J Stat Plan Inference. 2005;135:402‐419.

- 29. Brückner M, Titman A, Jaki T. Estimation in multi‐arm two‐stage trials with treatment selection and time‐to‐event endpoint. Stat Med. 2017;36(20):3137‐3153.

- 30. Rosenkranz GK. Bootstrap corrections of treatment effect estimates following selection. Comput Stat Data Anal. 2014;69:220‐227.

- 31. Kimani PK, Todd S, Stallard N. Estimation after subpopulation selection in adaptive seamless trials. Stat Med. 2015;34(18):2581‐2601.

- 32. Wang YG, Leung DHY. Bias reduction via resampling for estimation following sequential tests. Seq Anal. 1997;16(3):249‐267.

- 33. Davison AC, Hinkley DV. Bootstrap Methods and Their Application. Cambridge, UK: Cambridge University Press; 1999.

- 34. Dmitrienko A, Tamhane AC, Bretz F. Multiple Testing Problems in Pharmaceutical Statistics. Boca Raton, FL: Chapman & Hall/CRC; 2010. Biostatistics Series.

- 35. Magirr D, Jaki T, Whitehead J. A generalized Dunnett test for multi‐arm multi‐stage clinical studies with treatment selection. Biometrika. 2012;99:494‐501.

- 36. O'Brien PC, Fleming TR. A multiple testing procedure for clinical trials. Biometrics. 1979;35(3):549‐556.

- 37. Pavord ID, Korn S, Howarth P, et al. Mepolizumab for severe eosinophilic asthma (DREAM): a multicentre, double‐blind, placebo‐controlled trial. Lancet. 2012;380(9842):651‐659.

- 38. Ortega HG, Yancey SW, Mayer B, et al. Severe eosinophilic asthma treated with mepolizumab stratified by baseline eosinophil thresholds: a secondary analysis of the DREAM and MENSA studies. Lancet Respir Med. 2016;4(7):549‐556.

- 39. Yancey SW, Keene ON, Albers FC, et al. Biomarkers for severe eosinophilic asthma. J Allergy Clin Immunol. 2017;140(6):1509‐1518.

- 40. Santanello NC, Zhang J, Seidenberg B, Reiss TF, Barber BL. What are minimal important changes for asthma measures in a clinical trial? Eur Respir J. 1999;14(1):23‐27.

- 41. Marcus R, Peritz E, Gabriel KR. On closed testing procedures with special reference to ordered analysis of variance. Biometrika. 1976;63(3):655‐660.

- 42. Whitehead J. The Design and Analysis of Sequential Clinical Trials. 2nd ed. Chichester, UK: Wiley; 1997.

- 43. Hampson LV, Jennison C. Group sequential tests for delayed responses (with discussion). J R Stat Soc Ser B Stat Methodol. 2013;75(1):3‐54.

- 44. Jaki T, Magirr D. Considerations on covariates and endpoints in multi‐arm multi‐stage clinical trials selecting all promising treatments. Stat Med. 2013;32(7):1150‐1163.

- 45. Magirr D, Jaki T, Koenig F, Posch M. Sample size reassessment and hypothesis testing in adaptive survival trials. PLoS ONE. 2016;11(2):e0146465.

- 46. Bretz F, Koenig F, Brannath W, Glimm E, Posch M. Tutorial in biostatistics ‐ adaptive designs for confirmatory clinical trials. Stat Med. 2009;28:1181‐1217.
