Abstract

Previous research indicates that conspiracy thinking is informed by the psychological imposition of order and meaning on the environment, including the perception of causal relations between random events. Four studies indicate that conspiracy belief is driven by readiness to draw implausible causal connections even when events are not random, but instead conform to an objective pattern. Study 1 (N = 195) showed that conspiracy belief was related to the causal interpretation of real‐life, spurious correlations (e.g., between chocolate consumption and Nobel prizes). In Study 2 (N = 216), this effect held adjusting for correlates including magical and non‐analytical thinking. Study 3 (N = 214) showed that preference for conspiracy explanations was associated with the perception that a focal event (e.g., the death of a journalist) was causally connected to similar, recent events. Study 4 (N = 211) showed that conspiracy explanations for human tragedies were favored when they comprised part of a cluster of similar events (vs. occurring in isolation); crucially, they were independently increased by a manipulation of causal perception. We discuss the implications of these findings for previous, mixed findings in the literature and for the relation between conspiracy thinking and other cognitive processes.

Keywords: conspiracy belief, spurious correlation, pattern perception, causality

In January 2012, the Argentinian President Cristina Fernandez de Kirchner was diagnosed with cancer. Speaking the day after her diagnosis, Hugo Chavez, then President of Venezuela, noted that several other leftist Latin American leaders had also recently been afflicted by cancer, including the President of Paraguay (Fernando Lugo), the President (Dilma Rousseff) and former President (Lula da Silva) of Brazil, not to mention Chavez himself (who was later to die of the disease, while de Kirchner turned out to be misdiagnosed). He then suggested that this co‐occurrence was “difficult to explain using the laws of probabilities.” In place of these laws, he implicated the United States. “Would it be strange,” Chavez asked in a televised speech, “if they had developed the technology to induce cancer and nobody knew about it?” (Alexander, 2012).

This anecdote illustrates several important features of conspiracy theorizing, which may be defined as the attribution of events to the secret actions of powerful and malevolent groups (Douglas, Sutton, & Cichocka, 2017). For example, conspiracy thinking may take the form of suspicion and oblique questioning rather than direct accusation (Wood, 2017). Also, conspiracy theorizing serves political purposes, casting rival nations, factions, and social outgroups as devious and malign and ingroup members as their victims (Cichocka, Marchlewska, Golec de Zavala, & Olechowski, 2016; Imhoff & Bruder, 2014; Uscinski & Parent, 2014).

Of most interest to the present article, Chavez's conspiratorial musings appealed explicitly to an apparent pattern in events. In so doing, they conform to the theory that conspiracy thinking is linked to the motivated perception of order and meaning in the environment (Marchlewska, Cichocka, & Kossowska, 2017; Quinby, 1999; Van Prooijen & Jostmann, 2013; van Prooijen, Douglas, & De Inocencio, 2018; Whitson, Galinsky, & Kay, 2015; Whitson & Galinsky, 2008). Crucially, Chavez did not stop at observing the pattern: He explicitly rejected the idea that it could be explained as a coincidence and implied that the events were causally connected, despite the causal connection being vague and implausible. Building on recent research, we propose that this tendency to draw implausible causal connections between events is a crucial driver of conspiracy thinking. Further, we propose that this tendency is important not only when events are random, but also when they co‐occur systematically or conform to some objective pattern. We report four studies to test this hypothesis and discuss its implications for theories of conspiracy thinking.

Conspiracy Thinking and Perceiving Pattern and Causality in Random Events

Scholars have argued that conspiracy beliefs are motivated by the desire to explain and find order and meaning in events that might otherwise seem random, unpredictable, or outside of one's control (Goertzel, 1994). Although early research did not test this idea directly, several findings provided indirect support for it. For example, conspiracy beliefs were found to be more prevalent among disadvantaged groups, who presumably have a stronger need to explain events beyond their control (e.g., Crocker, Luhtanen, Broadnax, & Blaine, 1999; Goertzel, 1994; Thorburn & Bogart, 2005). Other findings indicated that individuals are more likely to endorse conspiracy beliefs if they are dispositionally high in need of compensatory control, including alienated and powerless individuals (e.g., Abalakina‐Paap, Stephan, Craig, & Gregory, 1999). In a direct test of this idea, Whitson and Galinsky (2008) found that participants who were experimentally made to feel powerless were more inclined to perceive patterns (identifiable shapes such as animals and buildings) in visual stimuli and were also more inclined to endorse conspiracy theories.

Whitson and Galinsky's (2008) results suggest that there is a relationship between conspiracy belief and the perception of patterns. Both are the products of an underlying motivation to restore control by imposing meaning on the environment. As Whitson and Galinsky put it (see also Dieguez, Wagner‐Egger, & Gauvrit, 2015), conspiracy theories can be seen as the “identification of a coherent and meaningful interrelationship among a set of random or unrelated stimuli” (p. 115). However, Whitson and Galinsky (2008) examined the correlation between the perception of visual patterns and conspiracy belief only indirectly, by showing that they were both increased by a lack of control.

Visual pattern perception is one means of imposing order on random stimuli. A related mechanism involves perceiving patterns in event sequences. People tend to be surprised by how often random processes throw up results that look ordered, for example long streaks of heads or tails in coin tosses. In principle, people may notice these co‐occurrences without assuming that the events are causally connected; for example, they may perceive the co‐occurrence as a random coincidence. In practice, however, people appear to find it hard to resist attributing co‐occurrences to a proximal causal mechanism, rather than to chance (e.g., Braga, Mata, Ferreira, & Sherman, 2016; Caruso, Waytz, & Epley, 2010). As van Prooijen et al. (2018, p. 321) wrote:

Illusory pattern perception emerges because people often have difficulty recognizing when stimuli do or do not occur through a random process… Put differently, a random process often generates sequences that appear non‐random to the human mind, and that may even contain occasional symmetries or aesthetic regularities. As a result, it is difficult for people to appreciate the role of coincidence in generating these pattern‐like sequences.

This reasoning suggests that causal inferences are a crucial part of judgments that random events comprise patterns. The first empirical test of the relation between conspiracy belief and the perception of pattern in random events was conducted by Dieguez et al. (2015), who examined the correlation between conspiracy belief and perceptions of pattern (vs. randomness) specifically in event sequences. Like Blackmore and Trościanko (1985), they devised a measure of perceptions of non‐randomness in strings of Xs and Os. Across three studies, they found that perceptions of non‐randomness (i.e., that events were causally determined rather than random) were unrelated to established measures of conspiracy belief. In contrast, van Prooijen et al. (2018) found that conspiracy beliefs were related to measures of pattern perception including perceptions of non‐randomness (causal determination) in coin tosses and in world events. They also found that instructing participants to search for patterns in random strings of coin tosses increased pattern perception, which in turn was associated with increased conspiracy belief.
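The observation that random processes routinely generate ordered‐looking streaks can be illustrated with a short simulation. The following is a minimal sketch, not a reconstruction of any stimulus set used in the studies cited above; the function names, the sequence length of 100 tosses, and the number of simulated sequences are all illustrative assumptions.

```python
import random

def longest_run(sequence):
    """Return the length of the longest run of identical consecutive items."""
    best = current = 0
    previous = object()  # sentinel that is equal to no item in the sequence
    for item in sequence:
        current = current + 1 if item == previous else 1
        best = max(best, current)
        previous = item
    return best

def mean_longest_run(n_tosses=100, n_sequences=2000, seed=0):
    """Average longest streak (heads or tails) over many fair-coin sequences."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    total = 0
    for _ in range(n_sequences):
        tosses = [rng.choice("HT") for _ in range(n_tosses)]
        total += longest_run(tosses)
    return total / n_sequences
```

Calling `mean_longest_run()` shows that a fair coin tossed 100 times usually contains a streak several tosses long (on the order of the base‐2 logarithm of the sequence length), which is longer than many observers intuitively expect from a random process.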

Conspiracy Thinking and Perceiving Causality in Non‐random Events

The different results obtained by Dieguez et al. (2015) and van Prooijen et al. (2018) indicate the need for further research to clarify the conditions under which conspiracy thinking is related to pattern perception. Both sets of studies also leave an important question open: Namely, whether conspiracy thinking is related to faulty causal perceptions even in situations where events are not truly random. These studies were grounded in the theory that conspiracy belief is a form of pattern perception in which causal understandings are imposed on essentially random or at least under‐determined events to make them seem more ordered. Put differently, causal inferences are a way of imposing an arbitrary but psychologically meaningful order on randomness. In these studies, participants have, for the most part, been presented with random event sequences. In such situations, perceiving patterns is only possible when observers are willing to draw implausible and unwarranted causal connections between events which, by definition, are causally unconnected.

This unanswered question is important, because typically, the events at issue in conspiracy theories are not random. For example, the deaths of John F. Kennedy, Princess Diana, and Osama Bin Laden were not random occurrences. Each death can be seen as the outcome of a multitude of personal, social, and political causes (e.g., in the case of Princess Diana, including a celebrity culture that fueled the reckless actions of paparazzi, the fact that the driver had been drinking, and the lack of guard rails on the concrete columns in the tunnel where the fatal crash occurred). Each death, indeed, has an official causal explanation that is challenged by conspiracy theories. More generally, events in human life are typically somewhat structured and are over‐ rather than under‐determined—that is, each event has multiple causes (Mill, 1973). This is why the explanatory dilemma typically posed by socially significant events is not whether something caused them, but rather what caused them (Kelley, 1967). To paraphrase the apt metaphor for causal inference—“connecting the dots”—put forward by van Prooijen et al. (2018), the issue when events are non‐random may be whether observers connect the wrong dots, rather than any dots whatsoever.

To be sure, conspiracy thinking appears to thrive under conditions of causal uncertainty; that is, when people have incomplete, second hand, conflicting, or ambiguous causal information (Douglas & Sutton, 2011; Kovic & Füchslin, 2018; Newheiser, Farias, & Tausch, 2011). The true (ontological) rather than merely apparent (epistemic) random stimuli of existing studies (Dieguez et al., 2015; van Prooijen et al., 2018) can be viewed as a simulation of these conditions of causal uncertainty. Crucially therefore, it appears reasonable to infer that faulty perceptions that events are causally connected should be important to conspiracy theories even when there is some objective structure to events. However, non‐random situations are different, in that perceptible patterns in events may exist independently of any causal inference by the observer. Further, these patterns, notably co‐occurrences, may affect causal reasoning processes and disrupt their relation to conspiracy thinking. The external validity of research on conspiracy thinking will benefit from developing and testing the hypothesis that it depends on the faulty perception of causal connections between events, whether those events are random or non‐random.

Lessons from the Literature on Co‐occurrence and Causal Inference

Previous research has shown that causal inference and the perception of co‐occurrence in events are strongly related. Causal inference can affect the perceptual organization of events: Since Heider (1958), psychologists have seen causal inference as a means of imposing order on the environment, and organizing multiple stimuli into coherent units or Gestalts (Read, Vanman, & Miller, 1997; Xu, Tang, Zhou, Shen, & Gao, 2017). Conversely, causal inference is strongly influenced by the perception of at least two kinds of regularity. First, for one event to be seen as the cause of another, the two events should normally be seen to be correlated. That is, if one tends to be present, then the other is present, and if it tends to be absent, then the other is absent. This is known as the covariation principle (e.g., Kelley, 1967; Sutton & McClure, 2001). Second, in addition to correlation, temporal contiguity is important. People are more likely to perceive events as causally connected if they occur close together in time (Alloy & Abramson, 1979; Buehner, 2005).
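One common way to make the covariation principle concrete is the contingency index often written as ΔP, the difference between the probability of the outcome when the candidate cause is present and when it is absent. This index is not computed in the present studies; the sketch below is offered only as an illustration, and the function name and the example counts are hypothetical.

```python
def delta_p(cause_effect, cause_no_effect, no_cause_effect, no_cause_no_effect):
    """Contingency between a candidate cause and an outcome from a 2x2 table.

    Returns P(outcome | cause present) - P(outcome | cause absent).
    """
    p_outcome_given_cause = cause_effect / (cause_effect + cause_no_effect)
    p_outcome_given_no_cause = no_cause_effect / (no_cause_effect + no_cause_no_effect)
    return p_outcome_given_cause - p_outcome_given_no_cause

# Hypothetical counts: the outcome follows the cause on 8 of 10 occasions,
# but occurs on only 2 of 10 occasions when the cause is absent.
example = delta_p(8, 2, 2, 8)
```

A value near zero indicates that the outcome is about as likely with the candidate cause as without it, in which case the covariation principle gives observers little reason to infer causation.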

Nonetheless, the perception and causal interpretation of co‐occurrences are conceptually and empirically separable. Whether people infer causality from a correlation depends on whether they harbor a tacit theory that the putative cause has the power to affect the putative outcome (Cheng, 1997; Cheng & Lu, 2017). Thus, people prefer to explain large outcomes in terms of large causes, and small outcomes in terms of small causes (Einhorn & Hogarth, 1986; Spina et al., 2010; see also Van Prooijen & Van Dijk, 2014). Indeed, people will override the covariation principle if they have specific information about causal mechanisms. For example, even if a driver has had no accidents before, observers tend to see her as the primary cause of an accident if they know she was short‐sighted and not wearing corrective lenses (Ahn & Bailenson, 1996). Likewise, even if intentional actions (e.g., lighting a campfire) covary equally or less strongly with an outcome (e.g., a forest fire), people prefer them to natural causes (e.g., a drought) as explanations (e.g., McClure, Hilton, & Sutton, 2007).

This means that variations in the willingness to perceive causal relationships between variables may be important even in the presence of objective co‐occurrences. A relevant individual difference variable is magical thinking (Eckblad & Chapman, 1983), which captures the “belief, quasi‐belief, or … semi‐serious entertainment of the possibility that events which, according to the causal concepts of this culture, cannot have a causal relation to each other, might somehow nevertheless do so” (Meehl, 1973, p. 54). Although magical thinking is discouraged by modern industrialized societies, it persists, often co‐existing with culturally mandated, quasi‐scientific conceptions of causality (Legare, Evans, Rosengren, & Harris, 2012). Magical thinkers are more willing than others to ascribe causal powers to stimuli, for example entertaining the possibility that stepping on cracks on a pavement may bring bad luck, or that misfortunes (e.g., a freak electrocution) may be brought about by objectively unrelated bad deeds (e.g., infidelity; for a review see Callan, Sutton, Harvey, & Dawtry, 2014). Crucially, magical thinkers are also more inclined to endorse conspiracy theories (Darwin, Neave, & Holmes, 2011; Douglas, Sutton, Callan, Dawtry, & Harvey, 2016; Lobato, Mendoza, Sims, & Chin, 2014).

In sum, we propose that the willingness to draw implausible connections between events, even when events co‐occur non‐randomly, underpins conspiracy thinking. Put differently, we expect that the effect of faulty causal inferences demonstrated by van Prooijen et al. (2018) generalizes to situations in which events are non‐random. Whether events are truly random or not, conspiracy thinking reflects a “psychological need to explain events” (Newheiser et al., 2011, p. 1007), and may be sustained by willingness to impose implausible causal narratives on event sequences.

The Present Research

In the present studies, we examined whether conspiracy beliefs are related to perceptions of causal connection between events—whether or not events co‐occur. The key strategy of these studies is to present participants with sequences of events that have some objective structure, but where the causal mechanisms for that structure are unspecified. Studies 1 and 2 tested the relationship between conspiracy belief and causal interpretation of one previously unstudied type of co‐occurrence: spurious correlations. Participants read about documented (real‐life) spurious correlations (e.g., between chocolate consumption and Nobel Prizes) and indicated whether those correlations reflect a direct causal connection between the spurious correlates. Studies 3 and 4 tested the relationship between conspiracy belief and causal interpretation of another type of co‐occurrence: streaks or coincidences in which a rash of similar events occur closely together in time. Study 3 investigated whether people prefer conspiracy explanations for a recent human tragedy (e.g., the death of a journalist), if they see it as not only forming a co‐occurrence together with similar recent tragedies, but causally connected to them. In an experimental design, Study 4 presented human tragedies as either isolated or part of a streak of three or four similar cases, and independently as causally connected (vs. unconnected). It therefore examined whether perceiving events as causally connected affects conspiracy thinking independently of the presence of an objective co‐occurrence in those events. All materials and data can be viewed at: https://osf.io/m2g4x.

Study 1

One of the most familiar catch cries for students in psychology and other empirical disciplines is that correlation does not entail causation. Getting students to understand and apply the principle is a crucial aim in their training in critical thinking (Halpern, 1998; Wilson, Aronson, & Carlsmith, 2010). As we have seen, meeting this aim confronts an obstacle in that human judgments of causation are heavily influenced by perceptions of correlation (Cheng, 1997; Heider, 1958; Kelley, 1967). However, to our knowledge there is little or no research examining people's (in)ability to judge that verbally described correlations between two variables may not signify a causal relationship (but for relevant research on contingency learning over multiple trials, see Fiedler, Walther, Freytag, & Nickel, 2003). Neither has any research examined the correlates of this (in)ability.

In this study, we aimed to investigate whether causal interpretations of correlations are associated with conspiracy beliefs, in accordance with our proposal that conspiracy thinking is fostered by readiness to impose implausible causal interpretations on events in the environment. In particular, we examined the relationship between conspiracy beliefs and causal interpretation of spurious correlations—those that are produced by the operation of third causes. For stimuli, we exploited Vigen's (2015) compilation of real‐life but spurious and indeed often entertainingly absurd correlations, mined from publicly available datasets. These include relations between per capita chocolate consumption and Nobel Prizes, and between drownings in American swimming pools and power generated by US nuclear power plants. We presented six spurious correlations to participants and asked if they could be explained in terms of a causal relationship between the two variables, versus chance alone, or the operation of a third cause. We also measured the extent to which participants endorsed conspiracy theories about a separate set of well‐known events such as the NASA moon landings and the deaths of Princess Diana and John F. Kennedy.

Importantly, these spurious correlations involve events that conform to an objective pattern. Since Vigen's (2015) correlations refer to statistically significant relations over time, they are by definition unlikely to be attributable to chance. Therefore, the critical issue is not whether some causal force is responsible for these non‐random correlations, but rather what causal force is responsible. Of course, the correlations are spurious because there is no plausible direct causal connection between the two variables: For example, it is (unfortunately!) difficult to argue that eating chocolate is of direct benefit to a country's scientific research. Thus, just as the perception of patterns in random event sequences depends on the imposition of implausible connections between events (van Prooijen et al., 2018), so does the inference that direct causal relations exist between spurious correlates. Since our proposal is that conspiracy thinking depends on the imposition of implausible causal connections between events, we therefore predicted that the perception of direct causal connections between spurious correlates would be associated with conspiracy belief. We also asked participants whether they thought the events might be associated due to chance, or due to the influence of a third cause, which is itself a causal interpretation of the events. Since neither of these inferences involves an implausible imposition of causality, we did not expect them to be associated with conspiracy thinking.
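The logic of a spurious correlation produced by a third cause can be sketched with simulated data. The example below is purely illustrative and does not use Vigen's (2015) datasets: two hypothetical variables are each driven by a shared time trend plus independent noise, so they correlate strongly with each other even though neither influences the other. The function names, the noise level, and the number of time points are assumptions.

```python
import math
import random

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_r(x, y, z):
    """Correlation between x and y after statistically removing z from both."""
    r_xy, r_xz, r_yz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

def simulate(n_points=200, noise_sd=20.0, seed=1):
    """Two variables that share a third cause (a time trend) and nothing else."""
    rng = random.Random(seed)
    third_cause = [float(t) for t in range(n_points)]
    x = [z + rng.gauss(0, noise_sd) for z in third_cause]
    y = [z + rng.gauss(0, noise_sd) for z in third_cause]
    return x, y, third_cause

x, y, third_cause = simulate()
raw = pearson_r(x, y)                      # large, despite no direct link
adjusted = partial_r(x, y, third_cause)    # close to zero once the trend is removed
```

Under these assumptions the raw correlation is substantial, whereas the partial correlation adjusting for the shared trend falls close to zero. A third‐cause explanation is therefore a plausible causal reading of such data, while a direct causal link between the two variables is not.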

Method

Participants and design

A sample of 200 participants was recruited via Amazon's Mechanical Turk (MTurk). Five participants indicated that their data should not be used (i.e., answered “No” to the question, “In your honest opinion, should we use your data in our analyses in this study?”) and were excluded from further analyses. The final sample consisted of 195 participants (94 men, 101 women) between the ages of 18 and 74 (M = 36.32, SD = 11.38). The study had a correlational design. The sample size allowed us to estimate stable correlation coefficients (Schönbrodt & Perugini, 2013) and provided us with 80% power to detect a correlation of r = .20 with α = .05 and a two‐tailed test.
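The stated power can be checked approximately with the Fisher z transformation. This is a sketch under a normal approximation, not necessarily the procedure or software used for the original calculation; the function name is hypothetical.

```python
import math
from statistics import NormalDist

def correlation_power(r, n, alpha=0.05):
    """Approximate two-tailed power to detect a population correlation r
    with n participants, using the Fisher z (normal) approximation."""
    std_normal = NormalDist()
    expected_z = math.atanh(r) * math.sqrt(n - 3)   # expected test statistic under H1
    critical = std_normal.inv_cdf(1 - alpha / 2)    # two-tailed critical value
    # Probability of exceeding the critical value in either tail.
    return std_normal.cdf(expected_z - critical) + std_normal.cdf(-expected_z - critical)

power_study1 = correlation_power(0.20, 195)
```

With r = .20 and N = 195 the approximation returns a value close to .80, consistent with the figure reported above.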

Materials and procedure

After giving informed consent, participants were instructed that they would receive several questionnaires tapping into people's attitudes toward real life issues and their causal reasoning. Participants were allowed to quit the survey at any point and they could not change their responses. After completing the survey, participants were debriefed and thanked.

Belief in conspiracy theories

Belief in conspiracy theories was measured using a scale assessing belief in real‐world conspiracy theories including eight items from Douglas and Sutton (2011) (e.g., “The American moon landings were faked”; 1 = strongly disagree, 7 = strongly agree, α = .80).1

Causal interpretation of spurious correlations

Causal interpretation of spurious correlations (CISC) was measured with a newly developed scale (see Appendix). Participants were presented with six spurious correlations (e.g., “It has been shown that an increase in people's income is associated with more visits to the hospital”; “It has been shown that an increase in the average global temperature is associated with an increase in the national science foundation budget”), and asked to rate how much they agree with the following explanations of the relation between the two events: a causal relation, random coincidence, or a third cause (1 = totally disagree, 9 = totally agree, αcause = .67, αrandom = .69, αconfound = .71). Additionally, participants were asked to indicate how hard it would be to think of a reason why the two events are causally connected (1 = extremely easy, 9 = extremely hard, α = .62).
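For readers less familiar with the reliability coefficients reported here, Cronbach's α for a set of ratings can be computed as follows. The sketch uses the standard formula; the function name and the small data set are hypothetical and are not drawn from the study data.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-participant lists (one rating per item)."""
    k = len(responses[0])  # number of items
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)

# Hypothetical ratings from five participants on three items (1-9 scale).
example_ratings = [
    [2, 3, 2],
    [5, 4, 5],
    [7, 8, 6],
    [3, 3, 4],
    [8, 7, 9],
]
alpha = cronbach_alpha(example_ratings)
```

In the present study, the same calculation would be applied separately to the six causal, six random coincidence, six third cause, and six ease ratings.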

Results

Descriptive statistics and correlations are reported in Table 1. Of primary interest to the present study, these show that participants were more likely to agree with conspiracy theories if they also tended to infer direct causal relations from spurious correlations, r(195) = .39, p < .001. In addition, conspiracy belief correlated positively with third cause perceptions, r(195) = .16, p = .027, and negatively, but only marginally, with random coincidence perceptions, r(195) = −.14, p = .059.
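The significance of a correlation of a given size can be approximated from r and N alone. The sketch below uses the Fisher z (normal) approximation rather than the exact t test, so its p values will differ slightly from those reported in the text; the function name is hypothetical.

```python
import math
from statistics import NormalDist

def correlation_p_value(r, n):
    """Approximate two-tailed p value for a Pearson correlation r with n cases,
    based on the Fisher z transformation (a normal approximation)."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))

p_direct = correlation_p_value(0.39, 195)   # direct causal relation
p_third = correlation_p_value(0.16, 195)    # third cause
p_random = correlation_p_value(-0.14, 195)  # random coincidence
```

Under this approximation the three correlations fall on the same sides of the conventional .05 threshold as the values reported above.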

Table 1.

Descriptive statistics and correlations for study variables (Study 1)

| | Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. | Conspiracy belief | 2.80 | 1.11 | | | | | | | |
| 2. | Direct causal relation | 3.19 | 1.43 | .39** | | | | | | |
| 3. | Random coincidence | 6.22 | 1.53 | −.14† | −.35** | | | | | |
| 4. | Third cause | 4.05 | 1.57 | .16* | .30** | −.03 | | | | |
| 5. | Ease | 4.14 | 1.44 | .02 | .13† | −.28** | .19* | | | |
| 6. | Education | 2.87 | .60 | −.06 | .08 | −.07 | .23* | .12 | | |
| 7. | Age | 36.32 | 11.38 | −.06 | −.02 | .09 | −.01 | −.15* | .01 | |
| 8. | Gender | 1.48 | .50 | −.05 | .001 | −.04 | −.17* | −.03 | .04 | .11 |

†p < .10; *p < .05; **p < .001 (two‐tailed).

Most importantly, a linear regression predicting conspiracy belief from direct causal relation, random coincidence, and third cause perceptions, together with ease, level of education, age, and gender, all entered in the same model, revealed that only the perception of a direct causal relation significantly predicted conspiracy belief, B = .29, SE = .06, 95% CI [0.17, 0.41], p < .001 (Table 2).

Table 2.

Regression results for the prediction of conspiracy belief from direct causal relation, random coincidence, third cause perception, ease, level of education, age, and gender (Study 1)

| Predictor | Conspiracy belief: Model 1 (β) | Conspiracy belief: Model 2 (β) |
|---|---|---|
| Education | −.06 | −.10 |
| Age | −.05 | −.05 |
| Gender | −.004 | −.03 |
| Direct causal relation | | .37** |
| Random coincidence | | −.02 |
| Third cause perception | | .07 |
| Ease | | −.05 |
| F(187) | .41 | 4.47** |
| ΔR² | −.01 | .11 |

**p < .001 (two‐tailed).
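The unstandardized coefficient reported in the text (B = .29) and the standardized coefficient in Table 2 (β = .37) can be reconciled using the standard deviations in Table 1. The following is a rough consistency check on rounded values, not a re‐analysis of the data; the function names are hypothetical, and the interval uses a normal critical value of 1.96 as an approximation.

```python
def standardized_beta(b, sd_predictor, sd_outcome):
    """Convert an unstandardized regression slope to a standardized one."""
    return b * sd_predictor / sd_outcome

def wald_interval(b, se, critical=1.96):
    """Approximate 95% confidence interval for a regression coefficient."""
    return (b - critical * se, b + critical * se)

# Values as reported: B = .29, SE = .06; SDs from Table 1
# (direct causal relation = 1.43, conspiracy belief = 1.11).
beta = standardized_beta(0.29, 1.43, 1.11)
lower, upper = wald_interval(0.29, 0.06)
```

Rounded to two decimals, these calculations agree with the reported β of .37 and the reported confidence interval of [0.17, 0.41].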

Discussion

In the present study, perceptions of direct causal relationships between spuriously correlated variables were associated with conspiracy thinking. Previous research has shown that conspiracy thinking is associated with the perception of causal connections between random events, between which, by definition, no causal relationships exist (van Prooijen et al., 2018). The present results confirm that perception of implausible causal relationships between events may underpin conspiracy thinking, and extend this finding to cases in which events are not random, but conform to an objective pattern. Importantly, perceptions that the spurious correlations were explained either by coincidence or by the operations of a third cause were not uniquely associated with conspiracy thinking. Thus, the critical predictor of conspiracy thinking was not the inference of causality (vs. randomness) per se, but the specific, implausible inference that a direct causal connection linked the two focal events. In the next study, we sought to extend these findings further by including measures that address the perception of implausible causal relations (magical ideation) as well as more general measures of the perception of meaning and order in random stimuli (visual pattern perception).

Study 2

In Study 2, we aimed to replicate and extend the findings of Study 1 in two distinct ways. First, we included Whitson and Galinsky's (2008) measure of visual pattern perception to examine whether it is related to conspiracy thinking and, once it is adjusted for, whether causal interpretation of spurious correlations remains a significant predictor of conspiracy thinking. The perception of patterns in visual stimuli is thought to be another manifestation of the motivated perception of meaning and order in the environment, so we wanted to ensure that our predicted effect was related to but also functioned independently of this mechanism. Second, we included a scale of magical ideation (Eckblad & Chapman, 1983) in the present study. Since this scale addresses the tendency for permissive and unconventional causal thinking, we expected that it should be related to the causal interpretation of spurious correlations, as well as conspiracy belief. Further, we included other theoretically relevant control factors that have also been shown to be associated with conspiracy thinking, including rationalistic mind‐set (Swami, Voracek, Stieger, Tran, & Furnham, 2014), political orientation (Van Prooijen, Krouwel, & Pollet, 2015), religiosity (Beller, 2017; Newheiser et al., 2011), and education (Douglas et al., 2016; van Prooijen, 2017). As in Study 1, we predicted that causal interpretations of spurious correlations would be related to conspiracy belief. Crucially, we also predicted that it would be related to conspiracy belief adjusting for magical ideation, and all other variables, since our theory suggests that imposing a causal interpretation on the environment is a proximal driver of conspiracy belief.

Method

Participants and design

A sample of 216 participants (122 men, 91 women, 3 transgender) between the ages of 21 and 70 (M = 38.58, SD = 12.05) was recruited via Amazon's Mechanical Turk (MTurk). Of this sample, 82.9% were White/Caucasian, 8.3% African American, 4.2% Asian, 3.7% Hispanic, and .5% Other. Forty‐five percent indicated that they had no religion or were atheist, 43% were Christian (e.g., Catholic, Protestant), 3% Jewish, 0.5% Muslim, 2% Buddhist, 0.5% Hindu, and 6% Other (including ‘spiritual’ and Jehovah's Witness). The study had a correlational design. The sample size allowed us to estimate stable correlation coefficients (Schönbrodt & Perugini, 2013) and provided us with 80% power to detect a correlation of r = .19 with α = .05 and a two‐tailed test.

Materials and procedure

After giving informed consent, participants were instructed that they would receive several questionnaires about people's attitudes to real‐life issues and their causal reasoning.

Belief in conspiracy theories

As in Study 1, conspiracy beliefs were measured using five items from the scale assessing belief in real‐world conspiracy theories (Douglas & Sutton, 2011; α = .81).2

Causal interpretation of spurious correlations

The same scale as in Study 1 was used to measure CISC (αcause = .61, αrandom = .62, αconfound = .70, αease = .64).

Visual pattern perception

A modified version of Whitson and Galinsky's (2008) measure of pattern perception was used. Participants received 12 snowy pictures in random order, 2 of which contained a grainy embedded image that was difficult but possible to perceive. The other 10 pictures had been manipulated using software to eliminate any traces of the embedded image (Whitson & Galinsky, 2008). Participants were asked to indicate as quickly and accurately as they could whether or not each picture contained an image. Since 10 of the 12 pictures were of random static, in which no image exists, any report of seeing an image in one of these pictures is evidence of illusory pattern perception. For our analyses, we used the number of times participants perceived an image in the pictures that lacked an image (see Whitson & Galinsky, 2008).
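The scoring rule described above amounts to counting false alarms on the pictures without an embedded image. A minimal sketch follows; the function name, the data layout, and the example responses are hypothetical and do not reflect how the original data files are structured.

```python
def illusory_pattern_score(responses, has_image):
    """Count 'image seen' responses on pictures that contain no image.

    responses: list of booleans, True if the participant reported an image.
    has_image: list of booleans, True if the picture really contained one.
    """
    return sum(1 for saw, real in zip(responses, has_image) if saw and not real)

# Twelve pictures: 2 with an embedded image, 10 of pure static.
has_image = [True, True] + [False] * 10
# Hypothetical participant who reports an image in both real pictures
# and in three of the static pictures.
responses = [True, True, True, False, True, False, False,
             False, True, False, False, False]
score = illusory_pattern_score(responses, has_image)
```

Scores on this measure can range from 0 to 10, and correct detections of the two real images do not contribute to the score.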

Magical ideation

To measure magical ideation, we administered the 10‐item magical ideation scale (Eckblad & Chapman, 1983). This measure assesses endorsement of causal mechanisms that are invalid or metaphysical (e.g., “I have wondered whether the spirits of the dead can influence the living”, 1 = strongly disagree, 5 = strongly agree, α = .85).

Rationalistic versus intuitive mind‐set

Participants completed the Rational‐Experiential Inventory, which is a questionnaire assessing individual differences in rational and experiential thinking styles (REI; Pacini & Epstein, 1999; e.g., rationalistic mind‐set; “I prefer complex to simple problems”, 1 = definitely false, 5 = definitely true, α = .89; and intuitive mind‐set; “I trust my initial feelings about people”, 1 = definitely false, 5 = definitely true, α = .94). For our analyses, we used separate scores for rational and experiential thinking styles (with higher scores indicating higher rational and higher experiential thinking).

Demographics

Finally, participants were asked to provide some demographic details. In addition to age, gender, and ethnicity, participants were asked to rate their political orientation (e.g., “How would you describe your political attitudes?”; 1 = very liberal/very left‐wing/strong Democrat, 7 = very conservative/very right‐wing/strong Republican, α = .94). They also rated their religiosity (Sullivan, 2001) (e.g., “How often do you attend religious services?”, 1 = not at all, 5 = a great deal, α = .93), and their level of education (no formal education, n = 4; primary level education, n = 5; secondary level education, n = 90; college education bachelor's degree, n = 93; college education graduate degree, n = 24).3

Results

In Table 3, descriptive statistics and correlations are reported for all study variables. It shows that as predicted, the belief that a direct causal relation held between spurious correlates again was positively related to conspiracy belief, r(216) = .31, p < .001. Third cause perception, r(216) = .23, p < .001, and visual pattern perception, r(216) = .17, p = .012, also correlated significantly with conspiracy belief. Moreover, aside from the non‐significant correlation with political orientation, all other distal variables (i.e., magical ideation, non‐analytic thinking, religiosity, education) correlated significantly, and in the predicted direction, with conspiracy belief, thereby replicating previous findings (e.g., Douglas et al., 2016; Lobato et al., 2014). CISC correlated positively with visual pattern perception, r(216) = .19, p = .006, and magical ideation, r(216) = .28, p < .001. Causal relation was unrelated to non‐analytic thinking, political orientation, religiosity, education, age, or gender.

Table 3.

Descriptive statistics and correlations for study variables (Study 2)

| | Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. | Conspiracy belief | 2.35 | 1.26 | | | | | | | | | | | | | |
| 2. | Direct causal relation | 3.36 | 1.31 | .31** | | | | | | | | | | | | |
| 3. | Random coincidence | 5.99 | 1.46 | −.01 | −.38** | | | | | | | | | | | |
| 4. | Third cause | 4.15 | 1.58 | .23* | .49** | −.28** | | | | | | | | | | |
| 5. | Ease | 5.98 | 1.37 | .04 | −.37** | .45** | −.50** | | | | | | | | | |
| 6. | Visual pattern perception | 2.52 | 2.24 | .17* | .19* | .05 | .15* | .03 | | | | | | | | |
| 7. | Magical ideation | 1.88 | 0.74 | .62** | .28** | −.08 | .23* | −.04 | .06 | | | | | | | |
| 8. | Rationalistic thinking | 4.76 | 0.67 | −.18* | −.09 | .13† | −.02 | −.07 | .16* | −.25** | | | | | | |
| 9. | Experiential thinking | 4.26 | 0.81 | .26** | .03 | .04 | −.04 | .08 | .04 | .26** | −.02 | | | | | |
| 10. | Political orientation | 3.47 | 1.39 | −.02 | .03 | −.04 | .17* | −.13† | −.06 | .06 | −.13* | −.08 | | | | |
| 11. | Religiosity | 2.32 | 1.34 | .15* | .09 | −.15* | .12† | −.03 | .01 | .28** | −.06 | .16* | .31** | | | |
| 12. | Education | 3.59 | 0.79 | −.20* | −.07 | .05 | .02 | −.04 | .02 | .004 | .20* | −.14* | −.15* | −.03 | | |
| 13. | Age | 38.58 | 12.05 | −.08 | .08 | −.04 | .13† | −.06 | .06 | −.14* | −.10 | −.16* | .17* | .19* | .01 | |
| 14. | Gender | 1.45 | 0.53 | .14* | .03 | .01 | .04 | .06 | −.02 | .06 | −.03 | .13† | −.09 | .21* | .01 | .19* |

†p < .10; *p < .05; **p < .001 (two‐tailed).

Notably, a linear regression of conspiracy belief on causal relation, random coincidence, third cause perception, ease, visual pattern perception, magical ideation, rationalistic and experiential thinking, political orientation, religiosity, level of education, age, and gender in the same model revealed that causal relation significantly predicted conspiracy belief, B = .14, SE = .06, CI 95% [0.02; 0.26], p = .025, although this time, magical ideation, B = .92, SE = .10, CI 95% [0.72; 1.12], p < .001, and education level, B = −.31, SE = .08, CI 95% [−0.47; −0.15], p < .001, were also significant predictors. Crucially, causal relation predicted conspiracy belief, even when adjusting for all third variables (Table 4).
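As a reading aid, the confidence intervals in this paragraph can be approximately reproduced from the reported B and SE values. The sketch below assumes a normal approximation (z = 1.96), which is reasonable with roughly 200 residual degrees of freedom. The authors' software presumably used t-based intervals, so small rounding differences are possible. The helper name `wald_ci` is hypothetical.

```python
def wald_ci(b, se, z=1.96):
    """Return (lower, upper) bounds of an approximate 95% interval for b."""
    half_width = z * se
    return (b - half_width, b + half_width)


# Coefficients and standard errors as reported in the text.
reported = {
    "causal relation": (0.14, 0.06),
    "magical ideation": (0.92, 0.10),
    "education": (-0.31, 0.08),
}

intervals = {name: wald_ci(b, se) for name, (b, se) in reported.items()}
for name, (lower, upper) in intervals.items():
    print(f"{name}: [{lower:.2f}; {upper:.2f}]")
```

For a negative coefficient such as education, the lower bound is the more negative of the two values.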

Table 4.

Regression results for the prediction of conspiracy belief from causal relation, random coincidence, third cause perception, ease, visual pattern perception, magical ideation, non‐analytic thinking, political orientation, religiosity, education, age, and gender (Study 2)

| Predictor | Model 1 (β) | Model 2 (β) | Model 3 (β) |
|---|---|---|---|
| Political orientation | −.08 | −.07 | −.06 |
| Religiosity | .17* | −.04 | −.02 |
| Education | −.21** | −.21** | −.20** |
| Age | −.12† | .02 | −.01 |
| Gender | .12† | .10† | .09† |
| Magical ideation | | .62** | .54** |
| Rational thinking | | .01 | −.01 |
| Experiential thinking | | .07 | .07 |
| Causal relation | | | .14* |
| Random coincidence | | | .07 |
| Third cause perception | | | .11† |
| Ease | | | .10 |
| Visual pattern perception | | | .09† |
| F(202) | 4.48* | 20.86** | 15.19** |
| ∆R² | .08 | .43 | .46 |

†p < .10; *p < .05; **p < .001 (two‐tailed).

Discussion

The present findings replicate and extend the results of Study 1. First, they show that, as predicted, causal interpretations of spurious correlations were related to conspiracy belief. Second, they indicate that this factor is related to magical ideation, which is an index of permissive and unconventional causal thinking (Eckblad & Chapman, 1983), as well as visual pattern perception, which is an index of the motivated perception of order and meaning in the environment (Whitson & Galinsky, 2008). Third, they indicate that the perception of direct causal relations between spurious correlates, nonetheless, predicts conspiracy thinking over and above these other variables, as well as other factors previously shown to be relevant to conspiracy thinking, including rationalistic or experiential thinking, political orientation, religiosity, and education. These findings, together, provide further evidence that implausible causal interpretations even of non‐random events are uniquely related to conspiracy thinking.

Study 3

We began this article with a real‐life example of conspiracy thinking in which a streak of cancer diagnoses among leftist Latin American leaders triggered one of them, Hugo Chavez, to wonder if the US government may have conspired against them. In this case, the implausible perception of causal connections between events led to conspiracy thinking about the same events. In contrast, Studies 1 and 2 showed that conspiracy beliefs about some events (e.g., the death of Princess Diana) were associated with implausible causal interpretations of other events (e.g., the co‐occurrence of chocolate consumption and Nobel Prizes).

In Study 3, we return the focus to cases like the one that concerned Chavez. We therefore measured co‐occurrence perception, causal interpretation, and conspiracy belief within a single context. Each participant was presented with one scenario describing a streak of human tragedies (either the deaths of three or four journalists, or the poisoning of three or four local politicians). Streaks in events, even when they occur by chance, often trigger implausible causal perceptions such as gamblers' belief in a “hot hand” (Braga et al., 2016; Caruso et al., 2010). Conspiracy explanations for the most recent of these tragedies were measured, and participants were also asked whether the events were causally connected. We predicted that perceiving the events as causally connected would be related to conspiracy explanations. We also measured the extent to which participants perceived the events to comprise a pattern‐like co‐occurrence, and to which they explicitly acknowledged a pattern‐like sequence but denied that it reflected a causal connection.

Prior to these scenarios, participants read two scenarios describing streaks of natural events, and were similarly asked to indicate whether these events comprised a co‐occurrence and were causally connected. This served both to conceal the main focus of the study, and also to test the hypothesis that perceiving causal connections between natural events would be related to perceiving causal connections between human tragedies, and in turn, a preference for conspiracy explanations. Thus, Studies 1 and 2 presented events that comprised a co‐occurrence because they were correlated, whereas Study 3 presented events that comprised a co‐occurrence insofar as they occurred as a temporally contiguous cluster (see Appendix).

Method

Participants and design

A sample of 214 participants (105 men, 108 women, 1 transgendered) between the ages of 20 and 69 (M = 37.08, SD = 10.85) were recruited via Amazon's Mechanical Turk (MTurk). Of this sample, 79.4% were White/Caucasian, 7.5% African American, 6.5% Asian, 4.7% Hispanic, and 1.8% Other. Fifty‐one percent indicated that they had no religion or were atheist, 42.5% were Christian (e.g., Catholic, Protestant), 0.5% Jewish, 0.9% Muslim, 1.4% Buddhist, 0.5% Hindu, and 3.2% Other (including ‘spiritual’ and Wiccan). The study had a correlational design. The sample size allowed us to estimate stable correlation coefficients (Schönbrodt & Perugini, 2013) and provided us with 80% power to detect a correlation of r = .19 with α = .05 two‐tailed. Moreover, we had approximately 80% power to detect a mediation effect with small to medium paths (Fritz & MacKinnon, 2007).
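The stated sensitivity (80% power to detect r = .19 at α = .05, two‐tailed) can be checked with the Fisher z approximation. The sketch below is an approximation under that assumption, not necessarily the procedure the authors used, and the function name `correlation_power` is hypothetical.

```python
import math
from statistics import NormalDist


def correlation_power(r, n, alpha=0.05):
    """Approximate two-tailed power to detect a population correlation r.

    Uses the Fisher z transformation, under which atanh(r_sample) is
    approximately normal with standard error 1 / sqrt(n - 3).
    """
    norm = NormalDist()
    z_effect = math.atanh(r) * math.sqrt(n - 3)
    z_crit = norm.inv_cdf(1 - alpha / 2)
    # Probability of landing in either rejection region.
    return norm.cdf(z_effect - z_crit) + norm.cdf(-z_effect - z_crit)


power = correlation_power(r=0.19, n=214)
print(round(power, 2))
```

With the recruited sample of 214 the approximation lands very close to .80. Passing a smaller n shows how much sensitivity is lost when participants are excluded.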

Materials and procedure

Participants were randomly presented with two non‐social scenarios followed by one human scenario. The non‐social events involved a streak of natural events (a cluster of three or four whale strandings, volcanic eruptions, or animal disease outbreaks). The human tragedy similarly comprised part of a recent streak of similar tragedies (the last in a series of journalists dying suddenly, or of local politicians being poisoned). Participants were asked to indicate, in random order, to what extent they perceived an underlying cause to the events (3 items, including, “There is a causal connection between these events”, 1 = strongly disagree, 7 = strongly agree, α = .91), a co‐occurrence (3 items, including “There seems to be a pattern to these events”, 1 = strongly disagree, 7 = strongly agree, α = .86), or no connection (2 items, e.g., “Any apparent pattern, similarity, or increased frequency in these events is probably due to chance”, 1 = strongly disagree, 7 = strongly agree) (see Appendix, Table 1).

Because this instrument had not been used before, we examined its factor structure by testing and comparing two factor models. The first model examined whether the three items measuring causal connection and the three items measuring co‐occurrence all loaded on the same underlying factor. This model did not fit the data well, χ²(9) = 170.97, p < .001, CFI = .86, RMSEA = .286, CI 90% [0.249; 0.324], SRMR = .064. The second model included two factors. This model showed better fit, χ²(8) = 128.08, p < .001, CFI = .90, RMSEA = .261, CI 90% [0.222; 0.302], SRMR = .059, and fitted the data significantly better than the single‐factor model, Δχ²(1) = 42.99, p < .001. We therefore proceeded with our analyses using the two‐factor structure (in addition to the two items measuring no connection).
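The nested‐model comparison can be illustrated with a chi‐square difference test. The sketch below takes the two reported fit statistics as inputs and uses a closed‐form tail probability that is valid only for 1 degree of freedom, so it assumes a plain (unscaled) difference test. Differencing the rounded statistics gives about 42.89, close to the reported 42.99, and either value yields p < .001.

```python
import math


def chi2_sf_df1(x):
    """Upper-tail probability of a chi-square variable with 1 df.

    A chi-square variable with 1 df is a squared standard normal, so
    P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(x / 2.0))


# Fit statistics as reported in the text.
chi2_one_factor, df_one_factor = 170.97, 9
chi2_two_factor, df_two_factor = 128.08, 8

delta_chi2 = chi2_one_factor - chi2_two_factor
delta_df = df_one_factor - df_two_factor
if delta_df != 1:
    raise ValueError("chi2_sf_df1 only applies to a 1-df difference")

p_value = chi2_sf_df1(delta_chi2)
print(f"delta chi2({delta_df}) = {delta_chi2:.2f}, p = {p_value:.2e}")
```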

Belief in conspiracy theories

Our measure of conspiracy belief was participants’ agreement with conspiracy explanations for the most recent human event, which was either a journalist dying suddenly or a mayor being poisoned (journalist's death: “A group of people acted in secret to cause her death” and “There was a plot to kill her”, 1 = strongly disagree, 9 = strongly agree, M = 4.31, SD = 2.53, α = .92, and mayor's illness: “A group of people acted in secret to poison her” and “There was a plot to poison her”, 1 = strongly disagree, 9 = strongly agree, M = 5.33, SD = 2.50, α = .87). As filler items, participants indicated their agreement with two non‐conspiracy explanations (journalist's death: “Her death was a suicide” and “She was killed by a sole person acting alone [not as part of a plot]”, and mayor's illness: “It was accident” and “She was poisoned by a sole person acting alone [not as part of a plot]”).4

Finally, as a check of understanding, participants were asked what the most recent event was in the final story they read (1 = the poisoning of a mayor, 2 = the death of a journalist, 3 = neither). A total of 6 participants did not correctly identify the last scenario they read. These participants were excluded from the analyses.

Results

We first ran a regression analysis in which conspiracy explanations for the human scenarios were regressed onto perceived causal connection and perceived co‐occurrence. As predicted, this analysis revealed that causal connection, B = .66, SE = .10, CI 95% [0.46; 0.86], p < .001, and co‐occurrence, B = .29, SE = .11, CI 95% [0.07; 0.51], p = .010, within the human scenarios are strongly and independently related to conspiracy explanations (Table 5). However, it should be noted that although perceptions of causal connection and co‐occurrence comprised separate factors, they were strongly related to each other.

Table 5.

Regression results for the prediction of conspiracy explanations for the human scenarios from causal connection and perceived co‐occurrence (Study 3)

| Predictor | β | F(211) | ∆R² |
|---|---|---|---|
| Causal connection | .52** | | |
| Perceived co‐occurrence | .20* | | |
| Model | | 98.11 | .48** |

*p < .05; **p < .001 (two‐tailed).

We proceeded by testing the mediation between judgments of cause in natural scenarios and conspiracy belief by judgments of cause in human scenarios. Using Hayes and Preacher's (2013) bootstrapping macro designed for SPSS, we tested the significance of the indirect effect with 5,000 bootstrap re‐samples. The mediation analysis revealed that there was a significant indirect effect of judgments of cause in natural scenarios through judgments of cause in human scenarios on conspiracy belief (with judgments of co‐occurrence in natural and human scenarios added as covariates) with the 95% bootstrap confidence interval (CI) excluding zero (ab = 0.25, SE = .08, 95% CI [0.10, 0.43]). Importantly, the indirect effect indicates that there seems to be a general tendency to see cause and co‐occurrence across social and non‐social settings, which in turn affects the tendency to believe in conspiracy explanations.
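The logic of a bootstrapped indirect effect can be sketched in a few lines. This is a simplified illustration and not the SPSS macro: it uses a percentile interval, omits the covariates, runs 1,000 rather than 5,000 resamples to keep the example fast, and operates on synthetic data generated inside the script. All function names are hypothetical.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def _cross(u, v):
    """Sum of cross-products of deviations from the means."""
    mu, mv = _mean(u), _mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


def indirect_effect(x, m, y):
    """a * b: a is the slope of m on x, b the slope of y on m adjusting for x."""
    s_xx, s_mm = _cross(x, x), _cross(m, m)
    s_xm, s_xy, s_my = _cross(x, m), _cross(x, y), _cross(m, y)
    a = s_xm / s_xx
    b = (s_my * s_xx - s_xy * s_xm) / (s_mm * s_xx - s_xm ** 2)
    return a * b


def bootstrap_ci(x, m, y, n_boot=1000, level=0.95, seed=1):
    """Percentile bootstrap interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        estimates.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int(n_boot * (1 - level) / 2)]
    upper = estimates[int(n_boot * (1 + level) / 2) - 1]
    return lower, upper


# Synthetic data (NOT the study data): x -> m -> y with a small direct path.
data_rng = random.Random(0)
x = [data_rng.gauss(0, 1) for _ in range(208)]
m = [0.6 * xi + data_rng.gauss(0, 1) for xi in x]
y = [0.6 * mi + 0.1 * xi + data_rng.gauss(0, 1) for xi, mi in zip(x, m)]

ab = indirect_effect(x, m, y)
ci_low, ci_high = bootstrap_ci(x, m, y)
print(f"ab = {ab:.2f}, 95% CI [{ci_low:.2f}, {ci_high:.2f}]")
```

An interval that excludes zero is the criterion the text applies to the indirect effect. The bootstrap is used because the sampling distribution of a product of two coefficients is generally not normal.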

Discussion

The present results offer initial evidence that conspiracy thinking is associated with perceptions that a cluster of similar events was causally connected. They therefore build on Studies 1 and 2 by showing that a preference for conspiracy explanations is associated with perceived causal connections within the same domain. These findings also build on Study 2 by indicating that the tendency to draw causal connections in the human realm is reflected more generally by a tendency to draw connections between events in the natural world. One feature of the present results is that perceptions that events comprised a pattern and causal perception of the patterns were separable in a confirmatory factor analysis, but were highly correlated. This finding lends weight to the suggestion that pattern perception in event sequences depends on the causal interpretation of those sequences (van Prooijen et al., 2018). It also suggests the need for further research to more effectively tease apart the co‐occurrence of events and the extent to which they are perceived as causally connected. Thus, whereas Study 3 always presented event clusters, in our next and final study we turn to an experimental design in which causal interpretation and the co‐occurrence of events are orthogonalized.

Study 4

In Study 4, we experimentally manipulated causal connection and co‐occurrence perception, using similar scenarios as those in Study 3. The primary aim of this study was to examine whether conspiracy explanations are not only associated with but are affected by the perception of causal connection. Another aim of the study was to examine whether the effect of causal connection on conspiracy belief is moderated by objective evidence of a co‐occurrence. According to our theorizing, the implausible perception of causal connections between events drives conspiracy theories whether there is no objective pattern in events (as in van Prooijen et al., 2018) or whether events conform to some kind of structure (as in Studies 1 and 2). We therefore expected that seeing events as causally connected would predict conspiracy belief whether or not a co‐occurrence was evident in those events.

To test these ideas, we used a 2 (causal vs. no causal connection) × 2 (co‐occurrence vs. no co‐occurrence) between‐subjects design, in which participants were presented with the same events as in Study 3. In the manipulation of co‐occurrence, each event was described (co‐occurrence condition) as the latest in a streak of three or four similar recent events (as in Study 3), or (isolated condition) as a relatively isolated event, with only one local and relatively distant precedent (e.g., 25 years ago). In the manipulation of causal perception, participants were told that the events within each scenario were, or were not, causally connected. Our measure of conspiracy belief was again participants’ agreement with two potential conspiracy explanations of the (most recent) human event.

Method

Participants and design

A sample of 211 participants (120 men, 91 women) between the ages of 18 and 77 (M = 35.94, SD = 11.61) were recruited via Amazon's Mechanical Turk (MTurk). Of this sample, 69.7% were White/Caucasian, 7.1% African American, 14.2% Asian, 5.7% Hispanic, and 3.3% Other. Fifty‐three percent indicated that they had no religion or were atheist, 32.7% were Christian (e.g., Catholic, Protestant), 2.4% Jewish, 0.9% Muslim, 1.9% Buddhist, 0.5% Hindu, and 8.5% Other (including Mormon and ‘spiritual’). The study had a 2 Causality (causally connected vs. causally unconnected) × 2 Co‐occurrence (isolated vs. co‐occurring) between‐subjects design. The sample size provided us with 80% power to detect an effect of with α = .05 and a two‐tailed test.

Materials and procedure

In the manipulation of co‐occurrence, each event was described (co‐occurring condition; N = 110) as the latest in a streak of three or four similar recent events, or (isolated condition; N = 101) as a relatively isolated event. In the manipulation of causal perception, participants were told that the events within each scenario were, or were not, causally connected (causally connected; N = 101, causally unconnected; N = 110).

Belief in conspiracy theories

Our measure of conspiracy belief was again participants’ agreement with two potential conspiracy explanations of the (most recent) human event (journalist's death: “A group of people acted in secret to cause her death” and “There was a plot to kill her”, 1 = strongly disagree, 9 = strongly agree, M = 4.77, SD = 2.53, and mayor's illness: “A group of people acted in secret to poison her” and “There was a plot to poison her”, 1 = strongly disagree, 9 = strongly agree, M = 4.53, SD = 2.58).5

Manipulation checks

As manipulation checks, we asked participants to rate the extent to which they thought the events within the scenarios seemed to have occurred (i) unusually close together in time, (ii) more often than one would normally expect (co‐occurrence perception: M = 4.40, SD = 2.03, α = .82), and (iii) whether they thought the events within them were causally connected (causal connection: M = 4.26, SD = 2.57).

Finally, as a check of understanding, participants were asked what the most recent event was in the final story they read (1 = the poisoning of a mayor, 2 = the death of a journalist, 3 = neither). A total of 11 participants did not correctly identify the last scenario they read. These participants were omitted from the dataset.

Results

We first checked whether the co‐occurrence condition affected perceptions of cause, and in a similar way, whether the cause condition affected perceptions of co‐occurrence. An Analysis of Variance (ANOVA) of cause condition, co‐occurrence condition, and the interaction between cause and co‐occurrence condition on perceived causal connection revealed a significant effect of cause condition, F(1, 207) = 14.74, p < .001, and also a significant effect of co‐occurrence condition, F(1, 207) = 4.28, p = .040. The interaction effect between cause and co‐occurrence condition was not significant, p = .426. In addition, an ANOVA of co‐occurrence condition, cause condition, and the interaction between co‐occurrence and cause condition on perceived co‐occurrence revealed only a significant effect of co‐occurrence condition, F(1, 207) = 91.10, p < .001, but no effect of cause condition, p = .157, nor an interaction effect between co‐occurrence and cause condition, p = .586. These analyses suggest that, indeed, cause and co‐occurrence are orthogonal manipulations, and thus should not be seen as manipulations of the same construct.

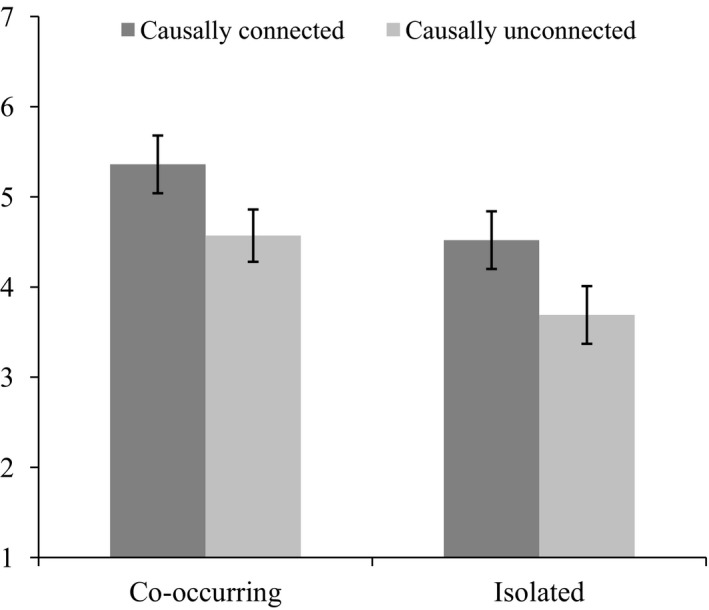

To test our main hypothesis that conspiracy explanations are affected by the perception of causal connection, over and above co‐occurrence perception, we ran an ANOVA with the two between‐subjects manipulations as fixed factors and the tendency to explain events as conspiracies as dependent variable (Figure 1). This analysis revealed a main effect of co‐occurrence, F(1, 207) = 7.46, p = .007, such that events are more likely to be explained as conspiracies if they happen in a cluster of similar events (M = 4.93, SD = 2.32) rather than as relatively isolated (M = 4.11, SD = 2.28). In addition, we found a main effect of causal connection, F(1, 207) = 6.66, p = .011. That is, events are more likely to be explained as conspiracies if they are seen as causally connected (M = 4.94, SD = 2.16) versus unconnected to other similar events (M = 4.17, SD = 2.43). We did not find a significant interaction effect between co‐occurrence perception and causal connection, p = .954. Hence, these findings underscore our reasoning that inferring causal connection affects conspiracy belief, whether or not there is an underlying co‐occurrence.

Figure 1.

Mean ratings of conspiracy explanation as a function of co‐occurrence perception (co‐occurring vs. isolated) and causal connection (causally connected vs. causally unconnected; Study 4)
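Effect sizes for the F tests in this section can be recovered from each F statistic and its degrees of freedom. The sketch below assumes that partial eta squared is the effect size of interest, which is an assumption and not something stated in the text here. The function name and dictionary labels are hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics as reported in the Results, all with df = (1, 207).
reported_f = {
    "cause condition -> perceived causal connection": 14.74,
    "co-occurrence condition -> perceived causal connection": 4.28,
    "co-occurrence condition -> perceived co-occurrence": 91.10,
    "co-occurrence condition -> conspiracy explanation": 7.46,
    "cause condition -> conspiracy explanation": 6.66,
}

effect_sizes = {
    label: partial_eta_squared(f_value, df_effect=1, df_error=207)
    for label, f_value in reported_f.items()
}
for label, eta in effect_sizes.items():
    print(f"{label}: {eta:.3f}")
```

On this reading, the co‐occurrence manipulation had a far larger effect on its own manipulation check than either manipulation had on conspiracy explanations.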

Discussion

The present study produced three novel and important effects. First, participants favored conspiracy explanations when they were told that events were causally connected (vs. causally unconnected). This provides experimental evidence that conspiracy thinking is promoted by the perception that events are causally connected. Second, participants favored conspiracy explanations for events that were preceded (vs. not preceded) by similar events in the recent past. This finding addresses a largely neglected question, namely what types of events are most likely to attract conspiracy thinking (but see Leman & Cinnirella, 2007; for evidence that conspiracy explanations are more likely for events that are especially socially significant). Kovic and Füchslin (2018) found that conspiracy thinking is elicited by highly improbable events. The present findings are broadly consistent with that result insofar as the focal event may be seen as less probable in the context of a rash of similar events. Third, and most important to our central argument, the first two effects were independent of each other: Seeing events as causally connected increased conspiracy belief irrespective of whether events co‐occurred or were relatively isolated. This suggests that conspiracy theories are a manifestation of a desire to impose a causal interpretation on events, whether or not those events comprise an objective co‐occurrence.

General Discussion

Previous research and theory have suggested that conspiracy thinking is determined by the desire to impose meaning and order on the world, and so should be related to the perception of patterns in the environment (Newheiser et al., 2011; Whitson & Galinsky, 2008). More recently, researchers have investigated the more specific relation between conspiracy thinking and perceptions of causal connections between events (Dieguez et al., 2015; van Prooijen et al., 2018). The primary focus of this theory and research has been on illusory pattern perception in the case of random event sequences in which no objective pattern is present. By definition, no causal connections exist between random events, meaning that perceptions of pattern in such events depend on implausible causal inferences, that is, an erroneous process of “connecting the dots” (van Prooijen et al., 2018) among dots that are necessarily unconnected. However, conspiracy theories generally do not attempt to explain genuinely random events. Rather, they explain events for which there is a multitude of causes, but where observers rely on second‐hand, ambiguous, or contested causal information (Douglas & Sutton, 2011).

We therefore attempted to build on previous research by examining whether conspiracy thinking is informed by implausible causal inferences even when events have some objective causal structure. In Studies 1 and 2, this structure took the form of a spurious correlation between events, for which some causal inferences are warranted, but not the specific, critical inference that a direct causal connection exists between the two focal events. Confirming predictions, conspiracy thinking was related to this specific causal inference, and not to the perception that the correlation was a coincidence or attributable to a third cause. In Studies 3 and 4, it took the form of temporal contiguity, such that the target event occurred as part of a streak of similar events (vs. in isolation). Confirming predictions, whether measured (Study 3) or manipulated (Study 4), the inference that these events were causally connected was shown to inform conspiracy explanations for the most recent event in the sequence.

The present research therefore indicates that conspiracy thinking is driven by a general permissiveness in causal inference—specifically, the willingness to perceive causal connections where none are likely. This tendency is well‐established in research on causal inference, where it has been dubbed “the illusion of causality” (Blanco & Matute, 2018, p. 45), and contributes to pseudoscientific beliefs (Blanco & Matute, 2018), the rejection of science (Rutjens, Heine, Sutton, & van Harreveld, 2018), and belief that misfortunes are cosmic punishments for objectively unrelated wrongdoings (Callan et al., 2014). It is responsible for the perception of pattern in random event sequences (van Prooijen et al., 2018), and as the present studies indicate, for causal inferences linking events that comprise part of some objective pattern but which are not causally related to each other.

Permissive and unconventional causal thinking is captured by the individual difference variable magical ideation (Eckblad & Chapman, 1983), which has been shown to be related to conspiracy thinking (Darwin et al., 2011; Douglas et al., 2016; Lobato et al., 2014). In Study 2, we found this variable to be related not only to conspiracy thinking but to the perception of direct causal relations between spurious correlates. Our findings suggest that magical and conspiracy beliefs are linked not only because each is (in general) “epistemically unwarranted” (Lobato et al., 2014, p. 617), but because each reflects a common psychological process, namely the imposition of physically implausible causal interpretations on co‐occurrences.

Relation to Previous Studies of Pattern Perception and Conspiracy Belief

The present studies converge with other evidence to suggest that conspiracy belief is associated with the motivated perception of meaning and order in the environment. Whitson and Galinsky (2008) found that both conspiracy thinking and the perception of pattern in noisy visual arrays were affected by a manipulation of powerlessness, but did not report the correlation between the two. In Study 2, we observed a statistically significant correlation between conspiracy beliefs and visual pattern perception. We also found that the relation between conspiracy belief and implausible causal inferences linking spurious correlates was significant even adjusting for visual pattern perception. This finding suggests that conspiracy beliefs are informed more specifically by implausible causal inferences. As we have seen, this conclusion resonates strongly with the conclusions of van Prooijen et al. (2018) who showed that such causal inferences, in the context of random stimuli, were associated with and causative of conspiracy thinking.

Although largely consistent with the findings and conclusions of van Prooijen et al. (2018), our results diverge from one of their results in a subtle but important way. Van Prooijen et al. (2018) report one finding that suggests that pattern perception may be associated with conspiracy thinking only when stimuli are unstructured. Specifically, in their Study 3, they found that only perceptions of pattern in unstructured paintings (by Pollock) were associated with conspiracy belief, whereas perceptions of pattern in structured paintings (by Vasarely) were not.6 We suggest that this might be due to a key methodological difference. The critical tasks in the present studies involved judgments about event sequences, rather than visual stimuli such as paintings. The difference is not just superficial, but may be conceptually important. For example, the perception of patterns in visual stimuli such as paintings and Whitson and Galinsky's (2008) snowy images may not depend on causal inferences but rather on gestalt perceptual processes. Indeed, magical ideation (in Study 2; see Table 3) was unrelated to visual pattern perception. Further, since paintings (unlike most event sequences in everyday life) are deliberately created by artists, there is always an unambiguous causal attribution for a clearly apparent structure: That is, it was put there by the artist. This means that (at least) when paintings are clearly structured, the perception of pattern in them might be decoupled from the causal reasoning processes that underpin conspiracy belief.

Further, while the present results affirm others that have also found a relationship between conspiracy belief and perceptions of pattern and non‐randomness (van Prooijen et al., 2018; Whitson & Galinsky, 2008), they are at odds with results reported by Dieguez et al. (2015), which found no such relationship. One potential explanation might appeal to the social and ecological meaningfulness of the stimuli. Pattern perception appears to be relevant to conspiracy thinking when the stimuli are (or could be) meaningful in everyday life, as is the case with pictures that represent objects or convey ideas and emotions, and coin tosses, which are typically performed when something is at stake. In contrast, Dieguez et al. (2015) presented sequences of Xs and Os, and did not describe to participants what they represented, or what was at stake in these sequences. If stimuli do not have a clear apparent or potential social meaning, they may not activate the sense‐making motivations that are thought to underpin conspiracy theories (Douglas, Sutton, & Cichocka, 2017). Another important feature of some of their conditions is that a potential cause for any order was introduced (i.e., a cheat who was influencing the outcome). This may have stripped the task of some of the critical causal ambiguity that seems to be needed for the thought processes that are characteristic of conspiracy thinking to be detectable. A final point is that Dieguez et al.'s measure of beliefs about randomness versus determination was rigorous and sophisticated, but it is not known how it relates to the measures used in our studies, nor those by Whitson and Galinsky (2008) and van Prooijen et al. (2018).

Limitations and Future Directions

Our studies have some important limitations. They relied on online MTurk samples and did not incorporate actual events that may have happened in the (recent) past. Future research should examine a broader, more representative range of participants and stimulus events. Further, the present studies were not always successful in cleanly distinguishing the causal interpretation of event sequences from the perception of apparent patterns in those sequences. For reasons we have noted, including the covariation principle in causal reasoning, we can expect perceptions of co‐occurrence and causal inferences to be related under normal circumstances. Future studies could go further in developing tasks that cleanly separate the two processes. For example, in pictorial stimuli, it could be useful to ask participants not only whether they see a pattern (van Prooijen et al., 2018, Study 3) or an object (Whitson & Galinsky, 2008) in a visual array, but whether they think the pattern could have emerged by chance or whether it must have been determined.

Future research could also determine whether a tendency to interpret event sequences in causal terms mediates several of the effects in the conspiracy theory literature, and so offers an organizing theoretical framework for them. For example, thwarting needs to belong (Graeupner & Coman, 2017) and to achieve cognitive closure (Marchlewska et al., 2017), and activating powerlessness (Whitson & Galinsky, 2008) have been shown to increase conspiracy thinking. They are also likely to result in heightened readiness to draw causal connections between events in an effort to impose meaning on experience. The conjunction fallacy—the tendency to see explanations with two premises as more plausible than those with one—has also been linked both to conspiracy theory (Brotherton & French, 2014) and to the perception of underlying causal mechanisms (Ahn & Bailenson, 1996).

Concluding Remarks

Since the earliest research on conspiracy theories, scholars have seen conspiracy belief as an attempt to find order in the environment. The present results are consistent with this perspective, but show that whether or not co‐occurrences exist (and are perceived to exist), conspiracy thinking is fueled by implausible causal interpretations of those events: specifically, the perception of direct causal connections between events that are unlikely to be so connected. Thus, Hugo Chavez's conspiracy thinking was fueled by the fact that not just one, but several, Latin American leaders were stricken by cancer in the early years of this decade—consistent with the novel finding, in our final study, that events are more likely to be explained as conspiracies if they are part of a series of similar events, rather than one‐offs. Crucially, Chavez's conspiracy thinking was also fueled by his view that this co‐occurrence in events reflected a causal connection between them.

The philosopher Mackie (1980) termed causality the “cement of the universe.” By this, Mackie meant that causality is a force, real or imagined, that links events together in people's minds, and enables people to understand and respond to relations between events. The rash of cancer diagnoses afflicting several Latin American leaders in the early years of this decade is most plausibly seen as a tragic coincidence, and cementing them together as Chavez did appears to be illegitimate. Nonetheless, each diagnosis should not necessarily be seen as an entirely random event: It could also be seen as the product of a confluence of genetic, environmental and lifestyle factors. More generally, the events that conspiracy theories typically seek to explain are not entirely random or unrelated to other events. Natural disasters, diseases, personal deaths and tragedies, election results, and socio‐political circumstances do not occur in a causal vacuum but are enmeshed in a complicated matrix of causes. Nonetheless, even though conspiracy thinking takes place in contexts where events are seldom entirely random, it appears to be characterized by a willingness to draw imaginary causal connections between events.

Conflict of Interest

The authors confirm they have no conflict of interest to declare. Authors also confirm that this article adheres to ethical guidelines specified in the APA Code of Conduct as well as the authors’ national ethics guidelines.

Conspiracy Belief (Study 1)

Please indicate how much you agree with each statement by selecting the appropriate response in each case (1 = strongly disagree, 7 = strongly agree):

1. The Federal Reserve System is designed to transfer wealth from the poor and middle classes of the United States to a group of unknown international elites.

2. Forced transition to digital television broadcasting is intended to facilitate subliminal advertising.

3. Media outlets try to hide some government actions, claiming those military operations are actually terrorist actions.

4. A group of international elites controls and manipulates governments, industry, and media organizations worldwide.

5. Some chronic diseases could be treated using medical and pharmaceutical developments that are obscured by the pharmaceutical industry interests.

6. Pope Benedict XVI resigned because he himself was part of the Roman Catholic sex abuse scandals.

Items from Douglas and Sutton (2011):

7. There was no conspiracy involved in the assassination of John F. Kennedy.

8. Princess Diana's death was an accident.

9. The AIDS virus was created in a laboratory.

10. The attack on the Twin Towers was not a terrorist action but a governmental conspiracy.

11. The American moon landings were faked.

12. Princess Diana had to be killed because the British government could not accept that the mother of the future king was involved with a Muslim Arab.

13. Efficient alternative energy sources were developed but kept into obscurity by petroleum companies.

14. Governments are suppressing evidence of the existence of aliens.

Conspiracy Belief (Study 2)

Please indicate how much you agree with each statement by selecting the appropriate response in each case (1 = strongly disagree, 7 = strongly agree):

1. Lee Harvey Oswald collaborated with the CIA in assassinating President John F. Kennedy.

2. A small, secret group of people is responsible for making all major world decisions, such as going to war.

3. Technology with mind‐control capacities is used on people without their knowledge.

Items from Douglas and Sutton (2011):

4. Governments are suppressing evidence of the existence of aliens.

5. The American moon landings were faked.

6. The AIDS virus was created in a laboratory.

7. The attack on the Twin Towers was not a terrorist action but a governmental conspiracy.

8. There was an official campaign by MI6 to assassinate Princess Diana, sanctioned by elements of the establishment.

Causal Interpretation of Spurious Correlations (CISC: Study 1 and Study 2)

Often different variables are strongly correlated, although the reasons for this relation are not understood. You will now be asked to think about how different variables or events relate to each other. That is, you will have to think about the relation between events that were demonstrated to be highly correlated:

It has been shown that an increase in the number of storks is associated with an increase in the number of children.

It has been shown that an increase in people's income is associated with more visits to the hospital.

It has been shown that an increase in body lice is associated with an increase in health.

It has been shown that an increase in chocolate consumption is associated with an increase in Nobel prize winners in a country.

It has been shown that an increase in the amount of US spending on science, space and technology is associated with an increase in suicides by hanging, strangulation and suffocation.

It has been shown that an increase in the average global temperature is associated with an increase in the National Science Foundation budget.

For each of the six combinations, please rate how much you agree with the following possible explanations of the relation between the two events (1 = strongly disagree, 9 = strongly agree):

This is a causal relation. One event caused (directly or indirectly) the other.

This relation is a random coincidence.

This relation is explained by a third variable that affects the prevalence of both events.

Natural and Human Scenarios (Study 3)

Whale stranding (natural)

Most recent event

A pod of over 200 pilot whales stranded on a remote beach in the North Island of New Zealand. Despite the efforts of hundreds of volunteers, the majority of the whales tragically died.

Previous events

In the past three years, there have been four such incidents of whale strandings in the area.

In the first such incident, a pod of 15 sperm whales were stranded on the same beach and locals were able to rescue all but two of them.

In the next incident, some fifteen months later, a pod of over 150 pilot whales beached themselves in a bay only 10 km to the south.

About a year after that, a pod of about 60 pilot whales were beached on the next bay to the north. About half of them were rescued by volunteers.

Volcano eruption (natural)

Most recent event

A volcano in the Philippines that was thought to be dormant suddenly erupted, causing a pyroclastic flow (mud and ash) that demolished a small village, killing 47 people.

Previous events

In the past two years, three other volcanoes in the region have erupted unexpectedly.

One such eruption, 50 km to the southeast, obliterated a small, uninhabited island and caused ash deposits of up to 20 cm to fall on neighboring islands.

Another eruption lasted several days and caused the formation of a new island a further 100 km to the east.

The first of these eruptions killed 105 people in an island to the north, and created a peninsula that extended the island a further 750 m into the sea.

Swine flu (natural)

Most recent event

In a southern province of China, a new strain of swine flu was identified at a local farm. Thousands of pigs were culled and strict quarantine measures were imposed.

Previous events

In the past two years, there have been three similar outbreaks of new strains of the virus in the same province.

One was caught early when an alert inspector at an agricultural market noticed flu‐like symptoms in some sows as they arrived to be sold. They were immediately isolated and only the one local farm needed to be quarantined.

Another was much more disastrous. A new strain emerged at a mixed poultry and pig farm and rapidly spread to neighboring farms, resulting in the immediate shutdown of agricultural production in the province, and culling on a scale that has not been officially disclosed.

Shortly before the most recent case, a new strain emerged in a remote village, and there were initial reports that the virus had crossed the species barrier to infect several villagers. However, the outbreak was contained effectively and tests confirmed that the humans were infected with a normal strain of human influenza.

Journalist's death (human)

Most recent event

A journalist investigating local political affairs was found dead in her apartment with a suicide note and an open bottle of pills.

Previous events

In the past eighteen months, three other journalists in the same city have died suddenly.

One of them was struck and killed outside her home in a hit‐and‐run after returning home from covering a late‐night council meeting. The driver was never found.

Another was discovered dead at the bottom of a cliff after an extensive missing persons search, having apparently committed suicide.

A fourth journalist was shot and killed in a local backstreet after an apparent botched robbery.

Mayor's sudden illness (human)

Most recent event

The mayor of a large city was left severely disfigured and largely blind after suffering an apparent toxic reaction following a dinner function. Doctors were unable to identify the toxin. She remains in hospital, is not expected to recover her sight, and is reliant on dialysis until a kidney transplant can be arranged.

Previous events

She was the third politician in the region to have become very ill as a result of a toxic reaction in the last year.

Some two months previously, the elected official responsible for the city's finances abruptly lost consciousness during a council meeting. Although no lasting damage appears to have been done to his health, he was in hospital for approximately two weeks with severe drowsiness, vomiting, and a rash. Doctors were unable to find a cause for the symptoms and attributed them to a reaction to an unknown poison.

Around six months before that, the official responsible for planning and developments fell ill while visiting business interests in the surrounding countryside, experiencing anaphylaxis and temporary organ failure. The official is on long‐term leave until he is well enough to return to work.

Please rate how much you agree with the following statements about the events described (1 = strongly disagree, 9 = strongly agree):

There is a causal connection between these events.

These events have a common underlying cause.

These events are causally connected.

There seems to be a pattern to these events.

These events seem to comprise a group or cluster.

These events appear to have occurred closer together in time than one would normally expect.

Any apparent pattern, similarity or increased frequency in these events is a coincidence.

Any apparent pattern, similarity, or increased frequency in these events is probably due to chance.

In your own words, please explain what you think might have caused the most recent event (i.e., the journalist investigating local political affairs being found dead in her apartment with a suicide note and an open bottle of pills/the mayor's sudden illness)?

What do you think caused the most recent event (i.e., the journalist investigating local political affairs being found dead in her apartment with a suicide note and an open bottle of pills/the mayor's sudden illness)? (1 = strongly disagree, 9 = strongly agree)

Her death was a suicide/It was an accident.

She was killed/poisoned by a sole person acting alone (not as part of a plot).