Abstract

Study Design

Cross-sectional study.

Purpose

To assess the quality of anterior cervical discectomy and fusion (ACDF) videos available on YouTube and identify factors associated with video quality.

Overview of Literature

Patients commonly use the internet as a source of information regarding their surgeries. However, there is currently limited information regarding the quality of online videos about ACDF.

Methods

A search was performed on YouTube using the phrase ‘anterior cervical discectomy and fusion.’ The Journal of the American Medical Association (JAMA), DISCERN, and Health on the Net (HON) systems were used to rate the first 50 videos obtained. Information about each video was collected, including the number of views, the duration since the video was posted, percentage positivity (defined as the number of likes the video received divided by its total number of likes and dislikes), the number of comments, and the author of the video. Relationships between video quality and these factors were investigated.

Results

The average number of views for each video was 96,239. The most common videos were those published by surgeons and those containing patient testimonies. Overall, the video quality was poor, with mean scores of 1.78/5 using the DISCERN criteria, 1.63/4 using the JAMA criteria, and 1.96/8 using the HON criteria. Surgeon authors’ videos scored higher than patient testimony videos when reviewed using the HON or JAMA systems. However, no other factors were found to be associated with video quality.

Conclusions

The quality of ACDF videos on YouTube is low, with the majority of videos produced by unreliable sources. Therefore, these YouTube videos should not be recommended as patient education tools for ACDF.

Keywords: Cervical spine, Anterior cervical discectomy and fusion, Patient education as topic, YouTube

Introduction

The internet allows access to a vast range of information. Health-related searches are common, comprising 4.5% of queries entered into a search engine [1]. Furthermore, the majority of patients report the use of the internet for obtaining information about medical conditions [2]. For patients attending elective spinal outpatient clinics, the use of the internet to research their condition is common [3,4]. YouTube is the most popular video website in the world, with >1 billion users [5]. However, health-related videos that are posted on YouTube are not subjected to peer review or regulated in any way.

The majority of patients believe that the health information found on the internet is equal to or better than the information provided by their doctor, and the majority of patients who use the internet as a source of medical information do not tell their doctor about their search results [2,6]. If the standard of information found on the internet is indeed low, this creates an undesirable situation in which the patient receives potentially incorrect information and the doctor-patient relationship is simultaneously undermined. Thus, it is important to determine the quality of online videos related to various healthcare subjects.

Several studies have assessed health-related videos on YouTube, and the quality of those videos was found to be generally low [7-10]. Specific to spinal surgery, Brooks et al. [11] found that the quality of information in YouTube videos on lumbar discectomy was poor, with Staunton et al. [12] reporting similar findings for scoliosis videos. Furthermore, the YouTube search algorithm does not rank high-quality videos prominently [8], although there is evidence that the number of views a video has correlates with its quality [12]. To our knowledge, this is the first study assessing the quality of YouTube videos on ACDF.

Our aim was to determine the quality of YouTube videos on ACDF using three validated scoring systems and to identify any variables that were predictive of a higher score.

Materials and Methods

A search query for the phrase ‘anterior cervical discectomy and fusion’ (ACDF) was performed on YouTube on March 5th, 2016. The first 50 videos were collected and included in the study. The search was conducted with the language set to English (United States). No filters were used.

The following data were collected for each of the videos: number of views, duration since the video was posted, percentage positivity (defined as the number of likes the video received divided by its total number of likes and dislikes), number of comments, and author category. Author categories included patient testimonies, spinal surgeon, and other (paramedic companies, medical engineering companies, or media teams).
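The percentage positivity defined above can be sketched as a short function. This is only an illustration of the stated definition: the function name and the handling of videos with no ratings (returning `None`) are assumptions, as the text does not say how such videos were treated.

```python
from typing import Optional


def percentage_positivity(likes: int, dislikes: int) -> Optional[float]:
    """Likes as a percentage of all ratings (likes plus dislikes)."""
    if likes < 0 or dislikes < 0:
        raise ValueError("counts must be non-negative")
    total = likes + dislikes
    if total == 0:
        # Assumed handling: the ratio is undefined when a video has no ratings.
        return None
    return 100.0 * likes / total


# Hypothetical counts, not taken from the study data.
print(percentage_positivity(47, 3))  # 94.0
```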

The videos were viewed and graded independently by each author using the Journal of the American Medical Association (JAMA), DISCERN, and Health on the Net (HON) ranking systems. The JAMA ranking system is scored out of four points, with the categories being authorship, attribution, currency, and disclosure [13]. DISCERN is a tool that assesses the reliability and quality of a publication through a 15-part questionnaire [14]. Each question is scored out of 5 points, with the mean score across the 15 questions used as the final score for the video. The HON ranking system scores eight separate criteria, including authoritativeness, transparency, and financial disclosure [6].
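As a rough sketch of how the scores described above could be computed, the following assumes one point per JAMA criterion met and DISCERN items rated on the usual 1 to 5 scale; the function names and input layout are hypothetical. A HON score could be counted in the same way as the JAMA score, over its eight criteria.

```python
from statistics import mean
from typing import Dict, List

JAMA_CRITERIA = ("authorship", "attribution", "currency", "disclosure")


def jama_score(criteria_met: Dict[str, bool]) -> int:
    """Count how many of the four JAMA criteria a video meets (0 to 4)."""
    return sum(1 for name in JAMA_CRITERIA if criteria_met.get(name, False))


def discern_score(item_scores: List[int]) -> float:
    """Mean of the 15 questionnaire items, each scored out of 5."""
    if len(item_scores) != 15:
        raise ValueError("expected 15 item scores")
    if any(not 1 <= s <= 5 for s in item_scores):
        raise ValueError("each item must be scored from 1 to 5")
    return float(mean(item_scores))


# Hypothetical ratings for a single video by a single rater.
print(jama_score({"authorship": True, "currency": True}))  # 2
print(round(discern_score([2] * 10 + [1] * 5), 2))  # 1.67
```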

Interobserver reliability was assessed using the intraclass correlation coefficient (ICC) for each of the three ranking systems; values >0.7 were considered to indicate good correlation. The mean score from both authors was used for subsequent analysis. Linear regression was used to analyze the association between the assigned scores and the video length, number of views, percentage positivity, number of comments, and age of the video. Linear regression was also used to analyze the relationship between assigned scores and the number of views and comments after these factors had been controlled for the age of the video. Analysis of variance was used to analyze the relationship between video ratings and author category. All statistical analyses were performed using IBM SPSS ver. 24.0 (IBM Corp., Armonk, NY, USA).
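The interobserver reliability step can be illustrated with a minimal sketch. The text does not state which ICC model was used, so the two-way random-effects, absolute-agreement, single-rater form, ICC(2,1), is assumed here; the function name and the example ratings are hypothetical, and the analysis itself was run in SPSS rather than with this code.

```python
from statistics import mean
from typing import List


def icc_2_1(ratings: List[List[float]]) -> float:
    """ICC(2,1) from a table with one row per video and one column per rater."""
    n = len(ratings)  # number of videos
    k = len(ratings[0]) if n else 0  # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need at least 2 videos and 2 raters, no missing scores")

    grand = mean([x for row in ratings for x in row])
    row_means = [mean(row) for row in ratings]
    col_means = [mean([row[j] for row in ratings]) for j in range(k)]

    # Two-way ANOVA decomposition without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denom = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denom == 0:
        raise ValueError("ICC is undefined when the ratings have no variance")
    return (ms_rows - ms_error) / denom


# Hypothetical scores from two raters for five videos.
scores = [[1.5, 2.0], [2.0, 2.0], [1.0, 1.5], [3.0, 2.5], [2.5, 2.5]]
icc = icc_2_1(scores)
print(f"ICC(2,1) = {icc:.2f}; good correlation (>0.7): {icc > 0.7}")
```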

Results

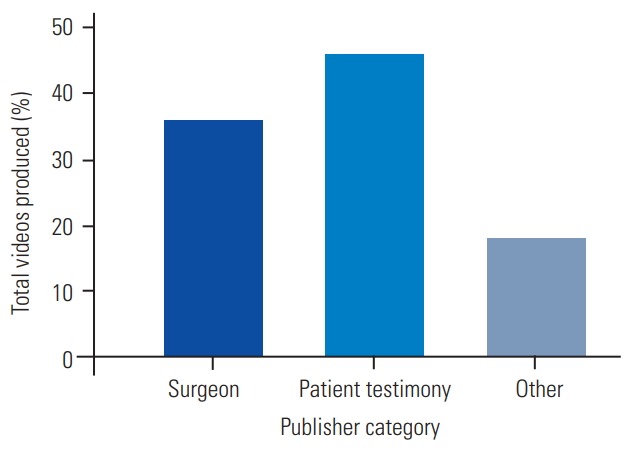

Of the 17,200 videos returned by our search query, the first 50 were analyzed. The mean±standard deviation (SD) number of views was 96,239±271,000 (range, 44–1,745,843), and the combined number of views was 4,811,958. Comments were disabled for three videos; the mean±SD number of comments on the remaining videos was 56±79 (range, 0–373). On average, the videos had been online for 3.23±2.04 years (range, 0.39–8.68 years). The mean±SD video length was 10.35±16.5 minutes. The average positivity was 94%±0.06%. Surgeons authored 36% of the videos, 46% were patient testimonies, and other authors, mainly media companies, posted the remaining 18% (Fig. 1).

Fig. 1. Percentage of videos produced by different author categories.

ICC values were >0.7 for HON and DISCERN; however, the value for JAMA was just below, at 0.68. Mean (±SD) HON score was 1.96/8 (±0.83) with range 0.5–4.5, mean (±SD) DISCERN score was 1.78/5 (±0.58) with range 1.03–3.53, and mean (±SD) JAMA score was 1.63/4 (±0.44) with range 1–3.

The assigned video quality for each of the three scoring systems was not significantly associated with the following variables: video length, number of views, age of video, number of comments, or percentage positivity. There remained no significant association for views or comments even after they had been adjusted for the age of the video.
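One way to picture the age adjustment is to remove the linear effect of video age from both the score and the view count, and then regress the residuals on each other; the resulting slope equals the coefficient for views in a regression that also includes age. This is a sketch under the assumption that the adjustment was a linear covariate adjustment, which the text does not specify. The function names and data are hypothetical, and no significance test is shown.

```python
from statistics import mean
from typing import List, Tuple


def simple_ols(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept of y = intercept + slope * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x has no variance")
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return slope, my - slope * mx


def residuals(x: List[float], y: List[float]) -> List[float]:
    """Part of y left over after removing its linear dependence on x."""
    slope, intercept = simple_ols(x, y)
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


def age_adjusted_slope(score: List[float], views: List[float], age: List[float]) -> float:
    """Slope of score on views after partialling age out of both variables."""
    slope, _ = simple_ols(residuals(age, views), residuals(age, score))
    return slope


# Hypothetical data: age in years, views in thousands, quality score.
age = [1.0, 2.0, 3.0, 4.0, 5.0]
views = [10.0, 5.0, 8.0, 20.0, 12.0]
score = [1.4, 1.9, 1.6, 2.2, 1.8]
print(age_adjusted_slope(score, views, age))
```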

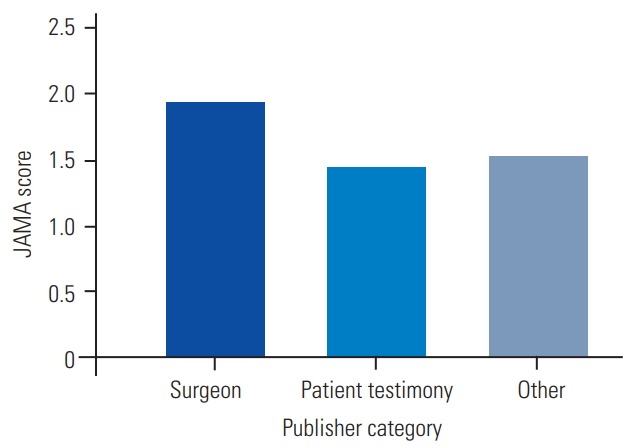

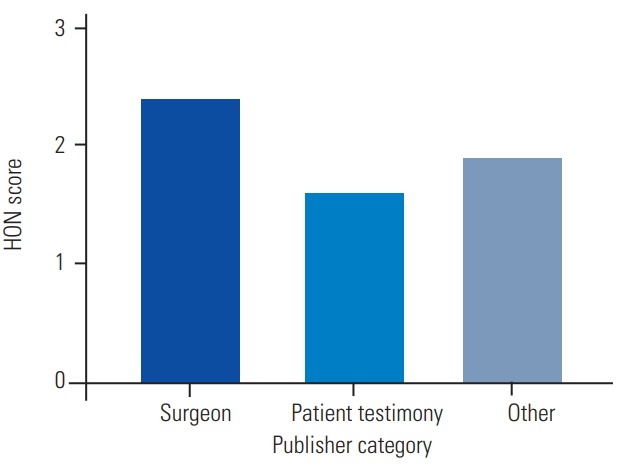

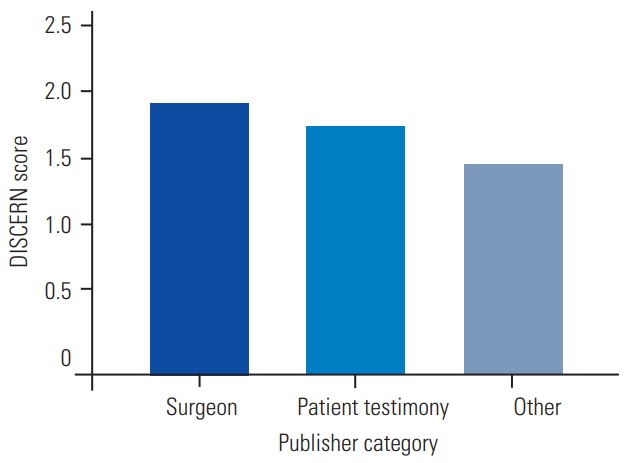

When scored with the JAMA or HON systems, surgeon-authored videos scored significantly higher than patient testimony videos (p<0.05), but not significantly higher than videos classified as ‘other’ (Figs. 2, 3). Author category was not significantly associated with DISCERN score (Fig. 4).

Fig. 2. The Journal of the American Medical Association (JAMA) scores of individual author categories.

Fig. 3. Health on the Net (HON) scores of individual author categories.

Fig. 4. DISCERN scores of individual author categories.

Discussion

The average quality of YouTube videos on ACDF is low when scored using the JAMA, DISCERN, or HON systems of video assessment. The HON and DISCERN scoring systems were considered to have good ICC, but the JAMA ICC value was slightly below 0.7. Videos made by surgeons scored significantly better than those containing patient testimonies when scored using the HON and JAMA criteria.

These findings are perhaps not surprising, given that the quality of YouTube videos on various medical and surgical issues has been previously graded, with the general trend being low quality [7-10]. Specific to spinal surgery, the quality of YouTube videos on lumbar discectomy [11] and scoliosis [12] has also been previously graded as poor. Indeed, Staunton et al. [12] gave an average JAMA score of 1.32 to the scoliosis videos they assessed, which is similar to the average score obtained in our analysis, suggesting that these poor scores are not limited to videos on one particular surgical procedure or condition.

The majority of videos in our study were produced by non-physicians, with 46% of videos being patient testimonies. This number is slightly higher than the 38% reported in a similar study on scoliosis videos [12]. The increase in the number of videos produced by patients is not unique to our study, with a recent systematic review finding that a significant number of healthcare-related YouTube videos contained information considered anecdotal in nature [15]. Younger patients are more likely to search for anecdotal healthcare information. Therefore, counseling on the quality of these sources is particularly important for such patients [16]. There is little to no regulation of such videos, and authors are not required to provide evidence that they have indeed had the surgery.

When assessing the videos using the HON or JAMA criteria, videos published by surgeons scored significantly higher than those containing patient testimonies, but no other factors were found to be significantly associated with a higher score. Previous studies have found that medical information published on the internet by physicians is of a higher quality than that published by non-physicians [17]. However, there is evidence that the average viewer has difficulty engaging with or understanding videos produced by medical professionals [18].

One limitation of our study is that we only assessed the first 50 of the 17,200 videos returned by our search query. Videos not ranked highly by the sorting mechanism on YouTube were therefore excluded, and our findings may not generalize to all videos. However, in practical terms, the quality of the first 50 videos is the most important, as these are the ones most likely to be viewed by patients. Additionally, our query was only performed in English, and ACDF videos published in other languages were not assessed.

Watching these videos may provide benefits beyond the objective criteria measured by our three scoring systems. Listening to the story of a patient who underwent a similar surgery may assuage fears the patient has or answer questions that medical professionals may not have considered. In contrast, a patient testimony with a negative opinion may influence the patient unduly, particularly given that many patients are unable to determine bias in medically related publications [19]. Although difficult to measure, the qualitative nature of experiences cannot be completely disregarded. It is currently unclear whether the influence of qualitative bias on elective surgery is positive or negative.

An ideal ACDF YouTube video should reflect the informed consent process and include an explanation of the pathology of the condition, the treatment options, and the associated risks and benefits. Guidelines detailing how to produce appropriate online sources have previously been published [20]; however, it seems that the majority of YouTube video uploads do not currently adhere to these principles. This is perhaps expected, given the fact that medical professionals do not produce many such videos. As such, this study provides evidence that the spinal surgeon must ensure they verbally communicate information about ACDF clearly and succinctly in addition to warning their patient about YouTube being an unreliable source of information.

Conclusions

The internet, YouTube in particular, has the potential to provide patients with easy access to large amounts of information on ACDF. However, the majority of these sources are currently of low quality, with few reliable variables to predict quality. Doctors should warn patients about the limitations of YouTube videos and direct them toward more appropriate sources of information.

Footnotes

No potential conflict of interest relevant to this article was reported.

Author Contributions

Christopher Dillon Ovenden is a medical student at the University of Adelaide and was involved in the planning of the study, the collection of data, and writing of the manuscript. Francis Michael Brooks is a qualified orthopaedic surgeon and was involved in the planning of the study, the collection of data, and revision of the prepared manuscript.

References

- 1. Eysenbach G, Kohler Ch. What is the prevalence of health-related searches on the World Wide Web? Qualitative and quantitative analysis of search engine queries on the internet. AMIA Annu Symp Proc. 2003:225–9.

- 2. Diaz JA, Griffith RA, Ng JJ, Reinert SE, Friedmann PD, Moulton AW. Patients’ use of the Internet for medical information. J Gen Intern Med. 2002;17:180–5. doi: 10.1046/j.1525-1497.2002.10603.x.

- 3. Baker JF, Devitt BM, Kiely PD, et al. Prevalence of Internet use amongst an elective spinal surgery outpatient population. Eur Spine J. 2010;19:1776–9. doi: 10.1007/s00586-010-1377-y.

- 4. Bao H, Zhu F, Wang F, et al. Scoliosis related information on the internet in China: can patients benefit from this information? PLoS One. 2015;10:e0118289. doi: 10.1371/journal.pone.0118289.

- 5. YouTube. Statistics [Internet]. San Bruno (CA): YouTube; [cited 2016 Mar 25]. Available from: http://www.youtube.com/yt/press/statistics.html.

- 6. Health On the Net Foundation. HONcode [Internet]. Geneva: Health On the Net Foundation; [cited 2016 Apr 27]. Available from: https://www.hon.ch/HONcode/Pro/Visitor/visitor.html.

- 7. Fischer J, Geurts J, Valderrabano V, Hugle T. Educational quality of YouTube videos on knee arthrocentesis. J Clin Rheumatol. 2013;19:373–6. doi: 10.1097/RHU.0b013e3182a69fb2.

- 8. Ho M, Stothers L, Lazare D, Tsang B, Macnab A. Evaluation of educational content of YouTube videos relating to neurogenic bladder and intermittent catheterization. Can Urol Assoc J. 2015;9:320–54. doi: 10.5489/cuaj.2955.

- 9. Mukewar S, Mani P, Wu X, Lopez R, Shen B. YouTube and inflammatory bowel disease. J Crohns Colitis. 2013;7:392–402. doi: 10.1016/j.crohns.2012.07.011.

- 10. Syed-Abdul S, Fernandez-Luque L, Jian WS, et al. Misleading health-related information promoted through video-based social media: anorexia on YouTube. J Med Internet Res. 2013;15:e30. doi: 10.2196/jmir.2237.

- 11. Brooks FM, Lawrence H, Jones A, McCarthy MJ. YouTube™ as a source of patient information for lumbar discectomy. Ann R Coll Surg Engl. 2014;96:144–6. doi: 10.1308/003588414X13814021676396.

- 12. Staunton PF, Baker JF, Green J, Devitt A. Online curves: a quality analysis of scoliosis videos on YouTube. Spine (Phila Pa 1976). 2015;40:1857–61. doi: 10.1097/BRS.0000000000001137.

- 13. Silberg WM, Lundberg GD, Musacchio RA. Assessing, controlling, and assuring the quality of medical information on the Internet: caveant lector et viewor: let the reader and viewer beware. JAMA. 1997;277:1244–5.

- 14. Discern Online. The Discern instrument [Internet]. San Mateo (CA): Discern Group Inc; [cited 2016 Apr 12]. Available from: http://www.discern.org.uk/discern_instrument.php.

- 15. Madathil KC, Rivera-Rodriguez AJ, Greenstein JS, Gramopadhye AK. Healthcare information on YouTube: a systematic review. Health Informatics J. 2015;21:173–94. doi: 10.1177/1460458213512220.

- 16. Lagan BM, Sinclair M, Kernohan WG. Internet use in pregnancy informs women’s decision making: a web-based survey. Birth. 2010;37:106–15. doi: 10.1111/j.1523-536X.2010.00390.x.

- 17. Tartaglione JP, Rosenbaum AJ, Abousayed M, Hushmendy SF, DiPreta JA. Evaluating the quality, accuracy, and readability of online resources pertaining to hallux valgus. Foot Ankle Spec. 2016;9:17–23. doi: 10.1177/1938640015592840.

- 18. Desai T, Shariff A, Dhingra V, Minhas D, Eure M, Kats M. Is content really king?: an objective analysis of the public’s response to medical videos on YouTube. PLoS One. 2013;8:e82469. doi: 10.1371/journal.pone.0082469.

- 19. Ullrich PF Jr, Vaccaro AR. Patient education on the internet: opportunities and pitfalls. Spine (Phila Pa 1976). 2002;27:E185–8. doi: 10.1097/00007632-200204010-00019.

- 20. Winker MA, Flanagin A, Chi-Lum B, et al. Guidelines for medical and health information sites on the internet: principles governing AMA web sites. American Medical Association. JAMA. 2000;283:1600–6. doi: 10.1001/jama.283.12.1600.