Abstract

Background

Until now, the use of technology in health care has been driven mostly by assumptions about the benefits of electronic health (eHealth) rather than by evidence of them. The body of evidence on the effectiveness and efficiency of eHealth is not proportionate to the number of interventions that are regularly conducted. Reliable evidence generated through comprehensive evaluation of eHealth interventions may accelerate the growth of eHealth toward long-term, successful implementation and help realize its benefits more fully.

Objective

This study aimed to understand how evidence of the effectiveness and efficiency of eHealth can be generated through evaluation. Specifically, we aimed to discern (1) how evaluation is conducted in distinct eHealth intervention phases, (2) which aspects of effectiveness and efficiency are typically evaluated during eHealth interventions, and (3) how eHealth interventions are evaluated in practice.

Methods

A systematic literature review was conducted to explore the evaluation methods for eHealth interventions, following the preferred reporting items for systematic reviews and meta-analyses (PRISMA) guidelines. We searched Google Scholar and Scopus for published papers that addressed the evaluation of eHealth or described an eHealth intervention study. The selected papers were then analyzed qualitatively in several steps.

Results

We examined how the process of evaluation unfolds in the distinct phases of an eHealth intervention. We found that, in practice and in several conceptual papers, evaluation is performed at the end of the intervention. Some studies discuss the importance of conducting evaluation throughout the intervention; however, we found no case study that did so in practice. For our second research question, we identified the aspects of efficiency and effectiveness that are proposed to be assessed during interventions. The aspects that recurred in the conceptual papers were clinical, human and social, organizational, technological, cost, ethical and legal, and transferability. However, the case studies reviewed mainly evaluated the clinical and the human and social aspects. At the end of the paper, we discuss a novel approach to evaluation. Our intention is to stimulate discussion around this approach in the hope that it may help gather evidence in a comprehensive and credible way.

Conclusions

The importance of evidence in eHealth has not been discussed as rigorously as the diverse evaluation approaches and evaluation frameworks have been. Further research directed toward evidence-based evaluation could not only improve the quality of intervention studies but also facilitate the successful long-term implementation of eHealth in general. We conclude that developing more robust and comprehensive evaluation of eHealth studies, or better validating evaluation methods, could ease the transferability of results among similar studies, freeing resources for further research in eHealth.

Keywords: evidence-based practice, program evaluation, systematic review, technology assessment

Introduction

Background

The use of electronic health (eHealth) is still driven by assumptions about its benefits rather than by evidence [1]. Over time, the trustworthiness and robustness of eHealth in facilitating safe and cost-efficient care have increasingly been questioned because of this lack of evidence [2]. This may make organizations and countries reluctant to invest in eHealth and to develop related policies [3].

The term eHealth was introduced in the 1990s [4]; however, it was hardly in use until 1999 [5]. According to Eysenbach [5]:

e-health is an emerging field in the intersection of medical informatics, public health and business, referring to health services and information delivered or enhanced through the Internet and related technologies.

Eysenbach argues that eHealth stands for more than the Internet and medicine [5]. In our study, eHealth is used as the broadest umbrella term, encompassing everything at the intersection of information and communication technology and health care, including telemedicine, mobile health, and health informatics.

Systematic evaluation can capture the evidence and criteria on which evaluative judgments are based and reduce sources of bias [6]. The quality of an evaluation is judged by the credibility of the evidence it assembles and by how that evidence is used to refine policies and programs [7]. Evaluating eHealth interventions is complex for several reasons (eg, the need for multidisciplinary collaboration [8], context dependency [9], and differing epistemological views on whether interventions should be treated as clinical studies or should also include social aspects [10-14]). Consequently, the way eHealth interventions are evaluated and reported varies widely, which makes it difficult to garner robust evidence.

Understanding evidence-based medicine (EBM) is relevant when discussing the importance of evidence in eHealth interventions, and a common question is how EBM can help generate such evidence. EBM has been defined as follows [15]:

Evidence-based medicine is the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients. The practice of EBM means integrating individual clinical expertise with the best available external clinical evidence from systematic research.

As per this definition, evidence in EBM is clearly tied to the clinical aspect. Although it is debated whether EBM is limited to randomized controlled trials [16] or not [15], EBM evidently does not usually consider anything outside clinical practice. However, eHealth interventions have more aspects to evaluate than the clinical ones. When evaluating eHealth interventions, an extensive assessment of all aspects, including sociotechnical ones, is needed throughout each phase of the technology's life cycle [10,11,17]. Hence, gathering evidence from eHealth interventions requires an evaluation process distinct from the one usually put forward within EBM.

Objective

Our objective was to elucidate how the evidence of effectiveness and efficiency of eHealth can be generated through evaluation. Consequently, a literature review was conducted to understand the evaluation process regarding both theories of eHealth and the practices in case studies of eHealth interventions. We decided to employ a broader perspective at the beginning of the review process to achieve our research objective. As the literature review progressed, our research objective narrowed, and the research questions were redefined several times. However, the objective was always to understand the evaluation of eHealth from a comprehensive perspective. It was pertinent to recognize the phases of eHealth interventions where evaluation occurs and the aspects of effectiveness and efficiency that are evaluated during such interventions. Our 3 research questions were as follows:

How is evaluation conducted in distinct eHealth intervention phases?

What aspects of effectiveness and efficiency are typically evaluated during eHealth interventions?

How have eHealth intervention case studies been evaluated?

Finally, we present an approach to evaluating eHealth interventions in the form of a model, the Evidence in eHealth Evaluation model. To our knowledge, this model is a novel way of looking at the evaluation of eHealth interventions to obtain comprehensive evidence. This conceptual model was based on the findings of the literature review.

Methods

Systematic Review

Identification and Screening

A systematic search of relevant literature was conducted following the preferred reporting items for systematic reviews and meta-analyses (PRISMA) guidelines [18]. Google Scholar and Scopus were searched using the following terms: “research methods” and “eHealth interventions,” “study design” and “eHealth interventions,” “evaluation methods” and “eHealth interventions,” “eHealth interventions” and “evaluation framework,” “evidence based” and “evaluation,” and “eHealth interventions.” The selected set of terms is aligned with the broad scope of evaluation methods for eHealth interventions and with the aim of including and analyzing as many relevant studies as possible. We included scientific papers published between 1990 and 2016. As the term eHealth evolved during the 1990s [4], we deemed it reasonable to consider the literature on eHealth interventions published since then. A total of 1624 records were found with these search terms.

The screening of the papers was conducted in 3 steps. In the first 2 steps, screening was based on the titles of the manuscripts and a predefined set of inclusion and exclusion criteria. Only scientific papers were used; books and patents were excluded during the search. To avoid overanalysis and repetition, citations, literature reviews, and meta-analyses were excluded in the first step. In addition, studies addressing specific health issues designed to answer clinical research questions were excluded (ie, publications solely addressing behavior change theory, ergonomics, drugs, sedentary issues, or physical activity interventions, as well as those addressing nonadult patients). The number of records was reduced to 813 after this first elimination. At this point, all the records were listed together, and duplicates were removed. During the second step, title screening was conducted; for papers whose titles did not explicitly mention the intervention target group, the abstracts were read to decide. When in doubt, papers were retained for further scrutiny in the next step. Consequently, only records that included either a conceptual discussion of eHealth interventions or a discussion of eHealth interventions focused on adult patients and caregivers were selected. The third step of the screening process started with 279 records; this time, the abstracts and methodology sections of all papers were read independently by 2 of the authors. The inclusion criteria for this stage had been defined in advance: the selected papers had to (1) be aligned with the study objective, (2) have the potential to provide insight into 1 or more research questions, and (3) comply with the inclusion and exclusion criteria described above. At the end of step 3, the authors discussed their observations, and 81 papers were selected for thorough reading and analysis.

Besides the papers selected through the systematic search, 10 records were added for further analysis. These records were identified through citations in the papers selected by the systematic literature search, and all 10 were included because of their relevance to our objective.

Eligibility and Inclusion

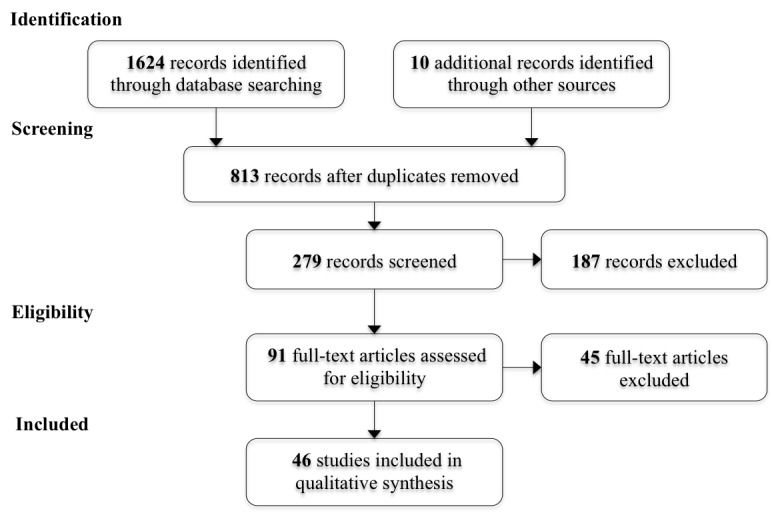

To extract and record useful information from the papers and gain a general overview of evaluation in eHealth interventions, we created a Microsoft Excel spreadsheet with the criteria shown in Multimedia Appendix 1. Most of the criteria were adopted from section 2.2.3, “Planning the topic and scope of a review,” of the Cochrane review [19], whereas criteria such as “learning point” were added by us. This section of the Cochrane review was adapted to identify the potential of the selected papers to fulfill the research objectives. In addition, it seemed advantageous to record the novel elements of each study as learning points for our own development. During this stage, all 91 papers were read meticulously, which led to the final screening. As a result, 46 papers that specifically focused on evaluation in terms of phases and aspects were selected for the qualitative analysis. The flow diagram of the paper selection process is presented in Figure 1.

Figure 1.

Preferred reporting items for systematic reviews and meta-analyses flow diagram of the study selection process.
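The screening counts reported above can be cross-checked with a few lines of arithmetic. The following Python sketch is our illustration only, not part of the review protocol: it takes the record counts stated in the text and derives how many records each step removed. Because the text reports no separate count of duplicates, the exclusions attributed to step 2 combine deduplication and title screening. The names `SCREENING_STAGES`, `exclusions_per_step`, and `full_text_summary` are hypothetical.

```python
# Record counts as stated in the Methods text (not re-derived from Figure 1).
SCREENING_STAGES = [
    ("identified via Google Scholar and Scopus", 1624),
    ("remaining after step 1 exclusion criteria", 813),
    ("remaining after deduplication and title screening (step 2)", 279),
    ("remaining after abstract and methods screening (step 3)", 81),
]
ADDED_FROM_CITATIONS = 10   # records found through citations in selected papers
INCLUDED_FOR_ANALYSIS = 46  # papers kept after full-text reading


def exclusions_per_step(stages):
    """Return (label, remaining, excluded) for every step after identification."""
    return [
        (label, remaining, previous - remaining)
        for (_, previous), (label, remaining) in zip(stages, stages[1:])
    ]


def full_text_summary(stages, added, included):
    """Return (papers read in full, papers excluded at full-text reading)."""
    read_in_full = stages[-1][1] + added
    return read_in_full, read_in_full - included


for label, remaining, excluded in exclusions_per_step(SCREENING_STAGES):
    print(f"{label}: {remaining} (excluded {excluded})")

read, dropped = full_text_summary(
    SCREENING_STAGES, ADDED_FROM_CITATIONS, INCLUDED_FOR_ANALYSIS
)
print(f"read in full: {read}; excluded at full-text reading: {dropped}")
```

Run as a script, it reports 811, 534, and 198 exclusions for steps 1 to 3, and 91 papers read in full, of which 45 were excluded before the qualitative analysis.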

Qualitative Analysis

On the basis of the summary of studies mentioned in the previous section, the papers (N=46) were classified into 2 categories: (1) conceptual exploration of eHealth interventions (n=21) and (2) case studies of eHealth interventions (n=25). Using the summary table (Multimedia Appendix 1), the papers in the first category were divided into 2 groups: (A) evaluation of distinct eHealth intervention phases (n=10) and (B) aspects of evaluation in eHealth interventions (n=11).

Evaluation of Distinct Phases of an Electronic Health Intervention

For the papers in group A, a thematic analysis was performed by mapping out the content of the papers and grouping intervention phases with similar objectives, activities, or results. The aim was to determine whether researchers emphasize some phases over others during evaluation and, if so, which phases are most frequently evaluated during an intervention.

Aspects of Electronic Health Intervention Supposed to Be Evaluated According to Electronic Health Literature

The studies in group B elaborated on the aspects evaluated to gather evidence of the efficiency and effectiveness of eHealth interventions. These aspects and their key areas of measurement were extracted to understand which parameters of efficiency and effectiveness are emphasized in the eHealth literature.

Evaluation Reported in Empirical Studies of Electronic Health Interventions

The studies categorized as case studies of eHealth interventions were analyzed based on several characteristics (ie, duration of the intervention, number of participants, use of a framework or predefined theory for designing or evaluating the intervention, aspects assessed, phases involved in the evaluation, data collection method, and presentation of intervention results). The purpose of the analysis was to compare descriptively the characteristics of evaluation in the case studies with those in the conceptual papers.

Results

Evaluation of Distinct Phases of an Electronic Health Intervention

This subsection concentrates on how evaluation is conducted in distinct phases of an eHealth intervention. From the selected studies in group A, a spectrum of phases of an eHealth intervention was identified including design, pretesting, pilot study, pragmatic trial, evaluation, and postintervention. Table 1 provides a compilation of the phases along with the area of focus and key activities within each phase.

Table 1.

Characteristics of distinct phases of an electronic health intervention.

| Phase | Area of focus | Key activities |
|---|---|---|
| Design phase | Conceptualization | Gather theoretical foundations and empirical evidence to detect existing problems and identify viable solutions [20-24], and define the objectives of the technology to be developed [20,25] |
| | Contextual inquiry | Identify the end users and stakeholders to define and analyze the characteristics of the context in which the technology will be implemented [20,21,23-25] |
| | Value specification | Prioritize the critical values of the technology derived from the end users' and stakeholders' needs [12,22-25] |
| | Requirements specification | Translate the values into functional and technical requirements that frame the final design and the technology development [12,23-25] |
| Pretesting phase | Conduct short-term trials | Provide evidence of efficacy of the technology [12,24,26]; measure factors such as optimal intensity, timing, safety, feasibility, usability, intervention content, and logistical issues [21,22,26]; and evaluate the correspondence between technology capabilities and technology requirements [24] |
| Pilot study | Strategic plan | Define the preliminary plan of the pilot study (ie, objective, timeline, budget, sponsors, and team members) [27,28] and identify related ethical and legal issues [20,28] |
| | Study design | Define the study type, duration, and participants [20,26,28] as well as data collection methods [28], and design the recruitment process to ensure statistical validity and minimize selection bias [27] |
| | Evaluation | Evaluate the technology and its impact simultaneously [27] and evaluate the effectiveness of the intervention [22] |
| Pragmatic trial phase | Execution | Administer the intervention to a larger group of participants [21,23,26] with fewer eligibility restrictions [26] |
| | Evaluation | Formative and summative evaluation and internal and external evaluation (both discussed under the evaluation phase) |
| Evaluation phase | Formative evaluation | Generate measures that provide timely feedback [12,23] and perform an iterative evaluation process in which the findings from each step inform subsequent steps [21] |
| | Summative evaluation | Provide generalizable knowledge and benefits of the intervention [12,23] |
| | Internal evaluation | Perform an evaluation process intrinsic to information and communication technology implementations, conducted by the implementation team [12] |
| | External evaluation | Have external evaluators conduct the evaluation to provide expertise where needed and minimize the bias of in-house evaluators [12] |
| Postintervention phase | | Conduct postmarketing or surveillance studies to follow up on the technology once it is scaled up and used by a wider audience [22,26] |

Table 1 indicates that evaluation is not commonly performed in the design and pretesting phases. Although some researchers see evaluation as an ongoing process throughout the intervention [12,21,23], others see value in evaluating the intervention at the end of the study period [12]. In the latter view, evaluation is itself one of the phases of the intervention.

Aspects of Electronic Health Intervention Supposed to Be Evaluated According to Electronic Health Literature

We identified the aspects that researchers propose evaluating to gather evidence of the efficiency and effectiveness of an eHealth intervention. To do so, we compiled the dimensions of eHealth interventions that the studies in group B (n=11) propose to measure. While extracting these dimensions during the qualitative analysis, we found that they can be classified into 7 aspects: organizational, technological, human and social, clinical, cost and economic, ethical and legal, and transferability. Table 2 presents these aspects along with their key areas of measurement.

Table 2.

Description of identified aspects of evaluation in electronic health interventions.

| Aspects of assessment | Key areas of measurement |
|---|---|
| Organizational aspect | Organizational setting where the intervention takes place, which can differ depending on the scale of the intervention (eg, health center, region, and country) [29]; all types of individuals or groups in the health care system that participate in the eHealth intervention, their characteristics, and expectations [30]; organizational performance and professional practice standards [30]; changes in the functions of the health care provider, skills and resource demands, and the roles of the professionals in the organization [29,31-33]; representativeness and participation rates of the health care professionals during the intervention [34]; capability of the organization to implement the intervention [30,34-38] and the extent to which the technology fits the organizational strategy, operations, culture, and processes [30]; and sustainability, or the degree to which the technology becomes embedded in the daily practice of an organization [29,32,34] |
| Technological aspect | Trust [38], effectiveness, and contribution to quality of care [30,36] of the implemented technology; system performance: hardware and software requirements, correct functioning of the components [29,38], and system capability to meet users' needs and fit the work patterns of the health care system's professionals [30,39,40]; usability: broad experience of the users with the system [29,33,37,40]; privacy and security: safety and reliability of the technology [29] and security of the data managed in the technology [37,38]; technical accuracy: quality of the data transfer [41]; information quality: accuracy, completeness, and availability of the information produced by the system (eg, patients' records, reports, images, and prescriptions), which depends on users' subjectivity [30,39]; service quality: the support and follow-up service delivered by the technology provider [39]; trialability: the ability of the innovation to be tested on a small scale before the final implementation [40]; maturity: whether the system has been used on a sufficient number of patients to address all the technical problems [36]; and interoperability: communication between the technology and the pre-existing systems, and the fit between the technology and the existing work practices [37] |
| Human and social aspect | Acceptance and usability satisfaction of the technology used in the intervention [30,31,33,36,38,39,41], where the users can be physicians, nurses and other staff, and patients, depending on the type of participants in the intervention [41]; system use: volume of use, who is using, purpose of use, and motivation to use the technology [39]; user satisfaction: perceived usefulness, enjoyment, decision-making satisfaction, and overall satisfaction with the technology [30,39]; and psychological aspects such as satisfaction, well-being, and other psychological variables, as well as social aspects such as accessibility to the technology, the social relationships evolving over the transmission of care, or activities of the patients under the intervention [31] |
| Clinical aspect | Benefits and unanticipated negative effects of the intervention, biological outcomes including disease risk factors, behavioral outcomes of the participants, the staff who deliver the intervention, and the sponsors, and quality-of-life outcomes to evaluate participants' mental health and satisfaction [34]; and long-term measurements of diagnostic and clinical effectiveness [35,36,41], safety of care [33,35,36], and quality of care [33] |
| Cost and economic aspect | Cost analysis methods to compare the intervention with relevant alternatives in terms of costs and consequences [36]; diverse cost analysis methods can be considered (eg, cost-minimization analysis, cost-effectiveness analysis, cost-benefit analysis, cost-utility analysis, and cost-consequence analysis) [30,31,41] and conducted from several perspectives, such as societal, third-party payer, health care provider, or patient [31]; and diverse costs can be included, such as investment cost, monthly user charge of equipment, costs of the communication line used, education on the technology, costs to patients and their close relatives [41], wages of doctors and other staff [30,41], expenditure and revenue for the health care organization adopting the technology [36], and resource utilization and opportunity cost of the eHealth intervention [34] |
| Ethical and legal aspect | Ethical concerns about the app itself and its implementation, including all the stakeholders' viewpoints on using the technology and the key ethical principles associated with the context in which the intervention is conducted [35,36]; the legal aspect identifies and analyzes the legislative documents and legal obligations that may exist in each context involved in the intervention [30,35,36] |
| Transferability aspect | Participation and representativeness of the intervention, percentage of persons who receive or are affected by the program, and the characteristics of participants and nonparticipants, to investigate the extent to which participants are representative and which population groups should be prioritized in future research [34]; and transferability of results from eHealth studies from one setting to another, and the assessment of the validity and reliability of the study [36] |

Evaluation Reported in Case Studies of Electronic Health Interventions

The papers categorized as case studies of eHealth interventions show substantial variation in the approaches taken to evaluate the interventions. Standardized frameworks and theories for evaluating the interventions were rarely used in these studies. Multimedia Appendix 2 provides the results of the analysis [20,42-65].

In summary, of the 25 case studies in Multimedia Appendix 2, 16 (64%, 16/25) evaluated the clinical aspect, 12 (48%, 12/25) evaluated the human and social aspect, 5 (20%, 5/25) evaluated the technological aspect, and 4 (16%, 4/25) evaluated the organizational aspect. The other aspects discussed in the conceptual literature (Table 2) were not evaluated in any of the case studies.
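The percentages above follow directly from the per-aspect counts. The Python sketch below is an illustration only; its names are hypothetical. It recomputes the shares from the counts stated in the text and lists the aspects from Table 2 that no case study evaluated. The counts add up to more than 25 because a single case study can evaluate several aspects, so the percentages are not expected to sum to 100.

```python
CASE_STUDY_COUNT = 25

# Number of case studies that evaluated each aspect, as summarized from
# Multimedia Appendix 2 in the text; aspects not evaluated are set to 0.
CASE_STUDIES_PER_ASPECT = {
    "clinical": 16,
    "human and social": 12,
    "technological": 5,
    "organizational": 4,
    "cost and economic": 0,
    "ethical and legal": 0,
    "transferability": 0,
}


def percentage_per_aspect(counts, total):
    """Share (in %) of case studies that evaluated each aspect."""
    return {aspect: 100 * n / total for aspect, n in counts.items()}


def unevaluated_aspects(counts):
    """Aspects proposed in the conceptual literature that no case study evaluated."""
    return [aspect for aspect, n in counts.items() if n == 0]


shares = percentage_per_aspect(CASE_STUDIES_PER_ASPECT, CASE_STUDY_COUNT)
for aspect, share in shares.items():
    print(f"{aspect}: {share:.0f}%")
print("never evaluated:", ", ".join(unevaluated_aspects(CASE_STUDIES_PER_ASPECT)))
# One study can cover several aspects, so this total exceeds the 25 studies.
print("aspect evaluations in total:", sum(CASE_STUDIES_PER_ASPECT.values()))
```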

Discussion

Principal Findings

The papers reviewed in this study reveal that numerous approaches to conceptualizing and conducting eHealth interventions coexist, and the review brought several attributes of eHealth intervention evaluation to light. There are clear differences between how evaluation is conducted in practice (case studies) and how it is discussed in the conceptual papers, and considerable variety exists within each group. Evaluation has been depicted both as a static action performed at the end of the intervention [20,24,26-28] and as a dynamic action, further divided into summative and formative evaluation [12,21,23]. Depending on the evaluators, evaluation can also be classified into internal and external assessment [12]. However, all case studies conducted evaluation at the end of the intervention. Although several aspects of evaluation were identified in the conceptual papers [32-39,41], the case studies mostly evaluated the clinical [20,42-44,46-49,52,53,55,59-61,64,65] and human and social aspects [42,46-49,53-55,59,61,63,65].

Although analyzing the standardization of eHealth evaluation was not an objective of this review, the variability found in the studies led us to consider whether it hinders the sharing of evidence among eHealth interventions. A scarcity of evidence, in turn, could delay the growth of eHealth. Notably, none of the papers clearly stated the need for evidence. The evaluation in the empirical studies typically focused on the success or failure of the technology (eHealth) in the given intervention. It seems that the numerous efforts in eHealth research are still quite disconnected and thus unable to create a synergistic effect on the growth of eHealth.

Evidence in Electronic Health Evaluation Model

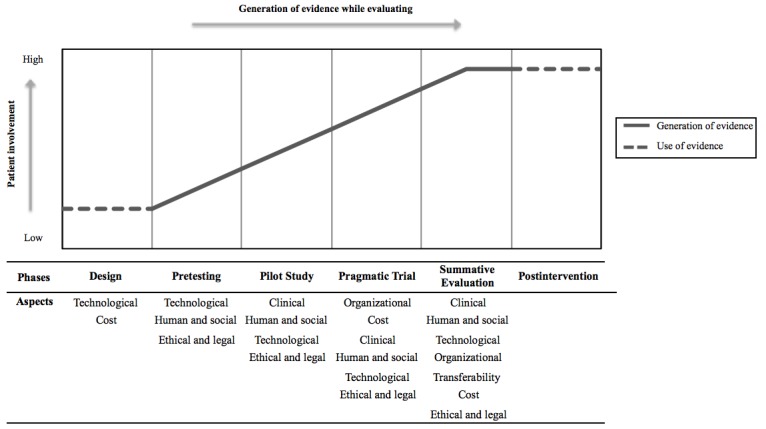

The review showed that, although some studies elaborate on the aspects of evaluation for eHealth interventions (group B) and others organize the evaluation of interventions into phases (group A), no connection has yet been made between these 2 bodies of work (ie, what to assess during which phase). To fill this gap, we developed the Evidence in eHealth Evaluation model (Figure 2), which shows how evidence accumulates when specific aspects of evaluation are assessed in distinct intervention phases. The Evidence in eHealth Evaluation model is a novel approach to investigating the evaluation of eHealth interventions.

Figure 2.

The evidence in electronic health (eHealth) evaluation model.

In this study, an eHealth intervention comprising all 6 phases (ie, design, pretesting, pilot study, pragmatic trial, evaluation, and postintervention) was conceived as a comprehensive intervention. We propose that robust evidence of effectiveness and efficiency can be generated when evaluation is conducted throughout all intervention phases. Moreover, the aspects of evaluation (ie, organizational, technological, human and social, clinical, cost, ethical and legal, and transferability) vary from phase to phase depending on the activities of each phase. For example, when an eHealth intervention begins with the design phase, decisions are based on evaluating the technological aspect and the cost of technology development; the formal evaluation of the intervention begins in the succeeding phases. The technological, human and social, and cost aspects are evaluated in the pretesting phase. During the pilot study phase, the focus of evaluation shifts primarily to the clinical aspect, followed by the human and social, technological, and ethical and legal aspects. Depending on the evidence garnered in the pilot study, the intervention may proceed to the next phase or return to the design phase. As the intervention is scaled up in the pragmatic trial, evaluation determines whether technology-enabled care can be delivered within the realistic setting of an organization. Hence, the key areas of evaluation in this phase are the organizational and cost aspects, along with the clinical, human and social, technological, and ethical and legal aspects. The last phase of gathering evidence is summative evaluation, in which all aspects, including transferability, are assessed. Through this comprehensive process, evidence accumulates gradually, reaches its peak in the summative evaluation phase, and is used in the postintervention phase to inform future decisions.

The model also shows how patient involvement increases continuously from the design phase to the pragmatic trial, escalating the complexity of the evaluation process.
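The phase-aspect mapping described above can be written down as a small lookup table, which makes the cumulative character of the model explicit. The Python sketch below is our illustration, not a validated implementation of the model. It assumes the phase order of Table 1, treats the design phase's informal technology and cost assessment as its aspects, and leaves the postintervention phase without new aspects, since the text describes that phase as using evidence rather than generating it. The loop back from the pilot study to the design phase is not modeled. The names `Aspect`, `PHASE_ASPECTS`, and `cumulative_coverage` are hypothetical.

```python
from enum import Enum


class Aspect(Enum):
    """The 7 aspects of evaluation identified in Table 2."""
    ORGANIZATIONAL = "organizational"
    TECHNOLOGICAL = "technological"
    HUMAN_SOCIAL = "human and social"
    CLINICAL = "clinical"
    COST = "cost"
    ETHICAL_LEGAL = "ethical and legal"
    TRANSFERABILITY = "transferability"


# Aspects assessed in each phase, listed in the order the phases occur.
# Assumption: postintervention assesses no new aspects (empty set).
PHASE_ASPECTS = {
    # Informal, decision-oriented assessment before formal evaluation begins.
    "design": {Aspect.TECHNOLOGICAL, Aspect.COST},
    "pretesting": {Aspect.TECHNOLOGICAL, Aspect.HUMAN_SOCIAL, Aspect.COST},
    "pilot study": {Aspect.CLINICAL, Aspect.HUMAN_SOCIAL,
                    Aspect.TECHNOLOGICAL, Aspect.ETHICAL_LEGAL},
    "pragmatic trial": {Aspect.ORGANIZATIONAL, Aspect.COST, Aspect.CLINICAL,
                        Aspect.HUMAN_SOCIAL, Aspect.TECHNOLOGICAL,
                        Aspect.ETHICAL_LEGAL},
    "evaluation": set(Aspect),  # summative evaluation covers every aspect
    "postintervention": set(),
}


def cumulative_coverage(phase_aspects):
    """Return (phase, aspects assessed up to and including that phase).

    Dicts preserve insertion order (Python 3.7+), so iteration follows the
    phase sequence defined above.
    """
    covered = set()
    coverage = []
    for phase, aspects in phase_aspects.items():
        covered |= aspects
        coverage.append((phase, frozenset(covered)))
    return coverage


for phase, covered in cumulative_coverage(PHASE_ASPECTS):
    print(f"{phase}: {len(covered)} of {len(Aspect)} aspects assessed so far")
```

Under these assumptions, coverage grows from 2 aspects after the design phase to all 7 at summative evaluation, mirroring the accumulation of evidence that the model depicts.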

Including relevant information on aspects other than the clinical one (eg, organizational and cost aspects) allows the creation of reusable knowledge that facilitates the transfer of results to other settings [36] and yields useful insights for long-term implementation. Assessing all the aspects in a single study might, however, produce confounded results: because the aspects are interrelated, poor performance in one aspect can affect performance in others and create a misleading picture. Therefore, our model proposes extending the evaluation process throughout the 6 phases of an eHealth intervention, assessing specific aspects in each phase instead of evaluating all aspects in a single phase. This way of evaluating eHealth interventions can capture comprehensive evidence, which is usually dynamic and complex in nature.

We acknowledge that an eHealth intervention including all the phases presented in the model would be cumbersome because of its high resource consumption. This conceptual model is not a prescription but a way to show how evidence can progress reliably through an eHealth intervention.

Conclusions

To date, the importance of evidence has not been discussed as rigorously as the diverse research approaches and evaluation frameworks have been. In this study, the Evidence in eHealth Evaluation model was developed to show how evidence can be generated by evaluating certain aspects in each intervention phase. Assessing distinct aspects during distinct phases is a novel concept that requires further analysis. Moreover, this study points to an inconsistency between the concepts in the literature and the practice of eHealth interventions, which, to our knowledge, has not been noted until now.

As health interventions are context-specific, transferring results from eHealth studies may be difficult. Moreover, neither the conceptual papers nor the case studies suggested the long-term implementation of a specific technology in the health care settings where it had been tested. We believe this may be caused by a lack or insufficiency of preliminary evidence of effectiveness and efficiency after micro-trials or short-term tests of the technology's effects. It therefore appears that a lack of evidence hinders the growth of eHealth. Further research directed toward evidence-based evaluation could not only improve the quality of individual intervention studies but also facilitate the long-term implementation of eHealth in general. We conclude that developing more robust and comprehensive evaluation of eHealth studies, or better validating evaluation methods, could ease the transferability of results among similar studies, freeing resources for further research in eHealth.

Limitations

This study has limitations. We tried to include and analyze as many papers as possible; however, some relevant papers may have been unintentionally omitted. Furthermore, the model is still at a preliminary stage of development; therefore, it cannot yet be compared with other validated frameworks.

Acknowledgments

This study was partly funded by the European Union’s Horizon 2020 research and innovation programme, grant agreement number 643588.

Abbreviations

- EBM: evidence-based medicine

- eHealth: electronic health

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

Criteria of the summary table developed to record information from the selected papers.

Evaluation reported in empirical studies of eHealth interventions.

Footnotes

Conflicts of Interest: None declared.

References

1. Rigby M, Ammenwerth E. The need for evidence in health informatics. Stud Health Technol Inform. 2016;222:3–13.

2. Rigby M, Magrabi F, Scott P, Doupi P, Hypponen H, Ammenwerth E. Steps in moving evidence-based health informatics from theory to practice. Healthc Inform Res. 2016 Oct;22(4):255–60. doi: 10.4258/hir.2016.22.4.255. https://www.e-hir.org/DOIx.php?id=10.4258/hir.2016.22.4.255.

3. Al-Shorbaji N. Is there and do we need evidence on eHealth interventions? IRBM. 2013 Feb;34(1):24–7. doi: 10.1016/j.irbm.2012.11.001.

4. Oh H, Rizo C, Enkin M, Jadad A. What is eHealth (3): a systematic review of published definitions. J Med Internet Res. 2005;7(1):e1. doi: 10.2196/jmir.7.1.e1.

5. Eysenbach G. What is e-health? J Med Internet Res. 2001;3(2):E20. doi: 10.2196/jmir.3.2.e20. http://www.jmir.org/2001/2/e20/

6. Mark MM, Greene JC, Shaw I, editors. The SAGE Handbook of Evaluation. London, UK: SAGE Publications Ltd; 2006.

7. Newcomer KE, Hatry HP, Wholey JS. Handbook of practical program evaluation. USA: John Wiley & Sons; 2015.

8. Pagliari C. Design and evaluation in eHealth: challenges and implications for an interdisciplinary field. J Med Internet Res. 2007;9(2):e15. doi: 10.2196/jmir.9.2.e15. http://www.jmir.org/2007/2/e15/

9. Bahati R, Guy S, Bauer M, Gwadry-Sridhar F. Where's the evidence for evidence-based knowledge in ehealth systems? Proceedings of the Developments in E-systems Engineering (DESE); Developments in E-systems Engineering (DESE); September, 2010; London, UK. 2010. Sep, pp. 29–34. doi: 10.1109/DeSE.2010.12.

10. Bates DW, Wright A. Evaluating eHealth: undertaking robust international cross-cultural eHealth research. PLoS Med. 2009 Sep;6(9):e1000105. doi: 10.1371/journal.pmed.1000105. http://dx.plos.org/10.1371/journal.pmed.1000105.

11. Catwell L, Sheikh A. Evaluating eHealth interventions: the need for continuous systemic evaluation. PLoS Med. 2009 Aug;6(8):e1000126. doi: 10.1371/journal.pmed.1000126. http://dx.plos.org/10.1371/journal.pmed.1000126.

12. Lilford RJ, Foster J, Pringle M. Evaluating eHealth: how to make evaluation more methodologically robust. PLoS Med. 2009 Nov;6(11):e1000186. doi: 10.1371/journal.pmed.1000186. http://dx.plos.org/10.1371/journal.pmed.1000186.

13. Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review--a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005 Jul;10 Suppl 1:21–34. doi: 10.1258/1355819054308530.

14. Greenhalgh T, Russell J. Why do evaluations of eHealth programs fail? An alternative set of guiding principles. PLoS Med. 2010;7(11):e1000360. doi: 10.1371/journal.pmed.1000360. http://dx.plos.org/10.1371/journal.pmed.1000360.

15. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn't. 1996. Clin Orthop Relat Res. 2007 Feb;455:3–5.

16. Geanellos R, Wilson C. Building bridges: knowledge production, publication and use. Commentary on Tonelli (2006), Integrating evidence into clinical practice: an alternative to evidence-based approaches. J Eval Clin Pract. 2006 Jun;12(3):299–305. doi: 10.1111/j.1365-2753.2006.00592.x.

17. Black AD, Car J, Pagliari C, Anandan C, Cresswell K, Bokun T, McKinstry B, Procter R, Majeed A, Sheikh A. The impact of eHealth on the quality and safety of health care: a systematic overview. PLoS Med. 2011 Jan;8(1):e1000387. doi: 10.1371/journal.pmed.1000387. http://dx.plos.org/10.1371/journal.pmed.1000387.

18. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009 Jul 21;6(7):e1000100. doi: 10.1371/journal.pmed.1000100. http://dx.plos.org/10.1371/journal.pmed.1000100.

19. Alderson P, Clarke M, Mulrow C, Oxman A. Introduction. In: Green S, Higgins JP, editors. Cochrane Handbook for Systematic Reviews of Interventions. 1st ed. Chichester (UK): John Wiley & Sons; 2008. pp. 6–34.

20. Harrison V, Proudfoot J, Wee PP, Parker G, Pavlovic DH, Manicavasagar V. Mobile mental health: review of the emerging field and proof of concept study. J Ment Health. 2011 Dec;20(6):509–24. doi: 10.3109/09638237.2011.608746.

21. Whittaker R, Merry S, Dorey E, Maddison R. A development and evaluation process for mHealth interventions: examples from New Zealand. J Health Commun. 2012;17 Suppl 1:11–21. doi: 10.1080/10810730.2011.649103.

22. Linn AJ, van Weert JC, Smit EG, Perry K, van Dijk L. 1+1=3? The systematic development of a theoretical and evidence-based tailored multimedia intervention to improve medication adherence. Patient Educ Couns. 2013 Dec;93(3):381–8. doi: 10.1016/j.pec.2013.03.009.

23. van Gemert-Pijnen JE, Maarten NN, van Limburg A, Kelders SM, Van Velsen L, Brandenburg B, Ossebaard HC. eHealth wiki-platform to increase the uptake and impact of eHealth technologies. Proceedings of 4th International Conference on eHealth, Telemedicine, and Social Medicine eTELEMED; 4th International Conference on eHealth, Telemedicine, and Social Medicine eTELEMED; Jan 30- Feb 4; Valencia, Spain. 2012. p. 184.

24. Steele Gray C, Khan AI, Kuluski K, McKillop I, Sharpe S, Bierman AS, Lyons RF, Cott C. Improving patient experience and primary care quality for patients with complex chronic disease using the electronic patient-reported outcomes tool: adopting qualitative methods into a user-centered design approach. JMIR Res Protoc. 2016;5(1):e28. doi: 10.2196/resprot.5204. http://www.researchprotocols.org/2016/1/e28/

25. Van Velsen L, Wentzel J, Van Gemert-Pijnen JE. Designing eHealth that matters via a multidisciplinary requirements development approach. JMIR Res Protoc. 2013 Jun 24;2(1):e21. doi: 10.2196/resprot.2547. http://www.researchprotocols.org/2013/1/e21/

26. Nguyen HQ, Cuenco D, Wolpin S, Benditt J, Carrieri-Kohlman V. Methodological considerations in evaluating eHealth interventions. Can J Nurs Res. 2007 Mar;39(1):116–34.

27. Dansky KH, Thompson D, Sanner T. A framework for evaluating eHealth research. Eval Program Plann. 2006 Nov;29(4):397–404. doi: 10.1016/j.evalprogplan.2006.08.009.

28. Nykänen P, Brender J, Talmon J, de Keizer N, Rigby M, Beuscart-Zephir MC, Ammenwerth E. Guideline for good evaluation practice in health informatics (GEP-HI). Int J Med Inform. 2011 Dec;80(12):815–27. doi: 10.1016/j.ijmedinf.2011.08.004.

29. Cornford T, Doukidis G, Forster D. Experience with a structure, process and outcome framework for evaluating an information system. Omega. 1994 Sep;22(5):491–504. doi: 10.1016/0305-0483(94)90030-2.

30. Lau F, Kuziemsky C, editors. Handbook of eHealth Evaluation: An Evidence-Based Approach. Victoria: University of Victoria Press; 2017. Feb, Clinical adoption framework; pp. 55–76.

31. Liberati A, Sheldon TA, Banta HD. EUR-ASSESS project subgroup report on methodology: methodological guidance for the conduct of health technology assessment. Int J Technol Assess Health Care. 1997;13(2):186–219. doi: 10.1017/s0266462300010369.

32. Takian A, Petrakaki D, Cornford T, Sheikh A, Barber N, National NHS Care Records Service Evaluation Team. Building a house on shifting sand: methodological considerations when evaluating the implementation and adoption of national electronic health record systems. BMC Health Serv Res. 2012 Apr 30;12:105. doi: 10.1186/1472-6963-12-105. https://bmchealthservres.biomedcentral.com/articles/10.1186/1472-6963-12-105.

33. Cresswell KM, Sheikh A. Undertaking sociotechnical evaluations of health information technologies. Inform Prim Care. 2014;21(2):78–83. doi: 10.14236/jhi.v21i2.54.

34. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999 Sep;89(9):1322–7. doi: 10.2105/ajph.89.9.1322.

35. Lampe K, Mäkelä M, Garrido MV, Anttila H, Autti-Rämö I, Hicks NJ, Hofmann B, Koivisto J, Kunz R, Kärki P, Malmivaara A, Meiesaar K, Reiman-Möttönen P, Norderhaug I, Pasternack I, Ruano-Ravina A, Räsänen P, Saalasti-Koskinen U, Saarni SI, Walin L, Kristensen FB, European network for Health Technology Assessment (EUnetHTA). The HTA core model: a novel method for producing and reporting health technology assessments. Int J Technol Assess Health Care. 2009 Dec;25 Suppl 2:9–20. doi: 10.1017/S0266462309990638.

36. Kidholm K, Ekeland AG, Jensen LK, Rasmussen J, Pedersen CD, Bowes A, Flottorp SA, Bech M. A model for assessment of telemedicine applications: MAST. Int J Technol Assess Health Care. 2012 Jan;28(1):44–51. doi: 10.1017/S0266462311000638.

37. Leon N, Schneider H, Daviaud E. Applying a framework for assessing the health system challenges to scaling up mHealth in South Africa. BMC Med Inform Decis Mak. 2012;12:123. doi: 10.1186/1472-6947-12-123. http://www.biomedcentral.com/1472-6947/12/123.

38. Lo O, Fan L, Buchanan W, Thuemmler C. Technical evaluation of an e-health platform. Proceedings of the IADIS International Conference of E-Health; IADIS International Conference of E-Health; July 17-19, 2012; Lisbon, Portugal. 2012 Jul.

39. Yusof MM, Kuljis J, Papazafeiropoulou A, Stergioulas LK. An evaluation framework for Health Information Systems: human, organization and technology-fit factors (HOT-fit). Int J Med Inform. 2008 Jun;77(6):386–98. doi: 10.1016/j.ijmedinf.2007.08.011.

40. Lovejoy TI, Demireva PD, Grayson JL, McNamara JR. Advancing the practice of online psychotherapy: an application of Rogers' diffusion of innovations theory. Psychotherapy (Chic). 2009 Mar;46(1):112–24. doi: 10.1037/a0015153.

41. Ohinmaa A, Hailey D, Roine R. Elements for assessment of telemedicine applications. Int J Technol Assess Health Care. 2001;17(2):190–202. doi: 10.1017/s0266462300105057.

42. Aschbrenner KA, Naslund JA, Gill LE, Bartels SJ, Ben-Zeev D. A qualitative study of client-clinician text exchanges in a mobile health intervention for individuals with psychotic disorders and substance use. J Dual Diagn. 2016;12(1):63–71. doi: 10.1080/15504263.2016.1145312. http://europepmc.org/abstract/MED/26829356.

43. Mohr DC, Duffecy J, Ho J, Kwasny M, Cai X, Burns MN, Begale M. A randomized controlled trial evaluating a manualized TeleCoaching protocol for improving adherence to a web-based intervention for the treatment of depression. PLoS One. 2013 Aug;8(8):e70086. doi: 10.1371/journal.pone.0070086. http://dx.plos.org/10.1371/journal.pone.0070086.

44. Kleiboer A, Donker T, Seekles W, van Straten SA, Riper H, Cuijpers P. A randomized controlled trial on the role of support in Internet-based problem solving therapy for depression and anxiety. Behav Res Ther. 2015 Sep;72:63–71. doi: 10.1016/j.brat.2015.06.013.

45. Cristancho-Lacroix V, Moulin F, Wrobel J, Batrancourt B, Plichart M, De Rotrou J, Cantegreil-Kallen I, Rigaud A. A web-based program for informal caregivers of persons with Alzheimer's disease: an iterative user-centered design. JMIR Res Protoc. 2014;3(3):e46. doi: 10.2196/resprot.3607. http://www.researchprotocols.org/2014/3/e46/

46. Klein B, Meyer D, Austin DW, Kyrios M. Anxiety online: a virtual clinic: preliminary outcomes following completion of five fully automated treatment programs for anxiety disorders and symptoms. J Med Internet Res. 2011;13(4):e89. doi: 10.2196/jmir.1918. http://www.jmir.org/2011/4/e89/

47. Pratt SI, Naslund JA, Wolfe RS, Santos M, Bartels SJ. Automated telehealth for managing psychiatric instability in people with serious mental illness. J Ment Health. 2015;24(5):261–5. doi: 10.3109/09638237.2014.928403. http://europepmc.org/abstract/MED/24988132.

48. Zimmer B, Moessner M, Wolf M, Minarik C, Kindermann S, Bauer S. Effectiveness of an Internet-based preparation for psychosomatic treatment: results of a controlled observational study. J Psychosom Res. 2015 Nov;79(5):399–403. doi: 10.1016/j.jpsychores.2015.09.008.

49. Ali L, Krevers B, Sjöström N, Skärsäter I. Effectiveness of web-based versus folder support interventions for young informal carers of persons with mental illness: a randomized controlled trial. Patient Educ Couns. 2014 Mar;94(3):362–71. doi: 10.1016/j.pec.2013.10.020.

50. Bergmo TS, Ersdal G, Rødseth E, Berntsen G. Electronic messaging to improve information exchange in primary care. Proceedings 5th International Conference on eHealth, Telemedicine, and Social Medicine eTELEMED; 5th International Conference on eHealth, Telemedicine, and Social Medicine eTELEMED; Feb 24-Mar 1, 2013; Nice, France. 2013.

51. Pham Q, Khatib Y, Stansfeld S, Fox S, Green T. Feasibility and efficacy of an mhealth game for managing anxiety: “flowy” randomized controlled pilot trial and design evaluation. Games Health J. 2016 Feb;5(1):50–67. doi: 10.1089/g4h.2015.0033.

52. Meglic M, Furlan M, Kuzmanic M, Kozel D, Baraga D, Kuhar I, Kosir B, Iljaz R, Novak Sarotar B, Dernovsek MZ, Marusic A, Eysenbach G, Brodnik A. Feasibility of an eHealth service to support collaborative depression care: results of a pilot study. J Med Internet Res. 2010;12(5):e63. doi: 10.2196/jmir.1510. http://www.jmir.org/2010/5/e63/

53. Ebert DD, Lehr D, Baumeister H, Boß L, Riper H, Cuijpers P, Reins JA, Buntrock C, Berking M. GET.ON Mood Enhancer: efficacy of Internet-based guided self-help compared to psychoeducation for depression: an investigator-blinded randomised controlled trial. Trials. 2014;15:39. doi: 10.1186/1745-6215-15-39. http://www.trialsjournal.com/content/15//39.

54. Skidmore ER, Butters M, Whyte E, Grattan E, Shen J, Terhorst L. Guided training relative to direct skill training for individuals with cognitive impairments after stroke: a pilot randomized trial. Arch Phys Med Rehabil. 2017 Apr;98(4):673–80. doi: 10.1016/j.apmr.2016.10.004. http://europepmc.org/abstract/MED/27794487.

55. Burns MN, Begale M, Duffecy J, Gergle D, Karr CJ, Giangrande E, Mohr DC. Harnessing context sensing to develop a mobile intervention for depression. J Med Internet Res. 2011;13(3):e55. doi: 10.2196/jmir.1838. http://www.jmir.org/2011/3/e55/

56. Robertson A, Cresswell K, Takian A, Petrakaki D, Crowe S, Cornford T, Barber N, Avery A, Fernando B, Jacklin A, Prescott R, Klecun E, Paton J, Lichtner V, Quinn C, Ali M, Morrison Z, Jani Y, Waring J, Marsden K, Sheikh A. Implementation and adoption of nationwide electronic health records in secondary care in England: qualitative analysis of interim results from a prospective national evaluation. BMJ. 2010;341:c4564. doi: 10.1136/bmj.c4564. http://www.bmj.com/cgi/pmidlookup?view=long&pmid=20813822.

57. Bouamrane MM, Mair FS. Implementation of an integrated preoperative care pathway and regional electronic clinical portal for preoperative assessment. BMC Med Inform Decis Mak. 2014 Nov 19;14:93. doi: 10.1186/1472-6947-14-93. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/1472-6947-14-93.

58. Langrial SU, Lappalainen P. Information systems for improving mental health: six emerging themes of research. Proceedings of the 20th Pacific Asia Conference on Information Systems (PACIS); 20th Pacific Asia Conference on Information Systems (PACIS); Jun 27- Jul 1, 2016; Chiayi, Taiwan. 2016.

59. Holländare F, Eriksson A, Lövgren L, Humble MB, Boersma K. Internet-based cognitive behavioral therapy for residual symptoms in bipolar disorder type II: a single-subject design pilot study. JMIR Res Protoc. 2015;4(2):e44. doi: 10.2196/resprot.3910. http://www.researchprotocols.org/2015/2/e44/

60. Glozier N, Christensen H, Naismith S, Cockayne N, Donkin L, Neal B, Mackinnon A, Hickie I. Internet-delivered cognitive behavioural therapy for adults with mild to moderate depression and high cardiovascular disease risks: a randomised attention-controlled trial. PLoS One. 2013;8(3):e59139. doi: 10.1371/journal.pone.0059139. http://dx.plos.org/10.1371/journal.pone.0059139.

61. Lederman R, Wadley G, Gleeson J, Bendall S, Álvarez-Jiménez M. Moderated online social therapy: designing and evaluating technology for mental health. ACM Trans Comput Hum Interact (TOCHI). 2014 Feb 1;21(1):1–26. doi: 10.1145/2513179.

62. Burton C, Szentagotai Tatar A, McKinstry B, Matheson C, Matu S, Moldovan R, Macnab M, Farrow E, David D, Pagliari C, Serrano Blanco A, Wolters M, Help4Mood Consortium. Pilot randomised controlled trial of Help4Mood, an embodied virtual agent-based system to support treatment of depression. J Telemed Telecare. 2016 Sep;22(6):348–55. doi: 10.1177/1357633X15609793.

63. Schaller S, Marinova-Schmidt V, Gobin J, Criegee-Rieck M, Griebel L, Engel S, Stein V, Graessel E, Kolominsky-Rabas PL. Tailored e-Health services for the dementia care setting: a pilot study of 'eHealthMonitor'. BMC Med Inform Decis Mak. 2015 Jul 28;15:58. doi: 10.1186/s12911-015-0182-2. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-015-0182-2.

64. Frangou S, Sachpazidis I, Stassinakis A, Sakas G. Telemonitoring of medication adherence in patients with schizophrenia. Telemed J E Health. 2005 Dec;11(6):675–83. doi: 10.1089/tmj.2005.11.675.

65. de Wit J, Dozeman E, Ruwaard J, Alblas J, Riper H. Web-based support for daily functioning of people with mild intellectual disabilities or chronic psychiatric disorders: a feasibility study in routine practice. Internet Interv. 2015 May;2(2):161–8. doi: 10.1016/j.invent.2015.02.007.
