Abstract

In this paper, we study robust covariance estimation under the approximate factor model with observed factors. We propose a novel framework that first estimates the joint covariance matrix of the observed data and the factors, and then uses it to recover the covariance matrix of the observed data. We prove that as long as the initial joint covariance estimator attains the element-wise optimal rate, the whole procedure generates a covariance estimator with the desired properties. For data with only bounded fourth moments, we propose to use adaptive Huber loss minimization to obtain the initial joint covariance estimate. This approach is applicable to a much wider class of distributions than sub-Gaussian and elliptical distributions. We also present an asymptotic result for the adaptive Huber M-estimator with a diverging parameter. The conclusions are demonstrated by extensive simulations and real data analysis.

Keywords: Robust covariance matrix, Approximate factor model, M-estimator

1 Introduction

The problem of estimating a covariance matrix and its inverse is fundamental in many areas of statistics and econometrics, including principal component analysis (PCA) and undirected graphical models. Intense research in high-dimensional statistics has produced a stream of papers on covariance matrix estimation, including sparse principal component analysis (Johnstone and Lu, 2009; Amini and Wainwright, 2008; Vu and Lei, 2012; Birnbaum et al., 2013; Berthet and Rigollet, 2013; Ma, 2013; Cai et al., 2013), sparse covariance estimation (Bickel and Levina, 2008; Cai and Liu, 2011; Cai et al., 2010; Lam and Fan, 2009; Ravikumar et al., 2011) and factor model analysis (Stock and Watson, 2002; Bai, 2003; Fan et al., 2008, 2013, 2016; Onatski, 2012). A strong interest in precision matrix estimation (undirected graphical models) has also emerged in the statistics community following the pioneering works of Meinshausen and Bühlmann (2006) and Friedman et al. (2008). On the application side, many areas, such as portfolio allocation (Fan et al., 2008), have benefited from this continuing research.

In the high-dimensional setting, the number of variables p is comparable to or greater than the sample size n. This dimensionality poses a challenge to the estimation of covariance matrices. Johnstone and Lu (2009) showed that the empirical covariance matrix behaves poorly in this regime, and that sparsity of the leading eigenvectors circumvents this issue. Following this work, a flourishing literature on sparse PCA has developed in-depth analyses and refined algorithms; see Vu and Lei (2012); Berthet and Rigollet (2013); Ma (2013). Taking a different route, Bickel and Levina (2008) advocated thresholding as a regularization approach for estimating a sparse matrix, in the sense that most entries of the matrix are close to zero; this approach was used independently by Fan et al. (2008) for estimating covariance matrices with a factor structure.

Another challenge in high-dimensional statistics is that measurements may not have light tails. For example, large-scale datasets are often obtained using bio-imaging technologies (e.g., fMRI and microarrays) that often produce heavy-tailed measurement errors (Dinov et al., 2005). Moreover, financial returns are well known to exhibit heavy tails. Such data violate the fundamental assumption, imposed in most of the aforementioned papers, that the data have sub-Gaussian or sub-exponential tails. Substantially relaxing this assumption requires new ideas and is the subject of this paper.

Recently, motivated by the Fama-French model (Fama and French, 1993) from financial econometrics, Fan et al. (2008) and Fan et al. (2013) considered the covariance structure of the static approximate factor model, which models the covariance matrix as a low-rank signal matrix plus a sparse noise matrix. The same model is also the focus of the current paper. The model assumes the existence of a few low-dimensional factors that drive a large panel of data {yit}_{i≤p, t≤n}, that is,

$$y_{it} = b_i^T f_t + u_{it}, \qquad i = 1, \dots, p, \quad t = 1, \dots, n, \qquad (1.1)$$

where the ft’s are the common factors, which are observed, and the bi’s are their corresponding factor loadings, which are treated as unknown but fixed parameters in this work. The noises uit, known as the idiosyncratic component, are uncorrelated with the factors ft ∈ ℝ^r. Here r is relatively small compared with p and n; we treat r as fixed, independent of p and n, throughout this paper. When the factors are observed, this model subsumes the well-known CAPM (Sharpe, 1964; Lintner, 1965) and the Fama-French model (Fama and French, 1993). When ft is unobserved, the model aims to recover the underlying factors that drive the movements of the whole panel. Here the “approximate” factor model means that the covariance Σu of ut = (u1t,…, upt)^T is sparse; the strict factor model, in which Σu is diagonal, is a special case. In addition, the “static” model is a special case of the dynamic model, which takes time lags into account and allows more general infinite-dimensional representations (Forni et al., 2000; Forni and Lippi, 2001).

The covariance matrix of the outcome yt = (y1t, …, ypt)′ from model (1.1) can be written as

$$\Sigma = B \Sigma_f B^T + \Sigma_u, \qquad (1.2)$$

where B_{p×r}, whose ith row is bi^T, is the loading matrix, Σf is the covariance of ft and Σu is the sparse covariance matrix of ut. Here we assume that the process {(ft, ut)} is stationary, so that Σf and Σu do not change over time. When the factors are unknown, Fan et al. (2013) proposed applying PCA to obtain estimates of the low-rank part BΣfB^T and the sparse part Σu. The crucial assumption is that the factors are pervasive, meaning that they have non-negligible effects on a large number of the outcome dimensions. Wang and Fan (2017) gave further explanation from random matrix theory and aimed to relax the pervasiveness assumption in applications such as risk management and estimation of the false discovery proportion. See Onatski (2012) for more discussion of strong and weak factors.

In this paper, we consider estimating Σ with known factors. Unknown factors pose additional difficulties for robust estimation, which will be explored in future work. The main focus of the paper is robustness rather than factor recovery. Under exponential tails of the factors and noises, Fan et al. (2011) proposed thresholding an estimate of Σu obtained from the sample covariance of the residuals of the multiple regression (1.1). The legitimacy of this approach hinges on the assumption that the tails of the factor and error distributions decay exponentially, which is likely to be violated in practice, especially in financial applications. The need to extend this approach beyond well-behaved noise has driven further research such as Fan et al. (2015b), which assumes that yt has an elliptical distribution (Fang et al., 1990).

This paper studies model (1.1) under a much more relaxed condition: the random variables ft and uit only have finite fourth moments. The main observation that motivates our method is that the joint covariance matrix of (yt^T, ft^T)^T supplies sufficient information to estimate BΣfB^T and Σu. To estimate the joint covariance matrix robustly, the classical idea that dates back to Huber (1964) proves vital and effective. The novelty here is that we let the Huber parameter diverge with the sample size in order to control the bias in high-dimensional applications. The Huber loss with a diverging parameter, together with other similar functions, has been shown to produce concentration bounds for M-estimators when the random variables have fat tails; see, for example, Catoni (2012) and Fan et al. (2017). This point is clarified in Sections 2 and 3. The M-estimators considered here also have additional merits in asymptotic analysis, which are studied in Section 3.3.

This paper can be placed in the broader context of low-rank plus sparse representations. In the past few years, robust principal component analysis has received much attention from statisticians, applied mathematicians and computer scientists. That literature focuses on identifying the low-rank and sparse components of a corrupted matrix (Chandrasekaran et al., 2011; Candès et al., 2011; Xu et al., 2010). However, the matrices considered there do not come from random samples, and as a result, neither estimation nor inference is involved. Agarwal et al. (2012) do consider the noisy decomposition, but still focus more on identifying and separating the low-rank and sparse parts. Despite connections with the robust PCA literature, such as the incoherence condition (see Section 2), this paper and its predecessors are more concerned with disentangling the “true signal” from noise in order to improve the estimation of covariance matrices. In this respect, they bear more similarity to the covariance matrix estimation literature.

We introduce some notation before presenting the main results. For a general matrix M, the max-norm of M, or the entry-wise maximum, is denoted by $\|M\|_{\max} = \max_{i,j} |M_{ij}|$. The operator norm of M is $\|M\| = \lambda_{\max}^{1/2}(M^T M)$, whereas the Frobenius norm is $\|M\|_F = (\sum_{i,j} M_{ij}^2)^{1/2}$. If M is furthermore symmetric, we denote by λj(M) its jth largest eigenvalue, by λmax(M) the largest one and by λmin(M) the smallest one. Throughout the paper, C is a generic constant that may differ from line to line in the assumptions and derivations.

The paper is organized as follows. In Section 2, we present the procedure for robust covariance estimation when only finite fourth moments are assumed for both factors and noises, without any specific distributional assumption. The theoretical justification is provided in Section 3. Simulations are carried out in Section 4 to demonstrate the effectiveness of the proposed procedure. We also conduct a real data analysis of the portfolio risk of S&P stocks via the Fama-French model in Section 5. Technical proofs are deferred to the appendix.

2 Robust covariance estimation

Consider the factor model (1.1) again with observed factors. It can be written in the vector form as

$$y_t = B f_t + u_t, \qquad (2.1)$$

where yt = (y1t,…, ypt)^T, ft ∈ ℝ^r are the factors for t = 1,…, n, B = (b1,…, bp)^T is the fixed unknown loading matrix, and ut = (u1t,…, upt)^T is uncorrelated with the factors. We assume that the (ft, ut) have zero mean and are independent for t = 1,…, n. A motivating example from economic and financial studies is the classical Fama-French model, where the yit’s represent excess returns of stocks in the market and the ft’s are interpreted as common factors driving the market. It would be more natural to allow for weak temporal dependence such as α-mixing, as in Fan et al. (2016). Although this is possible, we assume independence in this paper for simplicity of analysis.

2.1 Assumptions

We now state the main assumptions of the model. Let Σf be the covariance of ft, and Σu the covariance of ut. A covariance decomposition shows that Σ, the covariance of yt, comprises two parts,

$$\Sigma = B \Sigma_f B^T + \Sigma_u. \qquad (2.2)$$

We assume that Σu is sparse, with the sparsity level measured by

$$m_p = \max_{i \le p} \sum_{j \le p} |\sigma_{u,ij}|^q, \qquad q \in [0, 1). \qquad (2.3)$$

If q = 0, m_p is defined as $\max_{i \le p} \sum_{j \le p} 1\{\sigma_{u,ij} \ne 0\}$, i.e., exact sparsity. An intuitive justification of this sparsity measure stems from modeling the covariance structure: after the common factors are taken out, the remainder has only weak cross-sectional dependence. In addition, we assume that , as well as , is bounded away from 0 and ∞. If Σf is degenerate, we can always rescale the factors and reduce the number of observed factors so that the minimum eigenvalue of Σf is bounded away from zero. This leads to our first assumption.

Assumption 2.1

There exists a constant C > 0 such that and , where Σf is a r × r matrix with r being a fixed number.

Assuming a fixed r is only for simplicity of presentation. The number of factors r can be allowed to grow with n and p, in which case we would need to keep track of r in the theoretical analysis and impose a growth condition on r.

Another important feature of the factor model, observed by Stock and Watson (2002), is that the factors are pervasive in the sense that the low-rank part of (2.2) is the dominant component of Σ; more specifically, the top r eigenvalues grow linearly in p. This motivates the following assumption.

Assumption 2.2

(i) There exists a constant c > 0 such that λr(Σ) > cp.

(ii) The elements of B are uniformly bounded by a constant C.

First note that assumption (ii) implies λmax(Σ) = O(p), since ‖BΣfB^T‖ ≤ ‖Σf‖‖B‖_F^2 ≤ C^2 pr‖Σf‖. So together with (i), the above assumption requires the leading eigenvalues to grow at the order of p. This assumption is satisfied by the approximate factor model, since by Weyl’s inequality, |λi(Σ) − λi(BΣfB^T)| ≤ ‖Σu‖ for i ≤ r, so λi(Σ)/p is bounded from below whenever the main term λi(BΣfB^T)/p is. Furthermore, for illustrative purposes, if we additionally assume (though this is not needed in this paper) that the entries of B are iid with a finite second moment, it is not hard to see that B^TB/p satisfies such a condition with probability tending to one. Consequently, it is natural to assume that λi(Σ)/p is bounded from below for i ≤ r. Note that B is treated as deterministic throughout the paper.

Assumption (ii) is related to the matrix incoherence condition. In fact, when λmax(Σ) grows linearly with p, the boundedness of ‖B‖max is equivalent to bounded incoherence of the eigenvectors of Σ, a condition that is standard in the matrix completion literature (Candès and Recht, 2009) and the robust PCA literature (Chandrasekaran et al., 2011).

We now consider the moment assumption of random variables in model (1.1).

Assumption 2.3

The pairs (ft, ut) are iid with mean zero and bounded fourth moments. That is, there exists a constant C > 0 such that $\max_{j \le r} \mathbb{E} f_{jt}^4 \le C$ and $\max_{i \le p} \mathbb{E} u_{it}^4 \le C$.

The independence assumption can be relaxed to mixing conditions, but we do not pursue this direction in the current paper. Note that our main Theorem 3.1 is essentially deterministic: under a mixing condition such as that used by Fan et al. (2011), as long as the max-norm error bound (3.2) in Corollary 3.1 holds, all conclusions of Theorem 3.1 follow immediately. See Section 3 for more details.

We establish our results for the above general family of distributions with only bounded fourth moments.

2.2 Robust estimation procedure

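The basic idea we propose is to estimate the covariance matrix of the joint vector zt = (yt^T, ft^T)^T instead of just that of yt, even though Σ = Cov(yt) is our target. The covariance of this concatenated (p + r)-dimensional vector contains all the information we need to recover the low-rank and sparse structures. Observe that the covariance matrix Σz := Cov(zt) can be expressed as

$$\Sigma_z = \begin{pmatrix} B\Sigma_f B^T + \Sigma_u & B\Sigma_f \\ \Sigma_f B^T & \Sigma_f \end{pmatrix} =: \begin{pmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{pmatrix}.$$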

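Any method that yields an estimate $\widehat{\Sigma}_z$ of Σz as an initial estimator, or estimates $\widehat{\Sigma}_{11}, \widehat{\Sigma}_{12}, \widehat{\Sigma}_{21}, \widehat{\Sigma}_{22}$ of its blocks, can be used to infer the unknown B, Σf and Σu. Specifically, using the estimator $\widehat{\Sigma}_z$, we can readily obtain an estimator of BΣfB^T through the identity

$$B\Sigma_f B^T = \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21}.$$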

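Subsequently, we subtract this estimator of BΣfB^T from $\widehat{\Sigma}_{11}$ to obtain $\widehat{\Sigma}_u = \widehat{\Sigma}_{11} - \widehat{\Sigma}_{12}\widehat{\Sigma}_{22}^{-1}\widehat{\Sigma}_{21}$. Given the sparsity structure of Σu assumed in Section 2.1, the well-studied thresholding approach (Bickel and Levina, 2008; Rothman et al., 2009; Cai and Liu, 2011) can be employed. Applying thresholding to $\widehat{\Sigma}_u$, we obtain a thresholded matrix $\widehat{\Sigma}_u^{\mathcal{T}}$ with guaranteed errors in terms of the max-norm and the operator norm. The final step is to add $\widehat{\Sigma}_u^{\mathcal{T}}$ to the estimator $\widehat{\Sigma}_{12}\widehat{\Sigma}_{22}^{-1}\widehat{\Sigma}_{21}$ of BΣfB^T to produce the final estimator $\widehat{\Sigma}$ of Σ.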
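As a quick sanity check of the identity BΣfB^T = Σ12Σ22^{-1}Σ21 and of the subtraction step, the following NumPy sketch builds a population Σz from randomly drawn B, Σf and a diagonal Σu (hypothetical values chosen only for illustration) and recovers both components from the blocks of Σz.

```python
import numpy as np

rng = np.random.default_rng(1)
p, r = 6, 2

# Hypothetical population quantities, for illustration only.
B = rng.standard_normal((p, r))                 # loading matrix
A = rng.standard_normal((r, r))
Sigma_f = A @ A.T + r * np.eye(r)               # positive definite factor covariance
Sigma_u = np.diag(rng.uniform(1.0, 2.0, p))     # (trivially sparse) idiosyncratic covariance

# Joint covariance of z_t = (y_t^T, f_t^T)^T, assembled block by block.
low_rank = B @ Sigma_f @ B.T
Sigma_z = np.block([[low_rank + Sigma_u, B @ Sigma_f],
                    [Sigma_f @ B.T,      Sigma_f]])

# Partition Sigma_z into Sigma_11, Sigma_12, Sigma_21, Sigma_22.
S11, S12 = Sigma_z[:p, :p], Sigma_z[:p, p:]
S21, S22 = Sigma_z[p:, :p], Sigma_z[p:, p:]

# B Sigma_f B^T = Sigma_12 Sigma_22^{-1} Sigma_21; solve() avoids forming the inverse.
recovered_low_rank = S12 @ np.linalg.solve(S22, S21)
recovered_Sigma_u = S11 - recovered_low_rank

print(np.allclose(recovered_low_rank, low_rank), np.allclose(recovered_Sigma_u, Sigma_u))
```

With estimated blocks in place of population ones, the same two lines give the plug-in estimators of BΣfB^T and Σu used before thresholding.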

Because we only assume bounded fourth moments for the factors and errors, we estimate Σz with a robust method. For simplicity, we assume that zt has zero mean, so that the covariance matrix of zt takes the form $\Sigma_z = \mathbb{E}(z_t z_t^T)$. We use the M-estimators proposed in Catoni (2012) and Fan et al. (2017), where the authors proved concentration properties for estimating the population mean of a random variable with only a finite second moment. Here the variables of interest are the products $z_{it} z_{jt}$, so we naturally need bounded fourth moments of zt.

In essence, minimizing a suitable loss function, such as the Huber loss, yields an estimator of the population mean with deviations of order n^{−1/2}. The Huber loss reads

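$$\rho_\alpha(x) = \begin{cases} x^2/2, & |x| \le \alpha, \\ \alpha|x| - \alpha^2/2, & |x| > \alpha. \end{cases} \qquad (2.4)$$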

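Choosing $\alpha = v\sqrt{n/\log(\epsilon^{-1})}$ with ε ∈ (0, 1), where v is an upper bound of the standard deviation of the iid random variables Xi of interest, Fan et al. (2017) showed that the minimizer $\widehat{\mu} = \arg\min_{\mu} \sum_{i=1}^n \rho_\alpha(X_i - \mu)$ satisfies

$$\mathbb{P}\Big(|\widehat{\mu} - \mu| \le 4v\sqrt{\log(\epsilon^{-1})/n}\Big) \ge 1 - 2\epsilon \qquad (2.5)$$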

when n ≥ 8 log(ε−1), where μ = EXi. This finite-sample result holds for any distribution with a bounded second moment, including asymmetric ones such as the distribution of Z^2 for a random variable Z. The bounded-second-moment assumption for mean estimation translates into a bounded-fourth-moment assumption for covariance estimation, because covariances are expectations of products of two random variables: when applying (2.5), we take Xi to be the square of a random variable or the product of two random variables. The diverging parameter α is chosen to reduce the bias of the M-estimator for asymmetric distributions. When applying this method to estimate Σz element-wise, we expect $\widehat{\Sigma}_{11}, \widehat{\Sigma}_{12}, \widehat{\Sigma}_{21}, \widehat{\Sigma}_{22}$ to achieve an element-wise maximal error of $O_P(\sqrt{\log p/n})$, where the logarithmic term is incurred when we bound the errors uniformly. The formal result is given in Section 3.
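A minimal sketch of the adaptive Huber mean estimator, assuming NumPy. It minimizes the loss (2.4) by a Newton-Raphson iteration on the score Σψα(Xi − μ), with ψα(x) = clip(x, −α, α), safeguarded by bisection. The function name `huber_mean` and the tuning α = v·√(n/log(1/ε)) in the usage lines are illustrative assumptions of a diverging parameter of the kind discussed above, not code from the paper.

```python
import numpy as np

def huber_mean(x, alpha, init=None, tol=1e-10, max_iter=200):
    """Minimize sum_i rho_alpha(x_i - mu) over mu.

    The score g(mu) = sum_i clip(x_i - mu, -alpha, alpha) is non-increasing
    in mu, so its root lies in [min(x), max(x)]. Newton steps that leave the
    current bracket are replaced by bisection.
    """
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(), x.max()
    mu = np.median(x) if init is None else float(np.clip(init, lo, hi))
    for _ in range(max_iter):
        r = x - mu
        g = np.clip(r, -alpha, alpha).sum()
        if g > 0:
            lo = mu          # root lies to the right of mu
        else:
            hi = mu          # root lies to the left of (or at) mu
        n_inside = np.count_nonzero(np.abs(r) <= alpha)   # equals -g'(mu)
        new = mu + g / n_inside if n_inside > 0 else 0.5 * (lo + hi)
        if not lo <= new <= hi:
            new = 0.5 * (lo + hi)                        # bisection fallback
        if abs(new - mu) < tol:
            return new
        mu = new
    return mu

# Usage: heavy-tailed sample with true mean 0.
rng = np.random.default_rng(0)
n, eps, v = 200, 0.01, 2.0              # v: assumed bound on the standard deviation
x = rng.standard_t(df=3, size=n)
alpha = v * np.sqrt(n / np.log(1 / eps))  # grows like sqrt(n)
print(huber_mean(x, alpha), x.mean())
```

As α grows, every residual falls inside [−α, α] and the estimator reduces to the sample mean; as α shrinks, it moves toward the median.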

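In earlier work, Catoni (2012) proposed solving the equation $\sum_{i=1}^n h(\alpha(X_i - \theta)) = 0$ in θ, where the strictly increasing function h satisfies $-\log(1 - x + x^2/2) \le h(x) \le \log(1 + x + x^2/2)$. For ε ∈ (0, 1) and n > 2 log(ε−1), Catoni (2012) proved a deviation bound for the resulting estimator that holds with probability at least 1 − 2ε; when n ≥ 4 log(ε−1) and α is chosen appropriately, this bound is of the same order $v\sqrt{\log(\epsilon^{-1})/n}$ as (2.5), where v is an upper bound of the standard deviation. This M-estimator can also be used for covariance estimation, though it usually has a larger bias, as shown in Fan et al. (2017).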
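For comparison, here is a sketch of Catoni's estimator, assuming NumPy. It uses the influence function h(x) = sign(x)·log(1 + |x| + x²/2), which is strictly increasing and meets both bounds above, and finds the root in θ by bisection. The names `catoni_h` and `catoni_mean`, and the choice α = √(2 log(1/ε)/(n v²)) in the usage lines, are assumptions for illustration; note that Catoni's α multiplies the residuals rather than truncating them.

```python
import numpy as np

def catoni_h(x):
    """h(x) = sign(x) * log(1 + |x| + x^2/2).

    Strictly increasing, and -log(1 - x + x^2/2) <= h(x) <= log(1 + x + x^2/2).
    """
    return np.sign(x) * np.log1p(np.abs(x) + 0.5 * x ** 2)

def catoni_mean(x, alpha, n_iter=200):
    """Solve sum_i h(alpha * (x_i - theta)) = 0 for theta by bisection.

    The left-hand side is decreasing in theta, non-negative at theta = min(x)
    and non-positive at theta = max(x), so the root is bracketed.
    """
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(), x.max()
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if catoni_h(alpha * (x - mid)).sum() > 0:
            lo = mid          # root lies to the right of mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Usage with a hypothetical tuning of the usual order sqrt(log(1/eps) / (n v^2)).
rng = np.random.default_rng(0)
n, eps, v = 200, 0.01, 2.0
x = rng.standard_t(df=3, size=n)
alpha = np.sqrt(2 * np.log(1 / eps) / (n * v ** 2))
print(catoni_mean(x, alpha), x.mean())
```

Since h(x) ≈ x near zero, a very small α makes the estimator close to the sample mean, while larger α down-weights extreme residuals.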

The whole procedure can be presented in the following steps:

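- Step 1. For each entry of the covariance matrix Σz, obtain a robust estimator by solving a convex minimization problem (for example, through the Newton-Raphson method):

  $$\widehat{\sigma}_{z,ij} = \arg\min_{\mu} \sum_{t=1}^n \rho_\alpha(z_{it} z_{jt} - \mu), \qquad (2.6)$$

  where α is chosen as discussed above, and set $\widehat{\Sigma}_z = (\widehat{\sigma}_{z,ij})$.
- Step 2. Derive an estimator of Σu through the algebraic manipulation

  $$\widehat{\Sigma}_u = \widehat{\Sigma}_{11} - \widehat{\Sigma}_{12}\widehat{\Sigma}_{22}^{-1}\widehat{\Sigma}_{21},$$

  and then apply the adaptive thresholding of Cai and Liu (2011). That is,

  $$(\widehat{\Sigma}_u^{\mathcal{T}})_{ij} = \begin{cases} \widehat{\sigma}_{u,ii}, & i = j, \\ s_{ij}(\widehat{\sigma}_{u,ij}), & i \ne j, \end{cases}$$

  where sij(·) is the generalized shrinkage function (Antoniadis and Fan, 2001; Rothman et al., 2009), satisfying $|s_{ij}(z)| \le |z|$, $s_{ij}(z) = 0$ for $|z| \le \tau_{ij}$ and $|s_{ij}(z) - z| \le \tau_{ij}$, and τij is an entry-dependent threshold.
- Step 3. Produce the final estimator of Σ:

  $$\widehat{\Sigma} = \widehat{\Sigma}_{12}\widehat{\Sigma}_{22}^{-1}\widehat{\Sigma}_{21} + \widehat{\Sigma}_u^{\mathcal{T}}.$$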

Note that in the above steps, the choices of the parameter v (in the definition of α) and of the thresholds τij are not yet specified; they are discussed in Section 3.
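The three steps can be sketched end to end in NumPy as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: `robust_factor_cov` is a hypothetical name, Step 1 uses a simple bisection solver for (2.6), Step 2 uses soft thresholding as one concrete member of the generalized shrinkage family with the hypothetical entry-dependent threshold τij = τ·√(σ̂u,ii σ̂u,jj), and the α and τ values in the usage lines are arbitrary.

```python
import numpy as np

def huber_mean(x, alpha, n_iter=100):
    """Root of sum_i clip(x_i - mu, -alpha, alpha), found by bisection."""
    lo, hi = float(np.min(x)), float(np.max(x))
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if np.clip(x - mid, -alpha, alpha).sum() > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def robust_factor_cov(Y, F, alpha_diag, alpha_off, tau):
    """Three-step estimate of Sigma = Cov(y_t) with observed factors.

    Y: (n, p) centered observations; F: (n, r) centered observed factors.
    alpha_diag / alpha_off: Huber parameters for diagonal / off-diagonal
    entries of Sigma_z; tau: constant in tau_ij = tau * sqrt(s_ii * s_jj).
    """
    n, p = Y.shape
    Z = np.hstack([Y, F])
    d = Z.shape[1]

    # Step 1: entry-wise adaptive Huber estimates of Sigma_z = E[z_t z_t^T].
    Sz = np.empty((d, d))
    for i in range(d):
        for j in range(i, d):
            alpha = alpha_diag if i == j else alpha_off
            Sz[i, j] = Sz[j, i] = huber_mean(Z[:, i] * Z[:, j], alpha)

    # Step 2: Sigma_u = Sigma_11 - Sigma_12 Sigma_22^{-1} Sigma_21, then threshold.
    S11, S12, S21, S22 = Sz[:p, :p], Sz[:p, p:], Sz[p:, :p], Sz[p:, p:]
    low_rank = S12 @ np.linalg.solve(S22, S21)
    Su = S11 - low_rank
    sd = np.sqrt(np.clip(np.diag(Su), 0.0, None))
    tau_ij = tau * np.outer(sd, sd)
    Su_thr = np.sign(Su) * np.maximum(np.abs(Su) - tau_ij, 0.0)  # soft thresholding
    np.fill_diagonal(Su_thr, np.diag(Su))   # diagonal entries are kept as is

    # Step 3: add the low-rank part back.
    return low_rank + Su_thr, Su_thr

# Usage on simulated heavy-tailed data (all numbers are arbitrary choices).
rng = np.random.default_rng(0)
n, p, r = 300, 20, 2
B = rng.standard_normal((p, r))
F = rng.standard_t(df=5, size=(n, r))
Y = F @ B.T + rng.standard_t(df=5, size=(n, p))
Sigma_hat, Su_hat = robust_factor_cov(Y, F, alpha_diag=50.0, alpha_off=10.0,
                                      tau=2.0 * np.sqrt(np.log(p) / n))
```

Hard thresholding or SCAD could be dropped into the same slot as the soft-thresholding line, since any generalized shrinkage function fits Step 2.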

Step 1 requires computing (p + r)(p + r + 1)/2 adaptive Huber estimators (2.6), one for each distinct entry of the symmetric (p + r) × (p + r) matrix Σz. This can be computationally intensive when p is large, even though each is a univariate convex optimization problem. The fact that we solve so many similar problems (2.6) allows a more efficient implementation, the key being a good initial value. A simple choice is to use the sample covariance as the initial value for (2.6). A better alternative is to interpolate the robust estimates already computed. More precisely, to get an initial value for the robust covariance of the pair (i0, j0), we find the smallest interval $[\tilde{\sigma}_{i_1 j_1}, \tilde{\sigma}_{i_2 j_2}]$, formed by the sample covariances of already computed pairs, that contains the sample covariance $\tilde{\sigma}_{i_0 j_0}$, and then take the linear interpolation of the corresponding robust estimates $\widehat{\sigma}_{i_1 j_1}$ and $\widehat{\sigma}_{i_2 j_2}$ as the initial value for solving the adaptive Huber problem for the pair (i0, j0).
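The interpolation warm start described above might be sketched as follows, assuming NumPy and Python's `bisect` module. Pairs are visited in a random order (an assumption; the visiting order is not specified in the text), already computed pairs are kept sorted by their sample moments, and `np.interp` supplies the linear interpolation between the bracketing neighbours; outside the computed range the sample moment itself is used. Both function names are hypothetical.

```python
import bisect
import numpy as np

def huber_newton(x, alpha, init, tol=1e-10, max_iter=200):
    """Safeguarded Newton-Raphson for the adaptive Huber mean; returns (estimate, iterations)."""
    lo, hi = float(x.min()), float(x.max())
    mu = float(np.clip(init, lo, hi))
    for it in range(1, max_iter + 1):
        r = x - mu
        g = np.clip(r, -alpha, alpha).sum()        # score, non-increasing in mu
        if g > 0:
            lo = mu
        else:
            hi = mu
        k = np.count_nonzero(np.abs(r) <= alpha)   # minus the slope of the score
        new = mu + g / k if k > 0 else 0.5 * (lo + hi)
        if not lo <= new <= hi:
            new = 0.5 * (lo + hi)                  # bisection fallback
        if abs(new - mu) < tol:
            return new, it
        mu = new
    return mu, max_iter

def warm_started_second_moments(Z, alpha, seed=0):
    """Entry-wise Huber estimates of E[z_i z_j], warm-started by interpolation."""
    n, d = Z.shape
    pairs = [(i, j) for i in range(d) for j in range(i, d)]
    order = np.random.default_rng(seed).permutation(len(pairs))
    xs, ys = [], []      # sorted sample moments and the matching robust estimates
    est = np.empty((d, d))
    total_iters = 0
    for idx in order:
        i, j = pairs[idx]
        prod = Z[:, i] * Z[:, j]
        s0 = prod.mean()
        # Interpolate inside the computed range, otherwise start from the sample moment.
        init = np.interp(s0, xs, ys) if xs and xs[0] <= s0 <= xs[-1] else s0
        est[i, j], iters = huber_newton(prod, alpha, init)
        est[j, i] = est[i, j]
        total_iters += iters
        k = bisect.bisect_left(xs, s0)
        xs.insert(k, s0)
        ys.insert(k, est[i, j])
    return est, total_iters

rng = np.random.default_rng(0)
Z = rng.standard_t(df=5, size=(200, 8))
est, total_iters = warm_started_second_moments(Z, alpha=20.0)
print(total_iters)
```

The warm start changes only the starting point, not the convex problem, so the estimates agree with cold-started ones up to the solver tolerance.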

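Before delving into the analysis of the procedure, we first digress to a technical issue. Recall that $\widehat{\Sigma}_{22}$ is an estimator of Σf. By Weyl’s inequality,

$$|\lambda_{\min}(\widehat{\Sigma}_{22}) - \lambda_{\min}(\Sigma_f)| \le \|\widehat{\Sigma}_{22} - \Sigma_f\|.$$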

Since both matrices are low-dimensional (r × r), as long as we can estimate every entry of Σf accurately enough (see Lemma 3.1 below), $\|\widehat{\Sigma}_{22} - \Sigma_f\|$ vanishes with high probability as n diverges. Therefore $\widehat{\Sigma}_{22}$ is invertible with high probability, and there is no major issue in implementing the procedure. In cases where a positive semidefinite matrix is required, we replace the matrix by its nearest positive semidefinite version. This projection can be applied to different matrices in the procedure; for example, for $\widehat{\Sigma}_z$, we solve the following optimization problem:

$$\widetilde{\Sigma}_z = \arg\min_{A \succeq 0} \|A - \widehat{\Sigma}_z\|_{\max}, \qquad (2.7)$$

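and simply use $\widetilde{\Sigma}_z$ as a surrogate for $\widehat{\Sigma}_z$. Observe that, since Σz itself is positive semidefinite and hence feasible for (2.7),

$$\|\widetilde{\Sigma}_z - \Sigma_z\|_{\max} \le \|\widetilde{\Sigma}_z - \widehat{\Sigma}_z\|_{\max} + \|\widehat{\Sigma}_z - \Sigma_z\|_{\max} \le 2\|\widehat{\Sigma}_z - \Sigma_z\|_{\max}.$$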

Thus, apart from a different constant, $\widetilde{\Sigma}_z$ inherits all the desired properties of $\widehat{\Sigma}_z$, since, as we will see in Section 3, those properties rely only on the max-norm error bound. Hence we can safely replace $\widehat{\Sigma}_z$ with $\widetilde{\Sigma}_z$ without modifying our estimation procedure. Moreover, (2.7) can be cast as the semidefinite program below,

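$$\min_{A,\, t} \ t \quad \text{subject to} \quad A \succeq 0, \quad -t \le A_{ij} - (\widehat{\Sigma}_z)_{ij} \le t \ \text{ for all } i, j, \qquad (2.8)$$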

which can be easily solved by a semidefinite programming solver, e.g. Grant et al. (2008).

3 Theoretical analysis

In this section, we establish the theoretical properties of our robust estimator under bounded fourth moments. We also show that when the data are known to come from more restricted families (e.g., sub-Gaussian), commonly used estimators such as the sample covariance matrix suffice as the initial estimator in Step 1.

3.1 General theoretical properties

From the above discussion of M-estimators and their concentration results, the following lemma is immediate.

Lemma 3.1

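Suppose that a p-dimensional random vector X is centered and has finite fourth moments, i.e., EX = 0 and $\max_{i \le p} \mathbb{E}X_i^4 < \infty$. Let σij = E(XiXj) and let $\widehat{\sigma}_{ij}$ be Huber’s estimator with parameter α of order $v\sqrt{n/\log(p/\delta)}$. Then there exists a universal constant C such that for any δ ∈ (0, 1) and n ≥ C log(p/δ), with probability at least 1 − δ,

$$\max_{i,j \le p} |\widehat{\sigma}_{ij} - \sigma_{ij}| \le C v \sqrt{\frac{\log(p/\delta)}{n}}, \qquad (3.1)$$

where v is a pre-determined parameter satisfying v2 ≥ maxi,j≤p Var(XiXj).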

In practice, we do not know any of the fourth moments in advance. To pick a good v, one possibility is Lepski’s adaptation method (Lepskii, 1992), in which a geometrically increasing sequence of values of v is tried and the estimate is taken as the midpoint of the smallest confidence interval that intersects all the larger ones; see Catoni (2012) for details. Alternatively, we may simply use the empirical variance to obtain a rough bound for v, similar to Fan et al. (2015b).

Recall that zt is a (p + r)-dimensional vector concatenating yt and ft. From Assumption 2.3, there is a constant C0 that uniformly bounds Var(zit zjt) over all i, j ≤ p + r. This leads to the following result.

Corollary 3.1

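Suppose that $\widehat{\Sigma}_z$ is an estimator of the covariance matrix Σz whose entries are Huber’s estimators with parameter α of order $v\sqrt{n/\log(p/\delta)}$. Then there exists a universal constant C such that for any δ ∈ (0, 1) and n ≥ C log(p/δ), with probability at least 1 − δ,

$$\|\widehat{\Sigma}_z - \Sigma_z\|_{\max} \le C v \sqrt{\frac{\log(p/\delta)}{n}}, \qquad (3.2)$$

where v is a pre-determined parameter satisfying v2 ≥ C0.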

After Step 1 of the proposed procedure, we obtain an estimator $\widehat{\Sigma}_z$ that achieves the optimal element-wise rate of convergence. With $\widehat{\Sigma}_z$, we proceed to establish convergence rates for both $\widehat{\Sigma}_u^{\mathcal{T}}$ and $\widehat{\Sigma}$. The key theorem linking the estimation error in the element-wise max-norm with other metrics is stated below.

Theorem 3.1

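Under Assumptions 2.1–2.3, suppose we have an estimator $\widehat{\Sigma}_z$ satisfying

$$\|\widehat{\Sigma}_z - \Sigma_z\|_{\max} = O_P\Big(\sqrt{\log p/n}\Big). \qquad (3.3)$$

Then the three-step procedure in Section 2.2, with thresholds τij of order $\sqrt{\log p/n}$, generates $\widehat{\Sigma}_u^{\mathcal{T}}$ and $\widehat{\Sigma}$ satisfying

$$\|\widehat{\Sigma}_u^{\mathcal{T}} - \Sigma_u\| = O_P\Big(m_p (\log p/n)^{(1-q)/2}\Big) = \|(\widehat{\Sigma}_u^{\mathcal{T}})^{-1} - \Sigma_u^{-1}\|, \qquad (3.4)$$

and furthermore

$$\|\widehat{\Sigma} - \Sigma\|_{\Sigma} = O_P\Big(\frac{\sqrt{p}\,\log p}{n} + m_p (\log p/n)^{(1-q)/2}\Big), \qquad (3.5)$$

$$\|\widehat{\Sigma} - \Sigma\|_{\max} = O_P\Big(\sqrt{\log p/n}\Big), \qquad (3.6)$$

$$\|\widehat{\Sigma}^{-1} - \Sigma^{-1}\| = O_P\Big(m_p (\log p/n)^{(1-q)/2}\Big), \qquad (3.7)$$

where $\|A\|_\Sigma = p^{-1/2}\|\Sigma^{-1/2} A \Sigma^{-1/2}\|_F$ is the relative Frobenius norm defined in Fan et al. (2008), provided n is large enough that mp (log p/n)^{(1−q)/2} is bounded.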

Theorem 3.1 provides a convenient interface connecting a max-norm guarantee with the desired convergence rates. Therefore, any robust method that attains the element-wise optimal rate as in Corollary 3.1 can be used in Step 1 in place of the current M-estimator approach.
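When comparing estimators numerically, the three error metrics appearing in Theorem 3.1 can be computed as below, assuming NumPy and a positive definite Σ. The function names are our own; the relative Frobenius norm follows the definition ‖A‖_Σ = p^{-1/2}‖Σ^{-1/2}AΣ^{-1/2}‖_F, with Σ^{-1/2} obtained from an eigendecomposition.

```python
import numpy as np

def max_norm(A):
    """Entry-wise maximum ||A||_max."""
    return np.max(np.abs(A))

def operator_norm(A):
    """Spectral norm ||A||, i.e. the largest singular value."""
    return np.linalg.norm(A, 2)

def relative_frobenius(A, Sigma):
    """||A||_Sigma = p^{-1/2} ||Sigma^{-1/2} A Sigma^{-1/2}||_F for positive definite Sigma."""
    w, V = np.linalg.eigh(Sigma)
    Sigma_inv_half = (V / np.sqrt(w)) @ V.T    # V diag(w^{-1/2}) V^T
    p = Sigma.shape[0]
    return np.linalg.norm(Sigma_inv_half @ A @ Sigma_inv_half, 'fro') / np.sqrt(p)

Sigma = np.array([[2.0, 0.5], [0.5, 1.0]])
Sigma_hat = Sigma + np.array([[0.1, -0.05], [-0.05, 0.2]])
err = Sigma_hat - Sigma
print(max_norm(err), operator_norm(err), relative_frobenius(err, Sigma))
```

Normalizing by Σ^{-1/2} keeps the spiked eigenvalues of order p (Assumption 2.2) from dominating the error, which is why (3.5) is stated in this norm.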

3.2 Estimators under more restricted distributional assumptions

We analyzed the theoretical properties of the robust procedure in the previous subsection under the assumption of bounded fourth moments. Theorem 3.1 shows that any estimator achieving the optimal max-norm convergence rate can serve as the initial pilot estimator of Σz used in Steps 2 and 3 of our procedure. Thus the procedure depends on the distributional Assumption 2.3 only through Step 1, where a suitable estimator $\widehat{\Sigma}_z$ is constructed. Sometimes we do have more information on the shapes of the distributions of the factors and noises. For example, if zt has a sub-Gaussian distribution, the sample covariance matrix attains the optimal element-wise maximal rate for estimating Σz.

In earlier work, Fan et al. (2011) proposed to simply regress the observations yt on ft to obtain

$$\widehat{B} = Y^T F (F^T F)^{-1}, \qquad (3.8)$$

where Y = (y1,…, yn)^T and F = (f1,…, fn)^T. They then threshold the matrix $\widehat{\Sigma}_u = n^{-1}\widehat{U}^T\widehat{U}$, where $\widehat{U} = Y - F\widehat{B}^T$ is the matrix of regression residuals. This regression-based method is equivalent to directly applying the sample covariance matrix $n^{-1}\sum_{t=1}^n z_t z_t^T$ in Step 1, and also to solving a least-squares problem; it therefore suffers from robustness issues when the data come from heavy-tailed distributions. All the convergence rates achieved in Theorem 3.1 are identical to those in Fan et al. (2011), where exponentially decaying tails are assumed.
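The equivalence between the regression route and Steps 1 and 2 with the sample covariance can be checked numerically. The sketch below assumes NumPy, zero-mean data and no intercept (matching the convention in Section 2.2), and uses simulated Gaussian data purely for illustration. Algebraically, both sides equal n^{-1}Y^T(I − F(F^TF)^{-1}F^T)Y.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, r = 100, 5, 2
F = rng.standard_normal((n, r))                                   # observed factors
Y = F @ rng.standard_normal((r, p)) + rng.standard_normal((n, p))

# Regression route (3.8): B_hat = Y^T F (F^T F)^{-1}; residual covariance U^T U / n.
B_hat = Y.T @ F @ np.linalg.inv(F.T @ F)
U_hat = Y - F @ B_hat.T
Su_regression = U_hat.T @ U_hat / n

# Joint route: sample second-moment matrix of z_t = (y_t, f_t), then Step 2.
Z = np.hstack([Y, F])
Sz = Z.T @ Z / n
S11, S12, S21, S22 = Sz[:p, :p], Sz[:p, p:], Sz[p:, :p], Sz[p:, p:]
Su_joint = S11 - S12 @ np.linalg.solve(S22, S21)

print(np.allclose(Su_regression, Su_joint))
```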

As explained above, if zt is sub-Gaussian, the sample covariance matrix can be used in Step 1 instead of the adaptive Huber estimator. If ft and ut exhibit heavy tails, another widely used assumption is the multivariate t-distribution, which belongs to the elliptical family. The elliptical distribution is defined as follows. Let μ ∈ ℝp and Σ ∈ ℝp×p with rank(Σ) = q ≤ p. A p-dimensional random vector y has an elliptical distribution, denoted by y ∼ EDp(μ, Σ, ζ), if it has a stochastic representation (Fang et al., 1990)

$$y \overset{d}{=} \mu + \zeta A U, \qquad (3.9)$$

where U is a uniform random vector on the unit sphere in ℝq, ζ ≥ 0 is a scalar random variable independent of U, and A ∈ ℝp×q is a deterministic matrix satisfying AA′ = Σ. To make the representation (3.9) identifiable, we require $\mathbb{E}\zeta^2 = q$, so that Cov(y) = Σ. We also assume continuous elliptical distributions with $\mathbb{P}(\zeta = 0) = 0$.
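To make the stochastic representation concrete, the sketch below (assuming NumPy, full rank q = p and a Cholesky factor for A) draws y = μ + ζAU, with U obtained by normalizing a standard Gaussian vector. As an example of ζ it uses ζ² = χ²_q·(ν − 2)/χ²_ν, which gives a multivariate t-distribution with ν > 2 degrees of freedom rescaled so that Eζ² = q and hence Cov(y) = Σ. The helper names are hypothetical.

```python
import numpy as np

def sample_elliptical(n, mu, Sigma, zeta_sampler, rng):
    """Draw n samples of y = mu + zeta * A U with A A^T = Sigma (full rank, q = p)."""
    A = np.linalg.cholesky(Sigma)
    q = A.shape[1]
    G = rng.standard_normal((n, q))
    U = G / np.linalg.norm(G, axis=1, keepdims=True)   # uniform on the unit sphere
    zeta = zeta_sampler(n, q, rng)                      # drawn independently of U
    return mu + zeta[:, None] * (U @ A.T)

def t_zeta(nu):
    """zeta for a multivariate t with nu > 2 degrees of freedom, scaled so E[zeta^2] = q."""
    def sampler(n, q, rng):
        return np.sqrt(rng.chisquare(q, n) * (nu - 2) / rng.chisquare(nu, n))
    return sampler

rng = np.random.default_rng(0)
Sigma = np.array([[2.0, 0.5], [0.5, 1.0]])
Ys = sample_elliptical(200_000, np.zeros(2), Sigma, t_zeta(8.0), rng)
print(np.cov(Ys, rowvar=False))
```

Replacing `t_zeta` with a sampler returning the square root of a χ²_q draw would give the Gaussian member of the family.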

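If ft and ut are uncorrelated and jointly elliptical, i.e., $z_t \sim ED_{p+r}(0, \Sigma_z, \zeta)$, then a well-known good estimator of the correlation matrix R of zt is based on the marginal Kendall’s tau. Kendall’s tau correlation coefficient is defined as

$$\widehat{\tau}_{jk} = \frac{2}{n(n-1)} \sum_{1 \le t < t' \le n} \operatorname{sgn}\big((z_{jt} - z_{jt'})(z_{kt} - z_{kt'})\big), \qquad (3.10)$$

whose population counterpart is

$$\tau_{jk} = \mathbb{P}\big((z_{j} - \tilde{z}_{j})(z_{k} - \tilde{z}_{k}) > 0\big) - \mathbb{P}\big((z_{j} - \tilde{z}_{j})(z_{k} - \tilde{z}_{k}) < 0\big), \qquad (3.11)$$

where $\tilde{z}$ is an independent copy of $z_t$.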

For the elliptical family, the key identity rjk = sin(πτjk/2) relates Pearson’s correlation to Kendall’s correlation (Fang et al., 1990). Using $\widehat{r}_{jk} = \sin(\pi\widehat{\tau}_{jk}/2)$, Han and Liu (2014) showed that $\widehat{R} = (\widehat{r}_{jk})$ is an accurate estimate of R, achieving $\|\widehat{R} - R\|_{\max} = O_P(\sqrt{\log p/n})$. Let Σz = DRD, where R is the correlation matrix and D = diag(σ1,…, σ_{p+r}) is the diagonal matrix of the standard deviations of the individual coordinates. We construct $\widehat{\Sigma}_z = \widehat{D}\widehat{R}\widehat{D}$ by estimating D and R separately. As before, if fourth moments exist, we estimate D using only the diagonal entries (i = j) in Step 1, namely by the adaptive Huber method.
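A sketch of the Kendall’s tau based pilot estimator, assuming NumPy and zero-mean data. `kendall_tau_matrix` vectorizes (3.10) over all ordered pairs (t, t′), which counts each unordered pair twice and so matches the 2/(n(n − 1)) normalization; it needs O(d n²) memory, so it only suits small examples. The diagonal of D̂ comes from adaptive Huber estimates of E z_j², computed here by a simple bisection solver; all names are hypothetical.

```python
import numpy as np

def kendall_tau_matrix(Z):
    """Matrix of tau_hat_jk from (3.10); Z is (n, d)."""
    n = Z.shape[0]
    # S[j, t, s] = sign(z_jt - z_js) for every ordered pair (t, s).
    S = np.sign(Z.T[:, :, None] - Z.T[:, None, :])
    return np.einsum('jts,kts->jk', S, S) / (n * (n - 1))

def huber_mean(x, alpha, n_iter=100):
    """Root of sum_i clip(x_i - mu, -alpha, alpha), found by bisection."""
    lo, hi = float(np.min(x)), float(np.max(x))
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if np.clip(x - mid, -alpha, alpha).sum() > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def kendall_covariance(Z, alpha):
    """Estimate Sigma_z as D_hat R_hat D_hat with R_hat = sin(pi * tau_hat / 2)."""
    R_hat = np.sin(np.pi * kendall_tau_matrix(Z) / 2)
    # Robust variances: adaptive Huber estimates of E[z_j^2] (zero-mean data assumed).
    var = np.array([huber_mean(Z[:, j] ** 2, alpha) for j in range(Z.shape[1])])
    D_hat = np.diag(np.sqrt(var))
    return D_hat @ R_hat @ D_hat, R_hat

rng = np.random.default_rng(0)
Zk = rng.standard_t(df=5, size=(150, 4))
Sigma_K, R_hat = kendall_covariance(Zk, alpha=30.0)
print(np.round(Sigma_K, 3))
```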

Therefore, if zt is elliptically distributed, can be used as the initial pilot estimator for Σz in Step 1. Note that is more computationally efficient than . This difference can be reduced by using However, for general heavy-tailed distributions, there is no simple way to connect the Pearson correlation with Kendall’s correlation. Thus we should favor instead. We will compare the three estimators , and thoroughly through simulations in Section 4.

3.3 Asymptotics of robust mean estimators

In this section we look further into robust mean estimators. Though the result we shall present is asymptotic and not essential for our main Theorem 3.1, it is interesting in its own right and deserves some treatment.

Perhaps the best-known result on Huber’s mean estimator is its asymptotic minimax theory. Huber (1964) considered the so-called ε-contamination model:

$$\mathcal{F}_\epsilon = \{F : F = (1 - \epsilon)G + \epsilon H, \ H \in \mathcal{F}\}, \qquad X_i \sim F(\cdot - \theta),$$

where G is a known distribution, ε is fixed and ℱ is the family of symmetric distributions. Let Tn be the minimizer of $\sum_{i=1}^n \rho_H(x_i - T)$, where ρH(x) = x2/2 for |x| < α and ρH(x) = α|x| − α2/2 for |x| ≥ α, with α fixed. In the special case where G is Gaussian, Huber showed that, with an appropriate choice of α, his estimator minimizes the maximal asymptotic variance among all translation-invariant estimators, the maximum being taken over $\mathcal{F}_\epsilon$.

One problem with the ε-contamination model is that, if θ is the quantity of interest, the model makes sense only when H is assumed to be symmetric. In contrast, Catoni (2012) and Fan et al. (2017) studied a different family, consisting of distributions with finite second moments. Bickel (1976) called these the ‘local’ and ‘global’ models, respectively, and offered a detailed discussion.

This paper, along with the two preceding papers (Catoni, 2012; Fan et al., 2017), studies robustness in the sense of the second model. The technical novelty lies primarily in the concentration property, which is fundamental to high-dimensional statistics. This property requires the parameter α of ρH to grow with n, rather than being kept fixed, so that the condition in Corollary 3.1 is satisfied. It turns out that, in addition to the concentration property, we can characterize the asymptotic behavior of the estimator exactly.

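Let ρn denote ρH with α = αn, and let $\psi_n(x) = \rho_n'(x) = \max\{-\alpha_n, \min(x, \alpha_n)\}$ be its derivative. Write λn(t) = Eψn(X − t). Denote by tn a solution of λn(t) = 0, which is unique when n is sufficiently large, and by Tn a solution of $\sum_{i=1}^n \psi_n(x_i - t) = 0$. We have the following theorem.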

Theorem 3.2

Suppose that x1, …, xn is drawn from some distribution F with mean μ and finite variance σ2. Suppose {αn} is any sequence with limn→∞ αn = ∞. Then, as n → ∞,

and moreover

Theorem 3.2 decomposes the error Tn − μ into two components: variance and bias. The rate of the bias Eψn(X−μ) depends on the distribution F and on {αn}. When the distribution is either symmetric or , the second component tn − μ is , a negligible quantity compared with the asymptotic variance. Whereas Huber’s approach requires the symmetry restriction, our estimator does not. This theorem also lends credibility to the bias-variance tradeoff observed in the simulations (see Section 4.1).

It is worth comparing the above Huber loss minimization with another candidate for robust mean estimation, the “median-of-means” estimator of Hsu and Sabato (2014). As its name suggests, the method first divides the samples into k subgroups and computes the mean of each subgroup, and then takes the median of those means as the final estimator. The first step essentially symmetrizes the distribution through the central limit theorem, and the second step robustifies the procedure. According to Hsu and Sabato (2014), if we choose k = 4.5 log(p/δ) and estimate Σz element-wise, then, similar to (2.5), with probability 1 − δ we have a bound of the form

$$\|\widehat{\Sigma}_z - \Sigma_z\|_{\max} \le C v \sqrt{\frac{\log(p/\delta)}{n}}.$$

Although “median-of-means” has the desired concentration property, unlike our estimator, its asymptotic behavior differs from that of the empirical mean; as a consequence, it is not asymptotically efficient when F is Gaussian. Therefore, with regard to efficiency, we prefer the procedure proposed in Section 2.2.
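For reference, a minimal median-of-means sketch, assuming NumPy. `np.array_split` forms k contiguous groups (optionally after a random shuffle), and the entry-wise version uses k = ⌈4.5 log(d/δ)⌉ groups, with the dimension d of zt playing the role of p in the rule quoted above. Function names are hypothetical.

```python
import math
import numpy as np

def median_of_means(x, k, rng=None):
    """Median of the k group means; groups are (optionally shuffled) contiguous splits."""
    x = np.asarray(x, dtype=float)
    if rng is not None:
        x = rng.permutation(x)
    groups = np.array_split(x, k)
    return float(np.median([g.mean() for g in groups]))

def mom_second_moment(Z, delta, rng=None):
    """Entry-wise median-of-means estimate of E[z_i z_j] with k = ceil(4.5 log(d / delta))."""
    n, d = Z.shape
    k = min(n, max(1, math.ceil(4.5 * math.log(d / delta))))
    est = np.empty((d, d))
    for i in range(d):
        for j in range(i, d):
            est[i, j] = est[j, i] = median_of_means(Z[:, i] * Z[:, j], k, rng)
    return est

Zm = np.random.default_rng(0).standard_t(df=5, size=(300, 3))
print(np.round(mom_second_moment(Zm, delta=0.05), 3))
```

Because each group mean averages only about n/k observations, the estimator pays a constant-factor price in variance even for Gaussian data, which is the efficiency loss noted above.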

4 Simulations

We now present simulation results demonstrating the improvement of the proposed robust method over the least-squares based method (Fan et al., 2008, 2011) and the Kendall’s tau based method (Han and Liu, 2014; Fan et al., 2015b) when the factors and errors are heavy-tailed, both elliptically distributed and more generally.

However, one must be careful in choosing the tuning parameter α, since it plays an important role in the quality of the robust estimates. We therefore discuss the subtleties of choosing α before presenting the performance of the robust covariance matrix estimates.

4.1 Robust estimates of variances and covariances

For zero-mean random variables X1,…, Xp that may exhibit heavy-tailed behavior, the sample mean estimator of vij = E(XiXj) is not good enough for our purposes. Though unbiased, it offers no guarantee in the high-dimensional setting that many sample means stay close to their true values simultaneously.

As shown in the theoretical analysis, this problem is alleviated by robust estimators constructed from M-estimators whose influence functions grow slowly at extreme values. The desired concentration property (3.2) depends on the choice of the parameter α, which determines the range beyond which large values no longer gain influence. In practice, however, we have to make a good guess of Var(XiXj), as the theory suggests; even so, our choice of α may be too conservative.

To illustrate this, Figure 1 plots histograms of our estimates of v = Var(Xi) over 1000 runs, where Xi is generated from a t-distribution with ν = 4.2 degrees of freedom. The first three histograms show estimates constructed from Huber’s M-estimator with parameter

| (4.1) |

where β is 0.2, 1 and 5, respectively, and the last histogram shows the usual sample estimate (i.e., β = ∞). The quality of the estimates ranges from large biases to large variances. Figure 2 similarly plots histograms of estimates of v = Cov(Xi, Xj), where (Xi, Xj), i ≠ j, is generated from a multivariate t-distribution with ν = 4.2 and an identity scale matrix. The only difference is that in (4.1), the variance of $X_i^2$ is replaced by the variance of XiXj.

Figure 1.

The histograms show the estimates of Var(Xi) over 1000 runs for different parameters α, parametrized by β via (4.1). Xi ~ t4.2, so the true variance is Var(Xi) = 4.2/2.2 ≈ 1.909. The sample size is n = 100.

Figure 2.

The histograms show the estimates of Cov(Xi, Xj) for different parameters α over 1000 runs. The true covariance is Cov(Xi, Xj) = 0, the sample size is n = 100 and the number of degrees of freedom is 4.2.

Figure 1 exhibits a bias-variance tradeoff as α varies, which is consistent with the theory in Section 3.3. When α is small, the robust method underestimates the variance, yielding a large bias due to the asymmetry of the distribution of Xi². As α increases, a smaller bias is obtained at the cost of a larger variance, until α = ∞, in which case the robust estimator reduces to the sample mean.
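The tradeoff in Figure 1 can be reproduced qualitatively with a short Monte Carlo sketch. Since the calibration (4.1) is not restated here, the sketch uses a hypothetical grid of raw thresholds α applied to the residuals Xi² − μ instead of β; the grid, the number of runs and the function names are assumptions for illustration only.

```python
import numpy as np

def huber_mean(x, alpha, tol=1e-10, max_iter=200):
    # Root of sum(clip(x - mu, -alpha, alpha)) = 0 by bisection; alpha = inf gives the mean.
    if np.isinf(alpha):
        return float(np.mean(x))
    lo, hi = float(np.min(x)), float(np.max(x))
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if np.clip(x - mid, -alpha, alpha).sum() > 0:
            lo = mid
        else:
            hi = mid
        if hi - lo <= tol:
            break
    return 0.5 * (lo + hi)

def variance_study(alphas, n=100, runs=500, df=4.2, seed=0):
    """Mean and standard deviation of Huber estimates of Var(X) = E[X^2], X ~ t_df."""
    rng = np.random.default_rng(seed)
    est = {a: [] for a in alphas}
    for _ in range(runs):
        x2 = rng.standard_t(df, size=n) ** 2   # E[X^2] = df / (df - 2), about 1.909
        for a in alphas:
            est[a].append(huber_mean(x2, a))
    return {a: (float(np.mean(v)), float(np.std(v))) for a, v in est.items()}

for a, (m, s) in variance_study([1.0, 5.0, 25.0, np.inf]).items():
    print(f"alpha={a:>5}: mean={m:.3f}, sd={s:.3f}  (truth {4.2 / 2.2:.3f})")
```

Small thresholds give tightly concentrated but downward-biased estimates, because the right-skewed values of Xi² are clipped; larger thresholds remove the bias at the price of a wider spread.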

For covariance estimation, Figure 2 exhibits a different phenomenon. Since the distribution of XiXj is symmetric for i ≠ j, no bias is incurred when α is small. Since the variance is also smaller when α is smaller, we obtain a net gain in estimation quality. In the extreme case α → 0, we are actually estimating the median; fortunately, under distributional symmetry, the mean and the median coincide.
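A companion sketch for the off-diagonal case draws (Xi, Xj) from a bivariate t-distribution with ν = 4.2 and identity scale matrix, constructed as a standard normal vector divided by an independent √(W/ν) with W ~ χ²ν, so that XiXj is symmetric about its mean 0. The threshold α = 0.1, the run counts and the function names are hypothetical choices.

```python
import numpy as np

def huber_mean(x, alpha, tol=1e-10, max_iter=200):
    # Root of sum(clip(x - mu, -alpha, alpha)) = 0 by bisection.
    lo, hi = float(np.min(x)), float(np.max(x))
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if np.clip(x - mid, -alpha, alpha).sum() > 0:
            lo = mid
        else:
            hi = mid
        if hi - lo <= tol:
            break
    return 0.5 * (lo + hi)

def covariance_study(alpha=0.1, n=100, runs=500, df=4.2, seed=1):
    """Robust and sample estimates of Cov(Xi, Xj) = 0 over repeated samples."""
    rng = np.random.default_rng(seed)
    robust, sample = [], []
    for _ in range(runs):
        z = rng.standard_normal((n, 2))
        w = rng.chisquare(df, size=(n, 1))   # shared mixing variable per observation
        x = z / np.sqrt(w / df)              # bivariate t with identity scale matrix
        prod = x[:, 0] * x[:, 1]             # symmetric about Cov(Xi, Xj) = 0
        robust.append(huber_mean(prod, alpha))
        sample.append(prod.mean())
    return np.array(robust), np.array(sample)

robust, sample = covariance_study()
print("robust: mean %.4f, sd %.4f" % (robust.mean(), robust.std()))
print("sample: mean %.4f, sd %.4f" % (sample.mean(), sample.std()))
```

Because the products are symmetric, the small threshold costs essentially no bias while cutting the spread well below that of the sample mean.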

These simple simulations suggest how to choose α in practice: if the distribution is close to symmetric, one can choose α aggressively, i.e., make α smaller; otherwise, a conservative α is preferred.

4.2 Covariance matrix estimation

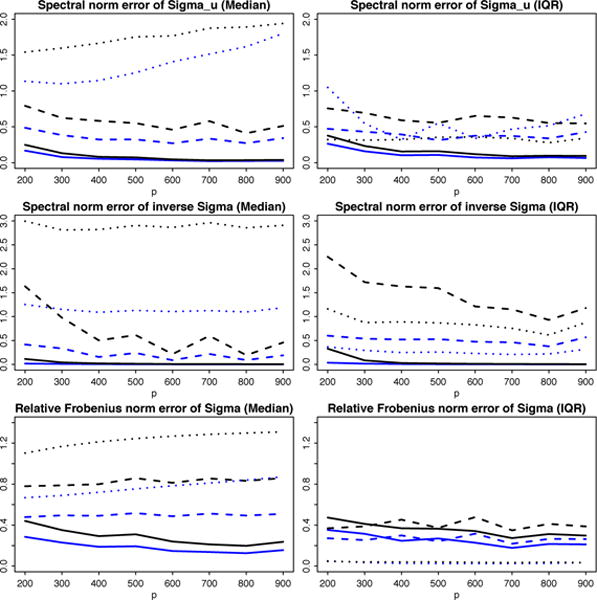

We implemented the robust estimation procedure with three initial pilot estimators , and . We simulated n samples of from a multivariate t-distribution with covariance matrix diag{Ir, 5Ip} and various degrees of freedom. Each row of B is independently sampled from a standard normal distribution. The population covariance matrix of yt = Bft + ut is then Σ = BBT + 5Ip. For p ranging from 200 to 900 and n = p/2, we computed the errors of the robust procedure in different norms. As suggested by the experiments in the previous subsection, we chose a larger parameter α to estimate the diagonal elements of Σz and a smaller one to estimate its off-diagonal elements. We used the thresholding parameter .
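The data-generating process of this subsection can be sketched as follows. The sketch takes the vector drawn from the t-distribution to be (ftT, utT)T with covariance diag{Ir, 5Ip}; this reading, the function name and the default r = 3 are assumptions. In the elliptical case one χ² mixing variable is shared by all coordinates of an observation, and the Gaussian scale is multiplied by (ν − 2)/ν so that the covariance (not the scale matrix) equals diag{Ir, 5Ip}; the non-elliptical case uses independent t entries rescaled in the same way.

```python
import numpy as np

def simulate_factor_data(n, p, r=3, df=3.0, elliptical=True, rng=None):
    """Draw (f_t, u_t) with covariance diag{I_r, 5 I_p}; return Y (n x p), F, U, B, Sigma."""
    rng = np.random.default_rng(rng)
    d = np.concatenate([np.ones(r), np.full(p, 5.0)])    # diagonal of diag{I_r, 5 I_p}
    if np.isinf(df):
        z = rng.standard_normal((n, r + p)) * np.sqrt(d)  # Gaussian case (nu = inf)
    elif elliptical:
        # Multivariate t: one chi-square mixing variable per observation; the scale
        # is shrunk by (df - 2)/df so that the covariance equals diag(d).
        g = rng.standard_normal((n, r + p)) * np.sqrt(d * (df - 2) / df)
        w = rng.chisquare(df, size=(n, 1))
        z = g / np.sqrt(w / df)
    else:
        # Element-wise iid t entries with the same variances (non-elliptical).
        z = rng.standard_t(df, size=(n, r + p)) * np.sqrt(d * (df - 2) / df)
    F, U = z[:, :r], z[:, r:]
    B = rng.standard_normal((p, r))        # each row of B ~ N(0, I_r)
    Y = F @ B.T + U                        # row t is y_t^T = (B f_t + u_t)^T
    Sigma = B @ B.T + 5.0 * np.eye(p)      # population covariance of y_t
    return Y, F, U, B, Sigma

# Example: p = 200 and n = p / 2 with very heavy elliptical tails (nu = 3).
Y, F, U, B, Sigma = simulate_factor_data(n=100, p=200, df=3.0, rng=0)
```

Setting `elliptical=False` gives the element-wise iid design, and `df=np.inf` gives the Gaussian baseline.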

The estimation errors are gauged in the following norms: , and , as in Theorem 3.1. We considered two settings: (1) zt is generated from a multivariate t-distribution with very heavy (ν = 3), moderately heavy (ν = 5) and light (ν = ∞, i.e. Gaussian) tails; (2) zt has element-wise iid one-dimensional t-distributed entries with ν = 3, 5 and ∞. The results are plotted in Figures 3 and 4, respectively. The estimation errors of the procedure using the sample covariance matrix serve as the baseline for comparison. For example, if is used to measure performance, the blue curve represents the ratio while the black curve represents the ratio , where , , are the estimators given by the robust procedure with initial pilot estimators , , for Σz, respectively. Therefore, if a ratio curve lies below 1, the corresponding method is better than the naive sample estimator of Fan et al. (2011), and vice versa. The further a curve lies below 1, the more robust the procedure is against heavy-tailed randomness.
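The ratio curves in Figures 3 and 4 can be computed along the following lines. Because the three norms are not legible in this version of the text, the sketch assumes three measures suggested by the proofs in the Appendix: the spectral norm ‖Σ̂ − Σ‖, the relative Frobenius norm p−1/2‖Σ−1/2Σ̂Σ−1/2 − Ip‖F, and the spectral norm of the inverse error ‖Σ̂−1 − Σ−1‖. All function names are hypothetical.

```python
import numpy as np

def inv_sqrt(S):
    """Symmetric inverse square root of a positive definite matrix."""
    vals, vecs = np.linalg.eigh(S)
    return (vecs / np.sqrt(vals)) @ vecs.T

def error_measures(Sigma_hat, Sigma):
    p = Sigma.shape[0]
    R = inv_sqrt(Sigma)
    return {
        "spectral": np.linalg.norm(Sigma_hat - Sigma, 2),
        "relative_frobenius": np.linalg.norm(R @ Sigma_hat @ R - np.eye(p), "fro") / np.sqrt(p),
        "inverse_spectral": np.linalg.norm(np.linalg.inv(Sigma_hat) - np.linalg.inv(Sigma), 2),
    }

def error_ratios(Sigma_hat, Sigma_hat_sample, Sigma):
    """Ratios below 1 mean the estimator beats the sample-covariance-based procedure."""
    num = error_measures(Sigma_hat, Sigma)
    den = error_measures(Sigma_hat_sample, Sigma)
    return {k: num[k] / den[k] for k in num}

Sigma = np.diag([1.0, 2.0, 4.0])
print(error_ratios(1.1 * Sigma, 1.2 * Sigma, Sigma))
```

In a study like the one above, the medians and IQRs of these ratios over the simulation replications would then be plotted against p.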

Figure 3.

Errors of robust estimates against varying p. Blue line represents ratio of errors with over errors with , while black line represents ratio of errors with over errors with . zt is generated by multivariate t-distribution with df = 3 (solid), 5 (dashed) and ∞ (dotted). The median errors and their IQR over 100 simulations are reported.

Figure 4.

Errors of robust estimates against varying p. Blue line represents ratio of errors with over errors with , while black line represents ratio of errors with over errors with . zt is generated by element-wise iid t-distribution with df = 3 (solid), 5 (dashed) and ∞ (dotted). The median errors and their IQR over 100 simulations are reported.

The first setting (Figure 3) represents a heavy-tailed elliptical distribution, where we expect the two robust methods to work better than the sample covariance based method, especially in the case of extremely heavy tails (solid lines for ν = 3). As expected, both the black and the blue curves under the three measures lie visibly below 1. On the other hand, if the data are indeed Gaussian (dotted lines for ν = ∞), the method based on the sample covariance performs better under most measures (ratios greater than 1). Nevertheless, our robust method still performs comparably to the sample covariance method, as its median error ratio stays around 1, whereas Kendall's tau method can be much worse than the sample covariance method. A plausible explanation is that the reduction in variance compensates for the bias incurred by our procedure. In addition, the IQR plots show that the proposed robust method is more stable than Kendall's tau.

The second setting (Figure 4) provides an example of non-elliptically distributed heavy-tailed data. Here the robust method dominates the other two methods, which supports the approach of this paper, especially when the data come from a general heavy-tailed distribution. While our method can deal with more general distributions, Kendall's tau method does not apply to distributions outside the elliptical family, which excludes the element-wise iid t-distribution in this setting. This explains why, under various measures, our robust method outperforms Kendall's tau method by a clear margin. Note that even in the first setting, where the data are indeed elliptical, the proposed robust method can still outperform Kendall's tau with proper tuning.

5 Real data analysis

In this section, we analyze historical financial data from 2005 to 2013 and assess to what extent our factor model characterizes the data.

The dataset used in our analysis consists of daily returns of 393 stocks, all of which are large market capitalization constituents of the S&P 500 index, collected without missing values from 2005 to 2013. This dataset has also been used in Fan et al. (2016), who investigated how covariates (e.g. size, volume) can help estimate factors and factor loadings, whereas the focus of the current paper is to develop robust methods in the presence of heavy-tailed data.

In addition, we collected factor data for the same period, where the factors are calculated according to the Fama-French three-factor model (Fama and French, 1993). After centering, the panel used for analysis is a 393 by 2265 matrix Y, together with a factor matrix F of size 2265 by 3. Here 2265 is the number of daily returns and 393 is the number of stocks.

5.1 Tail-heaviness

First, we examine how the daily returns are distributed, and in particular their tail behavior. In Figure 5, we show Q-Q plots that compare the distribution of all yit with the Gaussian distribution and with t-distributions whose degrees of freedom range from df = 2 to df = 6. We also fit a line in each plot, showing how much the return data deviate from the reference distribution. The data clearly have tails heavier than those of a Gaussian distribution, and the t-distribution with df = 4 is almost aligned with the return data. Similarly, we show Q-Q plots for the factors in Figure 6. These plots also show that t-distributions fit the data better; however, the tails are even heavier, and the t2 distribution seems to fit the data best.
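The reference lines in these Q-Q plots connect the points at the first and third quartiles. The sketch below computes such a line against the Gaussian distribution, using the standard library's `statistics.NormalDist`, and summarizes tail heaviness as the ratio between the empirical upper-tail deviation and the deviation predicted by the line. This summary statistic and its name are our own illustrative additions, not a quantity reported in the paper: a ratio near 1 indicates Gaussian-like tails, and values well above 1 indicate heavier tails.

```python
import numpy as np
from statistics import NormalDist

def qq_tail_ratio(x, prob=0.999):
    """Empirical vs. line-predicted deviation at quantile `prob` (Gaussian Q-Q plot)."""
    x = np.asarray(x, dtype=float)
    std_normal = NormalDist()
    q1, q3 = np.quantile(x, [0.25, 0.75])
    z1, z3 = std_normal.inv_cdf(0.25), std_normal.inv_cdf(0.75)
    slope = (q3 - q1) / (z3 - z1)                  # line through the quartile points
    intercept = q1 - slope * z1
    predicted = slope * std_normal.inv_cdf(prob)   # line value minus intercept at prob
    observed = np.quantile(x, prob) - intercept
    return float(observed / predicted)

rng = np.random.default_rng(0)
print(qq_tail_ratio(rng.standard_normal(200_000)))     # close to 1
print(qq_tail_ratio(rng.standard_t(3, size=200_000)))  # well above 1
```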

Figure 5.

Q-Q plots of excess returns yit for all i and t against the Gaussian distribution and t-distributions with 2, 4 and 6 degrees of freedom. In each plot, a line is fitted by connecting the points at the first and third quartiles.

Figure 6.

Q-Q plots of factors fit against the Gaussian distribution and t-distributions with 2, 4 and 6 degrees of freedom. In each plot, a line is fitted by connecting the points at the first and third quartiles.

5.2 Spiked covariance structure

We now examine what the covariance matrix of returns looks like, since a spiked covariance structure would justify the pervasiveness assumption. To reveal the spectral structure, we computed the eigenvalues of the sample covariance matrix YYT/n and made a histogram on the logarithmic scale (left panel of Figure 7). In the histogram, the counts in the rightmost four bins are 5, 1, 0 and 1, i.e. there are only a few large eigenvalues, which strongly signals a spiked structure. We also plotted the proportion of residue eigenvalues, $\sum_{i=K+1}^{p}\lambda_i / \sum_{i=1}^{p}\lambda_i$, against K in the right panel of Figure 7. The top 3 eigenvalues account for a major part of the total variance, which lends weight to the pervasiveness assumption.
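Both panels of Figure 7 are simple functions of the eigenvalues of YYT/n. The sketch below, with a hypothetical function name, takes the p × n centered panel Y (stocks in rows, as in the text) and returns the eigenvalues in decreasing order together with the residue proportions Σi>K λi / Σi λi for K = 1, …, Kmax; the logarithms of the eigenvalues can be passed to `np.histogram` for the left panel. The synthetic three-factor panel in the usage lines is an assumption for illustration, not the S&P 500 data.

```python
import numpy as np

def residual_eigen_proportion(Y, K_max=10):
    """Y: p x n centered panel. Returns eigenvalues of Y Y^T / n (decreasing)
    and, for K = 1..K_max, sum_{i > K} lambda_i / sum_i lambda_i."""
    p, n = Y.shape
    lam = np.linalg.eigvalsh(Y @ Y.T / n)[::-1]   # eigvalsh is ascending; reverse it
    props = 1.0 - np.cumsum(lam)[:K_max] / lam.sum()
    return lam, props

# Synthetic example: 3 pervasive factors plus unit-variance noise.
rng = np.random.default_rng(0)
p, n, r = 100, 500, 3
B = rng.standard_normal((p, r))
Y = B @ rng.standard_normal((r, n)) + rng.standard_normal((p, n))
Y -= Y.mean(axis=1, keepdims=True)                # center each series
lam, props = residual_eigen_proportion(Y)
counts, edges = np.histogram(np.log(lam), bins=20)  # left panel: log-scale histogram
print(np.round(props[:5], 3))
```

In this synthetic panel the residue proportion drops sharply up to K = 3 and then levels off, the same qualitative pattern that motivates the pervasiveness assumption.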

Figure 7.

Left panel: histogram of eigenvalues of the sample covariance matrix YYT/n, plotted on the logarithmic scale, i.e. each bin counts the number of log λi in a given range. Right panel: proportion of residue eigenvalues, $\sum_{i=K+1}^{p}\lambda_i / \sum_{i=1}^{p}\lambda_i$, against varying K, where λi is the ith largest eigenvalue of the sample covariance matrix YYT/n.

The spiked covariance structure has been studied in Paul (2007), Johnstone and Lu (2009) and many other papers, but in their regime the top ("spiked") eigenvalues do not grow with the dimension. In this paper, the spiked eigenvalues carry stronger signals and are thus easier to separate from the remaining eigenvalues. In this respect, the connotation of "spiked covariance structure" is closer to that in Wang and Fan (2017). This empirical phenomenon also supports the motivation of the study in Wang and Fan (2017).

5.3 Portfolio risk estimation

We now consider portfolio risk estimation. Specifically, for a portfolio with weight vector w ∈ Rp on all market assets, its risk is measured by the quantity wTΣw, where Σ is the true covariance of the excess returns of all assets. Note that Σ is time varying. We consider a class of weights with gross exposure c ≥ 1, that is, Σi wi = 1 and Σi |wi| = c, in four scenarios: c = 1, 1.4, 1.8 and 2.2. Note that c = 1 represents the case of no short selling, and (c − 1)/2 represents the level of exposure to short selling, since the long and short positions then sum to (c + 1)/2 and −(c − 1)/2, respectively.

To assess how well our robust estimator performs compared with the sample covariance, we computed the covariance estimators and using the daily data of the preceding 12 months, where is our robust covariance estimator and is the sample covariance, for every trading day from 2006 to 2013. We index those dates by t, where t runs from 1 to 2013 (the period from 2006-01-01 to 2013-12-31 happens to contain 2013 trading days, so here 2013 is the total number of trading days rather than a year). Let γt+1 be the vector of excess returns on the trading day following t. For a weight vector w, the error we use to compare the two approaches is

Note the bias-variance decomposition

where . The first term measures the size of the irreducible stochastic error, while the second term is the estimation error for the risk of portfolio w.

To generate multiple random weights w with gross exposure c, we adopted the strategy of Fan et al. (2015a), which aims to generate a uniform distribution on the simplex {w: Σi wi = 1, Σi |wi| = c}: (1) for each index i ≤ p, let ηi = 1 (long) with probability (c + 1)/(2c) and ηi = −1 (short) with probability (c − 1)/(2c); (2) generate iid ξi from the exponential distribution; (3) for ηi = 1, let $w_i = \frac{c+1}{2}\,\xi_i / \sum_{j:\eta_j = 1}\xi_j$, and for ηi = −1, let $w_i = -\frac{c-1}{2}\,\xi_i / \sum_{j:\eta_j = -1}\xi_j$. Figure 8 shows scatter plots in which the x-axis represents RR(w) and the y-axis RS(w). In addition, in the first plot we highlight the point with uniform weights (i.e. wi = 1/p), which serves as a benchmark for comparison. The dashed line shows where the two approaches have the same performance. For all the randomly generated w, the robust approach has smaller risk errors, and therefore better empirical performance in estimating portfolio risks.
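The weight-generating scheme can be sketched as below. Step (3) is implemented under the assumption that the exponential draws are normalized separately within the long and the short groups, so that the long positions sum to (c + 1)/2 and the short positions to −(c − 1)/2, which is exactly what the constraints Σi wi = 1 and Σi |wi| = c require. The function name and the redraw guard for the rare case of an empty group are our own additions.

```python
import numpy as np

def random_gross_exposure_weights(p, c, rng=None):
    """Random weights with sum(w) = 1 and sum(|w|) = c, for c >= 1."""
    rng = np.random.default_rng(rng)
    while True:
        # Step (1): eta_i = +1 (long) w.p. (c + 1)/(2c), -1 (short) otherwise.
        is_long = rng.random(p) < (c + 1) / (2 * c)
        # Redraw if a required group is empty (only plausible for very small p).
        if is_long.any() and (c == 1 or not is_long.all()):
            break
    xi = rng.exponential(size=p)                 # Step (2): iid Exp(1) draws
    w = np.zeros(p)
    # Step (3): normalize within each group.
    w[is_long] = (c + 1) / 2 * xi[is_long] / xi[is_long].sum()
    if c > 1:
        w[~is_long] = -(c - 1) / 2 * xi[~is_long] / xi[~is_long].sum()
    return w

for c in (1.0, 1.4, 1.8, 2.2):
    w = random_gross_exposure_weights(393, c, rng=0)
    print(c, round(w.sum(), 12), round(np.abs(w).sum(), 12))
uniform = np.full(393, 1 / 393)   # benchmark portfolio with uniform weights
```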

Figure 8.

(RR(w), RS(w)) for multiple randomly generated w. The four plots compare the errors of the two methods under different settings (upper left: no short selling; upper right: exposure c = 1.4; lower left: exposure c = 1.8; lower right: exposure c = 2.2). The red diamond in the first plot corresponds to uniform weights. The dashed line is the 45-degree line representing equal performance. Our robust method gives smaller errors.

Acknowledgments

The research was partially supported by NSF grants DMS-1206464 and DMS-1406266 and NIH grants R01-GM072611-11 and NIH R01GM100474-04.

A Appendix

Proof of Theorem 3.1

Since we have robust estimator such that , we clearly know , , , achieve the same rate. Using this, let us first prove . Obviously,

| (A.1) |

because the multiplication is along the fixed dimension r and each element is estimated with the rate of convergence . Also , therefore is good enough to estimate Σu = Σ−BΣfBT with error in max-norm.

Once the max-norm error of the sparse matrix Σu is controlled, it is not hard to show that the adaptive procedure in Step 2 gives such that the spectral error (Fan et al., 2011; Cai and Liu, 2011; Rothman et al., 2009), where we define . Furthermore, . So is also due to the lower boundedness of ‖Σu‖. Hence (3.4) holds.
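The recovery step used in this proof can be sketched in Python as follows: starting from a pilot estimate of the joint covariance of (ytT, ftT)T, one forms B̂ = Σ̂yfΣ̂f−1, the low-rank part B̂Σ̂fB̂T, and the residual covariance Σ̂u = Σ̂y − B̂Σ̂fB̂T, and then thresholds Σ̂u. The ordering of Σz (observed coordinates first), the function name, and the entry-dependent hard threshold τ(σ̂u,ii σ̂u,jj)1/2 are assumptions; the latter is one common form of adaptive thresholding in the cited literature, not necessarily the exact rule of Step 2.

```python
import numpy as np

def recover_covariance(Sigma_z, p, tau):
    """Sigma_z: (p + r) x (p + r) pilot covariance of (y_t, f_t), with y_t first."""
    S_y, S_yf, S_f = Sigma_z[:p, :p], Sigma_z[:p, p:], Sigma_z[p:, p:]
    B_hat = np.linalg.solve(S_f, S_yf.T).T      # B_hat = S_yf S_f^{-1} (S_f symmetric)
    low_rank = B_hat @ S_f @ B_hat.T            # B_hat S_f B_hat^T
    S_u = S_y - low_rank                        # residual covariance estimate
    d = np.sqrt(np.clip(np.diag(S_u), 0.0, None))
    keep = np.abs(S_u) >= tau * np.outer(d, d)  # entry-dependent hard threshold
    np.fill_diagonal(keep, True)                # never threshold the diagonal
    S_u_thr = np.where(keep, S_u, 0.0)
    return low_rank + S_u_thr, B_hat, S_u_thr

# Sanity check: the population joint covariance is recovered exactly.
rng = np.random.default_rng(3)
p, r = 8, 2
B = rng.standard_normal((p, r))
Sigma_f = np.array([[2.0, 0.3], [0.3, 1.0]])
Sigma_u = np.diag(rng.uniform(1.0, 2.0, p))
Sigma = B @ Sigma_f @ B.T + Sigma_u
Sigma_z = np.block([[Sigma, B @ Sigma_f], [Sigma_f @ B.T, Sigma_f]])
Sigma_hat, B_hat, Su_hat = recover_covariance(Sigma_z, p, tau=0.1)
```

Because y enters the joint covariance only through Cov(yt, ft) = BΣf, the loadings and Σu are read off directly from the blocks of Σz, which is why an element-wise accurate pilot estimate of Σz suffices.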

Proving (3.5) is trivial. when τ is chosen as the same order as wn and thus

Next let us take a look at the relative Frobenius convergence (3.6) for .

| (A.2) |

We bound the four terms one by one. The last term is the most straightforward,

The bound for Δ1 uses the fact that and are OP(1) and . So

The bound for Δ3 requires the additional result that , where and the last inequality is shown in Fan et al. (2008). So

Lastly, by similar trick, we have

Combining results above, by (A.2), we conclude that .
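The remaining step, the rate for the inverse, relies on the Woodbury formula. Applied to Σ = BΣfBT + Σu in its standard form, it reads Σ−1 = Σu−1 − Σu−1B(Σf−1 + BTΣu−1B)−1BTΣu−1, so only an r × r matrix has to be inverted besides Σu. The short numerical check below (names and dimensions are illustrative) confirms the identity on a random instance.

```python
import numpy as np

def woodbury_inverse(B, Sigma_f, Sigma_u):
    """Inverse of B Sigma_f B^T + Sigma_u via the Woodbury formula."""
    Su_inv = np.linalg.inv(Sigma_u)
    core = np.linalg.inv(Sigma_f) + B.T @ Su_inv @ B   # r x r matrix
    return Su_inv - Su_inv @ B @ np.linalg.solve(core, B.T @ Su_inv)

rng = np.random.default_rng(0)
p, r = 50, 3
B = rng.standard_normal((p, r))
Sigma_f = np.diag([3.0, 2.0, 1.0])
Sigma_u = np.diag(rng.uniform(0.5, 2.0, size=p))
direct = np.linalg.inv(B @ Sigma_f @ B.T + Sigma_u)
print(np.max(np.abs(woodbury_inverse(B, Sigma_f, Sigma_u) - direct)))
```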

Finally, we show the rate of convergence for . By the Woodbury formula,

Thus, let , and , , we have the following bound similar to (A.2):

| (A.3) |

From (3.4), . For the remaining terms, we need to find the rates for , , ‖D‖ and separately. Note that by Assumption 2.2 (ii). So and

In addition, it is not hard to show . Moreover, we claim ‖A−1‖ = OP(p−1), since and, by Weyl's inequality, λr(BΣfBT) ≥ λr(Σ) − ‖Σu‖ ≥ cp by Assumption 2.2 (i). Therefore, ‖Â−1 − A−1‖ ≤ ‖A−1‖‖Â−1‖‖Â − A‖ implies , and furthermore ‖Â−1‖ = OP(p−1). Finally, we combine the above rates and conclude

Combining the rates for , i = 1, 2, 3, 4, we conclude that (3.7) holds. The proof is now complete. □
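For completeness, the two facts used in the last step of this proof can be spelled out. The perturbation bound for the inverse follows from a one-line identity, and Weyl's inequality is applied to the decomposition Σ = BΣfBT + Σu, treating Σu as the perturbation:

```latex
\begin{aligned}
&\hat A^{-1} - A^{-1} = \hat A^{-1}(A - \hat A)A^{-1}
  \;\Longrightarrow\;
  \|\hat A^{-1} - A^{-1}\| \le \|A^{-1}\|\,\|\hat A^{-1}\|\,\|\hat A - A\|,\\
&\lambda_r(\Sigma) = \lambda_r(B\Sigma_f B^T + \Sigma_u) \le \lambda_r(B\Sigma_f B^T) + \|\Sigma_u\|
  \;\Longrightarrow\;
  \lambda_r(B\Sigma_f B^T) \ge \lambda_r(\Sigma) - \|\Sigma_u\|.
\end{aligned}
```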

Proof of Theorem 3.2

Without loss of generality, we assume μ = 0. By the dominated convergence theorem, we know that for all t, limn λn(t) = −t, that λn(t) is differentiable, that , and that . By Taylor's expansion, we have

| (A.4) |

where . Observe that

By Markov’s inequality,

For any ε ∈ (0, 1), there exists N > 0, such that for all n > N,

Plugging t = (1 + ε)λn(0) into (A.4),

where . Equivalently,

where |βn| ≤ /4. Similarly,

where . Also we have and . Multiplying both sides of the equations, we deduce that

If λn(0) = 0, the equation λn(t) = 0 has the zero t = 0, and in fact it is the unique zero for sufficiently large n, since λn(t) is nonincreasing and for n large enough. If λn(0) ≠ 0, at least one zero lies in the interval with endpoints (1 + ε)λn(0) and (1 − ε)λn(0). Since λn(0) → 0, for any zero in this interval we have , which implies . It follows that such a zero is unique for sufficiently large n. This leads to tn/λn(0) → 1, which proves the second claim of the theorem.

The proof of the first claim is similar in spirit to that of Huber (1964). Let us denote

By monotonicity, . Since

it follows that for any fixed z ∈ R,

where we denote and .

By the dominated convergence theorem, and σn(un)² → σ². By Taylor expansion of λn(un) at tn,

where . A similar argument shows that

This leads to , and thus .

Let us write

for the centered variables ξi with unit variance. If we can show

| (A.5) |

then, by the continuity of Φ, the standard normal distribution function, we have

which gives . Similarly, one can show that . At this point, we can conclude that the first claim of the theorem holds, i.e. .

To prove (A.5), it suffices to check Lindeberg’s condition:

for any ε > 0. Since λn(un) → 0 and σn(un) → σ, we only need to show

This is true due to

and the dominated convergence theorem. □

References

- Agarwal A, Negahban S, Wainwright MJ. Noisy matrix decomposition via convex relaxation: Optimal rates in high dimensions. The Annals of Statistics. 2012;40:1171–1197.

- Amini AA, Wainwright MJ. High-dimensional analysis of semidefinite relaxations for sparse principal components. IEEE International Symposium on Information Theory (ISIT 2008). IEEE; 2008.

- Antoniadis A, Fan J. Regularization of wavelet approximations. Journal of the American Statistical Association. 2001;96.

- Bai J. Inferential theory for factor models of large dimensions. Econometrica. 2003;71:135–171.

- Berthet Q, Rigollet P. Optimal detection of sparse principal components in high dimension. The Annals of Statistics. 2013;41:1780–1815.

- Bickel PJ. Another look at robustness: a review of reviews and some new developments. Scandinavian Journal of Statistics. 1976:145–168.

- Bickel PJ, Levina E. Covariance regularization by thresholding. The Annals of Statistics. 2008:2577–2604.

- Birnbaum A, Johnstone IM, Nadler B, Paul D. Minimax bounds for sparse PCA with noisy high-dimensional data. The Annals of Statistics. 2013;41:1055. doi: 10.1214/12-AOS1014.

- Cai T, Liu W. Adaptive thresholding for sparse covariance matrix estimation. Journal of the American Statistical Association. 2011;106:672–684.

- Cai T, Ma Z, Wu Y. Optimal estimation and rank detection for sparse spiked covariance matrices. Probability Theory and Related Fields. 2013;161:781–815. doi: 10.1007/s00440-014-0562-z.

- Cai TT, Zhang CH, Zhou HH. Optimal rates of convergence for covariance matrix estimation. The Annals of Statistics. 2010;38:2118–2144.

- Candès EJ, Li X, Ma Y, Wright J. Robust principal component analysis? Journal of the ACM (JACM). 2011;58:11.

- Candès EJ, Recht B. Exact matrix completion via convex optimization. Foundations of Computational Mathematics. 2009;9:717–772.

- Catoni O. Challenging the empirical mean and empirical variance: a deviation study. Annales de l'Institut Henri Poincaré, Probabilités et Statistiques. 2012;48.

- Chandrasekaran V, Sanghavi S, Parrilo PA, Willsky AS. Rank-sparsity incoherence for matrix decomposition. SIAM Journal on Optimization. 2011;21:572–596.

- Dinov ID, Boscardin JW, Mega MS, Sowell EL, Toga AW. A wavelet-based statistical analysis of fMRI data. Neuroinformatics. 2005;3:319–342. doi: 10.1385/NI:3:4:319.

- Fama EF, French KR. Common risk factors in the returns on stocks and bonds. Journal of Financial Economics. 1993;33:3–56.

- Fan J, Fan Y, Lv J. High dimensional covariance matrix estimation using a factor model. Journal of Econometrics. 2008;147:186–197.

- Fan J, Li Q, Wang Y. Estimation of high dimensional mean regression in the absence of symmetry and light tail assumptions. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2017;79:247–265. doi: 10.1111/rssb.12166.

- Fan J, Liao Y, Mincheva M. High dimensional covariance matrix estimation in approximate factor models. The Annals of Statistics. 2011;39:3320. doi: 10.1214/11-AOS944.

- Fan J, Liao Y, Mincheva M. Large covariance estimation by thresholding principal orthogonal complements. Journal of the Royal Statistical Society: Series B. 2013;75:1–44. doi: 10.1111/rssb.12016.

- Fan J, Liao Y, Shi X. Risks of large portfolios. Journal of Econometrics. 2015a;186:367–387. doi: 10.1016/j.jeconom.2015.02.015.

- Fan J, Liao Y, Wang W. Projected principal component analysis in factor models. The Annals of Statistics. 2016;44:219. doi: 10.1214/15-AOS1364.

- Fan J, Liu H, Wang W. Large covariance estimation through elliptical factor models. arXiv preprint arXiv:1507.08377. 2015b. doi: 10.1214/17-AOS1588.

- Fang KT, Kotz S, Ng KW. Symmetric multivariate and related distributions. Chapman and Hall; 1990.

- Forni M, Hallin M, Lippi M, Reichlin L. The generalized dynamic-factor model: Identification and estimation. Review of Economics and Statistics. 2000;82:540–554.

- Forni M, Lippi M. The generalized dynamic factor model: representation theory. Econometric Theory. 2001;17:1113–1141.

- Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2008;9:432–441. doi: 10.1093/biostatistics/kxm045.

- Grant M, Boyd S, Ye Y. CVX: Matlab software for disciplined convex programming. 2008.

- Han F, Liu H. Scale-invariant sparse PCA on high-dimensional meta-elliptical data. Journal of the American Statistical Association. 2014;109:275–287. doi: 10.1080/01621459.2013.844699.

- Hsu D, Sabato S. Heavy-tailed regression with a generalized median-of-means. Proceedings of the 31st International Conference on Machine Learning (ICML-14). 2014.

- Huber PJ. Robust estimation of a location parameter. The Annals of Mathematical Statistics. 1964;35:73–101.

- Johnstone IM, Lu AY. On consistency and sparsity for principal components analysis in high dimensions. Journal of the American Statistical Association. 2009;104:682–693. doi: 10.1198/jasa.2009.0121. URL http://amstat.tandfonline.com/doi/abs/10.1198/jasa.2009.0121.

- Lam C, Fan J. Sparsistency and rates of convergence in large covariance matrix estimation. The Annals of Statistics. 2009;37:4254. doi: 10.1214/09-AOS720.

- Lepskii O. Asymptotically minimax adaptive estimation. I: Upper bounds. Optimally adaptive estimates. Theory of Probability & Its Applications. 1992;36:682–697.

- Lintner J. The valuation of risk assets and the selection of risky investments in stock portfolios and capital budgets. The Review of Economics and Statistics. 1965:13–37.

- Ma Z. Sparse principal component analysis and iterative thresholding. The Annals of Statistics. 2013;41:772–801.

- Meinshausen N, Bühlmann P. High-dimensional graphs and variable selection with the lasso. The Annals of Statistics. 2006:1436–1462.

- Onatski A. Asymptotics of the principal components estimator of large factor models with weakly influential factors. Journal of Econometrics. 2012;168:244–258.

- Paul D. Asymptotics of sample eigenstructure for a large dimensional spiked covariance model. Statistica Sinica. 2007;17:1617–1642.

- Ravikumar P, Wainwright MJ, Raskutti G, Yu B, et al. High-dimensional covariance estimation by minimizing ℓ1-penalized log-determinant divergence. Electronic Journal of Statistics. 2011;5:935–980.

- Rothman AJ, Levina E, Zhu J. Generalized thresholding of large covariance matrices. Journal of the American Statistical Association. 2009;104:177–186.

- Sharpe WF. Capital asset prices: A theory of market equilibrium under conditions of risk. The Journal of Finance. 1964;19:425–442.

- Stock J, Watson M. Forecasting using principal components from a large number of predictors. Journal of the American Statistical Association. 2002;97:1167–1179.

- Vu VQ, Lei J. Minimax rates of estimation for sparse PCA in high dimensions. arXiv preprint arXiv:1202.0786. 2012.

- Wang W, Fan J. Asymptotics of empirical eigen-structure for high dimensional spiked covariance. The Annals of Statistics (to appear). 2017. doi: 10.1214/16-AOS1487.

- Xu H, Caramanis C, Sanghavi S. Robust PCA via outlier pursuit. Advances in Neural Information Processing Systems. 2010.