Abstract

A central goal of sensory neuroscience is to construct models that can explain neural responses to natural stimuli. As a consequence, sensory models are often tested by comparing neural responses to natural stimuli with model responses to those stimuli. One challenge is that distinct model features are often correlated across natural stimuli, and thus model features can predict neural responses even if they do not in fact drive them. Here, we propose a simple alternative for testing a sensory model: we synthesize a stimulus that yields the same model response as each of a set of natural stimuli, and test whether the natural and “model-matched” stimuli elicit the same neural responses. We used this approach to test whether a common model of auditory cortex—in which spectrogram-like peripheral input is processed by linear spectrotemporal filters—can explain fMRI responses in humans to natural sounds. Prior studies have that shown that this model has good predictive power throughout auditory cortex, but this finding could reflect feature correlations in natural stimuli. We observed that fMRI responses to natural and model-matched stimuli were nearly equivalent in primary auditory cortex (PAC) but that nonprimary regions, including those selective for music or speech, showed highly divergent responses to the two sound sets. This dissociation between primary and nonprimary regions was less clear from model predictions due to the influence of feature correlations across natural stimuli. Our results provide a signature of hierarchical organization in human auditory cortex, and suggest that nonprimary regions compute higher-order stimulus properties that are not well captured by traditional models. Our methodology enables stronger tests of sensory models and could be broadly applied in other domains.

Author summary

Modeling neural responses to natural stimuli is a core goal of sensory neuroscience. A standard way to test sensory models is to predict responses to natural stimuli. One challenge with this approach is that different features are often correlated across natural stimuli, making their contributions hard to tease apart. We propose an alternative in which we compare neural responses to a natural stimulus and a “model-matched” synthetic stimulus designed to yield the same responses as the natural stimulus. We tested whether a standard model of auditory cortex can explain human cortical responses measured with fMRI. Model-matched and natural stimuli produced nearly equivalent responses in primary auditory cortex, but highly divergent responses in nonprimary regions, including those selective for music or speech. This dissociation was not evident using model predictions because of the influence of feature correlations in natural stimuli. Our results provide a novel signature of hierarchical organization in human auditory cortex, and suggest that nonprimary regions compute higher-order stimulus properties that are not captured by traditional models. The model-matching methodology could be broadly applied in other domains.

Introduction

One definition of understanding a neural system is to be able to build a model that can predict its responses. Responses to natural stimuli are of particular interest, both because natural stimuli are complex and varied and thus provide a strong test of a model, and because sensory systems are presumably adapted to represent features present in natural stimuli [1–3]. The evaluation of models by their ability to predict responses to natural stimuli is now widespread in sensory neuroscience [4–16].

A challenge for this approach is that because natural stimuli are richly structured, the features of a set of natural stimuli in one model (or model stage) are often correlated with the features in other models (or model stages) [17,18]. Model features can thus in principle predict neural responses to a natural stimulus set, even if the neural responses are in fact driven by other features not captured by the model. Related issues have been widely discussed in the receptive field estimation literature [4,19] but have been less noted in cognitive neuroscience [17,18].

A canonical example of this phenomenon occurs in the auditory domain, where there is still considerable uncertainty regarding computational descriptions of cortical processing. Consider a common model of auditory processing, in which a sound waveform is processed by two stages of filters intended to mimic cochlear and cortical filtering, respectively [20] (Fig 1A). The filters in the second model stage are tuned to temporal and spectral modulations in the spectrogram-like representation produced by the cochlea. Such filters and variants thereof are commonly used to account for human perceptual abilities [21–25] and to explain neural responses throughout the auditory pathway [2,7,11,12,26–36]. But in natural stimuli, the responses of these second-stage filters are often correlated with other sound properties, such as semantic categories (Fig 1B) [37], which can confound the interpretation of neural responses. Speech, for instance, has a distinctive temporal modulation rate that corresponds loosely to the rate of syllabic patterning [38], music has distinctive temporal modulations reflective of its beat structure [39], and both speech and music have characteristic spectral modulations due to harmonic frequency structure [20]. However, speech, music, and other natural sounds also have many unique properties that are not captured by spectrotemporal modulation alone [40]. Thus, if a neuron responds more to speech than to other sounds, modulation filters may be able to predict the neuron’s response, even if the response is driven by another property of speech that is not captured by such filters. This is what we term a “stimulus-driven response correlation,” created when different stimulus properties (e.g., spectrotemporal modulations and semantic categories) are correlated within a particular stimulus set, making their contribution to the neural response difficult to tease apart.

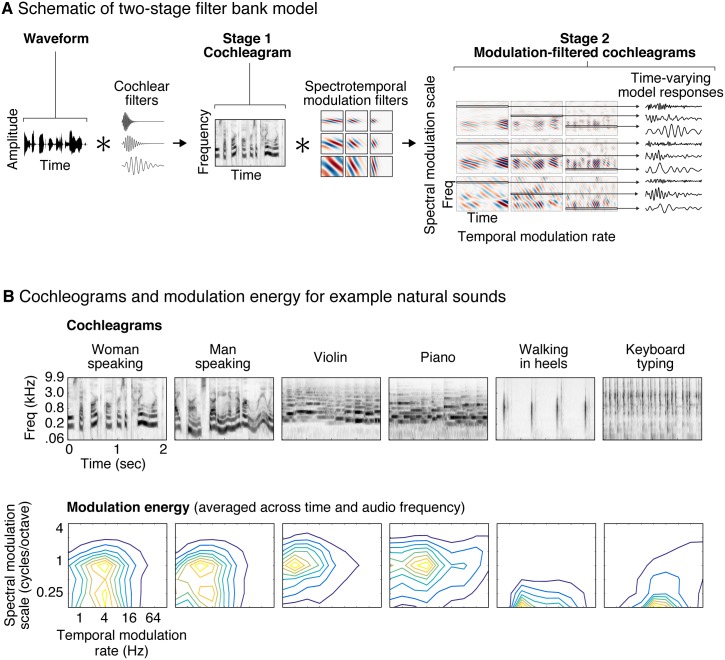

Fig 1. Illustration of the auditory model tested in this study.

(A) The model consists of two cascaded stages of filtering. In the first stage, a cochleagram is computed by convolving each sound with audio filters tuned to different frequencies, extracting the temporal envelope of the resulting filter responses, and applying a compressive nonlinearity to simulate the effect of cochlear amplification (for simplicity, envelope extraction and compression are not illustrated in the figure). The result is a spectrogram-like structure that represents sound energy as a function of time and frequency. In the second stage, the cochleagram is convolved in time and frequency with filters that are tuned to different rates of temporal and spectral modulation. The output of the second stage can be conceptualized as a set of filtered cochleagrams, each highlighting modulations at a particular temporal rate and spectral scale. Each frequency channel of these filtered cochleagrams represents the time-varying output of a single model feature that is tuned to audio frequency, temporal modulation rate, and spectral modulation scale. (B) Cochleagrams and modulation spectra are shown for six example natural sounds. Modulation spectra plot the energy (variance) of the second-stage filter responses as a function of temporal modulation rate and spectral modulation scale, averaged across time and audio frequency. Different classes of sounds have characteristic modulation spectra.

Here, we propose a complementary method for evaluating models that circumvents the challenge of stimulus-driven response correlations. The idea is simple: we synthesize a stimulus that yields the same response in a model as a natural stimulus, and then test whether the “model-matched” stimulus elicits the same neural response as the natural stimulus. The synthesized sounds are not influenced by the correlations between different feature sets that may exist in natural stimuli because they are constrained only by the features in the model. As a result, they generally differ in other properties that could potentially be important to the neural response, and often sound markedly different from their natural counterparts. Comparing responses to natural and model-matched sounds thus provides a strong test of the model’s explanatory power.

We demonstrate the method by using it to evaluate whether a common filter bank model of auditory cortex can explain human cortical responses to natural sounds measured with fMRI. Many prior fMRI studies of auditory cortex have identified aspects of cortical tuning that are unique to nonprimary regions [16,17,41], such as selectivity for voice [42], speech [43,44], and music [45–47]. At the same time, other studies have demonstrated that the standard filter bank model has relatively good predictive accuracy throughout primary and nonprimary regions [7,12,16], raising the possibility that primary and nonprimary regions encode sound using similar representations. Alternatively, such predictions could in part reflect stimulus-driven correlations. Here, we addressed this question by comparing cortical fMRI responses to natural and model-matched stimuli.

The model-matched stimuli were synthesized to yield the same response as a natural sound in one of several models of varying complexity, ranging from a model of just the cochlea’s response to the two-stage spectrotemporal filter bank model shown in Fig 1A [20]. Our results show that tuning for temporal and spectral modulations explains much of the voxel response to natural sounds in human primary auditory cortex (PAC) but much less of the response in nonprimary areas. This functional difference between primary and nonprimary regions was much less evident using conventional model predictions because of the effect of stimulus-driven response correlations. Our findings provide novel evidence for functional differentiation between primary and nonprimary auditory cortex, and suggest that nonprimary regions build higher-order representations that cannot be explained by standard models. Our methodology could provide stronger tests of neural models in any system for which models are used to predict neural responses.

Results

Overview of model-matching method and underlying assumptions

The goal of this paper was to test whether conventional auditory models can explain voxel responses in auditory cortex to natural sounds. The models we consider are described by a set of model features (mk(t)), each of which has a time-varying response to sound determined by the feature’s filter (Fig 2A). In general, the response of these features will differ across natural sounds, both in their temporal pattern and their time-averaged properties (S1A Fig). The BOLD signal reflects a time-averaged measure of neural activity, and thus we expect that if a model provides a good description of the underlying neural responses, any two sounds with the same time-averaged model responses should yield the same fMRI response, even if the temporal pattern of the response is different. To test this prediction, we iteratively modified a noise stimulus (that was initially unstructured) so as to match the time-averaged model responses (S1B Fig), similar to methods for texture synthesis [40,48–50]. Because the temporal patterns of the model responses are unconstrained, the model-matched sounds differ from the natural sounds to which they were matched.

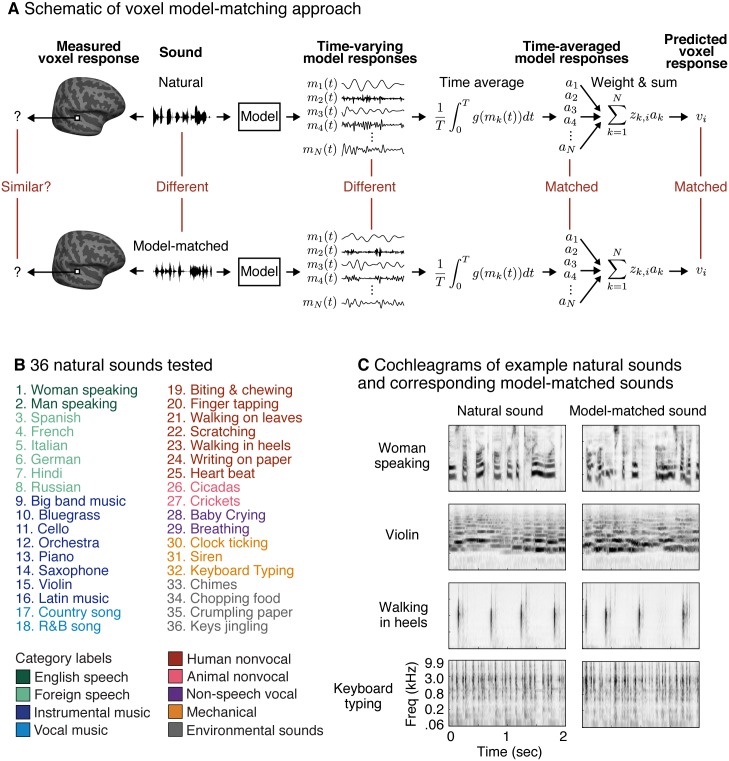

Fig 2. Model-matching methodology and experimental stimuli.

(A) The logic of the model-matching procedure, as applied to fMRI. The models we consider are defined by the time-varying response of a set of model features (mk(t)) to a sound (as in the auditory model shown in Fig 1A). Because fMRI is thought to pool activity across neurons and time, we modeled fMRI voxel responses as weighted sums of time-averaged model responses (Eqs 1 and 2, with ak corresponding to the time-averaged model responses and zk,i to the weight of model feature k in voxel i). Model-matched sounds were designed to produce the same time-averaged response for all of the features in the model (all ak matched) and thus to yield the same voxel response (for voxels containing neurons that can be approximated by the model features), regardless of how these time-averaged activities are weighted. The temporal response pattern of the model features was otherwise unconstrained. As a consequence, the model-matched sounds were distinct from the natural sounds to which they were matched. (B) Stimuli were derived from a set of 36 natural sounds. The sounds were selected to produce high response variance in auditory cortical voxels, based on the results of a prior study [45]. Font color denotes membership in one of nine semantic categories (as determined by human listeners [45]). (C) Cochleagrams are shown for four natural and model-matched sounds constrained by the spectrotemporal modulation model shown in Fig 1A.

Formally, we assume that the response of a voxel to a sound can be approximated as the weighted sum of time-averaged neuronal firing rates. Here, we assume the voxel response to be a single number because the sounds we present are short relative to the timescale of the BOLD response. Our goal is to test whether these model feature responses approximate neuronal responses within a voxel, in which case we should be able to approximate the voxel’s response (vi) as a weighted sum of time-averaged model responses (ak) (Fig 2A):

| (1) |

| (2) |

where g is an (unknown) point-wise function that maps the model responses to a neuronal firing rate (e.g., a rectifying nonlinearity), zk,i is the weight of model feature k in voxel i, and T is the duration of the response to a sound. The most common approach for testing Eqs 1 and 2 is to estimate the weights (zk,i) that best predict a given voxel’s response to natural sounds (for a particular choice of g) and to assess the cross-validated prediction accuracy of the model using these weights (via explained variance). Here, we instead test the above equations by synthesizing a “model-matched” sound that should yield the same voxel response as a natural sound for all voxels that are accurately described by the model (Fig 2A). We then test the model’s validity by assessing whether the voxel responses to the two sounds are similar.

In principle, one could synthesize a separate model-matched sound for each voxel after learning the weights (zk,i). However, this approach is impractical given the many thousands of voxels in auditory cortex. Instead, we matched the time-averaged response of all features in the model (i.e., all ak in Eq 2 are matched; see Fig 2A), which guarantees that all voxel responses that can be explained by the model should be matched, regardless of that voxel’s weights. We accomplished this objective by matching the histogram of each feature’s response (S1 Fig; see “Model-matching synthesis algorithm” in Materials and methods) [48]. Histogram matching implicitly equates the time-averaged response of the model features for any point-wise transformation (g) since, for any such transformation, the time-averaged response can be approximated via its histogram. It thus obviates the need to choose a particular nonlinearity.

Whether or not a voxel responds similarly to natural and model-matched sounds depends on the response properties of the model features and underlying neurons. If the model features are good approximations to the neurons in a voxel, then the voxel response to natural and model-matched sounds should be similar; if not, they could differ. Here, we consider model features that are tuned to different patterns of temporal and/or spectral modulation [20] in a “cochleagram” (Fig 1A) produced by passing a sound through filters designed to mimic cochlear tuning. Each model feature is associated with a time-frequency filter tuned to a particular temporal rate and/or scale, as well as to a particular audio frequency. The response of each model feature is computed by convolving the spectrotemporal filter with the cochleagram.

Although the response time courses of the models considered here are sufficient to reconstruct the stimulus with high accuracy, the time-averaged properties of the filters, as captured by a histogram, are not. As a consequence, the model-matched sounds differed from the natural sounds they were matched to. Indeed, many of the model-matched stimuli sound unnatural (see http://mcdermottlab.mit.edu/svnh/model-matching/Stimuli_from_Model-Matching_Experiment.html for examples). This observation demonstrates that the time-averaged properties of the model’s features, which approximately capture the modulation spectrum (Fig 2A), fail to capture many perceptually salient properties of natural stimuli (e.g., the presence of phonemic structure in speech or melodic contours in music). This additional structure is conveyed by temporal patterns in the feature responses, which are not made explicit by the model but which might be extracted by additional layers of processing not present in modulation filter models. If the neurons in a voxel respond to such higher-order properties (e.g., the presence of a phoneme or melodic contour), we might expect their time-averaged response to differ between natural and model-matched sounds. Thus, by measuring the similarity of voxel responses to natural and model-matched sounds, we can test whether the features of the filter bank model are sufficient to explain their response, or whether other features are needed.

Comparing fMRI responses to natural and model-matched sounds

We measured fMRI responses to a diverse set of 36 natural sounds and their corresponding model-matched sounds (Fig 2B). Each sound was originally 10 seconds in duration, but the sounds were broken up into successively presented 2-second excerpts to accommodate the fMRI scanning procedure (S2 Fig; see “Stimulus presentation and scanning procedure” in Materials and methods). The model-matched sounds were constrained by all of the features from the two-stage filter bank model shown in Fig 1A (see below for results from sounds constrained by simpler models). We first plot the response of two example voxels from a single subject (Fig 3A), which illustrate some of the dominant trends in the data. One voxel was located in the low-frequency area of the “high-low-high” tonotopic gradient thought to span PAC, and which is organized in a roughly V-shaped pattern [51–55]. Another voxel was located outside of tonotopically defined PAC. We note that how best to define PAC is a matter of active debate [54,56–59], and thus we have quantified our results using both tonotopic and anatomical definitions of PAC (described below).

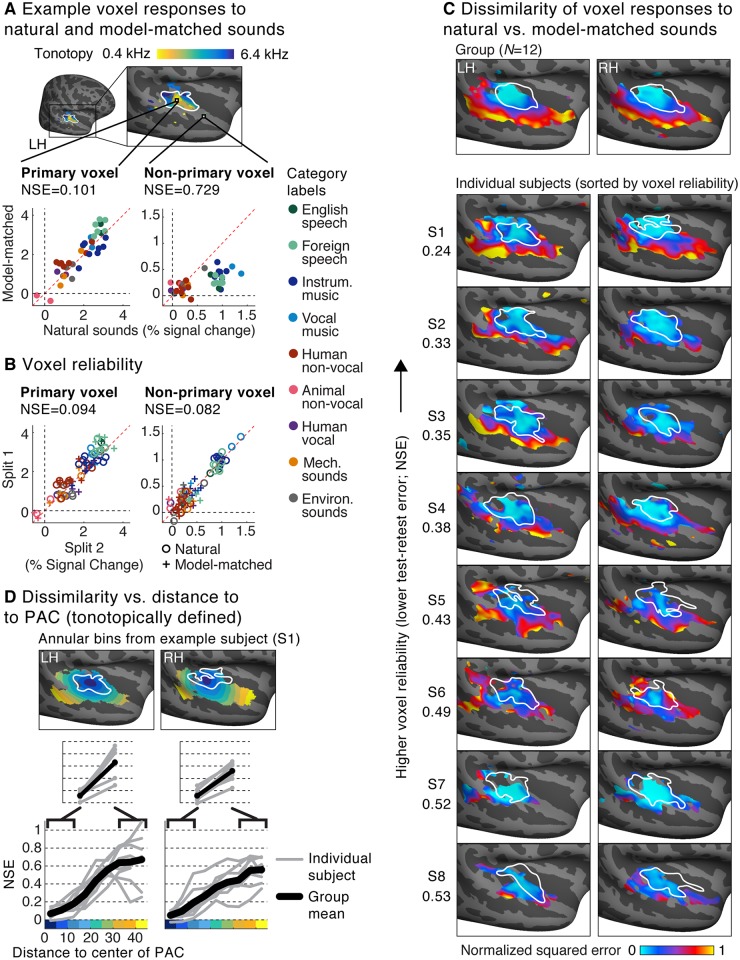

Fig 3. Voxel responses to natural and model-matched sounds.

(A) Responses to natural and model-matched sounds from two example voxels from a single subject. One voxel is drawn from the low-frequency region of PAC (defined tonotopically) and one from outside of PAC. A tonotopic map measured in the same subject is shown for anatomical comparison; the map plots the pure tone frequency that produced the highest voxel response. Each dot represents the response to a single pair of natural and model-matched sounds. The primary voxel responded similarly to natural and model-matched sounds, while the nonprimary voxel exhibited a weaker response to model-matched sounds. We quantified the dissimilarity of voxel responses to natural and model-matched sounds using a normalized squared error metric (NSE) metric (see text for details). (B) Split-half reliability of the responses to natural (circles) and model-matched sounds (crosses) for the two voxels shown in panel A. Both primary and nonprimary voxels exhibited a reliable response (and thus a low NSE between the two measurements). (C) Maps plotting the NSE between each voxel’s response to natural and model-matched sounds, corrected for noise in fMRI measurements (see S4 Fig for uncorrected maps). Maps are shown both for voxel responses from eight individual subjects (who were scanned more than the other subjects) and for group responses averaged across 12 subjects in standardized anatomical coordinates (top). The white outline plots the boundaries of PAC, defined tonotopically. Only voxels with a reliable response were included (see text for details). Subjects are sorted by the median test-retest reliability of their voxel responses in auditory cortex, as measured by the NSE (the number to the left of the maps for each subject). (D) A summary figure plotting the dissimilarity of voxel responses to natural and model-matched sounds as a function of distance to the low-frequency region of PAC (see S5 Fig for an anatomically based analysis). This figure was computed from the individual subject maps shown in panel C. Voxels were binned based on their distance to PAC in 5-mm intervals. The bins for one example subject (S1) are plotted. Each gray line represents a single subject (for each bin, the median NSE value across voxels is plotted), and the black line represents the average across subjects. Primary and nonprimary auditory cortex were defined as the average NSE value across the three bins closest and farthest from PAC (inset). In every subject and hemisphere, we observed larger NSE values in nonprimary regions. Note that the left hemisphere has been flipped in all panels to facilitate comparison between the left and right hemispheres. LH, left hemisphere; PAC, primary auditory cortex; RH, right hemisphere.

As shown in Fig 3A, the response of the primary voxel to natural and model-matched sounds was similar. By contrast, the nonprimary voxel responded notably less to the model-matched sounds. We quantified the dissimilarity of responses to natural and model-matched sounds by computing the squared error between corresponding pairs of natural and model-matched sounds, normalized by the squared error that would be expected if there was no correspondence between the two sound sets (see “Normalized squared error” in Materials and methods). We quantified response differences using the squared error rather than the correlation because model matching makes no prediction for how responses to natural and model-matched sounds should differ if the model is inaccurate, and, in practice, responses to model-matched sounds were often weaker in nonprimary regions, a phenomena that would not have been captured by correlation. At the end of the results, we quantify how natural and model-matched sounds differ by comparing correlation and squared error metrics.

For these example voxels, the normalized squared error (NSE) was higher for the nonprimary voxel (NSE = 0.729) than the primary voxel (NSE = 0.101), reflecting the fact that the nonprimary voxel showed a more dissimilar response to natural and model-matched sounds. Moreover, most of the error between responses to natural and model-matched sounds in the primary voxel could be attributed to noise in the fMRI measurements, because a similar NSE value was observed between two independent measurements of the voxel’s response to natural and model-matched sounds (NSE = 0.094) (Fig 3B). By contrast, in the nonprimary voxel, the test-retest NSE (NSE = 0.082) was much lower than the NSE between responses to natural and model-matched sounds, indicating that the difference in response to natural and model-matched sounds cannot be explained by a lower signal-to-noise ratio (SNR).

We quantified these effects across voxels by plotting the NSE between responses to natural and model-matched sounds for each voxel (Fig 3C). Maps were computed from voxel responses in eight individual subjects who were scanned substantially more than the other subjects (see “Participants” in Materials and methods for details) and from responses that were averaged across all twelve subjects after aligning their brains. Data were collected using two different experiment paradigms that differed in the sounds that were repeated within a scanning session. The results were similar between the two paradigms (S3 Fig), and so we describe them together (see Materials and methods for details; subjects S1, S2, S3, S7, and S8 were scanned in Paradigm I; subjects S4, S5, and S6 were scanned in Paradigm II. Group results are based on data from Paradigm I). In Paradigm I, only responses to natural sounds were repeated, while in Paradigm II, both natural and model-matched sounds were repeated. Only voxels with a reliable response are plotted (test-retest NSE < 0.4; see “Evaluating the noise-corrected NSE with simulated data” in Materials and methods for a justification of this criterion; reliability was calculated using natural sounds for Paradigm I and both natural and model-matched sounds for Paradigm II). Subjects have been ordered by the overall reliability of their data (median test-retest NSE across the superior temporal plane and gyrus, evaluated using natural sounds so that we could apply the same metric to subjects from Paradigms I and II). These maps have been corrected for noise in the fMRI measurements (see “Noise-correcting the NSE” in Materials and methods), but the results were similar without correction (S4 Fig).

Both group and individual subject maps revealed a substantial change across the cortex in the similarity of responses to natural and model-matched sounds. Voxels in PAC showed a similar response to natural and model-matched sounds with noise-corrected NSEs approaching 0, indicating nearly identical responses. Moving away from PAC, NSE values rose substantially, reaching values near 1 in some voxels far from PAC (Fig 3C). This pattern of results suggests that the filter bank model can explain much of the voxel response in primary regions but much less of the response in nonprimary regions, plausibly because nonprimary regions respond to higher-order features not made explicit by the model. This result is suggestive of a hierarchy of feature selectivity in auditory cortex and demonstrates where in the cortex the standard filter bank model fails to explain voxel responses.

We quantified the gradient we observed between primary and nonprimary voxels by binning the NSE of voxels from individual subjects based on their distance to PAC. Similar results were observed for tonotopic (Fig 3D) and anatomical definitions of PAC (S5 Fig; PAC was defined either as the center of the high-low-high gradient or as the center of anatomical region TE1.1 [58], in posteromedial Heschl’s gyrus (HG)). To directly compare primary and nonprimary regions, we then averaged NSE values within the three bins nearest and farthest from PAC (Fig 3D, inset). This analysis revealed that responses to natural and model-matched sounds became more dissimilar in nonprimary regions in both the left and right hemisphere of every subject tested, leading to a highly significant difference between primary and nonprimary regions (p < 0.01 via sign test for both hemispheres and for both tonotopic and anatomical definitions of PAC). The gradient between primary and nonprimary regions was observed in both scanning paradigms, regardless of smoothing (S3 Fig), and could not be explained by selectivity for intelligible speech (a similar pattern was observed when intelligible speech sounds were excluded from the analysis; see S6 Fig). These results also could not be explained by variations in voxel reliability across brain regions, both because our NSE measures were noise-corrected and because voxel responses were similarly reliable throughout primary and nonprimary regions (S4C Fig). As a consequence of the similar reliability across auditory cortex, the increase in the NSE between natural and model-matched sounds between primary and nonprimary regions was significantly greater than the change in voxel reliability. This was true using both corrected and uncorrected values for the natural versus model-matched NSE, both tonotopic and anatomical definitions of PAC, and with reliability measured using just natural sounds (for Paradigm I) and both natural and model-matched sounds (for Paradigm II) (p < 0.01 via sign test in all cases; see S3 Fig for a breakdown by paradigm). Thus, our results demonstrate that the modulation filter bank model is worse at accounting for voxel responses in nonprimary regions.

Comparing responses to sounds matched on subsets of model features

We next used a similar approach to test whether responses in PAC could be explained by simpler models. For example, if neurons in a voxel are tuned primarily to audio frequency, then all sounds with similar spectra should produce similar responses, regardless of their modulation properties. To test such alternative models, we synthesized three new sounds for each natural sound. Each synthetic sound was matched on a different subset of features from the full model (Fig 4A). One sound was synthesized to have the same marginal distribution of cochlear envelopes as a natural sound and, thus, a similar audio spectrum, but its modulation properties were otherwise unconstrained. Another sound was constrained to have the same temporal modulation statistics within each cochlear frequency channel, computed using a bank of modulation filters modulated in time but not frequency. A third sound was synthesized to have matched spectral modulation statistics, computed from a bank of filters modulated in frequency but not time. All of the modulation-matched sounds also had matched cochlear marginal statistics, thus making it possible to test whether adding modulation structure enhanced the similarity of cortical responses to natural and model-matched sounds.

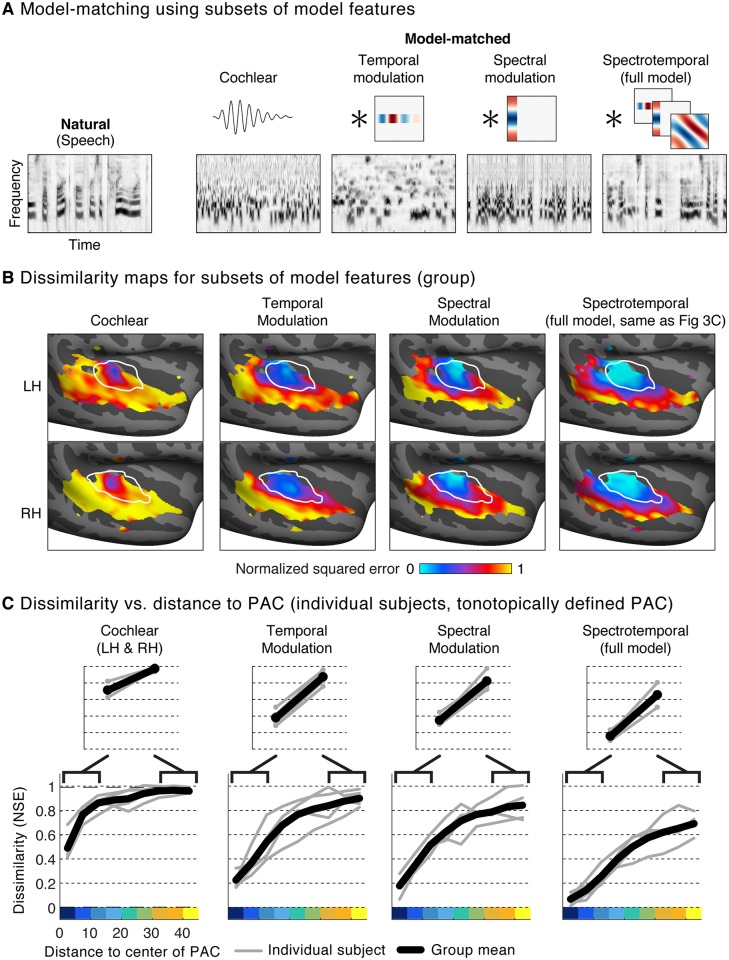

Fig 4. Comparison of responses to model-matched sounds constrained by different models.

(A) Cochleagrams for an example natural sound and several corresponding model-matched sounds constrained by subsets of features from the full two-stage model. Cochlear-matched sounds were constrained by time-averaged statistics of the cochleagram representation but not by any responses from the second-stage filters. As a consequence, they had a similar spectrum and overall depth of modulation as the corresponding natural sound, but were otherwise unconstrained. The other three sounds were additionally constrained by the response of second-stage filters, tuned either to temporal modulation, spectral modulation, or both temporal and spectral modulation (the full model used in Fig 3). Temporal modulation filters were convolved separately in time with each cochlear frequency channel. Spectral modulation filters were convolved in frequency with each time slice of the cochleagram. In this example, the absence of spectral modulation filters causes the frequency channels to become less correlated, while the absence of temporal modulation filters results in a signal with more rapid temporal variations than that present in natural speech. (B) Maps of the NSE between responses to natural and model-matched sounds, constrained by each of the four models. The format is the same as panel 3C. See S7 Fig for maps from individual subjects. (C) Dissimilarity between responses to natural and model-matched sounds versus distance to the low-frequency area of PAC. Format is the same as panel 3D. Results are based on data from the four subjects that participated in Paradigm I, because model-matched sounds constrained by subsets of features were not tested in Paradigm II. LH, left hemisphere; NSE, normalized squared error; PAC, primary auditory cortex; RH, right hemisphere.

The results of this analysis suggest that all of the model features are necessary to account for voxel responses to natural sounds in PAC (Fig 4B and 4C; S7 Fig). Responses to model-matched sounds constrained just by cochlear statistics differed substantially from responses to natural sounds even in PAC, leading to significantly larger NSE values than those observed for the full model (p < 0.001 in PAC via bootstrapping across subjects; see “Statistics” in Materials and methods). Thus, even though PAC exhibits selectivity for frequency due to tonotopy, this selectivity only accounts for a small fraction of its response to natural sounds. Responses to natural and model-matched sounds in PAC became more similar when the sounds were constrained by either temporal or spectral modulation properties alone (NSE temporal < NSE cochlear: p < 0.001 via bootstrapping; NSE spectral < NSE cochlear: p < 0.001). However, we only observed NSE values near 0 when sounds were matched in both their temporal and spectral modulation properties (NSE full model < NSE temporal: p < 0.001; NSE full model < NSE spectral: p < 0.001). These results provide further support for the idea that selectivity for both temporal and spectral modulation is a prominent feature of cortical tuning in PAC [7,32,33]. In nonprimary auditory cortex, we also observed more similar responses when matching sounds on spectrotemporal modulation compared with simpler models (NSE spectrotemporal < NSE cochlear: p < 0.001; NSE spectrotemporal < NSE temporal: p < 0.05; NSE spectrotemporal < NSE spectral: p < 0.01). However, the noise-corrected NSE values were high for all of the models tested, indicating that the modulation model fails to account for a substantial fraction of nonprimary responses.

Predicting responses to natural sounds from model features

Part of the motivation for using model-matched stimuli comes from the more common approach of predicting responses to natural stimuli from the features of a model (e.g., via linear regression). As discussed above, good predictive accuracy is not sufficient to guarantee that the features of a model drive a neural response, due to the potential for correlations between different feature sets across natural stimuli. Model matching provides one way to circumvent this issue, because the synthesized sounds are only constrained by the statistics of the particular model being tested. Here, we test whether our approach yields novel insights compared with simply predicting cortical responses to natural sounds from model features.

We attempted to predict responses to the 36 natural sounds from time-averaged statistics of the same model features used to generate the model-matched sounds (Fig 5A; see S8 Fig for individual subject prediction error maps for the full spectrotemporal model). Specifically, we used ridge regression to predict voxel responses from the amplitude of each model feature’s response to each natural sound [7,16], measured as the standard deviation across time (for the cochlear model, we used the mean rather than the standard deviation because the features were the result of an envelope extraction operation, and the mean thus conveyed the amplitude of the filter’s response). Because histogram matching approximately matches all time-averaged statistics of a distribution, predictions based on a single time-averaged statistic, such as the standard deviation, provide a conservative estimate of the predictive power of time-averaged statistics. Good predictions in voxels whose responses to model-matched sounds deviated from those to natural sounds would thus suggest that prediction-based analyses overestimate the model’s explanatory power. We quantified prediction accuracy by measuring the NSE between measured and predicted responses for left-out sounds that were not used to learn the regression weights (see “Model predictions” in Materials and methods).

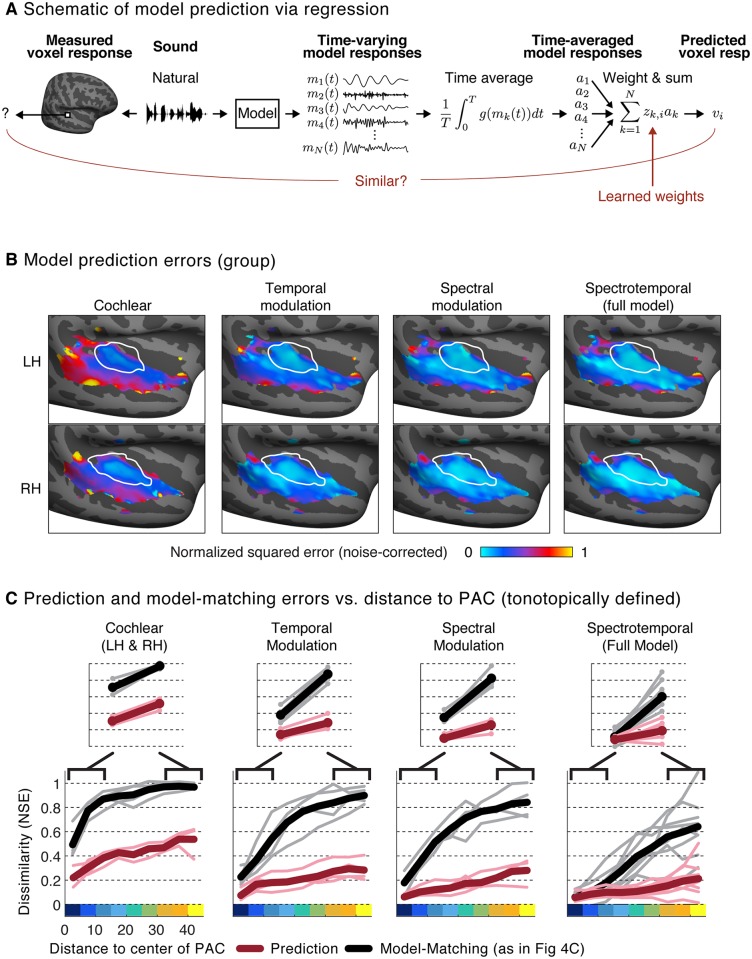

Fig 5. Predicted responses to natural sounds via regression using the same auditory model used to constrain the model-matched sounds.

(A) Schematic of regression procedure used to predict neural responses from model features. For each natural sound, we computed the response time course for each feature in the model, as was done for model matching. We then computed a time-averaged measure of each feature’s activity (the mean across time for the cochlear features, because they are the result of an envelope operation, and the standard deviation for the modulation features, because they are raw filter outputs) and estimated the weighted combination of these time-averaged statistics that yielded the best-predicted response (using ridge regression, cross-validated across sounds). (B) Maps showing the prediction error (using the same NSE metric employed in Figs 3 and 4) between measured and predicted responses to natural sounds for the corresponding models shown in Fig 4 (see S8 Fig for maps from individual subjects). (C) Prediction error versus distance to the low-frequency area of PAC (maroon lines: thin lines correspond to individual subjects, thick lines correspond to the group average). For comparison, the corresponding NSE values derived from the model-matching procedure are replotted from Fig 4C (black lines). The analyses are based on individual subject maps. Results for the full model (rightmost plot) are based on data from the same eight subjects shown in Fig 3C. Results for model subsets (cochlear, temporal modulation, and spectral modulation) are based on data from four subjects that were scanned in Paradigm I (sounds constrained by subsets of model features were not tested in Paradigm II). LH, left hemisphere; NSE, normalized squared error; PAC, primary auditory cortex; RH, right hemisphere.

Overall, we found that voxel responses to natural sounds were substantially more similar to the predicted model responses than to the measured responses to the model-matched stimuli (Fig 5B and 5C), leading to smaller NSEs for model predictions compared with model-matched stimulus responses. This difference was particularly pronounced in nonprimary regions, where we observed relatively good predictions from the full two-stage model despite highly divergent responses to model-matched sounds, leading to a significant interaction between the type of model evaluation (model prediction versus model matching) and region (primary versus nonprimary) (p < 0.01 via sign test for both tonotopic and anatomical definitions of PAC; a sign test was used to evaluate whether the change in NSE values between primary and non-primary regions was consistently larger for model matching compared with model prediction). Because the natural and model-matched sounds were matched in the features used for prediction, the divergent responses to the two sound sets imply that the features used for prediction do not in fact drive the response. Thus, good predictions for natural sounds in the presence of divergent model-matched responses must reflect the indirect influence of correlations between the features of the model and the features that actually drive the neuronal response. Model matching thus reveals a novel aspect of functional organization not clearly evident from model predictions by demonstrating the failure of the filter bank model to account for nonprimary responses.

Our prediction analyses were based on responses to a set of 36 natural sounds that was smaller than the sound sets that have been used elsewhere to evaluate model predictions [7,16,45,60]. Because our analyses were cross-validated, small sound sets should reduce prediction accuracy and thus cannot explain our finding that model predictions were better than would be expected given responses to model-matched sounds. Nonetheless, we assessed the robustness of our findings by also predicting responses to a larger set of 165 natural sounds [45]. We observed similar results with this larger sound set, with relatively good prediction accuracy for the full spectrotemporal model throughout primary and nonprimary auditory cortex (S9 Fig).

Another way to assess the utility of the model-matching approach is to train a model to predict natural sounds, and then test its predictive accuracy on model-matched sounds (and vice versa). In practice, this approach yielded similar results to directly comparing responses to natural and model-matched sounds: good cross-predictions in PAC but poor cross-predictions in nonprimary auditory cortex (S10 Fig). This observation is expected given that (a) the model predictions for natural sounds were good throughout auditory cortex and (b) responses to natural and model-matched sounds diverged in nonprimary regions, but it provides a consistency check of the two types of analyses.

Voxel decomposition of responses to natural and model-matched sounds

All of our analyses described thus far were performed on individual voxels, summarized with maps plotting the NSE between each voxel’s response to natural and model-matched sounds. However, these error maps do not reveal in what respect the responses to natural and model-matched sounds differ, and, because of the large number of voxels, it is not feasible to simply plot all of their responses. We previously found that voxel responses to natural sounds can be approximated as a weighted sum of a small number of canonical response patterns (components) [45] (Fig 6A). Specifically, six components explained over 80% of the noise-corrected response variance to a diverse set of 165 natural sounds across thousands of voxels. We thus used these six components to summarize the responses to natural and model-matched sounds described here. This analysis was possible because many of the subjects from this experiment also participated in our prior study. As a consequence, we were able to learn a set of voxel weights that reconstructed the component response patterns from our prior study and then apply these same weights to the voxel responses from this experiment (see “Voxel decomposition” in Materials and methods).

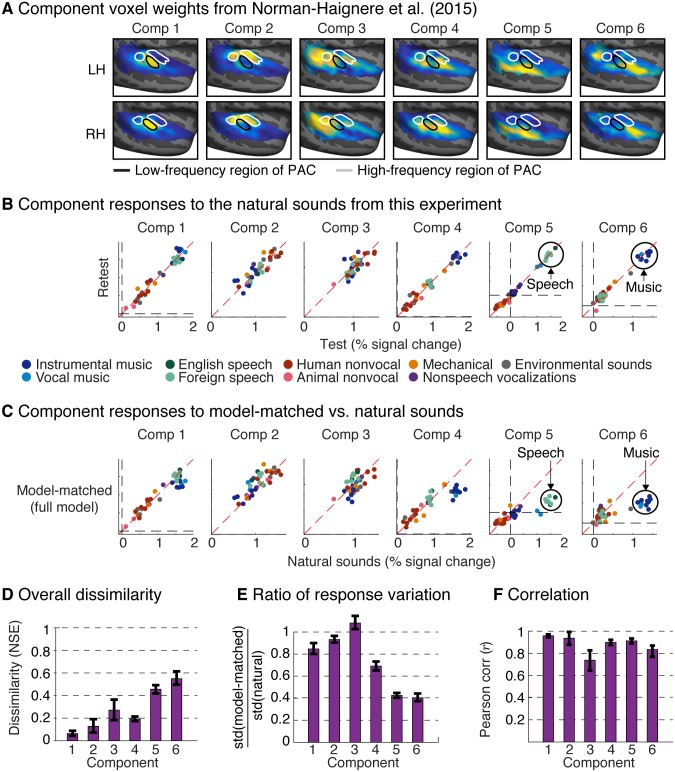

Fig 6. Voxel decomposition of responses to natural and model-matched sounds.

Previously, we found that much of the voxel response variance to natural sounds can be approximated as a weighted sum of six canonical response patterns (“components”) [45]. This figure shows the response of these components to the natural and model-matched sounds from this experiment. (A) The group component weights from Norman-Haignere and colleagues (2015) [45] are replotted to show where in auditory cortex each component explains the neural response. (B) Test-retest reliability of component responses to the natural sounds from this study. Each data point represents responses to a single sound, with color denoting its semantic category. Components 5 and 6 showed selectivity for speech and music, respectively, as expected (Component 4 also responded most to music because of its selectivity for sounds with pitch). (C) Component responses to natural and model-matched sounds constrained by the complete spectrotemporal model (see S11 Fig for results using subsets of model features). The speech and music-selective components show a weak response to model-matched sounds, even for sounds constrained by the full model. (D) NSE between responses to natural and model-matched sounds for each component. (E) The ratio of the standard deviation of each component’s responses to model-matched and natural sounds (see S12A Fig for corresponding whole-brain maps). (F) Pearson correlation of responses to natural and model-matched sounds (see S12B Fig for corresponding whole-brain maps). All of the metrics in panels D—F are noise-corrected, although the effect of this correction is modest because the component responses are reliable (as is evident in panel B). Error bars correspond to one standard error computed via bootstrapping across subjects. LH, left hemisphere; NSE, normalized squared error; PAC, primary auditory cortex; RH, right hemisphere.

We found that all six components exhibited reliable responses to the natural sounds from this experiment (Fig 6B). Two of the components (5 and 6) responded selectively to speech and music, respectively, replicating the selectivity we found previously (last two columns of 6B). Critically, responses to the model-matched sounds were much weaker in these speech- and music-selective components, even for sounds matched on the full model (Fig 6C, last two columns; see S11 Fig for sounds matched on subsets of model features), leading to high NSE values (speech NSE = 0.45; music NSE = 0.55 for the full model, noise-corrected; Fig 6D). By contrast, the other four components, all of which overlapped PAC to varying extents, responded similarly to natural and model-matched sounds constrained by the full model, leading to smaller errors (NSE for Component 1: 0.06, Component 2: 0.12, Component 3: 0.26, Component 4: 0.19) than those for the speech- and music-selective components (p < 0.001 for all direct comparisons between the speech- and music-selective components and Components 1, 2, and 4; for Component 3, which had the lowest test-retest reliability, the direct comparison with the music-selective component was significant, p < 0.01, and the direct comparison with the speech-selective component was nearly significant, p = 0.076; statistics computed via bootstrapping across subjects). These results indicate that selectivity for music and speech cannot be purely explained by standard acoustic features that nonetheless account for much of the voxel response in primary regions.

Our model-matching approach posits that responses should be exactly matched if the model is accurate. If the model is not accurate, the approach makes no prediction about how the responses should differ. Nonetheless, the divergent responses to natural and model-matched sounds in Components 5 and 6 appeared to be largely driven by weaker responses to the model-matched sounds. We verified this observation by comparing the standard deviation of responses to natural and model-matched sounds: the response variation for model-matched sounds decreased sharply in Components 5 and 6, driven by lower overall responses to the model-matched sounds (Fig 6E). In contrast, the noise-corrected correlation remained high (Fig 6F). A similar pattern was also evident in whole-brain maps (S12 Fig): the variation in voxel responses to model-matched sounds constrained by the full model dropped in nonprimary regions (driven by lower responses to the model-matched stimuli), while the correlation remained high. For Components 5 and 6, the high correlations were driven by the fact that model-matched sounds from the component’s preferred category produced a higher response than model-matched sounds from other categories (as is evident in Fig 6C). For example, in Component 6, model-matched music produced a lower response than natural music but a higher response than model-matched sounds from other categories (p < 0.001, via bootstrapping). The same pattern was evident for Component 6, which responded selectively to speech (p < 0.001). This finding suggests that selectivity in nonprimary regions may reflect a mixture of category-specific modulation tuning and responses to higher-order properties specific to music and speech, consistent with prior studies [9,53,61]. The results suggest that the modulation-specific structure driving Components 5 and 6 is correlated across natural sounds with the other properties of music and speech that drive their response. The model-matching approach allows us to see these two contributions to the response, revealing that there is something unique to the response of Components 5 and 6 that is distinct from the other components.

Discussion

We have described a novel approach for evaluating a model of neuronal responses. Given a model, we synthesize a stimulus that yields the same model response as a natural stimulus, and test whether they produce similar neural responses. We applied this approach to test whether voxel responses in human auditory cortex can be explained by a commonly used auditory model based on spectrotemporal modulation. Our results revealed a substantial functional difference between primary and nonprimary regions of human auditory cortex. Many voxels in PAC showed nearly equivalent responses to natural and model-matched sounds constrained by the full spectrotemporal model. We also found that these voxels responded differently when sounds were model matched with only cochlear filter statistics, or with temporal or spectral modulations alone. These findings together suggest that spectrotemporal modulation accounts for much of the voxel response in PAC. By contrast, many voxels in nonprimary regions responded weakly to all of the model-matched sounds, demonstrating that they are only weakly driven by the features captured by the model. This functional difference between primary and nonprimary regions was not clearly evident when the model was evaluated by its response predictions, due to the confounding influences of stimulus-driven correlations across natural stimuli. Model matching thus reveals a novel aspect of functional organization by showing where in the cortex a standard auditory model can explain voxel responses to natural sounds.

Implications for models of auditory cortex

The notion that auditory cortex might be organized hierarchically—i.e., into a series of stages supporting increasingly abstract representations—has been a popular proposal for decades [41,62–64]. Hierarchical organization has some support from anatomical studies [65], and from qualitative observations that responses in nonprimary regions are more complex than those in primary regions [66,67] and more closely aligned with semantically meaningful sound properties [17,45–47,68]. However, there has been little evidence for how primary and nonprimary regions might differ in computational terms [16], and thus it has been unclear what mechanisms underlie the apparent differences in tuning between primary and nonprimary regions.

Most computational models of auditory processing beyond the periphery are based on tuning for modulation [20]. Such models have been used to explain responses throughout the auditory pathway in non-human animals [2,11,26–31,34]. In humans, modulation-based models have been shown to have relatively good predictive accuracy throughout both primary and nonprimary regions [7,12,45], which has led to the hypothesis that sounds are represented in a distributed manner [69]. This view contrasts with the notion of hierarchical organization and, in its most extreme form, suggests that responses to seemingly complex attributes of sound in nonprimary regions (e.g., speech and music selectivity) could reflect the same types of mechanisms used to code sound in PAC.

Our study helps to reconcile the literatures on modulation-based auditory models and hierarchical organization. First, we show that modulation selectivity fails to explain much of the response in nonprimary regions, and that model predictions provide overly optimistic estimates of the model’s efficacy. This conclusion follows from the fact that we observed many voxels in nonprimary regions whose response to natural sounds was well predicted by the model and yet produced divergent responses to model-matched sounds. Because the model by definition predicts that natural and model-matched sounds should have equivalent responses, this finding demonstrates a clear model failure.

Conversely, our findings provide further evidence that modulation selectivity is a key feature of functional organization in human PAC [7,32,33,35]. Using both predictions and model matching, we found that the modulation model explains the large majority of the voxel responses in this region. This finding was again not obvious from prior studies using model prediction alone, because the predictions could have been influenced by stimulus-driven correlations, as turned out to be the case in nonprimary regions. By contrast, we found that frequency selectivity, which presumably reflects tonotopy, explained much less response variance in PAC. This finding suggests that modulation selectivity may be a key organizing dimension of PAC.

What features might nonprimary regions of auditory cortex represent? These regions are primarily driven by sound, show signs of having relatively short integration windows [43], and, even when speech selective, respond largely independently of the presence of linguistic structure [43,45], suggesting acoustic rather than linguistic or semantic representations [17]. Moreover, although responses to the model-matched sounds were substantially weaker than responses to natural sounds, the model-matched sounds still drove responses to natural sounds above baseline and were correlated with responses to natural sounds. Thus, one natural hypothesis is that nonprimary regions transform a lower-level acoustic representation, such as the spectrotemporal representation considered here, into a representation that makes behaviorally relevant variables more explicit (e.g., easier to decode). This hypothesis could be tested with hierarchical models that transform the output of modulation filters with additional stages of nonlinear and linear operations [70]. In principle, such models could be fit to existing neural data sets and then evaluated with model-matched stimuli. But because the space of such models is large, some additional constraint is likely to be needed to select models for experimental tests. Such constraints could come from natural sounds and tasks, for example by optimizing for efficient encoding of natural sounds or for performance of ecologically relevant tasks [71–76].

We have recently explored this idea by training a deep neural network to recognize words and musical genres [16] and then comparing the resulting representations with voxel responses. We found that later layers of the network better predicted voxels in nonprimary regions of the cortex, consistent with the notion of hierarchical organization. These predictions could of course be influenced by stimulus-driven correlations, which may explain why the differences in prediction accuracy between layers were modest. Future work could address this question and provide stronger tests of such models by applying model matching to the representation from different layers of a hierarchical model.

Implications and limitations of model matching

The result of our model-matching experiment is an error metric between 0 and 1, indicating the dissimilarity of a neural response to natural and model-matched sounds. What does this number tell us about the type of models that could underlie the neural response? When the error metric is near 1, the models under which responses have been matched are ruled out as descriptions of the voxel response. Because the error metric is noise-corrected, its absolute value is meaningful, and large errors invalidate a model. Our specific implementation matched model responses for all point-wise functions of the filters in question, and thus that family of models is ruled out for voxels with large error.

At the other extreme, errors near 0, like those we observed in PAC, reveal that the voxel responses are consistent with the family of models whose response was matched. The matching procedure employed a specific filter bank, but alternative models might also be matched (for instance, those with filters that can be approximated as linear combinations of the filters used for matching). Small error values thus do not exclude models other than the one we used. However, specific alternative models could be evaluated by measuring their response to the two sets of stimuli used in an experiment (natural and model-matched). Models that give distinct responses to the two stimulus sets could be ruled out for voxels whose responses to the two sets are similar. Conversely, one could also rule out models whose responses to the two sets are similar for voxels whose responses to the two sets are different. We used this approach to investigate different types of spectrotemporal filter banks (S15 Fig), finding that a range of alternative filter banks had matched statistics for the natural and model-matched sounds tested here (see Variants of the spectrotemporal filter model in Materials and methods). This finding suggests that a wide range of spectrotemporal filter models can be ruled out as models of nonprimary auditory cortex. Our stimuli and fMRI data are available, so that alternative models can be evaluated using this approach: https://osf.io/73pfv/.

In other situations, matching with one model may entail matching with another, but not vice versa. This was the case for the four models we compared in Fig 4—the full spectrotemporal model is inclusive of the other models. The higher NSE values observed with the other models provides evidence for the necessity of the spectrotemporal model features.

As with any method for model evaluation, the interpretation of our results is constrained by the resolution of the brain measurements used for evaluation. Because fMRI is primarily sensitive to brain responses that are spatially clustered, our results bear most directly on aspects of cortical tuning that are organized at the scale of voxels. Our results were robust to the exact size of the voxels tested and the amount of spatial smoothing, suggesting that our results hold for spatial scales on the order of millimeters to centimeters. But even small voxels pool activity across neurons and across time, and thus it is possible that voxels with similar responses to natural and model-matched sounds might nonetheless contain neurons that show more divergent responses or that have temporal response properties that differ from the model. This fact may partially explain why electrophysiological recordings in animals have found that linear spectrotemporal filters are insufficient to account for responses in PAC [13,77–79]. Future work could apply model matching to neuronal responses measured electrophysiologically to test models at a finer spatial and temporal scale. For example, one could synthesize model-matched sounds that should yield the same firing rate as a natural sound given a model of an individual neuron’s response. At the scale of fMRI voxels, however, linear spectrotemporal filters provide a good description of PAC, potentially because neurons with similar modulation selectivity are spatially clustered.

Because the spatial pooling of fMRI can obscure neural responses that are heterogeneous across nearby neurons, voxel responses to natural and model-matched stimuli could in principle also be more dissimilar than the responses of the underlying neural populations. That is, there could be neural populations that respond similarly to natural and model-matched sounds, but which do not contribute to the voxel NSE because they are not clustered at a coarse enough scale and thus do not differentially drive voxel responses to different sounds within a stimulus set. A high NSE thus demonstrates a model failure (because it implies underlying neurons that respond differently to natural and model-matched sounds), but it does not preclude the possibility that the voxel also contains some neurons that are well described by the model features. We note that these limitations are not specific to the model-matching approach and apply equally to evaluations of models by their predictions of fMRI responses—in both cases, finer-grained brain measurements will enable finer-grained model tests.

Relation to prior work on perceptual metamers and texture synthesis

Our approach to model matching is an extension of methods for texture synthesis originally developed in image processing and computer vision [48,49], and later applied to sound texture [40] and visual texture perception [80,81]. In texture synthesis, the goal is typically to test whether a set of statistical features could underlie perception by testing whether synthetic stimuli with the same statistics are metameric, i.e., whether they look or sound the same as a real-world texture. The implementation of our synthesis procedure is inspired by classic texture synthesis methods [48], but the scientific application differs notably in that we evaluate the model by the similarity of neural responses rather than the similarity that is perceived by a human observer. Indeed, many of the model-matched stimuli sounded unnatural, demonstrating that the modulation spectrum fails to capture higher-order properties of natural sounds to which listeners are sensitive (e.g., the presence of phonemic or melodic structure). This observation reveals the insufficiency of the modulation spectrum as a complete account of perception but does not place strong constraints on whether particular neural stages are well described by the model. The fact that responses to natural and model-matched sounds diverged in nonprimary regions of auditory cortex suggests that those regions may be driven by higher-order structure not made explicit by the modulation model, which we could not have concluded from perceptual observations alone.

The most similar previous approach involved comparing the strength of cortical responses to visual textures synthesized from different classes of statistics of a wavelet filter bank model [81]. Although we also compared cortical responses to sounds synthesized from different model statistics, the key comparison was between responses to individual natural and synthesized sounds, which is critical to identifying regions of the brain that are not well explained by a model.

The modulation filter bank model tested here bears similarities to the texture model of McDermott and Simoncelli [40,82]. The key difference is that dependencies between cochlear frequency channels are captured here by spectral modulation filters rather than the correlations used in the original texture model. In practice, we found that sounds synthesized from the two models were perceptually similar, suggesting that correlations in one stage of representation (the cochlea) can be captured by marginal statistics of a subsequent stage of representation (modulation filters) [40].

Approaches for model testing

Recent years have seen growing interest in the use of computational “encoding models” to test formal theories of sensory processing [5–7,9–14,16–19,71,83]. Because encoding models make quantitative predictions about the neural response, they can be used to test and compare theories of neural coding. The features of the model can then provide insight into the sensory features that are represented in different neural populations [6,7,12,17].

A key challenge of testing encoding models with natural stimuli is that the features of different models are often correlated [17,18], making it difficult to tease apart the unique contribution of any particular model. This problem can be partially overcome by comparing the predictions of two different models but is difficult to eliminate when the features of two models are strongly correlated and when responses can only be measured to a relatively small number of stimuli (as is common with fMRI). Another approach is to alter stimuli so as to decouple different features sets [18,71]. For example, adding varied background noise to natural sounds could help to decouple low- and high-level features of sounds, because noise can alter a sound’s low-level features without affecting its perceived identity. However, such approaches are heuristic and do not guarantee that the relevant features will be decorrelated unless the candidate feature sets can be measured with existing models. Model matching is appealing because it provides a way to test the ability of a single model to explain neural responses by imposing the structure of that model alone, decoupling the model from alternative models without needing to specify the many possible alternatives.

Materials and methods

Ethics statement

The study was approved by MIT’s Committee on the Use of Humans as Experimental Subjects (protocol 1012004218). All subjects gave written informed consent. The experiments adhere to the Declaration of Helsinki.

Participants

The experiment comprised 41 scanning sessions, each approximately 2 hours. Fifteen subjects participated in the experiment (ages 19–36; five male; all right-handed; one subject, S1, was author SNH). Two different experiment paradigms were tested (hereafter referred to as Paradigm I and Paradigm II). We have chosen to describe these two paradigms as a part of the same experiment because the stimuli and analyses were very similar. In Paradigm I, eight subjects completed a single scanning session, three subjects completed five sessions, and one subject completed three sessions (this subject chose not to return for the fourth and fifth sessions). We chose this approach because it allowed us to compute reliable group maps by averaging across the 12 subjects, as well as reliable individual subject maps using a larger amount of data from the subjects with multiple scan sessions. Five subjects were scanned in Paradigm II. One subject completed two sessions, two subjects completed three sessions, and one subject completed four sessions. One subject (S1) was scanned in both paradigms (when possible we used data from Paradigm II for this subject, because there was a higher quantity of data, and the scan sessions for Paradigm II were higher resolution, as noted below).

Because we aimed to characterize auditory cortex of typical listeners without extensive musical experience, we required that subjects not have received formal musical training in the 5 years preceding their participation in the experiment.

Data acquisition parameters and preprocessing

Data for Paradigm I were collected on a 3T Siemens Trio scanner with a 32-channel head coil (at the Athinoula A. Martinos Imaging Center of the McGovern Institute for Brain Research at MIT). The functional volumes were designed to provide good spatial resolution in auditory cortex. Each functional volume (i.e., a single 3D image for one participant) included 15 slices oriented parallel to the superior temporal plane and covering the portion of the temporal lobe superior to and including the superior temporal sulcus (3.4 second TR, 30 millisecond TE, 90-degree flip angle; five discarded initial acquisitions). Each slice was 4 mm thick and had an in-plane resolution of 2.1 × 2.1 mm (96 × 96 matrix, 0.4-mm slice gap). iPAT was used to minimize acquisition time (1 second/volume). T1-weighted anatomical images were also collected for each subject (1 mm isotropic voxels).

Data for Paradigm II were collected more recently using a 3T Prisma scanner (also at the McGovern Institute). We used a multiband acquisition sequence (3× acceleration) to reduce slice thickness, while maintaining coverage (36 slices with 2-mm thickness and no gap) and thus reducing voxel size (2 mm isotropic). iPAT was not used. Other acquisition parameters were similar (3.45 second TR, 1.05-second acquisition time, 34 millisecond TE, 90-degree flip angle; three discarded initial acquisitions).

Functional volumes were preprocessed using FSL software and custom MATLAB scripts. Volumes were motion corrected, slice-time corrected, skull stripped, linearly detrended, and aligned to the anatomical volumes (using FLIRT [84] and BBRegister [85]). Volume data were then resampled to the reconstructed cortical surface, computed by FreeSurfer [86], and smoothed on the surface using a 5-mm FWHM kernel to improve SNR (results were similar without smoothing; S3 Fig). Individual subject data were then aligned on the cortical surface to the FsAverage template brain distributed by Freesurfer.

Stimulus presentation and scanning procedure

Our stimulus set was derived from 36 natural sounds, each 10 seconds in duration (Fig 2B). From each natural sound, we synthesized four model-matched sounds, constrained by different subsets of features from a commonly used spectrotemporal filter bank model [20]. The complete stimulus set thus included five conditions (natural sounds + 4 model-matched versions), each with 36 sounds, yielding a total of 180 stimuli.

Scan acquisitions produce a loud noise due to rapid gradient switching. To prevent these noises from interfering with subjects’ ability to hear the sounds, we used a “sparse” scanning paradigm [87] that alternated between presenting sounds and acquiring scans, similar to those used in our prior experiments [45,88,89] (S2 Fig). This was achieved by dividing each 10-second stimulus into five 2-second segments (windowed with 25-millisecond linear ramps). These five segments were presented sequentially with a single scan acquired after each segment. The five segments for a particular sound were always presented together in a “block” (the order of the segments within a block was random). Each scan acquisition lasted 1 second in Paradigm I and 1.05 seconds in Paradigm II. There was a 200-millisecond buffer of silence before and after each acquisition. The total duration of each five-segment block was 17 seconds in Paradigm I and 17.25 seconds in Paradigm II. We averaged the responses of the second through fifth acquisitions after the onset of each stimulus block. The first acquisition was discarded to account for the hemodynamic delay. Results were similar when we instead averaged just the second and third time point or just the fourth and fifth time point after stimulus onset, indicating that our results were robust to the averaging window applied to the fMRI measurements (S13 Fig). We chose to use signal averaging rather than a GLM with a standard hemodynamic response function (HRF), because we have found this approach leads to slightly more reliable responses, presumably due to inaccuracies in the standard HRF [90].

In Paradigm I, each model-matched stimulus was presented once per 2-hour scanning session, and the natural stimuli were presented twice so that we could measure the reliability of each voxel’s response to natural sounds and noise-correct the NSE metric. Each session was divided into 12 “runs,” after which subjects were given a short break (approximately 30 seconds). Each run included six natural sounds and 12 model-matched sounds (three per condition). In Paradigm II, we presented only the model-matched sounds constrained by the complete model, which allowed us to present both the natural and model-matched sounds several times per scan session. Each run included nine natural and nine model-matched sounds. The entire sound set was presented over four consecutive runs. Subjects completed 12 or 16 runs depending on the time constraints of the scan session. Thus, each subject heard each sound between three and four times per session. In both paradigms, there were periods during which no stimulus was presented and only scanner noise was heard, which provided a baseline with which to compare stimulus-driven responses. There were four such “silence” periods per run (each 17 seconds in Paradigm I and 17.25 seconds in Paradigm II). The ordering of stimuli and silence periods was pseudorandom and was designed such that, on average, each condition occurred with roughly the same frequency at each position in a run, and each condition was preceded equally often by every other condition (as in our prior work [88,89]).

Prior to settling on the procedure for Paradigm I, we conducted a pilot experiment in which six of the twelve participants from Paradigm I completed a single session. These sessions featured stimuli from only three of the model-matched conditions (spectral modulation matched stimuli were omitted). These scan sessions were the first of this study, and we limited the number of conditions to make sure the experiment could fit within the allotted 2-hour scanning slot. The runs for these sessions were slightly shorter because there were only nine model-matched stimuli presented per run (there were only three periods of silence per run for these sessions). When analyzing the results, we included the data from these sessions in order to use the maximum amount of data available for each condition, and thus the results for the spectral modulation matched condition were based on less data than the other model-matched conditions. However, because the NSE metric was corrected for noise (see below), differences in the amounts of data across conditions should not bias the results.

Selection of natural stimuli

We used long sounds (10 seconds) so that we could compute time-averaged statistics for filters with relatively long integration periods (i.e., periods of up to 2 seconds). We selected sounds that were likely to produce high response variance in auditory cortical voxels, guided by the results of a prior paper from our lab that measured fMRI responses in auditory cortex to a large set of natural sounds [45]. In our prior study, we found that much of the voxel response variance could be captured by a weighted sum of six response patterns (“components”), and we thus attempted to select sounds that had high response variance along these components. To accomplish this goal, we created a subset of 60 sounds with high component response variance by iteratively discarding sounds in a greedy manner, each time removing the sound that led to the largest increase in response variance, averaged across the six components. Because we needed stimuli that were relatively long in duration, we could not directly use the stimuli from our prior study, which were only 2 seconds in duration. Instead, we created a new stimulus set with 10-second sounds, each of which had the same label (e.g., “finger tapping”) as one of the sounds from the 60-sound set.

Model representation

We synthesized sounds based on four different model representations. The simplest model was just based on the output of filters designed to mimic cochlear responses (i.e., a cochleagram). The other three models were based on filters tuned to modulations in this cochleagram representation. Two models were tuned to either temporal modulation or spectral modulation alone, and one was jointly tuned to both temporal and spectral modulation. MATLAB code for measuring and synthesizing sounds from the models described in this paper is available here: https://github.com/snormanhaignere/spectrotemporal-synthesis-v2.

We refer to specific scripts in this repository to clarify our descriptions and ensure that others can replicate our work.

The cochlear representation was computed by convolving the audio waveform of each sound with 120 bandpass filters, spaced equally on an ERBN-scale between 20 Hz and 10 kHz, with bandwidths chosen to match those measured psychophysically in humans (individual filters had frequency responses that were a half-cycle of the cosine function, in order to exactly tile the frequency spectrum; adjacent filters overlapped by 87.5%) [40] (see wav2coch_without_filts.m). Each channel was intended to model the response of a different point along the basilar membrane. The envelopes of each filter output were computed using the Hilbert transform, raised to the 0.3 power to mimic cochlear compression/amplification, and downsampled to 400 Hz after applying an anti-aliasing filter. So that we could express the spectral modulation filters that operate on the cochleagram (described below) in units of cycles per octave (as in the original model of Chi and colleagues, 2005 [20]), we interpolated the frequency axis from an ERB-scale to a logarithmic frequency scale (24 cycles/octave), yielding 217 channels.

The modulation-based representations were computed using a bank of multiscale wavelet filters (Fig 1A) that were convolved in time and/or frequency with the cochleagram for each sound (see coch2filtcoch.m). The shapes and bandwidths of the filters were the same as those described by Chi and colleagues (2005). The three sets of filters differed in whether they were tuned to modulation in time, frequency, or both.

The temporal modulation representation was computed using gammatone filters (see filt_temp_mod.m):

| (3) |

where br determines the best modulation rate of the filter (i.e., the rate with maximum gain). We used nine filters with octave-spaced best rates: 0.5, 1, 2, 4, 8, 16, 32, 64, and 128 Hz. Each filter was separately convolved in time with each frequency channel of the cochleagram. The output of the model can thus be represented as a set of nine filtered cochleagrams, each of which highlights modulations at a particular temporal rate.

The spectral modulation representation was computed using “Mexican hat” filters, which are proportional to the second derivative of a Gaussian (see filt_spec_mod.m):

| (4) |

where bs determines the best modulation scale of the filter (i.e., the scale with maximum gain). The spectral filters were implemented in the frequency domain using the Fourier representation of a Mexican hat filter:

| (5) |

We used six filters with octave-spaced scales: 0.25, 0.5, 1, 2, 4, and 8 cycles/octave. Each filter was separately convolved in frequency with each time “slice” of the cochleagram. The output of the model can thus be represented as six filtered cochleagrams, each of which highlights a different range of spectral modulations. Each temporal and spectral filter was scaled so that the power of its best rate/scale was the same for all filters.

The spectrotemporal modulation representation (often referred to as the “full model”) was computed primarily from 2D filters that were convolved with the cochleagram in both time and frequency. The filters were instantiated in the 2D Fourier domain (as in the original implementation of Chi and colleagues, 2005 [20]) by taking the outer product of the frequency-domain representations of the temporal and spectral modulation filters described above (see filt_spectemp_mod.m). These filters were then “oriented” so as to be sensitive to upward-right or downward-right modulations. This was accomplished by zeroing either the first and third quadrant of the 2D frequency response (for upward-oriented filters) or the second and fourth quadrant (for downward-oriented filters) (the Nyquist frequency and DC were never zeroed). There were 108 total spectrotemporal filters produced by crossing nine temporal filters with six spectral filters (with best modulation frequencies as described above), and orienting each filter upwards or downwards. Thus, the output of this portion of the model can be represented by 108 filtered cochleagrams (modulo the additional filters described next).