Short abstract

Background

Cognitive monitoring that can detect short-term change in multiple sclerosis is challenging. Computerized cognitive batteries such as the CogState Brief Battery can rapidly assess commonly affected cognitive domains.

Objectives

The purpose of this study was to establish the acceptability and sensitivity of the CogState Brief Battery in multiple sclerosis patients compared to controls. We compared the sensitivity of the CogState Brief Battery to that of the Paced Auditory Serial Addition Test over 12 months.

Methods

Demographics, Expanded Disability Status Scale scores, depression and anxiety scores were compared with CogState Brief Battery and Paced Auditory Serial Addition Test performances of 51 patients with relapsing–remitting multiple sclerosis, 19 with secondary progressive multiple sclerosis and 40 healthy controls. Longitudinal data in 37 relapsing–remitting multiple sclerosis patients were evaluated using linear mixed models.

Results

Both the CogState Brief Battery and the Paced Auditory Serial Addition Test discriminated between multiple sclerosis and healthy controls at baseline (p<0.001). CogState Brief Battery tasks were more acceptable and caused less anxiety than the Paced Auditory Serial Addition Test (p<0.001). In relapsing–remitting multiple sclerosis patients, reaction time slowed over 12 months (p<0.001) for the CogState Brief Battery Detection (mean change –34.23 ms) and Identification (–25.31 ms) tasks. Paced Auditory Serial Addition Test scores did not change over this time.

Conclusions

The CogState Brief Battery is highly acceptable and better able to detect cognitive change than the Paced Auditory Serial Addition Test. The CogState Brief Battery could potentially be used as a practical cognitive monitoring tool in the multiple sclerosis clinic setting.

Keywords: Cognition, multiple sclerosis, monitoring, CogState, computerized, Paced Auditory Serial Addition Test

Introduction

Cognitive deficits in multiple sclerosis (MS) reflect widespread damage to the central nervous system and commonly manifest as difficulty with information processing, concentration and attention, working memory and new learning.1 It is possible that subtle dysfunction is present long before these deficits become obvious, and that it is difficult to detect using current clinical screening techniques.2,3 The gold standard for diagnosing cognitive impairment in MS patients is a formal neuropsychological assessment; however, this is time and resource intensive. Abbreviated MS-specific batteries such as the Brief International Cognitive Assessment for MS (BICAMS) have been developed to shorten and simplify assessment, but they still require skilled and trained operators. In practice, a BICAMS assessment can take 15–20 min to complete.4,5 Furthermore, fatigue and learning effects (despite alternate forms)6 restrict the use of most batteries as monitoring tools in the MS clinic. The sensitivity of current tests to detect slow subclinical decline in ostensibly cognitively normal patients, beyond that expected for age alone, is limited.7,8 Single tests that can potentially measure cognitive change under treatment conditions are often used in research trials. Tools such as the Paced Auditory Serial Addition Test (PASAT)9 and the Symbol Digit Modalities Test (SDMT)10 have been validated against neuropsychological testing in people with MS (pwMS). While the utility of the PASAT is limited by its relatively low acceptability, the SDMT could be used to monitor longitudinal cognitive change.11 However, the SDMT also has significant practice effects even with multiple alternate versions,12 and these are likely to persist in emerging computerized versions.13

Effective cognitive monitoring requires rapid cognitive assessments that can maintain motivation, minimize process factors (such as fatigue or anxiety), and avoid significant learning effects with repeated use. Computerized, brief and repeatable cognitive batteries such as the CogState Brief Battery (CBB)14 have been extensively validated (see http://www.cogstate.com). The test can be Web-based and potentially represents an opportunity for self-administered cognitive screening. Psychometric properties have been optimized to minimize practice effects after an initial familiarization, making it useful for repeated testing15 such as before and after clinical intervention.12 In this study, we compared performance on the CBB and the PASAT in pwMS and healthy controls (HCs) cross-sectionally. In addition, we provide a 12-month, prospective comparison between the two tests in patients with relapsing–remitting multiple sclerosis (RRMS).

Materials and methods

Participants

Seventy MS patients with RRMS or secondary progressive MS (SPMS) were recruited from a tertiary hospital clinic in Melbourne, Australia. HCs included partners and friends of patients, hospital staff and students. Participants needed to be able to use a computer (adequate vision and upper limb dexterity) and to speak English. Existing cognitive impairment due to other neurological, psychiatric or medical disease was an exclusion criterion. We recorded relevant demographic characteristics and medical, psychiatric and neurological history. The Expanded Disability Status Scale (EDSS) score was assessed by a trained investigator. All participants were screened and enrolled for cross-sectional comparison of the CBB and the PASAT. To study longitudinal change in early MS, only patients with RRMS were invited to repeat CBB testing at one, two, three, six and 12 months. The PASAT was repeated at the six- and 12-month visits. The study was approved by the local Human Research Ethics Committee and all participants provided informed consent.

Cognitive testing

Cognitive testing was performed on a desktop computer in a quiet room. All participants were tested twice at screening to ensure familiarity and minimize practice effects before the baseline test (within 28 days after screening). The total duration of a study visit was approximately 30 min.

The CBB.14

The version of the CBB (CogState Ltd) used here consisted of six tasks presented with playing-card visuals in a game-like interface. The cognitive domains measured include psychomotor function, information processing speed, attention, visual learning, spatial problem solving, executive function and (working) memory. Each task displays an instruction screen, followed by a short familiarization and then the real test. In the card tasks, single random playing cards appear face-down in the center of the screen and then turn face-up, and the participant is required to press either a ‘yes’ or a ‘no’ button as quickly as they can. The first task (‘Detection’ (DET)) requires pressing the ‘yes’ button ‘as soon as the card turns face-up’. In subsequent tasks, participants pressed ‘yes’ ‘if the card is red’ (‘Identification’ (IDN)), ‘if you have seen the card before in the same task’ (‘One card learning’ (OCL)) or ‘if the card is the same as the previous card’ (‘One card back’ (ONB)), and ‘no’ otherwise. An executive function test (‘Groton Maze learning’ (GML)) involved discovering a hidden pathway in a 10x10 tile maze. Finally, a spatial memory task that required learning the screen locations of simple colored shapes (‘Continuous paired associate learning’ (CPAL)) was completed. After a single practice, most participants were familiar with the test and subsequently showed no or minimal practice effects. The total test battery duration was 15 min.
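The response rules of the four card tasks can be made concrete with a short Python sketch. It is a hypothetical illustration, not CogState's implementation: the `Card` class and the `expected_responses` function are our own names, and the rules simply paraphrase the task instructions quoted above (DET always ‘yes’; IDN ‘yes’ for red cards; OCL ‘yes’ for a card already seen in the task; ONB ‘yes’ for a repeat of the previous card).

```python
from dataclasses import dataclass
from typing import List, Optional


# Hypothetical card representation; not the CogState data model.
@dataclass(frozen=True)
class Card:
    rank: str  # e.g. "A", "7", "K"
    suit: str  # "hearts", "diamonds", "clubs" or "spades"

    @property
    def is_red(self) -> bool:
        return self.suit in ("hearts", "diamonds")


def expected_responses(task: str, cards: List[Card]) -> List[bool]:
    """Return the correct answer for each card: True = 'yes', False = 'no'.

    The rules paraphrase the task instructions described in the text:
      DET: press 'yes' as soon as the card turns face-up (always 'yes')
      IDN: 'yes' if the card is red
      OCL: 'yes' if the card has already appeared earlier in the task
      ONB: 'yes' if the card is the same as the immediately preceding card
    """
    if task not in ("DET", "IDN", "OCL", "ONB"):
        raise ValueError(f"unknown task: {task}")
    answers: List[bool] = []
    seen = set()                     # cards shown so far (used by OCL)
    previous: Optional[Card] = None  # last card shown (used by ONB)
    for card in cards:
        if task == "DET":
            answers.append(True)
        elif task == "IDN":
            answers.append(card.is_red)
        elif task == "OCL":
            answers.append(card in seen)
        else:  # ONB
            answers.append(card == previous)
        seen.add(card)
        previous = card
    return answers


sequence = [Card("7", "hearts"), Card("7", "hearts"), Card("K", "spades"), Card("7", "hearts")]
print(expected_responses("IDN", sequence))  # [True, True, False, True]
print(expected_responses("OCL", sequence))  # [False, True, False, True]
print(expected_responses("ONB", sequence))  # [False, True, False, False]
```

A scoring routine would then compare each button press with these expected answers, counting non-responses and anticipatory presses as incorrect trials.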

The PASAT.16

The PASAT is an audio-presented test that assesses information processing speed, attention, working memory and calculation ability. Single digits are presented every three seconds and the subject is required to add each new digit to the one immediately prior to it. To minimize familiarity with stimulus items, two alternate forms were used. Total test duration was 13 min.
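The PASAT target sums follow directly from the rule above: each answer is the sum of the current digit and the one immediately before it. The Python sketch below, with hypothetical function names, derives the targets from a digit sequence and counts correct responses, scoring a missed response (`None`) as incorrect. It assumes, as in common PASAT forms, a sequence of 61 digits, which yields the 60 possible answers used as the maximum score in this study.

```python
from typing import List, Optional


def pasat_targets(digits: List[int]) -> List[int]:
    """Correct answer for each digit after the first: current + immediately preceding digit."""
    return [prev + curr for prev, curr in zip(digits, digits[1:])]


def pasat_score(digits: List[int], responses: List[Optional[int]]) -> int:
    """Number of correct responses; None marks a missed response and scores zero."""
    targets = pasat_targets(digits)
    if len(responses) != len(targets):
        raise ValueError("expected one response slot per target sum")
    return sum(1 for target, response in zip(targets, responses) if response == target)


digits = [3, 5, 2, 9]                      # hypothetical short sequence
print(pasat_targets(digits))               # [8, 7, 11]
print(pasat_score(digits, [8, None, 12]))  # 1
```

Because each answer depends only on the last two digits, a participant who adds the new digit to their own previous answer (a common error) is scored incorrect on every such trial.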

Acceptability

Acceptability (e.g. ‘Did you enjoy the tasks?’) was rated on Likert scales ranging from a maximum negative response (0) to a maximum positive response (10). Questionnaires were delivered electronically after completion of the cognitive tasks at baseline and at each subsequent visit.

Anxiety, depression and fatigue

MS participants completed the Patient Health Questionnaire (PHQ-9)17 to assess depression and the Penn State Worry Questionnaire (PSWQ)18 to assess anxiety at all visits. The Participant Fatigue Questionnaire was completed before each test. Participants were asked ‘How sleepy are you feeling right now?’ and responses were rated from 0–10, with 0=‘I am fully awake’ and 9=‘I am asleep’, accompanied by a cartoon face depicting various stages of drowsiness.

Statistical analysis

Non-parametric statistics were used to compare (a) acceptability and mental health scores and (b) baseline disease characteristics and cognitive scores. For each CBB task that used playing cards, accuracy was defined as the number of correct responses expressed as a proportion of all trials (true positives, true negatives, false positives, false negatives, trials with no response (maxouts) and premature responses (anticipations)). An arcsine transformation was used to normalize the accuracy distribution. Performance speed was defined as the mean reaction time (RT, in ms) for correct responses, transformed using a base 10 logarithm. GML and CPAL results were recorded as the total number of errors. For the PASAT, the total number of correct answers out of 60 was recorded. Student t-tests, reporting means, standard deviations (SDs) and 95% confidence intervals (CIs), were used where data were normally distributed. Effect sizes (Cohen's d) were calculated to quantify the magnitude of the group differences, i.e. the degree of overlap between the distributions. For the 30 RRMS patients who completed five tests over the 12-month period, a linear mixed-model analysis was performed with age, sex and disease duration as fixed covariates, time analyzed as a continuous variable and change over time expressed as the slope (β).
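The transformations and effect size described above can be sketched in a few lines of Python. The function names are hypothetical and the sketch is not the authors' analysis code. The text specifies ‘an arcsine transformation’; we assume the arcsine square-root form commonly applied to proportions, which is consistent with the Table 1 accuracy values lying below π/2 ≈ 1.571. Cohen's d is computed with the pooled SD. The linear mixed model is not reproduced here.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def arcsine_accuracy(n_correct: int, n_trials: int) -> float:
    """Arcsine square-root transform of the proportion correct (radians, 0 to pi/2).

    n_trials counts every trial: hits, correct rejections, errors,
    non-responses (maxouts) and premature responses (anticipations).
    """
    p = n_correct / n_trials
    return math.asin(math.sqrt(p))


def log10_speed(correct_rts_ms: Sequence[float]) -> float:
    """Base 10 logarithm of the mean RT (ms) of correct responses."""
    return math.log10(mean(correct_rts_ms))


def cohens_d(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Standardized mean difference (a - b) using the pooled SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


print(round(arcsine_accuracy(45, 50), 3))          # 1.249
print(round(log10_speed([350.0, 380.0, 400.0]), 3))  # 2.576
print(cohens_d([1.0, 2.0, 3.0], [2.0, 3.0, 4.0]))    # -1.0
```

Note that the speed function takes the logarithm of the mean RT, as described in the text, rather than averaging log-transformed single-trial RTs; the two give slightly different values.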

Results

Baseline characteristics

Demographic and disease characteristics are shown in Table 1. Of the 70 patients enrolled, 51 (73%) had RRMS. Two RRMS and two SPMS patients withdrew consent before the baseline assessment. The mean disease duration was 14 (SD=10.8) years. The median EDSS was 2.5 (interquartile range (IQR) 1.5–4.0), the cerebellar Kurtzke functional score (KFS) was 0 (0–2), the pyramidal KFS was 1 (0–2) and the visual KFS was 0 (0–0). The PASAT was performed by 56 patients at baseline (10 patients declined the test). Thirty-nine RRMS patients agreed to longitudinal follow-up; 34 completed the six-month assessment and 30 completed the 12-month assessment. Patients who completed baseline and longitudinal follow-up were similar in sex (80.5% and 81.6% female), age (p=0.649), EDSS (median 2.3 and 2.6) and disease duration (p=0.758). Educational status was similar between the groups, with 43.9% being tertiary educated at baseline (42.1% in the longitudinal group) and 44.9% having secondary education (43.1% in the longitudinal group). The nine participants who dropped out of the study were similar to the baseline cohort in age (mean 35 years), EDSS (1 (1–1.5)) and baseline PASAT (46.3 (16.6)). The 40 healthy controls were demographically well-matched to the MS group.

Table 1.

Demographics at baseline.

| | MS patients (n=66) | Non-MS controls (n=40) |
|---|---|---|
| Age in years (SD) | 47.5 (12.4) | 35.2 (14.7) |
| Gender | | |
| Male | 14 (21%) | 12 (30%) |
| Female | 52 (79%) | 28 (70%) |
| MS characteristics | | |
| RRMS | 51 (73%) | - |
| EDSS (median, IQR) | 2.5 (1.25, 4.0) | - |
| Disease duration in years (SD) | 14.0 (10.8) | - |
| Baseline data (mean, SD) | | |
| PASAT (n=56) | 46.37 (11.83) | 55.63 (5.95) |
| CBB (n=66) | | |
| Detection (DET)a | | |
| Speed | 2.56 (0.11) | 2.51 (0.07) |
| Accuracy | 1.47 (0.11) | 1.50 (0.09) |
| Identification (IDN)a | | |
| Speed | 2.75 (0.09) | 2.69 (0.08) |
| Accuracy | 1.43 (0.13) | 1.43 (0.15) |
| One card back (ONB)a | | |
| Speed | 2.91 (0.09) | 2.84 (0.11) |
| Accuracy | 1.36 (0.15) | 1.42 (0.17) |
| One card learning (OCL)a | | |
| Speed | 3.01 (0.27) | 2.99 (0.10) |
| Accuracy | 0.99 (0.10) | 1.05 (0.10) |
| Continuous paired associate learning (CPAL) | | |
| Errors | 30.89 (32.17) | 12.23 (15.21) |
| Groton maze learning (GML) | | |
| Errors | 46.45 (16.22) | 36.90 (12.88) |

CBB: CogState Brief Battery; EDSS: Expanded Disability Status Scale; IQR: interquartile range; MS: multiple sclerosis; PASAT: Paced Auditory Serial Addition Test; RRMS: relapsing–remitting multiple sclerosis; SD: standard deviation.

aSpeed values are shown after base 10 logarithmic transformation and accuracy values after arcsine transformation.

Acceptability

Acceptability data were available for 37 MS participants. The duration of the CBB was rated as ‘not too long, not too short’ to ‘just right’ by 86% of participants, compared with 50% for the PASAT (p<0.001). Seventy-five percent of participants were happy to repeat the CBB, compared with 52% for the PASAT. Performing the PASAT caused anxiety in 69% of patients, compared with 41% for the CBB (p=0.002). Test enjoyment was rated as neutral to highly enjoyable by 83% of participants for the CBB, compared with 61% for the PASAT (p=0.002).

Anxiety, depression and fatigue

The mean baseline PSWQ score was 40.5 (SD=14.8) with a score suggestive of significant worry (>45) recorded in 11 (31%) participants. The mean PHQ-9 score was 7.3 (SD 5.7) with 14 participants (52%) reporting no depression and three (8%) reporting moderate to severe depression. The median baseline fatigue score was two (IQR 0–4) indicating mild levels of fatigue in this cohort. There was no correlation between baseline PSWQ-15, PHQ-9 or fatigue scores and performance scores on the PASAT or CBB.
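The severity bands behind statements such as ‘no depression’ and ‘moderate to severe depression’ are not specified in the text. The Python sketch below assumes the standard PHQ-9 bands published by Kroenke et al.17 (0–4 minimal, 5–9 mild, 10–14 moderate, 15–19 moderately severe, 20–27 severe) together with the PSWQ threshold of >45 stated above; the function names and scores are hypothetical.

```python
def phq9_band(score: int) -> str:
    """Map a PHQ-9 total (0-27) to the severity bands of Kroenke et al. (2001)."""
    if not 0 <= score <= 27:
        raise ValueError("PHQ-9 totals range from 0 to 27")
    if score <= 4:
        return "minimal"
    if score <= 9:
        return "mild"
    if score <= 14:
        return "moderate"
    if score <= 19:
        return "moderately severe"
    return "severe"


def pswq_significant_worry(score: int) -> bool:
    """PSWQ total above 45, the threshold for significant worry used in this study."""
    return score > 45


baseline_phq9 = [2, 7, 11, 16, 21]  # hypothetical scores
print([phq9_band(s) for s in baseline_phq9])
# ['minimal', 'mild', 'moderate', 'moderately severe', 'severe']
print(pswq_significant_worry(40), pswq_significant_worry(46))  # False True
```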

Disease severity markers and cognitive outcomes

There was no correlation between baseline PASAT scores and the EDSS (r=–0.029, p=0.84) or disease duration (r=–0.128, p=0.36). EDSS scores correlated moderately with baseline DET speed (r=0.464, p<0.001), OCL speed (r=0.467, p<0.001), ONB speed (r=0.442, p=0.001), GML accuracy (r=0.323, p=0.02) and CPAL errors (r=0.32, p=0.018). Disease duration correlated with OCL speed (r=0.661, p<0.001), ONB RT (r=0.539, p<0.001) and GML accuracy (r=0.295, p=0.03). Linear regression models (correcting for age and sex) were used to assess the effect of baseline EDSS and disease duration on change in CBB and PASAT scores over 12 months. Change in performance speed for each CBB task was significantly associated with EDSS scores, but not with disease duration. There were no associations between EDSS or disease duration and change in PASAT scores.

Comparison of cognitive performance between people with MS and HCs

The mean performance values (with SD) are reported in Table 1. A comparison between MS participants and HCs, with the mean differences and 95% CIs between the groups, is shown in Table 2. The cognitive performance of MS patients was significantly worse (p<0.05) than that of the HCs on the PASAT and on most CBB measures. Speed was more affected than accuracy in the DET (mean difference in RT between MS and non-MS participants 39.5 ms (95% CI 36.8–49.7 ms), p<0.001), IDN (72.6 ms (61.9–90.8), p<0.001) and ONB (121 ms (92.2–126.9), p<0.002) tasks.
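The millisecond differences quoted above appear to correspond to back-transforming the log10 group means in Table 1 (raising 10 to each mean) and subtracting. The short Python check below, with hypothetical names, reproduces the 39.5, 72.6 and 121 ms differences from the Table 1 speed values. Because each value is a back-transformed mean of log-scale data, it behaves like a geometric rather than an arithmetic mean RT; the CIs quoted above are not reproduced by this sketch.

```python
# log10(mean RT) group means for the speed measures, copied from Table 1:
# (MS patients, non-MS controls)
TABLE1_LOG10_SPEED = {
    "DET": (2.56, 2.51),
    "IDN": (2.75, 2.69),
    "ONB": (2.91, 2.84),
}


def back_transformed_difference(log_ms: float, log_hc: float) -> float:
    """Difference in ms after converting both log10 means back to the ms scale."""
    return 10 ** log_ms - 10 ** log_hc


for task, (log_ms, log_hc) in TABLE1_LOG10_SPEED.items():
    print(f"{task}: {back_transformed_difference(log_ms, log_hc):.1f} ms")
# DET: 39.5 ms
# IDN: 72.6 ms
# ONB: 121.0 ms
```

Because the Table 1 means are rounded to two decimals, this check is only accurate to roughly the first decimal place in ms.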

Table 2.

Baseline comparison between multiple sclerosis (MS) patients and healthy controls.

| Task | Outcome | Mean difference (MS vs non-MS) | Lower 95% CI | Upper 95% CI | p-Value | Effect size |
|---|---|---|---|---|---|---|
| PASAT | Correct | 9.45 | 5.81 | 13.08 | <0.0001 | –0.97 |
| CBB | | | | | | |
| Detectiona | Accuracy | 0.037 | –0.003 | 0.077 | 0.07 | 0.36 |
| | Speed | –0.066 | –0.100 | –0.031 | 0.0003 | –0.67 |
| Identificationa | Accuracy | 0.007 | –0.069 | 0.082 | 0.86 | 0.035 |
| | Speed | –0.062 | –0.097 | –0.026 | 0.0009 | –0.698 |
| One card backa | Accuracy | 0.08 | 0.001 | 0.151 | 0.047 | 0.409 |
| | Speed | –0.08 | –0.118 | –0.038 | 0.0002 | 0.79 |
| One card learninga | Accuracy | 0.061 | 0.017 | 0.104 | 0.00063 | 0.568 |
| | Speed | –0.06 | –0.103 | –0.017 | 0.007 | –0.56 |
| Groton Maze learning | Errors | –10.72 | –16.74 | –4.70 | 0.0006 | –0.72 |
| Continuous paired associate learning | Errors | –17.04 | –27.23 | –6.86 | 0.00013 | –0.68 |

CBB: CogState Brief Battery; CI: confidence interval; PASAT: Paced Auditory Serial Addition Test.

Lower values correspond to a slower reaction time or decreased accuracy.

aDifferences are calculated on log10-transformed speed values and arcsine-transformed accuracy values.

Correlation between CBB tasks and PASAT

Moderate correlations between baseline performance on PASAT and CBB tasks were found (Table 3).

Table 3.

Comparisona between Paced Auditory Serial Addition Test (PASAT) and CogState Brief Battery (CBB) tasks.

| | DET | IDN | OCL | ONB | CPAL | GML |
|---|---|---|---|---|---|---|
| PASAT with speed score | –0.38 | –0.44 | –0.16 | –0.50 | - | - |
| PASAT with accuracy score | 0.11 | 0.21 | 0.33 | 0.41 | –0.48 | –0.47 |

CPAL: Continuous paired associate learning; DET: Detection; GML: Groton Maze learning; IDN: Identification; OCL: One card learning; ONB: One card back.

aPearson correlation.

Longitudinal change in CBB and PASAT scores in RRMS patients

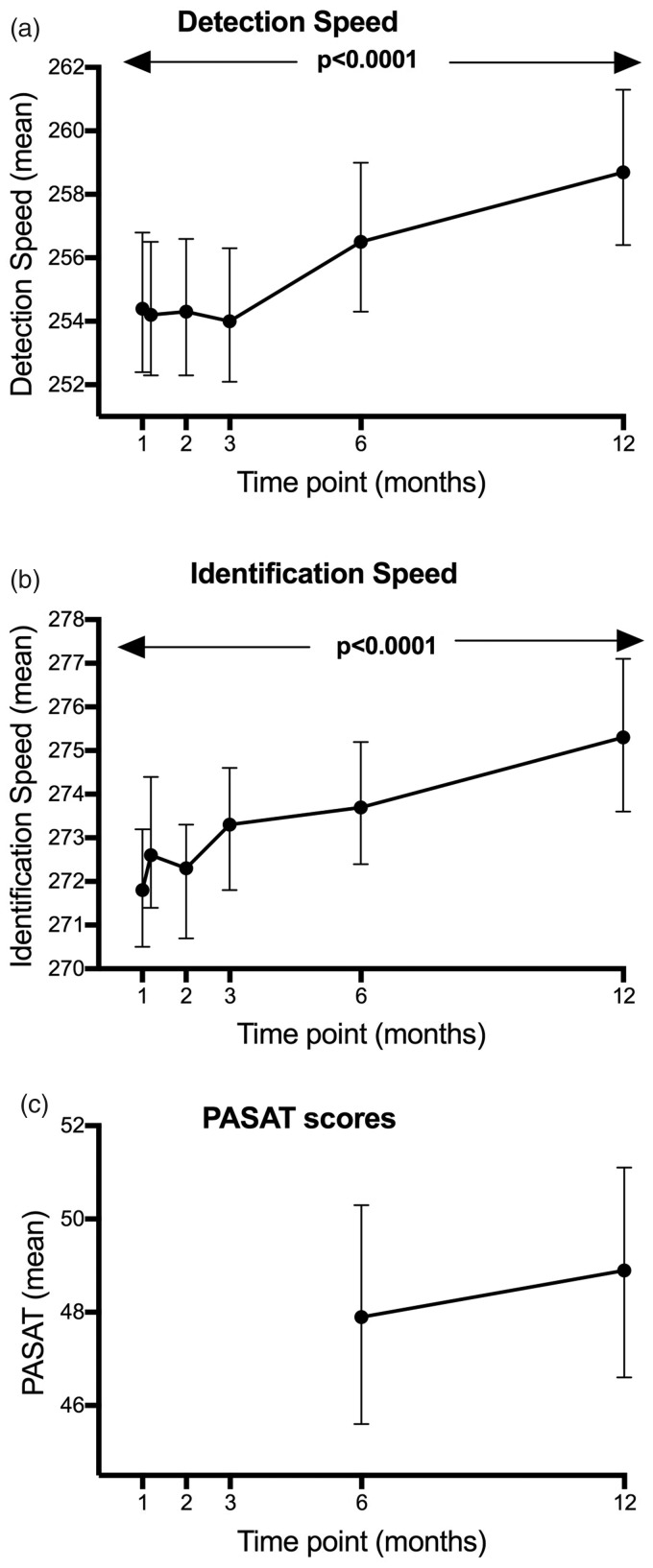

Linear mixed models of task performance over time indicated that RT slowed significantly over 12 months in the DET (β=0.001, F=25.98, p<0.0001) and IDN (β=0.001, F=26.84, p<0.0001) tasks. To illustrate this more clearly, we back-transformed the standardized values and calculated RT over a year, which showed slowing in RT of around 0.5 ms per week. A linear mixed model (adjusted for age and disease duration) of the transformed means over time, with time included as a factor (co-varying for baseline), was then performed to better demonstrate slowing over time in DET (mean change over 12 months –34.23 ms, F=5.43, p=0.0001) and IDN (–25.31 ms, F=5.56, p<0.0001) speed (see Figure 1).
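The conversion from a slope on the log10 scale to milliseconds can be sketched as follows. If log10(RT) increases by β per unit of time, RT is multiplied by 10^β per unit, so the absolute change in ms depends on the starting RT. The text does not state the time unit of β, and the reported β is rounded, so the hypothetical inputs below illustrate the arithmetic only and are not intended to reproduce the 0.5 ms per week figure. In this sketch slowing is positive, which may differ from the sign convention used in Figure 1.

```python
import math


def rt_change_ms(baseline_log10_rt: float, beta: float, n_units: float) -> float:
    """Change in RT (ms) after n_units of time, given a slope beta on the log10(RT) scale.

    log10(RT_t) = log10(RT_0) + beta * t  =>  RT_t = RT_0 * 10 ** (beta * t)
    """
    baseline_ms = 10 ** baseline_log10_rt
    return baseline_ms * (10 ** (beta * n_units) - 1)


# Hypothetical inputs: a 1000 ms baseline (log10 = 3) slowing by 10% per unit of time.
beta = math.log10(1.1)
print(round(rt_change_ms(3.0, beta, 1), 1))  # 100.0
print(round(rt_change_ms(3.0, beta, 2), 1))  # 210.0
```

The compounding in the second line (210 ms rather than 200 ms) is why a constant slope on the log scale translates into a slightly accelerating change on the ms scale.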

Figure 1.

Adjusted mean change in detection (DET) and identification (IDN) speed over time with time modeled as a factor (co-varying for baseline). Reaction speed during the CogState Brief Battery (CBB) Detection and Identification tasks slowed by a significant amount over 12 months (p<0.0001). Back-transformed values are shown with 95% confidence intervals.

The same models revealed no change in PASAT scores over 12 months (standardized slope β=0.044, F=–2.32, p=0.14). The RTs of the OCL (β=0.0002, F=1.48, p=0.224) and ONB (β=0.0001, F=0.26, p=0.614) tasks did not change over time. In addition, no significant changes over 12 months were identified in the accuracy of the DET (β=0.00025, F=0.36, p=0.548), IDN (β=–0.0011, F=3.57, p=0.06), OCL (β=0.0002, F=1.48, p=0.225) and ONB (β=–0.0002, F=0.11, p=0.741) tasks. GML (β=–0.031, F=0.87, p=0.35) and CPAL (β=0.085, F=2.38, p=0.125) errors did not increase over the observation period (Supplementary Material Figure e-1).

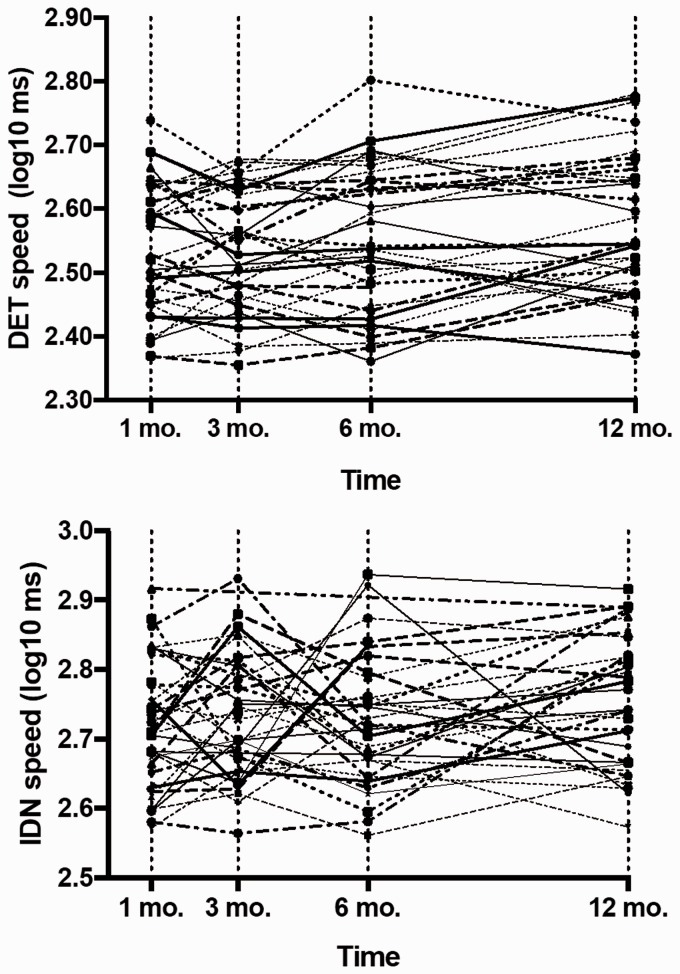

Individual patient trajectories for DET and IDN RT over 12 months are shown in Figure 2 and indicate variability in RT during the first six months, with a general trend towards RT slowing emerging between six and 12 months. DET RT slowed by a mean of 6.1% (3.1) over 12 months, with 29.4% of participants worsening by more than one SD of the mean one-month DET RT. IDN RT slowed by 7.5% (4.3), with 32.4% of participants slowing by more than one SD from the mean IDN RT at one month; four participants (11.8%) improved over 12 months.
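The individual-level criterion above can be read in more than one way. The Python sketch below assumes one reading: a participant is flagged when their RT rises from one month to 12 months by more than one SD of the group's one-month RTs. Percentage change is computed relative to each participant's own one-month RT. The function names and data are hypothetical.

```python
from statistics import stdev
from typing import List


def percent_change(rt_start: float, rt_end: float) -> float:
    """Percentage change in RT; positive values mean slowing."""
    return 100.0 * (rt_end - rt_start) / rt_start


def flag_slowing(rt_1m: List[float], rt_12m: List[float]) -> List[bool]:
    """Flag participants whose RT increase exceeds one SD of the one-month RTs."""
    threshold = stdev(rt_1m)  # SD across participants at one month
    return [(end - start) > threshold for start, end in zip(rt_1m, rt_12m)]


rt_1m = [300.0, 320.0, 340.0]   # hypothetical one-month RTs (ms); SD = 20 ms
rt_12m = [330.0, 325.0, 340.0]  # hypothetical 12-month RTs (ms)
flags = flag_slowing(rt_1m, rt_12m)
print(flags)                                    # [True, False, False]
print(round(100 * sum(flags) / len(flags), 1))  # 33.3
print(round(percent_change(300.0, 330.0), 1))   # 10.0
```

An alternative reading would compare each 12-month RT with the one-month group mean plus one SD; the two readings can flag different participants.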

Figure 2.

Individual trajectories for detection (DET) and identification (IDN) reaction time (RT) over 12 months. Compared with the mean DET and IDN RT at one month, we identified 29.4% of participants with slower DET RT and 32.4% of participants with slower IDN RT at 12 months.

Discussion

This study demonstrates higher acceptability of computerized cognitive testing using the CBB in pwMS compared with the established PASAT.19 This is important, as patient acceptance of a cognitive monitoring test is paramount for the feasibility of repeated administration. The CBB tests were rated as enjoyable and engaging and caused less patient anxiety than the PASAT. Both the PASAT and the CBB detected slower processing speed in pwMS compared with HCs tested in the same environment at baseline. In addition, change in CBB task RTs over 12 months was associated with disease severity (EDSS) but not with disease duration, whereas change in PASAT scores was associated with neither measure. Although cognitive impairment in MS can occur irrespective of disease duration20,21 and physical disability,22–24 it is well known that cognitive decline is associated with higher EDSS scores and a more progressive disease course.25

Importantly, in an RRMS population, our data showed subtle slowing of CBB RT (in the absence of any change in visual, motor or sensory functional scores) and demonstrated the feasibility of detecting RT change over 12 months. In each CBB task, performance speed was more impaired than accuracy, demonstrating the sensitivity of RT as a measure of processing speed in MS. This has also recently been reported in pediatric MS using the DET and IDN speed measures of the CBB at a single time point, with sensitivity similar to that of the BICAMS speed measure.26 In addition, our longitudinal analyses identified two CBB tasks, DET and IDN speed, in which performance slowed over the 12-month study period. The PASAT failed to identify such change, suggesting that the CBB measures were more sensitive to change and measured real decline, a conclusion supported by the association between change in CBB speed measures over 12 months and baseline EDSS scores (not seen with the PASAT). These findings are of particular importance given that information processing speed is one of the principal cognitive domains affected in MS.27 Both processing speed and working memory are core cognitive skills that are essential to performance in domains such as attention and learning.25 However, the change was subtle and was determined in group analyses rather than being readily observable in individual trajectories. When individual trajectories were interrogated (Figure 2), patients with slowing in DET and IDN RT could be identified despite considerable variability in this small cohort. Further experience with these speed measures is required to determine whether more accurate measurement of this commonly affected domain presents an opportunity to develop a valid monitoring tool for individual pwMS in the clinic. Longitudinal measurements in HCs were not performed and should be included in future studies to better understand the significance of these changes.

The therapeutic landscape for MS is rapidly evolving,28,29 with more than 12 disease-modifying treatments available. Monitoring to detect suboptimal therapeutic response is a key aim of modern MS services, with regular magnetic resonance imaging (MRI) scans supplementing relapse assessments and clinical evaluations. Unfortunately, cognitive function is rarely assessed during clinic visits because of the resources required and the subtle nature2 of these symptoms. Cognitive decline is, however, a major determinant of disease outcome25,30,31 and quality of life32 in pwMS. Our results suggest that automated computerized cognitive testing could potentially fill this monitoring gap, allowing earlier identification and more comprehensive assessment. Such assessment would ideally include evaluation of potential confounders such as emergent depression or increased fatigue and, if no medical reason other than potential MS-associated decline emerges, consideration of therapeutic escalation of immunotherapy33,34 and of rehabilitation strategies that can improve outcomes.35

Our preliminary results need validation in larger cohorts of MS patients. In addition, the educational status of the HC group may have been higher than that of the MS cohort because of the inclusion of hospital staff and students. Although repeat testing was performed to ensure familiarity with the tests at baseline, learning effects in pwMS and HCs were not formally explored. This study used the PASAT 3 as a comparative test;9 this test is often poorly tolerated by patients. Whilst we selected the PASAT 3 based on the recommendations of the Multiple Sclerosis Functional Composite (MSFC) developers,19 it should be noted that it is a quick index of overall function and does not provide a clinical cognitive assessment. Notably, recent work has recommended that the PASAT be replaced by the SDMT in the MSFC,8 and future studies need to include the SDMT as a comparative test of processing speed.10 The specificity of the relatively simple tasks of the CBB as a long-term, serial cognitive monitoring tool in MS also needs to be established. PwMS with a decline in detection and identification speed will need to be tested more comprehensively with abbreviated batteries such as BICAMS4 or the Auditory Recorded Cognitive Screen (ARCS)36 to better understand the implications of CBB decline relative to impairment on a multi-domain cognitive assessment. Although the CBB has been extensively validated longitudinally in HCs in other studies,15,37–39 a parallel comparison with an MS cohort is needed. Nine of the 39 RRMS patients who agreed to longitudinal follow-up did not complete the 12-month visit, and our results should therefore be viewed as preliminary. If, however, future studies confirm our results, computerized monitoring of reaction speed in three tasks, with a brief total test time of three minutes and potentially entirely self-administered, could prove sufficient to monitor change in processing speed over time in pwMS. This would allow efficient testing and improve the feasibility of rapid, large-scale implementation in clinical practice and, hopefully in the future, Web-based home testing.

Supplemental Material

Supplemental Material for Monitoring cognitive change in multiple sclerosis using a computerized cognitive battery by L De Meijer, D Merlo, O Skibina, EJ Grobbee, J Gale, J Haartsen, P Maruff, D Darby, H Butzkueven* and A Van der Walt* in Multiple Sclerosis Journal-Experimental, Translational and Clinical

Acknowledgements

The authors wish to dedicate this article to the memory of Louise Kurczyski, their study coordinator, who died before completion of the analyses.

Conflicts of Interests

The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: L De Meijer: nothing to declare. D Merlo: nothing to declare. O Skibina has received travel support and honoraria from Biogen, Roche and Merck. EJ Grobbee: nothing to declare. J Gale: nothing to declare. J Haartsen: nothing to declare. D Darby is a consultant to Neurability, a former founder and shareholder of CogState and CEO of Cerescape, and has received honoraria for lectures from Biogen, Novartis and other pharmaceutical companies. P Maruff is the Chief Science Officer at CogState Ltd. H Butzkueven has received research support, travel support and speaker's honoraria from Biogen, Novartis, Merck, Teva, Roche and Bayer.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was funded by the MS Research Fund, Box Hill Hospital.

Supplemental Material

Supplemental material for this article is available online.

References

- 1.Rovaris M, Riccitelli G, Judica E, et al. Cognitive impairment and structural brain damage in benign multiple sclerosis. Neurology 2008; 71: 1521–1526. [DOI] [PubMed] [Google Scholar]

- 2.Romero K, Shammi P, Feinstein A. Neurologists' accuracy in predicting cognitive impairment in multiple sclerosis. Mult Scler Relat Disord 2015; 4: 291–295. [DOI] [PubMed] [Google Scholar]

- 3.Deloire MS, Bonnet MC, Salort E, et al. How to detect cognitive dysfunction at early stages of multiple sclerosis? Mult Scler 2006; 12: 445–452. [DOI] [PubMed] [Google Scholar]

- 4.Langdon DW, Amato MP, Boringa J, et al. Recommendations for a Brief International Cognitive Assessment for Multiple Sclerosis (BICAMS). Mult Scler 2012; 18: 891–898. [DOI] [PMC free article] [PubMed]

- 5.Benedict RHB, Cookfair D, Gavett R, et al. Validity of the minimal assessment of cognitive function in multiple sclerosis (MACFIMS). J Int Neuropsychol Soc 2006; 12: 549–558. [DOI] [PubMed] [Google Scholar]

- 6.Collie A, Maruff P, Darby DG, et al. The effects of practice on the cognitive test performance of neurologically normal individuals assessed at brief test-retest intervals. J Int Neuropsychol Soc 2003; 9: 419–428. [DOI] [PubMed] [Google Scholar]

- 7.Roar M, Illes Z, Sejbaek T. Practice effect in Symbol Digit Modalities Test in multiple sclerosis patients treated with natalizumab. Mult Scler Relat Disord 2016; 10: 116–122. [DOI] [PubMed] [Google Scholar]

- 8.Benedict RH, Deluca J, Phillips G, et al. Validity of the Symbol Digit Modalities Test as a cognition performance outcome measure for multiple sclerosis. Mult Scler 2017; 23: 721–733. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Tombaugh TN. A comprehensive review of the Paced Auditory Serial Addition Test (PASAT). Arch Clin Neuropsychol 2006; 21: 53–76. [DOI] [PubMed] [Google Scholar]

- 10.Parmenter BA, Weinstock-Guttman B, Garg N, et al. Screening for cognitive impairment in multiple sclerosis using the Symbol Digit Modalities Test. Mult Scler 2007; 13: 52–57. [DOI] [PubMed] [Google Scholar]

- 11.Benedict RH, Cohan S, Lynch SG, et al. Improved cognitive outcomes in patients with relapsing-remitting multiple sclerosis treated with daclizumab beta: Results from the DECIDE study. Mult Scler 2018; 24: 795--804. [DOI] [PMC free article] [PubMed]

- 12.Jacques FH, Harel BT, Schembri AJ, et al. Cognitive evolution in natalizumab-treated multiple sclerosis patients. Mult Scler J Exp Transl Clin 2016; 2: 1–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Rao SM, Losinski G, Mourany L, et al. Processing speed test: Validation of a self-administered, iPad®-based tool for screening cognitive dysfunction in a clinic setting. Mult Scler 2017; 23: 1929–1937. [DOI] [PubMed] [Google Scholar]

- 14.Maruff P, Thomas E, Cysique L, et al. Validity of the CogState brief battery: Relationship to standardized tests and sensitivity to cognitive impairment in mild traumatic brain injury, schizophrenia, and AIDS dementia complex. Arch Clin Neuropsychol 2009; 24: 165–178. [DOI] [PubMed] [Google Scholar]

- 15.Falleti MG, Maruff P, Collie A, et al. Practice effects associated with the repeated assessment of cognitive function using the CogState battery at 10-minute, one week and one month test-retest intervals. J Clin Exp Neuropsychol 2006; 28: 1095–1112. [DOI] [PubMed] [Google Scholar]

- 16.Gronwall DM. Paced auditory serial-addition task: A measure of recovery from concussion. Percept Mot Skills 1977; 44: 367–373. [DOI] [PubMed] [Google Scholar]

- 17.Kroenke K, Spitzer RL, Williams JB. The PHQ-9: Validity of a brief depression severity measure. J Gen Intern Med 2001; 16: 606–613. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Molina S, Borkovec TD. The Penn State Worry Questionnaire: Psychometric properties and associated characteristics In: Davey GCL, Tallis F, eds. Worrying perspectives on theory, assessment and treatment. Oxford: John Wiley and Sons, 1994, pp.265–283. [Google Scholar]

- 19. Fischer JS, Rudick RA, Cutter GR, et al. The Multiple Sclerosis Functional Composite Measure (MSFC): An integrated approach to MS clinical outcome assessment. National MS Society Clinical Outcomes Assessment Task Force. Mult Scler 1999; 5: 244–250.

- 20. Achiron A, Barak Y. Cognitive changes in early MS: A call for a common framework. J Neurol Sci 2006; 245: 47–51.

- 21. Glanz BI, Holland CM, Gauthier SA, et al. Cognitive dysfunction in patients with clinically isolated syndromes or newly diagnosed multiple sclerosis. Mult Scler 2007; 13: 1004–1010.

- 22. Feuillet L, Reuter F, Audoin B, et al. Early cognitive impairment in patients with clinically isolated syndrome suggestive of multiple sclerosis. Mult Scler 2007; 13: 124–127.

- 23. Calabrese M, Filippi M, Rovaris M, et al. Evidence for relative cortical sparing in benign multiple sclerosis: A longitudinal magnetic resonance imaging study. Mult Scler 2009; 15: 36–41.

- 24. Patti F, Amato MP, Trojano M, et al. Cognitive impairment and its relation with disease measures in mildly disabled patients with relapsing–remitting multiple sclerosis: Baseline results from the Cognitive Impairment in Multiple Sclerosis (COGIMUS) study. Mult Scler 2009; 15: 779–788.

- 25. Amato MP, Portaccio E, Goretti B, et al. Relevance of cognitive deterioration in early relapsing-remitting MS: A 3-year follow-up study. Mult Scler 2010; 16: 1474–1482.

- 26. Charvet LE, Shaw M, Frontario A, et al. Cognitive impairment in pediatric-onset multiple sclerosis is detected by the Brief International Cognitive Assessment for Multiple Sclerosis and computerized cognitive testing. Mult Scler 2017; 32: 1–8.

- 27. Bodling AM, Denney DR, Lynch SG. Individual variability in speed of information processing: An index of cognitive impairment in multiple sclerosis. Neuropsychology 2012; 26: 357–367.

- 28. Broadley SA, Barnett MH, Boggild M, et al. Therapeutic approaches to disease modifying therapy for multiple sclerosis in adults: An Australian and New Zealand perspective: Part 1 Historical and established therapies. J Clin Neurosci 2014; 21: 1835–1846.

- 29. Broadley SA, Barnett MH, Boggild M, et al. Therapeutic approaches to disease modifying therapy for multiple sclerosis in adults: An Australian and New Zealand perspective Part 2: New and emerging therapies and their efficacy. J Clin Neurosci 2014; 21: 1847–1856.

- 30. Moccia M, Lanzillo R, Palladino R, et al. Cognitive impairment at diagnosis predicts 10-year multiple sclerosis progression. Mult Scler 2016; 22: 659–667.

- 31. Pitteri M, Romualdi C, Magliozzi R, et al. Cognitive impairment predicts disability progression and cortical thinning in MS: An 8-year study. Mult Scler 2017; 23: 848–854.

- 32. Mitchell AJ, Benito-León J, González J-MM, et al. Quality of life and its assessment in multiple sclerosis: Integrating physical and psychological components of wellbeing. Lancet Neurol 2005; 4: 556–566.

- 33. Comi G. Effects of disease modifying treatments on cognitive dysfunction in multiple sclerosis. Neurol Sci 2010; 31: 261–264.

- 34. Mückschel M, Beste C, Ziemssen T. Immunomodulatory treatments and cognition in MS. Acta Neurol Scand 2016; 134: 55–59.

- 35. Amato MP, Langdon D, Montalban X, et al. Treatment of cognitive impairment in multiple sclerosis: Position paper. J Neurol 2013; 260: 1452–1468.

- 36. Lechner-Scott J, Kerr T, Spencer B, et al. The Audio Recorded Cognitive Screen (ARCS) in patients with multiple sclerosis: A practical tool for multiple sclerosis clinics. Mult Scler 2010; 16: 1126–1133.

- 37. Silbert BS, Maruff P, Evered LA, et al. Detection of cognitive decline after coronary surgery: A comparison of computerized and conventional tests. Br J Anaesth 2004; 92: 814–820.

- 38. Dingwall K, Lewis M, Maruff P, et al. Reliability of repeated cognitive testing in healthy Indigenous Australian adolescents. Aust Psychol 2009; 44: 224–234.

- 39. Darby DG, Fredrickson J, Pietrzak RH, et al. Reliability and usability of an Internet-based computerized cognitive testing battery in community-dwelling older people. Comput Human Behav 2014; 30: 199–205.


Supplementary Materials

Supplemental Material for Monitoring cognitive change in multiple sclerosis using a computerized cognitive battery by L De Meijer, D Merlo, O Skibina, EJ Grobbee, J Gale, J Haartsen, P Maruff, D Darby, H Butzkueven* and A van der Walt* in Multiple Sclerosis Journal-Experimental, Translational and Clinical