Abstract

Objective

Querying electronic health records (EHRs) to find patients meeting study criteria is an efficient method of identifying potential study participants. We aimed to measure the effectiveness of EHR-driven recruitment in the context of ADAPTABLE (Aspirin Dosing: A Patient-centric Trial Assessing Benefits and Long-Term Effectiveness)—a pragmatic trial aiming to recruit 15 000 patients.

Materials and Methods

We compared the participant yield of 4 recruitment methods: in-clinic recruitment by a research coordinator, letters, direct email, and patient portal messages. Taken together, the latter 2 methods comprised our EHR-driven electronic recruitment workflow.

Results

The electronic recruitment workflow sent electronic messages to 12 254 recipients; 13.5% of these recipients visited the study website, and 4.2% enrolled in the study. Letters were sent to 427 recipients; 5.6% visited the study website, and 3.3% enrolled in the study. Coordinators approached 339 patients in clinic; 23.6% visited the study website, and 16.8% enrolled in the study. Of the 580 UNC enrollees, 509 (87.8%) were recruited using an electronic method.

Discussion

Electronic recruitment reached a wide pool of patients, recruited many participants to the study, and resulted in a workflow that can be reused for future studies. In-clinic recruitment saw the highest yield, suggesting that a combination of recruitment methods may be the best approach. Future work should account for the demographic skew that may result from recruiting from a pool of patient portal users.

Conclusion

The success of electronic recruitment for ADAPTABLE makes this workflow well worth incorporating into an overall recruitment strategy, particularly for a pragmatic trial.

Keywords: electronic health records, pragmatic clinical trials, research subject recruitment, patient portals

BACKGROUND AND SIGNIFICANCE

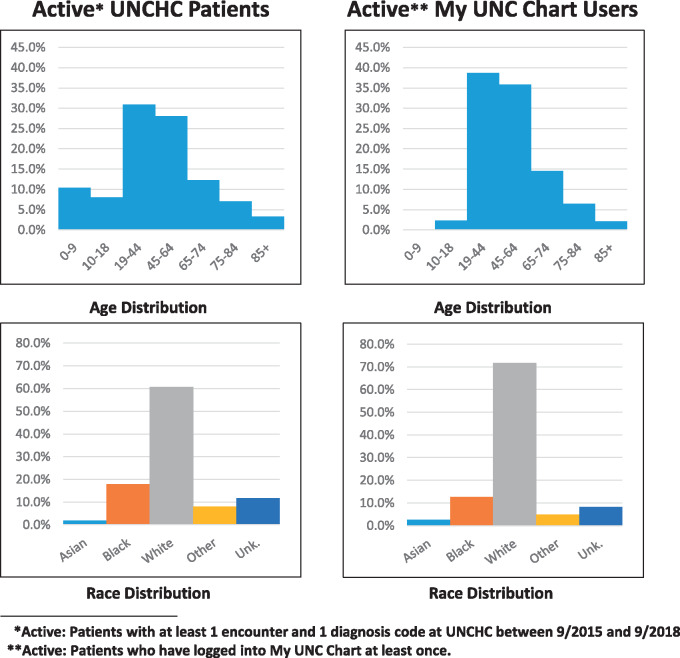

Querying electronic health records (EHRs) to find patients meeting a study’s criteria is an effective method of identifying potential study participants.1–5 Directly messaging those potential participants using the EHR is a newer practice,6,7 made possible by clinical data warehouses and patient portals. At University of North Carolina Health Care System (UNCHC), which uses the Epic™ EHR, the patient portal is branded as “My UNC Chart” (from Epic’s MyChart™). As of September 2018, My UNC Chart is actively used (logged into at least once) by 28% of UNCHC patients.

We explored a My UNC Chart and email-based recruitment strategy (hereafter “electronic recruitment”) in the context of ADAPTABLE,5 a national pragmatic trial using the PCORnet Clinical Data Research Network (CDRN). The study relies heavily on informatics methods: sites are recruiting 15 000 participants to an almost completely “virtual,” questionnaire-based study, aiming to compare the effectiveness of 2 different daily doses of aspirin to prevent heart attacks and strokes in at-risk patients. Once a patient agrees to participate, he or she answers screening questions, consents and enrolls using a web portal, and completes web-based questionnaires every 3 to 6 months.8

OBJECTIVE

However appealing the idea of electronic recruitment, the EHR is primarily intended to be a legal medical record, and it lacks many features (IRB integration, consent management, etc.) found in research-focused software such as a clinical trial management system. Thus, recruitment workflows generally cannot rely on the EHR alone to manage participants and data. For ADAPTABLE, we aimed to (1) devise an efficient and secure method of tracking a participant’s identity through several research systems, and (2) determine whether electronic recruitment is in fact an effective method of study recruitment.

MATERIALS AND METHODS

Cohort identification

As in all CDRN-driven studies, the process of identifying potential ADAPTABLE participants begins with a computable phenotype, or a data query that “use[s] EHR data exclusively to describe clinical characteristics, events, and service patterns for specific patient populations.”9

UNC’s computable phenotype queries the Carolina Data Warehouse for Health (CDWH), UNCHC’s enterprise data warehouse. Running this phenotype against the CDWH identified 27 964 patients who met the criteria.10 The relevant data for each of these patients were then extracted for use in the recruitment workflow. A key element in this extract was a flag denoting whether each patient had an active My UNC Chart account and/or an email address on file, which was the main factor in deciding how a patient was contacted by the study team.

The study team opted to use REDCap (Research Electronic Data Capture) to collect and manage study and recruitment data.11 The ADAPTABLE REDCap project, developed by Vanderbilt University Medical Center and shared broadly, allows patient contacts to be tracked in a comprehensive manner.

The CDWH extract was imported into REDCap, where each patient was preassigned a study ID and a “golden ticket” (a unique ADAPTABLE invitation code). Patients were divided into 4 groups based on initial contact method:

1. Patients scheduled for an appointment → recruited in clinic

2. Patients with an active My UNC Chart account → receive a My UNC Chart message

3. Patients without My UNC Chart accounts with a known email address → receive an email message

4. Patients without a My UNC Chart account or email address → receive a letter through U.S. mail

Taken together, groups 2 and 3 comprise the electronic recruitment workflow. Patients from groups 2 through 4 were also eligible to be recruited in clinic, should they have an appointment during the recruitment period.
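The grouping rule above can be sketched in a few lines of Python. This is an illustrative sketch only: the `Patient` fields and the `initial_contact_group` function are hypothetical names, since the layout of the CDWH extract is not given here, and the order of precedence (appointment first, then portal, then email, then letter) is an assumption read from the order of the four groups.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Patient:
    # Hypothetical fields standing in for flags from the CDWH extract.
    study_id: int
    has_upcoming_appointment: bool
    has_active_portal_account: bool
    email: Optional[str]


def initial_contact_group(p: Patient) -> int:
    """Return the group number (1-4) for a patient's initial contact method."""
    if p.has_upcoming_appointment:
        return 1  # recruited in clinic
    if p.has_active_portal_account:
        return 2  # My UNC Chart message
    if p.email:
        return 3  # direct email message
    return 4  # letter through U.S. mail


# Fictitious patients, one per group.
patients = [
    Patient(1, True, True, "a@example.org"),
    Patient(2, False, True, None),
    Patient(3, False, False, "c@example.org"),
    Patient(4, False, False, None),
]
groups = {p.study_id: initial_contact_group(p) for p in patients}
print(groups)
```

Groups 2 and 3 together correspond to the electronic recruitment workflow.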

Participant tracking

Ultimately, the patients identified in the CDWH must be tracked through all systems involved in recruitment so that their EHR data can eventually be merged with their study data. Though using the patient’s medical record number (MRN) as a consistent identifier is tempting, the need to transmit this identifier over the Internet in parts of the workflow makes it problematic from a security perspective.

We ultimately decided to use MRN as a cross-system identifier, but only after encrypting using the triple data encryption algorithm (TDEA).12 TDEA can be used for encryption within and outside of Epic, allowing us to generate the same encrypted MRN for each patient across each system in the workflow. Encrypting the MRN allows it to be safely transmitted in a URL between My UNC Chart and REDCap, maintaining a unique identifier while also preserving patient privacy.

However, even the encrypted MRN cannot be used as the only participant identifier, because it is meaningful only within UNCHC. For that reason, an identifier crosswalk is necessary. Before recruitment began, each patient’s MRN was encrypted using TDEA and stored in REDCap in a crosswalk table alongside the unencrypted MRN, preassigned golden ticket, and an internal REDCap ID (see Table 1).

Table 1.

Example crosswalk (fictitious data)

| REDCap ID | MRN | Encrypted MRN | Golden ticket |
|---|---|---|---|
| 1 | 000062537118 | A56FBH32341HN | 2881A |
| 2 | 000026637899 | RR62349JHNAK9 | 2882B |
| 3 | 000015534267 | T33GBAA72N780 | 2883C |
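To make the structure of the crosswalk concrete, the following Python sketch builds rows shaped like those in Table 1. It is not the study's implementation. In particular, the Python standard library has no TDEA cipher, so `pseudo_encrypt_mrn` is a loudly labeled stand-in (a keyed HMAC-SHA-256 token) that shares only one property with the approach described here: the same MRN and key always yield the same token. The key, function names, and row layout are all hypothetical.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-key-not-for-production"  # hypothetical key, for illustration only


def pseudo_encrypt_mrn(mrn: str, key: bytes = SECRET_KEY) -> str:
    """Stand-in for TDEA encryption: a deterministic keyed token.

    NOTE: this is HMAC-SHA-256, not TDEA, and it is not reversible.
    A real deployment would substitute the institution's TDEA routine.
    """
    digest = hmac.new(key, mrn.encode("ascii"), hashlib.sha256).hexdigest()
    return digest[:16].upper()


def build_crosswalk(mrns, golden_tickets, encrypt=pseudo_encrypt_mrn):
    """Return one row per patient: REDCap ID, MRN, encrypted MRN, golden ticket."""
    if len(mrns) != len(golden_tickets):
        raise ValueError("each MRN needs exactly one preassigned golden ticket")
    return [
        {
            "redcap_id": redcap_id,
            "mrn": mrn,
            "encrypted_mrn": encrypt(mrn),
            "golden_ticket": ticket,
        }
        for redcap_id, (mrn, ticket) in enumerate(zip(mrns, golden_tickets), start=1)
    ]


# Fictitious MRNs and golden tickets from Table 1.
crosswalk = build_crosswalk(
    ["000062537118", "000026637899", "000015534267"],
    ["2881A", "2882B", "2883C"],
)
for row in crosswalk:
    print(row)
```

Passing the encryption routine in as a parameter keeps the crosswalk logic independent of the cipher, so a TDEA implementation could be swapped in without changing the rest of the sketch.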

Study-eligible patients with active My UNC Chart accounts (group 2) received participation invitations in their My UNC Chart inbox. The invitation uses IRB-approved recruitment language and includes a link to a REDCap survey if the patient wishes to go further in the enrollment process. The same workflow applies to patients in group 3, but rather than receiving the initial message in My UNC Chart, they instead receive an email. If patients indicate interest on the survey, they are automatically taken to the ADAPTABLE website to enroll.

In all cases, it is necessary to know who is filling out the REDCap survey (to connect the patient invitation to their study enrollment). This was straightforward for group 3 (direct email), as the emails came from REDCap’s bulk email functionality. Group 2 (My UNC Chart) did not have this advantage, as messages were not sent by REDCap. To track the patient’s identity without transmitting the patient’s actual MRN in the REDCap URL, we inserted an Epic “SmartText” into the body of the My UNC Chart message. SmartText allows for dynamic insertion of text, links, or other features into form letters or other text fields in Epic. In this case, we use the SmartText to fetch each patient’s MRN, encrypt it using TDEA, and generate a unique REDCap link including the encrypted MRN. The actual MRN is never transmitted, maintaining patient privacy.
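The link-generation step can be illustrated with a short Python sketch. In the workflow described above this logic runs inside an Epic SmartText, which is not shown here; the sketch only demonstrates the idea of carrying the encrypted MRN, never the actual MRN, as a URL parameter. The base URL, the survey hash, and the `enc_mrn` parameter name are hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlparse

SURVEY_BASE_URL = "https://redcap.example.org/surveys/"  # hypothetical host


def build_survey_link(encrypted_mrn: str, survey_hash: str = "DEMOHASH") -> str:
    """Build a per-patient survey link that carries only the encrypted MRN."""
    # urlencode percent-escapes any characters that are unsafe in a URL.
    query = urlencode({"s": survey_hash, "enc_mrn": encrypted_mrn})
    return f"{SURVEY_BASE_URL}?{query}"


def read_encrypted_mrn(link: str) -> str:
    """Recover the encrypted MRN from a survey link on the receiving side."""
    return parse_qs(urlparse(link).query)["enc_mrn"][0]


link = build_survey_link("A56FBH32341HN")  # fictitious value from Table 1
print(link)
print(read_encrypted_mrn(link))
```

The receiving system can then look up the encrypted value in the crosswalk to connect the survey response to the invitation.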

Messaging

Epic has the ability to “bulk message” patients from a pre-defined list. Using the CDWH export, we pre-grouped patients into batches of 200 to 800. This staggered approach ensured continual contact with potential participants while not overwhelming study coordinators.
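A staggered send of this kind amounts to splitting the recipient list into fixed-size chunks. The Python sketch below assumes a batch size of 500, which is a hypothetical value chosen from within the 200 to 800 range; the function name and the use of integer IDs are also illustrative.

```python
from typing import Iterator, List, Sequence


def make_batches(recipient_ids: Sequence[int], batch_size: int) -> Iterator[List[int]]:
    """Yield consecutive batches of at most batch_size recipients."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(recipient_ids), batch_size):
        yield list(recipient_ids[start:start + batch_size])


# 8363 is the number of My UNC Chart recipients reported in Table 2.
portal_recipients = list(range(1, 8364))
batches = list(make_batches(portal_recipients, batch_size=500))
print(len(batches), len(batches[0]), len(batches[-1]))
```

With these numbers the final batch is smaller than the others, which a scheduler would need to allow for.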

Once a batch of messages is sent, most recipients receive an email notification that they have a message waiting in My UNC Chart. If a patient chooses to act on that email and log in to My UNC Chart, they will see a message that briefly describes the study and gives recipients the opportunity to click their unique survey link.

Because the patient’s identifiers are stored in a crosswalk table, any study participant can be traced back to his or her MRN at any point. This becomes necessary at the last step in the process, where lists of enrolled participants are sent back to sites from the coordinating center and are matched with patients in each site’s PCORnet datamart.
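The matching step at the end of the process is a lookup against the crosswalk. The sketch below assumes, for illustration, that the list returned by the coordinating center identifies enrollees by golden ticket; the identifier actually used is not specified here. Row layout and function name are hypothetical, and the data are the fictitious values from Table 1.

```python
from typing import Dict, List, Tuple


def match_enrollees(
    enrolled_tickets: List[str], crosswalk_rows: List[Dict[str, str]]
) -> Tuple[Dict[str, str], List[str]]:
    """Map enrolled golden tickets to MRNs; also return tickets with no match."""
    mrn_by_ticket = {row["golden_ticket"]: row["mrn"] for row in crosswalk_rows}
    matched = {t: mrn_by_ticket[t] for t in enrolled_tickets if t in mrn_by_ticket}
    unmatched = [t for t in enrolled_tickets if t not in mrn_by_ticket]
    return matched, unmatched


crosswalk_rows = [
    {"golden_ticket": "2881A", "mrn": "000062537118"},
    {"golden_ticket": "2882B", "mrn": "000026637899"},
    {"golden_ticket": "2883C", "mrn": "000015534267"},
]
matched, unmatched = match_enrollees(["2882B", "9999Z"], crosswalk_rows)
print(matched)
print(unmatched)
```

Returning unmatched identifiers separately makes it easy to flag entries that need manual review before the datamart merge.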

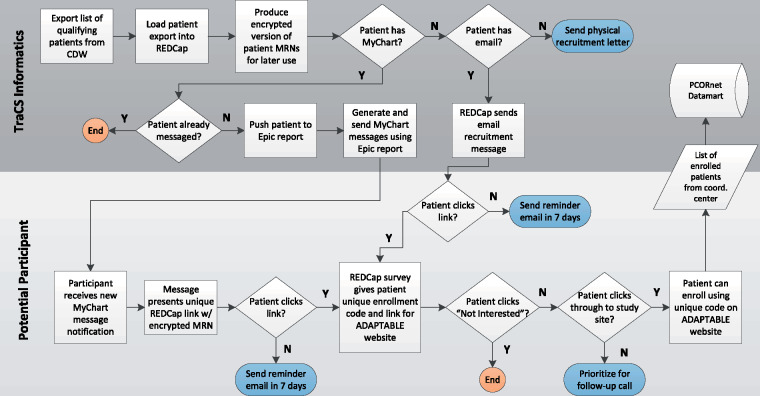

The full informatics workflow is summarized in Figure 1.

Figure 1.

The full ADAPTABLE informatics recruitment workflow.

RESULTS

The UNCHC patients recruited to ADAPTABLE as of May 2018 are tallied in Table 2.

Table 2.

ADAPTABLE recruitment through May 2018

| Initial method of contact | Approached (#) | Golden tickets entered (#) | Golden tickets entered (%) | Enrolled (#) | Enrolled (%) |
|---|---|---|---|---|---|
| In-clinic | 339 | 80 | 23.6% | 57 | 16.8% |
| Letter | 427 | 24 | 5.6% | 14 | 3.3% |
| Email | 3891 | 424 | 10.9% | 145 | 3.7% |
| My UNC Chart | 8363 | 1226 | 14.7% | 364 | 4.4% |
| All electronic (My UNC Chart + email) | 12254 | 1650 | 13.5% | 509 | 4.2% |

The percentage of golden tickets (unique invitation codes) entered is perhaps the best metric to differentiate the recruitment methods for ADAPTABLE, as not all participants who start the enrollment process will ultimately enroll for reasons not attributable to the recruitment method. As our main interest (from the informatics perspective) is driving traffic to the enrollment website, the count of golden tickets “used” on the site is perhaps more indicative of the effectiveness of these recruitment methods than the percent ultimately enrolled.

While in-clinic recruitment is the highest yield method for both golden tickets entered (23.6%) and participants enrolled (16.8%), it is also the most labor intensive, requiring coordinators to be present in the clinic. Moreover, the high-touch nature of in-person recruitment means that fewer patients can be approached. In comparison, letters (the traditional “low-touch” method) resulted in 5.6% of recipients accessing the enrollment site and 3.3% enrolling in the study.

Both electronic methods allow the study team to reach out to large numbers of patients at once, increasing the study’s chances of meeting recruitment goals faster. (Notably, 509 of the 580 UNC enrollees [87.8%] were recruited using an electronic method.) Moreover, when compared with letters, the electronic methods saw a higher percentage of recipients access the enrollment website: 13.5% for both methods combined. Enrollment among recipients of electronic messages was also higher than among letter recipients, at 4.2%.
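The percentages quoted in this section follow directly from the counts in Table 2, as the short Python check below illustrates. The variable and function names are illustrative. The email counts are those of the row that, added to the My UNC Chart row, gives the combined electronic totals (3891 + 8363 = 12254).

```python
# (approached, golden tickets entered, enrolled) per initial contact method, from Table 2.
recruitment = {
    "In-clinic": (339, 80, 57),
    "Letter": (427, 24, 14),
    "Email": (3891, 424, 145),
    "My UNC Chart": (8363, 1226, 364),
}


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place, as reported in Table 2."""
    return round(100 * part / whole, 1)


yields = {
    method: (pct(entered, approached), pct(enrolled, approached))
    for method, (approached, entered, enrolled) in recruitment.items()
}

# Combine the two electronic methods by summing their counts column by column.
electronic = tuple(
    e + m for e, m in zip(recruitment["Email"], recruitment["My UNC Chart"])
)
electronic_yield = (pct(electronic[1], electronic[0]), pct(electronic[2], electronic[0]))

total_enrolled = sum(enrolled for _, _, enrolled in recruitment.values())
electronic_share = pct(electronic[2], total_enrolled)

print(yields)
print(electronic, electronic_yield, total_enrolled, electronic_share)
```

Note that the combined electronic yield is computed from summed counts rather than by averaging the two per-method percentages, since the two groups differ in size.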

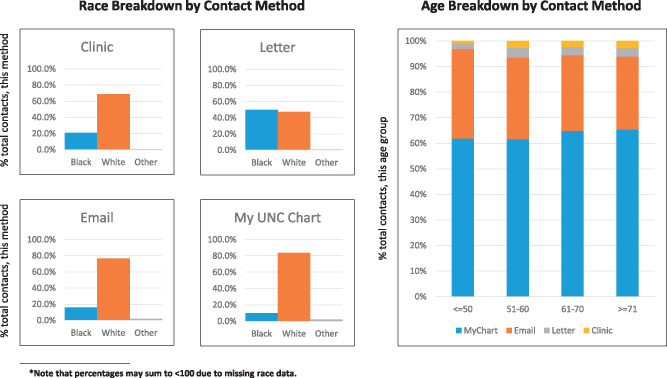

The contact methods differed in terms of the population reached. A full breakdown of contacted individuals by race and age is shown in Figure 2. Note also that only 28% of UNCHC patients are active My UNC Chart users, and the demographics of these users are different from the UNCHC population at large (Figure 3).

Figure 2.

Demographic breakdowns of the populations reached by each contact method.

Figure 3.

Comparing the demographics of all active UNCHC patients with the subset of patients who use My UNC Chart.

DISCUSSION

Our main finding is that electronic recruitment is feasible and effective for pragmatic trial recruitment. For ADAPTABLE, it was useful to use both My UNC Chart and email in our electronic workflow, as it enabled us to cast the widest net. Despite the effectiveness of this double-pronged approach, there are good arguments for using the portal and not email for other studies. If a trial is sensitive (eg, relating to substance abuse, sexually transmitted diseases, etc.) or it would not be possible to draft appropriately vague recruitment language, email is inappropriate from both a HIPAA standpoint and the patient’s perspective. In contrast, the patient portal is a secure method of transmission.

Depending on available resources, letters should also not be discounted as a recruitment method when security or privacy is a concern. The ADAPTABLE study relies heavily on the Internet, and a less web-focused study (requiring a less web-savvy participant population) may see different results from sending letters. Telephone- and text-message-based recruitment strategies would be interesting comparators in future work, though they were not undertaken in this investigation due to staff and time limitations.

Prior research has suggested that patient portal users are not demographically representative of the patient population as a whole (reinforced by Figures 2 and 3), in addition to portals presenting challenges to low socioeconomic status (SES) and low literacy patients.13–15 These factors likely also affect the makeup of the populations that receive recruitment messages through the patient portal and ultimately make the choice to enroll. Though we were not able to determine SES or literacy for the patients contacted for ADAPTABLE, Figures 2 and 3 indeed indicate that different methods of contact reach different populations. Based on this experience, while the portal can be used as an effective method of study recruitment, using it as the only method of recruitment may result in a skewed pool of participants. Ultimately, the choice of method should be made based on the target population of a given trial.

Our identity-tracking method proved to be an efficient way of accounting for participants at all stages in the recruitment process, while maintaining patient privacy. Due to the flexibility of REDCap and Epic’s ability to encrypt natively using TDEA, this method can be used in future studies with only a few tweaks, and can be easily shared.

Setting up this workflow required significant resources. The majority of this time commitment was spent designing the workflow, testing, and troubleshooting. Significant time was also spent working through governance, as any EHR programming is subject to several layers of approvals. Once set up, however, this infrastructure can be reused for new studies that wish to take advantage of electronic recruitment, significantly reducing the up-front costs over time. Other institutions wishing to replicate this workflow can download code and documentation from https://tracs.unc.edu/tracs-resources/sharehub/category/2-informatics.

CONCLUSION

Pragmatic trials are increasingly common, and demand new approaches to participant recruitment. Our experience with ADAPTABLE revealed that electronic recruitment is an effective low-touch recruitment method. Though electronic recruitment has lower yield than in-clinic recruitment, electronic recruitment’s ability to cast a much wider net makes this workflow well worth incorporating into a recruitment strategy, particularly for a pragmatic trial.

FUNDING

This work was supported by the National Center for Advancing Translational Sciences (NCATS), National Institutes of Health, through Grant Award Number UL1TR001111. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

This work was also partially funded through a Patient-Centered Outcomes Research Institute (PCORI) Award (ASP-1502-27079). The statements presented in this article are solely the responsibility of the authors and do not necessarily represent the views of the Patient-Centered Outcomes Research Institute (PCORI), its Board of Governors, or Methodology Committee.

Conflict of interest statement. None declared.

CONTRIBUTORS

Manuscript drafting: Pfaff, DeWalt

Project leadership: Pfaff, DeWalt, Knoepp, Thompson, Roumie

Programming and data analysis: Pfaff, Lee, Bradford, Pae, Potter, Thompson

Workflow design: Pfaff, Lee, Bradford, Pae, Potter, Blue, Knoepp, Thompson, Roumie, Crenshaw, Servis, DeWalt

Manuscript revisions and final approval: Pfaff, Lee, Bradford, Pae, Potter, Blue, Knoepp, Thompson, Roumie, Crenshaw, Servis, DeWalt

REFERENCES

1. Thadani SR, Weng C, Bigger JT, et al. Electronic screening improves efficiency in clinical trial recruitment. J Am Med Inform Assoc 2009; 16 (6): 869–73.

2. Miotto R, Weng C. Case-based reasoning using electronic health records efficiently identified eligible patients for clinical trials. J Am Med Inform Assoc 2015; 22 (e1): e141–50.

3. Jensen PB, Jensen LJ, Brunak S. Mining electronic health records: towards better research applications and clinical care. Nat Rev Genet 2012; 13 (6): 395–405.

4. Schmickl CN, Li M, Li G, et al. The accuracy and efficiency of electronic screening for recruitment into a clinical trial on COPD. Respir Med 2011; 105 (10): 1501–6.

5. Hernandez AF, Fleurence RL, Rothman RL. The ADAPTABLE trial and PCORnet: Shining light on a new research paradigm. Ann Intern Med 2015; 163 (8): 635–6.

6. Baucom RB, Ousley J, Poulose BK, et al. Case report: Patient portal versus telephone recruitment for a surgical research study. Appl Clin Inform 2014; 05 (04): 1005–14.

7. Marshall EA, Oates JC, Shoaibi A, et al. A population-based approach for implementing change from opt-out to opt-in research permissions. PLoS One 2017; 12 (4): e0168223.

8. Zimmerman LP, Goel S, Sathar S, et al. A novel patient recruitment strategy: Patient selection directly from the community through linkage to clinical data. Appl Clin Inform 2018; 09 (01): 114–21.

9. Richesson RL, Hammond WE, Nahm M, et al. Electronic health records based phenotyping in next-generation clinical trials: a perspective from the NIH Health Care Systems Collaboratory. J Am Med Inform Assoc 2013; 20 (e2): e226–31.

10. ClinicalTrials.gov [Internet]. Aspirin Dosing: A Patient-Centric Trial Assessing Benefits And Long-Term Effectiveness (ADAPTABLE) Identifier NCT02697916. Bethesda (MD): National Library of Medicine (US); 2016. https://clinicaltrials.gov/ct2/show/NCT02697916 Accessed June 29, 2017.

11. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009; 42 (2): 377–81.

12. Barker E, Mouha N. Recommendation for Triple Data Encryption Algorithm (TDEA) Block Cipher. Gaithersburg, MD: National Institute of Standards and Technology, U.S. Department of Commerce; 2017. http://csrc.nist.gov/publications/drafts/800-67r2/sp800-67r2-draft.pdf. Accessed October 22, 2018.

13. Bower JK, Bollinger CE, Foraker RE, et al. Active use of electronic health records (EHRs) and personal health records (PHRs) for epidemiologic research: sample representativeness and nonresponse bias in a study of women during pregnancy. eGEMs 2017; 5 (1): 1.

14. Czaja SJ, Zarcadoolas C, Vaughon WL, et al. The usability of electronic personal health record systems for an underserved adult population. Hum Factors 2015; 57 (3): 491–506.

15. Wallace LS, Angier H, Huguet N, et al. Patterns of electronic portal use among vulnerable patients in a nationwide practice-based research network: from the OCHIN practice-based research network (PBRN). J Am Board Fam Med 2016; 29 (5): 592–603.