Abstract

Background and Objectives

The number of people diagnosed with dementia is rising appreciably as the population ages. In an effort to improve outcomes, many have called for facilitating early detection of cognitive decline. Increased use of mobile technology by older adults provides the opportunity to deliver convenient, cost-effective assessments for earlier detection of cognitive impairment. This article presents a review of the literature on how mobile platforms—smartphones and tablets—are being used for cognitive assessment of older adults along with benefits and opportunities associated with using mobile platforms for cognitive assessment.

Research Design and Methods

We searched MEDLINE, Web of Science, PsycInfo, CINAHL, EMBASE, and Cochrane Central Register of Controlled Trials in October 2018. This search returned 7,024 articles. After removing 1,464 duplicates, we screened titles and abstracts then screened full-text for those articles meeting inclusion and exclusion criteria.

Results

Twenty-nine articles met our inclusion criteria and were categorized into 3 groups as follows: (a) mobile versions of existing paper-based or computerized neuropsychological tests; (b) new cognitive tests developed specifically for mobile platforms; and (c) the use of new types of data for cognitive assessment. This scoping review confirms the considerable potential of mobile assessment.

Discussion and Implications

Mobile technologies facilitate repeated and continuous assessment and support unobtrusive collection of auxiliary behavioral markers of cognitive impairment, thus allowing users to view trends and detect acute changes that have traditionally been difficult to identify. Opportunities include using new mobile sensors and wearable devices, improving reliability and validity of mobile assessments, determining appropriate clinical use of mobile assessment information, and incorporating person-centered assessment principles and digital phenotyping.

Keywords: Alzheimer’s disease, Cognition, Dementia, Information technology, Memory, mHealth, Psychometrics

Translational Significance

This article highlights the innovative potential of mobile platforms to advance cognitive assessment and promote earlier detection of cognitive decline. Mobile technologies facilitate repeated and continuous assessments and enable unobtrusive collection of new types of data, facilitating detection of trends that have been difficult to identify up to now.

The number of people living with dementia worldwide is about 47 million, and this number is estimated to almost triple by 2050 (WHO, 2017). In the United States, the number of people with Alzheimer’s dementia (AD) is about 5.5 million, or roughly one in 10 adults over age 65 (Alzheimer’s Association, 2017). The main argument of a 2015 Gerontological Society of America (GSA) report on cognitive decline and early diagnosis is that cognitive impairment is grossly underdiagnosed in the United States, leading to poor health outcomes for people with cognitive impairment and their carers (Gerontological Society of America, 2015).

Early detection and intervention can help patients retain and even improve cognitive functioning (Laske et al., 2015; Rodakowski, Saghafi, Butters, & Skidmore, 2015), but cognitive decline is difficult to notice until daily functioning is disrupted (Lyons et al., 2015). Many innovative cognitive assessment methods, such as cerebrospinal fluid analysis, neuroimaging, and laboratory tests, are invasive, time-consuming, and expensive (Laske et al., 2015), hindering screening in primary care or community settings. More efficient, cost-effective solutions could lower barriers to testing.

As the number of older adults grows, they are also increasingly using technology. Forty-six percent of adults aged 65 and older own smartphones (Pew Research Center, 2018) and 67% use the Internet (Anderson & Perrin, 2017). Combined with the need for early detection, the high rate of smartphone ownership and Internet use has led some to investigate using mobile devices for convenient, cost-effective screening for and monitoring of cognitive impairment. Furthermore, novel applications and sensors implemented on mobile platforms allow us to leverage new streams of data, such as GPS information, to identify markers sensitive to preclinical or early clinical stages of dementia.

Much research has investigated new approaches for the detection of the preclinical stages of Alzheimer’s disease focusing on the development of new neuropsychometric, clinical, laboratory, and neurophysiological tests (Laske et al., 2015; Rentz et al., 2013), or computerized test batteries implemented in personal computers, laptops, or tablets (Zygouris & Tsolaki, 2015). All of these approaches carry heavy time and financial burdens for the person being assessed and tax already limited testing resources. However, advances in mobile technologies can allow older adults to be more engaged in cognitive screening and monitoring inside and outside the clinic, giving them the opportunity for better health and the continued independence they desire (Morris, Intille, & Beaudin, 2005). Mobile technology also facilitates both norm-based and individualized features called for in the person-centered care philosophy (Molony, Kolanowski, Van Haitsma, & Rooney, 2018). Therefore, the aim of this article is to identify trends in the published research and directions for future research by reviewing the literature on the use of smartphone and tablet mobile platforms for cognitive assessment of older adults.

Research Design and Methods

We conducted a scoping review to perform conceptual mapping of prior literature using mobile technology for cognitive assessment. Scoping reviews summarize and disseminate research findings to identify gaps in research and to make suggestions for future research (Peters et al., 2015). The method and procedure for this study were based on the guidelines suggested by Arksey and O’Malley (2005) and Peters and colleagues (2015). The procedure started with a systematic search of published literature. We then analyzed selected articles for key elements of mobile cognitive assessment and categorized them by level of innovation.

Data Sources and Search Strategies

In October 2018, we searched MEDLINE, Web of Science, PsycInfo, CINAHL, EMBASE, and the Cochrane Central Register of Controlled Trials (CENTRAL) using search terms including mobile devices, mobile application, smartphone, tablet, mild cognitive impairment (MCI), Alzheimer’s, dementia, and older adults. Search strings for each database are in Supplementary Appendix A.

Study Selection

This review included research-based articles related to the development of cognitive assessments for detecting MCI, Alzheimer’s, or related dementias in adults over age 50 using mobile platforms, including PDA, mobile phone, smartphone, and tablet platforms. Articles were required to be English language full-text articles published in academic journals or presented at international conferences. The review excluded studies that used cognitive assessments that did not detect MCI, Alzheimer’s, or related dementias, systematic reviews, literature reviews, or articles lacking results (i.e., those describing only a study protocol or a system design without evaluation). We also excluded studies of telemedicine, tele-psychiatry, telecare, or mobile integrated health care.

Procedures

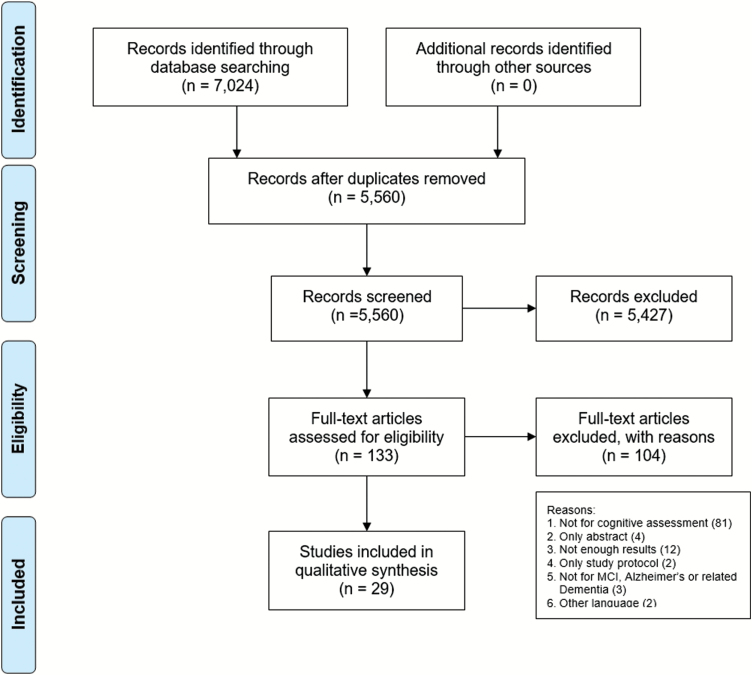

The initial search yielded 7,024 results: 1,132 from MEDLINE, 1,760 from the Web of Science, 646 from PsycInfo, 211 from CINAHL, 3,255 from EMBASE, and 20 from CENTRAL. After removing 1,464 duplicates, both authors (B. M. Koo and L. Vizer) screened titles and abstracts against our inclusion and exclusion criteria using Covidence software. Next, we screened the full text of the remaining 133 articles. Discrepancies were resolved through consensus. After the full-text screening, 29 articles met our inclusion criteria (Figure 1).

Figure 1.

PRISMA flow diagram.
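The screening counts reported above can be cross-checked with a few lines of arithmetic. The Python sketch below uses only figures stated in the text. The derived exclusion counts are not reported in the article and rest on the assumption that every non-duplicate record was screened at the title and abstract stage.

```python
# Record counts per database, as reported in the Procedures paragraph.
records_by_database = {
    "MEDLINE": 1132,
    "Web of Science": 1760,
    "PsycInfo": 646,
    "CINAHL": 211,
    "EMBASE": 3255,
    "CENTRAL": 20,
}
duplicates_removed = 1464
full_text_screened = 133
included = 29

# Total identified should match the reported 7,024 results.
total_identified = sum(records_by_database.values())

# Derived values (assumption: all non-duplicates were screened by title/abstract).
title_abstract_screened = total_identified - duplicates_removed
excluded_at_title_abstract = title_abstract_screened - full_text_screened
excluded_at_full_text = full_text_screened - included

print(total_identified)            # 7024
print(title_abstract_screened)     # 5560
print(excluded_at_title_abstract)  # 5427
print(excluded_at_full_text)       # 104
```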

Results

We first examined general trends in publications over time, types of mobile platforms studied, and study completion rates. We then grouped the publications by the level of innovation and described their characteristics.

Publications per Year

The number of articles per year on using mobile platforms for cognitive assessment has increased substantially over time. The year 2017 showed a 60% increase in publications over 2016 and 100% over any year before that (Figure 2).

Figure 2.

Articles per year on mobile devices for cognitive assessment.

Mobile Platforms Studied

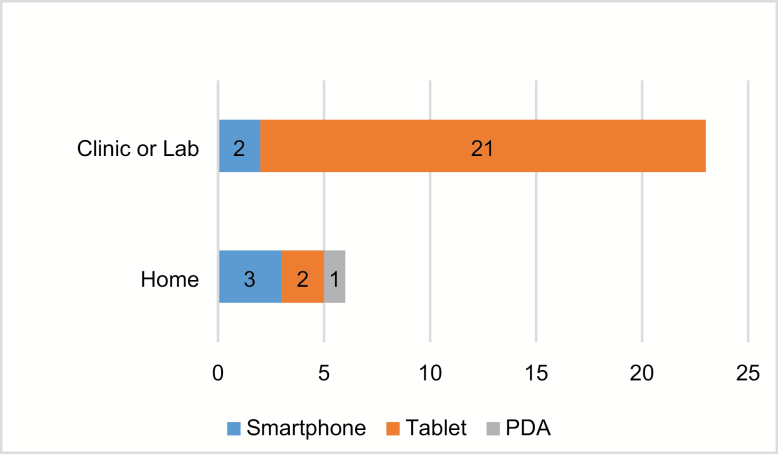

Articles examining tablet computers versus other mobile platforms predominated, but we found clear preferences depending on the research setting (Figure 3). For research in clinic venues, articles focused largely on tablet computers, while for research in home settings, smartphones were preferred.

Figure 3.

Mobile device platforms used per research venue.

Test Completion Rates

To examine the feasibility of cognitive assessment using mobile devices with an older adult population, we analyzed study completion rates for the six articles in which it was reported (Table 1). In two articles (Scanlon et al., 2016; Tong et al., 2016), researchers performed single in-clinic assessments using touchscreen tablets. Patients with mild to moderate dementia and patients in a hospital emergency department exhibited a test completion rate of at least 85%, but the controlled settings may have inflated the rate of test completion. However, four studies (Allard et al., 2014; Jongstra et al., 2017; Lange & Suss, 2014; Rentz et al., 2016), varying in test length and frequency, showed good completion rates for self-administration at home by older adults with normal cognition. Tests completed once per day showed full completion rates of around 60% (Jongstra et al., 2017; Rentz et al., 2016), but one study revealed that of those not completing every assessment, almost all missed only a single day and no participants missed more than 2 days (Rentz et al., 2016). In studies with cognitive assessments completed several times per day, completion rates were over 77% (Allard et al., 2014; Lange & Suss, 2014). These results confirm that older adults with normal cognition are able to complete an ambulatory assessment with mobile devices.

Table 1.

Study Completion Rates

| Study | Population | Device/venue | Frequency | Completion rates |
|---|---|---|---|---|
| Scanlon, O’Shea, O’Caoimh, and Timmons (2016) | 40 Dementia (>50 years old) | Tablet/clinic | Single test | 85% |
| Tong, Chignell, Tierney, and Lee (2016) | 146 Patients (>70 years old) | Tablet/clinic | Single test | 96.6% |
| Jongstra and colleagues (2017) | 151 Healthy (>50 years old) | Smartphone/home | Once every 1 to 14 days for 6 months | 60% |
| Rentz and colleagues (2016) | 49 Healthy (>60 years old) | Tablet/home | Once per day for 5 days | 5 days = 57%; ≥4 days = 98%; ≥3 days = 100% |
| Allard and colleagues (2014) | 60 Healthy (>65 years old) | PDA/home | Several times per day for 1 week | 79.5% |
| Lange and Suss (2014) | 91 Healthy (>60 years old) | Smartphone/home | Several times per day for 1 week | 77% |

Innovations in Mobile Cognitive Assessment

We then categorized the 29 articles into three groups based on the level of innovation: (a) mobile versions of existing neuropsychological tests, (b) new cognitive tests developed specifically for mobile platforms, and (c) the use of new data streams for cognitive assessment. See summary of studies in Table 2 and full details in Supplementary Appendix B.

Table 2.

Summary of Studies Included in Review

| Category | Author (year) | Device/venue | Population | Assessment methods |
|---|---|---|---|---|
| Mobile versions of existing tests | Berg and colleagues (2018) | Tablet/clinic | MCI, D, SCI | eMoCA |
| | Mielke and colleagues (2016) | Tablet/clinic | HC, MCI | iPad version of CogState Battery |
| | Ruggeri, Maguire, Andrews, Martin, and Menon (2016) | Tablet/lab | HC | The digital version of SLUMS (CUPDE) |
| | Scharre, Chang, Nagaraja, Vrettos, and Bornstein (2017) | Tablet/lab | HC, MCI, D | The digital version of SAGE (eSAGE) |
| | Wu and colleagues (2015) | Tablet/lab | HC | A cancellation test (e-CT) |
| | Wu and colleagues (2017) | Tablet/lab | HC, MCI, AD | A cancellation test (e-CT) |
| New tests for mobile devices: screening tests | Brouillette and colleagues (2013) | Smartphone/lab | HC | CST: color and shape matching |
| | Onoda and colleagues (2013) | Tablet/lab | HC, D | CADi: 10 items of neuropsychological tests |
| | Onoda and Yamaguchi (2014) | Tablet/lab | HC, AD | Revised version of the CADi (CADi2) |
| | Possin and colleagues (2018) | Tablet/clinic | HC, MCI, D | The Brain Health Assessment (BHA) |
| | Scanlon and colleagues (2016) | Tablet/lab | HC, D | CCS: symbol matching, memory, and object matching tasks |
| Test batteries | Freedman and colleagues (2018) | Tablet/clinic | HC, MCI | Toronto Cognitive Assessment (TorCA) |
| | Kokubo and colleagues (2018) | Tablet/clinic | HC, MCI, D, PD | The User eXperience-Trail Making Test (UX-TMT) |
| | Makizako and colleagues (2013) | Tablet/lab | HC | NCGG-FAT: 8 neuropsychological tasks |
| | Rentz and colleagues (2016) | Tablet/home | HC | C3-PAD: home cognitive test on iPad |
| | Zorluoglu, Kamasak, Tavacioglu, and Ozanar (2015) | Tablet/lab | HC, D | MCS: 33 questions from 14 standard tests |
| Repeated administration | Allard and colleagues (2014) | PDA/home | HC | Brief semantic memory tests, questions about daily life |
| | Jongstra and colleagues (2017) | Smartphone/home | HC | iVitality app: five neuropsychological tests |
| | Lange and Suss (2014) | Smartphone/home | HC | eKFA: questions assessing the number of slips or lapses |
| New data streams: new data streams from conventional tests | Dahmen, Cook, Fellows, and Schmitter-Edgecombe (2017) | Tablet/lab | HC, MCI, PD | dTMT: digital version of the Trail Making Test |
| | Fellows, Dahmen, Cook, and Schmitter-Edgecombe (2017) | Tablet/lab | HC, MCI | dTMT: digital version of the Trail Making Test |
| | Muller, Preische, Heymann, Elbing, and Laske (2017) | Tablet/lab | HC, MCI, D | Drawing a three-dimensional picture of a house |
| Game performance | Thompson, Barrett, Patterson, and Craig (2012) | Smartphone/lab | Older adults | Smartphone-based games: word, number, and picture games |
| | Tong and colleagues (2016) | Tablet/clinic | Older adults | Serious game in the emergency department |
| GPS data | Tung and colleagues (2014) | Smartphone/home | HC, AD | VALMA measuring global movement in daily life |
| VR activities | Ip and colleagues (2017) | Tablet/lab | HC, MCI, D | Simulation-Based Assessment of Cognition (SIMBAC) |
| | Zygouris and colleagues (2017) | Tablet/home | HC, MCI | Monitoring the longitudinal performance of VSM training |
| Speech changes | Konig and colleagues (2018) | Tablet/clinic | SCI, MCI, D, AD | Automatic Speech Analysis (ASA) |
| Physical movement changes | Suzumura and colleagues (2018) | Tablet/clinic | HC, MCI, AD | Finger dexterity analysis |

Note. HC = healthy control; MCI = mild cognitive impairment; AD = Alzheimer’s disease; D = dementia; SCI = subjective cognitive impairment; PD = Parkinson’s disease.

Mobile versions of existing tests

Six articles described translating paper-based or personal computer (PC)-based neuropsychological tests to mobile platforms. Five articles focused on converting paper-based tests (Berg et al., 2018; Ruggeri et al., 2016; Scharre et al., 2017; Wu et al., 2015, 2017) and one investigated converting a PC-based test (Mielke et al., 2015).

The eSAGE is the mobile version of the paper-based Self-Administered Gerocognitive Examination (SAGE). The eSAGE contains the same questions as the original SAGE, assessing orientation, language, memory, executive function, calculation, abstraction, and visuospatial domains. People used their fingers to draw or type answers on the tablet with no time limit. Answers, response time, and number of erasures were recorded. Most questions were scored by the eSAGE software, but trained assessors scored drawings. Results showed that the eSAGE was highly correlated with the validated SAGE and other neuropsychological tests (Scharre et al., 2017).

The e-CT is the mobile version of the K-T paper-based cancellation test, consisting of two blocks of stimuli composed of 30 symbols displayed on a tablet touchscreen. Participants tapped symbols in the left-side block if they did not match the corresponding symbol in the right-side block. A maximum of seven pages of stimuli were available for review within the 2-min time limit. The numbers of correct cancellations, omission errors, and commission errors were calculated. The e-CT score was significantly correlated with Trail Making Test Part B (TMT-B), phonemic verbal fluency, and categorical verbal fluency scores (Wu et al., 2015). Furthermore, the e-CT had good diagnostic accuracy in differentiating people with MCI or AD from cognitively healthy older adults. However, age, education, and daily use of technology were shown to influence the e-CT score (Wu et al., 2015, 2017).
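As an illustration of how the three e-CT counts relate to one another, the sketch below scores a single page of a cancellation task with set operations. It is not the scoring code used by Wu and colleagues. The function name `score_cancellation` and the representation of symbols as position indices are assumptions made for this example.

```python
def score_cancellation(targets, taps):
    """Score one page of a cancellation task (hypothetical helper).

    targets: positions that should be tapped (non-matching symbols).
    taps:    positions the participant actually tapped.
    """
    targets, taps = set(targets), set(taps)
    return {
        "correct": len(targets & taps),      # targets that were tapped
        "omissions": len(targets - taps),    # targets that were missed
        "commissions": len(taps - targets),  # taps on non-target symbols
    }


# Illustrative data only: four targets, three taps, one of them wrong.
example = score_cancellation(targets={2, 5, 9, 14}, taps={2, 5, 7})
print(example)  # {'correct': 2, 'omissions': 2, 'commissions': 1}
```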

The Cambridge University Pen to Digital Equivalence assessment (CUPDE) is the mobile version of the Saint Louis University Mental State Examination (SLUMS) and consists of 11 items (Ruggeri et al., 2016). The SLUMS examination is administered orally while the CUPDE presents items via text and audio. Ruggeri and colleagues (2016) found significant differences between SLUMS and CUPDE in composite and individual item scores and showed the necessity of new normative standards when translating traditional tests to a computerized, mobile platform.

Mielke and colleagues (2015) compared performance on PC and iPad versions of the CogState battery. The CogState PC battery consists of a detection task, an identification task, a one card learning task, a one back task, and the Groton Maze Learning Test. As the PC CogState battery is digital, the same questions were delivered via the iPad, with different modes of interaction: keyboard and mouse for the PC, and touchscreen and stylus for the iPad. Participants completed the PC and iPad versions of CogState with a 2- to 3-min break between the two. Mielke and colleagues (2015) found that speed and accuracy were better on the PC and that scores differed between the PC and iPad. However, participants over age 50 preferred the iPad to the PC (Mielke et al., 2015).

eMoCA is an electronic version of the standard paper-based Montreal Cognitive Assessment (MoCA; Berg et al., 2018). eMoCA consists of 12 tasks administered on a tablet with a stylus. Results showed that the MoCA and eMoCA scores did not differ significantly for study participants with primary memory complaints such as Alzheimer’s disease, dementia, MCI, or subjective cognitive impairment. The authors reported no significant difference in the mean scores on the trail making, cube copy, and clock drawing tests between administrations.

New tests for mobile devices

Thirteen articles described new tests that took advantage of the mobile platform. Five discussed short tests for mass screening (Brouillette et al., 2013; Onoda et al., 2013; Onoda & Yamaguchi, 2014; Possin et al., 2018; Scanlon et al., 2016), five discussed test batteries covering several domains of cognitive function (Freedman et al., 2018; Kokubo et al., 2018; Makizako et al., 2013; Rentz et al., 2016; Zorluoglu et al., 2015), and three described tests designed for repeated administration (Allard et al., 2014; Jongstra et al., 2017; Lange & Suss, 2014). Screening tests require a relatively short time to complete and focus on general cognitive function or a few specific domains known to change in the early stages of cognitive decline. Test batteries are longer and assess both overall cognition and specific domains. Repeated administration of a test (a design also known as a measurement burst; Sliwinski, 2008) results in a longitudinal set of results that enable continuous monitoring for detection of cognitive decline.

Screening tests

The Cognitive Assessment for Dementia, iPad version (CADi) was developed for mass screening for dementia. The CADi consists of 10 items, including immediate recognition, semantic memory, categorization, subtraction, repeating backward, cube rotation, pyramid rotation, trail making A & B, and delayed recognition tests. The CADi (Onoda et al., 2013) takes about 10 min and is self-administered using an iPad. Questions and instructions are presented as text on the screen or explained via audio with headphones. The application records the number of correct answers and the total response time. CADi2 (Onoda & Yamaguchi, 2014) is an improved version of CADi with better sensitivity for discriminating between normal cognition and dementia.

The smartphone-based Color-Shape Test (CST) assesses cognitive processing speed and attention (Brouillette et al., 2013). Participants matched color and shape according to a legend showing paired colors and shapes at the top of screen by touching the color pad at the bottom of screen. The application recorded the number of attempts and the number of correct answers. CST scores were associated with scores on the Mini-Mental State Exam (MMSE) and other speed and attention tests, showing the possibility of using smartphones for cognitive assessment in older adults (Brouillette et al., 2013).

Scanlon and colleagues (2016) investigated the usability and validity of free, commercially available tests for detecting cognitive impairment in a community setting. The computerized cognitive screening (CCS) consists of a symbol matching task, a memory task, and an object matching task used to assess concentration, memory, and visuospatial functioning. Participants completed each task in 1 min using a tablet with a touchscreen. Authors found a significant correlation between CCS scores and Montreal Cognitive Assessment (MoCA) scores and no difference in CCS scores between people with and without computer experience (Scanlon et al., 2016).

The Brain Health Assessment (BHA) is a 10-min tablet-based cognitive assessment to detect mild cognitive impairment and dementia. The BHA includes an informant survey and four subtests: Favorites, Matching, Line Orientation, and Animal Fluency. The informant survey of the participant’s functional decline and changes in cognition and behavior was included to improve diagnosis. When compared with the MoCA, Possin and colleagues (2018) found that the BHA demonstrated higher accuracy in detecting mild cognitive decline and similar accuracy in detecting dementia.

Test batteries

The Mobile Cognitive Screening (MCS) is a mobile neuropsychological test. This test battery consists of 33 questions from 14 types of test that assess eight cognitive domains: arithmetic, orientation, abstraction, attention, memory, language, visual, and executive function. All test questions were modified for a mobile platform. Participants clicked or dragged pictures, numbers, and letters on the touchscreen of a tablet. The MCS app calculated a score by counting the number of correct answers and provided immediate test results visualized as a radar chart. MCS scores were significantly correlated with MoCA scores (Zorluoglu et al., 2015).

Rentz and colleagues (2016) developed a Computerized Cognitive Composite for Preclinical Alzheimer’s Disease (C3-PAD) to assess episodic memory and working memory. The study investigated the feasibility and reliability of at-home self-administration by comparing scores on two in-clinic C3-PADs and five at-home C3-PADs. Analysis demonstrated a significant association between the in-clinic tests and the at-home tests, suggesting that home-based cognitive assessment with mobile devices is feasible if enough training is provided (Rentz et al., 2016).

The National Center for Geriatrics and Gerontology function assessment tool (NCGG-FAT) consists of eight tasks used to assess memory, attention, processing speed, visuospatial, and executive function (Makizako et al., 2013). Participants had 30 min to complete the test battery using a tablet and digital pen. The study showed high test–retest reliability and high validity in comparison with conventional neurocognitive tests, suggesting that the NCGG-FAT may be useful in assessing cognition in population-based samples (Makizako et al., 2013).

Freedman and colleagues (2018) developed the Toronto Cognitive Assessment (TorCA) with the aim of producing a test that is more comprehensive than screening tests but shorter than a neuropsychological battery. The TorCA consists of 27 subtests to evaluate multiple cognitive domains and is administered on paper or on an iPad with each mode using the same questions. The TorCA demonstrated statistically significant ability to differentiate between MCI and normal cognition. There was no significant difference between the scores obtained using the two administration modes, but the authors discussed the automated test scoring and graphical representation of the test results as advantages of the iPad version.

The User eXperience-Trail Making Test (UX-TMT) was developed for cognitive assessment and training (Kokubo et al., 2018). The UX-TMT consists of neurocognitive assessment, cognitive training, and life-logging. The neurocognitive assessment includes a modified Trail Making Test, a 1-back task, Stroop and reverse-Stroop tasks, and a verbal memory task. Participants also recorded mood, physical condition, and sleepiness on a Likert scale. The UX-TMT showed high sensitivity and specificity, which were more pronounced when the analysis took participant age into account.

Tests for repeated administration

The iVitality smartphone app contains five neuropsychological tests adapted from existing paper-based tests. Jongstra and colleagues (2017) investigated the feasibility and validity of delivering the tests via smartphone app every 2 weeks for 6 months. Participants completed each test four times. The iVitality smartphone app prompts users to complete tests, collects the test results, provides results to the user, and transfers the data to the iVitality website and database. Analysis showed that the smartphone-based Stroop and Trail Making Test (TMT) were moderately correlated with the paper-based Stroop and TMT and that repeated testing demonstrated learning effects for the Stroop and TMT (Jongstra et al., 2017).

To examine how cognitive performance impacts daily life, Allard and colleagues (2014) used a PDA to deliver repeated assessments consisting of brief semantic memory tests and questions about daily life experiences, including activities, behaviors, and locations. Participants completed each assessment within 2 min of an alert and repeated the tests five times a day over 1 week. Daily life experiences were categorized into six topics: chores, socializing, general sustenance or hygiene activities, physical activities, passive leisure, and intellectual activities. This study demonstrated not only high compliance rates, but also an association between cognitive performance and intellectually stimulating activities (Allard et al., 2014).

The Electronic Questionnaire of Cognitive Failures in Everyday Life (eKFA) is a mobile application based on the Questionnaire for Cognitive Failures in Everyday Life (KFA) which assesses slips and lapses (Lange & Suss, 2014). The eKFA assesses the number of slips or lapses within the prior 2 hr, allowing for more accurate reporting. The eKFA application prompted participants to complete the questionnaire four times a day for 1 week. Participants could also manually record a cognitive failure outside of the prompted reports. The authors showed that eKFA results were not influenced by educational or knowledge level.

New data streams for cognitive assessment

Ten articles described new data types leveraged for assessment. Three articles discussed the use of new data streams unobtainable from paper-based tests (Dahmen et al., 2017; Fellows et al., 2017; Muller et al., 2017). We also reviewed research that used game performance metrics (Thompson et al., 2012; Tong et al., 2016), GPS data (Tung et al., 2014), Virtual Reality activities (Ip et al., 2017; Zygouris et al., 2017), speech changes (Konig et al., 2018), and physical movement changes (Suzumura et al., 2018).

New data streams from conventional tests

A digital version of the Trail Making Test (dTMT) consists of two tests similar to the paper-based TMT (pTMT): Part A (connecting circles in numbered sequence) and Part B (connecting circles with numbers and letters in ordered sequence; Dahmen et al., 2017; Fellows et al., 2017). The dTMT showed convergent validity with the pTMT, but there were some differences based on administration mode and data collected, such as the use of a stylus versus paper and pencil. The dTMT collects auxiliary performance data not possible with the pTMT, including pause number and duration, lift number and duration, rate and time between circles, and rate and time inside circles, in addition to the standard completion time and number of errors. These performance data are used to predict the pTMT and dTMT time-to-completion scores. Analysis showed significant correlation between predicted performance from the dTMT and clinically measured performance on the dTMT and pTMT (Dahmen et al., 2017) as well as significant correlation between actual pTMT and dTMT scores on Parts A and B (Fellows et al., 2017).
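To make the auxiliary dTMT measures more concrete, the following sketch derives lift and pause counts and durations from a stream of timestamped stylus samples. It is a minimal illustration, not the feature extraction used by Dahmen and colleagues. The `Sample` structure, the 100 ms minimum pause, and the 1-pixel movement tolerance are all assumed values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t_ms: int       # time since test start, in milliseconds
    x: float        # stylus position in pixels (hypothetical units)
    y: float
    pen_down: bool  # True while the stylus touches the screen


def lift_stats(samples):
    """Return (number of lifts, total lift time in ms).

    Each interval between consecutive samples is attributed to the
    state of the earlier sample.
    """
    count, total, in_lift = 0, 0, False
    for prev, cur in zip(samples, samples[1:]):
        if not prev.pen_down:
            total += cur.t_ms - prev.t_ms
            if not in_lift:
                count += 1
            in_lift = True
        else:
            in_lift = False
    return count, total


def pause_stats(samples, max_move_px=1.0, min_pause_ms=100):
    """Return (number of pauses, total pause time in ms).

    A pause is a run of pen-down intervals with almost no movement
    that lasts at least min_pause_ms (assumed thresholds).
    """
    count, total, run = 0, 0, 0
    for prev, cur in zip(samples, samples[1:]):
        moved = ((cur.x - prev.x) ** 2 + (cur.y - prev.y) ** 2) ** 0.5
        if prev.pen_down and cur.pen_down and moved <= max_move_px:
            run += cur.t_ms - prev.t_ms
        else:
            if run >= min_pause_ms:
                count += 1
                total += run
            run = 0
    if run >= min_pause_ms:  # close a pause that lasts to the final sample
        count += 1
        total += run
    return count, total


# Illustrative trace: one 300 ms pause, then one 400 ms lift.
trace = [
    Sample(0, 0, 0, True),
    Sample(100, 10, 0, True),
    Sample(200, 10, 0, True),
    Sample(400, 10, 0, True),
    Sample(500, 20, 0, False),
    Sample(800, 30, 0, False),
    Sample(900, 30, 0, True),
    Sample(1000, 40, 0, True),
]
print(lift_stats(trace))   # (1, 400)
print(pause_stats(trace))  # (1, 300)
```

Features of this kind could then serve as inputs to a model that predicts completion time, which is the role the text describes for the auxiliary data.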

Muller and colleagues (2017) used a tablet with stylus to measure novel performance features on handwriting and drawing tasks. A mobile application measured the time that the pen was touching the surface of the tablet (Time on Surface) and the time it was off the surface (Time in Air) to assess differences in fine motor control and coordination. The study compared patients with early dementia, patients with MCI, and healthy individuals on their performance copying a three-dimensional picture of a house. The novel performance features recorded during the digital drawing task enabled more precise assessment of visuo-constructive abilities, which could be used for early detection of dementia (Muller et al., 2017).

Game performance

Another avenue for unobtrusively assessing cognitive ability is through instrumented games. To assess the feasibility and validity of this idea, Thompson and colleagues (2012) investigated the association between traditional neuropsychological test scores and performance on a word game, a number game, and a picture game. The study found significant correlations between performance on the picture game and visual memory test scores, performance on the word game and verbal IQ and verbal learning test scores, and performance on the number game and reasoning problem-solving scores. The results suggest that smartphone-based puzzle games could be used to accurately assess and monitor cognitive function in older adults (Thompson et al., 2012).

Rather than instrumenting existing games, some researchers are developing new serious games—whose purpose goes beyond entertainment—to increase engagement while testing performance or delivering important content. Tong and colleagues (2016) designed a mobile game to screen cognition in the emergency department. They found that most patients (96.6%) successfully completed the game and performance was significantly correlated with scores on the MoCA, MMSE, and Confusion Assessment Method (CAM; Tong et al., 2016).

GPS data

A person’s “life space”—the geographic area and perimeter a person covers in daily life—is an indicator of physical and cognitive function. To reduce recall bias related to interviews or self-report instruments, which is of particular concern in people with cognitive impairment, researchers explored GPS technologies to objectively measure global movement in daily life. Tung and colleagues (2014) developed a smartphone-based ambulatory wearable sensor system called Voice, Activity, and Location Monitoring For Alzheimer’s Disease (VALMA) to collect life space movement data and determine which GPS-measured behavioral indicators were related to cognitive decline. They found that the area and perimeter a person covers and average distance from home distinguished between people with dementia and the healthy control group. In addition, the area and perimeter were significantly correlated with physical function as measured by steps per day, the Disability Assessment for Dementia (DAD) and gait velocity, and affective status such as apathy and depression (Tung et al., 2014).
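One way to turn a set of GPS fixes into the area, perimeter, and distance-from-home indicators mentioned above is to take the convex hull of the visited locations. The sketch below does this in plain Python. The source does not state how Tung and colleagues computed these measures, so the convex hull approach, the function names, and the assumption that coordinates have already been projected to planar meters relative to home are all illustrative choices.

```python
from math import hypot


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counterclockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def life_space_metrics(points, home=(0.0, 0.0)):
    """Area (m^2), perimeter (m), and mean distance from home (m).

    points: non-empty list of (x, y) positions in meters (assumed
    to be already projected from latitude and longitude).
    """
    hull = convex_hull(points)
    edges = list(zip(hull, hull[1:] + hull[:1]))
    # Shoelace formula for the polygon area.
    area = abs(sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in edges)) / 2
    perimeter = sum(hypot(x2 - x1, y2 - y1) for (x1, y1), (x2, y2) in edges)
    mean_dist = sum(hypot(x - home[0], y - home[1]) for x, y in points) / len(points)
    return {"area": area, "perimeter": perimeter, "mean_distance_from_home": mean_dist}


# Illustrative fixes: corners of a 300 m x 400 m block plus one interior point.
fixes = [(0, 0), (300, 0), (300, 400), (0, 400), (150, 200)]
metrics = life_space_metrics(fixes)
print(metrics["area"])                     # 120000.0
print(metrics["perimeter"])                # 1400.0
print(metrics["mean_distance_from_home"])  # 290.0
```

A convex hull overestimates the covered area when movement follows narrow corridors, so other definitions of life space may be preferable in practice.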

Virtual Reality activities

Researchers used Virtual Reality (VR) applications to examine behavioral characteristics of people with executive dysfunction or disability. Zygouris and colleagues (2017) developed a Virtual Super Market (VSM) application that trained users to complete daily shopping activities in a supermarket environment, such as navigating the store, finding products on a shopping list, and paying the right amount of money. The study suggested that longitudinal performance on VR cognitive training applications could be used to monitor cognitive function in healthy older adults, since analyzing trends over time can show early cognitive declines (Zygouris et al., 2017).
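To make the idea of longitudinal trend analysis concrete, here is a minimal Python sketch that fits a least-squares line to repeated performance scores and flags a downward slope. The function names and the decline threshold are hypothetical and are not taken from Zygouris and colleagues (2017); a real monitoring system would also need to account for practice effects and measurement noise.

```python
def performance_slope(days, scores):
    """Ordinary least-squares slope of score versus time (score units per day)."""
    n = len(days)
    if n < 2 or n != len(scores):
        raise ValueError("need at least two paired observations")
    mean_x = sum(days) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        raise ValueError("all observations share the same time point")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, scores))
    return sxy / sxx


def flag_decline(days, scores, threshold=-0.05):
    """Return True when the fitted slope falls below an assumed threshold."""
    return performance_slope(days, scores) < threshold
```

For example, scores of 80, 77, 74, and 71 recorded at days 0, 30, 60, and 90 give a slope of about -0.1 points per day, which this illustrative threshold would flag.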

The Simulation-Based Assessment of Cognition (SIMBAC) program evaluates various daily activities performed in a virtual reality setting (Ip et al., 2017). The SIMBAC includes five activities: recognizing, matching faces and names, filling a pillbox, withdrawing money using an automated teller machine, and refilling a prescription by phone. These tasks were chosen because they closely resemble daily activities required for independent living. While participants completed the activities on a tablet, characteristics such as accuracy, time to completion, errors, and percentage of steps or subtasks completed were measured as proxies for cognitive function. In a validation study, the SIMBAC demonstrated significant discrimination between different cognitive states. Study participants also gave positive feedback concerning the number of activity modules.

Changes in speech

Because cognitive impairment affects speech at both the linguistic and paralinguistic levels, some vocal characteristics of speech have been used for cognitive assessment. Automatic Speech Analysis (ASA), developed by Konig and colleagues (2018), provides vocal cognitive tasks such as sentence repetition, denomination, picture description, phonemic verbal fluency, semantic verbal fluency, counting backward, positive storytelling, negative storytelling, and episodic storytelling. Task responses were recorded, and the mobile application used automatic speech processing and machine learning techniques to analyze vocal markers. The ASA showed highly accurate classification rates differentiating between subjective cognitive impairment, MCI, and Alzheimer’s disease.

Changes in physical movement

The decline in cognitive function is also correlated with changes in physical movement. Suzumura and colleagues (2018) developed a mobile application measuring fine motor skills from tapping on the tablet screen. Participants tapped markers with the left, right, or both hands in time with a rhythm or audio signal. For the assessment, tap response time, rhythm, contact duration, and inter-hand divergence were investigated. Analysis showed a significant difference in finger dexterity between people with dementia, people with MCI, and the healthy control group. The authors suggest that this measurement may be valuable for mass screening.
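The tapping measures named above can be sketched in Python as follows. This is only an illustration under our own assumptions, not the algorithm used by Suzumura and colleagues (2018): each tap is represented as a hypothetical `(touch_down_s, touch_up_s)` pair, rhythm is summarized by the spread of inter-tap intervals, and inter-hand divergence is taken as the mean absolute onset difference between paired left and right taps.

```python
from statistics import mean, pstdev


def tapping_features(left_taps, right_taps):
    """Summarize bimanual tapping from lists of (touch_down_s, touch_up_s) pairs.

    Assumes both hands tapped the same number of times and that the i-th
    left tap is paired with the i-th right tap.
    """
    if len(left_taps) < 2 or len(left_taps) != len(right_taps):
        raise ValueError("need at least two taps per hand, paired one-to-one")

    def onset_intervals(taps):
        onsets = [down for down, _up in taps]
        return [later - earlier for earlier, later in zip(onsets, onsets[1:])]

    intervals = onset_intervals(left_taps) + onset_intervals(right_taps)
    contact = [up - down for down, up in left_taps + right_taps]
    divergence = [abs(l[0] - r[0]) for l, r in zip(left_taps, right_taps)]
    return {
        "mean_interval_s": mean(intervals),        # average time between taps
        "interval_sd_s": pstdev(intervals),        # rhythm variability
        "mean_contact_s": mean(contact),           # finger-on-screen duration
        "inter_hand_divergence_s": mean(divergence),
    }
```

Features like these would still need to be compared against normative or group data before they could support screening decisions.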

Discussion and Implications

Our review found an increasing number of articles published on the use of mobile devices for cognitive assessment in older adults. This confirms growing research interest and effort in leveraging mobile technology for assessing cognitive status in older adults. We also saw biases for different platforms depending on the environment. Outside the clinic, more research used mobile phones, while in the clinic tablet computers were preferred. We conjecture that this difference is based on the form factor of each device. The smaller, more portable smartphone is more ubiquitous outside the clinic, where the technology must integrate into daily life, whereas the larger, less portable tablet computer works better for clinics, where any technology must be usable by as many people as possible. Furthermore, we found that older adults were able to complete mobile assessments at high rates both inside and outside a clinic setting. Although adults with cognitive impairment did not participate in the studies outside the clinic, except in the study from Tung and colleagues (2014) investigating “life space” with passive GPS technology, those with normal cognition are the primary target population for any technology intended for early detection of cognitive decline. However, future usability studies must include older adults with cognitive impairment so we can successfully implement monitoring technologies to identify trends and acute changes outside the clinic in people with cognitive impairment.

We then organized the 29 articles into three categories according to the level of innovation: (a) existing tests translated to a mobile platform, (b) new tests developed specifically for a mobile platform, and (c) new data streams leveraged for cognitive assessment. The development of mobile cognitive assessments in these three categories resulted in applications that can overcome the limitations of traditional assessments, computerized assessments, and even other mobile assessments.

Translating paper-based tests to mobile devices increased test completion rate, decreased administration cost, enabled automatic score calculation, allowed immediate access to results, and supported easy tracking of patient outcomes (Berg et al., 2018; Mielke et al., 2015; Ruggeri et al., 2016; Scharre et al., 2017; Wu et al., 2015, 2017). Mobile devices also allowed cognitive assessment outside of clinics with rapid data transfer to health care providers (Scharre et al., 2017), and even older adults with low technology literacy were readily able to complete tests using tablets with touchscreens in a clinic setting (Mielke et al., 2015). Lessening the burden on professional examiners can further increase administration of cognitive assessments outside of clinic settings (Makizako et al., 2013). Conducting cognitive assessment using the powerful multimedia components available on mobile platforms also facilitated more efficient and effective content delivery. However, results suggested the need for further validation when existing normed tests are translated to a mobile platform because the change in the delivery method may bias the test results, especially for self-administration (Mielke et al., 2015; Ruggeri et al., 2016). The number of items, test length, and administration frequency were important factors influencing effectiveness and efficiency, thus researchers expressed a desire for valid shorter self-administered tests with high accuracy, especially in community settings or for self-administration (Makizako et al., 2013; Onoda et al., 2013; Rentz et al., 2016). Furthermore, although remote assessments may reduce the need for a trained examiner during the screening stage, face-to-face assessments provide an opportunity for professional judgment and valuable metadata needed for accurate diagnosis (American Psychological Association, 2012).

Paper- and PC-based tests administered in clinics give a snapshot of cognitive status, but cannot give insight into the day-to-day variations in cognition, emotion, and stress (Hess, Popham, Emery, & Elliott, 2012). However, the portability of mobile technologies can reduce time, frequency, and place barriers for cognitive assessment, and allow short- or long-term monitoring through repeated assessment outside the clinic to detect early, subtle signs of cognitive decline (Allard et al., 2014; Lange & Suss, 2014). Remote assessments with mobile technology are ideally suited for ambulatory assessment (AA; Lange & Suss, 2014), enabling older adults to take tests at a preferred time in a comfortable environment (Allard et al., 2014; Lange & Suss, 2014; Rentz et al., 2016) and to complete assessments several times a day (Allard et al., 2014; Jongstra et al., 2017; Lange & Suss, 2014). Researchers can also examine the interaction between context and cognitive function (Allard et al., 2014).

Mobile platforms can allow us to leverage new data streams and achieve higher measurement accuracy (Dahmen et al., 2017; Fellows et al., 2017; Muller et al., 2017). Furthermore, we can collect much of these data unobtrusively, passively, and more objectively using mobile platforms, thus reducing user burden and increasing ecological validity. Examples include the timing data that are a byproduct of cognitive assessments (Muller et al., 2017), timing and performance measures from games (Thompson et al., 2012; Tong et al., 2016), sensor data such as GPS traces (Tung et al., 2014), performance data in VR activities (Ip et al., 2017; Zygouris et al., 2017), and changes in speech (Konig et al., 2018) or movement (Suzumura et al., 2018). Mobile device-based games and VR technology facilitate high user engagement and interactivity, providing an enjoyable experience for older adults while attaining assessment information (Ip et al., 2017; Thompson et al., 2012; Tong et al., 2016; Zygouris et al., 2017). See Supplementary Appendix C for a comparison of the benefits of mobile versions of existing tests, new tests designed for mobile devices, and leveraging new data streams for cognitive assessment.

Collating these new data streams results in a composite description of a person’s behavior. This “digital phenotype” (Wiederhold, 2016) is now attracting increased attention as an alternative measurement of health-related behaviors. Digital phenotyping incorporates data from mobile sensors, keyboard interactions, voice, speech, and other streams obtained during everyday use of social media, wearable technologies, and mobile devices (Insel, 2017; Jain, Powers, Hawkins, & Brownstein, 2015). Advances in mobile technologies and applications will lead to even more streams of data to tap for information on cognitive trajectories and cognitive decline. In particular, we see growing interest around analyzing data streams to detect neuropsychiatric or behavioral symptoms as early manifestations of emergent dementia (Gold et al., 2018). For example, GPS technology records daily movement data that can aid in recognition of behavioral symptoms of incipient dementia (Tung et al., 2014). Further research will no doubt translate findings from studies conducted using personal computers, digital pens, and other sensors to mobile platforms to facilitate detection of cognitive status. Examples include measures from digital clock drawing (Davis, Libon, Au, & Penney, 2015), technology use patterns (Kaye et al., 2014), and social behavior (Dodge et al., 2015). This new approach may decrease the learning effects or retrospective bias that can limit current cognitive assessment methods. Prior work on digital phenotypes (Insel, 2017; Jain et al., 2015; Wiederhold, 2016) does not mention incorporating the game performance elements we reviewed (Thompson et al., 2012; Zygouris et al., 2017). However, game or training performance can provide objective data for cognitive assessment and monitoring through longitudinal analysis of performance (Zygouris et al., 2017). 
Although single measures may not provide a significant improvement in sensitivity and specificity over conventional tests, we expect that multivariate models of unobtrusively obtained data (Vizer & Sears, 2015) will demonstrate a substantial improvement resulting in important clinical impacts.

Clinicians can deploy mobile technologies in a clinic for a brief cognitive screening or a more comprehensive battery. Mobile screening facilitates efficient identification of people at risk for cognitive issues during a regular checkup or hospitalization and assists in planning further examinations. Outside the clinic, mobile devices enable ongoing analysis of data obtained from self-reports, instrumented games or activities, or unobtrusive measures. Furthermore, if each technology can connect with the electronic medical record, personalized suites of technologies can support different people’s needs in different settings. For example, the in-clinic assessment plan for one person might involve a history elicitation in the person’s and carer’s chosen formats upon check-in, a quick tablet-based assessment while waiting in the exam room, and a virtual reality task that holistically assesses multiple domains delivered in conjunction with the clinical exam. The clinician might also prescribe a monitoring app that employs a combination of games and sensors and communicates results back to the clinic.

Although mobile technologies have many advantages for use in cognitive assessment, we must also address some limitations. Several variables can influence the outcome of a technology-based cognitive assessment, including test characteristics, test duration, test frequency, and training and prior technology experience of the person being assessed (Makizako et al., 2013; Onoda et al., 2013; Rentz et al., 2016). We also saw tradeoffs in terms of sensitivity and specificity, required effort, and adherence. Mobile assessments range from those that are normed and validated with high sensitivity and specificity that are suited to diagnosis (Scharre et al., 2017; Wu et al., 2017); to those with high adherence rates that are attractive for monitoring outside the clinic (Allard et al., 2014; Jongstra et al., 2017; Lange & Suss, 2014; Rentz et al., 2016); to those with higher sensitivity and lower specificity that are appropriate for screening (Scanlon et al., 2016). Some assessments impose high cognitive burden whereas others are less demanding, and passive assessments leveraging unobtrusive metadata require no additional effort. Higher adherence rates are associated with shorter, more interesting applications embedded into daily activities (Tong et al., 2016). For example, many game-based assessments or training applications may not currently pinpoint impairment in specific domains, but they offer older adults enjoyable, stimulating, or meaningful activities while providing clinicians a view of relative day-to-day function. Examiners must consider these tradeoffs, especially for use outside of the clinic where the person can choose whether to complete assessment activities. Consistent with standard clinical practice, the choice of assessments should be appropriate to each person and their situation, and correct interpretation depends on the skill of the clinician (American Psychological Association, 2012).
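Because the tradeoff between sensitivity and specificity recurs throughout this discussion, a short Python sketch of how the two quantities are computed from paired screening results and reference diagnoses may be helpful. The function name and input format are illustrative assumptions, not drawn from any reviewed study.

```python
def screening_accuracy(screen_positive, truly_impaired):
    """Compute sensitivity and specificity from paired boolean sequences.

    screen_positive[i] is the mobile screen's result for person i;
    truly_impaired[i] is the reference-standard diagnosis for the same person.
    """
    tp = fp = tn = fn = 0
    for predicted, actual in zip(screen_positive, truly_impaired):
        if predicted and actual:
            tp += 1
        elif predicted and not actual:
            fp += 1
        elif not predicted and actual:
            fn += 1
        else:
            tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one impaired and one unimpaired case")
    return {
        "sensitivity": tp / (tp + fn),   # impaired people correctly flagged
        "specificity": tn / (tn + fp),   # unimpaired people correctly cleared
    }
```

A screening tool can tolerate lower specificity because positives are followed by a fuller evaluation, whereas a tool used for diagnosis needs both values to be high.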

We also noted some literature on mobile cognitive assessment with younger adults (e.g., Plourde, Hrabok, Sherman, & Brooks, 2018; Twomey et al., 2018). Although it is tempting to generalize results from studies with one age group to assessments with another age group, we must keep in mind that each group has unique needs and characteristics that should guide development. Compared with younger adults, older adults have lower mobility, lower dexterity, lower speed, and higher crystallized memory; prefer that health technologies complement rather than replace face-to-face interactions; and have less access to and experience with mobile technology, although this is changing rapidly (Anderson & Perrin, 2017; Kuerbis, Mulliken, Muench, Moore, & Gardner, 2017). These characteristics can impact test performance even though they are not directly related to cognition; therefore, we should consider the effects of aging when adapting cognitive assessments that were validated in young adults for use with older adults. Anguera and colleagues (2013) directly compared the performance of younger and older adults on a game-based cognitive assessment and found that adults between ages 20 and 79 exhibited a linear age-related decline in multitasking performance. However, when the older adults played an adapted version of the game that included multitasking training, they were able to attain performance better than that of 20-year-olds who did not receive training and maintain performance gains for at least 6 months. Interestingly, the older adults also exhibited gains in untrained cognitive domains such as attention and working memory. Kuerbis and colleagues (2017) and Jenkins, Lindsay, Eslambolchilar, Thornton, and Tales (2016) discussed other age-related considerations for developing mHealth technology specifically for cognitive or behavioral health assessments.
Kuerbis and colleagues (2017) synthesized mHealth literature to develop recommendations for providing behavioral mHealth interventions to older adults. In particular, they emphasize the necessity of superior usability and supportive training. To ascertain issues specific to developing cognitive assessments for tablets, Jenkins and colleagues (2016) conducted a focus group with younger and older adults. They received generally positive feedback regarding assessment delivery via tablets, but also noted that user-interface and ergonomics issues affected performance regardless of cognitive ability. These studies suggest that we should use appropriate training to reduce or eliminate age-related noncognitive deficits that can affect performance (Anguera et al., 2013; Kuerbis et al., 2017) and we should rigorously apply human-centered design methods (Holtzblatt & Beyer, 2016) to ensure that interface usability and ergonomics do not unintentionally confound test results (Jenkins et al., 2016). These insights also support stratification of normative data by age, and use of longitudinal, personalized monitoring for trend identification to account for performance variability between and within people of any age.

Furthermore, when obtaining health data from any Internet-connected device, and especially someone’s personal device, privacy issues are paramount. Methods of collection, storage, analysis, and transfer of personal data must all protect against compromising the privacy of the patient. We saw very little discussion of this important issue and clinicians should understand privacy provisions before using a mobile solution in practice. We also caution against using these technologies as a sole means of diagnosis, but advocate for use in conjunction with comprehensive evaluations by trained clinicians.

Lastly, we found little attention paid to person-centered care (Fazio, Pace, Flinner, & Kallmyer, 2018) and person-centered assessment concepts (Molony et al., 2018). Although this research is in its infancy, any technology should support person-centeredness as early as possible (Holtzblatt & Beyer, 2016). Fazio and colleagues (2018) describe the key components of person-centered care as: “(a) supporting a sense of self and personhood through relationship-based care and services, (b) providing individualized activities and meaningful engagement, and (c) offering guidance to those who care for them.” We found that mobile technologies enable continuous assessment and support unobtrusive collection of auxiliary data for cognitive assessment based on behavior, which addresses the person-centered assessment notion of valuing the experience of people with dementia in their real environments (Molony et al., 2018). In addition to standardized cognitive assessments given during a clinic visit, healthcare providers can utilize auxiliary data obtained from a person’s behavior and lived context for more accurate cognitive assessment. Furthermore, mobile assessment researchers can seek out and incorporate into technology design the input of people with cognitive impairment, carers, and clinicians. Stakeholders might suggest that technology facilitate relationships with family, friends, and medical providers; adapt to individual cognitive and functional characteristics; use games or personally meaningful activities to make assessments enjoyable and engaging; offer tailored information; and support the needs of people with cognitive impairment and their carers.

Logical extensions of the research we reviewed include using new mobile sensors and wearable devices, improving reliability and validity of mobile assessments, determining appropriate clinical use of mobile assessment information, and incorporating person-centered assessment and digital phenotyping in conjunction with mobile technologies. These advancements can engage and respect the person with cognitive impairment, provide stakeholders with valuable insights into trends and changes in cognitive function and behavior, and substantially change cognitive assessment and monitoring practices for older adults.

We limited our review to full-text journal articles written in English focusing on the use of mobile phones, smartphones, PDAs, and tablets for cognitive assessment in older adults. In the full-text screening stage, 12 articles using smartphones or tablets for cognitive assessment were excluded for the following reasons: four publications were not full-text, six publications did not report assessment results (i.e., only reported on technology acceptance by health care professionals or described only a study protocol), and two publications did not employ scales for detecting MCI, Alzheimer’s, or dementia. In addition, we did not consider or review several studies of devices such as smartwatches, activity trackers, and other wearable sensors. Future research should consider the use of these mobile technologies for cognitive assessment in older adults, especially in the context of digital phenotyping and person-centered care.

With respect to the feasibility of mobile cognitive assessment, we recognize that the education level of the study participants in our review was slightly higher than that of the general older adult population. Several articles mentioned that education level did not influence performance (Brouillette et al., 2013; Rentz et al., 2016), but a large body of work has shown that most neuropsychological tests are influenced by age and education (Acevedo, Loewenstein, Agrón, & Duara, 2007; Allard et al., 2014; Ganguli et al., 2010; Mielke et al., 2015; Onoda et al., 2013). Some studies specifically assessed acceptability and feasibility and found that older adults are willing to use mobile technology for health purposes (Mielke et al., 2016; Tong et al., 2016), especially if barriers such as cost and experience are addressed (Kuerbis et al., 2017). Further work should take into account educational background and previous experience with mobile technology and purposefully include a diverse sample to develop widely accessible technologies.

Conclusion

This review highlights the increasingly innovative ways mobile platforms are used to deliver cognitive assessment for older adults. The first group of articles discussed using mobile devices as a convenient mode of delivering existing tests. The next group described assessments developed specifically to take advantage of the features of mobile platforms. The last group leveraged unique streams of data, such as GPS, sensors, and touchscreen attributes, to gain deeper insight into cognition. Future work should include more diverse participant samples, investigate new sensor and wearable technologies, improve reliability and validity, and incorporate the points of view of people being assessed, carers, and clinicians. As mobile devices become even more ubiquitous, we expect technology to become more person-centered and for researchers to use active and passive measures of cognition in digital phenotyping to detect very early signs of cognitive decline with little burden to the person being assessed. Innovation in mobile technologies will continue to catalyze new tools and methods and profoundly transform the way we assess cognition, thus improving health outcomes and quality of life for older adults.

Funding

This work was supported by the National Center for Advancing Translational Sciences (NCATS), National Institutes of Health (NIH) (UL1TR002489). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Conflicts of Interest

None reported.

Supplementary Material

Acknowledgment

We are grateful to Sarah Towner Wright, Clinical librarian of the UNC Health Sciences Library, for her expertise in formulating our search strategy.

References

- Acevedo A., Loewenstein D. A., Agrón J., & Duara R (2007). Influence of sociodemographic variables on neuropsychological test performance in Spanish-speaking older adults. Journal of Clinical and Experimental Neuropsychology, 29, 530–544. doi: 10.1080/13803390600814740

- Allard M., Husky M., Catheline G., Pelletier A., Dilharreguy B., Amieva H.,…Swendsen J (2014). Mobile technologies in the early detection of cognitive decline. PLoS One, 9, e112197. doi: 10.1371/journal.pone.0112197

- Alzheimer’s Association (2017). Alzheimer’s disease facts and figures. Retrieved from https://www.alz.org/facts/

- American Psychological Association (2012). Guidelines for the evaluation of dementia and age-related cognitive change. American Psychologist, 67, 1–9.

- Anderson M., & Perrin A (2017). Tech adoption climbs among older adults. Retrieved from http://www.pewinternet.org/2017/05/17/tech-adoption-climbs-among-older-adults/

- Anguera J. A., Boccanfuso J., Rintoul J. L., Al-Hashimi O., Faraji F., Janowich J.,…Gazzaley A (2013). Video game training enhances cognitive control in older adults. Nature, 501, 97–101. doi: 10.1038/nature12486

- Arksey H., & O’Malley L (2005). Scoping studies: Towards a methodological framework. International Journal of Social Research Methodology, 8, 19–32.

- Berg J. L., Durant J., Léger G. C., Cummings J. L., Nasreddine Z., & Miller J. B (2018). Comparing the electronic and standard versions of the Montreal cognitive assessment in an outpatient memory disorders clinic: A validation study. Journal of Alzheimer’s Disease: JAD, 62, 93–97. doi: 10.3233/JAD-170896

- Brouillette R. M., Foil H., Fontenot S., Correro A., Allen R., Martin C. K.,…Keller J. N (2013). Feasibility, reliability, and validity of a smartphone based application for the assessment of cognitive function in the elderly. PLoS One, 8, e65925. doi: 10.1371/journal.pone.0065925

- Dahmen J., Cook D., Fellows R., & Schmitter-Edgecombe M (2017). An analysis of a digital variant of the trail making test using machine learning techniques. Technology and Health Care: Official Journal of the European Society for Engineering and Medicine, 25, 251–264. doi: 10.3233/THC-161274

- Davis R., Libon D. J., Au R., & Penney D. L (2015). THink: Inferring cognitive status from subtle behaviors. AI Magazine, 36, 49.

- Dodge H. H., Mattek N., Gregor M., Bowman M., Seelye A., Ybarra O.,…Kaye J. A (2015). Social markers of mild cognitive impairment: Proportion of word counts in free conversational speech. Current Alzheimer Research, 12, 513–519.

- Fazio S., Pace D., Flinner J., & Kallmyer B (2018). The fundamentals of person-centered care for individuals with dementia. The Gerontologist, 58(Suppl. 1), S10–S19. doi: 10.1093/geront/gnx122

- Fellows R. P., Dahmen J., Cook D., & Schmitter-Edgecombe M (2017). Multicomponent analysis of a digital trail making test. The Clinical Neuropsychologist, 31, 154–167. doi: 10.1080/13854046.2016.1238510

- Freedman M., Leach L., Carmela Tartaglia M., Stokes K. A., Goldberg Y., Spring R.,…Tang-Wai D. F (2018). The Toronto Cognitive Assessment (TorCa): Normative data and validation to detect amnestic mild cognitive impairment. Alzheimer’s Research & Therapy, 10, 65. doi: 10.1186/s13195-018-0382-y

- Ganguli M., Snitz B. E., Lee C. W., Vanderbilt J., Saxton J. A., & Chang C. C (2010). Age and education effects and norms on a cognitive test battery from a population-based cohort: The Monongahela-Youghiogheny Healthy Aging Team. Aging & Mental Health, 14, 100–107. doi: 10.1080/13607860903071014

- Gerontological Society of America (2015). The Gerontological Society of America workgroup on cognitive impairment detection and earlier diagnosis: Report and recommendations. Washington, DC: Retrieved from http://www.geron.org/images/gsa/documents/gsaciworkgroup2015report.pdf

- Gold M., Amatniek J., Carrillo M. C., Cedarbaum J. M., Hendrix J. A., Miller B. B.,…Czaja S. J (2018). Digital technologies as biomarkers, clinical outcomes assessment, and recruitment tools in Alzheimer’s disease clinical trials. Alzheimer’s & Dementia (New York, N. Y.), 4, 234–242. doi: 10.1016/j.trci.2018.04.003

- Hess T. M., Popham L. E., Emery L., & Elliott T (2012). Mood, motivation, and misinformation: Aging and affective state influences on memory. Neuropsychology, Development, and Cognition. Section B, Aging, Neuropsychology and Cognition, 19, 13–34. doi: 10.1080/13825585.2011.622740

- Holtzblatt K., & Beyer H (2016). Contextual design: Design for life. Elsevier Science.

- Insel T. R. (2017). Digital phenotyping: Technology for a new science of behavior. JAMA, 318, 1215–1216. doi: 10.1001/jama.2017.11295

- Ip E. H., Barnard R., Marshall S. A., Lu L., Sink K., Wilson V.,…Rapp S. R (2017). Development of a video-simulation instrument for assessing cognition in older adults. BMC Medical Informatics and Decision Making, 17, 161. doi: 10.1186/s12911-017-0557-7

- Jain S. H., Powers B. W., Hawkins J. B., & Brownstein J. S (2015). The digital phenotype. Nature Biotechnology, 33, 462–463. doi: 10.1038/nbt.3223

- Jenkins A., Lindsay S., Eslambolchilar P., Thornton I. M., & Tales A (2016). Administering cognitive tests through touch screen tablet devices: Potential issues. Journal of Alzheimer’s Disease: JAD, 54, 1169–1182. doi: 10.3233/JAD-160545

- Jongstra S., Wijsman L. W., Cachucho R., Hoevenaar-Blom M. P., Mooijaart S. P., & Richard E (2017). Cognitive testing in people at increased risk of dementia using a smartphone app: The ivitality proof-of-principle study. JMIR Mhealth and Uhealth, 5, e68. doi: 10.2196/mhealth.6939

- Kaye J., Mattek N., Dodge H. H., Campbell I., Hayes T., Austin D.,…Pavel M (2014). Unobtrusive measurement of daily computer use to detect mild cognitive impairment. Alzheimer’s & Dementia: The Journal of the Alzheimer’s Association, 10, 10–17. doi: 10.1016/j.jalz.2013.01.011

- Kokubo N., Yokoi Y., Saitoh Y., Murata M., Maruo K., Takebayashi Y.,…Horikoshi M (2018). A new device-aided cognitive function test, user experience-trail making test (UX-TMT), sensitively detects neuropsychological performance in patients with dementia and Parkinson’s disease. BMC Psychiatry, 18, 220. doi: 10.1186/s12888-018-1795-7

- Konig A., Satt A., Sorin A., Hoory R., Derreumaux A., David R., & Robert P. H (2018). Use of speech analyses within a mobile application for the assessment of cognitive impairment in elderly people. Current Alzheimer Research, 15, 120–129. doi: 10.2174/1567205014666170829111942

- Kuerbis A., Mulliken A., Muench F., Moore A. A., & Gardner D (2017). Older adults and mobile technology: Factors that enhance and inhibit utilization in the context of behavioral health. Mental Health and Addiction Research, 2.

- Lange S., & Suss H. M (2014). Measuring slips and lapses when they occur—Ambulatory assessment in application to cognitive failures. Consciousness and Cognition, 24, 1–11. doi: 10.1016/j.concog.2013.12.008

- Laske C., Sohrabi H. R., Frost S. M., López-de-Ipiña K., Garrard P., Buscema M., … O’Bryant S. E. (2015). Innovative diagnostic tools for early detection of Alzheimer’s disease. Alzheimer’s & Dementia: The Journal of the Alzheimer’s Association, 11, 561–578. doi: 10.1016/j.jalz.2014.06.004

- Lyons B. E., Austin D., Seelye A., Petersen J., Yeargers J., Riley T., … Kaye J. A. (2015). Pervasive computing technologies to continuously assess Alzheimer’s disease progression and intervention efficacy. Frontiers in Aging Neuroscience, 7, 102. doi: 10.3389/fnagi.2015.00102

- Makizako H., Shimada H., Park H., Doi T., Yoshida D., Uemura K., … Suzuki T. (2013). Evaluation of multidimensional neurocognitive function using a tablet personal computer: Test–retest reliability and validity in community-dwelling older adults. Geriatrics & Gerontology International, 13, 860–866. doi: 10.1111/ggi.12014

- Mielke M. M., Machulda M. M., Hagen C. E., Edwards K. K., Roberts R. O., Pankratz V. S., … Petersen R. C. (2015). Performance of the CogState computerized battery in the Mayo Clinic Study on Aging. Alzheimer’s & Dementia: The Journal of the Alzheimer’s Association, 11, 1367–1376. doi: 10.1016/j.jalz.2015.01.008

- Molony S. L., Kolanowski A., Van Haitsma K., & Rooney K. E. (2018). Person-centered assessment and care planning. The Gerontologist, 58, S32–S47. doi: 10.1093/geront/gnx173

- Morris M., Intille S. S., & Beaudin J. S. (2005). Embedded assessment: Overcoming barriers to early detection with pervasive computing. In Pervasive Computing. Berlin, Heidelberg: Springer.

- Muller S., Preische O., Heymann P., Elbing U., & Laske C. (2017). Diagnostic value of a tablet-based drawing task for discrimination of patients in the early course of Alzheimer’s disease from healthy individuals. Journal of Alzheimer’s Disease: JAD, 55, 1463–1469. doi: 10.3233/JAD-160921

- Onoda K., Hamano T., Nabika Y., Aoyama A., Takayoshi H., Nakagawa T., … Yamaguchi S. (2013). Validation of a new mass screening tool for cognitive impairment: Cognitive assessment for dementia, iPad version. Clinical Interventions in Aging, 8, 353–360. doi: 10.2147/CIA.S42342

- Onoda K., & Yamaguchi S. (2014). Revision of the cognitive assessment for dementia, iPad version (CADi2). PLoS One, 9, e109931. doi: 10.1371/journal.pone.0109931

- Peters M. D., Godfrey C. M., Khalil H., McInerney P., Parker D., & Soares C. B. (2015). Guidance for conducting systematic scoping reviews. International Journal of Evidence-Based Healthcare, 13, 141–146. doi: 10.1097/XEB.0000000000000050

- Pew Research Center (2018). Mobile fact sheet. Retrieved from http://www.pewinternet.org/fact-sheet/mobile/

- Plourde V., Hrabok M., Sherman E. M. S., & Brooks B. L. (2018). Validity of a computerized cognitive battery in children and adolescents with neurological diagnoses. Archives of Clinical Neuropsychology: The Official Journal of the National Academy of Neuropsychologists, 33, 247–253. doi: 10.1093/arclin/acx067

- Possin K. L., Moskowitz T., Erlhoff S. J., Rogers K. M., Johnson E. T., Steele N. Z. R., … Rankin K. P. (2018). The brain health assessment for detecting and diagnosing neurocognitive disorders. Journal of the American Geriatrics Society, 66, 150–156. doi: 10.1111/jgs.15208

- Rentz D. M., Dekhtyar M., Sherman J., Burnham S., Blacker D., Aghjayan S. L., … Sperling R. A. (2016). The feasibility of at-home iPad cognitive testing for use in clinical trials. The Journal of Prevention of Alzheimer’s Disease, 3, 8–12. doi: 10.14283/jpad.2015.78

- Rentz D. M., Parra Rodriguez M. A., Amariglio R., Stern Y., Sperling R., & Ferris S. (2013). Promising developments in neuropsychological approaches for the detection of preclinical Alzheimer’s disease: A selective review. Alzheimer’s Research & Therapy, 5, 58. doi: 10.1186/alzrt222

- Rodakowski J., Saghafi E., Butters M. A., & Skidmore E. R. (2015). Non-pharmacological interventions for adults with mild cognitive impairment and early stage dementia: An updated scoping review. Molecular Aspects of Medicine, 43–44, 38–53. doi: 10.1016/j.mam.2015.06.003

- Ruggeri K., Maguire Á., Andrews J. L., Martin E., & Menon S. (2016). Are we there yet? Exploring the impact of translating cognitive tests for dementia using mobile technology in an aging population. Frontiers in Aging Neuroscience, 8, 21. doi: 10.3389/fnagi.2016.00021

- Scanlon L., O’Shea E., O’Caoimh R., & Timmons S. (2016). Usability and validity of a battery of computerised cognitive screening tests for detecting cognitive impairment. Gerontology, 62, 247–252. doi: 10.1159/000433432

- Scharre D. W., Chang S. I., Nagaraja H. N., Vrettos N. E., & Bornstein R. A. (2017). Digitally translated Self-Administered Gerocognitive Examination (eSAGE): Relationship with its validated paper version, neuropsychological evaluations, and clinical assessments. Alzheimer’s Research & Therapy, 9, 44. doi: 10.1186/s13195-017-0269-3

- Sliwinski M. J. (2008). Measurement burst designs for social health research. Social and Personality Psychology Compass, 2, 245.

- Suzumura S., Osawa A., Maeda N., Sano Y., Kandori A., Mizuguchi T., … Kondo I. (2018). Differences among patients with Alzheimer’s disease, older adults with mild cognitive impairment and healthy older adults in finger dexterity. Geriatrics & Gerontology International, 18, 907–914. doi: 10.1111/ggi.13277

- Thompson O., Barrett S., Patterson C., & Craig D. (2012). Examining the neurocognitive validity of commercially available, smartphone-based puzzle games. Psychology, 3, 525–526.

- Tong T., Chignell M., Tierney M. C., & Lee J. (2016). A serious game for clinical assessment of cognitive status: Validation study. JMIR Serious Games, 4, e7. doi: 10.2196/games.5006

- Tung J. Y., Rose R. V., Gammada E., Lam I., Roy E. A., Black S. E., & Poupart P. (2014). Measuring life space in older adults with mild-to-moderate Alzheimer’s disease using mobile phone GPS. Gerontology, 60, 154–162. doi: 10.1159/000355669

- Twomey D. M., Wrigley C., Ahearne C., Murphy R., De Haan M., Marlow N., & Murray D. M. (2018). Feasibility of using touch screen technology for early cognitive assessment in children. Archives of Disease in Childhood, 103, 853–858. doi: 10.1136/archdischild-2017-314010

- Vizer L. M., & Sears A. (2015). Classifying text-based computer interactions for health monitoring. IEEE Pervasive Computing, 14, 64–71.

- WHO (2017). 10 facts on dementia. Retrieved from http://www.who.int/features/factfiles/dementia/en/

- Wiederhold B. K. (2016). Using your digital phenotype to improve your mental health. Cyberpsychology, Behavior and Social Networking, 19, 419. doi: 10.1089/cyber.2016.29039.bkw

- Wu Y. H., Vidal J. S., De Rotrou J., Sikkes S. A. M., Rigaud A. S., & Plichart M. (2017). Can a tablet-based cancellation test identify cognitive impairment in older adults? PLoS One, 12.

- Wu Y. H., Vidal J. S., de Rotrou J., Sikkes S. A., Rigaud A. S., & Plichart M. (2015). A tablet-PC-based cancellation test assessing executive functions in older adults. The American Journal of Geriatric Psychiatry: Official Journal of the American Association for Geriatric Psychiatry, 23, 1154–1161. doi: 10.1016/j.jagp.2015.05.012

- Zorluoglu G., Kamasak M. E., Tavacioglu L., & Ozanar P. O. (2015). A mobile application for cognitive screening of dementia. Computer Methods and Programs in Biomedicine, 118, 252–262. doi: 10.1016/j.cmpb.2014.11.004

- Zygouris S., Ntovas K., Giakoumis D., Votis K., Doumpoulakis S., Segkouli S., … Tsolaki M. (2017). A preliminary study on the feasibility of using a virtual reality cognitive training application for remote detection of mild cognitive impairment. Journal of Alzheimer’s Disease: JAD, 56, 619–627. doi: 10.3233/JAD-160518

- Zygouris S., & Tsolaki M. (2015). Computerized cognitive testing for older adults: A review. American Journal of Alzheimer’s Disease and Other Dementias, 30, 13–28. doi: 10.1177/1533317514522852
