Abstract

Intra-operative imaging is sometimes available to assist needle biopsy, but typical open-loop insertion does not account for unmodeled needle deflection or target shift. Closed-loop image-guided compensation for deviation from an initial straight-line trajectory through rotational control of an asymmetric tip can reduce targeting error. Incorporating robotic closed-loop control often reduces physician interaction with the patient, but by pairing closed-loop trajectory compensation with hands-on cooperatively controlled insertion, a physician’s control of the procedure can be maintained while incorporating benefits of robotic accuracy. A series of needle insertions was performed with a typical 18G needle using closed-loop active compensation under both fully autonomous and user-directed cooperative control. We demonstrated equivalent improvement in accuracy while maintaining physician-in-the-loop control, with no statistically significant difference (p > 0.05) in targeting accuracy between any pair of autonomous or individual cooperative sets and an average targeting accuracy of 3.56 mm RMS. With cooperatively controlled insertions and a target shift of 1 mm to 10 mm introduced upon needle contact, the system was able to compensate effectively up to the point where error approached the maximum curvature governed by bending mechanics. These results show that closed-loop active compensation can enhance targeting accuracy, and that the improvement can be maintained under user-directed cooperative insertion.

Keywords: Image-Guided Therapy, Medical Robotics, Teleoperation, Needle Steering

3). Introduction

Positive clinical outcomes for deep percutaneous needle-based procedures such as targeted biopsy depend on the accuracy of needle tip placement. If a target is not reached with sufficient accuracy, a physician often retracts the needle without taking a sample and must restart with a new attempt, lengthening procedure time, increasing cost, and causing unnecessary discomfort to the patient. Although intra-operative imaging is sometimes available to assist in this procedure, open-loop insertion trajectories, wherein image-based feedback is not used to adjust the trajectory during the insertion, do not account for unmodeled needle deflection or target shift due to tissue deformation. One approach to compensate for unmodeled error is to robotically control the rotation of a bevel-tipped needle to induce corrective deflection towards the desired trajectory. Paired with real-time needle tip tracking, this enables closed-loop image-guided active compensation. A system controlling robotic needle rotation for compensation also requires accurate control of needle insertion position; however, incorporating a robot to actuate needle insertion typically either removes a physician from direct patient interaction through autonomous insertion, or distances them from patient interaction through teleoperation20. Instead, a cooperatively controlled robotic system would enable the physician to be at the procedure site, maintaining ultimate control while adding robotic accuracy to the final placement of the needle tip. In the presented work, this takes the form of a hands-on cooperative needle insertion, in which the surgical workspace is shared between the physician and the needle placement robot. The physician applies an input force directly onto the robot to control the needle insertion velocity, while active compensation of the direction through rotational bevel positioning is performed autonomously based on closed-loop image feedback.

Motivation for this work came as our research group performed clinical trials of MRI-guided robot-assisted prostate biopsy using the robot seen in Eslami et al.7 The system employed a robot for target alignment but was still dependent on manual needle insertion along a robotically aligned axis. As a result, even though initial alignment was performed robotically, the system suffered from loss of targeting accuracy due to unmodeled needle deflection and target shift. Targeting accuracy across all clinical trials was 6.6 ± 5.1 mm26. The overall goal of our research is to implement closed-loop active compensation during cooperatively controlled needle insertions under real-time MR imaging, and we have previously reported aspects of this, including a needle driver configured for cooperative control and suitable for the MR environment31, with needle localization in real-time MR images shown in Patel et al.18

The maximum needle tip deflection, and consequently the degree of compensation attainable, is limited by the mechanical properties of the needle. Related work implementing image feedback for rotational bevel tip positioning has been primarily based on steering thin flexible needles. Modeling of flexible needles has been widely implemented using the kinematic bicycle model32 as well as a joint kinematic and dynamic system24. These were expanded using imaging for feedback in Abayazid et al.1 for evaluation of two models for flexible needle steering, one kinematics based and one based on needle-tissue interaction forces, both validated with double bend tests. A duty-cycled approach for needle steering was introduced by Engh et al.6, and expanded in Minhas et al.14, with Vrooijink et al.30 implementing this for 3D targeting using ultrasound imaging. The duty-cycled approach, although implemented widely with positive results, requires insertion and rotation to be tightly coupled so as to alternate between pure insertion and rotation during insertion, which would not be feasible in a cooperative approach. Furthermore, a handheld device for seed placement in brachytherapy was introduced by Rossa et al.21, rotating the needle autonomously during manual needle insertion. This system is comparable to the work presented here in that rotation is autonomous while insertion is user directed; however, a cooperative insertion approach adds robotic accuracy to the insertion axis as well.

Robotic insertion can improve accuracy of needle tip placement, but in a clinical scenario a fully autonomous insertion would isolate the patient from the physician and is likely to receive increased regulatory scrutiny20. Several teleoperated systems have been developed to keep the physician in the loop during robotic needle insertion, often employing haptic feedback to restore some of the tactile information typically used by the physician for anatomical localization of the needle tip. For instance, Tse et al.27 introduced a haptic needle device with a feedforward controller to increase response of the slave, while a completely MRI-safe robot was developed by Stoianovici et al.23, employing manual insertion to a depth stop set robotically using a PneuStep pneumatic stepper motor29. Teleoperated systems offer the benefits of robotic precision for insertion position with the ability to integrate compensation via needle rotation, such as in the system presented by Pacchierotti et al.17 In this system the user maintains control of the needle insertion via teleoperation, with kinesthetic and vibratory feedback providing guidance to steer the slave robot to the target. This system provides full control to a physician for needle placement, but still distances them from the patient. Cooperative control, on the other hand, is defined as the direct robotic guidance of a tool that is also held and controlled by the user28. A cooperatively controlled system provides a framework to incorporate the benefits of teleoperation without distancing the physician from the procedure site. Some notable cooperative devices include the PADyC22 used for joint replacement, the ACROBOT11 used in bone surgery, and the Steady Hand Robot3, which eliminates hand tremor for precision eye surgery. A biopsy robot designed to work synergistically with the physician was described by Megali et al.13 This system was considered synergistic in that the procedure had both robotic and manual input components. The alignment of the biopsy needle was done robotically followed by a manual needle insertion, much like the clinical version of our research group's biopsy robot previously described. The system described herein is different in that the cooperative aspect is hands-on synergy between robot and physician during the insertion task.

Finally, in much of the existing literature, the specialized thin flexible needles used are not approved by a regulatory agency for biopsy; in fact, they are mostly solid core and too thin to be used in a clinical scenario where a tissue sample must be retrieved. Research contributions on needle steering tend to be focused on obstacle avoidance or following trajectories of a predefined shape to a target location, while teleoperated systems aim for stable control of needle insertion position with haptic feedback. Here the work is focused on clinical translation, with active compensation for deviations from an initial straight-line trajectory using a typical biopsy needle (e.g. that of a relatively stiff 18G biopsy gun). Furthermore, this compensation is performed during cooperatively controlled needle insertions to maintain a biopsy procedure directed by the physician at the procedure site, while providing continuous haptic feedback to restore tactile information typically surrendered in favor of robotic accuracy. The primary contributions of this work are the development of a method of closed-loop active compensation for unmodeled needle deflection and target shift under cooperatively controlled needle insertion, and experimental validation of this method on an existing robotic needle placement system, with results showing that the accuracy improvements of autonomous needle placement can be maintained in user-directed cooperative insertion.

4). Methods and Materials

A). Workflow

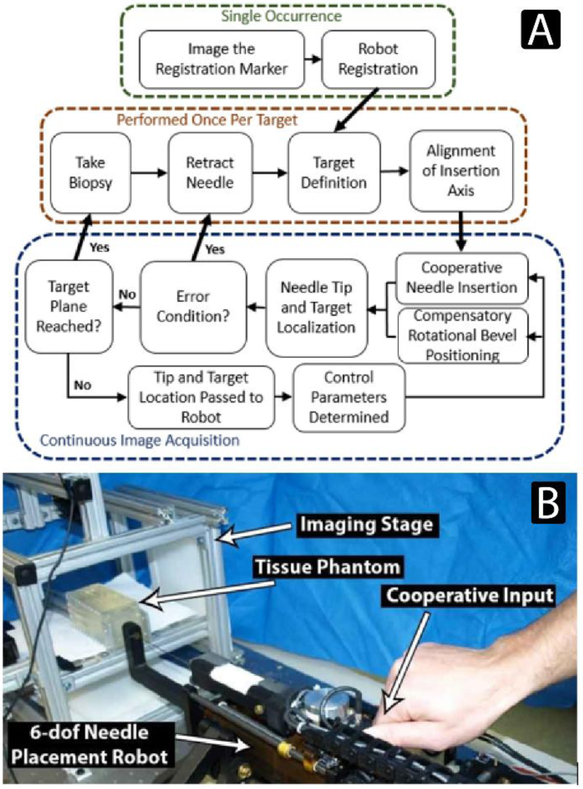

The workflow for retrieving a targeted biopsy core using continuous image-guided feedback is illustrated in Figure 1a. The workflow begins with registration, using a marker or fiducial to transform image-based feature localizations into the robot coordinate frame. After a target feature is defined, the robot positions the needle at the skin surface and aligns the insertion axis towards the target for a straight line insertion trajectory. Insertion begins under cooperative control and continues until the target depth is reached or an error condition occurs, such as the needle sufficiently deflecting into a configuration where the target would not be reachable with suitable accuracy. The tip and target features are localized in imaging during insertion and homogeneous transforms describing their location and orientation are passed to the robot controller. The localization information along with robot kinematics and force sensor readings are used to determine the cooperative insertion velocity as well as the bevel rotation required to compensate for any deviations from the initial straight-line trajectory. Figure 1b illustrates the needle placement manipulator in position to perform a cooperative needle insertion, the imaging stage with phantom, and how an input force is applied to the robot to create a cooperative insertion velocity.

Figure 1:

A) The workflow of biopsy retrieval using a robotic system configured for closed-loop image-guided active compensation through rotational bevel tip position during cooperatively controlled needle insertion. B) The experimental setup, showing a cooperative input to the needle placement manipulator. Cooperative insertions were performed by applying an input force to the robot to insert an 18G clinical biopsy gun.

B). Needle Placement Manipulator

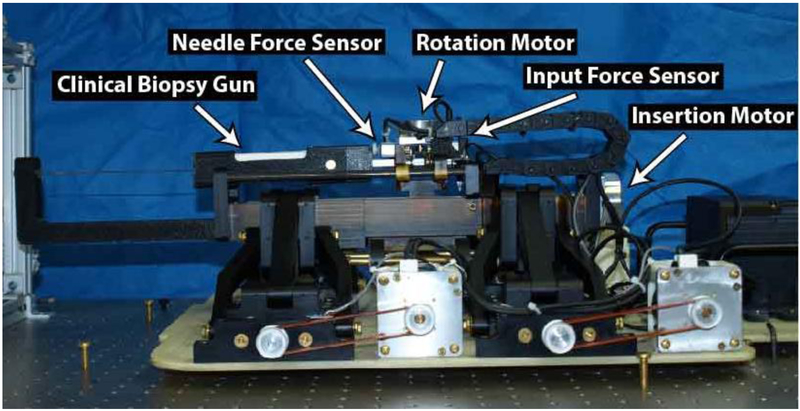

A 6-dof robotic needle placement system designed for in-bore MR image-guided prostate biopsy, similar to that in Wartenberg et al.31, was used. It comprises the 4-dof alignment base used in the clinical trials previously mentioned, with a 2-dof needle driver configured for cooperative control mounted in place of the manual needle guide. The system collects both user-directed input and axial needle forces, with the insertion velocity based on a relationship between the two. All forces were collected using aluminum load cell sensors, model MLP-10 from Transducer Techniques (Temecula, CA, USA). The system is capable of inserting and rotating a biopsy gun typically used in clinical procedures, the Full-Auto Bx Gun 18G 175 mm from Invivo (Best, Netherlands). Actuation for the base, insertion, and rotation is performed by non-magnetic piezoelectric motors, model USR-60 from the Shinsei Corporation (Tokyo, Japan), and models USR-45 and USR-30 from Fukoku Co Ltd. (Tokyo, Japan), respectively. All position feedback was achieved using optical encoders from US Digital (Vancouver, WA, USA), model EM-1-1250. Figure 2 shows an annotated image of the robotic needle placement manipulator.

Figure 2:

Annotated view of the needle placement robot. The base was previously used in clinical trials, aligning the needle guide for manual insertion. Here, a 2-dof needle driver was mounted in place of that manual needle guide and configured for cooperatively controlled needle insertions with active compensation for unmodeled errors through closed-loop image-guided rotational bevel tip positioning.

The control system for this robot is implemented on the sbRIO-9651 from National Instruments (Austin, TX, USA). This module contains an Artix-7 FPGA from Xilinx (San Jose, CA, USA), as well as a Linux Real-Time Operating System (RTOS) running on an ARM Cortex-A9 from ARM Holdings (Cambridge, England, UK). Low level communication is programmed using parallel loops in the FPGA while the cooperative algorithms are implemented in the RTOS using C/C++. The control system is housed inside a custom shielded control box which alongside the robot is suitable for the MR environment and was the same controller used in Nycz et al.15, where it was shown to perform inside the MR room without degrading image quality.

C). Cooperative Needle Insertion

Tactile forces felt during manual needle insertion are often used by physicians for mental registration of anatomical localization of the needle tip within the body. By collecting both the user input force as well as the forces along the needle, continuous feedback can be provided during cooperative needle insertion by adjusting the insertion velocity as a function of the two. The forces seen along the needle are comprised of three components: the cutting force, the stiffness on the tip, and the friction force along the length of the needle16. Separating these forces can be possible, for instance by using Fiber Bragg grating4, but this is not feasible in a scenario where altering an already regulatory approved clinical biopsy needle is not an option. Instead these forces are collected as one collective measurement at the proximal end of the biopsy gun, Σ Fneedle, and used alongside Finput in Equation 1 to show the insertion velocity under the cooperative control scheme.

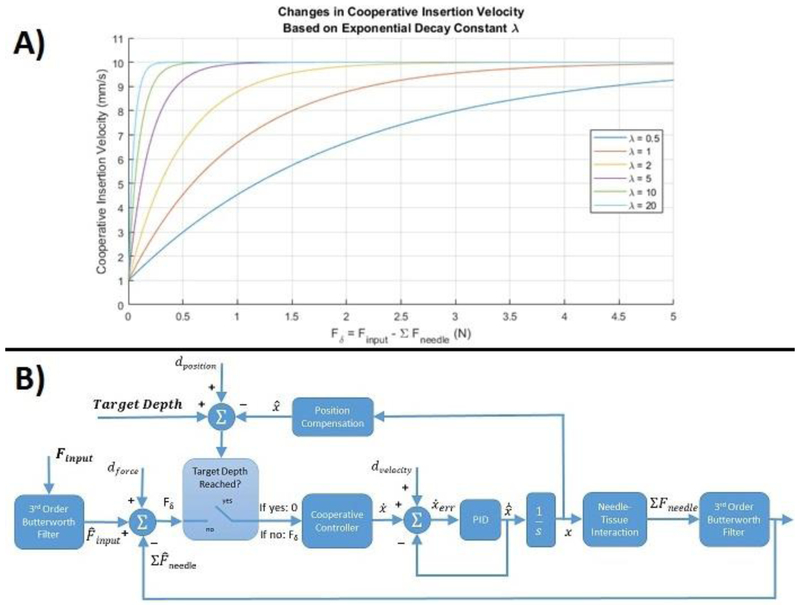

| (1) |

The velocity is based on a decreasing exponential, with the effect of the difference in forces in the normal operating range (Finput > Σ Fneedle) scaled by the exponential decay constant λ. Increasing the magnitude of λ leads to an increased insertion velocity during initial insertion, with higher sensitivity between Finput and Σ Fneedle as their values approach each other. The goal is augmented feedback, not transparent haptic sensation. This algorithm gives the user control of insertion speed through a force input, while increasing the sensitivity of tactile feedback as the needle force approaches this input. This situation is seen at the transition between tissue boundaries when needle force spikes, and could allow sensing of boundary transitions that the user alone could not have felt. This inherently makes the system non-transparent, instead allowing for additional augmented feedback to be implemented at detected events such as these boundary transitions or forbidden regions corresponding to specific anatomical structures to avoid. Figure 3A illustrates a series of velocity curves for cooperative insertion for varying values of λ; λ was set to 5 in this work for all insertion trials. The non-zero value in the second condition was added empirically to avoid a discontinuity in movement if, for instance, needle force grew larger than the input force of the user while an intent of continuous insertion was present.

Figure 3:

A) The cooperative insertion velocity vs. the difference in input and needle forces for varying values of the exponential decay constant λ. As λ increases, the difference in forces has less influence early in insertion, with increased sensitivity of insertion velocity when the forces approach each other. B) The control loop describing the functional methodology for implementing cooperatively controlled needle insertion. The two inputs are a force input and a target depth, and the two outputs are collected needle forces and needle tip position.

The cooperative insertion was implemented through admittance based closed-loop velocity control, providing a velocity output to a force input. A block diagram describing cooperative insertion is shown in Figure 3B. There are two inputs, a target depth and a user applied force input. First, Fδ is determined by subtracting the sum of needle forces from the applied input force. Then the current needle tip position, determined via real-time imaging, is subtracted from the target depth to determine if the target depth has been reached. If it has then a zero value is passed to the cooperative controller. If it hasn't then Fδ is passed to the cooperative controller to determine the insertion velocity based on Equation 1. An inner PID control loop with velocity feedback maintains the desired set point insertion velocity based on the cooperative controller output. An insertion velocity produces the increased insertion position that is fed back to make the supervisory control loop decision. Finally, as the needle inserts deeper into the tissue the force seen on the needle increases, and this needle force is fed back to the beginning of the control loop and subtracted from the input force.
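The supervisory logic described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the controller used in this work: the body of Equation 1 is not reproduced here, so the saturating-exponential form in `cooperative_velocity`, the values of `v_max` and `v_creep`, and the check for zero user input are hypothetical placeholders. Only λ = 5 and the order of operations (compute Fδ, check target depth, pass Fδ or zero to the cooperative controller) are taken from the text. The inner PID velocity loop is omitted.

```python
import math


def cooperative_velocity(f_delta, lam=5.0, v_max=10.0, v_creep=0.5):
    """Map a force difference F_delta (N) to a velocity set point (mm/s).

    ASSUMPTION: the saturating-exponential form and the values of v_max and
    v_creep are illustrative placeholders, not the published Equation 1.
    Only lam = 5 is taken from the text.
    """
    if f_delta >= 0.0:
        # Normal operating range: input force at or above the needle force.
        return v_max * (1.0 - math.exp(-lam * f_delta))
    # Second condition: small non-zero velocity so motion does not stop
    # abruptly when the needle force briefly exceeds the input force.
    return v_creep


def supervisory_step(f_input, f_needle_sum, tip_depth, target_depth):
    """One pass of the outer loop; returns the velocity set point (mm/s)."""
    if tip_depth >= target_depth or f_input <= 0.0:
        # Target depth reached (or, as an added assumption, no user input):
        # a zero value is passed to the cooperative controller.
        return cooperative_velocity(0.0)
    # F_delta = applied input force minus the summed needle forces.
    f_delta = f_input - f_needle_sum
    return cooperative_velocity(f_delta)
```

With this placeholder law, a larger λ steepens the curve near Fδ = 0, which is the qualitative behavior described for Figure 3A. In practice the returned set point would be handed to the inner velocity loop on every control cycle.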

D). Feature Localization

The proposed active compensation technique is agnostic to imaging modality, and in previous work we have demonstrated needle tracking in MRI coupled with active scan plane geometry control18. With the focus of this work being on developing active compensation during cooperatively controlled insertions, for evaluation in the lab we use a standalone software application with two orthogonal USB cameras, model C920 from Logitech (Lausanne, Switzerland); this serves as a proxy for medical imaging to provide real-time 3D coordinates of the needle tip and target within the robot workspace. Each image has a resolution of 640 x 480 pixels, and two-dimensional localization of the moving needle tip is found by analyzing pairs of sequential video frames using the dense optical flow algorithm introduced in Farneback8. For the purposes of demonstrating the active compensation technique, the target point is either selected manually or segmented by color in the camera images, while target orientation is set to a nominally straight trajectory from the entry point, although it could represent an approach vector for angulated insertions in future work. The outputs of both localizations are homogeneous transforms for the position and orientation of tip and target.

E). Active Compensation

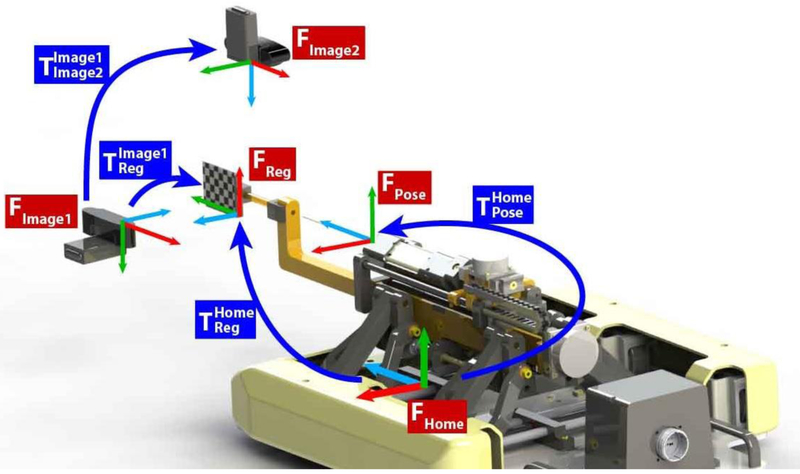

In this system the control of rotational bevel positioning is completely independent from the imaging modality used. Information of needle tip and target localization is passed one-way downstream from a standalone image-guidance software to the robot controller via the OpenIGTLink communication protocol25, an open network interface for image-guided therapy. Registration is performed prior to any targeting through a marker rigidly attached to the robot and in view of the image frame, and this registration marker along with each transformation used in the following calculations for the benchtop configuration is seen below in Figure 4. When moving to the MRI, registration would be performed with a set of MR visible fiducials in a z-frame configuration rigidly mounted to the robot.

Figure 4:

The coordinate systems and frame transformations used for robot to image guidance registration. The registration marker is rigidly attached to the robot and placed in view of the cameras for registration. Once registration is performed the marker is removed.

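This figure illustrates that $T^{\mathrm{Robot}}_{\mathrm{Image}}$ serves as the sequential multiplication of transformations required to register the imaging system’s coordinate frame to the current pose of the robot, $T^{\mathrm{Robot}}_{\mathrm{Image}} = T^{\mathrm{Robot}}_{\mathrm{Home}}\,T^{\mathrm{Home}}_{\mathrm{Marker}}\,T^{\mathrm{Marker}}_{\mathrm{Image}}$. In this series of multiplications, $T^{\mathrm{Robot}}_{\mathrm{Home}}$ is the transformation from the current pose of the robot to the robot home coordinate frame, $T^{\mathrm{Home}}_{\mathrm{Marker}}$ is a constant matrix describing the transformation from the robot home frame to the registration marker, and $T^{\mathrm{Marker}}_{\mathrm{Image}}$ is also a constant matrix describing the transformation from the registration marker rigidly attached to the robot to the imaging coordinate frame.

The robot controller continuously receives the localization transforms, $T^{\mathrm{Image}}_{\mathrm{Tip}}$ and $T^{\mathrm{Image}}_{\mathrm{Target}}$, throughout insertion and pre-multiplies them by $T^{\mathrm{Robot}}_{\mathrm{Image}}$ to determine $T^{\mathrm{Robot}}_{\mathrm{Tip}}$ and $T^{\mathrm{Robot}}_{\mathrm{Target}}$, the position and orientation of tip and target with respect to the current pose of the robot at each instant. Finally, $T^{\mathrm{Tip}}_{\mathrm{Target}}$ is found by the multiplication $\left(T^{\mathrm{Robot}}_{\mathrm{Tip}}\right)^{-1} T^{\mathrm{Robot}}_{\mathrm{Target}}$, the matrix product of the inverse of $T^{\mathrm{Robot}}_{\mathrm{Tip}}$ with $T^{\mathrm{Robot}}_{\mathrm{Target}}$.

Calculating $T^{\mathrm{Tip}}_{\mathrm{Target}}$ at each instant provides the information needed to determine the desired compensatory effort relative to a reference frame at the needle tip. With insertion defined along the Z-direction, the rotational effort in the plane normal to insertion can be found using the arctangent of the X and Y positions of $T^{\mathrm{Tip}}_{\mathrm{Target}}$ as shown in Equation 2.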

$$\theta_d = \operatorname{atan2}\left(Y, X\right) \tag{2}$$
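The chain of frame transformations and the resulting bevel direction can be illustrated with plain 4 x 4 homogeneous matrices. The sketch below is a hedged example rather than the controller code: the function and variable names are hypothetical, the matrices are nested Python lists to keep the example dependency-free, and the `atan2(y, x)` argument order is an assumed convention for the arctangent of the X and Y positions.

```python
import math
from functools import reduce


def matmul(a, b):
    """Product of two 4x4 homogeneous transforms stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def compose(*transforms):
    """Sequential multiplication, e.g. robot->home, home->marker, marker->image."""
    return reduce(matmul, transforms)


def rigid_inverse(t):
    """Invert a rigid transform: [R p; 0 1]^-1 = [R^T, -R^T p; 0 1]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def translation(x, y, z):
    """Pure-translation transform, used here only to build test poses."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def target_in_tip_frame(t_robot_image, t_image_tip, t_image_target):
    """Express the target pose relative to a frame at the needle tip."""
    t_robot_tip = matmul(t_robot_image, t_image_tip)
    t_robot_target = matmul(t_robot_image, t_image_target)
    return matmul(rigid_inverse(t_robot_tip), t_robot_target)


def desired_bevel_angle(t_tip_target):
    """Direction of compensatory effort in the plane normal to insertion (rad).

    ASSUMPTION: atan2(y, x) ordering; the zero-angle reference of the bevel
    is not specified in the text.
    """
    return math.atan2(t_tip_target[1][3], t_tip_target[0][3])
```

Because the tip and target transforms are both pre-multiplied by the same registration matrix, the relative transform does not depend on where the imaging frame sits in the robot workspace; only errors that affect tip and target differently propagate into the desired angle.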

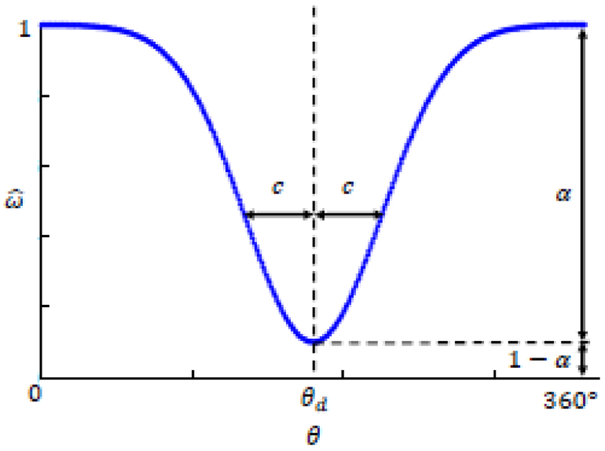

In our case the imaging software is able to estimate needle tip orientation; therefore, the rotational component of the needle tip transform can be used to employ a variable curvature needle compensation technique, where both direction and magnitude of desired effort can be implemented. A Gaussian-based model employing Continuous Rotation and Variable (CURV) curvature12 was used for all insertion trials. This is a variation of the duty-cycle approach6 wherein the needle continuously rotates with an angle-dependent angular velocity. In this model for rotational bevel positioning, θ is the current rotational angle and θd is the desired angle of bevel position for compensatory effort. Angular velocity at each rotational position is calculated using Equation 3, with the two parameters c and α corresponding to the Gaussian width and the magnitude of compensatory effort, respectively.

| (3) |

A larger α generates a greater drop from the nominal rotational velocity, leading to more deflection in the desired direction θd, while increasing c widens the total range of angular position where rotation occurs below the nominal velocity. Figure 5 illustrates the angular velocity profile for the CURV approach to active compensation. To note, the scope of this work is not to compare different approaches to steering of the needle trajectory; though the authors chose to employ the CURV approach, other bevel tip based curvature models such as the duty cycle6 technique could be readily implemented instead.
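To make the roles of α and c concrete, the following sketch evaluates an angle-dependent angular velocity with a Gaussian dip centered on θd. The body of Equation 3 is not reproduced here, so the exact expression, the nominal velocity `omega_nominal`, and the clamping of α to [0, 1] are assumptions chosen only to match the qualitative description: a larger α deepens the drop from nominal velocity and a larger c widens it.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def curv_angular_velocity(theta, theta_d, alpha,
                          c=math.radians(10.0),
                          omega_nominal=2.0 * math.pi):
    """Angle-dependent rotation speed (rad/s) with a Gaussian dip at theta_d.

    ASSUMPTION: illustrative form only, not the published Equation 3.
    c = 10 degrees follows the text; omega_nominal is a placeholder value.
    """
    alpha = min(max(alpha, 0.0), 1.0)   # keep the dip between 0 and 100 %
    d = wrap_angle(theta - theta_d)     # shortest angular distance to theta_d
    return omega_nominal * (1.0 - alpha * math.exp(-(d * d) / (2.0 * c * c)))
```

In this form α = 0 gives constant rotation, which corresponds to a nominally straight path, while α = 1 lets the rotation stall at θd, which corresponds to the maximum curvature of the needle in that direction.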

Figure 5:

Angular velocity of the needle during Continuous Rotation and Variable curvature (CURV) rotational compensation. This Gaussian-based model is dependent on the current rotational position θ, the desired direction of compensatory effort θd, the desired Gaussian width c, and the magnitude of desired compensatory effort α.

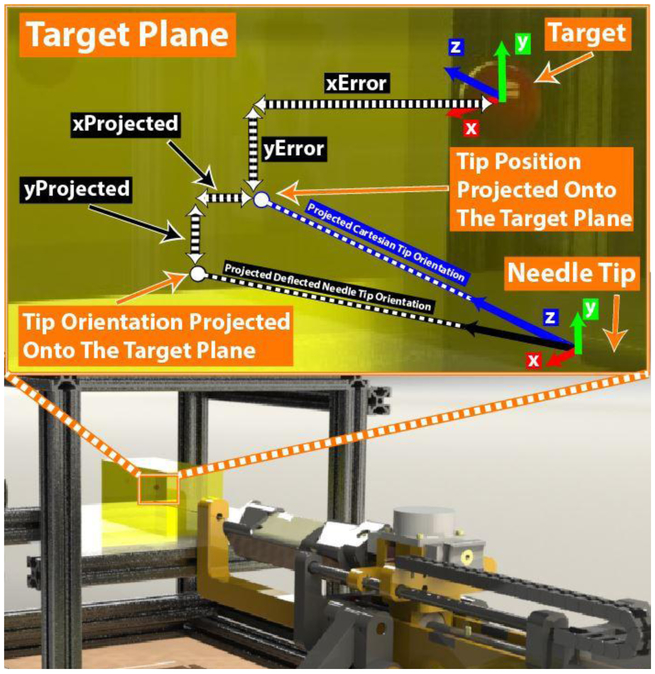

In our case θd was calculated throughout insertion using Equation 2 and c was set to 10° for all insertions. The magnitude of compensatory effort α was calculated throughout insertion by the two-dimensional difference between the target point and projection of the needle tip orientation onto the target plane, thus selecting the intermediate value between maximum curvature of a given needle in a given tissue and a straight line trajectory. Euler angles were extracted from the transform to determine the rotations of needle tip orientation about each of the x, y, z axes of the needle insertion coordinate frame, where insertion was performed along the z-direction. The locations of the projected needle tip position on the target plane were found with Equations 4 – 5, using trigonometric relationships of the angles of rotation about the x and y axes normal to the insertion direction, Rot(x) and Rot(y) respectively.

| (4) |

| (5) |

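Furthermore, the x and y values of the current needle tip position were used directly as projections of the current needle position on the target plane, and the in-plane magnitude of Errorprojected was calculated using Equation 6.

$$\mathrm{Error}_{projected} = \sqrt{\left(X_{Error} + X_{Projected}\right)^2 + \left(Y_{Error} + Y_{Projected}\right)^2} \tag{6}$$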

Finally, the instantaneous value of α was set using Equation 7, the ratio of Errorprojected to the maximum deflection attainable using our 18G needle, Errormax. For this experimental setup, Errormax was experimentally determined to be 9.31 mm RMS by performing insertions with no needle rotation to a depth of 125 mm, typical of all insertion trials performed as described below in section 5.B. Figure 6 illustrates the components required to calculate Errorprojected inside the experimental setup.

Figure 6:

Dynamically determining the magnitude of desired steering compensation α using the needle tip position and orientation. The x and y positions of the needle tip are projected along the Cartesian z-axis to the target plane as the current XError and YError between tip and target. Additionally, the instantaneous needle tip orientation is extended to the target plane, where XProjected and YProjected are found using the Euler angles determined from the rotation matrix of the needle tip transform along the remaining insertion depth. The magnitude of total instantaneous Errorprojected is determined by Equation 6, the two-dimensional Pythagorean theorem of (XError + XProjected) and (YError + YProjected).

$$\alpha = \frac{\mathrm{Error}_{projected}}{\mathrm{Error}_{max}} \tag{7}$$
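The calculation of α from tip position and orientation can be summarized in a short sketch. The combination of current and projected error and the ratio to Errormax follow the description of Equations 6 and 7; the trigonometric projection, its sign convention, the definition of the error as tip minus target, and the clamp of α to 1 are assumptions made for illustration, since the bodies of Equations 4 and 5 are not reproduced here.

```python
import math

ERROR_MAX_MM = 9.31  # maximum attainable deflection reported in the text


def compensation_magnitude(tip_xy, target_xy, rot_x, rot_y,
                           remaining_depth, error_max=ERROR_MAX_MM):
    """Return (alpha, error_projected) for the current tip pose.

    tip_xy, target_xy : in-plane positions (mm) in the insertion frame
    rot_x, rot_y      : tip rotations about x and y (rad)
    remaining_depth   : distance along z from tip to target plane (mm)
    """
    # Current in-plane offset between tip and target.
    x_error = tip_xy[0] - target_xy[0]
    y_error = tip_xy[1] - target_xy[1]

    # ASSUMPTION: right-handed rotations, so tilting about +y drifts toward
    # +x and tilting about +x drifts toward -y over the remaining depth.
    x_projected = remaining_depth * math.tan(rot_y)
    y_projected = -remaining_depth * math.tan(rot_x)

    # Two-dimensional magnitude of current plus projected error.
    error_projected = math.hypot(x_error + x_projected,
                                 y_error + y_projected)

    # Ratio of projected error to the maximum attainable deflection,
    # clamped to 1 as an added safeguard (assumption).
    alpha = min(error_projected / error_max, 1.0)
    return alpha, error_projected
```

For example, a tip that is 3 mm and 4 mm off the target in x and y with no angular deviation gives a projected error of 5 mm and α of roughly 0.54, that is, about half of the maximum compensatory effort.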

5). Results

A). Tissue Phantoms

To produce useful results of targeting accuracy with compensation for needle deflection, the tissue phantoms used must mimic realistic needle forces and induce needle deflection comparable to a clinical scenario. Using an 18G needle, Podder et al.19 measured an average in vivo needle force of 8.87 N outside the prostate and 6.28 N inside the prostate during brachytherapy. Furthermore, the Young’s modulus of excised prostate tissue was reported as 16.0 ± 5.7 kPa and 40.6 ± 15.9 kPa for healthy and cancerous samples, respectively9. In experiments for this work, tissue phantoms were made from a 70%/30% ratio by volume of Plastisol liquid PVC and PVC softener, parts Regular Liquid Plastic and Plastic Softener from M-F Manufacturing (Fort Worth, TX, USA). Use of this material for developing tissue phantoms was described in Hungr et al.10 and also used in Ahn et al.2 and Elayaperumal et al.5 to mimic soft tissue for needle insertion experiments. Constant velocity needle insertions were performed at 2 mm/s, 6 mm/s, and 10 mm/s, with average forces at all speeds reaching between 5.5 N and 6.5 N, which is within the range of in vivo forces presented. Additionally, the Young’s modulus was tested through ultrasound elastography on a LOGIQ E9 ultrasound machine with probe model C106, both from General Electric (Boston, MA, USA). The Young’s modulus for the 70%/30% concentration of plastisol and softener was found to be 25 kPa, within the range reported for excised prostate tissue.

B). Accuracy for Stationary Targets

Two targeting experiments were performed, the first on a static target, and then on a shifted target mimicking tissue deformation and target deflection. In all insertion cases the needle was inserted until it reached the target depth based on visual confirmation of tip location using the needle tracking software. For the case of static targets, the robot was positioned to place the needle tip at the desired entry point aligned along a straight line trajectory toward the target location, and insertions were performed in three conditions: 1) autonomous insertion with no rotation to characterize Errormax, the needle deflection without active compensation, 2) autonomous insertion with image-guided active compensation, and 3) hands-on user directed cooperative insertion with image guided active compensation. The hypothesis was that targeting accuracy would improve with active compensation, and that targeting accuracy would not be negatively affected when moving from an autonomous to cooperative insertion. In each trial a different target within the phantom was selected to avoid errors in a needle following a previous insertion track, and the result for all conditions was the position error between the needle tip and target at the target plane, orthogonal to the initial needle alignment axis.

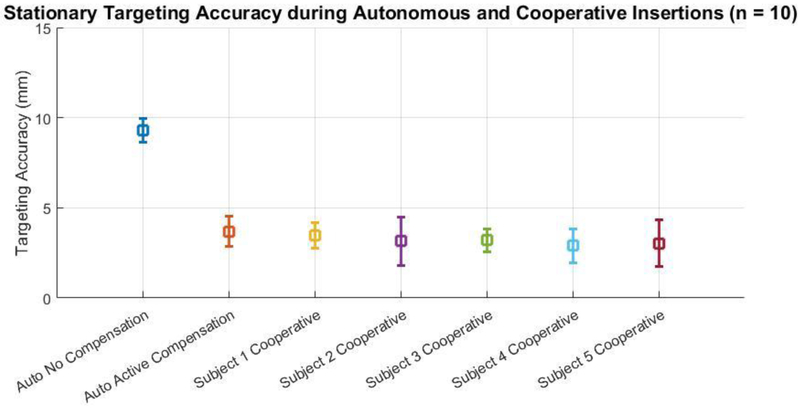

Ten targeted insertions were performed with and without closed-loop image-guided active compensation, and the average in-plane targeting error was found to be 9.30 mm RMS and 3.79 mm RMS for the no compensation and active compensation cases, respectively. Subsequently, five participants (3 males, 2 females, average age 33.8 ± 10.32 years, all right handed, all novice to the task) each performed ten cooperatively controlled needle insertions with active compensation, and Table 1 describes the in-plane targeting accuracy for each participant. Figure 7 illustrates the comparison of targeting accuracy between the autonomous no rotation, autonomous with active compensation, and all hands-on cooperative cases with active compensation.

Table 1:

Stationary targeting accuracy for insertions under cooperative control

| | Subject 1 | Subject 2 | Subject 3 | Subject 4 | Subject 5 |
|---|---|---|---|---|---|
| Mean (mm) | 3.50 | 3.15 | 3.20 | 2.90 | 3.04 |
| St. Dev. (mm) | 0.71 | 1.36 | 0.62 | 0.95 | 1.28 |

Figure 7:

Comparison of targeting accuracy (n=10) between autonomous insertions with no rotational bevel tip positioning, autonomous insertions with closed-loop image-guided active compensation through rotational bevel tip positioning, and 5 subjects performing hands-on cooperatively controlled needle insertions with active compensation.

For each of the insertion sets with active compensation, paired t-tests were performed to compare the data. Table 2 shows that there was no statistically significant difference (all p > 0.05) between the targeting accuracy of autonomous insertion and any of the user-directed cooperative cases, nor between the targeting accuracy of any pair of users. Across all insertions the average target depth was 125.23 mm RMS and the average targeting accuracy with active compensation was found to be 3.56 mm RMS.

Table 2:

Statistical outcomes from paired t-tests on stationary targeting accuracy across insertion conditions

| | Cooperative Subject 5 | Cooperative Subject 4 | Cooperative Subject 3 | Cooperative Subject 2 | Cooperative Subject 1 |
|---|---|---|---|---|---|
| Autonomous Insertion | 0.253 | 0.085 | 0.247 | 0.298 | 0.514 |
| Cooperative Subject 1 | 0.383 | 0.098 | 0.383 | 0.443 | |
| Cooperative Subject 2 | 0.803 | 0.698 | 0.880 | | |
| Cooperative Subject 3 | 0.549 | 0.448 | | | |
| Cooperative Subject 4 | 0.802 | | | | |

C). Accuracy for Shifted Targets

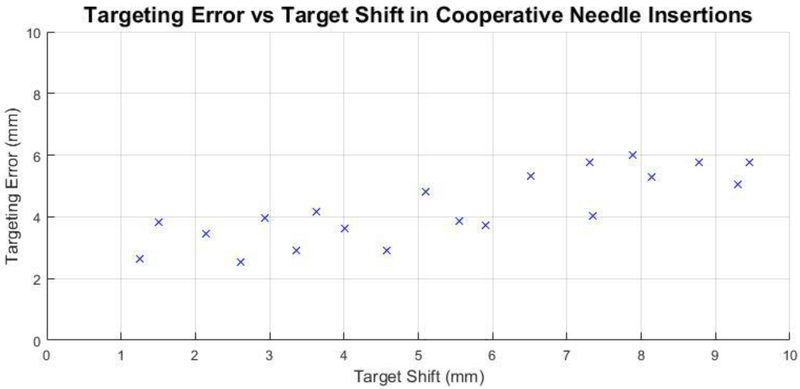

The second targeting experiment evaluated the response of the system to target shift during cooperative insertions. As the error seen in the non-compensatory case of the first experiment was 9.30 mm RMS, it was expected that the system could compensate with comparable targeting accuracy for target shifts below this value. Twenty targeted cooperative insertions were performed in a similar manner to the first experiment, with a target shift introduced once the needle made contact with the phantom. The shift was introduced virtually in the target plane perpendicular to the insertion axis, with both direction and magnitude assigned randomly using polar coordinates. The direction of shift was open to all 360° and the magnitude was randomly assigned between 1 – 10 mm.
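A virtual target shift of this kind can be generated as in the sketch below. It is an illustration only: the text states that direction and magnitude were assigned randomly in polar coordinates, but not which distribution was used, so uniform sampling of both quantities and the function name are assumptions.

```python
import math
import random


def random_target_shift(rng, min_mm=1.0, max_mm=10.0):
    """Return an in-plane (dx, dy) target shift in mm.

    ASSUMPTION: magnitude and direction are both drawn uniformly; the
    distribution used in the experiments is not stated in the text.
    """
    magnitude = rng.uniform(min_mm, max_mm)        # 1 to 10 mm
    direction = rng.uniform(0.0, 2.0 * math.pi)    # any direction in the plane
    return magnitude * math.cos(direction), magnitude * math.sin(direction)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so a trial list can be regenerated
    for trial in range(20):
        dx, dy = random_target_shift(rng)
        print(f"trial {trial + 1:2d}: shift = ({dx:+.2f}, {dy:+.2f}) mm")
```

The returned offsets would be added to the x and y coordinates of the target in the target plane once needle contact with the phantom is detected.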

There was no statistically significant variation among users in the previous experiment, thus a single user was used for this second experiment. Figure 8 shows the targeting accuracy for each insertion with respect to the amount of target shift, sorted by magnitude of the randomly assigned shift.

Figure 8:

Targeting accuracy vs. in-plane target shift magnitude for twenty cooperatively controlled needle insertions with a randomized shift introduced upon needle contact with the phantom. The direction and magnitude of the target shift were randomly generated in polar coordinates, placing the shift on the target plane perpendicular to the needle insertion axis at a magnitude between 1 – 10 mm.

D). Accuracy of Feature Localization

Finally, the accuracy of feature localization must be validated before the needle tracking outputs can be used with confidence for robot control. A series of five controlled needle insertions were performed into a phantom made with the same 70%/30% ratio of plastisol and softener described in Section 5.A to test the accuracy of needle localization. Error was computed by subtracting a ground truth coordinate, found by manual selection of the needle tip in each video frame, from the coordinate calculated by the optical flow needle tracking algorithm. With a camera resolution of 640x480 pixels, the error over the length of all insertions was 10.78 pixelsrms along the needle insertion path and 1.05 pixelsrms perpendicular to the insertion path, corresponding to 2.65 mmrms and 0.12 mmrms at the phantom boundary respectively. Error along the axis of needle insertion is greater because the insertion itself creates substantially more motion along that axis than deflection creates perpendicular to it.
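The split of tracking error into components along and perpendicular to the insertion path can be sketched as follows. The function names are hypothetical, and representing the insertion path as a single fixed 2D direction in image coordinates is an assumption made for this illustration.

```python
import math


def rms(values):
    """Root-mean-square of a sequence of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def split_tracking_error(tracked, truth, axis):
    """Return (rms_along, rms_perpendicular) of tracked-minus-truth error.

    tracked, truth: lists of (x, y) pixel coordinates, one pair per frame.
    axis: (x, y) direction of the insertion path in the image (assumed fixed).
    """
    norm = math.hypot(axis[0], axis[1])
    ux, uy = axis[0] / norm, axis[1] / norm
    along, perp = [], []
    for (tx, ty), (gx, gy) in zip(tracked, truth):
        ex, ey = tx - gx, ty - gy
        along.append(ex * ux + ey * uy)   # projection onto the insertion axis
        perp.append(-ex * uy + ey * ux)   # projection onto the axis normal
    return rms(along), rms(perp)
```

Converting the pixel values to millimetres additionally requires the image scale at the plane of interest, which depends on the camera geometry and is not modelled in this sketch.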

6). Discussion

In this work we presented an imaging-agnostic method to provide closed-loop active compensation for unmodeled needle deflection and target shift during cooperatively controlled needle insertions. The controller is structured to determine compensation parameters from streamed homogeneous frame transformations representing the needle tip and target, received via OpenIGTLink from any capable imaging source. The robot autonomously updates the rotational bevel position based on these inputs to direct the needle tip towards the target. As opposed to an open-loop insertion, active compensation is robust to registration error, distortion of images, unmodeled needle deflection, and swelling or target shift. Here we performed tests in the lab setting using perpendicular cameras as a proxy for multiplanar MR imaging, as we have previously shown needle localization in MRI18. This system was developed for use in the lab and can be adopted by other researchers: it requires only two low-cost USB cameras, and the tracking algorithm is available open source on GitHub (https://github.com/WPI-AIM/NeedleTracker).
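One way compensation parameters might be derived from the two streamed transformations is sketched below. This is not the controller used in this work. The function names are hypothetical, and the sketch assumes 4x4 homogeneous matrices in a common frame with the tip frame's z axis aligned with the insertion direction. It also leaves open how the returned direction maps to a physical bevel orientation, since that depends on the needle and the sign convention.

```python
import math


def target_in_tip_frame(T_tip, T_target):
    """Express the target position in the needle tip frame.

    T_tip, T_target: 4x4 homogeneous transforms (nested lists) in a shared
    world frame. Computes R_tip^T * (p_target - p_tip).
    """
    delta = [T_target[i][3] - T_tip[i][3] for i in range(3)]
    return [sum(T_tip[i][j] * delta[i] for i in range(3)) for j in range(3)]


def steering_direction(T_tip, T_target):
    """Return (angle_rad, in_plane_error, remaining_depth).

    angle_rad is the direction, about the assumed insertion (z) axis, in
    which the tip would need to deflect to reduce the in-plane error.
    """
    x, y, z = target_in_tip_frame(T_tip, T_target)
    return math.atan2(y, x), math.hypot(x, y), z


# Example with an identity tip pose and a target 2 mm off-axis, 50 mm deep.
T_tip = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
T_target = [[1, 0, 0, 0], [0, 1, 0, 2], [0, 0, 1, 50], [0, 0, 0, 1]]
angle, error_mm, depth_mm = steering_direction(T_tip, T_target)
```

Because the computation uses only the relative pose of tip and target, it does not depend on which imaging source produced the transforms.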

Full robotic control of needle insertion is expected to be the most accurate way to place a needle tip for biopsy, but this leap from the clinical standard of practice would likely be met with caution from both acting physicians and regulatory bodies. Instead, through user-directed control via a cooperative insertion paradigm, a physician would maintain full control of the procedure directly at the procedure site, while adding the benefit of robotic accuracy. Continuous augmented haptic feedback is also provided via force sensing of needle interaction with tissue, returning the tactile forces used by the physician for anatomical localization, which would typically be lost when migrating to robotic insertion. Furthermore, an enhanced haptic response can be provided during a hands-on cooperative insertion, for instance a scaled force response for event-based detection of membrane puncture.
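The idea of a user's applied force directing insertion velocity can be illustrated with a simple admittance-style mapping. This is a generic sketch and not the control law implemented in this work. The function name, gain, deadband, and speed limit are all hypothetical values chosen for illustration.

```python
def insertion_velocity(force_n, gain_mm_per_s_per_n=2.0,
                       deadband_n=0.5, max_speed_mm_s=5.0):
    """Map a user's applied force (N) to a commanded insertion velocity (mm/s).

    Assumed behaviour: forces inside the deadband are ignored, the response
    is linear outside it, and the command is clamped to a maximum speed.
    """
    if abs(force_n) <= deadband_n:
        return 0.0
    # Subtract the deadband so the velocity ramps up from zero at its edge.
    if force_n > 0:
        effective = force_n - deadband_n
    else:
        effective = force_n + deadband_n
    velocity = gain_mm_per_s_per_n * effective
    return max(-max_speed_mm_s, min(max_speed_mm_s, velocity))
```

Under a mapping of this kind the user sets the pace of insertion at every instant, while rotational bevel positioning continues to be updated autonomously from image feedback.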

Needle insertions towards stationary targets were performed using image-guided active compensation under both autonomous and cooperative insertion. The experiment was intended to demonstrate that the improvement in accuracy from active compensation is maintained when the physician remains directly in the loop, which also enhances the safety of the procedure. A case with no compensation was performed under autonomous insertion to illustrate that active compensation does increase overall targeting accuracy; the participants did not perform the task without active compensation. Figure 6 illustrates that in-plane targeting error is greatly reduced when using closed-loop active compensation as compared to the non-compensated, no-rotation insertion case. The average targeting error was 3.56 mmrms across all cases with active compensation, considerably less than the average 6.6 ± 5.1 mm error found via open-loop manual needle insertions during clinical trials using the robot base for target alignment26. Furthermore, there was no statistically significant difference in targeting accuracy between autonomous and user-directed cooperative insertions. These results show three things: 1) closed-loop active compensation through autonomous rotational bevel tip positioning can improve targeting accuracy when compared to open-loop manual needle insertion, 2) targeting accuracy when using closed-loop autonomous rotational bevel positioning does not degrade when moving from an autonomous insertion to a user-directed cooperative insertion, and 3) the system is robust to different individuals performing the cooperative insertions.

Regarding cooperative insertions toward shifted targets, the results indicate that the system does compensate for target shift, with accuracy degrading as the shift increases towards Errormax. This outcome is expected for a system using a relatively stiff needle typical of clinical biopsy, where the aggressive needle poses and s-curve conformations seen in research on thin flexible needles are not possible. Once a deflection has begun in one direction, it is much harder to overcome the curvature already present in that undesired direction. This matters because the location of the target shift in the experiment was randomized. If the needle entered the phantom with the bevel tip facing a certain way and began deflecting, a random shift to a location opposite the initial trajectory line, at a distance and angle unlikely to occur anatomically, would degrade the final targeting accuracy and would not reflect what could be possible with this system clinically. Figure 8 suggests that targeting accuracy comparable to the stationary case can be achieved for target shifts up to approximately 5 mm, which in a clinical scenario can still lead to more biopsies collected during the first insertion, shortening procedure time and limiting patient discomfort.
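The notion of a curvature-limited bound on correctable error can be illustrated with a constant-curvature arc, a common simplification in needle steering models. This is an assumption made for illustration only, not the definition of Errormax used in this work. The function name and the example radius are hypothetical.

```python
import math


def max_lateral_offset(radius_mm, remaining_depth_mm):
    """Largest lateral offset reachable along a constant-curvature arc.

    Assumes the needle starts tangent to the insertion axis and follows a
    circular arc of the given radius over the remaining depth measured along
    that axis. The offset is r - sqrt(r^2 - d^2), valid for 0 <= d <= r.
    """
    if radius_mm <= 0:
        raise ValueError("radius must be positive")
    if not 0 <= remaining_depth_mm <= radius_mm:
        raise ValueError("remaining depth must lie between 0 and the radius")
    return radius_mm - math.sqrt(radius_mm ** 2 - remaining_depth_mm ** 2)


# Hypothetical example: 1000 mm radius of curvature, 125 mm of remaining depth.
offset_mm = max_lateral_offset(1000.0, 125.0)
```

Under this simplification a shift larger than the reachable offset cannot be fully corrected regardless of how the bevel is oriented, and any curvature already accumulated in the wrong direction reduces the reachable offset further.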

In each experiment the depth of the needle was determined using the visual tracking software. When this work is extended to MRI, live imaging would be available to guide the tip to the target depth, as was done here. Accuracy would be assessed in transverse images at the target plane, similarly yielding in-plane error as determined in these experiments.

From a tissue interaction standpoint, a cooperative insertion is simply a variable-velocity insertion; therefore, as long as accurate feature localization is provided to update rotational bevel positioning, the targeting accuracy should be comparable whether performed with autonomous or cooperative insertion. This paper presented a method for image-guided closed-loop active compensation of unmodeled needle deflection and target shift, and showed no statistically significant difference in targeting accuracy between autonomous robotic needle insertion and user-directed hands-on cooperative needle insertion. Based on analysis of the results, the authors believe overall targeting accuracy could be improved if the same experiments were performed with an increased speed of needle rotation, with a more controlled target shift that better represents what is possible anatomically, and with a biopsy gun fitted with a like-new needle in each trial, in case deflection during an experiment plastically deformed the needle and left it with a natural curvature.

7). Acknowledgement and Disclosure

This research was funded by NIH R01 CA111288, NIH R01 CA166379 and NIH R01 EB020667.

NH has a financial interest in Harmonus, a company developing Image Guided Therapy products. NH’s interests were reviewed and are managed by Brigham and Women’s Hospital and Partners Healthcare in accordance with their conflict of interest policies.

8). References

- 1. Abayazid M, Roesthuis RJ, Reilink R, Misra S. Integrating deflection models and image feedback for real-time flexible needle steering. IEEE Transactions on Robotics, 29(2):542–553, 2013.

- 2. Ahn B, Kim J, Ian L, Rha K, Kim H. Mechanical property characterization of prostate cancer using minimally motorized indenter in an ex vivo indentation experiment. Urology, 76(4):1007–1011, 2010.

- 3. Bettini A, Land S, Okamura A, Hager G. Vision assisted control for manipulation using virtual fixtures. IEEE Transactions on Robotics, 20(6):953–966, 2004.

- 4. Elayaperumal S, Bae JH, Christensen D, Cutkosky MR, Daniel BL, Black RJ, Costa JM, Faridian F, Moslehi B. MR-compatible biopsy needle with enhanced tip force sensing. IEEE World Haptics Conference, pp. 109–114, 2013.

- 5. Elayaperumal S, Bae JH, Daniel BL, Cutkosky MR. Detection of membrane puncture with haptic feedback using a tip-force sensing needle. IEEE International Conference on Intelligent Robots and Systems, pp. 3975–3981, 2014.

- 6. Engh JA, Minhas DS, Kondziolka D, Riviere CN. Percutaneous intercerebral navigation by duty-cycled spinning of flexible bevel tipped needles. Neurosurgery, 67(4):1117–1123, 2010.

- 7. Eslami S, Shang W, Li G, Patel N, Fischer GS, Tokuda J, Hata N, Tempany CM, Iordachita I. In-bore prostate transperineal interventions with an MRI-guided parallel manipulator: system development and preliminary evaluation. International Journal of Medical Robotics and Computer Assisted Surgery, 12(2):98–109, 2015.

- 8. Farneback G. Two-frame motion estimation based on polynomial expansion. Image Analysis, pp. 363–370, 2003.

- 9. Hoyt K, Casteneda B, Zhang M, Nigwekar P, di Sant’Agnese PA, Joseph JV, Strang J, Rubens D, Parker J. Tissue elasticity properties as biomarkers for prostate cancer. Cancer Biomarkers, 4(4–5):213–225, 2001.

- 10. Hungr N, Long J, Beix V, Troccaz J. A realistic deformable prostate phantom for multimodal imaging and needle-insertion procedures. Medical Physics, 39(4):2031–2041, 2012.

- 11. Jacopec M, Harris SJ, Rodrigues y Baena F, Gomes P, Cobb J, Davies B. The first clinical application of a hands-on robotic knee surgery system. Computer Aided Surgery, 6:329–339, 2001.

- 12. Li G. Robotic System Development for Precision MRI-Guided Needle-Based Interventions. PhD Dissertation, Dept. of Mechanical Engineering, June 2016.

- 13. Megali G, Tonet O, Stefanini C, Boccadoro M, Papaspyropoulos V, Angelini L, Dario P. A Computer-Assisted Robotic Ultrasound-Guided Biopsy System for Video-Assisted Surgery. Medical Image Computing and Computer-Assisted Intervention, MICCAI, pp. 343–350, 2016.

- 14. Minhas DS, Engh JA, Fenske MM, Riviere CN. Modeling of needle steering via duty-cycled spinning. IEEE International Conference on Engineering in Medicine and Biology Society, EMBC, pp. 2756–2759, 2017.

- 15. Nycz CJ, Gondokaryono R, Carvalho P, Patel N, Wartenberg M, Pilitsis JG, Fischer GS. Mechanical validation of an MRI compatible stereotactic neurosurgery robot in preparation for pre-clinical trials. International Conference on Intelligent Robots and Systems, IROS, 2017.

- 16. Okamura AM, Simone C, O’Leary MD. Force modeling for needle insertion into soft tissue. IEEE Transactions on Biomedical Engineering, 10(51):1707–1716, 2004.

- 17. Pacchierotti C, Abayazid M, Misra S, Prattichizzo D. Teleoperation of steerable flexible needles by combining kinesthetic and vibratory feedback. IEEE Transactions on Haptics, 7(4):551–556, 2014.

- 18. Patel NA, van Katwijk T, Li G, Moreira P, Shang W, Misra S, Fischer GS. Closed-loop asymmetric-tip needle steering under continuous intraoperative MRI guidance. IEEE International Conference on Engineering in Medicine and Biology Society, EMBC, pp. 6687–6690, 2015.

- 19. Podder T, Clark D, Sherman J, Fuller D, Messing E, Rubens D, Strang J, Brasacchio R, Liao L, Ng W, Yu Y. In vivo motion and force measurement of surgical needle intervention during prostate brachytherapy. Medical Physics, 33(8):2915–2922, 2006.

- 20. Prasad SK, Kitagawa M, Fischer GS, Zand J, Talamini MA, Taylor RH, Okamura AM. A modular 2-DOF force-sensing instrument for laparoscopic surgery. Medical Image Computing and Computer Assisted Interventions Conference, MICCAI, pp. 279–286, 2013.

- 21. Rossa C, Usmani N, Sloboda R, Tavakoli M. A hand-held assistant for semiautomated percutaneous needle steering. IEEE Transactions on Biomedical Engineering, 64(3):637–648, 2017.

- 22. Schneider O, Troccaz J, Chavanon O, Blin D. PADyC: a Synergistic Robot for Cardiac Puncturing. IEEE International Conference on Robotics and Automation, ICRA, pp. 2883–2888, 2000.

- 23. Stoianovici D, Kim C, Srimathveeravalli G, Sebrecht P, Petrisor D, Coleman J, Solomon SB, Hricak H. MRI-safe robot for endorectal prostate biopsy. IEEE Transactions on Mechatronics, 19(4):1289–1299, 2014.

- 24. Swensen JP, Cowan NJ. Torsional dynamics compensation enhances robotic control of tip-steerable needles. IEEE International Conference on Robotics and Automation, ICRA, pp. 1601–1606, 2012.

- 25. Tokuda J, Fischer GS, Papademetris X, Yaniv Z, Ibanez L, Cheng P, Liu H, Blevins J, Arata J, Golby AJ, Kapur T, Pieper S, Burdette EC, Fichtinger G, Tempany CM, Hata N. OpenIGTLink: an open network protocol for image-guided therapy environment. International Journal of Medical Robotics, 5(4):423–434, 2009.

- 26. Tokuda J, Tuncali K, Li G, Patel N, Heffter T, Fischer GS, Iordachita I, Burdette EC, Hata N, Tempany C. In-Bore MRI-Guided transperineal prostate biopsy using 4-DOF needle-guide manipulator. International Society for Magnetic Resonance in Medicine, 2016.

- 27. Tse ZTH, Elhawary H, Rea M, Davies B, Young I, Lamperth M. Haptic needle unit for MR-guided biopsy and its control. IEEE Transactions on Mechatronics, 17(1):183–187, 2012.

- 28. Troccaz J, Peshkin M, Davies B. Guiding systems for computer aided surgery: introducing synergistic devices and discussing the different approaches. Medical Image Analysis, 2(2):101–118, 1998.

- 29. Vigaru B, Petrisor D, Patriciu A, Mazilu D, Stoianovici D. MR compatible actuation for medical instrumentation. IEEE International Conference on Automation, Quality and Testing, Robotics, pp. 49–52, 2008.

- 30. Vrooijink GJ, Abayazid M, Patil S, Alterovitz R, Misra S. Needle path planning and steering in a three-dimensional non-static environment using two-dimensional ultrasound images. International Journal of Robotics Research, 33(10):1361–1374, 2014.

- 31. Wartenberg M, Schornak J, Carvalho P, Patel N, Iordachita I, Tempany C, Hata N, Tokuda J, Fischer GS. Closed-loop autonomous needle steering during cooperatively controlled needle insertions for MRI-guided pelvic interventions. The 10th Hamlyn Symposium on Medical Robotics, pp. 33–34, 2017.

- 32. Webster J, Kim JS, Cowan NJ, Chirikjian GS, Okamura AM. Nonholonomic modeling of needle steering. The International Journal of Robotics Research, 25(5–6):509–524, 2006.