Abstract

The human brain network is modular: it is composed of communities of tightly interconnected nodes1. This network contains local hubs, which have many connections within their own communities, and connector hubs, which have connections diversely distributed across communities2,3. A mechanistic understanding of these hubs and how they support cognition has not been demonstrated. Here, we leveraged individual differences in hub connectivity and cognition. We show that a model of hub connectivity accurately predicts the cognitive performance of 476 individuals in four distinct tasks. Moreover, there is a general optimal network structure for cognitive performance: individuals with diversely connected hubs and consequent modular brain networks exhibit increased cognitive performance, regardless of the task. Critically, we find evidence consistent with a mechanistic model in which connector hubs tune the connectivity of their neighbors to be more modular while allowing for task-appropriate information integration across communities, which increases global modularity and cognitive performance.

Main

The human brain is a complex network that can be parsimoniously summarized by a set of nodes representing brain regions and a set of edges representing the connections between brain regions. In network models of fMRI data, each edge represents the strength of functional connectivity (the temporal correlation of fMRI activity levels) between two nodes (Figure 1). This network model can be used to study global and local brain connectivity patterns. Brain networks contain communities: groups of nodes that are more strongly connected to members of their own group than to members of other groups (Figure 1)1,4,5. This feature of networks is termed modularity and can be quantified by the modularity quality index Q (see Methods for equation).
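The Methods equation for Q is not reproduced in this section. As a minimal sketch, assuming the standard weighted Newman-Girvan form Q = (1/2m) Σ_ij [A_ij − k_i k_j / 2m] δ(c_i, c_j), where A_ij is the edge weight between nodes i and j, k_i is the strength of node i, 2m is the total edge weight counted in both directions, and δ(c_i, c_j) is 1 when i and j share a community, Q can be computed as below. The function name `modularity_q` is hypothetical, and the paper's exact variant may differ.

```python
import numpy as np


def modularity_q(A, labels):
    """Weighted Newman-Girvan modularity Q of a given community partition.

    A      -- symmetric, non-negative weighted adjacency matrix, shape (n, n)
    labels -- community label of each node, shape (n,)
    """
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    k = A.sum(axis=1)                             # node strengths k_i
    two_m = k.sum()                               # 2m: every edge weight counted twice
    same = labels[:, None] == labels[None, :]     # delta(c_i, c_j)
    expected = np.outer(k, k) / two_m             # null-model weight k_i * k_j / 2m
    return float(((A - expected) * same).sum() / two_m)


# Two disconnected triangles, each labelled as its own community.
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)]:
    A[i, j] = A[j, i] = 1.0
print(modularity_q(A, [0, 0, 0, 1, 1, 1]))
```

For the two disconnected triangles, each treated as its own community, Q is 0.5 (up to floating-point rounding). Assigning all six nodes to a single community gives Q = 0, because the observed and expected within-community weights are then equal.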

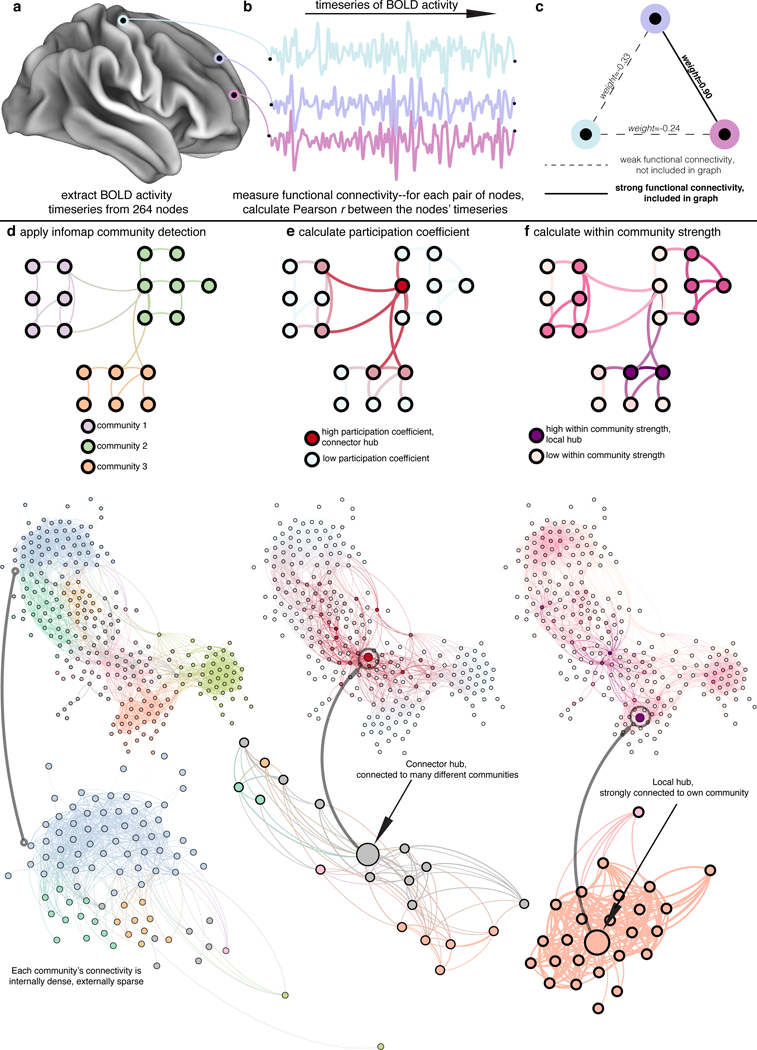

Figure 1 |. Functional connectivity and network science processing workflow.

a, The mean signal across time is extracted from 264 cortical, sub-cortical, and cerebellar regions, three of which are shown here. b, The time series of the three nodes are shown. To measure functional connectivity, the Pearson correlation coefficient r is calculated between the time series of node i and the time series of node j for all pairs of nodes i and j. c, The strongest functional connections (e.g., the top 5% of r values) serve as weighted edges in the graph (a range of graph densities was explored; see Methods for details). d, The Infomap community detection algorithm is applied, generating a community assignment for each node, displayed here in different colors in a schematic (top) and the mean graph across subjects (bottom). e, Given that community assignment and network, each node's participation coefficient is calculated. Red nodes are high participation coefficient nodes, shown here in a schematic (top) and the mean graph (bottom). f, Within community strengths are also calculated. Purple nodes are high within community strength nodes, shown here in a schematic (top) and the mean graph (bottom). The graphs along the bottom are laid out using the force-atlas algorithm, in which nodes are repelling magnets and edges are springs. This layout causes nodes in the same community to cluster together, places nodes that are diversely connected across communities (connector hubs) in the center of the graph, and places nodes that are strongly connected to a single community (local hubs) in the middle of that community. d, lower, A single community (light blue) and its connections to the rest of the graph are extracted and enlarged, with nodes colored by community. Note that the nodes within each community are more strongly connected to each other than to nodes in other communities. e, lower, A node (and its connections) with a high participation coefficient is extracted and enlarged, with nodes colored by community. Note that the connector hub is connected to many different communities. f, lower, A node (and its connections) with a high within community strength is extracted and enlarged, with nodes colored by community. Note that the local hub is strongly connected to its own community.

Modularity is ubiquitous in complex systems in Nature: a modular structure is observed consistently across the brains of very different species, from C. elegans to humans6. Given this ubiquity, modular network organizations have potentially been naturally selected because they reduce metabolic costs. Functional and structural connectivity are metabolically expensive7-11. A modular architecture with anatomically segregated and functionally specialized communities reduces the average length and number of connections, that is, the network’s wiring cost. Moreover, the brain’s genotype-phenotype map is modular, forming groups of phenotypes, including brain communities (e.g., the visual community)12,13, that are co-affected by groups of genes14. Modularity, at the genetic and phenotypic level, allows systems to quickly evolve under new selection pressures15,16.

As we noted in earlier work2, modularity potentially increases fitness in information processing systems17-19. Network simulations show that modularity allows for robust network dynamics, in that the connections between nodes can be reconfigured without sacrificing information processing functions, a process necessary for the evolution of a network20. Artificial intelligence research has shown that modular networks also solve tasks faster and more accurately and evolve faster than non-modular networks21, and do so with lower wiring costs22. Critically, modularity is also behaviorally relevant: modularity predicts intra-individual variation in working memory capacity23 and how well an individual will respond to cognitive training24,25.

Within each of these communities are local hubs, nodes that have strong connectivity to their own community. The within community strength can be used to measure a node’s locality (see Methods for equation; Figure 1 for a schematic). A high within community strength reflects that a node has strong connectivity within its own community and is thus a local hub. Local hubs are ideally wired for segregated processing. Because the connections of local hubs are predominantly concentrated within their own community and their functions are likely specialized and segregated, damage to local hubs tends to cause relatively specific cognitive deficits26,27 and does not significantly alter the modular organization of the network27. Consistent with this more segregated and discrete role in information processing, the activity levels of local hubs do not increase as more communities are involved in a task1.
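A sketch of within community strength, assuming the common Guimerà and Amaral within-module degree z-score rather than the paper's exact Methods equation: for node i in community s_i, z_i = (κ_i − mean of κ over s_i) / (standard deviation of κ over s_i), where κ_i is node i's summed edge weight to the other members of s_i. The function name is hypothetical.

```python
import numpy as np


def within_community_strength(A, labels):
    """Within-community strength as a z-score (assumed Guimera-Amaral form).

    A      -- symmetric weighted adjacency matrix with a zero diagonal, shape (n, n)
    labels -- community label of each node, shape (n,)
    """
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    z = np.zeros(len(labels))
    for c in np.unique(labels):
        members = np.flatnonzero(labels == c)
        # kappa_i: summed edge weight from node i to the members of its own community
        kappa = A[np.ix_(members, members)].sum(axis=1)
        sd = kappa.std()
        if sd > 0:  # if all members are equally strong, their z-scores stay 0
            z[members] = (kappa - kappa.mean()) / sd
    return z
```

Ranking nodes by this score and taking the top 20 percent would give the local hubs used in the hub-versus-non-hub comparisons later in the section.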

Yet a completely modular organization would render the brain extremely limited in function: without connectivity between communities, information from, for example, visual cortex could never reach motor cortex, and visual information could therefore not be used to inform movements. How, then, is information integrated across these mostly segregated communities? The interdependence between modular communities and integration is a modern rendition of one of the first observations in neuroscience, Cajal’s conservation principle. This principle states that the brain has been naturally selected, and is thus organized, by an economic trade-off between minimizing the wiring cost of the network, which leads to modularity, and more costly connectivity patterns that increase fitness, like the integrative functions afforded by connections between communities28-30.

Connector hubs have diverse connectivity across different communities. The participation coefficient can be used to measure a node’s diversity (see Methods for equation; Figure 1 for a schematic). A high participation coefficient reflects that a node’s connections are evenly distributed across the brain’s communities, and such a node is thus labeled a connector hub. Connector hubs are ideally wired for integrative processing1,28,31-34. In human brain networks, connector hubs have a particular cytoarchitecture35, are implicated in a diverse range of cognitive tasks36,37, and are physically located in anatomical areas at the boundaries between many communities33. Moreover, damage to connector hubs causes widespread cognitive deficits26 and a decrease in the modular structure of the network27. During cognitive tasks, connector hubs appear to coordinate connectivity changes between other pairs of nodes: activity in connector hubs predicts changes in the connectivity of other nodes, particularly the connectivity between nodes in different communities38-40. Connector hubs are also strongly interconnected with each other, forming a diverse club5. Connector hubs also have connections to almost every community in the network and thus have access to information from nearly every community. Finally, connector hubs exhibit increased activity when more communities are engaged in a task, which suggests that connector hubs are involved in processes that become more demanding as more communities are engaged1.
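Similarly, a minimal sketch of the participation coefficient, assuming the standard form P_i = 1 − Σ_s (κ_is / k_i)², where κ_is is node i's summed edge weight to community s and k_i is its total strength. P_i is 0 when all of a node's weight stays in one community and equals 1 − 1/N_M when its weight is spread evenly across N_M communities. The function name is hypothetical.

```python
import numpy as np


def participation_coefficient(A, labels):
    """Participation coefficient P_i = 1 - sum_s (kappa_is / k_i)^2 (assumed form).

    A      -- symmetric weighted adjacency matrix with a zero diagonal, shape (n, n)
    labels -- community label of each node, shape (n,)
    """
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    k = A.sum(axis=1)                           # total strength k_i of each node
    pc = np.ones(len(labels))
    for c in np.unique(labels):
        k_is = A[:, labels == c].sum(axis=1)    # strength from each node to community c
        frac = np.divide(k_is, k, out=np.zeros_like(k), where=k > 0)
        pc -= frac ** 2
    pc[k == 0] = 0.0                            # isolated nodes: P = 0 by convention
    return pc
```

In a small example with two communities, a node whose two edges go to different communities gets P = 0.5, while a node whose only edge stays within its community gets P = 0.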

Connector hubs might be Nature’s cheapest solution to integration in a modular network. Generative models suggest that the diverse club—tightly interconnected connector hubs—potentially evolved to balance modularity and efficient integration5. However, given the amount of wiring required to link to many different and distant communities, connector hubs’ connectivity pattern dramatically increases wiring costs11. Despite this cost, connector hubs potentially provide a necessary function—connector hubs could be the conductor of the brain’s neural symphony.

A parsimonious mechanistic model of these findings is that connector hubs tune connectivity between communities. Neuronal tuning refers to cells selectively representing a particular stimulus, association, or piece of information. We introduce the mechanistic concept of network tuning, in which connections between nodes are organized to achieve a particular network function or topology, like the integration of information across communities or decreased connectivity between two communities. We propose that diverse connectivity across the network’s communities allows connector hubs to tune connectivity between communities to be modular while still allowing for task-appropriate information integration across communities. This facilitates a global modular network structure in which local hubs and nodes within each community are dedicated to mostly autonomous local processing. The modular network structure afforded by diversely connected connector hubs (that is, connector hubs that are wired well for network tuning) is potentially optimal for a wide variety of cognitive processes. Thus, despite their cost, strong and diverse connector hubs might be critically necessary for integrative processing in complex modular neural networks.

Local and connector hubs have been extensively studied in network science, and their functions have been inferred from their topological locations in the network5. Moreover, individuals’ brain network connectivity has been shown to be predictive of task performance41-45,46 and is able to “fingerprint” individuals47. However, no study has leveraged these individual differences to test a mechanistic model of hub function, and direct evidence supporting a mechanistic model of these hubs and how they support human cognition remains absent. Moreover, it is currently unknown whether there is a hub and network structure that is optimal for a diverse set of tasks or whether different hub and network structures are optimal for different tasks. Here, we analyze how individual differences in the locality and diversity of hubs during the performance of a task relate to network connectivity, modularity, and performance on that task, as well as to subject measures collected outside of the scanner, including psychometrics (e.g., fluid intelligence, working memory) and other behavioral measures (e.g., sleep quality and emotional states). We test a mechanistic model in which connector hubs tune their neighbors’ connectivity to be more modular, which increases the global modular structure of the network and task performance, regardless of the particular task.

We leveraged the size and richness of fMRI data from 476 subjects (S500 release) who participated in the Human Connectome Project48. A network was built for each subject using fMRI data collected during seven different cognitive states (Resting-State, Working Memory, Social Cognition, Language & Math, Gambling, Relational, Motor; see Methods). Thus, each subject has seven different networks for analysis. Each edge represents the strength of functional connectivity between a pair of the 264 nodes49. In every network, Q and a division of nodes into communities were calculated (see Methods). Next, in every network, the locality and diversity of each node were measured: within community strength measures a node’s locality, and the participation coefficient measures a node’s diversity (see Methods for equations). Figure 1 displays this processing workflow.
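The graph-construction step can be sketched as follows, assuming the regional mean signals are already available as a (time points × nodes) array and using the 5 percent density shown in Figure 1 (the study explored a range of densities). The helper name `threshold_graph` is hypothetical, and community detection with Infomap is not shown.

```python
import numpy as np


def threshold_graph(timeseries, density=0.05):
    """Weighted graph keeping the strongest `density` fraction of Pearson r values.

    timeseries -- regional mean signals, shape (n_timepoints, n_nodes)
    """
    r = np.corrcoef(timeseries, rowvar=False)       # node-by-node Pearson r
    n = r.shape[0]
    iu = np.triu_indices(n, k=1)                    # each undirected pair once, no diagonal
    weights = r[iu]
    n_keep = int(round(density * weights.size))
    keep = np.argsort(weights)[::-1][:n_keep]       # indices of the largest r values
    A = np.zeros((n, n))
    A[iu[0][keep], iu[1][keep]] = weights[keep]
    return A + A.T                                  # symmetric, zero diagonal
```

With 264 nodes there are 34,716 node pairs, so a 5 percent density keeps 1,736 edges, each weighted by its r value.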

In the proposed mechanistic model of hub connectivity, connector hubs, via their diverse connectivity, tune the network to preserve or increase global modularity and local hubs’ locality, which, in turn, increases task performance. If this model is true, hubs’ connectivity in the network and network modularity should be predictive of task performance. Thus, the first test of this model involved using hub diversity and locality, network connectivity, and modularity to predict task performance. A predictive multilayer perceptron model was used to predict subjects’ task performance (Supplementary Figure 1, Figure 2). The model had three layers in addition to the input and output layers, enough to capture non-linear relationships, with eight neurons per layer (one per feature), except for two layers containing 12 neurons, allowing for higher dimensional expansion. Such models, known as deep neural networks, are constructed by tuning the weights between neurons in adjacent layers to achieve the most accurate relationship between the features (input) and the value the model is trying to predict (output). The predictive model’s features (n=8) captured how well subjects’ node diversity and locality, network connectivity (i.e., edge weights in the network), and modularity (Q) are optimized for the performance of a task. For example, for the feature that captures how optimized the diversity of subjects’ nodes is for task performance, the Pearson r across subjects between each node’s participation coefficients (which measure diversity) and task performance values was calculated (Supplementary Figure 1a). We call this r value the node’s diversity facilitated performance coefficient. The feature for a given subject is then the Pearson r across nodes between each node’s diversity facilitated performance coefficient and that node’s participation coefficient in that subject, representing how optimized the diversity of that subject’s nodes is for performance in the task (Supplementary Figure 1). Critically, for each subject’s feature calculation, the diversity facilitated performance coefficients are calculated without that subject’s data. The same procedure is applied to locality and edge weights, using within community strengths or edge weights instead of participation coefficients. Finally, the Q values of the networks are included in the model.
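A minimal sketch of the leave-one-out feature for one node measure (here participation coefficients; within community strengths or edge weights would be passed in the same way). The array layout and function names are assumptions for illustration, not the study's code.

```python
import numpy as np


def columnwise_pearson(X, y):
    """Pearson r between each column of X (subjects x nodes) and y (subjects,)."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    return (Xc.T @ yc) / np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())


def loo_optimization_feature(X, y):
    """One feature value per subject, built without that subject's data.

    X -- node measure per subject, e.g. participation coefficients, (subjects, nodes)
    y -- task performance per subject, (subjects,)
    """
    n_subjects = X.shape[0]
    feature = np.empty(n_subjects)
    for s in range(n_subjects):
        others = np.arange(n_subjects) != s
        # facilitated performance coefficient of every node, subject s held out
        coef = columnwise_pearson(X[others], y[others])
        # how well subject s's node values line up with those coefficients
        feature[s] = np.corrcoef(coef, X[s])[0, 1]
    return feature
```

Because each subject's coefficients are computed from the other subjects only, the feature never uses the performance value it will later help predict.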

The predictive model was fit for each of the four cognitive tasks in the Human Connectome Project for which performance was measured (Working Memory, Relational, Language and Math, Social tasks; see Methods for task performance measures). For this and other subject performance analyses, we could not analyze the Gambling, Motor, or Resting-State tasks, as no performance was measured for these tasks. Each predictive model was fit to the subjects’ networks constructed during the performance of each task as well as during the resting state (four features from each). The inclusion of both the resting state and the cognitive task state allowed the model to capture the subjects’ so-called intrinsic network states as well as their task-driven network states. Using a leave-one-out cross-validation procedure, the features were constructed and the model was fit with data from all subjects except one. The predictive model was then used to predict the left-out subject’s task performance (Supplementary Figure 1c). To test the accuracy of the model, the Pearson r between the observed and predicted performance of each subject was calculated (Figure 2, Supplementary Figure 2).
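The cross-validation loop can be sketched as below. To keep the example dependency-free, ordinary least squares stands in for the multilayer perceptron used in the study; this is a swapped-in model, not the authors' method. The sketch also assumes the feature matrix `F` (subjects × 8) is already built, whereas in the study the features themselves were rebuilt without the held-out subject in each fold. Function names are hypothetical.

```python
import numpy as np


def loo_predictions(F, y):
    """Leave-one-out predictions; ordinary least squares stands in for the MLP."""
    n = len(y)
    design = np.column_stack([np.ones(n), F])       # intercept + features
    pred = np.empty(n)
    for s in range(n):
        train = np.arange(n) != s
        w, *_ = np.linalg.lstsq(design[train], y[train], rcond=None)
        pred[s] = design[s] @ w                     # predict the held-out subject
    return pred


def prediction_accuracy(F, y):
    """Pearson r between observed and leave-one-out predicted performance."""
    return np.corrcoef(y, loo_predictions(F, y))[0, 1]
```

Swapping in a neural network regressor would change only the fit and predict lines inside the loop.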

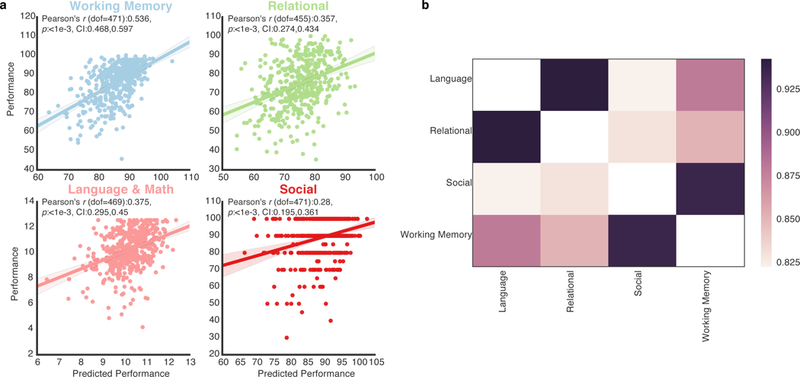

Figure 2 |. Hub diversity and locality, modularity, and network connectivity predict cognitive performance.

a, For each task, the correlation between task performance and the performance predicted by a predictive model of hub diversity and locality, modularity, and network connectivity. Each data point represents one subject, plotting the subject’s true performance (y-axis; see Methods, each task’s performance value scale is unique) against the performance predicted by the neural network (x-axis). Shaded areas represent 95 percent confidence intervals. In every task, the predictive model significantly predicted task performance (p < 1e-3, Bonferroni corrected (n tests=4), N=Working Memory: 473, Relational: 457, Language & Math: 471, Social: 473). b, We correlated the tasks’ feature correspondence values (see Supplementary Figure 3 for each task’s feature correspondence with each subject measure), measuring whether two tasks’ optimal hub and network structures are also optimal for the same subject measures. High correlations mean that the two tasks’ hub and network structures are similarly optimal for the same subject measures (all results significant at p < 1e-3, Bonferroni corrected (n tests = 4), dof=45, N=47, the number of subject measures, while the feature correspondence N=Working Memory: 473, Relational: 457, Language & Math: 471, Social: 473).

This predictive model significantly (p<0.001, Bonferroni corrected (n tests = 4)) predicted performance in all four tasks (Figure 2). Also, using a predictive model with only nodes’ diversity and locality and modularity features (i.e., ignoring individual connections in the network) did not dramatically decrease the models’ prediction accuracies (Supplementary Figure 2a,b). Given that head motion is a concern when analyzing fMRI data, scrubbing techniques were applied to remove motion artifacts from the fMRI data and the mean frame-wise displacement was regressed out from task performance. Neither of these additional analyses dramatically decreased the predictive models’ prediction accuracies (Supplementary Figure 2c-f). Finally, in each task, modularity (Q) alone was only weakly correlated with task performance (Working Memory, Pearson’s r (dof=471):0.303, p<0.001, CI:0.219,0.383; Relational, Pearson’s r (dof=455):0.106, p:0.095, CI:0.014,0.196; Language & Math, Pearson’s r (dof=469):0.085, p:0.259, CI:−0.005,0.174; Social, Pearson’s r (dof=471):0.084, p:0.275, CI:−0.006,0.173, all confidence intervals=95%). These results suggest that the diversity and locality of nodes, in combination with the modular connectivity structure of the network, are highly predictive of task performance.

The Human Connectome Project contains psychometrics and other behavioral measures collected outside of the MRI scanner; for clarity, and to differentiate these measures from the task performance measures and the tasks’ corresponding networks, we call these “subject measures”50. If a particular hub and network structure is generally optimal for many different types of cognition and many different behaviors (a component of the mechanistic model of hub function), then the tasks’ optimal hub and network structures should be similarly optimal across subject measures: sub-optimal for negative measures like poor sleep, sadness, and anger, and optimal for positive measures like life satisfaction and processing speed.

For each task, the predictive model constructs features that capture how optimal each subject’s hub and network structure is for performance on that task. Using the subjects’ networks from a given task, the same procedure can instead construct features that capture how optimal each subject’s hub and network structure is for a given subject measure collected outside of the scanner. This was executed using the networks from each of the four tasks for all subject measures. Thus, the predictive model constructs features that capture how optimal each subject’s hub and network structure (measured during the performance of a task (e.g., Working Memory)) is for a given subject measure (e.g., Delayed Discounting). The correspondence between the features in the two models (how similarly optimal subjects’ hub and network structures are for the task and for a given subject measure) can then be calculated by computing, across subjects, the correlation between the features in the two predictive models. Specifically, for each feature, the correlation across subjects between the feature in the predictive model fit to task performance (e.g., Working Memory) and that feature in the predictive model fit to a subject measure (e.g., Delayed Discounting) is computed. The mean correlation across the edge, locality, and diversity features (n=6, three features from the resting state and three features from the task) is then calculated, which we call feature correspondence. The Q feature was ignored, as it remains constant regardless of what the model is fit to. Thus, this value determines whether each task’s optimal hub and network structure is optimal for other subject measures and whether all the tasks’ optimal hub and network structures are similarly optimal for other subject measures.
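Feature correspondence, and the between-task comparison described in the next paragraph, can be sketched as follows, assuming each model's six non-Q features are stored as a (subjects × 6) array and each task's feature correspondence values are collected as one value per subject measure. Function names are hypothetical.

```python
import numpy as np


def feature_correspondence(F_task, F_measure):
    """Mean across-subject Pearson r between matching feature columns.

    F_task    -- features from the model fit to task performance, (subjects, 6)
    F_measure -- the same features from the model fit to a subject measure, (subjects, 6)
    The Q features are excluded because they do not depend on the prediction target.
    """
    rs = [np.corrcoef(F_task[:, j], F_measure[:, j])[0, 1]
          for j in range(F_task.shape[1])]
    return float(np.mean(rs))


def task_similarity(corr_a, corr_b):
    """Pearson r, across subject measures, between two tasks' feature correspondences."""
    return np.corrcoef(corr_a, corr_b)[0, 1]
```

With 47 subject measures, each task contributes a vector of 47 feature correspondence values, and `task_similarity` compares two such vectors (dof = 45).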

For each task, the hub and network structures that were optimal for that task were typically also optimal for positive subject measures and sub-optimal for negative subject measures (Supplementary Figure 3). Next, the similarity with which two tasks’ optimal hub and network structures generalized to other subject measures was measured by correlating their feature correspondence values (for example, the Working Memory and Social columns in Supplementary Figure 3). High Pearson r correlations were found between all tasks (r (dof=45) values between 0.82 and 0.96, p<0.001 Bonferroni corrected (n tests = 4), Figure 2b). Finally, the predictive model was able to significantly predict most subject measures (Supplementary Figure 4). These results demonstrate that if an individual’s brain state during a given task, as defined by the connectivity of the network’s hubs, is optimal for that task, it is also likely optimal for other subject measures. Critically, different tasks’ optimal hub and network structures are similarly optimal for other subject measures. Moreover, these findings demonstrate that the predictive model captures hub connectivity patterns in the network that are relevant for behavior and cognition in general, instead of overfitting hub connectivity patterns that are only related to a particular cognitive process or behavior.

Having established relationships between hub locality and diversity, modularity, and task performance, we sought to test the mechanistic claim that diverse connector hubs increase modularity by analyzing how individual differences in a node’s diversity within the network are predictive of individual differences in brain network modularity (Q; see Methods for mathematical definition). Typically, the result of damage to a region can be used to infer the function of that region: if a region is damaged and modularity decreases, the region is putatively involved in preserving modularity. Here, we analyze the other direction: if modularity is higher when a hub is diversely connected across the brain (i.e., strong), the region’s diverse connectivity is putatively involved in preserving modularity (Supplementary Figure 5).

Thus, we first tested if, across subjects, a node’s participation coefficients are positively correlated with modularity (Q). For each node, the Pearson r between that node’s participation coefficients and the network’s modularity values (Q) across subjects was calculated. Intuitively, higher r values indicate that the node’s diversity (i.e., the participation coefficient) is associated with higher network modularity. This is an important feature that can be used to distinguish the roles of different brain regions. For ease of presentation, we refer to each node’s r value as the diversity facilitated modularity coefficient, as it measures how the diversity of the node’s connections facilitates (we use this term to remain causally agnostic) the modularity of the network. For every node, the Pearson r between the within community strengths and Q values across subjects was also calculated. Intuitively, higher r values indicate that the node’s locality (i.e., the within community strength) is associated with higher network modularity. We refer to each node’s r value (between within community strengths and Q values across subjects) as the locality facilitated modularity coefficient, as it measures how the locality of the node’s connections facilitates the modularity of the network.
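Both coefficients are column-wise Pearson correlations across subjects, so a vectorized sketch can compute them for all 264 nodes at once. The function name and the arrays `pc`, `wcs`, and `q` in the commented usage lines are hypothetical placeholders for participation coefficients, within community strengths, and modularity values.

```python
import numpy as np


def facilitated_coefficients(node_values, target):
    """Pearson r, across subjects, between each node's values and a per-subject target.

    node_values -- e.g. participation coefficients or within community strengths,
                   shape (n_subjects, n_nodes)
    target      -- e.g. modularity Q of each subject's network, shape (n_subjects,)
    """
    X = node_values - node_values.mean(axis=0)
    t = target - target.mean()
    return (X.T @ t) / np.sqrt((X ** 2).sum(axis=0) * (t ** 2).sum())


# diversity_mod = facilitated_coefficients(pc, q)    # diversity facilitated modularity
# locality_mod  = facilitated_coefficients(wcs, q)   # locality facilitated modularity
```

Passing task performance instead of Q yields the diversity and locality facilitated performance coefficients used in the performance analyses.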

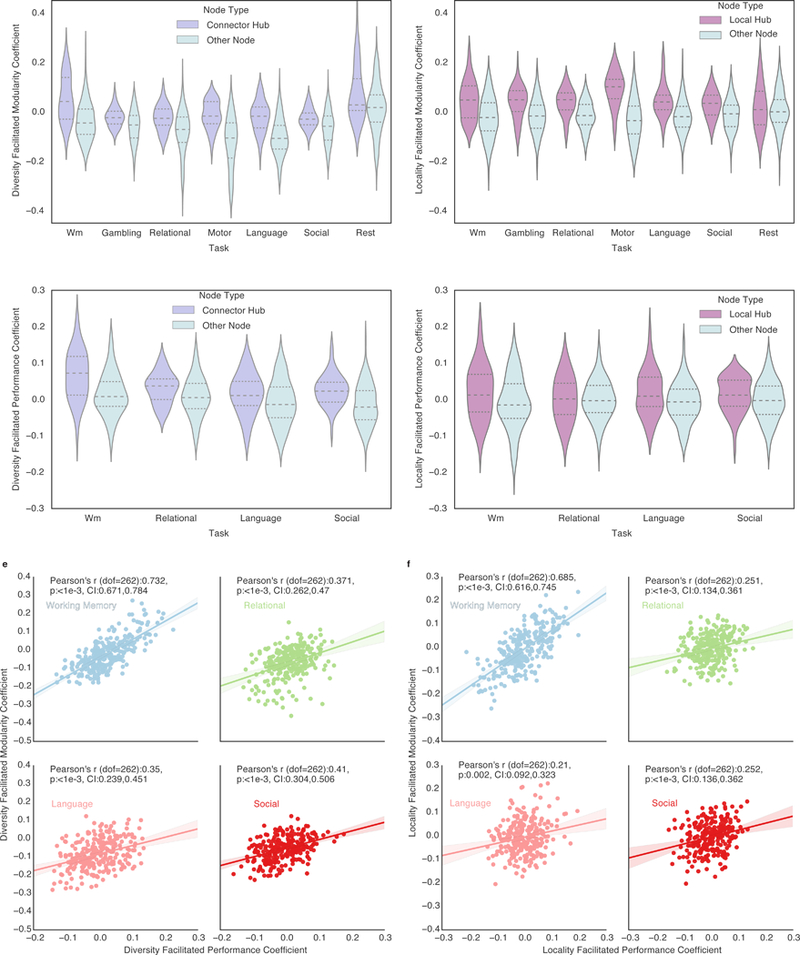

We performed these computations separately for all seven distinct cognitive states. In all states, the diversity facilitated modularity coefficients of connector hubs (top 20 percent highest participation coefficient nodes) were significantly higher than those of other nodes in a Bonferroni-corrected independent two-tailed t-test (Figure 3a, Working Memory t(dof:262):7.182, p<0.001, Cohen’s d:1.104, CI:0.062,0.117, Gambling t(dof:262):4.101, p:0.0004, Cohen’s d:0.63, CI:0.025,0.052, Language & Math t(dof:262):7.292, p<0.001, Cohen’s d:1.12, CI:0.062,0.102, Motor t(dof:262):7.354, p<0.001, Cohen’s d:1.13, CI:0.088,0.13, Relational t(dof:262):4.457, p:0.0001, Cohen’s d:0.685, CI:0.038,0.075, Resting State t(dof:262):3.947, p:0.0007, Cohen’s d:0.606, CI:0.029,0.096, Social t(dof:262):3.716, p:0.0017, Cohen’s d:0.571, CI:0.022,0.051. P values Bonferroni corrected (n tests=7), all confidence intervals=95%). Moreover, in every cognitive state except the resting state, the locality facilitated modularity coefficients of local hubs (top 20 percent highest within community strength nodes) were significantly higher than those of other nodes in a Bonferroni-corrected independent two-tailed t-test (Figure 3b, Working Memory t(dof:262):5.415, p<0.001, Cohen’s d:0.832, CI:0.045,0.093, Gambling t(dof:262):4.959, p<0.001, Cohen’s d:0.762, CI:0.034,0.074, Language & Math t(dof:262):6.428, p<0.001, Cohen’s d:0.988, CI:0.045,0.085, Motor t(dof:262):9.822, p<0.001, Cohen’s d:1.509, CI:0.101,0.146, Relational t(dof:262):6.131, p<0.001, Cohen’s d:0.942, CI:0.036,0.07, Resting State t(dof:262):0.966, p:1.0, Cohen’s d:0.148, CI:−0.014,0.038, Social t(dof:262):4.54, p:0.0001, Cohen’s d:0.698, CI:0.026,0.06. P values Bonferroni corrected (n tests = 7), all confidence intervals=95%). While the diversity facilitated modularity coefficients of connector hubs were not always positive, they were typically close to or above zero.
This means that a diverse connector hub can be associated with increased integrative connectivity between communities without decreasing the modularity of the network. To more fully understand the relationship between nodes’ diversity and the network’s modularity, the Pearson r between each node’s mean participation coefficient across subjects (which defines a connector hub) and the node’s diversity facilitated modularity coefficient was calculated (Supplementary Figure 6). Similarly, the Pearson r between each node’s mean within community strength across subjects (which defines a local hub) and the node’s locality facilitated modularity coefficient was calculated (Supplementary Figure 6). In every task, there was a significant positive correlation between a node’s mean participation coefficient and that node’s diversity facilitated modularity coefficient (Supplementary Figure 6a). In every task, there was also a significant positive correlation between a node’s mean within community strength and its locality facilitated modularity coefficient (Supplementary Figure 6b). These analyses demonstrate that connector hubs’ strong, diverse connectivity to many communities and local hubs’ strong local connectivity are associated with higher brain network modularity, regardless of the subjects’ cognitive state. Thus, these results are consistent with the mechanistic model of connector hub function, in which connector hubs preserve the modular structure of the network via diverse connectivity.

Figure 3 |. Connector hubs and local hubs concurrently facilitate increased modularity and task performance.

For each task, diversity and locality facilitated modularity coefficients, which measure how the diversity and locality (respectively) of a node facilitate modularity, were calculated. In every task, the diversity and locality facilitated modularity coefficients of connector (a) and local hubs (b) are significantly higher than those of other nodes (except Resting-State for locality), demonstrating that strong connector and local hubs facilitate the modular structure of brain networks. For each task, diversity and locality facilitated performance coefficients were calculated. In every task, the diversity and locality facilitated performance coefficients of connector (c) and local hubs (d) are significantly higher than those of other nodes (except Language & Math for diversity (p=0.0677 after Bonferroni correction; uncorrected p=0.0169), and Relational and Social for locality), demonstrating that strong connector and local hubs facilitate increased task performance. For a-d, the mean and quartiles are marked in each violin. Each task’s distribution of coefficients was tested for normality using D’Agostino and Pearson’s omnibus test k2. No evidence was found (k2>0.0 for all tasks) that these distributions were not normal. N=264, the number of nodes in the graph. e, The correlation between a node’s diversity facilitated modularity coefficient and a node’s diversity facilitated performance coefficient. f, The correlation between a node’s locality facilitated modularity coefficient and a node’s locality facilitated performance coefficient. In panels e,f, N=264, the number of nodes in the graph. Shaded areas represent 95 percent confidence intervals. All p values are Bonferroni corrected (n tests = 4).
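The hub-versus-non-hub comparison can be sketched as a pooled-variance (Student's) two-sample t-test, with Cohen's d computed from the same pooled standard deviation; that Cohen's d used the pooled standard deviation is an assumption. With 264 nodes and a top-20-percent cutoff, 53 nodes are hubs and the test has 262 degrees of freedom, matching the reported dof. Function names are hypothetical.

```python
import numpy as np


def hub_vs_rest(coefs, hub_metric, top_fraction=0.2):
    """Student's two-sample t statistic, degrees of freedom and Cohen's d for the
    coefficients of hubs (top `top_fraction` of nodes by `hub_metric`) vs all other nodes."""
    coefs = np.asarray(coefs, dtype=float)
    n = coefs.size
    n_hubs = int(round(top_fraction * n))
    hubs = np.zeros(n, dtype=bool)
    hubs[np.argsort(hub_metric)[::-1][:n_hubs]] = True   # strongest nodes are hubs
    a, b = coefs[hubs], coefs[~hubs]
    dof = a.size + b.size - 2
    pooled_sd = np.sqrt(((a.size - 1) * a.var(ddof=1) + (b.size - 1) * b.var(ddof=1)) / dof)
    diff = a.mean() - b.mean()
    t = diff / (pooled_sd * np.sqrt(1 / a.size + 1 / b.size))
    d = diff / pooled_sd
    return t, dof, d


def bonferroni(p, n_tests):
    """Bonferroni-corrected p value, capped at 1."""
    return min(1.0, p * n_tests)
```

A two-tailed p value could then be read from Student's t distribution with `dof` degrees of freedom; `scipy.stats.ttest_ind` with its default `equal_var=True` performs this same pooled-variance test.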

We confirmed the reliability and reproducibility of these results and demonstrated that they are not driven by analytically necessary relationships. First, the mean participation coefficient and within community strength were calculated in one half of the subjects and the diversity and locality facilitated modularity coefficients were calculated in the other half, testing 10,000 splits (Supplementary Figure 7). Next, four null models were tested to ensure that the current results are not driven by analytically necessary relationships (Supplementary Figure 8). Other analyses ensured that the current results are not driven by the number of communities (Supplementary Figure 9, Supplementary Figure 10). Finally, to justify the use of the Pearson r to calculate the coefficients, the relationship between nodes’ diversity and Q was confirmed to be typically linear (Supplementary Figure 11).
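A sketch of the split-half check for the diversity coefficients, assuming the two halves are compared by correlating, across nodes, the half-A mean participation coefficients with the half-B diversity facilitated modularity coefficients; the section states only which quantities come from which half. The function name is hypothetical.

```python
import numpy as np


def split_half_reliability(pc, q, n_splits=10_000, seed=0):
    """Correlate (across nodes) mean participation coefficients from one random half
    of the subjects with diversity facilitated modularity coefficients from the other.

    pc -- participation coefficients, shape (n_subjects, n_nodes)
    q  -- modularity Q of each subject's network, shape (n_subjects,)
    """
    rng = np.random.default_rng(seed)
    n_subjects = pc.shape[0]
    rs = np.empty(n_splits)
    for i in range(n_splits):
        perm = rng.permutation(n_subjects)
        half_a, half_b = perm[: n_subjects // 2], perm[n_subjects // 2:]
        mean_pc = pc[half_a].mean(axis=0)               # hub definition from half A
        X = pc[half_b] - pc[half_b].mean(axis=0)        # coefficients from half B
        t = q[half_b] - q[half_b].mean()
        coef = (X.T @ t) / np.sqrt((X ** 2).sum(axis=0) * (t ** 2).sum())
        rs[i] = np.corrcoef(mean_pc, coef)[0, 1]
    return rs
```

The same loop applies to the locality analysis by passing within community strengths in place of participation coefficients.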

Having found evidence supporting a mechanistic model in which connector hubs tune their neighbors’ connectivity to be more modular, thereby increasing the global modular structure of the network, we next asked whether diverse hubs concurrently facilitate higher modularity and higher task performance. To address this question, the Pearson r across subjects between each node’s participation coefficient (or within community strength) and task performance was calculated. A positive r at a node indicates that subjects with a higher participation coefficient (or within community strength) at that node perform better on the task. We refer to this r value as the node’s diversity facilitated performance coefficient for participation coefficients, and as the locality facilitated performance coefficient for within community strengths. Note that these are the same r values used in the construction of the predictive performance model features (Supplementary Figure 1). However, for the predictive model, we calculated these r values with the subject whose behavior was to be predicted held out. Here, we calculate these r values across all subjects.

In every task except Language & Math, the diversity facilitated performance coefficients of connector hubs (top 20 percent strongest) were significantly higher than those of other nodes (Bonferroni-corrected independent two-tailed t-tests). For Language & Math, the difference was significant only before correction (uncorrected p=0.0169; Bonferroni-corrected p=0.0677) (Figure 3c; Working Memory t(dof:262):5.378, p<0.001, Cohen’s d:0.826, CI:0.03,0.071; Language & Math t(dof:262):2.404, p:0.0677, Cohen’s d:0.369, CI:0.005,0.04; Relational t(dof:262):2.959, p:0.0135, Cohen’s d:0.455, CI:0.01,0.037; Social t(dof:262):4.744, p<0.001, Cohen’s d:0.729, CI:0.025,0.053. All p values Bonferroni corrected (n tests=4), all confidence intervals=95%). The locality facilitated performance coefficients of local hubs (top 20 percent strongest) were significantly higher than those of other nodes in the Working Memory and Language & Math tasks, but not in the Relational or Social tasks (Bonferroni-corrected independent two-tailed t-tests; Figure 3d; Working Memory t(dof:262):2.712, p:0.0285, Cohen’s d:0.417, CI:0.008,0.054; Language & Math t(dof:262):2.864, p:0.0181, Cohen’s d:0.44, CI:0.006,0.043; Relational t(dof:262):0.327, p:1.0, Cohen’s d:0.05, CI:−0.016,0.021; Social t(dof:262):1.862, p:0.2547, Cohen’s d:0.286, CI:0.0,0.031. All p values Bonferroni corrected (n tests=4), all confidence intervals=95%). Moreover, across nodes, the correlation between each node’s diversity facilitated performance coefficient and its mean participation coefficient was positive and significant (Supplementary Figure 6). This suggests that, for connector hubs, a higher participation coefficient is associated with higher task performance. The Pearson r between each node’s locality facilitated performance coefficient and its mean within community strength was also positive and significant in all tasks except Relational (Bonferroni-corrected (n tests=4) p=0.081, uncorrected p=0.02; Supplementary Figure 6). This suggests that, for local hubs, a higher within community strength is also associated with higher task performance.
Finally, there was a significant positive correlation between a node’s diversity facilitated modularity coefficient and a node’s diversity facilitated performance coefficient (Figure 3e) as well as a significant positive correlation between a node’s locality facilitated modularity coefficient and a node’s locality facilitated performance coefficient (Figure 3f). Thus, diverse connector hubs facilitate higher task performance in proportion to how much they facilitate higher modularity, suggesting a strong link between the increased modularity afforded by diverse hubs and increased task performance.
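The hub-versus-other-node comparisons reported above can be sketched as follows. The sketch assumes that hubs are the top 20 percent of nodes ranked by a per-node metric (e.g., mean participation coefficient) and that the compared coefficient is one value per node. `compare_hubs` is a hypothetical helper. It uses SciPy's equal-variance `ttest_ind` (two-tailed by default), a pooled-SD Cohen's d, and a Bonferroni correction capped at 1. With 264 nodes, the degrees of freedom are 264 - 2 = 262, which matches the reported statistics.

```python
import numpy as np
from scipy import stats


def compare_hubs(coefficients, hub_metric, top_fraction=0.2, n_tests=4):
    """Two-tailed independent t-test of hub vs. non-hub coefficients.

    coefficients: (n_nodes,) e.g. diversity facilitated performance coefficients.
    hub_metric:   (n_nodes,) metric used to define hubs, e.g. mean participation coefficient.
    Returns (t, Bonferroni-corrected p, Cohen's d, degrees of freedom).
    """
    n_nodes = len(hub_metric)
    n_hubs = int(round(top_fraction * n_nodes))
    is_hub = np.zeros(n_nodes, dtype=bool)
    is_hub[np.argsort(hub_metric)[::-1][:n_hubs]] = True
    hubs, others = coefficients[is_hub], coefficients[~is_hub]

    t, p = stats.ttest_ind(hubs, others)  # equal-variance Student's t-test
    dof = len(hubs) + len(others) - 2
    pooled_sd = np.sqrt(((len(hubs) - 1) * hubs.var(ddof=1)
                         + (len(others) - 1) * others.var(ddof=1)) / dof)
    d = (hubs.mean() - others.mean()) / pooled_sd
    return t, min(p * n_tests, 1.0), d, dof


# Hypothetical toy data for 264 nodes; high-metric nodes get larger coefficients.
rng = np.random.default_rng(1)
mean_pc = rng.random(264)
coef = rng.normal(0.0, 0.05, 264) + 0.1 * (mean_pc > np.quantile(mean_pc, 0.8))
t, p_bonf, d, dof = compare_hubs(coef, mean_pc)
```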

Next, we tested the mechanistic network tuning claim of the model: “do connector hubs increase Q by tuning the connectivity of their neighbors’ edges to be more modular?” This relationship should hold only for connector hubs, not local hubs, as previous studies suggest that connector hubs tune connectivity between communities and maintain a modular structure1,36,38-40. We therefore examined connector hubs whose diversity facilitated modularity coefficients were positive. This analysis had two aspects. First, do connector hubs increase modularity by tuning within community edge strengths? Second, do connector hubs tune the within community edge strengths of their neighbors in order to increase global modularity?

To test the first aspect of the network tuning mechanism (whether connector hubs tune within community edges in order to increase global modularity), we used a canonical division of nodes into communities (Figure 4d displays this division; see Methods for a link to the division36,49). For each edge in the network, we assessed how that edge’s weights related to modularity (Q) across subjects (Figure 4a). Next, we calculated how well each connector hub’s participation coefficients correlated with each edge’s weights across subjects (Figure 4b). Higher Q values and higher connector hub participation coefficients were associated with decreased connectivity between the visual, sensory/motor hand, sensory/motor mouth, auditory, ventral attention, dorsal attention, and cingulo-opercular communities. In networks with higher modularity values and higher connector hub participation coefficients, these communities were also more strongly connected to the fronto-parietal, default mode, salience, and sub-cortical communities.

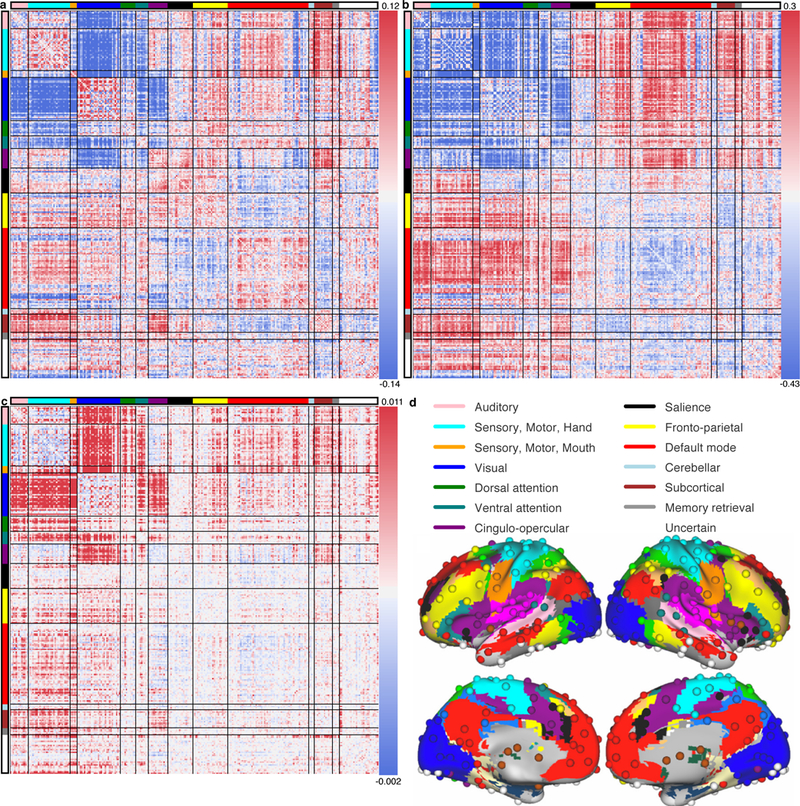

Figure 4 |. Connectivity between primary sensory, motor, dorsal attention, ventral attention, and cingulo-opercular communities mediates the relationship between connector hubs and modularity.

a, Each entry is the Pearson correlation coefficient, r, across subjects (N=476), between modularity (Q) and that edge’s weights. b, For each connector hub, the Pearson r between the hub’s participation coefficients and each edge’s weights across subjects (N=476) was calculated. The matrix in b is the mean of those matrices across connector hubs. c, To investigate the relationship between connector hubs’ participation coefficients, edge weights, and Q, a mediation analysis was performed for each connector hub, with an edge’s weights mediating the relationship between the connector hub’s participation coefficients and Q indices (N=476). Each edge’s mean mediation value between connector hubs’ participation coefficients and Q is shown. d, The anatomical locations of each node and community on the cortical surface36,49.

Given these observations, we sought to identify the edges that mediate the relationship between connector hubs’ increased participation coefficients and modularity (Q), as these are the edges that connector hubs likely tune in order to increase Q. Specifically, a mediation analysis was performed for each connector hub, with an edge’s weights mediating the relationship between the connector hub’s participation coefficients and the Q indices of the networks across subjects. An edge’s mediation value for a given connector hub is the product of two regression coefficients estimated across subjects. The first is the coefficient of the edge’s weights regressed on the connector hub’s participation coefficients. The second is the coefficient of the Q indices regressed on the edge’s weights, controlling for the connector hub’s participation coefficients. Each edge’s mean mediation value across connector hubs is shown in Figure 4c. Two groups of edges mediate the relationship between connector hubs’ participation coefficients and Q indices. The first is edges between the visual, sensory/motor hand, sensory/motor mouth, auditory, ventral attention, dorsal attention, and cingulo-opercular communities. The second is edges between those communities and the fronto-parietal, default mode, and sub-cortical communities. These results are consistent with a mechanistic model in which diverse connector hubs tune connectivity to increase segregation between sensory, motor, and attention systems, which increases the global modularity of the network.
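A minimal NumPy sketch of the product-of-coefficients mediation value described above follows, for one connector hub and one edge. It assumes ordinary least squares with an intercept in both regressions. It omits any significance testing (e.g., bootstrapping) that a full mediation analysis might add. The names `ols_slope` and `mediation_value` are hypothetical.

```python
import numpy as np


def ols_slope(y, *predictors):
    """OLS coefficient of the first predictor, fit with an intercept and any further covariates."""
    design = np.column_stack([np.ones(len(y)), *predictors])
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coefs[1]


def mediation_value(hub_pc, edge_weight, q):
    """Indirect effect a*b of a hub's participation coefficient on Q through one edge."""
    a = ols_slope(edge_weight, hub_pc)      # edge weight regressed on hub PC
    b = ols_slope(q, edge_weight, hub_pc)   # Q regressed on edge weight, controlling for hub PC
    return a * b


# Hypothetical toy data for 476 subjects: PC -> edge (a = 2) -> Q (b = 3), no direct path.
rng = np.random.default_rng(2)
hub_pc = rng.normal(size=476)
edge = 2.0 * hub_pc + rng.normal(0.0, 0.5, 476)
q = 3.0 * edge + rng.normal(0.0, 0.5, 476)
ab = mediation_value(hub_pc, edge, q)  # close to 2 * 3 = 6
```

In the analysis described here, this value would be computed for every edge and every connector hub and then averaged across hubs for each edge.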

Next, we tested the second aspect of the network tuning mechanism: whether the relationship between a connector hub’s participation coefficients and Q indices is mediated primarily by the edges of that connector hub’s neighbors. Neighbors were defined as nodes sharing an edge with the connector hub in a graph at a density of 0.15 (the densest cost we explored). Each mediation value calculated above represents an edge mediating between a node i’s participation coefficients and Q values. Thus, for each connector hub i, there is one set of arrays holding the absolute mediation values of node i’s neighbors’ edges (n=263 for each neighbor j’s array). There is a second set holding the absolute mediation values of node i’s non-neighbors’ edges (n=263 for each non-neighbor j’s array). Edges to node i were ignored in every array (thus, n=264-1). We were only interested in how the participation coefficients of connector hub i modulate Q via the mediation of j’s connectivity to the rest of the network, not j’s connectivity to connector hub i. If a connector hub primarily modulates Q by tuning its neighbors’ edges, then the absolute mediation values in the neighbors’ arrays should be greater than those in the non-neighbors’ arrays. Supplementary Figure 12 shows the distribution, across all connector hubs, of the t-values comparing the two sets of arrays (neighbors versus non-neighbors). Across tasks, the mediation values were consistently and significantly higher for connector hubs’ neighbors’ edges than for non-neighbors’ edges. The same t-values can also be calculated for local hubs, using within community strengths instead of participation coefficients; in that case, each edge mediates between a local hub’s within community strengths and Q.
Across tasks, connector hubs’ neighbors’ mediation t values were higher than local hubs’ neighbors’ mediation t values (two-tailed independent Student’s t-test, t(dof:1358): 3.892, p:0.0001, Cohen’s d:0.219, CI:1.887,6.62), demonstrating that this result is specific to connector hubs. Normality was assessed for all distributions (k2>100.0, p<0.00001 for all tasks). We also performed an alternative analysis that confirmed these relationships (see Methods, Supplementary Figure 13). These results suggest that each connector hub, but not each local hub, tunes its neighbors’ connectivity to be more modular. A connector hub’s high diversity facilitated modularity coefficient does not largely reflect diffuse global connectivity changes. Instead, connector hubs are likely connected in a way that allows them to directly tune the connectivity of their neighbors to be more modular, thereby increasing global network modularity. Thus, the locality facilitated modularity coefficients are likely a downstream effect of connector hub modulation. Supporting this interpretation, we found that, when connector hubs have high participation coefficients, local hubs have high within community strengths (Supplementary Figure 14).
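The neighbor versus non-neighbor comparison for a single connector hub might be sketched as follows. The sketch assumes a precomputed (n × n) matrix of mediation values for that hub, where row j holds node j's edges, and a boolean adjacency matrix from the 0.15-density graph. The column for the hub itself is dropped, so each node contributes n - 1 values, as in the text. `neighbor_mediation_t` is hypothetical. Using SciPy's pooled-variance t-test on the pooled absolute values is an assumption about how the t-values were obtained.

```python
import numpy as np
from scipy import stats


def neighbor_mediation_t(mediation, adjacency, hub):
    """t comparing |mediation| of a hub's neighbors' edges with non-neighbors' edges."""
    n = mediation.shape[0]
    not_hub = np.arange(n) != hub
    neighbors = adjacency[hub] & not_hub
    non_neighbors = ~adjacency[hub] & not_hub
    # Drop each node's edge to the hub, so every row keeps n - 1 values.
    abs_med = np.abs(mediation[:, not_hub])
    t, _ = stats.ttest_ind(abs_med[neighbors].ravel(), abs_med[non_neighbors].ravel())
    return t


# Hypothetical toy network of 30 nodes; node 0 is the hub with neighbors 1 to 9.
rng = np.random.default_rng(3)
n = 30
adjacency = np.zeros((n, n), dtype=bool)
adjacency[0, 1:10] = adjacency[1:10, 0] = True
mediation = rng.normal(0.0, 0.01, (n, n))
mediation[1:10] += 0.05  # neighbors' edges carry larger mediation values
t_hub = neighbor_mediation_t(mediation, adjacency, hub=0)
```

Repeating this for every connector hub, and for local hubs using their own mediation matrices, gives the two t-value distributions that are then compared with a second independent t-test.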

In the series of analyses we report here, we explicitly and comprehensively tested a mechanistic model by leveraging individual differences in connectivity and cognition in humans. Specifically, a model of the diversity and locality of hubs, the modularity of the network, and the network’s connectivity was highly predictive of task performance and a range of subject measures. Critically, the diversity and locality of nodes optimal for each task were also similarly optimal for positive subject measures. Thus, it appears that there is a hub and network structure that is generally optimal for cognitive processing. We found evidence that diverse connector hubs preserve or increase the modularity of brain networks. Moreover, diverse connector hubs tune the connectivity of their neighbors to be more modular. Finally, we found that the diversity of connector hubs simultaneously facilitated higher modularity and task performance. Thus, connector hubs appear to contribute to the maintenance of an optimal modular architecture during integrative cognition without greatly increasing the wiring cost or decreasing modularity5,29,51. In sum, these data are consistent with a mechanistic model of hub function, where connector hubs integrate information and subsequently tune their neighbors’ connectivity to be more modular, which increases the global modularity of the network, allowing local hubs and nodes to perform segregated processing.

Across individuals, we found that diverse connector hubs increase modularity and task performance, regardless of the task. In all seven tasks, the subjects with the most diversely connected connector hubs also had the highest modularity and, in the four tasks for which performance was measured, the highest task performance. Thus, we propose that connector hubs are likely critical for integrating information and tuning their neighbors’ connectivity to be more modular, regardless of the task. Connector hubs are more active during tasks that require many communities1, likely because their functions are more computationally demanding during these tasks. Even so, every task likely requires the functions of connector hubs, as supported by our finding that their diverse connectivity predicts performance in all of the tasks analyzed here.

Our findings complement many previous task-based fMRI studies that have identified regions that are more active during a particular cognitive process. Different regions, including connector hubs, are more or less active in different tasks. We have demonstrated, however, that the diverse connectivity of connector hubs, and their integration and tuning functions, are consistently required across different cognitive processes. Our findings are also consistent with neuropsychological studies of patients with focal brain lesions. Damage to connector hubs has been found to decrease modularity and cause widespread cognitive deficits, whereas damage to local nodes does not decrease modularity and causes more isolated deficits, such as hemiplegia or aphasia26,27. Connector hubs are likely not critical for just one specific cognitive process; rather, their functions and diverse connectivity are required to maintain a cognitively optimal modular structure across cognitive processes. Thus, as we observed here, individual differences in the diversity of connector hubs’ connectivity are predictive of cognitive performance across a range of very different tasks. Although diversely connected connector hubs are critical for successful performance in many different tasks, each task nevertheless recruits distinct cognitive and neural processes and likely engages connectivity patterns that are specifically optimal for that task. Future analyses should seek to understand both the general optimal connectivity patterns of connector hubs found here and the connectivity patterns that are optimal for a single task, including whether and how these connectivity patterns interact.

Methods

Data and Preprocessing

We used fMRI data from the Human Connectome Project48 S500 release. For the task-based fMRI data, Analysis of Functional NeuroImages (AFNI) was used to preprocess the images52. The AFNI command 3dTproject was used. The mean signals from the cerebrospinal fluid mask and the white matter mask, the whole brain signal, and the motion parameters were passed to the “-ort” option, which removes these signals via linear regression. Within AFNI, the “-automask” option was used to generate the masks. The “-passband 0.009 0.08” option, which removes frequencies outside the 0.009 to 0.08 Hz band, was used. Finally, the “-blur 6” option was used, which smooths the images (inside the mask only) with a 6 mm FWHM filter after the time series filtering. The Emotion task was not included in our analyses because of its short length (176 frames; Resting-State: 1200, Social: 274, Relational: 232, Motor: 284, Language: 316, Working Memory: 405, Gambling: 253). For the fMRI data collected at rest, we used the images that had been preprocessed by the Human Connectome Project with ICA-FIX. We then used the AFNI command 3dBandpass to further preprocess these images, removing the mean whole brain signal and frequencies outside 0.009 to 0.08 Hz (specifically, “-ort whole_brain_signal.1D -band 0.009 0.08 -automask”). We did not regress out stimulus or task effects from the time series of each node, because how nodes’ low frequency oscillations respond to stimulus or task effects is meaningful. Moreover, other investigators have noted that task effect regression has minimal effects53.

Subject head motion during fMRI can affect functional connectivity estimates and has been shown to bias brain-task performance relationships54. Performance prediction analyses were therefore executed with scrubbing: frames with frame-wise displacement greater than 0.2 millimeters were removed, along with the frame before and the frame after each such movement. Frame-wise displacement measures movement of the head from one volume to the next. It was computed at every time point as the sum of the absolute values of the differentiated rigid body realignment estimates (translation and rotation in the x, y, and z directions), with rotation values converted to displacements on a sphere with a radius of 50 mm54. Frames were removed after all preprocessing was executed. Subjects with more than 75 percent of frames removed were not analyzed. Moreover, we executed all analyses after regressing out mean frame-wise displacement from the task performance values (Supplementary Figure 2).
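A minimal NumPy sketch of the frame-wise displacement and scrubbing rule described above follows. It assumes six realignment parameters per frame, with translations in millimeters and rotations in radians. If rotations are stored in degrees, set `degrees=True`. The function names are hypothetical, and FD is taken as 0 for the first frame by assumption.

```python
import numpy as np


def framewise_displacement(motion, radius=50.0, degrees=False):
    """FD per frame from (n_frames, 6) realignment parameters.

    Columns 0-2: translations (mm); columns 3-5: rotations (radians, or degrees if degrees=True).
    Rotations are converted to arc length on a sphere of `radius` mm.
    """
    params = np.asarray(motion, dtype=float).copy()
    rot = np.deg2rad(params[:, 3:]) if degrees else params[:, 3:]
    params[:, 3:] = rot * radius
    fd = np.abs(np.diff(params, axis=0)).sum(axis=1)
    return np.concatenate([[0.0], fd])  # the first frame has no preceding frame


def scrub_mask(fd, threshold=0.2):
    """True for frames kept: drops frames with FD > threshold plus the frames before and after."""
    bad = fd > threshold
    flagged = bad.copy()
    flagged[:-1] |= bad[1:]  # the frame before each high-motion frame
    flagged[1:] |= bad[:-1]  # the frame after each high-motion frame
    return ~flagged


# Hypothetical run: a 1 mm shift in x between frames 4 and 5.
motion = np.zeros((10, 6))
motion[5:, 0] = 1.0
fd = framewise_displacement(motion)
keep = scrub_mask(fd)                 # frames 4, 5 and 6 are removed
exclude_subject = keep.mean() < 0.25  # more than 75 percent of frames removed
```

Because frames were removed after all preprocessing, the mask would be applied to the preprocessed node time series just before the correlations are computed.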

Graph Theory Analyses

The Power atlas49 was used to define the 264 nodes in our graph because it was the only atlas that met all of the following requirements. (1) The homogeneity of its nodes is high and the nodes do not share physical boundaries, so it will not overestimate the local connectivity of regions. (2) It is the only atlas defined based on both functional connectivity and studies of task activations, making it optimal for our current analyses. (3) It accurately divides nodes into communities observed with other approaches (e.g., at the voxel level), and this division has been used in many studies33,36,49,55. (4) It has anatomical coverage of cortical, subcortical, and cerebellar regions. A canonical division of nodes into communities aids in the interpretation and generalizability of our results. It can be found at: http://www.nil.wustl.edu/labs/petersen/Resources_files/Consensus264.xls. We also used this division to calculate within and between community edge weight changes across subjects.

All graph theory analyses were executed with our own custom python code (www.github.com/mb3152/brain_graphs) that uses the iGraph library. All analysis code is also publicly available (github.com/mb3152/hcp_performance/). For each task (both LR and RL encoding directions were used) and for each subject, the mean signal from 264 regions in the Power atlas was computed. The Pearson r between all pairs of signals was computed to form a 264 by 264 matrix, which was then Fisher z transformed. We chose Pearson r values to represent functional connectivity (i.e., edges) between nodes, for its simplicity in interpretation and ubiquity in human network neuroscience56. However, more complex statistical measures could be employed, including measures that attempt to estimate the directionality of each edge. The LR and RL matrices were then averaged. The mean matrix was then thresholded, retaining edge weights, at a range of costs (0.05 to 0.15 at 0.01 intervals), a common range and interval in graph theory analyses1,27,33,49. The maximum spanning tree was calculated to ensure all nodes had at least one edge. No negative correlations were included in our analyses. The matrix was then normalized to sum to a common value across subjects, and was used to represent the edges in the graph. Thus, all graphs had the same number of edges and sum of edge weights.
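The graph construction steps above can be sketched with NumPy alone. This is not the brain_graphs code. The maximum spanning tree is a hand-written Prim's algorithm. The graph keeps the union of the top-cost edges and the tree edges, which is one reading of the text; under this reading the edge count can exceed the nominal cost. Negative weights are then removed, and weights are rescaled to a common total of 1 (the common value is an assumption). All function names are hypothetical.

```python
import numpy as np


def fisher_z_connectivity(ts):
    """Fisher z-transformed Pearson r between node time series (n_frames, n_nodes)."""
    r = np.corrcoef(ts, rowvar=False)
    np.fill_diagonal(r, 0.0)  # ignore self-connections (arctanh(1) is infinite)
    return np.arctanh(r)


def maximum_spanning_tree(w):
    """Prim's algorithm on a dense symmetric weight matrix; returns a boolean edge mask."""
    n = w.shape[0]
    in_tree = np.zeros(n, dtype=bool)
    in_tree[0] = True
    best = np.array(w[0], dtype=float)  # strongest link from each node to the tree so far
    parent = np.zeros(n, dtype=int)
    tree = np.zeros((n, n), dtype=bool)
    for _ in range(n - 1):
        j = int(np.argmax(np.where(in_tree, -np.inf, best)))
        tree[j, parent[j]] = tree[parent[j], j] = True
        in_tree[j] = True
        closer = (w[j] > best) & ~in_tree
        best[closer] = w[j][closer]
        parent[closer] = j
    return tree


def build_graph(z, cost, total_weight=1.0):
    """Keep the strongest `cost` fraction of edges plus the maximum spanning tree."""
    n = z.shape[0]
    upper = z[np.triu_indices(n, k=1)]
    n_keep = int(round(cost * upper.size))
    cutoff = np.sort(upper)[::-1][n_keep - 1]
    keep = (z >= cutoff) | maximum_spanning_tree(z)
    np.fill_diagonal(keep, False)
    graph = np.where(keep, z, 0.0)
    graph[graph < 0] = 0.0  # no negative correlations
    return graph * (total_weight / graph.sum())  # common total weight across subjects


# Hypothetical LR and RL runs: 200 frames x 20 nodes sharing a common signal.
rng = np.random.default_rng(4)
shared = rng.normal(size=(200, 1))
ts_lr = shared + 0.5 * rng.normal(size=(200, 20))
ts_rl = shared + 0.5 * rng.normal(size=(200, 20))
z_mean = (fisher_z_connectivity(ts_lr) + fisher_z_connectivity(ts_rl)) / 2
graphs = {cost: build_graph(z_mean, cost) for cost in np.round(np.arange(0.05, 0.16, 0.01), 2)}
```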

For each cost, the InfoMap algorithm57 was run. This method has been shown to be highly accurate on benchmark networks with known community structures, but it is still a heuristic, as community detection is NP-hard58. Although InfoMap does not explicitly maximize Q, it has been shown to estimate community structure accurately in several test cases59. The Q values, participation coefficients, and within community strengths computed from the community structure it detects are therefore accurate and valid. Moreover, in biological networks, InfoMap achieves Q values similar to those of algorithms that maximize Q60. In the current resting-state data, InfoMap Q values and Fast-Greedy Q61 values were correlated at Pearson r=0.87 (dof=474, p<0.001, 95% CI: 0.84, 0.89). InfoMap Q values were higher than Fast-Greedy Q values (Student’s independent t-test, t(dof:952):16.027, p<0.001, Cohen’s d:0.775, 95% CI: 0.024, 0.03). InfoMap Q values and Louvain Q62 values were correlated at Pearson r=0.98 (dof=474, p<0.001, 95% CI: 0.97, 0.98); Louvain Q values were higher than InfoMap Q values. We also compared InfoMap Q values with the pooled distribution of Louvain and Fast-Greedy Q values, both algorithms that explicitly maximize Q. InfoMap Q values were significantly higher than this pooled distribution (Student’s independent t-test, t(dof:1429):5.304, p<0.001, Cohen’s d:0.222, 95% CI: 0.005, 0.011). Overall, InfoMap detects community structures with Q values highly similar to those of other methods (Supplementary Figure 15). Moreover, previous work has demonstrated the stability of community detection and the participation coefficient across community detection methods5.

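The participation coefficients, within community strengths, and Q were calculated at each cost. Q is written analytically as follows. Consider a weighted and undirected graph with n nodes and m edges represented by an adjacency matrix A with elements

$$A_{ij} = \text{edge weight between } i \text{ and } j.$$

Thus, the strength of a node is given by

$$k_i = \sum_j A_{ij}.$$

For a weighted graph, m is the total edge weight, so that $2m = \sum_{ij} A_{ij}$. Modularity (Q) can then be written as:

$$Q = \frac{1}{2m} \sum_{ij} \left[ A_{ij} - \gamma \, p_{ij} \right] \delta(c_i, c_j).$$

Here, $p_{ij}$ is the probability that nodes i and j are connected in a random null network,

$$p_{ij} = \frac{k_i k_j}{2m},$$

γ is the resolution parameter, $c_i$ is the community to which node i belongs, and $\delta(c_i, c_j) = 1$ if $c_i = c_j$ and $\delta(c_i, c_j) = 0$ if $c_i \neq c_j$.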
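The definition of Q above translates directly into NumPy. The sketch assumes a dense symmetric weight matrix with a zero diagonal and one community label per node. It evaluates Q for a given partition and performs no community detection. `modularity` is a hypothetical helper, not the brain_graphs or iGraph implementation.

```python
import numpy as np


def modularity(A, communities, gamma=1.0):
    """Q = 1/(2m) * sum_ij [A_ij - gamma * k_i k_j / (2m)] * delta(c_i, c_j)."""
    A = np.asarray(A, dtype=float)
    c = np.asarray(communities)
    k = A.sum(axis=1)                 # node strengths k_i
    two_m = A.sum()                   # 2m: total edge weight, each edge counted twice
    p = np.outer(k, k) / two_m        # null-model term p_ij
    same = c[:, None] == c[None, :]   # delta(c_i, c_j)
    return ((A - gamma * p) * same).sum() / two_m


# Two disconnected triangles.
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)]:
    A[i, j] = A[j, i] = 1.0
q_split = modularity(A, [0, 0, 0, 1, 1, 1])  # each triangle its own community: 0.5
q_single = modularity(A, [0] * 6)           # everything in one community: 0.0
```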

Given a particular community assignment, the participation coefficient of each node can be calculated. The participation coefficient (PC) of node i is defined as:

$$PC_i = 1 - \sum_{s=1}^{N_M} \left( \frac{K_{is}}{K_i} \right)^2$$

where $K_i$ is the sum of i’s edge weights, $K_{is}$ is the sum of i’s edge weights to community s, and $N_M$ is the total number of communities. Thus, the participation coefficient is a measure of how evenly distributed a node’s edges are across communities. A node’s participation coefficient is maximal if it has an equal sum of edge weights to each community in the network. A node’s participation coefficient is 0 if all of its edges are to a single community.

Finally, we calculate the within community strength value for each node as follows:

$$z_i = \frac{\kappa_i - \bar{\kappa}_{s_i}}{\sigma_{\kappa_{s_i}}}$$

where $\kappa_i$ is the number of links of node i to other nodes in its community $s_i$, $\bar{\kappa}_{s_i}$ is the average of κ over all the nodes in $s_i$, and $\sigma_{\kappa_{s_i}}$ is the standard deviation of κ in $s_i$. Thus, the within community strength measures how well connected node i is to other nodes in its community relative to the other nodes in that community.
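Both node-level measures can be sketched in NumPy for a weighted, undirected adjacency matrix and a community label per node. The within community strength below uses each node's summed within-community edge weight, the weighted analogue of the link count in the text; for a binary matrix the two coincide. It also uses the population standard deviation. Both choices are assumptions, as are the function names.

```python
import numpy as np


def participation_coefficient(A, communities):
    """PC_i = 1 - sum_s (K_is / K_i)^2 for a weighted, undirected adjacency matrix."""
    A = np.asarray(A, dtype=float)
    c = np.asarray(communities)
    K = A.sum(axis=1)
    K_s = np.stack([A[:, c == s].sum(axis=1) for s in np.unique(c)], axis=1)  # (n, N_M)
    frac = np.divide(K_s, K[:, None], out=np.zeros_like(K_s), where=K[:, None] > 0)
    pc = 1.0 - (frac ** 2).sum(axis=1)
    pc[K == 0] = 0.0  # isolated nodes have no edges to distribute
    return pc


def within_community_strength(A, communities):
    """z-score of each node's within-community weight relative to its own community."""
    A = np.asarray(A, dtype=float)
    c = np.asarray(communities)
    kappa = np.array([A[i, c == c[i]].sum() for i in range(len(c))])
    z = np.zeros(len(c))
    for s in np.unique(c):
        members = c == s
        sd = kappa[members].std()
        if sd > 0:
            z[members] = (kappa[members] - kappa[members].mean()) / sd
    return z


# Two triangles joined by a bridge between nodes 2 and 3.
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    A[i, j] = A[j, i] = 1.0
pc = participation_coefficient(A, [0, 0, 0, 1, 1, 1])  # pc[2] = 1 - (2/3)**2 - (1/3)**2 = 4/9

# One four-node community: node 0 has the most within-community weight.
B = np.zeros((4, 4))
for i, j in [(0, 1), (0, 2), (0, 3), (1, 2)]:
    B[i, j] = B[j, i] = 1.0
wcs = within_community_strength(B, [0, 0, 0, 0])  # wcs[0] = (3 - 2) / sqrt(0.5)
```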

Each subject’s participation coefficient, within community strength, and Q were the mean of those values across the range of costs. All analyses were executed and all prediction models were fit separately for each task.

Tasks

The following descriptions for each task have been adapted for brevity from the Human Connectome Project Manual63.

Working Memory.

The category specific representation task and the working memory task are combined into a single task paradigm. Participants were presented with blocks of trials that consisted of pictures of places, tools, faces, and body parts (non-mutilated parts of bodies with no “nudity”). Within each run, the 4 different stimulus types were presented in separate blocks. Also, within each run, half of the blocks use a 2-back working memory task and half use a 0-back working memory task (as a working memory comparison). A 2.5 second cue indicates the task type (and target for 0-back) at the start of the block. Each of the two runs contains 8 task blocks (10 trials of 2.5 seconds each, for 25 seconds) and 4 fixation blocks (15 seconds). On each trial, the stimulus is presented for 2 seconds, followed by a 500 ms inter-task interval (ITI).

Gambling.

Participants play a card guessing game in which they are asked to guess the number on a mystery card (represented by a “?”) in order to win or lose money. Participants are told that potential card numbers range from 1-9. They indicate whether they think the mystery card number is more or less than 5 by pressing one of two buttons on the response box. Feedback is the number on the card (generated by the program as a function of whether the trial was a reward, loss, or neutral trial) and either: 1) a green up arrow with “$1” for reward trials, 2) a red down arrow next to -$0.50 for loss trials, or 3) the number 5 and a gray double headed arrow for neutral trials. The “?” is presented for up to 1500 ms (if the participant responds before 1500 ms, a fixation cross is displayed for the remaining time), followed by feedback for 1000 ms. There is a 1000 ms ITI with a “+” presented on the screen. The task is presented in blocks of 8 trials that are either mostly reward or mostly loss. Mostly reward blocks contain 6 reward trials pseudo-randomly interleaved with either 1 neutral and 1 loss trial, 2 neutral trials, or 2 loss trials. Mostly loss blocks contain 6 loss trials pseudo-randomly interleaved with either 1 neutral and 1 reward trial, 2 neutral trials, or 2 reward trials. In each of the two runs, there are 2 mostly reward and 2 mostly loss blocks, interleaved with 4 fixation blocks (15 seconds each).

Motor.

Participants are presented with visual cues that ask them to tap their left or right fingers, squeeze their left or right toes, or move their tongue, in order to map motor areas. Each block of a movement type lasts 12 seconds (10 movements) and is preceded by a 3-second cue. Each of the two runs contains 13 blocks: 2 of tongue movements, 4 of hand movements (2 right and 2 left), 4 of foot movements (2 right and 2 left), and 3 15-second fixation blocks.

Language & Math.

The task consists of two runs that each interleave 4 blocks of a story task and 4 blocks of a math task. The lengths of the blocks vary (average of approximately 30 seconds). However, the task was designed so that the math task blocks match the length of the story task blocks, with some additional math trials at the end of the task to complete the 3.8 minute run as needed. The story blocks present participants with brief auditory stories (5-9 sentences) adapted from Aesop’s fables, followed by a 2-alternative forced-choice question that asks participants about the topic of the story. For example, after a story about an eagle that saves a man who had done him a favor, participants were asked, “Was that about revenge or reciprocity?” The math task also presents trials auditorily and requires subjects to complete addition and subtraction problems. The trials present subjects with a series of arithmetic operations (e.g., “fourteen plus twelve”), followed by “equals” and then two choices (e.g., “twenty-nine or twenty-six”). Participants push a button to select either the first or the second answer. The tasks are adaptive to try to maintain a similar level of difficulty across participants.

Social (Theory of Mind).

Participants were presented with short video clips (20 seconds) of objects (squares, circles, triangles) that either interacted in some way, or moved randomly on the screen. After each video clip, participants judge whether the objects had a mental interaction (an interaction that appears as if the shapes are taking into account each other’s feelings and thoughts), Not Sure, or No interaction (i.e., there is no obvious interaction between the shapes and the movement appears random). Each of the two task runs has 5 video blocks (2 Mental and 3 Random in one run, 3 Mental and 2 Random in the other run) and 5 fixation blocks (15 seconds each).

Relational.

The stimuli are 6 different shapes filled with 1 of 6 different textures. In the relational processing condition, participants are presented with 2 pairs of objects, with one pair at the top of the screen and the other pair at the bottom of the screen. They are told to first decide what dimension differs across the top pair of objects (shape or texture). They should then decide whether the bottom pair of objects also differs along that same dimension (e.g., if the top pair differs in shape, does the bottom pair also differ in shape?). In the control matching condition, participants are shown two objects at the top of the screen, one object at the bottom of the screen, and a word in the middle of the screen (either “shape” or “texture”). They are told to decide whether the bottom object matches either of the top two objects on that dimension (e.g., if the word is “shape”, is the bottom object the same shape as either of the top two objects?). For both conditions, the subject responds yes or no using one of two buttons. For the relational condition, the stimuli are presented for 3500 ms, with a 500 ms ITI, and there are 4 trials per block. In the matching condition, stimuli are presented for 2800 ms, with a 400 ms ITI, and there are 5 trials per block. Each type of block (relational or matching) lasts a total of 18 seconds. In each of the two runs of this task, there are 3 relational blocks, 3 matching blocks, and 3 16-second fixation blocks.

Performance measures.

All performance measures were chosen a priori. In the working memory task, we used the mean accuracy across all n-back conditions (face, body, place, tool). In the relational task, we used the mean accuracy across both the matching and the relational conditions. For the language task, we used the maximum difficulty level that the subject achieved across both the math and language conditions. We did not use accuracy, because the task adapts its difficulty to how well the subject is doing, making accuracy an inaccurate measure of performance for this task. For the social task, almost all subjects correctly identified the social interactions as social interactions, so we used the percentage of correctly identified random interactions.

Deep neural network model.

A deep neural network is a supervised learning algorithm that can learn a non-linear function for regression or classification. Unlike logistic regression, there are one or more non-linear layers, called hidden layers, between the input and the output layer. Thus, the model is trained to relate a set of input features to outputs by learning weights between neurons across adjacent layers (Supplementary Figure 1). Our implementation uses the sklearn python library. Explicitly, a prediction for subject z is calculated as:

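```python
from sklearn.neural_network import MLPRegressor

# Leave-one-subject-out: fit on all other subjects, then predict held-out subject z.
model = MLPRegressor(hidden_layer_sizes=(8, 12, 8, 12))
model.fit(x[subjects != z], y[subjects != z])
prediction = model.predict(x[subjects == z])
```

where x is the set of features across subjects (one row per subject), y is the task performance across subjects, and subjects is an array of subject identifiers aligned with the rows of x and y.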

Analytic quality of diversity and locality facilitated modularity coefficients

To further understand diversity and locality facilitated modularity coefficients, we performed an iterative split-half analysis. Specifically, we estimated the mean within community strength or participation coefficient of each node in one half of the subjects. We estimated each node’s locality or diversity facilitated modularity coefficient in the other half, testing 10,000 random splits of subjects. All relationships were reliably observed in every cognitive state (Supplementary Figure 7). Next, we sought to determine whether this relationship was a necessary feature of the underlying mathematics or a phenomenon specific to the neurophysiology of brain networks. To address this question, we tested four null model networks. None of them exhibited a significant relationship between mean participation coefficient and diversity facilitated modularity coefficient (Supplementary Figure 8). As a third check, we assessed whether the number of communities identified in the network was inadvertently biasing our results. The number of communities in each network was negatively correlated with the modularity value Q (Supplementary Figure 9). After regressing out the number of communities in each network from the modularity value, our findings remained qualitatively unchanged (Supplementary Figure 10). Finally, we tested whether the relationships between variables of interest were linear (and therefore appropriate to examine with Pearson r correlation coefficients) or nonlinear. To address this question, we fit 1st, 2nd, and 3rd order curves to the relationship between participation coefficients and modularity values. Many relationships were well captured by a first order fit, with connector hubs’ maximal participation coefficients corresponding to maximal Q indices; only a few showed a more nonlinear relationship (Supplementary Figure 11).
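The split-half procedure for participation coefficients might be sketched as follows. It assumes a subjects × nodes array of participation coefficients and one Q value per subject. Each split correlates node means from one half with the node-wise r(PC, Q) from the other half. The names and the synthetic data are hypothetical, and the toy run uses far fewer than the 10,000 splits described above.

```python
import numpy as np


def nodewise_pearson(node_values, target):
    """Pearson r across subjects between each node's values and a per-subject target."""
    x = node_values - node_values.mean(axis=0)
    y = target - target.mean()
    return (x * y[:, None]).sum(axis=0) / np.sqrt((x ** 2).sum(axis=0) * (y ** 2).sum())


def split_half(pc, q, n_splits=10000, seed=0):
    """Correlate mean PC (half A) with diversity facilitated modularity coefficients (half B)."""
    rng = np.random.default_rng(seed)
    n_subjects = len(q)
    results = np.empty(n_splits)
    for s in range(n_splits):
        order = rng.permutation(n_subjects)
        half_a, half_b = order[: n_subjects // 2], order[n_subjects // 2:]
        mean_pc = pc[half_a].mean(axis=0)              # node means from half A
        dfm = nodewise_pearson(pc[half_b], q[half_b])  # r(PC_i, Q) from half B
        results[s] = np.corrcoef(mean_pc, dfm)[0, 1]
    return results


# Hypothetical data: nodes with higher mean PC track Q more closely.
rng = np.random.default_rng(5)
node_mean = np.linspace(0.1, 0.9, 30)
q = rng.normal(size=400)
pc = node_mean + 0.05 * node_mean * q[:, None] + 0.05 * rng.normal(size=(400, 30))
split_r = split_half(pc, q, n_splits=50)
```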

Alternative analysis of how connector hubs tune the connectivity of their neighbors

We executed an alternative analysis to test whether connector hubs tune the connectivity of their neighbors to be more modular. For each node i, we calculated a matrix whose (j,k)th entry is the Pearson r correlation coefficient, computed across subjects, between the participation coefficients of node i and the edge weights between nodes j and k. These r values allowed us to test whether a node's participation coefficients correlate positively with its neighbors' increased connectivity to their own communities and decreased connectivity to other communities. For each node j, we subtracted the sum of the r values corresponding to node j's between community edge weights from the sum of the r values corresponding to node j's within community edge weights. This value therefore measures how strongly the participation coefficients of node i are correlated with more modular (within community) connectivity of node j. We used the partition of nodes into communities that was defined along with the nodes themselves (Figure 6d)49. Edges between node i and node j were ignored in this calculation, as the participation coefficients of node i are likely highly correlated with the edge weights between nodes i and j; we were only interested in how the participation coefficient of node i modulates node j's connectivity to the rest of the network, not node j's connectivity to node i. Edges that were not positive on average across subjects were excluded from this analysis, as the interpretation of negative edges in fMRI-based networks is not obvious (results were similar when only the top 25 percent of edges were included; Supplementary Figure 13). Finally, separately for connector hubs and non-connector hubs (as nodes i), we correlated the edge strength between nodes i and j with the amount of modulation of node j's modularity by node i.
A positive correlation means that a node is biased to modulate the connectivity of its neighbors, rather than its non-neighbors, to be more modular. In all cognitive states, these correlations were positive and significant only for connector hubs (Pearson's r > 0.17, p < 0.001, Bonferroni corrected for 7 tests; Supplementary Figure 13), suggesting that connector hubs tune the connectivity of their neighbors to be more modular.
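The modulation score described above can be sketched as follows, under stated assumptions: per-subject weighted networks are held in a hypothetical `W` array of shape (subjects, nodes, nodes), participation coefficients in a hypothetical `pc` array of shape (subjects, nodes), and community labels in `communities`. The function names `modulation_scores` and `neighbor_bias` are illustrative only, and how connector hubs are selected is not shown.

```python
import numpy as np


def modulation_scores(i, pc, W, communities):
    """score[j] = sum of r(PC_i, W_jk) over j's within-community edges k
    minus the same sum over j's between-community edges (node i excluded)."""
    n_nodes = pc.shape[1]
    x = pc[:, i] - pc[:, i].mean()
    Wc = W - W.mean(axis=0)
    denom = np.sqrt((x ** 2).sum()) * np.sqrt((Wc ** 2).sum(axis=0))
    with np.errstate(invalid="ignore", divide="ignore"):
        r = np.einsum("s,sjk->jk", x, Wc) / denom  # r across subjects for every edge j-k
    r = np.nan_to_num(r)                            # constant edges (e.g. a zero diagonal) -> 0
    positive = W.mean(axis=0) > 0                   # keep edges positive on average
    scores = np.full(n_nodes, np.nan)
    for j in range(n_nodes):
        if j == i:
            continue
        keep = positive[j].copy()
        keep[[i, j]] = False                        # ignore edges to node i and self-edges
        same = communities == communities[j]
        scores[j] = r[j, keep & same].sum() - r[j, keep & ~same].sum()
    return scores


def neighbor_bias(i, pc, W, communities):
    """Correlate mean edge weight i-j with how much i modulates j's modularity."""
    scores = modulation_scores(i, pc, W, communities)
    mask = np.arange(len(scores)) != i
    return np.corrcoef(W.mean(axis=0)[i, mask], scores[mask])[0, 1]


# Hypothetical random data, only to show the expected shapes.
rng = np.random.default_rng(0)
S, N = 60, 12
W = rng.random((S, N, N))
W = (W + W.transpose(0, 2, 1)) / 2
W[:, np.arange(N), np.arange(N)] = 0.0
pc = rng.random((S, N))
communities = np.repeat([0, 1, 2], 4)
scores = modulation_scores(0, pc, W, communities)
bias = neighbor_bias(0, pc, W, communities)
```

Computing `r` for all edges at once with `einsum` avoids an explicit double loop over j and k; only the per-node summation remains a loop.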

To test whether this relationship also exists for local hubs, we calculated a similar matrix in which, for each node i, the (j,k)th entry is the Pearson r value, computed across subjects, between the within community strengths of node i and the edge weight between nodes j and k. Separately for local hubs and non-local hubs (as nodes i), we then correlated the edge strength between nodes i and j with the amount of modulation of node j's modularity by the within community strength of node i. None of these correlations were robust (−0.1 < r < 0.1). These analyses add to our conclusion, demonstrated in the Results, that connector hubs facilitate higher modularity by tuning the connectivity of their neighbors to be more modular.
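The two node measures contrasted in these analyses can be sketched with the standard weighted forms of the Guimerà and Amaral definitions3: the participation coefficient P_i = 1 − Σ_s (k_is / k_i)², and within community strength as the z-scored strength of a node to its own community relative to the other members of that community. Whether these exact forms match the Methods is an assumption here; the sketch expects a symmetric weight matrix with a zero diagonal.

```python
import numpy as np


def participation_coefficient(W, communities):
    """P_i = 1 - sum_s (k_is / k_i)^2, using weighted strengths."""
    k = W.sum(axis=1)
    labels = np.unique(communities)
    k_is = np.stack([W[:, communities == c].sum(axis=1) for c in labels], axis=1)
    with np.errstate(invalid="ignore", divide="ignore"):
        pc = 1.0 - ((k_is / k[:, None]) ** 2).sum(axis=1)
    return np.where(k > 0, pc, 0.0)  # isolated nodes get P = 0


def within_community_strength(W, communities):
    """z-scored strength of each node to its own community."""
    z = np.zeros(W.shape[0])
    for c in np.unique(communities):
        members = np.flatnonzero(communities == c)
        k_in = W[np.ix_(members, members)].sum(axis=1)
        sd = k_in.std()
        z[members] = (k_in - k_in.mean()) / sd if sd > 0 else 0.0
    return z


# Two triangles joined by a single bridge edge between nodes 2 and 3.
W = np.zeros((6, 6))
for a, b in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    W[a, b] = W[b, a] = 1.0
communities = np.array([0, 0, 0, 1, 1, 1])
pc = participation_coefficient(W, communities)
z = within_community_strength(W, communities)
```

In this toy graph, node 0 has all of its connections inside its own community, so its participation coefficient is 0, while bridge node 2 splits its strength 2:1 across two communities, giving 1 − (4/9 + 1/9) = 4/9.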

Statistical Methods

The number of subjects was determined by the number of subjects released by the Human Connectome Project at the start of the analyses. As this was the largest dataset of its kind at that time, and the number of subjects is greater than in many similar analyses47, no power analysis was computed. Total N was 475 for Working Memory, 473 for Gambling, 458 for Relational, 475 for Motor, 472 for Language & Math, 474 for Social, and 476 for Resting State. However, because we analyzed only subjects with both resting-state and task scans, N was 473 for Working Memory, 457 for Relational, 471 for Language & Math, and 473 for Social. This results in a unique N of 476 across tasks: 476 different subjects had a resting-state scan and at least one task scan. Because scrubbing (which removes frames with large head motion) can remove too many frames from the time series, subjects with fewer than 75 percent of frames remaining were excluded from the analyses that implemented scrubbing; thus, for analyses using scrubbed data, N was 351 for Working Memory, 335 for Relational, 348 for Language & Math, and 358 for Social.
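The inclusion rules above reduce to simple set operations. The following sketch uses hypothetical inputs: `rest` is a set of subject IDs with a resting-state scan, `task_scans` maps each task to its subject IDs, and `retained_fraction` is an assumed mapping from (subject, task) to the fraction of frames kept after scrubbing. It is an illustration of the stated criteria, not the authors' pipeline.

```python
def included_subjects(rest, task_scans, retained_fraction=None, min_retained=0.75):
    """Return per-task included subjects and the unique set across tasks."""
    # Keep only subjects who have both the resting-state scan and the task scan.
    per_task = {task: subs & rest for task, subs in task_scans.items()}
    if retained_fraction is not None:
        # Scrubbed analyses: drop subjects with < 75% of frames remaining.
        per_task = {
            task: {s for s in subs if retained_fraction.get((s, task), 0.0) >= min_retained}
            for task, subs in per_task.items()
        }
    # Unique N: subjects with rest and at least one included task scan.
    unique = set().union(*per_task.values())
    return per_task, unique


per_task, unique = included_subjects({1, 2, 3}, {"WM": {1, 2, 4}, "Social": {3, 5}})
scrubbed, _ = included_subjects(
    {1, 2, 3},
    {"WM": {1, 2, 4}, "Social": {3, 5}},
    retained_fraction={(1, "WM"): 0.8, (2, "WM"): 0.5, (3, "Social"): 0.75},
)
```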

All confidence intervals (CIs) are 95% intervals (alpha = 0.05). For CIs on Pearson r correlation coefficients, r is Fisher transformed to z, the interval is computed on z, and the z bounds are then back-transformed to r. For t-tests, the CI gives the lower and upper bounds on the difference in means between the two distributions. For all t-tests, distributions were assessed with D'Agostino and Pearson's omnibus test K² and were either confirmed as normal (p < 0.001) or showed no significant evidence of non-normality (K² > 0.0). All p values are from two-sided tests.
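The Fisher-transform CI for r can be written in a few lines. This sketch assumes the usual standard error 1/√(n − 3) on the z scale and a normal critical value; it uses only the Python standard library.

```python
import math
from statistics import NormalDist


def pearson_r_ci(r, n, alpha=0.05):
    """CI for a Pearson r via the Fisher z transform."""
    z = math.atanh(r)                              # Fisher transform r -> z
    se = 1.0 / math.sqrt(n - 3)                    # standard error on the z scale
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    # Back-transform the z bounds to the r scale.
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


lo, hi = pearson_r_ci(0.5, 100)  # approximately (0.34, 0.63)
```

The interval is asymmetric around r on the r scale because tanh compresses values near ±1. For the normality check, SciPy's `scipy.stats.normaltest` implements the D'Agostino and Pearson K² test.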

All p values that are part of a family of tests are Bonferroni corrected for multiple comparisons. For example, we test whether two tasks' hub and network structures are similarly optimal for the same subject measures, across a large number of subject measures. In this case, we applied a Bonferroni correction to determine whether the effect held for particular subject measures; the number of tests equals the number of subject measures, 47. Because individual subject networks were built independently for each task and task performance differs between tasks, tests across tasks are not strictly in the same family. However, to be conservative, we still Bonferroni corrected these p values, with a family size of either 4 or 7, depending on the number of tasks analyzed. Unless otherwise stated, all p values are Bonferroni corrected.
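A Bonferroni correction multiplies each p value by the family size and caps the result at 1. The optional `n_tests` parameter in this sketch is a hypothetical convenience for cases such as the 4- or 7-task families, where the family size is fixed in advance.

```python
def bonferroni(pvalues, n_tests=None):
    """Bonferroni-adjusted p values: min(1, p * m)."""
    m = len(pvalues) if n_tests is None else n_tests
    return [min(1.0, p * m) for p in pvalues]


adjusted = bonferroni([0.001, 0.01, 0.2])  # family of 3 tests
capped = bonferroni([0.5, 0.4])            # 0.5 * 2 exceeds 1, so it is capped
```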

Many statistics here are calculated without reported p values. For example, Pearson r values are used to calculate functional connectivity. Here, only the r values are of interest: more precisely, individual differences in the r values across subjects, and how these differences relate to individual differences in cognition. This treatment of multiple comparisons in the context of functional connectivity and individual differences in cognition is common and recommended47,64. We extend this notion to other analyses here as well. For example, we use the Pearson correlation coefficient r to compare how well different nodes' participation coefficients across subjects explain variance in network modularity or task performance (the diversity facilitated modularity and performance coefficients). In these cases, we relate these r values to other measures and are only concerned with how these r values explain another distribution's variance (here, we find a positive correlation between these r values and a node's mean participation coefficient across subjects). We are not concerned with the statistical significance of any particular r value as estimated by its p value. We care about the distribution of r values, not the distribution of p values, and we do not make any claims about any single r value. Thus, these p values are neither reported nor corrected for multiple comparisons. This is precisely how functional connectivity is treated statistically.

Supplementary Material

Acknowledgements

This work was supported by NIH Grant NS79698 and the National Science Foundation Graduate Research Fellowship Program under Grant no. DGE 1106400 to MAB and MD. MAB would also like to acknowledge NIH T32 Ruth L. Kirschstein Institutional National Research Service Award (5T32MH106442-02). BTTY was also supported by Singapore MOE Tier 2 (MOE2014-T2-2-016), NUS Strategic Research (DPRT/944/09/14), NUS SOM Aspiration Fund (R185000271720), Singapore NMRC (CBRG/0088/2015), NUS YIA and the Singapore National Research Foundation (NRF) Fellowship (Class of 2017). DSB would also like to acknowledge support from the John D. and Catherine T. MacArthur Foundation, the Alfred P. Sloan Foundation, the Army Research Laboratory and the Army Research Office through contract numbers W911NF-10-2-0022 and W911NF-14-1-0679, the National Institutes of Health (2-R01-DC-009209-11, 1R01HD086888-01, R01-MH107235, R01-MH107703, and R21-MH-106799), the Office of Naval Research, and the National Science Foundation (BCS-1441502, PHY-1554488, and BCS-1631550). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Footnotes

Data availability

Data were provided by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by the 16 NIH Institutes and Centers that support the NIH Blueprint for Neuroscience Research and by the McDonnell Center for Systems Neuroscience at Washington University. The content is solely the responsibility of the authors and does not necessarily represent the official views of any of the funding agencies. All analyses were executed in accordance with the authors’ institutions’ relevant ethical regulations as well as the WU-Minn HCP Consortium Open Access Data Use Terms. Informed consent was obtained from all participants.

Code availability

All graph theory analyses were executed with our own custom Python code (www.github.com/mb3152/brain_graphs), which uses the igraph library. All analysis code is also publicly available (github.com/mb3152/hcp_performance/).

Competing Interests

The authors declare no competing interests.

References

- 1. Bertolero MA, Yeo BTT & D’Esposito M The modular and integrative functional architecture of the human brain. Proc. Natl. Acad. Sci. U.S.A. 112, E6798–807 (2015).

- 2. Bertolero MA, Yeo BTT & D’Esposito M The diverse club. Nature Communications 8, 1277 (2017).

- 3. Guimerà R & Amaral LAN Functional cartography of complex metabolic networks. Nature 433, 895–900 (2005).

- 4. Meunier D, Lambiotte R & Bullmore ET Modular and hierarchically modular organization of brain networks. Front. Neurosci. 4, 200 (2010).

- 5. Bertolero MA, Yeo BTT & D’Esposito M The diverse club. Nature Communications 8, 1–10 (2017).

- 6. Bassett DS et al. Efficient Physical Embedding of Topologically Complex Information Processing Networks in Brains and Computer Circuits. PLoS Comput Biol 6, e1000748 (2010).

- 7. Laughlin SB, de Ruyter van Steveninck RR & Anderson JC The metabolic cost of neural information. Nature Neuroscience 1, 36–41 (1998).

- 8. Lord L-D, Expert P, Huckins JF & Turkheimer FE Cerebral Energy Metabolism and the Brain’s Functional Network Architecture: An Integrative Review. Journal of Cerebral Blood Flow & Metabolism 33, 1347–1354 (2013).

- 9. Raichle ME & Gusnard DA Appraising the brain’s energy budget. Proceedings of the National Academy of Sciences 99, 10237–10239 (2002).

- 10. Harris JJ & Attwell D The Energetics of CNS White Matter. Journal of Neuroscience 32, 356–371 (2012).

- 11. Kuzawa CW et al. Metabolic costs and evolutionary implications of human brain development. Proceedings of the National Academy of Sciences 111, 13010–13015 (2014).

- 12. Krienen FM, Yeo BTT, Ge T, Buckner RL & Sherwood CC Transcriptional profiles of supragranular-enriched genes associate with corticocortical network architecture in the human brain. Proc. Natl. Acad. Sci. U.S.A. 113, E469–78 (2016).

- 13. Hawrylycz M et al. Canonical genetic signatures of the adult human brain. Nature Neuroscience 18, 1832–1844 (2015).

- 14. Wagner GP & Zhang J The pleiotropic structure of the genotype-phenotype map: the evolvability of complex organisms. Nature Reviews Genetics 12, 204–213 (2011).

- 15. Clune J, Mouret J-B & Lipson H The evolutionary origins of modularity. Proc. Biol. Sci. 280, 1–9 (2013).

- 16. Kashtan N & Alon U Spontaneous evolution of modularity and network motifs. Proc. Natl. Acad. Sci. U.S.A. 102, 13773–13778 (2005).

- 17. Simon HA in Facets of Systems Science 457–476 (Springer US, 1991). doi: 10.1007/978-1-4899-0718-9_31

- 18. Fodor J The Modularity of Mind. (MIT Press, 1983).

- 19. Coltheart M Modularity and cognition. Trends in Cognitive Sciences 3, 115–120 (1999).

- 20. Robinson PA, Henderson JA, Matar E, Riley P & Gray RT Dynamical Reconnection and Stability Constraints on Cortical Network Architecture. Physical Review Letters 103, 108104 (2009).

- 21. Clune J, Mouret J-B & Lipson H The evolutionary origins of modularity. Proc. Biol. Sci. 280, 20122863 (2013).

- 22. Tosh CR & McNally L The relative efficiency of modular and non-modular networks of different size. Proc. Biol. Sci. 282, 20142568 (2015).

- 23. Stevens AA, Tappon SC, Garg A & Fair DA Functional brain network modularity captures inter- and intra-individual variation in working memory capacity. PLOS ONE 7, e30468 (2012).

- 24. Arnemann KL et al. Functional brain network modularity predicts response to cognitive training after brain injury. Neurology 84, 1568–1574 (2015).

- 25. Modular Brain Network Organization Predicts Response to Cognitive Training in Older Adults. PLoS ONE (2016).

- 26. Warren DE et al. Network measures predict neuropsychological outcome after brain injury. Proc. Natl. Acad. Sci. U.S.A. 111, 14247–14252 (2014).