Abstract

Background:

The objective of this study was to determine whether gaze patterns could differentiate expertise during simulated ultrasound-guided Internal Jugular Central Venous Catheterization (US-IJCVC) and if expert gazes were different between simulators of varying functional and structural fidelity.

Methods:

A 2017 study compared eye gaze patterns of expert surgeons (n=11), senior residents (n=4), and novices (n=7) during CVC needle insertions using the dynamic haptic robotic trainer (DHRT), a system which simulates US-IJCVC. Expert gaze patterns were also compared between a manikin and the DHRT.

Results:

Expert gaze patterns were consistent between the manikin and DHRT environments (p = 0.401). On the DHRT system, CVC experience significantly impacted the percent of time participants spent gazing at the ultrasound screen (p < 0.0005) and the needle and ultrasound probe (p < 0.0005).

Conclusion:

Gaze patterns differentiate expertise during ultrasound-guided CVC placement and the fidelity of the simulator does not impact gaze patterns.

Keywords: central venous catheterization, medical training simulation, eye tracking, residency training

Introduction

Ultrasound-guided central venous catheterization (CVC) is a procedural skill that has been taught on manikin simulators for over a decade1. While research has shown these simulators are effective for short-term skill gains, these manikins have several known limitations, including a lack of long-term skill retention2. This has been attributed, in part, to the fact that these simulators only train surgeons on a single patient anatomy and thus do not represent the range of patient profiles surgeons face in clinical settings3. In addition, these simulators lack objective performance criteria and instead rely on a trained preceptor (e.g. faculty) to be present in order to subjectively evaluate trainee performance using a proficiency checklist4–6, which includes evaluation of mechanical (e.g. motion and accuracy) and procedural skills (e.g. aseptic technique). Finally, current CVC simulators provide only basic summative feedback on performance (blue liquid is aspirated if the catheter hits a vein) and no concurrent or formative feedback. Because of these shortcomings and the overreliance on faculty feedback, simulation-based surgical training has been criticized as being resource intensive7,8.

In light of these deficiencies, the Dynamic Haptic Robotic Trainer (DHRT) was developed to provide residents with CVC training on a variety of patient anatomies while providing automated feedback during the training process, see Figure 1. While the DHRT system lacks some of the physical realism of manikin simulators, it has increased functional fidelity. Specifically, the DHRT provides training on ultrasound guided CVC needle placement by simulating variations in patient anatomy through changes in a virtual ultrasound image (e.g. size and depth of the vessels) and through haptic feedback provided through a robotic arm that simulates force changes of different types of tissues (e.g. skin, adipose tissue, vessel), see Pepley et al. for full details9,10. The DHRT also provides learners with automated feedback on their mechanical performance after each needle insertion attempt without the need of a trained preceptor, including feedback on the number of insertion attempts, average angle of insertion, and the final distance of the needle tip from the center of the vein11. Prior research has shown that novice learners approach expert performance when training on the DHRT system10 and indicated that there were no differences in pre- to post-test learning between manikin- and DHRT-based instruction12. While this prior work on the DHRT is promising, the feedback provided to learners is based purely on their mechanical skill acquisition (e.g. needle angle) and not on the cognitive skills necessary to complete this surgical procedure. This is important because prior studies have shown that cognitive skills training and mental imagery practice enhance the learning and acquisition of surgical skills13–15. One method of improving cognitive skills training in the DHRT system is through the use of eye-tracking and gaze-training.

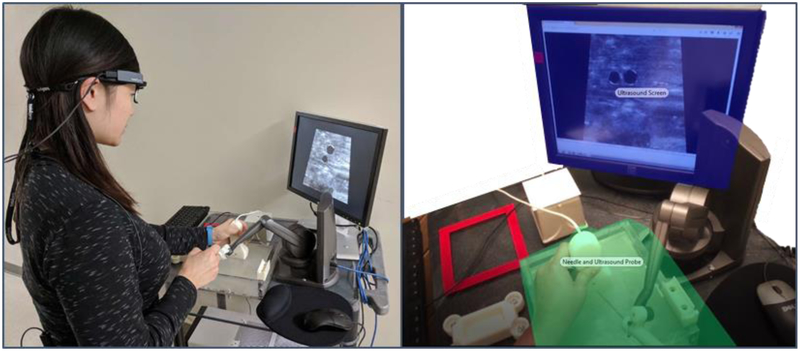

Figure 1:

(Left) Experimental setup with participant wearing Tobii Pro Glasses 2 at the DHRT system. (Right) The two main areas of interest (AOIs): the ultrasound screen and the needle and ultrasound probe.

In medical domains, gaze behavior has been used for skills training16, expertise assessment17,18, and feedback19. Reviews of eye-tracking applications in medical and non-medical domains have also shown the reliability and validity for using eye-tracking as an objective assessment tool, as well as its potential in assessing surgical skills20,21. For example, one study on virtual laparoscopic training found that experts spend more time gazing at target locations while novices spend more time tracking tools22. When using gaze training as a teaching tool in laparoscopic surgery, researchers have also found that gaze trained groups learned more quickly than self-guided groups23, and adopted expert-like gaze patterns by fixating more on target locations rather than on tool movement24. Finally, Tien and colleagues25 validated the use of eye tracking for differentiating expert and novice gaze behavior during open hernia surgery.

While this prior work demonstrates the potential benefits of differentiating expert and novice gaze patterns and using gaze training to accelerate learning, this has not yet been explored in CVC training. Thus, as a first step to improving training feedback, the goal of the current study was to assess gaze tracking as it relates to CVC placement and determine whether differences between experts and novices exist. If so, there is potential to use these differences to provide additional formative feedback to aid a learner’s path to competency in CVC training.

Research Questions

The purpose of the current study was to determine whether gaze patterns could differentiate expertise during simulated ultrasound-guided Internal Jugular Central Venous Catheterization (US-IJCVC) and if expert gazes were different between simulators of varying functional and structural fidelity. Thus, the two research questions (RQ) explored in the current study were:

RQ1: Are expert eye gaze patterns consistent during central-line insertions between manikin and DHRT training systems? This research question was developed to validate if eye gaze patterns were consistent between the manikin (higher structural fidelity or physical realism) and the DHRT (higher functional fidelity) systems. It was hypothesized that since the ultrasound-guided procedure for completing the central line was similar between the two systems, gaze patterns would not differ between the environments.

RQ2: Do expert, senior resident, and novice gaze patterns differ when placing central lines on the DHRT? The second research question aimed to compare the percent of time experts, senior residents, and novices spent gazing at the ultrasound screen versus the needle and ultrasound probe during placement of central lines on the DHRT system. It was hypothesized that novices would spend a higher percentage of time tracking the needle and ultrasound probe during these trials, while experts would spend more time focused on the ultrasound screen, because past research has shown that experts spend less time tool-tracking than novices22. Senior residents, representative of a learner group that is often considered competent enough to perform the skill on patients with limited to no supervision26, were hypothesized to perform similarly to experts in this study.

Methods and Materials

To answer these research questions, a series of experiments was conducted at Penn State Milton S. Hershey Medical Center (HMC). The details of these experiments, conducted as part of a larger study, are discussed in this section.

Participants

Data for the current study were collected over two time periods. Specifically, the data from summer of 2017 included seven surgical interns (novices; Nn=7), while the data from fall of 2017 included eleven experts (Ne=11) and four senior residents (Nsr=4). To qualify expertise, the expert participants in this study included four fellows and seven attending surgeons who self-reported having placed more than 50 central lines in their career, a procedural volume which has been stated to denote expertise in earlier research on central-lines27,28. The senior residents were all PGY4 or PGY5 and had placed a minimum of 10 central lines on patients in the course of their careers. Only one of the novice residents had placed an Internal Jugular CVC prior to the study, reporting 3 prior lines. Senior residents were included in the current study to identify whether residents perform similarly to experts after several years of residency training. This was particularly interesting since a large portion of central-lines are placed by surgical residents at HMC while under the supervision of attending faculty.

Procedure

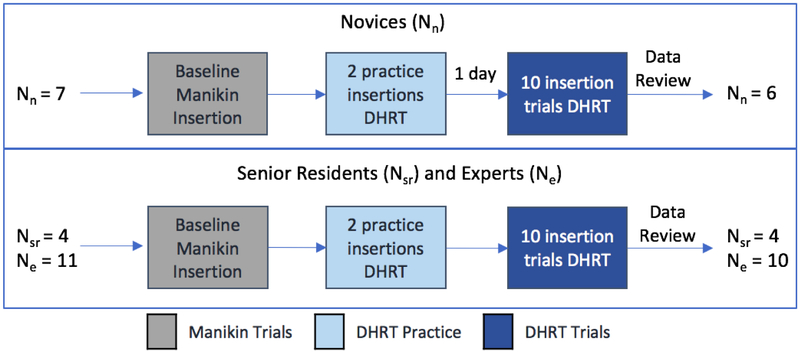

At the start of the study, the purposes and procedures were explained to all of the participants and informed consent was obtained according to an Institutional Review Board approved protocol. In the summer session, the novices were first shown an 18-minute video on CVC placement followed by a live training demonstration of the procedure by a fourth-year surgical resident using a Blue Phantom Gen II Ultrasound Central Line Training Model (Model #BPH660). This video was not included in the fall session, given the experience of the expert and senior residents. For the data collection, each participant was fitted and calibrated with the Tobii Pro Glasses 229 (see Figure 1) and asked to complete one baseline needle insertion for central line placement on the Blue Phantom manikin. This was followed by an introduction to the DHRT through a 5-minute video, after which the participants individually completed two practice insertions on the DHRT in order to familiarize themselves with its functions. During these two practice insertions, the participants were guided through the functions of the DHRT and any questions were answered. Finally, the DHRT insertion data were collected, with each participant completing 10 insertions on the DHRT system. All of the participants received performance feedback from the DHRT learning interface, and no other external feedback was provided. Due to restrictions in the residency boot camp where the resident data were collected, novices had a day between their two practice insertions and their 10 insertion trials. In contrast, the 2 practice insertions and 10 insertion trials were conducted on the same day for the experts and senior residents; see Figure 2 for the study timeline.

Figure 2.

Diagram summarizing the procedural flow

Eye Tracking Metrics

Eye gaze point data collected during the study was analyzed using the Tobii Pro Lab real-world automatic mapping functionality30. Once auto-mapped, an independent rater used the attention filter to manually check all auto-mapped data. Specifically, raw data from trial 1 was analyzed by one rater and compared to the automatically mapped and checked data to validate the use of the auto-mapping feature. Importantly, the inter-rater reliability was found to be very good, as classified by Altman (p.404)31, Cohen’s Kappa = 0.827, p < 0.0005. Thus, the automatic mapping function with the attention filter in the Tobii Pro Lab software was used to analyze participant eye gaze patterns.
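As an illustration of the agreement statistic reported above, the following is a minimal sketch of Cohen's kappa for two sets of AOI labels. It is not the authors' analysis pipeline: the function name `cohens_kappa`, the label codes, and the sample data are all hypothetical, and the per-sample labelling scheme is an assumption.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    # Observed agreement: share of samples given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over labels of the product of marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical labels: "S" = ultrasound screen, "N" = needle and probe, "O" = other.
manual = ["S", "S", "S", "N", "N", "O", "S", "N", "S", "S"]
auto = ["S", "S", "S", "N", "S", "O", "S", "N", "S", "S"]
kappa = cohens_kappa(manual, auto)
print(f"kappa = {kappa:.3f}")
```

Kappa corrects raw agreement for the agreement expected by chance, which matters here because most gaze samples fall on a single AOI (the ultrasound screen).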

Once the gaze points were properly mapped, data for each participant and each trial were reviewed for accuracy and for the percentage of gaze samples captured during needle insertions. For display or screen-based tasks, researchers using eye tracking in translation studies recommend excluding participants who spend less than 70% of the time looking at the screen32,33. With the Tobii Pro Glasses 2, gaze points are only captured when the participant is looking through the glasses range (e.g. not above or below the rim of the glasses). Thus, for this study a lower threshold was set at 70% of each participant’s eye gazes captured during needle insertions. During review of the collected data, the gaze data of one expert and one novice were discarded because less than 70% (in many cases, less than 50%) of their gaze points were tracked during the needle insertions, leading to a total participant count of 6 novices, 10 experts, and 4 senior residents; see Figure 2 for a summary.
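The exclusion rule described above can be sketched as a simple filter. This is an illustrative sketch only: the participant IDs and percentages are hypothetical, and the function `split_by_tracking_quality` is not part of any eye-tracking software.

```python
MIN_GAZE_SAMPLE_PCT = 70.0  # threshold stated in the text


def split_by_tracking_quality(gaze_sample_pct, threshold=MIN_GAZE_SAMPLE_PCT):
    """Return (kept, excluded) participant IDs, each sorted alphabetically.

    gaze_sample_pct maps a participant ID to the percent of gaze samples
    captured during needle insertions.
    """
    kept = sorted(pid for pid, pct in gaze_sample_pct.items() if pct >= threshold)
    excluded = sorted(pid for pid, pct in gaze_sample_pct.items() if pct < threshold)
    return kept, excluded


# Hypothetical tracking-quality values, not data from the study.
quality = {"novice_01": 88.2, "novice_02": 46.5, "expert_01": 93.0, "expert_02": 70.0}
kept, excluded = split_by_tracking_quality(quality)
print("kept:", kept)
print("excluded:", excluded)
```

A participant at exactly 70% is kept in this sketch, since the text discards only those with less than 70% of gaze points tracked.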

After participant gaze data for all 10 trials were reviewed for eye-tracking quality, the data were categorized into two main areas of interest (AOI): the ultrasound image on the screen, and the needle and ultrasound probe interface (Figure 1). These two areas of interest were chosen because prior work has compared the amount of time spent gazing at the target (e.g. ultrasound or image visualization) with the amount of time spent tracking the tools or patient (e.g. needle and ultrasound probe)22. The percent time spent gazing at these two AOIs during each needle insertion was calculated in lieu of other eye-tracking metrics such as fixation duration or count due to the large variability in time to complete needle insertion trials among the participant groups. Specifically, the average (SD) completion time for each group was as follows: experts 22.41 (13.93), senior residents 24.75 (8.05), and novices 29.66 (12.60). Thus, the percent metric was used as a way to normalize gaze patterns among participant groups.
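The percent-time metric can be sketched as follows. This is a minimal illustration under stated assumptions: gaze samples are taken to be equally spaced in time, each sample carries one AOI label (or `None` when unmapped), and the denominator is all samples in the insertion. The AOI names and sample data are hypothetical, and the exact denominator used in the study is not stated in the text.

```python
def percent_time_per_aoi(samples, aois=("screen", "needle_probe")):
    """Percent of gaze samples falling in each AOI for one needle insertion."""
    if not samples:
        raise ValueError("no gaze samples for this insertion")
    total = len(samples)
    return {aoi: 100.0 * sum(s == aoi for s in samples) / total for aoi in aois}


# Two hypothetical insertions of different duration (20 vs. 40 samples).
short_trial = ["screen"] * 15 + ["needle_probe"] * 4 + [None]
long_trial = ["screen"] * 30 + ["needle_probe"] * 8 + [None] * 2

short_pct = percent_time_per_aoi(short_trial)
long_pct = percent_time_per_aoi(long_trial)
print(short_pct)
print(long_pct)
```

Although the second insertion lasts twice as long, both yield the same percentages, which is the sense in which the percent metric normalizes across trials of different length.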

Data Analysis

All metrics were analyzed using SPSS (v. 25.0), with significance considered at a p-value of 0.05. For the first research question, two paired-samples t-tests were computed to determine differences in the percent of time experts gazed at the ultrasound screen and the needle and ultrasound probe between the one insertion trial conducted on the manikin and the average of the ten trials completed on the DHRT system. The data had no significant outliers, as assessed by boxplots, and the assumption of normality was not violated, as assessed by boxplot and the Kolmogorov-Smirnov test (p > 0.200).
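For readers unfamiliar with the paired design, the following sketch computes the paired-samples t statistic for one AOI, pairing each expert's single manikin value with that expert's mean over the DHRT trials. The analysis in the study was run in SPSS; this standard-library version, the function name `paired_t`, and the four data pairs are hypothetical and for illustration only.

```python
import math
import statistics


def paired_t(first, second):
    """Return (t, df) for a paired-samples t-test on two equal-length sequences."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need at least two paired observations")
    # The test operates on within-participant differences.
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical percent gaze time on the ultrasound screen for four experts.
manikin = [70.0, 65.0, 80.0, 72.0]        # one manikin insertion each
dhrt_mean = [74.0, 70.0, 79.0, 76.0]      # mean of each expert's DHRT trials
t_stat, df = paired_t(manikin, dhrt_mean)
print(f"t({df}) = {t_stat:.3f}")
```

The sketch stops at the t statistic and degrees of freedom; obtaining a p-value additionally requires the t distribution, which the standard library does not provide.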

For the second research question, a one-way multivariate analysis of variance (MANOVA) was computed to determine if the percent of time participants spent gazing at the ultrasound screen or the needle and ultrasound probe differed among groups with different levels of central-line insertion experience throughout the 10 trials on the DHRT system. Groups were compared as a whole, with each needle insertion representing one data point. Preliminary assumption checking revealed that data were normally distributed, as assessed by boxplot and the Kolmogorov-Smirnov test (p > 0.200); there were no univariate or multivariate outliers, as assessed by boxplot and Mahalanobis distance (p > 0.001), respectively; there were linear relationships between the dependent variables, as assessed by scatterplot; and there was no multicollinearity (r = −0.887, p < 0.001). Follow-up post-hoc analyses were conducted to examine pairwise differences between groups.

Results

Data are presented as mean ± standard error unless otherwise noted. Analysis of the areas of interest (AOIs) showed that participants on average spent a total of 96.8 ± 1.44% of their time during the study trials gazing at the ultrasound screen and the needle and ultrasound probe. This indicates the importance of focusing data analyses on these two AOIs. During the study, the participants spent an average of 74.3 ± 0.72% of the time looking at the ultrasound screen and an average of 22.5 ± 0.72% of the time tracking the needle and ultrasound probe.

The paired-samples t-test showed that there was no significant difference between the percent of time experts spent gazing at the ultrasound screen on the manikin (71.94 ± 2.80) and the DHRT system (74.63 ± 0.99), t(9) = −0.820, p = 0.433. Likewise, there was no significant difference between the percent of time experts spent gazing at the needle and ultrasound probe on the manikin (21.17 ± 3.34) and the DHRT system (21.59 ± 1.05), t(9) = −0.112, p = 0.913.

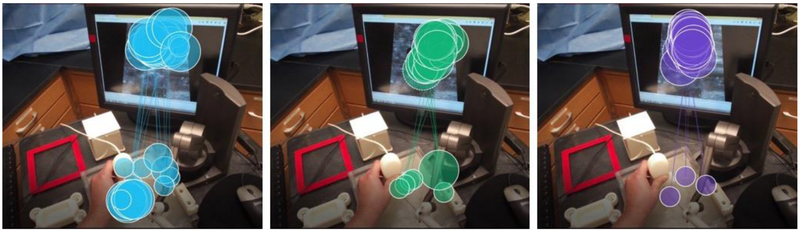

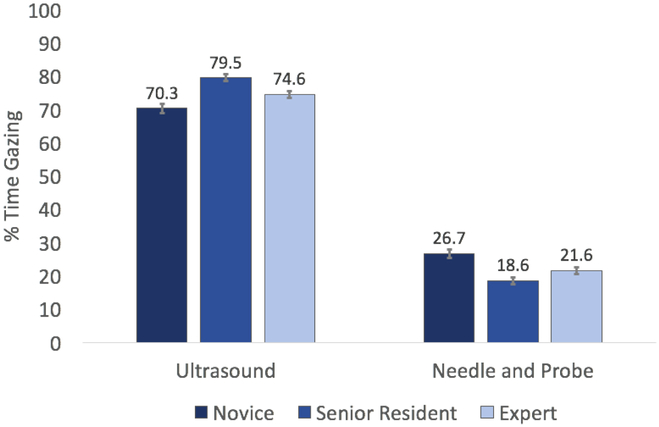

The one-way MANOVA results showed that the difference among central line insertion experience groups (novice, senior resident, and expert) on the combined dependent variables (percent gaze time on the ultrasound screen and on the needle and ultrasound probe) was statistically significant, F(4, 392) = 6.254, p < 0.005, Pillai’s Trace = 0.119, partial η2 = 0.06, observed power of 0.989. See Figure 3 for example gaze plots for the three groups. Follow-up univariate ANOVAs showed that both the percent time spent gazing at the ultrasound screen (F(2, 1024.7) = 10.976, p < 0.0005, η2 = 0.100, observed power of 0.991) and the percent time spent gazing at the needle and ultrasound probe (F(2, 862.239) = 8.872, p < 0.0005, η2 = 0.083, observed power of 0.971) differed significantly among the central-line experience groups, using a Bonferroni adjusted α level of 0.025.
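Two of the reported quantities can be traced with short worked equations. The first is the Bonferroni adjustment for the m = 2 follow-up ANOVAs. The second assumes the convention, used by SPSS for multivariate tests, that partial η2 for Pillai's trace V is V/s, where s is the smaller of the number of dependent variables (2) and the hypothesis degrees of freedom (3 groups − 1 = 2); this convention is an assumption here, as the text does not state how the value was derived.

```latex
\alpha_{\text{adj}} = \frac{\alpha}{m} = \frac{0.05}{2} = 0.025
\qquad
\eta^2_{p} = \frac{V}{s} = \frac{0.119}{2} \approx 0.06
```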

Figure 3:

(Left) Example novice gaze pattern on DHRT. (Middle) Example senior resident gaze pattern. (Right) Example expert gaze pattern.

Because the assumption of homogeneity of variances was violated for both the percent time spent gazing at the ultrasound screen and the needle and ultrasound probe, as assessed by Levene’s Test for Equality of Variance (p = 0.004 and 0.019, respectively), the Games-Howell post-hoc test was used to compare pairwise differences between the group gaze patterns; see Figure 4. The post-hoc results showed that novices (70.32 ± 1.41) spent significantly less time gazing at the ultrasound screen than experts (74.63 ± 0.99), who in turn spent significantly less time than the senior residents (79.52 ± 1.02). In addition, novices (26.68 ± 1.35) spent significantly more time tracking the needle and ultrasound probe than experts (21.59 ± 1.05), who were not significantly different from senior residents (18.63 ± 1.10). Specifically, differences between novices and experts (−4.30, 95% CI [−8.39, −0.21], p = 0.037), novices and residents (−9.20, 95% CI [−13.34, −5.05], p = 0.0005), and experts and residents (−4.89, 95% CI [−8.28, −1.51], p = 0.002) were statistically significant when gazing at the ultrasound screen. On the needle and ultrasound probe, differences between novices and experts (5.08, 95% CI [1.03, 9.12], p = 0.010), as well as novices and residents (8.04, 95% CI [3.90, 12.18], p = 0.0005) were statistically significant. However, there was no significant difference between experts and senior residents (2.97, 95% CI [−0.64, 6.57], p = 0.129).
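One way to read the pairwise results above is that a 95% confidence interval excluding zero corresponds to a difference that is significant at the 0.05 level. The sketch below applies that check to the mean differences, intervals, and p-values exactly as reported in the text; the comparison labels and the helper `ci_excludes_zero` are introduced here for illustration.

```python
ALPHA = 0.05

# (comparison, AOI, mean difference, CI lower, CI upper, reported p) from the text.
REPORTED = [
    ("novice - expert", "screen", -4.30, -8.39, -0.21, 0.037),
    ("novice - senior resident", "screen", -9.20, -13.34, -5.05, 0.0005),
    ("expert - senior resident", "screen", -4.89, -8.28, -1.51, 0.002),
    ("novice - expert", "needle/probe", 5.08, 1.03, 9.12, 0.010),
    ("novice - senior resident", "needle/probe", 8.04, 3.90, 12.18, 0.0005),
    ("expert - senior resident", "needle/probe", 2.97, -0.64, 6.57, 0.129),
]


def ci_excludes_zero(lower, upper):
    """True when the interval lies entirely above or entirely below zero."""
    return lower > 0 or upper < 0


results = []
for comparison, aoi, diff, lower, upper, p in REPORTED:
    significant = ci_excludes_zero(lower, upper)
    # The CI-based verdict should agree with the p-value-based verdict.
    results.append((comparison, aoi, significant, significant == (p < ALPHA)))
    print(f"{aoi:13s} {comparison:26s} diff={diff:6.2f} significant={significant}")
```

Only the expert versus senior resident comparison on the needle and ultrasound probe has an interval spanning zero, matching the single non-significant result reported.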

Discussion

The results for the first research question support our hypothesis that gaze patterns would not differ between the manikin and DHRT environments. Even without the physical realism of the manikin, experts maintained similar gaze patterns on the DHRT simulator. These results provide evidence that the DHRT system elicits eye gaze patterns similar to those on the manikin despite lacking these physical features.

The results for the second research question showed that expertise in IJCVC can be differentiated using gaze behavior, supporting related work that differentiated expertise with gaze patterns during open hernia surgery and laparoscopic environments22,25.

Finally, it was hypothesized that experts would spend more time gazing at the ultrasound screen, while novices would spend more time tracking the needle and ultrasound probe, with senior residents falling along a continuum between the two groups. An interesting discovery was that senior residents focused more attention on the ultrasound screen and spent less time tracking the needle and ultrasound probe than experts did, thus falling farthest on the spectrum from novices. When combined with prior work showing that senior resident performance was comparable with expert performance10, this additional similarity in gaze patterns indicates that senior residents attain expertise in CVC placement during their postgraduate residency career. This may be due in part to the fact that IJCVCs are largely performed by residents at HMC under the supervision of experts. This means that while our experts met the criterion of a minimum of 50 central lines27, the senior residents in the study may have had more active line placement in the months leading up to the testing, which may have led to greater similarity in performance between these two groups.

Limitations

This study was performed at a large academic institution. While the participant numbers were limited, the current study provides some of the first evidence for the use of gaze training in IJCVC education. All interns at our institution attend a surgical boot camp, so they had protected time in which to participate in the study. Expert and senior surgical resident participation, by contrast, occurred during clinical workdays without protected time, potentially introducing a self-selection bias toward individuals with higher interest in or experience with the CVC procedure. Finally, data were excluded for two participants (1 novice, 1 expert) due to poor tracking quality. Future work should examine more participants at various stages of expertise.

Conclusions

The current study differentiated expert and novice gaze patterns during CVC placement on the DHRT system and showed that experts performed similarly between DHRT and manikin-based simulation systems. These findings indicate that the DHRT training system captures the gaze behaviors of expert surgeons as effectively as manikin-based systems do. Additionally, the results showed that differences in gaze patterns could be discerned between varying levels of expertise, providing evidence of the potential utility of gaze training in IJCVC education.

Figure 4:

Average percent of time each group spent gazing at the ultrasound screen and the needle and ultrasound probe. On the ultrasound screen, novices spent significantly less time than experts, who spent significantly less time than the senior residents. On the needle and ultrasound probe, novices spent significantly more time than experts, who were not significantly different from senior residents.

Acknowledgments

Funding

Research reported in this publication was supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under Award Number R01HL127316. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- 1. Evans LV, Dodge KL, Shah TD, et al. Simulation training in central venous catheter insertion: improved performance in clinical practice. Academic Medicine 2010;85:1462–9.

- 2. Smith CC, Huang GC, Newman LR, et al. Simulation training and its effect on long-term resident performance in central venous catheterization. Simulation in Healthcare 2010;5:146–51.

- 3. McGee DC, Gould MK. Preventing complications of central venous catheterization. New England Journal of Medicine 2003;348:1123–33.

- 4. Hartman N, Wittler M, Askew K, Hiestand B, Manthey D. Validation of a performance checklist for ultrasound-guided internal jugular central lines for use in procedural instruction and assessment. Postgraduate Medical Journal 2016:postgradmedj-2015–133632.

- 5. Doyle JD, Webber EM, Sidhu RS. A universal global rating scale for the evaluation of technical skills in the operating room. The American Journal of Surgery 2007;193:551–5.

- 6. Huang GC, Newman LR, Schwartzstein RM, et al. Procedural competence in internal medicine residents: validity of a central venous catheter insertion assessment instrument. Academic Medicine 2009;84:1127–34.

- 7. Ogden PE, Cobbs LS, Howell MR, Sibbitt SJ, DiPette DJ. Clinical simulation: importance to the internal medicine educational mission. The American Journal of Medicine 2007;120:820–4.

- 8. Sherertz RJ, Ely EW, Westbrook DM, et al. Education of physicians-in-training can decrease the risk for vascular catheter infection. Annals of Internal Medicine 2000;132:641–8.

- 9. Pepley D, Yovanoff M, Mirkin K, Han D, Miller S, Moore J. Design of a Virtual Reality Haptic Robotic Central Venous Catheterization Training Simulator. ASME 2016 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference; 2016; Charlotte, NC, USA: American Society of Mechanical Engineers; p. V05AT7A033–V05AT07A.

- 10. Pepley DF, Gordon AB, Yovanoff MA, et al. Training Surgical Residents With a Haptic Robotic Central Venous Catheterization Simulator. Journal of Surgical Education 2017;74:1066–73.

- 11. Yovanoff M, Pepley D, Mirkin K, Moore J, Han D, Miller S. Personalized Learning in Medical Education: Designing a User Interface for a Dynamic Haptic Robotic Trainer for Central Venous Catheterization. Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Austin, TX. Los Angeles, CA: SAGE Publications; 2017.

- 12. Yovanoff M, Pepley D, Mirkin K, Moore J, Han D, Miller S. Improving Medical Education: Simulating Changes in Patient Anatomy Using Dynamic Haptic Feedback. Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Washington, D.C. Los Angeles, CA: SAGE Publications; 2016:603–7.

- 13. Hall JC. Imagery practice and the development of surgical skills. The American Journal of Surgery 2002;184:465–70.

- 14. Bathalon S, Dorion D, Darveau S, Martin M. Cognitive skills analysis, kinesiology, and mental imagery in the acquisition of surgical skills. Journal of Otolaryngology 2005;34.

- 15. Kohls-Gatzoulis JA, Regehr G, Hutchison C. Teaching cognitive skills improves learning in surgical skills courses: a blinded, prospective, randomized study. Canadian Journal of Surgery 2004;47:277.

- 16. Chetwood AS, Kwok K-W, Sun L-W, et al. Collaborative eye tracking: a potential training tool in laparoscopic surgery. Surgical Endoscopy 2012;26:2003–9.

- 17. Wood G, Knapp KM, Rock B, Cousens C, Roobottom C, Wilson MR. Visual expertise in detecting and diagnosing skeletal fractures. Skeletal Radiology 2013;42:165–72.

- 18. Khan RS, Tien G, Atkins SM, Zheng B, Panton ON, Meneghetti AT. Analysis of eye gaze: Do novice surgeons look at the same location as expert surgeons during a laparoscopic operation? Surgical Endoscopy 2012;26:3536–40.

- 19. Ahmidi N, Hager GD, Ishii L, Fichtinger G, Gallia GL, Ishii M. Surgical task and skill classification from eye tracking and tool motion in minimally invasive surgery. International Conference on Medical Image Computing and Computer-Assisted Intervention; 2010: Springer; p. 295–302.

- 20. Ashraf H, Sodergren MH, Merali N, Mylonas G, Singh H, Darzi A. Eye-tracking technology in medical education: A systematic review. Medical Teacher 2018;40:62–9.

- 21. Tien T, Pucher PH, Sodergren MH, Sriskandarajah K, Yang G-Z, Darzi A. Eye tracking for skills assessment and training: a systematic review. Journal of Surgical Research 2014;191:169–78.

- 22. Wilson MR, McGrath JS, Vine SJ, Brewer J, Defriend D, Masters RS. Psychomotor control in a virtual laparoscopic surgery training environment: gaze control parameters differentiate novices from experts. Surgical Endoscopy 2010;24:2458–64.

- 23. Wilson MR, Vine SJ, Bright E, Masters RS, Defriend D, McGrath JS. Gaze training enhances laparoscopic technical skill acquisition and multi-tasking performance: a randomized, controlled study. Surgical Endoscopy 2011;25:3731–9.

- 24. Vine SJ, Masters RS, McGrath JS, Bright E, Wilson MR. Cheating experience: Guiding novices to adopt the gaze strategies of experts expedites the learning of technical laparoscopic skills. Surgery 2012;152:32–40.

- 25. Tien T, Pucher PH, Sodergren MH, Sriskandarajah K, Yang G-Z, Darzi A. Differences in gaze behaviour of expert and junior surgeons performing open inguinal hernia repair. Surgical Endoscopy 2015;29:405–13.

- 26. Pugh D, Cavalcanti RB, Halman S, et al. Using the Entrustable Professional Activities Framework in the Assessment of Procedural Skills. Journal of Graduate Medical Education 2017;9:209–14.

- 27. Sznajder JI, Zveibil FR, Bitterman H, Weiner P, Bursztein S. Central vein catheterization: failure and complication rates by three percutaneous approaches. Archives of Internal Medicine 1986;146:259–61.

- 28. Bernard RW, Stahl WM. Subclavian vein catheterizations: a prospective study. I. Non-infectious complications. Annals of Surgery 1971;173:184.

- 29. Tobii Pro Glasses 2. 2018. Available at https://www.tobiipro.com/product-listing/tobii-pro-glasses-2/

- 30. Real-World Mapping. 2018. Available at https://www.tobiipro.com/learn-and-support/learn/steps-inan-eye-tracking-study/data/real-world-mapping/

- 31. Altman DG. Practical statistics for medical research: Chapman & Hall; 1991.

- 32. Hvelplund KT. Eye tracking and the translation process: reflections on the analysis and interpretation of eye-tracking data. MonTI Monografías de Traducción e Interpretación 2014.

- 33. O’Brien S. Eye tracking in translation process research: methodological challenges and solutions. Methodology, Technology and Innovation in Translation Process Research: Copenhagen Studies in Language - Volume 38 (Copenhagen Language in Studies). Copenhagen, Denmark: Samfundslitteratur; 2010:251–66.