Abstract

Objective. To assess pharmacy students’ ability to incorporate laptop computers into simulated patient encounters (SPEs) in the second professional year (P2) and assess their ability to retain these skills into the next professional year. Students’ awareness of and confidence in using computers were also assessed.

Methods. P2 students were surveyed about their awareness of and confidence in incorporating a computer into an SPE. Their performance using a computer in an SPE was evaluated using a blinded rubric. Next, they received formal education on this skill. Students then completed the same questionnaire and were evaluated on their ability to use a computer in another SPE. In the third year, they were evaluated using the same rubric on four activities and completed the same questionnaire at the end of each semester.

Results. There were 166 students in the two cohorts. Of those, 158 students were evaluated using the rubric and 166 students completed the four questionnaires. Student performance improved from the pre-instruction activity evaluation (43% earned acceptable) to post-instruction (66% earned acceptable). This performance improvement was retained for four activities in the third year (80%, 85%, 79%, and 92% earning acceptable ratings, respectively). On the questionnaires, students reported improved confidence incorporating a computer into the patient encounter after receiving formal instruction. This perception of improved confidence was maintained throughout the third year.

Conclusion. Student performance improved throughout three semesters of computer use during SPEs. Students felt more confident and knowledgeable about integrating a computer into an SPE after instruction.

Keywords: computer, simulation, patient communication, pharmacy students

INTRODUCTION

The number of health care institutions using electronic health records (EHRs) has increased dramatically since the Health Information Technology for Economic and Clinical Health (HITECH) Act was signed in 2009. The HITECH Act, part of the larger American Recovery and Reinvestment Act, established an incentive program administered by the Centers for Medicare & Medicaid Services.1,2 In 2014, 76% of non-federal acute care hospitals reported using an EHR.1 This represents a 27% increase from 2013 and an eightfold increase since 2008. Additionally, 97% of non-federal acute care hospitals reported having a certified EHR system, although not all had fully implemented it.

Adoption of EHR systems extends beyond the inpatient health care setting. In 2013, 78% of office-based physicians reported using an EHR that satisfied the Centers for Medicare & Medicaid Services criteria, such as recording patient demographics and recording and charting vital sign changes.2 This represents a 7% increase from 2012. The implementation and use of EHRs are becoming more common across the health care landscape.

It is the responsibility of pharmacy educators to prepare pharmacy students for experiential education as well as practice beyond graduation. This preparation includes teaching both clinical knowledge and practice skills such as communication, clinical documentation, and critical thinking. The Accreditation Council for Pharmacy Education (ACPE) Standards 2016 highlight the importance of including computer use in the pharmacy curriculum.3 Standard 2.2 states that graduates should be proficient in using “human, financial, technological, and physical resources to optimize the safety and efficacy of medication use systems.” Health informatics is also included as a section of the “required elements of the didactic Doctor of Pharmacy curriculum.” In the Standards 2016, ACPE describes the “use of electronic and other technology-based systems, including electronic health records, to capture, store, retrieve, and analyze data for use in patient care” as essential content in the curriculum.

A 2008 study by Cain and colleagues found that 23% of pharmacy schools required students to use either a laptop or tablet computer throughout the curriculum.4 Of the schools that did not have such a requirement, 50% reported that they were “likely” or “very likely” to impose a similar mandate within five years. However, the study reported that the most common uses of the computer were accessing course materials, taking notes, and completing assessments. These uses do not necessarily reflect how students will use technology in practice, nor do they ensure that students will be able to use technology appropriately, effectively, or efficiently to collect patient information and provide optimal patient care.

With the increased use of EHRs in health care facilities, providers may bring a laptop computer into the examination room or into patient visits to reduce time spent documenting after the visit. A study of physicians found that using an EHR during patient encounters resulted in a loss of rapport with patients.5 In another study, providers demonstrated decreased empathy, concern, and reassurance with increased “screen gaze” during patient encounters.6 The problem is not limited to practicing physicians: first-year medical students also have difficulty incorporating technology into patient interviews and assessments.7 These medical students improved after receiving formal instruction and training. Even though millennials have been referred to as “digital natives” and often integrate technology into multiple aspects of their lives, millennial students may still require education to learn how to use this technology appropriately to provide optimal patient care.

The faculty at Concordia University Wisconsin previously studied the incorporation of laptop computers into patient interviews in an applied patient care (APC) laboratory during the third year of the pharmacy curriculum.8 This study demonstrated significant improvement in students’ skills needed to appropriately and effectively use computers to collect patient information following formal training by instructors. Students also self-reported significant increases in confidence when using computers during patient visits after receiving formal training. The results of this previous study prompted instructors to move computer use in the APC course series earlier in the curriculum to allow students additional practice and to assess for retention of these skills. The objective of this study was to assess pharmacy students’ ability to incorporate laptop computers into simulated patient encounters (SPEs) earlier in the curriculum and to assess their ability to retain these skills. Students’ awareness of and confidence in using computers during SPEs were also assessed.

METHODS

The study commenced in the spring semester of the second year of the required, six-semester APC laboratory course series, with continuation through the third year. Each semester of the laboratory series is a two-credit course that includes both lecture and laboratory components. Students integrate the patient communication skills they learned in the first three semesters of the series and gradually encounter more complex patient cases. Some of these laboratory activities require student interaction with a simulated patient.

This study focused on laboratory activities in which students had an individual encounter with a simulated patient in a primary care clinic or emergency department simulated practice setting. To collect baseline data, students completed a questionnaire prior to the SPE. The questionnaire consisted of nine questions: one assessing awareness of the computer as a potential barrier, five assessing confidence in effectively using a computer during an SPE, two assessing attitudes toward using a computer during an SPE, and one asking how many times students had used a computer to assist them in an SPE during previous APC courses. The Likert scale questionnaire has previously been used and internally validated.8

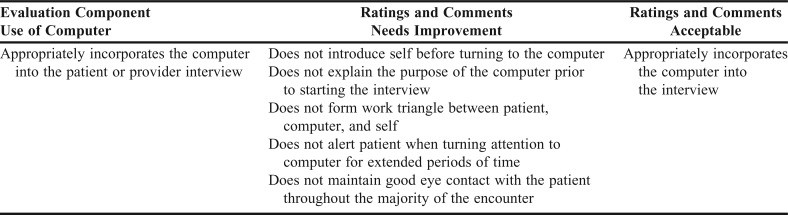

During these laboratories, students either gathered medication lists from simulated patients (typically classmates) or performed pharmaceutical care assessments (medication list, social and medical history, and assessment for drug therapy problems) for them. The interactions were timed (typically 15-20 minutes per encounter). Students then developed and delivered pharmacotherapy plans to either the patient or another health care provider. Students had two 90-minute laboratories in the spring semester of the second year, three 110-minute laboratories in the fall semester of the third year, and one 110-minute laboratory in the spring semester of the third year during which they practiced and reinforced these skills. The patient encounters were video recorded. Faculty, residents, and upperclass student instructors evaluated student performance using a rubric developed by faculty.

In the first two years of the series, students worked either in pairs or individually; in the third year, they worked individually. All activities assessed as part of this study were individual student activities. Cases were available to students as PDF documents before each laboratory session so they could prepare for their patient encounters. The courses did not use a simulated EHR. Optional medication list and pharmaceutical care assessment interview templates were available to help students organize the patient encounter; these could be printed and completed by hand or completed on the computer. In the first three semesters, students were allowed, but not required, to use a computer to electronically record information they gathered from patients. In the fourth semester, students were required to use a computer to collect patient information during the first SPE, and instructors used a rubric to evaluate students’ ability to effectively incorporate a computer into the SPE.
The rubric contained several criteria that Morrow and colleagues used in their EHR-specific communication skills checklist (Table 1).7 Students were not aware they were being evaluated on their computer use skills; therefore, the rubric and the evaluations were not available to students.

Table 1.

Computer Use Skills Rubric

After the initial SPE requiring the use of a computer, students received specific instruction on how to incorporate a computer into a patient encounter during one of the 50-minute lecture time slots within the second-year APC course. The instruction included 5-10 minutes of lecture material highlighting concepts such as how to introduce the computer into the patient visit, how to position the computer, and the importance of maintaining eye contact with patients. The concepts were developed from behaviors studied by Morrow and colleagues.7 The instructor also modeled appropriate and inappropriate ways to incorporate a computer into a patient encounter, first through role play and then through a recorded video. Patient privacy, security, and confidentiality topics were also introduced. During their next SPE, students were required to use a computer to gather patient information and were evaluated by instructors with the rubric, which remained blinded to students. Students were again asked to complete a questionnaire after this SPE. This questionnaire contained the same questions as the pre-instruction questionnaire except for the last question, which asked how many times students had previously used a computer during a patient encounter, simulated or otherwise.

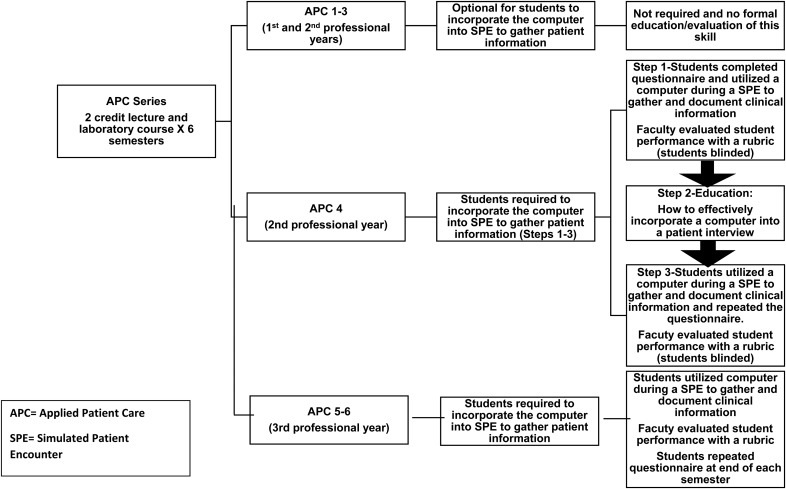

Students in the third year were required to use a computer to gather patient information in all simulated patient encounters (three in the fall semester, one in the spring semester) and were evaluated by instructors with the rubric. The rubric was available to students prior to the patient encounter activities, and they were aware they were being evaluated on their computer use. Students also completed a questionnaire at the end of each semester in the third year. This questionnaire contained the same questions as the post-instruction questionnaire administered in the spring semester of the second year. Students completed each questionnaire during their pre-laboratory preparation; each took an estimated 5-10 minutes to complete. Course and study design are illustrated in Figure 1. This study was deemed exempt by Concordia University Wisconsin’s Institutional Review Board.

Figure 1.

Applied Patient Care Series Outline and Pictorial Description of Study Design.

The study’s participants included 166 students (82 from the 2016 class and 84 from the 2017 class) who completed the three laboratory series courses. McNemar’s test was used to analyze the instructors’ evaluations of the students’ ability to use a computer during a simulated patient encounter, as determined by overall rubric results. The Wilcoxon signed-rank test and paired t test were used to analyze the students’ self-assessment questionnaires. Statistical analyses were performed using IBM SPSS Statistics for Windows, v23 (IBM Corp., Armonk, NY).
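For readers unfamiliar with the paired tests named above, the following is a minimal Python sketch of how each works; it is illustrative only and is not the SPSS procedure the authors ran. The function names `mcnemar_exact` and `wilcoxon_signed_rank` are hypothetical. The McNemar function assumes the exact binomial form of the test, which uses only the discordant pairs (students whose overall rubric rating changed between two activities). The Wilcoxon function assumes the large-sample normal approximation with a tie correction and no continuity correction; SPSS or other software may use a different variant, so p values need not match exactly.

```python
import math


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value from the discordant cell counts.

    b: students rated acceptable before instruction but needs improvement after
    c: students rated needs improvement before instruction but acceptable after
    Concordant pairs (same rating at both time points) do not enter the test.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of change
    # Under H0 each discordant pair is equally likely to land in b or c,
    # so the smaller count is a lower-tail draw from Binomial(n, 0.5).
    tail = sum(math.comb(n, i) for i in range(min(b, c) + 1)) / 2**n
    return min(1.0, 2 * tail)


def wilcoxon_signed_rank(pre: list[float], post: list[float]) -> tuple[float, float, float]:
    """Wilcoxon signed-rank test for paired scores (e.g., Likert items).

    Returns (W+, z, two-sided p) using the normal approximation with a tie
    correction and no continuity correction. Zero differences are dropped.
    """
    diffs = [after - before for before, after in zip(pre, post) if after != before]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    # Rank |d| from smallest to largest, giving tied values their average rank.
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    # W+ is the sum of ranks belonging to positive (post > pre) differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return w_plus, z, p
```

As a hypothetical usage example, the Results report 68 acceptable ratings before instruction and 105 after among 158 students, so the discordant counts must satisfy c − b = 37, but the actual split is not reported. Assuming b = 10 and c = 47 purely for illustration, `mcnemar_exact(10, 47)` returns a p value well below .001. The design choice worth noting is that McNemar’s test compares the same students at two time points, which is why it, rather than a chi-square test on independent groups, suits paired rubric ratings.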

RESULTS

Instructors evaluated 158 of the 166 (95%) students on their ability to effectively incorporate a computer into an SPE; eight students were omitted because of technical difficulties with the video recording of some SPEs. Students’ performance of this skill improved from pre-instruction to post-instruction during the spring semester of the second year, with rubric ratings of acceptable rising from 43% (n=68) to 66% (n=105) (p<.001). Students retained this skill during the third year, with acceptable ratings of 80%, 85%, 79%, and 92% on the three fall semester activities and the one spring semester activity, respectively (all p<.001 compared to pre-instruction). There was a statistically significant difference in the frequency of acceptable ratings between the post-instruction activity in the spring semester of the second year and the first activity of the fall semester of the third year (66% compared to 80%, p=.004), as well as between the third activity of the fall semester of the third year and the one activity in the spring semester of the third year (79% compared to 92%, p=.002).

Before instruction, 42 (27%) students received a needs improvement rating for not explaining the purpose of using the computer to the patient prior to beginning the interview. Just under half of students received a needs improvement rating for not effectively forming a work triangle between the simulated patient, computer, and self (n=71, 45%).

The pre-instruction questionnaire revealed that students did not have much previous experience using a computer to assist with the SPE during previous APC courses. The majority (n=135, 79%) had never used a computer for that purpose.

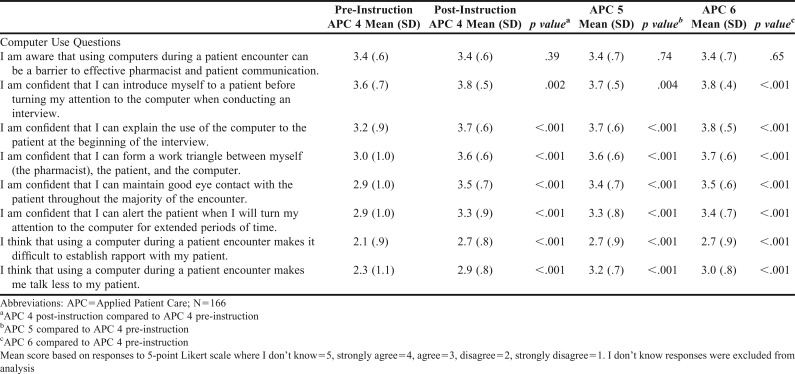

Analysis of pre-instruction and post-instruction responses, shown in Table 2, revealed that students became more confident in their ability to use a computer during an SPE. Students reported becoming more confident that they could: introduce themselves to a patient before turning attention to the computer when conducting an interview, explain the use of the computer to the patient at the beginning of the interview, form a work triangle between themselves and the patient and the computer, maintain good eye contact with the patient throughout the majority of the encounter, and alert the patient when they would be turning their attention to the computer for extended periods of time. This confidence was maintained throughout the third year as well, per the end of semester questionnaires.

Table 2.

Student Responses to Computer Use Questionnaire

Students also reported changes in their attitudes toward using computers during patient encounters. They felt that establishing rapport with patients while using a computer during a patient encounter was less difficult than they originally anticipated (p<.001 compared to pre-instruction). They also felt that using a computer during the encounter did not make them talk less to their patient (p<.001 compared to pre-instruction). This attitude change was maintained throughout the third year, per the end-of-semester questionnaires (p<.001 for both statements compared to pre-instruction). Student awareness of the potential for computer use to be a barrier to effective pharmacist-patient communication during a patient encounter did not change during the research period.

DISCUSSION

The implementation of an EHR offers several benefits, including greater access to patients’ health information and more efficient communication between patients and providers.9 Integrating a computer and EHR into patient encounters may also allow for a more efficient and convenient encounter.9 While computers are now a common necessity for providing efficient, quality patient care, limited literature describes how health care professionals appropriately integrate a computer into patient office visits. Additionally, there is limited information describing how this skill is integrated into and formally assessed in a pharmacy curriculum.

Cain and colleagues described how pharmacy schools and colleges are using computers.4 Results reflected that students may be required to use a computer to take notes, access course materials, participate in online discussions, or complete assessments, but utilization of a computer during a patient interview or other simulated activities was not addressed.4 A similar, previous study by Ray and colleagues assessed how confidence, skill, and attitudes changed after third year pharmacy students were provided with formal instruction on how to integrate a computer appropriately into a patient visit.8 That study used the same computer use rubric and questionnaires as the current study, but only included third year pharmacy students and did not evaluate long-term retention of the skill.8

The study described in this manuscript aimed to assess students’ retention of proficiency in incorporating a computer into an SPE over three consecutive semesters. Students were given an opportunity to use a computer during an SPE at the beginning of the spring semester of the second year but were not formally evaluated for a grade. After instruction was provided following this initial SPE, students improved their overall computer skills and retained this skill as they progressed to the third year of the APC series, as shown by the increase in the number of acceptable computer use rubric ratings after formal instruction. The majority of students continued to achieve at least an acceptable rating during the subsequent four activities in the third year of the APC course series. Students were shown rubric components during formal instruction but were blinded to evaluations in the second year of the course; they were then able to view these rubric evaluations in the third year. Similar results were observed in the study conducted by Ray and colleagues, in which the majority of third-year students (73%) achieved acceptable ratings post-instruction compared to 46% pre-instruction.8 Taken together, these results suggest that the majority of students achieve acceptable ratings regardless of whether formal instruction is provided in the second or third year of the APC series, and that they continue to retain the skill as they progress through the series. In fact, a higher percentage of students earned acceptable ratings in the third year when instruction was provided in the second year.

Based on the pre-instruction and post-instruction questionnaire results, students’ confidence levels and attitudes toward incorporating a computer into a patient encounter also improved significantly after formal instruction was provided and continued into the third year. Their confidence matched their performance.

Based on rubric results, the formal instructional design, a 50-minute lecture session incorporating tips on how to effectively integrate a computer into a patient encounter, was effective for student learning in this activity. The rubric, which specifies rating criteria (needs improvement vs acceptable), was also shown to students in the second year. When this study was previously conducted by Ray and colleagues with third-year students only, instruction was provided using a lecture format and role play.8 This combination of instruction is effective in giving students an overview of expectations for the activity, whether administered in the second or third year. Students retained skills regardless of the type or timing of formal instruction.

The proportion of acceptable performance ratings was sustained despite changes in activities from the second year to the third year. Changes in activity expectations included the complexity of cases and the time allotted to complete the activities. Despite these changes, students were still able to demonstrate acceptable integration of a computer into SPEs. Based on these results, it may be worthwhile to give students continued practice with this skill in the second year, or even to introduce it in the first year, since technology and EHRs are integral to the operations of most health care systems.

Ray and colleagues found that most third-year pharmacy students had never used a computer in previous coursework to practice for direct patient care activities.8 Students in the current study, conducted at the same institution, responded similarly. Prior to formal instruction, approximately a quarter of students did not explain the purpose of integrating a computer into the patient encounter, and almost half were not able to form a work triangle as outlined in the rubric. These pre-instruction rubric results are consistent with students’ reports of minimal exposure to computer use in patient visits earlier in the curriculum. As mentioned above, it may be worthwhile to include this type of activity even earlier in students’ coursework.

Students’ awareness of the potential for computer use to be a barrier to effective pharmacist-patient communication during a patient encounter did not change across the questionnaires. This could be an additional area to address in future instruction. Ideally, students’ awareness that the computer could be a potential barrier would increase after instruction. However, students may have answered the question under the premise that appropriate computer use was not a barrier.

One limitation is that only two cohorts of students from one pharmacy school were included. Additional cohorts from this and other pharmacy schools are needed before the results can be generalized further. Although a small number of students at one institution were included in this study, this activity is reproducible and applicable to other pharmacy curricula because many schools use simulated patients or role play to teach communication skills.

Fellow students acting as simulated patients is another limitation. Simulated patients may have responded more slowly than real-life patients, allowing the pharmacy student to make eye contact and feel more comfortable using the computer during the encounter. In addition, because students were using an electronic worksheet on the laptop computer and not an EHR, the results may not directly reflect how they would navigate information in an EHR during a patient encounter; they may have needed to type more information than they would with an EHR. Students were also able to use their own laptop computers rather than a computer in an examination room, which may have provided a higher comfort level with the patient interview process. It is unclear how incorporating an EHR and a computer located in the simulated examination room would have affected the results of this study. The authors hypothesize that implementing an EHR may provide added practice using the computer as a tool to show patients laboratory results or educational materials, and could give students opportunities to practice maintaining eye contact with the patient while coordinating interactions with the EHR and the patient.

Another limitation is that students were unblinded to the computer use rubric in the third year, which could have contributed to the high number of acceptable ratings on the third-year rubric activities. It is possible that students did not retain the ability to incorporate computers into patient interviews but were prompted by reviewing the rubric. However, each of the rubrics that students are evaluated with throughout the APC series includes many skills (for example, introducing oneself to the patient or asking the patient about their medication allergy history), all unblinded, and some students still struggle to perform these skills even with the prompt. The fact that students performed well on the computer use rubric components leads the authors to believe that students are proficient in the skill and are retaining it.

Overall, improvement in rubric ratings paralleled improvement in student confidence and attitudes based on the questionnaire results. Future directions include continuing to assess computer skills during experiential pharmacy practice experiences (eg, APPEs in the fourth year of the curriculum) and collecting these assessments from preceptors. Initiating computer use earlier in the curriculum may also prove beneficial and lead to improved skills.

CONCLUSION

Students felt more confident and knowledgeable about integrating the use of a computer into a patient care encounter after formal instruction was provided. The improvement in student performance from pre- to post-instruction demonstrated the benefit of the instruction. Students’ confidence, knowledge, and skill were maintained into the next (third) professional year. It may be beneficial to re-assess results in future iterations of the course and activities while keeping the rubric blinded through the transition from the second year to the third year. Ideally, skills learned will be further applied in the fourth professional year as students encounter real-life patients. Use of an EHR, institution-provided computers, and real-life patient scenarios will likely provide added complexity and practice with using a computer in patient care. This is an area for future consideration in assessing student retention of this skill set and confidence level from the second year to the fourth year of the pharmacy curriculum.

REFERENCES

- 1. Charles D, Gabriel M, Searcy T. Adoption of electronic health record systems among U.S. non-federal acute care hospitals: 2008-2014. ONC Data Brief, No. 23. Office of the National Coordinator for Health Information Technology: Washington, DC; 2015.

- 2. Hsiao CJ, Hing E. Use and characteristics of electronic health record systems among office-based physician practices: United States, 2001-2013. NCHS Data Brief, No. 143. National Center for Health Statistics: Hyattsville, MD; 2014.

- 3. Accreditation Council for Pharmacy Education. Accreditation standards and key elements for the professional program in pharmacy leading to the doctor of pharmacy degree. Standards 2016. https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf. Accessed July 25, 2017.

- 4. Cain J, Bird ER, Jones M. Mobile computing initiatives within pharmacy education. Am J Pharm Educ. 2008;72(4):Article 76. doi: 10.5688/aj720476.

- 5. Ratanawongsa N, Barton JL, Lyles CR, et al. Association between clinician computer use and communication with patients in safety-net clinics. JAMA Intern Med. 2016;176(1):125–128. doi: 10.1001/jamainternmed.2015.6186.

- 6. Margalit RS, Roter D, Dunevant MA, Larson S, Reis S. Electronic medical record use and physician-patient communication: an observational study of Israeli primary care encounters. Patient Educ Couns. 2006;61(1):134–141. doi: 10.1016/j.pec.2005.03.004.

- 7. Morrow JB, Dobbie AE, Jenkins C, Long R, Mihalic A, Wagner J. First-year medical students can demonstrate EHR-specific communication skills: a control-group study. Fam Med. 2009;41(1):28–33.

- 8. Ray S, Valdovinos K. Student ability, confidence, and attitudes toward incorporating a computer into a patient interview. Am J Pharm Educ. 2015;79(4):Article 56. doi: 10.5688/ajpe79456.

- 9. HealthIT.gov. Benefits of EHRs. https://www.healthit.gov/providers-professionals/benefits-electronic-health-records-ehrs. Accessed July 19, 2017.