Abstract

The introduction of electronic health records has produced many challenges for clinicians. These include integrating technology into clinical workflow and fragmentation of relevant information across systems. Dashboards, which use visualized data to summarize key patient information, have the potential to address these issues. In this article, we outline a usability evaluation of a dashboard designed for home care nurses. An iterative design process was used which consisted of (1) contextual inquiry (observation and interviews) with two home care nurses; (2) rapid feedback on paper prototypes of the dashboard (10 nurses); and (3) usability evaluation of the final dashboard prototype (20 nurses). Usability methods and assessments included observation of nurses interacting with the dashboard, the system usability scale and the Questionnaire for User Interaction Satisfaction short form. The dashboard prototype was deemed to have high usability (mean system usability scale, 73.2; SD 18.8) and was positively evaluated by nurse users. It is important to ensure that technology solutions such as the one proposed in this article are designed with clinical users in mind, to meet their information needs. The design elements of the dashboard outlined in this article could be translated to other EHRs used in home care settings.

Keywords: Clinical dashboard, nursing informatics, home care services, usability evaluation

BACKGROUND

Health information technology (HIT) systems are widespread across health care settings. In the USA, while most hospitals have electronic health record (EHR) systems (1), their use is less common (although increasing) in other settings, such as home care (2) and residential care (3). The value of EHRs remains an open question, and their introduction has produced significant challenges for clinicians, particularly regarding effective integration of the technology into their workflow (4, 5). Nurses, for example, often have to mentally integrate information derived from technology systems in different locations (6), and express a need for support in synthesizing the available information to paint a ‘picture of the patient’ over time (7). In an effort to help clinicians integrate data regarding diagnoses and treatment interventions for patients across time, some studies developed techniques for visual display of patient data both at the individual and group level (8–11). These systems typically integrate data from a variety of electronic data sources in hospital settings to provide visualizations of varying complexity to clinicians. The assumption of such initiatives is that the visualizations will help clinicians with retrieving data and making clinical decisions. However, to date there has been limited evaluation of the impact of such systems in practice settings (11).

To an increasing extent, dashboards are being deployed to visually summarize data relevant to individual clinicians to support decisions at the point of care. Dashboards can reduce cognitive overload and improve users’ ability to interpret and remember relevant data (12, 13). Dashboards may be used for many purposes in health care settings, for example to provide feedback on how well clinicians adhere to clinical practice guidelines (14), to encourage clinicians to carry out evidence-based care (15), or to combine and display information about a patient’s condition to support clinical decisions (6, 16, 17). Initial evaluations of dashboards have documented reduced response times for finding relevant information (6, 12, 18, 19), improved accuracy in information retrieval (6, 18, 19) and increased adherence to evidence-based care interventions (12).

A significant number of studies have been conducted in hospital settings to explore the use of visualized data to support clinical care (6, 9, 14–16). However, to our knowledge, only one previous study focused on home health care (18). Approximately 4.9 million individuals in the USA in 2014 received care at home (20), and this number is likely to increase as the population ages (21). Home care nurses face challenges that are similar to those in an acute care environment when accessing relevant information at the point of care to enable appropriate decisions. However, they also have specific challenges; they see patients less frequently (there can be considerable gaps of time between home care visits), and there is often very little continuity among the nurses who make patient visits across an episode of home care (22). In a companion study, we asked a group of home care nurses in the US to identify the information they required to provide care for patients with heart failure (23), a population at increased risk of hospital readmission from a home care setting (24, 25), which underlines the importance of this information to nurses. The results of the study were used to design a prototype dashboard focused on tracking and monitoring of patient information (i.e., weight and vital signs) over time. In this article, we briefly describe the design process used to develop the dashboard prototype and present the findings from our usability evaluation of the dashboard.

METHODS

Study Context

The study was conducted in a large not-for-profit home care agency in the Northeastern US. In 2016, at the time of the study, the agency served more than 142,000 patients and employed more than 1,400 registered and licensed practical nurses. The agency’s internally developed electronic health record system was used by all home care nurses at the point of care. Data collected via the electronic system included the Outcomes and Assessment Information Set (OASIS), which is a mandated assessment carried out by all Medicare certified home care agencies on admission, at multiple points during care, and at discharge. In addition, nurses documented their interventions and observations associated with every home care visit.

Participants

Home care nurses working in the home care agency participated in both the dashboard design process and the usability evaluation. Nurses were volunteers recruited via email or after clinical team meetings. Two nurses were recruited as co-designers for the study, participated in contextual interview sessions, and completed the usability evaluation. Ten nurses provided rapid feedback on the paper prototype dashboards, and 20 nurses completed the usability evaluation only (32 nurses in total across all three study components). In addition, three experts were recruited externally to conduct a heuristic evaluation of the dashboard prototype. Criteria for selection as an expert were status as a nurse, publication in the field of informatics, and some expertise in the field of data visualization. Dashboard design commenced in September 2016, and the usability evaluation took place during the period between January and February 2017.

All elements of the study were approved by the relevant institutional review boards.

Dashboard Design Process

In a companion study, nurses participated in focus groups to identify what information was required to assist them in delivering effective evidence-based care to patients with heart failure (the detailed methods and results of the focus group study are published separately) (23). We also conducted a study to explore nurses’ ability to understand visualized information (such as line graphs and bar graphs; full information on the methods and results of this study is also published separately) (26). The results of these two studies were used to inform the design of the dashboards presented in this article. Based on the focus group findings, dashboards were developed to assist nurses with the monitoring of patient weights and vital signs between visits. The dashboards incorporated different display options to accommodate individual variation in comprehension of graphical information, based on the results of our visualization comprehension study (26).

We used a design science framework approach to develop the prototype dashboards (27, 28). This approach emphasizes the importance of collaboration with the end-user of the technology (in this case home care nurses), and is iterative in nature. A sample of two home care nurses worked as ‘co-designers’ with the research team to develop the final prototype dashboard, as presented in the following paragraphs.

Contextual inquiry was used to observe nurses conducting normal home care visits, with follow-up interviews that explored how they did their work. A researcher accompanied each nurse to two patient visits (two nurses, with a total of four visits and observations), observed them performing care, took notes, and then asked questions to verify that the researcher’s understanding of the nurse’s work was correct, and to document the nurses’ views and comments.

Using information from the contextual inquiry, paper prototypes of potential dashboard designs were developed and evaluated in an iterative cycle using rapid feedback from a sample of 10 nurses. The paper prototypes were formatted on poster boards. Nurses provided written consent to participate in the study. They were asked to write down their thoughts and feedback on the prototype design (including layout, colors, and information presented in the dashboard) and suggestions for improvements. In general, the feedback sessions lasted approximately 5 to 10 minutes.

Based on observations, interviews, and rapid feedback on paper prototypes, a final version of the dashboard was developed. The prototype was Web-based, built using a widely available tool, InVision (InVision, New York, NY; invision.com), and designed to look as if it were integrated into the agency EHR system. All data for the prototype dashboard were fabricated (based on data that would be seen by nurses in a home care agency) and preprogrammed into the dashboard design. The interface for the dashboard was programmed to provide interactivity (to enable switching between data displays).

Usability Evaluation Methods

We used the Tasks, Users, Representations and Functions (TURF) framework (29) to structure the usability evaluation. The interactive Web-based prototype dashboard was evaluated for function (how useful is it?), users (how satisfying do the users find it?), representations, and task (how usable is it?). Participants (n=20) were given a written series of tasks to complete using the prototype. The first set of tasks focused on extracting information from the data display (e.g., providing a value for the BP measurement on a specific date). A second set of tasks focused on dashboard functionality (i.e., switch between graph types, select different data to display, and navigate between the front patient case screen and the dashboard located in the patient’s notes to record vital signs). Participants were asked to complete the tasks on the work sheet, writing down information they extracted from the dashboard where appropriate. While they completed the tasks, their interactions with the dashboard interface were recorded using Morae software (Techsmith, Okemos, MI), including the time participants started and finished the tasks, verbalizations, and on-screen activity such as mouse and keyboard input.

Usefulness was assessed by analyzing participant data (n=20) recorded as they interacted with the dashboard during the evaluation session, and comparing how they used the dashboard to the functionality built into the dashboard. For example, we recorded whether participants used radio buttons to navigate among different vital signs measures, if they used the mouse to hover over graphs to identify a specific value associated with a data point, or if they moved between bar and line graph data displays. Whether the participants completed each task with ease (without assistance), with difficulty (defined as requiring assistance from the research team to complete the task), or failed to complete was noted as part of the evaluation.

Satisfying (how useful the users found the system to be) was evaluated using two validated questionnaires: the 10-item system usability scale (SUS) (30) and the short form 50-item questionnaire for user interaction satisfaction (QUIS) (31, 32). The SUS is a flexible questionnaire designed to assess any technology, and is relatively quick and easy to complete. It consists of 10 statements that are scored on a 5-point scale of strength of agreement, with final scores (after transformation of the scores as described in the data analysis section) ranging from 0–100. A higher score indicates better usability. As a general rule, a system that has a score above 70 has acceptable usability; a lower score means that the system needs more scrutiny and continued improvement (33). The QUIS was developed by a group of researchers at the University of Maryland and is specifically designed to measure user satisfaction with various components of a technology system, which includes both overall system satisfaction and specific interface factors such as screen design and system terminology (34). The questionnaire can be configured so that it fits the needs for user interface analysis. The original full QUIS has 11 subcomponents with more than 120 questions covering aspects of usability including learning, terminology, and system capabilities. It has extremely high reliability (Cronbach’s alpha = 0.95) (34) and has been used successfully for user evaluations across a variety of different technology systems in healthcare (35–38). For this study, the QUIS short form was used to reduce burden on study participants. We used the QUIS subcomponents relevant to the dashboard evaluation which included part 3: overall user reactions (five questions); part 4: screen (four questions); part 5: terminology and dashboard information (two questions); part 6: Learning (three questions); and part 7: system capabilities (two questions). 
For each subcomponent, participants rated the dashboard on a scale from 1 to 9 (a summary of each question and the differential response items are provided in Table 2).

Table 2:

Summary of SUS and QUIS scores

| | N | Mean (SD) |
|---|---|---|
| System Usability Scale (SUS) | 22 | 73.2 (18.8) |
| Questionnaire for User Interaction Satisfaction (QUIS) | | |
| Overall User Reactions | 20 | 6.1 (1.0) |
| Terrible/Wonderful | 21 | 7.1 (1.5) |
| Frustrating/Satisfying | 21 | 5.8 (2.6) |
| Dull/Stimulating | 21 | 5.4 (2.6) |
| Difficult/Easy | 21 | 6.1 (2.5) |
| Rigid/Flexible | 20 | 6.5 (2.1) |
| Dashboard Screen | 22 | 7.7 (1.2) |
| Characters on screen hard/easy to read | 22 | 8.0 (1.4) |
| Highlighting helpful/unhelpful | 22 | 8.0 (1.2) |
| Screen layouts helpful never/always | 22 | 7.4 (1.3) |
| Sequence of screens confusing/clear | 22 | 7.3 (1.7) |
| Terminology and Dashboard Information | 22 | 7.8 (1.5) |
| The use of terminology inconsistent/consistent | 22 | 7.8 (1.4) |
| Terminology relates to work never/always | 22 | 7.7 (1.5) |
| Learning the Dashboard | 22 | 7.5 (1.3) |
| Learning the dashboard difficult/easy | 22 | 7.5 (1.3) |
| Exploration of features by trial and error discouraging/encouraging | 22 | 7.5 (1.4) |
| Tasks performed in straightforward manner never/always | 22 | 7.5 (1.4) |
| Dashboard Capabilities | 22 | 7.6 (1.4) |
| Dashboard speed too slow/fast enough | 22 | 8.0 (1.4) |
| Ease of operation depends on level of experience never/always | 22 | 7.1 (2.0) |

Usability was evaluated using heuristic evaluation and task analysis (29). Heuristic evaluation was conducted by experts who were provided with an extended task list designed to enable them to explore the full functionality of the dashboard and a heuristic evaluation checklist developed for the study (39). The checklist consisted of seven general usability principles (visibility of system status, match between system and the real world, user control and freedom, consistency and standards, recognition rather than recall, flexibility and efficiency of use, aesthetic and minimalist design/remove the extraneous) with 40 usability factors (e.g., Does every screen have a title or header that describes its contents? Do the selected colors correspond to common expectations about color codes?) and three visualization-specific usability principles (spatial organization, information coding, orientation) with nine usability factors (e.g., Are symbols appropriate for the data represented?). If the factor was present, the evaluator gave a score of 1 (Yes); if it was not present, a score of 0 (No).

Task analysis compared user performance in terms of time on task. The actual time that home health nurses spent using the dashboard was compared to the total time it took a group of expert users (three of the study co-authors) to complete a set of tasks. The lower the difference between the two groups, the more usable the system was considered to be.

Usability Evaluation Data Analysis

Usefulness:

The audio recordings of participant interactions with, and feedback regarding, the dashboard underwent content analysis. Three members of the team independently categorized statements from the audio recordings before meeting to reach consensus on the categorization for each comment.

Satisfying:

The SUS was scored by converting responses to a 0 – 4 scale (4 was the most positive response). The converted responses were added and multiplied by 2.5 as per the scoring instructions, giving a range of possible values from 0 to 100. Descriptive statistics were used to summarize the SUS scores across all evaluators of the system. The means and standard deviations for each item on the QUIS were calculated and then graphically displayed, providing an overall profile of areas that participants identified as being particularly good or bad.
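The scoring steps described here can be sketched in a few lines of Python. This is a minimal illustration, not the study's analysis code: it assumes the standard SUS convention in which odd-numbered items are positively worded (converted as response minus 1) and even-numbered items are negatively worded (converted as 5 minus response), and the function name `sus_score` and the example responses are hypothetical.

```python
from statistics import mean, stdev


def sus_score(responses):
    """Return the 0-100 SUS score for one participant's ten 1-5 responses."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each an integer from 1 to 5")
    converted = []
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Assumed positively worded item: 5 (strongly agree) is best.
            converted.append(response - 1)
        else:
            # Assumed negatively worded item: 1 (strongly disagree) is best.
            converted.append(5 - response)
    # Each converted response is on a 0-4 scale; the sum (0-40) is scaled to 0-100.
    return sum(converted) * 2.5


# Hypothetical responses from three participants (not study data).
example_responses = [
    [4, 2, 4, 1, 5, 2, 4, 2, 4, 2],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
scores = [sus_score(r) for r in example_responses]
print("Scores:", scores)
print("Mean:", round(mean(scores), 1), "SD:", round(stdev(scores), 1))
```

The same `mean` and `stdev` calls could be applied to each QUIS item to produce the per-item summaries described above.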

Usability:

The output from a heuristic evaluation is a summary list of usability problems identified by the group of evaluators. The scores for each heuristic were calculated by dividing the total number of factors (points) awarded by the total number available. The higher the score, the more usable the system was considered to be.
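As a worked illustration of this calculation, the sketch below recomputes the percentage result for each heuristic from the possible and mean scores reported in Table 3. The variable and function names are hypothetical, and the rounding (whole percentages per heuristic, one decimal place for the total) is an assumption chosen to match how the table is presented.

```python
# (heuristic, possible score, mean score across the three evaluators),
# copied from Table 3.
TABLE_3 = [
    ("Visibility of system status", 6, 5.7),
    ("Match between system and the real world", 5, 4),
    ("User control and freedom", 5, 3),
    ("Consistency and standards", 6, 5.3),
    ("Recognition rather than recall", 4, 3),
    ("Flexibility and efficiency of use", 7, 4),
    ("Aesthetic and minimalist design", 7, 6),
    ("Spatial organization", 3, 2.67),
    ("Information coding", 2, 2),
    ("Orientation", 4, 3.33),
]


def percent_of_available(mean_score, possible_score):
    """Points awarded as a percentage of the points available."""
    return 100 * mean_score / possible_score


results = {
    name: round(percent_of_available(mean_score, possible))
    for name, possible, mean_score in TABLE_3
}
total_possible = sum(possible for _, possible, _ in TABLE_3)
total_mean = sum(mean_score for _, _, mean_score in TABLE_3)
overall = round(percent_of_available(total_mean, total_possible), 1)

for name, pct in results.items():
    print(f"{name}: {pct}%")
print(f"TOTAL: {overall}% of {total_possible} available points")
```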

Three experts who did not participate in the heuristic evaluation were recorded interacting with the dashboard to complete the task list created for the usability evaluation. The total time to complete the task list for each expert was calculated. The mean of the expert times was then used as the expert model. Total time using the dashboard was identified for each participant in the usability evaluation. Descriptive statistics were used to summarize these data, including the average, maximum, and minimum completion times for the task list, and compared to the expert model. Users’ ability to accurately extract information from the dashboard was also recorded.
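The comparison against the expert model can be sketched as follows. This is an illustrative outline only: the function name `compare_to_expert_model` and all times below are hypothetical values chosen for the example, not data from the study.

```python
from statistics import mean, stdev


def compare_to_expert_model(participant_minutes, expert_minutes):
    """Summarize participant completion times against the mean expert time."""
    # The expert model is the mean of the experts' total completion times.
    expert_model = mean(expert_minutes)
    # Deviation of each participant from the expert model (positive = slower).
    deviations = [t - expert_model for t in participant_minutes]
    return {
        "expert_model": expert_model,
        "mean": mean(participant_minutes),
        "min": min(participant_minutes),
        "max": max(participant_minutes),
        "mean_deviation": mean(deviations),
        "sd_deviation": stdev(deviations),
    }


# Hypothetical completion times in minutes (not study data).
expert_times = [1.0, 1.5, 2.0]
nurse_times = [3.0, 5.5, 6.5, 9.0]

summary = compare_to_expert_model(nurse_times, expert_times)
for key, value in summary.items():
    print(f"{key}: {value:.2f}")
```

A smaller mean deviation indicates that participants performed closer to the expert model.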

RESULTS

Prototype Dashboard Design Features

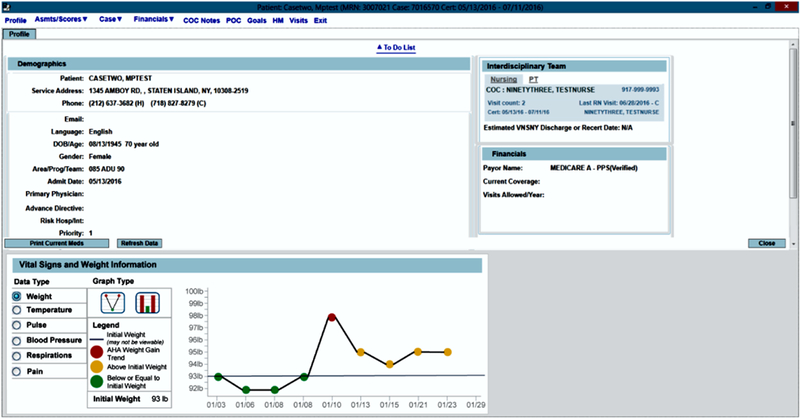

Following the contextual inquiry observation, interviews, and two rounds of rapid feedback on paper prototypes, the Web-based interactive prototype was developed (an example of the dashboard display for weight is provided as Figure 1). Suggestions extracted from the data resulted in the development of the following areas of functionality for the prototype:

Figure 1:

Example of prototype dashboard display

movement enabled between different visualizations of the data (switching between a line graph and a bar graph);

data displayed for different vital signs (using the radio buttons);

information displayed in both the front screen of the patient EHR and in the patient notes for vital signs (where nurses commonly document measurements); and

measurements color-coded (e.g., red for measurements outside of guidelines, yellow for weight measurements above initial weight but not outside guidelines, green for measurements inside guidelines).
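The color-coding rule for weight could be expressed along the following lines. This is a sketch under stated assumptions rather than the prototype's actual logic: the article does not give the guideline thresholds, so the `guideline_max_gain_lb` parameter, the `WeightRule` class, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WeightRule:
    initial_weight_lb: float
    # Hypothetical threshold: gain over initial weight treated as
    # "outside guidelines". The article does not state the real value.
    guideline_max_gain_lb: float


def weight_color(measurement_lb, rule):
    """Return the display color for one weight measurement."""
    gain = measurement_lb - rule.initial_weight_lb
    if gain > rule.guideline_max_gain_lb:
        return "red"      # outside guidelines
    if gain > 0:
        return "yellow"   # above initial weight but not outside guidelines
    return "green"        # inside guidelines


# Hypothetical patient: initial weight 180 lb, assumed 5 lb gain limit.
rule = WeightRule(initial_weight_lb=180.0, guideline_max_gain_lb=5.0)
for weight in (180.0, 182.0, 186.0):
    print(weight, weight_color(weight, rule))
```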

Usability Evaluation

Participants

A total of 22 nurses completed the usability evaluation (the two co-designers plus 20 participants recruited for the usability evaluation). They were predominantly female (n=20; 91%) with a mean age of 51 years (SD 10.0). They were ethnically diverse (32% white non-Hispanic, 32% Asian, 23% African-American, and 14% Hispanic/Other Race/Ethnicity) and experienced (78% had 10 or more years of nursing experience).

Usefulness

The majority of participants (91%) were able to use the dashboard immediately, and easily used radio buttons to switch between data elements and icons to navigate between the line and bar graphs (96%) (Table 1). Participants liked the functions that enabled them to see trends for vital signs over time, without having to search back through previous notes.

Table 1:

Task completion

| | Completed with Ease N (%) | Completed with Difficulty N (%) | Failed to Complete N (%) |
|---|---|---|---|
| Started using dashboard | 20 (91) | 2 (9) | 0 (0) |
| Ability to switch to bar graph | 14 (64) | 2 (9) | 6 (27) |
| Ability to switch between data elements using radio button | 19 (86) | 0 (0) | 3 (14) |
| Switch to vital signs screen in EHR* | 7 (32) | 11 (50) | 4 (18) |
| Ability to chart data in vital signs | 9 (41) | 7 (32) | 6 (27) |
| Ability to identify area for charting weight | 12 (55) | 4 (18) | 6 (27) |
| Completes task list | 14 (64) | 6 (27) | 2 (9) |

*Part of the functionality of the EHR, not the dashboard

“it has, like, past vital signs which is really helpful, instead of going back and forth,”

(Nurse 32)

“because sometimes you’re like, oh, what was the weight the other day, but if you have the graph in front of you then you can see the change right away”

(Nurse 40)

The color-coded data (red, yellow, green) were received positively; participants noted that this helped them to pay attention to the data. The built-in decision support (highlighting with red color when a measurement was outside recommended guidelines) was also identified as an important element of dashboard functionality.

“just looking at numbers, numbers, numbers, there’s something robotic, you don’t pay attention but with colors it makes it stand out more, you pay attention,”

(Nurse 36)

“it’s like ok now I see everything I need to know and the fact that it has the blood pressure parameters on there when you go into the blood pressure graph, that’s good too because now I can see ok well this one was a little off let me call the doctor,”

(Nurse 52)

More than half of participants had difficulty (50%) or failed to complete (18%) the task that required navigating from the initial front dashboard display screen to the patient notes area of the EHR to document vital signs (Table 1). This was partly due to the functionality of the Web-page interface used in the study, which did not behave the same as the agency’s EHR. Of those individuals who did navigate to the charting screens, another two participants were unable to complete documentation (again, due to a lack of congruence between the prototype Web page and the agency’s EHR screen).

There was some disagreement in the verbal feedback on the overall usability of the system. Some participants reported that they found it easy to use without training, and others stated a need for training and practice.

“I don’t think you need any experience, I thought I navigated it quite well,”

(Nurse 48)

“For someone like me it was easier, but for some of the older nurses it might take more time to kind of get the hang of it but I think it should get easier for them too,”

(Nurse 52)

“I’m not the most computer savvy so I would just have to practice,”

(Nurse 39)

“just teach us like how to navigate and where we are supposed to find this and that because, you know that the only thing I know with computers is the one I do with my notes and my visit, that’s it,”

(Nurse 41)

In the course of the usability evaluation, participants suggested further functionality to be built into the dashboard before deployment. This included the ability to change font size on the display, the addition of blood glucose readings to the charts, and editing the graphs to make the relationship between vital sign records and dates clearer.

“if it can be done with blood sugars too, that would be good,”

(Nurse 52)

“I think for diabetic patients that might be helpful, to see what the sugars were last week instead of going back,”

(Nurse 32)

“but seeing like over here, like the 23rd, the graph went like that and to kind of see down here, it’s kind of hard to see, from this side of the graph over to where the number was,”

(Nurse 48)

Satisfying

The dashboard had a mean SUS score of 73.2 (SD 18.8) and a median score of 70. Means of QUIS ratings of the dashboard prototype (scale from 1–9: word on the left = 1, word on the right = 9) are summarized in Table 2. Dashboard elements given the highest ratings were terminology and dashboard information (mean 7.8; SD 1.5) and the dashboard screen (mean 7.7; SD 1.2). Elements with the lowest ratings were the overall user reactions (mean 6.1; SD 1.0) and learning the dashboard (mean 7.5; SD 1.3).

Usability

The usability issues identified by the three experts who participated in the heuristic evaluation were classified as being either a minor usability issue (n=5) or a cosmetic problem only (n=12), in the areas of the flexibility and efficiency of dashboard use and user control and freedom (Table 3). Specific issues raised included placing the icons for graph selection (line graph or bar graph) closer to the graph (cosmetic problem only) and using a contrasting color to highlight which graph is selected (minor issue). The heuristic evaluators also made suggestions regarding the icon for the line graph (cosmetic problem only), graying out the legend to convey that it is not interactive (cosmetic problem only), and adding the ability to (1) display more data on one graph and (2) show a broader or narrower date range (minor issue).

Table 3:

Heuristic Evaluation Ratings

| | Possible Score | Mean Score | Result (%) |
|---|---|---|---|
| Visibility of system status | 6 | 5.7 | 95 |
| Match between system and the real world | 5 | 4 | 80 |
| User control and freedom | 5 | 3 | 60 |
| Consistency and Standards | 6 | 5.3 | 88 |
| Recognition rather than recall | 4 | 3 | 75 |
| Flexibility and efficiency of use | 7 | 4 | 57 |
| Aesthetic and minimalist design/remove the extraneous (ink) | 7 | 6 | 86 |
| Spatial organization | 3 | 2.67 | 89 |
| Information Coding | 2 | 2 | 100 |
| Orientation | 4 | 3.33 | 83 |
| TOTAL | 49 | 39 | 79.6 |

Participants’ average time to complete all dashboard tasks was 5.7 minutes (SD=2.4), compared to 1.4 minutes (SD=0.6) for the expert users. All nurses took more time than the average expert user. The average time deviation between nurses and expert users was 4.3 minutes (SD=2.4). The ability of participants to navigate the dashboard and extract accurate data from the displays was assessed in the task form. Questions specifically related to the ability to interpret data in the dashboards had accuracy ratings between 55% and 100%. Overall, 100% of participants were able to identify a patient’s weight; however, only 55% (n=12) were able to accurately identify a patient’s temperature. The actual patient temperature was 99.7°F, which was displayed when the mouse pointer hovered over the reading on the chart. However, participants who only viewed the graph without using the mouse hover feature gave a reading of either 100°F (n=6; 27%) or 99°F (n=2; 9%). One participant gave a reading of 99.5°F.

DISCUSSION

This article reports the results of a usability evaluation of a point-of-care dashboard prototype developed for use by home care nurses. The dashboard was designed for integration into an existing, internally developed EHR to support decision making in the care of patients with heart failure. The final dashboard prototype displays data trends for a patient’s vital signs and weight across home care visits, and incorporates inbuilt alerts (decision support) to indicate to the home care nurse when a patient’s measurements are outside the guidelines (40) or parameters established by the physician. In general, it is considered that usable products should have SUS scores above 70; our prototype scored 73, suggesting acceptable usability (41). Nurses who participated in the usability evaluation indicated that elements of the dashboard design (such as providing information trends through time on a patient’s weight and vital signs, the use of visual displays for the data, and color coding to indicate measurements outside guidelines or recommendations) were perceived to be extremely useful as support for clinical practice.

However, before deployment in clinical practice, the dashboard would need considerable refinement, taking into account the results of the usability evaluation and feedback from the expert evaluators regarding design and layout of functions, as well as the nurses’ need for training and education on how to use the dashboard effectively. Other functionality, such as providing data on a patient’s blood glucose measurements (requested by a number of nurses in feedback), enhancing the usability of the graph function for temperature readings, and providing the facility to graph multiple indicators on one display (not specifically mentioned by these nurses but accepted practice in other settings) could also be considered.

While our study is not the first to develop visualizations to summarize patient trends for home care nurses (18), it is the first to specifically address both the information needs of the nurses and individual differences in how visualized information is comprehended. Because of the nature of home care nursing, where visits are less frequent and continuity among nurses is lower than in acute care settings, providing information over time to enable efficient monitoring by the nurse of the patient’s self-management is vital. The use of visual dashboards, such as the one developed in this study, means that nurses do not have to seek these data across a large number of previous visit notes, and are readily alerted if the patient’s condition deteriorates.

Study Limitations

This study was conducted at a single home care agency located in the Northeastern region of the USA, which currently has its own internally developed EHR system. The dashboard that was developed in this study was specifically designed to be integrated into the existing EHR, located in the patient’s notes when a nurse opens the first visit documentation screen, and in the visit notes area where nurses document vital signs and weight. Although this is a limitation, the principles underlying development of the dashboard are transferable across different EHR systems. The dashboard should be integrated into nurses’ workflow and located at points in the EHR where the information would be most useful; be able to display data according to preference, such as a bar graph or line graph; and provide some decision support and guidance, indicating through the use of appropriate color systems when a patient’s measurements fall outside guidelines and parameters.

Other limitations include the design method for the prototype dashboard; it utilized an interface based on Web pages, which was not fully integrated into, and lacked the functionality of, the agency’s EHR. This caused difficulties for several participants in the usability evaluation when they were asked to navigate from the front display dashboard to the area of the EHR in which they would normally chart a patient’s weight and vital signs. In addition, the usability evaluation did not explore the dashboard in actual practice, meaning that it is not clear whether it would integrate effectively with a nurse’s actual workflow.

Future Research

As noted, before the dashboard developed in this study could be implemented in a practice setting, it would need refinement and the addition of a training module. Whether, and how, the dashboard integrates with nurses’ workflow, and its potential impact on nurses’ ability to retrieve information for use in decision making, also needs to be explored. Finally, studies to evaluate the long-term impact of the dashboard implementation on patient healthcare outcomes are needed.

Conclusion

The use of visualization techniques such as dashboards is increasing in response to clinician needs for summarized, easily interpreted patient information at the point of care. In this study, we developed a dashboard for use by home care nurses, involving nurses in all aspects of the design process. It is important to ensure that technology solutions, such as the one proposed here, are designed with clinical users in mind, to meet their information needs. The design elements of the dashboard, which was rated as usable by nurses, could be translated to other EHRs used in home care settings.

Acknowledgments

Source of Funding: This project was supported by grant number R21HS023855 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

Footnotes

Conflicts of Interest: None declared.

Contributor Information

Dawn Dowding, School of Health Sciences, University of Manchester, Manchester, UK.

Jacqueline A. Merrill, School of Nursing and Department of Biomedical Informatics, Columbia University.

Yolanda Barrón, Center for Home Care Policy and Research, Visiting Nurse Service of New York, New York.

Nicole Onorato, Center for Home Care Policy and Research, Visiting Nurse Service of New York, New York.

Karyn Jonas, Rory Meyers College of Nursing, New York University, New York.

David Russell, Department of Sociology, Appalachian State University, Boone, NC.

REFERENCES

- 1. Charles D, Gabriel M, Searcy T. Adoption of Electronic Health Record Systems among U.S. Non-Federal Acute Care Hospitals: 2008–2014. Washington, DC: Office of the National Coordinator for Health Information Technology; 2015.

- 2. Bercovitz AR, Park-Lee E, Jamoom E. Adoption and Use of Electronic Health Records and Mobile Technology by Home Health and Hospice Care Agencies. Hyattsville, MD: National Center for Health Statistics; 2013.

- 3. Davis JA, Zakoscielna K, Jacobs L. Electronic Information Systems Use in Residential Care Facilities: The Differential Effect of Ownership Status and Chain Affiliation. Journal of Applied Gerontology. 2016;35(3):331–48.

- 4. National Research Council. Computational Technology for Effective Health Care: Immediate Steps and Strategic Directions. Stead WW, Lin HS, editors. Washington, DC: The National Academies Press; 2009. 120 p.

- 5. Armijo D, McDonnell C, Werner K. Electronic Health Record Usability: Interface Design Considerations. Rockville, MD: October 2009.

- 6. Koch SH, Staggers N, Weir C, Agutter J, Liu D, Westenskow DR. Integrated information displays for ICU nurses: Field observations, display design and display evaluation. Proceedings of the Human Factors and Ergonomics Society 54th Annual Meeting; San Francisco; 2010. p. 932.

- 7. Jeffrey AD, Novak LL, Kennedy B, Dietrich MS, Mion LC. Participatory design of probability-based decision support tools for in-hospital nurses. Journal of the American Medical Informatics Association. 2017; Epub ahead of print.

- 8. Wang TD, Plaisant C, Quinn AJ, Stanchak R, Murphy S, Shneiderman B. Aligning Temporal Data by Sentinel Events: Discovering Patterns in Electronic Health Records. Conference Proceedings of the 2008 Conference on Human Factors in Computing Systems; Florence, Italy; 2008.

- 9. Bui AA, Aberle DR, Kangarloo H. TimeLine: visualizing integrated patient records. IEEE Transactions on Information Technology in Biomedicine. 2007;11(4):462–73.

- 10. Plaisant C, Milash B, Rose A, Widoff S, Shneiderman B. LifeLines: Visualizing Personal Histories. In: Tauber MJ, editor. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; Vancouver, Canada. New York, NY, USA: ACM; 1996. p. 221–7.

- 11. Hirsch JS, Tanenbaum JS, Gorman SL, Liu C, Schmitz E, Hashorva D, et al. HARVEST, a longitudinal patient record summarizer. Journal of the American Medical Informatics Association. 2015;22:263–74.

- 12. Dowding D, Randell R, Gardner P, Fitzpatrick G, Dykes P, Favela J, et al. Dashboards for improving patient care: Review of the literature. International Journal of Medical Informatics. 2015;84:87–100.

- 13. Wilbanks BA, Langford PA. A review of dashboards for data analytics in nursing. Computers, Informatics, Nursing. 2014;32(11):545–9.

- 14. Zaydfudim V, Dossett LA, Starmer JM. Implementation of a Real-time Compliance Dashboard to Help Reduce SICU Ventilator-Associated Pneumonia With the Ventilator Bundle. Archives of Surgery. 2009;144(7):656–62.

- 15. Linder JA, Schnipper JL, Tsurikova R, Yu DT, Volk LA, Melnikas AJ, et al. Electronic health record feedback to improve antibiotic prescribing for acute respiratory infections. The American Journal of Managed Care. 2010;16(12 Suppl HIT):e311–9.

- 16. Effken JA, Loeb RG, Kang Y, Lin Z-C. Clinical information displays to improve ICU outcomes. International Journal of Medical Informatics. 2008;77:765–77.

- 17. Lee S, Kim E, Monsen KA. Public health nurse perceptions of Omaha system data visualization. International Journal of Medical Informatics. 2015;84:826–34.

- 18. Mamykina L, Goose S, Hedqvist D, Beard DV. CareView: Analyzing Nursing Narratives for Temporal Trends. CHI ’04 Extended Abstracts on Human Factors in Computing Systems; Vienna, Austria. New York, NY, USA: ACM; 2004. p. 1147–50.

- 19. Koopman RJ, Kochendorfer KM, Moore JL, Mehr DR, Wakefield DS, Yadamsuren B, et al. A diabetes dashboard and physician efficiency and accuracy in accessing data needed for high-quality diabetes care. Annals of Family Medicine. 2011;9(5):398–405.

- 20. Harris-Kojetin L, Sengupta M, Park-Lee E, Valverde R, Caffrey C, Rome V, et al. Long-term care providers and services users in the United States: Data from the National Study of Long-Term Care Providers, 2013–2014. National Center for Health Statistics Vital Health Statistics. 2016;3(38).

- 21. Vincent GK, Velkoff VA. The next four decades: The older population in the United States: 2010 to 2050. Washington, DC: 2010.

- 22. Russell D, Rosati RJ, Rosenfeld P, Marren JM. Continuity in home health care: is consistency in nursing personnel associated with better patient outcomes? Journal for Healthcare Quality. 2011;33(6):33–9.

- 23. Dowding DW, Russell D, Onorato N, Merrill JA. Perceptions of home care clinicians for improved care continuity: a focus group study. Journal for Healthcare Quality. 2017; In press.

- 24. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. New England Journal of Medicine. 2009;360(14):1418–28.

- 25. Fortinsky RH, Madigan EA, Sheehan TJ, Tullai-McGuinness S, Kleppinger A. Risk factors for hospitalization in a national sample of Medicare home health care patients. Journal of Applied Gerontology. 2014;33(4):474–93.

- 26. Dowding D, Merrill JA, Onorato N, Barrón Y, Rosati RJ, Russell D. The impact of home care nurses’ numeracy and graph literacy on comprehension of visual display information: implications for dashboard design. Journal of the American Medical Informatics Association. 2017; Epub ahead of print (Apr 27).

- 27. Hevner A, Chatterjee S. Design science research in information systems. In: Hevner A, Chatterjee S, editors. Design research in information systems. Integrated series in information systems. 22: Springer-Verlag; 2010. p. 9–22.

- 28. Hevner A, Chatterjee S. Design Science Research Frameworks. In: Hevner A, Chatterjee S, editors. Design Research in Information Systems: Theory and Practice. Integrated Series in Information Systems: Springer-Verlag; 2010. p. 23–31.

- 29. Zhang J, Walji M. TURF: Toward a unified framework of EHR usability. Journal of Biomedical Informatics. 2011;44:1056–67.

- 30. De Souza RN. ‘This child is a planned baby’: skilled migrant fathers and reproductive decision-making. J Adv Nurs. 2014;70(11):2663–72.

- 31. Van McCrary S, Green HC, Combs A, Mintzer JP, Quirk JG. A delicate subject: The impact of cultural factors on neonatal and perinatal decision making. J Neonatal Perinatal Med. 2014;7(1):1–12.

- 32. Chin J, Diehl V, Norman K. Development of an instrument measuring user satisfaction of the human-computer interface. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; 1988. p. 213.

- 33. Bangor A, Kortum P, Miller J. An empirical evaluation of the System Usability Scale. International Journal of Human-Computer Interaction. 2008;24(6):574–94.

- 34. Chin J, Diehl V, Norman K, editors. Development of an instrument measuring user satisfaction of the human-computer interface. CHI ’88 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; 1988; Washington, DC. New York: ACM.

- 35. Harper B, Slaughter L, Norman K. Questionnaire administration via the WWW: A validation and reliability study for a user satisfaction questionnaire. WebNet 97, Association for the Advancement of Computing in Education; November 1997; Toronto, Canada.

- 36. Hortman P, Thompson C. Evaluation of User Interface Satisfaction of a Clinical Outcomes Database. Computers, Informatics, Nursing. 2005;23(6):301–7.

- 37. Johnson TR, Zhang J, Tang Z, Johnson C, Turley JP. Assessing informatics students’ satisfaction with a web-based courseware system. International Journal of Medical Informatics. 2004;73:181–7.

- 38. Moumame K, Idri A, Abran A. Usability evaluation of mobile applications using ISO 9241 and ISO 25062 standards. SpringerPlus. 2016;5:548.

- 39. Dowding D, Merrill JA. Heuristics for evaluation of dashboard visualizations. American Medical Informatics Association Annual Symposium; November 4–8; Washington, DC; 2017.

- 40. Yancy C, Jessup M, Bozkurt B, Butler J, Casey DJ, Drazner M, et al. 2013 ACCF/AHA guideline for the management of heart failure: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines. Journal of the American College of Cardiology. 2013;62(16):e147–239.

- 41. Bangor A, Kortum P, Miller J. An Empirical Evaluation of the System Usability Scale. International Journal of Human-Computer Interaction. 2008;24(6):574–94.