Abstract

Objectives

The advent of large databases, wearable technology, and novel communications methods has the potential to expand the pool of candidate research participants and offer them the flexibility and convenience of participating in remote research. However, reports of their effectiveness are sparse. We assessed the use of various forms of outreach within a nationwide randomized clinical trial being conducted entirely by remote means.

Methods

Candidate participants at possibly higher risk for atrial fibrillation were identified by means of a large insurance claims database and invited to participate in the study by their insurance provider. Enrolled participants were randomly assigned to one of two groups testing a wearable sensor device for detection of the arrhythmia.

Results

Over 10 months, the various outreach methods used resulted in enrollment of 2659 participants meeting eligibility criteria. Starting with a baseline enrollment rate of 0.8% in response to an email invitation, the recruitment campaign was iteratively optimized to ultimately include website changes and the use of a five-step outreach process (three short, personalized emails and two direct mailers) that highlighted the appeal of new technology used in the study, resulting in an enrollment rate of 9.4%. Messaging that highlighted access to new technology outperformed both appeals to altruism and appeals that highlighted accessing personal health information.

Conclusions

Targeted outreach, enrollment, and management of large remote clinical trials are feasible and can be improved with an iterative approach, although more work is needed to learn how to best recruit and retain potential research participants.

Trial registration

ClinicalTrials.gov NCT02506244. Registered 23 July 2015.

Keywords: Clinical trials, Clinical research, Digital technology, Remote enrollment, Remote monitoring, Outreach

Abbreviations: AF, atrial fibrillation; ECG, electrocardiographic; ICF, informed consent form; mSToPS, mHealth Screening to Prevent Strokes

1. Background

Recruitment of participants has traditionally been one of the most challenging aspects of clinical trials [1]. Historically, the process has involved in-person contact with potential participants in the clinical setting. Recent advances in digital technologies—including large databases, physiological sensors, and communications—might enable clinical trials in which all aspects, from recruitment to electronic consent, enrollment, and monitoring, are handled remotely. Conducting these processes in such a way might dramatically expand the reach of clinical trials, allowing people to participate who were previously unable to, while simultaneously reducing costs [2]. The rise of behavioral economics, “nudges,” and the increasing ease of running randomized clinical trials with digital platforms open up opportunities to identify motivators and barriers to joining clinical trials [3].

The mHealth Screening to Prevent Strokes (mSToPS) trial is a participant-centric, primarily digital clinical trial, conducted as a multisectoral collaboration between Scripps Research Translational Institute, Aetna's Innovation Labs and Healthagen Outcomes units, and Janssen Pharmaceuticals, Inc., to test the use of a wearable electrocardiographic (ECG) sensor patch for detection of new atrial fibrillation (AF) among individuals at possibly increased risk [4,5]. Although AF is a major contributor to stroke and mortality [6], up to one-third of all people with AF may not be aware that they have it [7]. This condition may be especially well suited for screening, given that preventive treatment with anticoagulant therapy can reduce the risk of stroke by 60%–70% [8].

The mSToPS study was designed as an entirely remote trial using a digital platform to inform candidate participants about the study, obtain consent, and enroll them into the trial rather than using a traditional site-visit model. Initial outreach was designed to be largely via email, with a direct-mail cohort as a comparator. Aetna invited candidates to visit the trial's website to learn more about the study and, if interested, complete educational modules and sign an electronic consent form. For participants who consented, ECG patches and the resultant findings were mailed to their homes, and all communications were handled remotely. During the study, participants had a help line to call if they had questions, and study staff contacted participants if needed (e.g., for overdue patches) via telephone or email.

We describe herein the methods used to remotely recruit 2659 study participants in 10 months. Testing variations of email-based and direct-mail recruitment messages informed a dynamic, iterative redesign of the recruitment campaigns.

2. Methods

2.1. Ethics approval and consent to participate

The mSToPS study protocol and other study materials (informed consent form, website, mailers, etc.) were approved by the Scripps Institutional Review Board. Written informed consent and a separate HIPAA form were obtained from all participants.

2.2. Study population

The study population was derived from Aetna Commercial Fully Insured and Medicare members who were eligible for mSToPS according to inclusion and exclusion criteria applied to claims data [4,5]. Inclusion criteria were based on age and comorbid conditions, whereas exclusion criteria included a prior diagnosis of AF, an implantable pacemaker or defibrillator, or current use of anticoagulant therapy. Recruitment began in November 2015, with 359,161 Aetna members identified as being eligible at that time.

2.3. Recruitment methods

Recruitment into the mSToPS trial continued until at least 2100 participants had signed the informed consent form (ICF) [4,5]. At the time recruitment was complete, Aetna had sent invitations to 102,553 members who were eligible for the trial, including all 52,553 eligible members who designated email as their preferred method of communication with the health plan. Although the primary focus of outreach was on the use of email as a means of recruitment, invitations had also been sent by direct mail to an additional 50,000 members randomly chosen from the remaining eligible pool to explore the difference between methods of contact. A subset of the email-eligible pool (n = 8053) was targeted for a multifaceted campaign involving both email and direct mail (see Optimized Campaign below). All invitations to participate in the study directed interested members to the study's website, where additional information was available. The study's ICF was also accessible on the website, where it could be signed electronically. Upon signing the ICF, members were randomly assigned to receive the ECG patch either immediately (immediate monitoring group) or 4 months later (delayed monitoring group).

2.4. Email recruitment

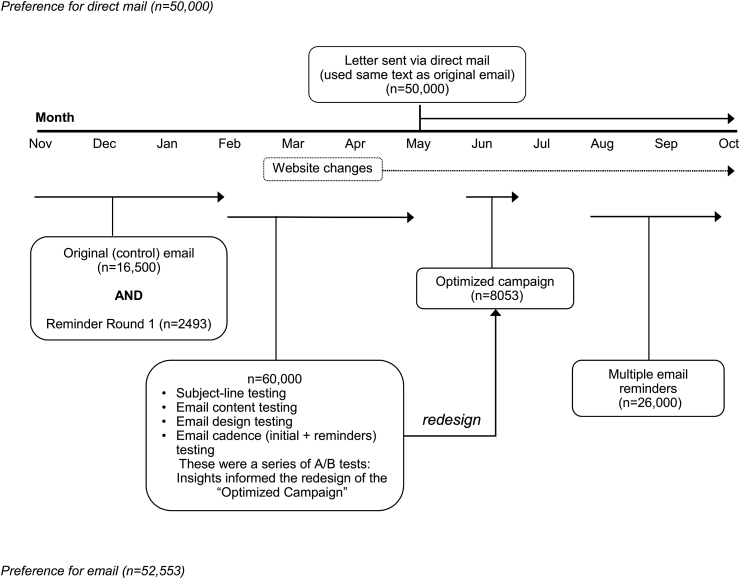

The email recruitment process was designed to be iterative (Fig. 1). Each week, batches of 2000–10,000 invitations were sent and the response rates measured (percent of emails received, opened, and clicked through, and participants enrolled). The findings were then used to inform each subsequent version of the invitations. The first invitations for participation were sent in November and December 2015, as two email batches targeting a total of 2500 members. All emails had the subject line “Please help us understand heart health,” and the email body contained a link to the study's website at the bottom as well as a study phone number (see Supplementary Material). This email was used as the control in the first round of email tests detailed below.

Fig. 1.

Timeline for email and direct-mail campaigns.

2.5. Email tests

After assessment of the response rates (defined above) generated from the initial outreach email, we ran a series of email campaigns in batches of 4000–10,000 members randomized to receive different test messages designed to understand motivations for joining the mSToPS trial (see Fig. 1). Our primary tests focused on exploring altruistic motives and self-interested motives (finding out about their own health and gaining access to new technology), by isolating these distinct appeals in either the subject lines or in the bodies of the email invitations. The initial outreach (control) email had contained mixed elements of all three of these appeals.
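The source states only that members were randomized to test messages; it does not describe the allocation mechanism. One simple way such an allocation could be done is sketched below in Python. The function name `allocate`, the fixed seed, and the integer member identifiers are all assumptions made for illustration; the arm sizes mirror the numbers targeted in Round 1 of the subject-line test (Table 3).

```python
import random


def allocate(member_ids, arm_sizes, seed=0):
    """Shuffle members and slice them into arms of the requested sizes.

    Hypothetical helper: the trial's actual randomization procedure
    is not described in the source.
    """
    members = list(member_ids)
    if sum(arm_sizes.values()) > len(members):
        raise ValueError("arm sizes exceed the number of available members")
    rng = random.Random(seed)  # seeded so the allocation is reproducible
    rng.shuffle(members)
    arms, start = {}, 0
    for name, size in arm_sizes.items():
        arms[name] = members[start:start + size]
        start += size
    return arms


# Arm sizes taken from the "Targeted n" column for Round 1 in Table 3.
round1 = allocate(
    range(16_000),
    {"control": 6000, "social_proof": 2000, "exclusivity": 2000, "personalized": 6000},
)
print({name: len(ids) for name, ids in round1.items()})
```

Shuffling once and slicing guarantees that each member lands in exactly one arm and that arm sizes are exact, which is convenient when arms are deliberately unequal.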

The subject-line testing was conducted in two rounds that each tested four subject lines. The subject line, which consisted of one text line, would typically be the only message a candidate participant would view before choosing to take action (i.e., opening the email). Email subject lines tested over the course of the recruitment period drew on behavioral insights and included personalization and/or specific appeals to social proof, exclusivity, altruism, obtaining personal health information, or access to new technology (Table 1). The original long-form email body content was used across all versions of the subject-line tests.

Table 1.

Email subject lines tested over the course of the recruitment period.

| Round | Type | Content |
|---|---|---|
| 1 | Control | “Please help us understand heart health” |
| | Social proof | “Join thousands of other Aetna members in a heart health study” |
| | Exclusivity | “Join a select group of Aetna members in a heart health study” |
| | Personalized | “(Member name), you're invited to join a heart health study” |
| 2 | Control | “(Member name), you're invited to join a heart health study” |
| | Altruistic | “(Member name), you can help make a difference in medical care” |
| | New technology | “(Member name), we'd like you to test a new wearable device” |
| | Personal health information | “(Member name), find out if you have atrial fibrillation, an unusual heart rhythm” |

In remaining batches, changes to the email body (which the candidate participant saw upon opening the email) were tested, including appeals specific to altruism, obtaining personal health information, and access to new technology (see Supplementary Material). All versions of the email bodies in these tests used the same subject line, contained the same introductory paragraph describing the study, displayed one of the three specific appeals in bold, included the same single, large call-to-action button (“Learn More”), and included disclosure statements at the bottom (see Supplementary Material). The control email body was the original email invitation that included references to all three appeals and had no call-to-action button.

A final, multiple-reminder email campaign was launched in the last month of recruitment targeting 26,000 eligible members who had not enrolled in response to previous original or reminder emails. The campaign involved three emails, each with a different appeal, sent two weeks apart.

Data tracked and collected by Aetna for each batch of emails included the number of emails sent (i.e., the number targeted minus the number with invalid emails), the number received (i.e., those not bounced back), the number opened, and the number of click-throughs directly to the study website from the link included in the email. Visitors referred to the website through other means were not tracked. The relative effectiveness of each email subject line was assessed by the proportion of emails opened. The relative effectiveness of email body content was assessed by the click-through rate and the number enrolled.
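As a rough illustration of how these tracked counts translate into response rates, the Python sketch below computes each rate with the number of emails received as the denominator, which is the convention stated in the statistical analysis section. The `EmailBatch` class and its field names are hypothetical; the counts are those reported for the original email in Table 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmailBatch:
    """Counts tracked for one batch of invitations (hypothetical structure)."""
    targeted: int
    received: int
    opened: int
    clicked: int
    enrolled: int

    def pct_of_received(self, count: int) -> float:
        """Express a count as a percentage of emails received, to one decimal."""
        return round(100 * count / self.received, 1)

    def summary(self) -> dict:
        return {
            "opened_pct": self.pct_of_received(self.opened),
            "clicked_pct": self.pct_of_received(self.clicked),
            "enrolled_pct": self.pct_of_received(self.enrolled),
        }


# Original (control) email, counts as reported in Table 3.
original = EmailBatch(targeted=16_500, received=12_754, opened=4_950,
                      clicked=411, enrolled=100)
print(original.summary())
```

Using the number received rather than the number targeted keeps bounced or invalid addresses from diluting the rates.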

2.6. Direct mail recruitment

Six months into the recruitment period, invitations to participate were sent by direct mail to eligible members who had indicated direct mail as their preferred method of communication, to serve as a comparator to email responses (see Fig. 1). The mailing consisted of a brochure with the same language as the original email body, instructions to type in the website address or to use a search engine to find the link, and the study telephone number (see Supplementary Material). Data to assess the effectiveness of the regular mail campaign were limited to the number enrolled.

2.7. Optimized campaign

Following the email and regular-mail campaigns, another 8053 members were targeted in a multifaceted campaign conducted over 3 weeks, including three emails sent 1 week apart and two redesigned mailers sent at the beginning and end of the campaign. Content was based on the best-performing subject lines and email bodies identified in the previous campaigns, which included personalization and an emphasis on the technology, with images consistent with the study website (see Supplementary Material). Effectiveness of the campaign was assessed using the percentage of messages opened and the click-through rate for each email iteration as well as the enrollment rate for the campaign overall.

2.8. Website changes

The study website was revised over the course of the recruitment period in response to findings from the message testing performed during the email campaign. A change in the home page shifted the focus from screening for AF to using new technology to monitor one's heartbeat. We also removed the requirement to enable cookies in order to sign the ICF. Members who stopped at this point in the process were contacted by email regarding this change and given a link for completing enrollment.

2.9. Statistical analysis

Descriptive statistics were calculated for each email type: the numbers targeted, sent, received, opened, clicked through, and enrolled. The proportions opened, clicked through, and enrolled were calculated using the number received for each email type as the denominator. Rates were compared between subject-line and email-body types using chi-square tests. For direct mail, descriptive statistics were limited to the number sent and the enrollment rate. A p-value < 0.05 was considered statistically significant.
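To make the comparison of rates concrete, here is a minimal Python sketch of a chi-square test on a 2×2 table (two message types, outcome yes/no). It is not the authors' analysis code: the function name `chi_square_2x2` is hypothetical, and because the source does not say whether a continuity correction was applied, the Yates correction is offered only as an option. The counts come from Table 3.

```python
import math


def chi_square_2x2(success_a, total_a, success_b, total_b, yates=False):
    """Pearson chi-square test (1 degree of freedom) for two proportions.

    Returns (statistic, p_value). Hypothetical helper for illustration.
    """
    a, b = success_a, total_a - success_a
    c, d = success_b, total_b - success_b
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        # Yates continuity correction (an assumption; not stated in the source).
        diff = max(0.0, diff - n / 2)
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    stat = n * diff ** 2 / denom
    # Survival function of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Opening rates in Round 1 (opened, received) from Table 3.
_, p_control_vs_personalized = chi_square_2x2(1911, 4632, 1926, 4618)
_, p_social_vs_control = chi_square_2x2(544, 1558, 1911, 4632)
print(f"control vs personalized: p = {p_control_vs_personalized:.2f}")
print(f"social proof vs control: p = {p_social_vs_control:.2g}")
```

With one degree of freedom the p-value has a closed form via the complementary error function, so the sketch needs no statistics library; a dedicated package would be the more usual choice for tables larger than 2×2.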

3. Results

A total of 359,161 Aetna members were identified who met all inclusion/exclusion criteria for the mSToPS study based on claims data. Compared with the overall eligible cohort, members who ultimately enrolled in the trial (n = 2659) were younger, more likely to be male, and had fewer comorbidities, except for obesity and sleep apnea (Table 2). All eligible members who specified email as their preferred mode of communication (52,553 members) were targeted to receive an email invitation to participate. Of these, 41,836 (79.6%) received an email invitation, and 17,373 (41.5% of those receiving one) opened it. Among eligible members who had specified a preference for direct mail, 50,000 were randomly selected to receive an invitation via that method.

Table 2.

Baseline characteristics of eligible members and of mSToPS study participants.

| Characteristic | Eligible members (n = 359,161) | mSToPS participants (n = 2659) | p-Value |
|---|---|---|---|
| Age in years, mean (SD) | 75.5 | 72.4 (7.3) | <0.001 |
| Female sex, % | 51.4 | 38.6 | <0.001 |
| CHA2DS2 VASc score, median (Q1–Q3) | 3 (3–4) | 3 (2–4) | <0.001 |
| Previous stroke, % | 8.4 | 6.5 | <0.001 |
| Heart failure, % | 6.5 | 3.8 | <0.001 |
| Hypertension, % | 73.4 | 71.5 | 0.04 |
| Diabetes mellitus, % | 36.3 | 34.9 | 0.20 |
| Sleep apnea, % | 9.6 | 20.2 | <0.001 |
| Prior myocardial infarction, % | 8.7 | 6.7 | <0.001 |
| COPD, % | 7.2 | 5.4 | <0.001 |
| Obesity, % | 8.2 | 10.9 | <0.001 |

CHA2DS2 VASc - congestive heart failure, hypertension, age ≥75 years, diabetes mellitus, prior stroke/transient ischemic attack/thromboembolism, vascular disease, age 65–74 years, and sex category; COPD - chronic obstructive pulmonary disease; SD - standard deviation.

3.1. Baseline email response

The original control email message was targeted to 16,500 members in six batches with a total of 12,754 (77.2%) receiving the original email and 4950 (38.8%) opening it (Table 3). A total of 100 members enrolled in the study in response to the original invitation (0.8% of those receiving the email).

Table 3.

Email campaign results by type of communication.

| Communication type | Targeted n | Received n | Opened n (%) | Clicked Through n (%) | Enrolled n (%) |
|---|---|---|---|---|---|
| Original email | 16,500 | 12,754 | 4950 (38.8) | 411 (3.2) | 100 (0.8) |
| Subject line test Round 1 | | | | | |
| Control | 6000 | 4632 | 1911 (41.3) | 160 (3.5) | 37 (0.8) |
| Social proof | 2000 | 1558 | 544 (34.9) | 46 (3.0) | 1 (0.1) |
| Exclusivity | 2000 | 1549 | 566 (36.5) | 74 (4.8) | 19 (1.2) |
| Personalized | 6000 | 4618 | 1926 (41.7) | 215 (4.7) | 54 (1.2) |
| Subject line test Round 2 | | | | | |
| Control | 1000 | 867 | 297 (34.3) | 33 (3.8) | 11 (1.3) |
| Altruistic | 1000 | 848 | 266 (31.4) | 20 (2.4) | 1 (0.1) |
| New technology | 1000 | 860 | 394 (45.8) | 64 (7.4) | 13 (1.5) |
| PHI | 1000 | 849 | 255 (30.0) | 17 (2.0) | 6 (0.7) |
| Email body test (a) | | | | | |
| Control | 2000 | 1746 | 724 (41.5) | 83 (4.8) | 17 (1.0) |
| Altruistic | 2000 | 1746 | 710 (40.7) | 235 (13.5) | 23 (1.3) |
| PHI | 2000 | 1731 | 763 (44.1) | 230 (13.3) | 22 (1.3) |
| New technology | 2000 | 1720 | 751 (43.7) | 236 (13.7) | 36 (2.1) |
| Reminder Round 1 | 2493 | 1883 | 768 (51.4) | 47 (3.1) | 15 (0.8) |
| Reminder Round 2 (b) | | | | | |
| Altruistic | 4000 | 3434 | 1688 (49.2) | 391 (11.4) | 31 (0.9) |
| PHI | 4000 | 3430 | 1665 (48.5) | 316 (9.2) | 19 (0.6) |
| New technology | 24,000 | 19,170 | 9362 (48.8) | 2041 (10.6) | 225 (1.2) |

PHI - personal health information.

(a) Subject line personalized for all.

(b) Subject line “Reminder, your invitation is waiting” for all, with different email bodies.

3.2. Response to subject lines

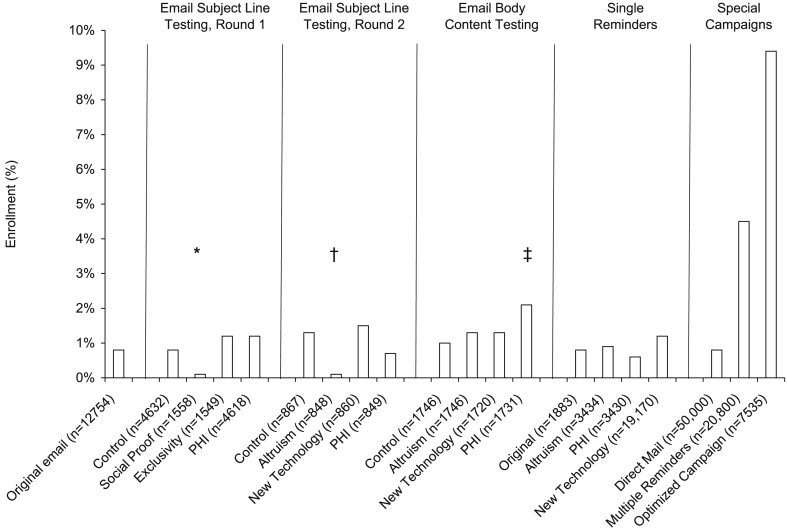

Different subject lines (Table 1) were first tested in two batches of emails sent to another 16,000 members. During this first round, the rates of opening the email were highest for the control (41%) and personalized subject lines (42%) (p = 0.68) and lowest for the social-proof subject line (35%) (p < 0.001 versus both control and personalized subject lines) (Fig. 2). The enrollment rate was also significantly lower among those receiving the social-proof subject line (0.1%) compared with the other subject lines (0.8%–1.2%) (p < 0.01 for all comparisons versus social proof) (Table 3).

Fig. 2.

Enrollment rates by campaign type. The denominator for each group is the number of candidate participants who received each type of communication. PHI - personal health information. *p < 0.01 versus each of the other subject lines. †p < 0.01 versus Control and New Technology. ‡p < 0.01 versus Control.

In the second round of subject-line testing, 4000 members were targeted with one of four additional subject line types (Table 1). All versions of the Round 2 email subject lines were personalized (started with the member's name). In this round, the rates of both opening the email and enrolling were highest with the subject line emphasizing access to new technology (46% and 1.5%, respectively) (Table 3). Subject lines emphasizing altruism and access to personal health information had the lowest rates of opening the email (∼30% for both) and of enrolling (0.1% for altruistic; 0.7% for access to personal health information; p < 0.01 for altruistic vs. control and new technology) (Table 3, see Fig. 2).

3.3. Response to email body content versions

The effects of three different types of appeals in the body of the email invitation were tested in two batches targeting 8000 members (see Supplementary Material). All emails in this test used the same personalized control subject line identified as most effective in Round 1 of the subject-line tests. As expected, rates of opening the email were similar across email types (41%–44%) (Table 3). The click-through rate was lowest for the control group (5%) and substantially higher but similar among the remaining three groups (∼13.5%). The enrollment rate was also lowest for the control group (1.0%) and highest for the new-technology appeal (2.1%), followed by altruism (1.3%) and access to personal health information (1.3%) (p = 0.01 for control vs. new technology).

3.4. Reminder emails

Reminder emails were targeted to 34,493 members not enrolling in response to their original email invitations over the course of the recruitment period. With single reminders, the emails had higher rates of opening (∼50% for Rounds 1 and 2) and click-throughs (∼10% for Round 2), but similar rates of enrollment compared with the original emails (1.0% for both rounds) (Table 3). A later, multiple-reminder campaign involving three email reminders 2 weeks apart resulted in a significant increase in enrollment rate overall (n = 945; 4.5%) (see Fig. 2), with the first email resulting in a 2.5% enrollment rate, decreasing to 1.6% and 1.2% in response to the second and third emails of the campaign, respectively.

3.5. Regular mail campaign

Of the 50,000 members designated to receive a study invitation by direct mail, a total of 379 enrolled, representing an enrollment rate of 0.8%.

3.6. Optimized campaign

Of the 8053 initially targeted individuals, 7535 were actually included due to mailing preferences. Over the 3-week campaign, the rate of opening was 52% for the first email, decreasing to 30% for the last email. The click-through rate for the first email was 10%, decreasing to 3% for the last email. Overall, 9.4% of members who received at least the first email of the campaign enrolled in the study (705 of 7535; see Fig. 2).

3.7. Website

The most significant change to the website was the removal of a requirement to enable cookies, which could have acted as a barrier to signing the ICF. Prior to this change, 66.6% of members reaching the ICF page signed it; after the cookies were removed, the rate rose to 72.1%. Consistent with this, of the 340 members who had previously reached the signature page but had not signed, 29 (8.5%) enrolled after being contacted by email and given a chance to sign the ICF via a direct link.

4. Discussion

Remote clinical-trial recruitment can be greatly influenced by the content and design of the campaign. Through iterative testing and refining of the mSToPS recruitment campaign, we enrolled 2659 participants in 10 months, at rates that increased dramatically over the course of the recruitment period by varying the content and cadence of email invitations.

The response to the initial mSToPS recruitment outreach, which consisted of a single long-form email with references to all the appeals of the program, was quite low (0.8%). Similar enrollment rates resulted from the direct-mail campaign. Testing variations in content, design, frequency, and cadence provided insights that led to dramatic improvements in enrollment rates. The optimized, multifaceted campaign (three emails and two direct mailers over 3 weeks) resulted in the highest level of response (9.4% enrollment rate), a ∼12-fold increase. This improvement likely reflects the fact that the campaign combined all lessons from prior testing and delivered them through multiple contacts. Given that substantial numbers of participants continued to enroll at the fourth and fifth points of contact, digital clinical enrollment clearly should not be a one-time communication effort.
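The fold increase cited above can be checked from the reported counts. The short worked calculation below uses the enrollment counts given for the original email (Table 3) and for the optimized campaign; the symbols $r_{\text{base}}$ and $r_{\text{opt}}$ are introduced here only for illustration.

```latex
r_{\text{base}} = \frac{100}{12{,}754} \approx 0.8\%, \qquad
r_{\text{opt}} = \frac{705}{7535} \approx 9.4\%, \qquad
\frac{r_{\text{opt}}}{r_{\text{base}}} \approx \frac{9.4}{0.8} \approx 12
```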

Different subject lines influenced the rates at which the emails were opened. Opening rates overall averaged 40%, with lower opening rates associated with subject lines appealing to altruism, access to personal health information, and social proof (30%–35%) and higher rates associated with personalized subject lines using the member's name and those appealing to access to new technology (up to 46%). This finding is notable because altruism has often been cited as a primary motivator for study involvement when participants are asked [9], yet here it appeared to be the least motivating appeal. Also surprising was the low response to the prospect of discovering personal health information (detection of AF), which, in this trial, could lead to preventive treatment and possibly lower risks of stroke and mortality.

One of the most significant improvements came from simplifying our email message. Click-through rates were originally very low (around 4%) with the long-form initial email, but rates increased to 10%–14% with changes to the email body that included a short, specific appeal and a large “Learn More” button. Enrollment rates paralleled the click-through rates, with the highest rates associated with email-body messages that highlighted the new technology in use. Reducing technical barriers in the online consent process (i.e., removing the need to enable cookies) also led to increases in enrollment. Reminder messages likewise proved to be effective, with similar rates of individuals continuing to enroll in response to the third email compared with the first (∼1% for both).

Recent years have seen increasing interest in remote recruitment, enrollment, and participation capabilities for clinical trials, as enabling technology continues to become more widespread. Such capabilities can take the form of telemedicine, where researchers connect remotely but in real time with prospective participants, or manifest in online or mail-based strategies. One recent randomized trial has shown that telemedicine is not inferior to a face-to-face informed consent process, in terms of patient-reported understanding [10]. And in a recent worldwide online survey (N = 12,427), 60% of respondents reported the use of some form of technology in their clinical studies, most commonly taking the form of text messaging (18%), use of a tablet for informed consent (17%), use of a smartphone app (10%), and use of wearable devices (8%) [11]. Given that 23% of the respondents in this survey also listed “location of the study” as one of the least-liked aspects of research participation, the use of remote technologies can offer convenience and flexibility to an expanding pool of candidate subjects.

Our findings from the mSToPS study show the feasibility of remote clinical-trial recruitment. In addition, an iterative, dynamic approach to recruitment that isolates individual motivators can help researchers understand which aspects of their study appeal most to prospective participants. Designing content and imagery around the most effective appeals can then lead to increased participation.

Aspects that may limit the applicability of these results to other populations include participant eligibility within a cohort of privately insured individuals and Medicare recipients. Groups with other forms of coverage or no health insurance at all may differ in substantial ways from our participants. In addition, no monetary incentives were provided to our participants. Such incentives may affect enrollment rates in remote research projects such as mSToPS. Finally, persons who lack personal Internet access via computer or mobile technology would need to find another method for getting online in order to participate in a web-based trial, such as accessing the Internet at a public library. The extra effort required in this case would likely result in reduced enrollment.

We have only begun to understand how to communicate with potential participants electronically, both before and during trials. The use of digital outreach via email, text, and social media makes it increasingly easy to test-and-iterate in recruitment campaigns, and our findings highlight the opportunities for researchers to create intentionally dynamic engagement models from the recruitment process through the end of the trial. The digital format for outreach, recruitment, and enrollment also enables researchers to capture metrics and measure effectiveness, tracking candidates at every step of the process (receipt, opening email, etc.) through to enrollment. Additional research should systematically explore the best approaches for various trial designs and patient populations.

Targeted remote outreach, enrollment, and management of a nationwide clinical trial are feasible, although more work is needed to learn how to best recruit and retain candidate research participants. The systematic approach used in this study allowed the research team to isolate and test messages and work towards optimizing a digital recruitment campaign.

Author contribution

KB-M, AME, JW, SE, RRM, LA, GSE, DT, JMF, CTC, TCS, EF, EJT, SRS contributed to the conception and design of the study.

KB-M, SE, LA were responsible for development of the emailed materials.

KB-M, AME, JW, SE, RRM, SRS created, analyzed, and interpreted the dataset.

KB-M, AME, JW, SRS were major contributors to preparation of the manuscript.

GSE, DT, JMF, CTC, TCS, EF, EJT made critical revisions of the manuscript.

KB-M, AME, JW, SE, RRM, LA, GSE, DT, JMF, CTC, TCS, EF, EJT, SRS read and approved the final manuscript.

Competing interests

KB-M, AME, JW, SE, RRM, LA, and GSE declare that they have no competing interests.

DT, JMF, CTC, TCS, and EF are employees of Janssen Pharmaceuticals, Inc. and are stockholders of its parent company, Johnson & Johnson.

EJT is supported by the National Institutes of Health/National Center for Advancing Translational Sciences grant UL1TR001114 and a grant from the Qualcomm Foundation, has previously served on the board of directors of Sotera Wireless, Inc. and Volcano Corp.; serves on the board of directors of Dexcom, Inc.; and is the editor-in-chief of Medscape (WebMD).

SRS is supported by the NIH/NCATS grant UL1TR001114 and a grant from the Qualcomm Foundation; serves as the medical advisor for Agile Edge Technologies, Airstrip Technologies, BridgeCrest Medical, DynoSense, Electrozyme, FocusMotion and physIQ; and serves on the board of directors of Vantage mHealthcare, Inc.

Funding source

The sponsor of the mSToPS trial is Scripps Research Translational Institute, supported by a grant from Janssen Scientific Affairs, LLC, Titusville, NJ, and is being carried out in partnership with Aetna, Inc., New York, New York and Janssen Scientific Affairs, LLC. Partial support was also provided through NIH/NCATS grant UL1TR001114 and a grant from the Qualcomm Foundation. The authors are solely responsible for the design and conduct of this trial, all data analyses, and the final contents and submission of this manuscript.

Acknowledgments

The authors thank Patricia A. French of Left Lane Communications for assistance with writing and editing this manuscript.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.conctc.2019.100318.


References

- 1. Tice D.G., Carroll K.A., Bhatt K.H., Belknap S.M., Mai D., Gipson H.J., West D.P. Characteristics and causes for non-accrued clinical research (NACR) at an academic medical institution. J. Clin. Med. Res. 2013;5:185–193. doi: 10.4021/jocmr1320w.

- 2. Steinhubl S.R., McGovern P., Dylan J., Topol E.J. The digitised clinical trial. Lancet. 2017;390:21–35. doi: 10.1016/S0140-6736(17)32741-1.

- 3. VanEpps E.M., Volpp K.G., Halpern S.D. A nudge toward participation: improving clinical trial enrollment with behavioral economics. Sci. Transl. Med. 2016;8:348fs13. doi: 10.1126/scitranslmed.aaf0946.

- 4. Steinhubl S.R., Mehta R.R., Ebner G.S., Ballesteros M.M., Waalen J., Steinberg G., Van Crocker P., Jr., Felicione E., Carter C.T., Edmonds S., Honcz J.P., Miralles G.D., Talantov D., Sarich T.C., Topol E.J. Rationale and design of a home-based trial using wearable sensors to detect asymptomatic atrial fibrillation in a targeted population: the mHealth Screening To Prevent Strokes (mSToPS) trial. Am. Heart J. 2016;175:77–85. doi: 10.1016/j.ahj.2016.02.011.

- 5. Steinhubl S.R., Waalen J., Edwards A.M., Ariniello L.M., Mehta R.R., Ebner G.S., Carter C., Baca-Motes K., Felicione E., Sarich T., Topol E.J. Effect of a home-based wearable continuous ECG monitoring patch on detection of undiagnosed atrial fibrillation: the mSToPS randomized clinical trial. JAMA. 2018;320:146–155. doi: 10.1001/jama.2018.8102.

- 6. Wolf P.A., Abbott R.D., Kannel W.B. Atrial fibrillation: a major contributor to stroke in the elderly: the Framingham Study. Arch. Intern. Med. 1987;147:1561–1564.

- 7. Caldwell J.C., Contractor H., Petkar S., Ali R., Clarke B., Garratt C.J., Neyses L., Mamas M.A. Atrial fibrillation is under-recognized in chronic heart failure: insights from a heart failure cohort treated with cardiac resynchronization therapy. Europace. 2009;11:1295–1300. doi: 10.1093/europace/eup201.

- 8. American College of Cardiology/American Heart Association Task Force on Practice Guidelines. Management of patients with atrial fibrillation (compilation of 2006 ACCF/AHA/ESC and 2011 ACCF/AHA/HRS recommendations). J. Am. Coll. Cardiol. 2013;1:1935–1944. doi: 10.1016/j.jacc.2013.02.001.

- 9. Sugarman J., Kass N.E., Goodman S.N., Perentesis P., Fernandes P., Faden R.R. What patients say about medical research. IRB. 1998;20:1–7.

- 10. Bobb M.R., Van Heukelom P.G., Faine B.A., Ahmed A., Messerly J.T., Bell G., Harland K.K., Simon C., Mohr N.M. Telemedicine provides noninferior research informed consent for remote study enrollment: a randomized controlled trial. Acad. Emerg. Med. 2016;7:759–765. doi: 10.1111/acem.12966.

- 11. Center for Information and Study on Clinical Research Participation (CISCRP) 2017. 2017 Perceptions & insights study: report on the participation experience. https://www.ciscrp.org/services/research-services/public-and-patient-perceptions-insights-study/#
