Abstract

Student-centered pedagogies increase learning and retention. Quantifying change in both student learning gains and student perception of their experience allows faculty to evaluate curricular transformation more fully. Student buy-in, particularly how much students value and enjoy the active learning process, has been positively associated with engagement in active learning and increased learning gains. We hypothesize that as the frequency of students who have successfully completed the course increases in the student population, current students may be more likely to buy in to the curriculum because this common experience could create a sense of community. We measured learning gains and attitudes during the transformation of an introductory biology course at a small liberal arts college using our novel curriculum, Integrating Biology and Inquiry Skills (IBIS). Students perceived substantial learning gains in response to this curriculum, and concept assessments confirmed these gains. Buy-in increased with each successive cohort, as demonstrated by the results of multiple assessment instruments, and students increasingly attributed their learning to specific components of the curriculum. These findings support our hypothesis and should encourage the adoption of curricular transformation using IBIS or other student-centered approaches.

Introduction

Initiatives such as the Vision and Change in Undergraduate Biology Education [1] and the Royal Society’s State of the Nation reports [2] call for change in undergraduate science education and provide recommendations based on the educational literature, especially adoption of active learning pedagogies (summarized in [3]). In response to these initiatives, we developed a new introductory biology curriculum called Integrating Biology and Inquiry Skills (IBIS). A primary design feature of IBIS is guided, student-centered inquiry [4]. The curriculum promotes critical thinking by using inquiry activities in the lecture session, paired with open-ended, inquiry-based laboratory investigations. Lecture content is introduced to students via scenarios and case studies instead of a sequential march through the textbook. Substantial time is devoted to application of knowledge and group discussions of student-generated questions. Both formative and summative assessments are aligned with learning objectives and prioritize critical thinking and application over recall and recognition. Course concepts are further emphasized in the paired laboratory curriculum, where student teams work under the guidance of faculty and experienced upper-level students (undergraduate peer mentors) to develop and carry out original experiments over multiple two-week periods. These experiments culminate in a lab report that models the format and style of a scientific article.

Active learning techniques achieve higher learning gains than traditional lecture, particularly for underrepresented minorities in STEM (Science, Technology, Engineering, and Math) [3]. However, students must be engaged as active learners to maximize the impact of active learning techniques [5]. Student buy-in, i.e., how much students value and enjoy the active learning process, can be positively associated with engagement in active learning [5]. Thus, a greater understanding of student buy-in could aid curricular transformation. For example, a barrier to student buy-in may be the novelty of active learning [6]. According to Expectancy Violation Theory, student resistance can occur when class requirements conflict with the expectations students have formed from their previous experiences [6]. If students expect to be passive recipients of information, then any active learning environment will violate their expectations and might cause dissatisfaction. Buy-in can increase when students attribute the magnitude of their learning gains to specific aspects of the active learning environment [5]. We hypothesize that although a new curriculum may not prompt many students in the first cohort to recognize that active learning experiences are related to perceived learning gains, each successive cohort should increasingly attribute their learning gains to active learning. As the frequency of students who have successfully completed the course increases in the student population, current students may be more likely to buy in to the curriculum because this common experience could create a sense of community.

To detect if student buy-in to a new curriculum changes over time, we documented student opinion, self-efficacy, and learning gains in multiple consecutive years while employing the IBIS curriculum. Thus, we not only measured learning gains to evaluate curricular effectiveness, but also examined the role of student opinion and affect (student identity, self-efficacy and sense of community; [7]) with multiple assessment tools to identify student perceptions of new classroom experiences. In each year of the IBIS program, students demonstrated significant learning gains in course outcomes by the conclusion of the course. Further, over the four years of the program, students increasingly recognized that the course structure and pedagogy contributed to their learning gains, and the interest of STEM majors in learning more about biology was consistently maintained. We reflect on these data to make recommendations for institutions looking to promote curricular reform, including the adoption of multiple assessments targeting different facets of the classroom experience to gauge the efficacy of curricular reform in terms of student performance and buy-in.

Materials and methods

We evaluated the IBIS program at a small, liberal arts college in the US over a four-year period (2013–2016). We assessed student learning gains and students’ evaluations of their own performance. Student learning gains were measured with a pre- and post-course concept assessment, while attitudinal changes and perceptions of learning were assessed with a post-course Student Assessment of Learning Gains (SALG) survey.

We examined our longitudinal surveys of student attitudes toward the IBIS curriculum and biology as a subject of study. We assessed whether learning gains and attitudes changed over time as a signal that the IBIS curriculum was assimilating into the educational experience and culture at this institution. Additionally, we compared student attitudes between STEM and non-STEM students.

Over the life of the program, 724 students were enrolled in the IBIS curriculum, which was taught by 13 faculty members, six of whom taught in the IBIS curriculum in multiple years. The number of students enrolled in the program in a single year ranged from 136 to 245, and the number of faculty members instructing the course ranged from 4 to 7 each year (Table 1). In addition to faculty members, the IBIS program also uses undergraduate students as peer mentors (PMs). PMs are students who have previously been successful in the course and are available to help students both in and out of normal class times. We offered pedagogical training sessions for faculty members and PMs who were involved in the IBIS program each year. In these training sessions, we emphasized the importance of explaining how different components of the course relate to student learning. Weekly meetings were also held to reflect on classroom experiences and discuss curricular decisions, such as content revisions, assessments, and implementation strategies.

Table 1. Faculty and student participation in the IBIS program.

| Year | Total Faculty (Percentage with Previous IBIS Experience) | Total Students (Student-to-Faculty Ratio in Lecture Sections)^a | STEM Majors / Non-majors |
|---|---|---|---|
| 2013 | 7 (71%) | 245 (30.6:1) | 40.7% / 59.3% |
| 2014 | 6 (67%) | 182 (30.3:1) | 50.4% / 49.6% |
| 2015 | 6 (67%) | 161 (26.8:1) | 61.1% / 38.9% |
| 2016 | 4 (75%) | 136 (27.2:1) | 78.1% / 21.9% |

^a Student-to-faculty ratio in lab sections ranged from 22.4:1 to 23.3:1.

Assessments

The Presbyterian College IRB approved all data collection (PC-201221). Participants were informed of the IBIS curriculum implementation in their course syllabus. During the first lab meeting of the semester, students were provided an informed consent document, which they signed in order to give the PIs permission to collect and store relevant anonymized data from the study. Data were collected from several consecutive classes of incoming first-year college students. Beginning in 2013, we evaluated student performance and student assessment of learning gains to assess course effectiveness and to guide curricular revisions.

Assessment concepts for the pre- and post-course concept survey were selected based on several criteria: feedback from our faculty instructors, similarity to questions and topics students might encounter on summative exams, and alignment with common topics found in introductory biology courses [8] and the Biology Concept Inventory (www.bioliteracy.colorado.edu). The survey also included attitudinal questions related to course perceptions, interests, mindset, and self-efficacy. Attitudinal items on the pre- and post-course survey used a Likert scale so that student attitudes could be evaluated quantitatively. We unambiguously matched pre- and post-course responses for 542 students (74.9%); the remaining responses could not be matched because either the pre- or the post-course instrument was missing. Our pre- and post-course survey is available as a supplemental file (S1 File).
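The matching step described above can be sketched as follows. This is a minimal illustration in Python, not the procedure used in the study (whose analyses were run in R); the identifiers, scores, and the function name `match_pre_post` are hypothetical, and the match rate here is computed against every identifier seen on either instrument, which is an assumption about the denominator.

```python
def match_pre_post(pre, post):
    """Pair pre- and post-course records that share a student identifier."""
    shared = sorted(set(pre) & set(post))
    return {sid: (pre[sid], post[sid]) for sid in shared}


# Hypothetical records (not study data): identifier -> items answered correctly.
pre_scores = {"s01": 8, "s02": 11, "s03": 6, "s05": 9}
post_scores = {"s01": 14, "s03": 12, "s04": 15, "s05": 13}

matched = match_pre_post(pre_scores, post_scores)

# Students missing either instrument (s02, s04) are left unmatched.
all_ids = set(pre_scores) | set(post_scores)
match_rate = 100 * len(matched) / len(all_ids)
print(sorted(matched), f"{match_rate:.1f}% matched")
```

Applied to the counts reported above, the same arithmetic gives 100 × 542 / 724, which rounds to the stated 74.9%.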

To further examine the self-efficacy of student learning, we also developed questions for the SALG instrument (www.salgsite.org, Instruments #63255, #67010, #67900, #71380, #72672, #76196). The SALG instruments had questions structured similarly to the attitudinal questions on the concept survey. The first category of questions provided descriptions of course content and competencies and asked students to identify the level of learning gain they perceived as a result of the course. The second category asked students to report how helpful they found different elements of the course (course structure, components, and other resources). Each category also included open-ended questions in which students could expand on their responses to the ranking questions. To evaluate student perceptions of the course, we focused on a subset of questions from each of the two categories (S2 File).

Data analysis

As recommended by [9], pre- and post-course performance on the concept survey was compared in a logistic regression using a generalized linear mixed-effects model (binomial errors) with test (pre- vs. post-course) as a fixed effect and student identifier as a random effect. The logistic regression was conducted using the lme4 package [10] in R [11]. Tests of fixed effects were obtained using the car package [12]. Post-hoc multiple comparisons were conducted using the emmeans package [13]. Normalized learning gains [14] were calculated to visualize the data and to compare our students’ performance gains with those of other studies. In addition, we constructed network diagrams to visualize the migration of individual attitudes over the same period (bipartite package in R [15]).
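For readers unfamiliar with normalized learning gains, the quantity is commonly defined as the improvement actually achieved divided by the improvement that was possible, g = (post − pre) / (100 − pre), with scores expressed as percent correct. The sketch below, in Python for illustration only, assumes this standard definition; whether gains in the study were computed per student or from class averages is not specified here, and the scores shown are hypothetical.

```python
def normalized_gain(pre_pct, post_pct):
    """Normalized gain: fraction of the possible improvement that was achieved."""
    if not 0 <= pre_pct <= 100 or not 0 <= post_pct <= 100:
        raise ValueError("scores must be percentages between 0 and 100")
    if pre_pct == 100:
        return None  # no room to improve, so the gain is undefined
    return (post_pct - pre_pct) / (100 - pre_pct)


# Hypothetical scores (percent correct) on one concept; not study data.
g = normalized_gain(40.0, 70.0)
print(f"normalized gain = {g:.0%}")
```

Because the denominator shrinks as the pre-course score rises, the measure allows comparison between groups that start from different baselines, which is why it is useful for comparing gains across studies.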

For each question on the SALG, we used chi-square tests to compare across years the frequencies of student responses for the different ranks of how much gain was attributed to a particular learning outcome or how much help was ascribed to a specific component of the curriculum. We used sentiment analysis methods on the open-ended SALG questions to quantify positive vs. negative feelings toward the different aspects of the curriculum discussed above. We used the R packages tm [16, 17] and RWeka [18] to format the text for analysis and extract trigrams (three consecutive words from a response; [19]). We analyzed trigrams, rather than shorter or longer sets of consecutive words, because trigrams are the smallest set of consecutive words to which sentiments could be assigned (e.g., [19]). For each detected trigram, we assigned a sentiment of positive, negative, or unknown. Positive vs. negative sentiment frequency for each category was compared across years using chi-square tests of association with Bonferroni corrections for multiple comparisons to determine whether student attitudes toward a particular aspect of the program differed between years. All text analyses and subsequent statistical tests were conducted in R [11].
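The trigram extraction and the between-year comparison can be illustrated with a short sketch. It is written in Python rather than with the R packages named above, so it is not the study's code. The tokenizing rule, the function names, and the trigram counts are all assumptions made for illustration. The test shown is a Pearson chi-square test on a 2 × 2 table (positive vs. negative, year A vs. year B) without continuity correction; for one degree of freedom its p-value equals erfc(√(χ²/2)).

```python
import math
import re
from itertools import combinations


def trigrams(text):
    """Return every run of three consecutive words in a response."""
    words = re.findall(r"[a-z']+", text.lower())
    return [" ".join(words[i:i + 3]) for i in range(len(words) - 2)]


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test of association for the table [[a, b], [c, d]].

    No continuity correction; assumes every row and column total is nonzero.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = math.erfc(math.sqrt(chi2 / 2))  # exact for 1 degree of freedom
    return chi2, p_value


print(trigrams("Clicker questions helped apply concepts"))

# Hypothetical (positive, negative) trigram counts per year; not study data.
counts = {2013: (60, 40), 2014: (62, 38), 2015: (80, 20), 2016: (82, 18)}
pairs = list(combinations(sorted(counts), 2))
alpha = 0.05 / len(pairs)  # Bonferroni-adjusted threshold for six comparisons

for year_a, year_b in pairs:
    chi2, p = chi_square_2x2(*counts[year_a], *counts[year_b])
    verdict = "differs" if p < alpha else "no detectable difference"
    print(year_a, year_b, f"chi2={chi2:.2f}", f"p={p:.4f}", verdict)
```

Sentiment assignment itself is not shown, because the text does not describe how each trigram was labeled; the sketch starts from counts of trigrams already labeled positive or negative.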

Results

Student performance indicators

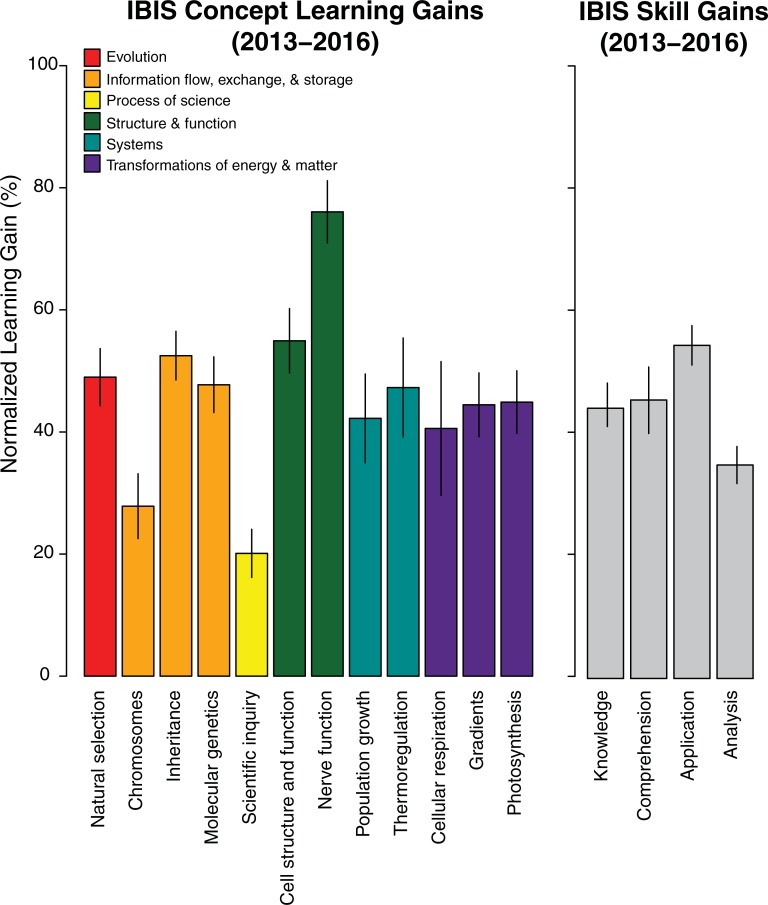

Across all four years, overall course grades did not differ (median grades ranged from 76.2% to 77.1%, p = 0.101). However, in each year, students were more likely to answer concept inventory questions correctly after the course than before it (p < 0.05 for all years). For concepts, the normalized learning gains were 19–75% each year (Fig 1). For critical thinking skills (knowledge, comprehension, application, analysis [20, 21]), the normalized learning gains were 30–50% for all years (Fig 1).

Fig 1. Normalized student learning gains.

Normalized learning gains across concepts (a) and skills (b) were above 19%, compiled across all years of the study.

Student attitudes

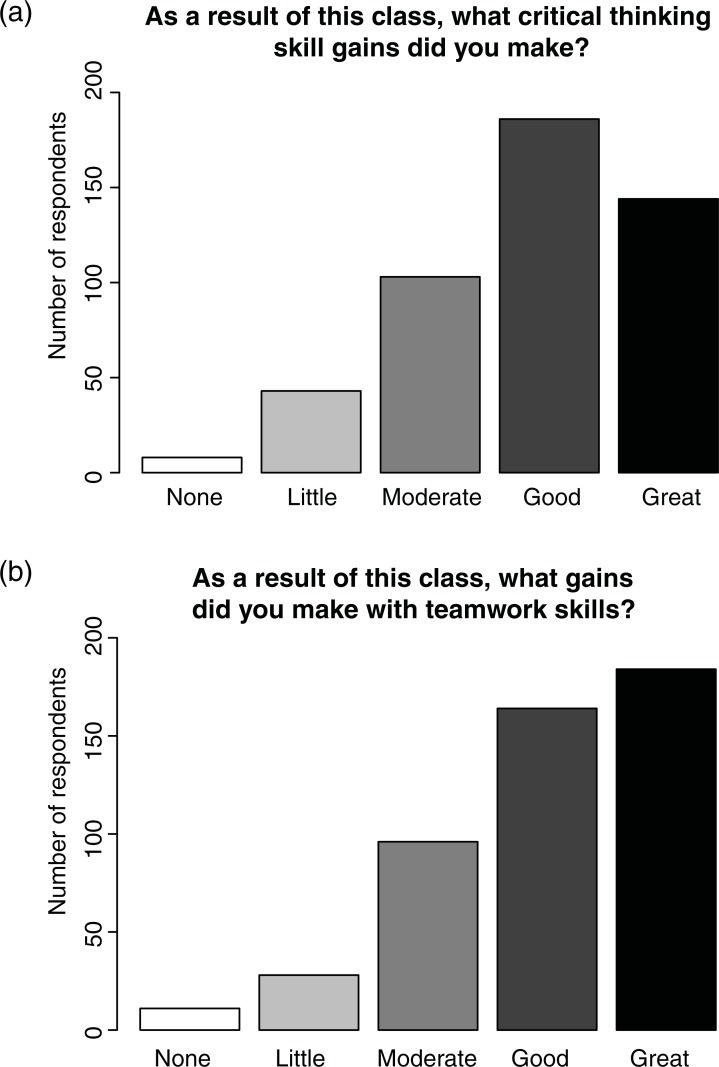

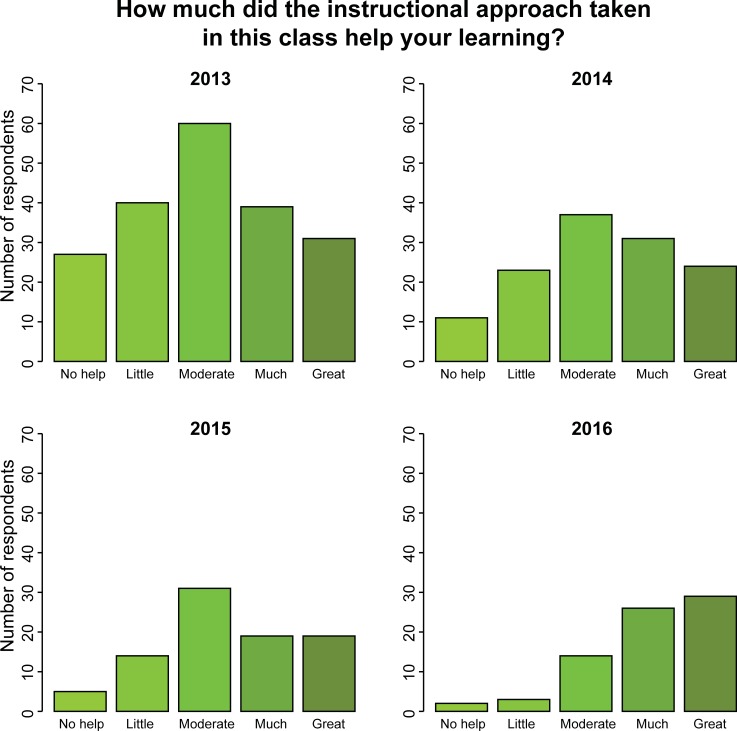

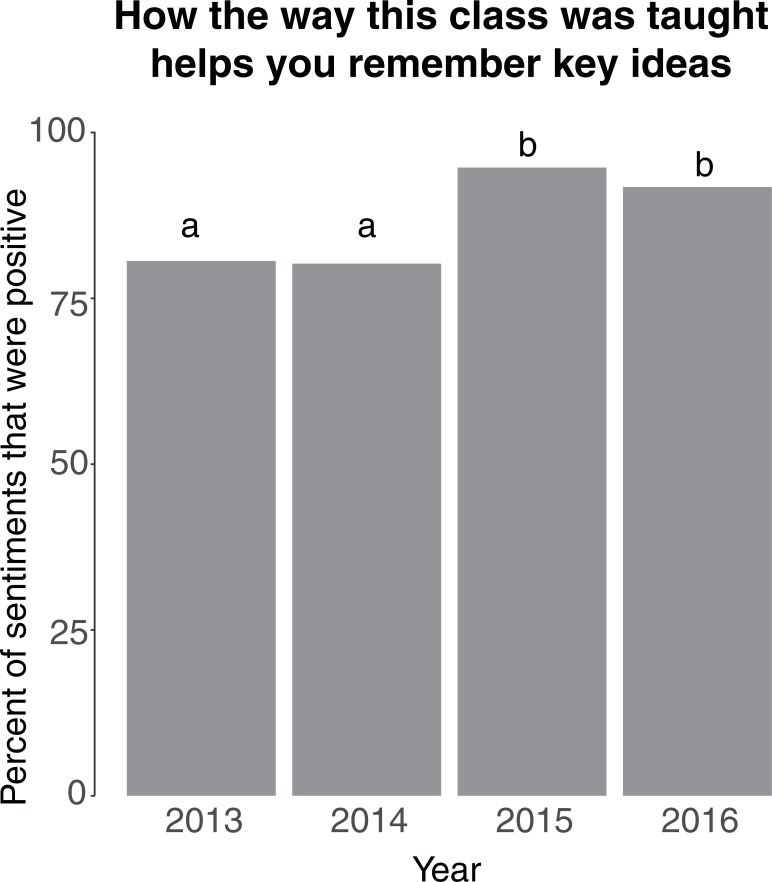

An overwhelming majority of students perceived their gains as moderate or higher in teamwork and critical thinking skills as a result of the course (compiled across all years; Fig 2). Comparing across years, many aspects of the course, such as the instructional approach, types of formative assessments, and peer mentor roles, were increasingly seen as important for student attitudes toward biology as a discipline and for their learning gains (Fig 3, S2 File). When students were asked to comment on how the course helped them remember key ideas, we found relatively more positive than negative trigrams within each year, and more positive trigrams in 2015 and 2016 than in the first two years of the program (p < 0.05; Fig 4). “Clicker questions” were most frequently identified as helpful, consistent with the findings of [22]. The other most frequent positive trigrams were “helped apply concepts,” “helped retain information,” and “help better understand,” which all suggest that students connected curricular philosophy to their learning gains. Surprisingly, we did not find any difference in how STEM vs. non-STEM majors viewed active learning pedagogies (S2 File).

Fig 2. Student perception of learning gains.

An overwhelming majority of students, compiled across all years of the study (2013–2016), ranked their gains as moderate or higher in teamwork (a) and critical thinking skills (b) as a result of the course.

Fig 3. Change in student buy-in over time.

Student responses shifted over time, with low rankings (No help, Little) becoming less frequent relative to high rankings (Much, Great). Over four years, students increasingly reported the instructional methods as responsible for large gains in their learning (p < 0.0001).

Fig 4. Student sentiment analysis.

Regarding pedagogy, positive sentiments were more abundant than negative sentiments in all years, and the proportion of positive sentiments was higher in the latter two years (p < 0.0001). Sentiments were assigned to trigrams (three consecutive words) that were extracted from a free-response survey question.

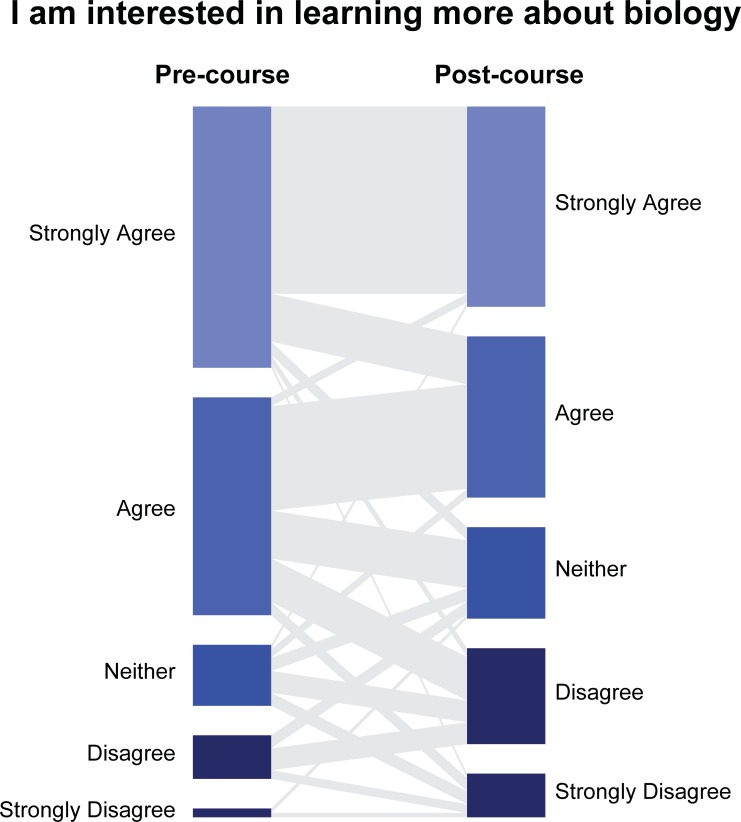

Within each year, some students became less interested in learning about biology after taking the course (p < 0.05 for all years). For example, in 2014, 10% of students either Disagreed or Strongly Disagreed with the statement “I am interested in learning more about biology” prior to taking the course. On the post-course survey, this increased to 24.4% (Fig 5). From 2013 to 2015, most of the disinterest came from students who did not intend to major in STEM fields. Importantly, very few STEM majors reported a reduction in their interest in biology (e.g., in 2014, from 0% Disagree to 3.8% Disagree; no STEM majors Strongly Disagreed). Note that within each year we found relatively more positive (65–81%) than negative (19–35%) trigrams in response to “How this class changed your attitudes toward biology” (p < 0.0001). Thus, it appears that although a minority of students became increasingly dissatisfied, the majority were satisfied with the IBIS experience.

Fig 5. Migration of student attitudes toward biology.

Most students maintained a positive view of the subject, though the percentage of students who were uninterested did increase. Students who became less interested were primarily those who were not planning to major in STEM fields.

Discussion

We designed and deployed a student-centered introductory biology curriculum at a small liberal arts college in the US that resulted in both substantial learning gains across all years and increased student buy-in over time. Students participating in the IBIS curriculum demonstrated learning gains of 19–75% (content) and 30–50% (skills), which are comparable to, or exceed, other reported learning gains from student-centered environments (Fig 1). Gains of this size help to explain the extensively documented increase in student performance under active learning environments compared to traditional lecturing [3]. For example, [23] measured normalized learning gains between 23% (energetics) and 50% (biological membranes), and [24] reported normalized learning gains of 7–16% (cellular and molecular biology). Normalized learning gains of 48% (cellular respiration) were reported when using a student-centered case study approach [25].

Data collected in parallel on students’ attitudes suggest that students in the IBIS curriculum also assimilate to and recognize the benefits of this instructional curriculum over time (Figs 3 and 4). Thus, it appears that an increased frequency of students who have successfully completed the course in the student population promotes buy-in among current students. In addition, we speculate that the thorough use of formative assessments and a well-structured peer mentor program, both major components of the IBIS curriculum, may help students identify and correct deficits in their understanding of course material, which are key components of student metacognition [5]. In addition to helping students during normal class meeting times, PMs created and employed oral quizzes to help students achieve the intended learning outcomes for the course. In other studies, students have indicated that alignment between learning activities and assessments, as well as learning support structures (such as PMs), are important elements in promoting learning [26], and it appears that these elements in the IBIS program also help improve student buy-in (S2 File).

Alternatively, the increase in buy-in to course pedagogy could be associated with the increasing percentage of students who aspired to be STEM majors (Table 1). This possibility suggests that aspiring STEM majors could be more receptive to our course pedagogy than non-majors, and that student buy-in for majors-only courses may be less difficult to attain. However, STEM and non-STEM students did not differ in how much they valued the IBIS instructional approach (S2 File). Instead, non-STEM students were more likely than STEM students to lose interest in biology (Fig 5). Thus, the change in attitudes of non-STEM students could be attributed to a genuine lack of interest in the subject matter and not the way the course was taught.

Students’ perception of and commitment to an active learning environment are thought to be highly correlated with their engagement and success [27]. Although we expected that increased buy-in would be accompanied by increased learning gains [5], we found that learning gains did not change significantly over the course of the study despite improved buy-in. It is possible that the relationship between buy-in and learning gains is more complex than shown previously [5, 27]. Our study underscores the need to evaluate not only learning gains, but also how students perceive their learning environment when assessing curricular transformation.

Emphasizing student buy-in to curricular and pedagogical changes is important because buy-in is crucial for maintaining classroom interactions and may improve student performance [5, 22, 28]. Instructors should address student expectations of a passive instruction style early in the semester by sharing the motivation and data behind active-learning instruction. This introduction may help students connect these new experiences to positive impacts on their learning and increase student buy-in [5, 29]. These efforts to promote student buy-in to active learning experiences not only help the perception of an individual faculty member’s courses, but also could further reinforce changes in the institutional culture.

Conclusion

When transforming undergraduate curricula to align with recommended best practices, tracking learning gains is essential; however, student attitudes must also be considered because student buy-in plays a role in creating learning environments that are effective. Thus, instructors and administrators should examine the instruments and methods they use to assess student opinion. Traditional end-of-course evaluations may not adequately assess the student-centered nature of the course or accurately correlate with student learning gains in a course [30], potentially increasing negative perceptions of the curricular changes among students. Our study thus underscores the need for an array of instruments that adequately assess the learning environment’s effect on student performance and perceptions during curricular transformation.

Supporting information

S1 File. (PDF)

S2 File. (PDF)

Acknowledgments

We would like to thank faculty participants in the IBIS program, especially Ronald Zimmerman and John Inman, and the IBIS Peer Mentors for their contributions to the IBIS program. Additionally, we recognize the work of David McGlashon and Renee Weinstein on the text analysis of the SALG results.

Data Availability

The data used in this study were collected from human subjects. Because of their sensitive nature, the data are available only by request from SUNY Geneseo. Data requests can be sent to: ibis@geneseo.edu.

Funding Statement

This work was supported by the National Science Foundation (www.nsf.gov): DUE-1245706 to TJS, SY, and TRN. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. AAAS. Vision and change in undergraduate biology education: a call to action: a summary of recommendations made at a national conference organized by the American Association for the Advancement of Science, July 15–17, 2009. Washington, DC. 2011.

- 2. The Royal Society. Preparing for the transfer from school and college science and mathematics education to UK STEM higher education. London: The Royal Society; 2011.

- 3. Freeman S, Eddy SL, McDonough M, Smith MK, Okoroafor N, Jordt H, et al. Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences. 2014;111(23):8410–5.

- 4. National Research Council. Inquiry and the national science education standards: A guide for teaching and learning. National Academies Press; 2000.

- 5. Cavanagh AJ, Aragón OR, Chen X, Couch BA, Durham MF, Bobrownicki A, et al. Student buy-in to active learning in a college science course. CBE-Life Sciences Education. 2016;15(4):ar76.

- 6. Brown TL, Brazeal KR, Couch BA. First-Year and Non-First-Year Student Expectations Regarding In-Class and Out-of-Class Learning Activities in Introductory Biology. Journal of Microbiology & Biology Education. 2017;18(1).

- 7. Trujillo G, Tanner KD. Considering the role of affect in learning: Monitoring students' self-efficacy, sense of belonging, and science identity. CBE-Life Sciences Education. 2014;13(1):6–15. doi:10.1187/cbe.13-12-0241

- 8. Gregory E, Ellis JP, Orenstein AN. A proposal for a common minimal topic set in introductory biology courses for majors. The American Biology Teacher. 2011;73(1):16–21.

- 9. Theobald R, Freeman S. Is it the intervention or the students? Using linear regression to control for student characteristics in undergraduate STEM education research. CBE-Life Sciences Education. 2014;13(1):41–8. doi:10.1187/cbe-13-07-0136

- 10. Bates D, Mächler M, Bolker B, Walker S. Fitting linear mixed-effects models using lme4. arXiv preprint arXiv:1406.5823. 2014.

- 11. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2018.

- 12. Fox J, Weisberg S. Multivariate linear models in R. An R Companion to Applied Regression. Los Angeles: Thousand Oaks; 2011.

- 13. Lenth R. Emmeans: Estimated marginal means, aka least-squares means. R Package Version 123. 2018.

- 14. Hake RR, editor. Relationship of individual student normalized learning gains in mechanics with gender, high-school physics, and pretest scores on mathematics and spatial visualization. Physics education research conference; 2002.

- 15. Dormann CF, Gruber B, Fründ J. Introducing the bipartite package: analysing ecological networks. interaction. 2008;1:0.2413793.

- 16. Feinerer I, Hornik K, Meyer D. Text mining infrastructure in R. Journal of Statistical Software. 2008;25(5):1–54.

- 17. Feinerer I, Hornik K. tm: Text Mining Package. R package version 0.7-5. 2018.

- 18. Hornik K, Buchta C, Zeileis A. Open-source machine learning: R meets Weka. Computational Statistics. 2009;24(2):225–32. doi:10.1007/s00180-008-0119-7

- 19. Altrabsheh N, Cocea M, Fallahkhair S, editors. Sentiment analysis: towards a tool for analysing real-time students feedback. Tools with Artificial Intelligence (ICTAI), 2014 IEEE 26th International Conference on; 2014: IEEE.

- 20. Anderson LW, Krathwohl DR, Airasian PW, Cruikshank KA, Mayer RE, Pintrich PR, et al. A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives, abridged edition. White Plains, NY: Longman; 2001.

- 21. Bloom BS, Engelhart MD, Furst EJ, Hill WH, Krathwohl DR. Taxonomy of educational objectives: the classification of educational goals: handbook I: cognitive domain. New York, US: D. Mckay; 1956.

- 22. Brazeal KR, Couch BA. Student buy-in toward formative assessments: the influence of student factors and importance for course success. Journal of Microbiology & Biology Education. 2017;18(1).

- 23. Elliott ER, Reason RD, Coffman CR, Gangloff EJ, Raker JR, Powell-Coffman JA, et al. Improved student learning through a faculty learning community: how faculty collaboration transformed a large-enrollment course from lecture to student centered. CBE-Life Sciences Education. 2016;15(2):ar22.

- 24. Cleveland LM, Olimpo JT, DeChenne-Peters SE. Investigating the Relationship between Instructors’ Use of Active-Learning Strategies and Students’ Conceptual Understanding and Affective Changes in Introductory Biology: A Comparison of Two Active-Learning Environments. CBE-Life Sciences Education. 2017;16(2):ar19.

- 25. Rybarczyk BJ, Baines AT, McVey M, Thompson JT, Wilkins H. A case‐based approach increases student learning outcomes and comprehension of cellular respiration concepts. Biochemistry and Molecular Biology Education. 2007;35(3):181–6. doi:10.1002/bmb.40

- 26. Wyse SA, Soneral PA. “Is This Class Hard?” Defining and Analyzing Academic Rigor from a Learner’s Perspective. CBE-Life Sciences Education. 2018;17(4):ar59.

- 27. Cavanagh AJ, Chen X, Bathgate M, Frederick J, Hanauer DI, Graham MJ. Trust, Growth Mindset, and Student Commitment to Active Learning in a College Science Course. CBE-Life Sciences Education. 2018;17(1):ar10.

- 28. Seidel SB, Tanner KD. “What if students revolt?”—considering student resistance: origins, options, and opportunities for investigation. CBE-Life Sciences Education. 2013;12(4):586–95. doi:10.1187/cbe-13-09-0190

- 29. Balan P, Clark M, Restall G. Preparing students for flipped or team-based learning methods. Education + Training. 2015;57(6):639–57.

- 30. Uttl B, White CA, Gonzalez DW. Meta-analysis of faculty's teaching effectiveness: Student evaluation of teaching ratings and student learning are not related. Studies in Educational Evaluation. 2017;54:22–42.
