Abstract

Objective

A variety of bioengineering systems are being developed to restore tactile sensations in individuals who have lost somatosensory feedback because of spinal cord injury, stroke, or amputation. These systems typically detect tactile force with sensors placed on an insensate hand (or prosthetic hand in the case of amputees) and deliver touch information by electrically or mechanically stimulating sensate skin above the site of injury. Successful object manipulation, however, also requires proprioceptive feedback representing the configuration and movements of the hand and digits.

Approach

Therefore, we developed a simple system that simultaneously provides information about tactile grip force and hand aperture using current-amplitude-modulated electrotactile feedback. We evaluated the utility of this system by testing the ability of eight healthy human subjects to distinguish among 27 objects of varying sizes, weights, and compliances based entirely on electrotactile feedback. The feedback was modulated by grip-force and hand-aperture sensors placed on the hand of an experimenter (not visible to the subject) grasping and lifting the test objects. We also sought to determine the degree to which subjects could learn to use such feedback when tested over five consecutive sessions.

Main Results

The average percentage of correct identifications on day 1 (28.5 ± 8.2%) was well above chance (3.7%) and increased significantly with training to 49.2 ± 10.6% on day 5. Furthermore, this training transferred reasonably well to a set of novel objects.

Significance

These results suggest that simple, non-invasive methods can provide useful multisensory feedback that might prove beneficial in improving the control over prosthetic limbs.

1. Introduction

Recent years have marked major advances in the application of brain-machine interfaces (BMIs) to partially restore the ability of paralyzed humans to interact with their environment (Hochberg et al. 2012; Collinger et al. 2013; Aflalo et al. 2015; Bouton et al. 2016; Gilja et al. 2016; Ajiboye et al. 2017). One goal of such systems is to extract signals from regions of the cerebral cortex that represent desired or intended movements and to use those signals to control prosthetic devices. The formulation of cortical motor commands, however, is highly dependent on visual and somatosensory inputs. While complex voluntary movements can be performed in the absence of vision (e.g., highly skilled musicians often perform with their eyes closed), removal of somatosensory feedback via deafferentation dramatically and persistently impairs voluntary movements even in the presence of vision (Mott and Sherrington 1895; Sherrington 1931; Lassek 1953a; Twitchell 1954). This was vividly demonstrated in monkeys that had undergone complete section of the cervical dorsal roots. In those animals, the arm hung permanently and flaccidly at the side and was not even used to ward off threats such as pin-pricks (Twitchell 1954). Although the deficits associated with deafferentation were not always so severe (e.g., Taub et al. 1975), these results imply that somatosensory inputs play an important role in shaping and organizing neural activity in the motor cortex, even for the initiation of movements (i.e., prior to any feedback arising from the movement itself). As such, the ability of BMIs to decode intended movement trajectories from cortical signals may be hampered by the deficient or absent somatosensory feedback that accompanies many forms of paralysis.

It should be noted, however, that retention of even a modicum of sensory feedback in deafferentation studies largely prevents the development of overt impairments (Lassek 1953b; Twitchell 1954; Galambos et al. 1967). As such, the inclusion of even relatively simple forms of artificial somatosensory feedback may markedly improve the control of motor prosthetics. Indeed, recent studies have explored the utility of directly stimulating somatosensory cortex with implanted microelectrode arrays to deliver artificial feedback signals representing tactile stimuli (O'Doherty et al. 2011; Tabot et al. 2013; Klaes et al. 2014; Kim et al. 2015; Flesher et al. 2016) or hand-to-target vectors (Dadarlat et al. 2014). Similarly, intraneural stimulation of residual peripheral nerves in amputees using implanted microelectrodes has been used to provide tactile and proprioceptive feedback from a prosthetic hand (Riso 1999; Horch et al. 2011; Schiefer et al. 2016; Oddo et al. 2016).

While such invasive approaches show great promise, a number of challenges and limitations remain, including the necessity (and associated risks and costs) of surgery. A non-invasive means to provide artificial sensory feedback is to stimulate sensate skin using mechanical (e.g., Bach-y-Rita et al. 1969; Chatterjee et al. 2007; Shokur et al. 2016) or electrical (e.g., Prior and Lyman 1975; Szeto and Saunders 1982; Kaczmarek et al. 1991; Marcus and Fuglevand 2011; Jorgovanovic et al. 2014; Patel et al. 2016; Štrbac et al. 2016; Dosen et al. 2017) stimuli. Such feedback systems are typically designed to provide information about grip force via sensors placed on the digits of the prosthetic or insensate hand (see review by Antfolk et al. 2014). While grip force signals are important, other somatosensory information, including hand location, hand orientation, and the degree of hand opening, would also seem important to convey to the user of an upper-limb prosthetic.

One challenge associated with multiple channels of artificial feedback is the relatively poor ability of users to switch attention efficiently between the different signals and to extract the desired information from each (Szeto 1982; Szeto and Saunders 1982). However, previous studies of multichannel somatosensory feedback typically involved only a single experimental session (Szeto 1982; Witteveen et al. 2014; Patel et al. 2016; Štrbac et al. 2016; Dosen et al. 2017). As such, the ability of subjects to learn to use more than one channel of artificial somatosensory information has been addressed only to a limited extent.

Therefore, the purpose of this study was to determine the ability of healthy human subjects to learn to identify objects of varying sizes, weights, and compliances based entirely on electrotactile feedback from grip-force and hand-aperture sensors placed on the hand of an experimenter (not visible to the subject) grasping and lifting different test objects. Over the course of five experimental sessions, accuracy of object identification improved by about 80%. This suggests that multichannel somatosensory feedback can readily be learned and that such non-invasive methods could be used to improve control over upper limb prosthetics.

2. Methods and Materials

Eight subjects participated in this study (6 males and 2 females, ages 22–58). Each subject participated in 5 experimental sessions on 5 consecutive days. Each session lasted ∼1.5–2 h. The Human Investigation Committee at the University of Arizona approved the experimental procedures, and subjects gave their informed consent to participate.

2.1 Experimental Setup

The experimenter wore a glove fitted with position sensors (Liberty, Polhemus, Colchester, VT) placed on the thumb and the index finger (Fig. 1). In addition, a flexible force-sensitive resistor (9.5 mm diameter, Flexiforce A201 sensor, Tekscan, South Boston, MA) was attached to the glove over the distal, volar surface of the thumb. The force transducer was positioned at a location that ensured good contact with the objects during gripping. A small foam disk (9.5 mm diameter, 1.5 mm thick) was placed between the glove and the force sensor to maximize object contact with the force transducer and to minimize object contact with un-instrumented portions of the glove. Position and force data were recorded in real time (120 samples/s/channel, USB-6001, National Instruments, Austin, TX) and delivered to a MATLAB (MathWorks, Natick, MA) program for on-line processing. Hand aperture was calculated as the distance between the 3-D locations of the two position sensors. The force signal was taken to represent the grip force applied normal to the grasped objects.
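As a minimal illustration, hand aperture can be computed as the straight-line (Euclidean) distance between the two sensor positions on each sample. This sketch assumes each Polhemus sample is an (x, y, z) tuple in centimeters; the function name `hand_aperture` is hypothetical and is not taken from the original MATLAB program.

```python
import math

def hand_aperture(thumb_xyz, index_xyz):
    """Return the thumb-to-index distance in the same units as the inputs.

    Assumes aperture is the Euclidean norm of the difference between the
    two 3-D sensor positions (hypothetical helper, not the original code).
    """
    return math.dist(thumb_xyz, index_xyz)

# Sensors 6 cm apart along x and 8 cm apart along y give a 10 cm aperture.
print(hand_aperture((0.0, 0.0, 0.0), (6.0, 8.0, 0.0)))  # 10.0
```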

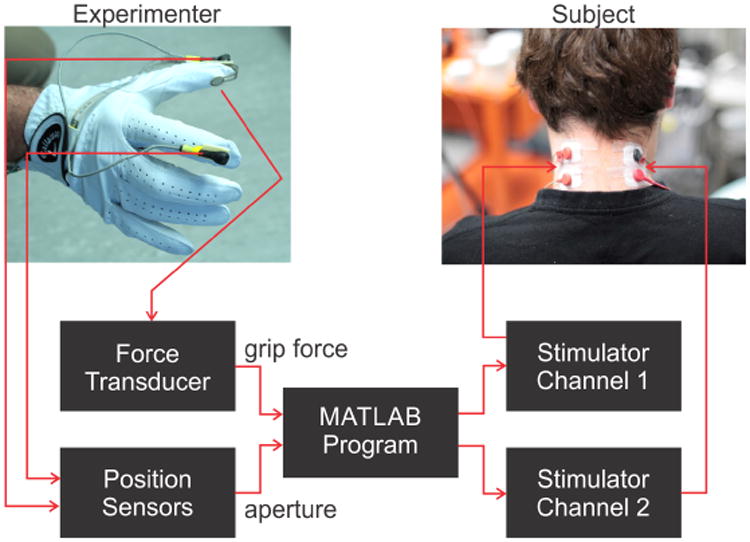

Figure 1.

Experimental setup. A force sensor on the volar surface of the thumb and position sensors placed on the dorsal surfaces of the thumb and index finger were used to extract grip force and hand aperture information from the experimenter's hand while grasping various test objects. Grip force and aperture signals were processed in near real time by a MATLAB program to drive two channels of amplitude-modulated current pulses that were delivered to surface electrodes on the left and right sides of the subject's neck. Subjects wore a blindfold and headphones (not shown) during testing.

Based on the force and aperture signals, the MATLAB program controlled a multichannel stimulator (STG4008, Multichannel Systems, Reutlingen, Germany) to deliver current-regulated pulses (described below) to two pairs of surface electrodes (Covidien/Kendall, Pediatric cloth ECG Hydrogel Electrodes H59P, Medtronic, Dublin, Ireland) placed on the left and right dorsal skin of the subject's neck (Fig. 1). This region was targeted (similar to Marcus and Fuglevand 2009) because it remains sensate in most types of spinal cord injury. In addition, this region is unobtrusive, readily accessible, and does not subserve other important functions. During testing, the subject wore a blindfold and headphones that played Brownian noise (noise whose power spectral density falls off as 1/f², i.e., progressively with increasing frequency) to mask any auditory cues that might inadvertently have arisen while the experimenter was gripping test objects. Because of signal-processing delays, there was a lag of ∼250 ms between signal detection by the sensors and delivery of the associated current pulses to the subject. While such a lag was not critical in the present experiments, which involved relatively slow changes in applied forces and finger movements (see below), it could contribute to instabilities in real closed-loop control of a prosthetic hand. Future software redesign could markedly reduce these delays.

2.2 Test Objects

An array of 27 cylindrical containers served as the test objects that subjects attempted to identify based on electrotactile feedback during grasping and lifting by the experimenter (Fig. 2). Each object had a unique combination of width, weight, and compliance. The containers were selected to have one of three approximate diameters: 4.5, 6.5, or 10 cm. Varying quantities of sand were placed inside the containers to bring each object to one of three weights: 100, 300, or 500 g (∼1, 3, and 5 N, respectively). Because some of the small containers could not hold enough sand to attain the targeted weights, an additional chamber for sand was affixed beneath those objects. The containers were made of glass, plastic, or silicone and were selected to possess different compliances, estimated to be ∼0.0, 0.45, and 2.0 mm/N.
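The object set is a full 3 × 3 × 3 factorial design, which is why there are 27 objects and why chance performance is 1/27. The sketch below enumerates the combinations and converts the target masses to approximate weights; the dictionary names are illustrative, and g = 9.81 m/s² is the standard value rather than one stated in the text.

```python
from itertools import product

G = 9.81  # standard gravitational acceleration, m/s^2

WIDTHS_CM = {"narrow": 4.5, "medium": 6.5, "wide": 10.0}
MASSES_G = {"light": 100, "medium": 300, "heavy": 500}
COMPLIANCE_MM_PER_N = {"stiff": 0.0, "intermediate": 0.45, "compliant": 2.0}

# Every combination of one level per attribute: 3 x 3 x 3 = 27 objects.
objects = list(product(WIDTHS_CM, MASSES_G, COMPLIANCE_MM_PER_N))

def weight_newtons(mass_g):
    """Convert a mass in grams to a weight in newtons."""
    return mass_g / 1000 * G

print(len(objects))                                            # 27
print(round(1 / len(objects), 3))                              # 0.037 (chance = 3.7%)
print([round(weight_newtons(m), 1) for m in MASSES_G.values()])  # [1.0, 2.9, 4.9]
```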

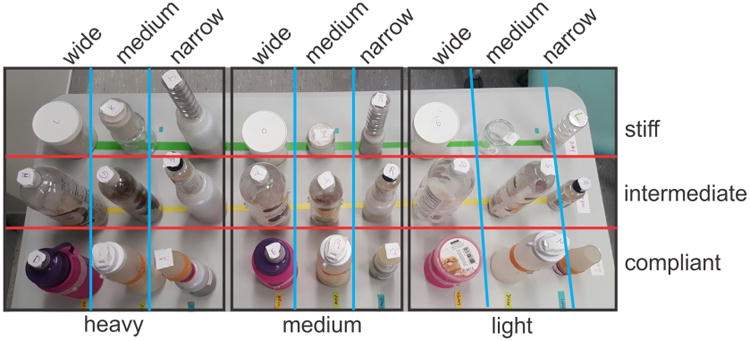

Figure 2.

The 27 test objects that subjects attempted to identify based on electrotactile feedback. Objects varied in weight (‘heavy’ = 500 g, ‘medium’ = 300 g, ‘light’ = 100 g), size (‘wide’ ≈10 cm, ‘medium’ ≈6.5 cm, ‘narrow’ ≈4.5 cm), and compliance (‘stiff’ ≈0 mm/N, ‘intermediate’ ≈0.45 mm/N, ‘compliant’ ≈2 mm/N). Some objects had additional containers fixed beneath them to provide a chamber for the sand needed to bring the object to its targeted weight.

Pairs of pliable plastic disks (1.5 cm in diameter, 2 mm thick) were glued on opposite sides of each object. These disks were the target sites for thumb and index finger contact with the objects by the experimenter. These disks also ensured that the coefficient of friction was more-or-less the same for each object grasped and that the locations of contact on a given object were the same during repeated trials.

Each test object was labeled with an identifying code, and the objects were systematically arrayed on a table (Fig. 2) situated between the experimenter and subject. Together with visual inspection and an explanation by the experimenter, this systematic spatial arrangement helped subjects readily understand the characteristics of the objects to be tested.

2.3. Electrotactile Feedback

Grip force and aperture signals were transformed into amplitude-modulated current pulse trains (200 Hz, 0.2 ms duration, monophasic pulses) delivered independently to the left and right sets of electrodes, respectively, on the back of the subject's neck. A pulse frequency of 200 Hz was selected because this is approximately the lowest frequency that evokes relatively fused (rather than pulsatile) sensations (Marcus and Fuglevand 2009). Frequency modulation was not used to encode sensor signals because perceived stimulus strength changes little with increasing pulse frequency, particularly above 200 Hz (Kaczmarek et al. 1992; Marcus and Fuglevand 2009; Kim et al. 2015). Monophasic pulses were used because they appear less susceptible to sensory adaptation than biphasic pulses (Szeto and Saunders 1982).
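To make the stimulation parameters concrete, the sketch below lists pulse onset times and amplitudes for one channel. It assumes (an illustrative assumption, not a documented detail of the original MATLAB program) that the commanded amplitude is sampled once at each pulse onset; the function `pulse_schedule` and the `envelope_ma` callable are hypothetical.

```python
def pulse_schedule(envelope_ma, duration_s, freq_hz=200.0, width_s=0.2e-3):
    """List (onset_s, amplitude_mA, width_s) for each monophasic pulse.

    envelope_ma: callable mapping time (s) to the commanded current (mA),
    standing in for the force- or aperture-derived signal (hypothetical).
    """
    period_s = 1.0 / freq_hz  # 5 ms between pulse onsets at 200 Hz
    n_pulses = int(round(duration_s * freq_hz))
    # Amplitude is sampled from the envelope at each pulse onset (assumption).
    return [(k * period_s, envelope_ma(k * period_s), width_s) for k in range(n_pulses)]

# A 1-s train whose amplitude ramps upward from 4.8 mA.
train = pulse_schedule(lambda t: 4.8 + 9.2 * t, duration_s=1.0)
print(len(train))   # 200
print(train[1][0])  # 0.005
```

At 200 Hz, each 0.2-ms pulse occupies 4% of its 5-ms period, so the stimulus is "on" only briefly even though the sensation is perceived as fused.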

Grip force ($F$) was converted into stimulus current amplitude ($I$) using a simple power function, $I = aF^{b}$ (Marcus and Fuglevand 2009). The coefficient $a$ and exponent $b$ were determined individually for each subject based on a brief calibration procedure that involved perceptual magnitude estimation of different current intensities. The relationship was bounded for each subject at both ends. At the low end, the perceptual threshold current (the method for identifying threshold currents is described below) was mapped to a detected force equal to 2 standard deviations above the mean force signal recorded on the unloaded force transducer. At the high end, the discomfort threshold current was mapped to a grip force of 15 N (i.e., above the maximum grip force used in these experiments).
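A minimal sketch of the bounded power-law mapping, assuming the calibrated coefficient `a` and exponent `b` are already known. Here the bounds are enforced by clipping, which is one simple interpretation of "bounded" and not necessarily the authors' implementation. The values a = 3.0 and b = 0.5 and the 0.2 N detection level are placeholders; the 4.8 and 14.0 mA thresholds are the group means reported in the Results.

```python
def force_to_current(force_n, a, b, f_detect_n, i_thr_ma, i_disc_ma):
    """Map grip force (N) to stimulus amplitude (mA) with I = a * F**b.

    Below the detection force (mean + 2 SD of the unloaded sensor), no
    current is delivered. Otherwise the power-law output is clipped to the
    subject's [perceptual threshold, discomfort threshold] range
    (assumed bounding scheme).
    """
    if force_n < f_detect_n:
        return 0.0
    current = a * force_n ** b
    return min(max(current, i_thr_ma), i_disc_ma)

# Illustrative parameters only (a, b, and f_detect_n are placeholders).
params = dict(a=3.0, b=0.5, f_detect_n=0.2, i_thr_ma=4.8, i_disc_ma=14.0)
print(force_to_current(0.1, **params))    # 0.0  (below detection)
print(force_to_current(1.0, **params))    # 4.8  (raised to threshold)
print(force_to_current(4.0, **params))    # 6.0
print(force_to_current(100.0, **params))  # 14.0 (capped at discomfort)
```

An alternative design would choose `a` and `b` so that the curve passes exactly through both anchor points; clipping keeps the sketch short while still guaranteeing the current never leaves the subject's tolerable range.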

Aperture encoding was based on a simple inverse linear relationship, such that current amplitude increased linearly as aperture decreased. This coding was chosen based on preliminary studies (conducted on the authors) in which it seemed more intuitive for stimulus intensity to increase as the thumb and finger closed in on target objects rather than to diminish as they approached the objects. The relationship was bounded for each subject by the perceptual threshold current, which was associated with an extended hand posture having an aperture of about 12 cm (∼2 cm greater than the widest test object), and by the discomfort threshold current, which was associated with the thumb and index finger in contact with one another (i.e., zero aperture).
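The inverse linear aperture code can be written as a straight line through the two anchor points (12 cm → perceptual threshold, 0 cm → discomfort threshold). This is a sketch under stated assumptions: apertures outside 0–12 cm are clamped, which the text does not specify, and the example uses the group-mean thresholds from the Results rather than any individual subject's values.

```python
def aperture_to_current(aperture_cm, i_thr_ma, i_disc_ma, open_cm=12.0):
    """Inverse linear map from hand aperture (cm) to stimulus amplitude (mA).

    0 cm (thumb touching index finger) -> discomfort threshold;
    open_cm (extended hand, ~12 cm) -> perceptual threshold.
    Apertures outside [0, open_cm] are clamped (assumption).
    """
    x = min(max(aperture_cm, 0.0), open_cm)
    return i_disc_ma - (i_disc_ma - i_thr_ma) * x / open_cm

# Group-mean thresholds (4.8 and 14.0 mA) applied to the test-object widths.
print(round(aperture_to_current(10.0, 4.8, 14.0), 2))  # 6.33  (wide object)
print(round(aperture_to_current(4.5, 4.8, 14.0), 2))   # 10.55 (narrow object)
```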

2.4 Procedures

2.4.1 Threshold Determination

In each session for every subject, we first identified the perceptual threshold for each electrode pair by repeatedly delivering 1-s trains of stimulus pulses (1-s inter-train interval) that increased in amplitude from zero in 0.1 mA increments. The subject verbally reported when they first detected a sensation, and the associated current was deemed the perceptual threshold current.

We then carried out a similar procedure for assessing the discomfort threshold. In this case, however, subjects were asked to report when the stimulus became uncomfortable. To reduce the total number of stimulus trains delivered for this procedure, current amplitude was incremented in 0.5 mA steps beginning at the previously identified perceptual threshold amplitude. Electrical stimulation was immediately stopped when the subject reported discomfort. This level was then deemed the discomfort threshold.
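Both threshold procedures are ascending method-of-limits searches that differ only in step size (0.1 vs. 0.5 mA) and starting point (zero vs. the perceptual threshold). The sketch below models them with a single hypothetical helper; the `reports` callable stands in for the subject's verbal report, and the `max_ma` safety cap is an illustrative addition not described in the text.

```python
def ascending_threshold(reports, start_ma, step_ma, max_ma):
    """Ascending method of limits: raise current in fixed steps until the
    subject reports the target sensation, then return that current (mA).

    reports: callable(current_mA) -> bool, a stand-in for the subject's
    verbal report after each 1-s train (hypothetical). Returns None if
    max_ma (an illustrative safety cap) is exceeded first.
    """
    n = 0
    while True:
        current = round(start_ma + n * step_ma, 3)  # avoid float drift
        if current > max_ma:
            return None
        if reports(current):
            return current
        n += 1

# Simulated subject: detects at >= 4.75 mA, reports discomfort at >= 13.9 mA.
perceptual = ascending_threshold(lambda i: i >= 4.75, 0.0, 0.1, max_ma=20.0)
discomfort = ascending_threshold(lambda i: i >= 13.9, perceptual, 0.5, max_ma=20.0)
print(perceptual, discomfort)  # 4.8 14.3
```

The coarser 0.5 mA step overshoots the simulated 13.9 mA discomfort level by 0.4 mA, illustrating the trade-off the authors accepted to reduce the number of trains delivered.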

2.4.2 Training

Once the operating current range was established, the training phase of the experiment began. In each session, we first asked subjects to distinguish among three objects that varied only in weight but had the same size and compliance (Fig. 3A). Subjects knew which objects were included in this set and that they only had to judge object weight. Initially, subjects viewed the experimenter grasp, lift, and squeeze each of the three objects while information about grip force was fed back to the subject through one pair of stimulating electrodes (aperture feedback was turned off). Then, with the subject blindfolded, the experimenter grasped, lifted, and squeezed each of the three objects in random order. After each trial, the subject guessed the weight of the object (light, medium, or heavy). The experimenter then told the subject the actual weight of the object, and the subject was allowed to look at the object. This task was repeated until the subject correctly identified the weight on six consecutive trials. This training was then repeated (Fig. 3A) for objects that varied only in size (with only aperture feedback provided) and then for objects that varied only in compliance (with both force and aperture feedback provided). The training phase enabled the subject to form initial associations between stimulus characteristics and object attributes and lasted about 10–15 minutes.
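The stopping rule for each training set (six consecutive correct responses, with any error resetting the count) can be expressed as a short helper. The name `trials_to_criterion` and the boolean-list input format are illustrative assumptions.

```python
def trials_to_criterion(outcomes, run_length=6):
    """Return the trial number at which `run_length` consecutive correct
    responses were first completed, or None if the criterion was never met.

    outcomes: iterable of bools in trial order (True = correct guess).
    """
    streak = 0
    for trial, correct in enumerate(outcomes, start=1):
        streak = streak + 1 if correct else 0  # any error resets the run
        if streak == run_length:
            return trial
    return None

print(trials_to_criterion([True, False, True, True, True, True, True, True]))  # 8
```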

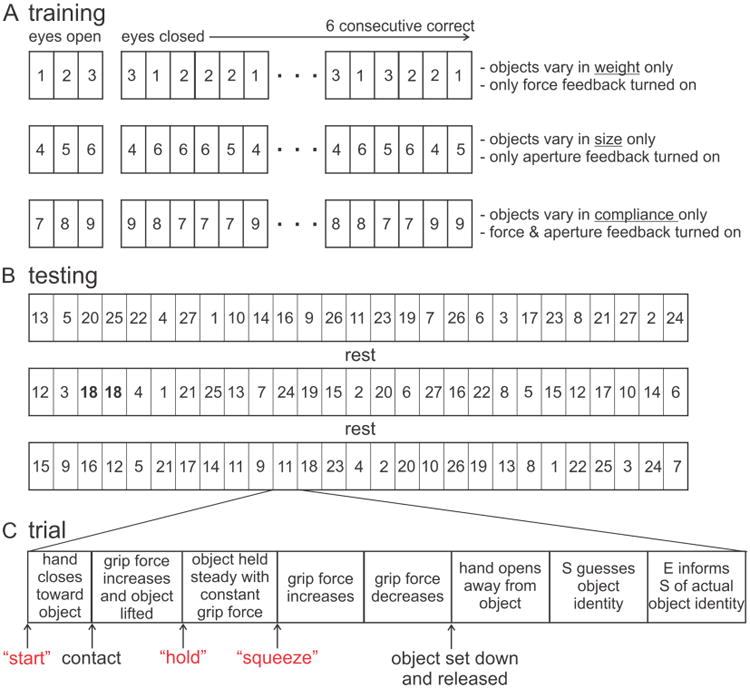

Figure 3.

Overview of training and testing protocols. Numbered rectangles represent individual objects. (A) Training involved presentation of three sets of three objects. In each set, objects varied in one attribute only and electrotactile feedback associated with that attribute was delivered. For the initial trials, subjects viewed the experimenter grasp, lift, and squeeze the objects. Then, while blindfolded, the three objects were presented in random order. When subjects correctly identified objects on six consecutive trials, the next training set was presented. (B) Testing involved 3 blocks of 27 trials separated by ∼5 min of rest. Each object was presented three times. The order of presentation was randomized across the 3 blocks. As such, an individual object could be presented on two consecutive trials (e.g. object 18 on trials 3 and 4 of block 2). (C) Each trial involved a sequence of several phases during which the experimenter (E) grasped, held, and squeezed the object. The E verbally notified (red text) the subject (S) of the onset of some of the phases.

2.4.3 Testing

Upon completion of the training phase, we tested the ability of subjects to distinguish among the 27 test objects based on electrotactile feedback provided through the two pairs of electrodes. There were three blocks of 27 trials/block with a ∼5 min rest period between blocks (Fig. 3B). Each trial involved a single object, and the order of objects was randomized across the entire set of 81 trials (rather than across the 27 trials in a block). As a consequence, while each object was tested a total of 3 times in an experimental session, the same object could be tested 2 or 3 times within a block, and even on 2 consecutive trials (e.g., see highlighted object 18 in block 2, Fig. 3B). This was done to prevent subjects from ruling out objects that had already been tested within a block.
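The key design choice is that randomization spans all 81 presentations before they are cut into blocks, so no block is a balanced set of 27. A minimal sketch of that scheme using Python's standard `random` module (the function name and seed argument are illustrative):

```python
import random

def make_session_order(n_objects=27, repeats=3, n_blocks=3, seed=None):
    """Randomize all presentations across the session, then split into blocks.

    Because the shuffle spans all n_objects * repeats trials (81 here) rather
    than each block, an object can recur within a block or on consecutive trials.
    """
    rng = random.Random(seed)
    trials = [obj for obj in range(1, n_objects + 1) for _ in range(repeats)]
    rng.shuffle(trials)
    size = len(trials) // n_blocks
    return [trials[i * size:(i + 1) * size] for i in range(n_blocks)]

blocks = make_session_order(seed=1)
print([len(b) for b in blocks])  # [27, 27, 27]
```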

For each trial, with the subject blindfolded and wearing headphones, the experimenter slowly closed his hand to make contact with the object, then grasped, lifted, and steadily held the object, squeezed it, replaced it on the table, and finally opened his hand away from the object (see Fig. 3C). An entire grasping sequence lasted about 10–12 s, and the timing of the different phases was not strictly controlled. The squeezing portion of the trial was included to facilitate detection of object compliance. The experimenter announced the trial start and the onsets of the holding and squeezing phases by saying “start”, “hold”, and “squeeze”, respectively, loudly enough to be heard above the noise delivered through the headphones. After the object was replaced on the table, the subject was allowed to look at the entire array of objects and guess which one had just been lifted. Alternatively, subjects could simply report the individual attributes of the object verbally (e.g., “light-weight, small, and stiff”). The experimenter then informed the subject which object had been grasped.

Subjects repeated these experiments on five consecutive days to assess the degree to which they could learn to use electrotactile information to identify objects. At the end of the fifth session, we also tested the ability of 3 of the 8 subjects to discriminate the 27 objects using their own hand. The subject was blindfolded and received no electrotactile feedback but wore a thin leather glove (to help prevent information about object identity from being conveyed by texture). An object was placed between the subject's thumb and the remaining digits with the hand held in an open configuration. The subject was then instructed to close their fingers onto the object and then to grasp, hold, and squeeze it, mimicking the protocol the experimenter used when lifting each object. The object was then removed from the subject's hand and replaced on the table, and the subject was allowed to look at the objects and guess which one they had just grasped.

2.5 Data Analysis

The main performance measure quantified for each session was the percentage of correct identifications, with chance level being 1/27 = 3.7%. To evaluate the effects of learning, a Friedman repeated measures one-way analysis of variance on ranks (used because of the unequal variances) was performed on the percentage of correct identifications, with session (day 1 to day 5) as the factor. In addition, we calculated the percentage of correct identifications of each attribute (weight, width, or compliance) to determine whether some attributes were more readily identified than others. In this case, the chance of correctly identifying the level (e.g., light, medium, or heavy) of a particular attribute (e.g., weight) was 1/3 = 33.3%. A two-way repeated measures ANOVA was performed on these data with attribute (weight, width, compliance) and session (day 1 to day 5) as factors.
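The two scoring levels (whole object vs. individual attribute) can be illustrated with a small helper. This sketch assumes each object is represented as a (weight, width, compliance) tuple of level labels; because every one of the 27 objects is a unique combination, a whole-object hit requires all three levels to match. The names `score_session` and `ATTRIBUTES` are hypothetical.

```python
ATTRIBUTES = ("weight", "width", "compliance")

def score_session(true_objs, guessed_objs):
    """Return overall percent correct and percent correct per attribute.

    Each object is a (weight, width, compliance) tuple of level labels;
    an object counts as correctly identified only if all three levels match.
    """
    pairs = list(zip(true_objs, guessed_objs))
    n = len(pairs)
    overall = 100 * sum(t == g for t, g in pairs) / n
    per_attr = {name: 100 * sum(t[i] == g[i] for t, g in pairs) / n
                for i, name in enumerate(ATTRIBUTES)}
    return overall, per_attr

truth = [("heavy", "narrow", "stiff"), ("light", "wide", "compliant")]
guess = [("heavy", "narrow", "stiff"), ("light", "medium", "compliant")]
print(score_session(truth, guess))
# (50.0, {'weight': 100.0, 'width': 50.0, 'compliance': 100.0})
print(round(100 / 27, 1), round(100 / 3, 1))  # 3.7 33.3 (chance levels, %)
```

Per-subject session percentages computed this way would be the inputs to the Friedman test and two-way repeated measures ANOVA; SciPy, for example, provides `scipy.stats.friedmanchisquare`, which takes one sample per condition. Those tests are not reproduced here.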

2.5.1. Machine Learning

We also wanted to know whether sufficient information was, in principle, available in the signals fed back to subjects to enable them to discriminate among the test objects. To address this, we trained a machine-learning algorithm using features extracted from the actual feedback signals delivered to one of the subjects. For each trial, the extracted features were: 1) the current amplitude on the aperture channel at the moment the current amplitude on the force channel exceeded zero (i.e., at object contact), 2) the average current amplitude on the force channel during the hold phase, 3) the difference in current amplitude on the aperture channel between the time of object contact and the average value during the holding phase, and 4) the difference in current amplitude on the aperture channel between the average of the holding phase and the peak value during the squeezing phase. These four features were thought to provide reasonable representations of: 1) the width of the object, 2) the weight of the object (since grip force should be roughly proportional to object weight), 3) an initial estimate of object compliance (since the degree of aperture constriction upon grasping should provide one coarse indicator of compliance), and 4) a second estimate of compliance (since the degree of aperture constriction during the squeezing phase should provide a separate indicator of compliance). Two indicators of compliance were used because for some objects (particularly heavy, narrow, and compliant objects), the majority of aperture constriction occurred during the initial grasping phase, whereas for other objects (such as lightweight, wide, and compliant objects), most of the aperture change occurred during the squeezing phase.
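A minimal sketch of the four-feature extraction, assuming the feedback currents for one trial are available as equal-length lists and that the hold and squeeze phases have already been segmented into sample-index ranges (how phases were segmented is not specified, so those ranges are an assumption). Features 3 and 4 are signed so that positive values indicate hand closure, because aperture current rises as aperture shrinks; that sign convention is an illustrative choice.

```python
from statistics import mean

def extract_features(force_ma, aperture_ma, hold, squeeze):
    """Return the four per-trial features from feedback-current traces (mA).

    hold, squeeze: (start, stop) sample-index ranges, stop exclusive
    (hypothetical segmentation).
    """
    # Contact = first sample at which force-channel current exceeds zero.
    contact = next(i for i, f in enumerate(force_ma) if f > 0)
    hold_aperture = mean(aperture_ma[hold[0]:hold[1]])
    contact_aperture = aperture_ma[contact]                  # 1: width
    hold_force = mean(force_ma[hold[0]:hold[1]])             # 2: weight
    grasp_closure = hold_aperture - contact_aperture         # 3: compliance (grasp)
    squeeze_closure = max(aperture_ma[squeeze[0]:squeeze[1]]) - hold_aperture  # 4
    return (contact_aperture, hold_force, grasp_closure, squeeze_closure)

force = [0, 0, 5, 6, 6, 6, 8, 8]
aperture = [5, 5, 6, 7, 7, 7, 9, 10]
print(extract_features(force, aperture, hold=(3, 6), squeeze=(6, 8)))  # (6, 6, 1, 3)
```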

These features, together with a categorical label of the object actually grasped, from all trials preceding the last block of trials on a given day were used to train the algorithm (nearest neighbor, MATLAB Statistics and Machine Learning Toolbox). As such, the amount of training data increased from day 1 to day 5, just as would occur for a subject learning over successive days. For day 1, the training data included only blocks 1 and 2 of that day, whereas for day 2, the training data included all the data from day 1 plus blocks 1 and 2 of day 2, and so on. The trained algorithm for each day was then used to predict the identity of the objects grasped during the third block of trials for that day, and the percentage of correct identifications was determined for each of the five days.
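A plain-Python sketch of the expanding training window, using a 1-nearest-neighbor classifier with unscaled Euclidean distance. The MATLAB settings (distance metric, number of neighbors, feature scaling) are not stated, so these choices, the `(day, block)` dictionary layout, and the function names are assumptions for illustration.

```python
import math

def nearest_neighbor(train, query):
    """1-nearest-neighbor: label of the training example closest to `query`
    (unscaled Euclidean distance)."""
    return min(train, key=lambda ex: math.dist(ex[0], query))[1]

def daily_accuracy(trials):
    """Expanding-window evaluation.

    trials: dict mapping (day, block) -> list of (features, label), blocks 1-3.
    For day d, train on all trials from earlier days plus blocks 1-2 of day d,
    then score predictions on block 3 of day d (percent correct).
    """
    results = {}
    for d in sorted({day for day, _ in trials}):
        train = [ex for (day, blk), examples in trials.items()
                 if day < d or (day == d and blk < 3)
                 for ex in examples]
        test = trials[(d, 3)]
        hits = sum(nearest_neighbor(train, f) == label for f, label in test)
        results[d] = 100 * hits / len(test)
    return results

# Toy two-feature data with labels "A" and "B" (not experimental data).
toy = {
    (1, 1): [((0, 0), "A"), ((10, 10), "B")],
    (1, 2): [((1, 0), "A")],
    (1, 3): [((0, 1), "A"), ((9, 9), "B")],
    (2, 1): [],
    (2, 2): [],
    (2, 3): [((9, 10), "B"), ((2, 2), "B")],
}
print(daily_accuracy(toy))  # {1: 100.0, 2: 50.0}
```

On day 2 the training set already includes all of day 1, including its third block, mirroring how the amount of training data grows across days.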

3. Results

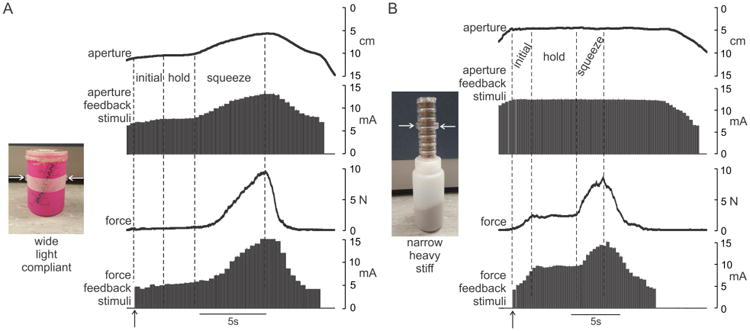

Across all subjects and test days, the average (±SD) perceptual threshold current was 4.8 ± 0.7 mA, whereas the discomfort threshold was 14.0 ± 1.3 mA. Figure 4 shows examples of the aperture and grip force signals and the associated stimulus pulse trains delivered to a subject while the experimenter grasped a wide, lightweight, and compliant object (Fig. 4A) and a narrow, heavy, and stiff object (Fig. 4B). When the experimenter grasped the wide, lightweight, and compliant object (Fig. 4A), initial contact was registered (vertical arrow) at an aperture of about 10 cm and was associated with a force-feedback current slightly above the perceptual threshold current (∼4 mA). During the subsequent initial gripping period, aperture changed slightly owing to the compliance of the object. During the holding phase, the force- and aperture-feedback currents were both near their perceptual threshold values because of the low grip force and wide hand aperture needed to hold this object. During the squeezing phase, there was a substantial increase in aperture-feedback current due to the marked closure of the hand as increased grip force was applied to this compliant object.

Figure 4.

Example sensor and feedback signals during grasping of two objects: (A) wide, lightweight, and compliant object and (B) narrow, heavy, and stiff object. Horizontal arrows on images indicate contact points on small disks fixed to objects. Each grasp was divided into three phases, an initial phase during which grip force increased to lift and support the weight of the object, a steady hold phase, and a squeeze phase during which additional grip force was applied to the object to aid in identifying object compliance. Note that vertical axes of aperture signals are inverted (i.e. small apertures towards the top) to allow more ready comparison to feedback signals. Vertical arrows indicate time at which grip force exceeded minimum threshold and force feedback was initiated.

In contrast, when grasping the narrow, heavy, and stiff object (Fig. 4B), the patterns of feedback currents were quite different. Contact (vertical arrow) occurred at a narrow aperture of about 5 cm and was associated with a strong aperture-feedback current of about 12 mA. Once contact was made, force (and associated current) continued to increase in the initial period until the gripping force was sufficient to lift and hold the object. The currents delivered during the holding phase were high for both aperture and force feedback due to the narrow width and relatively large gripping force needed to hold this object. Because of the low compliance of this object, there was virtually no change in aperture during the squeezing phase of the trial.

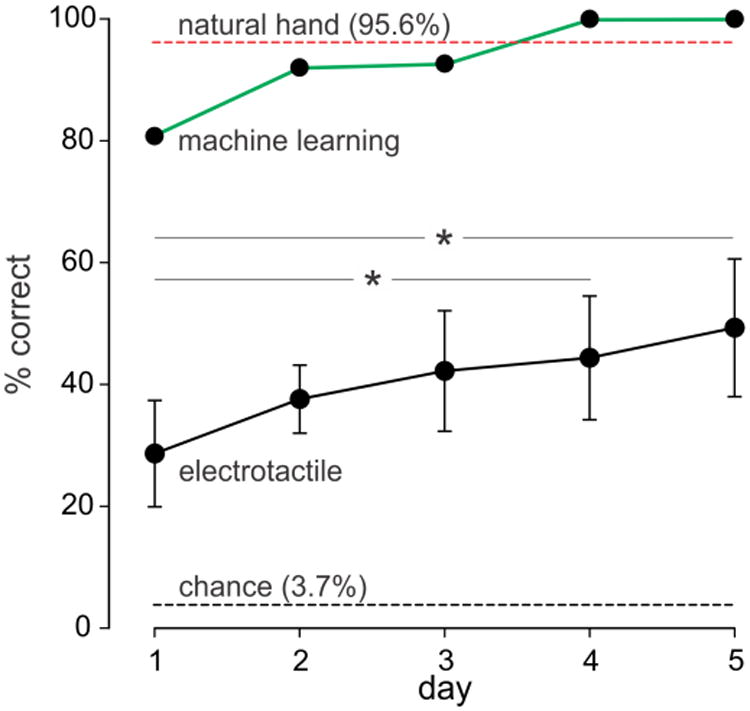

Based on the types of feedback signals depicted in Figure 4, subjects attempted to identify the 27 different objects grasped by the experimenter. Figure 5 depicts the mean (± SD) percentage of correct identifications out of 81 trials for the eight subjects on each of the five days. On each day, subjects performed well above chance (3.7%). Performance also improved gradually over days, from 28.5 ± 8.2% on day 1 to 49.2 ± 10.6% on day 5. This represents a 72% improvement in object identification over the 5 sessions. Overall, a Friedman repeated measures ANOVA on ranks indicated a significant effect (P < 0.001) of training day on the percentage of correct identifications. Post hoc analysis indicated significant differences (P < 0.05) between day 1 and day 4 and between day 1 and day 5.
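The 72% figure is the relative change between the group means on days 1 and 5. A worked check of that arithmetic, using only the values reported above, also expresses each day's mean as a multiple of chance (100/27 ≈ 3.7%):

```python
day1, day5 = 28.5, 49.2   # mean percent correct on days 1 and 5
chance = 100 / 27         # chance level, percent

relative_gain = (day5 - day1) / day1
print(f"{100 * relative_gain:.1f}% relative improvement")       # 72.6% relative improvement
print(f"{day1 / chance:.1f}x and {day5 / chance:.1f}x chance")  # 7.7x and 13.3x chance
```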

Figure 5.

Mean (± SD) percentage of correct identifications of the 27 objects based on electrotactile feedback for eight subjects over five days (black trace). There was a significant (P < 0.001) effect of training day on percentage correct identifications. Post hoc analyses indicated significant differences between days 1 and 4 and between days 1 and 5 (* P < 0.05). The green trace shows the performance of the machine-learning algorithm trained on the signals delivered to one of the subjects. The red dashed line depicts the mean performance of three subjects using their own hands to identify the objects, and the black dashed line indicates chance level.

Also shown in Figure 5 is the outcome of the machine-learning predictions for each of the 5 days. With increasing amounts of training, machine-learning predictions eventually attained 100% correct identifications for days 4 and 5, more or less comparable to the level obtained when subjects used their own hands to identify the objects (red dashed line, Fig. 5). This result implies that sufficient information was available in the feedback signals to enable, with practice, accurate identification of the objects.

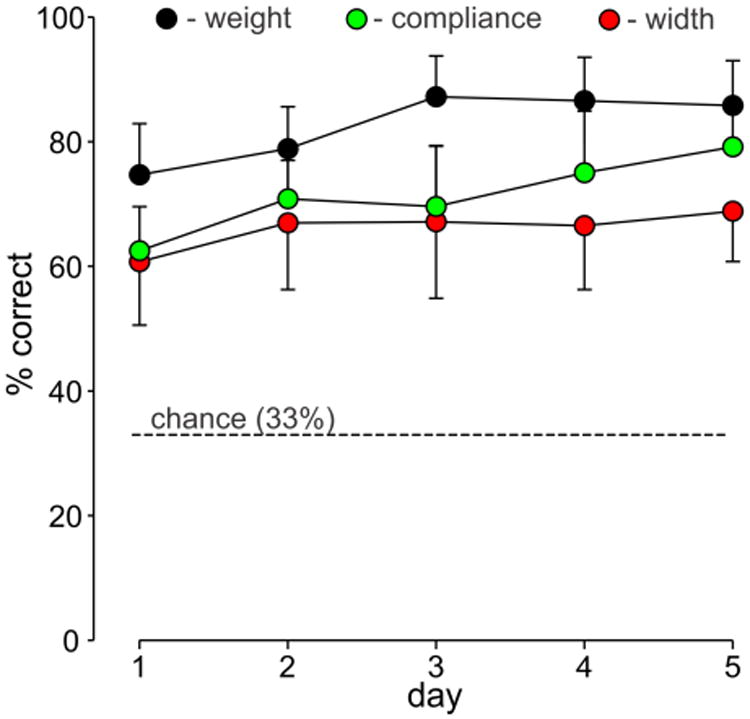

We also analyzed the ability of the eight subjects to correctly identify each attribute of an object, namely, its weight, width, and compliance (Fig. 6). A two-way repeated measures ANOVA indicated significant effects of training day (P < 0.001) and attribute (P < 0.001) on the percentage of correctly identified attributes, with no significant interaction between the two factors (P = 0.23). Post hoc analyses indicated a significantly higher percentage of correct identification of weight on days 3, 4, and 5 compared to days 1 and 2 (P < 0.05). For object compliance, post hoc analyses indicated a higher percentage correct on days 4 and 5 compared to day 1 (P < 0.007) and on day 5 compared to day 3 (P < 0.05). Interestingly, post hoc analysis indicated no significant change in identification accuracy for object width across days (P = 0.20). With regard to object attributes, post hoc analyses indicated that object weight was significantly (P < 0.001) better identified (overall average of 82.6 ± 8.4%) than compliance (overall average of 71.4 ± 9.5%) and significantly (P < 0.001) better identified than width (overall average of 66.0 ± 10.2%). There was no significant difference (P = 0.062), however, between the overall identification accuracies for compliance and object width.

Figure 6.

Mean (± SD) percent correct identification of each object attribute for eight subjects over five days: weight (black symbols), width (red symbols), and compliance (green symbols). The dashed line represents the chance level.

We did notice, however, that subjects seemed to have more difficulty identifying the width of the most compliant objects. This impression was borne out by statistical analysis. The overall average percent correct identification of width varied systematically with compliance: 73.1 ± 7.9% for stiff objects, 67.6 ± 7.7% for intermediate-compliance objects, and 57.4 ± 13.5% for the most compliant objects (P = 0.002, repeated measures ANOVA). Post hoc analyses indicated that the percent correct identification of width for stiff and intermediate-compliance objects was significantly higher than for compliant objects (P < 0.05).

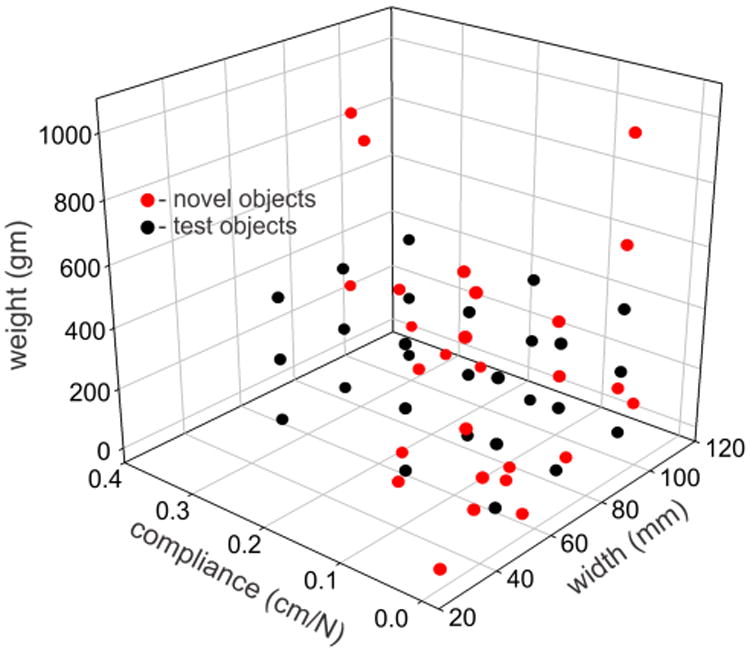

Finally, to test how training with electrotactile feedback might generalize to new objects, we carried out another set of experiments on the last three subjects. After completing their final training session on day 5, these subjects were tested on an array of 27 everyday objects. These objects had attributes that mostly fell within, but also extended beyond, the attribute space of the original test objects (Fig. 7). As for the original test objects, pairs of pliable plastic disks were glued on opposite sides of each new object to ensure similar coefficients of friction across objects. The new objects were presented in 3 sets of 9 objects (Figs. 8A, 8B, 8C). There were no practice trials. After visually scanning the objects on the table, the subject put on the blindfold and headphones. The experimenter then scrambled the locations of the objects (to prevent the subject from guessing objects based on slight changes in their locations after being grasped and replaced). Next, the experimenter grasped, held, and squeezed a randomly selected object using the same procedures as for the original test objects. The subject then removed the blindfold and guessed the identity of the object. The subject was not informed of the identity of the object grasped. This process was repeated until each object in a set had been tested once. The same procedures were then used to test the other two sets of objects. The order of object sets differed across the three subjects.

Figure 7.

Weights, compliances, and widths of the 27 original test objects (black symbols) and of the 27 everyday objects (red symbols) that were used to test how well electrotactile training transferred to novel objects.

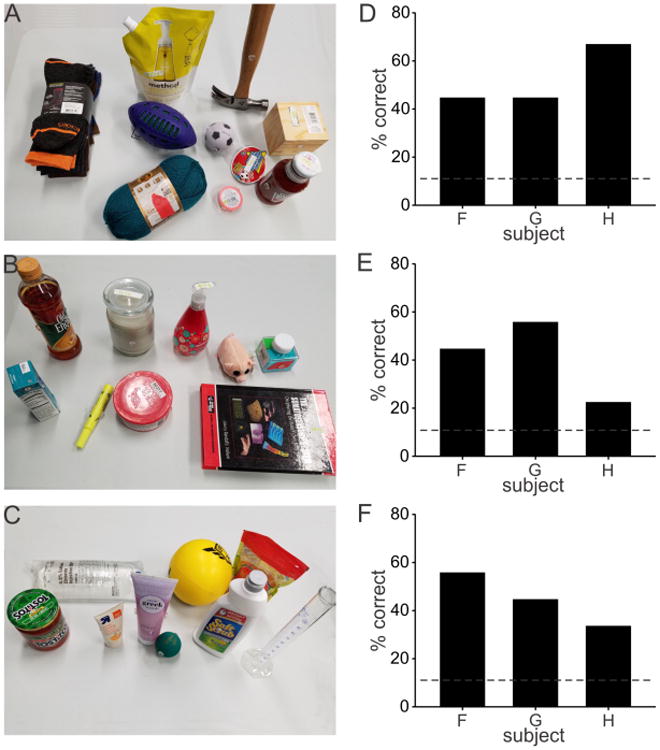

Figure 8.

(A), (B), (C) Three sets of 9 items that served as new objects for discrimination based on electrotactile feedback during grasping. (D), (E), (F) Percentage of correctly identified objects by each subject for each object set shown in (A), (B), (C), respectively. Average correct identification across all three sets of objects was 46%. Chance level (11%) is shown as a dashed line.

Figures 8D, 8E, and 8F show the percentage of correctly identified objects by each subject for each of the three sets of new objects. Chance level is indicated as a dashed line (1/9 = 11%). The average percent correct was 52% for the first set (Figs. 8A, 8D), 41% for the second set (Figs. 8B, 8E), and 44% for the third set (Figs. 8C, 8F). No statistical tests were performed on these results given the small number of subjects. The overall average percent correct across all three sets of objects was 46%, well above chance. These results suggest that over the course of training, subjects learned to interpret electrotactile feedback in a general way that transferred to the identification of novel objects with reasonable accuracy, rather than simply learning to associate specific stimulus patterns with particular objects.

4. Discussion

A learning-based object discrimination task was used to test the ability of intact human subjects to interpret physical information derived from artificial tactile and proprioceptive feedback. We found that subjects successfully learned to use such electrotactile feedback to discriminate reasonably well among 27 different objects varying in weight, width and compliance. Over five days of training, performance accuracy increased ∼80%. Furthermore, this training transferred reasonably well to novel objects. These results suggest, therefore, that non-invasive forms of somatosensory feedback, with training, could be used to provide important information needed to help control prosthetic or paralyzed hands.

4.1. Object Attributes

Haptic identification of real-world objects involves integration of multiple physical attributes of an object, including weight, size, compliance, shape, texture, mass distribution, and even temperature. Here we provided direct feedback only about two variables: normal contact (grip) force on the thumb and the spacing between the thumb and index finger. Grip force scales with the weight of an object for a given coefficient of friction between hand and object (Johansson and Westling 1984). Because we controlled the coefficient of friction in these experiments, attention to grip force feedback alone provided a reasonable source of information about object weight. Indeed, object weight was the attribute most accurately identified in the present experiments (Fig. 6).
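The link between grip force and weight can be made concrete with an idealized Coulomb-friction model (our assumption for illustration, not a model fitted in this study). In a two-digit precision grip, each contact can supply at most μG of tangential force, so holding an object of mass m still requires 2μG ≥ mg, that is, G ≥ mg/(2μ). With μ held roughly constant by the plastic contact disks, the minimum grip force is then proportional to weight. The `safety_margin` parameter is a hypothetical fractional excess above the slip limit.

```python
G_ACCEL = 9.81  # gravitational acceleration, m/s^2


def min_grip_force(mass_kg, mu, safety_margin=0.0):
    """Minimum normal (grip) force in N for a two-digit grip holding an object still.

    Idealized model (an assumption): each of the two contacts supplies at most
    mu * G of tangential force, so 2 * mu * G >= m * g, i.e. G >= m * g / (2 * mu).
    safety_margin is a hypothetical fractional excess above that limit (0.2 = 20 %).
    """
    if mass_kg < 0 or mu <= 0:
        raise ValueError("mass must be non-negative and mu positive")
    return (1.0 + safety_margin) * mass_kg * G_ACCEL / (2.0 * mu)


# With friction held constant, doubling the mass doubles the required grip force.
light = min_grip_force(0.2, mu=1.0)
heavy = min_grip_force(0.4, mu=1.0)
print(round(light, 3), round(heavy, 3))
```

At μ = 1, doubling the mass from 0.2 to 0.4 kg raises the minimum grip force from about 0.98 N to about 1.96 N. This proportionality is why, with friction controlled, grip-force feedback alone can act as a proxy for weight.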

Object width, on the other hand, was the attribute least accurately identified, and subjects showed little improvement with training (Fig. 6). Identification of object width probably required some degree of attention to both aperture and grip force signals, because many objects were compliant; the aperture of the hand during steady-state holding therefore might not reflect the undeformed width of the object. It should also be kept in mind that the skin of the distal finger pads is itself relatively compliant at low loads (deflection of ∼2 mm for a 1 N load; Cabibihan et al. 2011), which may have introduced small additional errors in the detection of object width. Furthermore, the sensitivity of the force transducer was limited; as such, the onset of electrotactile feedback of grip force did not always coincide with the time of object contact, particularly for compliant objects. In informal interviews following the experiments, some subjects indicated that, to determine object width, they attempted to focus on aperture feedback at the moment they detected object contact from the grip force feedback. Because grip force feedback could be delayed, this strategy likely led to significant errors in estimating the width of objects, especially compliant ones. Finally, the added cognitive challenge associated with rapidly switching attention from grip-force detection to aperture magnitude may have contributed to the lower accuracy in identifying object width compared to object weight.
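The width error produced by a force-detection threshold can be illustrated with a deliberately simple model (an assumption for illustration, not a description of the actual transducer). Treat the object as a linear spring of stiffness k, so that after contact the grip force is k(w - a) for an aperture a below the undeformed width w. A sensor that first responds at a threshold F_th then signals contact only after the object has been compressed by F_th/k. The aperture read at that moment therefore underestimates the width by F_th/k, an error that grows as objects become more compliant. Fingertip pad compliance is ignored, and all numbers below are hypothetical.

```python
def aperture_at_detection(width_mm, stiffness_n_per_mm, force_threshold_n):
    """Aperture (mm) when a thresholded force sensor first signals contact.

    Assumed model: after contact, grip force = k * (width - aperture), so the
    threshold is reached once the object has been compressed by threshold / k.
    """
    if stiffness_n_per_mm <= 0:
        raise ValueError("stiffness must be positive")
    return width_mm - force_threshold_n / stiffness_n_per_mm


# Hypothetical 60 mm objects and a 0.5 N detection threshold.
for k in (0.5, 0.05):  # stiff vs. compliant, in N/mm
    read = aperture_at_detection(60.0, k, 0.5)
    print(f"k = {k} N/mm: aperture at detection {read:.1f} mm, error {60.0 - read:.1f} mm")
```

With a 0.5 N threshold, the stiff object (k = 0.5 N/mm) is read as 59 mm, whereas an equally wide compliant object (k = 0.05 N/mm) is read as 50 mm.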

Identification of object compliance, in theory, should require concurrent evaluation of changes in aperture and grip force signals. We anticipated, therefore, that compliance would be the attribute most difficult to identify accurately. However, accuracy in identifying object compliance was higher than that for object width on every training day (although the difference was not statistically significant) and improved significantly with training (Fig. 6). This was likely due to the standardized way in which the experimenter carried out the squeezing phase of the object manipulation. Namely, subjects were verbally notified when the squeezing phase was about to begin. In addition, the experimenter typically applied similar levels of force during the squeezing phase to objects of widely varying compliances (see Fig. 4). As such, subjects may have focused primarily on aperture changes alone during the squeezing phase to extract information about object compliance, making the task somewhat easier than having to attend simultaneously to changes in both force and aperture.
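Under a linear model in which the hand closes by an amount proportional to the added grip force, compliance can be estimated as the least-squares slope of aperture against force during the squeeze phase. The sketch below assumes paired force and aperture samples recorded during that phase; the function name, the sample values, and the units (N, mm) are illustrative.

```python
def estimate_compliance(forces_n, apertures_mm):
    """Least-squares compliance (mm/N) from squeeze-phase samples.

    Fits aperture = a + b * force and returns -b, i.e. how many millimetres
    the hand closes per newton of added grip force (linear model assumed).
    """
    n = len(forces_n)
    if n != len(apertures_mm) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_f = sum(forces_n) / n
    mean_a = sum(apertures_mm) / n
    sxx = sum((f - mean_f) ** 2 for f in forces_n)
    if sxx == 0:
        raise ValueError("force must vary during the squeeze")
    sxy = sum((f - mean_f) * (a - mean_a) for f, a in zip(forces_n, apertures_mm))
    return -sxy / sxx


# Hypothetical squeeze: aperture closes 2 mm for every additional newton.
print(estimate_compliance([1.0, 2.0, 3.0, 4.0], [50.0, 48.0, 46.0, 44.0]))  # 2.0
```

If every object receives roughly the same force increment ΔF, the aperture change is approximately compliance × ΔF, so ranking objects by aperture change alone orders them the same way as ranking by compliance. That is consistent with the shortcut subjects may have used.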

4.2. Task Difficulty and Comparison with other Studies

Despite the ostensibly simple nature of the task involving only two feedback variables, subjects reported that the task was demanding and required intense focus. The challenging nature of this object discrimination task was borne out by the relatively modest overall performance. Correct identification of objects occurred about 49% of the time even after five days of experience with the task, whereas correct identifications occurred nearly 100% of the time with natural feedback from a gloved hand. Because we were concerned that the feedback signals we used might not have contained sufficient information to enable full discrimination of all objects, we applied machine learning to the same signals delivered to one of the test subjects. With sufficient training data (> 3 days' worth), the machine-learning algorithm identified the test objects with 100% accuracy (Fig. 4). This indicates that the feedback signals did possess the requisite information for object identification.
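The machine-learning method is not specified in this passage, so the following is only a stand-in showing the general idea: a nearest-centroid classifier that averages the feature vectors recorded for each object during training and assigns a new trial to the object with the closest average. The three features per trial (peak grip force, aperture at contact, aperture change during squeezing) and all values are hypothetical.

```python
import math


def fit_centroids(features, labels):
    """Average the feature vectors belonging to each object label."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, x):
    """Return the label whose centroid is closest (Euclidean distance) to x."""
    return min(centroids, key=lambda y: math.dist(centroids[y], x))


# Hypothetical features: [peak grip force (N), aperture at contact (mm), squeeze closure (mm)]
train_X = [[2.0, 60.0, 1.0], [2.2, 61.0, 1.2], [5.0, 40.0, 8.0], [5.2, 41.0, 7.8]]
train_y = ["object_A", "object_A", "object_B", "object_B"]
centroids = fit_centroids(train_X, train_y)
print(predict(centroids, [2.1, 59.0, 1.0]))  # object_A
```

Because the features have different units, in practice one would standardize each feature (e.g. to zero mean and unit variance) before computing distances; otherwise the feature with the largest numeric range dominates the classification.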

Nevertheless, our results from the first day of training were somewhat better than those previously reported for object discrimination tasks based on artificial somatosensory feedback. For example, intrafascicular stimulation that provided feedback of grip force and hand aperture in an amputee yielded correct identification of 9 objects (varying in size and compliance) about 28% of the time (Horch et al. 2011). For that experiment, the ratio of correct identifications to chance was 28%/11% = 2.5. When the task was simplified to discrimination among 4 objects, the identification success rate was 67.5%, yielding a correct/chance ratio of 67.5%/25% = 2.7 (Horch et al. 2011). Similarly, subjects using audio feedback of grip force from different locations on a robotic hand correctly identified three objects about 85% of the time, yielding a correct/chance ratio of 85%/33.3% = 2.6 (Gibson and Artemiadis 2014). Likewise, for subjects receiving vibrotactile feedback to explore 4 virtual objects of different geometric shapes (Martínez et al. 2016), correct identification occurred 65% of the time, for a correct/chance ratio of 65%/25% = 2.6. In contrast, during the first session of the present experiments, the correct/chance ratio was 28.5%/3.7% = 7.7. This ratio increased to 49.2%/3.7% = 13.3 after 5 days of experience with the task.
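The correct/chance ratios above follow from dividing percent correct by the chance level, 100%/N for N equally likely alternatives. The short script below recomputes them from the figures quoted in this paragraph. It uses exact chance levels (for example 100/9 ≈ 11.1% rather than 11%), so a few ratios may differ from the rounded values in the text in the last digit.

```python
def correct_chance_ratio(percent_correct, n_alternatives):
    """Percent correct divided by chance level (100 / number of alternatives)."""
    if n_alternatives < 2:
        raise ValueError("need at least two alternatives")
    chance = 100.0 / n_alternatives
    return percent_correct / chance


# (percent correct, number of alternatives) as quoted in the text.
studies = {
    "Horch et al. 2011, 9 objects": (28.0, 9),
    "Horch et al. 2011, 4 objects": (67.5, 4),
    "Gibson and Artemiadis 2014": (85.0, 3),
    "Martínez et al. 2016": (65.0, 4),
    "present study, day 1": (28.5, 27),
    "present study, day 5": (49.2, 27),
}
for name, (pc, n) in studies.items():
    print(f"{name}: {correct_chance_ratio(pc, n):.1f}")
```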

The reasons for the nearly 3-fold larger correct/chance ratio in the present experiments compared with the others are not immediately evident. One possibility is that the nature of the feedback differed substantially across the studies, including vibrotactile (Martínez et al. 2016), audio (Gibson and Artemiadis 2014), intrafascicular (Horch et al. 2011), and surface electrotactile (present study) feedback. While electrotactile feedback can be controlled more accurately and over larger ranges than vibrotactile feedback (Szeto and Saunders 1982), this advantage would not apply relative to audio feedback. For studies involving electrical stimulation of somatosensory nerves as feedback in object discrimination tasks, Horch et al. (2011) used frequency modulation of stimulus pulses, whereas we used amplitude modulation. The relative merits of frequency versus amplitude coding for sensory feedback have not been resolved. For example, we previously found that perceptual sensitivity to amplitude coding of electrotactile feedback was substantially greater than that for frequency coding (Marcus and Fuglevand 2009), similar to the findings of Kaczmarek et al. (1992). In contrast, Szeto and Lyman (1977) found frequency coding (in the 1–15 Hz range) superior to amplitude coding for electrotactile feedback.
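To make the distinction between the two coding schemes concrete, the sketch below maps a sensor reading normalized to the range 0 to 1 linearly onto either stimulus current or pulse frequency. The linear mapping, the clipping, and every parameter value are assumptions for illustration; they are not the calibration used in this study or in Horch et al. (2011).

```python
def _clip01(signal):
    """Clip a normalized sensor reading to the range [0, 1]."""
    return min(max(signal, 0.0), 1.0)


def amplitude_code(signal, i_threshold_ma, i_max_ma):
    """Amplitude coding: pulse rate fixed, current (mA) varies linearly
    from perceptual threshold to maximum comfortable current."""
    return i_threshold_ma + _clip01(signal) * (i_max_ma - i_threshold_ma)


def frequency_code(signal, f_min_hz, f_max_hz):
    """Frequency coding: current fixed, pulse rate (Hz) varies linearly."""
    return f_min_hz + _clip01(signal) * (f_max_hz - f_min_hz)


# Placeholder ranges: 2-8 mA for amplitude coding, 1-15 Hz for frequency coding.
for s in (0.0, 0.5, 1.0, 1.5):
    print(s, amplitude_code(s, 2.0, 8.0), frequency_code(s, 1.0, 15.0))
```

In the amplitude code, information is carried by current between perceptual threshold and the maximum comfortable level; in the frequency code, the current is held fixed and the pulse rate varies, here over the 1–15 Hz range tested by Szeto and Lyman (1977).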

Interestingly, some recent investigations have also deployed mixed stimulus modalities to convey different attributes of a signal simultaneously at a single location, including frequency and amplitude coding of electrotactile feedback (Choi et al. 2017) and amplitude coding of vibrotactile and electrotactile feedback (D'Alonzo et al. 2014). Both studies used three intensity levels for each of two stimulus modalities, for a total of nine multimodal signals. Subjects correctly identified the multimodal signals 84% (Choi et al. 2017) and 56% (D'Alonzo et al. 2014) of the time, for correct/chance ratios of 84%/11% = 7.6 and 56%/11% = 5.1. Those impressive findings indicate that mixed-modality stimulation may be a promising means to provide somatosensory feedback, particularly given the reduction in the total number of stimulation sites that would be needed.

One clear difference between the present investigation and others is that object identification here involved highly controlled haptic movements made by an experimenter, whereas in other studies object identification was based on manipulation of objects with myoelectrically controlled robotic hands (Horch et al. 2011; Gibson and Artemiadis 2014). As a consequence, the feedback signals in the present study were certainly more consistent than in studies involving robotically controlled manipulation, thereby making discrimination easier. Furthermore, object identification in the present study was a purely passive sensory perception task. It is possible that the added cognitive load associated with controlling hand movements (Richardson et al. 1981) or the gating of cutaneous sensory signals during movement (Chapman 1994) might have interfered with sensory perception during the active tasks. The majority of studies comparing the discriminative abilities of active versus passive touch, however, have found little difference between the two (see Chapman 1994; Prescott et al. 2011).

Along these lines, it is important to highlight that the object identification task used in the present experiments tested only the perceptual properties of artificial somatosensory feedback. The ultimate test of its utility, however, will be how well such feedback can be used to improve control of limb trajectory and object manipulation in artificial or paralyzed limbs. Nevertheless, it seemed reasonable, as a first step, to test the sensory capabilities of electrotactile feedback in isolation before evaluating its possible benefits in the more complex context of limb motor control, as will need to be done in the future.

4.3. Invasive Versus Non-Invasive Feedback

In the past 10 years, there have been remarkable advances in the fabrication of unobtrusive, flexible, high-density tactile sensor arrays (e.g. Kim et al. 2011; Hammock et al. 2013). In many respects, however, these advances have far outpaced the development of methods to deliver such rich sensory information to an amputee or paralyzed individual and to enable its useful interpretation. As shown here and in other studies (e.g. Szeto 1982; Szeto and Saunders 1982), interpretation of even two channels of electrotactile feedback represents a significant cognitive challenge for human subjects. Part of this difficulty derives from the dislocated nature of electrotactile feedback. Namely, sensory signals indicating the state of the hand, for example, are delivered along sensory pathways that normally convey information about other parts of the body or even about different sensory modalities (e.g. tactile pathways used to transmit proprioceptive information). Consequently, such sensory substitution requires considerable cognitive processing. It will be important in the future to determine whether, with long-term training, sensory substitution can be used to aid the control of artificial or paralyzed limbs in a fluid and naturalistic way.

One way to make artificial somatosensory feedback more natural and intuitive is to target stimulation to residual peripheral axons that, prior to injury, innervated the regions of the hand that are subsequently instrumented with artificial sensors on the prosthetic hand (Riso 1999; Kuiken et al. 2007; Horch et al. 2011; Oddo et al. 2016). Such targeted stimulation of residual nerves, however, would not be useful for many individuals with spinal cord injury, because central lesions may prevent transmission of peripherally instigated signals to the brain. An alternative is to provide somatosensory feedback by directly stimulating the somatosensory cortex with implanted electrode arrays (O'Doherty et al. 2011; Tabot et al. 2013; Klaes et al. 2014; Dadarlat et al. 2015; Kim et al. 2015; Flesher et al. 2016). For example, Tabot et al. (2013) showed that the ability of monkeys to estimate the strength of electrical stimuli delivered to hand regions of primary somatosensory cortex (SI) was just as good as their ability to estimate the strength of mechanical stimuli delivered to the skin of the hand. The ability of the monkeys to judge which finger region of SI was stimulated, however, was poorer than that for mechanical stimulation. Flesher et al. (2016) reported similar results for a person with tetraplegia in response to stimulation of the hand region of SI with chronically implanted microelectrode arrays. Despite this promise, a number of significant hurdles remain to be overcome before such invasive approaches could realistically be deployed for home use in human patients (Bensmaia and Miller 2014). Additionally, it will be important in the future to ascertain whether invasive approaches can provide somatosensory feedback that is at least as effective as that provided by non-invasive approaches (as tested here).

Finally, as pointed out in the Introduction, retention of even a small fraction of somatosensory axons in lesion studies largely prevents obvious motor deficits in experimental animals (Mott and Sherrington 1895; Lassek 1953b; Twitchell 1954). Accordingly, even rudimentary forms of artificial somatosensory feedback, with training, may provide substantial improvements in the control of prosthetic or paralyzed limbs. Indeed, Štrbac et al. (2017) have recently shown this to be the case for amputees who significantly increased grasping performance over five days of practice using electrotactile feedback to control a myoelectric prosthesis. Ultimately, such artificial somatosensory feedback, when combined with vision, should markedly enhance and expand the overall ability of prosthetic hands to manipulate objects. Indeed, it is of interest, in terms of both artificial and normal limb control, to determine the relative contributions of vision and somatosensory feedback during object manipulation. This could be addressed in future experiments in which object manipulation is compared under conditions involving vision only, artificial somatosensation only, and both together.

Acknowledgments

We are grateful to Alie Buckmire and Timothy Maley for technical assistance. This work was supported by NIH Grant NS096064.

References

- Aflalo T, Kellis S, Klaes C, Lee B, Shi Y, Pejsa K, Shanfield K, Hayes-Jackson S, Aisen M, Heck C, Liu C, Andersen RA. Decoding motor imagery from the posterior parietal cortex of a tetraplegic human. Science. 2015;348:906–910. doi: 10.1126/science.aaa5417.

- Ajiboye AB, Willett FR, Young DR, Memberg WD, Murphy BA, Miller JP, Walter BL, Sweet JA, Hoyen HA, Keith MW, Peckham PH, Simeral JD, Donoghue JP, Hochberg LR, Kirsch RF. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration. Lancet. 2017;389:1821–1830. doi: 10.1016/S0140-6736(17)30601-3.

- Antfolk C, D'Alonzo M, Rosén B, Lundborg G, Sebelius F, Cipriani C. Sensory feedback in upper limb prosthetics. Expert Review of Medical Devices. 2014;10:45–54. doi: 10.1586/erd.12.68.

- Bach-y-Rita P, Collins CC, Saunders FA, White B. Vision substitution by tactile image projection. Nature. 1969;221:963–964. doi: 10.1038/221963a0.

- Bensmaia SJ, Miller LE. Restoring sensorimotor function through intracortical interfaces: progress and looming challenges. Nature Reviews Neuroscience. 2014;15:313–325. doi: 10.1038/nrn3724.

- Bouton CE, Shaikhouni A, Annetta NV, Bockbrader MA, Friedenberg DA, Nielson DM, Sharma G, Sederberg PB, Glenn BC, Mysiw WJ, Morgan AG, Deogaonkar M, Rezai AR. Restoring cortical control of functional movement in a human with quadriplegia. Nature. 2016;533:247–250. doi: 10.1038/nature17435.

- Cabibihan JJ, Pradipta R, Ge SS. Prosthetic finger phalanges with lifelike skin compliance for low-force social touching interactions. Journal of NeuroEngineering and Rehabilitation. 2011;8:16. doi: 10.1186/1743-0003-8-16.

- Chapman CE. Active versus passive touch: factors influencing the transmission of somatosensory signals to primary somatosensory cortex. Can J Physiol Pharmacol. 1994;72:558–570. doi: 10.1139/y94-080.

- Chatterjee A, Aggarwal V, Ramos A, Acharya S, Thakor NV. A brain-computer interface with vibrotactile biofeedback for haptic information. Journal of NeuroEngineering and Rehabilitation. 2007;4:40. doi: 10.1186/1743-0003-4-40.

- Choi K, Kim P, Kim KS, Kim S. Mixed-Modality Stimulation to Evoke Two Modalities Simultaneously in One Channel for Electrocutaneous Sensory Feedback. IEEE Trans Neural Syst Rehabil Eng. 2017;25:2258–2269. doi: 10.1109/TNSRE.2017.2730856.

- Collinger JL, Wodlinger B, Downey JE, Wang W, Tyler-Kabara EC, Weber DJ, McMorland AJ, Velliste M, Boninger ML, Schwartz AB. High-performance neuroprosthetic control by an individual with tetraplegia. The Lancet. 2013;381:557–564. doi: 10.1016/S0140-6736(12)61816-9.

- Dadarlat MC, O'Doherty JE, Sabes PN. A learning-based approach to artificial sensory feedback leads to optimal integration. Nat Neurosci. 2015;18:138–144. doi: 10.1038/nn.3883.

- D'Alonzo M, Dosen S, Cipriani C, Farina D. HyVE: Hybrid Vibro-Electrotactile Stimulation for Sensory Feedback and Substitution in Rehabilitation. IEEE Trans Neural Syst Rehabil Eng. 2014;22:290–301. doi: 10.1109/TNSRE.2013.2266482.

- Dosen S, Marković M, Štrbac M, Belić M, Kojić V, Bijelić G, Keller T, Farina D. Multichannel Electrotactile Feedback With Spatial and Mixed Coding for Closed-Loop Control of Grasping Force in Hand Prostheses. IEEE Trans Neural Syst Rehabil Eng. 2017;25:183–195. doi: 10.1109/TNSRE.2016.2550864.

- Flesher SN, Collinger JL, Foldes ST, Weiss JM, Downey JE, Tyler-Kabara EC, Bensmaia SJ, Schwartz AB, Boninger ML, Gaunt RA. Intracortical microstimulation of human somatosensory cortex. Sci Transl Med. 2016;8:361ra141. doi: 10.1126/scitranslmed.aaf8083.

- Galambos R, Norton TT, Frommer GP. Optic tract lesions sparing pattern vision in cats. Experimental Neurology. 1967;18:8–25. doi: 10.1016/0014-4886(67)90084-2.

- Gibson A, Artemiadis P. Object discrimination using optimized multi-frequency auditory cross-modal haptic feedback. Conf Proc IEEE Eng Med Biol Soc. 2014;2014:6505–6508. doi: 10.1109/EMBC.2014.6945118.

- Gilja V, Pandarinath C, Blabe CH, Nuyujukian P, Simeral JD, Sarma AA, Sorice BL, Perge JANA, Jarosiewicz B, Hochberg LR, Shenoy KV, Henderson JM. Clinical translation of a high-performance neural prosthesis. Nature Medicine. 2015;21:1142–1145. doi: 10.1038/nm.3953.

- Hammock ML, Chortos A, Tee BCK, Tok JBH, Bao Z. 25th anniversary article: The evolution of electronic skin (e-skin): a brief history, design considerations, and recent progress. Adv Mater Weinheim. 2013;25:5997–6038. doi: 10.1002/adma.201302240.

- Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J, Haddadin S, Liu J, Cash SS, van der Smagt P, Donoghue JP. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485:372–375. doi: 10.1038/nature11076.

- Horch K, Meek S, Taylor TG, Hutchinson DT. Object Discrimination With an Artificial Hand Using Electrical Stimulation of Peripheral Tactile and Proprioceptive Pathways With Intrafascicular Electrodes. IEEE Trans Neural Syst Rehabil Eng. 2011;19:483–489. doi: 10.1109/TNSRE.2011.2162635.

- Johansson RS, Westling G. Roles of glabrous skin receptors and sensorimotor memory in automatic control of precision grip when lifting rougher or more slippery objects. Exp Brain Res. 1984;56:550–564. doi: 10.1007/BF00237997.

- Jorgovanovic N, Dosen S, Djozic DJ, Krajoski G, Farina D. Virtual grasping: closed-loop force control using electrotactile feedback. Computational and Mathematical Methods in Medicine. 2014;2014:120357–13. doi: 10.1155/2014/120357.

- Kaczmarek KA, Webster JG, Bach-y-Rita P, Tompkins WJ. Electrotactile and vibrotactile displays for sensory substitution systems. IEEE Trans Biomed Eng. 1991;38:1–16. doi: 10.1109/10.68204.

- Kaczmarek KA, Webster JG, Radwin RG. Maximal dynamic range electrotactile stimulation waveforms. IEEE Trans Biomed Eng. 1992;39:701–715. doi: 10.1109/10.142645.

- Kim DH, Lu N, Ma R, Kim YS, Kim RH, Wang S, Wu J, Won SM, Tao H, Islam A, Yu KJ, Kim TI, Chowdhury R, Ying M, Xu L, Li M, Chung HJ, Keum H, McCormick M, Liu P, Zhang YW, Omenetto FG, Huang Y, Coleman T, Rogers JA. Epidermal electronics. Science. 2011;333:838–843. doi: 10.1126/science.1206157.

- Kim S, Callier T, Tabot GA, Gaunt RA, Tenore FV, Bensmaia SJ. Behavioral assessment of sensitivity to intracortical microstimulation of primate somatosensory cortex. Proc Natl Acad Sci USA. 2015;112:15202–15207. doi: 10.1073/pnas.1509265112.

- Klaes C, Shi Y, Kellis S, Minxha J, Revechkis B, Andersen RA. A cognitive neuroprosthetic that uses cortical stimulation for somatosensory feedback. J Neural Eng. 2014;11:056024. doi: 10.1088/1741-2560/11/5/056024.

- Kuiken TA, Marasco PD, Lock BA, Harden RN, Dewald JPA. Redirection of cutaneous sensation from the hand to the chest skin of human amputees with targeted reinnervation. Proc Natl Acad Sci USA. 2007;104:20061–20066. doi: 10.1073/pnas.0706525104.

- Lassek AM. Inactivation of voluntary motor function following rhizotomy. J Neuropathol Exp Neurol. 1953a;12:83–87. doi: 10.1097/00005072-195301000-00008.

- Lassek AM. Potency of isolated brachial dorsal roots in controlling muscular physiology. Neurology. 1953b;3:53–57. doi: 10.1212/wnl.3.1.53.

- Marcus PL, Fuglevand AJ. Perception of electrical and mechanical stimulation of the skin: implications for electrotactile feedback. J Neural Eng. 2009;6:066008–13. doi: 10.1088/1741-2560/6/6/066008.

- Martínez J, García A, Oliver M, Molina JP, González P. Identifying Virtual 3D Geometric Shapes with a Vibrotactile Glove. IEEE Comput Graph Appl. 2016;36:42–51. doi: 10.1109/MCG.2014.81.

- Mott FW, Sherrington CS. Experiments upon the influence of sensory nerves upon movement and nutrition of the limbs. Preliminary communication. Proceedings of the Royal Society of London. 1895;57:481–488.

- Oddo CM, Raspopovic S, Artoni F, Mazzoni A, Spigler G, Petrini F, Giambattistelli F, Vecchio F, Miraglia F, Zollo L, Di Pino G, Camboni D, Carrozza MC, Guglielmelli E, Rossini PM, Faraguna U, Micera S. Intraneural stimulation elicits discrimination of textural features by artificial fingertip in intact and amputee humans. eLife. 2016;5:e09148. doi: 10.7554/eLife.09148.

- O'Doherty JE, Lebedev MA, Ifft PJ, Zhuang KZ, Shokur S, Bleuler H, Nicolelis MAL. Active tactile exploration using a brain–machine–brain interface. Nature. 2011;479:228–231. doi: 10.1038/nature10489.

- Patel GK, Dosen S, Castellini C, Farina D. Multichannel electrotactile feedback for simultaneous and proportional myoelectric control. J Neural Eng. 2016;13:1–13. doi: 10.1088/1741-2560/13/5/056015.

- Prescott TJ, Diamond ME, Wing AM. Active touch sensing. Philos Trans R Soc Lond, B, Biol Sci. 2011;366:2989–2995. doi: 10.1098/rstb.2011.0167.

- Prior RE, Lyman J. Electrocutaneous feedback for artificial limbs. Summary progress report February 1, 1974, through July 31, 1975. Bull Prosthet Res. 1975:3–37.

- Richardson BL, Wuillemin DB, MacKintosh GJ. Can passive touch be better than active touch? A comparison of active and passive tactile maze learning. Br J Psychol. 1981;72:353–362. doi: 10.1111/j.2044-8295.1981.tb02194.x.

- Riso RR. Strategies for providing upper extremity amputees with tactile and hand position feedback--moving closer to the bionic arm. Technol Health Care. 1999;7:401–409.

- Schiefer M, Tan D, Sidek SM, Tyler DJ. Sensory feedback by peripheral nerve stimulation improves task performance in individuals with upper limb loss using a myoelectric prosthesis. J Neural Eng. 2015;13:016001–14. doi: 10.1088/1741-2560/13/1/016001.

- Sherrington C. Hughlings Jackson Lecture on: Quantitative management of contraction for “lowest-level” co-ordination. Br Med J. 1931;1:207–211. doi: 10.1136/bmj.1.3657.207.

- Shokur S, Gallo S, Moioli RC, Donati ARC, Morya E, Bleuler H, Nicolelis MAL. Assimilation of virtual legs and perception of floor texture by complete paraplegic patients receiving artificial tactile feedback. Sci Rep. 2016;6:32293. doi: 10.1038/srep32293.

- Štrbac M, Belić M, Isaković M, Kojić V, Bijelić G, Popović I, Radotić M, Dosen S, Marković M, Farina D, Keller T. Integrated and flexible multichannel interface for electrotactile stimulation. J Neural Eng. 2016;13:046014–17. doi: 10.1088/1741-2560/13/4/046014.

- Štrbac M, Isaković M, Belić M, Popović I, Simanic I, Farina D, Keller T, Dosen S. Short- and Long-Term Learning of Feedforward Control of a Myoelectric Prosthesis with Sensory Feedback by Amputees. IEEE Trans Neural Syst Rehabil Eng. 2017 Jun 6; doi: 10.1109/TNSRE.2017.2712287.

- Szeto AYJ. Electrocutaneous code pairs for artificial sensory communication systems. Ann Biomed Eng. 1982;10:175–192. doi: 10.1007/BF02367389.

- Szeto AYJ, Lyman J. Comparison of codes for sensory feedback using electrocutaneous tracking. Ann Biomed Eng. 1977;5:367–383. doi: 10.1007/BF02367316.

- Szeto AY, Saunders FA. Electrocutaneous stimulation for sensory communication in rehabilitation engineering. IEEE Trans Biomed Eng. 1982;29:300–308.

- Tabot GA, Dammann JF, Berg JA, Tenore FV, Boback JL, Vogelstein RJ, Bensmaia SJ. Restoring the sense of touch with a prosthetic hand through a brain interface. Proc Natl Acad Sci USA. 2013;110:18279–18284. doi: 10.1073/pnas.1221113110.

- Twitchell TE. Sensory factors in purposive movement. Journal of Neurophysiology. 1954;17:239–252. doi: 10.1152/jn.1954.17.3.239.

- Witteveen HJB, Luft F, Rietman JS, Veltink PH. Stiffness Feedback for Myoelectric Forearm Prostheses Using Vibrotactile Stimulation. IEEE Trans Neural Syst Rehabil Eng. 2014;22:53–61. doi: 10.1109/TNSRE.2013.2267394.