Abstract

Background

Many Americans have limited literacy skills, and the National Institutes of Health (NIH) suggests patient educational material be written below the 8th grade level. Many orthopedic organizations provide print material for patients, but whether these documents are written at an appropriate reading level is not clear. This study assessed the readability of patient education brochures provided by the American Shoulder and Elbow Surgeons (ASES).

Materials and Methods

In May 2017, 6 ASES patient education brochures were analyzed using readability software. The reading level was calculated for each brochure using 9 different tests. The mean reading level for each article was compared with the NIH-recommended 8th grade level using 2-tailed, 1-sample t tests assuming unequal variances.

Results

For each of the 9 tests, the mean reading level was higher than the NIH-recommended 8th grade (test, grade level): Automated Readability Index, 14.1 (P < .05); Coleman-Liau, 14.2 (P < .05); New Dale-Chall, 13.2 (P < .05); Flesch-Kincaid, 13.7 (P < .05); FORCAST, 11.8 (P < .05); Fry, 15.8 (P < .05); Gunning Fog, 16.5 (P < .05); Raygor Estimate, 15.4 (P < .05); and Simple Measure of Gobbledygook (SMOG), 15.1 (P < .05).

Conclusions

The ASES patient education brochures are written well above the NIH-recommended 8th grade reading level. These findings are similar to other investigations concerning orthopedic patient education material. Supplementary brochures and websites could be a useful source of information, particularly for patients who are deterred from asking questions in the office. Printed material designed for patient education should be edited to a more reasonable reading level. Further review of patient education materials is warranted.

Keywords: Health literacy, Brochures, Patient education, Sports medicine, Readability, Patient communication

Approximately one-third of the adult population in the United States has basic or below basic health literacy.37 Poor health literacy has been associated with negative outcomes and with $50 to $73 billion per year in increased health care costs.38 Patient education materials should be written at an appropriate reading level, yet patients' reading skills are often overlooked when these materials are designed.1 Recommendations vary,3, 22, 23 but the National Institutes of Health (NIH) suggests a 6th to 8th grade reading level.34, 38 This is not intended to “dumb down” the material; rather, it is part of the NIH mission to provide Americans with health information they can use. The NIH supports the Plain Language initiative, which has its origins in a federal directive.23 In October 2010, the Plain Writing Act was signed into law; it requires all federal publications, forms, and public documents to be clear, concise, and well organized.33

The readability of patient-oriented materials has been a common subject in recent orthopedic literature,5, 7, 11, 19, 25 and most studies have found that very few online documents are written at an appropriate level.2, 6, 31, 32, 35 Although print material may be accessed less frequently than online material, a Pew survey found that nearly 60% of people who initially learn about their diagnoses online eventually see a clinician,8 and clinicians' offices are frequently supplied with brochures from industry or specialty groups. The goal of this study was to assess the readability of patient education brochures provided by the American Shoulder and Elbow Surgeons (ASES). We hypothesized that the ASES patient education brochures would be written above the 8th grade reading level.

Materials and methods

In May 2017, the 6 available patient education brochures were ordered from the ASES. These brochures were:

1. Arthritis and Total Shoulder Replacement
2. Arthroscopy of the Shoulder and Elbow
3. Rehabilitation of the Shoulder
4. Rotator Cuff Tendonitis and Tears
5. Tennis Elbow
6. The Unstable Shoulder

All brochures were written in English. The content of each was copied as plain text into an individual Microsoft Word document (Microsoft Corp., Redmond, WA, USA). In accordance with the readability software guidelines, figures, figure legends, copyright notices, disclaimers, acknowledgements, citations, and references in the brochures were excluded.

The Word documents were analyzed using Readability Studio Professional Edition 2015 (Oleander Software, Ltd., Vandalia, OH, USA). Within the program, 9 readability scores were calculated for each article using the following formulas:

1. Flesch-Kincaid Grade Level
2. Simple Measure of Gobbledygook Index (SMOG)
3. Coleman-Liau Index
4. Gunning Fog Index
5. New Dale-Chall Formula
6. Raygor Readability Estimate
7. Fry Readability
8. Automated Readability Index
9. FORCAST

Each of these tests has been used to analyze patient education materials.14, 27, 29, 36 The tests are based on counts of syllables, characters, words, and sentences in the sample text (Table I), but each formula weights these components differently, and some formulas include additional components. The Dale-Chall formula includes a factor for “unfamiliar words,” which Dale and Chall defined on the basis of words known to American 4th grade students.4 The Fry and Raygor reading levels are determined by plotting the calculated values on an accompanying readability graph; this graphing was performed automatically by the readability software. The Flesch-Kincaid Grade Level,20 SMOG,18 Fog,18 Fry,10 and Dale-Chall4 tests have each been validated individually.
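Because every formula starts from the same raw counts, a rough sketch of how those counts could be gathered from plain text may be helpful. This is an illustration under stated assumptions, not how Readability Studio works internally: sentences are split on terminal punctuation, words are runs of letters, and syllables are estimated with a simple vowel-group heuristic. The names `TextCounts`, `count_syllables`, and `count_text` are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class TextCounts:
    sentences: int
    words: int
    syllables: int
    letters: int
    polysyllables: int  # words with 3 or more syllables (SMOG, Gunning Fog)
    monosyllables: int  # words with exactly 1 syllable (FORCAST)


def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (a rough heuristic)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a final "e" as silent ("cause"), except in "-le" endings ("table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def count_text(text: str) -> TextCounts:
    """Collect the raw counts that the readability formulas are built from."""
    # Sentences: split on runs of terminal punctuation, ignoring empty pieces.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Words: runs of letters, allowing one internal apostrophe ("patient's").
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    syllables = [count_syllables(w) for w in words]
    return TextCounts(
        sentences=len(sentences),
        words=len(words),
        syllables=sum(syllables),
        letters=sum(ch.isalpha() for w in words for ch in w),
        polysyllables=sum(1 for s in syllables if s >= 3),
        monosyllables=sum(1 for s in syllables if s == 1),
    )


if __name__ == "__main__":
    print(count_text("The shoulder is a joint. Surgery may help."))
    # → TextCounts(sentences=2, words=8, syllables=11, letters=33, polysyllables=1, monosyllables=6)
```

The heuristic is crude: it counts 4 syllables in “individual,” which has 5, so syllable counts, and therefore grade levels, can differ from one readability program to another.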

Table I.

Display of the 9 readability formulas used to calculate the reading level for each article

| Assessment | Formula | Legend |
|---|---|---|
| Flesch-Kincaid Grade | (0.39 × W) + (11.8 × B) – 15.59 | W = average number of words per sentence; B = average number of syllables per word |
| Simple Measure of Gobbledygook (SMOG) | 1.043 × √(P × 30/S) + 3.1291 | P = number of words with 3 or more syllables; S = number of sentences |
| Coleman-Liau | (0.0588 × L) – (0.296 × T) – 15.8 | L = average number of letters per 100 words; T = average number of sentences per 100 words |
| Gunning Fog Index | 0.4 × (W/S + 100 × P/W) | W = number of words; S = number of sentences; P = number of words with 3 or more syllables |
| New Dale-Chall | 0.1579 × (100 × U/W) + 0.0496 × (W/S), plus 3.6365 if unfamiliar words exceed 5% of the text | W = number of words; S = number of sentences; U = number of unfamiliar words |
| Raygor | Average number of sentences and long words (6 or more letters) per 100 words (graphed to corresponding grade level) | |
| Fry | Average number of sentences and syllables per 100 words (graphed to corresponding grade level) | |
| Automated Readability Index | 4.71 × (C/W) + 0.5 × (W/S) – 21.43 | C = number of characters; W = number of words; S = number of sentences |
| FORCAST | 20 – SS/10 | SS = number of single-syllable words in a 150-word sample |
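The count-based formulas in Table I can be written as small functions of those counts. The Python sketch below is illustrative only: the function names and sample counts are hypothetical, the graph-based Fry and Raygor estimates and the New Dale-Chall word list are omitted, and published variants (and individual software packages) may differ in details such as how characters or complex words are counted.

```python
import math


def flesch_kincaid(words, sentences, syllables):
    """Flesch-Kincaid Grade Level."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def smog(polysyllables, sentences):
    """SMOG grade; scales the polysyllable count to a 30-sentence sample."""
    return 1.043 * math.sqrt(polysyllables * 30 / sentences) + 3.1291


def coleman_liau(letters, words, sentences):
    """Coleman-Liau Index, using letters and sentences per 100 words."""
    letters_per_100 = letters / words * 100
    sentences_per_100 = sentences / words * 100
    return 0.0588 * letters_per_100 - 0.296 * sentences_per_100 - 15.8


def gunning_fog(words, sentences, complex_words):
    """Gunning Fog Index; complex_words = words with 3 or more syllables."""
    return 0.4 * (words / sentences + 100 * complex_words / words)


def automated_readability_index(characters, words, sentences):
    """Automated Readability Index."""
    return 4.71 * (characters / words) + 0.5 * (words / sentences) - 21.43


def forcast(monosyllables_in_150_words):
    """FORCAST grade from single-syllable words in a 150-word sample."""
    return 20 - monosyllables_in_150_words / 10


if __name__ == "__main__":
    # Hypothetical sample: 100 words, 5 sentences, 160 syllables, 500 letters,
    # 15 words of 3+ syllables, 90 single-syllable words per 150 words.
    print(round(flesch_kincaid(100, 5, 160), 2))  # → 11.09
    print(round(smog(15, 5), 2))  # → 13.02
    print(round(coleman_liau(500, 100, 5), 2))  # → 12.12
    print(round(gunning_fog(100, 5, 15), 2))  # → 14.0
    print(round(automated_readability_index(500, 100, 5), 2))  # → 12.12
    print(forcast(90))  # → 11.0
```

Even on the same hypothetical counts the formulas disagree by about 3 grade levels, which is consistent with the recommendation to report more than one test.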

Two of the more commonly used readability tests, the Flesch-Kincaid Grade Level and the Automated Readability Index, were derived in the forms used today for the United States Navy in 1975 to analyze technical manuals; the Flesch-Kincaid is now the United States Military Standard.16 The Fog Index was validated using the McCall-Crabbs Standard Test Lessons in Reading and was developed to predict the grade level at which readers could correctly answer 90% of the comprehension questions for a passage.18 The SMOG index was also validated using the Test Lessons in Reading, but it was based on answering 100% of the questions correctly, so SMOG sometimes predicts higher grade levels than other formulas.9

Although there is no gold standard, a review by Friedman and Hoffman-Goetz9 noted that many of these formulas correlate strongly with one another, and using more than one formula is recommended to increase validity.1 Additional information regarding commonly used readability tests is presented in a thorough review of the topic by Badarudeen and Sabharwal.1

Reading level was calculated for each article by averaging its readability scores. The mean reading level for each article was compared with an 8th grade level using 2-tailed, 1-sample t tests assuming unequal variances. Normality of the sample distribution was assessed with the Shapiro-Wilk test. All statistical analyses were performed using R 3.4.0 software28 (R Foundation for Statistical Computing, Vienna, Austria) with RStudio 1.0.153 software30 (RStudio Inc., Boston, MA, USA). Statistical significance was set at α = 0.05.
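The per-brochure comparison with the 8th grade level can be sketched in Python. This is a minimal illustration, not the authors' R code: it computes the 1-sample t statistic against a reference grade of 8 and a 2-tailed P value, obtaining the P value by numerically integrating the Student t density with Simpson's rule because the Python standard library has no t distribution. In R, the comparable call would be `t.test(scores, mu = 8)`. The example uses the 9 Tennis Elbow scores reported in Table II; the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=10_000):
    """Two-tailed P value: 1 - 2 * P(0 <= T <= |t|), via Simpson's rule."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


def one_sample_t(scores, mu0=8.0):
    """1-sample t test of mean(scores) against a reference grade level mu0."""
    n = len(scores)
    t = (mean(scores) - mu0) / (stdev(scores) / math.sqrt(n))
    df = n - 1
    return t, df, two_tailed_p(t, df)


if __name__ == "__main__":
    # Tennis Elbow scores from Table II (ARI, Coleman-Liau, New Dale-Chall,
    # Flesch-Kincaid, FORCAST, Fry, Gunning Fog, Raygor, SMOG).
    tennis_elbow = [12.2, 12.9, 11.5, 12.6, 11.8, 16.0, 14.6, 13.0, 14.1]
    t, df, p = one_sample_t(tennis_elbow)
    # t is about 10.69 with df = 8, and P is well below .001.
    print(f"t = {t:.2f}, df = {df}, P = {p:.1e}")
```

A quick sanity check on the integration is that t = 2.306 with 8 degrees of freedom, the familiar 2-tailed critical value at α = 0.05, returns a P value of about .05.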

Recommended reading levels range from 1 to 5 grades below the intended audience.3, 22, 23 Most Americans have completed 10 to 13 years of schooling,17 so the 8th grade was chosen as a conservative measure in accordance with the NIH recommendations.34

Results

The results confirmed the hypothesis that the reading level of the brochures would be higher than the 8th grade level. All 9 readability formulas were applied to each of the 6 brochures, with one exception: the Fry and Raygor scores could not be calculated for the “Rehabilitation of the Shoulder” brochure because it contained too many words with high syllable and character counts.

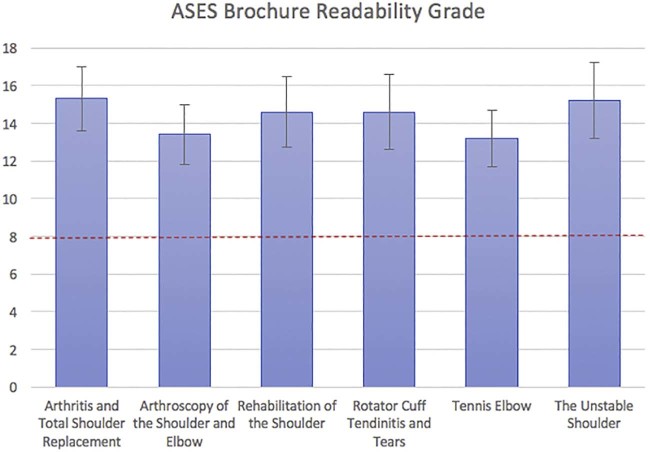

The mean reading level of each of the 6 brochures was higher than the recommended 8th grade reading level (values are grade levels): Arthritis and Total Shoulder Replacement, 15.3 (P < .05); Arthroscopy of the Shoulder and Elbow, 13.4 (P < .05); Rehabilitation of the Shoulder, 14.6 (P < .05); Rotator Cuff Tendinitis and Tears, 14.6 (P < .05); Tennis Elbow, 13.2 (P < .05); and The Unstable Shoulder, 15.2 (P < .05; Fig. 1 and Table II).

Figure 1.

Readability scores (y axis) are shown for each article (x axis) from the American Shoulder and Elbow Surgeons (ASES). The dotted red line represents the recommended National Institutes of Health 8th grade cutoff level. The standard deviation is represented by error bars.

Table II.

Individual reading level scores for each of the 6 articles and 9 tests*

| Assessment | Arthritis and Total Shoulder Replacement | Arthroscopy of the Shoulder and Elbow | Rehabilitation of the Shoulder | Rotator Cuff Tendinitis and Tears | Tennis Elbow | The Unstable Shoulder | Average ± SD | P value |
|---|---|---|---|---|---|---|---|---|
| Automated Readability Index | 15.6 | 13.6 | 13.5 | 14.7 | 12.2 | 14.8 | 14.1 ± 1.3 | <.001 |
| Coleman-Liau | 15.5 | 12.6 | 16.7 | 12.9 | 12.9 | 14.3 | 14.2 ± 1.9 | <.001 |
| New Dale-Chall | 14.0 | 11.5 | 14.0 | 14.0 | 11.5 | 14.0 | 13.2 ± 1.4 | <.001 |
| Flesch-Kincaid | 14.2 | 13.0 | 13.6 | 14.0 | 12.6 | 14.5 | 13.7 ± 0.7 | <.001 |
| FORCAST | 12.4 | 11.1 | 12.5 | 11.3 | 11.8 | 11.6 | 11.8 ± 0.6 | <.001 |
| Fry | 17.0 | 14.0 | N/A | 15.0 | 16.0 | 17.0 | 15.8 ± 1.3 | <.001 |
| Gunning Fog | 16.7 | 16.1 | 17.0 | 17.2 | 14.6 | 17.5 | 16.5 ± 1.1 | <.001 |
| Raygor Estimate | 17.0 | 13.0 | N/A | 17.0 | 13.0 | 17.0 | 15.4 ± 2.2 | .03 |
| SMOG | 15.5 | 15.3 | 14.6 | 15.5 | 14.1 | 15.8 | 15.1 ± 0.7 | <.001 |
| Average ± SD | 15.3 ± 1.7 | 13.4 ± 1.6 | 14.6 ± 1.9 | 14.6 ± 2 | 13.2 ± 1.5 | 15.2 ± 2 | | |
| P value | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | | |

SD, standard deviation; SMOG, Simple Measure of Gobbledygook.

Average readability scores and associated P values for each test are in the far right column. Average readability scores and associated P values for each brochure are in the bottom row.
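The per-test and per-brochure averages can be recomputed directly from the individual scores in Table II, skipping the N/A entries, as a quick consistency check on the summary row and column. The sketch below simply transcribes the table values; the dictionary layout and the function names are illustrative only.

```python
from statistics import mean

BROCHURES = [
    "Arthritis and Total Shoulder Replacement",
    "Arthroscopy of the Shoulder and Elbow",
    "Rehabilitation of the Shoulder",
    "Rotator Cuff Tendinitis and Tears",
    "Tennis Elbow",
    "The Unstable Shoulder",
]

# Individual grade-level scores transcribed from Table II (None = N/A).
SCORES = {
    "Automated Readability Index": [15.6, 13.6, 13.5, 14.7, 12.2, 14.8],
    "Coleman-Liau": [15.5, 12.6, 16.7, 12.9, 12.9, 14.3],
    "New Dale-Chall": [14.0, 11.5, 14.0, 14.0, 11.5, 14.0],
    "Flesch-Kincaid": [14.2, 13.0, 13.6, 14.0, 12.6, 14.5],
    "FORCAST": [12.4, 11.1, 12.5, 11.3, 11.8, 11.6],
    "Fry": [17.0, 14.0, None, 15.0, 16.0, 17.0],
    "Gunning Fog": [16.7, 16.1, 17.0, 17.2, 14.6, 17.5],
    "Raygor Estimate": [17.0, 13.0, None, 17.0, 13.0, 17.0],
    "SMOG": [15.5, 15.3, 14.6, 15.5, 14.1, 15.8],
}


def mean_by_test():
    """Mean score for each readability test across the brochures it could score."""
    return {
        test: mean([v for v in row if v is not None]) for test, row in SCORES.items()
    }


def mean_by_brochure():
    """Mean score for each brochure across the tests that produced a score."""
    return {
        name: mean([row[i] for row in SCORES.values() if row[i] is not None])
        for i, name in enumerate(BROCHURES)
    }


if __name__ == "__main__":
    for test, m in mean_by_test().items():
        print(f"{test}: {m:.2f}")
    for name, m in mean_by_brochure().items():
        print(f"{name}: {m:.2f}")
```

Because N/A entries are skipped, the Rehabilitation of the Shoulder average is based on 7 scores and the Fry and Raygor averages on 5 brochures.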

For each of the 9 tests used, the mean reading level was higher than the recommended 8th grade: Automated Readability Index (grade level) 14.1 (P < .05); Coleman-Liau, 14.2 (P < .05); New Dale-Chall, 13.2 (P < .05); Flesch-Kincaid, 13.7 (P < .05); FORCAST, 11.8 (P < .05); Fry, 15.8 (P < .05); Gunning Fog, 16.5 (P < .05); Raygor Estimate, 15.4 (P < .05); and SMOG, 15.1 (P < .05; Table II).

Discussion

A significant portion of the adult population in America has limited literacy skills,38 and the NIH recommends that patient materials be written at or below the 8th grade level.34 The purpose of this study was to determine whether the ASES patient education brochures meet the NIH recommendations. The results demonstrate that the brochures are written well above the recommended 8th grade level, with mean grade levels ranging from 13.2 to 15.3. This corresponds to a college reading level and is likely higher than the average patient's reading comprehension. Clinicians who supply these brochures may find it useful to review the material with patients during the office visit.

This study has several limitations. The readability formulas do not score visual information, and each of the patient brochures included images that could lower the effective reading level in practice. In addition, the formulas rely on syllable and character counts per word and on word counts per sentence. This is not an ideal method for capturing language complexity, particularly in medical literature. For example, the word “individual” has more syllables and characters than the word “septic,” even though patients are less likely to understand the latter. However, this study used several validated measures of readability, and every individual score exceeded the 8th grade level for every brochure.
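A worked example makes this limitation concrete. Consider a hypothetical 10-word sentence in which “septic” (2 syllables) is replaced by “individual” (5 syllables). Words per sentence do not change, so under the Flesch-Kincaid Grade Level formula only the syllables-per-word term moves, by 3/10 = 0.3 syllables per word:

```latex
\[
\mathrm{FK} = 0.39\,\frac{\text{words}}{\text{sentences}} + 11.8\,\frac{\text{syllables}}{\text{words}} - 15.59
\]
\[
\Delta\mathrm{FK} = 11.8 \times \frac{5 - 2}{10} = 11.8 \times 0.3 = 3.54 \text{ grade levels}
\]
```

That single swap raises the estimated grade of the sentence by about 3.5 levels even though, for patients, it arguably makes the sentence easier to understand; in a longer text the effect is diluted across more words.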

Other studies of orthopedic patient information have found similar results, with reading grade scores ranging from 8.8 to 11.5.2, 11, 29, 31, 35 In a comprehensive study of 170 sports medicine education materials provided by the American Academy of Orthopaedic Surgeons and the American Orthopaedic Society for Sports Medicine (AOSSM), Ganta et al11 found that the average Flesch-Kincaid grade level was 10.2 and that only 15 of 170 materials were at or below the 8th grade level. Similarly, Eltorai et al found that only 7 of 65 AOSSM electronic materials6 and 1 of 16 American Association for Surgery of Trauma electronic materials7 were written below the 8th grade level.

Most patients read at a level 4 grades below the highest grade they completed.26 More than one-third of patients presenting to an emergency department have inadequate or marginal health literacy and cannot understand discharge, follow-up, or medication instructions.39 A detailed National Center for Education Statistics report on adult literacy found that nearly one-quarter of all Americans are at the lowest literacy proficiency level and that most of these individuals do not see themselves as “at risk” for low literacy.17 Therefore, asking patients whether they understand their diagnosis or prompting them for questions is unlikely to identify patients with low proficiency. Patients with low literacy often feel ashamed and are less likely to ask questions or admit when they do not understand something,15, 21, 24 so effective print material can be valuable. In support of the value of print material, a recent study by Madkouri et al19 found that a presurgical information sheet was more informative than oral information given by the surgeon.

The results of readability studies have suggested several areas for improving orthopedic material for patients. The breadth of the problem is unclear. A recent study of common orthopedic patient-reported outcomes by Perez et al25 surprisingly found that more than 90% were at or below the 8th grade level, and all of the recently developed orthopedic-related Patient-Reported Outcomes Measurement Information System (PROMIS) measures were at or below the more stringent 6th grade level. However, a study by Roberts et al29 compared American Academy of Orthopaedic Surgeons readability scores from 2008 to 2014 and found that the mean reading level decreased from 10.4 to 9.3. Approximately 84% of the articles were still written above the 8th grade reading level, indicating that little progress has been made regarding educational material.

There are several ways to continue improving written communication with patients. Some readability software programs provide a list of commonly used complex words and phrases with simpler suggested replacements (Table III). The United States Department of Health and Human Services recommends using short sentences, defining technical terms, and supplementing instructions with visual material. Studies have shown that individuals with limited literacy skills prefer information with short words and sentences.37 In addition, information should be organized so that the most important points are repeated and stand out. A test for assessing patient comprehension of oral communication was validated in 2015 by Giudici et al12 in a group of 21 patients before surgery. To improve oral communication, clinicians can ask patients open-ended questions, and the teach-back method is effective for evaluating a patient's understanding.13

Table III.

Problem words with suggestions for improvement provided by the readability software

| Problem words | Suggestions | Problem words | Suggestions |
|---|---|---|---|
| a number of | a few | incision | cut |
| accompanied | went with | indicated | shown |
| advise | tell | individual | person, single |
| alleviate | lessen | initial | first |
| appropriate | correct | multiple | many |
| as well as | and, also | necessary | needed |
| beneficial | helpful | obtain | get |
| combined | joined | on the part of | for |
| commencement | start | performed | did |
| components | parts | physician | doctor |
| contribute | give, help | portion | part |
| demonstrates | shows | preparation | readiness |
| determine | decide, figure | primary | main |
| duplicate | copy | prior to | before |
| elevation | height | program | plan |
| eliminate | cut, drop | recommended | suggested |
| employment | work, job | relocate | move |
| examine | check | require | need |
| function | role | result in | lead to |
| in conjunction with | with | some of the | some |
| in order to | to, for | throughout the course of | during |
| in some cases | sometimes | visualize | picture |
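A plain-language check of the kind summarized in Table III can be approximated with a simple phrase lookup. The sketch below is hypothetical: it uses only a handful of the Table III entries, matches whole words case-insensitively, and makes no attempt to judge context, so a flagged word may still be the right choice in a given sentence.

```python
import re

# Hypothetical subset of the Table III word list (problem phrase -> suggestion).
PLAIN_WORDS = {
    "a number of": "a few",
    "alleviate": "lessen",
    "in order to": "to, for",
    "incision": "cut",
    "obtain": "get",
    "physician": "doctor",
    "prior to": "before",
    "require": "need",
}


def suggest_plain_words(text):
    """Return (problem phrase, suggestion) pairs in the order they appear in text."""
    hits = []
    for phrase, suggestion in PLAIN_WORDS.items():
        # Whole-word, case-insensitive match so "incision" does not match "incisional".
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            hits.append((match.start(), phrase, suggestion))
    return [(phrase, suggestion) for _, phrase, suggestion in sorted(hits)]


if __name__ == "__main__":
    sentence = (
        "Prior to surgery, your physician may require an incision "
        "in order to obtain access."
    )
    for phrase, suggestion in suggest_plain_words(sentence):
        print(f"{phrase!r} -> {suggestion!r}")
```

For the sample sentence, this flags “prior to,” “physician,” “require,” “incision,” “in order to,” and “obtain,” in that order.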

Conclusion

The ASES patient education brochures are written well above the NIH-recommended 8th grade reading level. Discussing the brochure material with patients while they are in the office may be useful. The findings of this study are similar to other investigations concerning orthopedic patient education material. Supplementary brochures and websites could be a useful source of information, particularly for patients who are deterred from asking questions in the office. Printed material designed for patient education should be edited to a more reasonable reading level. Further review of patient education materials is warranted, and future studies should assess whether progress has been made.

Disclaimer

The authors, their immediate families, and any research foundations with which they are affiliated have not received any financial payments or other benefits from any commercial entity related to the subject of this article.

Footnotes

This study did not require Institutional Review Board approval.

References

1. Badarudeen S., Sabharwal S. Assessing readability of patient education materials: current role in orthopaedics. Clin Orthop Relat Res. 2010;468:2572–2580. doi: 10.1007/s11999-010-1380-y.

2. Beutel B.G., Danna N.R., Melamed E., Capo J.T. Comparative readability of shoulder and elbow patient education materials within orthopaedic websites. Bull Hosp Jt Dis (2013). 2015;73:249–256.

3. Centers for Disease Control and Prevention. Simply put: a guide for creating easy-to-understand materials. 2009. https://www.cdc.gov/healthliteracy/pdf/simply_put.pdf

4. Chall J.S., Dale E. Readability revisited: the new Dale-Chall readability formula. Cambridge, MA: Brookline Books; 1995.

5. El-Daly I., Ibraheim H., Rajakulendran K., Culpan P., Bates P. Are patient-reported outcome measures in orthopaedics easily read by patients? Clin Orthop Relat Res. 2016;474:246–255. doi: 10.1007/s11999-015-4595-0.

6. Eltorai A.E., Han A., Truntzer J., Daniels A.H. Readability of patient education materials on the American Orthopaedic Society for Sports Medicine website. Phys Sports Med. 2014;42:125–130. doi: 10.3810/psm.2014.11.2099.

7. Eltorai A.E., Thomas N.P., Yang H., Daniels A.H., Born C.T. Readability of trauma-related patient education materials from the American Academy of Orthopaedic Surgeons. Trauma Mon. 2016;21:e20141. doi: 10.5812/traumamon.20141.

8. Fox S., Duggan M. Health online 2013. Pew Research Center Internet & Technology; 2013. http://www.pewinternet.org/2013/01/15/health-online-2013/

9. Friedman D.B., Hoffman-Goetz L. A systematic review of readability and comprehension instruments used for print and web-based cancer information. Health Educ Behav. 2006;33:352–373. doi: 10.1177/1090198105277329.

10. Fry E. Fry's readability graph: clarifications, validity, and extension to level 17. J Reading. 1977;21:242–252.

11. Ganta A., Yi P.H., Hussein K., Frank R.M. Readability of sports medicine-related patient education materials from the American Academy of Orthopaedic Surgeons and the American Orthopaedic Society for Sports Medicine. Am J Orthop (Belle Mead NJ). 2014;43:E65–E68.

12. Giudici K., Gillois P., Coudane H., Claudot F. Oral information in orthopaedics: how should the patient's understanding be assessed? Orthop Traumatol Surg Res. 2015;101:133–135. doi: 10.1016/j.otsr.2014.10.020.

13. Health Resources and Services Administration. Health literacy. 2017. https://www.hrsa.gov/about/organization/bureaus/ohe/health-literacy/index.html

14. Kapoor K., George P., Evans M.C., Miller W.J., Liu S.S. Health literacy: readability of ACC/AHA online patient education material. Cardiology. 2017;138:36–40. doi: 10.1159/000475881.

15. Katz M.G., Jacobson T.A., Veledar E., Kripalani S. Patient literacy and question-asking behavior during the medical encounter: a mixed-methods analysis. J Gen Intern Med. 2007;22:782–786. doi: 10.1007/s11606-007-0184-6.

16. Kincaid J.P., Fishburne R.P., Rogers R.R., Chissom B.S. Derivation of new readability formulas (automated readability index, fog count, and Flesch reading ease formula) for Navy enlisted personnel. Research Branch Report 8-75. Memphis-Millington, TN: Chief of Naval Technical Training, Naval Air Station; 1975.

17. Kirsch I.S., Jungeblut A., Jenkins L., Kolstad A. Adult literacy in America: a first look at the findings of the National Adult Literacy Survey. 1993. https://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=93275

18. Ley P., Florio T. The use of readability formulas in health care. Psychol Health Med. 1996;1:7–28. doi: 10.1080/13548509608400003.

19. Madkouri R., Grelat M., Vidon-Buthion A., Lleu M., Beaurain J., Mourier K.L. Assessment of the effectiveness of SFCR patient information sheets before scheduled spinal surgery. Orthop Traumatol Surg Res. 2016;102:479–483. doi: 10.1016/j.otsr.2016.02.005.

20. McCall W.A., Schroeder L.C., Starr R.P. McCall-Crabbs standard test lessons in reading. New York: Teachers College, Columbia University; 1961.

21. Menendez M.E., van Hoorn B.T., Mackert M., Donovan E.E., Chen N.C., Ring D. Patients with limited health literacy ask fewer questions during office visits with hand surgeons. Clin Orthop Relat Res. 2017;475:1291–1297. doi: 10.1007/s11999-016-5140-5.

22. National Cancer Institute. Making health communication programs work. 2004. https://www.cancer.gov/publications/health-communication/pink-book.pdf

23. National Institutes of Health Office of Communications and Public Liaison. Clear & simple. 2015. https://www.nih.gov/institutes-nih/nih-office-director/office-communications-public-liaison/clear-communication/clear-simple

24. Parikh N.S., Parker R.M., Nurss J.R., Baker D.W., Williams M.V. Shame and health literacy: the unspoken connection. Patient Educ Couns. 1996;27:33–39. doi: 10.1016/0738-3991(95)00787-3.

25. Perez J.L., Mosher Z.A., Watson S.L., Sheppard E.D., Brabston E.W., McGwin G., Jr. Readability of orthopaedic patient-reported outcome measures: is there a fundamental failure to communicate? Clin Orthop Relat Res. 2017;475:1936–1947. doi: 10.1007/s11999-017-5339-0.

26. Powers R.D. Emergency department patient literacy and the readability of patient-directed materials. Ann Emerg Med. 1988;17:124–126. doi: 10.1016/s0196-0644(88)80295-6.

27. Prabhu A.V., Crihalmeanu T., Hansberry D.R., Agarwal N., Glaser C., Clump D.A. Online palliative care and oncology patient education resources through Google: do they meet national health literacy recommendations? Pract Radiat Oncol. 2017;7:306–310. doi: 10.1016/j.prro.2017.01.013.

28. R Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2017. www.R-project.org

29. Roberts H., Zhang D., Dyer G.S. The readability of AAOS patient education materials: evaluating the progress since 2008. J Bone Joint Surg Am. 2016;98:e70. doi: 10.2106/JBJS.15.00658.

30. RStudio. Integrated development environment for R. Boston, MA: RStudio, Inc.; 2016. http://www.rstudio.com/

31. Shah A.K., Yi P.H., Stein A. Readability of orthopaedic oncology-related patient education materials available on the Internet. J Am Acad Orthop Surg. 2015;23:783–788. doi: 10.5435/JAAOS-D-15-00324.

32. Sheppard E.D., Hyde Z., Florence M.N., McGwin G., Kirchner J.S., Ponce B.A. Improving the readability of online foot and ankle patient education materials. Foot Ankle Int. 2014;35:1282–1286. doi: 10.1177/1071100714550650.

33. U.S. Government Publishing Office. Plain Writing Act of 2010: Federal Agency Requirements. 2011. http://www.plainlanguage.gov/plLaw/law/index.cfm

34. U.S. National Library of Medicine. How to write easy-to-read health materials: MedlinePlus. 2017. https://medlineplus.gov/etr.html

35. Vives M., Young L., Sabharwal S. Readability of spine-related patient education materials from subspecialty organization and spine practitioner websites. Spine. 2009;34:2826–2831. doi: 10.1097/BRS.0b013e3181b4bb0c.

36. Wang S.W., Capo J.T., Orillaza N. Readability and comprehensibility of patient education material in hand-related web sites. J Hand Surg Am. 2009;34:1308–1315. doi: 10.1016/j.jhsa.2009.04.008.

37. Weiss B.D. Health literacy research: isn't there something better we could be doing? Health Commun. 2015;30:1173–1175. doi: 10.1080/10410236.2015.1037421.

38. Weiss B.D. Health literacy: help your patients understand: a continuing medical education (CME) program that provides tools to enhance patient care, improve office productivity, and reduce healthcare costs. Chicago, IL: American Medical Association, AMA Foundation; 2003.

39. Williams M.V., Parker R.M., Baker D.W., Parikh N.S., Pitkin K., Coates W.C. Inadequate functional health literacy among patients at two public hospitals. JAMA. 1995;274:1677–1682. doi: 10.1001/jama.1995.03530210031026.