Abstract

Choice impulsivity is an important subcomponent of the broader construct of impulsivity and is a key feature of many psychiatric disorders. Choice impulsivity is typically quantified as temporal discounting, a well-documented phenomenon in which a reward's subjective value diminishes as the delay to its delivery is increased. However, an individual's proclivity to—or more commonly aversion to—risk can influence nearly all of the standard experimental tools available for measuring temporal discounting. Despite this interaction, risk preference is a behaviourally and neurobiologically distinct construct that relates to the economic notion of utility or subjective value. In this opinion piece, we discuss the mathematical relationship between risk preferences and time preferences, their neural implementation, and propose ways that research in psychiatry could, and perhaps should, aim to account for this relationship experimentally to better understand choice impulsivity and its clinical implications.

This article is part of the theme issue ‘Risk taking and impulsive behaviour: fundamental discoveries, theoretical perspectives and clinical implications’.

Keywords: choice impulsivity, delay discounting, risk, economic models, functional MRI, psychiatry

1. Introduction

Impulsive behaviour is a core feature of many psychiatric conditions: from the pathognomonic tendency to act without foresight seen in addictive disorders, to the difficulty in suppressing movement once initiated that is characteristic of attention deficit hyperactivity disorder (ADHD), to the thrill-seeking behaviour typical of gambling disorder. While impulsivity is a dimensional symptom that cuts across diagnostic boundaries, it is itself multifaceted. Recently, a panel of experts on impulsivity proposed a taxonomy that broadly divides impulsivity into rapid-response impulsivity, a motoric process characterized by poor inhibitory control, and choice impulsivity, a decision-making process primarily characterized by an inability to delay gratification [1,2]. It is important to note, however, that in addition to the delay of gratification, choice impulsivity can also be related to the tendency to make a premature decision without sufficiently evaluating information or considering its accuracy, a process referred to as reflection impulsivity [3]. Consistent with the Research Domain Criteria (RDoC) initiative of the U.S. National Institute of Mental Health [4], this sub-classification of impulsivity has been argued to align more closely with the distinct neurobiological circuitry that supports each subcomponent process.

Choice impulsivity, in particular, has broad clinical importance. Individuals diagnosed with schizophrenia [5], bipolar disorder [6], impulse control disorders [7], bulimia nervosa [8,9], ADHD [10] and substance use and addictive disorders [11] have all been found to have increased choice impulsivity, while those diagnosed with obsessive compulsive disorder (OCD) and anorexia nervosa may show the converse pattern [12]. The relationship between choice impulsivity and at least a few of these disorders seems to be prospective [13,14], suggesting this subcomponent of impulsivity could have clinical utility as a measurable vulnerability factor for primary prevention.

Choice impulsivity may be assessed with different tools. Certain tasks, such as the information sampling task [15–17] or the information-gathering task [18], report on the inability to fully deliberate and consider solutions to problems (reflection impulsivity) because they index an individual's evaluation of the sufficiency of the information received before making a choice. For the purpose of this opinion piece, we focus on another widely used measure of impatience, which lies at the core of choice theory in economics: delay discounting, also referred to as temporal discounting. Temporal discounting is the observation that a reward delivered after a delay is subjectively worth less than the same reward delivered immediately. Temporal discounting can be modelled mathematically in different ways, but all of these models include some form of discount rate (a notion taken from economics): a constant that captures the idiosyncratic rate at which a reward is devalued as a function of the delay to its receipt. The higher this discount rate, the more a delayed reward will be modelled as devalued by waiting, and hence the more likely an individual will be described as forgoing a valuable later outcome in favour of impatiently selecting a smaller but more immediate alternative: the very signature of choice impulsivity. Temporal discounting is usually measured with intertemporal choice tasks in which individuals are specifically asked to decide between smaller–sooner rewards and larger–later rewards. This relatively simple methodology enables rapid and easy measurement of the discount rate in the laboratory, the field and the clinic, with its first applications to psychiatry, and to substance addiction in particular, dating back to the late 1990s [19,20]. Its applicability is further supported by its reliability and reproducibility: several standard tasks have now been shown to yield consistent results in clinical populations [21], a necessary characteristic of a clinical marker.
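The link between a higher discount rate and more ‘impulsive’ choices can be illustrated with a small simulation. The sketch below is illustrative only and rests on several assumptions that are not taken from the studies cited here: Mazur's hyperbolic form SV = v/(1 + kt) (introduced in section 2), a deterministic decision-maker who always picks the option with the higher discounted value, and a hypothetical menu of smaller–sooner versus larger–later offers with delays in days. The names `MENU`, `hyperbolic_sv` and `n_impulsive_choices` are likewise hypothetical.

```python
# Hypothetical menu of intertemporal choices:
# (sooner amount, sooner delay, later amount, later delay), delays in days.
MENU = [
    (20, 0, 25, 30),
    (20, 0, 30, 30),
    (20, 0, 40, 60),
    (20, 0, 50, 90),
    (20, 0, 60, 180),
]


def hyperbolic_sv(amount: float, delay: float, k: float) -> float:
    """Discounted subjective value under the hyperbolic form v / (1 + k t)."""
    return amount / (1.0 + k * delay)


def n_impulsive_choices(k: float) -> int:
    """Count menu items on which the smaller-sooner option is worth more."""
    count = 0
    for ss_amount, ss_delay, ll_amount, ll_delay in MENU:
        if hyperbolic_sv(ss_amount, ss_delay, k) > hyperbolic_sv(ll_amount, ll_delay, k):
            count += 1
    return count


for k in (0.001, 0.01, 0.015, 0.05):
    print(f"k = {k}: {n_impulsive_choices(k)} of {len(MENU)} smaller-sooner choices")
```

Raising k moves this simulated subject from always waiting to always taking the sooner reward. In practice a stochastic (for example logistic) choice rule is more common and would yield graded choice probabilities rather than counts.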

While separating choice impulsivity from rapid-response impulsivity has received broad translational support, the current disaggregation may still be imperfect [22]. For example, the preference for smaller immediate rewards can, for fairly subtle reasons, also reflect changes in risk-taking or risky behaviour in general, an independent decision construct [23–25]. While individuals colloquially labelled as ‘impulsive’ are more likely to seek and engage in risky situations, it is not clear whether they do so owing to impulsivity or because they exhibit a logically separate and idiosyncratic proclivity to assume risks. Because choices between rewards of different magnitudes are influenced by one's risk attitude, many of the tasks used to measure temporal discount rates necessarily entangle risk attitude and choice impulsivity. Commensurate with this largely theoretical entanglement, a growing number of studies have begun to relate choice impulsivity to pathologically ‘risky’ behaviours, from gambling [26] to suicide [27] and quite prominently to substance use disorders [11], but do not always explore how idiosyncratic risk attitudes and impulsivity, which are both logically and neurobiologically separable, may separately explain the behaviour or pathology of interest.

As with temporal discounting, an individual's general proneness to or avoidance of risky prospects (their risk preference) can also be quantified using simple choice paradigms that are reliable, largely repeatable and well suited to the clinic [28]. Like discount rates, these measures seem to be clinically relevant for many of the same psychopathologies [29–31]. From a theoretical perspective, the economics and finance fields have long acknowledged the possible confounds that risk preferences introduce into the measurement of time preferences and temporal discounting [32–34]. Many studies have explored how individuals' behaviour toward uncertainty technically biases most tools for the measurement of delay discounting [35,36]. This is troubling because, although hundreds of publications employ time preference measures of choice impulsivity, few additionally measure risk preferences with the goal of disambiguating their contribution to temporal discounting and impulsivity. Even fewer have done so with a focus on individual differences. The result may be a systematic (and unnecessary) confounding of risk attitudes and choice impulsivity in some of the literature.

Here, we review these latter studies and examine how they could, and perhaps should, methodologically impact future studies focused on individual differences in impulsive choice in health and disease. Adding precision to our measures of human (and animal) impulsive choice behaviour, we argue, could help facilitate a more accurate dissection of the neural circuitry that supports this behaviour and its disruption in pathological conditions. Better precision could also translate to better concordance between animal and human measures of the same constructs, aiding the development of more targeted behavioural, pharmacological and other therapeutic interventions. Finally, clinically, a more precise taxonomy of what constitutes ‘impulsive’ and ‘risky’ behaviour may be beneficial for multiple reasons. First, it may contribute to improved diagnosis by facilitating the segregation of disorders with an impulsive decision-making aetiology from those with a risky decision-making aetiology, a clustering that would be obscured by interactions between these two dimensions. This quantitative dimensional approach to psychiatric diagnosis research is being actively explored, with some initiatives coming from the U.S. National Institute of Mental Health [37–39] with the goal of better categorizing healthy and pathological human behaviour. Second, it may help better connect clinical phenomenology to neurobiology, pointing to more specific targets for therapeutic intervention. And finally, it could lay the foundation for personalized medicine in psychiatry, by adding precision to individualized measures of maladaptive behaviour.

2. Temporal discounting formalizations for modelling impulsive choice

The preference for smaller–sooner over larger–later rewards has been an intense topic of research by economists, ethologists, psychologists and neuroscientists for over four decades [34,40–46], but the first formal mention of intertemporal choice dates much further back, to at least 1937 [47]. Throughout this rich history, intertemporal choices have been assayed using many different methods and modelled in diverse ways, but almost always by pitting two choice attributes against each other: reward and time. Across the many available measures, preferred choices are always found to be positively correlated with reward amount (I want more) and negatively correlated with delay to reward delivery (I want it sooner). The trade-off between these two attributes is algorithmically formalized by the devaluation, or ‘discounting’, of value as a function of delay, hence the terms delay discounting and temporal discounting. Many different formulations of temporal discounting exist in the literature, but the two that have been most widely adopted are exponential discounting and hyperbolic discounting. The first formulation assumes that delaying or accelerating two dated outcomes by a common amount should not change preferences between them; a subject whose intertemporal preferences behave in this way is referred to as ‘time-consistent’. When discounting is constant in this way (the subjective value of a reward drops by a constant percentage with each additional unit of time), it can be captured by an exponential decay function whose rate of decay is the so-called discount rate k:

$$U_t(c_t) = v\,e^{-kt}$$

This is the most common form of discounting in the economics literature (in which time consistency is a desideratum), where $U_t(c_t)$ is the utility U of consumption c at time t and k is the (constant) discount rate by which the value v of an outcome is reduced at time t. In this framework, an economist might say that steep discounting is not impulsivity per se, but rather a kind of impatience: a rational, constant degree of preference for immediate outcomes that, in patients, we perceive as impulsive in the colloquial sense. However, a significant body of empirical work has shown that this model does not always capture real behavioural data well [32,46,48–52]. Instead, hyperbolic forms of discounting perform better under many, if not most, real-world conditions [53–56] because humans and other animals discount more steeply over short delays than over long delays. Some authors have referred to this inconsistency as ‘present bias’ [33,34,46], but this phenomenon is observed irrespective of whether the sooner alternative is in the present or not [57].
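The difference between time-consistent exponential discounting and hyperbolic discounting can be made concrete with a preference-reversal check: push both options of a choice further into the future by the same amount and see whether the preferred option changes. The sketch below assumes hypothetical offers ($10 now versus $15 in 10 days, then the same pair shifted by 30 days) and hypothetical discount rates; the function names are illustrative. Under the exponential form the ratio of the two discounted values does not depend on the common shift, so preferences cannot reverse, whereas under the hyperbolic form they can.

```python
import math


def exponential_sv(v: float, t: float, k: float) -> float:
    """Exponential discounting: value falls by a constant proportion per unit time."""
    return v * math.exp(-k * t)


def hyperbolic_sv(v: float, t: float, k: float) -> float:
    """Hyperbolic discounting of the form v / (1 + k t)."""
    return v / (1.0 + k * t)


def prefers_sooner(sv, k, sooner, later, shift=0.0):
    """True if the sooner (amount, delay) option has the higher discounted value
    after adding the same front-end delay `shift` to both options."""
    (v_s, t_s), (v_l, t_l) = sooner, later
    return sv(v_s, t_s + shift, k) > sv(v_l, t_l + shift, k)


# Hypothetical offers: $10 at delay 0 versus $15 at a delay of 10 days.
SOONER = (10.0, 0.0)
LATER = (15.0, 10.0)

for name, sv, k in [("exponential", exponential_sv, 0.05),
                    ("hyperbolic", hyperbolic_sv, 0.1)]:
    now = prefers_sooner(sv, k, SOONER, LATER, shift=0.0)
    shifted = prefers_sooner(sv, k, SOONER, LATER, shift=30.0)
    print(f"{name}: prefers sooner now={now}, after 30-day shift={shifted}")
```

With these values the exponential discounter takes the sooner $10 in both framings, while the hyperbolic discounter takes the $10 when it is immediate but switches to the $15 once both are pushed 30 days out (discounted values of 2.5 versus 3.0).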

Many functional forms that produce a hyperboloid discounting function have been proposed in the literature. The one most commonly used in psychology (although alternative forms are used by economists [44,58]) was proposed by Mazur (1987) [59]:

$$SV = \frac{v}{1 + kt}$$

where SV is the discounted subjective value of a reward of amount v delivered after a delay t, and k is the discount rate.
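Because Mazur's equation has a single free parameter, one indifference point is enough to solve for k in closed form: if a subject is indifferent between an immediate amount A and an amount v delayed by t, then A = v/(1 + kt), so k = (v/A - 1)/t. The sketch below assumes a single noise-free indifference point (real tasks use many choices and a stochastic choice model) and, for comparison, also gives the exponential solution k = ln(v/A)/t. The function names and example amounts are hypothetical.

```python
import math


def hyperbolic_k(immediate: float, delayed: float, delay: float) -> float:
    """Solve immediate = delayed / (1 + k * delay) for k (Mazur's form)."""
    return (delayed / immediate - 1.0) / delay


def exponential_k(immediate: float, delayed: float, delay: float) -> float:
    """Solve immediate = delayed * exp(-k * delay) for k."""
    return math.log(delayed / immediate) / delay


# Hypothetical indifference point: $20 now is judged equal to $30 in 25 days.
k_hyp = hyperbolic_k(20.0, 30.0, 25.0)   # 0.02 per day
k_exp = exponential_k(20.0, 30.0, 25.0)  # about 0.0162 per day
print(f"hyperbolic k = {k_hyp:.4f}/day, exponential k = {k_exp:.4f}/day")
```

The two numbers differ because the same behaviour is being described by different functional forms, which is one reason discount rates are only directly comparable within a given model.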

3. Economic theory formalizations of risky choice

Humans and other animals also show idiosyncratic attitudes toward risk, and most are unwilling to tolerate it to some degree. If asked to choose between a certain gain and a risky 50% chance of earning more, there is some amount ‘more’ at which any given subject will accept the risk of loss to earn a chance at the larger gain. Just how much more reward one requires to accept a given level of risk varies from subject to subject, to some degree between gains and losses ([60], but also see [61]), and also seems to change across the lifespan [62,63].

Most individuals can be described as strongly preferring to avoid risks; these individuals require large gains to entice them to accept offers with more uncertain rewards, a pattern called risk aversion. A smaller group of individuals prefer to engage in risk and accept risky offers even when a certain alternative is of nearly equivalent value. People who behave in this way are referred to as risk seeking. Other individuals are indifferent toward risk, making their decisions based only on the actuarial long-term expected value of the options before them, and are hence called risk-neutral. This relationship between risk (probability) and reward magnitude lies at the heart of essentially all economic theories of choice, such as expected utility theory [64,65], rank-dependent utility or prospect theory [66]. Because these models make roughly equivalent predictions over a sizable range of possible choice options, for the purposes of this paper we focus on expected utility theory accounts of risk preference.

The concept of economic utility, which we will refer to (using the neurobiological convention) as subjective value, was first introduced by Daniel Bernoulli (1738) and hinges on the idea that the subjective well-being (or suffering) derived from a payoff is not precisely equal to the objective size of the gain or loss [67]. Instead, the objective outcome is presumed to be internally and individually processed to yield a subjective experience, in much the way that classical psychophysics relates luminance in the outside world to the percept of brightness. This internal representation of subjective value is assumed to vary from individual to individual (and possibly from context to context).

Economists refer to the mathematical function that, like Weber's law, maps the objective amount of any reward or punishment (e.g. money) to the subjective well-being derived from obtaining it as the utility function. For clarity, we concentrate here on the domain of rewards, or in the language of economics: gains. Consider an individual whose utility function is (like a Weber curve) concave; that is, initial increments in the objective amount of a monetary reward lead to large increments in utility, but subsequent identical increments lead to smaller and smaller increases in utility. Her marginal utility is said to be diminishing as she obtains more money. Now consider what happens when this individual evaluates two possible options: a certain gain of $100 and a lottery with a 50% chance of $200 and a 50% chance of $0. Although the objective expected values of the two options are the same (0.5 × 200 = 100), her diminishing marginal utility means that the expected utility of the gamble is smaller for her than that of the certain payoff, because in a subjective sense 200 is less than twice 100. This explains why she might avoid the risky option and choose the safe outcome instead of the lottery, despite the fact that to an actuary they are equivalent. Conversely, a risk seeking individual will have a convex utility function, with increasing marginal utility, and therefore will accept many gambles instead of the certain outcome. A risk-neutral individual, who is indifferent to risk, will simply choose the outcome with the largest objective expected value, as her mapping between value and utility will be linear. This brief explanation captures how risk preference relates to the shape of the utility function.
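The worked example above, a sure $100 versus a 50% chance of $200, can be reproduced in a few lines. The sketch assumes three illustrative utility functions that the text does not specify: a square root (concave), the identity (linear) and a square (convex). It computes the expected utility of each option as the probability-weighted sum of utilities, anticipating the formal definition that follows; the names `SURE_THING`, `GAMBLE` and `choice` are hypothetical.

```python
import math


def expected_utility(lottery, utility):
    """Probability-weighted sum of utilities; lottery is a list of (p, payoff)."""
    return sum(p * utility(x) for p, x in lottery)


SURE_THING = [(1.0, 100.0)]          # $100 for certain
GAMBLE = [(0.5, 200.0), (0.5, 0.0)]  # 50% chance of $200, otherwise $0

# Illustrative utility functions (assumptions, not taken from the text).
UTILITIES = {
    "concave (risk averse)": math.sqrt,
    "linear (risk neutral)": lambda x: x,
    "convex (risk seeking)": lambda x: x ** 2,
}


def choice(utility):
    """Return which option a decision-maker with this utility function prefers."""
    eu_sure = expected_utility(SURE_THING, utility)
    eu_gamble = expected_utility(GAMBLE, utility)
    if eu_sure > eu_gamble:
        return "sure thing"
    if eu_gamble > eu_sure:
        return "gamble"
    return "indifferent"


for label, u in UTILITIES.items():
    print(f"{label}: {choice(u)}")
```

With the square root, the gamble's expected utility is about 7.07 against 10 for the sure $100, so the concave decision-maker takes the sure thing even though both options have an expected value of $100.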

Formally, the expected utility of a prospect can be expressed as:

$$EU = \sum_i p_i\,U(x_i)$$

where a risky prospect is evaluated as the sum, over its possible outcomes i, of each outcome's probability $p_i$ multiplied by $U(x_i)$, the subjective utility of the payoff $x_i$, rather than as the purely objective sum of the products of $p_i$ and $x_i$. As with discounting, the utility function U can take many functional forms. One of the most common in the literature is the constant relative risk aversion (CRRA), or power utility, family of functions [68], of the form:

$$U(x) = x^{r}$$

where r captures the curvature of the utility function and, by extension, is a measure of an individual's risk attitude. A ‘risk-neutral’ individual has r = 1, such that the mapping between value and utility is linear. A ‘risk averse’ individual will have a concave utility function, with diminishing marginal utility, where r < 1. Conversely, a ‘risk seeking’ individual will have a convex utility function, with increasing marginal utility, where r > 1 [69].
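Under the power form U(x) = x^r, the curvature can be read off a single certainty equivalent: if a subject is indifferent between a sure amount CE and a lottery paying x with probability p (and nothing otherwise), then CE^r = p x^r, so CE = x p^(1/r) and r = ln p / ln(CE/x). The sketch below implements this inversion under the simplifying assumption of one noise-free certainty equivalent; laboratory and clinical tasks instead fit r to many binary choices. Function names, the tolerance and the example lottery are hypothetical.

```python
import math


def certainty_equivalent(p: float, x: float, r: float) -> float:
    """Sure amount with the same utility as a p chance of x under U(x) = x**r."""
    return x * p ** (1.0 / r)


def r_from_certainty_equivalent(p: float, x: float, ce: float) -> float:
    """Invert CE = x * p**(1/r); requires 0 < p < 1 and 0 < ce < x."""
    if not (0.0 < p < 1.0 and 0.0 < ce < x):
        raise ValueError("need 0 < p < 1 and 0 < ce < x")
    return math.log(p) / math.log(ce / x)


def risk_attitude(r: float, tol: float = 1e-9) -> str:
    """Label the curvature as in the text: r < 1 averse, r = 1 neutral, r > 1 seeking."""
    if r < 1.0 - tol:
        return "risk averse"
    if r > 1.0 + tol:
        return "risk seeking"
    return "risk neutral"


# Hypothetical certainty equivalents for a 50% chance of $200.
for ce in (70.0, 100.0, 120.0):
    r = r_from_certainty_equivalent(0.5, 200.0, ce)
    print(f"CE = ${ce:.0f}: r = {r:.2f} ({risk_attitude(r)})")
```

A certainty equivalent below the lottery's expected value ($100 here) implies r < 1, and one above it implies r > 1.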

The shape of the utility function is of interest to psychiatry because it provides a computational method for quantifying the way in which individuals subjectively value positive or negative outcomes and how this dictates their engagement with, or avoidance of, uncertainty. For instance, while most people are somewhat risk averse, a patient with a phobia may be extremely (pathologically) risk averse [70] and conversely, an individual with a gambling disorder might exhibit risk-loving behaviour [71]. The fact that we can measure the curvature of the utility function using simple choice tasks and that this measure seems to translate to behaviour in realms other than preferences over gambles suggests that this can serve as a potentially powerful clinical tool.

4. Theoretical and empirical separation of risk and time preferences

As alluded to above, traditional approaches to modelling temporal discounting ignore potential nonlinearities in the utility function, and hence individual differences in risk attitude, by computing the discount rate while specifically assuming that subjects are risk neutral. This is particularly problematic as a significant body of work has shown there is wide heterogeneity in the shape of individual utility functions (a diversity in risk preferences) and that, by and large, most individuals exhibit some degree of risk aversion—i.e. concavity in their utility function for most reward types [72].

The theoretical challenge presented by the potentially confounding influence of nonlinear utility upon estimates of the discount rate was originally brought to the general attention of economics and psychology by Frederick et al. in 2002 [33]. Since then, numerous attempts have been made to elicit separable risk and time preferences. In a series of seminal papers [73,74], Andersen and colleagues proposed a measure of the discount function conditional upon utility, obtained by first estimating the r curvature parameter with an independent risk task. Using this methodology, the authors ‘corrected’ their collective estimate of the discount rate of a sample of 253 adult Danes from an annual discount rate of 25.2% to 10.1%, concluding that by ignoring the risk averse preferences of this population, they had overestimated the discount rate (and hence people's choice impulsivity) by a large margin. Similarly, Laury et al. [75] reported a reduction from an annual discount rate of 55.5% to 14.1% after correcting for risk with a different task, and Takeuchi [76] showed that imposing linear utility increased discount rate estimates and resulted in poorer model fits to behavioural data.
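Why ignoring concavity inflates the discount rate can be seen in a short derivation. Assume, for illustration only, that a subject's choices are generated by discounting power utility $v^r$, and that the analyst observes a single indifference point between an immediate amount $A$ and an amount $v$ at delay $t$. Refitting the same indifference point with linear utility ($r = 1$) then yields a distorted estimate $\hat{k}$:

$$
\begin{aligned}
\text{Exponential:}\quad & A^{r} = v^{r} e^{-kt} \;\Rightarrow\; A = v\,e^{-kt/r} \;\Rightarrow\; \hat{k} = \frac{k}{r},\\[4pt]
\text{Hyperbolic:}\quad & A^{r} = \frac{v^{r}}{1+kt} \;\Rightarrow\; \frac{v}{A} = (1+kt)^{1/r} \;\Rightarrow\; \hat{k} = \frac{(1+kt)^{1/r} - 1}{t}.
\end{aligned}
$$

For a risk averse subject ($r < 1$) both expressions exceed $k$ (in the hyperbolic case by Bernoulli's inequality, since $(1+y)^{a} \ge 1 + ay > 1 + y$ for $a = 1/r > 1$ and $y = kt > 0$), and for a risk seeking subject ($r > 1$) both fall below $k$. This is consistent with the overestimation reported above for predominantly risk averse samples; the size of the distortion in any real dataset depends on the task and the estimation method.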

We note that there have been important contributions on this issue of the separability of time and risk preferences that have proposed solutions outside of the standard expected utility-based approach that we describe here [77]. Primarily, the ordinal certainty equivalent hypothesis [78] and the use of non-expected utility functions [36,79,80] have provided alternative ways to make risk and time preference parameters identifiable. Andreoni & Sprenger [81], however, approached the separability of risk and time preferences quite traditionally by systematically manipulating risk in an intertemporal choice task, and found that preferences differed when both the immediate and delayed rewards were probabilistic relative to when these rewards were certain [82]. They concluded (1) that time preferences are distinct from risk preferences because they change when risk is explicitly present and (2) that utility under risk may be more concave than utility under certainty. This second point is shared by other authors [83–86]. For instance, Abdellaoui and colleagues [87] found differences in the curvature of the utility function between risk and time preferences measured separately. Other authors suggest that risk must play a role in the evaluation of future choices regardless of time preferences, and that this may be accounted for by prospect theory [36,88]. This implies that utility under risk cannot be equated with the utility at each time step (instantaneous utility) of a discounting function. This is interesting in light of the brain imaging data discussed below, which instead show extensive neural overlap between the representation of subjective value under risk and that of discounted subjective value, and which potentially argue against this perspective.

In any case, while these detailed and advanced debates in the literature are of deep interest, all of these authors converge on the notion that instruments commonly aimed at measuring impulsivity can be entangled with risk attitude. For this reason, we focus next on a study of how risk preferences can bias estimates of discount rates obtained in the way they are traditionally explored in many psychology and psychiatry studies (using simple binary choice tasks), an approach that, as in Andersen et al. [73], assumes an equivalence of utility across risk and time.

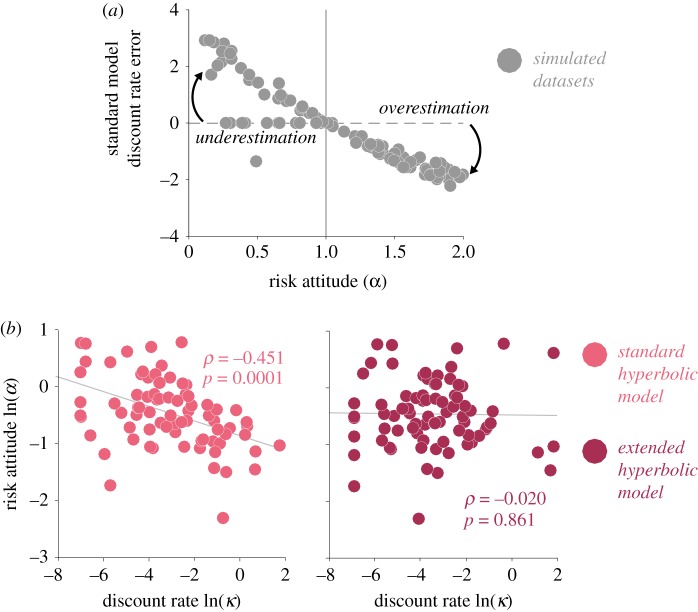

We recently demonstrated that ignoring risk attitudes when estimating individual discount rates not only overestimates these rates, but also introduces a systematic bias, whereby risk averse individuals appear more impulsive, and risk tolerant individuals less impulsive, than they really are [89]. We simulated discounting behaviour using a wide distribution of r curvature values for the utility function and showed that the predicted pattern of bias was matched by the behaviour of a community sample of individuals with diverse risk preferences (figure 1a). Our simple binary choice tasks (designed to separately capture risk and time preferences as in Andersen et al. [73]) have high translational value because, unlike the choice lists or convex time budgets described in the economics literature [90], they are of the kind that have been employed in studies with other species such as rodents and non-human primates. Furthermore, they do not require any sophistication or knowledge of finance to complete. In that study, we used an independent risk task to estimate the curvature r of each subject's utility function (denoted α in figure 1). This parameter, which corresponds to the individual's risk attitude, was then imputed into the discount function (exponential or hyperbolic) such that discounting is computed on utility $v^r$ rather than on value v, as in the following equation for the hyperbolic case:

$$SV = \frac{v^{r}}{1 + kt}$$

By employing this methodology, we showed that our extended model, which discounts utility rather than value, accounted better for the behaviour of our real-world sample. More importantly, we were able to fully decorrelate the estimated discount rate (k, denoted κ in figure 1) from the estimated risk preference parameter, giving us confidence that we can measure dissociable aspects of subjects' decision-making behaviour (figure 1b).
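The direction of the bias, and the benefit of imputing r, can be reproduced with a simplified, deterministic sketch. Unlike the binary-choice fitting used in the study described above, it assumes that noise-free indifference points are observed at several delays and fits k by least squares over a grid; the delayed amount, delays, grid, true parameter values and function names are all hypothetical. Indifference points are generated from the extended hyperbolic model and then refit either assuming linear utility (the standard Mazur model, r = 1) or with the true r imputed, as if it had been estimated from an independent risk task.

```python
from typing import List

V = 100.0                                       # hypothetical delayed amount ($)
DELAYS = [7.0, 14.0, 30.0, 60.0, 120.0, 180.0]  # hypothetical delays (days)
K_GRID = [i / 10000 for i in range(1, 2001)]    # candidate k from 0.0001 to 0.2 per day
TRUE_K = 0.01                                   # hypothetical generating discount rate


def indifference_points(k: float, r: float) -> List[float]:
    """Immediate amount A with A**r == V**r / (1 + k*t) at each delay t.

    r = 1 gives the standard (Mazur) hyperbolic model; r != 1 the extended model.
    """
    return [V * (1.0 + k * t) ** (-1.0 / r) for t in DELAYS]


def sse(observed: List[float], k: float, r: float) -> float:
    """Sum of squared differences between observed and predicted points."""
    predicted = indifference_points(k, r)
    return sum((a - b) ** 2 for a, b in zip(observed, predicted))


def fit_k(observed: List[float], r: float) -> float:
    """Least-squares estimate of k over K_GRID, holding the curvature r fixed."""
    return min(K_GRID, key=lambda k: sse(observed, k, r))


results = {}
for true_r in (0.5, 1.0, 1.5):  # risk averse, risk neutral, risk seeking
    observed = indifference_points(TRUE_K, true_r)
    k_standard = fit_k(observed, r=1.0)     # ignores risk attitude (linear utility)
    k_extended = fit_k(observed, r=true_r)  # r imputed from an independent risk task
    results[true_r] = (k_standard, k_extended)
    print(f"r = {true_r}: standard k = {k_standard:.4f}, extended k = {k_extended:.4f}")
```

With these settings the standard model returns a k above 0.01 for the risk averse agent and below 0.01 for the risk seeking agent, and it cannot drive the fitting error to zero because $(1 + kt)^{1/r}$ is not hyperbolic in t; the extended model, given the correct r, recovers the generating k exactly.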

Figure 1.

(a) Bias in the discount rate estimated from simulated intertemporal choice data at different levels of risk preference (from risk averse to risk seeking). Bias is computed as the difference between the discount rates estimated by the standard hyperbolic model (Mazur [59]) and by the extended hyperbolic model (Lopez-Guzman et al. [89]), which discounts utility rather than value. For risk averse agents (α < 1), the standard model overestimates the discount rate, and for risk seeking agents (α > 1), it underestimates the discount rate. (b) Correlation between the natural logarithm of the discount rate (κ) and the natural logarithm of the risk attitude parameter (α) obtained from the standard hyperbolic model (light colour) and the extended hyperbolic model (dark colour). Spearman's ρ and p-value for each correlation are reported. The extended hyperbolic model, which takes risk attitude into account, successfully decorrelates the two parameters. Adapted with permission from [89]. (Online version in colour.)

5. The neural implementation of temporal discounting and risk preference

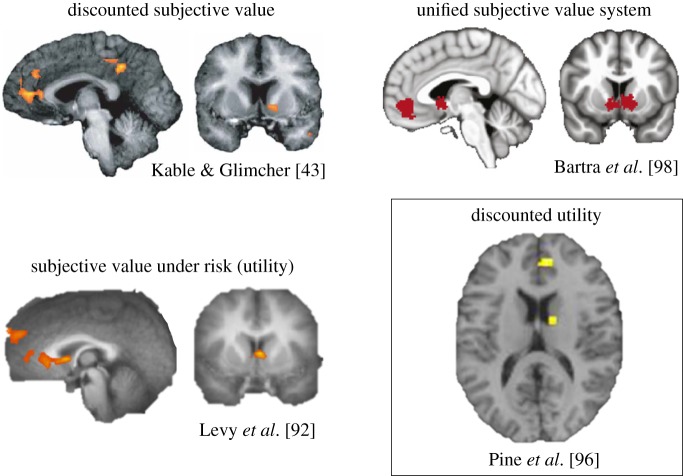

Numerous studies using functional magnetic resonance imaging (fMRI) in human subjects have examined the neural correlates of intertemporal choice and discounted subjective value [43,91–94], and separately, of risky choice and subjective value modulated by uncertainty [95–99]. A handful have additionally begun to examine how risk preferences and the discount rate might interact at a neural level to drive impulsive choice [100–102].

Early work by McClure and colleagues [86] on the neural basis of intertemporal choice seemed to suggest the engagement of two neural systems in the decision to select a larger later reward, an account some have suggested reflects a competition between two ‘selves’, one that pushes behaviour towards less patience (i.e. towards the immediate reward) and another that pushes behaviour towards more patience. This initial conclusion was based on a fairly complex multi-step inference: one set of brain regions, the ventromedial prefrontal cortex (VMPFC), ventral striatum and posterior cingulate cortex (PCC), was more active when subjects chose the immediate relative to the delayed options in an intertemporal choice experiment [71,86], while another set of regions, the dorsolateral prefrontal cortex (DLPFC) and posterior parietal cortex, was more active when subjects made more difficult choices than when they made easier ones.

More recent studies agree that a single neural system is used to evaluate both immediate and delayed rewards, with each reward's value determined by how far away in time it is to be received. Kable & Glimcher [43] first tested this idea by estimating individual subjects' discount rates from the choices they made in an intertemporal choice experiment that included, in a nested fashion, critical elements of the approach used by McClure and colleagues. The authors then used each subject's own discount rate to compute the discounted subjective value of each option on every trial of the experiment. Using model-based fMRI, this study revealed that activity in the VMPFC, ventral striatum and PCC, a small set of regions that McClure et al. [86] had all previously observed while subjects chose immediate rewards, increased (in this case, hyperbolically) exactly as the discounted subjective value of the offer increased. This finding is now widely seen as evidence that a single system represents discounted subjective value. In a follow-up study, Kable & Glimcher [57] showed that this was also true when subjects decided between two delayed rewards (where there could be no ‘present bias’) rather than between a delayed and an immediate reward. In this study, subjects anchored their discounting behaviour to the soonest possible reward, not simply to the present, and the magnitude of activation in the VMPFC, striatum and PCC was not higher when an immediate reward could be chosen relative to when only delayed rewards could be chosen. Instead, activity in these regions encoded the subjective value of both immediate and delayed rewards. Together, these two studies, along with a slew of subsequent work, provide what is now typically seen as unequivocal evidence that the VMPFC, striatum and PCC track discounted subjective value and not simply immediacy, impulsivity or a ‘hot’ response to a reward.

While both perspectives (dual system versus unified system) have found behavioural support, the latter provides a more parsimonious account, from a neurobiological perspective, of how we might decide about the future. Indeed, further work continues to show that the VMPFC, striatum and PCC are not exclusively or disproportionately more active for immediate rewards. Instead, these regions are thought to form the core of the brain's valuation system, a set of brain regions that track the value of choice options across a variety of decision contexts and reward types, including delayed and risky rewards [98] (figure 2).
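The model-based approach described above turns each subject's fitted discount rate into a trial-by-trial regressor. The minimal sketch below assumes Mazur's hyperbolic form, hypothetical delayed offers and hypothetical subject-specific discount rates; it computes the discounted subjective value of the delayed offer on each trial and mean-centres it, a common convention for parametric modulators in fMRI general linear models. Convolution with a haemodynamic response function and the rest of the model are omitted, and `sv_regressor` is a hypothetical name, not part of any fMRI package.

```python
from typing import List, Tuple


def sv_regressor(trials: List[Tuple[float, float]], k: float) -> List[float]:
    """Mean-centred hyperbolic subjective value of the delayed offer on each trial.

    trials: (amount, delay_in_days) of the delayed offer shown on each trial.
    k: the subject's own discount rate, estimated beforehand from their choices.
    """
    values = [amount / (1.0 + k * delay) for amount, delay in trials]
    mean = sum(values) / len(values)
    return [v - mean for v in values]


# Hypothetical delayed offers, identical for both hypothetical subjects.
TRIALS = [(25.0, 10.0), (40.0, 60.0), (30.0, 30.0), (60.0, 180.0)]

patient = sv_regressor(TRIALS, k=0.005)   # shallow discounter
impatient = sv_regressor(TRIALS, k=0.05)  # steep discounter
print("patient:  ", [round(x, 2) for x in patient])
print("impatient:", [round(x, 2) for x in impatient])
```

The same four offers produce differently ordered regressors: the $60 offer at 180 days has the highest subjective value for the shallow discounter and the lowest for the steep one, which is why the regressor is built from each subject's own k.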

Figure 2.

Neural correlates of discounted subjective value, subjective value under risk (or undiscounted ‘utility’) and discounted utility. The three types of value signals are found in the ventromedial prefrontal cortex (VMPFC) and striatum, among other regions. These two regions form the core of the brain's unified subjective valuation system as identified in meta-analytic data. Adapted and reprinted with permission from Kable & Glimcher [43], Levy et al. [92], Bartra et al. [98] and Pine et al. [96]. (Online version in colour.)

A corollary to the unified system view, as it pertains to our understanding of the neurobiology of intertemporal choice and the discount rate, is that if the same brain system represents individual risk preferences (and hence the curvature of the utility function), then this prior evidence for a representation of discounted subjective value might in fact be accounted for by so-called probability discounting: the notion that all preference for immediate rewards stems from fear that any delay is itself intrinsically risky. Because the decision-maker might believe that the probability of acquiring a given reward decreases as the delay to its receipt increases, fMRI correlates of discounted subjective value might reflect the influence of probability and subjects' risk preferences rather than delay per se. To address this possibility, a handful of fMRI studies have simultaneously examined subjects' preferences for risk and delay [99,100], the unique contribution of delay to intertemporal choice holding risk constant [97] and the neural correlates of discounted subjective value adjusted for the curvature of subjects' utility functions [96]. First, these studies show that the preference for immediate rewards cannot be fully accounted for by fear of risk, suggesting that it may be possible to isolate the unique contribution of delay to choice at a neural level. Second, while these studies do show overlap in the neural basis of decision-making about delayed and risky rewards, they also reveal some differences. Using a formal conjunction analysis, Peters & Büchel [100] found that, as predicted by the unified system perspective, the same striatal voxels represented the subjective value of delayed and risky rewards, with others showing similar findings in the VMPFC in a larger sample [100]. Interestingly, in this set of studies, differences between delay and risk were observed in the PCC (also typically considered part of the brain's valuation system) and the intraparietal sulcus, which might be related to the episodic memory and future thinking components of intertemporal choice. This finding suggests that activity in these specific brain areas may be more consistent with theories in which utility for risk and utility for time are not equivalent [82,87].
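The probability-discounting account can be stated precisely under simplifying assumptions. Suppose, for illustration, that a risk-neutral decision-maker believes a promised reward $v$ may be lost at any moment with a constant hazard rate $\lambda$, so that it survives to delay $t$ with probability $e^{-\lambda t}$. If the hazard rate is itself uncertain and, as a further illustrative assumption, follows an exponential distribution with mean $\mu$, averaging over $\lambda$ gives a hyperbola:

$$
\begin{aligned}
\text{Known hazard:}\quad & \mathbb{E}[\text{value}] = v\,e^{-\lambda t},\\[4pt]
\text{Uncertain hazard:}\quad & \mathbb{E}[\text{value}] = v\int_{0}^{\infty} e^{-\lambda t}\,\frac{1}{\mu}\,e^{-\lambda/\mu}\,d\lambda = \frac{v}{1+\mu t}.
\end{aligned}
$$

The first line has exactly the form of the exponential model with $k = \lambda$, and the second has the form of Mazur's hyperbola with $k = \mu$. This illustrates why discounting estimated from delayed choices alone may not distinguish impatience from perceived risk, and why the studies above manipulated or modelled risk and delay jointly.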

Taking another approach to identifying where the neural processing of risk and delay overlaps, and where it might diverge, Luhmann et al. [97] designed a risky choice paradigm in which the only difference between two conditions was how long subjects knew they would have to wait to receive the outcome of their choice. This allowed the authors to examine the neural correlates of expected value modulated by delay. Brain regions common to the immediate and delayed conditions included, among others, the VMPFC, insula and PCC. Most notably, regions unique to the delayed condition included parts of the medial temporal lobe (which, like the parietal regions noted above, is implicated in episodic memory) as well as the DLPFC [97].
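Statements about regions "common to" two conditions, like the formal conjunction analysis mentioned earlier, rest on a simple voxel-wise logic. The sketch below shows one standard version, the minimum-statistic conjunction, on toy t-values. The data, threshold and function names are hypothetical, and we do not claim this reproduces the exact procedure or multiple-comparison correction used by Luhmann et al. [97] or Peters & Büchel [100]; real analyses operate on whole-brain maps.

```python
def conjunction_mask(t_a, t_b, threshold):
    """Minimum-statistic conjunction: True where BOTH t-values exceed
    `threshold`, i.e. min(t_a, t_b) > threshold at that voxel."""
    if len(t_a) != len(t_b):
        raise ValueError("t-maps must contain the same voxels")
    return [min(a, b) > threshold for a, b in zip(t_a, t_b)]


def only_in_first(t_a, t_b, threshold):
    """Descriptive 'A only' mask: above threshold in A but not in B.

    Caution: this is not a test of A > B. A difference in significance is
    not a significant difference; a formal claim needs the direct A - B
    contrast."""
    if len(t_a) != len(t_b):
        raise ValueError("t-maps must contain the same voxels")
    return [a > threshold and not b > threshold for a, b in zip(t_a, t_b)]


if __name__ == "__main__":
    # Hypothetical voxel-wise t-values (flattened) for an expected-value
    # regressor in each condition, and an illustrative threshold.
    t_immediate = [4.0, 3.5, 0.5, 5.0]
    t_delayed = [3.8, 1.0, 4.2, 4.5]
    threshold = 3.1
    print("common:", conjunction_mask(t_immediate, t_delayed, threshold))
    print("delay only:", only_in_first(t_delayed, t_immediate, threshold))
```

Requiring the minimum of the two statistics to pass threshold means each effect must be individually reliable at that voxel, which is the sense in which a region can be said to represent both quantities.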

However, although risk and delay were assumed to be independent and non-interacting in Luhmann et al. [97], this is unlikely to be the case given strong theoretical and empirical evidence to the contrary. To account directly for this interaction, Pine et al. [96] performed a traditional intertemporal choice experiment but, critically, using procedures similar to those described above in Lopez-Guzman et al. [89], incorporated each subject's idiosyncratic utility function curvature into the estimation of his or her discount rate. This allowed the authors to construct, for each subject, a risk preference-adjusted discounted subjective value regressor for their fMRI analyses. Controlling for undiscounted utility (the subjective value of the delayed option adjusted for the subject's utility function curvature, but without the effect of delay), the VMPFC and striatum emerged as the only regions that tracked discounted utility (the subjective value of the delayed option adjusted for both utility function curvature and the effect of delay) [96]. Interestingly, and providing further support for the unified system perspective, a conjunction analysis again revealed that the same striatal voxels represented undiscounted and discounted utility (with each side of the conjunction statistically controlling for the other; figure 2), suggesting that this region might support the integration of undiscounted utility and delay that drives temporal discounting. Also of note, and consistent with the behavioural findings of Lopez-Guzman et al. [89], the temporal discounting model that best fit the neural data was the modified hyperbolic model, providing converging evidence that the brain may implement this particular algorithm [89,96].
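The two regressors described for Pine et al. [96] can be sketched as follows. For illustration only, we assume a power utility u(x) = x^α and hyperbolic discounting 1/(1 + kD); the functional forms used in [96] may differ. "Controlling for" undiscounted utility is implemented here by least-squares residualization (serial orthogonalization), one common way to isolate the unique contribution of a regressor, and not necessarily the method of the original study. Trial values, parameters and function names are hypothetical.

```python
def power_utility(amount, alpha):
    """Utility with curvature alpha (alpha < 1: concave, i.e. risk averse)."""
    return amount ** alpha


def discounted_utility(amount, delay, alpha, k):
    """Utility of a delayed amount under hyperbolic discounting."""
    return power_utility(amount, alpha) / (1.0 + k * delay)


def residualize(y, x):
    """Remove from y its least-squares fit on an intercept and x.

    The residual is the part of y that x cannot explain, so it has zero
    mean and zero covariance with x by construction."""
    n = len(y)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return [yi - mean_y - slope * (xi - mean_x) for xi, yi in zip(x, y)]


if __name__ == "__main__":
    # Hypothetical trials (delayed amounts, delays in days) and one subject's
    # hypothetical behaviourally estimated parameters.
    amounts = [20, 35, 50, 80, 120]
    delays = [0, 14, 30, 60, 120]
    alpha, k = 0.7, 0.02

    undiscounted = [power_utility(a, alpha) for a in amounts]
    discounted = [discounted_utility(a, d, alpha, k)
                  for a, d in zip(amounts, delays)]
    # Parametric regressor for discounted utility over and above
    # undiscounted utility.
    unique_discounted = residualize(discounted, undiscounted)
    print([round(v, 3) for v in unique_discounted])
```

Entering both regressors in the same general linear model gives the discounted-utility coefficient the same "controlling for the other" interpretation without explicit residualization (the Frisch–Waugh–Lovell result); the explicit version is shown only to make the logic visible.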

In addition to these studies aimed at isolating the neural representation of discounted utility, another line of work tests whether distinct sub-circuits within the broader valuation circuit independently support risk and time preferences. Such sub-circuits may not be detectable at the resolution of current human neuroimaging techniques but may be amenable to investigation in animal studies. Recent studies indeed suggest that impulsive choice as measured by temporal discounting could be subserved by projections from ventral and dorsal striatum to more lateral areas of prefrontal cortex, while risky choice could be subserved by more ventromedial corticostriatal projections involving the ventral striatum, medial orbitofrontal cortex, VMPFC and anterior cingulate cortex [102]. However, this work has not yet attempted to account for the interaction between risk and time preferences. Accounting for this interaction could allow a more precise dissection of the neurobiology of impulsive choice at the level of sub-circuits and neurotransmitter systems.

6. Implications for psychiatry

Much of the proliferation of time preference measures of impulsive choice in the psychiatric literature stems from a heightened interest in the relationship of this construct to unhealthy behaviour [103]. On average, temporal discounting is higher (although distributions overlap) in individuals with substance use disorders, including those involving nicotine, alcohol and illicit substances such as heroin, cocaine and methamphetamine [11], than in controls. This finding extends to obesity and overeating [104] and, notably, to risky sexual behaviour [105]. These observations have led several experts to suggest that discount rates could be a transdiagnostic marker of ‘pathological self-control’ [106] and as such could serve as a predictive measure in at-risk populations [107]. Beyond diagnosis and prognosis, discount rates may also track therapeutic response in addiction [108] and obesity [109]. Recent reports suggest that within some clinical populations, individual differences in discounting could also help stratify patients by their risk of treatment failure, which may prove useful for personalized treatment selection [110]. Perhaps one of the most compelling arguments for the focus on discounting measures in psychiatry is that their neural correlates are now fairly well known, so these behavioural tests can reflect, in a more measurable way, the current biological state of the neurocircuitry that supports them. For example, ample evidence of functional interaction between the DLPFC and key areas of the valuation network such as the VMPFC [111–113] indicates that the DLPFC may modulate the contextual flexibility of value representation in intertemporal choice. Interventions that target the DLPFC could therefore strengthen this modulatory effect and prove useful therapeutically [114,115]. A cheap behavioural readout of this neurobiological effect would thus be of great value to psychiatry.

A key implication of the work discussed here is that impulsive choice as measured by standard test instruments can be biased by an individual's preference for risk: individuals who are more risk averse can be misidentified as more impulsive if risk attitudes are not measured directly. Fortunately, evidence from neuroimaging and from studies of impulse control disorders suggests that risk preferences do not account for the majority of the variance in discount rates, even when the empirical techniques used entangle the two measurements; rather, the two appear to be partly independent contributors to impulsive choice. We are therefore not suggesting that all results reported in the literature are invalidated because the measurement techniques used may confound risk and time preferences. However, in some psychiatric conditions, risk and time preferences may shift in directions opposite to those predicted by the relationship described here. Pathological gamblers are one clear example: they exhibit both steep discounting of delayed rewards and somewhat reduced risk aversion, even though reduced risk aversion alone would, if anything, make measured discounting appear shallower. These behavioural profiles are supported by both common and distinct neural correlates, as shown by Miedl et al. [99]. In that study, although the subjective value of all reward types was encoded in the brain's valuation system in all subjects, only the encoding of the subjective value of delayed rewards (but not of risky rewards) was more pronounced in gamblers than in controls, in the VMPFC and striatum. This suggests that understanding impulsive behaviour across a range of psychiatric disorders requires measuring both discount rates and the curvature of the utility function (risk preference), among other variables, together with their neural correlates.
Measuring only the discount rate can confound interpretation of why individuals discount delayed rewards at different rates, and hence of what drives impulsive choice [116], and can obscure potentially important neurobiological targets for intervention.
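The misidentification described above can be made explicit with a worked example. Assume, for illustration, that a participant values money with power utility u(x) = x^α (α < 1 meaning risk aversion) and discounts hyperbolically with true rate k. Their immediate equivalent X for an amount A delayed by D then satisfies X^α = A^α / (1 + kD), so X = A(1 + kD)^(-1/α). An analyst who assumes linear utility instead solves X = A / (1 + k'D) and recovers an apparent rate k' = ((1 + kD)^(1/α) - 1)/D, which exceeds k whenever α < 1. These functional forms and numbers are our assumptions, not necessarily the models used in [89] or [116].

```python
def immediate_equivalent(amount, delay, alpha, k):
    """Immediate amount X judged equal to `amount` received after `delay`,
    for an agent with utility x**alpha and hyperbolic discount 1/(1 + k*delay).
    Solves X**alpha = amount**alpha / (1 + k*delay) for X."""
    return amount * (1.0 + k * delay) ** (-1.0 / alpha)


def apparent_k(amount, delay, immediate):
    """Discount rate inferred if utility is (wrongly) assumed linear:
    solves immediate = amount / (1 + k*delay) for k."""
    return (amount / immediate - 1.0) / delay


if __name__ == "__main__":
    true_k = 0.01                  # hypothetical true discount rate (per day)
    amount, delay = 100.0, 30.0
    for alpha in (1.0, 0.8, 0.5):  # risk neutral to strongly risk averse
        x = immediate_equivalent(amount, delay, alpha, true_k)
        print(f"alpha={alpha}: apparent k={apparent_k(amount, delay, x):.4f}")
```

Under these assumptions the apparent discount function is (1 + kD)^(-1/α): the inflation of k grows with delay but does not depend on the reward amount, so a risk-averse participant who is no less patient than a risk-neutral one would nonetheless be classed as more impulsive.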

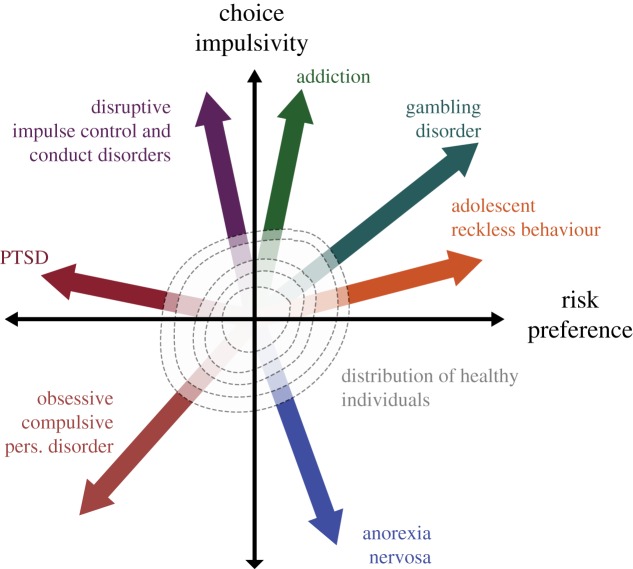

We propose that, for psychiatric purposes, both time and risk preferences should be thought of as dimensions (figure 3). For example, individuals with anorexia nervosa exhibit lower discount rates than controls [117]; that is, they are perhaps pathologically patient. People with anorexia are described clinically as showing an ‘undue influence of body shape and weight on self-evaluation’, which steers their behaviour towards the future goal of achieving a desired improvement: they restrict and titrate their energy intake and are prone to overexert themselves in a constant attempt to lose weight. From this perspective, discounting constitutes a behavioural dimension on which either extreme is undesirable. The same can be said of attitudes towards risk. Excessive aversion to risk is maladaptive, as most situations in the world carry some level of uncertainty, and an inability to engage with such situations can lead to avoidance and extreme anxiety. Some reports indicate that individuals with anxiety disorders and phobias are more risk averse than controls [118]. At the other end of the spectrum, excessive tolerance of, or appetite for, risk can be similarly detrimental, for example leading an individual to gamble recklessly [119] or to seek out risky situations such as high-risk sexual or drug consumption practices [120,121]. If these dimensions are not fully orthogonalized, that is, if there are unaccounted-for interactions between them, this proposed mapping will be distorted, and these disorders may not be readily separable. Biases introduced in the evaluation of time preferences could thus compromise their true translational utility. Future work on time and risk preferences, especially in clinical populations, should be mindful of their interaction.
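Placing an individual in the space of figure 3 requires estimating both coordinates rather than letting one contaminate the other. The sketch below shows the simplest version of a two-step logic: infer the curvature α from a certainty equivalent for a lottery, then use that α to correct the discount rate obtained from an intertemporal indifference point. It assumes expected utility with power utility u(x) = x^α (no probability weighting) and hyperbolic discounting, and it uses single hypothetical indifference points; practical studies typically fit many choices jointly, for example by maximum likelihood. Function names and values are hypothetical.

```python
import math


def alpha_from_certainty_equivalent(ce, amount, probability):
    """Curvature implied by one lottery under expected utility with u(x) = x**alpha:
    ce**alpha = probability * amount**alpha, so alpha = ln(p) / ln(ce / amount)."""
    if not (0.0 < probability < 1.0 and 0.0 < ce < amount):
        raise ValueError("need 0 < probability < 1 and 0 < ce < amount")
    return math.log(probability) / math.log(ce / amount)


def corrected_k(immediate, amount, delay, alpha):
    """Hyperbolic discount rate after accounting for utility curvature:
    solves immediate**alpha = amount**alpha / (1 + k * delay) for k."""
    return ((amount / immediate) ** alpha - 1.0) / delay


if __name__ == "__main__":
    # Hypothetical participant: indifferent between 30 for sure and a 50%
    # chance of 100, and between 55 now and 100 in 30 days.
    alpha = alpha_from_certainty_equivalent(30.0, 100.0, 0.5)
    k_linear = corrected_k(55.0, 100.0, 30.0, alpha=1.0)  # ignores risk attitude
    k_adjusted = corrected_k(55.0, 100.0, 30.0, alpha=alpha)
    print(f"alpha={alpha:.3f}, k (linear utility)={k_linear:.4f}, "
          f"k (curvature-adjusted)={k_adjusted:.4f}")
```

For this hypothetical participant the linear-utility estimate of k (about 0.027) is roughly twice the curvature-adjusted one (about 0.014), so the same choices would place them at quite different positions along the choice impulsivity axis.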

Figure 3.

Theoretical space spanned by two orthogonal dimensions: choice impulsivity (i.e. discount rate) and risk preference (i.e. risk attitude parameter). Each vector represents a deviation from normality that could correspond to a defined psychiatric disorder. At the origin of this space, we locate a two-dimensional distribution that corresponds to values of choice impulsivity and risk seen in the ‘healthy’ population. PTSD, post-traumatic stress disorder.

Data accessibility

This article has no additional data.

Competing interests

We declare we have no competing interests.

Funding

We received no funding for this study.

References

- 1. Hamilton KR, et al. 2015. Choice impulsivity: definitions, measurement issues, and clinical implications. Personal Disord. 6, 182–198. (doi:10.1037/per0000099)

- 2. Hamilton KR, et al. 2015. Rapid-response impulsivity: definitions, measurement issues, and clinical implications. Personal Disord. 6, 168–181. (doi:10.1037/per0000100)

- 3. Kagan J. 1966. Reflection-impulsivity: the generality and dynamics of conceptual tempo. J. Abnorm. Psychol. 71, 17–24. (doi:10.1037/h0022886)

- 4. Insel TR. 2014. The NIMH research domain criteria (RDoC) project: precision medicine for psychiatry. Am. J. Psychiatry 171, 395–397. (doi:10.1176/appi.ajp.2014.14020138)

- 5. Heerey EA, Robinson BM, McMahon RP, Gold JM. 2007. Delay discounting in schizophrenia. Cognit. Neuropsychiatry 12, 213–221. (doi:10.1080/13546800601005900)

- 6. Ahn W-Y, et al. 2011. Temporal discounting of rewards in patients with bipolar disorder and schizophrenia. J. Abnorm. Psychol. 120, 911–921. (doi:10.1037/a0023333)

- 7. Voon V, et al. 2010. Impulsive choice and response in dopamine agonist-related impulse control behaviors. Psychopharmacology (Berl.) 207, 645–659. (doi:10.1007/s00213-009-1697-y)

- 8. Fields SA, Sabet M, Reynolds B. 2013. Dimensions of impulsive behavior in obese, overweight, and healthy-weight adolescents. Appetite 70, 60–66. (doi:10.1016/j.appet.2013.06.089)

- 9. Davis C, Patte K, Curtis C, Reid C. 2010. Immediate pleasures and future consequences. A neuropsychological study of binge eating and obesity. Appetite 54, 208–213. (doi:10.1016/j.appet.2009.11.002)

- 10. Scheres A, Tontsch C, Thoeny AL. 2013. Steep temporal reward discounting in ADHD-Combined type: acting upon feelings. Psychiatry Res. 209, 207–213. (doi:10.1016/j.psychres.2012.12.007)

- 11. Amlung M, Vedelago L, Acker J, Balodis I, MacKillop J. 2017. Steep delay discounting and addictive behavior: a meta-analysis of continuous associations. Addiction 112, 51–62. (doi:10.1111/add.13535)

- 12. Steinglass JE, Lempert KM, Choo T-H, Kimeldorf MB, Wall M, Walsh BT, Fyer AJ, Schneier FR, Simpson HB. 2016. Temporal discounting across three psychiatric disorders: anorexia nervosa, obsessive compulsive disorder, and social anxiety disorder. Depress Anxiety 34, 463–470. (doi:10.1002/da.22586)

- 13. Chabris C, Laibson D, Morris C, Schuldt J, Taubinsky D. 2008. Individual laboratory-measured discount rates predict field behavior. J Risk Uncertain 37, 237–269. (doi:10.1007/s11166-008-9053-x)

- 14. Fernie G, Peeters M, Gullo MJ, Christiansen P, Cole JC, Sumnall H, Field M. 2013. Multiple behavioural impulsivity tasks predict prospective alcohol involvement in adolescents. Addiction 108, 1916–1923. (doi:10.1111/add.12283)

- 15. Clark L, Robbins TW, Ersche KD, Sahakian BJ. 2006. Reflection impulsivity in current and former substance users. Biol. Psychiatry 60, 515–522. (doi:10.1016/j.biopsych.2005.11.007)

- 16. Hauser TU, Moutoussis M, Iannaccone R, Brem S, Walitza S, Drechsler R, Dayan P, Dolan RJ. 2017. Increased decision thresholds enhance information gathering performance in juvenile Obsessive-Compulsive Disorder (OCD). PLoS Comput. Biol. 13, e1005440. (doi:10.1371/journal.pcbi.1005440)

- 17. Hauser TU, Moutoussis M, NSPN Consortium, Dayan P, Dolan RJ. 2017. Increased decision thresholds trigger extended information gathering across the compulsivity spectrum. Transl. Psychiatry 7, 1296. (doi:10.1038/s41398-017-0040-3)

- 18. Hauser TU, Moutoussis M, Purg N, Dayan P, Dolan RJ. 2018. Beta-blocker propranolol modulates decision urgency during sequential information gathering. J. Neurosci. 38, 7170–7178. (doi:10.1523/JNEUROSCI.0192-18.2018)

- 19. Kirby KN, Petry NM, Bickel WK. 1999. Heroin addicts have higher discount rates for delayed rewards than non-drug-using controls. J. Exp. Psychol. Gen. 128, 78–87. (doi:10.1037/0096-3445.128.1.78)

- 20. Petry NM. 2002. Discounting of delayed rewards in substance abusers: relationship to antisocial personality disorder. Psychopharmacology (Berl.) 162, 425–432. (doi:10.1007/s00213-002-1115-1)

- 21. MacKillop J, Amlung MT, Few LR, Ray LA, Sweet LH, Munafò MR. 2011. Delayed reward discounting and addictive behavior: a meta-analysis. Psychopharmacology (Berl.) 216, 305–321. (doi:10.1007/s00213-011-2229-0)

- 22. Cyders MA. 2015. The misnomer of impulsivity: commentary on ‘choice impulsivity’ and ‘rapid-response impulsivity’ articles by Hamilton and colleagues. Personal Disord. 6, 204–205. (doi:10.1037/per0000123)

- 23. Fineberg NA, et al. 2014. New developments in human neurocognition: clinical, genetic, and brain imaging correlates of impulsivity and compulsivity. CNS Spectr. 19, 69–89. (doi:10.1017/S1092852913000801)

- 24. Verdejo-García A, Lawrence AJ, Clark L. 2008. Impulsivity as a vulnerability marker for substance-use disorders: review of findings from high-risk research, problem gamblers and genetic association studies. Neurosci. Biobehav. Rev. 32, 777–810. (doi:10.1016/j.neubiorev.2007.11.003)

- 25. Nigg JT. 2017. Annual research review: on the relations among self-regulation, self-control, executive functioning, effortful control, cognitive control, impulsivity, risk-taking, and inhibition for developmental psychopathology. J. Child Psychol. Psychiatry 58, 361–383. (doi:10.1111/jcpp.12675)

- 26. Albein-Urios N, Martinez-González JM, Lozano Ó, Verdejo-Garcia A. 2014. Monetary delay discounting in gambling and cocaine dependence with personality comorbidities. Addict. Behav. 39, 1658–1662. (doi:10.1016/j.addbeh.2014.06.001)

- 27. Dombrovski AY, Szanto K, Siegle GJ, Wallace ML, Forman SD, Sahakian B, Reynolds CF, Clark L. 2011. Lethal forethought: delayed reward discounting differentiates high- and low-lethality suicide attempts in old age. Biol. Psychiatry 70, 138–144. (doi:10.1016/j.biopsych.2010.12.025)

- 28. Levy I, Rosenberg Belmaker L, Manson K, Tymula A, Glimcher PW. 2012. Measuring the subjective value of risky and ambiguous options using experimental economics and functional MRI methods. J. Vis. Exp. JoVE 67, e3724. (doi:10.3791/3724)

- 29. Admon R, Bleich-Cohen M, Weizmant R, Poyurovsky M, Faragian S, Hendler T. 2012. Functional and structural neural indices of risk aversion in obsessive-compulsive disorder (OCD). Psychiatry Res. 203, 207–213. (doi:10.1016/j.pscychresns.2012.02.002)

- 30. Brand M, Roth-Bauer M, Driessen M, Markowitsch HJ. 2008. Executive functions and risky decision-making in patients with opiate dependence. Drug Alcohol Depend. 97, 64–72. (doi:10.1016/j.drugalcdep.2008.03.017)

- 31. Gowin JL, Mackey S, Paulus MP. 2013. Altered risk-related processing in substance users: imbalance of pain and gain. Drug Alcohol Depend. 132, 13–21. (doi:10.1016/j.drugalcdep.2013.03.019)

- 32. Chapman GB. 1996. Temporal discounting and utility for health and money. J. Exp. Psychol. Learn. Mem. Cogn. 22, 771–791. (doi:10.1037/0278-7393.22.3.771)

- 33. Frederick S, Loewenstein G, O'Donoghue T. 2002. Time discounting and time preference: a critical review. J. Econ. Lit. 40, 351–401. (doi:10.1257/jel.40.2.351)

- 34. Loewenstein G, Prelec D. 1992. Anomalies in intertemporal choice: evidence and an interpretation. Q. J. Econ. 107, 573–597. (doi:10.2307/2118482)

- 35. Epper T, Fehr-Duda H. 2012. The missing link: Unifying risk taking and time discounting [Internet]. Department of Economics - University of Zurich [cited 2016 Nov 29]. Report No.: 096. See https://ideas.repec.org/p/zur/econwp/096.html.

- 36. Halevy Y. 2008. Strotz meets Allais: diminishing impatience and the certainty effect. Am. Econ. Rev. 98, 1145–1162. (doi:10.1257/aer.98.3.1145)

- 37. Insel T, Cuthbert B, Garvey M, Heinssen R, Pine DS, Quinn K, Sanislow C, Wang P. 2010. Research domain criteria (RDoC): toward a new classification framework for research on mental disorders. Am. J. Psychiatry 167, 748–751. (doi:10.1176/appi.ajp.2010.09091379)

- 38. Carcone D, Ruocco AC. 2017. Six years of research on the national institute of mental health's research domain criteria (RDoC) initiative: a systematic review. Front. Cell Neurosci. 11, 46. (doi:10.3389/fncel.2017.00046)

- 39. Ferrante M, Redish AD, Oquendo MA, Averbeck BB, Kinnane ME, Gordon JA. 2018. Computational psychiatry: a report from the 2017 NIMH workshop on opportunities and challenges. Mol. Psychiatry. (doi:10.1038/s41380-018-0063-z)

- 40. Ainslie GW. 1974. Impulse control in pigeons. J. Exp. Anal. Behav. 21, 485–489. (doi:10.1901/jeab.1974.21-485)

- 41. Ainslie G. 1975. Specious reward: a behavioral theory of impulsiveness and impulse control. Psychol. Bull. 82, 463–496. (doi:10.1037/h0076860)

- 42. Green L, Myerson J. 2004. A discounting framework for choice with delayed and probabilistic rewards. Psychol. Bull. 130, 769–792. (doi:10.1037/0033-2909.130.5.769)

- 43. Kable JW, Glimcher PW. 2007. The neural correlates of subjective value during intertemporal choice. Nat. Neurosci. 10, 1625–1633. (doi:10.1038/nn2007)

- 44. Laibson D. 1997. Golden eggs and hyperbolic discounting. Q. J. Econ. 112, 443–478. (doi:10.1162/003355397555253)

- 45. Mischel W, Ebbesen EB. 1970. Attention in delay of gratification. J. Pers. Soc. Psychol. 16, 329–337. (doi:10.1037/h0029815)

- 46. Thaler R. 1981. Some empirical evidence on dynamic inconsistency. Econ. Lett. 8, 201–207. (doi:10.1016/0165-1765(81)90067-7)

- 47. Samuelson P. 1937. A note on measurement of utility. Rev. Econ. Stud. 4, 155–161. (doi:10.2307/2967612)

- 48. Benzion U, Rapoport A, Yagil J. 1989. Discount rates inferred from decisions: an experimental study. Manag. Sci. 35, 270–284. (doi:10.1287/mnsc.35.3.270)

- 49. Chapman GB, Elstein AS. 1995. Valuing the future: temporal discounting of health and money. Med. Decis. Mak. 15, 373–386. (doi:10.1177/0272989X9501500408)

- 50. Mazur JE, Biondi DR. 2009. Delay–amount tradeoffs in choices by pigeons and rats: hyperbolic versus exponential discounting. J. Exp. Anal. Behav. 91, 197–211. (doi:10.1901/jeab.2009.91-197)

- 51. Pender JL. 1996. Discount rates and credit markets: theory and evidence from rural India. J. Dev. Econ. 50, 257–296. (doi:10.1016/S0304-3878(96)00400-2)

- 52. Redelmeier DA, Rozin P, Kahneman D. 1993. Understanding patients' decisions: cognitive and emotional perspectives. JAMA 270, 72–76. (doi:10.1001/jama.1993.03510010078034)

- 53. Kirby KN. 1997. Bidding on the future: evidence against normative discounting of delayed rewards. J. Exp. Psychol. Gen. 126, 54. (doi:10.1037/0096-3445.126.1.54)

- 54. Kirby KN, Maraković NN. 1995. Modeling myopic decisions: evidence for hyperbolic delay-discounting within subjects and amounts. Organ. Behav. Hum. Decis. Process. 64, 22–30. (doi:10.1006/obhd.1995.1086)

- 55. Myerson J, Green L. 1995. Discounting of delayed rewards: models of individual choice. J. Exp. Anal. Behav. 64, 263–276. (doi:10.1901/jeab.1995.64-263)

- 56. Rachlin H, Raineri A, Cross D. 1991. Subjective probability and delay. J. Exp. Anal. Behav. 55, 233–244. (doi:10.1901/jeab.1991.55-233)

- 57. Kable JW, Glimcher PW. 2010. An ‘as soon as possible’ effect in human intertemporal decision making: behavioral evidence and neural mechanisms. J. Neurophysiol. 103, 2513–2531. (doi:10.1152/jn.00177.2009)

- 58. Strotz RH. 1955. Myopia and inconsistency in dynamic utility maximization. Rev. Econ. Stud. 23, 165–180. (doi:10.2307/2295722)

- 59. Mazur JE. 1987. An adjusting procedure for studying delayed reinforcement. In The effect of delay and of intervening events on reinforcement value, quantitative analyses of behavior (eds Commons ML, Mazur JE, Nevin JA, Rachlin H), pp. 55–73. Hillsdale, NJ: Erlbaum.

- 60. Weber EU, Blais A-R, Betz NE. 2002. A domain-specific risk-attitude scale: measuring risk perceptions and risk behaviors. J. Behav. Decis. Mak. 15, 263–290. (doi:10.1002/bdm.414)

- 61. Levy DJ, Glimcher PW. 2012. The root of all value: a neural common currency for choice. Curr. Opin. Neurobiol. 22, 1027–1038. (doi:10.1016/j.conb.2012.06.001)

- 62. Tymula A, Rosenberg Belmaker LA, Roy AK, Ruderman L, Manson K, Glimcher PW, Levy I. 2012. Adolescents' risk-taking behavior is driven by tolerance to ambiguity. Proc. Natl Acad. Sci. USA 109, 17 135–17 140. (doi:10.1073/pnas.1207144109)

- 63. Tymula A, Belmaker LAR, Ruderman L, Glimcher PW, Levy I. 2013. Like cognitive function, decision making across the life span shows profound age-related changes. Proc. Natl Acad. Sci. USA 110, 17 143–17 148. (doi:10.1073/pnas.1309909110)

- 64. von Neumann J, Morgenstern O. 1944. Theory of games and economic behavior (2007 commemorative edition). Princeton, NJ: Princeton University Press.

- 65. Friedman M, Savage LJ. 1948. The utility analysis of choices involving risk. J. Polit. Econ. 56, 279–304. (doi:10.1086/256692)

- 66. Kahneman D, Tversky A. 1979. Prospect theory: an analysis of decision under risk. Econometrica 47, 263–291. (doi:10.2307/1914185)

- 67. Bernoulli D. 1954. Exposition of a new theory on the measurement of risk. Econometrica 22, 23–36. (doi:10.2307/1909829)

- 68. Holt CA, Laury SK. 2002. Risk aversion and incentive effects. Am. Econ. Rev. 92, 1644–1655. (doi:10.1257/000282802762024700)

- 69. Glimcher PW. 2016. Proximate mechanisms of individual decision-making behavior. In Complexity and evolution: toward a new synthesis for economics (eds Wilson DS, Kirman A, Lupp J), pp. 85–96, 1st edn. Cambridge, MA: The MIT Press.

- 70. Lorian CN, Grisham JR. 2011. Clinical implications of risk aversion: an online study of risk-avoidance and treatment utilization in pathological anxiety. J. Anxiety Disord. 25, 840–848. (doi:10.1016/j.janxdis.2011.04.008)

- 71. Brañas-Garza P, Georgantzis N, Guillen P. 2007. Direct and indirect effects of pathological gambling on risk attitudes. Judgment Decis. Mak. 2, 126–136.

- 72. von Gaudecker H-M, van Soest A, Wengstrom E. 2011. Heterogeneity in risky choice behavior in a broad population. Am. Econ. Rev. 101, 664–694. (doi:10.1257/aer.101.2.664)

- 73. Andersen S, Harrison GW, Lau MI, Rutström EE. 2008. Eliciting risk and time preferences. Econometrica 76, 583–618. (doi:10.1111/j.1468-0262.2008.00848.x)

- 74. Andersen S, Harrison GW, Lau MI, Rutström EE. 2014. Discounting behavior: a reconsideration. Eur. Econ. Rev. 71, 15–33. (doi:10.1016/j.euroecorev.2014.06.009)

- 75. Laury SK, McInnes MM, Swarthout JT. 2012. Avoiding the curves: direct elicitation of time preferences. J Risk Uncertain 44, 181–217. (doi:10.1007/s11166-012-9144-6)

- 76. Takeuchi K. 2011. Non-parametric test of time consistency: present bias and future bias. Games Econ. Behav. 71, 456–478. (doi:10.1016/j.geb.2010.05.005)

- 77. Kreps DM, Porteus EL. 1978. Temporal resolution of uncertainty and dynamic choice theory. Econometrica 46, 185–200. (doi:10.2307/1913656)

- 78. Selden L. 1978. A new representation of preferences over ‘certain × uncertain’ consumption pairs: the ‘ordinal certainty equivalent’ hypothesis. Econometrica 46, 1045–1060. (doi:10.2307/1911435)

- 79. Epstein LG, Zin SE. 1989. Substitution, risk aversion, and the temporal behavior of consumption and asset returns: a theoretical framework. Econometrica 57, 937–969. (doi:10.2307/1913778)

- 80. Chew SH, Epstein LG. 1990. Nonexpected utility preferences in a temporal framework with an application to consumption-savings behaviour. J. Econ. Theory 50, 54–81. (doi:10.1016/0022-0531(90)90085-X)

- 81. Andreoni J, Sprenger C. 2012. Estimating time preferences from convex budgets. Am. Econ. Rev. 102, 3333–3356. (doi:10.1257/aer.102.7.3333)

- 82. Andreoni J, Sprenger C. 2012. Risk preferences are not time preferences. Am. Econ. Rev. 102, 3357–3376. (doi:10.1257/aer.102.7.3357)

- 83. Cheung SL. 2015. Eliciting utility curvature and time preference [Internet]. University of Sydney, School of Economics; 2015 [cited 2016 Nov 29]. Report No.: 2015–01. See https://ideas.repec.org/p/syd/wpaper/2015-01.html.

- 84. Ballard K, Knutson B. 2009. Dissociable neural representations of future reward magnitude and delay during temporal discounting. Neuroimage 45, 143–150. (doi:10.1016/j.neuroimage.2008.11.004)

- 85. Frost R, McNaughton N. 2017. The neural basis of delay discounting: a review and preliminary model. Neurosci. Biobehav. Rev. 79, 48–65. (doi:10.1016/j.neubiorev.2017.04.022)

- 86. McClure SM, Laibson DI, Loewenstein G, Cohen JD. 2004. Separate neural systems value immediate and delayed monetary rewards. Science 306, 503–507. (doi:10.1126/science.1100907)

- 87. Abdellaoui M, Bleichrodt H, l'Haridon O, Paraschiv C. 2013. Is there one unifying concept of utility? An experimental comparison of utility under risk and utility over time. Manag. Sci. 59, 2153–2169. (doi:10.1287/mnsc.1120.1690)

- 88. Epper T, Fehr-Duda H. 2015. Risk preferences are not time preferences: balancing on a budget line: comment. Am. Econ. Rev. 105, 2261–2271. (doi:10.1257/aer.20130420)

- 89. Lopez-Guzman S, Konova AB, Louie K, Glimcher PW. 2018. Risk preferences impose a hidden distortion on measures of choice impulsivity. PLoS ONE 13, e0191357. (doi:10.1371/journal.pone.0191357)

- 90. Cheung SL. 2016. Recent developments in the experimental elicitation of time preference. J. Behav. Exp. Finance 11, 1–8. (doi:10.1016/j.jbef.2016.04.001)

- 91.Engelmann JB, Tamir D. 2009. Individual differences in risk preference predict neural responses during financial decision-making. Brain Res. 1290, 28–51. ( 10.1016/j.brainres.2009.06.078) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Levy I, Snell J, Nelson AJ, Rustichini A, Glimcher PW. 2010. Neural representation of subjective value under risk and ambiguity. J. Neurophysiol. 103, 1036–1047. ( 10.1152/jn.00853.2009) [DOI] [PubMed] [Google Scholar]

- 93.Mohr PNC, Biele G, Heekeren HR. 2010. Neural processing of risk. J. Neurosci. 30, 6613–6619. ( 10.1523/JNEUROSCI.0003-10.2010) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Tobler PN, Christopoulos GI, O'Doherty JP, Dolan RJ, Schultz W. 2009. Risk-dependent reward value signal in human prefrontal cortex. Proc. Natl Acad. Sci. USA 106, 7185–7190. ( 10.1073/pnas.0809599106) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95. Miedl SF, Wiswede D, Marco-Pallarés J, Ye Z, Fehr T, Herrmann M, Münte TF. 2015. The neural basis of impulsive discounting in pathological gamblers. Brain Imaging Behav. 9, 887–898. (doi:10.1007/s11682-015-9352-1)

- 96. Pine A, Seymour B, Roiser JP, Bossaerts P, Friston KJ, Curran HV, Dolan RJ. 2009. Encoding of marginal utility across time in the human brain. J. Neurosci. 29, 9575–9581. (doi:10.1523/JNEUROSCI.1126-09.2009)

- 97. Luhmann CC, Chun MM, Yi D-J, Lee D, Wang X-J. 2008. Neural dissociation of delay and uncertainty in intertemporal choice. J. Neurosci. 28, 14 459–14 466. (doi:10.1523/JNEUROSCI.5058-08.2008)

- 98. Bartra O, McGuire JT, Kable JW. 2013. The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage 76, 412–427. (doi:10.1016/j.neuroimage.2013.02.063)

- 99. Miedl SF, Peters J, Büchel C. 2012. Altered neural reward representations in pathological gamblers revealed by delay and probability discounting. Arch. Gen. Psychiatry 69, 177–186. (doi:10.1001/archgenpsychiatry.2011.1552)

- 100. Peters J, Büchel C. 2009. Overlapping and distinct neural systems code for subjective value during intertemporal and risky decision making. J. Neurosci. 29, 15 727–15 734. (doi:10.1523/JNEUROSCI.3489-09.2009)

- 101. Seaman KL, Brooks N, Karrer TM, Castrellon JJ, Perkins SF, Dang LC, Hsu M, Zald DH, Samanez-Larkin GR. 2018. Subjective value representations during effort, probability and time discounting across adulthood. Soc. Cogn. Affect. Neurosci. 13, 449–459. (doi:10.1093/scan/nsy021)

- 102. Dalley JW, Robbins TW. 2017. Fractionating impulsivity: neuropsychiatric implications. Nat. Rev. Neurosci. 18, 158–171. (doi:10.1038/nrn.2017.8)

- 103. Story GW, Vlaev I, Seymour B, Darzi A, Dolan RJ. 2014. Does temporal discounting explain unhealthy behavior? A systematic review and reinforcement learning perspective. Front. Behav. Neurosci. 8, 76. (doi:10.3389/fnbeh.2014.00076)

- 104. McClelland J, Dalton B, Kekic M, Bartholdy S, Campbell IC, Schmidt U. 2016. A systematic review of temporal discounting in eating disorders and obesity: behavioural and neuroimaging findings. Neurosci. Biobehav. Rev. 71, 506–528. (doi:10.1016/j.neubiorev.2016.09.024)

- 105. MacKillop J, Celio MA, Mastroleo NR, Kahler CW, Operario D, Colby SM, Barnett NP, Monti PM. 2015. Behavioral economic decision making and alcohol-related sexual risk behavior. AIDS Behav. 19, 450–458. (doi:10.1007/s10461-014-0909-6)

- 106. Bickel WK, Jarmolowicz DP, Mueller ET, Koffarnus MN, Gatchalian KM. 2012. Excessive discounting of delayed reinforcers as a trans-disease process contributing to addiction and other disease-related vulnerabilities: emerging evidence. Pharmacol. Ther. 134, 287–297. (doi:10.1016/j.pharmthera.2012.02.004)

- 107. Isen JD, Sparks JC, Iacono WG. 2014. Predictive validity of delay discounting behavior in adolescence: a longitudinal twin study. Exp. Clin. Psychopharmacol. 22, 434–443. (doi:10.1037/a0037340)

- 108. Landes RD, Christensen DR, Bickel WK. 2012. Delay discounting decreases in those completing treatment for opioid dependence. Exp. Clin. Psychopharmacol. 20, 302–309. (doi:10.1037/a0027391)

- 109. Dassen FCM, Houben K, Allom V, Jansen A. 2018. Self-regulation and obesity: the role of executive function and delay discounting in the prediction of weight loss. J. Behav. Med. 41, 806–818. (doi:10.1007/s10865-018-9940-9)

- 110. Stanger C, Ryan SR, Fu H, Landes RD, Jones BA, Bickel WK, Budney AJ. 2012. Delay discounting predicts adolescent substance abuse treatment outcome. Exp. Clin. Psychopharmacol. 20, 205–212. (doi:10.1037/a0026543)

- 111. Rudorf S, Hare TA. 2014. Interactions between dorsolateral and ventromedial prefrontal cortex underlie context-dependent stimulus valuation in goal-directed choice. J. Neurosci. 34, 15 988–15 996. (doi:10.1523/JNEUROSCI.3192-14.2014)

- 112. Saraiva AC, Marshall L. 2015. Dorsolateral–ventromedial prefrontal cortex interactions during value-guided choice: a function of context or difficulty? J. Neurosci. 35, 5087–5088. (doi:10.1523/JNEUROSCI.0271-15.2015)

- 113. Ballard IC, Murty VP, Carter RM, MacInnes JJ, Huettel SA, Adcock RA. 2011. Dorsolateral prefrontal cortex drives mesolimbic dopaminergic regions to initiate motivated behavior. J. Neurosci. 31, 10 340–10 346. (doi:10.1523/JNEUROSCI.0895-11.2011)

- 114. Bickel WK, Yi R, Landes RD, Hill PF, Baxter C. 2011. Remember the future: working memory training decreases delay discounting among stimulant addicts. Biol. Psychiatry 69, 260–265. (doi:10.1016/j.biopsych.2010.08.017)

- 115. Naish KR, Vedelago L, MacKillop J, Amlung M. 2018. Effects of neuromodulation on cognitive performance in individuals exhibiting addictive behaviors: a systematic review. Drug Alcohol Depend. 192, 338–351. (doi:10.1016/j.drugalcdep.2018.08.018)

- 116. Story GW, Moutoussis M, Dolan RJ. 2016. A computational analysis of aberrant delay discounting in psychiatric disorders. Front. Psychol. 6, 1948. (doi:10.3389/fpsyg.2015.01948)

- 117. Steinglass JE, Figner B, Berkowitz S, Simpson HB, Weber EU, Walsh BT. 2012. Increased capacity to delay reward in anorexia nervosa. J. Int. Neuropsychol. Soc. 18, 773–780. (doi:10.1017/S1355617712000446)

- 118. Giorgetta C, Grecucci A, Zuanon S, Perini L, Balestrieri M, Bonini N, Sanfey AG, Brambilla P. 2012. Reduced risk-taking behavior as a trait feature of anxiety. Emotion 12, 1373–1383. (doi:10.1037/a0029119)

- 119. Ligneul R, Sescousse G, Barbalat G, Domenech P, Dreher J-C. 2013. Shifted risk preferences in pathological gambling. Psychol. Med. 43, 1059–1068. (doi:10.1017/S0033291712001900)

- 120. Gardner M, Steinberg L. 2005. Peer influence on risk taking, risk preference, and risky decision making in adolescence and adulthood: an experimental study. Dev. Psychol. 41, 625–635. (doi:10.1037/0012-1649.41.4.625)

- 121. Reyna VF, Farley F. 2006. Risk and rationality in adolescent decision making: implications for theory, practice, and public policy. Psychol. Sci. Public Interest 7, 1–44. (doi:10.1111/j.1529-1006.2006.00026.x)


Data Availability Statement

This article has no additional data.