Abstract

Objectives

The objectives were to critically appraise the emergency medicine (EM) medical education literature published in 2016 and review the highest‐quality quantitative and qualitative studies.

Methods

A search of the English language literature in 2016 querying MEDLINE, Scopus, Education Resources Information Center (ERIC), and PsychInfo identified 510 papers related to medical education in EM. Two reviewers independently screened all of the publications using previously established exclusion criteria. The 25 top‐scoring quantitative studies based on methodology and all six qualitative studies were scored by all reviewers using selected scoring criteria that have been adapted from previous installments. The top‐scoring articles were highlighted and trends in medical education research were described.

Results

Seventy‐five manuscripts met inclusion criteria and were scored. Eleven quantitative papers and one qualitative paper were the highest scoring and are summarized in this article.

Conclusion

This annual critical appraisal series highlights the best EM education research articles published in 2016.

Efforts to promote high‐quality education research in emergency medicine (EM) have increased over the past decade. The 2012 Academic Emergency Medicine consensus conference “Education Research in Emergency Medicine: Opportunities, Challenges, and Strategies for Success” called for a growth in hypothesis‐driven education research studies.1 Additionally, increasing grant opportunities from EM organizations including the Society for Academic Emergency Medicine (SAEM) and Council of Emergency Medicine Residency Directors (CORD), faculty development efforts including the Medical Education Research Certification Program (MERC), development of the CORD Academy for Scholarship in Education in Emergency Medicine, and the growing number of EM medical education fellowship programs have likely contributed to this.2, 3, 4, 5, 6 The increased focus on medical education has led to a significant increase in research publications specific to EM learners.

In this ninth installment of the annual critical appraisal of EM education research, we systematically analyze and rank the best publications of 2016. As in prior installments of this series, we used modified versions of previously published criteria for qualitative and quantitative research studies.7, 8, 9, 10, 11, 12, 13, 14 We also describe and summarize current trends in medical education research over the past year as they relate to EM learners and educators. This appraisal is designed to serve as a resource for EM educators and researchers invested in education scholarship.

Methods

Article Identification

A medical librarian reproduced a previously used Boolean search strategy to identify all 2016 English language research publications relevant to EM education.7 While previous installments of this article included keywords inclusive of medical students, the authors recognized that some papers that focused on clerkships may have been inadvertently omitted. Therefore, the authors added “clinical clerkship” and “clerkship” to the search parameters for this installment. The search was run in November 2017 using medical subject heading (MeSH) and keyword terms, including keyword variations to ensure completeness (Data Supplement S1, Appendix S1, available as supporting information in the online version of this paper, which is available at http://onlinelibrary.wiley.com/doi/10.1002/aet2.10203/full). Other databases including Scopus, Education Resources Information Center (ERIC), and PsychInfo were also searched. Articles that are listed as Epub are included in the year that they are first listed and not when they are finally published.

Inclusion and Exclusion Criteria

Studies relevant to the EM education of medical students, graduate medical education trainees, academic and nonacademic attending physicians, and other emergency providers were included. Studies were defined as hypothesis‐testing investigations, evaluations of educational interventions, or explorations of educational problems using either quantitative or qualitative methods. Publications were excluded if they were: 1) not considered to be peer‐reviewed research (such as opinion pieces, commentaries, literature reviews, curricula descriptions without outcomes data); 2) not relevant to EM learners (such as reports on education of prehospital personnel and international studies that could not be generalized to EM training outside of the country in which they were performed); 3) single‐site survey studies; and 4) studies that examined outcomes limited to an expected learning effect without a comparison group.

Data Collection

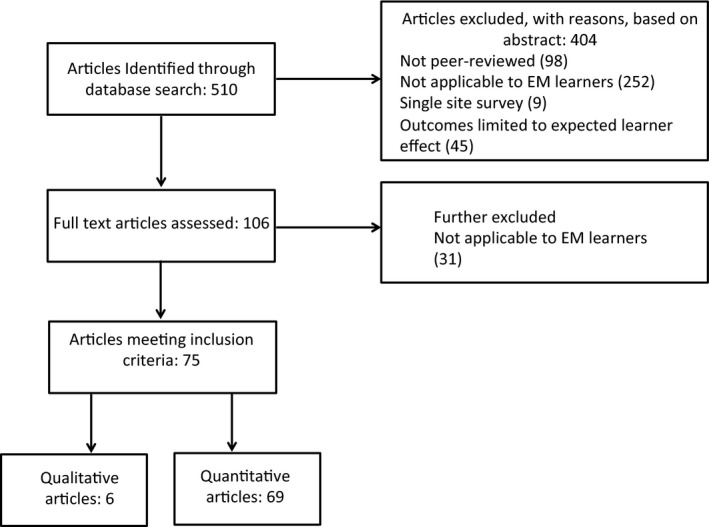

In total, 644 abstracts were retrieved through the database search. Duplicates were identified and deleted and one reviewer (NMD) applied exclusion criteria to the remaining 510 abstracts. Two reviewers then independently screened the included articles by full‐text review and further refined the selection utilizing the exclusion criteria. Differences in opinion were resolved by discussion among the three reviewers (Figure 1). Retrieved publications were maintained in a Microsoft Excel 2016 database and were classified as either primarily quantitative or qualitative methods for scoring purposes.

Figure 1.

Flow diagram of study selection.

Scoring

Six reviewers scored the articles that met inclusion criteria. All reviewers have published in medical education and hold faculty positions in medical education at academic EM programs. All reviewers were trained in scoring by the senior author during two 1‐hour‐long conference calls. Scoring was based on a previously adapted version7, 8, 9 of the criteria used by the Research in Medical Education symposium of the Association of American Medical Colleges,15 with additional criteria from Alliance for Clinical Education study reviews.16 The scoring tool was iteratively modified in 2009, 2010, and 2016 to more accurately reflect EM education topics and the development of new areas of research including simulation and other technology. Each publication was assigned to a scoring system based on whether it was primarily a quantitative or a qualitative study. The scoring criteria for both quantitative and qualitative research studies have been previously published in this review series and are presented in Tables 1 and 2. We decided a priori to highlight the top 10 quantitative articles and one qualitative article that met the consensus criteria described below. Reviewers recused themselves from reviewing articles in which they were coauthors. We adopted a two‐stage scoring approach for quantitative articles because of the large number of quantitative studies that met inclusion criteria this year. Two reviewers (NMD and JF) independently scored all quantitative studies using an abbreviated scoring tool (Data Supplement S1, Appendix S2) based on methodology. The top 25 quantitative articles from the first stage were made available for the other four reviewers to score independently in the second stage. Article scores were then converted to rankings.
Using accepted recommendations and hierarchical formulations,17, 18, 19 qualitative studies were assessed and scored in nine domains, parallel to those applied to the quantitative studies, for a maximum total score of 25 points. These included the measurement, data collection, and data analysis domains, as defined specifically for high‐quality qualitative research. All reviewers scored all of the qualitative articles. As in years past, quantitative and qualitative articles that were in all reviewers’ top 10 lists were included, as were articles that were in the top 10 lists of at least 75% of reviewers. Means with standard deviations (SDs) and rankings were calculated in Excel. Rankings were used to improve consensus. Inter‐rater reliability was assessed with the intraclass correlation coefficient using a two‐way random‐effects model in SPSS 25.0.
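
The rank conversion and the 75% consensus rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the tie-handling rule (tied scores share a rank), and the treatment of recused reviewers (excluded from the denominator) are all hypothetical choices, since the text does not specify them. The scores are invented.

```python
def scores_to_ranks(scores):
    """Convert one reviewer's {article: score} map to {article: rank}, 1 = best.

    Assumption: tied scores share the same rank (competition ranking).
    """
    ordered = sorted(scores.values(), reverse=True)
    return {article: ordered.index(score) + 1 for article, score in scores.items()}


def consensus_selection(all_scores, top_n=10, threshold=0.75):
    """Return articles ranked in the top_n by at least `threshold` of reviewers.

    Assumption: a reviewer who did not score an article (e.g., recusal)
    is left out of that article's denominator.
    """
    ranks = {reviewer: scores_to_ranks(s) for reviewer, s in all_scores.items()}
    articles = set()
    for s in all_scores.values():
        articles.update(s)
    selected = []
    for article in sorted(articles):
        votes = [r[article] <= top_n for r in ranks.values() if article in r]
        if votes and sum(votes) / len(votes) >= threshold:
            selected.append(article)
    return selected


# Hypothetical scores from four reviewers for four articles.
example_scores = {
    "R1": {"A": 22, "B": 20, "C": 18, "D": 15},
    "R2": {"A": 21, "B": 19, "C": 20, "D": 14},
    "R3": {"A": 23, "B": 21, "C": 17, "D": 16},
    "R4": {"A": 20, "B": 22, "C": 19, "D": 13},
}
print(consensus_selection(example_scores, top_n=2))
```

With tied scores sharing a rank, more than `top_n` articles can meet the threshold, which is one way a top 10 list can end up with 11 entries.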

Table 1.

EM Education Research Scoring Metrics: Quantitative Research

| Domain | Item | Item Score | Maximum Domain Score |
|---|---|---|---|
| Introduction (1 point for each criterion met) | | | 3 |
| | Appropriate description of background literature | 1 | |
| | Clearly frame the problem | 1 | |
| | Clear objective/hypothesis | 1 | |
| Study design: measurements and groups | | | 4 |
| | Measurement (2 points max) | | |
| | One measurement | 1 | |
| | Two measurements two points in time | 2 | |
| | Groups (2 points max) | | |
| | One group | 0 | |
| | Control group | 1 | |
| | Randomized | 2 | |
| Data collection | | | 4 |
| | Institutions (2 max) | | |
| | Single institution | 0 | |
| | At least two institutions | 1 | |
| | More than two institutions or CORD listserv | 2 | |
| | Response rate (2 max) | | |
| | Response rate < 50% or not reported | 0 | |
| | Response rate ≥50% | 1 | |
| | Response rate ≥75% | 2 | |
| Data analysis (add appropriateness + sophistication) 3 max | | | 3 |
| | Appropriate for study design and type | 1 | |
| | Descriptive analysis only | 1 | |
| | Includes power analysis | 1 | |
| Discussion | | | 3 |
| | One point for each criterion met | | |
| | Data support conclusion | 1 | |
| | Conclusion clearly addresses hypothesis/objective | 1 | |
| | Conclusions placed in context of literature | 1 | |
| Limitations | | | 2 |
| | Limitations not identified accurately | 0 | |
| | Some limitations identified | 1 | |
| | Limitations well addressed | 2 | |
| Innovation of project | | | 2 |
| | Previously described methods | 0 | |
| | New use for known assessment | 1 | |
| | New assessment methodology | 2 | |
| Generalizability of project | | | 2 |
| | Impractical to most programs | 0 | |
| | Relevant to some | 1 | |
| | Highly generalizable | 2 | |
| Clarity of writing | | | 2 |
| | Unsatisfactory | 0 | |
| | Fair | 1 | |
| | Excellent | 2 | |
| Total | | | 25 |
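
As a rough illustration of how the nine domain maxima in Table 1 combine into the 25‐point total, the following Python sketch validates and sums one reviewer's domain scores for one article. The dictionary keys, the function name, and the example scores are hypothetical and are not part of the published tool.

```python
# Maximum points per domain, transcribed from Table 1 (quantitative tool).
# The snake_case keys are hypothetical labels chosen for this sketch.
QUANT_DOMAIN_MAX = {
    "introduction": 3,
    "study_design": 4,
    "data_collection": 4,
    "data_analysis": 3,
    "discussion": 3,
    "limitations": 2,
    "innovation": 2,
    "generalizability": 2,
    "clarity_of_writing": 2,
}


def total_score(domain_scores, domain_max=QUANT_DOMAIN_MAX):
    """Check that every domain is scored within its range, then sum the scores."""
    missing = set(domain_max) - set(domain_scores)
    extra = set(domain_scores) - set(domain_max)
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)}, extra={sorted(extra)}")
    for domain, score in domain_scores.items():
        if not 0 <= score <= domain_max[domain]:
            raise ValueError(f"{domain}: {score} is outside 0..{domain_max[domain]}")
    return sum(domain_scores.values())


# Invented scores for a hypothetical article, not taken from the review.
example_article = {
    "introduction": 3,
    "study_design": 2,
    "data_collection": 3,
    "data_analysis": 2,
    "discussion": 3,
    "limitations": 1,
    "innovation": 1,
    "generalizability": 2,
    "clarity_of_writing": 2,
}
print(total_score(example_article))
```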

Table 2.

EM Education Research Scoring Metrics: Qualitative Research

| Domain | Item | Item Score | Maximum Domain Score |
|---|---|---|---|
| Introduction | | | 3 |
| | Give one point for each criterion met | | |
| | Appropriate description of background literature | 1 | |
| | Clearly frame the problem | 1 | |
| | Clear study purpose | 1 | |
| Measurement (add methodology + sampling) | | | 3 |
| | Methodology: give a point for each criterion met | | |
| | Clear description of qualitative approach (e.g., ethnography, grounded theory, phenomenology) | 1 | |
| | Method (observation, interviews, focus groups, etc.) appropriate for study purpose | 1 | |
| | Sampling of study participants: | | |
| | Sampling strategy well described and appropriate | 1 | |
| Data collection (add institutions + sample size determination) | | | 3 |
| | Data collection methods – give a point for each criterion met | | |
| | Detailed description of data collection method | 1 | |
| | Description of instrument development | 1 | |
| | Description of instrument piloting | 1 | |
| Data analysis | | | 5 |
| | Sophistication of data analysis: give a point for each criterion met | | |
| | Clear, reproducible “audit trail” documenting systematic procedure for analysis | 1 | |
| | Data saturation through a systematic iterative process of analysis | 1 | |
| | Addressed contradictory responses | 1 | |
| | Incorporated validation strategies (e.g., member checking, triangulation) | 1 | |
| | Addressed reflexivity (impact of researcher's background, position, biases on study) | 1 | |
| Discussion | | | 3 |
| | Clear summary of main findings | 1 | |
| | Conclusions placed in context of literature | 1 | |
| | Discussion of how findings should be interpreted/applied/or direct next steps (without overreaching) | 1 | |
| Limitations | | | 2 |
| | Limitations not identified accurately | 0 | |
| | Some limitations identified | 1 | |
| | Limitations well addressed | 2 | |
| Novelty of project | | | 2 |
| | Does not add to current knowledge | 0 | |
| | Adds somewhat to current knowledge | 1 | |
| | Significant contribution to what is known | 2 | |
| Generalizability of project | | | 2 |
| | Impractical to most programs | 0 | |
| | Relevant to some | 1 | |
| | Highly generalizable | 2 | |
| Clarity of writing | | | 2 |
| | Unsatisfactory | 0 | |
| | Fair | 1 | |
| | Excellent | 2 | |
| Total | | | 25 |

Table 3.

Trends for the Reviewed Medical Education Research Articles Published in 2016

| Variable | All Publications (n = 75) | Highlighted (n = 12) |
|---|---|---|
| Funding | 8 (11) | 3 (25) |
| Learner groups* | | |
| Medical students | 21 (36) | 1 (8) |
| Residents | 48 (64) | 12 (100) |
| Faculty | 9 (12) | 2 (17) |
| Other | 7 (9) | 1 (8) |
| Study methodology | | |
| Survey | 19 (25) | 2 (17) |
| Observational | 35 (47) | 5 (42) |
| Experimental | 15 (20) | 4 (33) |
| Qualitative | 6 (8) | 1 (8) |
| Topics of study* | | |
| Technology | 23 (31) | 5 (42) |
| Assessment | 22 (29) | 3 (25) |
| Teaching/learning methods | 15 (20) | 4 (33) |
| Curriculum | 15 (20) | 1 (8) |
| Simulation | 12 (16) | 3 (25) |
| Ultrasound | 8 (11) | 1 (8) |
| Communication | 6 (8) | 1 (8) |
| Procedural teaching/learning | 6 (8) | 0 (0) |
| Residency application process | 5 (7) | 1 (8) |
| Pediatrics | 2 (3) | 0 (0) |
| Other | 6 (8) | 2 (17) |

Data are reported as n (%).

*It is possible to exceed the total n = 75 or n = 12 in these categories due to multiple learner categories or study topics.

Trends

Trends in medical education research were analyzed for all articles that met inclusion criteria. To identify important trends in 2016, a data form was created a priori based on trends reported in prior critical appraisals in this series. Articles were reviewed, including publishing journal, acknowledgments, disclosures, and author affiliations to determine study design, study population, number of participating institutions, topic of study, funding, publishing journal, and presence of author EM departmental affiliation. Data were abstracted by JJ and confirmed by a second reviewer, NMD. Discrepancies were resolved by discussion. Journal focus was determined by knowledge of study authors or, if the journal was unfamiliar, review of the journal website.

Results

A total of 510 papers satisfied the search criteria and 75 papers met inclusion criteria: 69 quantitative and six qualitative. In the second round, the range of reviewers’ scores for the top 25 quantitative articles was 13 to 23 with a mean (±SD) score of 17.4 (±3.0). For the six qualitative articles, the range of scores was 12 to 23 with a mean (±SD) score of 16.8 (±2.7). During the initial round of quantitative scoring using the modified tool with two reviewers, the intraclass correlation was 0.997. For qualitative scoring, the intraclass correlation coefficient analysis yielded a Cronbach's alpha of 0.66. Because of a tied score, 11 quantitative articles were ranked in the top 10 by at least 75% of reviewers. The 11 highest‐scoring quantitative articles and the single highest‐scoring qualitative article are reviewed below, in alphabetical order by the first author's last name.
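
For readers unfamiliar with the reliability statistic reported above, the following Python sketch computes Cronbach's alpha by treating each reviewer as an "item" and each article as a case. It is not the SPSS procedure used in this review; it relies on the known equivalence between Cronbach's alpha and the consistency‐type, average‐measures intraclass correlation, and whether that matches the SPSS settings used here is an assumption. The ratings are invented.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a table of ratings.

    `ratings` is a list of rows, one per article; each row holds one score
    per reviewer (same reviewer order in every row, no missing values).
    """
    k = len(ratings[0])  # number of reviewers
    if k < 2 or len(ratings) < 2:
        raise ValueError("need at least two reviewers and two articles")
    reviewer_columns = list(zip(*ratings))
    sum_reviewer_var = sum(variance(col) for col in reviewer_columns)
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum_reviewer_var / total_var)


# Invented scores: four articles (rows) rated by three reviewers (columns).
hypothetical_ratings = [
    [20, 18, 19],
    [15, 17, 14],
    [22, 21, 23],
    [12, 13, 15],
]
alpha = cronbach_alpha(hypothetical_ratings)
print(round(alpha, 2))
```

Values closer to 1 indicate that reviewers order the articles more consistently; a constant offset between reviewers does not lower this statistic.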

Selected Articles

1. Counselman FL, Kowalenko T, Marco CA, et al. The relationship between ACGME duty hour requirements and performance on the American Board of Emergency Medicine qualifying examination. J Grad Med Educ 2016;8:558–562.20

Background: Starting in 2003, the Accreditation Council for Graduate Medical Education (ACGME) set duty hour limitations in an effort to enhance patient safety and improve education and working conditions. This raised concern about decreased educational opportunities. This study looked at the results of the American Board of Emergency Medicine qualifying exam (QE) before and after the duty hour limitations were instituted in 2003 and 2011.

Methods: Retrospective review of the QE results from 1999 through 2014. Candidates were divided into five groups based on timing of implementation of the duty hour restrictions. Mean QE scores and pass rates were compared between groups.

Results: Three of the five groups were identified as having distinct differences in duty hour requirements during the study period and were analyzed. There was a small but statistically significant decrease in the mean scores (0.04, p < 0.001) after implementation of the first duty hour requirements but this difference did not occur after implementation of the 2011 requirements. There was no difference among pass rates for any of the study groups.

Strengths of Study: This study used a large data set and found no real practical difference in scores based on duty hours. This study looked at performance related to two separate changes in duty hour requirements.

Application to Clinician Educators: It appears that duty hour limitations do not necessarily negatively impact examination scores. This may lend support to the benefits of work hour restrictions.

Limitations: Other variables related to residency training may have affected QE performance.

2. Ferguson I, Phillips AW, Lin M. Continuing medical education speakers with high evaluation scores use more image‐based slides. West J Emerg Med 2017;18:152–158.21

Background: Slide‐based presentations are ubiquitous in the current educational paradigm. Research in instructional design has identified design principles which, when followed, have been shown to increase knowledge transfer and retention. Prior studies have involved undergraduate and medical students in controlled settings. This study sought to assess how practicing attending physicians responded to the use of these design principles in continuing medical education (CME) conference presentations.

Methods: This was a retrospective analysis of lecture slide content and attendees’ evaluation scores from six sequential national EM CME conferences held from 2010 to 2012. A mixed linear regression was used to determine whether evaluation scores were associated with the percent of image‐based slides per presentation, number of words per slide (text density), or the speaker's academic seniority.

Results: A total of 105 unique presentations by 49 unique faculty members were analyzed. A total of 1,222 evaluations, indicating a 70.1% conference attendee response rate, were also included in the analysis. Image fraction was predictive of overall evaluation scores and had the greatest influence on predicting evaluation scores of any of the measured factors, while text density did not have a significant association. Speaker seniority was predictive of evaluation performance.

Strengths of Study: The relatively large data set of over 100 presentations and 1,200 evaluations allows for a robust analysis. The decision to evaluate quantifiable design principles increases the strength of both its conclusions and its reproducibility. The mixed linear regression analysis makes the results more easily intelligible and allows for statistical power.

Application to Clinician Educators: This paper represents the first analysis of the association between the application of evidence‐based design principles and presentation evaluations among practicing physicians. It demonstrates a significant association between at least one principle and improved evaluations, strengthening the case that these principles should be taught and applied among health professions educators and providing a launch point for future research in this area.

Limitations: Confounding variables may have affected mean score and were not accounted for in this study. Additionally, the CME evaluation tool has not been validated.

3. Hern HG, Gallahue FE, Burns BD, et al. Handoff practices in emergency medicine: are we making progress? Acad Emerg Med 2016;23:197–201.22

Background: Transitions of care present increased risk for miscommunications and subsequent adverse patient outcomes. The objectives of this study were twofold: 1) to better define the current culture around handoff practices in EM and 2) to evaluate if these practices are evolving as more education is devoted to the topic.

Methods: This was a cross‐sectional survey study guided by the Kern model for medical curriculum development aimed at 175 EM residency programs. It used a four‐point Likert‐type scale to elucidate current transitions of care practices. Comparisons were made to a previous survey from 2011 to evaluate for interval changes in these practices.

Results: A total of 127 programs (73%) responded to the survey. Significant interval changes were found in the following domains: increased use of a standardized handoff protocol, increased formalized training of residents during orientation, and a decreased number of programs offering no training. The majority of respondents felt that their residents were “competent” or “highly competent” with the handoff process.

Strengths of Study: This was a multi‐institutional survey study with a moderately high response rate making it highly generalizable. It addresses transitions of care, a topic not widely studied but that is widely encountered and affects both resident education and patient outcomes.

Application to Clinician Educators: This study demonstrates improvement in the standardization of handoff practices and formalized training of the process. It also demonstrates that future educational advancements should focus on assessment of proficiency of this educational intervention.

Limitations: The response rate of 72.6% may limit generalization to all academic EDs.

4. Hoonpongsimanont W, Kulkarni M, Tomas‐Domingo P, et al. Text messaging versus email for emergency medicine residents’ knowledge retention: a pilot comparison in the United States. J Educ Eval Health Prof 2016;13:36.23

Background: The modern resident is accustomed to using technology on a daily basis. Residents use both text and e‐mail as ways to acquire and disseminate information. This study sought to see if there was a difference between these two modalities in terms of knowledge retention of EM content.

Methods: Residents from three EM residencies were randomized into text or e‐mail delivery of EM educational content from an EM board review textbook. All participants completed a 40‐question pre‐ and postintervention examination to assess knowledge retention. Examination scores were compared between groups using descriptive statistics, paired t‐tests, and linear regression.

Results: Fifty‐eight residents were included in this study. The authors found no significant difference between the primary outcomes of the two groups (p = 0.51). PGY‐2 status had a significant negative effect (p = 0.01) on predicted examination score difference. Neither delivery method enhanced resident knowledge retention.

Strengths of Study: The multicenter nature of this study allows for generalizability. This study is novel in that it sought to investigate the effect of text messaging, a modern‐day form of communication and learning that is relevant to the millennial learner.

Application to Clinician Educators: This study shows that there are a variety of methods to deliver educational content. This further enhances delivery of asynchronous education, which is critical in the current learning environment.

Limitations: The authors did not calculate a sample size for the secondary analysis (PGY level and sex) nor did they track whether the text messages and e‐mails were viewed by the residents.

5. Jauregui J, Gatewood MO, Ilgen JS, et al. Emergency medicine resident perceptions of medical professionalism. West J Emerg Med 2016;17:355–61.24

Background: Teaching and assessing professionalism poses challenges for educators and the lack of a consensus definition for professionalism is a major contributor to these difficulties. This study sought to explore EM trainees’ understanding and conceptualization of professionalism by quantifying the value they assigned to various professionalism attributes.

Methods: Survey of incoming and graduating EM residents at four U.S. programs. The authors used the American Board of Internal Medicine's “Project Professionalism” and the ACGME's definition of professionalism as a guide to identify 27 professionalism attributes among seven professionalism domains. Residents were asked to rate each attribute on a 10‐point Likert scale to determine their perceived contribution to the residents’ concept of medical professionalism. The analysis assessed how well each domain mapped to the concept of professionalism.

Results: One hundred of 114 eligible residents (88%) completed the survey. The authors found that “altruism” was rated significantly lower and “respect for others” significantly higher than the other domains. Graduating seniors rated five attributes lower than new interns did: commitment to lifelong learning; active leadership in the community; a portion of care for patients should be for those without pay; active involvement in teaching and/or a professional organization; and compassion and empathy. The majority of residents felt that professionalism could be taught, but only a minority thought that it could be assessed.

Strengths of Study: The high response rate and geographical diversity contribute to the quality of this study. In contrast to much of the previous research on professionalism, which often occurred via structured interview or group formats, the anonymous nature of this survey may have allowed for more honest responses from the residents. This article represents the first study of its kind to focus exclusively on EM residents.

Application to Clinician Educators: Developing an understanding of current EM residents’ conceptions of medical professionalism is an important component of future attempts to teach and evaluate this mandated core competency. The differences found in this study among institutions and training level with regard to certain key attributes of professionalism warrant more robust evaluation.

Limitations: Variability in response rate by institution may have skewed the results. When comparing residents by level of training, the authors only examined one snapshot in time; they did not compare individuals before or after training.

6. Kwan J, Crampton R, Mogensen LL, et al. Bridging the gap: a five stage approach for developing specialty‐specific entrustable professional activities. BMC Med Educ 2016;16:117.25

Background: Entrustable professional activities (EPAs) are units of professional practice that can be useful in determining entrustment decisions for trainees in a competency‐based education model. Little is known about how to produce EPA content after suitable clinical tasks have been identified. This paper describes a rigorous approach to develop EPA content using qualitative methods.

Methods: This study applied focus group and individual interview methods to collect and analyze tasks, content, and entrustment scales for two specialty‐specific EPAs in EM. Specific steps include: 1) select EPA topic, 2) collect EPA content from focus groups and interviews, 3) analyze collected data to generate EPA draft, 4) seek EPA draft feedback from participants and stakeholders, and 5) revise EPA based on feedback.

Results: Applying this approach, the authors developed EPAs for two EM‐specific tasks: managing adult patients with acute chest pain and managing elderly patients following a fall. A three‐level entrustment scale was developed to aid in entrustment decisions for targeted learners.

Strengths of Study: The qualitative methods for this study are rigorous and the conceptual framework is strong. Readers will find the detailed steps, sample interview protocol questions, and example EPAs to provide guidance for similar initiatives.

Application to Clinician Educators: The five‐step process described by the authors can be applied to the development of other EPAs by educators across the continuum of medical education.

Limitations: The findings of the study are limited to emergency medicine in an urban hospital setting.

7. Lorello GR, Hicks CM, Ahmed SA, et al. Mental practice: a simple tool to enhance team‐based trauma resuscitation. CJEM. 2016;18:136–42.26

Background: Team‐based practice is increasingly common for healthcare professionals and is especially important in the setting of trauma resuscitation. Mental practice (MP), defined as the “cognitive rehearsal of a skill in the absence of an overt physical movement,” has been shown to improve skill based performance when teaching surgical skills. Its impact on team performance has not previously been studied.

Methods: Prospective, single‐blinded, randomized, simulation‐based study involving anesthesia, EM, and general surgery residents. Residents were grouped into teams of two. Half of the teams received face‐to‐face teaching on the trauma algorithm and nontechnical elements of team‐based trauma care whereas the other half underwent quiet MP of a descriptive script based on key trauma principles. All teams participated in a high‐fidelity adult trauma simulation which was videotaped for blinded review. Team performance was evaluated using the Mayo High Performance Teamwork Scale (MHPTS), a previously validated rating scale of teamwork skills in a simulated environment. Participants also completed the modified mental imagery questionnaire (mMIQ) to assess their mental imagery aptitude.

Results: Seventy‐eight PGY 1–5 residents participated in the study. The control group had more senior residents than the intervention group. The MP group had a statistically superior performance on the MHPTS and higher mMIQ scores compared to the control group.

Strengths of Study: This study included participants from three specialties across a range of PGY training levels. The main outcome measure was assessed in a blinded fashion using a previously validated simulation teamwork score. Despite the control group having more senior trainees than the intervention group as a result of randomization, the intervention group performed better on both measures.

Application to Clinician Educators: Team‐based practice is a key element of EM clinical practice. This study suggests that MP may be an effective training tool to improve teamwork in high acuity/high cognitive load scenarios.

Limitations: The intra‐class correlation was modest. A change in the resuscitation content may have precluded comparison between groups.

8. Love JN, Yarris LM, Santen SA, et al. A novel specialty‐specific, collaborative faculty development opportunity in education research: program evaluation at five years. Acad Med 2016;91:548–55.27

Background: Establishing one's self as an education scholar while maintaining a busy clinical career has proven to be problematic for those interested in a career in medical education. To overcome some of these barriers, MERC was developed in 2009. This study investigated the program's outcomes 5 years after its inception.

Methods: A mixed‐method design was used in this study, evaluating annual pre/post program surveys, alumni surveys, and quantitative tracking of participants’ publications or presentations resulting from involvement in the MERC program.

Results: At the time of publication of this paper, 149 physicians had participated in the program, 63 of whom had given a national presentation and 30 of whom had authored a peer‐reviewed publication as a result of involvement in the MERC program. The majority of participants reported significantly improved skills and knowledge related to medical education research. The majority of alumni reported that knowledge attained from MERC had been instrumental in career development, including academic promotion.

Strengths of Study: The longitudinal approach to the study design allows for an increased power and decreased bias by incorporating participants in a program as it grows, evolves and develops. This, coupled with the collection of both qualitative and quantitative metrics, adds to the rigor and validation of the outcomes.

Application to Clinician Educators: The authors of this study illustrate important and successful outcome measurements after the deployment of a novel longitudinal faculty development program. This demonstrates the importance of such programs and produces a framework for assessment of similar programs.

Limitations: This study had a relatively low response rate of 58% which may limit generalizability. Additionally, further long‐term outcomes will take several more years to be fully assessed.

9. Richards JB, Strout TD, Seigel TA, et al. Psychometric properties of a novel knowledge assessment tool of mechanical ventilation for emergency medicine residents in the northeastern United States. J Educ Eval Health Prof 2016;13:10.28

Background: Assessing resident knowledge of mechanical ventilation is important in EM and critical care. Validated assessment tools regarding this content area are currently lacking. This study aimed to determine the psychometric properties of a novel tool to assess resident knowledge regarding mechanical ventilation.

Methods: Prospective survey of EM residents at eight institutions across the northeastern United States. A nine‐item survey on baseline mechanical ventilation knowledge was administered to participants. The results were analyzed using Classical Test Theory–based psychometric analysis, including item and reliability analyses to quantify reliability, item difficulty, and item discrimination of the assessment tool. Reliability analysis was performed using both Cronbach's alpha and the Spearman–Brown coefficient for unequal lengths.

Results: A total of 214 of 312 residents (69%) participated in the study. Reliability, item difficulty, and item discrimination were found to be within satisfactory ranges, demonstrating acceptable psychometric properties of this knowledge assessment tool.

Strengths of Study: This study design allows for validation of an assessment tool in an area of medical education where one is currently lacking.

Application to Clinician Educators: Mechanical ventilation knowledge is necessary for emergency physicians. Successful curriculum development requires validated assessment tools to demonstrate knowledge transition and acquisition. This validated tool with appropriate psychometric properties can be used to assess residents’ knowledge of mechanical ventilation.

Limitations: The response rate was 68.6%. Nonresponder bias may have affected item difficulty and discrimination analyses.
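The Classical Test Theory quantities named in the methods above (reliability, item difficulty, and item discrimination) can be illustrated with a minimal sketch. The response matrix below is hypothetical and is not data from the study; the function names are illustrative, and the corrected item‐total correlation is used here as one common discrimination index, which is an assumption rather than the authors' stated method.

```python
from statistics import mean, pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(scores[0])
    item_vars = [pvariance([row[i] for row in scores]) for i in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def item_difficulty(scores):
    """Proportion of respondents answering each item correctly (0/1 scoring)."""
    k = len(scores[0])
    return [mean(row[i] for row in scores) for i in range(k)]


def item_discrimination(scores, i):
    """Corrected item-total correlation: Pearson r between item i and the
    summed score of the remaining items. Undefined if item i has no variance."""
    x = [row[i] for row in scores]
    y = [sum(row) - row[i] for row in scores]
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


# Hypothetical responses: 6 residents x 4 items (1 = correct, 0 = incorrect).
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]

alpha = cronbach_alpha(responses)
difficulty = item_difficulty(responses)
discrimination = [item_discrimination(responses, i) for i in range(4)]
```

In this toy matrix the items range from easy (most residents correct) to hard, and every item correlates positively with the rest of the test, which is the pattern an educator would hope to see before relying on a tool of this kind.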

10. Scales CD, Moin T, Fink A, et al. A randomized, controlled trial of team‐based competition to increase learner participation in quality‐improvement education. Int J Qual Health Care 2016;28:227–32.29

Background: There is increased focus on quality improvement and patient safety in the clinical learning environment but teaching strategies vary considerably. This study sought to identify whether a mobile device platform and team‐based competition would improve resident engagement in an online quality improvement curriculum.

Methods: This was a prospective, randomized study of residents in multiple residency programs at a single institution. Residents were randomized into two groups: those who were involved in a competition and a control group. The competition group was assigned to a team and given points for performance in the online learning curriculum. The primary outcome was percentage of questions answered by residents. Secondary outcomes were total response time, proportion of residents who answered all of the questions, and number of questions that were mastered.

Results: Residents in the competition group demonstrated greater participation than the control group; the percentage of questions attempted at least once was greater in the competition group (79% [SD ± 32] vs. control, 68% [SD ± 37], p = 0.03) and median response time was faster in the competition group (p = 0.006). Differences in participation continued to increase over the duration of the intervention, as measured by average response time and cumulative percent of questions attempted (each p < 0.001).

Strengths of Study: Game mechanics across multiple specialties showed an increase in resident engagement. This is a novel way to deliver quality improvement education. The team‐based approach appears to have a positive motivating effect on residents.

Application to Clinician Educators: Educators should consider team‐based competition as a means to increase engagement in online learning in graduate medical education.

Limitations: This study assesses resident engagement in a quality improvement curriculum but does not assess mastery of content or improved clinical performance.

11. Smith D, Miller DG, Cukor J, et al. Can simulation measure differences in task‐switching ability between junior and senior emergency medicine residents? West J Emerg Med 2016;17:149–52.30

Background: Task switching is a patient care competency of interest to EM educators. The authors hypothesized that level of training affects EM resident performance and developed a simulation scenario with built‐in task switching to measure differences in resident abilities to perform crucial tasks in the context of interruptions.

Methods: A convenience sample of residents at three institutions was invited to participate in a standardized simulated encounter that involved task‐switching to manage a patient with an ST‐elevation myocardial infarction (STEMI) while evaluating and treating a patient with septic shock. Critical actions for both simulated patients were measured by checklist, and logistic regression was used to analyze associations between level of training and demonstration of critical actions.

Results: A total of 87 of 91 (96%) subjects met criteria for proper management of the septic shock patient, and 79 of 91 (87%) subjects identified and properly managed the STEMI patient. There were no significant differences in performance by level of training.

Strengths of Study: This study uses simulation as a tool to elicit competency performance in a way that can be observed and measured in a reproducible way. The development of a scenario with the explicit intent of measuring a relevant but difficult‐to‐measure behavioral concept and implementation at multiple centers are strengths of this study.

Application to Clinician Educators: Educators wishing to study complex behaviors using simulation may find this study useful as a starting point for development and implementation of a multicenter educational study designed to assess task‐switching performance.

Limitations: Inter‐rater reliability was not assessed, and the number of residents who did not perform the critical actions was small.

12. Wagner JF, Schneberk T, Zobris M, et al. What predicts performance? A multicenter study examining the association between resident performance, rank list position, and United States Medical Licensing Examination Step 1 scores. J Emerg Med 2017;52:332–40.31

Background: Residency programs devote significant time and resources into residency selection. Little is known about what applicant characteristics predict success in residency. This study explored whether rank order list (ROL) position, participation in an EM rotation at the program, or United States Medical Licensing Examination (USMLE) Step 1 rank were predictive of residency performance.

Methods: Graduating residents’ performances were ranked by full‐time faculty at four EM residency programs. This graduate ROL was compared with the program's residency ROL, USMLE Step 1 rank, and EM rotation participation to determine associations between each characteristic and ranked residency performance.

Results: In a sample of 93 residents, graduate ROL position did not correlate with Step 1 score or residency ROL position but did correlate with having rotated as a student at the program.

Strengths of Study: This study added to the programs’ understanding of factors that predict applicant performance in residency by quantifying that the ability to observe a student in an EM rotation at a given program is helpful in determining future residency performance.

Application to Clinician Educators: This study adds that USMLE Step 1 scores do not correlate with residency performance and that ROL position does not reliably correlate with ranked graduate performance. Future educational advances might investigate whether some programs are more likely to produce ROLs that do correlate with ranked graduate performance. Exploring the selection methods of these programs may lend insight into what really matters in residency selection.

Limitations: Ranking residents based on judgment of attending faculty is subjective in nature and susceptible to multiple types of biases.
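A comparison of two rank lists of this kind can be sketched with a rank correlation. The example below uses Spearman's rho as an assumed statistic (the summary above does not specify which test the authors used), and the two rank lists are hypothetical values for six graduates, not study data.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1  # mean of the 1-based positions in the tie
        for j in range(pos, end + 1):
            ranks[order[j]] = shared
        pos = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical ranks for six graduates (1 = best); not data from the study.
graduate_rank = [1, 2, 3, 4, 5, 6]   # faculty ranking at graduation
residency_rol = [4, 1, 6, 2, 5, 3]   # position on the program's original ROL

rho = spearman_rho(graduate_rank, residency_rol)
```

A rho near zero, as in this toy example, would correspond to the finding that original ROL position carried little information about ranked performance at graduation.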

Discussion

In 2016, there continued to be a trend toward an increasing number of articles that met our a priori criteria for full review (n = 75) compared with 2015 (n = 61)14 and 2014 (n = 25).13 As the field of medical education continues to grow, we expect that this trend will continue. There was a decrease in the number of funded studies in 2016; 11% of all articles23, 25, 26, 32, 33, 34, 35, 36 compared to 20% in 2015 and 16% in 2014. There is some literature to suggest that funded medical education research may be of higher quality.37 However, the decline in funded research does not necessarily indicate a decline in quality of EM medical education research overall. Possible explanations for this trend may include a decrease in the amount of available funds overall or a shift in the research priorities of funding agencies. Three of the 12 highlighted studies were funded.23, 25, 26

The number of studies that have at least one EM author continues to be high, 89% (67/75) in 2016 compared to 95% in 2015 and 84% in 2014. EM journals published 64% (48/75) of the articles in this review. This represents a decrease from 2015 where EM journals published 71% of the eligible articles. This decrease was balanced by an increase in articles published in various other types of journals (17% in 2016 compared to 11% in 2015), while the percentage of articles published in medical education journals remained relatively constant (19% in 2016 compared to 18% in 2015). The other journals that published EM medical education research in 2016 were broad in focus including simulation, trauma, ultrasound, quality and safety, and general medicine.

While the majority of articles continue to come from the United States (63/75; 84%) and Canada (3/75; 4%), where EM and EM education research are widely accepted disciplines, there continues to be a notable presence (9/75; 12%) of studies from other countries around the world, including the United Kingdom, France, Australia, Korea, Turkey, Belgium, and Iran. Three of the highlighted articles were from studies based outside the United States (Canada, United Kingdom, Australia).
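The proportions reported in this paragraph follow directly from the stated counts out of 75 reviewed articles. A minimal sketch of that arithmetic is shown below; the helper name `pct` and the dictionary layout are illustrative choices, not part of the review's methods.

```python
def pct(count, total):
    """Return count/total as a whole-number percentage."""
    return round(100 * count / total)


TOTAL = 75  # articles that met inclusion criteria in 2016

# Counts by country of origin as reported in the paragraph above.
origin = {"United States": 63, "Canada": 3, "Other countries": 9}

# The three groups should account for every reviewed article.
assert sum(origin.values()) == TOTAL

for region, n in origin.items():
    print(f"{region}: {n}/{TOTAL} = {pct(n, TOTAL)}%")
# United States: 63/75 = 84%
# Canada: 3/75 = 4%
# Other countries: 9/75 = 12%
```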

In response to a call for increased rigor in medical education research,37, 38, 39, 40, 41, 42 EM education researchers increasingly apply thorough methodologic standards and evaluate higher level outcomes in their research studies. In 2016, there was a notable increase in the number of studies utilizing an experimental (hypothesis testing) design, 15 of 75 (20%),23, 26, 29, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53 compared to nine of 61 (15%) in 2015. Three of these studies with an experimental design were highlighted.23, 26, 29 There was a decline in the number of observational studies (35/75; 47%),20, 21, 27, 28, 30, 31, 34, 35, 36, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79 compared to 2015 (36/61; 59%), but an increase in the number of studies using survey methodology (19/75; 25%)22, 24, 53, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94 in 2016 compared to 10 of 61 (16%) in 2015 and 0% in 2014. It should be noted that all survey studies in this review drew data from participants from multiple institutions per the predetermined selection criteria. Commonly, these were nationwide surveys in the United States. This may reflect an increase in the quality of survey studies. Surveys addressing important topics can provide valuable information to EM educators provided that the study design is aligned with the research question and adheres to established guidelines.95 Two of these survey studies met criteria to be highlighted in this review. Additionally, in the United States, CORD maintains a listserv of EM educators, making the widespread administration of Internet‐based, education‐related surveys feasible. A small number of studies included in this review utilized qualitative methods, which is consistent with the critical appraisals from prior years: six in 2016,25, 32, 96, 97, 98, 99 six in 2015, three in 2014, and seven in 2013.

Excluding surveys, 10 studies (13%)23, 27, 28, 30, 31, 32, 58, 61, 78, 79 were conducted at two or more institutions. This is similar to 2015 where 10 of 61 (16%) were multi‐institutional. The results of multi‐institutional studies are more likely to be generalizable and this type of work is to be encouraged. Of note, five of the highlighted articles in this review (excluding survey studies) were multi‐institutional.

Similar to prior years, the most common study populations were medical students (21/75; 28%)31, 47, 48, 56, 60, 62, 64, 65, 66, 67, 68, 69, 71, 74, 77, 81, 86, 88, 94, 97, 98 and residents (48/75; 64%).20, 22, 23, 24, 25, 26, 27, 29, 30, 31, 33, 34, 35, 36, 43, 44, 45, 46, 49, 50, 51, 52, 53, 54, 57, 58, 61, 63, 70, 73, 75, 76, 78, 80, 82, 84, 85, 87, 89, 90, 91, 92, 96, 99, 100, 101 Interestingly, this year only one study31 addressing medical students was highlighted for excellence, in contrast to prior years. In 2015, 33% of highlighted articles studied medical students, as did 42% of highlighted articles in 2014. The reasons for this finding are unclear; however, it serves as a reminder to EM education researchers to apply rigorous methodologic standards to this learner group. Other study populations in 2016 included faculty, practicing physicians, fellows, and nurses.

Several prominent topics of study in 2016 were similar to 2015, including technology,21, 23, 26, 29, 30, 35, 43, 44, 45, 46, 48, 49, 50, 51, 52, 54, 55, 63, 68, 70, 75, 76, 96 simulation,26, 30, 35, 43, 44, 45, 46, 48, 50, 54, 76, 96 and assessment.25, 28, 30, 36, 53, 56, 57, 58, 60, 62, 64, 65, 66, 71, 72, 73, 77, 80, 85, 88, 98, 99 Although still a common topic, the frequency of assessment dropped off substantially from 66% of articles in 2015 to 29% in 2016. As the EM community has settled into the Next Accreditation System, there are likely fewer unanswered questions regarding this topic, which may account for this decline. In place of assessment, we have seen a resurgence of studies evaluating teaching and learning methods,21, 23, 26, 27, 29, 33, 34, 47, 48, 51, 52, 59, 61, 84, 97 a topic that comprised 36% of reviewed articles in 2014, was not seen in 2015, and now makes up 20% of the included articles in this year's review. Similarly, curriculum evaluation studies27, 34, 35, 59, 67, 70, 75, 79, 87, 89, 90, 92, 93, 101 that made up 38% of studies in 2013 dropped to 10% in 2015 and have again risen to 20% in 2016. Procedural teaching and learning35, 43, 57, 66, 73, 76 remained fairly stable (8% of articles in 2016 and 12% in 2015). Interestingly, we saw a number of studies focus on the residency application process and subsequent effects,31, 69, 78, 81, 86 and one of these was highlighted for excellence. Given the large amount of resources from multiple stakeholder groups invested in this process, research in this area is prudent. Another interesting finding was that three studies22, 82, 100 included in this review were specifically noted to be the work of EM professional society committees or task forces, demonstrating the support of education scholarship by the specialty as a whole.

Limitations

There are several limitations to this review. Despite extensive searches using previously implemented strategies, it is possible that our search methodology was overly restrictive and, as a result, omitted some high‐quality studies. Given the growing number of medical education publications this year, we believe that continuing to exclude single‐site surveys and studies that examined outcomes limited to an expected learning effect without a control group, as has been done previously, would best allow us to identify those studies with the greatest ability to affect EM practice. Additionally, exclusion criteria were applied by one reviewer initially, although uncertainty was resolved by two other reviewers through discussion. This may have introduced bias into which studies were excluded. Furthermore, while the scoring methodology has been adapted from previous iterations of this publication, it has not been externally validated. This may have led to a rubric that is too stringent. Finally, the first round of scoring was done by two reviewers based on methodology, which may have further eliminated some studies. However, the interclass correlation was high (κ = 0.997).

Conclusion

This critical appraisal of the emergency medicine medical education literature highlights the top papers of 2016. The 11 top‐scoring quantitative studies and one qualitative study described here represent the best research published this year. Additionally, trends in EM medical education research in 2016 are described. This paper is intended to serve as a resource for EM educators by providing exemplary models of sound medical education research and to help guide best medical education practices.

Supporting information

Data Supplement S1. Supplemental material.

AEM Education and Training 2019;3:58–73.

The authors have no relevant financial information or potential conflicts to disclose.

Author Contributions: NMD and JF were responsible for study design and oversight; AMJ performed the search and drafted the methods; JF was responsible for the statistical analysis; LMY, EU, JK, and DR summarized the articles highlighted; and JJ drafted the discussion.

References

- 1. LaMantia J, Deiorio NM, Yarris LM. Executive summary: education research in emergency medicine‐opportunities, challenges, and strategies for success. Acad Emerg Med 2012;19:1319–22.

- 2. Society for Academic Emergency Medicine. Education Research Grant. Available at: https://www.saem.org/saem-foundation/grants/funding-opportunities/what-we-fund/education-fellowship-grant. Accessed June 30, 2018.

- 3. Council of Emergency Medicine Residency Directors and Emergency Medicine Foundation. CORD‐EMF Education Research Grant. Available at: https://www.cordem.org/opportunities/cord-grants/cord-emf-education-research-grant/. Accessed June 30, 2018.

- 4. LaMantia J, Deiorio NM, Yarris LM. Executive summary: education research in emergency medicine‐opportunities, challenges, and strategies for success. Acad Emerg Med 2012;19:1319–22.

- 5. LaMantia J, Yarris LM, Dorfsman ML, Deiorio NM, Wolf S. The Council of Residency Directors’ (CORD) Academy for Scholarship in Education in Emergency Medicine: a five‐year update. West J Emerg Med 2017;18:26–30.

- 6. Society for Academic Emergency Medicine. Education Scholarship Fellowship. Available at: http://www.saem.org/resources/services/fellowship-approval-program/education-scholarship-fellowship. Accessed July 4, 2018.

- 7. Farrell SE, Coates WC, Kuhn GJ, Fisher J, Shayne P, Lin M. Highlights in emergency medicine medical education research: 2008. Acad Emerg Med 2009;16:1318–24.

- 8. Kuhn GJ, Shayne P, Coates WC, et al. Critical appraisal of emergency medicine educational research: the best publications of 2009. Acad Emerg Med 2010;17(Suppl 2):S16–25.

- 9. Shayne P, Coates WC, Farrell SE, et al. Critical appraisal of emergency medicine research: the best publications of 2010. Acad Emerg Med 2011;18:1081–9.

- 10. Fisher J, Lin M, Coates WC, et al. Critical appraisal of emergency medicine educational research: the best publications of 2011. Acad Emerg Med 2013;20:200–8.

- 11. Lin M, Fisher J, Coates WC, et al. Critical appraisal of emergency medicine research: the best publications of 2012. Acad Emerg Med 2014;21:322–33.

- 12. Farrell SE, Kuhn GJ, Coates WC, et al. Critical appraisal of emergency medicine research: the best publications of 2013. Acad Emerg Med 2014;21:1274–83.

- 13. Yarris LM, Juve AM, Coates WC, et al. Critical appraisal of emergency medicine research: the best publications of 2014. Acad Emerg Med 2015;22:1327–36.

- 14. Heitz C, Coates W, Farrell SE, et al. Critical appraisal of emergency medicine research: the best publications of 2015. Acad Emerg Med 2017;24:1212–25.

- 15. Kogan JR. Review of medical education articles in internal medicine journals. Alliance for Clinical Education (ACE) journal watch. Teach Learn Med 2005;17:307–11.

- 16. Margo K, Chumley H. Review of medical education articles in family medicine journals. Journal watch from ACE (Alliance for Clinical Education). Teach Learn Med 2006;18:82–6.

- 17. Cote L, Turgeon J. Appraising qualitative research articles in medicine and medical education. Med Teach 2005;27:71–5.

- 18. Daly J, Willis K, Small R, et al. A hierarchy of evidence for assessing qualitative health research. J Clin Epidemiol 2007;60:43–9.

- 19. O'Brien BC, Harris IB, Beckman TJ, Reed DA, Cook DA. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med 2014;89:1245–51.

- 20. Counselman FL, Kowalenko T, Marco CA, et al. The relationship between ACGME duty hour requirements and performance on the American Board of Emergency Medicine qualifying examination. J Grad Med Educ 2016;8:558–62.

- 21. Ferguson I, Phillips AW, Lin M. Continuing medical education speakers with high evaluation scores use more image‐based slides. West J Emerg Med 2017;18:152–58.

- 22. Hern HG, Gallahue FE, Burns BD, et al. Handoff practices in emergency medicine: are we making progress? Acad Emerg Med 2016;23:197–201.

- 23. Hoonpongsimanont W, Kulkarni M, Tomas‐Domingo P, et al. Text messaging versus email for emergency medicine residents’ knowledge retention: a pilot comparison in the United States. J Educ Eval Health Prof 2016;13:36.

- 24. Jauregui J, Gatewood MO, Ilgen JS, et al. Emergency medicine resident perceptions of medical professionalism. West J Emerg Med 2016;17:355–61.

- 25. Kwan J, Crampton R, Mogensen LL, et al. Bridging the gap: a five stage approach for developing specialty‐specific entrustable professional activities. BMC Med Educ 2016;16:117.

- 26. Lorello GR, Hicks CM, Ahmed SA, et al. Mental practice: a simple tool to enhance team‐based trauma resuscitation. CJEM 2016;18:136–42.

- 27. Love JN, Yarris LM, Santen SA, et al. A novel specialty‐specific, collaborative faculty development opportunity in education research: program evaluation at five years. Acad Med 2016;91:548–55.

- 28. Richards JB, Strout TD, Seigel TA, et al. Psychometric properties of a novel knowledge assessment tool of mechanical ventilation for emergency medicine residents in the northeastern United States. J Educ Eval Health Prof 2016;13:10.

- 29. Scales CD, Moin T, Fink A, et al. A randomized, controlled trial of team‐based competition to increase learner participation in quality‐improvement education. Int J Qual Health Care 2016;28:227–32.

- 30. Smith D, Miller DG, Cukor J, et al. Can simulation measure differences in task‐switching ability between junior and senior emergency medicine residents? West J Emerg Med 2016;17:149–52.

- 31. Wagner JF, Schneberk T, Zobris M, et al. What predicts performance? A multicenter study examining the association between resident performance, rank list position, and United States Medical Licensing Examination step 1 scores. J Emerg Med 2017;52:332–40.

- 32. Adams E, Goyder C, Heneghan C, Brand L, Ajjawi R. Clinical reasoning of junior doctors in emergency medicine: a grounded theory study. Emerg Med J 2017;34:70–5.

- 33. Alavi‐Moghaddam M, Yazdani S, Mortazavi F, Chichi S, Hosseini‐Zijoud SM. Evidence‐based medicine versus the conventional approach to journal club sessions: which one is more successful in teaching critical appraisal skills? Chonnam Med J 2016;52:107–11.

- 34. Duong DK, O'Sullivan PS, Satre DD, Soskin P, Satterfield J. Social workers as workplace‐based instructors of alcohol and drug screening, brief intervention, and referral to treatment (SBIRT) for emergency medicine residents. Teach Learn Med 2016;28:303–13.

- 35. McGraw R, Chaplin T, McKaigney C, et al. Development and evaluation of a simulation‐based curriculum for ultrasound‐guided central venous catheterization. CJEM 2016;18:405–13.

- 36. Tichter AM, Mulcare MR, Carter WA. Interrater agreement of emergency medicine milestone levels: resident self‐evaluation vs clinical competency committee consensus. Am J Emerg Med 2016;34:1677–9.

- 37. Reed DA, Cook DA, Beckman TJ, Levine RB, Kern DE, Wright SM. Association between funding and the quality of published medical education research. JAMA 2007;298:1002–9.

- 38. Sullivan GM, Simpson D, Cook D, et al. Redefining quality in medical education research: a consumer's view. J Grad Med Educ 2014;6:424–9.

- 39. Cook DA, Beckman TJ, Bordage G. Quality of reporting of experimental studies in medical education: a systematic review. Med Educ 2007;41:737–45.

- 40. Cook DA, Levinson AJ, Garside S. Method and reporting quality in health professions education research: a systematic review. Med Educ 2011;45:227–38.

- 41. Chen FM, Bauchner H, Burstin H. A call for outcomes research in medical education. Acad Med 2004;79:955–60.

- 42. Lurie SJ. Raising the passing grade for studies of medical education. JAMA 2003;290:1210–12.

- 43. Chung TN, Kim SW, You JS, Chung HS. Tube thoracostomy training with a medical simulator is associated with faster, more successful performance of the procedure. Clin Exp Emerg Med 2016;3:16–19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44. Ahmed RA, Atkinson SS, Gable B, Yee J, Gardner AK. Coaching from the sidelines: examining the impact of teledebriefing in simulation‐based training. Simul Healthc 2016;11:334–9. [DOI] [PubMed] [Google Scholar]

- 45. Ahn J, Yasher MD, Novack J, et al. Mastery learning of video laryngoscopy using the glidescope in the emergency department. Simul Healthc 2016;11:309–15. [DOI] [PubMed] [Google Scholar]

- 46. Bragard I, Farhat N, Seghaye MC, et al. Effectiveness of a high‐fidelity simulation‐based training program in managing cardiac arrhythmias in children: a randomized pilot study. Pediatr Emerg Care 2016. [Epub ahead of print] [DOI] [PubMed] [Google Scholar]

- 47. Cho Y, Je S, Yoon YS, et al. The effect of peer‐group size on the delivery of feedback in basic life support refresher training: a cluster randomized controlled trial. BMC Med Educ 2016;16:167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. House JB, Choe CH, Wourman HL, Berg KM, Fischer JP, Santen SA. Efficient and effective use of peer teaching for medical student simulation. West J Emerg Med 2017;18:137–41. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Kim H, Heverling H, Cordeiro M, Vasquez V, Stolbach A. Internet training resulted in improved trainee performance in a simulated opioid‐poisoned patient as measured by checklist. J Med Toxicol 2016;12:289–94. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50. Olszynski PA, Harris T, Renihan P, D'Eon M, Premkumar K. Ultrasound during critical care simulation: a randomized crossover study. CJEM 2016;18:183–90. [DOI] [PubMed] [Google Scholar]

- 51. Rose E, Claudius I, Tabatabai R, Kearl L, Behar S, Jhun P. The flipped classroom in emergency medicine using online videos with interpolated questions. J Emerg Med 2016;51:284–91. [DOI] [PubMed] [Google Scholar]

- 52. Sarihan A, Oray NC, Gullupinar B, Yanturali S, Atilla R, Musal B. The comparison of the efficiency of traditional lectures to video‐supported lectures within the training of the emergency medicine residents. Turk J Emerg Med 2016;16:107–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53. Smalley CM, Dorey A, Thiessen M, Kendall JL. A survey of ultrasound milestone incorporation into emergency medicine training programs. J Ultrasound Med 1016;35:1517–21 [DOI] [PubMed] [Google Scholar]

- 54. Bonjour TJ, Charny G, Thaxton RE. Trauma resuscitation evaluation times and correlating human patient simulation training differences‐what is the standard? Mil Med 2016;181:e1630–6. [DOI] [PubMed] [Google Scholar]

- 55. Chan TM, Grock A, Paddock M, Kulasegaram K, Yarris LM, Lin M. Examining reliability and validity of an online score (ALiEM AIR) for rating free open access medical education resources. Ann Emerg Med 2016;68:729–35. [DOI] [PubMed] [Google Scholar]

- 56. Chiu DT, Solano JJ, Ullman E, et al. The integration of electronic medical student evaluations into an emergency department tracking system is associated with increased quality and quantity of evaluations. J Emerg Med 2016;51:432–9. [DOI] [PubMed] [Google Scholar]

- 57. Hartman ND, Wheaton NB, Williamson K, et al. Validation of a performance checklist for ultrasound‐guided internal jugular central lines for use in procedural instruction and assessment. Postgrad Med J 2016;93:67–70.

- 58. Hartman ND, Wheaton NB, Williamson K, et al. A novel tool for assessment of emergency medicine resident skill in determining diagnosis and management for emergent electrocardiograms: a multicenter study. J Emerg Med 2016;51:697–704.

- 59. Hashem J, Culbertson MD, Munyak J, Choueka J, Patel NP. The need for clinical hand education in emergency medicine residency programs. Bull Hosp Jt Dis 2016;74:203–6.

- 60. Heitz CR, Lawson L, Beeson M, Miller ES. The national emergency medicine fourth‐year student (M4) examinations: updates and performance. J Emerg Med 2016;50:128–34.

- 61. Hexom B, Trueger NS, Levene R, Ioannides KL, Cherkas D. The educational value of emergency department teaching: it is about time. Intern Emerg Med 2017;12:207–12.

- 62. Hiller KM, Waterbrook A, Waters K. Timing of emergency medicine student evaluation does not affect scoring. J Emerg Med 2016;50:302–7.

- 63. Jackson SA, Derr C, De Lucia A, et al. Sonographic identification of peripheral nerves in the forearm. J Emerg Trauma Shock 2016;9:146–50.

- 64. Kane KE, Yenser D, Weaver KR, et al. Correlation between United States Medical Licensing Examination and comprehensive osteopathic medical licensing examination scores for applicants to a dually approved emergency medicine residency. J Emerg Med 2017;52:216–22.

- 65. Kreiter CD, Wilson AB, Humbert AJ, Wade PA. Examining rater and occasion influences in observational assessments obtained from within the clinical environment. Med Educ Online 2016;21:29279.

- 66. Lamba S, Wilson B, Natal B, Nagurka R, Anana M, Sule H. A suggested emergency medicine boot camp curriculum for medical students based on the mapping of core entrustable professional activities to emergency medicine level 1 milestones. Adv Med Educ Pract 2016;7:115–24.

- 67. Leung CG, Thompson L, McCallister JW, Way DP, Kman NE. Promoting achievement of level 1 milestones for medical students going into emergency medicine. West J Emerg Med 2017;18:20–5.

- 68. Lew EK, Nordquist EK. Asynchronous learning: student utilization out of sync with their preference. Med Educ Online 2016;21:30587.

- 69. Lewis J, Dubosh N, Rosen C, Schoenfeld D, Fisher J, Ullman E. Interview day environment may influence applicant selection of emergency medicine residency programs. West J Emerg Med 2017;18:142–5.

- 70. Lin M, Joshi N, Grock A, et al. Approved instructional resources series: a national initiative to identify quality emergency medicine blog and podcast content for resident education. J Grad Med Educ 2016;8:219–25.

- 71. Quinn SM, Worrilow CC, Jayant DA, Bailey B, Eustice E, Kohlhepp J. Using milestones as evaluation metrics during an emergency medicine clerkship. J Emerg Med 2016;51:426–31.

- 72. Rankin JH, Elkhunovich MA, Rangarajan V, Chilstrom M, Mailhot T. Learning curves for ultrasound assessment of lumbar puncture insertion sites: when is competency established? J Emerg Med 2016;51:55–62.

- 73. Rice J, Crichlow A, Baker M, et al. An assessment tool for the placement of ultrasound‐guided peripheral intravenous access. J Grad Med Educ 2016;8:202–7.

- 74. Smith AB, Semler L, Rehman EA, et al. A cross‐sectional study of medical student knowledge of evidence‐based medicine as measured by the Fresno test of evidence‐based medicine. J Emerg Med 2016;50:759–64.

- 75. Stuntz R, Clontz R. An evaluation of emergency medicine core content covered by free open access medical education resources. Ann Emerg Med 2016;67:649–53.

- 76. Takayesu JK, Peak D, Stearns D. Cadaver‐based training is superior to simulation training for cricothyrotomy and tube thoracostomy. Intern Emerg Med 2017;12:99–102.

- 77. Tews MC, Treat RW, Nanes M. Increasing completion rate of an M4 emergency medicine student end‐of‐shift evaluation using a mobile electronic platform and real‐time completion. West J Emerg Med 2016;17:478–83.

- 78. Van Meter M, Williams M, Banuelos R, et al. Does the National Resident Match Program rank list predict success in emergency medicine residency programs? J Emerg Med 2017;52:77–82.

- 79. Wilcox SR, Strout TD, Schneider JI, et al. Academic emergency medicine physicians’ knowledge of mechanical ventilation. West J Emerg Med 2016;17:271–9.

- 80. Bentley S, Hu K, Messman A, et al. Are all competencies equal in the eyes of residents? A multicenter study of emergency medicine residents’ interest in feedback. West J Emerg Med 2017;18:76–81.

- 81. Blackshaw AM, Watson SC, Bush JS. The cost and burden of the residency match in emergency medicine. West J Emerg Med 2017;18:169–73.

- 82. Boatright D, Tunson J, Caruso E, et al. The impact of the 2008 Council of Emergency Residency Directors (CORD) panel on emergency medicine resident diversity. J Emerg Med 2016;51:576–83.

- 83. Greenstein J, Hardy R, Chacko J, Husain A. Demographics and fellowship training of residency leadership in EM: a descriptive analysis. West J Emerg Med 2017;18:129–32.

- 84. Hopson L, Regan L, Gisondi MA, Cranford JA, Branzetti J. Program director opinion on the ideal length of residency training in emergency medicine. Acad Emerg Med 2016;23:823–7.

- 85. Ketterer AR, Salzman DH, Branzetti JB, Gisondi MA. Supplemental milestones for emergency medicine residency programs: a validation study. West J Emerg Med 2017;18:69–75.

- 86. King K, Kass D. What do they want from us? A survey of EM program directors on EM application criteria. West J Emerg Med 2017;18:126–8.

- 87. Kraus CK, Greenberg MR, Ray DE, Dy SM. Palliative care education in emergency medicine residency training: a survey of program directors, associate program directors, and assistant program directors. J Pain Symptom Manage 2016;51:898–906.

- 88. Lawson L, Jung J, Franzen D, Hiller K. Clinical assessment of medical students in emergency medicine clerkships: a survey of current practice. J Emerg Med 2016;51:705–11.

- 89. London KS, Druck J, Silver M, Finefrock D. Teaching the emergency department patient experience: needs assessment from the CORD‐EM Task Force. West J Emerg Med 2017;18:56–9.

- 90. Morris SC, Schroeder DE. Emergency medicine resident rotations abroad: current status and next steps. West J Emerg Med 2016;17:63–5.

- 91. Nadir NA, Bentley S, Papanagnou D, Bajaj K, Rinnert S, Sinert R. Characteristics of real‐time, non‐critical incident debriefing practices in the emergency department. West J Emerg Med 2017;18:146–51.

- 92. Shappell E, Ahn J. A needs assessment for longitudinal emergency medicine intern curriculum. West J Emerg Med 2017;18:31–4.

- 93. Strobel AM, Chasm RM, Woolridge DP. A survey of graduates of combined emergency medicine‐pediatrics residency programs: an update. J Emerg Med 2016;51:418–25.

- 94. Wittels K, Wallenstein J, Patwari R, Patel S. Medical student documentation in the electronic medical record: patterns of use and barriers. West J Emerg Med 2017;18:133–6.

- 95. Rickards G, Magee C, Artino AR Jr. You can't fix by analysis what you've spoiled by design: developing survey instruments and collecting validity evidence. J Grad Med Educ 2012;4:407–10.

- 96. Bourgeon L, Bensalah M, Vacher A, Ardouin JC, Debien B. Role of emotional competence in residents’ simulated emergency care performance: a mixed‐methods study. BMJ Qual Saf 2016;25:364–71.

- 97. Chary M, Leuthauser A, Hu K, Hexom B. Differences in self‐expression reflect formal evaluation in a fourth‐year emergency medicine clerkship. West J Emerg Med 2017;18:174–80.

- 98. Sozener CB, Lypson ML, House JB, et al. Reporting achievement of medical student milestones to residency program directors: an educational handover. Acad Med 2016;91:676–84.

- 99. Moreau KA, Eady K, Frank JR, et al. A qualitative exploration of which resident skills parents in pediatric emergency departments can assess. Med Teach 2016;38:1118–24.

- 100. Lee S, Jordan J, Hern HG, et al. Transition of care practices from emergency department to inpatient: survey data and development of algorithm. West J Emerg Med 2017;18:86–92.

- 101. Smalley CM, Thiessen M, Byyny R, Dorey A, McNair B, Kendall JL. Number of weeks rotating in the emergency department has a greater effect on ultrasound milestone competency than a dedicated ultrasound rotation. J Ultrasound Med 2017;36:335–43.

Supplementary Materials

Data Supplement S1. Supplemental material.