Abstract

Background

Simulation has become integral to the training of both undergraduate medical students and medical professionals. Due to the increasing degree of realism and range of features, the latest mannequins are referred to as high-fidelity simulators. Whether increased realism leads to a general improvement in trainees’ outcomes is currently controversial and there are few data on the effects of these simulators on participants’ personal confidence and self-assessment.

Methods

One-hundred-and-thirty-five fourth-year medical students were randomly allocated to participate in either a high- or a low-fidelity simulated Advanced Life Support training session. Theoretical knowledge and self-assessment pre- and post-tests were completed. Students’ performance in simulated scenarios was recorded and rated by experts.

Results

Participants in both groups showed a significant improvement in theoretical knowledge in the post-test compared with the pre-test, without significant intergroup differences. Performance, as assessed by video analysis, was comparable between groups, but, unexpectedly, the low-fidelity group had significantly better results in several sub-items. Irrespective of these findings, participants in the high-fidelity group considered themselves to be advantaged compared with those in the low-fidelity group, solely on the basis of their group allocation, in both the pre- and post-course self-assessments. Their self-rated confidence in their individual performance was also significantly inflated.

Conclusion

The use of high-fidelity simulation led to equal or even worse performance and growth in knowledge compared with low-fidelity simulation, while also inducing undesirable effects such as overconfidence. Hence, in this study, high-fidelity simulation offered no benefit over low-fidelity simulation and instead proved to be an adverse learning tool.

Keywords: Simulation, Education, Medical, Overconfidence

Background

Simulation is increasingly used for training and education of medical professionals [1].

Since its origins in the 1960s, with the development of simulation mannequins such as “ResusciAnne” or the “Harvey cardiology mannequin” for training of cardiological examination skills [2], simulation-based training has spread to various disciplines and remains a strongly growing market [3–7].

Numerous trials have amply demonstrated the positive effects of simulation-based training on technical skills, while also reducing peri-interventional risks and complications. These outcomes might translate into improved patient care [3, 6, 8, 9].

Simulation-based education seems ideal for giving medical students practical "hands-on" opportunities to apply their theoretical knowledge, by training procedural skills and (single) tasks in simulated environments. This is widely regarded as a positive development, since a lack of practice remains a common complaint in medical education [10].

Due to the continuous technical development of hardware and software, current simulators provide a close-to-reality experience and contain features such as realistic physiological responses, the ability to communicate and interact with the mannequin, and various other feedback mechanisms. These highly realistic devices do not just function as single-task trainers but, by providing realistic feedback, present the user with complex and immersive scenarios; they are therefore referred to as high-fidelity (HF) simulators. In contrast, part-task trainers with limited functions, which meet only selected requirements for practicing procedural skills, are referred to as low-fidelity (LF) simulators.

Intuitively, a positive correlation between the degree of realism of a simulator and the effect on learning outcomes of the trainees is assumed, but several studies have found no distinct advantage of HF compared to LF simulation with regards to improvement of knowledge or skills [11–13].

In a previous trial comparing the efficacy of simulation-based training versus problem-based discussions, we found no significant differences in short-term outcomes between groups for either theoretical or practical knowledge. However, the simulation-based training group had significantly higher self-assessment scores and inflated self-confidence, profoundly overrating its abilities [14]. In educational programs, this is an undesirable effect, given the positive link between overconfidence and risk-taking behavior [15, 16]. Some studies have shown that overconfidence is one of the most common cognitive biases leading to diagnostic errors [17, 18].

Whether simulation-based medical education per se favors the occurrence of inflated self-confidence and flawed self-judgment of individual skills, abilities and knowledge is not known. Hence, the aim of this trial was to examine the impact of HF versus LF simulation on self-assessment and confidence.

Methods

Study design

This randomized trial was conducted during a curricular advanced life support (ALS) training course session for medical students. The study was approved by the Ethics Committee of the University of Münster (protocol number 2014–544-f-S) on 6 November 2014, and all students gave written informed consent to participate. All participants were misinformed about the real purpose of the study: students were told that a simple internal quality assessment of medical education and simulators was being carried out, but not that changes in confidence were being assessed.

An a priori power calculation for an independent two-sample t-test, based on previously published data, was conducted to determine a sufficient sample size in each group, assuming a 1:1 allocation of controls (LF simulation) to experimental subjects (HF simulation), a type I error of 0.05 and a power of 80%.
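The article does not report the effect size or the published data on which the calculation was based. As a hedged illustration only, the sketch below applies the common normal-approximation formula for a two-sided, two-sample comparison with equal group sizes, n per group = 2((z₁₋α/₂ + z₁₋β)/d)², where d is a standardized effect size (Cohen's d). The values of d used here are hypothetical, not the authors' assumption, and tools based on the noncentral t distribution typically return a slightly larger n.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-sided, two-sample t-test
    with 1:1 allocation, using the normal approximation.

    effect_size_d is a hypothetical standardized mean difference (Cohen's d);
    the study does not state the value it assumed.
    """
    if effect_size_d <= 0:
        raise ValueError("effect size must be positive")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided type I error
    z_beta = z.inv_cdf(power)           # power = 1 - type II error
    n = 2 * ((z_alpha + z_beta) / effect_size_d) ** 2
    return math.ceil(n)                 # round up to whole participants


if __name__ == "__main__":
    for d in (0.5, 0.8, 1.0):
        print(f"d = {d}: {n_per_group(d)} per group")
```

With α = 0.05 and 80% power, an assumed medium effect (d = 0.5) yields 63 participants per group under this approximation; larger assumed effects shrink the requirement quickly.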

All fourth-year medical students were included in the study and were randomly distributed by the medical faculty of the University of Münster into 14 groups of 10 students each. These groups were then allocated to either the LF or the HF simulation group using a permuted-block randomization sequence.
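The article does not state the block size or the software used for randomization. A minimal sketch of permuted-block allocation of the 14 student groups, assuming a hypothetical block size and seed: each block contains equal numbers of LF and HF slots in shuffled order, so the two arms are balanced after every completed block.

```python
import random


def permuted_block_sequence(n_units, arms=("LF", "HF"), block_size=2, seed=None):
    """Return an allocation list of length n_units using permuted blocks.

    block_size must be a multiple of the number of arms. block_size and seed
    are illustrative assumptions, not values reported by the study.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block size must be a multiple of the number of arms")
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_units:
        block = list(arms) * (block_size // len(arms))  # equal slots per arm
        rng.shuffle(block)                              # permute within the block
        sequence.extend(block)
    return sequence[:n_units]  # a truncated final block may be slightly unbalanced


if __name__ == "__main__":
    allocation = permuted_block_sequence(14, block_size=2, seed=2014)
    for group_number, arm in enumerate(allocation, start=1):
        print(f"student group {group_number:2d}: {arm}")
    print({arm: allocation.count(arm) for arm in ("LF", "HF")})
```

With 14 units and a block size of 2, the arms end up exactly 7 to 7; larger blocks make the sequence harder to predict at the cost of a possible small imbalance in a final partial block.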

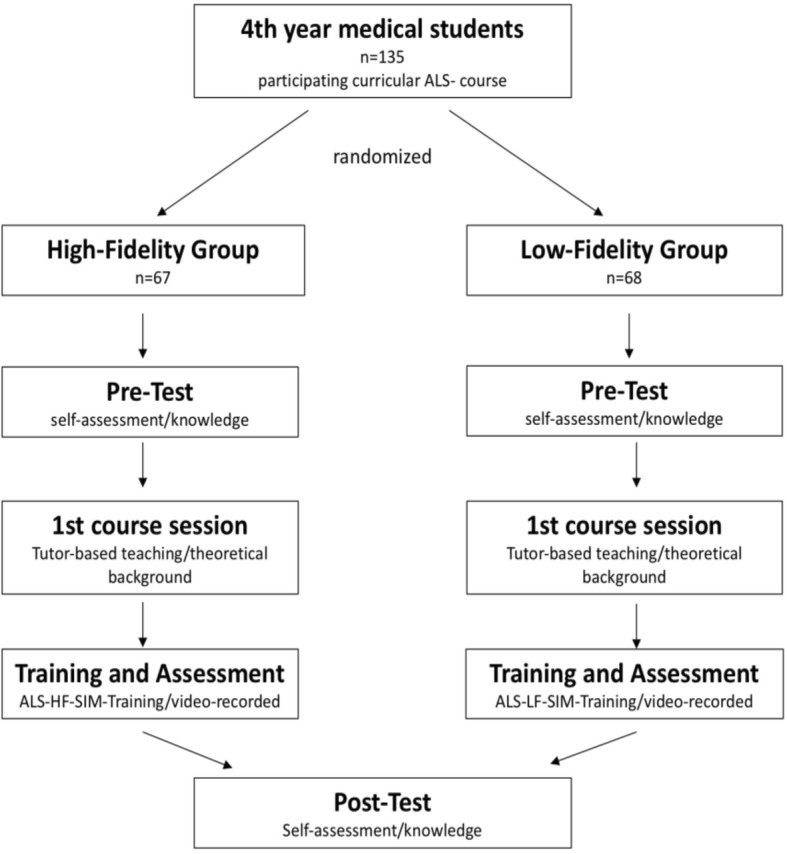

Survey of demographic data, theoretical knowledge and self-assessment

A 20-item multiple-choice test with knowledge-based questions, derived from the ALS guidelines of the European Resuscitation Council, was used to record students' knowledge before the course. Before the course, each student also completed a self-assessment questionnaire comprising 8 items, of which 6 used 10-point Likert scales (ranging from 0 [very poor] to 10 [excellent]) to evaluate knowledge, skills and various aspects of self-confidence, as well as a self-rating against the other group. Demographic data were recorded. After the course session, the self-assessment questionnaire and the 20-item multiple-choice test were administered again (Fig. 1).

Fig. 1.

Flowchart

Setting of the course session and video analysis

At the beginning of the course session, both groups received tutor-based teaching using either the LF or the HF mannequin, according to group allocation. Teaching content was identical for both groups. The HF group's teaching scenario took place in a simulated intensive care unit room, using a "SimMan 3G" HF patient simulator (Laerdal Medical GmbH, Puchheim), which produced effects such as spontaneous breathing with chest excursions and breathing noise, palpable pulses, cyanosis, pupil reaction, a measurable blood pressure and a mannequin-generated voice. The LF group trained in a regular hospital ward room on a standard "Resusci Anne" simulator (Laerdal Medical GmbH, Puchheim), which features simulated spontaneous breathing and vital signs.

Assessment scenarios took place during the second part of the course. Groups of students had to deal independently with a case of ventricular fibrillation. Four students at a time were asked to apply their previously acquired knowledge of ALS. Tools available in the setting were a defibrillator, ventilation equipment and various intravenous medications. A full-length video of the simulation scenario was recorded. Video analysis was performed and rated by two independent investigators, according to a predefined score sheet. All students received debriefing afterwards.

Main outcome measures

The primary outcome of the study was the difference in self-assessment between the HF and LF groups. To this end, results of the 10-point Likert-scaled questions were compared between the study groups before and after the simulation scenario.

Secondary endpoints were the differences in practical performance in the assessment scenario and the growth in theoretical knowledge after the HF or LF-training, respectively.

It was hypothesised that students in the HF-group would rate themselves as superior in comparison to the LF group.

Statistical analysis

Statistical analysis was performed with IBM SPSS Statistics (version 23.0; IBM). ANOVA was used to analyse the self-assessment and multiple-choice results, and t-tests were applied to the multiple-choice test scores. Self-assessment results were also analysed using chi-squared tests and, for paired pre- and post-course samples, McNemar's test. Chi-squared tests and t-tests were applied to the data from the video analysis. Statistical significance was set at p < 0.05.
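McNemar's test compares paired yes/no answers given by the same students before and after the course and uses only the discordant pairs: b students who switched from "yes" to "no" and c who switched from "no" to "yes". The sketch below computes the uncorrected statistic (b − c)²/(b + c), referred to a chi-squared distribution with one degree of freedom. The counts are hypothetical, because the article reports only percentages, and statistical packages may instead apply a continuity correction or an exact binomial test, so their p-values can differ.

```python
import math


def chi2_sf_df1(statistic):
    """Upper-tail probability of a chi-squared variable with 1 degree of freedom."""
    # If X ~ chi2(1) then X = Z**2 with Z standard normal, so
    # P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(statistic / 2))


def mcnemar(b, c):
    """Uncorrected McNemar test on discordant pair counts b and c.

    Returns (statistic, p_value).
    """
    if b + c == 0:
        raise ValueError("McNemar's test needs at least one discordant pair")
    statistic = (b - c) ** 2 / (b + c)
    return statistic, chi2_sf_df1(statistic)


if __name__ == "__main__":
    # Hypothetical counts: 14 students changed from "yes" to "no" after the
    # course and 3 changed from "no" to "yes"; concordant pairs are ignored.
    stat, p = mcnemar(b=14, c=3)
    print(f"chi2 = {stat:.2f}, p = {p:.4f}")
```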

Results

Demographic data

A total of 135 medical students were included in the study and randomly allocated to a HF (n = 67) or a LF (n = 68) group. Seventy-five (55.6%) were female (HF: 50.7%, LF: 60.3%; chi-squared test: 1.24, p = 0.26), and the mean age was 24 ± 2.9 years in the LF and 23.7 ± 2.8 years in the HF group. No significant differences in demographic data were found (Table 1).
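The reported chi-squared value for sex can be checked from the group sizes. Assuming whole-number counts, 50.7% of 67 HF students and 60.3% of 68 LF students correspond to 34 and 41 women, respectively (75 in total, matching the text). The sketch below computes Pearson's chi-squared statistic without continuity correction for that 2 × 2 table, assuming this is the variant used; it gives approximately 1.25 with p ≈ 0.26, close to the reported values.

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-squared test (no continuity correction) for the table
    [[a, b], [c, d]]. Returns (statistic, p_value) with 1 degree of freedom."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    p_value = math.erfc(math.sqrt(statistic / 2))  # chi-squared survival, 1 df
    return statistic, p_value


if __name__ == "__main__":
    # Rows: HF (n = 67), LF (n = 68); columns: female, male.
    # Counts reconstructed from the reported percentages (34/67, 41/68).
    stat, p = pearson_chi2_2x2(34, 33, 41, 27)
    print(f"chi2 = {stat:.2f}, p = {p:.2f}")
```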

Table 1.

Demographic data

| Variable | Group | Mean | SD | Percentage | Chi-Square | P-Value |
|---|---|---|---|---|---|---|
| Sex (female) | | | | | 1.24 | 0.26 |
| | LF | | | 60.3% | | |
| | HF | | | 50.7% | | |
| Age (years) | | | | | | 0.36 |
| | LF | 24 | 2.9 | | | |
| | HF | 23.7 | 2.8 | | | |
| Semester | | | | | | 0.38 |
| | LF | 7.0 | 0.2 | | | |
| | HF | 7.1 | 0.4 | | | |
| Previous ALS experience | | | | | 2.0 | 0.59 |
| | LF | | | 97% | | |
| | HF | | | 100% | | |
| Previously worked with or for emergency services | | | | | 0.29 | 0.59 |
| | LF | | | 11.8% | | |
| | HF | | | 9% | | |

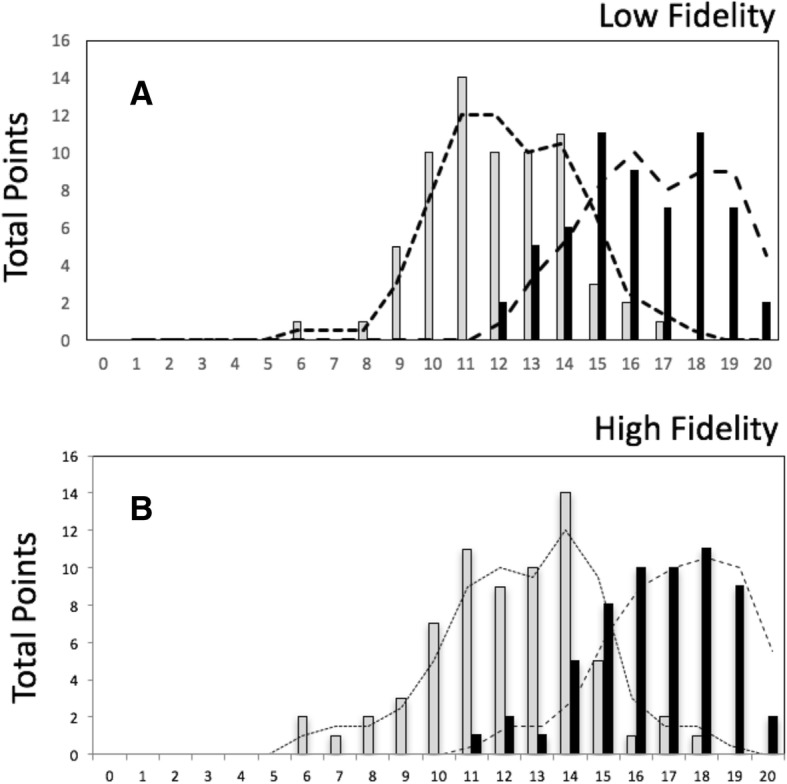

Knowledge test

Mean scores in the multiple-choice knowledge pre-test were 12 ± 2.5 (HF) and 11.5 ± 2.1 (LF), increasing to 16.5 ± 2.0 (HF) and 16 ± 2.6 (LF) in the post-test. Both groups improved their theoretical knowledge significantly (p < 0.001); however, no significant intergroup differences were detected (p > 0.05) (Fig. 2).
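As a rough check on the intergroup comparison, a two-sample t statistic can be computed from the rounded summary statistics above. The sketch assumes Welch's unequal-variance t-test (the article states only that a t-test was used) and approximates the p-value with the standard normal distribution, which is reasonable at roughly 130 degrees of freedom but slightly understates it relative to the exact t distribution. For the pre-test this gives t ≈ 1.26 and p ≈ 0.21, consistent with the absence of a significant difference.

```python
import math
from statistics import NormalDist


def welch_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic, Welch-Satterthwaite degrees of freedom and an
    approximate two-sided p-value (normal approximation) from summary data."""
    v1 = sd1 ** 2 / n1  # squared standard error of the group 1 mean
    v2 = sd2 ** 2 / n2  # squared standard error of the group 2 mean
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    p_approx = 2 * (1 - NormalDist().cdf(abs(t)))  # large-df approximation
    return t, df, p_approx


if __name__ == "__main__":
    # Rounded values from the text: HF (n = 67) vs LF (n = 68).
    for label, hf, lf in (("pre-test", (12.0, 2.5), (11.5, 2.1)),
                          ("post-test", (16.5, 2.0), (16.0, 2.6))):
        t, df, p = welch_t_from_summary(hf[0], hf[1], 67, lf[0], lf[1], 68)
        print(f"{label}: t = {t:.2f}, df = {df:.0f}, p ~ {p:.2f}")
```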

Fig. 2.

Score distribution in theoretical knowledge pre- (grey) and post-test (black). Both low fidelity group (a) and high fidelity group (b) improved their theoretical knowledge significantly (p < 0.001) but there was no significant intergroup difference

Video analysis

Video analysis and scoring of practical performance resulted in comparable findings for both groups for most evaluation criteria. With regard to “breathing control” (p = 0.012), “continuous chest compression while charging the defibrillator” (p = 0.017), “electrocardiogram analysis” (p = 0.021) and “time interval between electric shocks” (p = 0.016), students in the LF group performed significantly better (Table 2).

Table 2.

Results and group differences from the video analysis

| Item | Low-fidelity | High-fidelity | P-value |
|---|---|---|---|
| Duration of examination of vital signs | 7 s | 7 s | |
| Interval between taking vital signs and chest compression | 8 s | 6 s | |
| Addressing the patient | 85% | 77% | |
| Pain stimulus | 77% | 67% | |
| Examination of breathing | | | |
| - not at all | 5% | 20% | 0.012* |
| - incorrect examination | 69% | 62% | |
| - correct examination | 26% | 18% | |
| Call for help | 65% | 61% | |
| Time until start of chest compression | 22 s | 20 s | |
| Time until ventilation | 62 s | 61 s | |
| Ventilation without equipment | 29% | 41% | |
| Time until defibrillator patches applied | 91 s | 105 s | |
| Heart rhythm assessed | 69% | 51% | 0.021* |
| Rhythm assessed correctly | 100% | 92% | |
| Continuous chest compression during rhythm assessment | 31% | 34% | |
| Time to first defibrillation | 151 s | 154 s | |
| Time between application of defibrillator patches and first defibrillation | 59 s | 59 s | |
| Mean number of shocks given | 2 | 2 | |
| Time between defibrillations | 106 s | 91 s | 0.016* |
| Incorrect application of medication | 10% | 15% | |
| Placement of a venous cannula | 26% | 39% | |
| 30:2 ratio compliance | 87% | 87% | |
| Interruption of chest compressions >10 s | 44% | 51% | |
| Guedel oropharyngeal airway used | 10% | 7% | |
| Continuous compression during preparation of defibrillator | 42% | 21% | 0.017* |
| Pulse palpation | 0% | 5% | |
| Intubation | 2% | 3% | |

Significant differences (p < 0.05) are marked with an asterisk (*).

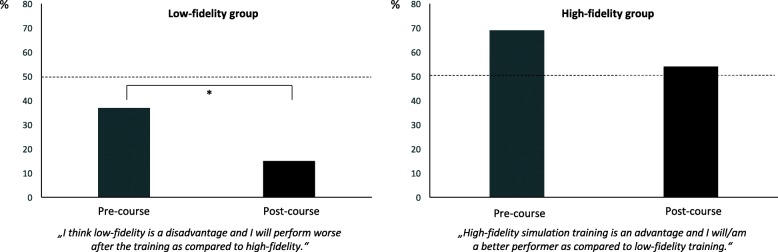

Self-assessment

Before the course, the majority of students in the HF group (69%) assumed that they had a significant advantage over students in the LF group with regard to their individual learning success. Participation in the course did not significantly change this assumption: afterwards, 53% of students in the HF group were still convinced of their individual benefit (pre- vs post-course, p = 0.052). In contrast, in the LF group, the proportion of students who feared being disadvantaged fell significantly from 37% before the course to 13% after it (p = 0.003) (Fig. 3).

Fig. 3.

Before (grey) and after (black) course assumptions regarding individual learning success in the low- and high-fidelity groups. Significant differences are marked with*

After the course, 41% of students in the HF group considered themselves better at handling a resuscitation than students in the LF group, despite not having witnessed the performance of participants in the LF group.

Before participation in the training, but after group allocation, 84% (HF group) versus 69% (LF group) of students believed there was a positive correlation between the extent of the technical features in the simulator and learning success; and considered HF simulation to be the preferred learning method (p = 0.038). After the course, 88% of students in the HF group still considered HF simulation to be the superior learning method and thus recommended it for future training and education, but only 38% of students in the LF group still held that opinion (p < 0.001).

Discussion

Simulation-based training has evolved into an indispensable tool in medical education. Driven by a 'higher-faster-further' attitude, relatively costly and staff-intensive HF simulators have spread extensively in recent years [19]. However, whether this development is justified remains unclear. After the initial enthusiasm generated by positive early studies, a growing body of evidence from high-quality randomized controlled trials, covering end-points such as knowledge or skill acquisition, indicates that high- and low-fidelity simulation training produce equivalent effects [11–13, 20–23].

Similarly, in this study, although training improved both the theoretical knowledge and the practical skills of participants in both groups, there was no significant difference between the two training methods. Interestingly, the LF group even performed better in some of the sub-items. Nonetheless, HF simulators remain popular devices, and numerous trials also favor them [24–26].

Within the framework of Miller's classical pyramid of clinical competence assessment, with its ascending levels from knowledge at the base to action at the top (knows > knows how > shows how > does) [27], a relationship has been described between specific forms of simulation training and the levels of competence they can achieve. However, even if a high degree of realism favours reaching the highest level of the pyramid ("does"), progressing beyond the level of "shows how" requires trainees whose qualifications increase in proportion to the degree of realism [28]. Conversely, this may suggest that trainees at a lower educational level, such as medical students, are more likely to benefit from lower-fidelity simulation training, which supports the results of this study.

As the evaluation of learning success is mostly performed using pre- and post-tests, with the addition of expert scores, these assessments are particularly hard to blind. A degree of bias cannot be ruled out, since most studies on HF simulators are conducted by investigators who are themselves operating costly simulation centers.

For example, a study by Conlon et al. compared low-, mid- and high-fidelity training groups and found no differences with respect to the improvement of theoretical knowledge, but in the evaluation of practical performance, as scored by expert assessors, the HF group achieved significantly better outcomes [26].

Furthermore, a potential bias favoring HF simulation-based education may arise from the age of the participants: the greater technical affinity of millennials as digital natives, and their preference for a technology-based learning approach, have been described previously [29]. This type of education and training also provides a highly entertaining and positive emotional experience [30–32].

In this study, we found a strong positive expectation favoring the value of HF simulation in most students from both groups before the beginning of the course session, whereas afterwards only a majority of the HF group maintained this belief, while many participants in the LF group had changed their opinion and did not consider LF training to be inferior.

Basak et al. described significantly higher satisfaction scores and a more positively rated experience among nursing students practicing on a HF mannequin than among a LF simulation control group [32]. McConnell and Eva postulated that the current emotional state of participants has clear implications for the perception and learning of new content [33]. An association between positive emotion, particularly fun, and cognitive capacity was demonstrated by Duque et al. (2008) [34].

We believe it is likely that emotions influence self-assessment and self-confidence during simulation training. In our trial, participants receiving HF simulator training were prone to overrate their abilities and performance, despite showing similar outcomes in theoretical testing and similar, or even inferior, performance of practical skills. This can be regarded as misaligned self-awareness induced by overconfidence in the HF simulator. Participants in the HF group gained confidence without an equivalent gain in knowledge or skill, in contrast to those in the LF simulation group, who provided more realistic self-evaluations.

The ability of medical students to self-assess is known to be limited, although its accuracy seems to improve in the later years of medical school [35]. However, inaccurate self-assessment is not only a problem of medical students: irrespective of the level of training, the relationship between self-assessment and external assessment has been found to be weak [36]. Further, self-assessment is not only a poor predictor of actual performance [37]; those who self-assess less accurately are also more likely to perform weakly [36, 38, 39].

Our results further suggest that HF simulation training may be associated with a mismatch between self-confidence and actual clinical performance. A previous trial comparing simulation-based education with problem-based discussion found overconfidence to be an adverse effect of simulation-based training [14]. The present investigation suggests a relationship between the degree of realism in a simulated scenario and the risk of overconfidence. The experience of HF simulation training appears to be associated with a positive emotional state that might induce misconceptions about one's performance.

Studies in economics have described a positive link between overconfidence and risk-taking behavior in financial professionals [15, 16]. Several medical trials have identified overconfidence as one of the most common cognitive biases leading to diagnostic errors [17, 18]. Although comprehensive studies are still lacking [18], a negative impact on patients’ outcomes is likely, making overconfidence a potential hazard.

An improvement in confidence in general as a result of HF simulation training has been described [32, 40], but data regarding the extent of the increase, in comparison to a control group, are lacking.

Limitations

Our study has several limitations. First, we conducted the study with a cohort of fourth-year medical students; because of their particular level of training and education, the results may differ from those an assessment of medical professionals would have yielded. Further, the tutors and the raters of the video analysis were aware of the group allocation, which may have unintentionally influenced the teaching style and the review of assessment performance. Also, as confidence was self-rated, this may have amplified the influence of participants' anticipated advantage of HF training.

Conclusions

High-fidelity simulation-based education is a highly acclaimed but expensive and resource-intensive method. This study supports the contention that a higher degree of simulator realism confers no advantage in learning success. Overall expectations concerning experience and learning outcomes were higher in the HF simulation environment than in the LF setting, and a cognitive bias towards highly realistic, technically well-equipped learning tools is probable. Participation in HF simulation led to self-assessments that misjudged actual abilities and, consequently, to inflated self-confidence. Because these findings may be linked to the specific educational level of our cohort, it remains unclear whether they are transferable to medical professionals. Further research is required, as it remains questionable whether the additional costs of HF simulators are justified if only comparable knowledge and skill outcomes are achieved. This applies all the more if undesirable effects such as excessive self-confidence contribute to flawed decision-making, with the potential for worse patient outcomes. Or, as Oscar Wilde famously noted, "Confidence is good, but overconfidence always sinks the ship."

Acknowledgements

We would like to thank Dr. Michael Paech for language editing the manuscript.

Funding

None of the authors declares external funding.

Availability of data and materials

All data generated or analyzed during this study are included in this published article.

Authors’ contributions

CM: Conceived the study design, performed analysis on all samples, interpreted data, wrote the manuscript with MW and revised it critically for important intellectual content. HR: Conceived the study design, interpreted data, participated in data acquisition and revised the manuscript critically for important intellectual content. HO: Made substantial contributions to data interpretation and manuscript drafting. Gave final approval of the version to be submitted and any revised version. MH: Made substantial contributions to data interpretation and manuscript drafting. Gave final approval of the version to be submitted and any revised version. AZ: Made substantial contributions to manuscript drafting and intellectual input. Gave final approval of the version to be submitted and any revised version. DP: Made substantial contributions to manuscript drafting and intellectual input. Gave final approval of the version to be submitted and any revised version. MW: Conceived the study design, interpreted data, wrote the manuscript with CM and revised it critically for important intellectual content. All authors read and approved the final manuscript.

Ethics approval and consent to participate

The study was approved by the Ethics committee of the University of Münster (protocol number 2014–544-f-S) on 6 November 2014 and all students gave written informed consent to participate.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Christina Massoth, Email: christina.massoth@ukmuenster.de.

Hannah Röder, Email: hroeder92@googlemail.com.

Hendrik Ohlenburg, Email: ohlenburg@anit.uni-muenster.de.

Michael Hessler, Email: michael.hessler@ukmuenster.de.

Alexander Zarbock, Email: alexander.zarbock@ukmuenster.de.

Daniel M. Pöpping, Email: poppind@uni-muenster.de.

Manuel Wenk, Phone: +49-251-8347252, Email: manuelwenk@uni-muenster.de.

References

1. Akaike M, Fukutomi M, Nagamune M, Fujimoto A, Tsuji A, Ishida K, et al. Simulation-based medical education in clinical skills laboratory. J Med Invest. 2012;59:28–35. doi: 10.2152/jmi.59.28.

2. Cooper JB, Taqueti VR. A brief history of the development of mannequin simulators for clinical education and training. Qual Saf Health Care. 2004;13(Suppl 1):i11–i18. doi: 10.1136/qshc.2004.009886.

3. Deering S, Rowland J. Obstetric emergency simulation. Semin Perinatol. 2013;37:179–188. doi: 10.1053/j.semperi.2013.02.010.

4. Bashir G. Technology and medicine: the evolution of virtual reality simulation in laparoscopic training. Med Teach. 2010;32:558–561. doi: 10.3109/01421590903447708.

5. Larsen CR, Oestergaard J, Ottesen BS, Soerensen JL. The efficacy of virtual reality simulation training in laparoscopy: a systematic review of randomized trials. Acta Obstet Gynecol Scand. 2012;91:1015–1028. doi: 10.1111/j.1600-0412.2012.01482.x.

6. Tan SSY, Sarker SK. Simulation in surgery: a review. Scott Med J. 2011;56:104–109. doi: 10.1258/smj.2011.011098.

7. McFetrich J. A structured literature review on the use of high fidelity patient simulators for teaching in emergency medicine. Emerg Med J. 2006;23:509–511. doi: 10.1136/emj.2005.030544.

8. Barsuk JH, McGaghie WC, Cohen ER, O'Leary KJ, Wayne DB. Simulation-based mastery learning reduces complications during central venous catheter insertion in a medical intensive care unit. Crit Care Med. 2009;37:2697–2701. http://www.ncbi.nlm.nih.gov/pubmed/19885989. Accessed 20 Mar 2017.

9. Scavone BM, Toledo P, Higgins N, Wojciechowski K, McCarthy RJ. A randomized controlled trial of the impact of simulation-based training on resident performance during a simulated obstetric anesthesia emergency. Simul Healthc. 2010;5:320–324. doi: 10.1097/SIH.0b013e3181e602b3.

10. Weller JM. Simulation in undergraduate medical education: bridging the gap between theory and practice. Med Educ. 2004;38:32–38. doi: 10.1111/j.1365-2923.2004.01739.x.

11. Cheng A, Lockey A, Bhanji F, Lin Y, Hunt EA, Lang E. The use of high-fidelity manikins for advanced life support training: a systematic review and meta-analysis. Resuscitation. 2015;93:142–149. doi: 10.1016/j.resuscitation.2015.04.004.

12. Finan E, Bismilla Z, Whyte HE, Leblanc V, McNamara PJ. High-fidelity simulator technology may not be superior to traditional low-fidelity equipment for neonatal resuscitation training. J Perinatol. 2012;32:287–292. doi: 10.1038/jp.2011.96.

13. Nimbalkar A, Patel D, Kungwani A, Phatak A, Vasa R, Nimbalkar S. Randomized control trial of high fidelity vs low fidelity simulation for training undergraduate students in neonatal resuscitation. BMC Res Notes. 2015;8:636. doi: 10.1186/s13104-015-1623-9.

14. Wenk M, Waurick R, Schotes D, Wenk M, Gerdes C, Van Aken HK, et al. Simulation-based medical education is no better than problem-based discussions and induces misjudgment in self-assessment. Adv Health Sci Educ. 2009;14:159–171. doi: 10.1007/s10459-008-9098-2.

15. Nosic A, Weber M. How riskily do I invest? The role of risk attitudes, risk perceptions, and overconfidence. Decis Anal. 2010;7:282–301. doi: 10.1287/deca.1100.0178.

16. Broihanne MH, Merli M, Roger P. Overconfidence, risk perception and the risk-taking behavior of finance professionals. Financ Res Lett. 2014;11:64–73. doi: 10.1016/j.frl.2013.11.002.

17. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med. 2008;121:S2–23. doi: 10.1016/j.amjmed.2008.01.001.

18. Saposnik G, Redelmeier D, Ruff CC, Tobler PN. Cognitive biases associated with medical decisions: a systematic review. BMC Med Inform Decis Mak. 2016;16:138. doi: 10.1186/s12911-016-0377-1.

19. Bradley P. The history of simulation in medical education and possible future directions. Med Educ. 2006;40:254–262. doi: 10.1111/j.1365-2929.2006.02394.x.

20. Chen R, Grierson LE, Norman GR. Evaluating the impact of high- and low-fidelity instruction in the development of auscultation skills. Med Educ. 2015;49:276–285. doi: 10.1111/medu.12653.

21. Diederich E, Mahnken JD, Rigler SK, Williamson TL, Tarver S, Sharpe MR. The effect of model fidelity on learning outcomes of a simulation-based education program for central venous catheter insertion. Simul Healthc. 2015;10:360–367. doi: 10.1097/SIH.0000000000000117.

22. Curran V, Fleet L, White S, Bessell C, Deshpandey A, Drover A, et al. A randomized controlled study of manikin simulator fidelity on neonatal resuscitation program learning outcomes. Adv Health Sci Educ. 2015;20:205–218. doi: 10.1007/s10459-014-9522-8.

23. Matsumoto ED, Hamstra SJ, Radomski SB, Cusimano MD. The effect of bench model fidelity on endourological skills: a randomized controlled study. J Urol. 2002;167:1243–1247. http://www.ncbi.nlm.nih.gov/pubmed/11832706. Accessed 4 Apr 2017.

24. Grady JL, Kehrer RG, Trusty CE, Entin EB, Entin EE, Brunye TT. Learning nursing procedures: the influence of simulator fidelity and student gender on teaching effectiveness. J Nurs Educ. 2008;47:403–408. doi: 10.3928/01484834-20080901-09.

25. Brydges R, Carnahan H, Rose D, Rose L, Dubrowski A. Coordinating progressive levels of simulation fidelity to maximize educational benefit. Acad Med. 2010;85:806–812. doi: 10.1097/ACM.0b013e3181d7aabd.

26. Conlon LW, Rodgers DL, Shofer FS, Lipschik GY. Impact of levels of simulation fidelity on training of interns in ACLS. Hosp Pract. 2014;42:135–141. doi: 10.3810/hp.2014.10.1150.

27. Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990;65:S63–S67. doi: 10.1097/00001888-199009000-00045.

28. Alinier G. A typology of educationally focused medical simulation tools. Med Teach. 2007;29:e243–e250. doi: 10.1080/01421590701551185.

29. Keengwe J, Georgina D. Supporting digital natives to learn effectively with technology tools. Int J Inf Commun Technol Educ. 2013;9:51–59. doi: 10.4018/jicte.2013010105.

30. Kron FW, Gjerde CL, Sen A, Fetters MD. Medical student attitudes toward video games and related new media technologies in medical education. BMC Med Educ. 2010;10:50. doi: 10.1186/1472-6920-10-50.

31. Basu Roy R, McMahon GT. High fidelity and fun: but fallow ground for learning? Med Educ. 2012;46:1022–1023. doi: 10.1111/medu.12044.

32. Basak T, Unver V, Moss J, Watts P, Gaioso V. Beginning and advanced students' perceptions of the use of low- and high-fidelity mannequins in nursing simulation. Nurse Educ Today. 2016;36:37–43. doi: 10.1016/j.nedt.2015.07.020.

33. McConnell MM, Eva KW. The role of emotion in the learning and transfer of clinical skills and knowledge. Acad Med. 2012;87:1316–1322. doi: 10.1097/ACM.0b013e3182675af2.

34. Duque G, Fung S, Mallet L, Posel N, Fleiszer D. Learning while having fun: the use of video gaming to teach geriatric house calls to medical students. J Am Geriatr Soc. 2008;56:1328–1332. doi: 10.1111/j.1532-5415.2008.01759.x.

35. Blanch-Hartigan D. Medical students' self-assessment of performance: results from three meta-analyses. Patient Educ Couns. 2011;84:3–9. doi: 10.1016/j.pec.2010.06.037.

36. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence. JAMA. 2006;296:1094. doi: 10.1001/jama.296.9.1094.

37. Eva KW, Cunnington JPW, Reiter HI, Keane DR, Norman GR. How can I know what I don't know? Poor self assessment in a well-defined domain. Adv Health Sci Educ. 2004;9:211–224.

38. Hodges B, Regehr G, Martin D. Difficulties in recognizing one's own incompetence: novice physicians who are unskilled and unaware of it. Acad Med. 2001;76(10 Suppl):S87–S89. http://www.ncbi.nlm.nih.gov/pubmed/11597883. Accessed 2 Jan 2019.

39. Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one's own incompetence lead to inflated self-assessments. J Pers Soc Psychol. 1999;77:1121–1134. doi: 10.1037/0022-3514.77.6.1121.

40. Warren JN, Luctkar-Flude M, Godfrey C, Lukewich J. A systematic review of the effectiveness of simulation-based education on satisfaction and learning outcomes in nurse practitioner programs. Nurse Educ Today. 2016;46:99–108. doi: 10.1016/j.nedt.2016.08.023.
